Abstract

Surrogate models are often used to replace expensive simulations of engineering problems. The common approach is to construct a series of metamodels from a training set and then select the most accurate one as an approximation of the computationally intensive simulation. However, because the choice of approximate model depends on the design of experiments (DOE), this traditional strategy increases the risk of adopting an inappropriate model. Furthermore, in the design of complex product systems, which are typically produced one of a kind, acquiring more samples is expensive and time-consuming, and sometimes impossible. Therefore, to save sampling cost, a reasonable strategy is to take full advantage of all the stand-alone surrogates and combine them into an ensemble model. The ensemble technique is an effective way to make up for the shortfalls of the traditional strategy. Motivated by previous research on ensembles of surrogates, this paper proposes a new technique for constructing a more accurate ensemble of surrogates. The weights are obtained using a recursive process, in which the values of the weights are updated in each iteration until the last ensemble achieves a desirable prediction accuracy. The technique has been evaluated on five benchmark problems and one real-world problem. The results show that the proposed ensemble of surrogates with recursive arithmetic average provides better prediction accuracy than the stand-alone surrogates and, for most problems, even exceeds the previously presented ensemble techniques. Finally, we should point out that the advantages of combination over selection are still difficult to demonstrate: the ensemble still acts as an “insurance policy” rather than offering significant improvements.


1 Introduction

Although continuing advances in CPUs and memory have drastically increased computer processing power, the computational cost of complex high-fidelity engineering simulations often makes it impractical to rely exclusively on simulation for design optimization (Jin et al. 2001). Ford Motor Company, for example, reported that it takes about 36–160 h to run one crash simulation (Wang and Shan 2007). For a two-variable optimization problem, assuming that on average 50 iterations are needed and that each iteration requires one crash simulation, the total computation time would be between 50 × 36 h = 1,800 h (75 days) and 50 × 160 h = 8,000 h (about 11 months), which is unacceptable in practice. To reduce the computational cost, surrogate models (also referred to as “metamodels”) are used to replace the expensive simulation models (Queipo et al. 2005; Viana et al. 2010). Surrogate modeling evolved from classical design of experiments (DOE) theory, in which the polynomial model is known as the “response surface model”; essentially, that model is also a kind of surrogate. In addition to the commonly used polynomial model, Sacks et al. (1989a, b) proposed a stochastic model, Kriging (Cressie 1988), which treats the deterministic computer response as a realization of a random function with respect to the actual system response. Neural networks are also often applied to simulate the responses of complex systems (Papadrakakis et al. 1998). Other types of metamodels include radial basis functions (RBF) (Fang and Horstemeyer 2006), multivariate adaptive regression splines (MARS) (Friedman 1991), least interpolating polynomials (De Boor and Ron 1990), inductive learning (Langley and Simon 1995), and support vector regression (SVR).
In general, the Kriging model is more accurate for non-linear problems than other models because it can interpolate the sample points and filter noisy data, but it is difficult to build and use because a global optimization process is needed to identify the maximum likelihood estimators. In contrast to the Kriging model, polynomial models are relatively easy to build and make parameter sensitivity clear, but their accuracy is often unsatisfactory because of the difficulty in determining the model structure (the highest order and the number of terms) (Jin et al. 2001). The RBF model, particularly the multiquadric RBF, can interpolate sample points and is easy to build, and thus seems to offer a trade-off between Kriging and polynomial models. SVR has been intensively studied in machine learning but seldom used in computer experiments. Its data-fitting capacity was tested in Clarke et al. (2005), where it achieved higher accuracy than all the other metamodeling techniques considered, including Kriging, polynomials, RBF and MARS, on a series of test problems. As those authors pointed out, the basic reasons why SVR outperforms the others are not clear. More recent and comprehensive reviews of metamodeling can be found in Kleijnen et al. (2005), Wang and Shan (2007), Simpson et al. (2008) and Forrester and Keane (2009).

If only a single predictor is desired, there are two strategies for obtaining the final prediction surrogate. One is selection, which can be done using cross-validation (Picard and Cook 1984; Kohavi 1995); the other is combination, which can be traced to the development of committees of neural networks by Perrone and Cooper (1993), with further refinement by Bishop (1995). Zerpa et al. (2005) and Goel et al. (2007) extended this idea to ensembles of metamodels. Goel et al. (2007) found that multiple metamodels can be used to identify regions of possibly high error, where the predictions of the metamodels differ widely. This can guide the engineer to gather more sample points in such uncertain regions to achieve more accurate results. In addition, the authors found that combining metamodels yields a more robust ensemble, which can effectively mitigate the negative impact of an inappropriate stand-alone metamodel; that is, the use of multiple surrogates acts like an insurance policy against poorly fitted models, which was also confirmed by Viana et al. (2009). Acar and Rais-Rohani (2009) proposed a combining technique with optimized weight coefficients, which are obtained by solving an optimization problem. Their technique achieves satisfactory results in some cases; nevertheless, it has the following deficiencies: (1) the optimization problem used to determine the weight coefficients is not guaranteed to reach a global optimum, is easily trapped in a local optimum, and may even have no local optimal solution; and (2) the weight coefficients are not constrained to \(w_i \ge 0\) when solving the optimization problem, although \(w_i < 0\) is difficult to interpret in practical problems. In Acar and Rais-Rohani (2009), the weights are obtained by minimizing GMSE or RMSEv using a formal optimization algorithm in MATLAB.
In terms of minimizing RMSEv, the technique is essentially the same as Bishop’s approach of minimizing the mean square error (MSE). Inspired by the work of Bishop (1995) and Acar and Rais-Rohani (2009), Viana et al. (2009) also obtained the weight coefficients by minimizing the MSE. They solved for the weights via Lagrange multipliers and replaced the real error covariance matrix C with the cross-validation error matrix; the resulting method is named OWS (optimal weighted surrogate). However, OWS is essentially the same as the approach based on minimizing GMSE in Acar and Rais-Rohani (2009). To keep the weights between zero and one, Viana et al. (2009) used only the diagonal elements of C; the resulting method is named OWS diag and, as the authors state, has a structure and prediction accuracy similar to the heuristic computation of the weights in Goel et al. (2007). In addition to the ensemble techniques mentioned above, several others have appeared in the literature, such as BestPRESS (Goel et al. 2007) and OWS ideal (Viana et al. 2009). Essentially, OWS ideal (Viana et al. 2009) is the same as minimizing RMSEv in Acar and Rais-Rohani (2009); the difference is that the latter employs a formal optimization algorithm, while OWS ideal is obtained via Lagrange multipliers.

Motivated by the existing work, this paper proposes an ensemble technique with recursive arithmetic average. The weights are obtained using a recursive process, in which the values of the weights are updated in each iteration until the last ensemble reaches a desirable prediction accuracy. The technique builds an ensemble of metamodels by applying the arithmetic average recursively, rather than averaging the responses of the stand-alone metamodels just once. To illustrate the performance of the proposed technique, four types of metamodeling techniques (polynomial function, Kriging, RBF and SVR) are used to build the ensemble, and these four stand-alone metamodels as well as the existing ensemble techniques are compared with the proposed ensemble technique. The performance of the stand-alone metamodels and of all the ensembles is evaluated by several commonly used criteria (e.g., correlation coefficient (denoted by R), maximum absolute error (MAE), average absolute error (AAE), and root mean square error (RMSE)). The experimental results show that the proposed ensemble of metamodels with recursive arithmetic average provides more accurate predictions than the stand-alone metamodels and, for most problems, even exceeds the previously presented ensemble techniques.

The remainder of this paper is organized as follows. Section 2 presents the basic weighted-sum formulation and the different techniques for selecting the weight factors of the stand-alone metamodels. Section 3 describes the test problems and the numerical procedure for finding an ensemble with recursive arithmetic average. The results are presented and discussed in Section 4. Finally, several important conclusions are summarized in Section 5.

2 Ensemble of surrogates

For a given problem, if all the candidate metamodels developed for a high-fidelity simulation happen to have the same level of accuracy, then a very straightforward form for the ensemble is a simple average of the surrogates. Usually, however, some models are more accurate than others. Therefore, to improve the accuracy of the ensemble, the stand-alone surrogates are multiplied by different weight coefficients. The approximation of the response by an ensemble of surrogates can be expressed as:

\[\widehat{y}_s (x) = \sum\limits_{i = 1}^N {w_i (x)\,\widehat{y}_i (x)} \tag{1}\]

where x is the input variable, \(\widehat{y}_s (x)\) is the ensemble response, N is the number of surrogates in the ensemble, \(w_i (x)\) is the weight coefficient of the ith surrogate, and \(\widehat{y}_i (x)\) is the response estimated by the ith surrogate. The weights sum to one, \(\sum\nolimits_{i = 1}^N {w_i (x)} = 1\).

Generally, the weight coefficients are selected such that the surrogates with high accuracy have large weight factor and vice versa.
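As a minimal sketch of the weighted-sum formulation, the following Python function evaluates an ensemble from a list of stand-alone surrogates. It assumes constant weights (independent of x) that sum to one; the function name `ensemble_predict` and the two toy surrogates are hypothetical and serve only as an illustration.

```python
from typing import Callable, Sequence

# A surrogate is modeled here as any callable mapping an input vector to a scalar.
Surrogate = Callable[[Sequence[float]], float]


def ensemble_predict(x: Sequence[float],
                     surrogates: Sequence[Surrogate],
                     weights: Sequence[float]) -> float:
    """Weighted-sum ensemble response: sum_i w_i * y_i(x)."""
    if len(surrogates) != len(weights):
        raise ValueError("one weight per surrogate is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    return sum(w * s(x) for w, s in zip(weights, surrogates))


# Two toy stand-ins for fitted surrogates (illustrative only).
def linear(x):
    return 2.0 * x[0] + x[1]


def quadratic(x):
    return x[0] ** 2 + x[1]


# The more trusted surrogate receives the larger weight.
value = ensemble_predict([1.0, 3.0], [linear, quadratic], [0.75, 0.25])
print(value)
```

With these toy surrogates the two stand-alone predictions at (1, 3) are 5 and 4, so the ensemble response lies between them, closer to the prediction of the more heavily weighted model.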

All of the ensembles of surrogates in the literature can be divided into three categories:

(1) Combining surrogates by minimizing cross-validation errors (GMSE; PRESS in particular), e.g., heuristic computation of the weight coefficient (Goel et al. 2007), the approach based on minimizing GMSEv in Acar and Rais-Rohani (2009), OWS, OWS diag (Viana et al. 2009), and BestPRESS (Goel et al. 2007; Viana et al. 2009);

(2) Combining surrogates using prediction variance, e.g., the approach that obtains the weights from the reciprocal of the variance (Bishop 1995; Zerpa et al. 2005);

(3) Combining surrogates by minimizing the mean square error (or root mean square error (RMSE)), e.g., OWS ideal (Viana et al. 2009) and the approach based on minimizing RMSEv in Acar and Rais-Rohani (2009).

In the first category, the weights are determined using the training points, whereas in the second and third categories the weights are determined using several validation points in a test set. The techniques that determine the weights by cross-validation are time-consuming, while those that use validation points require additional simulations to obtain the responses. Depending on the type of surrogate and the computational cost of the simulation, one error metric (PRESS or RMSE) will be less expensive to evaluate than the other. If the cost of obtaining the data required for developing surrogate models is high, choosing PRESS as the error metric is a reasonable strategy, because RMSE requires additional response evaluations at the test set. Conversely, if constructing the surrogate is computationally costly, RMSE (or MSE) is a better choice, because each surrogate needs to be constructed only once. The technique proposed in this paper belongs to the third category. The details of all the ensembles are presented below.

2.1 Weight coefficients selection based on prediction variance

Based on the work of Bishop (1995), Zerpa et al. (2005) used an ensemble of surrogates consisting of a response surface (RS) model, a Kriging model and an RBF model in the optimization of an alkaline-surfactant-polymer flooding process, and chose the prediction variance as the error metric. The values of the weight coefficients are determined by the following formula:

\[w_i = \frac{1/V_i}{\sum\nolimits_{j = 1}^N {1/V_j}} \tag{2}\]

where \(V_i\) is the prediction variance of the ith surrogate.
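The reciprocal-variance rule can be sketched in a few lines of Python. This is an illustration only: the function name `inverse_variance_weights` and the variance values are assumptions, and the prediction variances are taken as already computed.

```python
def inverse_variance_weights(variances):
    """Weights proportional to 1 / V_i, normalized so that they sum to one."""
    if any(v <= 0 for v in variances):
        raise ValueError("prediction variances must be positive")
    reciprocals = [1.0 / v for v in variances]
    total = sum(reciprocals)
    return [r / total for r in reciprocals]


# Hypothetical prediction variances of three surrogates.
weights = inverse_variance_weights([1.0, 2.0, 4.0])
print(weights)
```

The surrogate with the smallest prediction variance receives the largest weight, and all weights lie strictly between zero and one.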

2.2 Combining surrogates by minimizing cross-validation errors

2.2.1 Heuristic computation of the weight coefficient

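Goel et al. (2007) proposed a heuristic method for calculating the weight coefficients, known as the PRESS (predicted residual sum of squares) weighted average surrogate, in which the weight coefficients are computed as:

\[w_i = \frac{w_i^*}{\sum\nolimits_{j = 1}^N {w_j^*}},\quad w_i^* = (E_i + \alpha \overline{E})^\beta ,\quad \overline{E} = \frac{1}{N}\sum\limits_{i = 1}^N {E_i} \tag{3}\]

where \(E_i\) is the PRESS error of the ith surrogate, \(\overline{E}\) is the average of the PRESS errors, and the parameters α and β control the importance of averaging and of the individual PRESS errors, respectively. Goel et al. (2007) suggested α = 0.05 and β = −1.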
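The heuristic can be sketched as follows, assuming the PRESS errors of the surrogates are already available. The function name `press_weights` and the example error values are hypothetical; the defaults follow the values α = 0.05 and β = −1 suggested by Goel et al. (2007).

```python
def press_weights(press_errors, alpha=0.05, beta=-1.0):
    """Heuristic weights w_i proportional to (E_i + alpha * mean(E)) ** beta."""
    n = len(press_errors)
    e_avg = sum(press_errors) / n
    raw = [(e + alpha * e_avg) ** beta for e in press_errors]
    total = sum(raw)
    return [r / total for r in raw]


# Hypothetical PRESS errors of three surrogates (smaller is better).
weights = press_weights([1.0, 2.0, 3.0])
print(weights)
```

Because β is negative, a smaller PRESS error leads to a larger weight; if all errors are equal, the rule reduces to the simple average.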

2.2.2 The approach based on minimizing GMSEv

Acar and Rais-Rohani (2009) proposed determining the weight coefficients by minimizing a selected error metric, such as the PRESS error. The optimization problem is stated as:

\[\mathop {\min }\limits_{w_i } \ {\rm Err}\{ \widehat{y}_s (w_i ,\widehat{y}_i (x)),\ y(x)\} \quad {\rm s.t.}\ \sum\limits_{i = 1}^N {w_i} = 1 \tag{4}\]

where Err{·} is the selected error metric, which measures the accuracy of the ensemble-predicted response \(\widehat{y}_s \). The authors adopted the generalized mean square cross-validation error (GMSE; leave-one-out cross-validation, or PRESS in polynomial response surface terminology) as one such error metric.

2.2.3 OWS (Optimal weighted surrogate)

Employing an ensemble of neural networks, Bishop (1995) proposed a weighted average surrogate (WAS) in which the covariance between surrogates is approximated from the residuals at training or test points. The approach is based on minimizing the MSE:

\[{\rm MSE}_{\rm WAS} = \frac{1}{V}\int_V {e_{\rm WAS}^2 ({\bf x})\,{\rm d}{\bf x}} = {\bf w}^T {\bf C}{\bf w} \tag{5}\]

where \(e_{\rm WAS}^{}({\bf x}) = y({\bf x}) - {y_{\rm WAS}}({\bf x})\) is the error associated with the prediction of the WAS ensemble model, and the integral, which is taken over the domain of interest (of volume V), permits the calculation of the elements of C as:

\[c_{ij} = \frac{1}{V}\int_V {e_i ({\bf x})\,e_j ({\bf x})\,{\rm d}{\bf x}} \tag{6}\]

where \(e_i ({\bf x})\) and \(e_j ({\bf x})\) are the errors associated with the predictions given by surrogate models i and j, respectively.

C plays the same role as the covariance matrix in Bishop’s formulation. In practice, C is approximated from the vectors of cross-validation errors, \(\tilde{e}\):

\[c_{ij} \simeq \frac{1}{p}\tilde{e}_i^T \tilde{e}_j \tag{7}\]

where p is the number of data points and the subscripts i and j indicate different surrogates.

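Given the C matrix, the optimal weighted surrogate (OWS) is obtained by minimizing the MSE:

\[\mathop {\min }\limits_{\bf w} \ {\rm MSE}_{\rm WAS} = {\bf w}^T {\bf C}{\bf w} \tag{8}\]

s.t. \({\bf 1}^T {\bf w} = 1\).

Using Lagrange multipliers, the solution is obtained as:

\[{\bf w} = \frac{{\bf C}^{ - 1} {\bf 1}}{{\bf 1}^T {\bf C}^{ - 1} {\bf 1}} \tag{9}\]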

The weights given by the formulation above may be less than zero or larger than one, which is difficult to interpret in practice, and, as pointed out by Viana et al. (2009), allowing this freedom was found to amplify errors coming from the approximation of the C matrix in (7). Viana et al. (2009) therefore enforced positive weights by solving (9) using only the diagonal elements of C. This approach is named OWS diag.
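The closed-form solution obtained with Lagrange multipliers, and its diagonal-only variant, can be sketched with NumPy as follows. This is a minimal illustration, not the implementation of Viana et al. (2009): the function name `ows_weights`, the `diagonal_only` flag, and the error values are assumptions, and C is taken as the cross-validation approximation built from a p × N matrix whose columns hold the cross-validation errors of each surrogate.

```python
import numpy as np


def ows_weights(cv_errors, diagonal_only=False):
    """Weights w = C^{-1} 1 / (1^T C^{-1} 1), with C approximated from CV errors.

    cv_errors: array of shape (p, N); column i holds the cross-validation
    errors of surrogate i at the p data points.
    """
    errors = np.asarray(cv_errors, dtype=float)
    p = errors.shape[0]
    C = errors.T @ errors / p              # c_ij ~ (1/p) * e_i^T e_j
    if diagonal_only:
        C = np.diag(np.diag(C))            # keep only the diagonal (OWS diag variant)
    ones = np.ones(C.shape[0])
    c_inv_one = np.linalg.solve(C, ones)   # C^{-1} 1 without forming the inverse
    return c_inv_one / (ones @ c_inv_one)


# Hypothetical cross-validation errors of two surrogates at four points.
cv_errors = np.array([[0.10, -0.30],
                      [-0.20, 0.40],
                      [0.05, 0.20],
                      [0.10, -0.10]])
w_full = ows_weights(cv_errors)
w_diag = ows_weights(cv_errors, diagonal_only=True)
print(w_full, w_diag)
```

Both weight vectors sum to one. With the full matrix, individual weights may fall outside [0, 1]; with the diagonal-only matrix, each weight is proportional to the reciprocal of a positive diagonal entry and is therefore positive.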

Comparing formulas (4) and (9), we find that the two approaches are actually the same, because both are based on minimizing the cross-validation error (GMSE; PRESS in particular). The difference is that the approach in Acar and Rais-Rohani (2009) obtains the weights through a numerical optimization process, while the approach in Viana et al. (2009) obtains them through an analytical expression; both approaches have exactly the same solution. Therefore, to avoid duplication, OWS is not included in the rest of this paper.

2.2.4 BestPRESS

The traditional way of using multiple surrogates is to select the best surrogate among all of the considered models. However, once the choice is made, the surrogate is fixed even if the design of experiments changes. If the choice is revisited for each new DOE, selection can be included among the strategies for multiple surrogates: the model with the least error is assigned a weight of one and all the others are assigned a weight of zero. As in much of the literature, we call this strategy the BestPRESS model.

2.3 Combining surrogates by minimizing mean square error (MSE) (or root of mean square error (RMSE))

2.3.1 OWS ideal: the approach based on minimizing RMSEv in Acar and Rais-Rohani (2009)

In formula (7), if \(\tilde{e}\) is the real error at the validation points rather than the cross-validation error at the training points, then C in formula (9) is the real error covariance matrix rather than the cross-validation error covariance matrix. As pointed out above, OWS ideal is exactly the same as the approach based on minimizing RMSEv in Acar and Rais-Rohani (2009), where RMSEv (v being the number of validation points in the test set) is chosen as the error metric in formula (4). Therefore, to avoid duplication, OWS ideal is not included in the remainder of this paper.

2.3.2 The strategy proposed in this paper: ensemble of surrogates with recursive arithmetic average

As mentioned above, most ensemble techniques obtain the weights by minimizing either cross-validation errors or RMSE (or MSE). Although the techniques using cross-validation errors do not require additional validation points, the surrogates must be constructed many times, which is time-consuming. In contrast, the techniques using RMSE (or MSE) need additional validation points but construct the surrogates only once, which saves time. In addition, when the RMSE at the test points is used as the error criterion, the techniques using RMSE usually give better results, because the error metric used to obtain the weights is the same as the one used to measure the prediction accuracy. The technique proposed in this paper also uses the prediction mean square error as the error metric.

Among all the combining techniques, the simplest and most straightforward approach is to arithmetically average the single surrogates. However, averaging the stand-alone surrogates just once does not minimize the prediction mean square error. To make the prediction mean square error as low as possible, we employ a recursive process. The iteration is repeated until a specified stopping criterion is met: in this strategy, the algorithm stops when the prediction MSE of the worst surrogate approaches that of the best surrogate. In other words, all the updated surrogates in the last iteration have similar prediction MSEs. Note that the surrogates in the recursive process are not necessarily the initial single surrogates; they may be combined surrogates obtained by arithmetic averaging. The basic framework of the algorithm is as follows:

Input: Initial weight coefficients

Step 0: Fit the training data \(\{x_j\}\), j = 1, 2, ..., T (where T is the number of training points) with N candidate surrogates;

Step 1: Calculate their prediction mean square errors on the validation points: \({e_i} = \frac{1}{T}\sum\limits_{j = 1}^T {({Sur_{ij}} - \widehat{Sur}_{ij})^2},\ i = 1,2,\ldots,N\) (where \(\widehat{Sur}_{ij}\) is the prediction value of the ith individual surrogate on the jth validation point);

Step 2: Find the worst individual surrogate (i.e., the surrogate with the largest prediction MSE, denoted by \(Sur_{worst}\); its prediction MSE is denoted by \(MSE_{WorstSur}\)) and the best surrogate (i.e., the surrogate with the smallest prediction MSE, denoted by \(Sur_{best}\); its prediction MSE is denoted by \(MSE_{BestSur}\)).

While (\(MSE_{WorstSur} - MSE_{BestSur} > tol\)) Do

Step 3: Obtain the arithmetic average of the N candidate surrogates; that is, add all the candidate surrogates and divide by their total number; denote this average ensemble model by \(Sur_{ave}\);

Step 4: Replace the surrogate with the largest prediction MSE (i.e., \(Sur_{worst}\)) with the simple average surrogate (i.e., \(Sur_{ave}\)) obtained in step 3 (the replaced surrogate may be one of the initial candidate surrogates or an average ensemble model from a previous iteration), which gives N new surrogates, of which N − 1 are unchanged; calculate and update the weights of the initial individual surrogates;

Step 5: Repeat the work of step 2; if the condition in While (·) is met, return to step 3; otherwise break out of the loop.

EndWhile

Output: Optimal weight coefficients
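The steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the surrogates are assumed to be already fitted and are represented only by their predictions at the validation points; the names `recursive_average_weights`, `mse` and `max_iter` are hypothetical; and the returned weights are taken to be those of the most recent average ensemble, which is one possible reading of the output step.

```python
def mse(pred, truth):
    """Mean square error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth)


def recursive_average_weights(preds, y_val, tol=0.01, max_iter=1000):
    """Recursive arithmetic averaging of N surrogates.

    preds[i][j] is the prediction of initial surrogate i at validation point j.
    Returns the weights of the initial surrogates in the last average ensemble
    (an assumption; the simple average is returned if no iteration is needed).
    """
    n, v = len(preds), len(y_val)
    # W[i][k]: weight of initial surrogate k inside current ensemble member i.
    W = [[1.0 if i == k else 0.0 for k in range(n)] for i in range(n)]
    w_ave = [1.0 / n] * n
    for _ in range(max_iter):  # safety cap, not part of the original algorithm
        members = [[sum(W[i][k] * preds[k][j] for k in range(n)) for j in range(v)]
                   for i in range(n)]
        errors = [mse(m, y_val) for m in members]          # steps 1, 2 and 5
        worst = max(range(n), key=errors.__getitem__)
        if errors[worst] - min(errors) <= tol:             # While condition
            break
        # Step 3: arithmetic average of the current members, in terms of weights.
        w_ave = [sum(W[i][k] for i in range(n)) / n for k in range(n)]
        # Step 4: the worst member is replaced by the average ensemble.
        W[worst] = list(w_ave)
    return w_ave


# Hypothetical validation responses and predictions of three surrogates.
y_val = [1.0, 2.0, 3.0, 4.0]
preds = [[1.1, 2.1, 2.9, 4.2],
         [0.5, 2.5, 2.5, 4.5],
         [2.0, 3.0, 4.0, 5.0]]
weights = recursive_average_weights(preds, y_val, tol=1e-4)
print(weights)
```

Every member starts as a unit weight vector and is only ever replaced by an average of existing members, so the weights stay nonnegative and sum to one throughout the recursion.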

The iteration continues until the prediction MSE shows no significant improvement. In the algorithm above, tol is a tolerance determined in advance (e.g., tol = 0.01). The convergence of the algorithm is shown as follows.

For a given problem, suppose there are N surrogates \(Sur_1, Sur_2, \ldots, Sur_N\), the weight of \(Sur_i\) is \(w_i\), and \(\sum\limits_{i = 1}^N {{w_i} = 1}\). Let the prediction value and prediction error of the ith surrogate \(Sur_i\) on the jth data point be \(Sur_{ij}\) and \(e_{ij}\), respectively, j = 1, 2, ..., T (where T is the number of training points). Then the prediction value and prediction error of the simple average surrogate \(Sur_{ave}\) on the jth data point are \(Sur_{ave}(j) = \sum\limits_{i = 1}^N {{w_i}Sur_{ij}} \) and \(e_{ave}(j) = \sum\limits_{i = 1}^N {{w_i}e_{ij}} \), respectively. Denote the weight vector by \(W = [w_1, w_2, \ldots, w_N]^T\), the prediction error vector of \(Sur_i\) by \(E_i = [e_{i1}, e_{i2}, \ldots, e_{iT}]^T\), the prediction error matrix by \(e = [E_1, E_2, \ldots, E_N]\), and the sum of squared prediction errors of the simple average surrogate by J. Then the following holds:

\[J = \sum\limits_{j = 1}^T {e_{ave}(j)^2} = W^T e^T e\,W = W^T E\,W\]

where

\[E = e^T e = [E_{ij}]_{N \times N}\]

and where

\[E_{ij} = E_i^T E_j = \sum\limits_{t = 1}^T {e_{it}\,e_{jt}}\]

Apparently, \(E_{ii}\) is the sum of squared prediction errors of \(Sur_i\).

Based on the description above, we have the following lemma.

Lemma 1

Assume the prediction error vectors \(E_1, E_2, \ldots, E_N\) are linearly independent, and denote the sum of squared prediction errors of the simple average surrogate by \(J_A\); then

Proof

The weights of the simple average surrogate are

and

Because \(E_1, E_2, \ldots, E_N\) are linearly independent,

so,

The proof is finished.□

Theorem 1

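Denote the error vector obtained by replacing the worst surrogate with the simple average surrogate (i.e., \(Sur_{ave}\)) in the kth iteration by

\[E^{(k)} = (E_{11}^{(k)}, E_{22}^{(k)}, \ldots, E_{NN}^{(k)})\]

then

\[\mathop {\lim }\limits_{k \to \infty } E^{(k)} = (d, d, \ldots, d)\]

where \(d = MSE_{BestSur}\).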

Proof

Denote \(E_{\max }^{(0)} = \max \{ {E_{ii}}\} \) and \(E_{\max }^{(k)} = \max \{ E_{ii}^{(k)}\} \), where i = 1, 2, ..., N. Because the worst surrogate is replaced by the simple average surrogate in each iteration, according to Lemma 1, \(E_{\max }^{(0)} > E_{\max }^{(1)} > ... > E_{\max }^{(k)} > ...\). On the other hand, the best initial surrogate is not changed in any iteration, so \(E_{\max }^{(k)} \ge MS{E_{BestSur}}\). Because the sequence \(\left\{ {E_{\max }^{(k)}} \right\}_{k = 0}^\infty \) is monotonic and bounded, it has a limit, denoted by d, i.e., \(\mathop {\lim }\limits_{k \to \infty } E_{\max }^{(k)} = d\).

Clearly, \(d \ge MSE_{BestSur}\). Next, we prove that \(d = MSE_{BestSur}\). Indeed, if \(d > MSE_{BestSur}\), then according to Lemma 1 we can replace the worst surrogate with the simple average surrogate, and the prediction MSE of the worst surrogate will be less than d in the next iteration, which contradicts \(\mathop {\lim }\limits_{k \to \infty } E_{\max }^{(k)} = d\). Therefore, \(d = MSE_{BestSur}\), i.e., \(\mathop {\lim }\limits_{k \to \infty } E_{max}^{(k)} = MS{E_{BestSur}}\).

Furthermore, denote \(E_{\min }^{(0)} = \min \{ {E_{ii}}\} \) and \(E_{\min }^{(k)} = \min \{ E_{ii}^{(k)}\} \); it is easy to see that \(E_{\min }^{(0)} = E_{\min }^{(1)} = ...= E_{\min }^{(k)} = ...=MSE_{BestSur}\). So, \(\mathop {\lim }\limits_{k \to \infty } {E^{(k)}} = (d,d,....d)\), where \(d = MSE_{BestSur}\). The proof is finished.□

The technique proposed in this paper has several differences from the existing ensemble techniques:

(1) Because cross-validation often tends to overestimate errors, the real gain in accuracy of ensemble techniques based on cross-validation is limited, as illustrated in Viana et al. (2009). However, for the third class of ensemble techniques, based on minimizing RMSE, more validation points can be used to construct the ensemble if they are easy to acquire. Generally, the more validation points are used to determine the weights, the better the prediction accuracy of the ensemble. If the validation point set is large, the prediction MSE of the ensemble approaches that of BestRMSE (Viana et al. 2009). The technique proposed here also needs validation points to obtain the weights, and with the recursive scheme it can achieve desirable results. In short, the proposed technique is based on minimizing RMSE and, because it adopts a recursive process, has good prediction capacity, which distinguishes it from the techniques based on minimizing cross-validation errors (GMSE; PRESS in particular).

(2) For OWS ideal (Bishop 1995; Viana et al. 2009), obtaining the weights via Lagrange multipliers can ensure neither that the weights are less than or equal to one nor that they are greater than or equal to zero, and such weights are difficult to interpret physically in many circumstances. Similarly, the approach based on minimizing RMSEv (Acar and Rais-Rohani 2009) does not add the condition \(w_i \ge 0\) to formula (4). If \(w_i \ge 0\) is added to formula (4), an analytical expression like (9) cannot be obtained, and many iterations of the simplex method or of another formal optimization algorithm are needed. Consequently, when the dimension of the problem is large, the optimization process is time-consuming, and a simple and straightforward approach is needed. The recursive arithmetic average ensemble proposed in this paper ensures that the weights are nonnegative and not larger than one, which makes it convenient to interpret the importance of each candidate surrogate.

(3) As mentioned in (2), the optimization process is time-consuming, especially for problems with many dimensions. In contrast, the recursive process saves time. The number of iterations is affected by tol and is usually between about a dozen and a few dozen, so the recursive process runs more quickly than the optimization process. The experimental results presented at the end of Section 4 confirm this.

3 Experiments

3.1 Benchmark problems

To test the technique proposed in this paper, we choose the following analytic functions, which are commonly used as benchmark problems in the literature.

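Branin–Hoo:

\[y(x_1 ,x_2 ) = \left( {x_2 - \frac{5.1x_1^2 }{4\pi ^2} + \frac{5x_1 }{\pi } - 6} \right)^2 + 10\left( {1 - \frac{1}{8\pi }} \right)\cos (x_1 ) + 10 \tag{17}\]

where \(x_1 \in [-5, 10]\), \(x_2 \in [0, 15]\).

CamelBack:

\[y(x_1 ,x_2 ) = \left( {4 - 2.1x_1^2 + \frac{x_1^4 }{3}} \right)x_1^2 + x_1 x_2 + \left( { - 4 + 4x_2^2 } \right)x_2^2 \tag{18}\]

where \(x_1 \in [-3, 3]\), \(x_2 \in [-2, 2]\).

Goldstein–Price:

\[\begin{array}{ll} y(x_1 ,x_2 ) = & \left[ {1 + (x_1 + x_2 + 1)^2 (19 - 14x_1 + 3x_1^2 - 14x_2 + 6x_1 x_2 + 3x_2^2 )} \right] \\ & \times \left[ {30 + (2x_1 - 3x_2 )^2 (18 - 32x_1 + 12x_1^2 + 48x_2 - 36x_1 x_2 + 27x_2^2 )} \right] \end{array} \tag{19}\]

where \(x_1 , x_2 \in [-2, 2]\).

Hartman:

\[y(x) = - \sum\limits_{i = 1}^m {c_i \exp \left\{ { - \sum\limits_{j = 1}^n {a_{ij} (x_j - p_{ij} )^2 } } \right\}} \tag{20}\]

where \(x_i \in [0, 1]\).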

Both the three-variable (n = 3) and the six-variable (n = 6) models of this function are considered. The values of the function parameters \(c_i\), \(p_{ij}\) and \(a_{ij}\) for the Hartman-3 and Hartman-6 models, given in Tables 1 and 2, are taken from Goel et al. (2007) and Acar and Rais-Rohani (2009). For the chosen examples, m = 4.
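For reference, the three two-variable benchmark functions can be written in Python as below. This is a sketch that assumes the standard forms of these functions as commonly given in the optimization literature; the function names are hypothetical. The Hartman functions are omitted because their parameter tables are not reproduced in this section.

```python
import math


def branin_hoo(x1, x2):
    """Branin-Hoo function, usually evaluated on x1 in [-5, 10], x2 in [0, 15]."""
    return ((x2 - 5.1 * x1 ** 2 / (4 * math.pi ** 2) + 5 * x1 / math.pi - 6) ** 2
            + 10 * (1 - 1 / (8 * math.pi)) * math.cos(x1) + 10)


def camelback(x1, x2):
    """Six-hump camelback function, usually on x1 in [-3, 3], x2 in [-2, 2]."""
    return ((4 - 2.1 * x1 ** 2 + x1 ** 4 / 3) * x1 ** 2
            + x1 * x2 + (-4 + 4 * x2 ** 2) * x2 ** 2)


def goldstein_price(x1, x2):
    """Goldstein-Price function, usually on x1, x2 in [-2, 2]."""
    first = 1 + (x1 + x2 + 1) ** 2 * (
        19 - 14 * x1 + 3 * x1 ** 2 - 14 * x2 + 6 * x1 * x2 + 3 * x2 ** 2)
    second = 30 + (2 * x1 - 3 * x2) ** 2 * (
        18 - 32 * x1 + 12 * x1 ** 2 + 48 * x2 - 36 * x1 * x2 + 27 * x2 ** 2)
    return first * second


# Evaluate each function at a known global minimizer as a sanity check.
print(branin_hoo(math.pi, 2.275))
print(camelback(0.0898, -0.7126))
print(goldstein_price(0.0, -1.0))
```

Checking the implementations against the well-known global minima (about 0.3979, about −1.0316, and exactly 3, respectively) is a cheap way to catch transcription errors before any surrogate is fitted.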

3.2 Abalone problems

In the prediction of the life-span of abalone, every sample of abalone includes the following eight indicators: sex, length, diameter, thickness, total weight, the weight apart from shell, the weight of guts, and the weight of shell. The life-span of abalone is predicted according to the above-mentioned indicators. We choose 200 samples for this experiment from http://archive.ics.uci.edu/ml/datasets/Abalone.

3.3 Design and analysis of computer experiments

All five test functions presented in formulas (17)–(20) and the Abalone problem use Latin hypercube sampling (LHS). Some authors call it symmetrical LHS to distinguish it from the Latin hypercube (LH), which keeps the mid-point principle. These sampling schemes have better properties than Monte Carlo sampling (also called simple random sampling). In this paper we adopt the principle of maximizing the minimum distance: among n repeated samplings (n = 20 for the benchmark problems and n = 80 for the Abalone problem), we select the sample set that satisfies \(\max \{\mathop {\min }\limits_{i\ne j} d(x_i ,x_j )\}\), where d is a distance measure.
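The maximin selection among repeated Latin hypercube samples can be sketched with the Python standard library. Several choices here are assumptions made for illustration: the design lives in the unit hypercube, each point is placed at a random position inside its stratum (rather than at the mid-point), d is the Euclidean distance, and the names `latin_hypercube`, `min_pairwise_distance` and `maximin_lhs` are hypothetical.

```python
import math
import random


def latin_hypercube(n_points, n_dims, rng):
    """One random LHS in [0, 1)^n_dims: every stratum of every dimension is used once."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_points))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_points for s in strata])
    return [tuple(col[i] for col in columns) for i in range(n_points)]


def min_pairwise_distance(points):
    """Smallest Euclidean distance between any two points of the design."""
    return min(math.dist(a, b)
               for i, a in enumerate(points)
               for b in points[i + 1:])


def maximin_lhs(n_points, n_dims, n_repeats=20, seed=0):
    """Draw n_repeats LHS designs and keep the one with the largest minimum distance."""
    rng = random.Random(seed)
    candidates = (latin_hypercube(n_points, n_dims, rng) for _ in range(n_repeats))
    return max(candidates, key=min_pairwise_distance)


# Example: a 12-point design in two variables, chosen among 20 repetitions.
design = maximin_lhs(12, 2, n_repeats=20, seed=1)
print(min_pairwise_distance(design))
```

The unit-cube points would still need to be scaled to the variable ranges of each problem, for example to [−5, 10] × [0, 15] for Branin–Hoo.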

To reduce the influence of random factors, we randomly select 1,000 training sets for the three test functions in formulas (17)–(19) and for Hartman-3. Considering the computational cost, however, we select 200 training sets for Hartman-6 and 500 for Abalone. Depending on the number of input variables and the computational cost, the training set for each benchmark problem is composed of 12–60 design points, the same as in Acar and Rais-Rohani (2009). The ensembles that rely on minimizing RMSE (or prediction MSE) need additional validation points. The number of validation points, denoted by V, depends on the precision sought for the error estimate and varies from problem to problem; V = 0.8N (where N is the number of training points) was used in the approaches based on minimizing RMSE, including the technique proposed in this paper. All the surrogates, both stand-alone surrogates and ensembles, are thus constructed multiple times, and the reported error estimate is the average over the replications of the same surrogate. Additional information about the training and test data sets is provided in Table 3.

The accuracies of each stand-alone and ensemble model for the benchmark problems are measured using correlation coefficient (denoted by R), root mean square error (RMSE), average absolute error (AAE), and max absolute error (MAE). Their definitions are expressed as:

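Root mean square error:

\[{\rm RMSE} = \sqrt {\frac{1}{n_{error}}\sum\limits_{i = 1}^{n_{error}} {(y_i - \widehat{y}_i )^2 } }\]

Average absolute error:

\[{\rm AAE} = \frac{1}{n_{error}}\sum\limits_{i = 1}^{n_{error}} {\left| {y_i - \widehat{y}_i } \right|}\]

Max absolute error:

\[{\rm MAE} = \mathop {\max }\limits_{i} \left| {y_i - \widehat{y}_i } \right|\]

Correlation coefficient:

\[R = \frac{\sum\nolimits_{i = 1}^{n_{error}} {(y_i - \overline y )(\widehat{y}_i - \overline {\widehat{y}} )} }{\sqrt {\sum\nolimits_{i = 1}^{n_{error}} {(y_i - \overline y )^2 } \sum\nolimits_{i = 1}^{n_{error}} {(\widehat{y}_i - \overline {\widehat{y}} )^2 } } }\]

In the four definitions above, \(n_{error}\) is the number of samples in the test set, \(y_i\) is the actual response, \(\overline y \) is the average value of the actual response, \(\widehat{y}_i\) is the metamodel response, and \(\overline {\widehat{y}} \) is the average value of the metamodel response.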

Because the experiments are repeated 1,000 (or 200 or 500) times, the mean and the coefficient of variation (CV) of R, RMSE, AAE, and MAE are used to evaluate the prediction accuracy of each stand-alone metamodel and ensemble model. The definition of CV is expressed as:

\(\mathrm{CV} = \delta / \mu\)

where δ is the standard deviation of the samples, and μ is the mean of the samples.
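A small sketch of the CV computation over repeated runs is given below. Whether the sample (ddof = 1) or population (ddof = 0) standard deviation was used is not stated in the text, so the default here is an assumption.

```python
import numpy as np

def coefficient_of_variation(values, ddof=1):
    """Standard deviation divided by the mean of a metric over the replications."""
    values = np.asarray(values, dtype=float)
    return values.std(ddof=ddof) / values.mean()
```

A lower CV for a given metric means the model's accuracy changes less from one training set to another, which is how robustness is read in the results.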

3.4 Ensemble techniques

Four surrogate techniques are considered in this paper: PRS, KRG, SVR, and RBF. They serve as the four members of every ensemble built with the previously described techniques. All parameters are identified by cross-validation (leave-one-out (LOO) in this paper) so as to minimize the MSE. The parameters to be identified are the highest order (denoted by d) in PRS, the parameter (c) in the multiquadric basis function of RBF, the parameter (θ) in the Gaussian correlation function of Kriging, and the parameters (C, ε, σ) in SVR. The LOO cross-validation results are presented in Table 4. The mathematical descriptions of the four metamodels are provided in Appendix A.
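The LOO parameter selection can be illustrated with the simplest case, choosing the polynomial order d. The sketch below is an assumption-laden toy: it uses a one-dimensional polynomial fitted with `numpy.polyfit` instead of the multivariate PRS of Appendix A, and the helper names are hypothetical.

```python
import numpy as np

def loo_mse(x, y, degree):
    """Leave-one-out MSE of a 1-D least-squares polynomial of the given degree."""
    n = len(x)
    sq_errors = []
    for i in range(n):
        keep = np.arange(n) != i                 # drop the i-th sample
        coeffs = np.polyfit(x[keep], y[keep], degree)
        sq_errors.append((np.polyval(coeffs, x[i]) - y[i]) ** 2)
    return float(np.mean(sq_errors))

def select_degree(x, y, max_degree=4):
    """Return the degree with the smallest LOO MSE and all the scores."""
    scores = {d: loo_mse(x, y, d) for d in range(1, max_degree + 1)}
    return min(scores, key=scores.get), scores
```

The same loop applies to c, θ, or (C, ε, σ): refit the surrogate n times for each candidate value and keep the value with the smallest LOO error.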

4 Results and analysis of experiments

Some of the labels used for the ensemble techniques are inherited from Acar and Rais-Rohani (2009). The model based on the simple average is denoted by EA; the one based on the heuristic method of Goel et al. (2007) is labeled as EG; the one based on the prediction variance of Zerpa et al. (2005) is denoted by EV; the one based on minimizing PRESS (GMSE) in Acar and Rais-Rohani (2009) is labeled as EP; the one based on minimizing RMSEv in Acar and Rais-Rohani (2009) is labeled as EM; OWS diag in Viana et al. (2009) is denoted by Od; BestPRESS is denoted by BP; and the one proposed in this paper is denoted by ER. The results for the different benchmark problems are shown with boxplots (described in Appendix B), and the means and CVs of the error metrics are presented in several tables. Additionally, to facilitate comparison of the ensembles and single surrogates, the frequencies of their ranks in terms of R, RMSE, AAE, and MAE are presented in further tables.

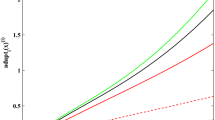

4.1 Correlation coefficient

The correlation coefficients for the different test functions are shown in Fig. 1, from which we can see the following: (1) No single metamodel works best for all test functions, and the correlation coefficient of each stand-alone metamodel varies significantly with the DOE; the eight ensemble models work better than the worst stand-alone metamodel, and their correlation coefficients vary little with the DOE. (2) In almost all of the test problems, EM and ER have similar medians and perform better than the other ensemble models, but EM has a longer tail, which indicates that EM is less robust than ER. (3) EP has the worst performance among all the ensembles for A, B, and C. (4) BP has the second worst performance in A and B and the worst in D, which reveals that BP cannot capture the real error perfectly; that is, in most of the replications BP cannot find the best single surrogate from the cross-validation errors. (5) Finally, it is worth noting that EG and Od have similar results in all the test problems.

Table 5 shows the mean and the coefficient of variation of the correlation coefficient for the different test functions. The average correlation coefficient of ER is the best for all the test functions except Branin–Hoo and Hartman-6, for which EM performs best. Interestingly, in the low-dimensional problems (Branin–Hoo, Camelback, and Goldstein–Price) EG and Od have exactly the same results, and in the high-dimensional problems (Hartman-3, Hartman-6, and Abalone) their results are similar though not identical. Combining Table 6 with Table 5, we find that ER ranks 1st four times and 3rd twice; that is, ER gives good results in all six test problems, which indicates a robust prediction capacity. By contrast, the performance of the other ensembles and of all the individual surrogates varies markedly across the test problems. Even the second best ensemble, EM, performs well in only two test problems; in the others it ranks 4th once and 5th three times. The third best model is RBF. Although RBF is inferior to ER and EM, it is the best of the individual surrogates and appears to give reasonably robust results.

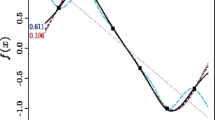

4.2 RMSE

Next, we compare the metamodels based on the RMSE of their predictions at the test points. From Fig. 2 we can see the following: (1) RBF performs best among the stand-alone metamodels in problems B, C, and F, where its prediction accuracy is on par with that of the best ensemble model; in addition, PRS is either the best or the second best stand-alone metamodel for all the test problems. (2) In general, all eight ensemble models are better than the worst stand-alone metamodel, and the RMSE of the ensemble models does not vary significantly with the DOE, which suggests that the ensemble models are more robust. (3) On the whole, the stand-alone models have worse prediction accuracy than the ensemble models, which indicates the necessity of adopting ensemble techniques. (4) The ER technique proposed in this paper performs better than the other ensemble models in terms of RMSE.

Table 7 shows that the average RMSE of ER is the best for all the test functions except Branin–Hoo and Hartman-6. Although the average RMSE of ER in Branin–Hoo is slightly larger than that of EM, ER has a lower CV, which indicates that ER is more robust than EM in Branin–Hoo.

Table 8 complements Table 7 and shows the rank frequencies of all the ensembles and of the individual surrogates in the ensembles. The results are similar to those in Table 6. Over the benchmark problems and the Abalone problem, ER ranks first four times, second once, and third once. ER is thus the best of all the ensembles and individual models in terms of RMSE. The second best model is EM, and the third best is the single surrogate RBF.
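The rank-frequency tables can be reproduced from a table of mean metric values with a few lines of code. The sketch below is only an illustration of the counting, with a hypothetical function name; it assumes one row per test problem and one column per model, and it breaks ties arbitrarily.

```python
import numpy as np

def rank_frequencies(scores, model_names, lower_is_better=True):
    """Count how often each model ranks 1st, 2nd, ... across the test problems.

    scores: array of shape (n_problems, n_models) holding a mean metric value.
    Returns {model: [times ranked 1st, times ranked 2nd, ...]}.
    """
    scores = np.asarray(scores, dtype=float)
    if not lower_is_better:          # for R, a larger value is better
        scores = -scores
    # Applying argsort twice turns each row of values into 0-based ranks.
    ranks = scores.argsort(axis=1).argsort(axis=1)
    n_models = scores.shape[1]
    return {
        name: np.bincount(ranks[:, j], minlength=n_models).tolist()
        for j, name in enumerate(model_names)
    }
```

For RMSE, AAE, and MAE the default `lower_is_better=True` applies; for the correlation coefficient the flag would be set to `False`.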

4.3 AAE

Figure 3 shows the AAE of the different metamodels on the different test functions, with the following findings: (1) For problem A, PRS has a higher AAE than the other individual metamodels; three of the individual metamodels have similar AAEs, which may be why the eight ensemble models also have similar AAEs. (2) For test problems D, E, and F, the ensemble models have significantly lower AAE than the worst individual surrogate. (3) For problem F, PRS has the worst result, which possibly suggests that PRS is not suitable for this kind of problem; because of the poor performance of PRS, EA has a similarly poor result. (4) Like PRS in F, RBF is not suitable for D, but in A, B, C, and F RBF gives good results, which indicates that the performance of a surrogate is problem-dependent. Additionally, Table 9 shows that RBF performs best in Camelback and Abalone, EV performs best in Goldstein–Price and Hartman-6, and ER performs best only in Branin–Hoo. Table 10 shows that RBF and EV have the highest frequency of 1st, and EM and ER have the second highest. ER, however, ranks 2nd more often (four times) than any other ensemble or individual surrogate. Combining the counts of 1st, 2nd, and 3rd ranks, and considering robustness, we think that the most robust model should be ER, the second should be EV, and the third should be EV.

4.4 MAE

Next, the MAEs of the different metamodels are compared for the different test functions. Figure 4 shows that for A and C all of the models, ensembles and single surrogates alike, have similar MAEs, whereas in the other problems the differences in MAE are apparent. For B, EP performs worst among the ensembles and has a long tail in the figure, which means that it has a larger deviation. For D and F, the worst models are RBF and PRS, respectively.

Numerical quantification of the results is given in Table 11, where we can observe that ER is not the best model in any of the problems: EM performs best in three test problems, and three single surrogates each perform best in one problem. With the help of Table 12, we also find that the best model may be EM. Combining the counts of 1st, 2nd, and 3rd ranks, however, it is easy to see that ER is still a more robust model than single surrogates such as RBF, SVR, and PRS.

4.5 The effect of the number of the validation points

All of the results above were obtained with V = 0.8N in ER, EM, Od, and EV. To examine the effect of the number of validation points V on the prediction results of the ensemble surrogates, V = 0.3N and V = 0.5N are also considered in the following experiments. To limit the length of this article, we take only Camelback as an example. Unlike Tables 5–12, each of which collects one error metric (R, RMSE, AAE, or MAE) for all the test problems, each table here collects R, RMSE, AAE, and MAE for Camelback, so the total count in each table is four (the number of error metrics) rather than six (the number of test problems). Tables 13, 14, and 15 present the results for V = 0.3N, V = 0.5N, and V = 0.8N, respectively. From the three tables we obtain the following findings: (1) The prediction accuracy of the ensemble models that rely on validation points (ER, EM, Od, EV) improves as the number of validation points increases. (2) Nevertheless, the rate of improvement differs: from V = 0.3N to V = 0.8N, ER improves clearly, with its frequency of 1st rising from zero to two, whereas the improvement in EM is less apparent. (3) When V = 0.3N, RBF has the best performance, so when validation points are hard to obtain, choosing a single surrogate may be a reasonable strategy; in practice, however, we have no prior knowledge of which single surrogate is the best.

Additionally, we should point out that the performance of BP (BestPRESS) is not ideal according to the results presented in Tables 5–15, which may suggest that (1) it is difficult for cross-validation to capture the real errors, so the best single surrogate cannot be picked out by cross-validation; and (2) even if cross-validation could estimate the real error perfectly, the prediction accuracy of BP would only match that of the best single surrogate (after all, BestPRESS amounts to assigning a unit weight to the surrogate with the smallest PRESS and zero to all the others), and according to the experimental results a single surrogate may be less accurate than the ensemble models.

Finally, we compare the efficiency of EM and ER, because both are based on minimizing RMSE (or prediction MSE). The time consumption of EM and ER is presented in Table 16. In this experiment, we choose a low-dimensional problem, a medium-dimensional problem, and a high-dimensional problem as test problems. From the table, we can see that for the low-dimensional problem BH (two dimensions) the time cost of EM is nearly twice that of ER; for the medium-dimensional problem Hartman-3 (three dimensions) the time cost of EM is 14.19 times that of ER; and for the high-dimensional problem Hartman-6 (six dimensions) the time cost of EM is 7.935 times that of ER. The results suggest that when the dimension of the problem is large (especially when dozens of variables appear in real-life problems), choosing the recursive arithmetic average ensemble technique rather than ensemble techniques based on an optimization process may be a reasonable strategy. The results support the viewpoint presented in the last paragraph of Section 2.3.2.
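The remark that BestPRESS is a special case of weighting can be made concrete with a short sketch. It assumes that an ensemble prediction is the weighted sum of the member predictions with weights summing to one, and it shows only the two simplest weighting rules (EA and BP); the function names are hypothetical and the recursive weights of ER are not reproduced here.

```python
import numpy as np

def simple_average_weights(n_models):
    """EA: every surrogate receives the same weight."""
    return np.full(n_models, 1.0 / n_models)

def best_press_weights(press):
    """BP: unit weight for the surrogate with the smallest PRESS, zero elsewhere."""
    press = np.asarray(press, dtype=float)
    weights = np.zeros_like(press)
    weights[np.argmin(press)] = 1.0
    return weights

def ensemble_predict(weights, predictions):
    """Weighted sum of member predictions; predictions has shape (n_models, n_points)."""
    return np.asarray(weights, dtype=float) @ np.asarray(predictions, dtype=float)
```

With BP the ensemble output equals the output of one member, so it can never be more accurate than the best single surrogate, which is the point made in item (2) above.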

5 Conclusion

In this paper, we examined several existing combining techniques, proposed the recursive arithmetic average ensemble technique, and discussed the experimental results.

1. After examining the existing combining techniques, we find that (1) the OWS idea is essentially the same as EM, and (2) OWS is also the same as EP. The difference between them lies only in the expression used to obtain the weights.

2. After examining the results for the five test functions and the Abalone problem, we can see clearly that the ensemble technique proposed in this paper has higher prediction accuracy than the stand-alone metamodels in most problems, and for almost all of the problems presented in this paper it even surpasses the previously reported ensemble techniques.

3. Because cross-validation is adopted to choose the best parameters of the stand-alone metamodels, all of the models, both individual models and ensemble models, have significantly improved prediction accuracy.

4. EG and Od have similar results in terms of R, RMSE, AAE, and MAE in all of the test problems, especially in the low-dimensional problems. The cause is that EG and Od have a similar structure, as pointed out in Section 1.

5. In this paper, we limit our conclusions to low-dimensional problems (fewer than seven dimensions); high-dimensional problems are left for future research.

Although the technique proposed in this paper achieves desirable results, the advantages of combination over selection are still difficult to clarify (Yang 2003). That is, despite our efforts, we are still operating in the “insurance policy” mode rather than offering substantial improvements. In addition, finding more efficient methods to improve the prediction accuracy of the ensemble model is part of our future work.

References

Acar E, Rais-Rohani M (2009) Ensemble of metamodels with optimized weight factors. Struct Multidisc Optim 37:279–294

Bishop C (1995) Neural networks for pattern recognition. Oxford University Press, New York

Clarke SM, Griebsch JH, Simpson TW (2005) Analysis of support vector regression for approximation of complex engineering analyses. Trans ASME J Mech Des 127(6):1077–1087

Cressie N (1988) Spatial prediction and ordinary kriging. Math Geol 20(4):405–421

De Boor C, Ron A (1990) On multivariate polynomial interpolation. Constr Approx 6:287–302

Fang H, Horstemeyer MF (2006) Global response approximation with radial basis functions. Eng Optim 38(4):407–424

Forrester AIJ, Keane AJ (2009) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45(1–3):50–79

Friedman JH (1991) Multivariate adaptive regression splines. Ann Stat 19(1):1–67

Goel T, Haftka RT, Shyy W, Queipo NV (2007) Ensemble of surrogates. Struct Multidisc Optim 33:199–216

Hardy R (1971) Multiquadric equations of topography and other irregular surfaces. J Geophys Res 76:1905–1915

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidisc Optim 23(1):1–13

Kleijnen JPC, Sanchez SM, Lucas TW, Cioppa TM (2005) A users guide to the brave new world of designing simulation experiments. INFORMS J Comput 17(3):263–289

Kohavi R (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Fourteenth international joint conference on artificial intelligence, pp 1137–1143

Langley P, Simon HA (1995) Applications of machine learning and rule induction. Commun ACM 38(11):55–64

McDonald D, Grantham W, Tabor W, Murphy M (2000) Response surface model development for global/local optimization using radial basis functions. In: The 8th AIAA symposium on multidisciplinary analysis and optimization, Long Beach, CA

Meckesheimer M, Barton R, Simpson T, Limayem F, Yannou B (2001) Metamodeling of combined discrete/continuous responses. AIAA J 39(10):1950–1959

Meckesheimer M, Barton R, Simpson T, Booker A (2002) Computationally inexpensive metamodel assessment strategies. AIAA J 40(10):2053–2060

Papadrakakis M, Lagaros M, Tsompanakis Y (1998) Structural optimization using evolution strategies and neural networks. Comput Methods Appl Mech Eng 156(1–4):309–333

Perrone M, Cooper L (1993) When networks disagree: ensemble methods for hybrid neural networks. In: Mammone RJ (ed) Artificial neural networks for speech and vision. Chapman and Hall, London, pp 126–142

Picard R, Cook R (1984) Cross-validation of regression models. J Am Stat Assoc 79(387):575–583

Powell M (1987) Radial basis functions for multivariable interpolation: a review. In: Mason JC, Cox MG (eds) Proceedings of the IMA conference on algorithms for the approximation of functions and data. Oxford University Press, London, pp 143–167

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Tucker PK (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Sacks J, Schiller SB, Welch WJ (1989a) Designs for computer experiments. Technometrics 31(1):41–47

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989b) Design and analysis of computer experiments. Stat Sci 4(4):409–435

Simpson TW, Toropov V, Balabanov V, Viana FAC (2008) Design and analysis of computer experiments in multidisciplinary design optimization: a review of how far we have come or not. In: 12th AIAA/ISSMO multidisciplinary analysis and optimization conference, AIAA 2008-5802, Victoria, BC, Canada

Viana FAC, Haftka RT, Steffen V (2009) Multiple surrogate: how cross-validation errors can help us to obtain the best predictor. Struct Multidisc Optim 39:439–457

Viana FAC, Gogu C, Haftka RT (2010) Making the most out of surrogate models: tricks of the trade. In: ASME 2010 international design engineering technical conferences and computers and information in engineering conference, DETC2010-8813, Montreal, Canada

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. Trans ASME J Mech Des 129(4):370–381

Yang Y (2003) Regression with multiple candidate models: selecting or mixing? Stat Sin 13(5):783–809

Zerpa L, Queipo N, Pintos S, Salager J (2005) An optimization methodology of alkaline-surfactant-polymer flooding processes using field scale numerical simulation and multiple surrogates. J Pet Sci Eng 47:197–208

Acknowledgements

The funding provided for this study by the National Science Foundation of China under Grant NO.70931002 and NO.70672088 is gratefully acknowledged.

Additional information

Part of the work was presented at the 2010 2nd International Conference on Industrial Mechatronics and Automation (ICIMA 2010), Wuhan, China.

Appendices

Appendix A: Several metamodeling techniques

Four metamodeling techniques (PRS, RBF, Kriging, and SVR) are considered here.

A.1 PRS

For PRS, the highest order allowed in this paper is 4, but the order actually used in a specific problem is determined by the selected sample set. When the highest order of the polynomial model is 4, it can be expressed as:

where \(\widetilde{F}\) is the response surface approximation of the actual response function, N is the number of variables in the input vector x, and a,b,c,d,e are the unknown coefficients to be determined by the least squares technique.

Notice that the 3rd- and 4th-order polynomial models do not contain any mixed terms (interactions) of order 3 or 4. Only pure cubic and quartic terms are included, to reduce the amount of data required for model construction. A lower-order model (linear, quadratic, or cubic) includes only the correspondingly lower-order polynomial terms.
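The structure of the polynomial described above can be sketched as a least-squares fit. The sketch assumes that the model contains a constant, linear terms, pure and mixed second-order terms, and pure cubic and quartic terms only; the inclusion of mixed second-order terms is inferred from the remark about orders 3 and 4, and the function names are hypothetical.

```python
import numpy as np
from itertools import combinations

def prs_design_matrix(x, order=4):
    """Build the regression matrix for x of shape (n_samples, n_vars)."""
    x = np.asarray(x, dtype=float)
    n_samples, n_vars = x.shape
    cols = [np.ones(n_samples)]                           # constant term
    if order >= 1:
        cols += [x[:, i] for i in range(n_vars)]          # linear terms
    if order >= 2:
        cols += [x[:, i] ** 2 for i in range(n_vars)]     # pure quadratic terms
        cols += [x[:, i] * x[:, j]                        # mixed second-order terms
                 for i, j in combinations(range(n_vars), 2)]
    if order >= 3:
        cols += [x[:, i] ** 3 for i in range(n_vars)]     # pure cubic terms only
    if order >= 4:
        cols += [x[:, i] ** 4 for i in range(n_vars)]     # pure quartic terms only
    return np.column_stack(cols)

def fit_prs(x, y, order=4):
    """Least-squares estimate of the unknown coefficients."""
    coeffs, *_ = np.linalg.lstsq(prs_design_matrix(x, order), y, rcond=None)
    return coeffs

def predict_prs(coeffs, x, order=4):
    """Evaluate the fitted polynomial at new points."""
    return prs_design_matrix(x, order) @ coeffs
```

Dropping the mixed cubic and quartic terms keeps the number of coefficients linear in the number of variables for orders 3 and 4, which is why fewer samples are needed.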

A.2 RBF

The general form of the RBF approximation can be expressed as:

Powell (1987) considers several forms for the basis function φ(·):

1. \(\varphi (r) = e^{-r^2 / c^2}\) Gaussian

2. \(\varphi (r)=(r^2+c^2)^{\frac{1}{2}}\) Multiquadrics

3. \(\varphi (r)=(r^2+c^2)^{-\frac{1}{2}}\) Reciprocal Multiquadrics

4. \(\varphi (r)=\left( r / c^2 \right)\log \left( r / c \right)\) Thin-Plate Spline

5. \(\varphi (r)=\frac{1}{1+e^{r/c}}\) Logistic

where c ≥ 0. In particular, the multiquadric RBF form has been applied by Meckesheimer et al. (2001, 2002) to construct an approximation after Hardy (1971), who used linear combinations of a radially symmetric function based on the Euclidean distance of the form:

where \(\left\| \cdot \right\|\) represents the Euclidean norm. Replacing φ(x) with the vector of response observations y yields a linear system of n equations in n unknowns, which is solved for β. As described above, this technique can be viewed as an interpolating process. RBF surrogates have produced good fits to arbitrary contours of both deterministic and stochastic responses (Powell 1987). Different RBF forms were compared by McDonald et al. (2000) on a hydrocode simulation, and the authors found that the Gaussian and multiquadric RBF forms generally performed best.
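The interpolation step can be sketched as follows. The code assumes the common form in which the approximation is a weighted sum of multiquadric basis functions centred at the training points, so that the weights β solve an n-by-n linear system; the function names and the default value of c are hypothetical.

```python
import numpy as np

def multiquadric(r, c):
    """Multiquadric basis function (r^2 + c^2)^(1/2)."""
    return np.sqrt(r ** 2 + c ** 2)

def fit_rbf(x_train, y_train, c=1.0):
    """Solve the n-by-n interpolation system for the weights beta."""
    # Pairwise Euclidean distances between training points.
    r = np.linalg.norm(x_train[:, None, :] - x_train[None, :, :], axis=-1)
    return np.linalg.solve(multiquadric(r, c), y_train)

def predict_rbf(x_new, x_train, beta, c=1.0):
    """Evaluate the RBF surrogate at new points of shape (m, n_vars)."""
    r = np.linalg.norm(x_new[:, None, :] - x_train[None, :, :], axis=-1)
    return multiquadric(r, c) @ beta
```

Because the system is square, the surrogate reproduces the training responses exactly (up to round-off), which is what makes this an interpolating process; the parameter c is the quantity tuned by LOO cross-validation in Section 3.4.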

A.3 Kriging

For computer experiments, kriging is viewed from a Bayesian perspective where the response is regarded as a realization of a stationary random process. The general form of this model is expressed as:

where \(f_j\), j = 1, ..., k, are assumed to be known functions, \(\beta_j\) are unknown constants to be estimated, and Z(·) is a stochastic process, commonly assumed to be Gaussian, with mean zero and covariance

where \(\sigma^2\) is the process variance. In practice, the linear model component in (20) is often reduced to only an intercept b, since including a more complex linear model does not necessarily yield a better prediction.
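For orientation, the kriging model and covariance described in this subsection are commonly written in the form below. This is the standard textbook form and is given as an assumption, since the displayed equations are not reproduced in this text; the correlation function R and the index m over the N input variables are notation introduced for this sketch, and the Gaussian correlation is the one whose parameter θ is tuned in Section 3.4.

```latex
% Regression part plus a zero-mean stationary stochastic process
y(x) = \sum_{j=1}^{k} \beta_j f_j(x) + Z(x)

% Covariance of the process between two sites
\operatorname{Cov}\left[ Z(x_i), Z(x_j) \right] = \sigma^2 R(x_i, x_j)

% Gaussian correlation function with parameters \theta_m > 0
R(x_i, x_j) = \exp\left( - \sum_{m=1}^{N} \theta_m \left( x_{i,m} - x_{j,m} \right)^2 \right)
```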

A.4 ε-SVR

Given the data set \(\{(x_1, y_1), \ldots, (x_l, y_l)\}\) (where l denotes the number of samples) and the kernel matrix \(K_{ij} = K(x_i, x_j)\), and if the loss function in SVR is the ε-insensitive loss function

then the ε-SVR is written as:

The Lagrange dual model of the above model is expressed as:

where \(K\left( {\cdot ,\cdot } \right)\) is the kernel function. Once the parameters \(\alpha^{(*)}\) have been solved for, the regression function f(x) can be obtained.
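For reference, the ε-insensitive loss, the primal problem, the Lagrange dual, and the resulting regression function are commonly written as follows. This is the standard textbook formulation, stated here as an assumption because the displayed equations are not reproduced in this text; the weight vector w, offset b, feature map Φ, and slack variables ξ are notation introduced for this sketch.

```latex
% epsilon-insensitive loss
\left| y - f(x) \right|_{\varepsilon} = \max\left( 0, \left| y - f(x) \right| - \varepsilon \right)

% Primal problem
\min_{w, b, \xi, \xi^{*}} \; \frac{1}{2} \| w \|^2 + C \sum_{i=1}^{l} \left( \xi_i + \xi_i^{*} \right)
\quad \text{s.t.} \quad
\begin{cases}
y_i - \langle w, \Phi(x_i) \rangle - b \le \varepsilon + \xi_i \\
\langle w, \Phi(x_i) \rangle + b - y_i \le \varepsilon + \xi_i^{*} \\
\xi_i, \, \xi_i^{*} \ge 0, \quad i = 1, \ldots, l
\end{cases}

% Lagrange dual
\max_{\alpha, \alpha^{*}} \; - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l}
\left( \alpha_i - \alpha_i^{*} \right) \left( \alpha_j - \alpha_j^{*} \right) K(x_i, x_j)
- \varepsilon \sum_{i=1}^{l} \left( \alpha_i + \alpha_i^{*} \right)
+ \sum_{i=1}^{l} y_i \left( \alpha_i - \alpha_i^{*} \right)
\quad \text{s.t.} \quad
\sum_{i=1}^{l} \left( \alpha_i - \alpha_i^{*} \right) = 0, \quad
0 \le \alpha_i, \alpha_i^{*} \le C

% Regression function
f(x) = \sum_{i=1}^{l} \left( \alpha_i - \alpha_i^{*} \right) K(x_i, x) + b
```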

Appendix B: Box plots

In a box plot, the box is defined by the lower quartile (25%), median (50%), and upper quartile (75%) values. Two lines extend from the ends of the box, and their upper and lower limits are defined as follows:

where Q1 is the value at the lower quartile, Q3 is the value at the upper quartile, IQR = Q3 − Q1, and \(X_{minimum}\) and \(X_{maximum}\) are the minimum and maximum values of the data. Outliers are data with values beyond the ends of the lines; each is marked with a “+” sign.
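The quantities named above can be computed as in the sketch below. The whisker limits are assumed to follow the common convention of 1.5 times the IQR beyond the quartiles, capped at the data extremes; the factor 1.5 is not stated in this text, and the function name is hypothetical.

```python
import numpy as np

def box_plot_stats(data, whisker=1.5):
    """Quartiles, whisker limits, and outliers of a one-dimensional sample."""
    data = np.asarray(data, dtype=float)
    q1, median, q3 = np.percentile(data, [25, 50, 75])
    iqr = q3 - q1
    upper = min(q3 + whisker * iqr, data.max())   # upper limit, capped at the maximum
    lower = max(q1 - whisker * iqr, data.min())   # lower limit, capped at the minimum
    outliers = data[(data < lower) | (data > upper)]
    return {"Q1": q1, "median": median, "Q3": q3,
            "lower": lower, "upper": upper, "outliers": outliers}
```

A long whisker or many outliers for a model in Figs. 1–4 therefore indicates that its error metric varies strongly from one training set to another.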

Cite this article

Zhou, X.J., Ma, Y.Z. & Li, X.F. Ensemble of surrogates with recursive arithmetic average. Struct Multidisc Optim 44, 651–671 (2011). https://doi.org/10.1007/s00158-011-0655-6

DOI: https://doi.org/10.1007/s00158-011-0655-6