Abstract

Normal Boundary Intersection (NBI) and (Enhanced) Normalised Normal Constraint (E)NNC are attractive and popular approaches to generate an approximation of the Pareto set in nonlinear multi-objective optimisation problems. All three methods are based on similar ideas, but do not always yield identical results, which may confuse practitioners. Hence, the current paper provides theoretical insights in the conditions under which identical results are obtained. Typically, NBI and ENNC are able to generate the same candidate Pareto points, if all additional inequalities in the ENNC subproblem are active. In general, NBI and NNC do not return the same points when three or more objectives are considered. Equivalence relations between the resulting lagrange multipliers for the additional NBI and ENNC (in)equality constraints have been derived. Moreover, the obtained relations have lead to novel criteria for detecting non-Pareto optimal points that in adverse situations maybe generated by these methods. The major advantage is that the removal criteria do not rely on a time-consuming pairwise comparison but only need matrix multiplications. A Matlab implementation has been added for completeness. The insights are illustrated for a general nonlinear bi-objective and three-objective optimisation problem, and a dynamic three-objective tubular reactor optimisation problem from chemical engineering. Finally, practical guidelines are added.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In many engineering optimisation problems multiple and conflicting objectives are present (Marler and Arora 2004). This gives rise to a set of Pareto optimal solutions instead of a single optimum (Miettinen 1999). To generate the Pareto set scalarisation approaches are often used. These convert the multi-objective optimisation problem (MOOP) in a series of parametric single objective optimisation problems (Marler and Arora 2004). The most common scalarisation approach is still the convex Weighted Sum (WS) of the individual objectives. However, during the last fifteen years, scalarisation approaches as Normal Boundary Intersection (NBI) (Das and Dennis 1998) and (Enhanced) Normalised Normal Constraint ((E)NNC) (Messac et al. 2003; Messac and Mattson 2004; Sanchis et al. 2008) have been reported to mitigate the drawbacks of the WS (Das and Dennis 1997). Moreover, NBI and (E)NNC can be combined with gradient-based deterministic optimisation routines allowing to find (at least locally) optimal solutions to large-scale and highly constrained multi-objective optimisation problems in a fast and efficient way (e.g., Logist et al. 2010a).

Although there exists a high number of similarities between NBI and (E)NNC, the authors observed that these approaches do not always provide identical results. These differences can confuse practitioners when they compare results from different methods. The main goal of the paper is to provide theoretical insights in the conditions under which NBI and (E)NNC yield the same solutions. An important additional result is that criteria have been elaborated for identifying non-Pareto optimal points that may be generated by these methods (Messac et al. 2003). Finally, also practical guidelines are provided.

The paper is structured as follows. Section 2 introduces the NBI and (E)NNC methods using a uniform notation. Section 3 provides theoretical considerations for novel insights (i.e., equivalence conditions and removal criteria). The obtained knowledge is illustrated on three case-studies in Section 4. Finally, Section 5 summarises practical guidelines for practitioners.

2 Mathematical formulation and methods

2.1 Preliminaries

An MOOP can be formulated as follows:

with m ≥ 2. Here \(f_i(\mathbf{x}): \mathbb{R}^n \rightarrow \mathbb{R}\) denote the individual objective functions, which are grouped into the cost vector \(\mathbf{f(\mathbf{x})} = [f_1(\mathbf{x}),f_2(\mathbf{x}),\ldots,f_m(\mathbf{x})]^\intercal\) : ℝn →ℝm. The vectors \(\mathbf{g} = [g_1(\mathbf{x}), g_2(\mathbf{x}),\ldots, g_{n_{\mathrm{ineq}}} (\mathbf{x})]^\intercal\) : ℝn →ℝand h = [h 1(x), h 2(x), \(\ldots, h_{n_{\mathrm{eq}}} (\mathbf{x})]^\intercal \) : ℝn →ℝrepresent the inequality and equality constraints, respectively. Hence, the feasible decision space is \(\mathcal{S} = \{ \mathbf{x}: \mathbf{g}(\mathbf{x}) \leq 0 \mathrm{~and~} \mathbf{h}(\mathbf{x}) = 0\}\) and its mapping into the cost space yields the feasible cost space \(\cal{J} = \{ \mathbf{f}(\mathbf{x}): \mathbf{x} \in \cal{S} \}\).

The concept of Pareto optimality is adopted as optimality criterion for multi-objective optimisation (Miettinen 1999). However, computational methods for general nonlinear multicriteria optimisation can at best guarantee local Pareto optimality, i.e., Pareto optimality in a certain neighbourhood (Das and Dennis 1998).

A point \(\mathbf{x}^* \in \cal{S}\), is Pareto optimal if there does not exist another point \(\mathbf{x} \in \cal{S}\), such that \(f_i(\mathbf{x}) \leq f_i(\mathbf{x}^*)\) for all i and \(f_i(\mathbf{x}) < f_i(\mathbf{x}^*)\) for at least one objective function (Miettinen 1999).

Pareto optimality refers to the decision space. The equivalent concept in the cost space is (non-)dominance (Miettinen 1999).

A point \(\mathbf{f}(\mathbf{x}^*) \in \cal{J}\) is non-dominated if there does not exist another point, \(\mathbf{f}(\mathbf{x}) \in \cal{J}\), such that \(f_i(\mathbf{x}) \leq f_i(\mathbf{x}^*)\) for all i with at least one \(f_i(\mathbf{x}) < f_i(\mathbf{x}^*)\). Otherwise, f(x *) is dominated.

Furthermore, the following items are introduced:

-

the minimiser \(\mathbf{x}^*_i\) of i-th cost function f i (x),

-

the utopia point \(\mathbf{f}^*=[f_1^*,f_2^*,\ldots,f_m^*]^\intercal\) which contains the minima of the individual objective functions \(f_i(\mathbf{x}_i^*)\),

-

the individual minima cost vectors \(\mathbf{f}(\mathbf{x}_i^*)\), which is the cost vector evaluated for the individual minimiser \(\mathbf{x}_i^*\),

-

the pay-off matrix \(\boldsymbol\Phi\), whose i-th column is \(\mathbf{f}(\mathbf{x}^*_i)-\mathbf{f}^*\), and

-

a scalarisation parameter or weight vector w = \([w_1,w_2,\ldots,w_m]^\intercal\) ∈ ℝ(with \(\sum_{i=1}^{m}w_i=1\)).

In addition, let \(\mathbf{e} =[1,1,...,1]^\intercal \in \mathbb{R}^m\) be the m-dimensional vector containing all ones, \(\mathbf{e}_i = [0,\ldots,0,1,0,\ldots,0]^\intercal\) ∈ ℝm the i-th natural basis vector and \(\mathbf{e}'_i = \mathbf{e} - \mathbf{e}_i \in \mathbb{R}^m\), the vector corresponding to the i-th vertex that contains exactly one zero in an m-dimensional unit hypercube. Let \(\mathbf{E} = [\mathbf{e}'_1,\mathbf{e}'_2,\ldots,\mathbf{e}'_m] \in \mathbb{R}^{m \times m}\) be the matrix with as columns the m vertices of an m-dimensional unit hypercube that contain exactly one zero (see Fig. 1).

2.2 Normal Boundary Intersection

The Normal Boundary Intersection method reformulates the original MOOP in the following way (Das and Dennis 1998):

Here \({\boldmath {\Phi}}\mathbf{w}\) indicates a point on the hyperplane containing all individual minima and \(-l {\boldmath {\Phi}} \mathbf{e}\) describes the (quasi-)normal direction to this plane. The rationale behind the method is that the intersection between the (quasi-)normal from any point \({\boldmath {\Phi}}\mathbf{w}\) and the boundary of the feasible cost space closest to the utopia point is expected to be Pareto optimal. To this end, (4) introduces the maximisation of the length l along the (quasi-)normal described by m additional equality constraints described by (7).

2.3 (Enhanced) Normalised Normal Constraint

(Enhanced) Normalised Normal Constraint reformulates the original MOOP in an alternative way:

where ¯ indicates variables based on normalised objectives. The rationale is to minimise the single most important objective described by (8), while reducing the feasible cost space by adding m − 1 hyperplanes given by (11) that are orthogonal to the plane through the (normalised) individual minima. Normalisation can be achieved by first shifting the objectives such that the utopia point coincides with the origin and afterwards pre-multiplying them with a matrix T ∈ ℝm ×m:

Because Messac and Mattson (2004) considered only the shifting and scaling of the individual objectives in the classic Normalised Normal Constraint method (NNC), the matrix T is diagonal. The diagonal elements are the following:

where \(f_i^{\square} = \max \{ f_i(\mathbf{x}^*_j), j=1,\ldots,m\}\) is the maximum for objective function i for the set of individual minimisers \(\mathbf{x}^*_j\). In their Enhanced Normalised Normal Constraint method (ENNC), Sanchis et al. (2008) introduce a different matrix T for a three-objective case based on the solution of a system of 9 linear equations. This matrix T can be generalised as follows:

with E the matrix containing zeros on the diagonal and ones on the off-diagonal. As \(\overline{{\boldmath {\Phi}}} = \mathbf{T} {\boldmath {\Phi}} = \mathbf{E} {\boldmath {\Phi}}^{-1} {\boldmath {\Phi}} = \mathbf{E}\), it is clear that the normalisation based on (14) maps the individual minima to the m vertices of an m-dimensional unit hypercube that each contain exactly one zero.

3 Novel insights from theoretical considerations

In the current section conditions are derived under which NBI and ENNC return the same candidate Pareto optimal solution. In a first step, the conditions for equivalence are derived in Section 3.1 for a normalised case. Here, normalised means that the individual minima are located at the m vertices of an m-dimensional unit hypercube which contain each exactly one zero element. In the second step, it is shown in Section 3.2 that ENNC and NBI are invariant with respect to the normalisation \(\overline{\mathbf{f}}(\mathbf{x}) = \mathbf{T} (\mathbf{f}(\mathbf{x})-\mathbf{f}^*)\). The results do not change by mapping the original cost functions to the m vertices of an m-dimensional unit hypercube which each contain exactly one zero element. Based on these conditions practical procedures are elaborated in Section 3.3 for the removal of candidate solutions returned by NBI and ENNC that are non-Pareto optimal. The main advantage is that only matrix multiplications are required and no pairwise comparisons have to be performed.

3.1 Equivalence in an m-dimensional unit hypercube

The necessary conditions are presented under which the NBI and ENNC subproblems allow the same stationary point in a unit hypercube (when solved to global optimality). Here, a stationary point indicates a point satisfying the first-order conditions for optimality (also called Karush-Kuhn-Tucker or KKT conditions). However, due to the general nonlinear (and, thus, possibly multi-modal) character of the optimisation problems, it cannot be guaranteed that an actual optimisation run with fast gradient based deterministic solvers will return identical stationary points.

Remark 1

If different points are returned by NBI and ENNC in an actual optimisation run, a practical check is to restart the multi-objective optimisation method that gave the worse of both solutions with the better one of both solutions as initial guess. This test indicates whether at least locally the same stationary point is possible.

As a stationary point satisfies the KKT-conditions, the proof is based on a comparison of these conditions for the ENNC and NBI subproblems. To cast everything within a mimisation framework, the maximisation of l in the NBI is replaced by the minimisation of − l. Hence, the lagrangian for NBI is:

with lagrange multipliers \({\boldmath {\lambda}}_{\textrm{\tiny{NBI}}} \in \mathbb{R}^{n_{\mathrm{eq}}}\), \({\boldmath {\mu}}_{\textrm{\tiny{NBI}}} \in \mathbb{R}_+^{n_{\mathrm{ineq}}}\) and \({\boldmath {\nu}}_{\textrm{\tiny{NBI}}} \in \mathbb{R}^{m}\). The KKT conditions require the existence of a solution \(\mathbf{x}^*_{\textrm{\tiny{NBI}}}\), \(l^*_{\textrm{\tiny{NBI}}}\), \({\boldmath {\lambda}}^*_{\textrm{\tiny{NBI}}}\), \({\boldmath {\mu}}^*_{\textrm{\tiny{NBI}}}\), \({\boldmath {\nu}}^*_{\textrm{\tiny{NBI}}}\) satisfying:

Equation (16) gives rise to

The assumption that all additional inequalities specified by (11) are active, yields the following lagrangian for ENNC:

with lagrange multipliers \({\boldmath {\lambda}}_{\textrm{\tiny{ENNC}}} \in \mathbb{R}^{n_{\mathrm{eq}}}\), \({\boldmath {\mu}}_{\textrm{\tiny{ENNC}}} \in \mathbb{R}_+^{n_{\mathrm{ineq}}}\) and \({\boldmath {\nu}}_{\textrm{\tiny{ENNC}}} \in \mathbb{R}^{m-1}\). Hence, any solution \(\mathbf{x}^*_{\textrm{\tiny{ENNC}}}\), \({\boldmath {\lambda}}^*_{\textrm{\tiny{ENNC}}}\), \({\boldmath {\mu}}^*_{\textrm{\tiny{ENNC}}}\), \({\boldmath {\nu}}^*_{\textrm{\tiny{ENNC}}}\) has to satisfy the KKT conditions:

where (23) is

In order to prove the equivalence, for every solution \((\boldmath{x}^*_{\textrm{\tiny{NBI}}}, l^*_{\textrm{\tiny{NBI}}}, {\boldmath {\lambda}}^*_{\textrm{\tiny{NBI}}}, {\boldmath {\mu}}^*_{\textrm{\tiny{NBI}}}, {\boldmath {\nu}}^*_{\textrm{\tiny{NBI}}})\) of the NBI problem, an equivalent solution \((\boldmath{x}^*_{\textrm{\tiny{ENNC}}}, {\boldmath {\lambda}}^*_{\textrm{\tiny{ENNC}}}, {\boldmath {\mu}}^*_{\textrm{\tiny{ENNC}}},{\boldmath {\nu}}^*_{\textrm{\tiny{ENNC}}})\) for the ENNC problem exist.

The assumption that the solutions \(\mathbf{x}^*_{\textrm{\tiny{NBI}}} = \mathbf{x}^*_{\textrm{\tiny{ENNC}}}\) are the same, induces that the original equalities h(x) and inequalities g(x) are satisfied and that the same inequalities are active. Hence, based on (19) and (26) the lagrange multipliers \({\boldmath {\mu}}^*_{\textrm{\tiny{NBI}}}\) and \({\boldmath {\mu}}^*_{\textrm{\tiny{ENNC}}}\) are proportional, i.e., \({\boldmath {\mu}}^*_{\textrm{\tiny{NBI}}} = a {\boldmath {\mu}}^*_{\textrm{\tiny{ENNC}}}\) with a ∈ ℝ + 0. Consequently, only the equivalence of (i) the additional constraints described by (20) and (27) and (ii) the lagrange gradients given by (21) and (28) needs to be checked.

All individual minima are located at the vertices of an m-dimensional unit hypercube that contain one zero: \(\mathbf{f}(\mathbf{x}^*_i) = \mathbf{e}'_i = \mathbf{e} - \mathbf{e}_i\). This results in the utopia vector \(\mathbf{f}^*= \overline{\mathbf{f}}^* = \mathbf{0}\), the matrix T = I, the cost vector \(\mathbf{f}(\mathbf{x}) = \overline{\mathbf{f}}(\mathbf{x})\) and the pay-off matrix \({\boldmath {\Phi}} = \overline{{\boldmath {\Phi}}} = \mathbf{E}\). Hence, all objective functions in (16)–(21), can be replaced by their normalised versions. To indicate that the optimisation is performed in the normalised space, the normalised lagrange multipliers and length l are denoted by ¯.

Equivalence of the additional constraints

The m additional equality constraints in NBI resulting from (20) can be reformulated as:

The optimal value for the length along the quasi-normal is determined as:

Eliminating this variable from (30) yields m − 1 constraints of the form:

Alternatively, the m − 1 additional and active inequality constraints in ENNC originating from (27) are:

This expression leads to:

which is the same as (32). Clearly, the additional inequality constraints in ENNC are identical to the additional equalities imposed in NBI, under the assumption that they are active.

Equivalence of the lagrangian gradients

For NBI, the second line of (21) is rewritten as:

from which the last lagrange multiplier is eliminated:

Substituting this variable in the first line of (21) and rewriting the result yields:

Alternatively, based on the normalised setting, (28) for ENNC yields:

which simplifies to:

Hence, when selecting the lagrange multipliers as: \( \overline{{\boldmath {\lambda}}}^*_{\textrm{\tiny{NBI}}} = \frac{1}{m-1} \overline{{\boldmath {\lambda}}}^*_{\textrm{\tiny{ENNC}}}\), \(\overline{{\boldmath {\mu}}}^*_{\textrm{\tiny{NBI}}} = \frac{1}{m-1} \overline{{\boldmath {\mu}}}^*_{\textrm{\tiny{ENNC}}}\) and \(\overline{\nu}^*_{\textrm{\tiny{NBI}},i} = \frac{1}{m-1} \overline{\nu}^*_{\textrm{\tiny{ENNC}},i}\) for i = 1,...,m − 1, (38) and (40) are the same provided that the ENNC and NBI solutions are the same, i.e., \(\mathbf{x}^*_{\textrm{\tiny{NBI}}} = \mathbf{x}^*_{\textrm{\tiny{ENNC}}}\). Note that, as required, the lagrange multipliers \(\overline{{\boldmath {\mu}}}^*_{\textrm{\tiny{NBI}}}\) and \(\overline{{\boldmath {\mu}}}^*_{\textrm{\tiny{ENNC}}}\) are proportional with a positive constant \(\frac{1}{m-1}\). Moreover, based on (37), it directly follows that:

Consequently, it is concluded that for a multi-objective optimisation problem in a normalised setting, any stationary point for a specific ENNC subproblem is also a stationary point for the corresponding NBI subproblem, provided that all additional inequality constraints for ENNC are active.

3.2 Invariance of NBI and ENNC under normalisation

Here, it is shown that the results of NBI and ENNC are invariant under the normalisation T(f(x) − f *) with T non-singular. Hence, shifting, rescaling and rotating the multi-objective problem to an m-dimensional unit hypercube will not change the resulting stationary points and, hence, the candidate Pareto solutions.

Clearly, as ENNC intrinsically maps the m objectives to an m-dimensional unit hypercube, a prior normalisation will not affect the resulting candidate points. As mentioned already in the original paper by Das and Dennis (1998), NBI is invariant with respect to shifting and scaling. However, this property can be extended to the normalisation \(\overline{\mathbf{f}} = \mathbf{T} (\mathbf{f}-\mathbf{f}^*)\). Taking into account that \(\mathbf{f} - \mathbf{f}^* = \mathbf{T}^{-1} \overline{\mathbf{f}}\), \(\overline{\mathbf{f}}^* = \mathbf{0}\), \({\boldmath {\Phi}} = \mathbf{T}^{-1} \overline{{\boldmath {\Phi}}}\) and \(\nabla_{\mathbf{x}} \mathbf{f} = \nabla_{\mathbf{x}} \overline{\mathbf{f}} (\mathbf{T}^{-1})^{^{\intercal}}\) the KKT conditions described by (16) to (21) for NBI are rewritten as follows:

When choosing the multipliers \({\boldmath {\mu}}^*_{\textrm{\tiny{NBI}}} = \overline{{\boldmath {\mu}}}^*_{\textrm{\tiny{NBI}}}\), \({\boldmath {\lambda}}^*_{\textrm{\tiny{NBI}}} = \overline{{\boldmath {\lambda}}}^*_{\textrm{\tiny{NBI}}}\) and \((\mathbf{T}^{-1})^{^{\intercal}} {\boldmath {\nu}}^*_{\textrm{\tiny{NBI}}} = \overline{{\boldmath {\nu}}}^*_{\textrm{\tiny{NBI}}}\), the set of (42) to (47) corresponds to the KKT conditions for the NBI problem with normalised objective functions. Hence, the same stationary point is returned by both the original NBI and its variant which first normalises all objectives, i.e., maps them to an m-dimensional unit hypercube. The corresponding lagrange multipliers are the same, except for the multipliers of the additional equality constraints, which are related through the matrix T.

Remark 2

It has been shown that ENNC and NBI subproblems yield the same solutions when all additional inequalities for ENNC are active. However, it should be noted that this result is in general not true for the original NNC method. For bi-objective problems NNC and ENNC are identical and no differences with NBI will be observed. However, for three or more objectives NNC and ENNC yield the same results only when the different objective values \(f_i(\mathbf{x}^*_j)\) for the individual minimisers \(\mathbf{x}^*_j\) are identical, except when i equals j. In this situation, the original problem can be mapped to a unit hypercube by shifting and scaling only (i.e., without rotating), and the matrix T will be diagonal.

Remark 3

Equation (50) allows making the connection from ENNC to the corresponding weights \(w_{\mathrm{\tiny{WS}},i}\) of a WS via (Das and Dennis 1998):

Remark 4

For practical reasons, the vector

is called the augmented vector of ENNC multipliers. In addition, the vectors

are introduced to facilitate comparisons and further derivations.

As a result, (50) is rewritten as:

3.3 Implications for the removal of non-Pareto optimal points from NBI and ENNC candidate solutions

It is well-known that in adverse situations NBI and (E)NNC can generate non-Pareto optimal points (Das and Dennis 1998; Messac et al. 2003). To mitigate this situation, a Pareto filter algorithm based on a pairwise comparison of the generated candidate points has been proposed by Messac et al. (2003). However, this approach has some drawbacks as observed by the authors. Due to the discrete nature of the candidate set, the exact boundary of the Pareto set may be hard to determine and non-Pareto optimal points close to the boundary may be retained (see, e.g., Section 4.1.3). Also the algorithm becomes time consuming for large sets of candidate points. Hence, any a priori criterion that can assist in removing dominated points is welcome.

Due to the less restrictive additional inequalities, (E)NNC is able to avoid some of the dominated points that are returned by NBI (caused by to the more strict additional equalities). This is typically achieved by leaving one (or more) of the additional hyperplanes constraining the feasible criterion space and making the corresponding inequality constraint(s) inactive. The corresponding multiplier(s) \(\overline{{\boldmath {\nu}}}^*_{\textrm{\tiny{ENNC}}}\) are then no longer strictly positive but become zero (see, e.g., Section 4.1.2).

The rationale behind the removal criteria is that in general a candidate solution which is not locally Pareto optimal will leave the constraining hyperplanes determined by ENNC. This tendency can be checked via the lagrange multipliers and relation (50). When evaluating a candidate solution generated by NBI or ENNC, it is assumed that the candidate will not leave the constraints set by ENNC and that all additional ENNC inequalities are active. Hence, the candidate can have been generated by both NBI and ENNC. Moreover, relation (50) holds and the first m − 1 elements of both the left and right hand side have to be positive. Based on the multipliers returned by the optimiser (i.e., \({{\boldmath {\nu}}}^*_{\mathrm{\tiny{NBI}}}\) and \({\boldmath {\nu}}^*_{\mathrm{\tiny{ENNC}}}\)) the left and right hand side vectors of relation (50) can be computed, i.e., \(\hat{{\boldmath {\nu}}}^*_{\mathrm{\tiny{NBI}}}\) and \(\hat{{\boldmath {\nu}}}^*_{\mathrm{\tiny{ENNC}}}\). If the positivity of the first m − 1 elements of these vectors is not satisfied, the assumption was wrong. Consequently, the candidate can be removed as a possible Pareto optimal solution.

However, whether or not a possible solution leaves the constraints imposed by ENNC also depends on the behaviour of the selected objective f m in the admissible directions given by the corresponding additional inequalities determined by the selection of m. As a result, m cases have to be checked, i.e., one for each objective f k as selected objective f m . However, no additional optimisations are needed to check this but only matrix multiplications.

To this end, the following permutation matrices:

and \(\mathbf{P}^{\intercal}\) are exploited. The product PM yields a matrix in which all the rows of the matrix M are shifted down one row and the last row becomes the first. Similarly, the product \(\mathbf{M}\mathbf{P}^{\intercal}\) shifts all columns of M one column to the right and puts the last column first. Of course, this procedure can be repeated, e.g., pre-multiplying with PP shifts all rows two rows down and puts the second to last and the last rows in the first and second place, respectively. An important feature of permutation matrices is that they are orthogonal, i.e., \(\mathbf{P}^{\intercal} = \mathbf{P}^{-1}\).

Assume that x [m]* is obtained for an objective sequence [f 1,f 2,...,f m ] with corresponding multipliers \(\overline{{\boldmath {\nu}}}^{[m]*}_{\mathrm{\tiny{NBI}}}\) for NBI and \(\overline{{\boldmath {\nu}}}^{[m]*}_{\mathrm{\tiny{ENNC,aug}}}\) for ENNC. The corresponding pay-off and rotation matrices are \(\boldsymbol{\Phi}^{[m]}\) and T [m], respectively. For a sequence [f m ,f 1,f 2,...,f m − 1] the objective to be minimised is f m − 1 and the pay-off and rotation matrices can be computed as follows:

Still under the assumption that all additional equations set by ENNC are active (which is always so for NBI), it can be shown that the following equations hold:

These formulae can be applied recursively to account for all m possible choices. However, each time the m − 1 first elements of these vectors have to be positive, which can be summarised as follows.

Remark 5

These criteria are easily implemented requiring about 10 lines of Matlab code (see Appendix). This code is also made available as Supplementary Material to this article. Note that the criterion is only based on local information. It can help for a first removal of local non-Pareto optimal candidates, before using the Pareto filter which acts globally. However, computations can be done efficiently as only matrix multiplications are required and the computation time is only linear in the number of candidates. Moreover, the criterion can be applied without knowledge about the other candidates.

4 Results

In this section the results from the theoretical considerations are illustrated. First, a bi-objective case is discussed in Section 4.1 as this allows a clear explanation and visualisation of the insights gained. Second, the extension is made to a more general three-objective case in Section 4.2. The aim is to illustrate: (i) the sets of candidate solutions generated by the different methods, (ii) the equivalence conditions and (iii) the application of the removal criteria. Afterwards, a complex dynamic optimisation problem involving a tubular chemical reactor is studied (Section 4.3).

4.1 Case 1

As a first example, the bi-objective case studied by Messac et al. (2003) is revisited:

The criterion space is depicted in Fig. 2, in which the infeasible region is coloured in gray. The segments from A to B and D to E comprise the global Pareto front. The region from C to D includes local Pareto optimal points. Points in the segment from B to C are non-Pareto optimal.

4.1.1 Candidate solutions

Candidate solutions are generated with all three methods (i.e., NBI, NNC and ENNC) for the two possible sequences of objective functions (i.e., [f 1,f 2] and [f 2,f 1]). As in Messac et al. (2003) 60 uniformly distributed w vectors are taken. The results for the different methods can be seen in Fig. 3. For the sequence [f 1,f 2] identical results as in Messac et al. (2003) are obtained for NBI and NNC. Because there are only two objectives NNC and ENNC provide identical candidate solutions, which is indicated by the coinciding circles ∘ and triangles \(\triangle\). As indicated by the crosses ×, NBI returns solutions that are non-Pareto optimal (in segment BC) and only locally Pareto optimal (in segment CD) for both sequences. However, depending on the sequence of objective functions used, NNC and ENNC can avoid some of the non-Pareto points from segment BC.

This aspect is clarified in Fig. 4 which elaborates on the solution of the NBI and (E)NNC subproblems. NBI reduces the feasible region by constraining a solution to be on the (quasi-)normal. This is indicated by coloring the entire feasible region gray except for the (quasi-)normal. Moreover, NBI aims for a solution as close as possible to the origin without going infeasible, which is indicated by the arrow. As a result, the cross ×, lying in the non-Pareto optimal segment is obtained. Also this result is independent of the sequence of objectives used. Alternatively, (E)NNC reduce the feasible region by adding inequality constraints, represented by gray halfplanes. These methods aim to minimise a selected objective (f 2 for the sequence [f 1,f 2] and f 1 for the sequence [f 2,f 1]) which is indicated by the arrow. They return as a candidate point the filled circles ∙. By leaving the additional constraining halfplanes, these methods are able to escape from the non-Pareto optimal points. This leaving is for instance observed when minimizing f 2. Alternatively, when minimizing f 1 for the same case, it is seen that leaving the constraining halfplane is impossible. This fact can be attributed to the monotonous decrease of the f 1 value when following the border of the feasible region. Clearly f 2 does not exhibit this monotonous behaviour.

4.1.2 Equivalence conditions

As NNC and ENNC yield identical results for bi-objective problems, only results for ENNC and NBI are compared. Figures 5 and 6 depict the left and right hand side of (50) for both objective function sequences. The equivalence relation holds whenever the additional ENNC constraints are active as indicated by the coinciding \(\square\) and + markers. Also the ENNC and NBI solutions coincide. However, the results differ when \(\hat{\nu}_1\) multiplier becomes negative for NBI (⋆), while the corresponding variable for ENNC (\(\Diamond\)) is zero. Here, the solution has left the constraining halfplane in the ENNC subproblem in order to avoid a non-Pareto optimal solution. This is not possible in NBI due to the constraining (quasi-)normal. A classification of the candidate points in the criterion space according to their \(\hat{\nu}_1\)-value is also depicted. It is seen that points with a negative \(\hat{\nu}_1\)-value can be removed. However, it should be noted that the \(\hat{\nu}_1\)-value depends on the selected sequence. NBI produces the same candidate points for both sequences. However, when using [f 2,f 1] as a basis for removal, it is seen that \(\hat{\nu}_1\) is always positive. Hence, non-Pareto optimal candidate points that are removed when using [f 1,f 2] as a basis, are not removed now.

4.1.3 Removal criteria

Based on the previous section, checking the positivity of \(\hat{\nu}_1\) is a fair criterion for detecting non-Pareto optimal points. But this check has to be performed for both objective sequences. Fortunately, no additional optimisations are needed as analytical relations can be used. For Fig. 7 the set of candidate solutions generated by NBI and ENNC for the sequence [f 2,f 1] has been taken. As mentioned, it is not possible to remove any of the non-Pareto optimal solutions when solely looking at the multipliers \(\hat{\nu}^{[1]*}_{\mathrm{\tiny{NBI}},1}\) and \(\hat{\nu}^{[1]*}_{\mathrm{\tiny{ENNC},1}}\). However, based on the (61) and (60) the corresponding values \(\nu^{[2]*}_{\mathrm{\tiny{NBI}},1}\) and \(\hat{\nu}^{[2]*}_{\mathrm{\tiny{ENNC},1}}\) for the sequence [f 1,f 2] can be computed. The figure depicts the \(\nu^{*}_{1}\)-values obtained from NBI and ENNC with the sequence [f 1,f 2] (\(\square\) and × markers), and the \(\nu^{*}_{1}\)-values obtained when applying the relations (61) and (60) to the candidates obtained from NBI and ENNC with the sequence [f 2,f 1] (\(\triangle\) and ∗ markers). Note that the latter values correspond to the first element of the vector obtained with relations (62) and (63) for j = 1. These values coincide when all additional constraints are active. Moreover, the computed values become negative for non-Pareto optimal points. This allows also removing non-Pareto optimal candidates generated by NBI and ENNC for the sequence [f 2,f 1] without additional optimisations. This observation corroborates the removal criteria (62) and (63).

The performance of the Pareto filter (PF) and removal criterion (RC) are compared for the candidate sets generated by ENNC and NBI for the sequence [f 2,f 1]. Also the combination is made in which the removal criterion is used followed by the Pareto filter (RC+PF). Results are displayed in Table 1. Each time the number of removed candidates (n p,rem) and the computational expense relative to the time required for the Pareto filter are given.

The Pareto filter looks for globally Pareto optimal solutions and removes 11 from the 60 candidates. On the other hand the removal criterion only acts locally and is able to remove five candidates, which are non-Pareto optimal. However, the removal criterion requires roughly only 2% of the time taken by the Pareto filter. The pairwise comparison in the Pareto filter is time consuming and the computation time grows faster than linearly. Hence, the removal criterion can be used as a first step to prune the set of candidate solution. In a second step the Pareto filter can be employed to further reduce the set of solutions to global Pareto optimal solutions. Interestingly this two-step procedure only takes around 85% of the CPU time that is required for solely applying the Pareto filter.

The retained solutions after post processing the candidate solutiuons with the PF, the RC and the combined RC+PF are indicated in Fig. 8 by the ×, ∘ and ∇ markers, respectively. In general, the removal criterion removes non-Pareto optimal points in the segment BC, but cannot remove the points in segment CD as these points are locally but not globally Pareto optimal. In contrast, the Pareto filter removes points that are not globally Pareto optimal in the regions BC and CD. None of the methods removes points in the global Pareto optimal regions AB and DE.

An interesting observation is that the combined RC and PF is able to remove one candidate more than the PF. When zooming in on the region around point B, it is seen that still one cross exist on the right side of this point, i.e., in the non-Pareto optimal segment BC. This point remains after filtering as the PF is only based on pairwise comparisons of the finite number of points in the candidate set. In contrast, the RC is able to remove this point because it only uses the lagrange multipliers for the point and not a set of candidate solutions.

4.2 Case 2: General three-objective problem

The second example extends the two-objective example presented by, e.g., Das and Dennis (1998) and Kim and de Weck (2005) by introducing an additional objective function f 3. As can be seen, this example is highly nonlinear due to the different objectives and constraints.

Each time the weight vector is uniformly constructed with a stepsize of 0.1. This results in a total of 66 subproblems of which the 1st, the 11th and the 66th, correspond to the minimisation of the individual objective functions f 1, f 2 and f 3, respectively.

4.2.1 Candidate solutions

Candidate solutions have been generated for all three methods (NNC, ENNC and NBI) and for all three sequences [f 1,f 2,f 3], [f 3,f 1,f 2] and [f 2,f 3,f 1]. In view of brevity, only the results for [f 1,f 2,f 3] are discussed.

Figure 9 displays the resulting 66 points in the 3D criterion space for each of the methods. In contrast to the original NNC (\(\triangle\)), ENNC (∘) provides exactly the same results as NBI (×) for the Pareto optimal points (when all additional inequalities are active). The differences between NNC on the one hand and ENNC on the other are due to the fact that more than two objectives have to be minimized and that the matrix T is not a diagonal matrix. As already mentioned by Das and Dennis (1998), NBI can return dominated solutions. On the other hand, NNC and ENNC are able to avoid this in the current situation. This is seen in the upper right corner of Fig. 9 where the \(\triangle\) and ∘ markers have moved away from the × markers to align at the border of the Pareto set.

It should be noted that the same results are obtained for the sequence [f 2,f 3,f 1]. However, for [f 3,f 1,f 2] the (ENNC) results are identical to the NBI results, as apparently it is not possible in this case to leave the constraining hyperplanes.

4.2.2 Equivalence conditions

As only ENNC and NBI can yield identical candidates, only the candidates obtained from these methods are further investigated. All additional lagrange multipliers for both methods are depicted as a function of the subproblem number in Fig. 10. The three plots present the multipliers related to the additional (in)equality constraints imposed by NBI and ENNC. Figure 10 shows that the values for these multipliers are indeed equal when all additional ENNC inequalities are active (i.e., when the first two multipliers \(\hat{\nu}_{1}\) and \(\hat{\nu}_{2}\) are larger than zero). The results are indicated for ENNC and NBI by the \(\square\) and + markers, respectively. Consequently, this illustrates that the derived relations between the multipliers of NBI and ENNC hold.

Figure 10 also depicts the resulting 66 candidate points for ENNC and NBI in the 3D criterion space but now they are classified based on the values of the lagrange multipliers. For NBI, a classification is made between points in which either none or at least one of the two first multipliers of \(\hat{{\boldmath{\nu}}}_{\mathrm{NBI }}\) is negative. In the latter situation a ⋆ marker is used. For ENNC, a similar classification is introduced based on whether or not at least one of the first two multipliers \(\hat{{\boldmath{\nu}}}_{\mathrm{ENNC}}\) related to the additional inequalities is equal to zero. In the latter situation, at least one of these inequalities is inactive. These situations are indicated by the \(\Diamond\) markers. It is noted that the cases for which ENNC has inactive constraints, correspond to cases for which NBI returns non-Pareto optimal solutions. Hence, ENNC is able to escape from these non-Pareto points by leaving at least one of the additional constraining hyperplanes and setting the corresponding inequality constraint(s) to inactive. These observations substantiate the generality of the equivalence relations as also cases with more than two objectives are correctly included.

4.2.3 Removal criteria

Comparing the performance of the Pareto filter and removal criterion is this time done based on the candidate sets generated by ENNC and NBI for the sequence [f 3,f 1,f 2]. Again also the combination (RC+PF) is studied. Computational results are given in Table 2, i.e., the number of removed candidates and the computational expense relative to the time taken by the Pareto filter. The remaining candidate solutions are displayed in Fig. 11.

Pareto filter removes six from the 66 candidates, while the removal criterion removes 13 points, including the six removed by the Pareto filter. Hence, in the current situation no local Pareto optimal points are found. The points that are removed are all non-Pareto optimal. However, the fact that the Pareto filter removes seven points less is because these seven lie close to the boundary of the Pareto set. As seen before, the exact boundary is hard to determine when using pairwise comparisons based on a finite and limited number of candidate points.

With respect to the computational time, the removal criterion requires roughly only 2% of the time taken by the Pareto filter. Moreover, although the removal criterion reduces the number of candidates by approximately 20%, the combination of removal criteria followed by the Pareto filter reduces the computation time by around 30%. Hence, the removal criteria are also applicable for cases with more than two objectives.

4.3 Case 3: Tubular reactor

As a third and final example a jacketed tubular reactor under steady-state conditions is considered. Inside the reactor an irreversible first-order reaction takes place. Figure 12 illustrates the reactor configuration of length L, with C the reactant concentration, P the product concentration, T the reactor temperature and T w the jacket temperature.

The mass and energy balances give rise to two coupled ordinary differential equations. The independent variable is the position z along the reactor (see, e.g., Logist et al. 2008). Three conflicting objectives can be considered as in Logist et al. (2010b): (i) a conversion cost, i.e., the reactant concentration at the outlet, (ii) an energy cost based on the heat recuperation via the jacket and (iii) an installation cost, which is proportional to the reactor length L. The aim is to find the optimal reactor length L and the optimal temperature profile for the jacket T w (z). For constructive reasons the reactor and the jacket temperature must be in between 280 and 400 K. The reactor length is bounded between 0.5 and 1. A lower limit of 0.85 is imposed on the conversion for economic reasons. For brevity, the reader is referred to Logist et al. (2008, 2010b) for the detailed model and parameter values.

This optimal control or dynamic optimisation problem is solved exploiting ACADO (Houska et al. 2011), a freely available toolkit for Automatic Control And Dynamic Optimisation (http://www.acadotoolkit.org). ACADO also includes scalarisation-based multi-objective optimisation techniques as WS, NBI, NNC and ENNC (Logist et al. 2010a). To solve the optimal control problem numerically, a multiple shooting technique with a piecewise constant control discretisation of 50 uniform pieces is used.

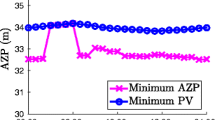

Figure 13 depicts the generated Pareto fronts. Again NBI (×) and ENNC (∘) yield identical results while slight differences are visible for the results based on NNC (\(\triangle\)). The different additional multipliers for NBI and ENNC are not depicted as they satisfy the relations specified by (48)–(50). In addition no non-Pareto optimal points have been found as neither the removal criterion, nor the Pareto filter is able to remove any of the candidates.

The different trade-offs can be recognised based on insight into the process. Higher reactor temperatures increase the reaction rates and, thus, the conversion. However, higher temperatures require more energy transfer from the jacket and increase the net energy cost. Longer reactors increase the residence times for the reactants in the reactor, and as a consequence, allow more product to be formed. Unfortunately longer reactors also have a higher installation cost. In addition, longer tubes recover on average more energy, but require a higher investment. Several Pareto points, i.e., the three individual minima as well as three intermediate points, are indicated with black markers. The optimal profiles for the jacket temperature corresponding to these Pareto points indicated in black are displayed in Fig. 14.

5 Practical guidelines

To conclude the following messages are given for practitioners with respect the methods.

-

NBI, NNC and ENNC all give an accurate and efficient approximation of the Pareto set. No systematic advantage in computational effort has been found for one of the three methods.

-

For bi-objective cases, all three methods will yield the same solution, while for more objectives only NBI and ENNC can give identical results. NNC and ENNC results will in these cases most often differ. The major underlying assumption for equivalence is always that all additional inequalities are active.

-

When the equivalence between ENNC and NBI holds, the results can also be related to weights for a corresponding WS optimisation.

-

NBI always generates the same set of candidate points, while the set generated by (E)NNC can depend on the selected objective J m to be minimised.

-

All methods can return non-Pareto optimal candidates, but NNC and ENNC will in general produce less non-Pareto optimal points due to the presence of the less restrictive additional inequalities compared to the more strict additional equalities in NBI.

With respect to the removal of possible non-Pareto optimal candidates the following messages are given.

-

Non-Pareto optimal points in the set of candidate solutions obtained with NBI and ENNC can be identified via the removal criteria for both methods. As these criteria only require simple matrix multiplications and no additional optimisations, they can be checked extremely fast.

-

These removal criteria can remove locally non-Pareto optimal candidate solutions close to the Pareto boundary that may be missed by the Pareto filter which is based on a pairwise comparison.

-

As these criteria are not based on the comparison of a set of candidates, they can be checked directly when the candidate is generated and also the outcome is independent of the number of points in the set.

-

However, the removal criteria look only locally while the Pareto filter takes a more global view. Hence, a first reduction based on the removal criteria followed by a further pruning based the Pareto filter is often a valuable strategy to reduce the computation time.

Based on the considerations above, the authors suggest that practitioners preferably try ENNC first, when solving their own multi-objective optimisation problems. The removal criterion can then be used to remove locally non-Pareto optimal candidate solutions. Afterwards, a Pareto filter can take the global view and ensure global Pareto optimality.

6 Conclusions

This paper has provided novel insights and practical implications for scalarisation-based multi-objective optimisation methods as Normal Boundary Intersection and (Enhanced) Normalised Constraint. It has been rigorously shown that NBI and ENNC generate the same candidate Pareto points if all additional inequalities in the ENNC subproblem are active. Relations between the resulting lagrange multipliers for the additional NBI and ENNC (in)equality constraints have been derived. Moreover, these relations have lead to a novel criteria for removing (part of) the non-Pareto optimal solutions that may be returned by NBI and ENNC. The presented criteria are able to remove the locally non-Pareto optimal points that would also be eliminated by a Pareto filter. In addition, the criteria have the advantage that they are able to remove also non-Pareto optimal points close to the border of the Pareto set that may be missed by the filter. This is because the filter is only based on a pairwise comparison of a finite number of candidate solutions. However, the Pareto criterion only looks locally while the Pareto filter takes a global view.

The novel insights have been illustrated on general mathematical and engineering examples involving both static and dynamic multi-objective optimisation problems. Three cases have been studied: a general bi- and three-objective problem and a dynamic optimisation of a tubular reactor. Case 1 and 2 illustrated numerically (i) the generation of candidate results with ENNC and NBI, (ii) the equivalence conditions and (iii) the removal criteria. In case 3 a real engineering application illustrated that the results for ENNC and NBI were the same when all additional ENNC inequalities are active. In contrast, NNC yielded slightly different results as expected. Afterwards a number of practical recommendations were summarised. In summary, the current analysis provides further insights in the (E)NNC and NBI methods. Hence, it paves the way for an increased use in various engineering disciplines as well as implementations in advanced software for real-time decision making.

List of acronyms

- ENNC:

-

Enhanced Normalised Normal Constraint

- MOOP:

-

Multi-Objective Optimisation Problem

- NBI:

-

Normal Boundary Intersection

- NNC:

-

Normalised Normal Constraint

- PF:

-

Pareto filter

- RC:

-

Removal criterion

- WS:

-

Weighted Sum

References

Das I, Dennis J (1997) A closer look at drawbacks of minimizing weighted sums of objectives for Paretoset generation in multicriteria optimization problems. Struct Optim 14:63–69

Das I, Dennis J (1998) Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J Optim 8:631–657

Houska B, Ferreau H, Diehl M (2011) ACADO Toolkit—an open-source framework for automatic control and dynamic optimization. Optim Control Appl Methods 32:298–312

Kim I, de Weck O (2005) Adaptive weighted-sum method for bi-objective optimization: Pareto front generation. Struct Multidisc Optim 29:149–158

Logist F, Smets I, Van Impe J (2008) Derivation of generic optimal reference temperature profiles for steady-state exothermic jacketed tubular reactors. J Process Control 18:92–104

Logist F, Houska B, Diehl M, Van Impe J (2010a) Fast pareto set generation for nonlinear optimal control problems with multiple objectives. Struct Multidisc Optim 42:591–603

Logist F, Sager S, Kirches C, Van Impe J (2010b) Efficient multiple objective optimal control of dynamic systems with integer controls. J Process Control 20:810–822

Marler R, Arora J (2004) Survey of multi-objective optimization methods for engineering. Struct Multidisc Optim 26:369–395

Messac A, Mattson C (2004) Normal constraint method with guarantee of even representation of complete Pareto frontier. AIAA J 42:2101–2111

Messac A, Ismail-Yahaya A, Mattson C (2003) The normalized normal constraint method for generating the Pareto frontier. Struct Multidisc Optim 25:86–98

Miettinen K (1999) Nonlinear multiobjective optimization. Kluwer Academic Publishers, Boston

Sanchis J, Martinez M, Blasco X, Salcedo J (2008) A new perspective on multiobjective optimization by enhanced normalized normal constraint method. Struct Multidisc Optim 36:537–546

Acknowledgments

Work supported in part by Projects OT/10/035, OPTEC (Center-of-Excellence Optimization in Engineering) PFV/10/002 and SCORES4CHEM KP/09/005 of the Katholieke Universiteit Leuven, and by the Belgian Program on Interuniversity Poles of Attraction, initiated by the Belgian Federal Science Policy Office. J.F. Van Impe holds the chair Safety Engineering sponsored by the Belgian chemistry and life sciences federation essenscia.

Author information

Authors and Affiliations

Corresponding author

Electronic Supplementary Material

Appendix

Appendix

The matlab code for both removal criteria is depicted in Figs. 15 and 16. The corresponding matlab m-files are available as Supplementary Material to this article.

Rights and permissions

About this article

Cite this article

Logist, F., Van Impe, J. Novel insights for multi-objective optimisation in engineering using Normal Boundary Intersection and (Enhanced) Normalised Normal Constraint. Struct Multidisc Optim 45, 417–431 (2012). https://doi.org/10.1007/s00158-011-0698-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-011-0698-8