Abstract

This paper proposes an effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF) for single image dehazing. The edge-weighting constraint incorporated in this method is multi-scale and less sensitive to the regularization parameter. The method has three main steps. First, the dark channel prior method is applied to the hazy input image to estimate the atmospheric map and the transmission map. Next, the initial transmission map is refined using the proposed ESAESWC-WGIF, which strongly suppresses halo artifacts and over-smoothing and preserves edge information in both flat and sharp regions more accurately than the guided image filter (GIF) and the weighted guided image filter (WGIF). Finally, the haze-free image (scene radiance) is recovered. About 3200 images from the Fattal, NYU2, D-HAZY, Haze-RD, and O-HAZE datasets are used to compare the proposed filter with existing image dehazing methods. Experimental results indicate that the proposed method performs consistently regardless of the nature of the input image and produces better visual quality. It is also faster than the compared methods at each tested image resolution.


1 Introduction

Hazy weather seriously degrades the visual quality of outdoor images. Haze, fog, water droplets, and suspended particles are typical bad-weather conditions, and outdoor images captured under them have poor color, contrast, and visibility [7, 33, 38]. These turbid media form because aerosols in the atmosphere scatter and absorb light [22, 36]. Image dehazing is therefore highly desirable in computational photography, image processing, and computer vision [10, 32]: it can significantly improve the color, visibility, and contrast of hazy images. Many algorithms have been proposed to improve the quality of hazy images in these applications. Because both the distance from the camera to each scene point and the airlight are unknown, removing haze from a single input image is difficult.

Several methods have been developed to remove haze. Tan et al. proposed a haze removal method based on local contrast optimization [38]; however, its results exhibit color distortion and halo artifacts. He et al. proposed the dark channel prior (DCP) [12] for single image dehazing. However, this method is not applicable to large sky regions, and halo artifacts and color distortions persist in both flat and sharp regions. To reduce halo artifacts, different edge-preserving image filters have been proposed [13, 23, 26, 39, 44]. Bilateral filters and their improved versions are widely used in image processing [39, 44], but pixels near edges are not filtered consistently, so edge information is not preserved appropriately. He et al. then developed the guided image filter (GIF) [13], based on a local linear model, to overcome this drawback of the bilateral filter [39]. It is a good edge-preserving filter in which the guidance image can be either the filter input itself or a different image. It has wide applications in image processing, such as detail enhancement, edge-preserving smoothing, image feathering, and denoising. However, halo artifacts and over-smoothing persist in sharp regions. The weighted guided image filter (WGIF) [26] was later proposed to reduce halo artifacts by introducing an edge-aware weighting factor into the GIF [13], but because of the local linear model it cannot preserve edge information in sharp regions. In this paper, we propose an effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF) for single image dehazing. The main contributions of this work are summarized as follows:

- We propose a new effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF) for single image dehazing.
- The proposed edge-smoothing weighting is multi-scale and built on a local linear filter, and it is less sensitive to the regularization parameter than the GIF, WGIF, GGIF, and EGIF methods.
- The filter is obtained by incorporating the ESAESWC into the cost function of the GIF.
- It is an excellent edge-preserving filter that strongly suppresses halo artifacts and over-smoothing in both flat and sharp regions.
- To analyze the effectiveness of the proposed method, we evaluate the PSNR, SSIM, FADE, and CIEDE2000 metrics on the Fattal, NYU2, D-HAZY, Haze-RD, and O-HAZE datasets. Experimental results indicate that the proposed method performs favorably against the existing haze removal methods.

The remainder of this paper is organized as follows. Section 2 briefly reviews the related work. Section 3 covers the preliminaries needed to understand the GIF and related edge-preserving filtering concepts. The proposed dehazing algorithm is detailed in Sect. 4. Experimental outcomes are discussed in Sect. 5, and Sect. 6 concludes the paper.

2 Related Work

This work is related to prior-based, edge-preserving filter-based, and deep-learning-based haze removal methods.

2.1 Prior-Based Haze Removal Methods

Various prior-based haze removal methods exist. Well-known examples are DCP [12], CAP [48], DSPP [14], CEP [4], BDPK [19], IDGCP [20], SIPSID [29], and IDBP [21]. He et al. proposed the dark channel prior (DCP) [12] for single image dehazing. The prior states that, in non-sky regions, at least one color channel has very low intensity at some pixels of a local patch. However, it fails when bright objects are present in the scene. Moreover, it is computationally inefficient because of soft matting, and it generates halo artifacts and color distortions at depth discontinuities. Zhu et al. proposed the color attenuation prior (CAP) [48], in which a linear model trained by supervised learning is used to estimate the depth of the hazy input image. He et al. proposed a difference structure preservation prior [14] based on an optimal transmission map; this method represents an image patch as a sparse linear combination of elements to obtain an accurate transmission map. Bui et al. proposed the color ellipsoid prior [4], which uses color ellipsoid geometry to calculate a transmission map that increases the contrast of the restored pixels while preventing over-saturation. Ju et al. proposed a Bayesian dehazing algorithm (BDPK) [19] built on an adaptive and more reliable atmospheric scattering model (RASM). This method converts image dehazing directly into an optimization problem using Bayesian theory and prior knowledge and restores the scene albedo with an alternating minimizing technique (AMT). Next, an effective gamma correction prior (GCP)-based atmospheric scattering model (ASM) is proposed in [20] for image dehazing. In this model, the input image is first transformed into a virtual image, which is then combined with the input image to calculate the scene depth.

Lu et al. proposed a saturation-based iterative dark channel prior (IDCP) [29] method for single image dehazing. In IDCP, the dark channel is reformulated in terms of saturation and brightness, and the transmission map is estimated without computing the dark channel. In [21], Lu et al. proposed a novel blended prior model for single image dehazing (IDBP). This method has two modules, atmospheric light estimation (ALE) and a multiple prior constraint (MPC), to remove haze from the input image. The prior-based methods remove haze efficiently. However, they fail to preserve edge information in sharp regions, and halo artifacts are observed in the dehazed image.

2.2 Edge-Preserving Filtering-Based Haze Removal Methods

Halo artifacts are a major problem in haze removal algorithms. Therefore, He et al. developed the guided image filter (GIF) [13] for single image dehazing. In this algorithm, a local linear model is used to represent the filtered output with the help of a guidance image. This method reduces halo artifacts and preserves edge information more accurately. However, GIF [13] fails to preserve edge information in sharp regions because of its local linear model, and it has a large computational complexity. Next, Li et al. proposed the weighted guided image filter (WGIF) [26] for image dehazing. It strongly suppresses halo artifacts and preserves edge information in sharp regions more accurately than the GIF [13]. However, because of the local linear model and the fixed regularization parameter, over-smoothing takes place in sharp regions. Next, Kou et al. proposed a gradient domain guided image filter (GGIF) [23] based on multi-scale edge-aware weighting to avoid over-smoothing in flat regions and to reduce halo artifacts more strongly than WGIF [26]. However, over-smoothing in sharp regions increases as the regularization parameter increases. In EGIF [28], the average of the local variances of all pixels is incorporated into the cost function of the GIF [13]. It removes halo artifacts effectively and preserves edge information more precisely than the GIF [13], WGIF [26], and GGIF [23] methods. However, it also over-smooths sharp regions, depending on the value of the regularization parameter. Next, Geethu et al. proposed a weighted guided image filter for image dehazing [11]. In [15], Hong et al. developed a local stereo matching algorithm based on weighted guided image filtering to improve the scene depth of hazy images. Chen et al. proposed a weighted aggregation model using the guided image filter for single image dehazing in [6]. In [16], Hong et al. proposed a real-time local stereo matching algorithm based on fast guided image filtering for image dehazing.

2.3 Deep-Learning-Based Haze Removal Methods

With the popularity of convolutional neural networks (CNNs), many deep-learning-based haze removal methods such as DehazeNet [5], AOD-Net [24], Proximal DehazeNet [43], PDR-Net [25], FFA-Net [34], and RefineDNet [47] have been proposed for image dehazing. In [5], Cai et al. proposed a trainable deep learning architecture called DehazeNet to estimate the transmission map and then remove haze through the atmospheric scattering model (ATSM). In [5], a nonlinear activation function called the Bilateral Rectified Linear Unit (BReLU) is also proposed to restore the haze-free image more accurately. Next, Li et al. proposed an end-to-end CNN-based deep learning model called AOD-Net [24] to remove haze. It is designed by reformulating the ATSM and directly generates the haze-free image through a lightweight CNN. Yang et al. [43] presented a CNN-based deep architecture for single image dehazing that learns the dark channel prior and the transmission map. This method uses a proximal learning-based iterative deep learning algorithm called Proximal DehazeNet. Next, Li et al. proposed a deep CNN for single image dehazing named PDR-Net [25]. Here, a perception-driven image dehazing sub-network is designed, and a refinement sub-network improves the contrast and visual quality of the dehazed image. Next, Qin et al. proposed an end-to-end feature fusion attention network (FFA-Net) [34], which combines channel attention and pixel attention to recover the haze-free image directly. This method performs particularly well for thick haze and rich texture details. Zhao et al. proposed a two-stage weakly supervised framework named RefineDNet [47] for single image dehazing. In [47], the prior-based DCP is first used to recover visibility, and a generative adversarial network (GAN) is then introduced to enhance the contrast and realness of the dehazed image.

However, these methods are often impractical: most of the models are trained on synthetic hazy datasets and often fail when tested on real hazy datasets. They also require enormous computation and memory resources, especially as the network depth increases.

3 Background

In GIF [13], the filtered output is linearly related to the guidance image, and it is expressed as

\[ q_{i} = a_{k} I_{i} + b_{k}, \quad \forall i \in \omega_{\zeta_1}(k), \tag{1} \]

where \( I_{i}\) is the guidance image and \( q_{i}\) is its linear transform at the ith pixel of the window \({\omega }_{\zeta _1}(k)\), which is centered at the kth pixel and has radius \(\zeta _{1}\), and (\({ a_{k},b_k}\)) are linear coefficients that are constant within \({\omega }_{\zeta _1}(k)\).

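The cost function to be minimized in window \(\omega _{\zeta _1}(k)\) can be expressed as

\[ E(a_{k}, b_{k}) = \sum_{i \in \omega_{\zeta_1}(k)} \left[ \left( a_{k} I_{i} + b_{k} - p_{i} \right)^{2} + \varepsilon a_{k}^{2} \right], \tag{2} \]

where \( p_{i}\) represents the filter input and \(\varepsilon \) represents a regularization parameter used to penalize large \(a_{k}\). The cost function in Eq. (2) is a linear ridge regression model [8, 49], and its optimal solution is given in terms of the linear coefficients \((a_k, b_k)\) as

\[ a_{k} = \frac{\frac{1}{\vert \omega \vert} \sum_{i \in \omega_{\zeta_1}(k)} I_{i} p_{i} - \mu_{k} \overline{p}_{k}}{\sigma_{k}^{2} + \varepsilon}, \tag{3} \]

\[ b_{k} = \overline{p}_{k} - a_{k} \mu_{k}, \tag{4} \]

where \({\vert {\omega }\vert }\) is the number of pixels in window \({\omega _{\zeta {_1}}(k)}\), \(\mu _{k}\) and \({\sigma _k^2}\) are the mean and variance of I in that window, and \({\overline{p}}_{k}\) is the mean of p in the same window. The regularization parameter \(\varepsilon \) and the variance \({\sigma _k^{2}}\) play a vital role in how edges are treated in smooth and sharp regions. Specifically, when I and p are identical, windows with \({\sigma _k^{2}}\) much smaller than \(\varepsilon \) are smoothed (\(a_{k}\) close to 0), whereas windows with \({\sigma _k^{2}}\) much larger than \(\varepsilon \) retain their edges (\(a_{k}\) close to 1).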
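The GIF steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' MATLAB implementation: the function names `box_mean` and `guided_filter`, the integral-image box filter, and the edge-replicating border handling are all assumptions made for this example. The final line averages the coefficients over all windows covering a pixel, as is standard for the GIF.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1)x(2r+1) window; borders use edge replication (an assumption)."""
    img = np.asarray(img, dtype=np.float64)
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(0).cumsum(1)
    k = 2 * r + 1
    h, w = img.shape
    total = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return total / (k * k)

def guided_filter(I, p, r, eps):
    """Guided image filter with guidance I, input p, window radius r, regularizer eps."""
    I = np.asarray(I, dtype=np.float64)
    p = np.asarray(p, dtype=np.float64)
    mu = box_mean(I, r)                      # mean of I in each window
    p_bar = box_mean(p, r)                   # mean of p in each window
    var = box_mean(I * I, r) - mu * mu       # variance of I in each window
    cov = box_mean(I * p, r) - mu * p_bar    # covariance of I and p
    a = cov / (var + eps)                    # ridge-regression slope
    b = p_bar - a * mu                       # ridge-regression intercept
    # Average the coefficients of all windows that cover each pixel.
    return box_mean(a, r) * I + box_mean(b, r)
```

With a small `eps` a step edge passes through almost unchanged, while a large `eps` drives `a` toward 0 and the output toward a local average, which is the trade-off that the edge-aware weightings discussed next try to adapt per pixel.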

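To preserve edge information more accurately than the GIF [13], a weighted guided image filter (WGIF) was proposed in [26]. In WGIF [26], a new edge-aware weighting \(\Gamma _{I}(k)\) based on the local variance is introduced, and it can be expressed as

\[ \Gamma_{I}(k) = \frac{1}{N} \sum_{i=1}^{N} \frac{\sigma_{I,1}^{2}(k) + \lambda}{\sigma_{I,1}^{2}(i) + \lambda}, \tag{5} \]

where N is the number of pixels in the guidance image I, and \(\lambda \) is a small constant whose value is selected as \((0.001\times M)^2\), with M being the dynamic intensity range of the image. \({\sigma _{I,1}^{2}(k)}\) and \({\sigma _{I,1}^{2}(i)}\) are the local variances of I in the windows \(\omega _k\) and \(\omega _i\), respectively. The cost function to be minimized for WGIF [26] can be expressed as

\[ E(a_{k}, b_{k}) = \sum_{i \in \omega_{\zeta_1}(k)} \left[ \left( a_{k} I_{i} + b_{k} - p_{i} \right)^{2} + \frac{\varepsilon}{\Gamma_{I}(k)} a_{k}^{2} \right]. \tag{6} \]

The optimal values of \( a_{k}\) and \( b_{k}\) are obtained from the following expressions:

\[ a_{k} = \frac{\mu_{I*p}(k) - \mu_{k} \overline{p}_{k}}{\sigma_{k}^{2} + \frac{\varepsilon}{\Gamma_{I}(k)}}, \tag{7} \]

\[ b_{k} = \overline{p}_{k} - a_{k} \mu_{k}, \tag{8} \]

where \(\mu _{I*p}\) represents the mean of \(({I*p})\) in window \(\omega _{\zeta _1}(k)\).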
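As a hedged illustration of the WGIF edge-aware weighting, the sketch below computes it from \(3 \times 3\) local variances in the form commonly given for WGIF [26]. The function names, the edge-replicating border handling, and the default `dynamic_range=1.0` (images scaled to [0, 1]) are assumptions made for this example only.

```python
import numpy as np

def local_variance_3x3(I):
    """Variance of I in the 3x3 window around every pixel (edge-replicated borders)."""
    I = np.asarray(I, dtype=np.float64)
    padded = np.pad(I, 1, mode="edge")
    h, w = I.shape
    # Stack the nine shifted copies that make up each 3x3 neighbourhood.
    patches = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])
    return patches.var(axis=0)

def edge_aware_weight(I, dynamic_range=1.0):
    """Edge-aware weighting: (var(k) + lam) averaged against 1 / (var(i) + lam) over all i."""
    lam = (0.001 * dynamic_range) ** 2
    var = local_variance_3x3(I)
    return (var + lam) * np.mean(1.0 / (var + lam))
```

The weight is larger than 1 at pixels on an edge and smaller than 1 in flat areas, so dividing the regularization parameter by it penalizes \(a_{k}\) less at edges and more in flat regions.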

4 Proposed Method

In this paper, an effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF) is proposed for single image dehazing. The basic framework of the proposed method is shown in Fig. 1. The proposed method has three main steps. In the first step, the dark channel prior (DCP) [12] method is used to compute the atmospheric map and the transmission map. In the second step, the raw transmission map is refined by the ESAESWC-WGIF algorithm to suppress halo artifacts and over-smoothing and to preserve edge information more accurately in both flat and sharp regions. Finally, the dehazed image is recovered as the scene radiance.

4.1 Dark Channel Prior (DCP)-Based Atmospheric Map and Transmission Map Estimation

Koschmieder’s law [18] is generally used to represent haze formation. McCartney [30] improved on Koschmieder’s law so that the atmospheric map and the transmission map can be estimated more accurately. In this model, haze formation is represented by the following expression:

\[ I(x) = J(x)\,t(x) + A\,\left( 1 - t(x) \right), \tag{9} \]

where x is the pixel position in the image, I(x) is the input hazy image, J(x) is the haze-free output image or scene radiance, t(x) is the medium transmission map, and A is the global atmospheric light or map. In Eq. (9), the first term J(x)t(x) and the second term \({A(1-t(x))}\) are called direct attenuation and airlight, respectively.

The relation of the medium transmission map t(x) to the object distance d(x) can be expressed as

\[ t(x) = e^{-\beta d(x)}, \tag{10} \]

where \({0\le d(x)\le \infty }\) is the depth (distance) of the scene point (pixel) from the camera and \(\beta \) is the scattering coefficient, which is related to the wavelength of light; the transmission decays exponentially with the scene depth d(x). The haze-free image can be obtained by substituting the estimated t(x) and A into Eq. (9).
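To make the haze formation model concrete, the following sketch synthesizes a hazy image from a clear image and a depth map using the standard atmospheric scattering model. The function name `synthesize_haze` and the default `beta=1.0` are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def synthesize_haze(J, depth, A, beta=1.0):
    """Apply I = J * t + A * (1 - t) with t = exp(-beta * depth).

    J: clear image, shape (H, W, 3), values in [0, 1].
    depth: scene depth, shape (H, W).
    A: global atmospheric light, shape (3,).
    """
    t = np.exp(-beta * np.asarray(depth, dtype=np.float64))
    t3 = t[..., None]                      # broadcast over the color channels
    I = J * t3 + np.asarray(A) * (1.0 - t3)
    return I, t
```

At zero depth the transmission is 1 and the hazy image equals the clear image; as depth grows the transmission tends to 0 and every pixel tends to the atmospheric light, which is why distant objects look washed out.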

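According to the dark channel prior (DCP) [12] method, J can be estimated after assuming some prior information. In DCP [12], the dark pixel (lowest-intensity pixel) concept is used to calculate the transmission map t(x) and the atmospheric map A. The atmospheric map A is estimated by selecting the top 0.1% brightest pixels of the dark channel and taking the highest-intensity pixel among them in the hazy image. For a given atmospheric map A, Eq. (9) can be modified as

\[ \frac{I^{c}(x)}{A^{c}} = t(x) \frac{J^{c}(x)}{A^{c}} + 1 - t(x), \tag{11} \]

where c denotes the color channels (r, g, b), and \(A^{c}\) and \(J^{c}\) represent the atmospheric map and the dehazed image for color channel c, respectively. Because the transmission map t(x) is assumed constant in a local patch \(\Omega (x)\), it is denoted by \({\tilde{t}}(x)\) [12]. The dark channel is computed by applying the minimum operator to both sides of Eq. (11):

\[ \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) = {\tilde{t}}(x) \min_{y \in \Omega(x)} \left( \min_{c} \frac{J^{c}(y)}{A^{c}} \right) + 1 - {\tilde{t}}(x). \tag{12} \]

According to DCP [12], for the scene radiance J to be a haze-free image, the dark channel of the scene radiance should be zero, which can be expressed as

\[ J^{dark}(x) = \min_{y \in \Omega(x)} \left( \min_{c} J^{c}(y) \right) = 0, \tag{13} \]

where \(J^{dark}\) is the dark channel of the scene radiance. Since \(A^{c}\) is always positive, the first term on the right-hand side of Eq. (12) vanishes, and Eq. (12) can be modified as

\[ \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) = 1 - {\tilde{t}}(x). \tag{14} \]

After simplification, \({{\tilde{t}}}(x)\) can be expressed as

\[ {\tilde{t}}(x) = 1 - \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right). \tag{15} \]

The DCP [12] method is not valid for large sky, sea, or white regions because the color of the sky or ocean during haze is mostly similar to the atmospheric map. As a result, the transmission map becomes close to 0 [12, 41, 42]. Finally, the transmission map can be expressed as

\[ {\tilde{t}}(x) = 1 - w \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right). \tag{16} \]

Here, a constant parameter w \((0 < w \le 1)\) is used to retain a very limited amount of haze for distant objects.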
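The DCP estimation step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the function names are hypothetical, the patch minimum uses edge-replicated borders, the atmospheric light follows the usual DCP recipe (brightest 0.1% of dark-channel pixels, then the brightest of those in the hazy image), and `w=0.95` is the value commonly used with DCP rather than one stated in this paper.

```python
import numpy as np

def min_filter(img, r):
    """Minimum over a (2r+1)x(2r+1) patch around every pixel (edge-replicated borders)."""
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    k = 2 * r + 1
    out = np.full((h, w), np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def dark_channel(I, r):
    """Per-pixel minimum over color channels, then minimum over the local patch."""
    return min_filter(I.min(axis=2), r)

def estimate_atmospheric_light(I, dark, top=0.001):
    """Pick the brightest hazy-image pixel among the top fraction of the dark channel."""
    n = max(1, int(round(top * dark.size)))
    idx = np.argsort(dark.ravel())[-n:]
    candidates = I.reshape(-1, 3)[idx]
    return candidates[candidates.sum(axis=1).argmax()]

def estimate_transmission(I, A, r, w=0.95):
    """Raw transmission: 1 - w * dark channel of the A-normalized hazy image."""
    return 1.0 - w * dark_channel(I / A, r)
```

A typical call sequence is `dark = dark_channel(I, r)`, `A = estimate_atmospheric_light(I, dark)`, `t = estimate_transmission(I, A, r)`; the resulting raw transmission map is blocky because of the patch minimum, which is what the subsequent edge-preserving refinement is meant to correct.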

4.2 Effective Scale-Aware Edge-Smoothing Weighting Constraint-Based Weighted Guided Image Filter (ESAESWC-WGIF)

In this paper, a new effective weighted guided image filter based on a multi-scale edge-aware weighting constraint is proposed for single image dehazing. The new multi-scale edge-weighting constraint is incorporated into the cost function of the GIF [13]. The proposed method strongly suppresses halo artifacts and over-smoothing and preserves edge information appropriately in both flat and sharp regions.

In GIF [13], the regularization parameter \(\varepsilon \) is identical for all local windows. As a result, the filter cannot preserve sharp edges appropriately, and halo artifacts appear near edges in the output images. To overcome this problem, a single-scale edge-aware weighting was initially proposed in the weighted guided image filter (WGIF) [26]. In this filter, a \({3 \times 3}\) window is considered for every pixel when computing the local variance, and the regularization parameter \(\varepsilon \) is replaced with \(\frac{\varepsilon }{\Gamma _{I}(k)}\). It removes halo artifacts and preserves edge information more accurately than GIF. However, over-smoothing persists in sharp regions because the edge-aware weighting is single-scale. In this paper, we overcome this issue by introducing a new effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF). Its edge-aware weighting constraint is multi-scale: it is defined using the local variances of both the \({3 \times 3}\) and the \({(2\zeta _1+1) \times (2\zeta _1+1)}\) windows of each pixel in the guidance image I. The following expression can be used to express the average of local variances for all pixels:

and \({\overline{\sigma }}^{2}\) is expressed as

where N is the number of pixels in the guidance image I, and \({\sigma ^{2}_{I,1}(k)}\) and \({\sigma ^{2}_{I, {\zeta _{1}}}(k)}\) are the local variances of I in the \({3 \times 3}\) and \({(2\zeta _1+1) \times (2\zeta _1+1)}\) windows of each pixel, respectively.

The cost function to be minimized for the proposed ESAESWC-WGIF can be expressed as

The modified \(a_{k}\) value is calculated by the following expression:

and \(b_{k}\) is calculated as mentioned in Eq. (8). For better analysis, I and p can be assumed identical and it can be expressed after simplification as

and

After substituting Eqs. (21) and (22) in Eq. (20), we obtain

and

or it is simply written as

After dividing Eq. (25) by \({\sigma ^{2}_{I, {\zeta _{1}}}(k)}\), we get

Substituting the value of \({\overline{\psi }}_I(k)\) from Eq. (17) into Eq. (26) gives

Finally, \(q_{{{i}}}\) is expressed as

After obtaining linear constants \( a_{k}\) and \( b_{k}\), the filtered output \(q_i\) is now the refined transmission map (refined filtered output). It can be expressed as

where \({\overline{a}}_{i}\) and \({\overline{b}}_{i}\) terms in the above expression represent mean of \( a_{k}\) and \( b_{k}\), respectively, in the corresponding window of all pixels, and it is computed as

To preserve edge information in sharp regions, \(a_{k}\) should be close to 1 and \(b_{k}\) close to 0, whereas in smooth regions \(a_{k}\) should be close to 0 and \(b_{k}\) close to the local mean of the input [26]. Finally, the dehazed output image is calculated by the following expression:

\[ J(x) = \frac{I(x) - A}{\max \left( t(x), t_{0} \right)} + A, \tag{31} \]

where t(x) is the refined transmission map and the value of \({t_0}\) is set to 0.1 [13] to avoid noise amplification.
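The recovery step can be sketched as below, assuming the usual inversion of the haze formation model with the transmission clamped from below by \(t_0\). The function name `recover_radiance` is hypothetical, and images are assumed to be floating-point arrays in [0, 1].

```python
import numpy as np

def recover_radiance(I, t, A, t0=0.1):
    """Invert the haze model: J = (I - A) / max(t, t0) + A.

    I: hazy image, shape (H, W, 3); t: refined transmission, shape (H, W);
    A: atmospheric light, shape (3,); t0: lower bound on the transmission.
    """
    t_clamped = np.maximum(t, t0)[..., None]   # avoid dividing by near-zero t
    return (I - np.asarray(A)) / t_clamped + np.asarray(A)
```

Without the clamp, pixels with transmission near zero would divide a small, noisy difference by a tiny number, so the lower bound trades a thin residual haze in the most distant regions for stability.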

5 Experimental Results and Analysis

The proposed algorithm is implemented and evaluated in MATLAB R2018a on a PC with an Intel(R) Core(TM) i7-6700 CPU @ 3.40 GHz, 8 GB of RAM, and a 64-bit operating system. The performance of the proposed method is tested on natural hazy, non-hazy, and synthetic images from different datasets, viz. Fattal’s (580 images) [10], NYU2 (650 images) [37], D-HAZY (400 images) [1], Haze-RD (760 images) [46], and O-HAZE (810 images) [2], and the outcomes are compared with the existing DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], and RYF-Net [9] haze removal methods.

5.1 Qualitative Analysis

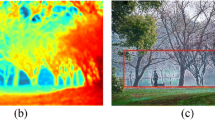

The proposed method is tested on about 3200 hazy, non-hazy, and synthetic images from the Fattal [10], NYU2 [37], D-HAZY [1], Haze-RD [46], and O-HAZE [2] datasets, and the outcomes are compared with seven state-of-the-art haze removal methods: DCP [12], a prior-based dehazing method; GIF [13], WGIF [26], GGIF [23], and EGIF [28], four edge-preserving image dehazing filters; and DehazeNet [5] and RYF-Net [9], two deep-learning-based image dehazing methods. The transmission maps refined by GIF [13], WGIF [26], GGIF [23], EGIF [28], and the proposed method for the hazy building image [10] are shown in Fig. 2. Figure 2 shows that the proposed method refines the raw transmission map more accurately than the existing methods: it strongly suppresses halo artifacts, over-smoothing, and color distortion and preserves edge information more precisely than the GIF [13], WGIF [26], GGIF [23], and EGIF [28] methods. For visual comparison, the dehazed outputs of DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], RYF-Net [9], and the proposed method for five benchmark hazy images [10] are presented in Figs. 3, 4, 5, 6, and 7, respectively. These figures show that the proposed method strongly suppresses halo artifacts and over-smoothing and preserves edge information more precisely in both flat and sharp regions than the existing methods.

5.2 Quantitative Analysis

The proposed filter is compared objectively with the existing GIF [13], WGIF [26], GGIF [23], and EGIF [28] methods using an effective blind objective image quality metric [31]. The scores of these filters are calculated for different values of the regularization parameter \(\varepsilon \) and are listed in Table 1. As Table 1 shows, the scores of GIF [13] and WGIF [26] initially increase and then decrease for large \(\varepsilon \) values. GGIF [23] and EGIF [28] both have higher scores than GIF [13] and WGIF [26], but their scores also drop for large \(\varepsilon \) values \((\varepsilon = 0.4^{2}, 0.8^{2})\). In contrast, the scores of the proposed method increase with \(\varepsilon \) and decrease only slightly even for large \(\varepsilon \) values. The peak signal-to-noise ratio (PSNR) [17], structural similarity index (SSIM) [40], fog aware density evaluator (FADE) [7], and CIEDE2000 [35] metrics are used to assess the proposed method. These metrics are calculated for DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], RYF-Net [9], and the proposed method on hazy images from the Fattal [10], NYU2 [37], D-HAZY [1], Haze-RD [46], and O-HAZE [2] datasets, and the outcomes are given in Tables 2, 3, 4, 5, and 6, respectively. Bold blue entries in Tables 2, 3, 4, 5, and 6 indicate the best value.

5.2.1 Peak Signal-to-Noise Ratio (PSNR)

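A higher peak signal-to-noise ratio (PSNR) [17] indicates a better image restoration result. It is expressed as

\[ \textrm{PSNR} = 10 \log_{10} \left( \frac{f_{\max }^{2}}{\textrm{MSE}} \right), \tag{32} \]

where \(f_{\max }\) is the maximum gray level (255 for an 8-bit image). The mean squared error (MSE) is measured between the original and the restored image. It is written as

\[ \textrm{MSE} = \frac{1}{m n} \sum_{i=1}^{m} \sum_{j=1}^{n} \left[ f_\textrm{o}(i,j) - f_\textrm{r}(i,j) \right]^{2}, \tag{33} \]

where \(m\times n\) represents the size of the image and \(f_\textrm{o}\) and \(f_\textrm{r}\) are the original and restored images, respectively.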
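As a small worked example of these two definitions, the sketch below computes the MSE and PSNR with NumPy. The function names `mse` and `psnr` are illustrative, and the inputs are cast to floating point first so that 8-bit subtraction cannot wrap around.

```python
import numpy as np

def mse(f_o, f_r):
    """Mean squared error between the original and restored images."""
    diff = np.asarray(f_o, dtype=np.float64) - np.asarray(f_r, dtype=np.float64)
    return float(np.mean(diff ** 2))

def psnr(f_o, f_r, f_max=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(f_o, f_r)
    if err == 0.0:
        return float("inf")
    return 10.0 * np.log10(f_max ** 2 / err)
```

For two 8-bit images that differ by exactly one gray level at every pixel, the MSE is 1 and the PSNR is \(10 \log_{10}(255^{2})\), about 48.13 dB.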

5.2.2 Structural Similarity Index (SSIM)

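The structural similarity index (SSIM) [40] metric is used to quantify the structural similarity between the source image and the recovered image. It has a range \([-1,~1]\) and is expressed as

\[ \textrm{SSIM}(f_\textrm{o}, f_\textrm{r}) = L_c(f_\textrm{o}, f_\textrm{r}) \cdot C_c(f_\textrm{o}, f_\textrm{r}) \cdot S_c(f_\textrm{o}, f_\textrm{r}), \tag{34} \]

where \(L_c, C_c\), and \(S_c\) represent the luminance, contrast, and structure comparisons, respectively.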

5.2.3 Fog Aware Density Evaluator (FADE)

The fog aware density evaluator (FADE) [7] metric evaluates the perceptual fog density in the restored image. A lower FADE value indicates a lower haze concentration.

5.2.4 CIEDE2000

The CIEDE2000 metric [35] measures the color difference between the source image and the dehazed image and ranges over [0, 100]. A lower CIEDE2000 value indicates better color fidelity.

5.3 Discussion

The performance metrics PSNR [17], SSIM [40], FADE [7], and CIEDE2000 [35] of the existing DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], and RYF-Net [9] methods and of the proposed method are calculated for natural hazy, non-hazy, and synthetic images from the Fattal [10], NYU2 [37], D-HAZY [1], Haze-RD [46], and O-HAZE [2] datasets. Higher PSNR [17] and SSIM [40] values and lower FADE [7] and CIEDE2000 [35] values indicate better performance. The results are given in Tables 2, 3, 4, 5, and 6, where bold blue values in each row indicate the best measured value. The PSNR [17] and SSIM [40] values of the EGIF [28], DehazeNet [5], and RYF-Net [9] methods are comparable, and these values are usually lower for the DCP [12], GIF [13], WGIF [26], and GGIF [23] methods. Among the existing methods, RYF-Net [9] is the most competitive and retains structures more accurately than the others. The FADE [7] and CIEDE2000 [35] values are higher for the DCP [12], GIF [13], WGIF [26], GGIF [23], and EGIF [28] methods, whereas the deep-learning-based DehazeNet [5] and RYF-Net [9] methods usually produce more competitive FADE [7] and CIEDE2000 [35] scores. Tables 2, 3, 4, 5, and 6 show that, for all of these datasets, the PSNR and SSIM values of the proposed method are higher than those of the existing methods. The FADE values of the proposed method are the lowest for all datasets, and its CIEDE2000 values are also the lowest for the Fattal [10], NYU2 [37], Haze-RD [46], and O-HAZE [2] datasets, but not for the D-HAZY [1] dataset. This indicates that the proposed method performs better than the existing dehazing methods.

Next, the execution times of DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], RYF-Net [9], and the proposed method for the input hazy images in Figs. 3, 4, 5, 6, and 7, which have resolutions of \(250\times 200\), \(550\times 400\), \(850\times 600\), \(1000\times 950\), and \(1400\times 1200\), are listed in Table 7. Table 7 shows that the proposed method runs faster than the compared methods. The statistical analysis of the quality metrics PSNR [17], SSIM [40], FADE [7], and CIEDE2000 [35] for DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], RYF-Net [9], and the proposed method is represented by the box plots [27] shown in Fig. 8a–d, respectively. The horizontal line within each box represents the median value. The box plots show that the proposed method has a higher median value for PSNR [17] and SSIM [40] and a lower median value for FADE [7] and CIEDE2000 [35] than the other existing methods. This indicates that the proposed method provides better dehazing outcomes than the existing DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], and RYF-Net [9] methods.

Because of limited visibility and poor contrast, the proposed method fails under dense haze and nighttime haze conditions. The failure cases of the existing DCP, GIF, WGIF, GGIF, EGIF, DehazeNet, and RYF-Net methods and of the proposed method on the dense haze dataset [3] and the nighttime haze dataset [45] are shown in Figs. 9 and 10, respectively. Finally, Figs. 2, 3, 4, 5, 6, 7, and 8 and Tables 1, 2, 3, 4, 5, 6, and 7 show that the proposed method strongly suppresses halo artifacts and over-smoothing and preserves edge information more accurately than the existing DCP [12], GIF [13], WGIF [26], GGIF [23], EGIF [28], DehazeNet [5], and RYF-Net [9] methods in both flat and sharp regions. Moreover, the proposed method is fast and preserves edge information in sharp regions more accurately than the existing haze removal methods.

6 Conclusion

In this paper, an effective scale-aware edge-smoothing weighting constraint-based weighted guided image filter (ESAESWC-WGIF) is proposed to remove haze efficiently. In this filter, a new edge-aware weighting is incorporated into the cost function of the GIF. It refines the initial transmission map more accurately than the existing GIF, WGIF, GGIF, and EGIF methods, strongly suppresses halo artifacts and over-smoothing, and preserves edge information in both flat and sharp regions. About 3200 images from the Fattal, NYU2, D-HAZY, Haze-RD, and O-HAZE datasets are used to test the performance of the existing and the proposed dehazing methods. We analyzed the PSNR, SSIM, FADE, and CIEDE2000 metrics on these images, and the experimental results indicate that the proposed method restores images with better visual quality than the existing methods. Moreover, the proposed method performs consistently regardless of the nature of the input image and across all tested datasets. It is also faster than the existing methods for a given image resolution. However, the method fails under dense haze and nighttime haze conditions; the failure results are shown in Figs. 9 and 10, respectively. There is therefore scope to devise a new method that handles these conditions. In future work, we will explore the suitability of the proposed method for wider applications such as satellite, underwater, and low-light image dehazing.

Data Availability

The datasets generated or analyzed during the present study are available from the corresponding author upon reasonable request.

References

1. C. Ancuti, C.O. Ancuti, C. De Vleeschouwer, D-HAZY: a dataset to evaluate quantitatively dehazing algorithms. In 2016 IEEE International Conference on Image Processing (ICIP), pp. 2226–2230. IEEE (2016). https://doi.org/10.1109/ICIP.2016.7532754

2. C.O. Ancuti, C. Ancuti, R. Timofte, C. De Vleeschouwer, O-HAZE: a dehazing benchmark with real hazy and haze-free outdoor images. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 867–8678 (2018). https://doi.org/10.1109/CVPRW.2018.00119

3. C.O. Ancuti, C. Ancuti, M. Sbert, R. Timofte, Dense-Haze: a benchmark for image dehazing with dense-haze and haze-free images. In 2019 IEEE International Conference on Image Processing (ICIP), pp. 1014–1018 (2019). https://doi.org/10.1109/ICIP.2019.8803046

4. T.M. Bui, W. Kim, Single image dehazing using color ellipsoid prior. IEEE Trans. Image Process. 27(2), 999–1009 (2017). https://doi.org/10.1109/TIP.2017.2771158

5. B. Cai, X. Xu, K. Jia, C. Qing, D. Tao, DehazeNet: an end-to-end system for single image haze removal. IEEE Trans. Image Process. 25(11), 5187–5198 (2016). https://doi.org/10.1109/TIP.2016.2598681

6. B. Chen, S. Wu, Weighted aggregation for guided image filtering. Signal Image Video Process. 14(3), 491–498 (2020). https://doi.org/10.1007/s11760-019-01579-1

7. L.K. Choi, J. You, A.C. Bovik, Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans. Image Process. 24(11), 3888–3901 (2015). https://doi.org/10.1109/TIP.2015.2456502

8. N.R. Draper, H. Smith, Applied Regression Analysis, vol. 326 (Wiley, New York, 1998)

9. A. Dudhane, S. Murala, RYF-Net: deep fusion network for single image haze removal. IEEE Trans. Image Process. 29, 628–640 (2020). https://doi.org/10.1109/TIP.2019.2934360

10. R. Fattal, Single image dehazing. ACM Trans. Graph. (TOG) 27(3), 1–9 (2008). https://doi.org/10.1145/1360612.1360671

11. H. Geethu, S. Shamna, J.J. Kizhakkethottam, Weighted guided image filtering and haze removal in single image. Procedia Technol. 24, 1475–1482 (2016). https://doi.org/10.1016/j.protcy.2016.05.248

12. K. He, J. Sun, X. Tang, Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2010). https://doi.org/10.1109/TPAMI.2010.168

13. K. He, J. Sun, X. Tang, Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35(6), 1397–1409 (2012). https://doi.org/10.1109/TPAMI.2012.213

14. L. He, J. Zhao, N. Zheng, D. Bi, Haze removal using the difference–structure–preservation prior. IEEE Trans. Image Process. 26(3), 1063–1075 (2016). https://doi.org/10.1109/TIP.2016.2644267

15. G.-S. Hong, B.-G. Kim, A local stereo matching algorithm based on weighted guided image filtering for improving the generation of depth range images. Displays 49, 80–87 (2017). https://doi.org/10.1016/j.displa.2017.07.006

16. G.-S. Hong, J.-K. Park, B.-G. Kim, Near real-time local stereo matching algorithm based on fast guided image filtering. In 2016 6th European Workshop on Visual Information Processing (EUVIP), pp. 1–5. IEEE (2016). https://doi.org/10.1109/EUVIP.2016.7764595

17. Q. Huynh-Thu, M. Ghanbari, Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 44(13), 800–801 (2008). https://doi.org/10.1049/el:20080522

18. H. Israël, F. Kasten, Koschmieders Theorie der horizontalen Sichtweite. In Die Sichtweite im Nebel und die Möglichkeiten ihrer künstlichen Beeinflussung, pp. 7–10. Springer (1959). https://doi.org/10.1007/978-3-663-04661-5_2

19. M. Ju, C. Ding, D. Zhang, Y.J. Guo, BDPK: Bayesian dehazing using prior knowledge. IEEE Trans. Circuits Syst. Video Technol. 29(8), 2349–2362 (2018). https://doi.org/10.1109/TCSVT.2018.2869594

20. M. Ju, C. Ding, Y.J. Guo, D. Zhang, IDGCP: image dehazing based on Gamma correction prior. IEEE Trans. Image Process. 29, 3104–3118 (2019). https://doi.org/10.1109/TIP.2019.2957852

21. M. Ju, C. Ding, W. Ren, Y. Yang, IDBP: image dehazing using blended priors including non-local, local, and global priors. IEEE Trans. Circuits Syst. Video Technol. (2021). https://doi.org/10.1109/TCSVT.2021.3101503

22. J. Kopf, B. Neubert, B. Chen, M. Cohen, D. Cohen-Or, O. Deussen, M. Uyttendaele, D. Lischinski, Deep photo: model-based photograph enhancement and viewing. ACM Trans. Graph. (TOG) 27(5), 1–10 (2008). https://doi.org/10.1145/1409060.1409069

23. F. Kou, W. Chen, C. Wen, Z. Li, Gradient domain guided image filtering. IEEE Trans. Image Process. 24(11), 4528–4539 (2015). https://doi.org/10.1109/TIP.2015.2468183

24. B. Li, X. Peng, Z. Wang, J. Xu, D. Feng, AOD-Net: All-in-one dehazing network. In 2017 IEEE International Conference on Computer Vision (ICCV), pp. 4780–4788 (2017). https://doi.org/10.1109/ICCV.2017.511

25. C. Li, C. Guo, J. Guo, P. Han, H. Fu, R. Cong, PDR-Net: perception-inspired single image dehazing network with refinement. IEEE Trans. Multimed. 22(3), 704–716 (2019). https://doi.org/10.1109/TMM.2019.2933334

26. Z. Li, J. Zheng, Z. Zhu, W. Yao, S. Wu, Weighted guided image filtering. IEEE Trans. Image Process. 24(1), 120–129 (2014). https://doi.org/10.1109/TIP.2014.2371234

27. S. Lin, C. Wong, G. Jiang, M. Rahman, T. Ren, N. Kwok, H. Shi, Y.-H. Yu, T. Wu, Intensity and edge based adaptive unsharp masking filter for color image enhancement. Optik 127(1), 407–414 (2016). https://doi.org/10.1016/j.ijleo.2015.08.046

28. Z. Lu, B. Long, K. Li, F. Lu, Effective guided image filtering for contrast enhancement. IEEE Signal Process. Lett. 25(10), 1585–1589 (2018). https://doi.org/10.1109/LSP.2018.2867896

29. Z. Lu, B. Long, S. Yang, Saturation based iterative approach for single image dehazing. IEEE Signal Process. Lett. 27, 665–669 (2020). https://doi.org/10.1109/LSP.2020.2985570

30. E.J. McCartney, Optics of the Atmosphere: Scattering by Molecules and Particles (Wiley, New York, 1976)

31. A.K. Moorthy, A.C. Bovik, A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 17(5), 513–516 (2010). https://doi.org/10.1109/LSP.2010.2043888

32. S.G. Narasimhan, S.K. Nayar, Chromatic framework for vision in bad weather. In Proceedings IEEE Conference on Computer Vision and Pattern Recognition. CVPR 2000 (Cat. No. PR00662), volume 1, pp. 598–605. IEEE (2000). https://doi.org/10.1109/CVPR.2000.855874

33. S.G. Narasimhan, S.K. Nayar, Vision and the atmosphere. Int. J. Comput. Vis. 48(3), 233–254 (2002). https://doi.org/10.1023/A:1016328200723

34. X. Qin, Z. Wang, Y. Bai, X. Xie, H. Jia, FFA-Net: feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 11908–11915 (2020). https://doi.org/10.1609/aaai.v34i07.6865

35. G. Sharma, W. Wu, E.N. Dalal, The CIEDE2000 color-difference formula: implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 30(1), 21–30 (2005). https://doi.org/10.1002/col.20070

36. S. Shwartz, E. Namer, Y.Y. Schechner, Blind haze separation. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), volume 2, pp. 1984–1991. IEEE (2006). https://doi.org/10.1109/CVPR.2006.71

37. N. Silberman, D. Hoiem, P. Kohli, R. Fergus, Indoor segmentation and support inference from RGBD images. In European Conference on Computer Vision, pp. 746–760. Springer (2012). https://doi.org/10.1007/978-3-642-33715-4_54

38. R.T. Tan, Visibility in bad weather from a single image. In 2008 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8. IEEE (2008). https://doi.org/10.1109/CVPR.2008.4587643

39. C. Tomasi, R. Manduchi, Bilateral filtering for gray and color images. In Sixth International Conference on Computer Vision, pp. 839–846. IEEE (1998). https://doi.org/10.1109/ICCV.1998.710815

40. Z. Wang, A.C. Bovik, H.R. Sheikh, E.P. Simoncelli, Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004). https://doi.org/10.1109/TIP.2003.819861

41. S.K. Yadav, K. Sarawadekar, Single image dehazing using adaptive gamma correction method. In TENCON 2019: 2019 IEEE Region 10 Conference (TENCON), pp. 1752–1757. IEEE (2019). https://doi.org/10.1109/TENCON.2019.8929383

42. S.K. Yadav, K. Sarawadekar, Steering kernel-based guided image filter for single image dehazing. In 2020 IEEE Region 10 Conference (TENCON), pp. 444–449. IEEE (2020). https://doi.org/10.1109/TENCON50793.2020.9293825

43. D. Yang, J. Sun, Proximal Dehaze-Net: a prior learning-based deep network for single image dehazing. In Proceedings of the European Conference on Computer Vision (ECCV), pp. 729–746 (2018). https://doi.org/10.1007/978-3-030-01234-2_43

44. B. Zhang, J.P. Allebach, Adaptive bilateral filter for sharpness enhancement and noise removal. IEEE Trans. Image Process. 17(5), 664–678 (2008). https://doi.org/10.1109/TIP.2008.919949

45. J. Zhang, Y. Cao, S. Fang, Y. Kang, C.W. Chen, Fast haze removal for nighttime image using maximum reflectance prior. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 7016–7024 (2017). https://doi.org/10.1109/CVPR.2017.742

46. Y. Zhang, L. Ding, G. Sharma, HazeRD: an outdoor scene dataset and benchmark for single image dehazing. In 2017 IEEE International Conference on Image Processing (ICIP), pp. 3205–3209. IEEE (2017). https://doi.org/10.1109/ICIP.2017.8296874

47. S. Zhao, L. Zhang, Y. Shen, Y. Zhou, RefineDNet: a weakly supervised refinement framework for single image dehazing. IEEE Trans. Image Process. 30, 3391–3404 (2021). https://doi.org/10.1109/TIP.2021.3060873

48. Q. Zhu, J. Mai, L. Shao, A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 24(11), 3522–3533 (2015). https://doi.org/10.1109/TIP.2015.2446191

49. E.R. Ziegel, The elements of statistical learning. Technometrics 45(3), 267–268 (2003). https://doi.org/10.1198/tech.2003.s770

About this article

Cite this article

Yadav, S.K., Sarawadekar, K. An Effective Scale-Aware Edge-Smoothing Weighting Constraint-Based Weighted Guided Image Filter for Single Image Dehazing. Circuits Syst Signal Process 42, 6136–6159 (2023). https://doi.org/10.1007/s00034-023-02389-0

DOI: https://doi.org/10.1007/s00034-023-02389-0