Abstract

This paper presents a novel hierarchical least squares algorithm for a class of non-uniformly sampled systems. Based on the hierarchical identification principle, the identification model with a high-dimensional parameter vector is decomposed into a group of submodels with lower-dimensional parameter vectors. The submodels are identified by the least squares method and the associated items between the submodels are handled in a coordinated way, so that all the system parameters can be estimated. The proposed algorithm reduces the computational cost compared with the recursive least squares algorithm. The performance analysis indicates that the parameter estimates converge to their true values, and the simulation tests confirm the convergence results.

1 Introduction

Conventional discrete-time control systems are mainly developed for single-rate systems in which all input and output variables are sampled at a single (uniform) rate [2]. Identification techniques for single-rate systems have been well investigated [1, 16, 25, 26]. However, the single-rate sampling scheme sometimes may not be applicable in practical industrial processes, because the involved physical variables might be sampled at different sampling rates; see some examples in [13, 20]. Such systems with more than one sampling rate are called multirate systems. Recently, multirate systems have been a hot topic in control and identification fields, and many related achievements have been reported [3, 4, 15, 18, 23, 33, 39].

Most sampling schemes for multirate systems in the literature are uniform, that is, the sampling intervals of all variables are fixed. However, a more general class of multirate systems has variables that are measured at non-uniform intervals, e.g., when manual sampling or laboratory analysis is required. Systems whose sampling intervals for the input and/or output channels are non-equidistant in time are termed non-uniformly sampled multirate systems [8, 21, 24, 28]. Systems with irregularly missing samples can also be characterized under the framework of the non-uniformly sampled system model [10, 14, 17, 31, 32, 38, 40, 41, 43, 45]. In this paper, we consider a class of periodically non-uniformly sampled systems, which are special cases of general non-uniformly sampled systems. It has been shown that periodically non-uniform sampling has some promising properties, such as preserving controllability and observability [8, 28] and allowing the continuous-time system to be uniquely recovered [8]. The related identification and control problems have been investigated for periodically non-uniformly sampled systems [8, 9, 21, 22, 24, 27, 28]. Specifically, several identification methods have been proposed for non-uniformly sampled systems, for example, the subspace methods [21, 27], the auxiliary model-based least squares method [24], the multi-innovation gradient method [37] and the partially coupled stochastic gradient method [9]. In this work, the main objective is to propose a computationally efficient identification algorithm for periodically non-uniformly sampled systems based on the hierarchical identification principle.

It is known that multirate and non-uniformly sampled systems involve more parameters than single-rate systems, which unavoidably results in heavy computational costs for the identification algorithms. This motivates us to develop computationally efficient algorithms for online identification, which is of both theoretical and practical interest. The decomposition technique is useful in many areas such as image processing [34–36]. Based on the decomposition technique, the hierarchical identification principle is very effective in reducing the complexity of the identification algorithm for large-scale systems [5]. The key is to decompose a system into subsystems and to address the associated items [6, 11]. For system identification models with different structures, the decomposition is carried out in different ways. The multivariable system in [5] was decomposed into two subsystems, one with a parameter vector and the other with a parameter matrix. The dual-rate state-space model in [6] was also decomposed into two subsystems according to the lifted state-space structure. In this paper, we propose to decompose the system identification model into N (2⩽N⩽dim(θ)) submodels, where dim(θ) represents the dimension of the parameter vector θ. Based on such a decomposition, the proposed algorithm can greatly reduce the computational complexity.

The rest of the paper is organized as follows. Section 2 derives the identification model of the non-uniformly sampled systems and describes the problem formulation. Section 3 gives the recursive least squares algorithm for the non-uniformly sampled systems for comparison. Section 4 presents a hierarchical least squares algorithm and compares its computational load with that of the recursive least squares algorithm. Section 5 analyzes the performance of the proposed algorithm. Section 6 provides an illustrative example. Finally, we offer some concluding remarks in Section 7.

2 Model description and problem formulation

Consider systems with a periodically non-uniform updating and uniform sampling scheme; see Fig. 3 in [24]. For such a non-uniformly sampled system, the control input is non-uniformly updated r times per frame, with intervals \(\tau_{i}\) (i=1,2,…,r), at the time instants \(t=kT+t_{i}\), i=0,1,2,…,r−1. Here, \(t_{i}:=t_{i-1}+\tau_{i}=\tau_{1}+\tau_{2}+\cdots+\tau_{i}\), \(t_{0}=0\), and \(T:=\tau_{1}+\tau_{2}+\cdots+\tau_{r}=t_{r}\) is known as the frame period; the output is sampled uniformly with the frame period T. In the following, we establish the discrete-time state-space model and the input–output representation of the non-uniformly sampled system from its continuous-time state-space model.
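The updating scheme described above can be illustrated with a short Python sketch. It only computes the offsets \(t_{i}\), the frame period T and the update instants \(kT+t_{i}\) from given intervals \(\tau_{i}\); the function name `update_schedule` and the sample intervals (0.3 s and 0.5 s, borrowed from the example in Section 6) are illustrative choices, not part of the original derivation.

```python
from itertools import accumulate

def update_schedule(taus, num_frames):
    """Return (T, instants): the frame period and the input-update instants kT + t_i."""
    offsets = [0.0] + list(accumulate(taus))   # t_0 = 0, t_1, ..., t_r
    T = offsets[-1]                            # frame period T = t_r
    instants = [k * T + t_i
                for k in range(num_frames)
                for t_i in offsets[:-1]]       # i = 0, 1, ..., r-1 in each frame
    return T, instants

# r = 2 updates per frame with intervals 0.3 s and 0.5 s
T, instants = update_schedule([0.3, 0.5], num_frames=3)
print(T, instants)
```

The output is sampled only at the frame boundaries kT, so each output sample corresponds to r input values within one frame.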

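Consider a continuous-time process with the controllable and observable state-space representation

\[
\begin{aligned}
\dot{\boldsymbol{x}}(t)&=\boldsymbol{A}_{c}\boldsymbol{x}(t)+\boldsymbol{B}_{c}u(t),\\
y(t)&=\boldsymbol{C}\boldsymbol{x}(t)+Du(t),
\end{aligned}
\tag{1}
\]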

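where \(\boldsymbol{x}(t)\in\mathbb{R}^{n}\) is the state vector, \(u(t)\in\mathbb{R}\) the control input, \(y(t)\in\mathbb{R}\) the output, \(\boldsymbol{A}_{c}\), \(\boldsymbol{B}_{c}\) and \(\boldsymbol{C}\) are constant matrices of appropriate dimensions and D is a constant. Because of the non-uniform zero-order hold at the input port, the input u(t) is a square wave signal with the following expression:

\[
u(t)=u(kT+t_{i}),\quad kT+t_{i}\leqslant t<kT+t_{i+1},\quad i=0,1,\ldots,r-1.
\tag{2}
\]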

The output y(t) is sampled by a sampler with the frame period T, yielding a discrete-time signal y(kT).

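The solution of the state equation in (1) is given by

\[
\boldsymbol{x}(t)=\mathrm{e}^{\boldsymbol{A}_{c}(t-t_{0})}\boldsymbol{x}(t_{0})+\int_{t_{0}}^{t}\mathrm{e}^{\boldsymbol{A}_{c}(t-s)}\boldsymbol{B}_{c}u(s)\,\mathrm{d}s.
\tag{3}
\]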

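For a non-pathological frame period T, by letting \(t_{0}=kT\) and \(t=kT+T\) in (3) and using (2) to discretize the state-space model in (1), we have [8, 24]

\[
\begin{aligned}
\boldsymbol{x}(kT+T)&=\boldsymbol{A}\boldsymbol{x}(kT)+\sum_{i=1}^{r}\boldsymbol{B}_{i}u(kT+t_{i-1}),\\
y(kT)&=\boldsymbol{C}\boldsymbol{x}(kT)+Du(kT),
\end{aligned}
\tag{4}
\]

where

\[
\boldsymbol{A}:=\mathrm{e}^{\boldsymbol{A}_{c}T},\qquad
\boldsymbol{B}_{i}:=\mathrm{e}^{\boldsymbol{A}_{c}(T-t_{i})}\int_{0}^{\tau_{i}}\mathrm{e}^{\boldsymbol{A}_{c}t}\,\mathrm{d}t\,\boldsymbol{B}_{c},\quad i=1,2,\ldots,r.
\]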

Transforming the state-space model in (4) into an input–output representation and taking into account the disturbance v(kT), we have

where {v(kT)} is a white noise sequence with zero mean, and a(z) and \(b_{i}(z)\) are polynomials in the unit backward shift operator \(z^{-1}\), related to \(\boldsymbol{A}\), \(\boldsymbol{B}_{i}\), \(\boldsymbol{C}\) and D, of the form [24]

Remark 1

Equation (5) is the input–output representation of the non-uniformly sampled systems. In the following, we develop the identification algorithm for the model in (5). The available input–output data are \(\{u(kT+t_{i}), y(kT): i=0,1,2,\ldots,r-1;\ k=1,2,\ldots\}\), and \(a_{i}\) and \(b_{ij}\) are the parameters to be identified. In [24], an auxiliary model-based least squares method has been developed for non-uniformly sampled systems with an output error model. In this paper, we aim to develop a new method that reduces the computational burden of the least squares algorithm.

Remark 2

The expression in (5) can be viewed as a multiple-input single-output system model with r fictitious inputs \(u(kT+t_{i})\), i=0,1,2,…,r−1. When r=1, it becomes a discrete-time model for single-rate systems. It can be seen from (5) that the number of parameters of the non-uniformly sampled model is (r+1)n+1, which for r>1 is larger than the 2n+1 parameters of the single-rate model. Thus the computational burden of the corresponding identification algorithm for the non-uniformly sampled model is heavier than that for the single-rate model.

3 The recursive least squares algorithm

In order to show the advantages of the proposed algorithm in this paper, we recall the well-established recursive least squares (RLS) algorithm in this section.

Let the superscript T denote the vector transpose and define the parameter vector θ and the information vector φ(kT) as

Equation (5) can be written in the vector form

\[
y(kT)=\boldsymbol{\varphi}^{\mathrm{T}}(kT)\boldsymbol{\theta}+v(kT).
\tag{6}
\]

The estimate \(\hat{\boldsymbol{{\theta}}}(kT)\) of the parameter vector θ in (6) can be obtained from the following RLS algorithm:

where \(\boldsymbol{P}_{0}(kT)\in{\mathbb{R}}^{n_{0}\times n_{0}}\) is the covariance matrix and I is an identity matrix of appropriate sizes.
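As a rough illustration of what such a recursion does at each step, the following pure-Python sketch implements the textbook RLS update (gain vector, innovation, covariance update through the matrix inversion lemma). It is an assumption, not something confirmed by the text, that (7)–(8) take exactly this standard form. The function name `rls_step`, the noise-free test data and the initial value p0 = 1e6 are illustrative.

```python
import random

def rls_step(theta, P, phi, y):
    """One textbook RLS step (assumed form of the recursion).

    theta: current estimate (list), P: covariance matrix (list of rows),
    phi: information vector (list), y: measured scalar output.
    """
    n = len(theta)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]            # L = P*phi / (1 + phi'*P*phi)
    innovation = y - sum(phi[i] * theta[i] for i in range(n))
    theta_new = [theta[i] + gain[i] * innovation for i in range(n)]
    # P_new = P - L * (P*phi)', valid because P is symmetric
    P_new = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return theta_new, P_new

# Hypothetical noise-free data y = phi' * theta_true, used only to exercise the update
random.seed(0)
theta_true = [0.5, -0.3, 1.2]
n0, p0 = len(theta_true), 1e6
theta = [1.0 / p0] * n0
P = [[p0 if i == j else 0.0 for j in range(n0)] for i in range(n0)]
for _ in range(200):
    phi = [random.gauss(0.0, 1.0) for _ in range(n0)]
    y = sum(p * t for p, t in zip(phi, theta_true))
    theta, P = rls_step(theta, P, phi, y)
```

Every step touches all \(n_{0}^{2}\) entries of the covariance matrix, which is the cost that a decomposition into smaller submodels is meant to reduce.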

Remark 3

It is known that the RLS algorithm has the advantage of a fast convergence rate, but its computational burden is relatively heavy. From (7)–(8), we can see that the RLS algorithm for non-uniformly sampled systems requires calculating the covariance matrix \(\boldsymbol{P}_{0}(kT)\) of a large size \(n_{0}\times n_{0}\) at each recursion step. Since the number \(n_{0}=(r+1)n\) is larger than the parameter number 2n+1 of single-rate systems, the RLS algorithm in (7)–(8) carries a heavier computational burden than the RLS algorithm for single-rate systems. This motivates us to present a more computationally efficient algorithm to estimate the parameter vector \(\boldsymbol{\theta}\) in (6).

4 The hierarchical estimation algorithm

The hierarchical identification principle is an effective way of handling large-scale systems by reducing the computational load [5–7, 11]. Here, we use the hierarchical identification principle to derive the hierarchical least squares (HLS) algorithm for the system in (5).

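Decompose the information vector \(\boldsymbol{\varphi}(kT)\) into N sub-information vectors \(\boldsymbol{\varphi}_{i}(kT)\) and the parameter vector \(\boldsymbol{\theta}\) into N sub-parameter vectors \(\boldsymbol{\theta}_{i}\), each of dimension \(n_{i}\), i.e.,

\[
\boldsymbol{\varphi}(kT)=\bigl[\boldsymbol{\varphi}_{1}^{\mathrm{T}}(kT),\boldsymbol{\varphi}_{2}^{\mathrm{T}}(kT),\ldots,\boldsymbol{\varphi}_{N}^{\mathrm{T}}(kT)\bigr]^{\mathrm{T}},\qquad
\boldsymbol{\theta}=\bigl[\boldsymbol{\theta}_{1}^{\mathrm{T}},\boldsymbol{\theta}_{2}^{\mathrm{T}},\ldots,\boldsymbol{\theta}_{N}^{\mathrm{T}}\bigr]^{\mathrm{T}},\qquad
n_{1}+n_{2}+\cdots+n_{N}=n_{0}.
\]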

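Then the overall identification model in (6) can be written as

\[
y(kT)=\sum_{i=1}^{N}\boldsymbol{\varphi}_{i}^{\mathrm{T}}(kT)\boldsymbol{\theta}_{i}+v(kT).
\tag{9}
\]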

Let \(\hat{\boldsymbol{{\theta}}}_{i}(kT)\) be the estimate of θ i at time t=kT. According to the least squares principle, the estimation algorithm for θ i in (9) can be expressed as

where \(\boldsymbol{P}_{i}(kT)\) is the covariance matrix of the ith subsystem.

Remark 4

The expression on the right-hand side of (10) contains the unknown parameter vectors \(\boldsymbol{\theta}_{j}\), j=1,2,…,i−1,i+1,…,N, so it cannot be computed directly. Using the hierarchical identification principle and replacing these unknown vectors \(\boldsymbol{\theta}_{j}\) (j≠i) in (10) with their estimates \(\hat{\boldsymbol{{\theta }}}_{j}(kT-T)\) at the preceding time t=kT−T yields

Equations (11) and (12) form the hierarchical least squares (HLS) identification algorithm.

To initialize the algorithm, we take \(\boldsymbol {P}_{i}(0)=p_{0}\boldsymbol{I}_{n_{i}}\), with \(p_{0}\) being normally a large positive number (e.g., \(p_{0}=10^{6}\)), and \(\hat{\boldsymbol{{\theta}}}_{i}(0)=\mathbf{1}_{n_{i}}/p_{0}\), with \(\mathbf{1}_{n_{i}}\) being an \(n_{i}\)-dimensional column vector whose elements are all 1.
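The structure of the hierarchical recursion can be sketched in pure Python as follows. The sketch assumes that each submodel performs an RLS-type update with its own small covariance matrix, driven by a common innovation in which all unknown sub-parameter vectors are replaced by their estimates from time kT−T, as described in Remark 4. The exact form of (11)–(12) is not reproduced here, and the update-freezing condition stated in Theorem 1 is omitted. The names `hls_init` and `hls_step` and the test values are hypothetical.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def hls_init(sizes, p0=1e6):
    """P_i(0) = p0 * I and theta_i(0) = ones / p0 for each submodel size n_i."""
    thetas = [[1.0 / p0] * n for n in sizes]
    Ps = [[[p0 if r == c else 0.0 for c in range(n)] for r in range(n)]
          for n in sizes]
    return thetas, Ps

def hls_step(thetas, Ps, phis, y):
    """One assumed HLS step; every submodel uses the estimates from time kT-T."""
    # Common innovation: output minus the prediction from all previous sub-estimates.
    innovation = y - sum(dot(phi, th) for phi, th in zip(phis, thetas))
    new_thetas, new_Ps = [], []
    for theta, P, phi in zip(thetas, Ps, phis):
        n = len(phi)
        P_phi = [dot(row, phi) for row in P]
        denom = 1.0 + dot(phi, P_phi)
        gain = [v / denom for v in P_phi]        # equals P_i(kT) * phi_i(kT)
        new_thetas.append([t + g * innovation for t, g in zip(theta, gain)])
        new_Ps.append([[P[r][c] - gain[r] * P_phi[c] for c in range(n)]
                       for r in range(n)])
    return new_thetas, new_Ps

# Hypothetical data: N = 2 submodels with n1 = n2 = 3 and a small output noise
random.seed(1)
theta_true = [[0.4, -0.2, 0.7], [1.0, 0.3, -0.5]]
thetas, Ps = hls_init([3, 3])
for _ in range(2000):
    phis = [[random.gauss(0.0, 1.0) for _ in block] for block in theta_true]
    y = sum(dot(p, t) for p, t in zip(phis, theta_true)) + random.gauss(0.0, 0.1)
    thetas, Ps = hls_step(thetas, Ps, phis, y)
```

Only N covariance matrices of sizes \(n_{i}\times n_{i}\) are updated per step instead of one \(n_{0}\times n_{0}\) matrix. With a single submodel (N=1) the step reduces to the ordinary RLS update.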

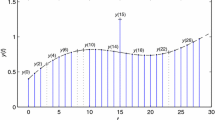

Remark 5

The diagram of the HLS algorithm is shown in Fig. 1. From the HLS algorithm in (11)–(12) and Fig. 1, we can see that the parameter estimate \(\hat{\boldsymbol{{\theta}}}_{i}(kT)\) at time t=kT depends not only on \(\hat{\boldsymbol{{\theta}}}_{i}(kT-T)\) at the preceding time t=kT−T, but also on the estimates \(\hat{\boldsymbol{{\theta}}}_{j}(kT-T)\) (j=1,2,…,i−1,i+1,…,N) of all the other submodels at time t=kT−T.

Remark 6

The proposed HLS algorithm in (11)–(12) has a lower computational burden than the RLS algorithm in (7)–(8). The computational burdens of the two algorithms are compared in Table 1, where the numbers of multiplications and additions are given for each recursion step. Taking a non-uniformly sampled system with \(n_{0}=6\) and N=2 as an example, i.e., \(n_{1}=n_{2}=3\), the numbers of multiplications and additions are shown in the braces.

Remark 7

From Table 1, we can see that the computational cost of the HLS algorithm in (11)–(12) is lower than that of the RLS algorithm and that it depends on the choice of the number N: the larger N, the lower the computational cost. When \(N=n_{0}\), i.e., \(n_{i}=1\), the computational cost is lowest.
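The comparison can be made concrete with a small Python sketch. Table 1 is not reproduced in this text, so the per-step counts used below (\(2n^{2}+4n\) multiplications and \(2n^{2}+2n\) additions for a least squares recursion of dimension n, summed over the submodels) are an assumption. They were chosen because they reproduce the figures quoted in Section 6 for \(n_{0}=6\) and N=2. The function name `flops_per_step` is illustrative.

```python
def flops_per_step(sizes):
    """(multiplications, additions) per step under the assumed per-submodel counts."""
    mults = sum(2 * n * n + 4 * n for n in sizes)
    adds = sum(2 * n * n + 2 * n for n in sizes)
    return mults, adds

rls = flops_per_step([6])       # one model of dimension n0 = 6
hls = flops_per_step([3, 3])    # N = 2 submodels with n1 = n2 = 3
saving = 1 - sum(hls) / sum(rls)
print(rls, hls, round(saving, 2))   # (96, 84) (60, 48) 0.4
```

Under the same assumed counts, calling `flops_per_step([1] * 6)` gives the case \(N=n_{0}\), where every submodel is scalar.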

5 Main convergence results

In this section, we establish the main convergence results of the HLS algorithm for non-uniformly sampled systems, using the martingale convergence theorem (Lemma D.5.3 in [16]). Two problems are addressed: (1) Do the parameter estimates given by the HLS algorithm converge to the true parameters? (2) How fast does the algorithm converge?

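Let us introduce some notation. The symbols \(\lambda_{\max}[\boldsymbol{X}]\) and \(\lambda_{\min}[\boldsymbol{X}]\) represent the maximum and minimum eigenvalues of the positive definite matrix \(\boldsymbol{X}\), respectively; \(\|\boldsymbol{X}\|^{2}:=\mathrm{tr}[\boldsymbol{X}\boldsymbol{X}^{\mathrm{T}}]\). The relation f(k)=O(g(k)) means that there exist positive constants \(\delta_{1}\) and \(k_{0}\) such that \(|f(k)|\leqslant\delta_{1}g(k)\) for g(k)⩾0 and \(k\geqslant k_{0}\).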

Assume that \(\{v(kT), \mathcal{F}_{kT}\}\) is a martingale difference sequence defined on a probability space \(\{\varOmega, \mathcal{F}, P\}\), where \(\{\mathcal{F}_{kT}\}\) is the σ algebra sequence generated by the observations up to and including time t=kT [16]. The noise sequence {v(kT)} satisfies the following assumptions:

Lemma 1

For the algorithm in (11)–(12), the following inequalities hold:

The proof can be done in a similar way to that of Lemma 1 in [24].

Theorem 1

For the system in (6) and the HLS algorithm in (11)–(12), if

then we take \(\hat{\boldsymbol{{\theta}}}(kT)=\hat{\boldsymbol {{\theta}}}(kT-T)\). Define

and assume that (A1) and (A2) hold, then for any c>1, we have

Proof

Define the parameter estimation error vector \(\tilde{\boldsymbol{{\theta}}}(kT)\) and the nonnegative definite functions \(Q_{i}(kT)\) as

and

Define the innovation

Using (9), we have

Inserting (12) into (14) gives

Define the stochastic Lyapunov function

Since {v(kT)} is a white noise sequence, taking the conditional expectation of both sides of (18) with respect to \(\mathcal{F}_{kT-T}\) and using (A1)–(A2) give

If γ(kT)⩾0, we have

otherwise, we have \(\hat{\boldsymbol{{\theta}}}(kT):=\hat {\boldsymbol{{\theta}}}(kT-T)\) and Q(kT):=Q(kT−T). Thus, we always have

Define

Using (20), we have

According to Lemma 1, the summation of the last term on the right-hand side of (21) for k from 1 to ∞ is finite. Applying the martingale convergence theorem (Lemma D.5.3 in [16]) to (21), we conclude that Z(kT) converges \(\mathrm{a.s.}\) to a finite random variable, say \(Z_{0}\), i.e.,

Thus we have

From the definition of \(Q_{i}(kT)\), we have

This proves Theorem 1. □

Corollary 1

For the system in (6) and the HLS algorithm in (11)–(12), assume that the conditions of Theorem 1 hold and that there exist positive constants \(c_{1}\), \(c_{2}\), \(c_{0}\) and \(k_{0}\) such that for \(k\geqslant k_{0}\), the following general persistent excitation condition holds:

Then the parameter estimation errors converge to zero, i.e., \(\|\tilde{\boldsymbol{{\theta}}}_{i}(kT)\|^{2}\rightarrow0\), a.s., i=1,2,…,N.

Proof

From the definition of \(\boldsymbol{P}_{i}(kT)\) and the condition (A3), we have

Using Theorem 1, we have

The proof is completed. □

Remark 8

Corollary 1 shows that the estimation errors \(\|\tilde{\boldsymbol{{\theta}}}_{i}(kT)\|^{2}\) converge to zero at the rate of \(N[\ln k]^{c}/k\) as k approaches infinity. This implies that the convergence rate depends on the number N and that the larger N, the slower the convergence rate. Therefore, the HLS algorithm in (11)–(12) reduces the computational load at the cost of slowing down the convergence rate. In order to guarantee a certain convergence rate, the number N should not be too large.

6 Example

Consider a stable continuous-time process with the following state-space representation:

Take r=2, \(\tau_{1}=0.3\) s and \(\tau_{2}=0.5\) s; then \(t_{1}=\tau_{1}=0.3\) s and \(t_{2}=\tau_{1}+\tau_{2}=T=0.8\) s. Discretizing this system gives

The corresponding input–output relationship is

In the simulation, the inputs \(\{u(kT+t_{i}), i=0,1\}\) are taken as persistent excitation signal sequences with zero mean and unit variance, and {v(kT)} as a white noise sequence with zero mean and variance \(\sigma^{2}=0.10^{2}\). The corresponding noise-to-signal ratio is \(\delta_{\mathrm{ns}}=11.82\) %. The parameter estimates and their errors \(\delta:=\|\hat{\boldsymbol{{\theta}}}(kT)-\boldsymbol {{\theta}}\|/\|\boldsymbol{{\theta}}\|\) given by the RLS algorithm in (7)–(8) are shown in Table 2. In this simulation, the number of parameters is \(n_{0}=6\). Let N=2 and \(n_{1}=n_{2}=3\), and apply the HLS algorithm in (11)–(12) to estimate the parameters of this non-uniformly sampled system. The simulation results are shown in Table 3. The estimation errors δ versus k of the two algorithms are shown in Fig. 2.
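For readers reproducing such a simulation, the relative error δ defined above can be computed as in the following minimal Python sketch. The function name `relative_error` and the sample vectors are illustrative and are not taken from the tables of the paper.

```python
import math

def relative_error(theta_hat, theta):
    """delta = ||theta_hat - theta|| / ||theta|| with the Euclidean norm."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(theta_hat, theta)))
    den = math.sqrt(sum(b * b for b in theta))
    return num / den

# An all-zero estimate has a relative error of exactly 1 (that is, 100 %)
print(relative_error([0.0, 0.0], [3.0, 4.0]))   # 1.0
```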

From Tables 1–3 and Fig. 2, we have the following conclusions:

- The identification model is a fictitious two-input single-output system because the input is non-uniformly updated twice over each frame period. Table 2 and Fig. 2 show that the RLS algorithm gives highly accurate estimates.
- In this simulation, r=2, \(n_{0}=6\) and N=2. From Table 1, we can see that the numbers of multiplications and additions at each step for the HLS algorithm are 60 and 48, respectively, fewer than the 96 and 84 of the RLS algorithm. Compared with the RLS algorithm, the HLS algorithm saves 40 % of the computational cost (108 versus 180 operations per step).
- The parameter estimation errors of the HLS algorithm become smaller and gradually approach zero as the data length k increases.
- The convergence rate of the HLS algorithm is a little slower than that of the RLS algorithm.

7 Conclusions

In this paper, an HLS algorithm is presented for a class of non-uniformly sampled systems based on the hierarchical identification principle. The main advantage of the proposed algorithm is that it reduces the computational load compared with the traditional RLS algorithm. The convergence analysis indicates that the parameter estimates given by the HLS algorithm converge to their true values, and that the number N should not be too large in the implementation because the number of submodels affects the convergence rate. A numerical example shows that the HLS algorithm gives effective parameter estimates with lower computational effort. The proposed hierarchical method, based on decomposing the parameter vector and the information vector, can be extended to a variety of system models such as multivariable systems with complicated colored noise [12]. Owing to its reduced computational complexity, the proposed identification method could be applied to resource-constrained networked dynamic systems [19, 29, 30, 42, 44].

References

A. Al-Smadi, A least-squares-based algorithm for identification of non-Gaussian ARMA models. Circuits Syst. Signal Process. 26(5), 715–731 (2007)

T. Chen, B. Francis, Optimal Sampled-Data Control Systems (Springer, London, 1995)

M. Cimino, P.R. Pagilla, Design of linear time-invariant controllers for multirate systems. Automatica 46(8), 1315–1319 (2010)

M. Cimino, P.R. Pagilla, Conditions for the ripple-free response of multirate systems using linear time-invariant controllers. Syst. Control Lett. 59(8), 510–516 (2010)

F. Ding, T. Chen, Hierarchical least squares identification methods for multivariable systems. IEEE Trans. Autom. Control 50(3), 397–402 (2005)

F. Ding, T. Chen, Hierarchical identification of lifted state-space models for general dual-rate systems. IEEE Trans. Circuits Syst. I, Regul. Pap. 52(6), 1179–1187 (2005)

F. Ding, J. Yang, Hierarchical identification of large scale systems. Acta Autom. Sin. 25(5), 647–654 (1999)

F. Ding, L. Qiu, T. Chen, Reconstruction of continuous-time systems from their non-uniformly sampled discrete-time systems. Automatica 45(2), 324–332 (2009)

F. Ding, G. Liu, X.P. Liu, Partially coupled stochastic gradient identification methods for non-uniformly sampled systems. IEEE Trans. Autom. Control 55(8), 1976–1981 (2010)

F. Ding, G. Liu, X.P. Liu, Parameter estimation with scarce measurements. Automatica 47(8), 1646–1655 (2011)

J. Ding, F. Ding, X.P. Liu, G. Liu, Hierarchical least squares identification for linear SISO systems with dual-rate sampled-data. IEEE Trans. Autom. Control 56(11), 2677–2683 (2011)

F. Ding, Y.J. Liu, B. Bao, Gradient based and least squares based iterative estimation algorithms for multi-input multi-output systems. Proc. Inst. Mech. Eng., Part I, J. Syst. Control Eng. 226(1), 43–55 (2012)

M. Embiruçu, C. Fontes, Multirate multivariable generalized predictive control and its application to a slurry reactor for ethylene polymerization. Chem. Eng. Sci. 61(17), 5754–5767 (2006)

J. Feng, Z. Wang, M. Zeng, Recursive robust filtering with finite-step correlated process noises and missing measurements. Circuits Syst. Signal Process. 30(6), 1355–1368 (2011)

H. Fujimoto, Y. Hori, High-performance servo systems based on multirate sampling control. Control Eng. Pract. 10(7), 773–781 (2002)

G.C. Goodwin, K.S. Sin, Adaptive Filtering Prediction and Control (Prentice-Hall, Englewood Cliffs, 1984)

R.B. Gopaluni, A particle filter approach to identification of nonlinear processes under missing observations. Can. J. Chem. Eng. 86(6), 1081–1092 (2008)

S.C. Kadu, M. Bhushan, R. Gudi, Optimal sensor network design for multirate systems. J. Process Control 18(6), 594–609 (2008)

H. Li, Y. Shi, Robust H ∞ filtering for nonlinear stochastic systems with uncertainties and random delays modeled by Markov chains. Automatica 48(1), 159–166 (2012)

D. Li, S.L. Shah, T. Chen, K.Z. Qi, Application of dual-rate modeling to CCR octane quality inferential control. IEEE Trans. Control Syst. Technol. 11(1), 43–51 (2003)

W. Li, S.L. Shah, D. Xiao, Kalman filters in non-uniformly sampled multirate systems: for FDI and beyond. Automatica 44(1), 199–208 (2008)

Y.J. Liu, F. Ding, Decomposition based least squares estimation algorithm for non-uniformly sampled multirate systems, in Proceedings of the 48th IEEE Conference on Decision and Control, 2009 Held Jointly with the 2009 28th Chinese Control Conference, CDC/CCC 2009. Shanghai, China (2009)

X. Liu, J. Lu, Least squares based iterative identification for a class of multirate systems. Automatica 46(3), 549–554 (2010)

Y.J. Liu, L. Xie, F. Ding, An auxiliary model based on a recursive least-squares parameter estimation algorithm for non-uniformly sampled multirate systems. Proc. Inst. Mech. Eng., Part I, J. Syst. Control Eng. 223(4), 445–454 (2009)

Y.J. Liu, L. Yu, F. Ding, Multi-innovation extended stochastic gradient algorithm and its performance analysis. Circuits Syst. Signal Process. 29(4), 649–667 (2010)

L. Ljung, System Identification: Theory for the User (Prentice-Hall, Englewood Cliffs, 1999)

B. Ni, D. Xiao, Identification of non-uniformly sampled multirate systems with application to process data compression. IET Control Theory Appl. 4(6), 970–984 (2010)

J. Sheng, T. Chen, S.L. Shah, Generalized predictive control for non-uniformly sampled systems. J. Process Control 12(8), 875–885 (2002)

Y. Shi, T. Chen, Optimal design of multichannel transmultiplexers with stopband energy and passband magnitude constraints. IEEE Trans. Circuits Syst. II, Analog Digit. Signal Process. 50(9), 659–662 (2004)

Y. Shi, B. Yu, Robust mixed H 2/H ∞ control of networked control systems with random time delays in both forward and backward communication links. Automatica 47(4), 754–760 (2011)

M. Srinivasarao, S.C. Patwardhan, R.D. Gudi, Nonlinear predictive control of irregularly sampled multirate systems using blackbox observers. J. Process Control 17(1), 17–35 (2007)

Y.S. Suh, Stability and stabilization of nonuniform sampling systems. Automatica 44(12), 3222–3226 (2008)

A.K. Tanc, A.H. Kayran, Maximum entropy power spectrum estimation for 2-D multirate systems. Circuits Syst. Signal Process. 31(1), 271–281 (2012)

D.N. Vizireanu, Generalizations of binary morphological shape decomposition. J. Electron. Imaging 16(1), 1–6 (2007)

D.N. Vizireanu, Morphological shape decomposition interframe interpolation method. J. Electron. Imaging 17(1), 1–5 (2008)

D.N. Vizireanu, S. Halunga, G. Marghescu, Morphological skeleton decomposition interframe interpolation method. J. Electron. Imaging 19(2), 1–3 (2010)

L. Xie, H.Z. Yang, F. Ding, Modeling and identification for non-uniformly periodically sampled-data systems. IET Control Theory Appl. 4(5), 784–794 (2010)

H. Yang, Y. Xia, P. Shi, Stabilization of networked control systems with nonuniform random sampling periods. Int. J. Robust Nonlinear Control 21(5), 501–526 (2011)

B. Yu, Y. Shi, H. Huang, l 2–l ∞ Filtering for multirate systems based on lifted models. Circuits Syst. Signal Process. 27(5), 699–711 (2008)

H. Zhang, Y. Shi, A. Saadat Mehr, Robust energy-to-peak filtering for networked systems with time-varying delays and randomly missing data. IET Control Theory Appl. 4(12), 2921–2936 (2010)

H. Zhang, Y. Shi, A. Saadat Mehr, Robust weighted H ∞ filtering for networked systems with intermitted measurements of multiple sensors. Int. J. Adapt. Control Signal Process. 25(4), 313–330 (2011)

H. Zhang, Y. Shi, A. Saadat Mehr, Robust static output feedback control and remote PID design for networked motor systems. IEEE Trans. Ind. Electron. 58(12), 5396–5405 (2011)

H. Zhang, Y. Shi, A. Saadat Mehr, H. Huang, Robust energy-to-peak FIR equalization for time-varying communication channels with intermittent observations. Signal Process. 91(7), 1651–1658 (2011)

H. Zhang, Y. Shi, A. Saadat Mehr, Robust H ∞ PID control for multivariable networked control systems with disturbance/noise attenuation. Int. J. Robust Nonlinear Control 22(2), 183–204 (2012)

Y. Zhu, H. Telkamp, J. Wang, Q. Fu, System identification using slow and irregular output samples. J. Process Control 19(1), 58–67 (2009)

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (60973043, 61075008), the 111 Project (B12018), the University Graduate Innovation Research Program of Jiangsu Province (CX10D_229Z) and the Fundamental Research Funds for the Central Universities (JUDCF09029).

Cite this article

Liu, Y., Ding, F. & Shi, Y. Least squares estimation for a class of non-uniformly sampled systems based on the hierarchical identification principle. Circuits Syst Signal Process 31, 1985–2000 (2012). https://doi.org/10.1007/s00034-012-9421-2
