Abstract

Synchronization of small-world neuronal networks with synapse plasticity is explored in this paper. The variation properties of the synapse weights are studied first, and then the effects of the synapse learning coefficient, the coupling strength and the adding probability on synchronization of the neuronal network are studied in turn. It is shown that an appropriate learning coefficient helps improve synchronization, and that complete synchronization can be obtained by increasing the coupling strength and the adding probability.


1 Introduction

Synchronization is thought to play a key role in communication among neurons. With the development of complex network theory, increasing attention has been paid to the synchronization of complex neuronal networks, especially small-world ones. Most of this research focuses on unweighted or constant-weighted neuronal networks; however, the weights of synapses among neurons keep changing during neuronal growth and during learning and memory processes. In other words, synapses can learn, a property known as synapse plasticity. In this paper, the synchronization of small-world neuronal networks with synapse plasticity is explored numerically.

2 The Mathematical Model

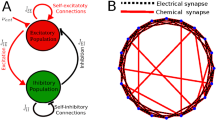

By using the Hindmarsh-Rose neuronal model [1] and the Newman-Watts small-world strategy [2], we set up an electrically coupled neuronal network. The number of neurons is set to \(N=100\).
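The Newman-Watts strategy starts from a regular ring lattice and adds random shortcuts without removing any existing edge. A minimal sketch of building the connectivity matrix \(\mathbf G\) follows. It assumes that each neuron is first linked to its \(k\) nearest neighbors on each side and that every pair of not-yet-connected neurons then receives a shortcut with the adding probability \(p\). The value of \(k\), this per-pair shortcut rule and the helper name `newman_watts_adjacency` are illustrative assumptions; the original Newman-Watts paper instead adds shortcuts with probability \(p\) per bond of the underlying lattice.

```python
import numpy as np


def newman_watts_adjacency(n, k, p, rng):
    """Symmetric 0/1 connectivity matrix G for a Newman-Watts network.

    Assumption: start from a ring where each node is linked to its k nearest
    neighbors on each side, then add a shortcut between every pair of
    not-yet-connected nodes with probability p. No edge is ever removed.
    """
    g = np.zeros((n, n), dtype=int)
    # Regular ring lattice: node i is linked to i+1, ..., i+k (mod n),
    # which together with symmetry gives links to i-1, ..., i-k as well.
    for i in range(n):
        for offset in range(1, k + 1):
            j = (i + offset) % n
            g[i, j] = g[j, i] = 1
    # Random shortcuts, added only where no edge exists yet (g_ii stays 0).
    for i in range(n):
        for j in range(i + 1, n):
            if g[i, j] == 0 and rng.random() < p:
                g[i, j] = g[j, i] = 1
    return g


# N = 100 as in the text; k = 1 and the seed are illustrative choices.
G = newman_watts_adjacency(n=100, k=1, p=0.1, rng=np.random.default_rng(0))
print("undirected edges:", G.sum() // 2)
```

Because shortcuts are only ever added, the ring lattice survives intact for every \(p\), and \(p=0\) reduces to the regular lattice.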

As for synapse plasticity, we define the evolution equation for the weight \(w_{ij}\) between neuron \(i\) and neuron \(j\) as follows [3]:

where \(L\) is a positive synapse learning coefficient and \(x_i\) is the membrane potential of neuron \(i\).

Then the neuronal network with synapse plasticity can be expressed as:

where \(\sigma\) is the coupling strength, \(\mathbf G=\{g_{ij}\}_{N\times N}\) is the connectivity matrix of the network, and \(I\) is the external current. The parameters in the system are set as \(a=1, b=3, c=1, d=5, s=4, r=0.006, \chi=1.6, I=3\). Because ions pass through the channels in both directions, bidirectional connections with unequal weights are adopted here. That is, if neuron \(i\) and neuron \(j\,(i \neq j)\) are connected, there exist weights \(w_{ij}\neq w_{ji}\); if they are not connected, \(w_{ij}=w_{ji}=0\). In addition, \(w_{ii}=0\), which means that no neuron in the network has a self-connection.
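For reference, a common three-variable form of the Hindmarsh-Rose model [1] that matches the parameter names above is \(\dot x = y - a x^3 + b x^2 - z + I\), \(\dot y = c - d x^2 - y\), \(\dot z = r[s(x+\chi) - z]\). The sketch below integrates a single, uncoupled neuron with the quoted parameter values using a fixed-step fourth-order Runge-Kutta scheme. The sign convention \(x+\chi\) (with \(\chi=1.6\)), the step size, the initial state and the function names are assumptions; the coupling and weight-update terms of the network are deliberately omitted.

```python
import numpy as np

# Parameter values quoted in the text.
A, B, C, D, S, R, CHI, I_EXT = 1.0, 3.0, 1.0, 5.0, 4.0, 0.006, 1.6, 3.0


def hr_rhs(state):
    """Right-hand side of the three-variable Hindmarsh-Rose model.

    Assumption: the slow variable uses r * (s * (x + chi) - z) with chi = 1.6.
    """
    x, y, z = state
    dx = y - A * x**3 + B * x**2 - z + I_EXT
    dy = C - D * x**2 - y
    dz = R * (S * (x + CHI) - z)
    return np.array([dx, dy, dz])


def rk4_step(f, state, dt):
    """One classical fourth-order Runge-Kutta step for ds/dt = f(s)."""
    k1 = f(state)
    k2 = f(state + 0.5 * dt * k1)
    k3 = f(state + 0.5 * dt * k2)
    k4 = f(state + dt * k3)
    return state + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def simulate_hr(x0, t_end=1000.0, dt=0.01):
    """Integrate one uncoupled HR neuron and return the x(t) samples."""
    steps = int(round(t_end / dt))
    state = np.asarray(x0, dtype=float)
    xs = np.empty(steps + 1)
    xs[0] = state[0]
    for n in range(steps):
        state = rk4_step(hr_rhs, state, dt)
        xs[n + 1] = state[0]
    return xs


# Illustrative initial state (x, y, z) and a short run.
x_trace = simulate_hr([-1.0, 0.0, 2.0], t_end=200.0)
print("membrane potential range:", x_trace.min(), x_trace.max())
```

A fixed-step integrator keeps the sketch dependency-free; an adaptive solver would be a reasonable alternative for long runs of the full 100-neuron network.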

3 Simulation Results

The variation properties of the synapse weights under the influence of the synapse learning coefficient are studied first. Figure 1 shows the variations of the membrane potentials, connection weights and connection strengths of two arbitrarily chosen connected neurons in the network. The connection strength of neuron \(i\) is defined as \(s_i(t)=\sum_{j=1}^N w_{ij}(t)\).

Fig. 1. Variations of the membrane potentials, connection weights and connection strengths of two arbitrarily chosen connected neurons \((L=8,\ \sigma=0.05,\ p=0.1)\): (a) \(x_i\) and \(x_j\) are the membrane potentials of neuron \(i\) and neuron \(j\), respectively; (b) \(w_{ij}\) and \(w_{ji}\) are the positive and negative weights between neuron \(i\) and neuron \(j\), respectively; (c) \(s_i\) and \(s_j\) are the connection strengths of neuron \(i\) and neuron \(j\), respectively.

It can be seen from Fig. 1 that if both neurons are at rest, the connection weights and connection strengths between them do not change; if neuron \(i\) is excited while neuron \(j\) is at rest, the connection weight from neuron \(i\) to neuron \(j\) is strengthened and the connection weight from neuron \(j\) to neuron \(i\) is weakened. That is, when ion currents flow from one neuron to another through the channels connecting them, the weight in the direction of conduction (the positive weight) is strengthened and the weight in the opposite direction (the negative weight) is weakened. These phenomena are consistent with the actual variations of synapses during excitation transmission among real neurons.
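Since \(s_i(t)\) sums the weights \(w_{ij}\) from neuron \(i\) to all other neurons, it can be computed at each time step as the row sums of the weight matrix \(W=\{w_{ij}\}\). A small sketch, assuming the weights are stored as a NumPy array with a zero diagonal (\(w_{ii}=0\)) and zeros for unconnected pairs; the helper name `connection_strengths` and the weight values are hypothetical, chosen only to illustrate unequal bidirectional weights \(w_{ij}\neq w_{ji}\).

```python
import numpy as np


def connection_strengths(w):
    """Return s_i = sum_j w_ij for every neuron i (the row sums of W)."""
    w = np.asarray(w, dtype=float)
    # The model forbids self-connections, so reject a nonzero diagonal.
    if np.any(np.diag(w) != 0):
        raise ValueError("self-connections must be zero (w_ii = 0)")
    return w.sum(axis=1)


# Hypothetical 3-neuron example: neurons 0 and 1 are connected with unequal
# weights (0.8 vs 0.3); neuron 2 is connected only to neuron 1.
W = np.array([[0.0, 0.8, 0.0],
              [0.3, 0.0, 0.5],
              [0.0, 0.2, 0.0]])
print(connection_strengths(W))
```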

We then discuss the synchronization properties of the network under the influence of synapse plasticity, using the average synchronization error as the characteristic measure, which can be represented as

where \(\langle \cdot \rangle\) denotes the time average and \(i=2,3,\cdots,N\).
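Because the measure runs over neurons \(i=2,\dots,N\), one plausible reading compares each neuron with neuron 1, for example \(E=\big\langle \frac{1}{N-1}\sum_{i=2}^{N}|x_i(t)-x_1(t)|\big\rangle\). The sketch below computes this quantity from sampled membrane potentials. Treat this particular formula, the optional transient cut-off and the function name `average_sync_error` as assumptions rather than the paper's exact definition.

```python
import numpy as np


def average_sync_error(x, transient=0):
    """Time-averaged synchronization error relative to neuron 1.

    x has shape (T, N): x[t, i] is the membrane potential of neuron i+1 at
    sample t. Assumed form: E = < (1/(N-1)) * sum_{i=2..N} |x_i - x_1| >_t.
    The first `transient` samples are discarded before averaging.
    """
    x = np.asarray(x, dtype=float)[transient:]
    deviations = np.abs(x[:, 1:] - x[:, [0]])  # |x_i - x_1| for i = 2..N
    return deviations.mean(axis=1).mean()      # average over neurons, then time


# Identical traces in all five columns give zero error.
t = np.linspace(0.0, 10.0, 1001)
same = np.tile(np.sin(t)[:, None], (1, 5))
print(average_sync_error(same))
```

Discarding an initial transient is a common practical choice so that the time average reflects the settled dynamics rather than the initial conditions.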

The relationship between the average synchronization error and the synapse learning coefficient, obtained by numerical simulation, is shown in Fig. 2.

It can be seen that the average synchronization error first decreases sharply and then oscillates around 0.5. Thus, increasing the synapse learning coefficient improves synchronization but cannot by itself make the network synchronize. This implies that in neuronal networks the learning function of electrical synapses must be neither too strong nor too weak, but appropriate, to maintain synchronizability.

The variations of the average synchronization error with increasing coupling strength and adding probability are shown in Fig. 3a and Fig. 3b, respectively.

It can be seen from Fig. 3a that when the coupling strength increases to \(\sigma=0.06\), the average synchronization error decreases to zero, meaning that the network achieves complete synchronization. Hence, increasing the coupling strength among neurons can eventually make the network synchronize completely. Figure 3b shows that when the adding probability increases to \(p\approx 0.7\), the average synchronization error also decreases to zero. Therefore, in neuronal networks with synapse plasticity, introducing shortcuts can also improve the synchronizability of the network.
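Threshold values such as \(\sigma=0.06\) and \(p\approx 0.7\) are read off a parameter sweep as the point from which the average synchronization error stays at zero. Because a numerically computed error rarely equals zero exactly, a tolerance is needed in practice. The hypothetical helper `sync_threshold` below, assuming a user-chosen tolerance `tol`, returns the smallest swept value from which the error remains below `tol`; the same helper applies to either sweep (coupling strength or adding probability).

```python
import numpy as np


def sync_threshold(param_values, errors, tol=1e-3):
    """Smallest swept parameter value from which the error stays below tol.

    Returns None if the error never settles below tol within the sweep.
    """
    params = np.asarray(param_values, dtype=float)
    errs = np.asarray(errors, dtype=float)
    # Sort by parameter value so "from which on" is well defined.
    order = np.argsort(params)
    params, errs = params[order], errs[order]
    below = errs < tol
    for k in range(len(params)):
        # Require every later sweep point to be synchronized as well,
        # so an isolated dip below tol is not mistaken for the threshold.
        if below[k:].all():
            return float(params[k])
    return None
```

Requiring all later points to stay below the tolerance guards against reporting a threshold from a single lucky sample in an oscillating error curve.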

4 Conclusion

Given that real biological neuronal networks have small-world connectivity and that the connection strengths among neurons change dynamically, we have studied the synchronization of electrically coupled neuronal networks with synapse plasticity. The variation properties of the connection weights were studied first. Then the effects of the synapse learning coefficient, the coupling strength and the adding probability on synchronization were studied in turn. It is shown that an appropriate learning coefficient helps improve the synchronizability of the network, and that increasing the coupling strength and the adding probability can eventually make the neuronal network synchronize completely.

References

[1] Hindmarsh, J.L., Rose, R.M.: A model of neuronal bursting using three coupled first order differential equations. Proc. R. Soc. Lond. Ser. B 221 (1984) 87–102.

[2] Newman, M.E.J., Watts, D.J.: Scaling and percolation in the small-world network model. Phys. Rev. E 60 (1999) 7332–7342.

[3] Zheng, H.Y., Luo, X.S., Lei, Wu: Excitement and optimality properties of small-world biological neural networks with updated weights (in Chinese). Acta Phys. Sin. 57(6) (2008) 3380–3384.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No.10872014).


Copyright information

© 2011 Springer Science+Business Media B.V.

About this paper

Cite this paper

Han, F., Lu, Q., Meng, X., Wang, J. (2011). Synchronization of Small-World Neuronal Networks with Synapse Plasticity. In: Wang, R., Gu, F. (eds) Advances in Cognitive Neurodynamics (II). Springer, Dordrecht. https://doi.org/10.1007/978-90-481-9695-1_46


DOI: https://doi.org/10.1007/978-90-481-9695-1_46


Publisher Name: Springer, Dordrecht

Print ISBN: 978-90-481-9694-4

Online ISBN: 978-90-481-9695-1

eBook Packages: Biomedical and Life Sciences (R0)