Abstract

Eye tracking is a promising technology for human-computer interaction, yet it is rarely used in practical applications. We argue that the main drawback of contemporary eye trackers is their limited accuracy. There is no standard way of specifying this accuracy, which leads eye tracker manufacturers to underestimate the accuracy error. In this work we perform a subjective perceptual experiment measuring the accuracy of two typical eye trackers: a commercial corneal reflection-based device mounted under a display and a head-mounted do-it-yourself device of our own construction. The experiment takes various conditions into consideration, including viewing angle, human traits, and visual fatigue. The results indicate that eye tracker accuracy is observer-dependent and that the measured gaze directions exhibit a large variance. Interestingly, the perceptually measured accuracy of the low-cost do-it-yourself device is close to that of the professional device.

1 Introduction

Eye tracking is a technique for capturing the gaze direction of human eyes. Interestingly, although a range of eye-tracking devices is available, they are rarely used in practical applications. The main drawback of contemporary eye trackers is their limited accuracy. An average accuracy error below 0.5\(^\circ \) of the viewing angle (roughly 20 pixels on a 22” display observed from a distance of 65 cm) can be obtained only with very expensive and/or intrusive eye trackers used together with a chin-rest or bite bar. Moreover, the accuracy of eye trackers is observer-dependent and difficult to reproduce even for the same observer in subsequent eye tracking sessions.

To the best of our knowledge, there is no formal standard that specifies how to measure the accuracy of an eye tracker [1]. This leads eye tracker manufacturers to underestimate the accuracy error. In this work we propose a technique that measures the accuracy in a straightforward manner and can be applied to any type of eye tracker. The technique does not interfere with the eye tracker’s software and hardware components: we use the native calibration procedure delivered by the manufacturer and measure the accuracy based on raw, unfiltered gaze data. As a proof of concept we measure the accuracy of two typical eye trackers: the commercial RED250 from SensoMotoric Instruments [2] and a low-cost Do-It-Yourself (DIY) eye tracker of our own construction [3].

We perform a perceptual experiment in which people are asked to look at markers displayed at known locations on the screen. Their gaze direction is captured by the eye tracker, and the accuracy of the device is analysed by comparison with the reference direction. Additionally, we evaluate the accuracy for different viewing angles and for the declared level of visual fatigue of the observer’s eyes.

In Sect. 2 we present basic information on eye tracking technology. The proposed accuracy measurement procedure is introduced in Sect. 3. We provide details of the perceptual experiment in Sect. 4 and analyse its results in Sect. 5.

2 Background

2.1 Tracking of Human Gaze Direction

The human viewing angle spans more than 180\(^\circ \) horizontally and 130\(^\circ \) vertically, although the binocular field of vision is limited to about 120\(^\circ \) horizontally. The eye can rotate about 45\(^\circ \) in all directions, although for angles larger than 20\(^\circ \) it is more natural to move the head than the eyes [4, Sect. 4.2.1], [5, Sect. 24]. It is therefore reasonable for an eye tracker to cover only 40\(^\circ \) (\(\pm 20^\circ \)) of the human viewing angle, which is equivalent to a 21” monitor observed from a distance of about 74 cm. For larger displays, tracking the full range of the viewing angle should be supported by head tracking devices.
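As a quick check of the monitor equivalence above, the following sketch computes the angle between the screen centre and the edge of a display seen from a given distance. It assumes that the 21” size is the screen diagonal, that the \(\pm 20^\circ \) range is measured along that diagonal, and that the eye is centred in front of the screen; the function name is illustrative only.

```python
import math

def half_angle_deg(extent_cm: float, distance_cm: float) -> float:
    """Angle in degrees between the screen centre and a point extent_cm / 2 away from it."""
    return math.degrees(math.atan((extent_cm / 2.0) / distance_cm))

# Assumption: the quoted 21-inch size is the screen diagonal (1 inch = 2.54 cm).
diagonal_cm = 21 * 2.54
angle = half_angle_deg(diagonal_cm, 74.0)
print(f"half-diagonal viewing angle: {angle:.1f} degrees")  # close to 20 degrees
```

Under these assumptions the half-diagonal angle comes out just under 20\(^\circ \), which agrees with the figure quoted in the text.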

Eye trackers capture two types of eye movement: smooth pursuit and saccades. Smooth pursuit is active when the eyes track a moving target and are capable of matching its velocity. Saccades are rapid eye movements used to reposition the fovea to a new location [6]. Other types of eye movement, such as vestibular movements, miniature eye movements (drift, nystagmus, microsaccades), and vergence, have a smaller effect on the determination of the gaze direction [7–9].

The main goal of gaze tracking is to capture the single location an observer intends to look at. This process is known as visual fixation [10]. A point of fixation can be estimated as a location where the gaze remains stable in space and time. Various computational techniques estimate the location of this point by analysing smooth pursuit and saccadic movements (see [10–13] for a survey). Fixation is controlled by cognitive processes in the human brain, and the identification of its location strongly depends on the top-down visual mechanism (the task given to the observer before eye tracking [14]). It is therefore difficult to propose a general fixation computation model suitable for every application. Moreover, the parameter settings of fixation algorithms are crucial to their operation [13]. For these reasons, we base the accuracy measurement on the raw gaze data rather than on data processed by fixation identification algorithms.

2.2 Eye Tracking Technology

Devices called video eye trackers [11, Chap. 5.4] usually consist of an infrared camera and an infrared light source, both directed at the eye. The camera captures an image of the eye with the dark circle of the pupil and the bright corneal glint, which is a reflection of the infrared light from the outer surface of the cornea. The pupil follows the gaze direction during eye movement while the corneal reflection stays in the same position. The relative position between the reflection and the centre of the pupil is used to estimate the gaze direction. This type of eye tracker is called pupil-corneal reflection (P-CR). Another option is to use a chin rest to stabilise the observer’s head. Assuming that the head does not move relative to the display, only the location of the pupil centre needs to be estimated to compute the gaze direction.

To find the gaze point (also called the point-of-regard, POR) at which an observer looks on the screen, the mapping between the camera image and the screen surface must be determined. This mapping is difficult to compute directly because of the unknown initial position of the head and the complex geometry of the eye movement. Therefore, eye trackers employ a calibration based on a non-linear approximation technique [9, 15, 16]. In this technique an observer is asked to look at a set of target points displayed one by one at different positions on the screen. The relation between the calculated positions of the pupil centre and the known positions of the target points is used to approximate the coefficients \(a_{0-5}\) and \(b_{0-5}\) of a pair of second-order polynomials:

\[
\begin{aligned}
screen_x &= a_0 + a_1 x + a_2 y + a_3 x y + a_4 x^2 + a_5 y^2,\\
screen_y &= b_0 + b_1 x + b_2 y + b_3 x y + b_4 x^2 + b_5 y^2,
\end{aligned}
\tag{1}
\]

where \((screen_x,screen_y)\) denotes the gaze point coordinates on the screen and (x, y) are the coordinates of the centre of the pupil in image coordinates. At least six target points are needed to compute the twelve unknown coefficients, because each target point contributes one equation for \(screen_x\) and one for \(screen_y\). Additional target points are used to balance the accuracy between the centre and the corners of the screen (larger inaccuracies are induced by the wide viewing angles to target points located in the monitor corners).

The accuracy of the mapping in Eq. 1 depends on the exact estimation of the pupil positions during observation of the target points. An observer is asked to look at each point for a few seconds to ensure that she or he gazes directly at it, and some of this data is filtered out.
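The calibration step can be illustrated with a small least-squares fit of the second-order polynomials from Sect. 2.2. This is a minimal sketch and not the procedure of any particular eye tracker: the ordering of the six polynomial terms, the use of normalised pupil coordinates, the 3 × 3 layout of calibration points, and all function names are assumptions made for the example. The data are synthetic and noise-free.

```python
def features(x, y):
    """The six terms of a second-order polynomial in the pupil centre (x, y)."""
    return [1.0, x, y, x * y, x * x, y * y]

def solve(A, b):
    """Solve the square system A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (M[r][n] - s) / M[r][r]
    return z

def fit(pupil, screen_values):
    """Least-squares coefficients from the normal equations (F^T F) z = F^T s."""
    F = [features(x, y) for x, y in pupil]
    n = 6
    FtF = [[sum(row[i] * row[j] for row in F) for j in range(n)] for i in range(n)]
    Fts = [sum(row[i] * s for row, s in zip(F, screen_values)) for i in range(n)]
    return solve(FtF, Fts)

def map_gaze(a, b, x, y):
    """Map a pupil centre to screen coordinates with fitted coefficients a and b."""
    f = features(x, y)
    return (sum(ai * fi for ai, fi in zip(a, f)),
            sum(bi * fi for bi, fi in zip(b, f)))

# Synthetic 9-point calibration: pupil centres normalised to [-1, 1] (assumption).
pupil = [(x, y) for y in (-1.0, 0.0, 1.0) for x in (-1.0, 0.0, 1.0)]
a_true = [840.0, 500.0, 20.0, 5.0, -30.0, 10.0]   # made-up coefficients
b_true = [525.0, 15.0, 300.0, -4.0, 8.0, -20.0]   # made-up coefficients
screen_x = [map_gaze(a_true, b_true, x, y)[0] for x, y in pupil]
screen_y = [map_gaze(a_true, b_true, x, y)[1] for x, y in pupil]

a_fit = fit(pupil, screen_x)
b_fit = fit(pupil, screen_y)
print(map_gaze(a_fit, b_fit, 0.25, -0.5))
```

Nine points give eighteen equations for twelve unknowns, so the system is overdetermined and the fit averages out measurement noise. With raw image-pixel coordinates the normal equations can become poorly conditioned, which is why the sketch normalises the pupil coordinates first.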

3 Accuracy Measurement

Eye tracker accuracy is defined as the average angular offset (distance in degrees of viewing angle) between the gaze direction measured by an eye tracker and the corresponding direction to a known reference target. The main task of accuracy measurement is thus to compare the gaze direction captured by an eye tracker with the reference gaze direction, that is, the direction from the observer’s eyes to a known point (called the target point).

3.1 Procedure

The accuracy measurement procedure consists of three steps: calibration, validation, and data analysis (see Fig. 1).

Calibration is performed by the proprietary eye tracker software. We treat calibration as a native feature of the eye tracker and do not test its accuracy separately. Repeating the measurement multiple times for each observer includes the calibration error in the overall results and provides an objective assessment of the calibration accuracy.

In the validation phase, participants look at a circular marker displayed for 2 s at each of 25 different positions (see Fig. 1). These positions, called target points, act as known and imposed fixation points. The marker moves between target points in random order. Smooth animation of the marker between target points helps the observer to fixate faster and reduces the number of outliers. Additionally, the marker shrinks when it reaches its target position to focus the observer’s attention on a smaller area.
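The layout and ordering of the target points can be sketched as follows. The text specifies 25 positions presented in random order; the regular 5 × 5 grid (which matches the 16 exterior and 9 interior points analysed in Sect. 5), the 10% screen margin, and the function names are assumptions made for illustration.

```python
import random

def target_points(x_res, y_res, n=5, margin=0.1):
    """Return n * n target positions in pixels on a regular grid inside the screen."""
    xs = [x_res * (margin + (1 - 2 * margin) * i / (n - 1)) for i in range(n)]
    ys = [y_res * (margin + (1 - 2 * margin) * j / (n - 1)) for j in range(n)]
    return [(x, y) for y in ys for x in xs]  # row-major order

def is_exterior(index, n=5):
    """True if the point with this row-major index lies on the border of the grid."""
    row, col = divmod(index, n)
    return row in (0, n - 1) or col in (0, n - 1)

points = target_points(1680, 1050)
order = list(range(len(points)))
random.Random(0).shuffle(order)  # random presentation order, seeded for repeatability

for index in order[:3]:
    kind = "exterior" if is_exterior(index) else "interior"
    print(index, points[index], kind)
```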

We record the gaze direction captured by the eye tracker during the whole validation phase. The locations of the gaze points are transformed from screen coordinates to accuracy units (see Sect. 3.2). In the data analysis phase, the average accuracy, standard deviation, and maximum error are computed to express the accuracy and robustness of the eye tracker (see Sect. 5).

3.2 Accuracy Units

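We express the eye tracker accuracy (ac) as the angle between two rays from the eye centre: one to the point captured by the eye tracker (the gaze point) and one to the target point. We compute this angle as the arccosine of the dot product of the corresponding normalised direction vectors. Both vectors (\(\mathbf v _{gaze}\) and \(\mathbf v _{tp}\)) are calculated from the distance from the eyes’ plane to the screen (hs), the position of the centre of the screen \((x_{c},y_{c})\), the gaze point position \((x_{gaze},y_{gaze})\), and the target point position \((x_{tp},y_{tp})\):

\[
\begin{aligned}
\mathbf v_{gaze} &= \left[ (x_{gaze}-x_{c})\,\frac{width}{x_{res}},\; (y_{gaze}-y_{c})\,\frac{height}{y_{res}},\; hs \right],\\
\mathbf v_{tp} &= \left[ (x_{tp}-x_{c})\,\frac{width}{x_{res}},\; (y_{tp}-y_{c})\,\frac{height}{y_{res}},\; hs \right],\\
ac &= \arccos \left( \frac{\mathbf v_{gaze}\cdot \mathbf v_{tp}}{\Vert \mathbf v_{gaze}\Vert \,\Vert \mathbf v_{tp}\Vert } \right),
\end{aligned}
\tag{2}
\]

where \((x_{res},y_{res})\) denotes the screen resolution in pixels, and \(width\) and \(height\) denote the physical screen dimensions. The screen width and height are expressed in the same units as hs; all other values are expressed in screen pixel coordinates.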

The above formula provides a more accurate calculation than the standard computation of the angular deviation from the centre of the screen (see equations in [17, Sect. 9.4]), which introduces distortions at the edges of the screen. For example, in our eye tracker setup the calculation precision for a 50-pixel shift between the gaze point and the target point is 0.2674\(^\circ \) at the screen corner, and 0.1428\(^\circ \) and 0.0634\(^\circ \) at the horizontal and vertical edges, respectively.
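The angle computation described in this section can be sketched in a few lines. The default parameters are taken from the setup reported in Sect. 4 (1680 × 1050 pixels, 47.5 × 30 cm, 65 cm viewing distance); the function name, the assumption that the eye lies on the axis through the screen centre, and the sample gaze data are illustrative only.

```python
import math
import statistics

def angular_error_deg(gaze, target, res=(1680, 1050), size_cm=(47.5, 30.0), hs_cm=65.0):
    """Angle in degrees between the rays from the eye to the gaze point and to the target point."""
    cx, cy = res[0] / 2.0, res[1] / 2.0  # screen centre in pixels

    def direction(p):
        # Pixel offset from the screen centre converted to cm, plus the eye-screen distance.
        return ((p[0] - cx) * size_cm[0] / res[0],
                (p[1] - cy) * size_cm[1] / res[1],
                hs_cm)

    vg, vt = direction(gaze), direction(target)
    dot = sum(a * b for a, b in zip(vg, vt))
    norm = math.sqrt(sum(a * a for a in vg)) * math.sqrt(sum(b * b for b in vt))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_angle))

# Made-up (gaze, target) pairs in pixels, for illustration only.
pairs = [((860, 525), (840, 525)), ((200, 120), (168, 105)), ((1500, 930), (1512, 945))]
errors = [angular_error_deg(g, t) for g, t in pairs]
print("mean:", statistics.mean(errors))
print("std: ", statistics.stdev(errors))
print("max: ", max(errors))
```

A 20-pixel horizontal shift at the screen centre yields about 0.5\(^\circ \) with these parameters, in line with the conversion quoted in the Introduction.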

3.3 Filtering

Filtering of outliers is the most problematic issue in eye tracker accuracy measurement. Eye tracker vendors report the accuracy for estimated fixation points computed by their proprietary software, typically from pre-filtered data (e.g. using proprietary fixation detection algorithms that are not disclosed [2]). This yields a lower reported accuracy error because the problematic data is filtered out, but such results do not reflect the accuracy in practical applications. For example, as we measured in a pilot study, the SMI Experiment Center software [17] reports an average accuracy of about 0.5\(^\circ \) for the RED250 eye tracker, which is consistent neither with our experimental evaluation (mean accuracy 1.66\(^\circ \), see Sect. 5) nor with practical experience.

We argue that a more useful accuracy estimate is based on the raw gaze data. We assume that a human is able to fixate on a point steadily for some time (2 s in our evaluation; the ability to fixate for as long as 10 s has been reported [18]) and do not filter out any data. The obvious exception is that we skip gaze points captured while the marker moves between target points. We also do not record data during blinks.
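The only selection applied to the raw data can be expressed as a simple time-window test. In this sketch the sample layout (a timestamp plus screen coordinates, with `None` coordinates marking a blink) and the list of time windows during which the marker rests on a target are hypothetical; the actual data format of the validation software is not described in the text.

```python
def keep_stationary(samples, windows):
    """Keep samples recorded while the marker rests on a target point.

    samples: list of (time_s, x, y); x or y is None when no gaze was captured (e.g. a blink).
    windows: list of (start_s, end_s) intervals during which the marker is stationary.
    """
    kept = []
    for t, x, y in samples:
        if x is None or y is None:
            continue  # no gaze data, e.g. during a blink
        if any(start <= t <= end for start, end in windows):
            kept.append((t, x, y))
    return kept

samples = [(0.5, 100, 100), (1.5, None, None), (2.2, 300, 300), (3.0, 310, 305)]
windows = [(0.0, 2.0), (2.5, 4.5)]  # marker moves between 2.0 s and 2.5 s
print(keep_stationary(samples, windows))
```

No outlier rejection is performed: every valid sample inside a stationary window is kept, however far it lies from the target.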

4 Experimental Evaluation

The main goal of our experimental evaluation was to measure the accuracy of two different eye trackers and to analyse how human traits affect the collected results. We examine whether factors such as observation angle, observer’s gender, fatigue, vision correction, and measurement repetition affect the eye tracker accuracy.

4.1 Participants

Seven participants aged between 22 and 42 took part in our experiment (average age 33 years; 3 females and 4 males). Four participants had normal vision, one wore contact lenses, and two wore glasses. We asked each participant to repeat the experiment ten times at different times of the day, which gave 70 measurement sessions per eye tracker. The sessions were completed within 15 days for RED250 and within 5 days for the DIY eye tracker. Before each session, participants were asked to assess the fatigue of their vision on a 5-point Likert scale (1-excellent, 2-very good, 3-good, 4-tired, 5-very tired). To avoid fatigue, no session took longer than 2 min. The participants were aware that the accuracy of the eye tracker was being tested, but they did not know the details of the experiment.

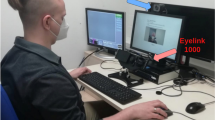

4.2 Apparatus

Our experimental setup is presented in Fig. 2 (left). It consists of the RED250 eye tracker controlled by the proprietary SMI iView X (version 2.5) software running on a dedicated PC. The RED250 eye tracker is mounted under a 22” Dell E2210 LCD display with screen dimensions of 47.5\(\,\times \,\)30 cm and a native resolution of 1680\(\,\times \,\)1050 pixels (60 Hz). The same display was used for the assessment of the DIY eye tracker (presented in Fig. 2, right). The DIY eye tracker is controlled by the third-party ITU Gaze Tracker software (version 2.0 beta) developed at the IT University of Copenhagen [19] and running on a second PC (2.8 GHz Intel i7 930 CPU, NVIDIA GeForce 480 GTI 512 MB graphics card, 8 GB of RAM, Windows 7 OS). This PC also ran our validation software, which collects eye tracking data received from the external applications (SMI iView X or ITU Gaze Tracker), renders graphics, and stores the experiment results. The software was implemented in Matlab using Psychtoolbox [20] and additional mex files written in C++. It communicates with iView X and ITU Gaze Tracker using the User Datagram Protocol (UDP).
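The display dimensions above make it easy to convert an angular error into an on-screen distance. The sketch below does this for a horizontal offset measured from the screen centre, using the 65 cm viewing distance reported in Sect. 4.3; the function name is an assumption, and the conversion is only approximate away from the centre of the screen.

```python
import math

def degrees_to_pixels(angle_deg, distance_cm=65.0, width_cm=47.5, x_res=1680):
    """Horizontal on-screen offset in pixels subtending angle_deg at the screen centre."""
    offset_cm = distance_cm * math.tan(math.radians(angle_deg))
    return offset_cm * x_res / width_cm

print(f"0.5 degrees is about {degrees_to_pixels(0.5):.0f} pixels")
```

With these parameters 0.5\(^\circ \) corresponds to roughly 20 pixels, which matches the figure given in the Introduction.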

4.3 Stimuli and Procedure

Observers sat in front of the display at a distance of 65 cm and used a chin-rest adapted from an ophthalmic slit lamp. Following the ITU-R.REC.BT.500-11 recommendations [21], the experiment started with a training session in which observers could familiarise themselves with the task, interface, chin-rest, and eye trackers. After that session, they could ask questions or start the main experiment.

The actual experiment started with a 9-point calibration controlled by the iView X or ITU Gaze Tracker software. This procedure took about 20 s and involved observing markers displayed in different areas of the screen. The data processing, including computation of the calibration polynomial coefficients (see Sect. 2.2), was performed by the proprietary software. After calibration, the actual validation of eye tracker accuracy was performed following the procedure described in Sect. 3. The locations of the gaze points were still received from iView X or ITU Gaze Tracker, while the positions of the target points, their rendering, and their display were controlled by our validation software.

Instead of measuring the accuracy separately for each eye, we average the gaze positions of the left and right eye measured by the RED250 eye tracker. The DIY eye tracker captures the position of the left eye only.

5 Results

The gaze points captured by the RED250 and DIY eye trackers were transformed to accuracy units (see Sect. 3.2), and the average error for the whole dataset was computed. We obtained an average error of 1.66\(^\circ \) for RED250 and 1.89\(^\circ \) for the DIY eye tracker (detailed results, including those for individual observers, are given in Table 1).

We express the average eye tracker error as covariance ellipses (see Fig. 3). The directions of the ellipse radii correspond to the eigenvectors of the covariance matrix, and their lengths to the square roots of the eigenvalues. An eye tracker is assumed to have good accuracy if the distribution of the error has a circular shape corresponding to the normal distribution and the centre of this circle is located at (0,0). The ellipse radii should be as small as possible.
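The covariance ellipse described above can be computed directly from the two-dimensional error offsets. This is a minimal sketch using the closed-form eigen-decomposition of a symmetric 2 × 2 matrix; the function name and the sample offsets are made up for illustration, and the sample covariance (division by n - 1) is an assumption.

```python
import math

def covariance_ellipse(errors):
    """Centre, radii, and major-axis angle of the covariance ellipse.

    errors: list of (dx, dy) offsets between gaze points and their target points.
    Returns ((mean_x, mean_y), (major_radius, minor_radius), angle_rad).
    """
    n = len(errors)
    mx = sum(dx for dx, _ in errors) / n
    my = sum(dy for _, dy in errors) / n
    sxx = sum((dx - mx) ** 2 for dx, _ in errors) / (n - 1)
    syy = sum((dy - my) ** 2 for _, dy in errors) / (n - 1)
    sxy = sum((dx - mx) * (dy - my) for dx, dy in errors) / (n - 1)
    # Eigenvalues of [[sxx, sxy], [sxy, syy]] in closed form.
    mean = (sxx + syy) / 2.0
    diff = math.hypot((sxx - syy) / 2.0, sxy)
    lam_major = mean + diff
    lam_minor = max(mean - diff, 0.0)  # clamp tiny negative values caused by rounding
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # direction of the major axis
    return (mx, my), (math.sqrt(lam_major), math.sqrt(lam_minor)), angle

centre, radii, angle = covariance_ellipse([(2, 0), (-2, 0), (0, 1), (0, -1)])
print(centre, radii, math.degrees(angle))
```

A centre far from (0,0) indicates a systematic offset, while strongly unequal radii indicate that the error is larger in one direction than in the other.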

Average, Median and Maximum Error. The results are characterised by significant standard deviations (2.11\(^\circ \) for RED250 and 2.37\(^\circ \) for DIY) and large maximum accuracy errors (39.87\(^\circ \) and 43.89\(^\circ \) for RED250 and DIY, respectively). This suggests that the gaze position cannot be estimated reliably from individual eye tracker samples. A larger number of gaze points brings the distribution closer to normal and improves the eye tracker accuracy.

Observers. Analysis of variance (ANOVA) shows a strong dependence of the eye tracker accuracy on individual observers (see Fig. 4). For example, observer eup achieves significantly worse results than the average, and this trend is visible for both eye trackers. On the other hand, most observers (5 for RED250 and 6 for DIY) achieved comparable results. This suggests that averaging the results across observers is acceptable, but that some individuals are not able to work with an eye tracker because of technical obstacles (e.g. thick spectacle frames or heavy makeup) or a psychophysiological inability to keep the eyes steadily focused on a specific object for a longer time.
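For readers unfamiliar with the test, the F statistic of a one-way ANOVA can be computed as in the sketch below. It uses only the standard library and stops at the F statistic and its degrees of freedom; the p-value additionally requires the F distribution, for which a statistics package such as SciPy (`scipy.stats.f_oneway`) could be used. The three groups are synthetic placeholder values and not measurements from this study.

```python
def one_way_anova_f(groups):
    """F statistic and degrees of freedom of a one-way ANOVA.

    groups: list of lists, one list of measurements per group (e.g. per observer).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the measurements around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Synthetic placeholder values, one list per hypothetical observer.
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
print(one_way_anova_f(groups))
```

A large F value means that the differences between group means are large relative to the spread within each group, which is the pattern reported here for the observers.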

Fig. 4. Multiple comparison of the eye tracker accuracy computed individually for each observer. The mean accuracy values (circles) with confidence intervals (horizontal lines) denote a significant difference between some observers for both the RED250 and DIY eye trackers. Most of the observers achieved comparable results (the red lines). (Color figure online)

Viewing Angle. We measured how the viewing angle affects the eye tracker accuracy. The target points were divided into two groups: 16 exterior points and 9 interior points (see Fig. 1). As can be seen in Fig. 5, the accuracy for exterior points is worse than for interior points. For RED250, the mean accuracy error equals 1.45\(^\circ \) and 1.77\(^\circ \) for interior and exterior points, respectively (ANOVA reveals a significant difference, p<0.05). For DIY, no significant difference is revealed, but the mean accuracy error for exterior points (1.92\(^\circ \)) is still higher than for interior points (1.83\(^\circ \)).

Other Factors. ANOVA did not reveal a dependence of the mean results on observers’ visual fatigue (p = 0.9025 for RED250, p = 0.2716 for DIY), wearing glasses (p = 0.9392 for RED250, p = 0.5519 for DIY), or gender (p = 0.5634 for RED250, p = 0.3691 for DIY).

The accuracy differs strongly between repetitions. For example, across repetitions observer pfo achieved values between 0.99\(^\circ \) and 1.4\(^\circ \) for RED250, and between 0.52\(^\circ \) and 2.58\(^\circ \) for DIY, regardless of the degree of fatigue (see Fig. 6).

Fig. 6. Average accuracy for one observer computed for individual experiment repetitions. The values in brackets denote the visual fatigue declared by the observer before each session (a higher value means larger visual fatigue). The error bars depict the standard error of the mean. The black horizontal line marks the mean accuracy for all repetitions.

6 Conclusions and Future Work

Both eye trackers used in the experiments have a low average accuracy, with an error close to 60 pixels on a 22” screen observed from a distance of 65 cm. Moreover, the variance of the results and the maximum errors are large, which shows low robustness of the devices. The accuracy depends on the particular observer and differs significantly between eye tracking sessions, which means that we cannot expect stable results for the same observer in subsequent uses of the device. Visual fatigue does not affect the results in a systematic way. The accuracy does not depend on the observer’s gender or type of vision correction. In contrast, the viewing angle is important: the eye tracker accuracy can differ between interior and exterior screen areas.

Eye tracking accuracy inevitably determines the possible applications of eye tracking devices [22–25]. Having assessed two representative eye tracking devices (RED250 and DIY), we argue that further development of eye tracking techniques is desirable to improve this accuracy. The major challenge lies not only in designing better hardware but also in better understanding the visual fixation mechanism. For example, an interesting approach has been proposed in the GDOT technique [26, 27], in which the fixation direction is determined not only from the captured gaze direction but also from information on the location and movement of objects in the scene.

References

1. TOBII: Accuracy and precision test method for remote eye trackers (version 2.1). Technical report, TOBII (2012)

2. SMI: RED250 Technical Specification. SensoMotoric Instruments GmbH (2009)

3. Mantiuk, R., Kowalik, M., Nowosielski, A., Bazyluk, B.: Do-it-yourself eye tracker: low-cost pupil-based eye tracker for computer graphics applications. In: Schoeffmann, K., Merialdo, B., Hauptmann, A.G., Ngo, C.-W., Andreopoulos, Y., Breiteneder, C. (eds.) MMM 2012. LNCS, vol. 7131, pp. 115–125. Springer, Heidelberg (2012). doi:10.1007/978-3-642-27355-1_13

4. Reinhard, E., Khan, E.A., Akyuz, A.O., Johnson, G.: Color Imaging. Fundamentals and Applications. A K Peters, Wellesley (2008)

5. Charman, W.N.: Optics of the eye. In: Fundamentals, Techniques and Design, vol. 1, 2nd edn. McGraw-Hill, New York (1995)

6. Robinson, D.A.: The mechanics of human saccadic eye movement. J. Physiol. 174, 245–264 (1964)

7. Rayner, K.: Eye movements in reading and information processing: 20 years of research. Psychol. Bull. 124, 372–395 (1998)

8. Robinson, D.A.: The oculomotor control system: a review. Proc. IEEE 56, 1032–1049 (1968)

9. Duchowski, A.T., Pelfrey, B., House, D.H., Wang, R.: Measuring gaze depth with an eye tracker during stereoscopic display. In: Proceedings of Symposium on Applied Perception in Graphics and Visualization, France, APGV 2011 (2011)

10. Salvucci, D.D., Goldberg, J.H.: Identifying fixations and saccades in eye-tracking protocols. In: Proceedings of the 2000 Symposium on Eye Tracking Research & Applications (ETRA), New York, pp. 71–78 (2000)

11. Duchowski, A.T.: Eye Tracking Methodology: Theory and Practice, 2nd edn. Springer, London (2007)

12. Blignaut, P.: Fixation identification: the optimum threshold for a dispersion algorithm. Attention Percept. Psychophys. 71, 881–895 (2009)

13. Shic, F., Scassellati, B., Chawarska, K.: The incomplete fixation measure. In: Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, ETRA 2008, pp. 111–114. ACM, New York (2008)

14. Hoffman, J.E., Subramaniam, B.: The role of visual attention in saccadic eye movements. Attention Percept. Psychophys. 57, 787–795 (1995)

15. Morimoto, C., Koons, D., Amir, A., Flickner, M., Zhai, S.: Keeping an eye for HCI. In: Proceedings of the XII Symposium on Computer Graphics and Image Processing, pp. 171–176 (1999)

16. Morimoto, C.H., Mimica, M.: Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 98, 4–24 (2005)

17. SMI: Experiment Center 2. Manual, version 2.4. SensoMotoric Instruments (2010)

18. Ditchburn, R.W.: The function of small saccades. Vis. Res. 16, 271–272 (1980)

19. ITU: ITU Gaze Tracker software. IT University of Copenhagen, ITU GazeGroup (2009). http://www.gazegroup.org/home

20. Kleiner, M., Brainard, D., Pelli, D.: What’s new in Psychtoolbox-3? A free cross-platform toolkit for Psychophysics with Matlab and GNU/Octave. Max Planck Institute for Biological Cybernetics (2008). http://psychtoolbox.org

21. ITU-R.REC.BT.500-11: Methodology for the subjective assessment of the quality for television pictures (2002)

22. Duchowski, A.T.: A breadth-first survey of eye-tracking applications. Behav. Res. Meth. Instrum. Comput. 34, 455–470 (2002)

23. Mantiuk, R., Bazyluk, B., Tomaszewska, A.: Gaze-dependent depth-of-field effect rendering in virtual environments. In: Ma, M., Fradinho Oliveira, M., Madeiras Pereira, J. (eds.) SGDA 2011. LNCS, vol. 6944, pp. 1–12. Springer, Heidelberg (2011). doi:10.1007/978-3-642-23834-5_1

24. Mantiuk, R., Janus, S.: Gaze-dependent ambient occlusion. In: Bebis, G., Boyle, R., Parvin, B., Koracin, D., Fowlkes, C., Wang, S., Choi, M.-H., Mantler, S., Schulze, J., Acevedo, D., Mueller, K., Papka, M. (eds.) ISVC 2012. LNCS, vol. 7431, pp. 523–532. Springer, Heidelberg (2012). doi:10.1007/978-3-642-33179-4_50

25. Mantiuk, R., Markowski, M.: Gaze-dependent tone mapping. In: Kamel, M., Campilho, A. (eds.) ICIAR 2013. LNCS, vol. 7950, pp. 426–433. Springer, Heidelberg (2013). doi:10.1007/978-3-642-39094-4_48

26. Mantiuk, R., Bazyluk, B., Mantiuk, R.K.: Gaze-driven object tracking for real time rendering. Comput. Graph. Forum 32, 163–173 (2013)

27. Bazyluk, B., Mantiuk, R.: Gaze-driven object tracking based on optical flow estimation. In: Chmielewski, L.J., Kozera, R., Shin, B.-S., Wojciechowski, K. (eds.) ICCVG 2014. LNCS, vol. 8671, pp. 84–91. Springer, Heidelberg (2014). doi:10.1007/978-3-319-11331-9_11

Acknowledgement

The project was funded by the Polish National Science Centre (grant number DEC-2013/09/B/ST6/02270).

© 2017 Springer International Publishing AG

Mantiuk, R. (2017). Accuracy of High-End and Self-build Eye-Tracking Systems. In: Kobayashi, Sy., Piegat, A., Pejaś, J., El Fray, I., Kacprzyk, J. (eds) Hard and Soft Computing for Artificial Intelligence, Multimedia and Security. ACS 2016. Advances in Intelligent Systems and Computing, vol 534. Springer, Cham. https://doi.org/10.1007/978-3-319-48429-7_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-48428-0

Online ISBN: 978-3-319-48429-7
