Abstract

This chapter presents the integration of hardware and open-source software to build a low-cost (US$100), high-speed eye tracker. The main hardware component is a PlayStation 3 Eye (PS3 Eye) camera with minor modifications. With appropriate coding adjustments in the software interface to the CL Eye Platform Driver, offered by the open-source Code Laboratories, our eye tracker runs at 187 frames per second (fps) at 320 × 240 resolution. This is at least three times faster than ordinary low-cost eye trackers, which usually run at 30 to 60 fps. We have also developed four application programs: ISO 9241-9, Gaze Replay, Heat Map, and Areas of Interest (AOI). We compared our design under the ISO 9241-9 eye-tracking test with the low-cost KSL-240 and with the commercially available, high-priced (over US$5,000) Tobii. Our design performed better in both response time and correct-response rate.

1 Introduction

1.1 Eye-Tracker Overview

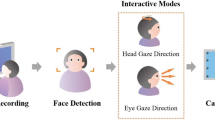

An eye tracker is a device that analyzes eye movements as the eye scans the environment or fixates on particular objects in the scene. It localizes the eye in each image and tracks its movement from one location to another over time in order to determine the gaze direction. Eye tracking is therefore a specialized application of computer vision. It requires sophisticated software to process each image captured by a high-speed camera. The software estimates the gaze direction from the pupil center and then calculates a mapping from the image to the screen that predicts the gaze location on the screen. Fig. 1 depicts the mapping between the image and the screen. The original gaze point A on the screen, captured in image I, forms the vector GP, which is mapped back to obtain the vector OS on the screen. The coordinates of S(sx, sy) can be calculated from the coordinates of G(x, y), as can the mapping error Err° between S and A in degrees of visual angle. The mapping calculation is discussed in more detail in Sect. 2.2 [1].
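The mapping error Err° is an angle, whereas S and A are screen points. Converting between the two therefore needs the viewing geometry. The sketch below computes the visual angle between S and A as seen from the eye. The eye position, the eye-to-screen distance and the display pixel pitch are assumed parameters and do not come from the original text. The function `angular_error_deg` is hypothetical.

```python
import math

def angular_error_deg(s_px, a_px, eye_px, distance_mm, mm_per_px):
    """Visual angle in degrees between estimated gaze S and target A.

    s_px, a_px:  on-screen points (x, y) in pixels.
    eye_px:      on-screen point directly in front of the eye, in pixels (assumed).
    distance_mm: perpendicular eye-to-screen distance (assumed).
    mm_per_px:   physical pixel pitch of the display (assumed).
    """
    def ray(p):
        # 3-D vector from the eye to a screen point, in millimetres
        return ((p[0] - eye_px[0]) * mm_per_px,
                (p[1] - eye_px[1]) * mm_per_px,
                -distance_mm)

    u, v = ray(s_px), ray(a_px)
    dot = sum(ui * vi for ui, vi in zip(u, v))
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    # atan2(|u x v|, u . v) stays accurate for the small angles typical of gaze error
    return math.degrees(math.atan2(math.hypot(*cross), dot))

# Example: 0.25 mm pixels, eye 600 mm away facing (640, 512); S is 40 px right of A
print(angular_error_deg((680, 512), (640, 512), (640, 512), 600.0, 0.25))
```

Under these assumptions, a 40-pixel (10 mm) offset at 600 mm is roughly 0.95°. We use `atan2` rather than `acos` of the dot product because `acos` loses precision for the small angles that are typical here.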

1.2 Cameras and Open-Source Software

Judging from this overview, the high-speed camera and the R&D effort in software integration are probably the main factors that keep commercial eye trackers at a high price. However, inexpensive commercial off-the-shelf (COTS) cameras and open-source software are now available on the web; some of these are listed as follows:

Several low-cost eye trackers have been built by making minor modifications to COTS cameras and integrating them with open-source software, as shown in Table 1.

This chapter presents a low-cost (US$100) eye tracker that combines a Sony PS3 Eye camera with the open-source Code Laboratories software [3]. It achieves high-speed image capture at 187 frames per second (fps). A more detailed description is given in the next section. The chapter is organized as follows. Section 2 describes the hardware configuration and background technology, and Section 3 describes the integration software. Section 4 presents application examples. Finally, Section 5 presents the conclusion and future work.

2 Hardware Configuration and Background Technology

2.1 Hardware Configuration

The Sony PS3 Eye is modified so that it is sensitive only to infrared (IR) light, and two 850 nm IR LEDs are added for dark-pupil tracking. The camera communicates with the computer over USB 2.0. Figure 2 shows our glasses-like head-mounted tracking hardware.

2.2 Background Technology

Glint, Bright Pupil, and Dark Pupil. IR light from the LED beside the camera reflects off the cornea and appears as a small white speck over the iris, called the glint. If the IR light source is coaxial with the camera, most of the light entering the pupil is reflected back to the camera, which produces a bright pupil. Otherwise, the pupil appears rather dark [5], as shown in Fig. 3.

Glint–Pupil Difference Vector. The camera tracks the location of the pupil center relative to the glint of the IR light. The cornea is nearly spherical, so the glint stays more or less fixed as the eye rotates to look at different points of regard (POR). The pupil center moves, however, and this produces the glint–pupil difference vector shown in Fig. 4. The eye tracker uses these difference vectors (GPs) in the image to determine the gaze vectors (OSs) on the screen. Screen mapping is described next.
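The difference vector GP comes from two image features: the dark pupil and the bright glint. In an IR-only image, a simple way to estimate each feature is to take the centroid of the pixels below or above an intensity threshold. The sketch below does this. The thresholds and the function names are hypothetical. Real frames also contain eyelashes, shadows and other dark or bright regions, so a practical detector would add blob filtering or ellipse fitting. We do not know whether the authors' software works this way.

```python
def centroid(image, predicate):
    """Mean (x, y) position of all pixels whose intensity satisfies predicate."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if predicate(value):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # feature not found in this frame
    return (sum_x / count, sum_y / count)


def glint_pupil_vector(image, dark_thresh=40, bright_thresh=230):
    """Difference vector GP (glint G -> pupil centre P) in image pixels.

    image: 2-D list of 8-bit grey levels from an IR-only camera.
    dark_thresh, bright_thresh: assumed thresholds, to be tuned per setup.
    """
    pupil = centroid(image, lambda v: v < dark_thresh)    # dark pupil
    glint = centroid(image, lambda v: v > bright_thresh)  # corneal reflection
    if pupil is None or glint is None:
        return None
    return (pupil[0] - glint[0], pupil[1] - glint[1])


# Synthetic 20 x 20 frame: grey background, 4 x 4 dark pupil, one-pixel glint
frame = [[128] * 20 for _ in range(20)]
for y in range(8, 12):
    for x in range(8, 12):
        frame[y][x] = 10
frame[9][13] = 255
print(glint_pupil_vector(frame))
```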

Screen Mapping. After the point P(x, y) is located in the image, our design uses the following least-squares polynomial equations to map it to the estimated gaze point S(sx, sy) on the screen, as shown in Fig. 1:
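The calibration step below uses six coefficients per screen coordinate (a0–a5 and b0–b5). This count matches the full second-order polynomial in the image coordinates (x, y), a form that video-based eye trackers commonly use. The form and the term order shown here are an assumption consistent with that count:

$$
\begin{aligned}
s_x &= a_0 + a_1 x + a_2 y + a_3 x y + a_4 x^2 + a_5 y^2 \\
s_y &= b_0 + b_1 x + b_2 y + b_3 x y + b_4 x^2 + b_5 y^2
\end{aligned}
$$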

Calibration. The coefficients a0–a5 and b0–b5 in Eqs. 1 and 2 must be determined before tracking starts. At the beginning of the eye-tracking process, the user is therefore asked to look at a set of points at known screen positions. Each point supplies a known pair (sx, sy) and (x, y) for Eqs. 1 and 2. Each equation has six unknown coefficients, so at least six calibration points are needed. With more points than that, the coefficients a0–a5 and b0–b5 are solved in the least-squares sense.
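The sketch below shows least-squares calibration. It assumes that Eqs. 1 and 2 have the full second-order form with terms 1, x, y, xy, x² and y², which is consistent with six coefficients per axis. The term order, the function names and the use of normal equations solved by Gaussian elimination are our own choices for illustration and are not taken from the authors' software.

```python
def features(x, y):
    # assumed second-order terms: 1, x, y, xy, x^2, y^2
    return [1.0, x, y, x * y, x * x, y * y]


def solve(matrix, rhs):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: calibration points are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = m[r][n] - sum(m[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = acc / m[r][r]
    return coeffs


def fit_axis(points, targets):
    """Least-squares coefficients from the normal equations (A^T A) c = A^T t."""
    rows = [features(x, y) for x, y in points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(ata, atb)


def calibrate(points, screen):
    """points: image vectors (x, y); screen: known targets (sx, sy)."""
    if len(points) < 6:
        raise ValueError("at least 6 calibration points are required")
    a = fit_axis(points, [s[0] for s in screen])
    b = fit_axis(points, [s[1] for s in screen])
    return a, b


def map_gaze(a, b, x, y):
    """Estimated screen point S(sx, sy) for image point (x, y)."""
    f = features(x, y)
    return (sum(c * t for c, t in zip(a, f)), sum(c * t for c, t in zip(b, f)))
```

A 3 × 3 grid of calibration targets, for example, gives nine equations per axis for six unknowns. On a grid this spread the normal equations are well determined.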

3 Integration Software

A sophisticated software system is needed to control the camera and to handle fast image capture, glint–pupil detection, calibration and mapping calculation. The open-source software mentioned above provides such integration services.

The main hardware component is the PS3 Eye camera with minor modifications. With appropriate coding adjustments in the software interface to the CL Eye Platform Driver, offered by the open-source Code Laboratories [3], our low-cost eye tracker runs at 187 fps at 320 × 240 resolution. This is at least three times faster than ordinary low-cost eye trackers, which usually run at 30 to 60 fps [4, 5].
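The claim of "at least three times faster" can be checked with simple arithmetic on the quoted frame rates. The frame rates below are the ones stated in the text. The helper function is hypothetical.

```python
FPS_OURS = 187          # PS3 Eye at 320 x 240, as reported in the text
FPS_TYPICAL = (30, 60)  # range quoted for ordinary low-cost eye trackers


def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


for fps in (FPS_OURS, *FPS_TYPICAL):
    print(f"{fps:>3} fps -> {frame_interval_ms(fps):5.2f} ms per frame")

# Speed-up relative to the fastest ordinary tracker in the quoted range
print(f"speed-up vs {max(FPS_TYPICAL)} fps: {FPS_OURS / max(FPS_TYPICAL):.2f}x")
```

At 187 fps, frames arrive about 5.35 ms apart, compared with about 16.67 ms at 60 fps. That is a ratio of about 3.12 against the top of the quoted range.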

4 Application Examples

4.1 High-Speed Demonstration in ISO Test

In the ISO test, a trial counts as a correct response if the participant moves their gaze between two predefined locations on the screen within 2,000 ms. The response time of each correct trial is recorded. The test consists of 5 sessions of 16 trials each. Fig. 5 shows the correct-response rate and the average response time of the correct trials.
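The sketch below shows the scoring rule just described. It assumes a hypothetical data format in which each trial is recorded as a response time in milliseconds, with `None` when the target was never reached. Whether "within 2,000 ms" includes exactly 2,000 ms is also an assumption here.

```python
from statistics import mean

TIME_LIMIT_MS = 2000  # trials completed within this limit count as correct


def score_sessions(sessions):
    """Return (correct-response rate, mean response time of correct trials).

    sessions: list of sessions, each a list of response times in ms,
              with None for a trial that timed out (hypothetical format).
    """
    times = [t for session in sessions for t in session]
    # assumption: a response at exactly the limit still counts as correct
    correct = [t for t in times if t is not None and t <= TIME_LIMIT_MS]
    rate = len(correct) / len(times)
    avg = mean(correct) if correct else None
    return rate, avg


# 5 sessions x 16 trials, as in the test protocol (illustrative values only)
demo = [[800] * 15 + [None] for _ in range(5)]
print(score_sessions(demo))
```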

After developing the ISO test application program, we compared our design under the ISO 9241-9 eye-tracking test [4, 6] with the low-cost KSL-240 and with the commercially available, high-priced (over US$5,000) Tobii. Our design performed better in both response time and correct-response rate, as shown in Fig. 5.

4.2 Gaze Replay, Heat Map, and AOI

We also developed the Gaze Replay, Heat Map and Areas of Interest (AOI) applications, which are described next.

Gaze Replay. Gaze Replay dynamically displays the gaze path as a sequence, as shown in Fig. 6a. Each circle denotes a gaze position, and its size is proportional to the gaze time. The number in each circle gives its position in the time sequence.
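To draw such a replay, consecutive gaze samples must be grouped into circles. The text does not say how this is done. The sketch below uses a simple distance-threshold grouping, a simplification of dispersion-based fixation detection. A new circle starts whenever a sample falls farther than `radius_px` from the centroid of the current group. The radius, the sample interval and the function names are assumptions.

```python
import math


def _centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def group_gaze(samples, radius_px=30.0, sample_ms=1000.0 / 187):
    """Group time-ordered gaze samples (x, y) into replay circles.

    Returns a list of (order, cx, cy, duration_ms); order is the number
    drawn in each circle. radius_px and sample_ms are assumed parameters.
    """
    circles = []
    group = []
    for p in samples:
        if group:
            cx, cy = _centroid(group)
            if math.hypot(p[0] - cx, p[1] - cy) > radius_px:
                # sample left the current area: close this circle
                circles.append((len(circles) + 1, cx, cy, len(group) * sample_ms))
                group = []
        group.append(p)
    if group:
        cx, cy = _centroid(group)
        circles.append((len(circles) + 1, cx, cy, len(group) * sample_ms))
    return circles


def circle_radius(duration_ms, px_per_ms=0.1):
    """Circle size proportional to gaze time (scale factor is assumed)."""
    return duration_ms * px_per_ms
```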

Heat Map. Heat Map displays the total gaze time in each gaze area using d magnitude levels, as shown in Fig. 6b. The basic gaze unit is 5 ms. This experiment uses d = 3, so the area with the largest number of accumulated gaze units is colored red, the area with the smallest number green, and the middle level yellow. The user can choose both d and the associated colors.
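The sketch below shows one way to produce the d levels described above. It accumulates gaze units per screen cell and divides the range between the smallest and largest totals into d equal bins. The grid cell size is assumed, as is equal-width binning. It also assumes that each input sample already represents one 5 ms gaze unit. The function names are hypothetical.

```python
from collections import Counter

GAZE_UNIT_MS = 5                        # basic gaze unit stated in the text
COLORS_D3 = ["green", "yellow", "red"]  # low -> high, as described for d = 3


def heat_map(samples, cell_px=40):
    """Accumulate gaze units per grid cell.

    samples: (x, y) screen points, each assumed to be one 5 ms gaze unit.
    cell_px: assumed size of a square gaze area, in pixels.
    """
    return Counter((int(x // cell_px), int(y // cell_px)) for x, y in samples)


def quantize(counts, d=3):
    """Assign each gazed cell one of d levels between the min and max totals."""
    lo, hi = min(counts.values()), max(counts.values())
    levels = {}
    for cell, c in counts.items():
        if hi == lo:
            levels[cell] = d - 1  # all cells equal: treat them as the top level
        else:
            levels[cell] = min(d - 1, int((c - lo) / (hi - lo) * d))
    return levels


counts = heat_map([(10, 10)] * 10 + [(50, 10)] * 5 + [(90, 10)])
for cell, level in quantize(counts).items():
    print(cell, counts[cell] * GAZE_UNIT_MS, "ms", COLORS_D3[level])
```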

AOI. AOI lets the user select areas of interest, drawn as squares, for further processing, as shown in Fig. 6c. The data in each AOI are then exported to Microsoft Excel for further analysis of saccades and fixations.
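A minimal sketch of the AOI step follows. It keeps the gaze samples that fall inside each user-drawn square and writes them as CSV, which Microsoft Excel can open. The sample format (time, x, y), the square representation (left, top, side) and the choice of CSV are assumptions. We do not know the authors' actual export format.

```python
import csv
import io


def in_square(point, square):
    """True if point (x, y) lies in square (left, top, side), in screen pixels."""
    x, y = point
    left, top, side = square
    return left <= x < left + side and top <= y < top + side


def export_aoi(samples, aois, out):
    """Write the samples falling in each square AOI as CSV rows.

    samples: list of (t_ms, x, y); aois: dict of name -> (left, top, side).
    Returns the number of data rows written.
    """
    writer = csv.writer(out)
    writer.writerow(["aoi", "t_ms", "x", "y"])
    rows = 0
    for name, square in aois.items():
        for t, x, y in samples:
            if in_square((x, y), square):
                writer.writerow([name, t, x, y])
                rows += 1
    return rows


if __name__ == "__main__":
    buf = io.StringIO()  # for a real file, use open(path, "w", newline="")
    export_aoi([(0, 10, 10), (5, 150, 150)], {"A": (0, 0, 100), "B": (100, 100, 100)}, buf)
    print(buf.getvalue())
```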

5 Conclusion and Future Works

We have built a low-cost, high-speed eye tracker by integrating hardware and software, and several application programs are running on the system. Three application results are shown in Figs. 5 and 6. We are currently developing an application for testing tracking accuracy.

Future work will include head-movement estimation. This will require control software that handles the complicated problem of measuring distances and angles through image processing [8]. If it is implemented successfully, the chin rest used in the experiments would no longer be necessary.

References

1. Hansen DW, Ji Q (2010) In the eye of the beholder: a survey of models for eyes and gaze. IEEE Trans Pattern Anal Mach Intell 32(3):478–500

2. ITU GazeGroup. http://www.gazegroup.org

3. Code Laboratories. http://codelaboratories.com

4. Hiley JB, Redekopp AH, Fazel-Rezai R (2006) A low cost human computer interface based on eye tracking. In: 28th annual international conference of the IEEE Engineering in Medicine and Biology Society (EMBS'06), New York City, NY

5. Kowalik M (2011) Do-it-yourself eye tracker: eye tracking accuracy. In: Wimmer M, Hladůvka J, Ilčík M (eds) The 15th central European seminar on computer graphics. University of Technology, Institute of Computer Graphics and Algorithms, Vienna, pp 67–73

6. Lee S, Shon Y-J, Jung Y-M, Chang M-S, Kim SY, Kwak H-W (2011) Verification of the low-cost eye-tracker KSL-240. In: 2011 5th international conference on new trends in information science and service science, Macao, pp 90–92

7. Zhang X, MacKenzie IS (2007) Evaluating eye tracking with ISO 9241 – part 9. In: HCI International 2007. Springer, Heidelberg, pp 779–788

8. Zhu Z, Ji Q (2007) Novel eye gaze tracking techniques under natural head movement. IEEE Trans Biomed Eng 54(12):2246–2260

Copyright information

© 2013 Springer Science+Business Media New York

About this paper

Cite this paper

Huang, CW., Jiang, ZS., Kao, WF., Huang, YL. (2013). Low-Cost and High-Speed Eye Tracker. In: Juang, J., Huang, YC. (eds) Intelligent Technologies and Engineering Systems. Lecture Notes in Electrical Engineering, vol 234. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6747-2_50

DOI: https://doi.org/10.1007/978-1-4614-6747-2_50

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-6746-5

Online ISBN: 978-1-4614-6747-2

eBook Packages: Engineering, Engineering (R0)