Abstract

Pattern classification algorithms usually assume, that the distribution of examples in classes is roughly balanced. However, in many cases one of the classes is dominant in comparison with others. Here, the classifier will become biased towards the majority class. This scenario is known as imbalanced classification. As the minority class is usually the one more valuable, we need to counter the imbalance effect by using one of several dedicated techniques. Cost-sensitive methods assume a penalty factor for misclassifying the minority objects. This way, by assuming a higher cost to minority objects we boost their importance for the classification process. In this paper, we propose a model of cost-sensitive neural network with moving threshold. It relies on scaling the output of the classifier with a given cost function. This way, we adjust our support functions towards the minority class. We propose a novel method for automatically determining the cost, based on the Receiver Operating Characteristic (ROC) curve analysis. It allows us to select the most efficient cost factor for a given dataset. Experimental comparison with state-of-the-art methods for imbalanced classification and backed-up by a statistical analysis prove the effectiveness of our proposal.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Machine learning algorithms have been effectively used for classification purposes in last decades. However, new problems continuously emerge that pose challenge to pattern classifiers. Often these difficulties are embedded in the nature of the data. They may be connected with the volume, non-stationary nature, some specific characteristics, or differing quality of examples. One of such problems is the imbalance between the class representatives.

Standard classifiers assume, that the distribution of objects between classes is roughly equal. When this assumption is violated, they tend to get biased towards the class with higher quantity of examples. This deteriorates the performance over the minority class. Therefore, in order to get a well-balanced classifier that is competent over all of classes, we need to counter the imbalanced distribution.

There is a plethora of methods proposed, usually focusing on some data pre-processing methods or guiding the training process towards the minority examples. Former group concentrates on operations on the dataset in order to re-balance it, and then uses standard classifiers. Latter group works on the original dataset, but changes the training procedure of the classifier. Both of these approaches are considered as an effective aid in the imbalanced classification domain.

Between these two groups of methods lies the cost-sensitive solution. Usually during the design of the classifier one assumes that all classes are identically important and calculate the training error on the basis of quantity of misclassified examples (e.g., as in 0–1 loss function). However, in imbalanced domain usually the minority class is the more interesting one. Therefore, one may associate a higher misclassification cost with the minority class examples in order to boost their recognition rate. This will penalize the training process for errors on the minority objects and counter the problem of uneven class representations. Cost-sensitive paradigm has been successfully introduced to some types of classifiers, like decision trees or neural networks.

In this paper, we concentrate on the design of cost-sensitive neural networks. We propose to work with the neural classifiers that use the moving threshold approach for incorporating the classification cost. Here instead of re-balancing the training set or modifying the learning procedure, we scale the continuous output of a neural network. Therefore, we modify the classification phase instead of the training phase. Such a scaling forces the classification boundary to be moved towards the objects with higher cost, thus alleviating the bias towards the majority class.

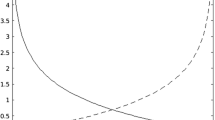

However, the cost factor has a crucial influence on the performance of such a neural classifier and the problem lies in establishing its value. Some problems have the cost supplied by an expert (like in medical domains), but mainly we have no prior information on how to set it. We propose a fully automatic method, based on Receiver Operating Characteristic (ROC) curve [4] analysis. We use it to select the best cost factor for a given dataset, that returns balanced performance on both classes.

Our ROC-based cost-sensitive neural network is compared with a set of reference approaches for handling the class imbalance problem. Experimental analysis carried over a set of datasets with varying imbalance ratio proves the usefulness of our approach.

2 Imbalanced Classification

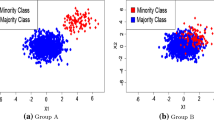

The performance and quality of machine learning algorithms is conventionally evaluated using predictive accuracy. However, this is not appropriate when the data under consideration is strongly imbalanced, since the decision boundary may be strongly biased towards the majority class, leading to poor recognition of the minority class. Disproportion in the number of class examples makes the learning task more complex [12], but is not the sole source of difficulties for machine learning algorithms. It is usually accompanied by difficulties embedded in the structure of data such as small sample size (very limited availability of minority examples), small disjuncts (minority class can consist of several subconcepts) or class overlapping.

Over the last decade there was developed a number of dedicated techniques for handling such difficulties [1]. They can be divided into two major categories. First one consist of data-level approaches that in the pre-processing stage aim at re-balancing the original distribution [3]. Classifier-level approaches try to adapt existing algorithms to the problem of imbalanced datasets and alleviate bias towards the majority class. Third group relies on cost-sensitive classification and assign higher misclassification cost for minority class, while classification is performed so as to reduce the overall learning cost.

Ensemble systems have also been successfully applied to this domain, and mainly combine a committee learning algorithm (such as Bagging or Boosting) with one of the above mentioned methods [5]. One may also propose a hybrid training procedure for such a combined classifier that will apply cost-sensitive learning locally (for each base classifier) and globally (in the ensemble pruning step) [7].

3 Proposed Cost-Sensitive Neural Network

In this paper, we propose to investigate the cost-sensitive neural network model, based on moving threshold [13].This algorithm concentrates on modifying the output of a classifier, instead of changing the structure of the training data or the training algorithm. The cost-sensitive modification is introduced during the classification step - this the model is being trained in a traditional manner.

Let us assume, that we have a binary imbalanced problem with majority and minority classes. Then the continuous output of two neurons in the final layer of a neural network for object x can be denoted as \(O_{maj}(x)\) and \(O_{min}(x)\), where \(O_{maj}(x) + O_{min}(x) = 1\) and both outputs are bounded within [0, 1]. In canonical neural network models, the final class is selected according to winner-takes-all (WTA) procedure: \(\varPsi (x) = \arg \max _{m \in \{maj,min\}} O_m (x)\).

However, in moving-threshold model we modify the outputs of the neural network, thus denoting them as \(O_{maj}^*(x)\) and \(O_{min}^*(x)\). In cost-sensitive threshold-moving model, we compute the output as follows:

and

where Cost[m, n] is the misclassification cost between m-th and n-th class, and \(\eta \) is a normalization parameter such that \(O_{maj}^*(x) + O_{min}^*(x) = 1\) and both outputs are bounded within [0,1].

One should note, that threshold-moving approaches for neural networks have been overlooked for a long time and is not even close in popularity to sampling-based methods for class imbalance [5]. However, some studies report its high usefulness for dealing with datasets with skewed distributions [9]. Other works report, that simply changing the data distribution without considering the imbalance effect on the classification threshold (and thus adjusting it properly) may be misleading [10].

As we can see, the proper settings of cost values has a crucial effect on the performance of this algorithm. Too low cost would lead to insignificant improvements over the standard methods, while too high cost would degrade the performance over the majority class. One must remember, that we cannot sacrifice the majority class, as our ultimate goal is to obtain a classifier with good performance on both classes. In optimal scenario the cost would be supplied by a domain expert according to his/her knowledge. However, in most of real-life imbalanced applications we do not have an access to a pre-defined cost and thus must set it manually. This procedure can be time-consuming, difficult and may lead to an increased classification error when conducted erroneously. So far only simple heuristics were used to calculate the cost, like setting it equal to class imbalance ratio [8].

In this paper, we propose a new method for cost-sensitive neural network training based on ROC curve analysis [7]. Here, we use different values of cost parameter as cut-off points for plotting a ROC curve. Then, we select such a setting of neural classifier that offers the best ratio between the True Positive rate and False Positive rate (in practice - point located closest to the top left corner of the ROC plot). This allows us for an automatic cost selection that offers a balanced performance on both classes. User only needs to supply a search range and the tuning procedure is conducted in a fully automatic manner.

The detailed steps of the cost-sensitive moving-threshold classifier are presented in a form of pseudo-code in Algorithm 1.

4 Experimental Study

The aims of the experiments were to establish the usefulness of the cost-sensitive moving-threshold neural network, compare it with-state-of-the-art methods for imbalanced classification, and asses the quality of ROC-based cost parameter selection.

In the experiments we have used 10 binary imbalanced datasets from the KEEL repositoryFootnote 1. Their details are given in Table 1.

As a base classifier we use a single-layer neural network trained with resilient backpropagation algorithm [11]. The number of input neurons is equal to the number of features, output neurons to the number of classes, and the number of neurons in the hidden layer is equal to \(\frac{neurons_{input}+neurons_{output}}{2}\). Each neural network is trained for 1000 iterations.

As reference methods for dealing with class imbalance, we combine neural networks with Random Oversampling (NN + OS), Random Undersampling (NN + US), Synthetic minority over-sampling technique (NN + SMOTE) [3], and cost-sensitive moving threshold method with Cost\([minority,majority] = IR\) [8] (NN + MV(IR)).

The proposed method with ROC-based cost optimization (NN + MV(ROC)) uses [5, 200] as a possible range of possible cost parameter values.

We use 5\(\,\times \,\)2 CV F-test for training / testing and pairwise statistical analysis, while Friedman ranking test and Shaffer post-hoc tests are applied for statistical comparison over multiple datasets [6].

The results are given in Table 2. The output of Shaffer post-hoc test is reported in Table 3.

From the obtained results one may see, that the proposed ROC-based cost-sensitive neural network outperforms all other methods in 6 out of 10 cases. What is highly interesting it always delivers superior performance in comparison with the cost selected on the basis of the imbalance ratio. This shows, how important is the proper selection of the cost parameter and that imbalance ratio is not the best indicator of the proper misclassification cost. This can be explained by the fact, that IR is not the sole reason behind the imbalanced difficulty. There are other factors, embedded in the nature of data [2]. Therefore, one can easily imagine two hypothetical datasets with identical IR, but completely different classification difficulty. In such situations misclassification cost based purely on IR will definitely fail. This is further confirmed by Shaffer post-hoc test.

When comparing the proposed ROC-based neural network to other solutions, we can clearly see that it easily outperforms Random Oversampling. This is because our cost parameter was optimized to balance the performance on both classes, while RO multiplies the minority class without considering the importance of objects.

On some datasets, the proposed method was inferior to Random Undersampling and SMOTE. This can be explained by the lack of selectiveness of our procedure - it modifies the output for all of examples. Thus it may happen that a correctly recognized examples is weighted towards the incorrect class, which may result in an increased rate of false positives (majority examples misclassified as minority ones). To counter this problem, one would need to introduce a sample selection mechanism, that would apply the cost modification only on examples that are potentially uncertain.

5 Conclusions and Future Works

In this paper we have presented a modification of cost-sensitive neural network classifier based on moving threshold for imbalanced learning domain. We proposed to augment this model with automatic procedure for parameter selection. We applied a ROC-based parameter selection to chose an optimal cut-off point that determined the selected value of misclassification penalty. This allowed for selecting such a parameter, that would offer a balanced performance on both classes.

Experimental evaluation carried out on a number of datasets with varying imbalance ratio confirmed the usefulness of the proposed approach. Our method was always better than the normally used approach for cost parameter estimation. Additionally, in 6 out of 10 cases it was able to outperform the reference methods based on data sampling. This was further backed-up with a thorough statistical analysis. However, the experiments revealed the weak side of our method, that is lack of selectiveness when modifying the output of the classifier.

In future works we plan to develop an active learning solution for selecting important samples to modify the threshold, and propose a dynamic ensemble system based on this classifier.

References

Antonelli, M., Ducange, P., Marcelloni, F.: An experimental study on evolutionary fuzzy classifiers designed for managing imbalanced datasets. Neurocomputing 146, 125–136 (2014)

Błaszczyński, J., Stefanowski, J.: Neighbourhood sampling in bagging for imbalanced data. Neurocomputing 150, 529–542 (2015)

Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P.: Smote: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

Flach, P.A.: The geometry of ROC space: understanding machine learning metrics through ROC isometrics. In: Proceedings of the Twentieth International Conference on Machine Learning, ICML 2003, 21–24 August 2003, Washington, DC, USA, pp. 194–201 (2003)

Galar, M., Fernandez, A., Barrenechea, E., Bustince, H., Herrera, F.: A review on ensembles for the class imbalance problem: bagging-, boosting- and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 42(4), 463–484 (2012)

García, S., Fernández, A., Luengo, J., Herrera, F.: Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: Experimental analysis of power. Inf. Sci. 180(10), 2044–2064 (2010)

Krawczyk, B., Woźniak, M., Schaefer, G.: Cost-sensitive decision tree ensembles for effective imbalanced classification. Appl. Soft Comput. 14, 554–562 (2014)

Lopez, V., Fernandez, A., Moreno-Torres, J.G., Herrera, F.: Analysis of preprocessing vs. cost-sensitive learning for imbalanced classification open problems on intrinsic data characteristics. Expert Syst. Appl. 39(7), 6585–6608 (2012)

Maloof, M.A.: Learning when data sets are imbalanced and when costs are unequal and unknown. In: ICML-2003 Workshop on Learning from Imbalanced Data Sets II (2003)

Provost, F.: Machine learning from imbalanced data sets 101. In: Proceedings of the AAAI 2000 Workshop on Imbalanced Data Sets, pp. 1–3 (2000)

Riedmiller, M., Braun, H.: A direct adaptive method for faster backpropagation learning: the RPROP algorithm. In: 1993 IEEE International Conference on Neural Networks, pp. 586–591 (1993)

Sun, Y., Wong, A.K.C., Kamel, M.S.: Classification of imbalanced data: a review. Int. J. Pattern Recogn. Artif. Intell. 23(4), 687–719 (2009)

Zhou, Z.-H., Liu, X.-Y.: Training cost-sensitive neural networks with methods addressing the class imbalance problem. IEEE Trans. Knowl. Data Eng. 18(1), 63–77 (2006)

Acknowledgments

This work was supported by the Polish National Science Center under the grant no. DEC-2013/09/B/ST6/02264.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Krawczyk, B., Woźniak, M. (2015). Cost-Sensitive Neural Network with ROC-Based Moving Threshold for Imbalanced Classification. In: Jackowski, K., Burduk, R., Walkowiak, K., Wozniak, M., Yin, H. (eds) Intelligent Data Engineering and Automated Learning – IDEAL 2015. IDEAL 2015. Lecture Notes in Computer Science(), vol 9375. Springer, Cham. https://doi.org/10.1007/978-3-319-24834-9_6

Download citation

DOI: https://doi.org/10.1007/978-3-319-24834-9_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-24833-2

Online ISBN: 978-3-319-24834-9

eBook Packages: Computer ScienceComputer Science (R0)