Abstract

This article presents a general methodology of state estimation by interval analysis in a dynamic system modeled by difference equations. The methodology is applied to a pineapple osmotic dehydration process, in order to predict the behavior of the process within a range of allowed perturbation. The paper presents simulations and validations.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

When it comes to industrial processes, it is difficult to obtain a precise model for disturbances and noise that may interfere with these processes. This paper presents a methodology for state estimation, where the state equations for the process are known and the disturbances are limited through intervals. Interval algebra has diverse applications as shown in [1, 2].

State estimation is fundamental for analysis, design, control and supervision of processes in engineering [3,4,5,6]. Specifically, state estimation methodology based on intervals has received a lot of attention in the last few years and literature about this subject shows a growing progress [7,8,9,10,11,12].

This work is organized in the following manner: Sect. 2 shows an approach to the problem statement and the process under study. Section 3 reviews the fundamentals of interval algebra, including the definition of interval, box, interval matrix and inclusion function. This section also presents the state estimation algorithm for solving differential equations according to [13]. Initially, the existence and uniqueness of the solution for the difference equations is verified, when the initial conditions belong to an interval vector. Afterwards, the solution is calculated through Taylor’s expansion. Sections 4 and 5 present the state estimation of the studied process, with the interpretation of the obtained results. Finally, Sect. 6 finishes the work, highlighting a few future work perspectives.

2 Theoretical Framework

2.1 Problem Statement

The unknown state x for a dynamic system is defined by:

where \(x(t)\in R^n\) and \(y(t)\in R^m\), and they denote, respectively, the state variables and the outputs of the system. Initial conditions x(0) are supposed to belong to an initial “box” \([X_0]\). The concept of “box” will be described in Sect. 3. Time is \(t\in [0,t_{max}]\). The functions f and g are real and can be differentiable in M, where M is an open set of \(R^n\), such as \(x(t)\in M\) for each \(t\in [0,t_{max}]\). Besides, function f is at least k-times differentiable in the M domain. The output error is defined by:

We assume that \(\underline{v(t)}\) and \(\overline{v(t)}\) represent the lower and upper limit of acceptable output error, respectively. These limits correspond to a bounded noise. The integer number N is the total number of records. The interval arithmetics are used to calculate the guaranteed limits for the solution of Eq. 1 in the sampling times \(\left\{ t_1,t_2,\ldots ,t_N\right\} \). Even though the studied process is a continuous dynamic system, Eq. 2 indicates that the problem statement is applicable for a discrete system, which is governed by difference Equations [4].

2.2 Studied Process (Osmotic Dehydration of Pineapple)

The osmotic dehydration process has highly complex dynamics, which implies that there are a great variety of models and experimental procedures for different kinds of fruits and foods [14,15,16,17]. Independent of the chosen model, some authors coincide that the most significant variables of the process are identified with the food concentration and the concentration of the solution where the food is immersed in [18, 19]. Pineapple is a completely heterogeneous, highly watery and porous food, that when immersed in solutions with high concentration of soluble solids (sugar), provokes two simultaneous upstream main flows. The first flow corresponds to a transfer of soluble solids (sugar) from the solution to the food. The second one is flow of water from the food that goes highly concentrated to the solution. A third secondary and negligible flow of aroma, vitamins and minerals happens, which is less intense, and occurs from the fruit to the solution. The mass transfer mechanisms that are present in the osmotic dehydration at atmospheric pressure and room temperature are mainly discussion (Fick’s laws of diffusion).These mechanisms are originated by the concentration differences between the food and the osmotic solution where the fruit is immersed in [18,19,20]. Figure 1 shows the previously described flows that occur during the osmotic dehydration.

The sugar concentrations found in the osmotic solution and the fruit are registered by refractometers and reported in refraction indexes or Degrees Brix. Degrees Brix can be understood as a percentage from 0 to 1 or a mass fraction, that provides the sugar mass contained in the mass of each analyzed component (solution and fruit). The model that was studied in this paper was extracted from [18] and it considers three state variables: concentration in fruit, concentration in tank 1 solution and volume that enters tank 1 from tank 2. The dehydration plant and its operation is described in [18,19,20]. Figure 2 shows the process.

The model of [18] assumes that there is a perfect mix in tank 1, where the flow is perfectly controlled and the values for concentration in fruit and in tank 1 obey ideally to mass balances. The process is modeled in a discrete manner with a sample time of \(\varDelta T\). The model is represented by difference equations, as indicated below:

-

Variation of sugar concentration for the solution:

(3)

(3) -

Variation of sugar concentration for the food:

$$\begin{aligned}{}[X^S]_{k+1}=[C]_k[X^S]_k\varDelta T+\frac{[U]_k[X^S]_k\varDelta T}{[V]_k} \end{aligned}$$(4)where:

-

\([V]_{k}\) is the variation of tank 1 volume:

$$\begin{aligned}{}[V]_{k+1}=[V]_k+[U]_k\varDelta T \end{aligned}$$(5) -

\([C]_k\) is the specific rate of sugar concentration in food:

$$\begin{aligned}{}[C]_k=\mu \frac{[Y^S]_k}{K_{Y^S}+[Y^S]_k} \end{aligned}$$(6) -

B is a dimentionless proportion factor between the concentration variation of the solution and that of the food. This parameter is calculated using the final (subindex f) and initial (subindex o) values of the concentrations in solution and food during an experimental process:

$$\begin{aligned} B=\frac{[Y^S]_f-[Y^S]_o}{[X^S]_f-[X^S]_o} \end{aligned}$$(7) -

\(\mu \) is the maximum change rate in sugar growth for the food. It is represented by:

$$\begin{aligned} \mu =\frac{\ln (\frac{[X^S]_f}{[X^S]_o})}{t_f-t_o} \end{aligned}$$(8) -

\(K_{Y^S}=0.65\) (gr. of sugar/gr. of osmotic solution in tank 1) is the saturation constant for sugar concentration in tank 1.

The raw material associated to the presented model is pineapple (ananas comosus in the cayena lisa variety) in a geometric shape (eighths of slice of 1 cm of thickness without any previous treatment).

The attributes of the osmotic solution for each tank were:

-

Tank 2: Constant. \([Y^S]_{cte}=0.65^{\circ }\) Brix.

-

Tank 1: Reference. \([Y^S]_{ref}=0.6^{\circ }\) Brix.

The process was simulated for a constant lineal entry flow: \([U2]_k:\) (0 to 2.09) L/min for 180 min. The calculation of the kinetic parameter \([C]_k\) and the kinetic constants \(\mu \) and B are assumed as known and are reported in Table 1.

3 Methodology

3.1 Interval Analysis Fundamentals

At first, interval analysis was a response to explain quantification errors that occurred when real numbers were represented rationally in computers and the technique was extended to validated numerics [31]. According to [31], an interval \([u]=[\underline{u},\overline{u}]\), is a closed and connected subset of R, denoted by IR. Two intervals [u] and [v] are equal, if and only if their inferior and superior limits are the same. Arithmetic operations between two intervals [u] and [v], can be defined by:

The interval vector (or box) [X] is a vector with interval components and it is equivalent to the cartesian product of scalar intervals:

The vector set of real n-dimensional intervals is denoted by \({IR}^n\). A matrix interval is a matrix where its components are intervals. The set of \(n \times m\) real interval matrices is denoted by \({IR}^{n \times m}\). The classic operations for interval vectors or interval matrices are direct extensions of the same operations of point vectors [31].

The operations for punctual vectors can be extended to become classical operations for interval vectors [22]. This way, if \(f:R^n \rightarrow R^m\), the range of function f in an interval vector [u], is given by:

The interval function [f] of \({IR}^n\) to \({IR}^m\) is a function of the inclusion of f if:

An inclusion function of f can be obtained through the substitution of each occurrence of a real variable for its corresponding interval. Said function is called natural inclusion function. In practice, the inclusion function is not unique and it depends on the syntax of f [31].

3.2 Inversion Set

Consider the problem of determining a solution set for the unknowns u, defined by:

where [y] is known a priori, U is a search set for u and \(\phi \) of an invertible nonlinear function, which is not necessarily in the classical sense. [23] includes the calculation of the reciprocal image of \(\phi \) and that is known as a set inversion problem that can be solved using the SIVIA (Set Inversion Via Interval Analysis) algorithm. SIVIA as proposed in [9] is a recursive algorithm that goes through all the searching space so it does not lose any solution. This algorithm makes it possible to derive a guaranteed enclosure of the solution of set S, that meets: S is factible, enough to prove that \(\phi ([u])\subseteq [y]\). Conversely, if it can be proven that \(\phi ([u])\cap [y]=0\), then the box [u] is non-viable. On the contrary, there is no conclusion and the box [u] is said to be undetermined. If the box is undetermined, the box is bisected and tried again until its size reaches a threshold precision, specified for \(\epsilon > 0\). This criterion assures that SIVIA finishes after a limited number of iterations.

3.3 State Estimation

State estimation refers to the integration of Eq. 1. Thus, the goal is to estimate the state of vector x in the sampling times \(\left\{ t_1,t_2,\ldots ,t_N\right\} \), which correspond to the times of output measurements. The box \([x(t_j)]\) is denoted as \([x_j]\), where \(t_j\) represents the sampling time, \(j=1,2,\ldots ,N\) and \(x_j\) represents the solution of (1) at \(t_j\). For models like the one presented in (1), the sets are characterized by not being convex and there could even be several disconected components. Interval analysis consists of enclosing said sets in interval vectors that do not overlap and the usual inconvenient is obtaining wider solution interval vectors each time. This in known as the Wrapping Effect. This way, the wrapping effect yields poor results. The poverty brought by the big width of the set can be reduced through the use of a higher-order k for the Taylor expansion and through the use of mean value forms and matrices of pre-conditioning [13, 24].

3.4 Prediction and Correction

Prediction aims at calculating the accessibility fixed for the state vector, while the correction stage keeps only the parts of the accessibility set that are consistent with the measurements and the error limits defined by Eq. 2. It is assumed that \([X_j]\) is a box that is guaranteed to contain \(x_j\) at \(t_j\). The exterior aproximation of the predicted set \([{X_{j+1}}^+]\) is defined as the validated solution of the difference equation at \(t_{j+1}\). The set \([{X_{j+1}}^+]\) is calculated using the EMV algorithm (extenden mean value), defined in [24]. The set is guaranteed to contain the state at \(t_{j+1}\). At \(t_{j+1}\), a “measurement vector”, \(y_{j+1}\), is obtained and it corresponds to the upper and lower limits for measurement noise.

Then, the set \([g]^{-1}([y_{j+1}])\) is calculated. This evaluation is obtained by the SIVIA algorithm. The expected solution at the sampling time \(t_{j+1}\) is finally given by \([x_{j+1}]=[{X_{j+1}}^+]\cap [g]^{-1}([y_{j+1}])\). The procedure for the state estimation is summarized in the following algorithm: For \(j=0\) to \(j=N-1\) do:

-

Prediction step: compute \([{X_{j+1}}^+]\) using EMV algorithm.

-

Correction step: calculate \([x_{j+1}]\) so that

$$\begin{aligned}{}[x_{j+1}]=[{X_{j+1}}^+]\cap {[g]}^{-1}([y_{j+1}]) \end{aligned}$$

3.5 Extended Mean Value (EMV)

The most efficient methods to solve state estimation for dynamic systems are based on Taylor’s expansions [24]. These methods consist of two parts: the first part verifies the existence and uniqueness of the solution, using the fixed point theorem and the Picard-Lindelf operator. At a time \(t_{j+1}\), a box, a priori \([\tilde{x}_j]\), that contains all the solutions that correspond to all the possible trajectories between \(t_j\) and \(t_{j+1}\) is obtained. In the second part, the solution at \(t_{j+1}\) is calculated using Taylor’s expansion, in the term that remains is \([\tilde{x}_j]\). However, in practice, the set \([\tilde{x}_j]\) often doesn’t contain the true solution [25]. Therefore, the used technique consists of inflating this set until the next inclusion is verified with the following expression:

where h indicates the integration stage and \([x_j]\) is the first solution. This method is summarized in the Enclosure algorithm, which was developed by [26]. The inputs are \([x_j]\) and \(\alpha >0\) and the output is \([\tilde{x}_j]\):

The function inflate for an interval vector \([u]=[\underline{u_1},\overline{u_1}],\dots ,[\underline{u_n},\overline{u_n}]\), operates as follows:

Precision depends of the \(\alpha \) coefficient. If the set \([\tilde{x}_j]\) satisfies the inclusion presented in Eq. 15, then the inclusion \(x(t)\in [\tilde{x}_j]\) is maintained for all \(t\in [t_j,t_{j+1}]\). The solution \(x_{j+1}\) of the differential equation given in Eq. 1 at \(t_{j+1}\) is guaranteed, in the interval vector \([x_{j+1}]\) and it is given by the Taylor expansion [31]:

where k denotes the end of the Taylor expansion and the \(f^{[i]}\) coefficients are the Taylor coefficients of the x(t) solution, which are obtained in a recursive form by:

The application of the inflate function in the set \([\tilde{x}_1]\) leads to increase of its width. The poor quality introduced by the wider set can be reduced through the use of a higher order k for the Taylor expansion in Eq. 17. But the width of the solution always increases, even for higher orders. To sort this obstacle, Rihm [27] proposes evaluating (17) through the extended mean value algorithm, based on mean value forms and pre-conditioning matrices. This algorithm is used to solve the differential equation given in (1). The inputs for this algorithm are \([\tilde{x}_j]\), \([x_j]\), \(\widehat{x}_j\), \([v_j]\), \(p_j\), \(A_j\), h and the outputs are \([\tilde{x}_{j+1}]\), \(\widehat{x}_{j+1}\),\([v_{j+1}]\), \([p_{j+1}]\), \(A_{j+1}\). The variable \(\widehat{x_j}\) is the mean point of a certain interval \(v_j\). The initial conditions may be provided by \(p_0=0\), \(q_0=0\) and \(v_0=x_0\). Up next, the sequence of the algorithm is presented:

-

1.

\([v_{j+1}]=\widehat{x}_j+\sum _{i=1}^{k-1}{h^if^{[i]}(\widehat{x}_j)+h^kf^{[k]}([\tilde{x}_j])}\)

-

2.

\([S_j]=I+\sum _{i=1}^{k-1}{J(f^{[i]};[x_j])h^i}\)

-

3.

\([q_{j+1}]=([S_j]A_j)[P_j]+[S_j]([v_j]-\widehat{x}_j))\)

-

4.

\([x_{j+1}]=[v_{j+1}]+[x_{j+1}]\)

-

5.

\(A_{j+1}=m([S_j]A_j)\)

-

6.

\([p_{j+1}]=A_{j+1}^{-1}([S_j]A_j)[p_j]+(A_{j+1}^{-1}[S_j])([v_j]-\widehat{x}_j)\)

-

7.

\(\widehat{x}_{j+1}=m([v_{j+1}])\)

In the previous algorithm, I represents the identity matrix (with the same dimension of the state vector). \(J(f^{[i]};[x_j])\) is the Jacobian matrix of the Taylor coefficient, \(f^{[i]}\), which is evaluated over \([x_j]\). The variables \(\widehat{x}_j\) and \([v_j]\) are calculated in the state \((t_j-1)\).

4 Results

The state estimation algorithm is applied to the pineapple dehydration model. The analysis is taken to simulation level. Noise R is delimited for the state variables \([Y^S]\), \([X^S]\) and [V] respectively, as follows:

The initial conditions for the state variables \([Y^S]\), \([X^S]\) and [V], are given by:

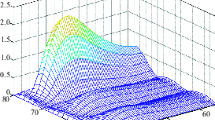

The results of the prediction are calculated for the state variables and the specific rate of sugar concentration in the food, which is a function of the state variables. In Figs. 3, 4, 5 and 6, the dotted lines present the simulated values and solid lines show the model reconstruction. The obtained results for the state variables display that the uncertainty is adequately controlled by the estimation algorithm, in spite of the fixed noise, which is in sync with the experimental conditions of the process. The estimation of the specific concentration rate is a close approximation to the model data. This proves the method’s efficiency, based on the higher order of the Taylor expansion, to solve state equations through center forms and pre-conditioning matrices.

5 Discussion

The state estimation methodology complement the probabilistic methods, where noises and disturbances are assumed as random variables and the problem of state estimation is solved through the election and optimization of adequate criteria [28]. However, in practice, it is often complicated to make a characterization of the random variables that model noise and disturbances, making it difficult to evaluate proposed stochastic hypothesis. This way, the estimation by intervals method offers an alternative approach that is based on the fact that the dynamic system is limited to a defined uncertainty, having a state estimation in an enclosed error context. This approach allows characterizing the values of the state vector, which are consistent with the model structure and the defined error limits. It is important to highlight that when the dynamic system is governed by non-linear differential equations, it is fundamental to linearize the model and afterwards, applying the estimation algorithms presented in this work.

6 Conclusions

State estimation algorithms were applied to a fruit dehydration process, where it was necessary to know beforehand the operation ranges of the process. The used state estimation algorithms allowed for the process to be manipulated so that the necessary state variables were monitored, in order to rebuild the process based on interval analysis.

The SIVIA and EMV algorithms were experimentally validated, which allowed for the definition of an adequate operation zone for the plant. State estimation led to a solution that is in sync with the system’s response, which is based on the dynamics of the process’ model.

State estimation is highly dependent of limited error. If the error is not bound in the proper intervals, the reconstruction of the state variable may be wrong, for when the prediction is made. The bounded noise allows finding important relationships between input and output variables of the process.

In the future, interval analysis may allow using control techniques based on state estimation, that may be able to complement traditional methods of automatic control. The methodology used in this work is applicable to real processes such as those shown in [29,30,31] and may be used for failure detection and diagnostics. It is possible to determine reliable intervals for the correct functioning of the system, just as the precision of the regions. This has a direct relationship with the level of uncertainty and the plant’s instrumentation.

References

Burgos, M., González, A., Vallejo, M., Izquierdo, C.: Selección económica de equipo utilizando matemáticas de intervalos. Información Tecnológica 9(5), 311–316 (1998)

Campos, P., Valdés, H.: Optimización global por intervalos: aplicación a problemas con parámetros inciertos. Información Tecnológica 17(5), 67–74 (2006)

Zapata, G., Cardillo, J., Chacón, E.: Aportes metodológicos para el diseño de sistemas de supervisión de procesos contínuos. Información Tecnológica 22(3), 97–114 (2011)

Jauberthie, C., Verdiere, N., Trave, L.: Fault detection and identification relying on set-membership identifiability. Annu. Rev. Control. 37(1), 129–136 (2013)

Li, Q., Jauberthie, C., Denis, L., Cher, Z.: Guaranteed state and parameter estimation for nonlinear dynamical aerospace models. In: Informatics in Control, Automation and Robotics (ICINCO), Vienna, Austria (2014)

Ortega, F., Pérez, O., López, E.: Comparación del desempeño de estimadores de estado no lineales para determinar la concentración de biomasa y sustrato en un bioproceso. Información Tecnológica 26(5), 35–44 (2015)

Kieffer, M., Jaulin, L., Walter, E.: Guaranteed recursive nonlinear state bounding using interval analysis. Int. J. Adapt. Control. Signal Process. 16(3), 193–218 (2002)

Jaulin, L.: A nonlinear set membership approach for the localization and map building of underwater robots. IEEE Trans. Robot. 25(1), 88–98 (2009)

Jaulin, L., Walter, E.: Set inversion via interval analysis for nonlinear bounded-error estimation. Automatica 294, 1053–1064 (1993)

Paşca, I.: Formally verified conditions for regularity of interval matrices. In: Autexier, S., et al. (eds.) CICM 2010. LNCS (LNAI), vol. 6167, pp. 219–233. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-14128-7_19

Rauh, A., Auer, E.: Modeling, Design, and Simulation of Systems with Uncertainties. Springer, Berlin (2011). https://doi.org/10.1007/978-3-642-15956-5

Jauberthie, C., Chanthery, E.: Optimal input design for a nonlinear dynamical uncertain aerospace system. In: IFAC Symposium on Nonlinear Control Systems, Toulouse, France (2013)

Nedialkov, N., Jackson, K.R.: A new perspective on the wrapping effect in interval methods for initial value problems for ordinary differential equations. In: Kulisch, U., Lohner, R., Facius, A. (eds.) Perspectives on Enclosure Methods, pp. 219–263. Springer, Vienna (2001). https://doi.org/10.1007/978-3-7091-6282-8_13

Arreola, S., Rosas, M.: Aplicación de vacío en la deshidratación osmótica de higos \((\)ficus carica\()\). Información Tecnológica 18(2), 43–48 (2007)

Arballo, J.: Modelado y simulación de la deshidratación combinada osmótica-microondas de frutihortícolas. Ph.D. thesis in Engineering, Universidad de La Plata, Argentina (2013)

García, A.: Análisis comparativo de la cinética de deshidratación osmótica y por flujo de aire caliente de la piña \((\)ananas comosus, variedad cayena lisa\()\). Revista Ciencias Técnicas Agropecuarias 22(1), 62–69 (2013)

García, M., Alvis, A., García, C.: Evaluación de los pretratamientos de deshidratación osmótica y microondas en la obtención de hojuelas de mango \((\)Tommy Atkins\()\). Información Tecnológica 26(5), 63–70 (2015)

Jaller, S., Vargas, S.: Comparación de la transferencia de materia en los procesos de deshidratación osmótica a presión atmosférica y con impregnación de vacío en la piña cayena lisa (ananás comosus l. meer) a través de un modelo matemático. Undergraduate thesis for Agroindustrial Production Engineering, Universidad de La Sabana, Chía, Colombia (2000)

González, G.: Viabilidad de la piña colombiana var. cayena lisa, para su industrialización combinando operaciones de impregnación a vacío, deshidtratación cayena lisa (ananás comosus l. meer). Ph.D. thesis, Universidad Politécnica de Valencia, Valencia, Spain (2000)

Wullner, B.: Instrumentación y control de un deshidratador osmótico a vacío. Undergraduate thesis for Agroindustrial Production Engineering, Universidad de La Sabana, Chía, Colombia (1998)

Moore, R.: Automatic error analysis in digital computation. Technical report LMSD-48421, Lockheed Missiles and Space Co., Palo Alto, CA (1959)

Moore, R.E.: Interval Analysis. Prentice Hall, New Jersey (1966)

Jaulin, L., Walter, E.: Set inversion via interval analysis for nonlinear bounded-error estimation. Automatica 29(4), 1053–1064 (1993)

Nedialkov, N., Jackson, K., Pryce, J.: An effective high-order interval method for validating existence and uniqueness of the solution of an IVP for an ODE. Reliab. Comput. 7, 449–465 (2001)

Milanese, M., Norton, J., Piet-Lahanier, H., Walter, E.: Bounding Approaches to System Identification. Plenum, New York (1996)

Lohner, R.: Enclosing the solutions of ordinary initial and boundary value problems. Wiley-Teubner, Stuttgart, pp. 255–286 (1987)

Rihm, R.: Interval methods for initial value problems in ODEs. In: Herzberger, J. (ed.) Topics in Validated Computations: Proceedings of the IMACS-GAMM International Workshop on Validated Computations, University of Oldenburg. Elsevier Studies in Computational Mathematics. Elsevier, Amsterdam, New York (1994)

Walter, E., Pronzato, L.: Identification de modles paramtriques partir de donnes exprimentales. Masson, Montreal (1994)

Castellanos, H.E., Collazos, C.A., Farfán, J.C., Meléndez-Pertuz, F.: Diseño y Construcción de un Canal Hidráulico de Pendiente Variable. Información Tecnológica 28(6), 103–114 (2017). https://doi.org/10.4067/S0718-07642017000600012. Accessed 20 July 2018

Collazos, C.A., Castellanos, H.E., Burbano, A.M., Cardona, J.A., Cuervo, J.A., Maldonado-Franco, A.: Semi-mechanistic modelling of an osmotic dehydration process. WSEAS Trans. Syst. 16, 27–35 (2017). E-ISSN 2224-2678

Duarte, J., Garcá J., Jiménez, J., Sanjuan, M.E., Bula, A., González, J.: Auto-ignition control in spark-ignition engines using internal model control structure. J. Energy Resour. Technol. 139(2) (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Collazos, C. et al. (2018). State Estimation of a Dehydration Process by Interval Analysis. In: Figueroa-García, J., López-Santana, E., Rodriguez-Molano, J. (eds) Applied Computer Sciences in Engineering. WEA 2018. Communications in Computer and Information Science, vol 915. Springer, Cham. https://doi.org/10.1007/978-3-030-00350-0_6

Download citation

DOI: https://doi.org/10.1007/978-3-030-00350-0_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00349-4

Online ISBN: 978-3-030-00350-0

eBook Packages: Computer ScienceComputer Science (R0)