Abstract

We are concerned with a two sided doubly quasi-birth-and-death process. Under a discrete time setting, this is a two dimensional skip free random walk on the half space whose second component is a nonnegative integer valued while its first component may take positive or negative integers. Our major interest is in the tail decay rate of the stationary distribution of this two sided process as either one of the components goes either to infinity or to minus infinity, provided the stationary distribution exists. The author [1] recently obtained two kinds of decay rates, called weak and exact for the doubly QBD, DQBD for short, in terms of the transition kernel of the DQBD. We extends those results to the two sided DQBD, and apply to the generalized shortest queue. The tail decay rate problem for this queueing model has been only partially answered in the literature. We show that a weak decay rate, that is, the decay rate in the logarithmic sense, is completely specified in terms of the primitive data for the generalized shortest queue. This refines results in Miyazawa [2] and corrects some results in Li, Miyazawa and Zhao [3].

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Introduction

A quasi birth-and-death process, QBD process for short, is a continuous time Markov chain which has a main state, called level, and a background state in such a way that the level is nonnegative integer valued, and its increments are ±1 at most and controlled by the background state. This model has been well studied when the background state space is finite (see, e.g. [4], [5]).

We are concerned with the case that the background space is infinite. Li, Miyazawa and Zhao [3] recently proposed a double sided QBD process for the generalized join shortest queue with two waiting lines, by extending the level of such a QBD process to be integer valued. This queue is a service system with two parallel queues that have three arrival streams, two of which are dedicated to each queue and the other of which chooses the shortest queue with tie breaking. Assume that those arrival streams are independent and subject to Poisson processes, and service times are independently, identically and exponentially distributed at each queue. Then, this queue can be formulated as the QBD process or the two sided QBD process. In particular, the latter model is required when we take the difference of the two queues as level.

It is notable that the transition structure may change in the double sided QBD when the level process goes through zero. This is crucial to formulate the generalized join shortest queue as the double sided QBD. In this chapter, we specialize this double sided QBD in such a way that its background process is birth-and-death. We refer to this process as a two sided doubly quasi birth-and-death process, a two sided DQBD for short. Since those QBD and DQBD can be formulated as discrete time Markov chains, we are only concerned with the discrete time processes throughout the chapter.

We are interested in the asymptotic behaviors of the stationary distributions of the level and background state as their values go to infinity, provided it exists. Due to the special structure of the two sided DQBD, the QBD structure is preserved when the level and background are exchanged. So, we mainly consider the asymptotics for the level. We are concerned with two types of the asymptotic decays of the stationary probabilities as the level goes to infinity.

One type is called a weak decay, which is meant that the logarithm of the stationary probability dived by the level n converges to a constant, say −a, as n goes to infinity. Then, e −a is simply referred to as a weak decay rate. Another type is called an exactly geometric decay, which is meant that the stationary probability multiplied by a power constant to the level n, say αn, converges to an another constant as n goes to infinity. Then, α−1 is referred to as an exactly geometric decay rate. In [1], more general types of exact decay rates are considered, but we are only concerned with these two types of decay rates in this chapter.

The purpose of this chapter is twofold. We first study the decay rate problem for the two sided DQBD process, by extending the approach for the DQBD process in [1]. We completely characterize the weak decay rates in terms of the transition probabilities (Theorems 1.3 and 1.4). For the exactly geometric decay, we find sufficient conditions, which are close to necessary conditions (Theorem 1.3). We secondly apply these results to find the decay rates of the stationary distributions of the minimum of the two queues and their difference in the generalized join shortest queue with two waiting lines.

The decay rates for this queue have been studied in [3] and [6], but they are obtained only for certain limited cases, e.g., under a so called strongly pooled condition. We completely answer to this problem for the weak decay rates, and give weaker sufficient conditions for the exactly geometric decay rates (Theorem 1.5 and Corollary 1.2). In particular, it turns out that the strongly pooled condition still plays an important role for finding the decay rate for the minimum of two queues, which may not be the square of the total traffic intensity in general.

The two sided DQBD is a special case of the double sided QBD introduced in [3] since the latter allows the background process to be a general Markov chain. The exactly geometric decays are studied in [3], but only sufficient conditions are obtained. Furthermore, those sufficient conditions require the stationary probabilities at the boundaries, i.e., at level 0, so they are not easy to verify. Not for the two sided DQBD but for the DQBD, Miyazawa [1] completely solves the decay rate problem recently, developing the ideas in [2].

We here extend this approach in [1]. Thus, many arguments are parallel to those in [1]. Namely, the approach heavily depends on the QBD structure and the Wiener Hopf factorization for the Markov additive process that generate the QBD process, and the key idea is to formulate the decay rate problem as a multidimensional optimization problem. However, the level and background states are not symmetric in the two sided DQBD while they are symmetric in the DQBD. So, we need some further effort to get the decay rates, which is a main contribution of this chapter for a general QBD model.

For the join shortest queue and its generalized versions, the decay rate problem has been widely studied in the literature. One possible approach is to use the large deviation principle. Puhalskii and Vladimirov [7] recently obtained the weak decay rates as the solutions of the variational problem for a much more general class of the generalized join shortest queue with an arbitrary number of parallel queues. However, this variational problem is very hard to not only analytically but also numerically solve even for the case of two queues.

Another approach is either to use the random walk structure or the QBD formulation. For example, Foley and McDonald [6] took the former formulation while Li, Miyazawa and Zhao [3] took the latter formulation. An interesting sufficient condition, i.e., so called strongly pooled condition, is found in [6]. However, those papers mainly consider the decay rate under this limited condition for the case of the two queues. So far, the decay rate problem has not been well answered for the generalized join shortest queue. In this chapter, we completely solve this problem for the case of the two queues (Theorem 1.5 and Corollary 1.2). For simpler arrival processes, there are many other studies on the join shortest queues and the decay rate problem has been relatively well answered (see references in [3], [6]).

This chapter is made up by seven sections. In Sect. 1.2, we introduce the two sided DQBD process formally, and consider its basic property, particularly on the rate matrices for representing the stationary distribution in a matrix geometric form. In Sect. 1.3, we characterize the set of positive eigenvectors of the rate matrices using the moment generating functions of the transition kernels insides and on the boundaries. The weak decay rates are completely answered in Sect. 1.4. We also give sufficient conditions for those decay rates to be exactly geometric. In Sect. 1.5, we consider the generalized join the shortest queue with two queues, and answer to the decay rate problems. We finally give some remarks on the existence results in Sect. 1.6. Conclusions are drawn in Sect. 1.7.

Two Sided DQBD Process

Let {(L 1t ,L 2t );t = 0,1,…} be a two dimensional Markov chain taking values in S ≡ Z × Z+, where Z is the set of all integers and Z+ = {ℓ ∈Z;ℓ≥ 0}, with the following transition probabilities (see Fig. 1.1).

where \(\Sigma _{i,j} p_{ij} = \Sigma _{i,j} p_{ij}^{\left(k \right)} = 1\,{\rm{for}}\,k = 0, \pm,1 \pm 2\) Thus, {(L 1t ,L 2t )} is a skip free random walk in all directions, and reflected at the boundary ∂S 1 ≡ {(i, j) ∈ S;j = 0} and has discontinuous statistics at ∂S 2 ≡ {(i,j) ∈ S;i = 0}.

We first take L 1t as level, and L 2t as background state, and refer to this Markov chain as a discrete-time two sided DQBD (doubly quasi-birth-and-death) process. In the random walk terminology, this process is two dimensional reflected random walk on the half space {(m,n) ∈ Z2;n ≥ 0} with discontinuous statistics at the boundaries where either one of components vanishes. We also note that this model is a special case of the double sided QBD in [3] whose background process is not necessary to be birth-and-death.

To present the transition probability matrix of this Markov chain, we first introduce the following matrices. For k = 0, ± 1 and s = ±,

and for k = 0, ±1,

Then, the two sided DQBD has the following tridiagonal transition matrix P (1).

Throughout this chapter, we assume that P (1) is irreducible and aperiodic, and positive recurrent. The unique stationary distribution of P is denoted by probability row vector:

, where vn for n ∈ Z are row vectors for background states in level n. We also write v as {V ij ; i ∈ Z, j ∈ Z+}. We assume that

-

(i)

For each \(s = \pm,2,A^{\left(s \right)} A_{- 1}^{\left(s \right)} + A_0^{\left(s \right)} + A_1^s \) is irreducible and aperiodic;

-

(ii)

For each s = ±, 2, Markov additive process driven by kernel \(\left\{{A_n^{\left(s \right)};n = 0, \pm 1} \right\}\) is 1-arithmetic in the sense that for every pair (i, j) ∈ S 1 × S 1, the greatest common divisor of \(\left\{{n \in;A_n^{\left(s \right)} \left({i,j} \right) > 0} \right\}\) is one, where Z is the set of all integers (see, e.g., [8]).

Remark 1.1. The irreducibility of A (s) in (i) is satisfied by many applications, but it is stronger than the irreducibility of P. Our arguments in this chapter can be modified so as to be valid without that irreducibility, and the same results are obtained. However, proofs becomes complicated just because we need to consider each case separately depending on the irreducibility or the non irreducibility. So, we here do not consider the non irreducible case, which will be detailed in a technical note.

It is well-known that, for each s = ±, there exists a nonnegative matrix R (s) uniquely determined as a minimal nonnegative solution of the matrix equation:

and the stationary distribution has the following matrix geometric form.

Note that R (s) may not be irreducible, but has a single irreducible class due to (i) and (ii).

We also consider the case that L 2 is taken as level. In this case, the transition matrix is denoted by P (2), and given by

where, for k = 0, ±1,

and for k = 0,1,

In this case, the stationary distribution v = {v ij } is partitioned as

where \(v_n^{\left(2 \right)} = \left\{{v_{in};i \in} \right\}\). Viewing L 1t as the background process, we have the standard process. Then, as is well known, there exists a minimal nonnegative solution r (2) of

and the stationary distribution v has the following form:

We are interested in the geometric decay behaviors of the stationary vector v n as n → ±∞ and \(v_n^{\left(2 \right)} \) as n → ∞. We are interested in two different types of asymptotics. If there are constant α+ > 1 and constant positive vector c + such that

then v n is said to asymptotically have exactly geometric decay rate α+ −1 as n → ∞. Another decay rate is of logarithmic type, which is defined through

where r +(i) ≤ 1. If r +(i) does not depend on i, we write it as r +. In this case, v n is said to asymptotically have weak geometric decay rate r +. Those decay rates are also defined for v n as n → − ∞ and for \(v_n^{\left(2 \right)} \) as n → ∞, which are denoted by r − and r 2, respectively. Since those decay rates may not exist, we also use the following notation:

Similarly, \(\underline r _s \left(i \right)\) and \(\overline {r_s} \left(i \right)\) are defined for s = −,2. These decay rates are referred to as the weak lower and weak upper decay rates, respectively.

It is noticed that \(\overline r _ + \left(i \right)\) in (1.6) is bounded as

Then, from (1.3) and (1.5), it might be expected that the weak decay rate \(r_ + ^{- 1} \) is obtained as the reciprocal of the convergence parameter c p (R (+)) of R (+), which is defined as

This is true under certain situations, but generally not true. In general, we only have the following lower bounds for the decay rates from this information.

Lemma 1.1. The decay rates are bounded below by the corresponding convergence parameters of the rate matrices. That is, we have

where Z2 = Z.

The proof of this lemma is exactly the same as Lemma 2.1 of [1], so it is omitted. This lemma just gives the lower bounds, but it turns out that they are very useful to identify the decay rates as well as to prove their existence.

We next prepare some useful facts for the convergence parameters.

Proposition 1.1 (Theorem 6.3 of [9]). For a nonnegative square matrix T, let X be the set of all nonnegative and nonzero row vectors whose size is the same as that of T. Then we have

We will consider all eigenvectors of r (s), s = ±, 2, to find the decay rate. For this, we use the Markov additive process generated by \(\left\{{A_k^{\left(s \right)};k = 0, \pm 1} \right\}\). Note that (1.1) and (1.2) and the corresponding equations of R (−) and R (2) are equivalent to

where u(s) = − 1 for s = −, u(s) = 1 for s = +,2, and A *(z) and \(G_*^{\left(s \right)} \left(z \right)\)are defined as

The decomposition formula (1.7) is known as the Wiener Hopf factorization (see, e.g. [8]). Then Proposition 1.1 concludes

Lemma 1.2 \(c_p \left({R^{\left(s \right)}} \right) = \sup \left\{{z \ge 1;{\rm{x}}A_*^{\left(s \right)} \left(z \right) \le {\rm{x,x}} \in {\rm{X}}^{\left(s \right)}} \right\}{\rm{for}}\,s = \pm,2,\), where X(s) is the set of all nonnegative and nonzero vectors in R Z+ for s = ± and R Z for s = 2.

Remark 1.2. The proof that \({\rm{x}}A_*^{\left(s \right)} \left(z \right) \le {\rm{x}}\) implies z ≤ cp(R (s))is immediate from (1.7). However, it is not so obvious to find z such that cp(R (s)) ≤ z and \({\rm{x}}A_*^{\left(s \right)} \left(z \right) \le {\rm{x}}{\rm{.}}\) The proof of this can be found in [1].

Similarly to the case of the doubly QBD process in [1], we compute each entry of \(A_*^{\left(s \right)} \left(z \right)\) using the following notations. Let (X 1,X 2) be a random vector subject to distribution {pij}, and let \(\left({X_1^{\left(s \right)},X_2^{\left(s \right)}} \right)\) be those subject to distribution \(\left\{{p_{ij}^{\left(s \right)}} \right\}\) for s = 0, ±, 1±, 2. Define generating functions as

Then, \(A_*^{\left(s \right)} \left(z \right)\) for s = ± has the following QBD structure.

Similarly, we have

Eigenvectors of Rate Matrices

If we take L 1 as level, then the background process {L 2t } is the birth and death process in each half lie [1,∞) or (−∞, −1]. Hence, this case is easier, so we consider r (s) with s = ± first. In what follows, we use the following notations for s = ±, 2.

We first note the following facts, which are easily concluded by the Wiener Hopf factorization.

Lemma 1.3. Let s be either one of −, + or 2. For z > 1, \(\left({z,{\bf{x}}} \right) \in V_R^{\left(s \right)} \) if and only if \(\left({z,{\bf{x}}} \right) \in V_R^{\left(s \right)} \). If there is no (z,x) in \(\left({z,{\bf{x}}} \right){\rm{in}}V_R^{\left(s \right)} \) with z > 1, then c p (R (s))=1.

Then, the following result is immediate from Theorem 3.1 in [1].

Theorem 1.1. Let \(D_1^{\left(- \right)} \) denote the subset of all (−θ1, θ2) in ∝2 such that

where \(\phi _i^{\left({1 -} \right)} \left({\theta _1} \right) = {\rm{E}}\left[ {e^{\theta _1 X_1^{\left({1 -} \right)};} X_2^{\left({1 -} \right)} = j} \right]{\rm{for}}\,j = 0,1.\). Similarly, let \(D_1^{\left(+ \right)} \) denote the subset of all (θ1, θ2) in ∝2 such that

where \(\phi _i^{\left({1 +} \right)} \left({\theta _1} \right) = {\rm{E}}\left[ {e^{\theta _1 X_1^{\left({1 +} \right)}};X_2^{\left({1 +} \right)} = j} \right]\,{\rm{for}}\,j = 0,1.\). Then, for each s = ±, there exists a \(\left({z,{\bf{x}}} \right) \in v_A^{\left(s \right)} \) if and only if there exists a \(\left({\theta _1,\theta _2} \right) \in D_1^{\left(s \right)}.\). Furthermore, we have the following facts.

(1a) For this \(\left({\theta _1,\theta _2} \right),\left({z,{\bf{x}}} \right) \in v_A^{\left(- \right)} \left({{\rm{res}}{\rm{.,}}v_A^{\left(+ \right)}} \right)\) is given by \(z = e^{\theta _1} \) andx = {x n}:

where \(\underline \theta _2,\overline \theta _2 \) are the two solutions of (1.9) (res., (1.11)) for the given θ1 such that \(\underline \theta _2 \le \overline \theta _2,\), and \(c_i,c'_i \) are nonnegative constants satisfying c 1 +c 2 ≠ 0 and \(c'_i + c'_2 \ne 0.\). Furthermore, both of c 1 and c 2 are positive only if the strict inequality holds in (1.9) (res., (1.11)).

(1b) The convergence parameter c p (R (s)) is obtained as the supremum of \(e^{\theta _1} \) over \(D_1^{\left(s \right)} \) for each s = ±.

Similarly to Theorem 1.1, we can prove the following theorem for r (2). Since this result is the core of our arguments, we give its detailed proof in Appendix A.

Theorem 1.2. Let D2 denote the subset of all \(\left({- \eta _1^{\left(- \right)},\eta _1^{\left(+ \right)},\eta _2} \right)\) in ℝ3 such that

where \(\phi _i^{\left(2 \right)} \left({\eta _2} \right) = {\rm{E}}\left[ {e^{\eta _2 X_2^{\left(2 \right)}};X_1^{\left(2 \right)} = i} \right]\,{\rm{for}}\,{\rm{i}}\,{\rm{=}}\,{\rm{0,}} \pm {\rm{1}}{\rm{.}}\). Then, there exists a \(\left({z,{\rm{x}}} \right) \in \,v_A^{\left(2 \right)} \) if and only if there exists a \(\left({- \eta _1^{\left(- \right)},\eta _1^{\left(+ \right)},\eta _2} \right) \in D_2.\). Furthermore, we have the following facts. (2a) For this \(\left({- \eta _1^{\left(- \right)},\eta _1^{\left(+ \right)},\eta _2} \right),\left({z,{\bf{x}}} \right) \in v_A^{\left(2 \right)} \) is given by \(z = e^{\eta _2} \) and x = {x n }:

where \(\underline \eta _1^{\left(- \right)},\overline \eta _1^{\left(- \right)} \) (res., \(\underline \eta _1^{\left(+ \right)},\overline \eta _1^{\left(+ \right)} \)) are the two solutions of (1.14) (res., (1.15)) for the given η2 such that \(\underline \eta _1^{\left(- \right)} \le \overline \eta _1^{\left(- \right)} \) (res., \(\underline \eta _1^{\left(+ \right)} \le \overline \eta _1^{\left(+ \right)} \)), and for each \(s = \pm,c_i^{\left(s \right)},d_i^{\left(s \right)} \) are nonnegative constants satisfying \(c_1^{\left(s \right)} + c_2^{\left(s \right)} \ne 0.\) and \(d_1^{\left(s \right)} + d_2^{\left(s \right)} \ne 0.\). Furthermore, both of \(c_1^{\left(s \right)} \) and \(c_2^{\left(s \right)} \) are positive only if the strict inequality holds in (1.16). (2b) The convergence parameter c p (R (2)) is obtained as the supremum of \(e^{\eta _2} \) over D2.

For convenience, we also introduce the following projections of D2, which will be used in Lemma 1.7.

.

An important observation in these theorems is that z satisfying (z,x) ∈ V(R> (s)) can be found through ∞1 or η2 in sets \(D_1^{\left(- \right)},D_1^{\left(+ \right)} \) and D2, which are in the boundary of convex sets. Furthermore, \(D_1^{\left(s \right)},D_2^{\left(2 \right)} \) and D2 are compact and connected sets for s = ±. This observation is expected to extend Corollary 3.1 of [1] for the two sided DQBD process. However, we have to check the two sided version of Proposition 3.1 of [1]. That is, we need the following lemmas. For convenience, we denote the set of non-positive integers by Z−.

Lemma 1.4. For s = ±, if there exist a positive vector \({\bf{x}}^{\left(s \right)} = \left\{{x_n^{\left(s \right)};n \in _s} \right\}\) such that \(\left({\alpha _s,{\bf{x}}^{\left(s \right)}} \right) \in v_A^{\left(s \right)} \) and some finite \(\underline d _s \left({\bf{x}} \right),\overline d _s \left({\bf{x}} \right) \ge 0\) such that

then, for any nonnegative column vector u (s) satisfying x (s) u (s) < ∞, there are noneg-ative and finite \(\underline d _s^\dag \left({{\bf{x}}^{\left(s \right)}} \right)\) and \(underline d _s^\dag \left({{\bf{x}}^{\left(s \right)}} \right)\) such that

In particular, if \(\underline d _s^\dag \left({{\bf{x}}^{\left(s \right)}} \right) = \overline d _s^\dag \left({x^{\left(s \right)}} \right)\) and \(0 \le d_s^\dag \underline = \underline d _s^\dag \left({{\bf{x}}^{\left(s \right)}} \right) < \infty,\), then

That is, v n u s decays geometrically with rate \(\alpha _s^{- 1} \) as n → s∞.

Lemma 1.5. If there exist a positive vector x = {x n ;n ∈ Z} such that \(\left({\alpha,{\rm{x}}} \right) \in v_A^{\left(2 \right)} \) and some finite \(\underline d ^ - \left({\bf{x}} \right),\overline d ^ - \left({\bf{x}} \right),\underline d ^ + \left({\bf{x}} \right),\overline d ^ + \left({\bf{x}} \right) \ge 0\) such that

then, for any nonnegative column vector u satisfying xu < ∞, there are nonegative and finite \(\underline d ^\dag \) (x) and \(\overline d ^\dag \) (x) such that

In particular, if \(\underline d ^\dag \left({\bf{x}} \right) = \overline d ^\dag \left({\bf{x}} \right)\) and \(0 \le d^\dag \underline = \,\underline d ^\dag \left({\bf{x}} \right) < \infty,\), then

That is, \(v_n^{\left(2 \right)} {\bf{u}}\) decays geometrically with rate α−1 as n goes to infinity.

Since this lemma can be proved in a similar way to Proposition 3.1 of [1], we omit its proof. For each n ≥ 0, let

Then, the next corollary follows from Theorem 1.1, Theorem 1.2 and Lemmas 1.4 and 1.5 similarly to Corollary 3.1 of [1].

Corollary 1.1. Define β s for s±, 2 as

Then, the weak upper decay rates r¯−(i), r¯+(i) and r¯2(j) of v − ni , v ni and v jn , respectively, as n → ∞ are uniformly bounded by \(e^{- \beta _ -},e^{- \beta _ +} \) and \(e^{- \beta 2}.\). In particular, for each s = ±,2, if β s = log c p (R (s)), then the weak decay rate r s exists and \(r_s = e^{- \beta _s}.\). Furthermore, if the asymptotic decay of v 1n,v (−1)n or v n1 and v −n(−1) is exactly geometric as n → ∞, then the corresponding stationary level distribution asymptotically decays in the exactly geometric form.

Answers to Decay Rate Problem

We are now in a position to answer to the decay rate problem. Since \(D_1^{\left(s \right)} \)for s = ± and D2 are compact sets, we can define, for s = ±,

Note that \(\theta _1^{\left({sc} \right)} \) = log c p (R (s)) for s = ± and \(\eta _2^{\left(c \right)} = \log c_p \left({R^{\left(2 \right)}} \right).\). Furthermore, \(\left({\eta _1^{\left({- c} \right)},\eta _1^{\left({+ c} \right)},\eta _2^{\left(c \right)}} \right)\) and \(\left({\theta _1^{\left({sc} \right)},\theta _2^{\left({sc} \right)}} \right)\) are in D2 and \(D_1^{\left(s \right)} \) for s = ±, respectively.

Similarly to Theorem 4.1 of [1], we consider the following nonlinear optimization problems. Let, for s = ±,

We can find solutions α s for s = ±, 2 in the following way.

Lemma 1.6. For the two sided DQBD process satisfying the assumptions (i) and (ii), suppose that its stationary distribution exists, which denoted by v = {v ij }. Then, we have

Proof. We define the following functions of u, u −, u + ≥ 0.

For convenience, let σ s = − log r¯ s for s = ±,2. Suppose that 0 ≤ u s ≤ σ s , which implies that \(\overline r _s \left(1 \right) \le e^{- u_s} \) and \(\overline r _2 \left(s \right) \le e^{- u_2} \) for s = ±. Then, Corollary 1.1 leads that

We next inductively define \(u_s^{\left(n \right)} \) for n = 0,1,… and s = ±, 2 in the following way. Let \(u_s^{\left(0 \right)} = 0,\), and

Then, it is easy to see that \(u_s^{\left(n \right)} \) is non decreasing in n, and satisfies (1.25) for \(u_s = u_s^{\left(n \right)} \) for s = ±,2. Hence, Corollary 1.1 concludes

On the other hand, from the definitions of α s , it is easy to prove by induction that

Then, it can be shown that the limits \(u_s^{\left(\infty \right)} \) are attained in finitely many steps. The detailed proof of this can be found in the proof of Theorem 4.1 of [1]. Hence, we have

Thus, we get (1.24).

For each s = ±, define the following four sets of conditions.

These conditions are exclusive and cover all the cases for each s = ±. Furthermore, (sC4) is impossible since \(\theta _1^{\left({sc} \right)} \, \le \,\eta _1^{\left({sc} \right)} \) implies that \(\eta _2^{\left(c \right)} \, > \,\theta _2^{\left({sc} \right)} \) due to the convexity of the set with boundary (1.9) and (1.11). For the other three cases for each s = ±, we have to consider their combinations, so nine cases in total. For convenience, we denote the condition that (−Ci) and (+Cj) hold by C(i, j) for i, j = 1,2,3.

The next lemma shows how we can compute α s for s = ±, 2.

Lemma 1.7. Under the assumptions of Lemma 1.6, the α−, α+ and α2 of (1.22) and (1.23) are obtained in either one of the following nine ways.

-

(c1)

If C(1,1) holds, then \(\alpha _ - = \exp \left({\theta _1^{\left({- c} \right)}} \right),\alpha _ + = \exp \left({\theta _1^{\left({+ c} \right)}} \right)\) and \(\alpha _2 = \exp \left({\eta _2^{\left(c \right)}} \right).\).

-

(c2)

If C(1,2) holds, then \(\alpha _ - = \exp \left({\theta _1^{\left({- c} \right)}} \right),\alpha _2 = \exp \left({\eta _2^{\left(c \right)}} \right)\)and α+ is the maximum value satisfying \(\left({\log \alpha _ +,\eta _2^{\left(s \right)}} \right) \in D_1^{\left(+ \right)}.\)

-

(c3)

If C(2,1) holds, then \(\alpha _ + = \exp \left({\theta _1^{\left({+ c} \right)}} \right),\alpha _2 = \exp \left({\eta _2^{\left(c \right)}} \right)\)and α− is themaximum value satisfying \(\left({\log \alpha _ -,\eta _2^{\left(c \right)}} \right) \in D_1^{\left(- \right)}.\).

-

(c4)

If C(1,3) holds, then \(\alpha _ + = \exp \left({\theta _1^{\left({+ c} \right)}} \right),\alpha _2 \) is the maximum value satisfying \(\left({\theta _1,\log \alpha _2} \right) \in D_2^{\left(+ \right)} \)with \(\theta _1 \le \theta _1^{\left({+ c} \right)},\), and α− is the maximal value satisfying \(\left({\log \,\alpha _ -,\theta _2} \right) \in D_1^{\left(- \right)} \) with ∞2 ≤ α2.

-

(c5)

If C(3,1) holds, then \(\alpha _ - = \exp \left({\theta _1^{\left({- c} \right)}} \right),\alpha _2 \) is the maximum value satisfying \(\left({\theta _1,\,\log \,\alpha _2} \right) \in D_2^{\left(- \right)} \) with \(\theta _1 \le \theta _1^{\left({- c} \right)},\) and α+ is the maximal value satisfying \(\left({\log \,\alpha _ +,\theta _2} \right) \in D_1^{\left(+ \right)} \)with ∞2 ≤ α2.

-

(c6)

If C(2,2) holds, then \(\alpha _2 = \exp \left({\eta _2^{\left(c \right)}} \right)\) and α s is the maximum value satisfying \(\left({\log \,\alpha _s,\eta _2^{\left(c \right)}} \right) \in D_1^{\left(s \right)} \) for s = ±.

-

(c7)

If C(2,3) holds, then \(\alpha _ + = \exp \left({\theta _1^{\left({+ c} \right)}} \right),\,\alpha _2 \), α2 is the maximum value satisfying \(\left({\theta _1,\log \,\alpha _2} \right) \in D_2^{\left(+ \right)} \)with \(\theta _1 \le \theta _1^{\left({+ c} \right)} \) and α− is the maximum value satisfying \(\left({\log \,\alpha _ -,\log \,\alpha _2} \right) \in D_1^{\left(- \right)}.\).

-

(c8)

If C(3,2) holds, then \(\alpha _ - = \exp \left({\theta _1^{\left({- c} \right)}} \right),\alpha _2 \) is the maximum value satisfying \(\left({\theta _1,\log \,\alpha _2} \right) \in D_2^{\left(- \right)} \) with \(\theta _1 \le \theta _1^{\left({- c} \right)},\), and α+ is the maximum value satisfying \(\left({\log \,\alpha _ +,\log \alpha _2} \right) \in D_1^{\left(+ \right)}.\).

-

(c9)

If C(3,3) holds, then \(\alpha _ - = \,\,\exp \left({\theta _1^{\left({- c} \right)}} \right),\,\alpha _ - \, = \exp \left({\theta _1^{\left({+ c} \right)}} \right)\)and α2 is the maximum value satisfying \(\left({\theta _1^{\left(- \right)},\theta _1^{\left(+ \right)},\log \alpha _2} \right) \in D_2 \) with \(\theta _1^{\left(- \right)} \le \theta _1^{\left({- c} \right)} \)and \(\theta _1^{\left(+ \right)} \le \theta _1^{\left({+ c} \right)} \).

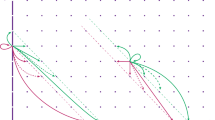

This theorem can be proved in the same way as Lemma 4.2 of [1]. So, instead of proving it, we give figures to explain how those decay rates are obtained. They can be found in Figs. 1.2, 1.3 and 1.4. Since cases (c3), (c5) and (c7) are symmetric with (c2), (c4) and (c6), respectively, we omit their figures. We shall see more figures for specific examples in Sect. 1.5.

Theorem 1.3. Under the assumptions of Lemma 1.6, we have \(r_s = \alpha _s^{- 1} \) for s = ±,2. Namely, \(\alpha _ - ^{- 1},\alpha _ + ^{- 1} \) and \(\alpha _2^{- 1} \) are the weak decay rates of \(v_{- n}^{\left(- \right)},v_n^{\left(+ \right)} \) and \(v_n^{\left(2 \right)} \), respectively, as n → ∞. Furthermore, the marginal probabilities, \(v_{- n}^{\left(- \right)} 1,\,v_n^{\left(+ \right)} 1\) and \(v_n^{\left(2 \right)} 1\), have the same decay rates \(\alpha _ - ^{- 1},\alpha _ + ^{- 1} \) and \(\alpha _2^{- 1} \), respectively, if they are less than 1, respectively.

Proof. We first consider r¯ s (1) for s = ±, 2, which are the weak upper decay rates of V −n1, V n1 and V 1n as n → ∞, respectively, are obtained by (1.24). Hence, Lemmas 1.1, 1.4 and 1.5 yield

From Lemma 1.7, at least one of α−, α+ and α2 agree with the corresponding convergence parameter c p (R (s)). Hence, we have \(r_s = \alpha _s^{- 1} \) at least for one s. This together with Corollary 1.1 and Lemmas 1.4 and 1.5 conclude that the same equality must hold for the other s's. This completes the proof.

We can refine the decay rates in this theorem from weak to exact ones in a similar way as Theorem 4.2 of [1] using Proposition 3.1 of [1] and Lemmas 1.4 and 1.5 for the case that the decay rates are exactly geometric. However, for the other cases, we can not directly use Theorem 5 of [10] which was used in [1] since the level or background state is two sided. Thus, we here only present the case that the exactly geometric decay occurs. We omit its proof since it is similar to Theorem 4.2 of [1].

Theorem 1.4. Under the assumptions of Theorem 1.3 with α− > 1, α+ > 1 or α2 > 1, let, for s = ±,

Then, we have the exactly geometric decay rates for the following cases.

-

(d1)

If either (−C2) or (−C3) holds, then both asymptotic decays of {v (−n)k } and {v ln } as n → ∞ are exactly geometric with the decay rates \(\alpha _ - ^{- 1} \) and \(\alpha _2^{- 1} \), respectively.

-

(d2)

If either (+C2)) or (+C3) holds, then both asymptotic decays of {v nk } and {v ln } as n → ∞ are exactly geometric with the decay rates \(\alpha _ + ^{- 1} \) and \(\alpha _2^{- 1} \), respectively.

-

(d3)

If (C1) holds and if \(\theta _1^{s\max} \notin D_1^{\left(s \right)} \) and \({\rm{\eta}}_1^{\left(c \right)} < \theta _1^{\left({sc} \right)} \), then the asymptotic decay of {v (sn)k } ({V ln }) as n → ∞ is exactly geometric with the rate \(\alpha _s^{- 1} \left({\alpha _2^{- 1}} \right)\) for s = ±.

Generalized Join Shortest Queue

Let us apply Theorems 1.3 and 1.4 to the generalized join shortest queue which is studied in [3], [6] and explained in Sect. 1.1. We first introduce notations for this model. It has two parallel queues, numbered as queues 1 and 2. For each i = 1,2, queue i serves customers in the First-Come First-Served manner with i.i.d. service times subject to the exponential distribution with rate μ i . There are three exogenous Poisson arrival streams. The first and second streams go to queues 1 and 2 with the mean arrival rate λ1 and λ2, respectively, while arriving customers in the third stream with the mean rate δ choose the shorter queue with tie breaking. The decay rates does not depend on the probability that customer with tie breaking choose queue 1, so we simply assume it to be 1/2.

We are interested to see how the stationary tail probabilities of the shorter queue lengths and the difference of the two queues decay. Due to the dedicated stream to each queue, this problem is much harder than the one for the standard joining the shortest queue. Since we only consider the stationary distribution, we can formulate this continuous time model as a discrete time Markov chain. For this, we assume without loss of generality that

Let Q 1t and Q 2t be the queue lengths including customers being served at time t = 0,1,…, and let L 1t = Q 2t − Q 1t and L 2t = min(Q 1t , Q 2t ). It is not hard to see that (L 1t ,L 2t ) is a skip free random walk on each region (Z+ ∪ \{0}) × (Z+ \ {0}) reflected at the boundary Z × {0} and has different transitions at {0} × Z+ (see Fig. 1.5).

Then, the transition probabilities are give by

where all other transitions are null. To exclude obvious cases, we assume that δ, μ1, μ2 are all positive.

Denote traffic intensities by

Then, it is known that this generalizedjoin shortest queue is stable if and only if ρ1 < 1,ρ2 < 1 and ρ < 1 (eg., see [6]). This stability condition is assumed throughout this section. We will also use the following notation, which were introduced and shown to be very useful in computations in [3].

We apply Theorem 1.3 to this model. For this, we need to compute θ(−c), θ(+c) and \({\rm{\eta}}_2^{\left(c \right)} \). In the view of Theorems 1.1 and 1.2, they are obtained if we can solve the following three sets of equations.

For convenience, let \(z = e^{- \theta _1} \) and \({\rm{\xi =}}e^{\theta _2} \) in (1.26). Then, we have

Solving these equations for z ≠ 1, we have \(z = \xi = \rho _1^{- 1}.\) for \(z = {\rm{\xi =}}\rho _1^{- 1} \), (1.29) yields \({\rm{\xi =}}\rho _1^{- 1},\frac{{{\rm{\mu}}_2}}{{{\rm{\lambda}}_2 + \delta}}\rho _1^{- 1} \). Note that \(\rho _1^{- 1} < \frac{{{\rm{\mu}}_2}}{{{\rm{\lambda}}_2 + \delta}}\rho _1^{- 1} \) if and only if μ2 > λ2 + δ. Hence, reminding the definitions of \(\theta _i^{- \max} \):

, we have

It is also notable that \(\theta _1^{- \max} \ge \log \rho _1^{- 1} \), so we always have that \(\theta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1} \).

Remark 1.3. The \(\theta _1^{- \max} \) for i = 1,2 are computed from their definitions as

where

Similarly, letting \(z = e^{\theta _1} \) and \({\rm{\xi =}}e^{\theta _2} \) in (1.27),

Solving these equations for z ≠ 1, we have \(z = {\rm{\xi =}}\rho _2^{- 1} \). for \(z = \rho _2^{- 1} \), (1.32) yields \({\rm{\xi =}}\rho _2^{- 1},\,\frac{{{\rm{\mu}}_1}}{{{\rm{\lambda}}_1 + \delta}}\rho _2^{- 1} \). Reminding that

we have that \(\theta _1^{\left({+ c} \right)} \ge \log \rho _2^{- 1} \) and

We also consider to solve (1.28). In this case, let \({\rm{\xi =}}e^{{\rm{\eta}}_2},z1 = e^{- {\rm{\eta}}_1^{\left(- \right)}} \) and \(z2 = e^{{\rm{\eta}}_1^{\left(+ \right)}} \). Then, (1.28) becomes

These equations have been solved in [3]. That is, if z ≠ 1, then ξ, = ρ−2 and z 1 = z 2 = ρ−1. For ξ = ρ−2, the first equation has solutions z 1 = ρ−1, \(\frac{{\gamma _1 + \delta}}{{\gamma _2}}\rho ^{- 1} \), and the second equation yields \(z_2 = \rho ^{- 1},\frac{{\gamma _2 + \delta}}{{\gamma _1}}\rho ^{- 1} \). In this case, \(\eta _2^{\left(c \right)} \) is obtained as the maximum ξ that satisfies (1.35), (1.36) and

Thus, we need to solve a convex optimization problem. We here already know that (z 1,z 2,ξ) = (1,1,1), (ρ−1,ρ−1,ρ−2) are the extreme points of the constrains. To identify the latter point on the convex curves (1.35) and (1.36), it is convenient to introduce the following classifications:

where we exclude the case that γ2 + δ ≤ γ1 and γ1 + δ ≤ γ2, which is impossible since δ > 0. Note that (1.39) is equivalent to

which is introduced and called strongly pooled in [6].

We now find \(\eta _2^{\left(c \right)} \) by solving the convex optimization problem.

Lemma 1.8. If the strongly pooled condition (1.39) holds, then

Otherwise, if (1.40) holds, then

and, if (1.41) holds, then

We defer the proof of this lemma to Appendix B.

We next consider to apply Theorem 1.3 to the generalized join shortest queue. To this end, we introduce another classifications.

where we do not consider the case that ρ1 ≥ ρ and ρ2 ≥ ρ, which is impossible since δ > 0. The condition (1.42) is referred to as a weakly pooled condition in [6]. Under the conditions (1.39) and (1.42), the asymptotic decay of

is shown to be exactly geometric with decay rate ρ2 for each fixed l in [3], [6]. This is the only known results for the decay rate for the minimum of the two queues. Using the two sets of the classifications, we can answer to the decay rate problem for all the cases but for the weak decay rates.

Theorem 1.5. For the generalized join shortest queue with two queues, suppose that the stability conditions ρ < 1, ρ1 < 1 and ρ2 < 1 are satisfied. Then, the weak decay rate r 2 exists for the minimum of the two queues in the sense of marginal distribution as well as jointly with each fixed difference of the two queues, and one of the following three cases occurs.

-

(g1)

If (1.39) holds, then either one of the following cases happens.

-

(g2)

If (1.40) holds, then either one of the following cases happens.

-

(g2a)

(1.42) implies \(\left\{{\begin{array}{*{20}c} {e^{- \theta _2^{- \max}},\eta _1^{\left({+ c} \right)} \le \theta _1^{\left({+ c} \right)}} \\ {\frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _{2,} \eta _1^{\left({+ c} \right)} > \theta _1^{\left({+ c} \right)}} \\\end{array}} \right.\).

-

(g2b)

(1.43) implies \(r_2 = \left\{{\begin{array}{*{20}c} {e^{- \theta _2^{- \max}},\eta _1^{\left({- c} \right)} < \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} < \theta _1^{\left({+ c} \right)}} \\ {\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1,\,\eta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} < \theta _1^{\left({+ c} \right)}} \\ {\frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2,\,\eta _1^{\left({- c} \right)} < \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} \ge \theta _1^{\left({+ c} \right)}} \\ {\min \left({\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1,\frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2} \right),\,\eta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} \ge \theta _1^{\left({+ c} \right)}} \\\end{array}} \right.\)

-

(g2c)

(1.44) implies \(r_2 = \frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2 \).

-

(g2a)

-

(g3)

If (1.41) holds, then either one of the following cases happens.

-

(g3a)

(1.42) implies \(r_2 = \left\{{\begin{array}{*{20}c} {e^{- \theta _2^{+ \max}},\,\eta _1^{\left({- c} \right)} \le \theta _1^{\left({- c} \right)}} \\ {\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1,\,\eta _1^{\left({- c} \right)} > \theta _1^{\left({- c} \right)}} \\\end{array}} \right.\)

-

(g3b)

(1.43) implies \(r_2 = \frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1 \).

-

(g3c)

(1.44) implies \(r_2 = \left\{{\begin{array}{*{20}c} {e^{- \theta _2^{+ \max}},\,\eta _1^{\left({- c} \right)} < \theta _1^{\left({- c} \right)},\eta _1^{\left({+ c} \right)} < \log \rho _2^{- 1}} \\ {\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1,\,\eta _1^{\left({- c} \right)} \ge \theta _1^{\left({- c} \right)},\eta _1^{\left({+ c} \right)} < \log \rho _2^{- 1}} \\ {\frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2,\,\eta _1^{\left({- c} \right)} < \theta _1^{\left({- c} \right)},\eta _1^{\left({+ c} \right)} \ge \log \rho _2^{- 1}} \\ {\min \left({\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1,\frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2} \right),\,\eta _1^{\left({- c} \right)} \ge \theta _1^{\left({- c} \right)},\eta _1^{\left({+ c} \right)} \ge \log \rho _2^{- 1}} \\\end{array}} \right.\)

-

(g3a)

Furthermore, the decay rates are exactly geometric for the cases (g1), (g2) unless \(\eta _1^{\left({- c} \right)} = \theta _1^{- \max} \) and (g3) unless \(\eta _1^{\left({+ c} \right)} = \theta _1^{+ \max} \).

Proof. This theorem is concluded applying Theorem 1.3 together with Lemma 1.7 for \(\left({\theta _1^{\left({sc} \right)},\theta _1^{\left({sc} \right)}} \right)\) for s = ± and Lemma 1.8. We first consider case (g1a). In this case, we suppose that the strongly pooled condition (1.39) and the weakly pooled condition (1.42) hold, then \(\theta _1^{\left({sc} \right)} \ge \eta _1^{\left({sc} \right)} \) for s = ± from (1.31), (1.34) and Lemma 1.6. Hence, either one of C(1,1). C(1,2) or C(2,1) occurs in Lemma 1.7, which implies that \(r_2 = \alpha _2^{- 1} = e^{- \eta _2^{\left(c \right)}} = \rho ^2 \).

We next consider (g1b). In this case, (1.39) and (1.43) are assumed. Note that ρ1 ≥ ρ in (1.43) implies that

Hence, we always have \(\theta _1^{\left({- c} \right)} = \log \rho _1^{- 1} \) from (1.31) in this case. Since \(\log \rho _1^{- 1} \le \log \rho ^{- 1} = \eta _1^{\left({- c} \right)} \) and \(\eta _1^{+ c} = \log \rho ^{- 1} < \log \rho _2^{- 1} \le \theta _1^{\left({+ c} \right)} \), we have (g1b) from (c5) or (c8) of Lemma 1.7.

The other cases are similarly proved. So, we omit their details.

To visualize the results of Theorem 1.5, we draw equations (1.26) and (1.27) on the (θ1,θ2) plane simultaneously for some examples. We here consider the four cases (g1a), (g1b), (g2a) and (g2b).

These four cases are given in Figs. 1.6 and 1.7. In case (g1a) of Fig. 1.6,

which implies that \(\rho _1 = \frac{1}{4},\rho _2 = \frac{1}{2}\) and \(\rho = \frac{3}{5}\). In case (g1b),

which implies that ρ1 = 0.6, ρ2 = 0.5 and \(\rho = \frac{{11}}{{18}}\). Figure 1.7 shows the case where the weakly pooled condition (1.39) does not hold. In case (g2a), we set

which implies that ρ1 = 0.9, \(\rho _2 = \frac{{17}}{{30}}\) and ρ = 0.7. This example shows that the strongly pooled condition (1.39) does not imply the weakly pooled condition (1.42). In case (g2b),

which implies that ρ1 = 0.7, ρ2 = 0.5 and \(\rho = \frac{2}{3}\).

Similarly to Theorem 1.5, we can get the following corollary for the decay rates for the difference of the two queues. We omit its proof since it is parallel to the arguments in Theorem 1.5.

Corollary 1.2. Under the assumptions of Theorem 1.5, the weak decay rates r − and r + for the difference Q 2 − Q 1 in the negative and positive directions, respectively, exist in the sense of marginal distributions as well as jointly with each fixed minimum of the two queues, and we have the following cases, where \(\left({\theta _1^{\left({- c} \right)},\theta _2^{\left({- c} \right)}} \right)\) and \(\left({\theta _1^{\left({+ c} \right)},\theta _2^{\left({+ c} \right)}} \right)\) are given by (1.31) and (1.34), respectively, and

-

(h1)

If (1.39) holds, then either one of the following cases happens. (h1a) (1.42) implies

$$r_ - = \left\{{\frac{{\mathop {e^{- \theta _1^{\left({- c} \right)}}}\limits_{\gamma _2},}}{{\gamma _1 + \delta}}} \right.\rho,\begin{array}{*{20}c} {\theta _2^{\left({- c} \right)} \le \log \rho ^{- 2}} \\ {\theta _2^{\left({- c} \right)} > \log \rho ^{- 2},} \\\end{array}$$((1.45))$$r_ + = \left\{{\frac{{\mathop {e^{- \theta _1^{\left({+ c} \right)}}}\limits_{\gamma _1},}}{{\gamma _2 + \delta}}} \right.\rho,\begin{array}{*{20}c} {\theta _2^{\left({+ c} \right)} \le \log \rho ^{- 2}} \\ {\theta _2^{\left({+ c} \right)} > \log \rho ^{- 2},} \\\end{array}$$((1.46))-

(h1b)

(1.43) implies with \(r_2 = \frac{{\left({\lambda _2 + \delta} \right)}}{{\mu _2}}\rho _1 \) that

$$\begin{array}{*{20}c} {r_ - = \rho _1,} & {r_ + \left\{{\begin{array}{*{20}c} {e^{- \theta _1^{\left({+ c} \right)}},} \\ {t_ + \left({r_2} \right),} \\\end{array}\begin{array}{*{20}c} {\theta _2^{\left({+ c} \right)} \le \log r_2^{- 1}} \\ {\theta _2^{\left({+ c} \right)} > \log r_2^{- 1}.} \\\end{array}} \right.} \\\end{array}$$((1.47)) -

(h1c)

If (1.44) implies with \(r_2 = \frac{{\left({\lambda _1 + \delta} \right)}}{{\mu _1}}\rho _2 \) that

$$r - \, = \begin{cases}e^{- \theta _1 ^{(- c)}} \,&,\theta _2 ^{(- c)} \, \le \log r_2 ^{- 1},\\ \,\,t - (r2),\,\,&\theta _2 ^{(- c)} \, > \log r_2 ^{- 1},\, \end{cases} \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,r + \, = \rho 2\,\,$$((1.48))

-

(h1b)

-

(h2)

If (1.40) holds, then either one of the following cases happens.

-

(h2a)

(1.42) implies

$$\left({r_ -,r_ +} \right) = \left\{{_{\left({e^{- \theta _1^{\left({- c} \right)}},\rho _2} \right),}^{\left({e^{- \theta _1^{\left({- c} \right)}},\min \left({e^{- \theta _1^{\left({+ c} \right)}},t_ + \left({e^{- \eta _2^{\left(c \right)}}} \right)} \right)} \right),} \begin{array}{*{20}c} {\theta _1^{\left({+ c} \right)} \ge \eta _1^{\left({+ c} \right)}} \\ {\theta _1^{\left({+ c} \right)} < \eta _1^{\left({+ c} \right)}.} \\\end{array}} \right.$$((1.49)) -

(h2b)

(1.43) implies

$$\left({r_ -,r_ +} \right) = \left\{{\begin{array}{*{20}c} {\begin{array}{*{20}c} {\begin{array}{*{20}c} {\left({\rho _1,e^{- \theta _1^{\left({+ c} \right)}}} \right),} & {\eta _1^{\left({- c} \right)} < \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} < \theta _1^{\left({+ c} \right)}} \\\end{array}} \\ {\left({\rho _1,\min \left({e^{- \theta _1^{\left({+ c} \right)}},t\left({r_2} \right)} \right.} \right),\eta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} < \theta _1^{\left({+ c} \right)}} \\ {\left({\min \left({e^{- \theta _1^{\left({- c} \right)}},t_ - \left({r_2} \right),\rho _2} \right.} \right),\eta _1^{\left({- c} \right)} < \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} \ge \theta _1^{\left({+ c} \right)}} \\\end{array}} \\ {\left({\rho _1,\min \left({e^{- \theta _1^{\left({+ c} \right)}},t_ + \left({r_2} \right)} \right.} \right),\eta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} \ge \theta _1^{\left({+ c} \right)}} \\ {\begin{array}{*{20}c} {{\rm{and}}\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1 < \frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2} \\ {\left({\min \left({e^{- \theta _1^{\left({- c} \right)}},t_ + \left({r_2} \right)} \right.\rho _2} \right),\eta _1^{\left({- c} \right)} \ge \log \rho _1^{- 1},\eta _1^{\left({+ c} \right)} \ge \theta _1^{\left({+ c} \right)}} \\\end{array}} \\ {{\rm{and}}\frac{{\lambda _2 + \delta}}{{\mu _2}}\rho _1 \ge \frac{{\lambda _1 + \delta}}{{\mu _1}}\rho _2,} \\\end{array}} \right.$$((1.50))where \(t_ + = \max \left\{{z;\left({1.32} \right){\rm{for\xi = log}}r_2^{- 1}} \right\}\) and \(r_2 = \frac{{\left({\lambda _2 + \delta} \right)}}{{\mu _2}}\rho _1 \).

-

(h2c)

(1.44) implies with \(r_2 = \frac{{\left({\lambda _1 + \delta} \right)}}{{\mu _1}}\rho _2 \) that

$$\left({r_ -,r_ +} \right) = \left\{{_{\begin{array}{*{20}c} {\left({t_ - \left({r_2} \right),\rho _2} \right),} & {\theta _2^{\left({- c} \right)} \ge \,\log \,r_2^{- 1}} \\\end{array}.}^{\begin{array}{*{20}c} {\left({e^{- \theta _1^{\left({- c} \right)}},\rho _2} \right),} & {\theta _2^{\left({- c} \right)}} \\\end{array} < \,\log \,r_2^{- 1}}} \right.$$((1.51))

-

(h2a)

Furthermore, the decay rates are exactly geometric unless either \(r_ - = e^{- \theta _1^{\left({- c} \right)}} \) with \(\theta _1^{\left({- c} \right)} = \theta _1^{- \max} {\rm{or r}}_ + = e^{- \theta _1^{\left({+ c} \right)}} {\rm{with}}\theta _1^{\left({+ c} \right)} = \theta _1^{+ \max} \).

Remark 1.4. In this corollary, the case that (1.41) holds is not considered. However, this case can be easily obtained by interchanging the roles of queues 1 and 2 in case (h2).

Remarks on Existence Results

We remark how our results include the existence results. The exactly geometric rate r 2 = ρ2 is obtained under the conditions (1.39) and (1.42) in [3], [6]. Our results cover all the possible cases although the decay rates are generally of the weak sense. We also note that there are some errors in Theorem 3.2 of [3]. They can be corrected by Corollary 1.2. Namely, the additional conditions (3.16) and (3.18) there are not sufficient to get the decay rates. They are used for all the terms in the sums of (3.15) and (3.17) to be positive. However, this is different from the corresponding eigenvectors to be positive. The right conditions are \(\theta _2^{\left({+ c} \right)} \ge \log \rho ^{- 2} \) and \(\theta _2^{\left({- c} \right)} \ge \log \rho ^{- 2} \), respectively, where \(\theta _2^{\left({- c} \right)} \) and \(\theta _2^{\left({+ c} \right)} \) are given in (1.31) and (1.34), respectively.

Conclusions

In this paper, we completely characterized the weak tail decay rates in terms of the transition probabilities for the stationary distribution of the two sided DQBD process (Theorems 1.3). For the exactly geometric decay, we find sufficient conditions, which are close to necessary conditions (Theorem 1.4). We then apply those results to the generalized join shortest queue with two waiting lines, whose decay rate problem has been only solved under some special conditions such as the weakly and strongly pooled conditions in the literature. We completely answer to this problem by finding the weak decay rates of the stationary distributions of the minimum of the two queues and their difference for all cases (Theorem 1.5 and Corollary 1.2). It is notable that the strongly and weakly pooled conditions still play the important role for finding the decay rate for the minimum of two queues. That is, the decay rate crucially changes according to whether or not those two conditions are satisfied.

Acknowledgments I am grateful to Yiqiang Zhao for his careful reading the original manuscript of this chapter and many invaluable comments. I also think Mr. Hiroyuki Yamakata for computing some numerical values. This research is supported in part by JSPS under grant No. 18510135.

Appendix 1

We prove Theorem 1.2. Let x = (…,χ−1,χ0,χ1,…) be the right positive invariant vector of \(A_*^{\left(2 \right)} \left(z \right).\) Then, we have

For s = ±, let \(s = \pm,\,{\rm{let}}\,w_1^{\left(s \right)} \) and \(w_2^{\left(s \right)} \) be the solutions of the following quadratic equation:

Then x must have the following forms:

By the irreducibility assumption in (i), \(p_{1_*}^{\left(s \right)} \left(z \right) > 0\) and \(p_{\left({- 1} \right)_*}^{\left(s \right)} \left(z \right) > 0.\) Furthermore, the positivity of \(x_n,\,w_1^{\left(s \right)},w_2^{\left(s \right)} \) must be real numbers. Hence, from the fact

\(w_1^{\left(s \right)} \) and \(w_2^{\left(s \right)} \) must be positive. This implies that x is nonnegative if and only if

From (1.52), we have

Substituting these χ−2 and χ2 into (1.56) yields

Since \(w_1^{\left(s \right)} \) satisfies (1.53), we have

Using (1.55), this is equivalent to

Hence, letting

we have (1.14), (1.15) and (1.16).

We next show that these conditions are also sufficient. Suppose that there are η2 ≥ 0 and \(\eta _1^{\left(s \right)} \) with s = ±1 satisfying (1.14), (1.15) and (1.16). Then, we can find u (s) with s = ±1 such that

Let χ0 = 1, and define χ−1 and χ1 as \(x_{- 1} = \frac{{u\left(- \right)}}{{p_{1*}^{\left(- \right)} \left(z \right)}},\,x_1 = \frac{{u\left(+ \right)}}{{p_{\left({- 1} \right)*}^{\left(+ \right)} \left(z \right)}}.\) Hence, letting z=e η2 and \(w_2^{\left(s \right)} = e^{- \eta _1^{\left(s \right)}} \) with s = ±1, we have (1.59) and (1.60). Then, defining χ−2, χ2 and χ n by (1.57), (1.58) and (1.54), respectively, we revive (1.52). Hence, we indeed find the positive left eigenvector x of \(A_*^{\left(2 \right)} \left(z \right).\) This proves the first part of the theorem. The remaining parts are obvious from (1.54) and Lemma 1.2.

Appendix 2

We prove Lemma 1.8. Define the following functions on \(_ + ^3,\) where ℝ+ = (0, ∞),

Obviously, all the functions are convex. Then, Lemma 1.8 is obtained by the following optimization problem. In particular, \(\eta _2^{\left(c \right)} \) is obtained as the logarithm of the maximum value of f.

subject to

This is a convex optimization problem, and (1.61) is satisfied with equality only if (z 1,z 2,ο) = (1,1,1) or (ρ−1,ρ−1,ρ−2) (see Lemma 3.2 of [3]). By D, we denote the set of all feasible solutions satisfying the constraints (1.61) and (1.62). Clearly, D is closed and bounded in \(R_ + ^3.\) For convenience, let

Since \(\left\{{\left({z_i,\xi} \right) \in \,_ + ^2;g_i \left({z_1,z_2,\xi} \right) \le 0} \right\}\) is a convex set, gi(z 1,z 2,ξ) = 0 have two solutions counting multiplicity for each ξ and each i = 1,2 if the solution exists. Hence, there exist at most four points (z 1,z 2,ξ) ∈ D for each ξ.

We show that D is a connected curve with end points (1,1,1) and (ρ−1,ρ−1,ρ−2) if D has three points at least. Suppose that this is not true. Let \(\left({z_1^ \circ,z_2^ \circ,\xi ^ \circ} \right) \in D\) be the third point other than the above end points. Then, we must have \(h\left({z_1^ \circ,z_2^ \circ,\xi ^ \circ} \right) < 0.\) This implies that the point \(\left({z_1^ \circ,z_2^ \circ} \right)\) is in the interior of the set

for ξ = ξ°, which is a polyhedral for each ξ and its region is continuously increased as ξ is increased. Hence, there exists a connected curve which passes through \(\left({z_1^ \circ,z_2^ \circ,\xi ^ \circ} \right)\) as an inner point. This curve must have (1,1,1) and (ρ−1,ρ−1,ρ−2) as its end points since otherwise we arrive at the contradiction that there is a point other than those points such that h = 0 holds.

Let us consider the cases for (1.39) and (1.40) separately. Here, we do not consider the case for (1.41) since it is symmetric to the case for (1.40). Denote the solutions of g i (z 1,z 2,ξ) = 0 for each ξ by \(\frac{{z_i}}{{- 2}}\left(\xi \right)\) and \(\overline {z_i} \left(\xi \right),\) where \(\underline z _i \left(\xi \right) \le \,\overline z _i \left(\xi \right).\) First, assume that (1.39) holds. then \(\left({\underline z _1 \left({\rho ^{- 2}} \right),\underline z _2 \left({\rho ^2} \right),\rho ^{- 2}} \right) = \left({\rho ^1,\rho ^{- 1},\rho ^{- 2}} \right) \in D\) and \(\overline {z_i} \left({\rho ^{- 2}} \right) \notin D_i \) for i = 1,2. Hence, f is maximized at (ρ−1,ρ−1,ρ−2). We next assume (1.40). Then, we have \(\left({\overline z _1 \left({\rho ^{- 2}} \right),\underline z _2 \left({\rho ^2} \right),\rho ^{- 2}} \right) = \left({\rho ^1,\rho ^{- 1},\rho ^{- 2}} \right) \in D\) and \(\left({\underline z _1 \left({\rho ^{- 2}} \right),\underline z _2 \left({\rho ^{- 2}} \right),\rho ^{- 2}} \right) \in D\)since \(\underline z _1 \left({\rho ^{- 2}} \right) \le \overline z _1 \left({\rho ^{- 2}} \right).\) if \(\underline z _1 \left({\rho ^{- 2}} \right) = \overline z _1 \left({\rho ^{- 2}} \right),\) we can reduce the problem to the case for (1.39). Otherwise, D has three points at least, so it is a connected curve with end points (1,1,1) and (ρ−1,ρ−1,ρ−2) as shown above. This concludes that f is maximized at \(\left({\underline z _1 \left({\xi ^*} \right),\underline z _2 \left({\xi ^*} \right),\xi ^*} \right)\) such that \(\underline z _1 \left({\xi ^*} \right) = \overline z _1 \left({\xi ^*} \right).\) Since ξ* must be the maximum value of ξ satisfying g 1(z 1,z 2,ξ) = 0, \(\eta _2^{\left(c \right)} = \theta _2^{- \max}.\) This completes the proof.

It may be notable that we can also solve the optimization problem by applying Karush-Kuhn-Tucker necessary conditions (e.g., see Sect. 4.3.7 of [11]). However, the present solution is more informative since the feasible region D is identified to be a connected curve.

References

M. Miyazawa, “Tail decay rates in a doubly QBD process,” Submitted for publication.

M. Miyazawa, “Doubly QBD process and a solution to the tail decay rate problem,” in Proc. the Second Asia-Pacific Symposium on Queueing Theory and Network Applications, pp. 33–42, 2007.

H. Li, M. Miyazawa, and Y. Q. Zhao, “Geometric decay in a QBD process with countable background states with applications to shortest queues,” Stochastic Models, vol. 23, pp. 413–438, 2007.

G. Latouche and V. Ramaswami, Introduction to Matrix Analytic Methods in Stochastic Modeling. Philadelphia: American Statistical Association and the Society for Industrial and Applied Mathematics, 1999.

M. F. Neuts, Matrix-Geometric Solutions in Stochastic Models. Baltimore: Johns Hopkins University Press, 1981.

R. D. Foley and D. R. McDonald, “Join the shortest queue: stability and exact asymptotics,” The Annals of Applied Probability, vol. 11, pp. 569–607, 2001.

A. A. Puhalskii and A. A. Vladimirov, “A large deviation principle for join the shortest queue,” Mathematics of Operations Research, vol. 32, pp. 700–710, 2007.

M. Miyazawa and Y. Q. Zhao, “The stationary tail asymptotics in the GI/G/1 type queue with countably many background states,” Advances in Applied Probability, vol. 36, pp. 1231–1251, 2004.

E. Seneta, Nonnegative Matrices and Markov Chains, Second Edition. New York: Springer-Verlag, 1981.

R. D. Foley and D. R. McDonald, “Bridges and networks: Exact asymptotics,” The Annals of Applied Probability, vol. 15, pp. 542–586, 2005.

M. S. Bazaraa, H. D. Sherali, and C. M. Shetty, Nonlinear Programming: Theory and Algorithms. New York: Wiley, 1993.

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2009 Springer Science+Business Media, LLC

About this chapter

Cite this chapter

Miyazawa, M. (2009). Two Sided DQBD Process and Solutions to the Tail Decay Rate Problem and Their Applications to the Generalized Join Shortest Queue. In: Yue, W., Takahashi, Y., Takagi, H. (eds) Advances in Queueing Theory and Network Applications. Springer, New York, NY. https://doi.org/10.1007/978-0-387-09703-9_1

Download citation

DOI: https://doi.org/10.1007/978-0-387-09703-9_1

Publisher Name: Springer, New York, NY

Print ISBN: 978-0-387-09702-2

Online ISBN: 978-0-387-09703-9

eBook Packages: EngineeringEngineering (R0)