Abstract

The fractional-order Kalman filtering theory is an extension of the traditional integer-order Kalman filter and can solve the state estimation problem of fractional-order systems. Descriptor fractional-order systems have been widely applied in many fields, such as circuits and sensor fault diagnosis. However, there is little research on the filtering problem for descriptor fractional-order systems. This paper focuses on a fractional-order descriptor system in canonical form. First, a nonsingular linear transformation is applied to transform the descriptor fractional-order system into two normal fractional-order subsystems. Then, based on projection theory, a fractional-order Kalman state filter for the subsystems with correlated noise is derived. For multi-sensor descriptor fractional-order systems, the globally optimal weighted measurement fusion algorithm is applied to derive the optimal information fusion fractional-order Kalman filter. Simulation results verify the effectiveness and feasibility of the proposed algorithm.

This work was supported by the National Natural Science Foundation of China (project approval number 61104209) and by the Special Fund for Basic Scientific Research of Provincial Colleges and Universities (project approval number 2020-KYYWF-0098).



Keywords

- Information fusion

- fractional order systems

- fractional order Kalman filtering

- weighted measurement fusion

- optimal filtering

1 Introduction

The Kalman filter is a widely used state estimation method. Since it was proposed in the 1960s, it has received wide attention and application. By recursion, it uses current data to estimate the state at the next time step, or even several steps ahead, and thus solves dynamic estimation problems in the presence of disturbances [1]. The Kalman filter has also been widely applied in engineering, for example in integrated navigation [2,3,4], target tracking [5, 6], and fault diagnosis and detection [7, 8]. Many improved Kalman filtering algorithms have since emerged, extending the method from linear to nonlinear systems: from the original classical Kalman filter to adaptive Kalman filtering for systems with unknown noise statistics, model parameters or other uncertain information [9], self-tuning Kalman filtering [10, 11], and robust Kalman filtering [12, 13]. In recent years, with the development of fractional calculus, the filtering problem for fractional-order systems has attracted increasing attention. State estimation for fractional-order systems is a new direction in the development of estimation theory and also meets practical engineering needs.

Fractional calculus originated with Leibniz and L'Hospital in 1695, and Liouville and Riemann later proposed definitions of the fractional derivative. For a long time, however, it was studied only by mathematicians; not until the late 1960s did fractional calculus develop further, when it was found that fractional derivatives allow more accurate descriptions of systems in modeling and stability analysis. As control theory developed, classical integer-order calculus was no longer sufficient for the needs of production and research, and fractional calculus became increasingly active and important in control systems theory. References [14] and [15] proposed the fractional Kalman filter and the extended fractional Kalman filter, and analyzed specific cases to discuss the application of these algorithms to estimating the parameters and the order of fractional systems; these algorithms have been applied to image processing, signal transmission and other fields [16, 17]. Compared with the traditional Kalman filter, however, their application is still limited. At present, generalized (descriptor) fractional systems are widely used in circuits [18, 19] and sensor fault estimation [20]. The fusion estimation problem for generalized fractional systems discussed in this paper therefore has theoretical significance and potential application value.

In this paper, a fractional-order singular system in canonical form is first transformed into two normal fractional-order subsystems by a nonsingular linear transformation. A fractional-order Kalman state filter for the subsystems with correlated noise is then derived using projection theory. For multi-sensor generalized fractional-order systems, the globally optimal weighted observation fusion algorithm is applied to derive the optimal information fusion fractional-order Kalman filter, and simulation results verify the effectiveness of the proposed algorithm.

2 Problem Formulation

Consider the following linear generalized fractional stochastic system

where \(x(k + 1) \in R^n\) is the state of the system, \(\Phi \in R^{n \times n}\) is the state transition matrix, \(B \in R^{n \times r}\) is the process noise matrix, \(\Delta^\gamma\) is the fractional difference operator, \(\gamma\) is the fractional order, \(H\) is the observation matrix, \(v(k)\) is the observation noise, and \(M, H\) are constant matrices of appropriate dimensions.
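The operator \(\Delta^\gamma\) is not written out in this extraction. Assuming the Grünwald–Letnikov fractional difference used in the discrete fractional Kalman filter of [14], \(\Delta^\gamma x(k) = \sum_{j=0}^{k} (-1)^j \binom{\gamma}{j} x(k-j)\), the weights can be generated by the recursion \(c_0 = 1\), \(c_j = c_{j-1}(1 - (\gamma + 1)/j)\). The Python sketch below (function names are illustrative) computes them and evaluates the difference. For integer \(\gamma\) it reduces to the ordinary backward difference, while for fractional \(\gamma\) every past sample keeps a nonzero weight, which is the long memory a fractional filter must account for.

```python
def gl_coefficients(gamma, n):
    """Return c_j = (-1)^j * binom(gamma, j) for j = 0..n (Grunwald-Letnikov weights)."""
    c = [1.0]
    for j in range(1, n + 1):
        # binom(gamma, j) = binom(gamma, j-1) * (gamma - j + 1) / j, with the sign folded in
        c.append(c[-1] * (1.0 - (gamma + 1.0) / j))
    return c


def gl_difference(x, gamma, k):
    """Evaluate Delta^gamma x(k) = sum_{j=0}^{k} c_j x(k - j) for a sequence x[0..k]."""
    c = gl_coefficients(gamma, k)
    return sum(c[j] * x[k - j] for j in range(k + 1))


if __name__ == "__main__":
    print(gl_coefficients(0.5, 4))               # -> [1.0, -0.5, -0.125, -0.0625, -0.0390625]
    print(gl_difference([0, 1, 4, 9], 1.0, 3))   # order 1: x(3) - x(2) -> 5.0
```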

Assumption 1:

\(M \in R^{n \times n}\) is a singular square matrix, i.e., \({\text{rank}}\, M = n_1 < n\) and \(\det M = 0\).

Assumption 2:

The system is regular, i.e., there exists \(z \in C\) such that \(\det (zM - \Phi ) \ne 0\).
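Assumptions 1 and 2 can be checked mechanically for a given pair \((M, \Phi)\). The sketch below assumes small matrices given as nested Python lists; it computes the rank of \(M\) and tests regularity using the fact that \(\det(zM - \Phi)\) is a polynomial of degree at most \(n\) in \(z\), so it is identically zero exactly when it vanishes at \(n + 1\) distinct points. Exact `Fraction` arithmetic avoids false zeros from rounding. The helper names and the example matrices are hypothetical, not taken from the paper.

```python
from fractions import Fraction


def rank_and_det(a):
    """Return (rank, determinant) of a square matrix via exact Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in a]
    n = len(m)
    rank, det, row = 0, Fraction(1), 0
    for col in range(n):
        # find a nonzero pivot in this column at or below the current row
        pivot = next((r for r in range(row, n) if m[r][col] != 0), None)
        if pivot is None:
            det = Fraction(0)          # a pivot-free column means a singular matrix
            continue
        if pivot != row:
            m[row], m[pivot] = m[pivot], m[row]
            det = -det                 # a row swap flips the sign of the determinant
        det *= m[row][col]
        for r in range(row + 1, n):
            f = m[r][col] / m[row][col]
            m[r] = [x - f * y for x, y in zip(m[r], m[row])]
        row += 1
        rank += 1
    return rank, det


def is_regular(M, Phi):
    """Assumption 2: True if det(zM - Phi) is not identically zero in z.

    det(zM - Phi) is a polynomial of degree <= n, so it is identically zero
    exactly when it vanishes at the n + 1 points z = 0, 1, ..., n."""
    n = len(M)
    for z in range(n + 1):
        pencil = [[z * M[i][j] - Phi[i][j] for j in range(n)] for i in range(n)]
        if rank_and_det(pencil)[1] != 0:
            return True
    return False


if __name__ == "__main__":
    M = [[1, 0], [0, 0]]          # hypothetical singular M (Assumption 1: rank 1 < 2)
    Phi = [[0.5, 0], [0, 1]]      # hypothetical Phi
    print(rank_and_det(M)[0], is_regular(M, Phi))   # -> 1 True
```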

Assumption 3:

\(w(k) \in R^r\) and \(v(k)\) are uncorrelated zero-mean white noises:

where \({\text{E}}\) denotes the expectation and \({\text{T}}\) denotes the transpose.

Assumption 4:

The system is completely observable, so there exists a matrix \(K\) such that:

Under Assumption 4, there exist two nonsingular square matrices \(R, W\) such that [77]

where \(n_1 + n_2 = n\). We introduce the block matrix representation

and the state partition

where \(x_1 (k) \in R^{n_1 }, x_2 (k) \in R^{n_2 }\).

Subtracting \(K\) times Eq. (3) from Eq. (1) gives

Left-multiplying Eq. (9) by \(P\) and using Eqs. (6)–(8), the observable model is derived as follows:

This leads to two reduced-order subsystems:

Substituting Eq. (14) into Eq. (15) yields a subsystem with a different type of local dynamics but the same local state \(x_1 (k)\):

where

For the transformed conventional subsystem (16), adding a term that equals zero to the right-hand side and substituting it into Eq. (20) gives

where \(\Lambda\) is a matrix to be determined, and we set

Then the state equation (21) reduces to

The observation equation remains Eq. (17). From Assumption 3, we have

and hence

and \(Q_\tau\) is the covariance matrix of \(\tau (k)\):

so the undetermined matrix can be chosen as

Therefore \(E\left[ {\phi (k)\tau^T (j)} \right] = 0\), that is, \(\phi (k)\) is uncorrelated with \(\tau (j)\), and the autocovariance matrix of \(\phi (k)\) is readily obtained:

Substituting into Eq. (27) gives

Hence \(\phi (k)\) is zero-mean white noise with variance

which is uncorrelated with the white noise \(\tau (k)\). The generalized fractional filtering problem is to compute the minimum variance estimate \(\hat{x}(k|k)\) of the state \(x(k)\) based on the observations \(y(1), \cdots ,y(k)\) obtained by multiple sensors.

3 Kalman Filter for Single Sensor Generalized Fractional Order System

Theorem 1 For the observable singular system (1)–(3), under Assumptions 1–4, the reduced-order subsystem (17), (24) has the following local recursive fractional Kalman filter for \(x_1 (k)\):

with initial values \(\hat{x}_1 (0|0) = \rho_{01}, P_1 (0|0) = P_{01}\).

Proof:

From [13],

At this point we have

and Eqs. (32) and (33) follow directly.

According to [13], the one-step optimal linear prediction \(\hat{z}(k|k - 1)\) of \(z(k)\) is

from which it follows that

where \(\varepsilon (k) = z(k) - \hat{z}(k|k - 1)\) is the innovation and \(K_1 (k) = E[x_1 (k)\varepsilon^{\rm T} (k)][E(\varepsilon (k)\varepsilon^{\rm T} (k))]^{ - 1}\) is the Kalman filter gain. Define \(\tilde{x}_1 (k|k - 1) = x_1 (k) - \hat{x}_1 (k|k - 1)\); then \(E[x_1 (k)\varepsilon^{\rm T} (k)] = E[(\hat{x}_1 (k|k - 1) + \tilde{x}_1 (k|k - 1))(H_1 \tilde{x}_1 (k|k - 1) + \tau (k))^{\rm T} ]\).

By the orthogonality of projections, \(\hat{x}_1 (k|k - 1) \bot \tilde{x}_1 (k|k - 1)\), \(\tau (k) \bot \hat{x}_1 (k|k - 1)\) and \(\tau (k) \bot \tilde{x}_1 (k|k - 1)\), so

Similarly, we obtain

where \(P_1 (k|k - 1) = E[(x_1 (k) - \hat{x}_1 (k|k - 1))(x_1 (k) - \hat{x}_1 (k|k - 1))^{\rm T} ]\) is the prediction error variance matrix. From (40), it follows that

\(K_1 (k) = P_1 (k|k - 1)H_1^{\rm T} [H_1 P_1 (k|k - 1)H_1^{\rm T} + Q_\tau ]^{ - 1}\) is the gain matrix of the fractional Kalman filter, from which formulas (31) and (36) are obtained.

where \(E[(x_1 (m) - \hat{x}_1 (m|m - 1))(x_1 (n) - \hat{x}_1 (n|n - 1))^{\rm T} ] = 0\) for \(m \ne n\). It follows that

where \(Q_\phi\) is the autocovariance matrix of \(\phi (k)\) given by Eq. (28), and the result follows from Eq. (34).
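The gain derivation rests on the orthogonality relations \(\hat{x}_1 \bot \tilde{x}_1\), \(\tau \bot \hat{x}_1\) and \(\tau \bot \tilde{x}_1\). A scalar Monte Carlo sketch makes this concrete: sampling mutually independent \(\hat{x}_1\), \(\tilde{x}_1\) with variance \(P_1(k|k-1)\), and \(\tau\) with variance \(Q_\tau\), the projection formula \(E[x_1\varepsilon]/E[\varepsilon^2]\) should approach \(P_1 H_1/(H_1 P_1 H_1 + Q_\tau)\). Gaussian sampling and the function names are assumptions made only for this illustration.

```python
import random


def gain_closed_form(p_pred, h, q_tau):
    """K_1 = P_1 H_1^T (H_1 P_1 H_1^T + Q_tau)^{-1}, scalar case."""
    return p_pred * h / (h * p_pred * h + q_tau)


def gain_by_projection(p_pred, h, q_tau, n=100_000, seed=0):
    """Sample estimate of K_1 = E[x_1 eps] / E[eps^2] with x_1 = x_hat + x_tilde and
    eps = h * x_tilde + tau, where x_hat, x_tilde, tau are mutually orthogonal."""
    rng = random.Random(seed)
    s_xe = s_ee = 0.0
    for _ in range(n):
        x_hat = rng.gauss(0.0, 1.0)                # predictor; its spread is arbitrary
        x_tilde = rng.gauss(0.0, p_pred ** 0.5)    # prediction error, variance P_1(k|k-1)
        tau = rng.gauss(0.0, q_tau ** 0.5)         # observation noise, variance Q_tau
        eps = h * x_tilde + tau                    # innovation
        s_xe += (x_hat + x_tilde) * eps
        s_ee += eps * eps
    return s_xe / s_ee


if __name__ == "__main__":
    print(gain_closed_form(1.0, 1.0, 1.0))    # -> 0.5
    print(gain_by_projection(1.0, 1.0, 1.0))  # close to 0.5
```

The predictor term contributes nothing on average because \(\hat{x}_1\) is orthogonal to the innovation, which is exactly why only \(P_1(k|k-1)\) appears in the gain.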

From Eqs. (17) and (31), it follows that

Theorem 2:

Fractional subsystem 2 has a local recursive fractional Kalman filter obtained from Eqs. (14) and (15).

Proof:

The result follows directly by applying Theorem 1 together with Eqs. (14) and (15).
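Since the recursions (31)–(36) are not reproduced in this extraction, the following is a hedged scalar sketch of a fractional Kalman filter in the form of [14], applied to an assumed model \(\Delta^\gamma x(k+1) = a x(k) + w(k)\), \(z(k) = h x(k) + \tau(k)\) with Grünwald–Letnikov weights \(c_j\). As in the text, estimation errors at different times are treated as uncorrelated, which yields the extra memory terms \(\sum_{j \ge 2} c_j^2 P(k+1-j|k+1-j)\) in the predicted variance. It is not the authors' implementation; the model coefficients and function names are illustrative.

```python
import random


def gl_coefficients(gamma, n):
    """c_j = (-1)^j * binom(gamma, j), j = 0..n (Grunwald-Letnikov weights)."""
    c = [1.0]
    for j in range(1, n + 1):
        c.append(c[-1] * (1.0 - (gamma + 1.0) / j))
    return c


def simulate(n, a, h, gamma, q_w, q_tau, seed=0):
    """Simulate Delta^gamma x(k+1) = a x(k) + w(k), z(k+1) = h x(k+1) + tau(k+1), x(0) = 0."""
    rng = random.Random(seed)
    c = gl_coefficients(gamma, n + 1)
    x, z = [0.0], []
    for k in range(n):
        nxt = a * x[k] + rng.gauss(0.0, q_w ** 0.5)
        for j in range(1, k + 2):          # move the memory terms to the right-hand side
            nxt -= c[j] * x[k + 1 - j]
        x.append(nxt)
        z.append(h * nxt + rng.gauss(0.0, q_tau ** 0.5))
    return x, z


def fractional_kf(z, a, h, gamma, q_w, q_tau, x0, p0):
    """Scalar fractional Kalman filter; z[k] is the measurement of x(k+1).
    Returns filtered estimates x_hat(k|k) and variances P(k|k) for k = 0..len(z)."""
    c = gl_coefficients(gamma, len(z) + 1)
    xs, ps = [x0], [p0]
    for k in range(len(z)):
        # Prediction: c_1 = -gamma, so the j = 1 memory term merges into (a + gamma).
        x_pred = (a + gamma) * xs[k]
        p_pred = (a + gamma) ** 2 * ps[k] + q_w
        for j in range(2, k + 2):
            x_pred -= c[j] * xs[k + 1 - j]
            p_pred += c[j] ** 2 * ps[k + 1 - j]   # past errors treated as uncorrelated
        # Measurement update with gain K = P h / (h P h + Q_tau).
        gain = p_pred * h / (h * p_pred * h + q_tau)
        xs.append(x_pred + gain * (z[k] - h * x_pred))
        ps.append((1.0 - gain * h) * p_pred)
    return xs, ps


if __name__ == "__main__":
    x, z = simulate(100, -0.2, 1.0, 0.7, 0.01, 0.02, seed=1)
    xs, ps = fractional_kf(z, -0.2, 1.0, 0.7, 0.01, 0.02, 0.0, 1.0)
    rmse = (sum((xt - xe) ** 2 for xt, xe in zip(x[1:], xs[1:])) / 100) ** 0.5
    print(f"RMSE = {rmse:.4f}, final P = {ps[-1]:.4f}")
```

With \(\gamma = 1\) all weights \(c_j\), \(j \ge 2\), vanish and the recursion collapses to the ordinary Kalman filter for \(x(k+1) = (a + 1)x(k) + w(k)\), a convenient sanity check. The memory sums make the cost of each step grow linearly with \(k\); practical implementations often truncate them to a fixed window, which is an additional approximation.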

4 Observational Fusion Kalman Filter for Generalized Fractional-Order Systems

Observation fusion for the generalized fractional-order system is carried out on the normalized subsystem, so we consider the following normalized subsystem of the multi-sensor generalized fractional-order system:

where \(x_i (k) \in R^n\) is the state, \(z_i (k) \in R^m\) is the observation of the \(i\)th sensor, \(\tau_i (k) \in R^{m_i }\) is the observation noise, \(\phi_i (k) \in R^r\) is the process white noise, \(\Phi_1\), \(\overline{K}_2\) and \(\overline{H}\) are known constant matrices of appropriate dimensions, the observation matrices \(\overline{H}_i\) share the common \(m \times n\) right factor \(\overline{H}\), and

Assumption 5:

\(\phi_i (k) \in R^r\) and \(\tau_i (k) \in R^{m_i }\) are mutually independent zero-mean white noises with variance matrices \(Q_{\phi i}\) and \(Q_{\tau_i }\), respectively, and

where \({\text{E}}\) denotes the mean, \({\text{T}}\) denotes the transpose, and \(\delta\) is the Kronecker delta: \(\delta_{tt} = 1\), \(\delta_{tk} = 0 \, (t \ne k)\).

Assumption 6:

\((\Phi_1, H_{1i} )\) is a completely observable pair.

Assumption 7:

The matrix \(\sum_{i = 1}^L {[G_i^{\text{T}} R_{\xi i}^{ - 1} G_i ]^{ - 1} }\) is invertible.

From Eqs. (50)–(54), the centralized fusion observation equation is

The fused observation noise \(\tau_0 (k)\) has a variance matrix

Equation (55) can be regarded as an observation model for \(H_1 x_1 (k)\), so the weighted least squares (WLS) method can be applied to estimate \(H_1 x_1 (k)\) as

Substituting Eq. (60) into Eq. (55) gives the weighted observation fusion equation

with fused observation noise

which has the minimum error variance matrix
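In the scalar case the WLS step reduces to inverse-variance weighting. The sketch below assumes each sensor observes the common quantity \(y = H_1 x_1(k)\) through a known scalar factor \(m_i\) (the scalar analogue of the common right factor \(\overline{H}\)) with independent noise variance \(q_i\); the weights are \(m_i/q_i\) and the fused noise variance is \((\sum_i m_i^2/q_i)^{-1}\). The function name is hypothetical. With the local variances used in Section 5, \(0.02\), \(0.1\) and \(1\), the fused variance is \(1/61 \approx 0.0164\), smaller than that of the best single sensor.

```python
def weighted_measurement_fusion(z, m, q):
    """Inverse-variance (WLS) fusion of scalar observations z_i = m_i * y + tau_i,
    Var(tau_i) = q_i, into a single observation of the common quantity y.

    Returns (y_fused, q_fused) with
        y_fused = sum(m_i z_i / q_i) / sum(m_i^2 / q_i),
        q_fused = 1 / sum(m_i^2 / q_i)."""
    info = sum(mi * mi / qi for mi, qi in zip(m, q))
    if info <= 0.0:
        raise ValueError("sum of m_i^2 / q_i must be positive (scalar invertibility condition)")
    y_fused = sum(mi * zi / qi for zi, mi, qi in zip(z, m, q)) / info
    return y_fused, 1.0 / info


if __name__ == "__main__":
    q_local = [0.02, 0.1, 1.0]     # local noise variances used in Section 5
    y, q0 = weighted_measurement_fusion([1.02, 0.95, 1.40], [1.0, 1.0, 1.0], q_local)
    print(y, q0)                   # q0 = 1/61, below the best local variance 0.02
```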

5 Simulation Study

Generalized fractional systems have important applications in circuits [18, 19] and sensor fault estimation [20]. Here, a generalized fractional-order circuit system in canonical form is considered.

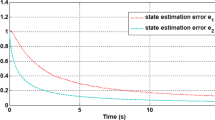

where \(w(k)\) and \(v(k)\) are uncorrelated zero-mean white noises with variances \(Q = 0.01\) and \(Q_v = 0.02\), respectively, and \(n_1 = 0.01\). The problem is to find a generalized fractional Kalman filter \(\hat{x}(k|k) = [\hat{x}_1 (k|k),\hat{x}_2 (k|k)]^{\rm T}\) for the state \(x(k)\). The simulation results are shown in Fig. 1.

The model above is simulated and analyzed according to Theorem 1. Figs. 1 and 2 show the true states and the estimates of subsystem 1 and subsystem 2. The estimates closely track the true states, comparable to the performance of Model II, and the error is mostly within 0.1, which shows that the generalized fractional-order filtering algorithm is feasible. The performance can be further improved by tuning the parameters. Weighted observation fusion was then applied to the same model and simulated. Based on (64) and (65), the local observation noise variances were taken as \(Q_{v1} = 0.02\), \(Q_{v2} = 0.1\) and \(Q_{v3} = 1\). Fig. 3 shows the true state and the observation fusion estimate for subsystem 1 after normalization; compared with the local estimate in Fig. 1, the difference is evident.

To further compare the estimation accuracy of the fusion estimators, on the basis of (63) and (64), the local observation noise variances are taken as \(Q_{v1} = 0.02\), \(Q_{v2} = 0.1\) and \(Q_{v3} = 1\). Fig. 4 compares the mean square errors of the fusion estimates obtained from a 100-step Monte Carlo simulation. MSEm, MSCI123, MSEguance and MSEjizhong denote the mean square error curves of suboptimal weighted state fusion, SCI fusion, weighted observation fusion and centralized fusion, respectively. At \(k = 60\),

As the figure shows, the actual estimation accuracy of SCI fusion is similar to that of distributed suboptimal state fusion but lower than that of weighted observation fusion. The estimation accuracy of weighted observation fusion is the same as that of centralized fusion, so it is a globally optimal weighted fusion algorithm.
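The equivalence between weighted observation fusion and centralized fusion can be checked for a single measurement update. In the scalar sketch below (an illustration, not the paper's simulation), three sensors observe \(m_i \bar{h} x\) with independent noises; the centralized filter processes the stacked measurements in information form, while the fused filter first applies weighted measurement fusion and then performs one Kalman update. Both give the same posterior mean and variance up to rounding, because \(\bar{h}^2/q_0 = \sum_i (m_i \bar{h})^2/q_i\). The factors \(m_i\), \(\bar{h}\) and the function names are hypothetical.

```python
import random


def centralized_update(x_prior, p_prior, z, h, q):
    """Centralized update with all scalar measurements z_i = h_i x + tau_i stacked,
    written in information form (valid because the tau_i are independent)."""
    info = 1.0 / p_prior + sum(hi * hi / qi for hi, qi in zip(h, q))
    p_post = 1.0 / info
    x_post = p_post * (x_prior / p_prior + sum(hi * zi / qi for hi, zi, qi in zip(h, z, q)))
    return x_post, p_post


def fused_update(x_prior, p_prior, z, m, h_bar, q):
    """Weighted measurement fusion first (h_i = m_i * h_bar), then one Kalman update."""
    info_m = sum(mi * mi / qi for mi, qi in zip(m, q))
    y = sum(mi * zi / qi for mi, zi, qi in zip(m, z, q)) / info_m   # fused observation
    q0 = 1.0 / info_m                                               # fused noise variance
    gain = p_prior * h_bar / (h_bar * p_prior * h_bar + q0)
    return x_prior + gain * (y - h_bar * x_prior), (1.0 - gain * h_bar) * p_prior


if __name__ == "__main__":
    rng = random.Random(0)
    q = [0.02, 0.1, 1.0]
    m, h_bar = [1.0, 0.8, 1.5], 0.9          # hypothetical factors
    z = [rng.gauss(0.0, 1.0) for _ in q]
    print(centralized_update(0.3, 0.5, z, [mi * h_bar for mi in m], q))
    print(fused_update(0.3, 0.5, z, m, h_bar, q))   # same values up to rounding
```

For vector states the fused filter handles one observation of the dimension of \(\overline{H}x\) instead of the stacked observation of all sensors, which is where the computational saving comes from.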

Note 1 Based on the weighted fusion estimate of subsystem 1, the corresponding state estimator of subsystem 2 can be obtained from Theorem 2. Because the fused state estimate of subsystem 1 is optimal, the corresponding state estimator of subsystem 2 has the same optimality.

6 Conclusions

This paper proposes a fractional Kalman state filter for fractional descriptor systems. For multi-sensor generalized fractional-order systems, the globally optimal weighted observation fusion algorithm is applied to derive the optimal information fusion fractional-order Kalman filter. The proposed algorithm is globally optimal and has the same estimation accuracy as the centralized fusion algorithm, but with greatly reduced computational complexity, which makes it convenient for engineering applications. A simulation example demonstrates the effectiveness and feasibility of the method.

References

1. Kalman, R.E.: A new approach to linear filtering and prediction problems. J. Basic Eng. 82(1), 35–45 (1960)

2. Cao, J., Yu, C., Xia, Y., Ji, X.: Clock synchronous GNSS/SINS tightly combined adaptive filtering algorithm. In: Proceedings of the 8th China Annual Conference on Satellite Navigation, S10 Multi-source Fusion Navigation Technology, pp. 109–113. Shanghai (2017)

3. Wang, W., Cong, N., Wu, J.: Design of robust GPS/INS integrated navigation filtering algorithm. J. Harbin Eng. Univ. 42(02), 240–245 (2021)

4. Hong, Z.: Research on improved algorithm of unscented Kalman filter and its application in GPS/INS integrated navigation, pp. 45–58. East China University of Technology (2019)

5. Chi, J.N., Qian, C., Zhang, P., et al.: A novel ELM based adaptive Kalman filter tracking algorithm. Neurocomputing 128, 42–49 (2014)

6. Chen, X., Fan, Y., Ma, Z.: Kalman filter tracking algorithm simulation based on angle expansion. In: 2021 4th International Conference on Algorithms, Computing and Artificial Intelligence, pp. 1–5. Sanya (2021)

7. Zhao, H.: Fault diagnosis of multi-sensor integrated navigation based on federated Kalman filter, pp. 31–44. Beijing Institute of Technology (2017)

8. Du, Z., Li, X., Zhen, Z., Mao, Q.: Fault prediction based on fusion of strong tracking square-root cubature Kalman filter and autoregressive model. Control Theory Appl. 31(08), 1047–1052 (2014)

9. Mehra, R.K.: On the identification of variances and adaptive Kalman filtering. IEEE Trans. Autom. Control 15(2), 175–184 (1970)

10. Deng, Z.L., Gao, Y., Li, C.B., et al.: Self-tuning decoupled information fusion Wiener state component filters and their convergence. Automatica 44(3), 685–695 (2008)

11. Ran, C.J., Tao, G.L., Liu, J.F., et al.: Self-tuning decoupled fusion Kalman predictor and its convergence analysis. IEEE Sens. J. 9(12), 2024–2032 (2009)

12. Yu, H., Jing, Z., Lv, J.: Robust Kalman filtering algorithm based on outlier detection. In: 11th National Conference on Signal and Intelligent Information Processing and Applications, pp. 44–48. Zhanjiang (2017)

13. Deng, Z.: Theory and application of information fusion estimation, pp. 463–479. Science Press, Beijing (2012)

14. Sierociuk, D., Dzieliński, A.: Fractional Kalman filter algorithm for the states, parameters and order of fractional system estimation. Int. J. Appl. Math. Comput. Sci. 16(1), 129–140 (2006)

15. Dzieliński, A., Sierociuk, D.: Adaptive feedback control of fractional order discrete state-space system. In: International Conference on Intelligent Agents, Web Technologies and Internet Commerce, vol. 1, pp. 804–809. Vienna, Austria (2005)

16. Chen, D., Bufa, H.: Application of adaptive fractional differential in facial image processing. Mech. Manufac. Autom. 46(06), 137–141 (2017)

17. Wang, T.: Application of Kalman filter in image processing. Sci. Technol. Inform. (Acad. Ed.) 032, 575–576 (2008)

18. Kaczorek, T.: Descriptor fractional linear systems with regular pencils. Asian J. Control 15(4), 1051–1064 (2013)

19. Kaczorek, T., Ruszewski, A.: Application of the Drazin inverse to the analysis of pointwise completeness and pointwise degeneracy of descriptor fractional linear continuous-time systems. Int. J. Appl. Math. Comput. Sci. 30(2), 219–223 (2020)

20. Jmal, A., Naifar, O., Makhlouf, A.B., Derbel, N., Hammami, M.A.: Sensor fault estimation for fractional-order descriptor one-sided Lipschitz systems. Nonlinear Dyn. 91(3), 1713–1722 (2018). https://doi.org/10.1007/s11071-017-3976-1


© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Liang, X., Yan, G., Zhu, Y., Li, T., Sun, X. (2024). Optimal Information Fusion Descriptor Fractional Order Kalman Filter. In: Xin, B., Kubota, N., Chen, K., Dong, F. (eds) Advanced Computational Intelligence and Intelligent Informatics. IWACIII 2023. Communications in Computer and Information Science, vol 1931. Springer, Singapore. https://doi.org/10.1007/978-981-99-7590-7_3


DOI: https://doi.org/10.1007/978-981-99-7590-7_3


Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7589-1

Online ISBN: 978-981-99-7590-7

eBook Packages: Computer Science, Computer Science (R0)