Abstract

The hypervolume indicator is commonly used in indicator-based evolutionary algorithms because it strictly adheres to the Pareto dominance relation. However, its high computational cost in high-dimensional objective spaces limits its wider adoption. In this paper, we propose a fast and efficient method for approximating the overall hypervolume contribution to overcome this challenge. We then integrate this method into a basic evolutionary computation framework, yielding an algorithm for solving many-objective optimization problems. To evaluate its performance, we compared the proposed algorithm with six state-of-the-art algorithms on the WFG and DTLZ test problems with 3, 5, 10, and 15 objectives. The results demonstrate that the proposed method is highly competitive in most cases.

This work was partially funded by the National Key Research and Development Plan of China (No. 2018AAA0101000) and the National Natural Science Foundation of China under grant 62076028.


1 Introduction

The purpose of optimization is to find the optimal value of a function. The problem becomes more complicated when several objectives must be optimized simultaneously; such problems are called multi-objective optimization problems (MOPs). For MOPs, the ultimate goal is to approximate the Pareto front (PF) of the problem, whose solutions are mutually non-dominated. MOPs with more than three objectives are generally called many-objective optimization problems (MaOPs) [8]. Evolutionary multi-objective optimization algorithms (EMOAs) have achieved good results in solving MaOPs [12]. According to their selection mechanisms, EMOAs can be divided into three categories: 1) Pareto-based, 2) decomposition-based, and 3) indicator-based.

Pareto-based EMOAs use the Pareto dominance relation, together with a secondary density measure, to assign individual fitness for environmental selection. For example, NSGA-II [5] computes a crowding distance from the distances between neighboring individuals in the objective space, and SPEA2 [21] uses the distance to the k-th nearest neighbor. Because these calculations are simple, Pareto-based EMOAs work well in low-dimensional objective spaces. However, they are not very effective on MaOPs. As the number of objectives increases, the proportion of non-dominated individuals in the population also increases, so Pareto-based environmental selection loses selection pressure and becomes extremely inefficient.

Decomposition-based EMOAs decompose an MOP into several scalar optimization subproblems and optimize them simultaneously in a collaborative manner. For example, MOEA/D [19] uses the weighted sum, Tchebycheff, and penalty-based boundary intersection (PBI) approaches to decompose an MOP. The convergence of the population is then judged by the resulting scalar functions. However, decomposition-based EMOAs depend strongly on the weight vectors. When the Pareto front has an irregular shape, running the algorithm with fixed weight vectors causes the population to be unevenly distributed on the front [11]. Therefore, adjusting the weight vectors during the run remains an active research topic for decomposition-based EMOAs [1, 15].

Indicator-based EMOAs use performance indicators as the criteria for environmental selection, so that a single indicator value reflects both the convergence and the diversity of the population. For example, IGD [4] evaluates convergence and diversity together. It averages, over points on the true Pareto front, the minimum distance from each point to the solution set obtained by the algorithm. Hypervolume [22] evaluates population quality by the Lebesgue measure of the region that is dominated by the population and bounded by a reference point. Generally speaking, indicator parameters strongly affect the indicator value; examples are the reference set in IGD and the reference point of hypervolume. With reasonably chosen parameters, indicator-based EMOAs can achieve better performance.

Among the many population evaluation indicators, hypervolume is adopted by most indicator-based EMOAs (for example, SMS-EMOA [3, 7], MOPSOhv [9], and HypE [2]) because it is strictly Pareto compliant. The main idea of hypervolume-based EMOAs is to transform an MOP into a single-objective problem of maximizing the hypervolume of the population. For hypervolume-based environmental selection, most algorithms use the following steps:

- First, the hypervolume contribution of each solution in the solution set is computed.
- Then, the solution with the smallest hypervolume contribution is discarded.
- Finally, check whether the solution set has reached the required size; if not, return to the first step.
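The greedy procedure in the list above can be sketched in Python for the two-objective minimization case, where exact contributions can be read directly off the sorted front. This is only an illustration under stated assumptions: two objectives, all points mutually non-dominated, and every point strictly better than the reference point. The function names are hypothetical, and this contribution routine is not the one used in the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def exclusive_contributions_2d(points: List[Point], ref: Point) -> List[float]:
    """Exact hypervolume contributions of a 2-D non-dominated set (minimization).

    Returns the contributions in the order of the input list.
    """
    # Ascending first objective implies descending second objective on a front.
    order = sorted(range(len(points)), key=lambda i: points[i][0])
    contrib = [0.0] * len(points)
    for rank, idx in enumerate(order):
        f1, f2 = points[idx]
        next_f1 = points[order[rank + 1]][0] if rank + 1 < len(order) else ref[0]
        prev_f2 = points[order[rank - 1]][1] if rank > 0 else ref[1]
        # The exclusive region is the box between this point and its two neighbours.
        contrib[idx] = (next_f1 - f1) * (prev_f2 - f2)
    return contrib


def greedy_reduce(points: List[Point], ref: Point, keep: int) -> List[Point]:
    """Discard the point with the smallest contribution until `keep` points remain."""
    current = list(points)
    while len(current) > keep:
        contrib = exclusive_contributions_2d(current, ref)  # recomputed every iteration
        worst = min(range(len(current)), key=contrib.__getitem__)
        current.pop(worst)
    return current
```

Each iteration recomputes every contribution. This repeated work is what the selection mechanism proposed in this paper is designed to avoid.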

This procedure is a greedy algorithm. For a solution set of size 2N, retaining N solutions through environmental selection requires computing the hypervolume contributions of the solution set N times. A variety of hypervolume approximation methods have been proposed (such as IWFG [15] and R2HCA [16]). However, each environmental selection still needs to run the approximation algorithm many times, which makes the algorithm time-consuming. To solve this problem, this paper makes three contributions. First, it designs an environmental selection mechanism that avoids repeated calculations by selecting solutions according to their overall hypervolume contributions. Second, it designs a new method to approximate the overall hypervolume contribution. Third, it integrates this method into an EMOA framework. In the experiments, we compare our method with two hypervolume-based EMOAs and four advanced EMOAs on the DTLZ and WFG test suites with 3, 5, 8, 10, and 15 objectives.

The rest of this article is organized as follows. In Sect. 2, we briefly review some basic concepts. The details of the proposed algorithm are described in Sect. 3. In Sect. 4, the experimental design is described. In Sect. 5, the experimental results are presented and analyzed. We conclude this paper in Sect. 6.

2 Preliminary Concepts

2.1 Hypervolume

Consider a non-dominated point set S in the objective space and a reference point r that is dominated by every point in S. Assume that no two points coincide. For a minimization problem, the hypervolume of this solution set is then computed as

\[HV(S,r) = \mathcal {L}\Big (\bigcup _{s\in S}\{x \mid s\preceq x\preceq r\}\Big ),\]

where \(\mathcal {L}(\dots )\) is the Lebesgue measure of a set. Another important concept is the hypervolume contribution, which reflects how much each solution contributes to the entire solution set. The hypervolume contribution of solution s in solution set S is expressed as

\[HVC(s,S) = HV(S,r) - HV(S\setminus \{s\},r).\]

The last concept is the overall hypervolume contribution. The ordinary hypervolume contribution considers only the region dominated exclusively by the current solution. It ignores the regions that the solution dominates jointly with other solutions. The overall hypervolume contribution takes these shared regions into account and is expressed as follows.

where \(worse(\dots )\) returns the point formed by the worst value in each dimension of a set of objective vectors. This is the component-wise maximum for a minimization problem and the component-wise minimum for a maximization problem. Figure 1 illustrates the geometric interpretation of the three concepts.
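For small examples, the three quantities can be computed exactly. The objective space is cut into the boxes of the grid formed by all point coordinates and the reference point, and each box is dominated by a fixed subset of points. The Python sketch below does this for a minimization problem. The overall-contribution formula is not reproduced in this text, so the sketch assumes a HypE-style definition in which each box is shared equally among the i points that dominate it (weight 1/i). The function names are hypothetical. The grid enumeration is exponential in the number of objectives, so the sketch is only meant for checking small cases.

```python
import itertools
from typing import List, Sequence, Tuple


def weakly_dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective (minimization)."""
    return all(x <= y for x, y in zip(a, b))


def hv_concepts(
    points: List[Sequence[float]], ref: Sequence[float]
) -> Tuple[float, List[float], List[float]]:
    """Exact hypervolume, exclusive contributions and HypE-style shared contributions.

    Assumes every point weakly dominates `ref`.
    """
    m = len(ref)
    # Grid lines per objective: every point coordinate plus the reference point.
    axes = [sorted({p[j] for p in points} | {ref[j]}) for j in range(m)]
    hv = 0.0
    exclusive = [0.0] * len(points)
    overall = [0.0] * len(points)
    for cell in itertools.product(*(range(len(ax) - 1) for ax in axes)):
        lower = [axes[j][cell[j]] for j in range(m)]
        volume = 1.0
        for j in range(m):
            volume *= axes[j][cell[j] + 1] - axes[j][cell[j]]
        # A box is dominated by p exactly when p weakly dominates its lower corner.
        owners = [i for i, p in enumerate(points) if weakly_dominates(p, lower)]
        if not owners:
            continue
        hv += volume
        for i in owners:
            overall[i] += volume / len(owners)  # equal sharing, weight 1/i
        if len(owners) == 1:
            exclusive[owners[0]] += volume
    return hv, exclusive, overall
```

Take the two points (0.2, 0.6) and (0.6, 0.2) with r = (1, 1). Each point exclusively dominates an area of 0.16, and the two share a box of 0.16. Each point therefore receives an overall contribution of 0.24, and the overall contributions sum to the hypervolume of 0.48.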

2.2 R2-Based Hypervolume Contribution Approximation

The basic idea of the R2-based hypervolume contribution approximation (\(R^{HCA}_2\)) method [16] is to estimate a solution's hypervolume contribution from the lengths of line segments that lie in its exclusively dominated region. The method approximates the hypervolume contribution directly, using every direction vector to probe the contribution region. Compared with Monte Carlo sampling, \(R^{HCA}_2\) further reduces the time complexity of the calculation and significantly improves estimation accuracy. It has been reported that the computation time of \(R^{HCA}_2\) increases only linearly with the number of objectives. For a fixed number of direction vectors, its estimation accuracy also decreases only slowly as the number of objectives increases. However, the current \(R^{HCA}_2\) estimates only the exclusive hypervolume contribution of each solution. This is adequate for \((\mu + 1)\) EMOAs. In \((\mu + \mu ')\) EMOAs, however, it may cause environmental selection to eliminate subsets of solutions that jointly contribute a large hypervolume.
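The following Python sketch shows the \(R^{HCA}_2\) estimator for a minimization problem. A segment from s along each direction \(\lambda \) ends at whichever comes first: the reference-point boundary at \(\min _j (r_j - s_j)/\lambda _j\), or the first dominated region of another point a at \(\max _j (a_j - s_j)/\lambda _j\). The mean of the M-th powers of the segment lengths is then scaled by the volume of the positive orthant of the unit ball, \(\pi ^{M/2}/(2^M\Gamma (M/2+1))\). The sketch assumes directions drawn uniformly on the positive orthant of the unit sphere, generated as normalized absolute Gaussian vectors. The reference implementation in [16] may generate its vectors differently, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def unit_directions(m: int, count: int, seed: int = 0) -> List[List[float]]:
    """Unit vectors uniformly distributed on the positive orthant of the sphere.

    Normalized absolute values of standard Gaussian vectors have this distribution.
    """
    rng = random.Random(seed)
    dirs = []
    for _ in range(count):
        v = [max(abs(rng.gauss(0.0, 1.0)), 1e-12) for _ in range(m)]  # avoid zero components
        norm = math.sqrt(sum(x * x for x in v))
        dirs.append([x / norm for x in v])
    return dirs


def r2hca_exclusive(
    s: Sequence[float],
    others: List[Sequence[float]],
    ref: Sequence[float],
    dirs: List[List[float]],
) -> float:
    """Estimate the exclusive hypervolume contribution of s (minimization)."""
    m = len(s)
    total = 0.0
    for lam in dirs:
        # Segment length until the ray leaves the box bounded by ref.
        length = min((ref[j] - s[j]) / lam[j] for j in range(m))
        # Shorten it to the first entry into the region dominated by another point.
        for a in others:
            length = min(length, max((a[j] - s[j]) / lam[j] for j in range(m)))
        total += length ** m
    # Volume of the positive orthant of the unit m-ball.
    c_m = math.pi ** (m / 2) / (2 ** m * math.gamma(m / 2 + 1))
    return c_m * total / len(dirs)
```

With 20,000 directions, the estimate for a single point (0.5, 0.5) and r = (1, 1) is close to the exact value of 0.25.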

3 Proposed Method

3.1 General Framework

The general framework of the proposed method is largely the same as that of HypE. Producing only one offspring per generation increases the probability of premature convergence to a local optimum, so we adopt the \((\mu +\mu ')\) evolutionary strategy. Algorithm 1 gives the pseudo-code of the overall framework of the proposed method. In each generation, as many offspring as parents are produced, and the parents and offspring are merged into one population. Environmental selection then reduces the merged population to the prescribed size; its steps are described in Sect. 3.2. To generate offspring, the proposed method randomly selects two individuals and applies simulated binary crossover and polynomial mutation to produce two offspring. These steps are repeated until N offspring have been produced.
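The offspring-generation step can be sketched minimally in Python. The sketch assumes the textbook forms of simulated binary crossover and polynomial mutation, with distribution index 20 and mutation probability 1/n. These parameter values, the simple clipping to bounds, and the function names are assumptions, not settings reported in the paper.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def sbx_pair(p1: Sequence[float], p2: Sequence[float], lb, ub, rng: random.Random,
             eta: float = 20.0) -> Tuple[Vector, Vector]:
    """Simulated binary crossover in its basic form, with children clipped to bounds."""
    c1, c2 = [], []
    for x1, x2, lo, hi in zip(p1, p2, lb, ub):
        u = rng.random()
        if u <= 0.5:
            beta = (2.0 * u) ** (1.0 / (eta + 1.0))
        else:
            beta = (1.0 / (2.0 * (1.0 - u))) ** (1.0 / (eta + 1.0))
        y1 = 0.5 * ((1.0 + beta) * x1 + (1.0 - beta) * x2)
        y2 = 0.5 * ((1.0 - beta) * x1 + (1.0 + beta) * x2)
        c1.append(min(max(y1, lo), hi))
        c2.append(min(max(y2, lo), hi))
    return c1, c2


def polynomial_mutation(x: Sequence[float], lb, ub, rng: random.Random,
                        eta: float = 20.0) -> Vector:
    """Basic polynomial mutation; each variable mutates with probability 1/n."""
    y = list(x)
    prob = 1.0 / len(y)
    for j in range(len(y)):
        if rng.random() < prob:
            u = rng.random()
            if u < 0.5:
                delta = (2.0 * u) ** (1.0 / (eta + 1.0)) - 1.0
            else:
                delta = 1.0 - (2.0 * (1.0 - u)) ** (1.0 / (eta + 1.0))
            y[j] = min(max(y[j] + delta * (ub[j] - lb[j]), lb[j]), ub[j])
    return y


def generate_offspring(population: List[Vector], n: int, lb, ub, seed: int = 0) -> List[Vector]:
    """Pick two random parents, cross and mutate them, and repeat until n children exist."""
    rng = random.Random(seed)
    offspring: List[Vector] = []
    while len(offspring) < n:
        p1, p2 = rng.sample(population, 2)
        for child in sbx_pair(p1, p2, lb, ub, rng):
            offspring.append(polynomial_mutation(child, lb, ub, rng))
    return offspring[:n]  # drop the surplus child when n is odd
```

In the \((\mu +\mu ')\) loop, the N offspring are concatenated with the N parents. Environmental selection then reduces the 2N individuals back to N.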

3.2 Environmental Selection

The environmental selection mechanism of the proposed method differs from that of other hypervolume-based EMOAs. First, non-dominated sorting is performed on the solution set, and the number of solutions to be removed, DelNum, is determined (lines 1-2). Then the rank of the last front is checked. If it exceeds 1, DelNum solutions of the last rank are randomly selected and deleted with probability selratio (line 4). Otherwise, the DelNum solutions of the last rank with the smallest overall hypervolume contributions are deleted (line 6). Note that a smaller selratio makes the algorithm run faster but weakens its convergence more. In this article, selratio is set to 0.5.
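The branch logic above can be sketched in Python as follows. Several details are assumptions:

- Fronts are added until the critical (last) front is reached, and DelNum solutions are removed from that front.
- selratio is read as the probability of taking the random-deletion branch.
- A simple non-dominated sort stands in for T-ENS.
- The overall hypervolume contribution is passed in as a function, so any estimator can be plugged in.

All names are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Point = Sequence[float]


def dominates(a: Point, b: Point) -> bool:
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated_fronts(points: List[Point]) -> List[List[int]]:
    """Simple (inefficient) non-dominated sorting; returns fronts as index lists."""
    remaining = list(range(len(points)))
    fronts = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(points[j], points[i]) for j in remaining if j != i)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


def environmental_selection(points: List[Point], n: int,
                            overall_contribution: Callable[[List[Point]], List[float]],
                            selratio: float = 0.5, seed: int = 0) -> List[Point]:
    rng = random.Random(seed)
    kept: List[int] = []
    for rank, front in enumerate(nondominated_fronts(points), start=1):
        kept.extend(front)
        if len(kept) >= n:
            break
    last = front          # the critical ("last rank") front
    del_num = len(kept) - n
    if del_num > 0:
        if rank > 1 and rng.random() < selratio:
            removed = set(rng.sample(last, del_num))          # random deletion
        else:
            values = overall_contribution([points[i] for i in last])
            ordered = sorted(range(len(last)), key=values.__getitem__)
            removed = {last[k] for k in ordered[:del_num]}    # smallest contributions
        kept = [i for i in kept if i not in removed]
    return [points[i] for i in kept]
```

All DelNum deletions are made at once from a single evaluation of the contributions, rather than one at a time as in the greedy procedure.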

3.3 Overall Hypervolume Contribution Approximation

3.3.1 R2 DataArray Computation.

To calculate the overall hypervolume contribution, we extend the hypervolume contribution estimation method proposed in [16]. In the extended method, every direction vector is used to estimate both the exclusively dominated region of a solution and the regions it dominates jointly with other solutions.

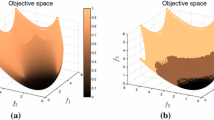

The principle of \(R^{HCA}_2\) is shown in Fig. 2a. First, a randomly generated set of uniformly distributed unit direction vectors is created. To evaluate the hypervolume contribution of a solution, a line segment is drawn from the solution along each vector in the set. Each segment ends at the first point where it enters the region dominated by another solution, or at the boundary of the region bounded by the reference point, whichever comes first. The segment lengths are raised to the power of the number of objectives, averaged, and scaled by a dimension-dependent constant. The resulting value is used as the estimated hypervolume contribution in place of the exact value.

Owing to its mechanism, \(R^{HCA}_2\) can only estimate the exclusively dominated region of each solution. However, \(R^{HCA}_2\) estimates hypervolume from the lengths of line segments, and building on this idea we observed that a direction vector extended further intersects the dominated regions of other solutions in turn. At each intersection, the number of solutions that jointly dominate the segment increases by one. By considering the length of the segment within each region, the hypervolume of regions co-dominated by different numbers of solutions can be estimated. Computing an overall hypervolume contribution for each solution was proposed and implemented in HypE, where it has been shown to improve the diversity of the solution set. \(L(b,\varLambda _1,a)\) denotes the length of the line segment that starts from solution b, moves in the direction of \(\varLambda _1\), and ends where it enters the dominated region of solution a.

The specific principle is shown in Fig. 2b. For solution b, the ray in direction \(\varLambda _1\) first intersects the dominated region of solution a. It then crosses the boundary defined by the reference point r, and finally intersects the dominated region of solution c (not shown in the figure). In the segment marked along \(\varLambda _1\) in the figure, the black part lies in the region dominated by solution b alone. After the first intersection, the red part lies in the region dominated by two solutions simultaneously, and so on. The segment in the direction of \(\varLambda _2\) is a special case. Its first part lies in the region dominated by solution b alone, while its latter part lies in the region dominated by all three solutions. This case can still be handled in the same way. Along \(\varLambda _2\), the segment passes through the point where the dominated regions of solutions a and c meet, i.e., \(L(b,\varLambda _2,a)=L(b,\varLambda _2,c)\), so the two intersection points coincide. After that point, the number of solutions dominating the segment therefore increases by 2. To estimate the regions dominated jointly by multiple solutions, we modify \(R_2^{HCA}\) using the following steps:

- For each direction vector, calculate the distances from the solution whose contribution is being estimated to its intersections with the dominated regions of all other solutions in the solution set.
- For each direction vector, calculate the intersection distance between that solution and the boundary defined by the reference point r.
- Sort the intersection distances of each direction vector in ascending order.

The distance from a solution to an intersection along a direction vector is calculated in the same way as \(g^{*2tch}\) and \(g^{mtch}\) in [16]. For any direction vector \(\varLambda = \{\lambda _1,\lambda _2,...,\lambda _m\}\) with positive components, the \(L(s,\varLambda ,r)\) function is defined as

\[L(s,\varLambda ,r) = \min _{j=1,\dots ,m} \frac{r_j - s_j}{\lambda _j}.\]

For minimization problems, the \(L(a,\varLambda ,s)\) function is defined as

\[L(a,\varLambda ,s) = \max _{j=1,\dots ,m} \frac{s_j - a_j}{\lambda _j}.\]
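The three steps above, with the two distance functions, can be sketched in Python as follows. Each entry of the array covers one solution and one direction vector. It stores the ascending intersection distances to the dominated regions of all other solutions, together with the critical distance to the reference point. Invalid entries are not yet removed at this stage. This is an illustration for minimization, and the names and data layout are hypothetical.

```python
from typing import List, Sequence, Tuple

# One entry per (solution, direction): ascending intersection distances, critical distance.
Entry = Tuple[List[float], float]


def dist_to_ref(s: Sequence[float], lam: Sequence[float], ref: Sequence[float]) -> float:
    """L(s, Lambda, r) = min_j (r_j - s_j) / lambda_j."""
    return min((rj - sj) / lj for sj, rj, lj in zip(s, ref, lam))


def dist_to_region(a: Sequence[float], lam: Sequence[float], s: Sequence[float]) -> float:
    """L(a, Lambda, s) = max_j (s_j - a_j) / lambda_j for minimization.

    Distance from a, along lam, to the region dominated by s.
    """
    return max((sj - aj) / lj for aj, sj, lj in zip(a, s, lam))


def build_r2_array(points: List[Sequence[float]], dirs: List[Sequence[float]],
                   ref: Sequence[float]) -> List[List[Entry]]:
    array = []
    for i, s in enumerate(points):
        row = []
        for lam in dirs:
            # Steps 1 and 3: distances from s to every other solution's region, sorted.
            hits = sorted(dist_to_region(s, lam, a) for j, a in enumerate(points) if j != i)
            # Step 2: distance from s to the boundary defined by the reference point.
            row.append((hits, dist_to_ref(s, lam, ref)))
        array.append(row)
    return array
```

Take s = (0.2, 0.6), a = (0.6, 0.2), r = (1, 1), and \(\varLambda = (0.6, 0.8)\). The intersection with a lies at distance 2/3, beyond the critical distance of 0.5. Entries like this are what the modification step removes.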

DataArray Modification. After the R2 array is initialized, it may still contain invalid data, as shown in Fig. 2b. An obvious example is the intersection of solution a along the \(\Lambda _1\) direction with solution c, which lies outside the region used to compute the hypervolume contribution. This intersection point is invalid.

To determine whether an intersection point is invalid, we compare the length of the segment between the solution and the intersection point with a critical distance. For a solution s and a direction vector \(\Lambda \), the critical distance is the intersection distance between s and the reference point r, i.e., \(L(s,\Lambda ,r)\). If the segment is longer than the critical distance, the intersection point is invalid.

We discard any invalid intersection points and consider only the valid ones, to avoid distorting the hypervolume contribution estimate. The correction process of the R2 array is illustrated in Fig. 3. For solution a, we start with the intersection points along the \(\Lambda _3\) direction and compare their distances with the critical distance in ascending order. We keep the intersection points whose distances are less than the critical distance. The first point that exceeds it is discarded, together with all subsequent points.

The corrected R2 array is shown in Fig. 4. Note that this is a ragged array, as some rows have more columns than others. If a programming environment does not support ragged data structures, the discarded entries can be replaced with INF.
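The correction and the INF padding can be sketched in Python on rows of intersection distances that are already sorted in ascending order. Treating a distance exactly equal to the critical distance as valid is an assumption; it only adds a zero-length segment. The names are hypothetical.

```python
import math
from typing import List


def truncate_row(hits: List[float], critical: float) -> List[float]:
    """Keep the valid intersections of one sorted row.

    The distances are ascending, so the first distance beyond the critical distance
    marks the start of the invalid part; it and all later entries are dropped.
    """
    kept = []
    for d in hits:
        if d > critical:
            break
        kept.append(d)
    return kept


def pad_with_inf(rows: List[List[float]]) -> List[List[float]]:
    """Turn a ragged array into a rectangular one by filling missing entries with INF."""
    width = max((len(r) for r in rows), default=0)
    return [r + [math.inf] * (width - len(r)) for r in rows]
```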

Numerical Approximation. Once the R2 data array is calculated, we can estimate the hypervolume contribution value using the following formula:

Here, \(|\varLambda _i|\) is the total number of vectors in the region dominated by i solutions, and \(l_{ij}\) is the length of the line segment along the j-th vector in the region jointly dominated by i solutions. \(a_i\) is the hypervolume contribution coefficient of the region dominated by i solutions (defined as in HypE), and M is the number of objectives.
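To show how a corrected R2 row turns into an overall contribution, the Python sketch below uses a polar-coordinate estimator. Along each direction, the ray from s is dominated by s alone up to the first valid intersection, by s and one other solution up to the second, and so on, up to the critical distance. The region dominated by k solutions therefore has volume \(c_M\) times the mean over directions of \(d_k^M - d_{k-1}^M\), where \(c_M = \pi ^{M/2}/(2^M\Gamma (M/2+1))\). The weights default to \(a_i = 1/i\), which is the HypE coefficient when its sampling parameter equals the set size. This is a natural reading of the description and not necessarily the exact formula of the paper, whose weighting may differ. The names are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence


def unit_directions(m: int, count: int, seed: int = 0) -> List[List[float]]:
    """Unit vectors uniformly distributed on the positive orthant of the sphere."""
    rng = random.Random(seed)
    dirs = []
    for _ in range(count):
        v = [max(abs(rng.gauss(0.0, 1.0)), 1e-12) for _ in range(m)]
        norm = math.sqrt(sum(x * x for x in v))
        dirs.append([x / norm for x in v])
    return dirs


def overall_contribution(
    s: Sequence[float],
    others: List[Sequence[float]],
    ref: Sequence[float],
    dirs: List[List[float]],
    weight: Callable[[int], float] = lambda i: 1.0 / i,
) -> float:
    """Estimate a HypE-style overall hypervolume contribution of s (minimization)."""
    m = len(s)
    c_m = math.pi ** (m / 2) / (2 ** m * math.gamma(m / 2 + 1))
    total = 0.0
    for lam in dirs:
        # Critical distance L(s, lam, r): where the ray leaves the box bounded by r.
        critical = min((rj - sj) / lj for sj, rj, lj in zip(s, ref, lam))
        # Ascending distances L(s, lam, a) at which the ray enters each region of a.
        hits = sorted(max((aj - sj) / lj for sj, aj, lj in zip(s, a, lam)) for a in others)
        # Keep only valid intersections, then close the row with the critical distance.
        bounds = [d for d in hits if d < critical] + [critical]
        prev = 0.0
        for k, d in enumerate(bounds, start=1):
            # Between prev and d the ray is dominated by s and k - 1 other solutions.
            total += weight(k) * (d ** m - prev ** m)
            prev = d
    return c_m * total / len(dirs)
```

Setting the weight to 1 for k = 1 and to 0 otherwise recovers the exclusive estimate of \(R^{HCA}_2\).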

3.4 Computational Complexity

The computational complexity of one generation of the proposed method is analyzed as follows. Initializing the R2 data array and estimating hypervolume contributions from it account for most of the running time. In each generation, initializing the R2 data array requires \(O(V_NMN^2)\) operations, where \(V_N\) is the number of direction vectors. Sorting each row of the R2 array requires \(O(V_NN^3)\) operations. In the environmental selection phase, non-dominated sorting uses the T-ENS [20] algorithm, whose computational complexity is \(O(MN\log N/\log M)\). The computational complexity of environmental selection is therefore \(O(V_NN^3)\). Since M is generally much smaller than N, the computational complexity of the whole algorithm is \(O(V_NN^3)\).

4 Experimental Design

4.1 Benchmark Problems

To evaluate the performance of the proposed method, we conducted experiments on the DTLZ\(\{1-7\}\) [6] and WFG\(\{1-9\}\) [10] test sets. Both of these test sets allow for arbitrary adjustment of the number of objectives and decision variables.

Following the suggestions in [6], we selected test problems whose PFs have different shapes, including convex, concave, linear, continuous, non-convex, and discontinuous ones, so that the algorithm is evaluated under a range of conditions. We set the number of objectives to \(m=\{3,5,10,15\}\).

For the DTLZ test set, the number of decision variables is \(m+4\) for DTLZ1, \(m+9\) for DTLZ2-6, and \(m+19\) for DTLZ7. For the WFG test set, following the recommendation in [10], we set the number of decision variables to \(n=k+l\). Here the number of position-related variables is \(k=2(m-1)\), and the number of distance-related variables is \(l=20\).
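The decision-variable settings above can be written as a small Python helper. The function name is hypothetical. The IDTLZ variants used in the results are not covered, because their settings are not stated in this section.

```python
def num_decision_vars(problem: str, m: int) -> int:
    """Number of decision variables for m objectives, following the settings above."""
    if problem == "DTLZ1":
        return m + 4
    if problem in {"DTLZ2", "DTLZ3", "DTLZ4", "DTLZ5", "DTLZ6"}:
        return m + 9
    if problem == "DTLZ7":
        return m + 19
    if problem.startswith("WFG"):
        k = 2 * (m - 1)  # position-related variables
        l = 20           # distance-related variables
        return k + l
    raise ValueError(f"unknown problem: {problem}")
```

For example, with 15 objectives, WFG problems use \(k = 28\) and \(l = 20\), giving 48 variables.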

4.2 Performance Metrics

In this paper, we used two indicators, hypervolume and inverted generational distance (IGD), to evaluate the performance of the algorithms. Both indicators evaluate the convergence and diversity of a solution set. The hypervolume indicator is strictly Pareto compliant, so it can clearly distinguish solution sets that differ in convergence and diversity.

For cases where \(m<10\), we used the WFG algorithm to calculate the hypervolume exactly. When \(m>10\), the exact calculation takes too much time, so the Monte Carlo method was used to approximate the hypervolume. The true ideal point \(r^*\) and the true nadir point \(r^{nad}\) were set to (0, 0, ..., 0) and (1, 1, ..., 1), respectively, and each solution in the solution set was normalized accordingly. The reference point used for calculating hypervolume was \({\textbf {r}}=(1,1,...,1)\).
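The Monte Carlo approximation can be sketched minimally in Python. The sketch assumes normalization \(f' = (f - r^*)/(r^{nad} - r^*)\) and uniform sampling in the unit box whose upper corner is the reference point (1, ..., 1). The paper does not state how many samples were used, so the sample count is an assumption, and the function names are hypothetical.

```python
import random
from typing import List, Sequence


def normalize(points: List[Sequence[float]], ideal: Sequence[float],
              nadir: Sequence[float]) -> List[List[float]]:
    """Map each objective to [0, 1] using the ideal and nadir points."""
    return [[(p[j] - ideal[j]) / (nadir[j] - ideal[j]) for j in range(len(ideal))]
            for p in points]


def mc_hypervolume(points: List[Sequence[float]], ideal: Sequence[float],
                   nadir: Sequence[float], n_samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo hypervolume in the normalized space with reference point (1, ..., 1)."""
    rng = random.Random(seed)
    pts = normalize(points, ideal, nadir)
    m = len(ideal)
    dominated = 0
    for _ in range(n_samples):
        x = [rng.random() for _ in range(m)]  # uniform sample in [0, 1]^m
        if any(all(p[j] <= x[j] for j in range(m)) for p in pts):
            dominated += 1
    # The sampling box [0, 1]^m has volume 1, so the hit ratio is the estimate.
    return dominated / n_samples
```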

The inverted generational distance (IGD) [10] is another widely used metric in multi-objective optimization that provides combined information on convergence and diversity. Unlike hypervolume, lower IGD values indicate better solution quality. The choice of reference set is critical for calculating IGD. In our study, we used the reference sets provided with the PlatEMO platform, which will be described in detail later.
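IGD as used here can be sketched in Python. For each point of the reference set, take the Euclidean distance to the nearest obtained solution, then average these distances. The function name is hypothetical. PlatEMO's own implementation may differ in details such as normalization.

```python
import math
from typing import List, Sequence


def igd(reference_set: List[Sequence[float]], solutions: List[Sequence[float]]) -> float:
    """Average distance from each reference point to its nearest obtained solution."""
    total = 0.0
    for r in reference_set:
        total += min(math.dist(r, s) for s in solutions)
    return total / len(reference_set)
```

A solution set that covers only one end of a two-point reference front is penalized by the distance to the uncovered end, which is why IGD also reflects diversity.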

Furthermore, we used the Wilcoxon rank-sum test at a significance level of 0.05 to determine whether the results of each compared algorithm differ significantly from those of the proposed method. The labels “\(+\)”, “−”, and “\(\approx \)” indicate that the compared algorithm is significantly better than, significantly worse than, or statistically similar to the proposed method, respectively.
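The “\(+\)”/“−”/“\(\approx \)” labels can be sketched in Python with a two-sided Wilcoxon rank-sum test. The sketch uses the normal approximation without tie correction and handles ties with average ranks; both choices and the function names are assumptions. A statistics library would normally be used instead, and exact tests give different p-values for small samples.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: List[float]) -> List[float]:
    """1-based ranks, with tied values receiving the average of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def ranksum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (z, two-sided p) for the rank sum of x, using the normal approximation."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return z, math.erfc(abs(z) / math.sqrt(2))


def compare(other: Sequence[float], proposed: Sequence[float],
            higher_is_better: bool = True, alpha: float = 0.05) -> str:
    """Label the compared algorithm relative to the proposed method."""
    z, p = ranksum_test(other, proposed)
    if p >= alpha:
        return "≈"
    return "+" if (z > 0) == higher_is_better else "−"
```

For hypervolume, higher values are better. For IGD, `higher_is_better=False` should be passed.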

4.3 Algorithms for Comparison

In the experimental part, two sets of comparison algorithms are adopted. The first group consists of two hypervolume-based EMOAs, namely SMS-EMOA and HypE. The number of sampling points for HypE is set to 10000, which is the same as the setting in [17]. The second group consists of four advanced EMOAs, namely: AR-MOEA [17], SPEA2+SDE [13], GFM-MOEA [18] and BCE-IBEA [14].

5 Experimental Results and Discussions

5.1 Performance Comparisons on WFG Test Suite

Table 1 presents the hypervolume results of the proposed method on WFG1-9, with five conditions for each problem: 3, 5, 10, and 15 objectives. The proposed method achieved the best result in the vast majority of cases (35 of the 45 instances), demonstrating its superior performance compared with the other state-of-the-art algorithms. HypE came second, achieving the best result on only seven instances. Interestingly, HypE outperformed all other algorithms on WFG3, likely owing to its distinctive approach to calculating individual fitness.

It is worth noting that on WFG1 with three objectives, the proposed method initially performed only moderately compared with BCE-IBEA, GFM-MOEA, and SPEA2+SDE. However, the performance of these algorithms began to weaken as the number of objectives increased, starting from eight objectives. With 15 objectives, the proposed method significantly surpassed them, demonstrating its ability to handle higher-dimensional problems.

Overall, these results highlight the strong performance of the proposed method on problems with many objectives and suggest its potential for solving real-world problems.

Table 2 reports the IGD results of the proposed method on WFG1-9. In most cases, BCE-IBEA and GFM-MOEA outperformed the proposed method, and they even surpassed all hypervolume-based EMOAs under the IGD indicator. This is expected. BCE-IBEA and GFM-MOEA perform environmental selection based on a modeled PF and IGD, whereas the proposed method selects solutions based on hypervolume, which makes its IGD performance comparatively weak.

Moreover, on concave PFs, the solution sets of hypervolume-based EMOAs tend to concentrate in the center of the PF because of how the hypervolume is computed. This also affects their IGD values to a certain extent.

5.2 Performance Comparisons on DTLZ Test Suite

Table 3 presents the hypervolume results of the proposed method on DTLZ{1-7} and IDTLZ{1-2}. The proposed method achieved the best result on 18 of the 45 instances. SPEA2+SDE, BCE-IBEA, HypE, and SMS-EMOA achieved the best results on 9, 7, 6, and 3 instances, respectively. These results demonstrate that the proposed method is competitive with other state-of-the-art algorithms on multi-objective optimization problems of varying difficulty.

Overall, the proposed method does not perform as well on the DTLZ test set as on the WFG test set. This may be because DTLZ mainly evaluates an algorithm's convergence, whereas the hypervolume metric measures both convergence and diversity with a greater emphasis on diversity. This can be observed on DTLZ1, DTLZ2, and DTLZ7, where the main emphasis is on the diversity of EMOAs.

6 Conclusion

This paper proposes an algorithm for solving MaOPs. The algorithm extends the direction vectors of the original \(R_2^{HCA}\) indicator to find their intersections with the dominated regions of the remaining solutions in the solution set and calculates the corresponding segment lengths. This allows the overall hypervolume contributions of all solutions to be calculated simultaneously. Moreover, a data array storing the R2 information is designed to reduce the computational cost of the algorithm. In the experimental study, we compared our algorithm with two hypervolume-based EMOAs and four advanced EMOAs. The comparison used WFG{1-9}, DTLZ{1-7}, and IDTLZ{1-2} under five cases with 3, 5, 10, and 15 objectives. The results show that our algorithm is highly competitive with, and in many cases outperforms, the comparison algorithms.

In the future, we plan to integrate this algorithm into practical problems for experimental testing, such as multi-robot system task planning and UAV swarm decision-making. We also aim to expand the algorithm to computationally expensive MOPs, large-scale MOPs, and multi-modal MOPs.

References

Asafuddoula, M., Singh, H.K., Ray, T.: An enhanced decomposition-based evolutionary algorithm with adaptive reference vectors. IEEE Trans. Cybernet. 48(8), 2321–2334 (2017)

Bader, J., Zitzler, E.: HypE: an algorithm for fast hypervolume-based many-objective optimization. Evol. Comput. 19(1), 45–76 (2011)

Beume, N., Naujoks, B., Emmerich, M.: SMS-EMOA: multiobjective selection based on dominated hypervolume. Eur. J. Oper. Res. 181(3), 1653–1669 (2007)

Coello Coello, C.A., Reyes Sierra, M.: A study of the parallelization of a coevolutionary multi-objective evolutionary algorithm. In: Monroy, R., Arroyo-Figueroa, G., Sucar, L.E., Sossa, H. (eds.) MICAI 2004. LNCS (LNAI), vol. 2972, pp. 688–697. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-24694-7_71

Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002)

Deb, K., Thiele, L., Laumanns, M., Zitzler, E.: Scalable test problems for evolutionary multiobjective optimization. In: Evolutionary Multiobjective Optimization: Theoretical Advances and Applications, pp. 105–145. Springer, London (2005). https://doi.org/10.1007/1-84628-137-7_6

Emmerich, M., Beume, N., Naujoks, B.: An EMO algorithm using the hypervolume measure as selection criterion. In: Coello Coello, C.A., Hernández Aguirre, A., Zitzler, E. (eds.) EMO 2005. LNCS, vol. 3410, pp. 62–76. Springer, Heidelberg (2005). https://doi.org/10.1007/978-3-540-31880-4_5

Farina, M., Amato, P.: On the optimal solution definition for many-criteria optimization problems. In: 2002 Annual Meeting of the North American Fuzzy Information Processing Society Proceedings. NAFIPS-FLINT 2002 (Cat. No. 02TH8622), pp. 233–238. IEEE (2002)

García, I.C., Coello, C.A.C., Arias-Montano, A.: MOPSOhv: a new hypervolume-based multi-objective particle swarm optimizer. In: 2014 IEEE Congress on Evolutionary Computation (CEC), pp. 266–273. IEEE (2014)

Huband, S., Hingston, P., Barone, L., While, L.: A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 10(5), 477–506 (2006)

Ishibuchi, H., Setoguchi, Y., Masuda, H., Nojima, Y.: Performance of decomposition-based many-objective algorithms strongly depends on pareto front shapes. IEEE Trans. Evol. Comput. 21(2), 169–190 (2016)

Li, B., Li, J., Tang, K., Yao, X.: Many-objective evolutionary algorithms: a survey. ACM Comput. Surv. (CSUR) 48(1), 1–35 (2015)

Li, M., Yang, S., Liu, X.: Shift-based density estimation for pareto-based algorithms in many-objective optimization. IEEE Trans. Evol. Comput. 18(3), 348–365 (2013)

Li, M., Yang, S., Liu, X.: Pareto or Non-Pareto: Bi-Criterion evolution in multiobjective optimization. IEEE Trans. Evol. Comput. 20(5), 645–665 (2015)

Qi, Y., Ma, X., Liu, F., Jiao, L., Sun, J., Wu, J.: MOEA/D with adaptive weight adjustment. Evol. Comput. 22(2), 231–264 (2014)

Shang, K., Ishibuchi, H., Ni, X.: R2-based hypervolume contribution approximation. IEEE Trans. Evol. Comput. 24(1), 185–192 (2019)

Tian, Y., Cheng, R., Zhang, X., Cheng, F., Jin, Y.: An indicator-based multiobjective evolutionary algorithm with reference point adaptation for better versatility. IEEE Trans. Evol. Comput. 22(4), 609–622 (2017)

Tian, Y., Zhang, X., Cheng, R., He, C., Jin, Y.: Guiding evolutionary multiobjective optimization with generic front modeling. IEEE Trans. Cybernet. 50(3), 1106–1119 (2018)

Zhang, Q., Li, H.: MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 11(6), 712–731 (2007)

Zhang, X., Tian, Y., Cheng, R., Jin, Y.: A decision variable clustering-based evolutionary algorithm for large-scale many-objective optimization. IEEE Trans. Evol. Comput. 22(1), 97–112 (2016)

Zitzler, E., Laumanns, M., Thiele, L.: SPEA 2: improving the strength pareto evolutionary algorithm. TIK report 103 (2001)

Zitzler, E., Thiele, L.: Multiobjective optimization using evolutionary algorithms — a comparative case study. In: Eiben, A.E., Bäck, T., Schoenauer, M., Schwefel, H.-P. (eds.) PPSN 1998. LNCS, vol. 1498, pp. 292–301. Springer, Heidelberg (1998). https://doi.org/10.1007/BFb0056872


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wen, C., Li, L., Ma, H. (2024). An Improved Hypervolume-Based Evolutionary Algorithm for Many-Objective Optimization. In: Xin, B., Kubota, N., Chen, K., Dong, F. (eds) Advanced Computational Intelligence and Intelligent Informatics. IWACIII 2023. Communications in Computer and Information Science, vol 1931. Springer, Singapore. https://doi.org/10.1007/978-981-99-7590-7_23


DOI: https://doi.org/10.1007/978-981-99-7590-7_23


Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7589-1

Online ISBN: 978-981-99-7590-7

eBook Packages: Computer Science, Computer Science (R0)