Abstract

Owing to recent advances in computational intelligence based on neural networks, the artificial neural network (ANN) has become one of the techniques that give an antenna system adaptability and self-learning capability. This work presents a technique for adapting antenna parameters under different conditions. Current machine learning and neural network computations support the optimized design of various antenna parameters, and ANN models can meet the demand for fast and highly accurate computation. ANN training algorithms minimize the error of the simulated results, yielding geometric dimensions with high accuracy. Various EM simulators are available for designing microstrip patch antennas, but they all require many iterations and time-consuming processes to obtain actual data and develop a real prototype. ANNs offer a fast and flexible solution for EM modelling, simulation and optimization, and they respond precisely to inputs that lie within the training interval or satisfy inequality constraints. This chapter presents ANN modelling of a rectangular microstrip patch antenna in the X-band and analyzes the ANN model with nftool in MATLAB using three different training algorithms. The best validation and training performances are observed for various numbers of epochs and hidden neurons, and the minimum error values and best regression plots are noted. To verify these observations, rectangular microstrip patch antennas are designed and studied. The results show the usefulness of the antennas in the X-band.

Keywords

- Rectangular microstrip patch antenna

- Resonant frequency

- Dielectric constant

- Artificial neural network

- S11 parameter

- Gain

Introduction

The design of a microstrip patch antenna (MPA) involves a set of complex requirements that are calculated through design equations. The resonant frequency of an MPA is a very important parameter; it is related to the antenna geometry, namely the length and width of the patch, and to the dielectric constant of the substrate. Because it is compact and an excellent radiator, the MPA is widely used in mobile antennas, aircraft, remote sensing and satellite communication. Various EM simulators are available for designing MPAs, but they all require many iterations and time-consuming processes to obtain actual data and develop a real prototype. Neural networks, in contrast, offer a fast and flexible solution for EM modelling, simulation and optimization. An ANN responds accurately to new data within the interval of interest defined during the training phase, and it can provide results close to reality once a calculated data set is used for training (Güney et al. 2001; Haykin 2001; Silva 2006; Karaboga et al. 1999). The multilayer perceptron (MLP) is well suited to modelling high-dimensional and highly nonlinear problems. An MLP consists of an input layer, one or more hidden layers and an output layer. The design of a rectangular patch antenna using an MLP has been reported (Ali Heidari 2011), and a detailed study of MLP neural networks has been conducted in Kala et al. (2013). ANN design of microstrip antennas is explained in Tamboli and Nikam (2013) and Turker et al. (2004), and Thomas et al. (2014) describe the complete neural network toolbox concept. The Levenberg–Marquardt (LM), Bayesian Regularization (BR) and Scaled Conjugate Gradient (SCG) training algorithms are commonly used to train the MLP for modelling. A stacked antenna with two radiating patches has been analyzed with a neural network training algorithm to find its resonant frequencies (Jain et al. 2009). The network takes the various design parameters of the patch antenna as input and gives both the upper and lower resonant frequencies (Bisht and Malik 2022; Pandey et al. 2022; Vishnoi et al. 2023). In this chapter, rectangular microstrip patch antennas (RMPAs) for the X-band are designed with HFSS, and their S11 parameters and maximum gains are discussed. The design is also analyzed through ANN modelling, and the performance of three training algorithms, Levenberg–Marquardt, Bayesian Regularization and Scaled Conjugate Gradient, is compared.

Methods of Analysis for Proposed Model

An artificial neural network is composed of several computational units known as neurons. These processing units are connected through communication channels called links, each associated with a weight. The weights store the knowledge of the network and represent the biological synapses, that is, the connections between neurons. The model of an individual neuron consists of the following components:

- A set of synapses, each characterized by a weight.

- A function that combines the weighted input signals.

- An activation function that limits the amplitude of the neuron's output signal.
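The three components listed above can be sketched as a single neuron in Python. This is a minimal illustration, assuming a weighted-sum combining function with a bias and a tanh activation (mathematically equal to the tansig function that MATLAB's fitting networks use by default in the hidden layer); the function name `neuron_output` and the example values are hypothetical.

```python
import math

def neuron_output(inputs, weights, bias, activation=math.tanh):
    """Output of a single artificial neuron (hypothetical helper).

    inputs, weights: equal-length sequences, one weight per synapse
    bias: scalar offset added to the weighted sum
    activation: squashing function that limits the output amplitude
    """
    if len(inputs) != len(weights):
        raise ValueError("one weight is needed per input")
    # Combining function: weighted sum of the input signals plus the bias.
    v = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Activation function: tanh keeps the output within [-1, 1].
    return activation(v)

# Hypothetical example values, for illustration only.
print(neuron_output([0.5, -1.0, 2.0], [0.4, 0.3, 0.1], bias=0.05))
```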

Many methods are available for the design of microstrip patch antennas, but building real prototypes for each specific application to verify a design is difficult and time-consuming. The ANN technique is therefore an attractive option for obtaining the design parameters.

The resonant frequency of a microstrip antenna can be estimated from its physical parameters using an analytical model, and other parameters such as the radiation pattern, bandwidth and directivity can also be calculated. The commonly used models are the transmission line, cavity and full-wave models. The transmission line method (TLM) is an approximate method; it is considered the simplest and provides good insight into the radiation mechanism, but it is the least accurate (Turker et al. 2006).
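The transmission line model also gives closed-form design equations that map (fr, ϵr, h) to the patch width W and length L, which is the same input/output mapping the ANN in this chapter learns. The sketch below uses the standard textbook TLM design equations; whether the microstrip patch calculator used for the data set applies exactly these formulas is an assumption, and the function name and example substrate height are hypothetical.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum (m/s)

def tlm_patch_dimensions(fr_hz, eps_r, h_m):
    """Patch width and length from the transmission line model (hypothetical helper).

    Returns (W, L, eps_eff, delta_L) with all lengths in metres.
    """
    # Patch width that gives efficient radiation.
    w = C0 / (2.0 * fr_hz) * math.sqrt(2.0 / (eps_r + 1.0))
    # Effective permittivity accounting for fringing fields (valid for W/h > 1).
    eps_eff = (eps_r + 1.0) / 2.0 + (eps_r - 1.0) / 2.0 * (1.0 + 12.0 * h_m / w) ** -0.5
    # Length extension at each radiating edge caused by fringing.
    delta_l = 0.412 * h_m * ((eps_eff + 0.3) * (w / h_m + 0.264)) / (
        (eps_eff - 0.258) * (w / h_m + 0.8)
    )
    # Physical length = effective half-wavelength minus both edge extensions.
    l = C0 / (2.0 * fr_hz * math.sqrt(eps_eff)) - 2.0 * delta_l
    return w, l, eps_eff, delta_l

# Hypothetical example: fr = 10 GHz, eps_r = 2.2, h = 1.6 mm.
W, L, eps_eff, dL = tlm_patch_dimensions(10e9, 2.2, 1.6e-3)
print(f"W = {W * 1e3:.2f} mm, L = {L * 1e3:.2f} mm, eps_eff = {eps_eff:.3f}")
```

Note that εeff lies between 1 and ϵr, so the patch is always somewhat longer than half a guided wavelength in the bare dielectric would suggest, before the fringing correction 2ΔL shortens it again.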

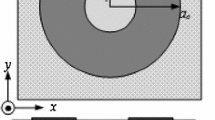

To train the network, a training database containing both the network inputs and the required outputs must be created. In the proposed ANN design, one analysis model has been considered, as shown in Fig. 15.1.

The proposed model has been analyzed with the Levenberg–Marquardt, Bayesian Regularization and Scaled Conjugate Gradient training algorithms in MATLAB17. The data set is calculated with a microstrip patch calculator, and the generated data are used for training and testing the ANN of the analysis model. In our case, the database contains 25 examples. Because the ANN is an effective tool for nonlinear approximation, it is used in this work to identify the relationship between the physical parameters of the antenna and its performance. In the analysis model shown in Fig. 15.1, the input data are the resonant frequency (fr), the permittivity of the dielectric substrate (ϵr) and the substrate height (h). The output data are the length (L) and width (W) of the radiating patch, whose ranges are given in Table 15.1.
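The analysis model therefore maps three inputs (fr, ϵr, h) to two outputs (L, W) through one hidden layer. A minimal forward-pass sketch, assuming the default structure of MATLAB's fitting network (tansig hidden layer, linear output layer) and inputs that have already been normalized; the weights here are random and untrained, and all names are hypothetical.

```python
import numpy as np

def mlp_forward(x, w1, b1, w2, b2):
    """Forward pass of a 3-input, H-hidden, 2-output fitting network (hypothetical).

    x  : (3,) normalized input vector [fr, eps_r, h]
    w1 : (H, 3) input-to-hidden weights,  b1 : (H,) hidden biases
    w2 : (2, H) hidden-to-output weights, b2 : (2,) output biases
    Returns a (2,) vector interpreted as normalized [L, W].
    """
    hidden = np.tanh(w1 @ x + b1)  # tansig hidden layer (tanh is equivalent)
    return w2 @ hidden + b2        # linear (purelin) output layer

rng = np.random.default_rng(0)
H = 10  # one of the hidden-layer sizes studied (10, 20, 30, 40, 50)
w1, b1 = rng.normal(size=(H, 3)), rng.normal(size=H)
w2, b2 = rng.normal(size=(2, H)), rng.normal(size=2)
y = mlp_forward(np.array([0.2, -0.5, 0.1]), w1, b1, w2, b2)
print(y.shape)  # (2,)
```

Training consists of adjusting w1, b1, w2 and b2 so that these outputs match the calculated L and W; the three algorithms below differ only in how they make that adjustment.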

Statistical Analysis of Proposed Method

In the proposed model, three training algorithms, Levenberg–Marquardt, Scaled Conjugate Gradient and Bayesian Regularization, are used, and the accuracy of their performances and results is evaluated statistically.

Levenberg–Marquardt training algorithm: LM is a damped least-squares (DLS) method used to solve generic curve-fitting problems. It finds a local minimum, which may not be the global minimum, of the sum of squares in Eq. 15.1.

$$ S(\alpha) = \sum_{j=1}^{n} \left[ y_{j} - f\left(x_{j}, \alpha\right) \right]^{2} $$ (15.1)

where (x_j, y_j), j = 1, …, n, is a given set of n pairs of independent and dependent variables. The algorithm finds the parameters α of the model curve f(x, α) such that the sum of squared deviations S(α) is minimized.
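Eq. 15.1 can be minimized with the classic LM iteration, which solves the damped normal equations (JᵀJ + λI)δ = Jᵀr at each step, where J is the Jacobian of f and r the residual vector, and lowers or raises λ depending on whether the step reduced S(α). The sketch below fits a hypothetical two-parameter exponential model to synthetic data; it illustrates the algorithm itself and is not MATLAB's trainlm, which applies the same idea to network weights.

```python
import numpy as np

def levenberg_marquardt(f, jac, x, y, alpha0, lam=1e-2, iters=100, tol=1e-12):
    """Minimize S(alpha) = sum_j [y_j - f(x_j, alpha)]^2 (Eq. 15.1) with LM steps."""
    alpha = np.asarray(alpha0, dtype=float)
    r = y - f(x, alpha)                 # residuals y_j - f(x_j, alpha)
    s = r @ r                           # current S(alpha)
    for _ in range(iters):
        J = jac(x, alpha)               # Jacobian of f w.r.t. alpha, shape (n, p)
        # Damped normal equations: (J^T J + lam * I) delta = J^T r
        delta = np.linalg.solve(J.T @ J + lam * np.eye(alpha.size), J.T @ r)
        trial = alpha + delta
        r_trial = y - f(x, trial)
        s_trial = r_trial @ r_trial
        if s_trial < s:                 # accepted: behave more like Gauss-Newton
            converged = s - s_trial < tol
            alpha, r, s, lam = trial, r_trial, s_trial, lam / 10.0
            if converged:
                break
        else:                           # rejected: behave more like gradient descent
            lam *= 10.0
    return alpha, s

# Hypothetical model f(x, a) = a0 * exp(a1 * x) and its Jacobian.
def model(x, a):
    return a[0] * np.exp(a[1] * x)

def model_jac(x, a):
    e = np.exp(a[1] * x)
    return np.column_stack([e, a[0] * x * e])

x = np.linspace(0.0, 1.0, 20)
y = model(x, [2.0, -1.5])               # noise-free synthetic data
alpha, s = levenberg_marquardt(model, model_jac, x, y, alpha0=[1.0, 0.0])
print(alpha)
```

The damping term λI is what makes the method "damped least squares": a large λ gives short gradient-descent-like steps far from the minimum, and a small λ gives fast Gauss-Newton steps near it.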

Scaled conjugate gradient training algorithm: SCG is a supervised training algorithm for feed-forward artificial neural networks, that is, networks whose connections contain no loops between the units. Instead of performing a line search, it estimates the curvature of the error function along the search direction from a finite difference of gradients, shown in Eq. 15.2.

$$ S_{k} = \frac{E'\left(W_{k} + \sigma_{k} P_{k}\right) - E'\left(W_{k}\right)}{\sigma_{k}} $$ (15.2)

where E′ is the gradient of the error function, W_k is the weight vector at iteration k, P_k is the search direction and σ_k is a small step size.
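The role of Eq. 15.2 can be illustrated with a simplified conjugate-gradient loop that uses the finite-difference product S_k (an approximation of the Hessian times P_k) to set the step size without a line search. This is a sketch under stated assumptions: it omits the λ scaling mechanism that gives Møller's SCG its name, so it is only reliable on convex problems, and the quadratic test function and all names are hypothetical.

```python
import numpy as np

def cg_with_fd_curvature(grad, w0, sigma0=1e-4, iters=50, tol=1e-9):
    """Conjugate gradient using the finite-difference curvature of Eq. 15.2.

    Simplified sketch of SCG: no lambda scaling, so non-positive curvature stops it.
    """
    w = np.asarray(w0, dtype=float)
    r = -grad(w)                       # steepest-descent direction
    p = r.copy()                       # initial search direction
    for _ in range(iters):
        if np.linalg.norm(r) < tol:
            break
        sigma = sigma0 / np.linalg.norm(p)
        s = (grad(w + sigma * p) - grad(w)) / sigma   # Eq. 15.2: approx. Hessian @ p
        delta = p @ s                                 # curvature along p
        if delta <= 0:                 # full SCG would increase lambda here
            break
        mu = p @ r
        w = w + (mu / delta) * p       # step size from curvature, no line search
        r_new = -grad(w)
        beta = (r_new @ r_new - r_new @ r) / mu       # Moller's conjugacy coefficient
        p = r_new + beta * p
        r = r_new
    return w

# Hypothetical convex error E(w) = 0.5 w^T A w - b^T w, with gradient A w - b.
A = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
w_min = cg_with_fd_curvature(lambda w: A @ w - b, np.zeros(2))
print(w_min, np.linalg.solve(A, b))
```

Because the gradient of a quadratic is linear, S_k equals the exact Hessian-vector product here and the loop reaches the minimum in two steps, as conjugate gradient should in two dimensions.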

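Bayesian Regularization training algorithm: BR reduces the need for lengthy cross-validation and is more robust than standard back-propagation methods. Like LM, it solves a nonlinear least-squares problem, but it treats the network parameters probabilistically and selects the most probable (maximum a posteriori) solution, as expressed in Eq. 15.3.

$$ X = \arg\max_{b} \; P\left(C_{b}\right) \prod_{i=1}^{n} p\left(y_{i} \mid C_{b}\right) $$ (15.3)

where P(C_b) is the prior probability of hypothesis C_b and p(y_i | C_b) is the likelihood of observation y_i under that hypothesis.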
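In MATLAB's trainbr, Bayesian regularization minimizes a weighted sum βE_D + αE_W of the squared errors and the squared weights, and re-estimates α and β from the data (MacKay's evidence framework) instead of relying on a validation set. The sketch below applies those update rules to a model that is linear in its weights, so every step has a closed form; it illustrates the principle rather than reproducing trainbr, which wraps the same updates around LM steps on a nonlinear network. The basis, data and names are hypothetical.

```python
import numpy as np

def bayesian_ridge(phi, t, iters=100):
    """Evidence-based Bayesian regularization for a linear-in-weights model t ~ phi @ w.

    Minimizes F(w) = beta * E_D + alpha * E_W, with E_D = 0.5*||t - phi w||^2 and
    E_W = 0.5*||w||^2, re-estimating alpha and beta with MacKay's update rules.
    """
    n, m = phi.shape
    alpha, beta = 1.0, 1.0
    for _ in range(iters):
        A = beta * phi.T @ phi + alpha * np.eye(m)      # Hessian of F
        w = beta * np.linalg.solve(A, phi.T @ t)        # most probable (MAP) weights
        e_d = 0.5 * np.sum((t - phi @ w) ** 2)
        e_w = 0.5 * np.sum(w ** 2)
        gamma = m - alpha * np.trace(np.linalg.inv(A))  # effective number of parameters
        alpha = gamma / (2.0 * e_w)
        beta = (n - gamma) / (2.0 * e_d)
    return w, alpha, beta, gamma

rng = np.random.default_rng(1)
x = np.linspace(-1.0, 1.0, 25)                   # 25 samples, as in the data set size
t = 0.5 * x - 0.3 * x**3 + rng.normal(scale=0.05, size=x.size)
phi = np.vander(x, 8, increasing=True)           # deliberately over-parameterized basis
w, alpha, beta, gamma = bayesian_ridge(phi, t)
print(f"effective parameters: {gamma:.2f} of {phi.shape[1]}")
```

The effective number of parameters γ shows how BR limits model complexity automatically: weights that the data do not support are driven toward zero rather than being tuned against a held-out validation set.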

MATLAB's Neural Fitting tool (nftool) is used to analyze the performances and results.

Antenna Designs

Within the X-band (8–12 GHz), four rectangular microstrip patch antennas are designed in HFSS, with a substrate permittivity of 2.2 for 8 GHz, 9 GHz and 10 GHz and 2.94 for 8.5 GHz, as listed in Table 15.2.

The design of the inset-fed antenna operating at 8 GHz is shown in Fig. 15.2.
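For an inset-fed patch, the inset depth is usually chosen so that the input resistance at the feed point matches the feed line. A minimal sketch, assuming the common cavity-model approximation Rin(y0) = Rin(0)·cos²(πy0/L) and a 50 Ω feed line; the edge resistance and patch length below are hypothetical and are not the values of the antenna in Fig. 15.2.

```python
import math

def inset_depth(edge_resistance_ohm, patch_length_m, z0_ohm=50.0):
    """Inset depth y0 that matches the patch to a line of impedance z0 (hypothetical helper).

    Inverts Rin(y0) = Rin(0) * cos^2(pi * y0 / L) for y0.
    """
    if not 0 < z0_ohm <= edge_resistance_ohm:
        raise ValueError("z0 must be positive and not exceed the edge resistance")
    return patch_length_m / math.pi * math.acos(math.sqrt(z0_ohm / edge_resistance_ohm))

# Hypothetical values: edge resistance 240 ohm, patch length 9.0 mm.
y0 = inset_depth(240.0, 9.0e-3)
print(f"inset depth = {y0 * 1e3:.2f} mm")
```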

Results and Discussion

The proposed ANN model is trained with three different training algorithms, using 10, 20, 30, 40 and 50 hidden neurons. All configurations are tested with two categories of data division. In the first category, 17 of the 25 samples are used as training data, 4 as validation data and 4 as testing data. The performance, number of epochs, gradient, regression and zero error point values are listed in Table 15.3. The average performance and average zero error point are calculated for all three training algorithms.
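The two data divisions can be reproduced with a random partition of the 25 sample indices. This sketch assumes a random rather than contiguous division, similar in spirit to nftool's default random data division; the seed and the function name are hypothetical.

```python
import numpy as np

def split_indices(n_samples, n_train, n_val, n_test, seed=0):
    """Randomly partition sample indices into training, validation and test sets."""
    if n_train + n_val + n_test != n_samples:
        raise ValueError("split sizes must add up to the number of samples")
    perm = np.random.default_rng(seed).permutation(n_samples)
    return (perm[:n_train],
            perm[n_train:n_train + n_val],
            perm[n_train + n_val:])

# Category I: 17 / 4 / 4 of 25 samples; category II: 15 / 5 / 5.
cat1 = split_indices(25, 17, 4, 4)
cat2 = split_indices(25, 15, 5, 5)
print([len(part) for part in cat1], [len(part) for part in cat2])  # [17, 4, 4] [15, 5, 5]
```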

All readings for the three training algorithms are taken from the respective plots. The best validation performance values are taken from the plot of mean square error versus number of epochs. With 10 hidden neurons in category I, the LM training algorithm gives a best validation performance of 0.12796 at epoch 5, as shown in Fig. 15.3, and Fig. 15.4 shows a gradient of 1.2112e-8. The error histogram with 20 bins for the training, validation and testing data of the ANN model is shown in Fig. 15.5. The zero error lies at 0.006322 and is marked with a vertical yellow line in the graph, with 35 instances in the training set. The best regression value for the same case is 0.99764, as shown in Fig. 15.6. With 40 hidden neurons in the same case, the zero error point is −0.0171; although its regression value of 0.91083 is lower than in the previous case, it is still good. The average performance with the LM training algorithm is 1.9906, and the average performance values for the BR and SCG training algorithms are 1.21e-08 and 1.790274, respectively. The performance of the LM training algorithm is better than that of the BR and SCG training algorithms, whereas the error is minimum with the BR training algorithm.
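The quantities read from these plots can be computed directly from the network targets and outputs. A minimal sketch, assuming mean squared error as the performance function (nftool's default), error defined as target minus output as in MATLAB's error histogram, and the regression value R taken as the correlation coefficient between outputs and targets; the data are hypothetical.

```python
import numpy as np

def fit_diagnostics(targets, outputs, bins=20):
    """MSE, regression value R and a 20-bin error histogram (hypothetical helper)."""
    targets = np.asarray(targets, dtype=float).ravel()
    outputs = np.asarray(outputs, dtype=float).ravel()
    errors = targets - outputs                       # error = target - output
    mse = np.mean(errors ** 2)                       # the 'performance' value
    r = np.corrcoef(targets, outputs)[0, 1]          # regression value R
    counts, edges = np.histogram(errors, bins=bins)  # error histogram
    return mse, r, counts, edges

# Hypothetical targets and outputs, for illustration only.
t = np.array([9.0, 9.5, 10.2, 11.0, 11.8, 12.4])
y = np.array([9.1, 9.4, 10.3, 10.9, 11.9, 12.3])
mse, r, counts, edges = fit_diagnostics(t, y)
print(f"MSE = {mse:.4f}, R = {r:.5f}, samples in histogram = {counts.sum()}")
```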

The number of epochs is much larger with the Bayesian Regularization training algorithm than with the LM and SCG training algorithms, and with BR the regression value is close to 1 and the error is minimal. Fig. 15.7 shows the best training performance of category I with the Bayesian Regularization algorithm and 10 hidden neurons. With 30 hidden neurons, the zero error lies at −6.5e-5, as shown in Fig. 15.8.

Similarly, in the second category, 15 of the 25 samples are used for training, 5 for validation and 5 for testing. The performance, number of epochs, gradient, regression and zero error point values are listed in Table 15.4. The number of epochs is again larger with BR than with SCG and LM, and the regression values are close to 1 with small errors. In this case, the average performance value of the SCG training algorithm, 9.654902, is the highest of the three training algorithms, but the average zero error point value is minimum with the BR training algorithm compared with LM and SCG.

The S11 parameters of the four designed antennas are −28.8 dB, −10.8 dB, −11 dB and −18.7 dB at 8 GHz, 8.5 GHz, 9 GHz and 10 GHz, respectively, as listed in Table 15.2. The best S11 value is obtained at 8 GHz, as shown in Fig. 15.9. The simulated maximum gains of the designed antennas are 7.9 dB, 7.6 dB, 7.4 dB and 6.8 dB at 8 GHz, 8.5 GHz, 9 GHz and 10 GHz, respectively. The highest maximum gain, 7.9 dB, is obtained at the 8 GHz resonant frequency, as shown in Fig. 15.10.
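To put the S11 values in perspective, S11 in dB converts to the reflection-coefficient magnitude |Γ| = 10^(S11/20), to VSWR = (1 + |Γ|)/(1 − |Γ|) and to the reflected power fraction |Γ|². The sketch below applies these standard relations to the four simulated values reported for the designs, assuming S11 is referenced to the feed-line impedance used in the simulation; the function name is hypothetical.

```python
import math

def s11_metrics(s11_db):
    """Reflection magnitude, VSWR and reflected power fraction from S11 in dB."""
    gamma = 10.0 ** (s11_db / 20.0)   # |S11| as a linear magnitude
    vswr = (1.0 + gamma) / (1.0 - gamma)
    reflected = gamma ** 2            # fraction of incident power reflected
    return gamma, vswr, reflected

# Simulated S11 values of the four designed antennas.
for f_ghz, s11 in [(8.0, -28.8), (8.5, -10.8), (9.0, -11.0), (10.0, -18.7)]:
    g, vswr, refl = s11_metrics(s11)
    print(f"{f_ghz:4.1f} GHz: |S11| = {g:.3f}, VSWR = {vswr:.2f}, reflected = {refl * 100:.1f} %")
```

All four designs fall below the common VSWR < 2 criterion (S11 below about −9.5 dB), and the 8 GHz design reflects well under 1% of the incident power.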

Conclusion

The physical parameters of rectangular microstrip patch antennas in the X-band (8–12 GHz) are used as the data set for ANN modelling, with the resonant frequency (fr), permittivity of the dielectric substrate (ϵr) and substrate height (h) as input data and the length (L) and width (W) of the radiating patch as output data. The model is analyzed in MATLAB17 with three different training algorithms: Levenberg–Marquardt, Bayesian Regularization and Scaled Conjugate Gradient. The analysis considers two categories with different divisions of the data into training, validation and testing sets, and in each case the number of hidden neurons is varied for each of the three algorithms. For all cases, the performance, number of epochs, gradient, regression and error values are observed, and the average performance and zero error point are calculated for all three training algorithms. RMPAs are designed for different resonant frequencies within the X-band, and their simulated S11 parameters and maximum gains are observed.

References

Ali Heidari A, Dadgarnia A (2011) Design and optimization of a circularly polarized microstrip antenna for GPS applications using ANFIS and GA. Communication Research Laboratory, Yazd University, IEEE

Bisht N, Malik PK (2022) Adoption of microstrip antenna to multiple input multiple output microstrip antenna for wireless applications: a review. In: Singh PK, Singh Y, Chhabra JK, Illés Z, Verma C (eds) Recent innovations in computing. lecture notes in electrical engineering, vol 855. Springer, Singapore. https://doi.org/10.1007/978-981-16-8892-8_15

Güney K, Sagiroglu S, Erler M (2001) Generalized neural method to determine resonant frequencies of various microstrip antennas. Int J RF Microwave Comput Aided Eng 12(1):131–139

Haykin S (2001) Redes neurais: princípios e prática, 2nd edn. Bookman

Jain SK, Sinha SN, Patnaik A (2009) Analysis of coaxial fed dual patch multilayer X/Ku band antenna using artificial neural networks. In: World congress on nature & biologically inspired computing (NaBIC), pp 1111–1114

Kala P, Saxena R, Kumar M, Kumar A, Pant R (2013) Design of rectangular patch antenna using MLP artificial neural network. J Glob Res Comput Sci 3(5)

Karaboga D, Guney K, Sagiroglu S, Erler M (1999) Neural computation of resonant frequency of electrically thin and thick rectangular microstrip antennas. IEE Proc Microw Antennas Propag 146(2):155–159

Pandey U, Gupta NP, Malik P (2022) Review on miniaturized flexible wearable antenna for body area network. In: Singh PK, Singh Y, Chhabra JK, Illés Z, Verma C (eds) Recent innovations in computing. lecture notes in electrical engineering, vol 855. Springer, Singapore. https://doi.org/10.1007/978-981-16-8892-8_4

da Silva PL (2006) Modelagem de Superfícies Seletivas de Freqüência Antenas de Microstrip Utilizando Redes Neurais Artificiais. MSc thesis, Federal University of Rio Grande do Norte, Natal

Tamboli ZJ, Nikam PB (2013) Study of multilayer perceptron neural network for antenna characteristics analysis. Int J Adv Res Comput Sci Softw Eng 3(8)

Thomas JS, Thomas JS, Mary Neebha T, Nesasudha M (2014) Improvement of microstrip patch antenna parameters for wireless communication. IEEE Xplore Digit Libr

Turker N, Gunes F, Yildirim T (2004) Artificial neural design of microstrip antennas. Yildiz Technical University, Istanbul, Turkey

Turker N, Gunes F, Yildirim T (2006) Artificial neural design of microstrip antennas. Turk J Elec Eng 14(3):445–453

Vishnoi V, Singh P, Budhiraja I, Malik PK (2023) Multiband dual-layer microstrip patch antenna for 5G wireless applications. In: Singh PK, Wierzchoń ST, Tanwar S, Rodrigues JJPC, Ganzha M (eds) Proceedings of third international conference on computing, communications, and cyber-security. Lecture notes in networks and systems, vol 421. Springer, Singapore. https://doi.org/10.1007/978-981-19-1142-2_7

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Pani, S., Tripathy, M.R., Agarwal, S. (2023). ANN Modeling and Performance Comparison of X-Band Antenna. In: Malik, P.K., Shastry, P.N. (eds) Internet of Things Enabled Antennas for Biomedical Devices and Systems. Springer Tracts in Electrical and Electronics Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-99-0212-5_15

DOI: https://doi.org/10.1007/978-981-99-0212-5_15

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-0211-8

Online ISBN: 978-981-99-0212-5

eBook Packages: Engineering, Engineering (R0)