Abstract

The weights of the elements of Pythagorean fuzzy sets (PFSs) have rarely been considered when computing the correlation coefficient between Pythagorean fuzzy sets (CCPFSs), and ignoring them can lead to avoidable errors. In this chapter, we propose some new methods of computing weighted correlation coefficients between PFSs (WCCPFSs) with better performance indexes than the existing ones defined in the Pythagorean fuzzy domain. The main aim of this chapter is to provide improved methods of computing WCCPFSs for more efficient applications in multi-criteria decision-making (MCDM). We show mathematically that the new weighted correlation coefficient methods satisfy the conditions for a correlation coefficient between PFSs. Some numerical illustrations are considered to validate the advantage of the new methods of computing WCCPFSs in terms of accuracy with respect to the existing ones. Finally, we demonstrate the applications of the new weighted correlation coefficients alongside the existing ones in MCDM problems to support a comparative analysis.


Keywords

- Correlation coefficient

- Intuitionistic fuzzy set

- Multi-criteria decision-making

- Pythagorean fuzzy set

- Weighted correlation coefficient

1 Introduction

Many real-life problems are enmeshed with uncertainties, which makes decision-making a herculean task. To address such challenges, Zadeh [64] introduced fuzzy sets to curb the uncertainties embedded in decision-making. Some decision-making problems cannot be handled with a fuzzy approach because a fuzzy set considers only the membership grade, whereas many real-life problems have both a membership grade and a non-membership grade, with the possibility of hesitation. Such cases are best addressed by intuitionistic fuzzy sets (IFSs) [1, 2]. An IFS is described by a membership grade \(\mu \), a non-membership grade \(\nu \) and a hesitation margin \(\pi \) such that their sum is one, so that \(\mu +\nu \) is less than or equal to one. Due to the usefulness of IFSs, they have been applied to tackle pattern recognition problems [43, 57], career determination/appointment processes [7, 18, 19, 23] and other MCDM problems discussed in [3,4,5, 21, 22, 24, 46, 51, 52]. Some improved similarity and distance measures based on the set pair analysis theory, with applications, have been studied [39, 40].

The idea of IFS, though vital, is not suitable when a decision-maker must decide in a multi-criteria problem in which \(\mu +\nu \) is greater than one. Suppose \(\mu =\frac{1}{2}\) and \(\nu =\frac{3}{5}\); clearly an IFS cannot model such a situation, since \(\mu +\nu =\frac{11}{10}>1\), whereas \(\mu ^2+\nu ^2=\frac{61}{100}\le 1\). This prompted Atanassov [2] to propose intuitionistic fuzzy sets of second type, or Pythagorean fuzzy sets (PFSs) [58, 61], to generalize IFSs such that \(\mu +\nu \) may also be greater than one while \(\mu ^2+\nu ^2+\pi ^2=1\). A PFS thus generalizes an IFS by relaxing the constraint on the grades, and so it can handle hesitation more appropriately and with a higher degree of accuracy. The concept of PFSs has been sufficiently explored by different authors so far [8, 13, 60]. Some new generalized Pythagorean fuzzy information and aggregation operators using Einstein operations have been studied in [26, 31] with application to decision-making. Garg [32] studied some methods for strategic decision-making with immediate probabilities in a Pythagorean fuzzy environment, and the idea of linguistic PFSs has been studied with application to multi-attribute decision-making problems [34]. The notion of interval-valued PFSs has been explicated with regard to score functions and exponential operational laws with applications [28, 29, 33]. Many applications of PFSs have been discussed in pattern recognition [10, 12, 15], TOPSIS method applications [27, 66], MCDM problems using different approaches [9, 11, 35, 58, 59, 61, 62, 67] and other applicative areas [6, 20, 29, 36, 65]. Several measuring tools have been employed to measure the similarity and dissimilarity indexes between PFSs with applications to MCDM problems, as discussed in [8, 11, 12, 15, 20].

The correlation coefficient, a vital tool for measuring interdependency, similarity, and interrelationship between two variables or data sets, was first studied in statistics by Karl Pearson in 1895. By way of extension, numerous fields such as engineering and the sciences have applied the tool to address their peculiar challenges. To equip the correlation coefficient to better handle fuzzy data, the idea was carried into the intuitionistic fuzzy context and applied to many MCDM problems. The first work on the correlation coefficient between IFSs (CCIFSs) was carried out by Gerstenkorn and Manko [42]. Hung [44] used a statistical approach to develop CCIFSs by capturing only the membership and non-membership functions of IFSs, and CCIFSs based on the centroid method were proposed in [45]. Mitchell [48] studied a new CCIFSs based on an integral function. Park et al. [49] and Szmidt and Kacprzyk [53] extended the method in [44] by incorporating the hesitation margin of IFS. Liu et al. [47] introduced a new CCIFSs with an application. Garg and Kumar [38] proposed novel CCIFSs based on set pair analysis and applied the approach to solve some MCDM problems. The concept of correlation coefficient and its applications have been extended to complex intuitionistic fuzzy and intuitionistic multiplicative environments, respectively [30, 41]. A TOPSIS method based on correlation coefficient was proposed in [37] to solve decision-making problems with intuitionistic fuzzy soft set information. Several other methods of CCIFSs have been studied and applied to decision-making problems [14, 54, 56, 62, 63].

Garg [25] initiated the study of correlation coefficient between Pythagorean fuzzy sets (CCPFSs) by proposing two novel correlation coefficient techniques to determine the interdependency between PFSs, and applied the techniques to MCDM problems. Thao [55] extended the work on CCIFSs in [54] to CCPFSs and applied the approach to solve some MCDM problems. Singh and Ganie [50] proposed some CCPFSs procedures with applications, but the procedures do not incorporate all the orthodox parameters of PFSs. Ejegwa [16] proposed a triparametric CCPFSs method which generalized one of the CCPFSs techniques studied in [25], and applied the method to decision-making problems. Though one cannot doubt the importance of distance and similarity measures as viable soft computing tools, the correlation coefficient is preferred in information measure theory because it considers both the similarity (which is the dual of distance) and the interrelationship/interdependence indexes between PFSs.

In the computation of CCPFSs, the weights of the elements of the sets upon which PFSs are built are often ignored, which frequently leads to misleading results. Thus, Garg [25] proposed some weighted correlation coefficients between PFSs (WCCPFSs). Motivated by the work of Garg [25], we provide improved methods of computing WCCPFSs for more efficient application. In this chapter, some new WCCPFSs methods are proposed which can be shown to be more reliable, with better performance indexes, than the existing ones. The objectives of the work are to

(i) explore the WCCPFSs methods studied in [25] and propose some new WCCPFSs methods to enhance accuracy and reliability in measuring CCPFSs.

(ii) mathematically corroborate the proposed WCCPFSs methods with the axiomatic conditions for CCPFSs, and numerically verify the authenticity of the proposed methods over the existing ones.

(iii) establish the applications of the proposed methods in some MCDM problems.

The rest of the chapter is organized as follows: Sect. 2 briefly reviews some basic notions of PFSs and Sect. 3 discusses some CCPFSs methods studied in [16, 25] with numerical verifications. Section 4 discusses existing WCCPFSs methods, introduces new WCCPFSs methods and numerically verifies their authenticity. Section 5 demonstrates the application of the new WCCPFSs methods in pattern recognition and medical diagnosis problems, all represented in Pythagorean fuzzy values. Section 6 concludes the chapter and gives some areas for future research.

2 Basic Notions of Pythagorean Fuzzy Sets

Definition 2.1

[1] An intuitionistic fuzzy set of X denoted by \(\mathsf {A}\) (where X is a non-empty set) is an object having the form

$$\begin{aligned} \mathsf {A}=\lbrace \langle \dfrac{\mu _\mathsf {A}(x), \nu _\mathsf {A}(x)}{x} \rangle |x\in X \rbrace , \end{aligned}$$

where the functions \(\mu _\mathsf {A}(x),\; \nu _\mathsf {A}(x): X\rightarrow [0,1]\) define the degrees of membership and non-membership of the element \(x\in X\) such that

$$\begin{aligned} 0\le \mu _\mathsf {A}(x)+\nu _\mathsf {A}(x)\le 1. \end{aligned}$$

For any intuitionistic fuzzy set \(\mathsf {A}\) of X, \(\pi _\mathsf {A}(x)= 1-\mu _\mathsf {A}(x)-\nu _\mathsf {A}(x)\) is the intuitionistic fuzzy set index or hesitation margin of \(\mathsf {A}\).

Definition 2.2

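[58] A Pythagorean fuzzy set of X denoted by \(\mathsf {A}\) (where X is a non-empty set) is the set of ordered pairs defined by

$$\begin{aligned} \mathsf {A}=\lbrace \langle \dfrac{\mu _{\mathsf {A}}(x), \nu _{\mathsf {A}}(x)}{x} \rangle |x\in X \rbrace , \end{aligned}$$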

where the functions \(\mu _{\mathsf {A}}(x),\; \nu _{\mathsf {A}}(x):X\rightarrow [0,1]\) define the degrees of membership and non-membership of the element \(x\in X\) to \(\mathsf {A}\) such that \(0\le (\mu _{\mathsf {A}}(x))^2 + (\nu _{\mathsf {A}}(x))^2 \le 1\). Assuming \((\mu _{\mathsf {A}}(x))^2 + (\nu _{\mathsf {A}}(x))^2 \le 1\), then there is a degree of indeterminacy of \(x\in X\) to \(\mathsf {A}\) defined by \(\pi _{\mathsf {A}}(x)=\sqrt{1-[(\mu _{\mathsf {A}}(x))^2 + (\nu _{\mathsf {A}}(x))^2]}\) and \(\pi _{\mathsf {A}}(x)\in [0,1]\).
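As a quick check of Definition 2.2, the following minimal Python sketch tests the pair \(\mu =\frac{1}{2}\), \(\nu =\frac{3}{5}\) from the Introduction: it fails the intuitionistic condition but satisfies the Pythagorean one, and its degree of indeterminacy follows from the square-root formula above. The function names `is_ifs_pair` and `pfs_hesitation` are illustrative choices and do not come from the chapter.

```python
import math


def is_ifs_pair(mu: float, nu: float) -> bool:
    """Intuitionistic condition: both grades in [0, 1] and mu + nu <= 1."""
    return 0.0 <= mu <= 1.0 and 0.0 <= nu <= 1.0 and mu + nu <= 1.0


def pfs_hesitation(mu: float, nu: float) -> float:
    """Return pi = sqrt(1 - (mu^2 + nu^2)) for a valid Pythagorean pair."""
    if not (0.0 <= mu <= 1.0 and 0.0 <= nu <= 1.0):
        raise ValueError("mu and nu must lie in [0, 1]")
    squared_sum = mu * mu + nu * nu
    if squared_sum > 1.0 + 1e-12:  # small tolerance for floating-point rounding
        raise ValueError("mu^2 + nu^2 must not exceed 1")
    return math.sqrt(max(0.0, 1.0 - squared_sum))


mu, nu = 0.5, 0.6
print(is_ifs_pair(mu, nu))               # False, since mu + nu = 1.1 > 1
print(round(pfs_hesitation(mu, nu), 4))  # 0.6245, i.e. sqrt(1 - 0.61)
```

A pair that violates \(\mu ^2+\nu ^2\le 1\), such as (0.9, 0.9), raises a `ValueError` in this sketch rather than returning a value.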

Definition 2.3

[61] Suppose \(\mathsf {A}\) and \(\mathsf {B}\) are PFSs of X, then

(i) \(\overline{\mathsf {A}}=\lbrace \langle \dfrac{\nu _{\mathsf {A}}(x), \mu _{\mathsf {A}}(x)}{x} \rangle |x\in X \rbrace \).

(ii) \(\mathsf {A}\cup \mathsf {B}=\lbrace \langle \max (\dfrac{\mu _{\mathsf {A}}(x),\mu _{\mathsf {B}}(x)}{x}), \min (\dfrac{\nu _{\mathsf {A}}(x),\nu _{\mathsf {B}}(x)}{x}) \rangle |x\in X \rbrace \).

(iii) \(\mathsf {A}\cap \mathsf {B}=\lbrace \langle \min (\dfrac{\mu _{\mathsf {A}}(x),\mu _{\mathsf {B}}(x)}{x}), \max (\dfrac{\nu _{\mathsf {A}}(x),\nu _{\mathsf {B}}(x)}{x}) \rangle |x\in X \rbrace \).

It follows that, \(\mathsf {A}=\mathsf {B}\) iff \(\mu _{\mathsf {A}}(x)=\mu _{\mathsf {B}}(x)\), \(\nu _{\mathsf {A}}(x)=\nu _{\mathsf {B}}(x)\, \forall x\in X\), and \(\mathsf {A}\subseteq \mathsf {B} \) iff \(\mu _{\mathsf {A}}(x)\le \mu _{\mathsf {B}}(x)\), \(\nu _{\mathsf {A}}(x)\ge \nu _{\mathsf {B}}(x)\) \(\forall x\in X\). We say \(\mathsf {A}\subset \mathsf {B}\) iff \(\mathsf {A}\subseteq \mathsf {B}\) and \(\mathsf {A}\ne \mathsf {B}\).

Remark 2.4

Suppose \(\mathsf {A}\), \(\mathsf {B}\) and \(\mathsf {C}\) are PFSs of X. By Definition 2.3, the following properties hold:

(i) \(\overline{\overline{\mathsf {A}}}=\mathsf {A}\)

(ii) \(\mathsf {A}\cap \mathsf {A}=\mathsf {A}\) and \(\mathsf {A}\cup \mathsf {A}=\mathsf {A}\)

(iii) \(\mathsf {A}\cap \mathsf {B}=\mathsf {B}\cap \mathsf {A}\) and \(\mathsf {A}\cup \mathsf {B}=\mathsf {B}\cup \mathsf {A}\)

(iv) \(\mathsf {A}\cap (\mathsf {B}\cap \mathsf {C})=(\mathsf {A}\cap \mathsf {B})\cap \mathsf {C}\) and \(\mathsf {A}\cup (\mathsf {B}\cup \mathsf {C})=(\mathsf {A}\cup \mathsf {B})\cup \mathsf {C}\)

(v) \(\mathsf {A}\cap (\mathsf {B}\cup \mathsf {C})= (\mathsf {A}\cap \mathsf {B})\cup (\mathsf {A}\cap \mathsf {C})\) and \(\mathsf {A}\cup (\mathsf {B}\cap \mathsf {C})= (\mathsf {A}\cup \mathsf {B})\cap (\mathsf {A}\cup \mathsf {C})\)

(vi) \(\overline{(\mathsf {A}\cap \mathsf {B})} =\overline{\mathsf {A}}\cup \overline{\mathsf {B}}\) and \(\overline{(\mathsf {A}\cup \mathsf {B})}=\overline{\mathsf {A}}\cap \overline{\mathsf {B}}\).
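The operations of Definition 2.3 and a few of the properties in Remark 2.4 can be checked numerically. The sketch below is only an illustration: it stores a PFS as a dictionary from elements to (membership, non-membership) pairs, which is a representation chosen here, and the three sets `A`, `B`, `C` are hypothetical values, not data from the chapter.

```python
from typing import Dict, Tuple

# A PFS is stored as: element -> (membership, non-membership).
PFS = Dict[str, Tuple[float, float]]


def complement(a: PFS) -> PFS:
    """Swap membership and non-membership grades (Definition 2.3(i))."""
    return {x: (nu, mu) for x, (mu, nu) in a.items()}


def union(a: PFS, b: PFS) -> PFS:
    """Max of memberships, min of non-memberships (Definition 2.3(ii))."""
    return {x: (max(a[x][0], b[x][0]), min(a[x][1], b[x][1])) for x in a}


def intersection(a: PFS, b: PFS) -> PFS:
    """Min of memberships, max of non-memberships (Definition 2.3(iii))."""
    return {x: (min(a[x][0], b[x][0]), max(a[x][1], b[x][1])) for x in a}


# Hypothetical PFSs on X = {a, b, c}; each pair satisfies mu^2 + nu^2 <= 1.
A = {"a": (0.6, 0.2), "b": (0.4, 0.6), "c": (0.5, 0.3)}
B = {"a": (0.8, 0.1), "b": (0.7, 0.3), "c": (0.4, 0.7)}
C = {"a": (0.3, 0.9), "b": (0.5, 0.5), "c": (0.9, 0.2)}

# Involution, property (i).
print(complement(complement(A)) == A)
# Distributivity, property (v).
print(intersection(A, union(B, C)) == union(intersection(A, B), intersection(A, C)))
# De Morgan's laws, property (vi).
print(complement(intersection(A, B)) == union(complement(A), complement(B)))
print(complement(union(A, B)) == intersection(complement(A), complement(B)))
```

All four checks print `True` because the operations only swap, take minima, or take maxima of the stored grades, so no rounding is involved.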

Definition 2.5

[12] A Pythagorean fuzzy pair (PFP) or Pythagorean fuzzy value (PFV) is characterized by the form \(\langle a,b\rangle \) such that \(a^2+b^2\le 1\) where \(a,b\in [0,1]\). PFPs are used for the assessment of objects for which the components (a and b) are interpreted as membership degree and non-membership degree or degree of validity and degree of non-validity, respectively.

3 Correlation Coefficients Between PFSs

Correlation coefficient in the Pythagorean fuzzy environment was pioneered by the work of Garg [25]. The concept of CCPFSs is very valuable in solving MCDM problems. What follows is the axiomatic definition of CCPFSs.

Definition 3.1

[16] Suppose \(\mathsf {A}\) and \(\mathsf {B}\) are PFSs of X. Then, the CCPFSs for \(\mathsf {A}\) and \(\mathsf {B}\) denoted by \(\mathcal {K}(\mathsf {A}, \mathsf {B})\) is a measuring function \(\mathcal {K}:PFS\times PFS\rightarrow [0,1]\) which satisfies the following conditions;

(i) \(\mathcal {K}(\mathsf {A}, \mathsf {B})\in [0,1]\),

(ii) \(\mathcal {K}(\mathsf {A}, \mathsf {B})=\mathcal {K}(\mathsf {B}, \mathsf {A})\),

(iii) \(\mathcal {K}(\mathsf {A}, \mathsf {B})=1\) if and only if \(\mathsf {A}=\mathsf {B}\).

Now, we recall the existing CCPFSs methods in [16, 25] as follows:

3.1 Some Existing/New CCPFSs Methods

Assume \(\mathsf {A}\) and \(\mathsf {B}\) are PFSs of \(X=\lbrace x_i\rbrace \) for \(i=1,\ldots , n\). Then, the CCPFSs for \(\mathsf {A}\) and \(\mathsf {B}\) as in [25] are as follows:

and

where

Ejegwa [16] generalized Eq. (3) as follows:

where

and

for \(k=1,\ldots , 4\).

In particular, for \(k=3\), we have

where

and

By modifying Eq. (10), we obtain the following new CCPFSs methods:

and

where \(C(\mathsf {A}, \mathsf {A})\), \(C(\mathsf {B}, \mathsf {B})\) and \(C(\mathsf {A}, \mathsf {B})\) are as defined in Eqs. (11) and (12). Certainly, \(\mathcal {K}_3(\mathsf {A}, \mathsf {B})\in [0,1]\), \(\mathcal {K}_4(\mathsf {A}, \mathsf {B})\in [0,1]\) and \(\mathcal {K}_5(\mathsf {A}, \mathsf {B})\in [0,1]\).

Proposition 3.2

The CCPFSs \(\mathcal {K}_4(\mathsf {A}, \mathsf {B})\) and \(\mathcal {K}_5(\mathsf {A}, \mathsf {B})\) are equal if and only if \(C(\mathsf {A}, \mathsf {A})=C(\mathsf {B}, \mathsf {B})\).

Proof

Straightforward. \(\square \)

Remark 3.3

If \(\mathcal {K}_4(\mathsf {A}, \mathsf {B})=\mathcal {K}_5(\mathsf {A}, \mathsf {B})\) and \(C(\mathsf {A}, \mathsf {A})\ne C(\mathsf {B}, \mathsf {B})\), then it must be as a result of approximation in the computational processes.

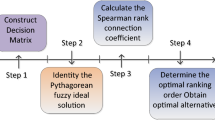

3.1.1 Flowchart for the New CCPFSs Methods

3.2 Numerical Illustrations for Computing CCPFSs

Here, we give examples of PFSs and apply the CCPFSs methods to find the interrelationship between the PFSs. Assume that \(\mathsf {A}\), \(\mathsf {B}\), and \(\mathsf {C}\) are PFSs of \(X=\lbrace a,b,c\rbrace \) such that

and

Now, we find the correlation coefficients between \((\mathsf {A}, \mathsf {C})\), and \((\mathsf {B}, \mathsf {C})\), respectively, using Eqs. (3), (4), (10), (13), and (14).

By using Eqs. (3) and (4), we obtain

Hence,

By using Eqs. (10), (13), and (14), we have

Hence,

3.2.1 Comparison of the New Methods of Computing CCPFSs with the Existing Methods

Table 1 contains the computational results for easy analysis.

From Table 1, we infer that (i) the CCPFSs methods via the maximum approach in [16, 25] cannot determine the interrelationship between two almost equal PFSs with respect to an unrelated PFS, and (ii) the new CCPFSs methods are very reliable and can determine the interrelationship between two almost equal PFSs with respect to an unrelated PFS. Again, the new CCPFSs methods have better performance indexes when compared to the ones in [16, 25]. From the computations, we conclude that \((\mathsf {B},\mathsf {C})\) are more related to each other than \((\mathsf {A},\mathsf {C})\) because

4 Some Existing/New WCCPFSs Methods

In many applicative areas, different elements of sets have different weights. In order to have a reliable interdependence index between PFSs, the impact of the weights must be taken into consideration. Suppose \(\mathsf {A}\) and \(\mathsf {B}\) are PFSs of \(X=\lbrace x_i\rbrace \) for \(i=1,\ldots ,n\) such that the weights of the elements of X are given by a set \(\alpha =\lbrace \alpha _1, \alpha _2,\ldots , \alpha _n\rbrace \) with \(\alpha _i\ge 0\) and \(\sum ^n_{i=1} \alpha _i=1\).
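The two conditions on the weights, \(\alpha _i\ge 0\) and \(\sum ^n_{i=1} \alpha _i=1\), are easy to check before any weighted computation. The helper below is a minimal sketch with a hypothetical name, `validate_weights`; it compares the sum against one with a small tolerance because decimal weights are not exactly representable in floating point. The two weight vectors in the usage lines are the ones used later in this chapter.

```python
import math
from typing import List, Sequence


def validate_weights(alpha: Sequence[float], n: int) -> List[float]:
    """Check that alpha has n non-negative entries summing to one."""
    if len(alpha) != n:
        raise ValueError(f"expected {n} weights, got {len(alpha)}")
    if any(w < 0 for w in alpha):
        raise ValueError("weights must be non-negative")
    if not math.isclose(sum(alpha), 1.0, abs_tol=1e-9):
        raise ValueError("weights must sum to one")
    return list(alpha)


# Weight vectors taken from the numerical examples of this chapter.
print(validate_weights([0.4, 0.32, 0.28], 3))
print(validate_weights([0.3, 0.25, 0.1, 0.25, 0.1], 5))
```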

4.1 Some Existing WCCPFSs Methods

We recall some WCCPFSs methods proposed by Garg [25] as follows:

and

where

, and

4.2 New Methods of Computing WCCPFSs

By modifying Eqs. (10), (13) and (14), we have the following new WCCPFSs for \(\mathsf {A}\) and \(\mathsf {B}\):

and

where

and

Proposition 4.1

The WCCPFSs \(\mathcal {\tilde{K}}_4(\mathsf {A}, \mathsf {B})\) and \(\mathcal {\tilde{K}}_5(\mathsf {A}, \mathsf {B})\) are equal if and only if \(C_\alpha (\mathsf {A}, \mathsf {A})=C_\alpha (\mathsf {B}, \mathsf {B})\).

Proof

Straightforward. \(\square \)

Proposition 4.2

The WCCPFSs \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\), \(\mathcal {\tilde{K}}_4(\mathsf {A}, \mathsf {B})\) and \(\mathcal {\tilde{K}}_5(\mathsf {A}, \mathsf {B})\) are CCPFSs.

Proof

We are to prove that \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\), \(\mathcal {\tilde{K}}_4(\mathsf {A}, \mathsf {B})\) and \(\mathcal {\tilde{K}}_5(\mathsf {A}, \mathsf {B})\) are CCPFSs. First, we show that \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\) is a CCPFS. Thus, we verify that \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\) satisfies the conditions in Definition 3.1.

Clearly, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\in [0,1]\) implies \(0\le \mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\le 1\). Certainly, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\ge 0\) since \(C_{\alpha }(\mathsf {A}, \mathsf {B})\ge 0\) and \([C_{\alpha }(\mathsf {A}, \mathsf {A}),C_{\alpha }(\mathsf {B}, \mathsf {B})]\ge 0\). Now, we prove that \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\le 1\). Assume we have the following:

But, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})=\dfrac{C_\alpha (\mathsf {A}, \mathsf {B})}{\max [C_\alpha (\mathsf {A}, \mathsf {A}),C_\alpha (\mathsf {B}, \mathsf {B})]}\). By the Cauchy–Schwarz inequality, we get

Thus,

So \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\le 1\). Hence, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\in [0,1]\).

Certainly, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})=\mathcal {\tilde{K}}_3(\mathsf {B}, \mathsf {A})\), so we omit details. Also, we show that \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})=1\) \(\Leftrightarrow \) \(\mathsf {A}=\mathsf {B}\). Suppose \(\mathsf {A}=\mathsf {B}\), then we obtain

The converse is straightforward. Therefore, \(\mathcal {\tilde{K}}_3(\mathsf {A}, \mathsf {B})\) is a CCPFS. The proofs for \(\mathcal {\tilde{K}}_4(\mathsf {A}, \mathsf {B})\) and \(\mathcal {\tilde{K}}_5(\mathsf {A}, \mathsf {B})\) are similar.

\(\square \)
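The three conditions of Definition 3.1 can also be checked numerically for \(\mathcal {\tilde{K}}_3\). The sketch below uses the quotient \(C_\alpha (\mathsf {A}, \mathsf {B})/\max [C_\alpha (\mathsf {A}, \mathsf {A}),C_\alpha (\mathsf {B}, \mathsf {B})]\) stated in the proof. The form of \(C_\alpha \) itself is an assumption made here for illustration: a weighted sum of products of squared membership, non-membership and hesitation grades, in the style of [25]. The chapter's own triparametric definition may use different exponents. The sets `A` and `B` are hypothetical, and the weights are those of Sect. 4.3.

```python
import math
from typing import Sequence, Tuple

Grade = Tuple[float, float]  # (membership, non-membership) of one element


def squared_triple(g: Grade) -> Tuple[float, float, float]:
    """Return (mu^2, nu^2, pi^2), using pi^2 = 1 - mu^2 - nu^2."""
    mu, nu = g
    return mu * mu, nu * nu, max(0.0, 1.0 - mu * mu - nu * nu)


def c_alpha(a: Sequence[Grade], b: Sequence[Grade], alpha: Sequence[float]) -> float:
    """ASSUMED weighted correlation:
    sum_i alpha_i * (mu_A^2 mu_B^2 + nu_A^2 nu_B^2 + pi_A^2 pi_B^2)."""
    total = 0.0
    for ga, gb, w in zip(a, b, alpha):
        total += w * sum(p * q for p, q in zip(squared_triple(ga), squared_triple(gb)))
    return total


def k3_tilde(a: Sequence[Grade], b: Sequence[Grade], alpha: Sequence[float]) -> float:
    """Quotient from the proof: C_alpha(A, B) / max(C_alpha(A, A), C_alpha(B, B))."""
    return c_alpha(a, b, alpha) / max(c_alpha(a, a, alpha), c_alpha(b, b, alpha))


alpha = [0.4, 0.32, 0.28]                  # weights used in Sect. 4.3
A = [(0.6, 0.2), (0.4, 0.6), (0.5, 0.3)]   # hypothetical PFS on X = {a, b, c}
B = [(0.8, 0.1), (0.7, 0.3), (0.4, 0.7)]   # hypothetical PFS on X = {a, b, c}

k = k3_tilde(A, B, alpha)
print(0.0 <= k <= 1.0)                            # condition (i)
print(math.isclose(k, k3_tilde(B, A, alpha)))     # condition (ii)
print(math.isclose(k3_tilde(A, A, alpha), 1.0))   # condition (iii), A = B
```

Under the assumed form, the denominator cannot vanish: the three squared grades of each element sum to one, so every self-correlation term is at least \(\frac{1}{3}\) and, with weights summing to one, \(C_\alpha (\mathsf {A}, \mathsf {A})\ge \frac{1}{3}\).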

4.2.1 Flowchart for the New WCCPFSs Methods

4.3 Numerical Verifications of the WCCPFSs Methods

By using the information in Subsection 3.2, and putting into consideration the effect of the weights of the elements of \(X=\lbrace a,b,c\rbrace \), we compute the interdependence indexes of \(\mathsf {A}\) and \(\mathsf {B}\). Assume \(\alpha =\lbrace 0.4, 0.32, 0.28\rbrace \), and using Eqs. (15) and (16), we obtain

Hence,

By using Eqs. (19), (20) and (21), we have

Hence,

4.3.1 Comparison of the New Methods of Computing WCCPFSs with the Existing Methods

Table 2 contains the computational results for easy analysis.

By comparing Tables 1 and 2, it is not superfluous to say that WCCPFSs give a better measure of interrelationship. This bespeaks the impact of weights on measuring the correlation coefficient. From Table 2, we surmise that (i) the WCCPFSs techniques via the maximum method in [25] and \(\mathcal {\tilde{K}}_3\) cannot determine the interrelationship between two almost equal PFSs with respect to an unrelated PFS, and (ii) the new WCCPFSs techniques are more reasonable and accurate and can determine the interrelationship between two almost equal PFSs with respect to an unrelated PFS. Again, the new WCCPFSs techniques have better performance indexes in contrast to the ones in [25]. From the computations, we conclude that \((\mathsf {B},\mathsf {C})\) are more related to each other than \((\mathsf {A},\mathsf {C})\).

5 Determination of Pattern Recognition and Medical Diagnostic Problem via WCCPFSs

In this section, we apply the WCCPFSs methods discussed so far to problems of pattern recognition and medical diagnosis to ascertain the more efficient approach and agreement of decision via the WCCPFSs techniques.

5.1 Applicative Example in Pattern Recognition

Pattern recognition is the process of identifying patterns by using machine learning procedures. It is closely tied to artificial intelligence and machine learning, and it is important because of its application potential in neural networks, software engineering, computer vision, etc. Assume there are three patterns \(\mathsf {C}_i\), represented in Pythagorean fuzzy values in \(X=\lbrace x_i\rbrace \), for \(i=1,\ldots ,3\), and \(\alpha =\lbrace 0.4,0.3,0.3\rbrace \). Suppose also that there is an unknown pattern \(\mathsf {P}\) represented in Pythagorean fuzzy values in \(X=\lbrace x_i\rbrace \). The Pythagorean fuzzy representations of these patterns are in Table 3.

To enable us to classify \(\mathsf {P}\) into any of \(\mathsf {C}_i\),\(i=1,2,3\), we deploy the WCCPFSs in [25] and the proposed WCCPFSs as follows:

Using Eqs. (15) and (16), we obtain

Hence,

Using Eqs. (19), (20) and (21), we have

Hence,

Table 4 presents the results for glance analysis.

From Table 4, \(\mathsf {P}\) is suitable to be classified with \(\mathsf {C}_3\) because \(\mathcal {\tilde{K}}_i(\mathsf {C}_3,\mathsf {P})>\mathcal {\tilde{K}}_i(\mathsf {C}_2,\mathsf {P})>\mathcal {\tilde{K}}_i(\mathsf {C}_1,\mathsf {P})\) \(\forall \) \(i=1,\ldots ,5\).
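The decision rule used here is that every method must produce the same ordering of the known patterns, and the unknown pattern is assigned to the one ranked first. A minimal sketch of that agreement check follows. The function names are illustrative, and the scores in `table` are made-up placeholders, not the values of Table 4.

```python
from typing import Dict, List, Optional


def ranking(scores: Dict[str, float]) -> List[str]:
    """Pattern labels sorted from highest to lowest correlation."""
    return sorted(scores, key=scores.get, reverse=True)


def unanimous_ranking(table: Dict[str, Dict[str, float]]) -> Optional[List[str]]:
    """Return the common ranking if every method agrees, otherwise None."""
    rankings = [ranking(scores) for scores in table.values()]
    first = rankings[0]
    return first if all(r == first for r in rankings) else None


# Placeholder scores (NOT from Table 4): method -> {pattern: correlation with P}.
table = {
    "method_1": {"C1": 0.61, "C2": 0.74, "C3": 0.92},
    "method_2": {"C1": 0.55, "C2": 0.70, "C3": 0.88},
}

order = unanimous_ranking(table)
print(order)      # ['C3', 'C2', 'C1']
print(order[0])   # C3, the pattern P would be assigned to
```

Returning `None` on disagreement keeps a split decision visible instead of silently picking one method's answer.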

5.2 Applicative Example in Medical Diagnosis

Medical diagnosis is a delicate exercise because failure to make the right decision may lead to the death of the patient. Diagnosis of diseases is challenging due to the fuzziness embedded in the process. Here, we present a scenario of a mathematical approach to diagnosing a patient's medical status via WCCPFSs methods, where the symptoms or clinical manifestations of the diseases are represented in Pythagorean fuzzy values by using hypothetical cases.

Suppose we have a set of diseases \(\mathsf {D}=\lbrace \mathsf {D}_1, \mathsf {D}_2, \mathsf {D}_3, \mathsf {D}_4, \mathsf {D}_5\rbrace \) represented in Pythagorean fuzzy values, where \(\mathsf {D}_1=\) viral fever, \(\mathsf {D}_2=\) malaria, \(\mathsf {D}_3=\) typhoid fever, \(\mathsf {D}_4=\) peptic ulcer, \(\mathsf {D}_5=\) chest problem, and a set of symptoms

$$\begin{aligned} \mathsf {S}=\lbrace \mathsf {s}_1, \mathsf {s}_2, \mathsf {s}_3, \mathsf {s}_4, \mathsf {s}_5\rbrace \end{aligned}$$

for \(\mathsf {s}_1=\) temperature, \(\mathsf {s}_2=\) headache, \(\mathsf {s}_3=\) stomach pain, \(\mathsf {s}_4=\) cough, \(\mathsf {s}_5=\) chest pain, which are the clinical manifestations of \(\mathsf {D}_i\), \(i=1,\ldots ,5\). From the knowledge of the clinical manifestations, the weights of the symptoms are taken as \(\alpha =\lbrace 0.3,0.25,0.1,0.25,0.1\rbrace \).

Assume a patient \(\mathsf {P}\) with manifest symptoms \(\mathsf {S}\) is also captured in Pythagorean fuzzy values. Table 5 contains the Pythagorean fuzzy information of \(\mathsf {D}_i\), \(i=1,\ldots ,5\) and \(\mathsf {P}\) with respect to \(\mathsf {S}\).

Now, we find which of the diseases \(\mathsf {D}_i\) has the greatest interrelationship with the patient \(\mathsf {P}\) with respect to the clinical manifestations \(\mathsf {S}\) by deploying Eqs. (15), (16), (19), (20), and (21).

By using Eqs. (15) and (16), we have

Hence,

Using Eqs. (19), (20) and (21), we obtain

Hence,

Table 6 presents the results for glance analysis.

From Table 6, it is inferred that the patient is suffering from malaria since

for \(i=1,\ldots ,5\).
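The diagnostic procedure of this subsection amounts to scoring each disease profile against the patient and selecting the largest weighted correlation. The sketch below shows that pipeline for a single measure, the quotient form of \(\mathcal {\tilde{K}}_3\) from the proof of Proposition 4.2, with the symptom weights stated above. Three things are assumptions made only for illustration: the form of `c_alpha` (squared grades, in the style of [25]), the function names, and all grade values, which are hypothetical and are not the entries of Table 5.

```python
from typing import Dict, Sequence, Tuple

Grade = Tuple[float, float]  # (membership, non-membership) for one symptom


def squared_triple(g: Grade) -> Tuple[float, float, float]:
    """Return (mu^2, nu^2, pi^2), using pi^2 = 1 - mu^2 - nu^2."""
    mu, nu = g
    return mu * mu, nu * nu, max(0.0, 1.0 - mu * mu - nu * nu)


def c_alpha(a: Sequence[Grade], b: Sequence[Grade], alpha: Sequence[float]) -> float:
    # ASSUMPTION: weighted sum of products of squared grades; the chapter's
    # own definition of C_alpha may differ.
    return sum(
        w * sum(p * q for p, q in zip(squared_triple(ga), squared_triple(gb)))
        for ga, gb, w in zip(a, b, alpha)
    )


def k3_tilde(a: Sequence[Grade], b: Sequence[Grade], alpha: Sequence[float]) -> float:
    """C_alpha(A, B) / max(C_alpha(A, A), C_alpha(B, B))."""
    return c_alpha(a, b, alpha) / max(c_alpha(a, a, alpha), c_alpha(b, b, alpha))


def diagnose(diseases: Dict[str, Sequence[Grade]], patient: Sequence[Grade],
             alpha: Sequence[float]) -> Tuple[str, Dict[str, float]]:
    """Return the disease with the largest correlation, plus all scores."""
    scores = {name: k3_tilde(profile, patient, alpha) for name, profile in diseases.items()}
    return max(scores, key=scores.get), scores


alpha = [0.3, 0.25, 0.1, 0.25, 0.1]  # symptom weights from the text
# Hypothetical profiles over (temperature, headache, stomach pain, cough, chest pain).
diseases = {
    "disease_X": [(0.8, 0.1), (0.6, 0.3), (0.2, 0.8), (0.6, 0.2), (0.1, 0.9)],
    "disease_Y": [(0.3, 0.6), (0.2, 0.7), (0.8, 0.1), (0.2, 0.8), (0.3, 0.7)],
}
patient = [(0.7, 0.2), (0.6, 0.3), (0.3, 0.7), (0.5, 0.4), (0.2, 0.8)]

best, scores = diagnose(diseases, patient, alpha)
print(best)  # disease_X
```

Repeating the same loop with each of the other weighted measures, once their formulas are available, and comparing the selected labels would mirror the agreement check described in the text.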

6 Conclusion

In this chapter, we have studied some techniques of calculating CCPFSs and WCCPFSs, respectively. It is found that the approach of WCCPFSs is more reliable than CCPFSs. By juxtaposing the existing methods of computing WCCPFSs and the novel ones, it is proven that the novel methods of calculating WCCPFSs are more accurate and efficient. Some MCDM problems were considered via the existing and the novel WCCPFSs methods to demonstrate applicability. The novel methods of computing WCCPFSs could be applied to more MCDM problems via object-oriented approach in cases of larger population. Extending the concept of weights on elements of PFSs to other existing correlation coefficients in Pythagorean fuzzy domain [16, 50] could be of great interest in other different applicative areas through clustering algorithm.

References

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Set Syst 20:87–96

Atanassov KT (1989) Geometrical interpretation of the elements of the intuitionistic fuzzy objects. Preprint IM-MFAIS-1-89, Sofia

Atanassov KT (1999) Intuitionistic fuzzy sets: theory and applications. Physica-Verlag, Heidelberg

Boran FE, Akay D (2014) A biparametric similarity measure on intuitionistic fuzzy sets with applications to pattern recognition. Inform Sci 255(10):45–57

De SK, Biswas R, Roy AR (2001) An application of intuitionistic fuzzy sets in medical diagnosis. Fuzzy Set Syst 117(2):209–213

Du YQ, Hou F, Zafar W, Yu Q, Zhai Y (2017) A novel method for multiattribute decision making with interval-valued Pythagorean fuzzy linguistic information. Int J Intell Syst 32(10):1085–1112

Ejegwa PA (2015) Intuitionistic fuzzy sets approach in appointment of positions in an organization via max-min-max rule. Global J Sci Frontier Res: F Math Decision Sci 15(6):1–6

Ejegwa PA (2018) Distance and similarity measures for Pythagorean fuzzy sets. Granul Comput 5(2):225–238

Ejegwa PA (2019) Improved composite relation for Pythagorean fuzzy sets and its application to medical diagnosis. Granul Comput 5(2):277–286

Ejegwa PA (2019) Pythagorean fuzzy set and its application in career placements based on academic performance using max-min-max composition. Complex Intell Syst 5:165–175

Ejegwa PA (2019) Modified Zhang and Xu’s distance measure of Pythagorean fuzzy sets and its application to pattern recognition problems. Neural Comput Appl 32(14):10199–10208

Ejegwa PA (2019) Personnel Appointments: a Pythagorean fuzzy sets approach using similarity measure. J Inform Comput Sci 14(2):94–102

Ejegwa PA (2019) Modal operators on Pythagorean fuzzy sets and some of their properties. J Fuzzy Math 27(4):939–956

Ejegwa PA (2020) Modified and generalized correlation coefficient between intuitionistic fuzzy sets with applications. Note IFS 26(1):8–22

Ejegwa PA (2020) New similarity measures for Pythagorean fuzzy sets with applications. Int J Fuzzy Comput Modelling 3(1):75–94

Ejegwa PA (2020) Generalized triparametric correlation coefficient for Pythagorean fuzzy sets with application to MCDM problems. Granul Comput. https://doi.org/10.1007/s41066-020-00215-5

Ejegwa PA, Adamu IM (2019) Distances between intuitionistic fuzzy sets of second type with application to diagnostic medicine. Note IFS 25(3):53–70

Ejegwa PA, Akubo AJ, Joshua OM (2014) Intuitionistic fuzzy set and its application in career determination via normalized Euclidean distance method. Europ Scient J 10(15):529–536

Ejegwa PA, Akubo AJ, Joshua OM (2014) Intuitionistic fuzzy sets in career determination. J Inform Comput Sci 9(4):285–288

Ejegwa PA, Awolola JA (2019) Novel distance measures for Pythagorean fuzzy sets with applications to pattern recognition problems. Granul Comput. https://doi.org/10.1007/s41066-019-00176-4

Ejegwa PA, Modom ES (2015) Diagnosis of viral hepatitis using new distance measure of intuitionistic fuzzy sets. Intern J Fuzzy Math Arch 8(1):1–7

Ejegwa PA, Onasanya BO (2019) Improved intuitionistic fuzzy composite relation and its application to medical diagnostic process. Note IFS 25(1):43–58

Ejegwa PA, Onyeke IC (2018) An object oriented approach to the application of intuitionistic fuzzy sets in competency based test evaluation. Ann Commun Math 1(1):38–47

Ejegwa PA, Tyoakaa GU, Ayenge AM (2016) Application of intuitionistic fuzzy sets in electoral system. Intern J Fuzzy Math Arch 10(1):35–41

Garg H (2016) A novel correlation coefficients between Pythagorean fuzzy sets and its applications to decision making processes. Int J Intell Syst 31(12):1234–1252

Garg H (2016) A new generalized Pythagorean fuzzy information aggregation using Einstein operations and its application to decision making. Int J Intell Syst 31(9):886–920

Garg H (2017) A new improved score function of an interval-valued Pythagorean fuzzy set based TOPSIS method. Int J Uncertainty Quantif 7(5):463–474

Garg H (2017) A new improved score function of an interval-valued Pythagorean fuzzy set based TOPSIS method. Int J Uncertainty Quantification 7(5):463–474

Garg H (2018) A linear programming method based on an improved score function for interval-valued Pythagorean fuzzy numbers and its application to decision-making. Int J Uncert Fuzz Knowl Based Syst 29(1):67–80

Garg H (2018) Novel correlation coefficients under the intuitionistic multiplicative environment and their applications to decision-making process. J Indust Manag Optim 14(4):1501–1519

Garg H (2018) Generalized Pythagorean fuzzy geometric interactive aggregation operators using Einstein operations and their application to decision making. J Exp Theoret Artif Intell 30(6):763–794

Garg H (2018) Some methods for strategic decision-making problems with immediate probabilities in Pythagorean fuzzy environment. Int J Intell Syst 33(4):687–712

Garg H (2018) A new exponential operational laws and their aggregation operators of interval-valued Pythagorean fuzzy information. Int J Intell Syst 33(3):653–683

Garg H (2018) Linguistic Pythagorean fuzzy sets and its applications in multiattribute decision making process. Int J Intell Syst 33(6):1234–1263

Garg H (2019) Hesitant Pythagorean fuzzy Maclaurin symmetric mean operators and its applications to multiattribute decision making process. Int J Intell Syst 34(4):601–626

Garg H (2020) Linguistic interval-valued Pythagorean fuzzy sets and their application to multiple attribute group decision-making process. Cognitive Comput. https://doi.org/10.1007/s12559-020-09750-4

Garg H, Arora R (2020) TOPSIS method based on correlation coefficient for solving decision-making problems with intuitionistic fuzzy soft set information. AIMS Math 5(4):2944–2966

Garg H, Kumar K (2018) A novel correlation coefficient of intuitionistic fuzzy sets based on the connection number of set pair analysis and its application. Scientia Iranica 25(4):2373–2388

Garg H, Kumar K (2018) An advance study on the similarity measures of intuitionistic fuzzy sets based on the set pair analysis theory and their application in decision making. Soft Comput 22(15):4959–4970

Garg H, Kumar K (2018) Distance measures for connection number sets based on set pair analysis and its applications to decision-making process. Appl Intell 48(10):3346–3359

Garg H, Rani D (2019) A robust correlation coefficient measure of complex intuitionistic fuzzy sets and their applications in decision-making. Applied Intell 49:496–512

Gerstenkorn T, Manko J (1991) Correlation of intuitionistic fuzzy sets. Fuzzy Set Syst 44(1):39–43

Hatzimichailidis AG, Papakostas AG, Kaburlasos VG (2012) A novel distance measure of intuitionistic fuzzy sets and its application to pattern recognition problems. Int J Intell Syst 27:396–409

Hung WL (2001) Using statistical viewpoint in developing correlation of intuitionistic fuzzy sets. Int J Uncert Fuzz Knowl Based Syst 9(4):509–516

Hung WL, Wu JW (2002) Correlation of intuitionistic fuzzy sets by centroid method. Inform Sci 144(1):219–225

Liu P, Chen SM (2017) Group decision making based on Heronian aggregation operators of intuitionistic fuzzy numbers. IEEE Trans Cybern 47(9):2514–2530

Liu B, Shen Y, Mu L, Chen X, Chen L (2016) A new correlation measure of the intuitionistic fuzzy sets. J Intell Fuzzy Syst 30(2):1019–1028

Mitchell HB (2004) A correlation coefficient for intuitionistic fuzzy sets. Int J Intell Syst 19(5):483–490

Park JH, Lim KM, Park JS, Kwun YC (2009) Correlation coefficient between intuitionistic fuzzy sets. In: Cao B, Li TF, Zhang CY (Eds.): Fuzzy information and engineering Volume 2 (2009), AISC 62, pp 601–610, Springer, Berlin, Heidelberg

Singh S, Ganie AH (2020) On some correlation coefficients in Pythagorean fuzzy environment with applications. Int J Intell Syst. https://doi.org/10.1002/int.22222

Szmidt E, Kacprzyk J (2001) Intuitionistic fuzzy sets in some medical applications. Note IFS 7(4):58–64

Szmidt E, Kacprzyk J (2004) Medical diagnostic reasoning using a similarity measure for intuitionistic fuzzy sets. Note IFS 10(4):61–69

Szmidt E, Kacprzyk J (2010) Correlation of intuitionistic fuzzy sets. Lect Notes Comput Sci (Springer) 6178:169–177

Thao NX (2018) A new correlation coefficient of the intuitionistic fuzzy sets and its application. J Intell Fuzzy Syst 35(2):1959–1968

Thao NX (2019) A new correlation coefficient of the Pythagorean fuzzy sets and its applications. Soft Comput. https://doi.org/10.1007/s00500-019-04457-7

Thao NX, Ali M, Smarandache F (2019) An intuitionistic fuzzy clustering algorithm based on a new correlation coefficient with application in medical diagnosis. J Intell Fuzzy Syst 36(1):189–198

Wang W, Xin X (2005) Distance measure between intuitionistic fuzzy sets. Pattern Recog Lett 26:2063–2069

Yager RR (2013) Pythagorean membership grades in multicriteria decision making. Technical Report MII-3301 Machine Intelligence Institute, Iona College, New Rochelle, NY

Yager RR (2014) Pythagorean membership grades in multicriteria decision making. IEEE Trans Fuzzy Syst 22(4):958–965

Yager RR (2016) Properties and applications of Pythagorean fuzzy sets. Springer, Berlin

Yager RR, Abbasov AM (2016) Pythagorean membership grades, complex numbers and decision making. Int J Intell Syst 28(5):436–452

Xu Z (2006) On correlation measures of intuitionistic fuzzy sets. Lect Notes Comput Sci (Springer) 4224:16–24

Xu S, Chen J, Wu JJ (2008) Cluster algorithm for intuitionistic fuzzy sets. Inform Sci 178(2008):3775–3790

Zadeh LA (1965) Fuzzy sets. Inform Control 8:338–353

Zhang X (2016) A novel approach based on similarity measure for Pythagorean fuzzy multiple criteria group decision making. Int J Intell Syst 31:593–611

Zhang XL, Xu ZS (2014) Extension of TOPSIS to multiple criteria decision making with Pythagorean fuzzy sets. Int J Intell Syst 29(12):1061–1078

Zeng W, Li D, Yin Q (2018) Distance and similarity measures of Pythagorean fuzzy sets and their applications to multiple criteria group decision making. Int J Intell Syst. https://doi.org/10.1002/int.22027


© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Ejegwa, P.A., Jana, C. (2021). Some New Weighted Correlation Coefficients Between Pythagorean Fuzzy Sets and Their Applications. In: Garg, H. (eds) Pythagorean Fuzzy Sets. Springer, Singapore. https://doi.org/10.1007/978-981-16-1989-2_2


DOI: https://doi.org/10.1007/978-981-16-1989-2_2


Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-1988-5

Online ISBN: 978-981-16-1989-2

eBook Packages: Mathematics and Statistics (R0)