Abstract

Iterative solution methods for a class of finite-dimensional constrained saddle point problems are developed. These problems arise when variational inequalities and minimization problems are solved by means of mixed finite element formulations involving primal and dual variables. In this paper, we suggest several new approaches to the construction of saddle point problems and present convergence results for the iterative methods. Numerical results confirm the theoretical analysis.


Keywords

- Variational inequality

- Optimal control problem

- Finite element method

- Constrained saddle point problem

- Iteration methods

1 Introduction

We construct and investigate iteration methods for the finite dimensional constrained saddle point problem

where \(f\in\mathbb{R}^{N_{x}}\) and \(g\in\mathbb{R}^{N_{\lambda}}\) are given vectors, and the following assumptions hold:

- (A1) The operator \(A:\mathbb {R}^{N_{x}}\rightarrow \mathbb {R}^{N_{x}}\) is continuous, strictly monotone and coercive;

- (A2) \(C\in \mathbb {R}^{N_{\lambda}\times N_{x}}\), \(N_{\lambda}\le N_{x}\), is a full-rank matrix: \(\operatorname {rank}C = N_{\lambda}\);

- (A3) \(P=\partial\varPhi\), \(Q=\partial\varPsi\), where \(\varPhi: \mathbb {R}^{N_{x}} \rightarrow\bar{\mathbb {R}}\) and \(\varPsi: \mathbb {R}^{N_{\lambda}} \rightarrow \bar{\mathbb {R}}\) are proper, convex and lower semi-continuous functions.

Various particular cases of the problem (2.1) arise when grid approximations (finite difference, finite element, etc.) are used to approximate variational inequalities or optimal control problems. Specifically, introducing dual variables into grid approximations of variational inequalities with constraints on the gradient of the solution leads to (2.1) with Q=0. Approximations of control problems with the control function in the right-hand side of a linear differential equation or in the boundary conditions give rise to the saddle point problem (2.1) with Q=0 and linear A. Finally, mixed and hybrid finite element schemes for second-order variational inequalities with pointwise constraints on the solution lead to (2.1) with P=0.

Solution methods for large-scale unconstrained saddle point problems have been thoroughly investigated. The state of the art for this problem can be found in the survey paper [1] and in the book [6]. Constrained saddle point problems arising from the Lagrangian approach to solving variational inequalities in mechanics and physics are considered in [8–10] (see also the bibliography therein); in particular, these books investigate the convergence of Uzawa-type, Arrow–Hurwicz-type, and operator-splitting iterative methods.

The development of efficient numerical methods for state-constrained optimal control problems poses severe numerical challenges, and the construction of effective iterative solution methods for them is a topical problem. The achievements in this field during the past two decades are reported in the book [5] and the articles [2–4, 11–15, 21]. The augmented Lagrangian method as well as regularization and penalty methods have been investigated for particular classes of state-constrained optimal control problems. Adjustment schemes have been constructed for the regularization parameter of a Moreau–Yosida-based regularization and for the relaxation parameter of interior point approaches to the numerical solution of pointwise state-constrained elliptic optimal control problems. Lavrentiev regularization has been applied to transform the state constraints into mixed control-state constraints in the linear-quadratic elliptic control problem with pointwise constraints on the state. Interior point methods and the primal-dual active set strategy have been applied to the transformed problem.

In this article, we prove the convergence of iterative solution methods for the saddle point problem (2.1). Sufficient conditions for convergence are stated in the form of matrix inequalities; they guide the construction of appropriate preconditioners and the choice of the iteration parameter. Applications of the general convergence results to sample variational inequalities and optimal control problems, as well as several numerical results, are included. The results of this article are based on the previous papers [16–19] by the authors.

2 Iterative Methods for the Saddle-Point Problem

2.1 Existence of the Solutions

Consider the problem (2.1) and suppose that it has a nonempty set of solutions \(X=\{(x,\lambda)\}\). Below we present existence results for the cases P=0 and Q=0, which are of most interest for the applications included in this article. Note that the assumptions (A1)–(A3) ensure the uniqueness of the component x.

Lemma 2.1

Let the assumptions (A1)–(A3) be fulfilled and P=0. Let, in addition, the operator A be uniformly monotone, i.e.,

and Lipschitz-continuous

with a symmetric and positive definite matrix \(A_{0}\in\mathbb{R}^{N_{x}\times N_{x}}\). Then, the problem (2.1) has a unique solution (x,λ).

Lemma 2.2

([17])

Let the assumptions (A1)–(A3) be fulfilled, Q=0, and

Then, the problem (2.1) has a nonempty set of solutions X={(x,λ)} with a uniquely defined component x.

2.2 Iteration Methods

We consider two iteration methods for solving (2.1): a preconditioned Uzawa-type method

and a preconditioned Arrow–Hurwicz-type method

The preconditioners \(B_x\) and \(B_{\lambda}\) are assumed to be symmetric and positive definite matrices, and τ>0 is an iteration parameter.
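As a concrete illustration, the following Python sketch runs the Uzawa-type idea on a two-variable toy problem. Because the displays (2.1) and (2.4) are not reproduced here, it assumes that (2.1) has the monotone form \(Ax-C^{T}\lambda+P(x)\ni f\), \(Cx=g\) (i.e. Q=0), and that one step of (2.4) solves \(Ax^{k+1}+P(x^{k+1})\ni f+C^{T}\lambda^{k}\) exactly and then sets \(\lambda^{k+1}=\lambda^{k}+\tau B_{\lambda}^{-1}(g-Cx^{k+1})\). With A and \(B_{\lambda}\) diagonal and P the subdifferential of the indicator of a box, the x-step reduces to a componentwise projection. The function name `uzawa_box` and the data are hypothetical.

```python
def clip(v, lo, hi):
    """Project the scalar v onto the interval [lo, hi]."""
    return min(max(v, lo), hi)


def matvec(M, v):
    """Dense product M v, with M stored as a list of rows."""
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]


def matTvec(M, w):
    """Dense product M^T w, with M stored as a list of rows."""
    out = [0.0] * len(M[0])
    for row, wi in zip(M, w):
        for j, m in enumerate(row):
            out[j] += m * wi
    return out


def uzawa_box(a, f, C, g, lo, hi, tau, b_lam, iters=200):
    """Uzawa-type iteration (hypothetical sketch) for
        A x - C^T lam + dI_box(x) ∋ f,   C x = g,
    with A = diag(a) and B_lambda = diag(b_lam)."""
    x = [0.0] * len(a)
    lam = [0.0] * len(g)
    for _ in range(iters):
        # x-step: A x + dI_box(x) ∋ f + C^T lam is solved exactly;
        # A is diagonal, so this is a componentwise projection onto the box
        rhs = [fi + ci for fi, ci in zip(f, matTvec(C, lam))]
        x = [clip(r / ai, l, h) for r, ai, l, h in zip(rhs, a, lo, hi)]
        # lambda-step: preconditioned correction with the residual g - C x
        res = [gi - ci for gi, ci in zip(g, matvec(C, x))]
        lam = [li + tau * ri / bi for li, ri, bi in zip(lam, res, b_lam)]
    return x, lam


# Toy data: A = I, one constraint x_1 + x_2 = 1, box 0 <= x_i <= 0.8.
x, lam = uzawa_box(a=[1.0, 1.0], f=[1.0, 0.0], C=[[1.0, 1.0]], g=[1.0],
                   lo=[0.0, 0.0], hi=[0.8, 0.8], tau=0.5, b_lam=[1.0])
print(x, lam)  # close to [0.8, 0.2] and [0.2]
```

Here \(A_0=A=I\), so the linear-case condition \(B_{\lambda}>\frac{\tau}{2}CA_0^{-1}C^{T}\) reduces to \(1>\tau\); with τ=0.5 the multiplier error is halved at every step for these data.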

The following theorem gives sufficient conditions for the convergence of the iterative method (2.4).

Theorem 2.1

([17])

Let the operator A be uniformly monotone (2.2). If

then the iterations of the method (2.4) converge to a solution of (2.1) starting from any initial guess \(\lambda^{0}\).

Note 1

Since the component x of the exact solution (x,λ), as well as the components \(x^{k}\) of the iterates, belong to \(D(P)\subset \operatorname {dom}\varPhi\), it suffices for A to be uniformly monotone only on \(\operatorname {dom}\varPhi\).

Note 2

- (a) In [6], it is proved that the positive eigenvalues μ of the two generalized eigenvalue problems

$$CA_0^{-1}C^Tv=\mu B_{\lambda}v \quad \mbox{and} \quad C^T B_{\lambda}^{-1} Cw=\mu A_0w $$

with symmetric and positive definite matrices \(A_0\) and \(B_{\lambda}\) coincide. Owing to this fact, (2.6) is equivalent to the inequality

$$ A_0>\frac{\tau}{2\alpha} C^T B_{\lambda}^{-1} C. \tag{2.7}$$

- (b) The inequality

$$(Ax-Ay, x-y)>\frac{\tau}{2} \bigl(C^TB_{\lambda}^{-1}C(x-y), x-y\bigr) \quad \forall x\neq y $$

- (c) If A is linear, then we can take \(A_0=0.5(A+A^T)\), and the inequalities (2.6) and (2.7) become, respectively (cf. [18]):

$$B_{\lambda}>\frac{\tau}{2} CA_0^{-1}C^T \quad\mbox{and} \quad A_0>\frac{\tau}{2} C^T B_{\lambda}^{-1} C. $$

- (d)

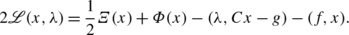

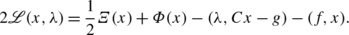

In the case of a potential operator A, i.e. \(A=\nabla\varXi\), where \(\varXi\) is a differentiable convex function, the method (2.4) is just the preconditioned Uzawa method applied to finding a saddle point of the Lagrangian
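Note 2(a) and (c) suggest a practical recipe for choosing τ: compute the largest eigenvalue \(\mu_{\max}\) of either generalized eigenvalue problem and keep τ below the resulting bound. The sketch below assumes that (2.6) reads \(B_{\lambda}>\frac{\tau}{2\alpha}CA_0^{-1}C^{T}\) (the form implied by its equivalence with (2.7)), so that it holds exactly when \(\tau<2\alpha/\mu_{\max}\). To stay dependency-free it uses plain power iteration with diagonal \(A_0\) and \(B_{\lambda}\), and it also checks numerically that both problems of Note 2(a) have the same largest eigenvalue. The data and function names are hypothetical.

```python
def power_max_eig(apply, n, iters=500):
    """Dominant eigenvalue of a linear map (given as a function) by power iteration."""
    v = [1.0] * n
    mu = 0.0
    for _ in range(iters):
        w = apply(v)
        norm_w = sum(t * t for t in w) ** 0.5
        norm_v = sum(t * t for t in v) ** 0.5
        mu = norm_w / norm_v          # estimate of |mu_max| from the norm ratio
        v = [t / norm_w for t in w]
    return mu


C = [[1.0, 2.0, 0.0],
     [0.0, 1.0, 1.0]]
a0 = [1.0, 2.0, 1.0]   # A_0 = diag(a0), hypothetical data
b = [1.0, 1.0]         # B_lambda = diag(b)
alpha = 1.0            # uniform monotonicity constant (linear A with A_0 = A)


def C_mul(v):
    return [sum(c * vj for c, vj in zip(row, v)) for row in C]


def CT_mul(w):
    return [sum(C[i][j] * w[i] for i in range(len(C))) for j in range(len(C[0]))]


def op_lambda(w):
    """B_lambda^{-1} C A_0^{-1} C^T w  (first problem of Note 2(a))."""
    y = [t / d for t, d in zip(CT_mul(w), a0)]
    return [t / d for t, d in zip(C_mul(y), b)]


def op_x(v):
    """A_0^{-1} C^T B_lambda^{-1} C v  (second problem of Note 2(a))."""
    z = [t / d for t, d in zip(C_mul(v), b)]
    return [t / d for t, d in zip(CT_mul(z), a0)]


mu_lam = power_max_eig(op_lambda, len(b))
mu_x = power_max_eig(op_x, len(a0))
tau_max = 2.0 * alpha / mu_lam
print(mu_lam, mu_x, tau_max)  # both eigenvalues are about 3.5, so tau_max is about 4/7
```

For these data \(CA_0^{-1}C^{T}=\begin{pmatrix}3&1\\1&1.5\end{pmatrix}\), whose eigenvalues are 3.5 and 1, so any τ<4/7 satisfies the assumed form of (2.6).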

Sufficient conditions on the choice of the preconditioning matrices \(B_x\) and \(B_{\lambda}\) and of the iteration parameter τ>0 that ensure the convergence of the Arrow–Hurwicz-type method (2.5) are given by

Theorem 2.2

([17])

Let the operator A be uniformly monotone (2.2) and Lipschitz-continuous (2.3). If

where \(\mu_{\max}=\lambda_{\max}(B_{x}^{-1/2}A_{0}B_{x}^{-1/2})\) is the maximal eigenvalue of the matrix \(B_{x}^{-1/2}A_{0}B_{x}^{-1/2}\), then the iterations of the method (2.5) converge to a solution of (2.1) starting from any initial guess \((x^{0},\lambda^{0})\).
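For comparison, here is a sketch of an Arrow–Hurwicz-type scheme on a two-variable toy problem with a box constraint. It assumes that (2.1) has the form \(Ax-C^{T}\lambda+P(x)\ni f\), \(Cx=g\), and that one step of (2.5) is \(\frac{1}{\tau}B_x(x^{k+1}-x^{k})+Ax^{k}+P(x^{k+1})\ni f+C^{T}\lambda^{k}\) followed by \(\lambda^{k+1}=\lambda^{k}+\tau B_{\lambda}^{-1}(g-Cx^{k+1})\). Thus A is applied only to the old iterate, and only the cheap operator \(B_x/\tau+P\) is inverted. Names and data are hypothetical; for them (\(A=B_x=I\), \(B_{\lambda}=1\)) the linear sufficient condition \(A>\frac{\tau}{2}(AB_x^{-1}A^{T}+C^{T}B_{\lambda}^{-1}C)\) reduces to τ<2/3.

```python
def clip(v, lo, hi):
    """Project the scalar v onto the interval [lo, hi]."""
    return min(max(v, lo), hi)


def matvec(M, v):
    """Dense product M v, with M stored as a list of rows."""
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]


def matTvec(M, w):
    """Dense product M^T w, with M stored as a list of rows."""
    out = [0.0] * len(M[0])
    for row, wi in zip(M, w):
        for j, m in enumerate(row):
            out[j] += m * wi
    return out


def arrow_hurwicz_box(A, f, C, g, lo, hi, tau, b_x, b_lam, iters=300):
    """Arrow-Hurwicz-type iteration (hypothetical sketch) for
        A x - C^T lam + dI_box(x) ∋ f,   C x = g,
    with diagonal preconditioners B_x = diag(b_x), B_lambda = diag(b_lam)."""
    x = [0.0] * len(f)
    lam = [0.0] * len(g)
    for _ in range(iters):
        # A is applied only to the old iterate x^k (explicit step)
        grad = [ax - ct - fi for ax, ct, fi in zip(matvec(A, x), matTvec(C, lam), f)]
        # (B_x / tau) x^{k+1} + dI_box(x^{k+1}) ∋ ... : componentwise projection
        x = [clip(xi - tau * gi / bi, l, h)
             for xi, gi, bi, l, h in zip(x, grad, b_x, lo, hi)]
        # multiplier update with the residual g - C x^{k+1}
        res = [gi - ci for gi, ci in zip(g, matvec(C, x))]
        lam = [li + tau * ri / bi for li, ri, bi in zip(lam, res, b_lam)]
    return x, lam


x, lam = arrow_hurwicz_box(A=[[1.0, 0.0], [0.0, 1.0]], f=[1.0, 0.0],
                           C=[[1.0, 1.0]], g=[1.0], lo=[0.0, 0.0], hi=[0.8, 0.8],
                           tau=0.5, b_x=[1.0, 1.0], b_lam=[1.0])
print(x, lam)  # close to [0.8, 0.2] and [0.2]
```

Unlike the Uzawa-type step, no inclusion involving A itself has to be solved, which is what makes (2.5) attractive when A is nonlinear or not block diagonal; the price is a stricter bound on τ.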

Note 3

It suffices for A to be uniformly monotone and Lipschitz-continuous only on \(\operatorname {dom}\varPhi\) (cf. Note 1).

Note 4

- (a) The choice \(B_x=A_0\) gives the best bound on the iteration parameter τ that ensures convergence of the method. In this case, the inequality (2.8) reads

$$A_0>\frac{\tau}{2\alpha- \tau\beta^2} \,C^TB_{\lambda}^{-1}C, $$

and the further choice of the preconditioner \(B_{\lambda}\) is similar to the case of the method (2.4).

- (b) If A is linear, then the sufficient convergence condition (2.8) can be replaced by the following sharper condition:

$$A>\frac{\tau}{2} \bigl(AB_x^{-1}A^T+C^TB_{\lambda}^{-1}C \bigr). $$
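For linear A, the condition of Note 4(b) can be checked numerically. The sketch below, with hypothetical names and data and diagonal preconditioners assumed for brevity, tests positive definiteness of the symmetric part of \(A-\frac{\tau}{2}(AB_x^{-1}A^{T}+C^{T}B_{\lambda}^{-1}C)\) by attempting a Cholesky factorization, and locates the largest admissible τ by bisection, using the fact that the subtracted term grows monotonically with τ.

```python
def is_pos_def(S):
    """Cholesky test: True iff the symmetric matrix S is positive definite."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = s ** 0.5
            else:
                L[i][j] = s / L[j][j]
    return True


def ah_condition(A, C, b_x, b_lam, tau):
    """Check A > (tau/2)(A B_x^{-1} A^T + C^T B_lam^{-1} C) in the sense of quadratic forms."""
    n = len(A)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            aba = sum(A[i][k] * A[j][k] / b_x[k] for k in range(n))
            cbc = sum(C[m][i] * C[m][j] / b_lam[m] for m in range(len(C)))
            # symmetric part of A minus the (already symmetric) subtracted term
            S[i][j] = 0.5 * (A[i][j] + A[j][i]) - 0.5 * tau * (aba + cbc)
    return is_pos_def(S)


def largest_tau(A, C, b_x, b_lam, steps=60):
    """Bisection for the supremum of tau satisfying ah_condition."""
    lo, hi = 0.0, 1.0
    while ah_condition(A, C, b_x, b_lam, hi):   # enlarge until the condition fails
        hi *= 2.0
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if ah_condition(A, C, b_x, b_lam, mid):
            lo = mid
        else:
            hi = mid
    return lo


A = [[1.0, 0.0], [0.0, 1.0]]
C = [[1.0, 1.0]]
print(ah_condition(A, C, [1.0, 1.0], [1.0], 0.5))   # True
print(largest_tau(A, C, [1.0, 1.0], [1.0]))          # about 0.6667, i.e. 2/3
```

For these data the tested matrix is \(I-\frac{\tau}{2}\begin{pmatrix}2&1\\1&2\end{pmatrix}\), whose smallest eigenvalue \(1-\frac{3\tau}{2}\) vanishes at τ=2/3.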

2.3 Stopping Criterion

One possible stopping criterion for an iterative process is based on the evaluation of residual norms. Namely, when solving the problem (2.1) by an iterative method, we find not only the pair \((x^{k},\lambda^{k})\) of approximations to the exact solution \((x,\lambda)\), but also uniquely defined selections \(\gamma^{k}\in P(x^{k})\), \(\delta^{k}\in Q(\lambda^{k})\). Let us define the residual vectors

Lemma 2.3

Let the operator A be uniformly monotone (2.2). Then the error estimate

is valid for the methods (2.4) and (2.5). The constants \(c_1\) and \(c_2\) depend only on the constant α of uniform monotonicity of the operator A.

Since \(\|\lambda-\lambda^{k}\|\to 0\) as \(k\to\infty\), the inequality (2.9) gives an estimate of the error \(\|x-x^{k}\|_{A_{0}}\) in terms of the norms \(\|r_{x}^{k}\|_{A_{0}^{-1}}\) and \(\|r^{k}_{\lambda}\|\).

Note 5

In the Uzawa-type method for the saddle point problem, the inclusion \(Ax-C^{T}\lambda+P(x)\ni f\) is solved exactly at each iteration. Due to this fact, \(r^{k}_{x}=0\) and the estimate (2.9) reads

whence
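In practice, Note 5 means that the Uzawa-type iteration can be stopped by monitoring \(\|r^{k}_{\lambda}\|\) alone. The following is a minimal sketch on a toy problem with diagonal A, a box constraint playing the role of P, and Q=0. Since the definitions of the residual vectors are not reproduced here, it assumes \(r^{k}_{\lambda}=g-Cx^{k}\); the tolerance, the data and the function name `uzawa_until` are hypothetical.

```python
def uzawa_until(a, f, C, g, lo, hi, tau, b_lam, tol=1e-8, max_iter=1000):
    """Uzawa-type iteration with a residual-based stopping rule (hypothetical sketch).

    A = diag(a), P = subdifferential of the indicator of the box [lo, hi], Q = 0.
    The x-inclusion is solved exactly, so r_x^k = 0 and only r_lambda^k = g - C x^k
    is monitored."""
    lam = [0.0] * len(g)
    x, res_norm = list(lo), float("inf")
    for k in range(1, max_iter + 1):
        # exact x-step: componentwise projection of (f + C^T lam) / a onto the box
        rhs = [fi + sum(C[m][i] * lam[m] for m in range(len(g))) for i, fi in enumerate(f)]
        x = [min(max(r / ai, l), h) for r, ai, l, h in zip(rhs, a, lo, hi)]
        # residual of the constraint equation and its Euclidean norm
        res = [gm - sum(c * xi for c, xi in zip(C[m], x)) for m, gm in enumerate(g)]
        res_norm = sum(r * r for r in res) ** 0.5
        if res_norm < tol:
            return x, lam, k, res_norm
        lam = [lm + tau * rm / bm for lm, rm, bm in zip(lam, res, b_lam)]
    return x, lam, max_iter, res_norm


x, lam, k, r = uzawa_until(a=[1.0, 1.0], f=[1.0, 0.0], C=[[1.0, 1.0]], g=[1.0],
                           lo=[0.0, 0.0], hi=[0.8, 0.8], tau=0.5, b_lam=[1.0])
print(k, r, x)
```

For these data the residual is halved at every step, so the loop stops after a few dozen iterations with x close to (0.8, 0.2).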

3 Application to Variational Inequalities

Now we apply the previous results to a sample variational inequality: find u∈V such that ∀v∈V

Here \(H_{0}^{1}(\varOmega)\subset V\subset H^{1}(\varOmega)\), a(x)>0, and \(k(\bar{t}): \mathbb{R}^{2} \rightarrow\mathbb{R}^{2}\) is a continuous and uniformly monotone vector-function:

We construct a simple finite element approximation of (2.11) in the case of a polygonal domain Ω. Let \(\overline{\varOmega}= \bigcup_{e\in T_{h}} e\) be a conforming triangulation of \(\overline{\varOmega}\) [7], where \(T_h\) is a family of \(N_e\) non-overlapping closed triangles e (finite elements) and h is the maximal diameter of all \(e\in T_h\). Further, \(V_{h}\subset H_{0}^{1}(\varOmega)\) is the space of continuous and piecewise linear functions (linear on each \(e\in T_h\)), while \(U_h\subset L_2(\varOmega)\) is the space of piecewise constant functions. Define \(f_h\in U_h\) and \(a_h\in U_h\) by the equalities

The finite element approximation of the problem (2.11) satisfies the relation

In order to write (2.13) in vector-matrix form, we first define the vectors \(u\in\mathbb{R}^{N_{u}}\) and \(w\in\mathbb{R}^{N_{e}}\) of the nodal values of the functions \(u_h\in V_h\) and \(w_h\in U_h\), respectively. With a vector-valued function \(\bar{q}_{h}=(q_{1h}, q_{2h})\in U_{h}\times U_{h}\) we associate the vector \(q=(q_{11}, q_{21},\ldots, q_{1i}, q_{2i},\ldots,q_{1N_{e}},q_{2N_{e}}) \in \mathbb {R}^{N_{y}}\), \(N_y=2N_e\), where \(q_{1i}=q_{1h}(x)\), \(q_{2i}=q_{2h}(x)\) for \(x\in e_i\). Further, we define the matrix \(L\in\mathbb{R}^{N_{y}\times N_{u}}\) and the operator \(k: \mathbb{R}^{N_{y}}\rightarrow\mathbb{R}^{N_{y}}\) by the equalities

the diagonal matrix \(D=\operatorname {diag}(a_{1}, a_{1},\ldots, a_{i}, a_{i},\ldots,a_{N_{e}},a_{N_{e}})\in \mathbb {R}^{N_{y}\times N_{y}}\) with the entries \(a_i=a_h(x)\) for \(x\in e_i\), and the vector \(f\in\mathbb{R}^{N_{u}}\) given by \((f,u)=\int_{\varOmega} f_{h}(x)u_{h}(x)\,dx\). Finally, let the convex function be defined by the relation

Now, the discrete variational inequality (2.13) can be written in the form

or, equivalently, as the inclusion

We will construct different saddle point problems using the inclusion (2.14).

3.1 Variational Inequality with the Linear Main Operator

First, let us consider the discrete problem approximating the variational inequality with a linear differential operator, k(∇u)=∇u. The corresponding discrete inclusion is

Denoting p=Lu, we transform it into one of the following three systems:

The matrix of the first two equations in the system (2.15) is positive definite and block diagonal. Thus, the Uzawa-type method (2.4) applied to this system can be implemented efficiently. On the other hand, the saddle point problems (2.16) and (2.17) contain degenerate matrices, so the iterative method (2.4) cannot be applied to them. Using the equation Lu=p, we perform different equivalent transformations of (2.16) and (2.17) to obtain systems with positive definite matrices \(A_i\). In particular, we can obtain the system corresponding to the augmented Lagrangian method

The matrix \(A_r\) in (2.18) is symmetric and positive definite for any r>0. However, it is neither block diagonal nor block triangular. Therefore, the method (2.4) cannot be implemented efficiently, even though it converges for this problem. The best-known methods for solving (2.18) are the so-called Algorithms 2–6 (see [8, 9]), which combine a block relaxation technique for inverting \(A_r\) with updating of the Lagrange multipliers λ. Instead of (2.18), we construct systems whose upper-left 2×2 blocks are positive definite and block triangular:

Lemma 2.4

Let 0<r<4. Then the matrices

in the systems (2.19) and (2.20) are energy equivalent to the block diagonal and positive definite matrix

with the constants depending only on r:

Since the matrices \(A_2[r]\) and \(A_3[r]\) defined in (2.21) are block triangular, the Uzawa-type iterative method (2.4) can be easily implemented for the solution of the systems (2.19) and (2.20). Owing to Theorem 2.1, the most reasonable preconditioner is \(B_{\lambda}=D^{-1}\). The convergence result in the particular case r=1 reads as follows:

Theorem 2.3

([18])

Let r=1. Then the method (2.4) with \(B_{\lambda}=D^{-1}\) applied to the systems (2.19) and (2.20) converges provided that \(0<\tau<\frac{1}{2}\).

Implementation of the method (2.4) for (2.19) and (2.20) includes solving a system of linear equations with the matrix \(L^{T}DL\) and solving an inclusion of the form \(cDp+\partial\theta(p)\ni F\), c = const, with a known vector F. In the example under consideration, the matrix D is diagonal and the multivalued operator ∂θ is block-diagonal with 2×2 blocks. Because of this, the inclusion \(cDp+\partial\theta(p)\ni F\) can be easily solved by direct methods.
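To make the last point concrete, suppose, as in the numerical examples with the constraint \(|\nabla u|\le 1\), that θ is the indicator function of the set \(\{p: |p_e|\le 1 \text{ for every element } e\}\), where \(p_e\in\mathbb{R}^{2}\) is the pair of components of p on the element e. This choice of θ is an assumption, since its definition is not reproduced here. Because D repeats each \(a_e\) twice, the inclusion splits into independent 2×2 problems, each solved by a scaling followed by the projection onto the unit disk. The function name is hypothetical.

```python
import math


def solve_block_inclusion(c, a, F, radius=1.0):
    """Solve c*D*p + d(theta)(p) ∋ F elementwise (hypothetical sketch).

    D = diag(a_1, a_1, ..., a_Ne, a_Ne) and theta is assumed to be the indicator
    of {|p_e| <= radius} for every element e, p_e = (p[2e], p[2e+1])."""
    p = []
    for e, a_e in enumerate(a):
        # unconstrained minimizer of (c*a_e/2)|p_e|^2 - F_e . p_e
        q1, q2 = F[2 * e] / (c * a_e), F[2 * e + 1] / (c * a_e)
        # c*a_e is a scalar, so the constrained solution is the projection onto the disk
        norm = math.hypot(q1, q2)
        scale = 1.0 if norm <= radius else radius / norm
        p.extend([q1 * scale, q2 * scale])
    return p


p = solve_block_inclusion(2.0, [1.0, 0.5], [1.0, 0.0, 6.0, 8.0])
print(p)  # approximately [0.5, 0.0, 0.6, 0.8]
```

On the second element the constraint is active: \(F_e-c\,a_e p_e\) is a positive multiple of \(p_e\), i.e. it lies in the normal cone of the disk, which is exactly the inclusion.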

3.2 Variational Inequality with Non-linear Main Operator

To construct saddle point problems for the inclusion (2.14) with a non-linear main operator, we proceed similarly to the linear case. Namely, by using Lagrange multipliers λ and the equation Lu=p, we construct saddle point problems with uniformly monotone operators in the space of vectors \(x=(u,p)^{T}\). Consider two of them:

The systems (2.22) and (2.23) contain block-triangular operators

Lemma 2.5

Let the uniform monotonicity property (2.12) hold with the constant \(\sigma_0\), and let \(0<r<4\sigma_0\). Then the operators \(A_1\) and \(A_2\) are uniformly monotone:

where

is the positive definite matrix.


Lemma 2.6

Let the function k be Lipschitz-continuous:

Then the operators A 1 and A 2 are Lipschitz-continuous:

Application of Lemmas 2.5 and 2.6 and Theorem 2.1 gives the following result:

Theorem 2.4

Let \(0<r<4\sigma_0\). Then the Uzawa-type iterative method (2.4) with the preconditioner \(B_{\lambda}=D^{-1}\) applied to (2.22) and (2.23) converges if

Implementation of the method (2.4) for (2.23) includes solving a system of linear equations with the matrix \(L^{T}DL\) and solving the inclusion \(Dk(p)+\partial\theta(p)\ni F\) with a known vector F. This inclusion can be solved efficiently because the operator k is diagonal and ∂θ is a 2×2 block diagonal operator.
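A sketch of the elementwise solve for \(Dk(p)+\partial\theta(p)\ni F\) under two assumptions that the text above does not fix: θ is the indicator of \(\{|p_e|\le 1\}\) on each element, and k acts on each pair \(p_e\) as \(k(|p_e|)p_e\), with \(t\mapsto k(t)t\) continuous and strictly increasing (the form used in Sect. 3.3). The solution then points along \(F_e\), and its length solves a scalar monotone equation, handled here by bisection. The names and the flux function in the example are hypothetical.

```python
import math


def solve_nonlinear_block(a_e, F_e, flux, radius=1.0, tol=1e-12):
    """Find p in R^2 with a_e*k(|p|)*p + d(indicator of |p| <= radius)(p) ∋ F_e.

    flux(t) = k(t)*t is assumed continuous and strictly increasing, flux(0) = 0."""
    f1, f2 = F_e
    nF = math.hypot(f1, f2)
    if nF == 0.0:
        return (0.0, 0.0)
    if a_e * flux(radius) <= nF:
        s = radius                     # constraint active: |p| = radius
    else:
        lo, hi = 0.0, radius           # solve a_e * flux(s) = |F_e| for s in (0, radius)
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if a_e * flux(mid) < nF:
                lo = mid
            else:
                hi = mid
        s = 0.5 * (lo + hi)
    return (s * f1 / nF, s * f2 / nF)  # p is parallel to F_e with length s


flux = lambda t: t + t ** 3            # hypothetical choice k(t) = 1 + t^2
print(solve_nonlinear_block(1.0, (0.625, 0.0), flux))   # about (0.5, 0.0)
print(solve_nonlinear_block(2.0, (3.0, 4.0), flux))     # about (0.6, 0.8)
```

In the first call the constraint is inactive (0.5 + 0.5³ = 0.625); in the second, \(2\,k(1)\cdot 1=4\le|F_e|=5\), so the solution lies on the unit circle.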

Implementation of (2.4) for the problem (2.22) requires solving the system of nonlinear equations \(L^{T}k(Lu)+L^{T}\lambda=0\) by an inner iterative method, so the efficiency of the algorithm also depends on the efficiency of the inner solver. Instead of the Uzawa-type method, we can apply the Arrow–Hurwicz-type iterative method (2.5) to the problem (2.22) with \(B_{\lambda}=D^{-1}\) and a suitably chosen preconditioner \(B_x\). The results of Lemmas 2.5 and 2.6 and Theorem 2.2 yield

Theorem 2.5

Let \(0<r<4\sigma_0\). Then the iterative method (2.5) for the problem (2.22)

converges if

It is easy to see that the implementation of (2.27) includes the same steps as the implementation of the method (2.4) for (2.23).

3.3 Variational Inequality with Pointwise Constraints on Both the Solution and Its Gradient

Consider the variational inequality: find \(u\in U_{ad}=\{u\in H^{1}_{0}(\varOmega): u(x)\ge 0 \mbox{ in } \varOmega\}\) such that for all \(v\in U_{ad}\)

where a(x)>0 and the vector-function \(k(|\bar{t}|) \bar{t}\) satisfies (2.12). After approximation of this variational inequality, we obtain the discrete variational inequality

where φ is the indicator function of the constraint set \(\{u\in \mathbb {R}^{N_{u}}: u_{i}\ge 0\ \forall i\}\), while all other notation is the same as above. We write this variational inequality as the inclusion

We proceed as before and construct the saddle point problems

Both iterative methods, (2.4) and (2.5), can be applied to these saddle point problems, because the results of Theorems 2.1 and 2.2 remain valid with the operator P defined by \(P(x)=(\partial\varphi(u),\partial\theta(p))^{T}\). Now, however, the implementation of the Uzawa-type iterative method (2.4) for (2.29) includes the solution of a finite-dimensional obstacle problem, namely the inclusion

with the symmetric and positive definite matrix \(rL^{T}DL\), and the implementation of this method for (2.28) includes the solution of a problem with the non-linear operator

The Arrow–Hurwicz-type method (2.5) with preconditioners \(B_x\) and \(B_{\lambda}=D^{-1}\), applied to (2.28) or (2.29), converges and can be easily implemented. On the other hand, in this case the maximal eigenvalue \(\mu_{\max}\) of the matrix \(B_{x}^{-1/2}A_{0}B_{x}^{-1/2}\) depends on the condition numbers of the matrices D and \(L^{T}L\), and thus on the mesh step h. Convergence of the corresponding iterative methods is therefore guaranteed only for a very small iteration parameter τ, and numerical experiments demonstrate slow convergence of the Arrow–Hurwicz-type method (2.5).
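The text does not prescribe a solver for the obstacle problem arising in the Uzawa-type step for (2.29). One standard choice, swapped in here purely for illustration, is projected Gauss–Seidel, which converges for symmetric positive definite matrices. The sketch assumes the inclusion has the form \(Mu+\partial\varphi(u)\ni F\) with a symmetric positive definite M (such as \(rL^{T}DL\)) and φ the indicator of \(\{u_i\ge 0\}\); the names and data are hypothetical.

```python
def projected_gauss_seidel(M, F, sweeps=200, tol=1e-12):
    """Solve M u + d(indicator of u >= 0)(u) ∋ F for symmetric positive definite M."""
    n = len(F)
    u = [0.0] * n
    for _ in range(sweeps):
        change = 0.0
        for i in range(n):
            # Gauss-Seidel value for component i, then projection onto u_i >= 0
            s = F[i] - sum(M[i][j] * u[j] for j in range(n) if j != i)
            new = max(0.0, s / M[i][i])
            change = max(change, abs(new - u[i]))
            u[i] = new
        if change < tol:
            break
    return u


M = [[2.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 2.0]]
F = [1.0, -2.0, 1.0]
u = projected_gauss_seidel(M, F)
w = [sum(M[i][j] * u[j] for j in range(3)) - F[i] for i in range(3)]  # multiplier M u - F
print(u, w)  # u = [0.5, 0.0, 0.5], w = [0.0, 1.0, 0.0]
```

The result satisfies the complementarity conditions \(u\ge 0\), \(w=Mu-F\ge 0\), \(u_iw_i=0\), which is the componentwise form of the inclusion; the middle constraint is active.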

3.4 Results of Numerical Experiments

We have solved a number of 1D and 2D linear and non-linear variational inequalities using the simplest finite element and finite difference approximations and the Uzawa-type method. The main purpose of the numerical experiments was to observe how the number of iterations depends on the mesh step h and the iteration parameter τ. We also compared the proposed iterative algorithms with well-known algorithms for saddle point problems constructed via the augmented Lagrangian technique. Several numerical results are reported below.

Consider the following one-dimensional variational inequality

with the set of constraints \(K=\{u\in H^{1}_{0}(0,1): |u^{\prime}(x)|\le 1 \mbox{ for } x\in(0,1)\}\). A finite element approximation with piecewise linear elements on a uniform grid leads to the inclusion \(L^{T}Lu+L^{T}\partial\theta(Lu)\ni f\), where the matrix L corresponds to the approximation of the first-order derivative. We solve the corresponding saddle point problems:

Problem 2.1

The saddle point problem corresponding to (2.19).

Problem 2.2

The saddle point problem corresponding to (2.15).

We use the stopping criterion

where \(h=n^{-1}\) is the mesh step and \(u^{*}\) is the known exact solution; the initial guess is λ=0. Table 2.1 shows the dependence of the number of iterations \(n_{it}\) on the iteration parameter and on the number of grid nodes for Problem 2.1.

For Problem 2.2, the optimal iteration parameter was found to be τ=0.4, and the number of iterations needed to achieve the accuracy \(\|u-u^{*}\|_{L_{2}}<10^{-4}\) was 12 on all grids with the number of nodes from n=50 to n=500 000.
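For the 1D example, the matrix L can be assembled explicitly. The sketch below assumes the plain difference quotient \((Lu)_e=(u_{e+1}-u_e)/h\) on each of the n elements with homogeneous Dirichlet values \(u_0=u_n=0\) and no quadrature weights, so the scaling used in the paper may differ. Then \(L^{T}L=h^{-2}\,\mathrm{tridiag}(-1,2,-1)\), and the constraint \(|u'|\le 1\) becomes \(|(Lu)_e|\le 1\) on every element. The function names are hypothetical.

```python
def derivative_matrix(n):
    """Dense n x (n-1) matrix L: (L u)_e = (u_{e+1} - u_e) / h, h = 1/n.

    Unknowns are the interior values u_1..u_{n-1} (stored at indices 0..n-2);
    u_0 = u_n = 0 are eliminated, so the first and last rows have one nonzero each."""
    h = 1.0 / n
    L = [[0.0] * (n - 1) for _ in range(n)]
    for e in range(n):
        if e <= n - 2:
            L[e][e] = 1.0 / h        # coefficient of u_{e+1}
        if e >= 1:
            L[e][e - 1] = -1.0 / h   # coefficient of u_e
    return L


def gram(L):
    """L^T L for a dense matrix stored as a list of rows."""
    m = len(L[0])
    return [[sum(row[i] * row[j] for row in L) for j in range(m)] for i in range(m)]


L = derivative_matrix(4)
G = gram(L)
print(G)  # 32 on the diagonal, -16 next to it: h^{-2} tridiag(-1, 2, -1) with h = 1/4

# Grid values of u(x) = min(x, 1 - x), whose slope is +1 and then -1
u = [min(i / 4, 1 - i / 4) for i in range(1, 4)]
Lu = [sum(a * b for a, b in zip(row, u)) for row in L]
print(Lu)  # slopes +1, +1, -1, -1: the constraint |u'| <= 1 is active on every element
```

Since \(L^{T}L\) is the usual stiffness matrix up to scaling, the linear systems with \(L^{T}L\) (or \(L^{T}DL\)) that appear in the Uzawa-type step are tridiagonal in 1D.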

Now we consider two-dimensional variational inequalities with linear differential operators

We set Ω=(0,1)×(0,1) and construct finite difference approximations on uniform grids. These finite difference schemes can be written as the inclusion \(L^{T}Lu+L^{T}\partial\theta(Lu)\ni f\), where the rectangular matrix L corresponds to the approximation of the gradient operator. We have studied the following two saddle point problems:

Problem 2.3

2D saddle point problem with the matrix  .


Problem 2.4

2D saddle point problem corresponding to the augmented Lagrangian method with r=1.

We use the stopping criterion

where \(n=h^{-1}\) is the number of nodes in one direction and \(u^{*}\) is the known exact solution. Table 2.2 contains the results for the variational inequality (2.30).

For the discrete saddle point problems corresponding to (2.31), the results were similar. Namely, for both methods and for grids with n=100, 200 and 400 nodes, the accuracy \(\|u-u^{*}\|_{L_{2}}<10^{-3}\) was achieved within 19 iterations for τ=1.2, which was found to be numerically optimal.

Finally, we consider a two-dimensional variational inequality associated with the non-linear differential operator

where Ω=(0,1)×(0,1), \(k(t) t=\sqrt{t}\) and \(K=\{u\in H^{1}_{0}(\varOmega):|\nabla u(x)|\le 1 \mbox{ in } \varOmega\}\). We constructed a finite difference approximation of (2.32) on a uniform grid. In accordance with the theory, the iteration parameter was taken to be τ=1/2. Since the exact solution was not known, we estimated the norms of the residuals \(\|r_{\lambda}\|_{L_{2}}\) (see the estimate (2.10)). Calculations were made for different numbers of nodes in one direction. For all grids, we observed the typical behavior of the residual norms with respect to the iteration number: a very fast decrease during the first iterations followed by a slowdown. After 20–25 iterations the norm \(\|u^{k}-u^{k-1}\|_{L_{2}}\) became very close to zero, and the vector \(u^{k}\) could be taken as the exact solution. The results for the case n=500 are given in Table 2.3, where \(\delta u=\|u^{k}-u^{100}\|_{L_{2}}\) is the norm of the difference between the current iterate and the 100th iterate, which was taken as the exact solution.

In the computations performed for 1D and 2D variational inequalities, the following features were observed:

- The convergence rate of the method (2.4) depended only weakly on the parameters r and τ=τ(r);

- The number of iterations did not depend on the mesh size h=1/n;

- In all cases, the Uzawa-type method (2.4) applied to the transformed saddle point problems with block triangular A converged at a rate similar to that of Algorithm 2 applied to the saddle point problem constructed via the augmented Lagrangian technique.

4 Application to Optimal Control Problems

Consider the following elliptic boundary value problem:

Here \(\varOmega_0\subseteq\varOmega\), \(\chi_{0}\equiv\chi_{\varOmega_{0}}\) is the characteristic function of the domain \(\varOmega_0\), the function \(f\in L_2(\varOmega)\) is fixed, while \(u\in L_2(\varOmega_0)\) is a variable control function. The coefficients \(a_{ij}(x)\) and \(a_0(x)\) are continuous in \(\overline{\varOmega}\) and satisfy the following ellipticity assumptions:

Define the goal functional

with a given function \(y_d(x)\in L_2(\varOmega_1)\), \(\varOmega_1\subseteq\varOmega\), and the constraint sets

The optimal control problem reads as follows:

We suppose that the set Z is non-empty. Then, the problem (2.34) has a unique solution (cf., e.g., [20]).

We construct a finite element approximation of the problem (2.34) in the case of polygonal domains \(\varOmega\), \(\varOmega_0\) and \(\varOmega_1\). Let the triangulation of \(\varOmega\) be consistent with \(\varOmega_0\) and \(\varOmega_1\). Define the spaces of continuous and piecewise linear functions (linear on each triangle of the triangulation) on the domain \(\varOmega\) (\(V_{h}\subset H^{1}_{0}(\varOmega)\)) and on the subdomains \(\varOmega_0\) and \(\varOmega_1\). Let the functions f, u and \(y_d\) be continuous, and let \(f_h\), \(u_h\) and \(y_{dh}\) be their piecewise linear interpolants. We use the quadrature formulas

where \(x_{\alpha}\) are the vertices of e and \(|e|=\operatorname {meas}e\). The finite element approximations of the state equation, the goal functional and the constraints are as follows:

The state equation (2.35) has a unique solution y h and the following stability inequality holds:

with a constant \(k_a\) independent of h. The finite element approximation of the optimal control problem (2.34) is

To obtain the matrix-vector form of (2.37), we define the vectors of nodal values \(y\in \mathbb {R}^{N_{y}}\), \(u\in \mathbb {R}^{N_{u}}\) and the matrices

Then, the discrete optimal control problem can be written in the form

where \(\theta(y)=I_{Y_{ad}}(y)\) and \(\varphi(u)=I_{U_{ad}}(u)\) are the indicator functions of the sets \(Y_{ad}=\{y\in \mathbb {R}^{N_{y}}: y_{i}\ge 0 \ \forall i\}\) and \(U_{ad}=\{u \in \mathbb {R}^{N_{u}}: |u_{i}|\le u_{d}\ \forall i\}\), respectively. The corresponding saddle point problem reads as follows:

In the problem (2.38), the stiffness matrix L is positive definite, and \(M>0\), \(M_0>0\), \(K\ge 0\) are diagonal matrices. The main feature of (2.38) is that K is a degenerate matrix. We transform the system (2.38) to obtain a positive definite and block triangular upper-left 2×2 block. To this end, we add to the first inclusion in (2.38) the last equation multiplied by \(-rML^{-1}\), r>0, and obtain the saddle point problem

with

and \(\tilde{g}= (\tilde{f}, 0)^{T}\), \(\tilde{f}=K y_{d}+rML^{-1}Mf\).

Lemma 2.7

Let \(0<r<\frac{4}{k_{a}^{2}}\), where the constant \(k_a\) is defined in (2.36). Then the matrix \(A[r]\) is energy equivalent to

with constants depending only on r. In particular,

We solve (2.39) by the Uzawa-type iterative method (2.4) with the preconditioner \(B_{\lambda}=LM^{-1}L^{T}\):

Theorem 2.6

([18])

The iterative method (2.40) converges if

Along with the iterative method (2.40), we can use the gradient method for a regularized problem. Namely, let us replace the indicator function \(\theta(y)=I_{Y_{ad}}(y)\) of the constraint set \(Y_{ad}=\{y\in\mathbb{R}^{N_{y}}:\, y_{i}\ge 0 \; \forall i\}\) by the differentiable function

For the corresponding regularized saddle point problem we can apply the “traditional” gradient method

Theorem 2.7

([19])

The iterative method (2.41) converges if

When implementing either of the iterative methods (2.40) or (2.41), we have to solve systems of linear equations with the matrices L and \(L^{T}\), and two inclusions with the diagonal operators \(M_0+\partial\varphi\) and \(K+rM+\partial\theta\).
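Both inclusions decouple into scalar problems because the operators are diagonal and the constraint sets \(U_{ad}\) and \(Y_{ad}\) are boxes. In the sketch below, which uses hypothetical function names and data, the diagonals of \(M_0\), K and M are stored as lists: the control update is a clipped division, and the state update is a division followed by taking the positive part. Note that \(k_i+rm_i>0\) even where K is degenerate, since M>0 and r>0.

```python
def solve_control_inclusion(m0, F, u_d):
    """(M_0 + d phi)(u) ∋ F, phi = indicator of {|u_i| <= u_d}: u_i = clip(F_i / m0_i)."""
    return [min(max(Fi / mi, -u_d), u_d) for Fi, mi in zip(F, m0)]


def solve_state_inclusion(k, m, r, G):
    """(K + r M + d theta)(y) ∋ G, theta = indicator of {y_i >= 0}: y_i = max(G_i / (k_i + r m_i), 0)."""
    return [max(Gi / (ki + r * mi), 0.0) for Gi, ki, mi in zip(G, k, m)]


u = solve_control_inclusion(m0=[1.0, 2.0], F=[3.0, -1.0], u_d=1.0)
y = solve_state_inclusion(k=[0.0, 1.0], m=[1.0, 1.0], r=1.0, G=[-1.0, 4.0])
print(u, y)  # [1.0, -0.5] [0.0, 2.0]
```

The first component of y shows why the transformation matters: with \(k_1=0\) the term \(rm_1\) keeps the scalar problem well posed.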

4.1 Numerical Experiments

Problem 2.5

A control- and state-constrained optimal control problem with observation in the whole domain Ω=(0,1)×(0,1): minimize the goal functional

under the constraints

We constructed a finite difference approximation of this problem on a uniform grid. The corresponding saddle point problem has the form (2.38) with identity matrices K, \(M_0\) and S. Therefore, we can use the preconditioned Uzawa-type method (2.40) for solving this saddle point problem without transforming it. The results of the calculations are reported in Table 2.4, where \(F^{*}=J(y,u)\) is the value of the discrete goal functional at the known exact solution (y,u) (\(y=3(\sin(6\pi x_1x_2))_{+}\) on the corresponding grid), while \(F=J(y^{k},u^{k})\) is its value at the current iterate; \(\mathit{Err}=(\|y^{k}-y\|_{L_{2}}^{2}+\|u^{k}-u\|^{2}_{L_{2}})^{\frac{1}{2}}\).

Problem 2.6

A control- and state-constrained optimal control problem with observation in the part Ω 1=(0,0.7)×(0,1) of the domain Ω=(0,1)×(0,1): minimize the goal functional

under the constraints (2.42). We constructed a finite difference approximation of this problem on the uniform grid. The corresponding saddle point problem has the form (2.38) with the degenerate matrix K. We transformed it to the problem of the form (2.39) with r=1 and applied the Uzawa-type method (2.40) for its solution. The corresponding calculation results are included in Table 2.5.

Problem 2.7

A state-constrained optimal control problem with observation in the whole domain: minimize the goal functional

under the constraints

We constructed a finite difference approximation on a uniform grid and applied the Uzawa-type method (2.40) and the gradient method (2.41) to the corresponding discrete saddle point problems. We compared the computed iterates with the exact solution y, which was obtained by performing a large number of iterations of a convergent method. Table 2.6 contains the results for the case f=20, \(h=10^{-2}\), \(F^{*}=44.1789\). The notation is \(\mathit{Err}_y=\|y-y^{k}\|\), \(\delta y^{k}=\|y^{k-1}-y^{k}\|\).

Along with the Uzawa-type and regularization methods, we have also applied the Douglas–Rachford splitting method to state-constrained optimal control problems. We found that none of the methods was the most efficient in all situations. Further numerical experiments are needed to identify the classes of optimal control problems and the corresponding iterative methods that are most efficient for them.

References

Benzi M, Golub G, Liesen J (2005) Numerical solution of saddle point problems. Acta Numer 14:1–137

Bergounioux M (1993) Augmented Lagrangian method for distributed optimal control problems with state constraints. J Optim Theory Appl 78(3):493–521

Bergounioux M, Haddou M, Hintermüller M, Kunisch K (2000) A comparison of a Moreau-Yosida-based active set strategy and interior point methods for constrained optimal control problems. SIAM J Optim 11(2):495–521

Bergounioux M, Kunisch K (2002) Primal-dual strategy for state-constrained optimal control problems. Comput Optim Appl 22(2):193–224

Biegler LT, Ghattas O, Heinkenschloss M, van Bloemen Waanders B (eds) (2003) Large-scale PDE-constrained optimization, Santa Fe, NM, 2001. Lect Notes Comput Sci Eng, vol 30. Springer, Berlin

Bychenkov Yu, Chizhonkov E (2010) Iterative solution methods for saddle point problems. Binom, Moscow. In Russian

Ciarlet PG, Lions J-L (eds) (1991) Handbook of numerical analysis. Finite element methods, vol II. North-Holland, Amsterdam

Fortin M, Glowinski R (1983) Augmented Lagrangian methods. North-Holland, Amsterdam

Glowinski R, Le Tallec P (1989) Augmented Lagrangian and operator-splitting methods in nonlinear mechanics. SIAM studies in applied mathematics, vol 9. SIAM, Philadelphia

Glowinski R, Lions J-L, Trémolières R (1976) Analyse numérique des inéquations variationnelles. Dunod, Paris

Gräser C, Kornhuber R (2009) Newton methods for set-valued saddle point problems. SIAM J Numer Anal 47(2):1251–1273

Herzog R, Sachs E (2010) Preconditioned conjugate gradient method for optimal control problems with control and state constraints. SIAM J Matrix Anal Appl 31(5):2291–2317

Hintermüller M, Hinze M (2009) Moreau-Yosida regularization in state constrained elliptic control problems: error estimates and parameter adjustment. SIAM J Numer Anal 47(3):1666–1683

Hinze M, Schiela A (2011) Discretization of interior point methods for state constrained elliptic optimal control problems: optimal error estimates and parameter adjustment. Comput Optim Appl 48(3):581–600

Ito K, Kunisch K (2003) Semi-smooth Newton methods for state-constrained optimal control problems. Syst Control Lett 50(3):221–228

Laitinen E, Lapin A, Lapin S (2010) On the iterative solution for finite-dimensional inclusions with applications to optimal control problems. Comput Methods Appl Math 10(3):283–301

Laitinen E, Lapin A, Lapin S (2011) Iterative solution methods for variational inequalities with nonlinear main operator and constraints to gradient of solution. Preprint, University of Oulu

Lapin A (2010) Preconditioned Uzawa-type methods for finite-dimensional constrained saddle point problems. Lobachevskii J Math 31(4):309–322

Lapin AV, Khasanov MG (2010) The solution of a state constrained optimal control problem by the right-hand side of an elliptic equation. Kazan Gos Univ Uchen Zap Ser Fiz-Mat Nauki 152(4):56–67. http://mi.mathnet.ru/uzku884. In Russian

Lions J-L (1971) Optimal control of systems governed by partial differential equations. Springer, New York

Prüfert U, Tröltzsch F, Weiser M (2008) The convergence of an interior point method for an elliptic control problem with mixed control-state constraints. Comput Optim Appl 39(2):183–218


© 2013 Springer Science+Business Media Dordrecht


Cite this chapter

Laitinen, E., Lapin, A. (2013). Iterative Solution Methods for Large-Scale Constrained Saddle-Point Problems. In: Repin, S., Tiihonen, T., Tuovinen, T. (eds) Numerical Methods for Differential Equations, Optimization, and Technological Problems. Computational Methods in Applied Sciences, vol 27. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-5288-7_2


DOI: https://doi.org/10.1007/978-94-007-5288-7_2

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-5287-0

Online ISBN: 978-94-007-5288-7
