Abstract

The availability of magnetic observatory data has evolved rapidly with the transition of observatories from analogue photographic magnetograms to digital electronic recordings, and with the advent of the internet for instant global access to information of every sort. Metadata (information about the data) is undergoing its own transformation in order to accompany the rapid and extensive dissemination of these data. This chapter describes the types of data historically and currently produced by geomagnetic observatories and introduces new data types, such as one-second and quasi-absolute data, recently discussed at the 11th IAGA Scientific Assembly in Sopron, Hungary. We review the availability of these data types from the World Data Centres, INTERMAGNET and other sources. Finally, we discuss developments in metadata, describing current efforts in the geomagnetism community to gather, store and distribute this information about the data to better assist scientific discovery.

7.1 Introduction

Magnetic observatories continuously measure the strength and direction of the Earth’s magnetic field (Macmillan, 2007). The availability of data from these observatories has evolved greatly with the transition from analogue photographic magnetograms to digital electronic recordings, and with the development of technologies such as the Personal Computer (PC); CD- and DVD-ROMs for the inexpensive archiving of large quantities of data; and the internet for instant global access to information of every sort. Metadata (information about the data) are undergoing their own transformation in order to accompany the rapid and extensive dissemination of these various data sets. Metadata describe the content, quality, originator and other characteristics of a data set, and support the proper interpretation and use of the data.

For more than a century, magnetic observatory data were available only in printed form: as analogue traces on photographic paper, known as magnetograms, and as products derived from those traces, such as temporal averages (hourly, daily, etc.) and magnetic activity indices. Metadata consisted of yearbooks, which provided information about the observatories and reproduced copies of these various data products. The International Geophysical Year (IGY), launched in 1957, brought with it the establishment of the World Data Centres (WDCs) as central repositories where scientists could obtain data from a number of locations worldwide.

In the late 1960s, magnetic observatories began converting to digital electronic equipment and a true revolution in data types and availability was underway. The individual traces could now be kept separate (traces often crossed each other and became confused on magnetograms) and the standard observatory data product changed from hourly means to minute means. Most significantly, these data could now be collected, manipulated, archived and disseminated using computers.

The emergence of the PC in the 1980s and the development of CD-ROMs for data storage saw the beginning of “small science”, in which individual scientists could study large quantities of data at minimal cost. In 1990, for example, the U.S. Geological Survey (USGS) produced the first CD-ROM of one-minute magnetic observatory data, covering a 5-year period (1985–1989) from the USGS network of (then) 13 stations (Herzog and Lupica, 1992); it served as the model for a series of CD-ROMs produced by INTERMAGNET containing a global set of observatory data.

During the 1980s and 1990s, significant advances were also made in the timeliness of data delivery through the use of satellites. Data Collection Platforms (DCPs), equipped with satellite transmission systems, began delivering data from observatories to data processing centres, with delays of the order of tens of minutes. An organisation called INTERMAGNET was formed which began transferring data from observatories worldwide using satellites, and which developed Geomagnetic Information Nodes (GINs) where users could obtain preliminary data from the participating stations quickly by means of email requests.

But without question the greatest advance in data availability has come from the World Wide Web (WWW) as a tool for discovering and distributing data over the internet. Since 1992, when WWW access became more broadly available to the general public, the growth in use of the WWW for access to data has been astonishing. Today virtually every institution, public and private, has a website capable of providing access to its information and data. As magnetic observatories have progressed in their abilities to collect and process data faster and at higher cadences (one-second data, for example), these data have also become ever more readily available. This change has implications not only for the traditional WDC roles of data discovery and delivery, but also for long-term data archiving.

In this chapter we will look at some of these developments and advances in data and metadata types and how they have been made available. Using an historical perspective, and with the help of results presented at the 11th IAGA Scientific Assembly held in Sopron, Hungary in 2009, we hope to provide an account of where we are today regarding these topics.

7.2 Data Types

7.2.1 Printed Media

From the earliest days of magnetic observatory operations, data were collected on paper records of one sort or another. Variations in the magnetic field were recorded as continuous traces on photographic paper. Observations of the absolute magnitudes of the field components were recorded and provided calibration reference data with which to convert the analogue trace amplitudes into magnetic field values. These observations could then be reduced to produce tables of results at various time-scales: hourly, daily, monthly and annual. In time, range data began to be presented, and then activity measures, such as the K-index (1939), were developed. Magnetic records also included absolute measurements, information on the baseline used and indications of the quietest and most active days (from 1911).

These records were presented in the form of yearbooks. The yearbooks and photographic analogue traces are archived and are available from different sources and in a variety of formats; many magnetogram traces are stored on microfilm and microfiche. In this digital age we are keen to preserve these often fragile documents and to allow greater access for analysis of these historical records. Some have been scanned as images, and some have been transcribed directly to form digitised electronic files.

7.2.1.1 Eye-Observations

The earliest records from magnetic observatories were eye-observations made manually by observers at set times each day. For example, at Greenwich Observatory observations were made at 2-hourly intervals except on “term-days”, when measurements were made every 5 min. At Colaba Observatory observations were made hourly, except during disturbed times when they were made every 15 min, or every 5 min during severely disturbed conditions. Figure 7.1 shows the 5-min eye-observations for Colaba during the September 1859 “Carrington Storm”. The laborious nature of these manual observations encouraged the development of automatic recording devices. In 1847 Brooke designed the automatic photographic magnetograph that was to form the standard technique for observatory operations for over a century (Brooke, 1847).

Fig. 7.1 Eye-observations made with Grubb’s magnetometer at Colaba Observatory for 1–2 September 1859. The observation frequency increases from 15-min to 5-min observations as the severity of the storm increases (see Tsurutani et al. 2003). (Credit: Indian Institute of Geomagnetism)

7.2.1.2 Magnetograms

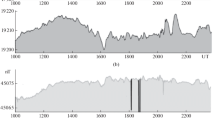

For more than a century, the changes in the Earth’s magnetic field have been recorded at observatories as analogue traces on sheets of photographic paper. In instruments known as variometers, mirrors attached to magnets suspended from quartz fibres reflect light from a source, under darkroom conditions, through a horizontal lens and onto a sheet of photographic paper, generally mounted on a drum that completes one rotation a day. Time marks on the hour, generated by a light flash, provide a temporal reference. These paper records are known as normal magnetograms. Some stations also produce two auxiliary types of magnetogram: storm magnetograms, recorded with reduced sensitivity so that large variations remain on the paper during periods of enhanced magnetic activity; and rapid-run magnetograms, recorded on drums that rotate more than once a day while moving laterally, providing greater time resolution. In addition to the mirrors mounted on the magnets in the variometer, stationary mirrors reflect light onto the recording drum to produce a fixed (non-varying) trace known as a baseline. A schematic illustration of this arrangement can be seen in Fig. 7.2a, and an example of a normal magnetogram from Kakioka, Japan, in Fig. 7.2b. Although still in use today at some observatories, these systems have become less common as the magnetic field is now more often recorded using digital systems, as described in Section 7.2.2.

Fig. 7.2 (a) Schematic of an Eschenhagen-type magnetic variometer (for details see Wienert, 1970). (b) Normal-run magnetogram recorded at Kakioka observatory on November 24, 1924. (Credit: WDC for Geomagnetism, Kyoto)

7.2.1.3 Calibration Data

In addition to the magnetogram traces continuously recording the variation in the magnetic field, periodic (generally weekly) measurements of the absolute values of the magnetic field vector components were made using a variety of special instruments. Furthermore, special deflection magnets of known magnetic moment were used to obtain conversion factors, known as scale values, to convert the traces from units of distance to units of magnetic field strength. Knowing the absolute values of the field components at a given time, one could combine these with the scale values to derive an absolute value for the baseline. With this, a calibrated value of the magnetic field could be determined at any point along the magnetogram trace, converting the analogue traces into definitive magnetic values.
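The calibration described above amounts to a linear relation between a trace's ordinate and the field value. The sketch below assumes ordinates measured in millimetres from the baseline trace (positive in the direction of increasing field) and a scale value in nT/mm; the function names and numbers are hypothetical and purely illustrative.

```python
def baseline_value(absolute_nt: float, ordinate_mm: float, scale_nt_per_mm: float) -> float:
    """Field value represented by the baseline trace.

    Derived from an absolute observation made while the variation trace
    stood ordinate_mm away from the baseline trace.
    """
    return absolute_nt - scale_nt_per_mm * ordinate_mm


def field_value(ordinate_mm: float, base_nt: float, scale_nt_per_mm: float) -> float:
    """Calibrated field value at any point along the trace."""
    return base_nt + scale_nt_per_mm * ordinate_mm


# Hypothetical numbers: an absolute H observation of 18250 nT was made while
# the H trace stood 32.0 mm from its baseline; the scale value is 2.5 nT/mm.
base = baseline_value(18250.0, 32.0, 2.5)
print(base)                          # 18170.0
print(field_value(40.0, base, 2.5))  # 18270.0
```

Once the baseline value is known, every other ordinate on the same sheet converts with a single multiply-and-add.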

7.2.1.4 Hourly Values

After the magnetograms were developed, an observer would use a scaling glass to estimate the amplitude of each trace for each hour from its respective baseline. Using the calibration data, these could then be converted to hourly values of the magnetic field. In the earliest days of observatory operations these hourly values were often spot observations taken every 60 min on the hour. Later, just after the turn of the 20th century, observatories began to estimate the average amplitudes over the whole hour to provide hourly mean values (HMVs). The first HMVs were calculated by Schmidt (1905) for Potsdam observatory with many others following suit shortly afterwards. These HMVs referred to the period of 60 min from the start of the hour, resulting in a mean centred on the following half-hour (i.e., a mean labelled as hour 10 is centred at 10 h 30 min). Convenient for hand-scaling between the hour marks on a magnetogram, this method continued into the digital era, thus ensuring homogeneity throughout the HMV data sets.

Until the advent of digital recording systems, HMVs were the primary data product from magnetic observatories. Both the spot hourly values and the HMVs were usually compiled into monthly tables, as shown in Fig. 7.3. These tables were published in observatory yearbooks along with information that distinguished spot values from mean values. Unfortunately, in some cases this information was insufficient or incorrect, creating the potential for researchers using these data sets to reach incorrect conclusions and underlining the importance of clear and accurate metadata (see Section 7.4).

Fig. 7.3 A table of hourly mean values from Honolulu observatory for the H component in June 1964. Each column shows the hour in UT and each row indicates the day of the month. These values are summed at the end of each row, and a monthly sum and mean are produced. The table also indicates the five internationally quiet (Q) and disturbed (S) days.

7.2.1.5 Magnetic Activity Indices

In addition to the values of the magnetic field components themselves, efforts were made to develop indicators of the various levels of geomagnetic activity. A comprehensive guide to this subject is given in the chapter “Geomagnetic Indices” by Menvielle et al. (2010) within this book and in the classical text by Mayaud (1980). Here, we mention only a few of the most commonly used indices that were historically derived from and originally stored as analogue records.

The 3 h K-index is a quantitative measure of local magnetic activity based on the range of fluctuations in the observatory traces over 3 h. It uses a semi-logarithmic scale from 0 to 9, with 0 indicating completely quiet conditions and 9 highly disturbed conditions. It is intended to measure geomagnetic disturbances outside the normal diurnal quiet-time variation, so the experienced hand-scaler had to account for that variation. The larger of the ranges of the horizontal component (H) and declination (D) traces is used (originally the vertical component (Z) was also considered). K-indices can also be converted into eight 3 h equivalent linear amplitudes, ak, whose daily average provides a local daily amplitude index, Ak.
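The scaling rule can be sketched in Python. This is a minimal sketch, assuming the input ranges (in nT) have already had the regular quiet-day variation removed, which is the step that required an experienced hand-scaler. It uses the standard lower range limits for an observatory whose K = 9 limit is 500 nT (the Niemegk scale), scaled proportionally for other observatories, and the standard K-to-a conversion table; scaling ak into a particular station's units is omitted, and the function names are hypothetical.

```python
import bisect

# Lower range limits (nT) for K = 0..9 at an observatory whose K = 9 limit
# is 500 nT (the Niemegk scale); other observatories scale them by K9/500.
NIEMEGK_LOWER_LIMITS = [0, 5, 10, 20, 40, 70, 120, 200, 330, 500]

# Standard conversion from K = 0..9 to the equivalent linear amplitude.
K_TO_A = [0, 3, 7, 15, 27, 48, 80, 140, 240, 400]


def k_index(range_h: float, range_d: float, k9: float = 500.0) -> int:
    """K for one 3 h interval from the H and D ranges (nT).

    Assumes the regular quiet-day variation has already been removed.
    """
    largest = max(range_h, range_d)
    if largest < 0:
        raise ValueError("ranges cannot be negative")
    limits = [lim * k9 / 500.0 for lim in NIEMEGK_LOWER_LIMITS]
    # The number of lower limits not exceeding the range, minus one, is K.
    return bisect.bisect_right(limits, largest) - 1


def daily_amplitude(k_values: list) -> float:
    """Local daily index: average of the eight 3-hourly a-equivalents."""
    if len(k_values) != 8:
        raise ValueError("expected eight 3-hourly K values")
    return sum(K_TO_A[k] for k in k_values) / 8
```

For example, an interval whose larger range is 30 nT gets K = 2 at an observatory with K9 = 1000 nT, because the limits there are doubled.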

The scaling of K-indices at a wide network of observatories enabled the derivation of various K-based planetary indices adopted by IAGA: Kp, from which ap is derived; am; and aa. The planetary activity index, Kp, is derived from the standardised K-indices of 13, mostly mid-latitude, observatories (Bartels et al. 1939). The name Kp comes from the German phrase “planetarische Kennziffer”, meaning planetary index. It is designed to monitor the influence of the auroral electrojet current system, the magnetospheric ring current and field-aligned currents. By analogy with ak and Ak, ap is the 3-hourly equivalent planetary amplitude derived from Kp, and Ap is the daily average of the eight ap values. Kp, ap and Ap extend back to 1932. Although much used by the external magnetic field communities, these indices are generally considered to have two main weaknesses: the poor global distribution of the observatory network used, and a time series too short for century-long studies. The first was addressed by the design of the am-index (Mayaud, 1967), which uses a more extensive network of observatories and is available from 1959. The second was addressed by the design of a much simpler K-based planetary index, the aa-index (Mayaud, 1972), which uses only two near-antipodal observatories and so could be extended further back in time, to 1868.
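The Kp-to-ap step mentioned above is a fixed table lookup. A minimal sketch, assuming Kp is written in the usual thirds notation (for example 3-, 3o, 3+) and using the standard conversion table; published Ap values are rounded to integers, which is omitted here, and the function names are hypothetical.

```python
# Standard Kp-to-ap conversion for the 28 Kp levels 0o, 0+, 1-, 1o, 1+, ..., 9-, 9o.
AP_TABLE = [0, 2, 3, 4, 5, 6, 7, 9, 12, 15, 18, 22, 27, 32,
            39, 48, 56, 67, 80, 94, 111, 132, 154, 179, 207, 236, 300, 400]

THIRDS = {"-": -1, "o": 0, "+": 1}


def kp_to_ap(kp: str) -> int:
    """Convert a Kp value such as '3-', '3o' or '3+' to ap."""
    if len(kp) != 2 or not kp[0].isdigit() or kp[1] not in THIRDS:
        raise ValueError(f"unrecognised Kp value: {kp!r}")
    index = 3 * int(kp[0]) + THIRDS[kp[1]]
    # '0-' and '9+' fall outside the scale and are rejected here.
    if not 0 <= index < len(AP_TABLE):
        raise ValueError(f"Kp value outside the scale: {kp!r}")
    return AP_TABLE[index]


def daily_ap(kp_values: list) -> float:
    """Daily Ap: average of the eight 3-hourly ap values (unrounded)."""
    if len(kp_values) != 8:
        raise ValueError("expected eight 3-hourly Kp values")
    return sum(kp_to_ap(kp) for kp in kp_values) / 8


print(daily_ap(["3o"] * 4 + ["5o"] * 4))  # 31.5
```

The linear ap scale is what makes averaging meaningful: averaging the quasi-logarithmic Kp values directly would weight quiet and disturbed intervals very differently.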

Another important measure of magnetic activity is the Disturbed storm time index, Dst (Sugiura, 1964). This hourly index is based on the average of the hourly values of H, as described in Section 7.2.1.4, at four low-latitude geomagnetic observatories. It was originally introduced to measure the magnitude of the current that produces the symmetric disturbance field, but it is often used to estimate the strength of magnetic storms caused largely by the magnetospheric ring current. This (mainly) westward-flowing ring current depresses H from its normal level. Dst can be used as a measure of magnetic storm intensity because the depression of the horizontal field at low latitudes is proportional to the total energy content of the ring current.
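As an illustration, the final averaging step might look like the sketch below. It assumes that each station's hourly H value has already been reduced to a disturbance value by removing the baseline (secular variation) and the quiet-day variation, which is the difficult part and is not shown. It divides by the sum of the cosines of the stations' dipole latitudes, following the usual published Dst definition as we understand it. The function name is hypothetical and the latitudes are inputs, not real station values.

```python
import math


def dst_value(disturbances_nt: list, dipole_latitudes_deg: list) -> float:
    """Simplified hourly Dst from per-station H disturbances (nT).

    Each disturbance is assumed to be the station's hourly H value minus its
    baseline and quiet-day variation. Dividing by the sum of the cosines of
    the dipole latitudes scales the result to the dipole equator.
    """
    if not disturbances_nt or len(disturbances_nt) != len(dipole_latitudes_deg):
        raise ValueError("need one latitude per station disturbance")
    cos_sum = sum(math.cos(math.radians(lat)) for lat in dipole_latitudes_deg)
    return sum(disturbances_nt) / cos_sum
```

A station at 60 degrees dipole latitude sees only about half the equatorial depression, so four such stations each recording -50 nT yield a Dst of about -100 nT.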

7.2.1.6 Yearbooks

Every year the results from a magnetic observatory would be collated and published in the form of a yearbook. Along with photographic magnetometer traces, yearbooks are one of the primary sources of printed geomagnetic observatory data. The type of data published in yearbooks has changed over the years as instrumentation has developed and methods have been standardised, but all share common features. Generally, yearbooks contain text describing the observatory, which may detail its location, housing, staff, instrumentation and data processing methods. They also contain the final definitive measurements and results from the observatory. As an example, yearbooks from Greenwich observatory originally contained results called ‘indicators of the magnetometers’, first from eye-observations and later taken directly from the photographic magnetometer records. These were published alongside records of magnetic dip (nowadays normally referred to as inclination, I) and absolute measures of H. In later years, hourly, daily, monthly and annual means were presented alongside K-indices, diurnal ranges, absolute measurements, baseline information and, sometimes, selected magnetograms and descriptive notes on magnetic disturbances. The results for many observatories were often published alongside meteorological observations.

When examining data from the earliest yearbooks, care must be taken with the time conventions used. Prior to the adoption of standard time-zones and Universal Time in 1884, time conventions could vary considerably from one observatory to another. Some used a local civil time in which the day started at midnight, whereas others used an astronomical time in which the day started at noon, or some other local convention (Boteler, 2006).
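Where an observatory is known to have used local mean astronomical time, converting its time stamps to a modern civil reference is simple arithmetic. The sketch below rests on two assumptions: the astronomical day begins at local mean noon of the civil day bearing the same date, and local mean time leads Greenwich by the east longitude divided by 15 degrees per hour. The function name is hypothetical, and any real record should be checked against its own yearbook's description of the time convention.

```python
from datetime import datetime, timedelta


def astronomical_lmt_to_civil_gmt(astro: datetime, east_longitude_deg: float) -> datetime:
    """Convert a local mean astronomical time stamp to civil Greenwich time.

    Assumptions: the astronomical day starts at local mean noon of the civil
    day with the same date, and local mean time leads Greenwich by
    east_longitude_deg / 15 hours (15 degrees of longitude per hour).
    """
    civil_local = astro + timedelta(hours=12)  # move the day start from noon to midnight
    return civil_local - timedelta(hours=east_longitude_deg / 15.0)


# Hypothetical example: 14 h on 1 September (astronomical reckoning) at 75 degrees E.
print(astronomical_lmt_to_civil_gmt(datetime(1859, 9, 1, 14), 75.0))
# 1859-09-01 21:00:00
```

A reading dated 1 September in astronomical reckoning can therefore fall on 2 September in civil reckoning, which matters when matching records from different observatories.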

Many observatories worldwide still maintain the tradition of publishing yearbooks. However, since the advent of digital distribution of results, the practice has been discontinued at some observatories. Yearbooks continue to be the best source of valuable metadata on observatory practice, instrumentation, data processing and quality control, and their publication is encouraged by IAGA (Jankowski and Sucksdorff, 1996).

7.2.1.7 Conservation and Conversion of Printed Media

During classical, analogue operations, magnetograms, HMV tables, K-index tables and calibration data made up the core of the data types produced at magnetic observatories, although other derived products, such as sudden storm commencements (ssc) and magnetic pulsations (Matsushita and Campbell, 1967), were also generated at some stations. At some WDCs the various paper products were archived onto microfilm rolls and microfiche cards. WDCs periodically published individual and joint catalogues identifying the data available at each of the geomagnetic data centres (e.g., Abston et al. 1985). Figure 7.4 shows the holdings of analogue and digital data at WDC-Kyoto and illustrates that, in these holdings, the digital age of magnetic observatories began only in the mid-1980s. Vast amounts of data remain ‘locked away’ in analogue form, where they are difficult to access and analyse in this digital era, and special procedures are required to preserve these data and allow electronic access.

Much effort has been made over the years to digitise magnetogram traces (e.g., Curto et al. 1996), but this has proved to be a challenging task. During magnetically active times, generally of most interest to researchers, the traces for the different components often cross each other making it difficult to identify which is which. Nonetheless, at the 11th IAGA Scientific Assembly, the British Geological Survey (BGS) reported on a programme to digitise magnetograms from the UK observatories dating back to 1848 (Clarke et al. 2009). The primary goal of the project is one of conservation, with the capture of the magnetograms as images (Fig. 7.5), which will also enable increased access to the scientific community. The second aim is to develop a semi-automated procedure to convert the images into digital values with a greater time resolution than has previously been reported.

In addition, many of the WDCs discussed in Section 7.3 of this chapter have been involved in a special project funded by an International Council for Science (ICSU) grant. This “Magnetogram Rescue Project” involved WDCs Kyoto, Moscow, and Mumbai and aimed to locate old magnetograms and convert them into digital images (Iyemori, 2005). The results of this project are available from these WDCs.

The observatory community has concentrated most of its effort in this area on converting the tables of data in yearbooks and other bulletins (for example, HMVs and K-indices) into digital electronic values. Various approaches have been tried, including scanning combined with Optical Character Recognition (OCR). The unreliability of OCR, especially for HMV tables (generally due to the wide variety of typefaces and hand-written entries in the tables), has caused these efforts to be directed more toward manual entry and checking (see for example, Nagarajan, 2008; Fouassier and Chulliat, 2009; Nevanlinna and Häkkinen, 2010). While this manual procedure is very reliable, it is also labour intensive and can therefore be expensive. As a compromise, and in order to make the data accessible electronically, many data sets have been, and are being, scanned into images that at least allow researchers to handle them by computer.

7.2.2 Electronic Media

The earliest digital electronic data from magnetic observatories were recorded in 1969 at two French stations: Dumont d’Urville (DRV) in Antarctica, and Port-aux-Français (PAF) on the Kerguelen Islands in the Indian Ocean. These stations used photoelectric feedback and caesium vapour magnetometers to sample the northerly (X) and easterly (Y) intensity components and the total intensity (F) of the magnetic field at one-minute intervals. The introduction of the triaxial fluxgate magnetometer (see for example, Trigg et al. 1971), and the use of analogue-to-digital converters in data loggers by the early 1970s, ushered in the true digital electronic age in magnetic observatory operations. These devices sampled the field several times per minute, and the samples were then filtered to produce one-minute values. This also marked the beginning of one-minute values becoming the standard published magnetic observatory results. Many institutes added proton precession scalar magnetometers at their stations, which provided separate measurements of the absolute F and also served as a reference against which to compare the fluxgate vector data. Observatory data quality is discussed in the chapter “Improvements in geomagnetic observatory data quality” by Reda et al. (2010) within this book, which provides a full description of modern magnetic observatory instrumentation, operations and data processing.

7.2.2.1 Minute Means

Various brands of linear core, three-component fluxgate magnetometers are installed at observatories throughout the world. Originally these instruments recorded an output voltage proportional to the strength of the ambient magnetic field. Using the calibration data, the voltages were converted to magnetic field values. The output from a fluxgate magnetometer can be sampled at various frequencies; initially systems were set to sample every 10 s but 1 s sampling is now more common. Regular manual absolute observations are still required to derive baseline values for the fluxgate data. Combined, these provide definitive data (see for example, Turner et al. 2007). Early on, data were stored on magnetic tape and processed to derive 2.5 min means. Over time these faded from use and one-minute means became the standard. These definitive digital one-minute data form the basis for easy computation of various time averages, including hourly, daily, monthly, and annual means.
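Combining the variometer record with baselines from the absolute observations can be sketched as follows. The sketch assumes that each baseline value is the absolute value minus the simultaneous variometer reading, and that baselines are interpolated linearly in time between observations. In practice observatories fit and adopt smoothed baseline functions, so this is a simplification, and all names and numbers are hypothetical.

```python
from bisect import bisect_right


def baselines_from_absolutes(absolute_nt: list, variometer_nt: list) -> list:
    """Baseline = absolute value minus the simultaneous variometer reading."""
    return [a - v for a, v in zip(absolute_nt, variometer_nt)]


def baseline_at(t: float, obs_times: list, baselines: list) -> float:
    """Linearly interpolate baselines between absolute observations.

    obs_times must be sorted; outside their span the nearest value is held.
    """
    if t <= obs_times[0]:
        return baselines[0]
    if t >= obs_times[-1]:
        return baselines[-1]
    i = bisect_right(obs_times, t)
    t0, t1 = obs_times[i - 1], obs_times[i]
    b0, b1 = baselines[i - 1], baselines[i]
    return b0 + (b1 - b0) * (t - t0) / (t1 - t0)


def definitive_value(t: float, variometer_nt: float, obs_times: list, baselines: list) -> float:
    """Definitive value = variometer reading + baseline at the same time."""
    return variometer_nt + baseline_at(t, obs_times, baselines)


# Hypothetical weekly absolute observations (times in days) of the X component.
times = [0.0, 7.0]
bases = baselines_from_absolutes([18300.0, 18302.0], [100.0, 98.0])
print(bases)                                       # [18200.0, 18204.0]
print(definitive_value(3.5, 101.0, times, bases))  # 18303.0
```

This also shows why definitive data lag behind: a value at day 3.5 cannot be finalised until the absolute observation at day 7 exists.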

As with the analogue records, the delay before early digital data became available could be considerable. This was due to several factors, including the fact that recording tapes at many stations were left for weeks before they were retrieved. Even today, several months of weekly absolute observations are required to produce reliable final baselines before the final definitive data can be delivered to the WDCs.

With the many advantages that digital data provided, and the proliferation of low-cost computational capabilities, the demand for these one-minute data grew. New DCPs allowed remote access through modems, and requests for the data rapidly increased. Many researchers, for example in the space physics community, did not require definitive data, as they were interested only in the variations in the magnetic field. The internet, with its email and FTP capabilities, allowed institutions to send preliminary data to users more quickly and efficiently. By 1991, INTERMAGNET had established GINs from which a global collection of observatory one-minute data could be retrieved. Demand from industry for observatory data also grew. One example is the use of these data, in near real-time, to improve the accuracy of bore-hole surveys in offshore directional drilling operations carried out by oil and gas companies (Reay et al. 2005). Today, one-minute data are available from a multitude of locations through websites within minutes of the measurements being made.

However, three important points concerning the one-minute data should be emphasised. First, in the early 1990s magnetometers and data collection systems were developed that produced output in magnetic units rather than voltage units (Narod, 2009). The field measured in this way may appear to be the correct value that one might expect for a given location, but it is not: corrections, such as those for zero-level offsets and sensor non-orthogonality, must still be applied using the baselines derived from the calibration data. With the widespread proliferation of preliminary one-minute data, one could easily mistake preliminary data for definitive data. Preliminary values can differ from the definitive values by anything from a few nT to hundreds of nT. Nowadays INTERMAGNET clearly separates each type of data on its website, and the IAGA-2002 data format explicitly states the type of data both in the filename and in the file headers. This is another good example of the power of metadata.
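Checking the declared data type before using a file is straightforward. Below is a minimal sketch of reading the header of an IAGA-2002 file, assuming the usual layout: one keyword/value pair per header line terminated by '|', comment lines beginning with '#', and a column-heading line beginning with DATE. The function names are hypothetical, and the parser splits key from value on runs of two or more spaces rather than on the format's fixed column positions, so it is a convenience sketch, not a validating reader.

```python
import re


def read_iaga2002_header(text: str) -> dict:
    """Collect keyword/value pairs from the header of an IAGA-2002 file."""
    header = {}
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("DATE"):
            break  # column-heading line; data records follow
        if not stripped.endswith("|") or stripped.startswith("#"):
            continue  # skip comment lines and anything unexpected
        body = stripped[:-1].strip()
        parts = re.split(r"\s{2,}", body, maxsplit=1)
        if len(parts) == 2:
            header[parts[0]] = parts[1]
    return header


def is_definitive(text: str) -> bool:
    """True only if the header declares the data type as Definitive."""
    return read_iaga2002_header(text).get("Data Type", "").lower() == "definitive"


sample = (
    " Format                 IAGA-2002                                    |\n"
    " Data Type              Provisional                                  |\n"
    " # Preliminary values: baselines not yet final                       |\n"
    "DATE       TIME         DOY     XXXX      XXXY      XXXZ      XXXF   |\n"
)
print(is_definitive(sample))  # False
```

A processing script that refuses anything but Definitive data when building, say, annual means guards against exactly the confusion described above.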

Secondly, early on, the one-minute means were often computed using a simple arithmetic mean, sometimes referred to as a “box-car” mean. However, when converting from analogue to digital, preserving the full information in the analogue signal requires sampling at more than twice its highest frequency component; sampling at lower rates introduces spurious components due to aliasing. The same applies when one-second samples are reduced to one-minute values: variations with periods shorter than 2 min must first be suppressed, and a box-car mean does this poorly. A numerical low-pass filter can minimise the effects of aliasing in the construction of one-minute values from one-second samples. A Gaussian filter is often used for this purpose, although other types meeting the same requirements are also used. INTERMAGNET now requires numerical filtering of one-minute data as a criterion for attaining INTERMAGNET Magnetic Observatory (IMO) certification, and encourages the use of a Gaussian filter using 90 one-second samples. The USGS, for example, began applying a Gaussian filter to derive its one-minute data in 1995, and the BGS use a 61-point cosine filter, having adopted this method in 1997 when instruments capable of one-second sampling were first installed in the UK.
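A filtered minute value can be illustrated with a Gaussian-weighted average of one-second samples centred on the minute. This is a sketch, not INTERMAGNET's specified filter. It uses an odd-length window of 91 samples so that the weights are symmetric about the minute, and the width parameter sigma = 15 s is an illustrative assumption. The function names are hypothetical.

```python
import math


def gaussian_weights(half_width: int = 45, sigma: float = 15.0) -> list:
    """Normalised Gaussian weights for offsets -half_width..+half_width seconds."""
    raw = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-half_width, half_width + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def filtered_minute(samples: list, centre: int, weights: list) -> float:
    """Weighted average of one-second samples centred on index `centre`."""
    half = len(weights) // 2
    if centre - half < 0 or centre + half >= len(samples):
        raise ValueError("not enough samples around the requested minute")
    window = samples[centre - half:centre + half + 1]
    return sum(w * x for w, x in zip(weights, window))
```

Because the weights taper smoothly to near zero instead of stopping abruptly as a box-car does, short-period variations are suppressed more strongly before the series is reduced to one value per minute. The symmetric window also centres each value exactly on the minute, in line with the 1979 recommendation.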

Finally, we should mention that minute means have not always been centred on the minute; rather, they were often computed from the 60 s of values preceding the minute. It was not until the IAGA meeting in Canberra, Australia, in 1979 that the decision was taken to encourage observatories to centre the minute values exactly on the minute (Resolution 12).

7.2.2.2 Hourly, Daily, Monthly, Annual Means

An ongoing debate within the geomagnetic observatory community concerns how HMVs should be computed when data are missing within the hour, and in particular how accurate an HMV is when the one-minute data for the hour are incomplete. In recent years a number of researchers have considered the factors that may affect the accuracy of HMVs, including how many data points are missing, the distribution of the data gaps (random or in continuous blocks), the level of magnetic activity and the magnetic latitude of the observatory. Both Mandea (2002) and Schott and Linthe (2007) concluded that a reliable HMV could be computed if less than 10% of the data were missing. However, Herzog (2009) found that during magnetically quiet times, including at a high-latitude station, up to two-thirds of the data could be missing without significant loss of accuracy. Newitt (2009) also found the 10% rule to be an over-simplification, leading both to the unnecessary rejection and to the unacceptable inclusion of certain data. He suggested a more statistical approach based on a set maximum permissible error, and this was considered further by Marsal and Curto (2009), who studied the effect of a pre-established relative accuracy on HMVs. Love (2009) stated that the accuracy set for HMVs could not be better than the <5 nT level set for minute data by INTERMAGNET. In his study, he found that HMVs could satisfy this 5 nT level of accuracy, on average, about 90% of the time if the “10% rule” were applied. While no consensus yet exists on this issue, an IAGA task force is currently working towards a resolution (Hejda et al. 2009).
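The “10% rule” discussed above can be expressed as a threshold on the fraction of missing minutes. A minimal sketch, with the threshold left as a parameter because the appropriate value is the very question under debate; gaps are represented by None, “less than 10%” is read strictly, and the function name is hypothetical.

```python
from typing import Optional, Sequence


def hourly_mean(minutes: Sequence[Optional[float]],
                max_missing_fraction: float = 0.10) -> Optional[float]:
    """Mean of the 60 one-minute values hh:00..hh:59 (None marks a gap).

    The result is labelled hour hh but is centred at hh:30. It is rejected
    (None) unless the missing fraction is below max_missing_fraction.
    """
    if len(minutes) != 60:
        raise ValueError("expected 60 one-minute values")
    present = [v for v in minutes if v is not None]
    missing_fraction = (60 - len(present)) / 60
    if not present or missing_fraction >= max_missing_fraction:
        return None
    return sum(present) / len(present)
```

Under the default, an hour with 5 missing minutes yields a value and one with 6 does not. Passing max_missing_fraction=2/3 reproduces a far more permissive rule of the kind Herzog (2009) found acceptable in quiet conditions.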

Questions have also arisen regarding how daily means should be computed. Different results can be obtained depending on whether a daily mean is computed as the average of the 1440 one-minute values of the day or as the average of the 24 hourly values; such differences arise when data are missing during the day. INTERMAGNET guidance states that a daily mean is calculated from 24 hourly values and an hourly value from 60 one-minute values. Monthly and annual means are less sensitive to these differences, although this depends on the amount of missing data and its distribution in time. Monthly and annual means have historically been computed from the daily mean values, both for all days and for magnetically quiet days. At WDC Edinburgh, a note is attached to any annual mean value derived from an incomplete data set, allowing such values to be down-weighted when used in global magnetic field modelling.
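The sketch below shows how the two daily-mean recipes can diverge when one hour is only partly filled. For clarity it computes each hourly value from whatever minutes are present (no missing-data threshold) and requires every hour to have at least one value; both simplifications are assumptions made only for this illustration, and the function names are hypothetical.

```python
from typing import Optional, Sequence


def daily_mean_from_minutes(minutes: Sequence[Optional[float]]) -> Optional[float]:
    """Average of all available one-minute values in the day."""
    if len(minutes) != 1440:
        raise ValueError("expected 1440 one-minute values")
    present = [v for v in minutes if v is not None]
    return sum(present) / len(present) if present else None


def daily_mean_from_hours(minutes: Sequence[Optional[float]]) -> Optional[float]:
    """Average of 24 hourly means, each from that hour's available minutes."""
    if len(minutes) != 1440:
        raise ValueError("expected 1440 one-minute values")
    hourly = []
    for h in range(24):
        hour = [v for v in minutes[60 * h:60 * (h + 1)] if v is not None]
        if not hour:
            return None  # assumption: every hour must contribute a value
        hourly.append(sum(hour) / len(hour))
    return sum(hourly) / 24


# Hypothetical day: 23 full hours at 0 nT, then half of the last hour at 120 nT.
day = [0.0] * (23 * 60) + [120.0] * 30 + [None] * 30
print(daily_mean_from_hours(day))    # 5.0
print(daily_mean_from_minutes(day))  # about 2.55
```

The hourly route gives the partly filled hour the same weight as every other hour, whereas the minute route weights it by its surviving minutes. With complete data the two recipes agree.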

7.2.2.3 Digital Magnetic Activity Indices

Although digital one-minute data became the standard product of observatory operations, the legacy of products derived from analogue magnetograms, including magnetic indices, continued. The long time series of these products were important to researchers studying the magnetic field and developing “magnetic climatologies” that require many decades of data.

The derivation of K-indices from digital data presented its own unique difficulties. At first the data were plotted and printed on paper thus enabling the index to be hand-scaled in the same way as traditionally done from photographic magnetograms. There was, however, a strong incentive to obtain K-indices automatically using computer algorithms. A comparison between the various proposed algorithms was organised by an IAGA Working Group on geomagnetic indices, and four computer-derived methods were found to provide acceptable results when compared to the hand-scaled method. The four methods, discussed in Menvielle et al. (1995), were approved at the IUGG General Assembly in Vienna in 1991. Although many institutes gradually switched to digital derivation, some have continued to use the traditional hand-scaling method.

The convenience and flexibility of digital electronic media provided the opportunity to not only derive existing indices digitally, and in a more timely manner, but also to devise new magnetic activity indices, such as the AE-index and PC-index.

The AE-index is the auroral electrojet index that is derived from a set of 12 observatories located in the auroral zone latitudes of the northern hemisphere (Davis and Sugiura, 1966). Plots of the H-component for each station are superimposed together to form a collection of traces. This collection produces upper and lower bounds of the range of magnetic activity for the group. The maximum positive deviation of the H-traces produces an upper bound (AU), and the maximum negative deviation produces a lower bound (AL). AE is the difference between the two and provides a global measure of the magnetic activity caused by ionospheric currents within the auroral oval. It correlates well with magnetospheric sub-storms that can last for several hours. This index is attractive because it is easy to compute digitally, and can be used to provide a measure of magnetic activity over any time scale desired. It also has known limitations; for example during very large storms the auroral electrojet will move equatorward of AE stations and its strength will no longer be measured (e.g., Akasofu, 1981; Feldstein et al. 1997). This index was endorsed by IAGA in 1969 (Resolution 2).
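The envelope construction can be written in a few lines of Python. The sketch assumes that each station's H trace has already been reduced to a deviation from a quiet-time base level and placed on a common time grid; how that base level is chosen, and which stations are used, are outside its scope. The function name is illustrative.

```python
import math
from typing import List, Optional, Sequence, Tuple


def auroral_electrojet_indices(
    deviations: Sequence[Sequence[Optional[float]]],
) -> Tuple[List[float], List[float], List[float]]:
    """Return (AU, AL, AE) series from per-station H deviations.

    deviations[s][t] is the H deviation (nT) of station s at time step t,
    relative to a quiet-time base level; None marks a missing sample.
    """
    au: List[float] = []
    al: List[float] = []
    ae: List[float] = []
    for sample in zip(*deviations):  # one tuple of station values per time step
        present = [v for v in sample if v is not None]
        if not present:  # no station reported at this time step
            au.append(math.nan)
            al.append(math.nan)
            ae.append(math.nan)
            continue
        upper, lower = max(present), min(present)  # envelope of the superimposed traces
        au.append(upper)
        al.append(lower)
        ae.append(upper - lower)
    return au, al, ae


au, al, ae = auroral_electrojet_indices([[10.0, -50.0], [40.0, -200.0], [-5.0, 20.0]])
print(ae)  # [45.0, 220.0]
```

Because the envelope is taken sample by sample, the same function works for one-minute data or for any coarser resampling, which is the flexibility in time scale mentioned above.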

The PC-index is the polar cap (dimensionless) index designed to measure geomagnetic activity over the polar cap regions using only a single station located near the geomagnetic North and South poles (Troshichev and Andrezen, 1985). These polar stations are Qaanaaq (previously Thule) in Greenland for the northern hemisphere and Vostok in Antarctica for the southern hemisphere. This index is used to describe the principal features of the solar wind and the Interplanetary Magnetic Field (IMF), and indicates the total energy input into the magnetosphere. This was recommended to IAGA in Birmingham (1999) and endorsed in Hanoi (2001). The endorsement was later suspended due to different procedures being used in the derivation of the index in each hemisphere (PCN and PCS). At Sopron, Stauning et al. (2009) outlined a unified procedure now in use for both indices.
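The general form of a single-station polar cap index can be illustrated as follows. In the approach of Troshichev and co-workers, the horizontal disturbance is projected onto an "optimal direction" and normalised with regression coefficients α and β obtained statistically for the station, time of day and season, giving PC = (ΔF − β)/α. The Python sketch below takes α, β and the angle γ as given inputs and assumes the projection ΔF = ΔX cos γ + ΔY sin γ, with γ measured from X towards Y. The actual angle conventions and coefficient tables are defined by the published PCN and PCS procedures and are not reproduced here.

```python
import math


def polar_cap_index(dx_nt: float, dy_nt: float, gamma_rad: float,
                    alpha: float, beta: float) -> float:
    """Single-station polar cap index PC = (dF - beta) / alpha.

    dx_nt, dy_nt: horizontal disturbance components (nT) relative to a quiet level.
    gamma_rad:    angle of the optimal direction, measured here from X towards Y
                  (an assumed convention).
    alpha, beta:  regression coefficients for this station, UT and season (inputs).
    """
    # Project the disturbance vector onto the unit vector (cos gamma, sin gamma).
    d_f = dx_nt * math.cos(gamma_rad) + dy_nt * math.sin(gamma_rad)
    return (d_f - beta) / alpha


print(polar_cap_index(100.0, 0.0, 0.0, alpha=50.0, beta=10.0))  # 1.8
```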

7.2.2.4 One-Second Data

With advances in magnetometers and other hardware systems and circuitry, it was inevitable that observatories would begin collecting and storing one-second data. Currently, data reported from observatories are most commonly one-second values recorded from a magnetometer sampling at 1 Hz. Unlike one-minute and hourly mean data, these are spot values, and the accuracy of the timing (to the millisecond) is not well controlled. Providing true one-second mean data (obtained by filtering higher-frequency data) is a challenging endeavour requiring sophisticated magnetometer specifications, timing accuracy, sampling restrictions, physical and numerical filtering processes, and data processing and storage techniques. The greatest obstacle to overcome is instrumental noise, which at 1 Hz is typically 10 pT/√Hz, greater than the geomagnetic signal at this frequency.
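Producing a one-second value by filtering, rather than reading a single spot sample, can be sketched as a centred, weighted average of higher-rate samples. The 10 Hz input rate, the 21-tap Gaussian window and its width are illustrative assumptions, not an INTERMAGNET specification; a production filter would be designed to a stated frequency response and relies on accurate timing of every sample.

```python
import math
from typing import List, Sequence, Tuple


def gaussian_weights(n_taps: int, sigma_samples: float) -> List[float]:
    """Symmetric Gaussian filter coefficients, normalised to sum to 1."""
    half = n_taps // 2
    raw = [math.exp(-0.5 * ((i - half) / sigma_samples) ** 2) for i in range(n_taps)]
    total = sum(raw)
    return [w / total for w in raw]


def one_second_values(samples: Sequence[float], rate_hz: int = 10,
                      n_taps: int = 21, sigma_samples: float = 4.0
                      ) -> List[Tuple[int, float]]:
    """Filter rate_hz samples down to (second, value) pairs centred on whole seconds.

    Assumes samples[0] was taken exactly on a second boundary and that the
    samples are evenly spaced with no gaps.
    """
    if n_taps % 2 == 0:
        raise ValueError("n_taps must be odd so the filter is centred")
    weights = gaussian_weights(n_taps, sigma_samples)
    half = n_taps // 2
    first = -(-half // rate_hz) * rate_hz  # first whole-second index with a full window
    out = []
    for centre in range(first, len(samples) - half, rate_hz):
        window = samples[centre - half:centre + half + 1]
        out.append((centre // rate_hz, sum(w * x for w, x in zip(weights, window))))
    return out


ramp = [0.1 * i for i in range(100)]  # 10 s of a steadily changing field at 10 Hz
print(one_second_values(ramp)[0])     # second 1, value close to 1.0
```

Because the weights are symmetric and sum to one, a linear trend passes through unchanged, while fluctuations much faster than the window are averaged down.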

At the IAGA conference in Sopron, several authors reported on developments in one-second data. Korepanov et al. (2009) presented the results of tests with a new magnetometer, developed at the Lviv Center of Institute for Space Research in Ukraine, which meets the standards for one-second data set by INTERMAGNET. The magnetometer achieved the 1 pT resolution proposed by INTERMAGNET, its sensitivity threshold is close to 1 pT, and higher-frequency ambient noise, especially from power line harmonics, is effectively damped. Dourbes and Conrad observatories are currently using this particular magnetometer. Worthington et al. (2009a) reported on an effort to estimate the noise levels and timing accuracy of the fluxgate magnetometers and A/D converters used for the one-second data being collected at the USGS, and compared them with the standards proposed by INTERMAGNET. The results showed that the USGS observatories studied have noise levels of 0.01–0.02 nT and timing close to the 10 ms accuracy proposed by INTERMAGNET in 2008.

Other observatories around the world are investigating this issue and developing new techniques and instrumentation to provide one-second data (for example Chulliat et al. 2009a; Shanahan and Turbitt, 2009; Worthington et al. 2009b). Whilst this is a new development for most, the Japan Meteorological Agency has been recording one-second data at Kakioka observatory for about 25 years and at Memambetsu and Kanoya observatories for over 10 years (Minamoto, 2009).

A difficulty with one-second data is the question of what format to use for distribution. The IAGA-2002 format (see Appendix 1) allows for the possibility of one-second data, but only provides for a resolution of 0.01 nT or 10 pT, and this is insufficient for the INTERMAGNET proposal to record one-second data to 1 pT resolution. Nonetheless, it was decided at the INTERMAGNET meeting in Sopron (2009) that one-second preliminary data would be distributed from the website. IMOs will be requested to submit all one-second data that they hold. Initially the IAGA-2002 data format is to be used for these data, despite the resolution problem. Several possibilities for a more satisfactory format were discussed at the Sopron meeting, including: the development of a new format using XML; the development of a new format using the CDF (Common Data Format) [1] currently used in the space physics and magnetic satellite communities; or modifying the current IAGA-2002 format.
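The resolution limit is easy to demonstrate. The following Python sketch writes each value with two decimal places, i.e. 0.01 nT, and shows that two samples differing by 1 pT in every component produce identical text. The function name and the column spacing are hypothetical and only approximate the IAGA-2002 layout; only the two-decimal resolution is taken from the format.

```python
from datetime import datetime
from typing import Sequence


def iaga2002_like_line(ts: datetime, values_nt: Sequence[float]) -> str:
    """Build a data line in the spirit of IAGA-2002: date, time, day of year, values.

    Column widths are illustrative only. Each value is written with two
    decimals, i.e. a resolution of 0.01 nT (10 pT).
    """
    millis = ts.microsecond // 1000
    stamp = f"{ts:%Y-%m-%d %H:%M:%S}.{millis:03d} {ts.timetuple().tm_yday:03d}   "
    return stamp + "".join(f"{v:10.2f}" for v in values_nt)


t = datetime(2009, 8, 24, 0, 0, 1)
line_a = iaga2002_like_line(t, [17250.123, -1810.456, 45123.451, 48650.001])
line_b = iaga2002_like_line(t, [17250.124, -1810.457, 45123.452, 48650.002])
print(line_a == line_b)  # True: 1 pT differences vanish at 0.01 nT resolution
```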

7.2.2.5 Quasi-Definitive Data

The significant time delay between the availability of preliminary data and definitive data from observatories prompted the proposal of a new data type at the 11th IAGA Scientific Assembly in Sopron. Preliminary data are accessible almost immediately in many cases, but the definitive data can take a year or more to become available. Field modellers have a need for what has been termed “quasi-definitive data,” defined as “data corrected using temporary baselines shortly after their acquisition and very near to being the final data of the observatory” (Chulliat et al. 2009b). Such baseline corrected observatory data are useful to modellers to quickly detect geomagnetic jerks (Chulliat et al. 2010), to test IGRF-11 candidate models (Chulliat, 2009c; Finlay et al. 2010) and for the validation of level-2 products within the context of the upcoming Swarm mission (Friis-Christensen et al. 2006), expected to be launched in 2012. The appeal of these data to modellers is apparent, but one concern with this proposal is the notion of the values being “very near” to those of the final definitive data. One standard proposed at the INTERMAGNET meeting following IAGA was that the data should be within 5 nT of the final definitive values, although verification will only be possible following production of the definitive data. Recent studies suggest observatories should be able to meet this requirement, but confirmation of the accuracy achieved will always be after the fact.
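Because the 5 nT criterion can only be checked after the fact, verification amounts to comparing the two series once the definitive data exist. A minimal Python sketch, assuming both series are aligned on the same time stamps with `None` for gaps; the function name and tolerance parameter are illustrative.

```python
from typing import Optional, Sequence


def fraction_within(quasi_definitive: Sequence[Optional[float]],
                    definitive: Sequence[Optional[float]],
                    tolerance_nt: float = 5.0) -> float:
    """Fraction of aligned samples where |QD - definitive| <= tolerance_nt."""
    pairs = [(q, d) for q, d in zip(quasi_definitive, definitive)
             if q is not None and d is not None]
    if not pairs:
        raise ValueError("no overlapping samples to compare")
    hits = sum(1 for q, d in pairs if abs(q - d) <= tolerance_nt)
    return hits / len(pairs)


print(fraction_within([100.0, 103.0, 110.0, 95.0, None],
                      [100.0, 100.0, 100.0, 100.0, 100.0]))  # 0.75
```

Applied separately to each component, this gives the kind of percentage-within-tolerance statistic used to assess quasi-definitive data.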

One study presented in Sopron reported on work carried out at the BGS and demonstrated that quasi-definitive data can be produced in near real-time (Baillie et al. 2009). BGS use piecewise polynomials to estimate baselines, and these are extrapolated to provide the daily baseline values used to construct quasi-definitive data in near real-time. For the five BGS INTERMAGNET observatories, comparisons were made between the X, Y and vertical (Z) component quasi-definitive hourly mean values, available on a next day basis, and the final definitive hourly mean values from 2000 to 2007. The distribution of differences showed that for all observatories the quasi-definitive data were within 5 nT of the final data 94–99% of the time. Peltier and Chulliat (2010) also recently proposed a method for producing quasi-definitive data every month and demonstrated that the difference between quasi-definitive and definitive data was less than 1 nT, well within the proposed INTERMAGNET standard, for nine observatories having very different baseline characteristics. At Sopron, IAGA encouraged magnetic observatories to produce baseline-corrected quasi-definitive data shortly after their acquisition (Resolution 5) (IAGA, 2009).
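The next-day construction can be sketched in a simplified form. BGS fits piecewise polynomials; the Python sketch below instead fits a single straight line by least squares to the most recent baseline values derived from absolute observations, extrapolates it to the target day, and adds that baseline to the variometer readings. The window length and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_line(t: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares straight line; returns (intercept, slope)."""
    n = len(t)
    mean_t, mean_y = sum(t) / n, sum(y) / n
    sxx = sum((ti - mean_t) ** 2 for ti in t)
    if sxx == 0:  # a single epoch: fall back to a constant baseline
        return mean_y, 0.0
    sxy = sum((ti - mean_t) * (yi - mean_y) for ti, yi in zip(t, y))
    slope = sxy / sxx
    return mean_y - slope * mean_t, slope


def extrapolated_baseline(obs_days: Sequence[float], obs_baselines: Sequence[float],
                          target_day: float, window: int = 30) -> float:
    """Baseline for target_day from the last `window` observed baseline values."""
    t, y = list(obs_days)[-window:], list(obs_baselines)[-window:]
    intercept, slope = fit_line(t, y)
    return intercept + slope * target_day


def quasi_definitive(variometer_nt: Sequence[float], baseline_nt: float) -> List[float]:
    """Add the extrapolated baseline to each variometer reading."""
    return [v + baseline_nt for v in variometer_nt]


days = list(range(10))
baselines = [10 + 0.5 * d for d in days]          # a slowly drifting baseline (nT)
b = extrapolated_baseline(days, baselines, target_day=10)
print(quasi_definitive([1.0, -2.0], b))           # [16.0, 13.0]
```

A straight-line extrapolation is the simplest choice and is only trustworthy over short horizons; the piecewise approach allows the baseline model to change where instrument behaviour changes.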

7.3 Data Availability

7.3.1 World Data Centres for Geomagnetism

During the IGY, the ICSU created a number of WDCs that were designed to collect, catalogue, archive and distribute geophysical and solar data sets from a few centralised locations (ICSU Panel on WDCs, 1996). There was, and continues to be, a clear need for a few central repositories where scientists can go to obtain data in a consistent format and avoid catastrophic and irreversible losses of collected data (Mandea and Papitashvili, 2009). Originally, WDCs were set up in the United States (WDC-A), Russia (WDC-B), Europe (WDC-C1) and Asia-Oceania (WDC-C2). This has expanded to other regions, notably China (WDC-D) in 1988. WDCs were originally established to manage and preserve data from the physical sciences, such as geomagnetism, oceanography, and meteorology. Since these beginnings WDCs have expanded greatly in both the disciplines they support and the ways in which they meet the scientific community’s requirements. Today, WDCs are more broadly environmental and include such disciplines as biodiversity and ecology, soils, and land processes. There are now more than 50 WDCs located in 12 different countries, as shown in Fig. 7.6. Geomagnetic observatory data, geomagnetic indices, survey data, models and other sources are maintained at several of these WDCs.

WDCs are funded and maintained by their host countries on behalf of the international scientific community. The WDCs operate under the guidelines of an ICSU panel, and from the outset have championed full and open access, with data made available free of charge or for the nominal cost of reproduction. WDCs are encouraged to exchange data with each other, and there are informal arrangements between data centres holding geomagnetic data to exchange new data sets received. WDCs are also expected to assure a reasonable standard of data quality and documentation. However, the ultimate responsibility for data quality lies with the data contributor and not the WDC (ICSU Panel on WDCs, 1996). Recently Korte et al. (2007) identified a number of inconsistencies in the hourly mean data sets held at the (then) WDC for Geomagnetism in Copenhagen. It is preferable that data producers re-examine their data sets and update the WDC database accordingly. However, in some cases WDCs have made, and continue to make, changes to the data they hold. For example, Dawson et al. (2009) reported in Sopron on efforts by the WDC for Geomagnetism, Edinburgh to correct simple typographical and formatting errors in the data sets. In such cases it is not necessary to return the data to the individual observatory for correction. Improving the metadata associated with data sets, by noting any errors and any changes made, is perhaps the first step to improving the quality of the data held.

7.3.1.1 World Data Centre for Geomagnetism, Edinburgh

The WDC for Geomagnetism, Edinburgh [2] was established in 1966 at the Institute of Geological Sciences in Sussex, which later became the BGS, and moved to its current location in Edinburgh in 1977. BGS, which is part of the Natural Environment Research Council (NERC), a publicly-funded agency, had concentrated on gathering data primarily for use in global magnetic field modelling, mainly annual mean data from the worldwide observatory network. By contrast, the WDC in Copenhagen, hosted by the Danish Meteorological Institute (DMI), gathered one-minute and hourly observatory data and provided online access to these data sets via a ‘Data Catalogue’ website. In 2007, BGS agreed to take over responsibility for these data sets and the operation of this ‘Data Catalogue’ website.

Data now held at the WDC-Edinburgh include digital data from geomagnetic observatories worldwide (Fig. 7.7) including one-minute mean values from 1969; hourly values from 1883; and annual means from 1813. Also available are uncorrected one-second data from 2000 onwards from UK observatories and digital versions of some yearbooks. Data from land, marine and aeromagnetic surveys and repeat stations worldwide from 1900 onwards are available, as well as charts and computations of main field models, including the World Magnetic Model (WMM) and International Geomagnetic Reference Field (IGRF) model. Further digital data include definitive magnetic activity indices (K, Kp, ap, Ap, aa, Aa, Cp and C9); estimated real-time planetary indices (ap_est, Ap_est, aa_est and Aa_est) and solar activity indices (SSN and F10.7) [3]. In addition, its analogue data holdings include ship-borne declination data from 1590 onwards; archived magnetograms for several UK stations from 1850; and a library containing yearbooks, expedition memoirs, original survey observations and similar items. Data are available online, by anonymous FTP or by request.

Fig. 7.7 (a) Locations of geomagnetic observatories worldwide with data held in the Edinburgh WDC. Solid dots indicate operational observatories, open dots are sites that are currently closed. (b) The number of data holdings at the World Data Centre (Edinburgh) for various time resolutions. (Copyright BGS/NERC)

7.3.1.2 World Data Center for Geomagnetism, Kyoto

In 1957, the Faculty of Science, Kyoto University was assigned to establish the WDC-C2 for Geomagnetism [4] since it was well-known for its achievements in geomagnetic research. This led to the foundation of the World Data Archive for Geomagnetism in the Kyoto University Library in December of the same year. In 1976, the Solar Terrestrial Physics Subcommittee of the Science Council of Japan adopted a resolution that the existing data archive should be managed by a new research institution. As a result, on April 18, 1977, the Data Analysis Center for Geomagnetism and Space Magnetism (DACGSM) was established as a new institution in the Faculty of Science, Kyoto University and an associate professor was assigned to it. For over 30 years, DACGSM has been operating the WDC for Geomagnetism, Kyoto and providing a leading data service.

WDC Kyoto holds geomagnetic field data in the form of normal-run magnetograms, rapid-run magnetograms, hourly digital values, one-minute digital values, and one-second digital values. Kyoto also derives geomagnetic indices, including the AE-index and the Dst-index, both of which are IAGA sanctioned indices, as well as the SYM/ASY indices (Iyemori, 1990). Recent advances in computing have made it possible for this data centre to collect and display geomagnetic field data in real-time from more than 30 observatories. Magnetograms have been copied onto microfilm or microfiche and are available back to the early 1900s. Converted digital images of old magnetograms resulting from the ICSU “Magnetogram Rescue Project” are also available from the Kyoto WDC [5].

7.3.1.3 World Data Center for Geomagnetism, Copenhagen

The WDC for Geomagnetism, Copenhagen has been maintained by the National Space Institute at the Technical University of Denmark (DTU Space) [6] since 2010. It was established at the DMI for the IGY in 1957. Its Geomagnetic Data Master Catalogue for geomagnetic observatory hourly means and one-minute means moved to WDC Edinburgh in 2007.

WDC-Copenhagen currently holds digital data for the PCN-index derived from Qaanaaq geomagnetic observatory, and a mirror of the GFZ Potsdam ftp-server, home of the Kp-index. It has substantial analogue holdings and catalogues of these holdings, in the form of searchable PDF files, are available online [7]. This includes approximately 4300 years of magnetograms from about 280 geomagnetic observatories preserved on microfilm or microfiche; about 3200 years of tabulated hourly means from about 230 observatories; nearly 790 years of quick-run or pulsation magnetograms from about 90 observatories; approximately 1400 records of special events from about 90 different observatories; nearly 3300 years of K-indices from about 180 observatories; and on the order of 100 years of Q-index for about 22 observatories. The WDC-Copenhagen holdings also include data from 406 observatories, mainly from the IGY onwards, as well as data from the Second Polar Year, 1932–1933. Most of the data are stored on microfilm, microfiche, and in printed publications.

7.3.1.4 World Data Centre for Geomagnetism, Mumbai

The Indian Institute of Geomagnetism (IIG), Navi Mumbai houses the WDC for Geomagnetism, Mumbai [8]. The centre is situated on the campus of the old Colaba Magnetic Observatory, Bombay, which operated from 1841 to 1905. India’s participation in the study of the Earth’s magnetism dates back to the period 1834–1841, when the country joined the Göttingen Magnetic Union. The WDC for Geomagnetism became fully operational at the IIG in 1991 on the recommendation of ICSU and the WDC Panel.

The centre holdings include magnetograms, geomagnetic hourly values, yearbooks of various observatories, digital data on magnetic tape, diskettes and CD-ROMs, microfilms and microfiche of the magnetograms, solar geophysical data bulletins containing solar rotation numbers; Kp, Ap, Cp and Zürich sunspot numbers, and indices such as Dst, AE, AU, AL, and aa. The centre is equipped with ample facilities for data retrieval, and these data are available in machine readable form upon written request or personal visit to the WDC.

7.3.1.5 World Data Center for Solar-Terrestrial Physics, Moscow

The WDC system in Russia consists of two facilities. The WDC-B was one of the original data centres established in the USSR by the Academy of Sciences of the USSR in 1957 to support the IGY. In 1971, the WDC for Solid Earth Physics (WDC for SEP) in Moscow was established as a part of WDC-B, and is maintained by the Geophysical Center of the Russian Academy of Sciences (RAS). WDC for SEP has concentrated on gathering data about the main magnetic field and its secular variations. A considerable part of these data are stored as publications on paper and microfilm, but some are also available in digital electronic form from their website [9]. Data stored at the WDC for SEP, Moscow include digital annual mean values from 1813 onwards; publications of original land, marine and aeromagnetic survey observations; catalogues of measured values of the geomagnetic field elements, secular variation data, and charts of the geomagnetic field elements and its anomalies.

The other facility is the WDC for Solar-Terrestrial Physics (STP), also in Moscow [10]. The WDC for STP holds analogue data (mainly on microfilm and microfiche) from 1957 for the worldwide network of 264 magnetic stations as normal-run magnetograms, rapid-run magnetograms, hourly-, minute- and daily-mean values, as well as global magnetic activity indices and various publications. All standard geomagnetic data stored in the WDC for STP, Moscow, are accessible on the Moscow SPIDR (Space Physics Interactive Data Resource) website and mirrored worldwide (see Section 7.3.5.1).

Additional data in non-standard formats are available on the website of the WDC for STP. Digital electronic data from the WDC for STP website include data from magnetic observatories in Russia and the Former Soviet Union; hourly-mean values for 38 observatories mainly from the IGY onwards; one-minute values from 41 observatories mainly from 1983 onwards; global magnetic activity indices (aa, Kp, Ap, AE, Dst, Pc); digital images of magnetograms beginning from 1957; ssc from 1868 onwards; and a catalogue of geomagnetic Pc1 pulsations at the Borok and Mirny observatories from 1957–1992. Participation in the ICSU “Rescue of Magnetograms Project” resulted in digital images of magnetograms from nine observatories covering over 100 observatory-years’ worth of data.

7.3.1.6 World Data Center for Solar-Terrestrial Physics, Boulder

WDC-A was one of the original data centres established in the United States by the Coast & Geodetic Survey (C&GS) in 1957 to support management of the full suite of IGY data. The National Geophysical Data Center (NGDC) was created in 1965 to assume responsibility for long-term management of the geophysical data, including the WDCs for Geomagnetism, Gravity, and Seismology. NGDC moved to Boulder, CO from Washington, DC in 1972, assuming responsibility for the WDC-A for Solar Activity hosted by the University of Colorado. Currently, NGDC operates two WDCs: the WDC for Solar-Terrestrial Physics (WDC-STP) [11] and the WDC for Geophysics and Marine Geology (WDC-GMG) [12].

The WDC-STP holds data sets relating to solar activity, the space environment, ionosphere, thermosphere, geomagnetism, and cosmic rays. Geomagnetic observations at magnetic observatories are maintained as one-minute, hourly, daily, monthly, and annual mean values. In addition to the observatory data, the WDC-STP also manages magnetic variation, repeat observation, and land survey data, satellite data and global indices of magnetic activity. The WDC-GMG manages magnetic data collected at sea, aeromagnetic data, and develops and distributes magnetic field models, including the joint US/UK WMM and IAGA IGRF. The marine and airborne magnetic data are available through the GEODAS (Geophysical Data System) online system at [13]. Digital data are available online through their websites or by FTP. The WDC-STP also supports the SPIDR data portal that allows internet users to access, browse, display, and analyse STP data.

7.3.1.7 World Data Centre for Solar-Terrestrial Science, Sydney

The WDC for Solar-Terrestrial Science [14] is operated by IPS (Ionospheric Prediction Service) Radio and Space Services, a program within the Bureau of Meteorology of the Australian Government. The centre was established in 2000 and operates in Sydney, Australia. It contains ionospheric, magnetometer, spectrograph, cosmic ray data and solar images that are available for download via FTP. Currently WDC-Sydney holds magnetometer data for ten locations in Australia and two in Pakistan.

7.3.1.8 World Data Center for Geophysics, Beijing

The WDC for Geophysics [15] is operated by the Institute of Geology and Geophysics, Chinese Academy of Sciences. It was established in 1988 and is based in Beijing, China. With the support of the institute, the Chinese Academy of Sciences and the Ministry of Science and Technology, the centre has established online access to scientific data such as geomagnetic data, gravity data, geoelectric field data and seismic wave data. This WDC holds one-minute and K-index data for Beijing Ming Tombs (BMT) observatory. Much effort has also been made to rescue historical magnetograms from 1877–1962 from Sheshan magnetic observatory. This collection has now been microfilmed and some magnetograms have been scanned and made available from their website (Peng, 2007).

7.3.2 INTERMAGNET

INTERMAGNET stands for International Real-time Magnetic Observatory Network. Its objective, to establish a “global network of cooperating digital magnetic observatories, adopting modern standard specifications for measuring and recording equipment, in order to facilitate data exchange and the production of geomagnetic data products in close to real-time” (St-Louis et al. 2008), has been achieved, and further improvement remains the goal. The origin of the organisation and its current operation are described in detail by Kerridge (2001) and Rasson (2007). Since its inception in 1986 INTERMAGNET has become the de facto organisation for setting standards for the highest quality observatory operations. Data types are defined as Reported (the raw data without any corrections), Adjusted (data that have had some modifications made to them), and Definitive (the final published data), and these codes are used in the headers of the adopted data exchange formats. Instrument standards, such as accuracy and resolution, were also established. Observatories that meet these standards and requirements are known as INTERMAGNET Magnetic Observatories, or IMOs.

In 1991, the USGS proposed that INTERMAGNET produce a CD-ROM of definitive one-minute data from IMOs, based upon a model the USGS had published (Herzog and Lupica 1992). Since then, INTERMAGNET has produced CD-ROMs, and now DVD-ROMs, annually. For 2007, the DVD-ROM contains data for 104 observatories from 41 countries. In 1997 a website was created [16] and this is now an important portal for the availability of both definitive and preliminary worldwide observatory data.

Following the 11th IAGA Scientific Assembly in Sopron, INTERMAGNET management resolved to make both one-second and quasi-definitive data available from all IMOs that are able to provide it (S Flower, personal communication, 2009). It was decided to restore all archived preliminary data to the website. The ability to provide commercial protection of data by time-limited distribution from the website will be introduced. The ‘gold standard’ for near real-time data delivery was agreed to be at 2–3 min delay and a discussion session was held to try to identify techniques that might be used to implement this standard.

7.3.3 World Data System

In 2006 ICSU established an ad-hoc Strategic Committee on Information and Data (SCID) to advise ICSU on the future organisation and direction of its activities in relation to scientific data and information. The SCID considered input from three ICSU Interdisciplinary Bodies: the WDCs, the Federation of Astronomical and Geophysical Data Analysis Services (FAGS) and the Committee on Data for Science and Technology (CODATA).

Despite the success of the WDCs, the review highlighted some basic problems that needed to be addressed. There was no real “system” in the sense of a managed or coordinated effort between the WDCs. While the ICSU WDC panel provided guidance, the WDCs have, for the most part, operated as individual institutions rather than a single entity, and the concept of data interoperability was only minimally implemented. There are also large disparities between WDCs in developed and developing countries; there is no central comprehensive directory or catalogue of the data available at the WDCs; and there is an increasingly urgent problem with old data holdings being at risk of decay, loss, or disposal (ICSU 2008).

In its review the SCID also considered the operation of the FAGS and CODATA. The FAGS [17] was formed by ICSU in 1956 as part of the IGY. Its principal purpose has been to encourage the analysis of long-term data sets and to produce data products for the scientific community. The SCID review identified issues similar to those of the WDCs, with the services being very much a product of history rather than of design. CODATA was established by ICSU in 1966. It provides a cross-disciplinary focus on scientific data and aims to improve the quality and accessibility of scientific data and the methods by which those data are acquired, managed, and analysed.

Following the review by the SCID, at the 2008 ICSU General Assembly, it was agreed to abolish the ICSU Panel on WDCs and create a World Data System (WDS) incorporating the former WDCs, the FAGS, and other centres and services [18]. Existing WDCs and FAGS services must now apply to ICSU to re-establish membership as part of the WDS. The WDS will provide a coordinated and strategic response to the data needs of the global scientific community, including international initiatives such as the International Polar Year (IPY).

The WDS will transition from a set of stand-alone WDCs, and individual Services, to a common globally interoperable distributed data system, while incorporating new scientific data activities. The new system will have advanced interconnections between data management components for disciplinary and multidisciplinary scientific data applications. WDS will have a broader disciplinary and geographic base than the previous ICSU bodies and will strive to become a worldwide ‘community of excellence’ for scientific data. Eventually the WDS plans to implement a Global Data System of Systems (GDSS) that should be interoperable with current and emerging data systems such as the Global Earth Observation System of Systems (GEOSS) [19] (Minster et al. 2009).

7.3.4 The International Service of Geomagnetic Indices

The International Service of Geomagnetic Indices (ISGI) is a collaborative international service established by IAGA and falling within the FAGS. ISGI collects, derives, validates, maintains and distributes time-series of geomagnetic indices and other geomagnetic data products. The service is also responsible for the publication and distribution of any IAGA Bulletins containing information on geomagnetic indices.

The main ISGI operations are carried out at the Laboratoire Atmosphères, Milieux, Observations Spatiales (LATMOS), Paris (official derivation of aa, am, Km). The other ISGI collaborating institutes are: GeoForschungsZentrum (GFZ), Potsdam (official derivation of Kp, Ap and international quiet and disturbed days); Observatorio del Ebro, Roquetes (official derivation of ssc and solar flare effects (sfe)); and WDC-C2 for Geomagnetism, Kyoto (official derivation of Dst and AE). Data availability for the latter is described in more detail in Section 7.3.1.2 and further information on the other institutes follows.

7.3.4.1 LATMOS, France

Established in 2009 following a reorganisation of scientific organisations in France, LATMOS has now replaced Centre d’étude des Environnements Terrestre et Planétaires (CETP) as the main ISGI host. As well as having the primary responsibility for the derivation of the aa, am and Km indices, LATMOS also maintains the ISGI database of the IAGA endorsed indices and operates the ISGI publication office and the main ISGI website [20]. As well as providing online access to the ISGI data, the website contains valuable information (metadata) on the derivation and meaning of the indices. The definitive indices available online are: aa, Kpa (from 1868); am, Kpm (from 1959); Dst (from 1957); AU, AE and AL (from 1957); ssc (from 1868); international quietest days (from 1932); and Kp (from 1932). Monthly bulletins are also produced, which contain various provisional indices and include graphs of musical diagrams of Km and aa. These are circulated by post as well as being made available on the website.

7.3.4.2 GeoForschungsZentrum, Germany

Helmholtz-Center Potsdam, the German Research Center for Geosciences (GFZ), is the national German research centre for the Earth sciences. The Adolf-Schmidt-Observatory Niemegk, belonging to GFZ, derives and maintains the Kp, ap and Ap indices as well as the lesser known planetary indices, Cp and C9. Definitive indices are produced twice a month and quick look versions are provided in near-real time. As well as the planetary indices, GFZ’s ISGI responsibilities include the selection of the international quietest days (Q-days) and most disturbed days (D-days) of each month, which are classified using the Kp indices. These, together with the aforementioned planetary indices and the ssc data provided by Observatorio del Ebro, are all available on the GFZ website [21] in various formats, including ASCII tables, histograms and Bartels music diagrams. They are also available to download directly from the GFZ anonymous FTP site.
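The relationship between Kp, ap and Ap is a fixed table lookup followed by a daily average, which can be sketched in Python. The table is the standard Kp-to-ap conversion, with Kp written in thirds (0o, 0+, 1−, 1o, and so on); the function names are illustrative, and the sketch returns Ap as an unrounded mean.

```python
from statistics import fmean
from typing import Sequence

# ap equivalents for Kp in thirds: 0o, 0+, 1-, 1o, 1+, ..., 9-, 9o.
AP_FOR_KP_THIRDS = (0, 2, 3, 4, 5, 6, 7, 9, 12, 15, 18, 22, 27, 32, 39, 48, 56,
                    67, 80, 94, 111, 132, 154, 179, 207, 236, 300, 400)
SUFFIX_OFFSET = {"-": -1, "o": 0, "+": 1}


def kp_to_thirds(kp: str) -> int:
    """Convert Kp notation such as '3+' or '5o' to an integer number of thirds."""
    if len(kp) != 2 or not kp[0].isdigit() or kp[1] not in SUFFIX_OFFSET:
        raise ValueError(f"unrecognised Kp value: {kp!r}")
    thirds = 3 * int(kp[0]) + SUFFIX_OFFSET[kp[1]]
    if not 0 <= thirds <= 27:  # '0-' and '9+' do not exist
        raise ValueError(f"Kp out of range: {kp!r}")
    return thirds


def ap_from_kp(kp: str) -> int:
    """3-hourly equivalent amplitude ap for one Kp value."""
    return AP_FOR_KP_THIRDS[kp_to_thirds(kp)]


def daily_ap(kp_values: Sequence[str]) -> float:
    """Ap as the mean of the eight 3-hourly ap values of a UT day (unrounded)."""
    if len(kp_values) != 8:
        raise ValueError("expected eight 3-hourly Kp values")
    return fmean(ap_from_kp(kp) for kp in kp_values)


print(daily_ap(["0o", "1-", "2+", "3o", "4-", "5+", "9o", "0+"]))  # 63.375
```

The example shows why Ap is sensitive to a single disturbed interval: one 9o value contributes 400 of the day's total of 507.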

7.3.4.3 Observatorio del Ebro, Spain

The Ebro Observatory is a research institute founded by the Society of Jesus in 1904 to study the Sun-Earth relationships. It is a non-profit organisation associated with the Spanish Research Council (CSIC). Since 1975, Ebro Observatory has been entrusted by IAGA to host the International Service on Rapid Magnetic Variations. It has responsibility for collecting, creating and publishing the official IAGA lists of rapid variations, which consist of ssc and sfe records. Annual lists of ssc data are available online from 1868 and sfe data from 1995 [22]. Monthly updates are provided to LATMOS and GFZ for further distribution.

7.3.5 Other Data Resources

7.3.5.1 Space Physics Interactive Data Resource

The Space Physics Interactive Data Resource (SPIDR) [23] is a data portal designed to let solar-terrestrial physics users access and manage historical space physics data for integration with environment models and space weather forecasts. SPIDR is a distributed network of synchronised databases, web portals and web services, with sites in Boulder, Paris, Nagoya, Sydney, Beijing, Kiev and Cape Town. SPIDR databases include geomagnetic one-minute, hourly mean and annual mean values, as well as global geomagnetic and solar indices.

7.3.5.2 Observatory Operator’s Websites

Many institutions operating magnetic observatories today have their own websites, which give the user community an additional route to geomagnetic observatory data and data products. Since the advent of digital data distribution in the 1990s, observatories have not always submitted their data to a WDC as recommended by IAGA. It is recognised (Mandea and Papitashvili, 2009) that the traditional “push data” approach (to a WDC, for example) is gradually being replaced by a “pull data” approach using data mining techniques such as virtual observatories (VO).

7.3.5.3 Variometer Networks

In addition to the data resources mentioned so far, from which full-field definitive observatory data and activity indices can be obtained, a large number of variometer stations around the globe provide further data. Unlike magnetic observatories, variometer stations record only the short-term variations in the magnetic field, not its absolute level. These instruments complement the network of magnetic observatories, and their measurements are used especially in solar-terrestrial research.
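
The missing absolute level is what separates the two kinds of station. At an observatory, periodic absolute observations are compared with the variometer reading at the same instant. The differences (baseline values) are interpolated in time and added to the variometer output to give absolute values. A variometer-only station has no such absolute observations. The sketch below illustrates this for a single component in nT, assuming linear interpolation between baseline points. Real baseline processing is considerably more careful, and the function names and numbers are hypothetical.

```python
from bisect import bisect_right


def baseline_points(absolute_obs, variometer):
    """Baseline = absolute measurement minus the variometer reading at the same time.

    absolute_obs: list of (time, absolute value in nT)
    variometer:   dict mapping time -> variometer reading in nT (arbitrary offset)
    """
    return sorted((t, value - variometer[t]) for t, value in absolute_obs)


def baseline_at(points, t):
    """Linearly interpolate the baseline at time t; hold it constant outside the range."""
    times = [p[0] for p in points]
    if t <= times[0]:
        return points[0][1]
    if t >= times[-1]:
        return points[-1][1]
    i = bisect_right(times, t)
    (t0, b0), (t1, b1) = points[i - 1], points[i]
    return b0 + (b1 - b0) * (t - t0) / (t1 - t0)


def to_absolute(variometer, points):
    """Add the interpolated baseline to every variometer reading."""
    return {t: v + baseline_at(points, t) for t, v in sorted(variometer.items())}


# Invented single-component example; times in hours.
variometer = {0: 120.0, 5: 130.0, 10: 140.0}
absolute_obs = [(0, 20120.0), (10, 20150.0)]
points = baseline_points(absolute_obs, variometer)
print(to_absolute(variometer, points))  # {0: 20120.0, 5: 20135.0, 10: 20150.0}
```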

A large amount of data, past and present, exists from many different magnetometer chains or networks around the world. A worldwide collaborative effort to bring these together has produced a single source of ground-based magnetometer data, SuperMAG [24]. This portal provides access to variometer data from more than 200 stations worldwide. Data are available from 1997 and can be viewed and downloaded online as line plots and maps.

SuperMAG relies on data from a variety of sources, including magnetometer networks such as IMAGE [25] (International Monitor for Auroral Geomagnetic Effects) and CARISMA [26] (Canadian Array for Realtime Investigations of Magnetic Activity). IMAGE is a network of 31 magnetometer stations operated by 10 institutions from Estonia, Finland, Germany, Norway, Poland, Russia and Sweden. CARISMA operates an array of 25 stations throughout Canada. INTERMAGNET also contributes data to SuperMAG, since magnetic observatories can double as variometer stations. Dozens of similar magnetometer networks exist across the globe, too numerous to mention here. Most are collaborative ventures in their own right, relying in turn on the individual institutes that operate the stations.

7.4 Metadata and Metadata Standards

Metadata are an increasingly important aspect of geomagnetic data provision, both for the WDCs and for other data providers. Metadata are information about data; they are the “who, what, when, where, why and how” of a data set. They describe the content, quality, originator, and other characteristics of a data set that help users understand the nature of that data set and how to use it. As time passes, and personnel, instrumentation, and data processing procedures change, it is important that current and future generations have sufficient information about the data to enable them to independently understand and use them. The objective is to provide documentation that ensures that the values contained in a data set have the necessary reference information, and that even non-experts in a discipline will be able to use the data properly.

As Buneman (2005) notes, the web has radically changed the way scientific research is carried out, providing more rapid and varied access to data, but it has also created new problems in maintaining the scientific record. Because data can readily be copied and altered, researchers need some means of verifying authorship and data provenance. For data producers, too, data citation is a growing concern. Wayne (2005a; 2005b) demonstrates the many benefits of metadata but also some of the obstacles to producing it. Adhering to a metadata standard can be complex and time-consuming, and there may be little immediate, tangible benefit to data producers. However, the potential benefit is great.
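
One simple way to check that a copy of a data file has not been altered is to record a cryptographic digest of the file in its metadata and recompute it on receipt. This is offered as an illustration, not as a mechanism the cited authors propose. The record fields, station code and file content below are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def make_record(data: bytes, originator: str, version: str) -> dict:
    # Hypothetical metadata fields, not taken from any standard.
    return {"originator": originator, "version": version, "sha256": sha256_hex(data)}


def verify(data: bytes, record: dict) -> bool:
    """True if the data still match the digest stored in the metadata."""
    return sha256_hex(data) == record["sha256"]


# Invented file content for a hypothetical station "XYZ".
original = b"XYZ 2009-01-01T00:00 17512.3 -1843.0 46120.5\n"
record = make_record(original, "Example Institute", "definitive-v1")
altered = original.replace(b"17512.3", b"17513.3")
print(verify(original, record), verify(altered, record))  # True False
```

A digest shows only that the bytes changed, not who changed them or why. Authorship would still depend on the surrounding metadata, or on a digital signature.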

The subject of metadata was raised at the IAGA assembly in Sopron and at the INTERMAGNET meeting that followed. Reay et al. (2009) highlighted the need for good data provenance to support scientific examination of geomagnetic data sets. Better metadata would improve the curation of data at the WDCs, helping to address inconsistencies seen in data sets and providing a clear ‘paper trail’ of any transformations or corrections applied to the data. It would also give researchers clear quality assurance, assisting data selection for global field models. In addition, common, well-developed metadata improve the “discoverability” of data through the many metadata clearinghouses.

Svalgaard and Cliver (2007) discuss current issues of data quality within the WDCs and note that metadata, especially for historical data, are sorely lacking. They also point out the present difficulty of feeding corrections back into the WDCs. If comprehensive metadata records were associated with a data set, a researcher could trace all the processing steps applied to it and more easily identify any inconsistencies. Such records would also give archivists a means of documenting any subsequent corrections.
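
Such a record could take the form of a processing log attached to each data set, to which archivists append later corrections. The minimal sketch below is one possible shape for such a log, not a format used by any WDC. The class names, data set identifier and step descriptions are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ProcessingStep:
    performed_on: date
    actor: str        # institute or archivist that applied the step
    description: str  # what was done to the data


@dataclass
class DataSetHistory:
    dataset_id: str
    steps: list = field(default_factory=list)

    def record(self, step: ProcessingStep) -> None:
        # Only appending is offered, so earlier steps are never rewritten.
        self.steps.append(step)

    def trail(self) -> list:
        """Human-readable processing history, oldest step first."""
        return [f"{s.performed_on.isoformat()} {s.actor}: {s.description}"
                for s in sorted(self.steps, key=lambda s: s.performed_on)]


history = DataSetHistory("XYZ-1995-hourly")  # hypothetical data set identifier
history.record(ProcessingStep(date(1996, 3, 1), "Observatory",
                              "definitive hourly means produced"))
history.record(ProcessingStep(date(2008, 6, 15), "WDC",
                              "sign error in D for March 1995 corrected"))
print("\n".join(history.trail()))
```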

Consider another scenario: suppose you wanted to gather all geomagnetic data recorded by fluxgate magnetometers between 1990 and 2000 at stations between 30°N and 50°N latitude. Currently this would require a significant amount of investigation to determine which observatories qualify and where to obtain their data. If such simple metadata were recorded and stored in a central database using a known metadata standard, this type of query would take seconds.
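
With the metadata held in a structured form, the query in this scenario reduces to a simple filter. The sketch below assumes that each station record carries a latitude and a list of instrument periods. All station codes, dates and class names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class InstrumentPeriod:
    instrument_type: str  # e.g. "fluxgate"
    start_year: int
    end_year: int         # inclusive


@dataclass
class Station:
    code: str
    latitude: float       # degrees, positive north
    instruments: list


def overlaps(period, start, end):
    """True if the instrument period shares at least one year with [start, end]."""
    return period.start_year <= end and period.end_year >= start


def find_stations(stations, instrument_type, start, end, lat_min, lat_max):
    return [s.code for s in stations
            if lat_min <= s.latitude <= lat_max
            and any(p.instrument_type == instrument_type and overlaps(p, start, end)
                    for p in s.instruments)]


stations = [
    Station("AAA", 35.2, [InstrumentPeriod("fluxgate", 1985, 2005)]),
    Station("BBB", 55.0, [InstrumentPeriod("fluxgate", 1990, 2000)]),  # too far north
    Station("CCC", 42.7, [InstrumentPeriod("suspended-magnet variometer", 1950, 1993),
                          InstrumentPeriod("fluxgate", 1994, 2009)]),
    Station("DDD", 31.0, [InstrumentPeriod("suspended-magnet variometer", 1960, 2009)]),
]
print(find_stations(stations, "fluxgate", 1990, 2000, 30.0, 50.0))  # ['AAA', 'CCC']
```

This version returns stations with fluxgate data for any part of the interval. Requiring full coverage would only change `overlaps`.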

At the Sopron assembly, IAGA recognised the importance of metadata preservation in supporting geophysical studies (Resolution 7) and encouraged relevant agencies to support the generation, preservation and dissemination of metadata to ensure the future usability of these data for interdisciplinary studies. A session on metadata has been proposed for the next IUGG General Assembly in 2011.

INTERMAGNET has assigned a representative to work with groups in Japan, the UK, the US and elsewhere on station-level metadata requirements and proposals. INTERMAGNET will notify the IMOs that a proposed standard is forthcoming and that they should prepare to incorporate a new element into observatory operations. It was also suggested that observatories put more effort into producing yearbooks, omitting magnetogram plots if that would make production easier. The WDCs are also considering this issue, and a wiki has been established to coordinate further discussion.

There are many issues to be considered in the development of a metadata standard for magnetic observatories. In the following sections we discuss some of the types of metadata currently available, or in development, that might provide a solution to capturing this important information resource.

7.4.1 Magnetic Observatory Metadata

7.4.1.1 Yearbooks

Yearbooks provided the earliest form of metadata for geomagnetic observatories, and are still being produced by many institutions today. These contain a permanent record of the status of the observatory for a given year and generally contain information about the station location, instrumentation, contact persons and record significant events or changes to the observatory throughout the year. These are published alongside the actual data products reported from an observatory.

Traditionally, yearbooks were produced as printed books, and copies are held in libraries, institutes and WDCs across the globe. Nowadays they are often produced as electronic PDF files, available from the websites of some institutions, the WDCs and INTERMAGNET [27]. Some efforts are also being made to scan historical yearbooks, allowing electronic access to these important records.

Whilst the metadata held in yearbooks are often precisely what we wish to capture in a metadata standard, the format in which this information is held is not standardised and is not suitable for data discovery applications. First and foremost, observatory operators should be encouraged to record this metadata in whatever form; those within the geomagnetism community engaged in data curation must then consider how to transfer it into an established metadata format. Improvements in data mining may offer a way forward in this respect.

7.4.1.2 INTERMAGNET

The best source for digital observatory metadata, at least for those observatories within the organisation, is INTERMAGNET. Each year INTERMAGNET publish annual definitive observatory results on a CD/DVD-ROM. Alongside the data a Readme file is supplied for each observatory detailing basic information on location, instrumentation, sampling, filtering, contacts etc. This ASCII text file is in a standardised format with mandatory and optional fields. As these metadata records are directly related to a single published dataset they also provide temporal metadata that is challenging to capture otherwise.
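
Because the Readme has a standardised layout with mandatory and optional fields, it can be checked automatically. The sketch below shows that idea only. It assumes a simple `Key: value` line layout and hypothetical field names. The real INTERMAGNET Readme layout is defined by INTERMAGNET and is not reproduced here.

```python
# Hypothetical field names; not the actual INTERMAGNET Readme specification.
MANDATORY = {"station", "latitude", "longitude", "instrument", "sampling"}
OPTIONAL = {"filtering", "contact", "notes"}


def parse_readme(text: str) -> dict:
    """Collect 'Key: value' lines into a dict with lower-case keys."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip lines without a colon, e.g. blank lines
        key, value = line.split(":", 1)
        fields[key.strip().lower()] = value.strip()
    return fields


def check_fields(fields: dict) -> tuple:
    """Return (missing mandatory fields, unrecognised fields), both sorted."""
    present = set(fields)
    missing = sorted(MANDATORY - present)
    unknown = sorted(present - MANDATORY - OPTIONAL)
    return missing, unknown


readme = """Station: XYZ
Latitude: 52.0
Longitude: 1.0
Instrument: fluxgate
Contact: data@example.org
"""
print(check_fields(parse_readme(readme)))  # (['sampling'], [])
```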

Beyond this published metadata, much implicit metadata is associated with classifying an observatory as an IMO. If an observatory meets INTERMAGNET standards, much can be inferred about its data processing, quality assurance, data distribution and instrumentation. To a non-expert, however, most of this information is not readily apparent: to access this type of metadata one would need to consult the Technical Reference Manual (St-Louis et al., 2008), which is held separately from the data.

7.4.1.3 Metadata Standards

Despite the wealth of metadata, in yearbooks and from INTERMAGNET, there is currently no metadata standard that can adequately describe data from magnetic observatories. With metadata, standardisation is important because it provides a common set of formats, terminology and definitions that facilitates the use and exchange of metadata digitally. It provides uniformity and consistency over time, enabling the development of tools and resources for metadata exchange and manipulation. One might say that metadata standards are to metadata what data formats are to data.

Considering what form a metadata standard for magnetic observatory data may take, we can suggest some of the basic information that would be required. These include, but are not limited to:

- Contact Information: The name of the responsible institute that produces the data, including addresses and personal contacts.

- Data Description: A description of the type of data, including a narrative summary of the nature of the data and its possible applications and a list of entities and attributes relevant to the data.

- Station Description: A description of the station in question, including coordinates, elevation, and possibly photographs and maps.

- Instrumentation: A description of the types of instruments in use at the observatory, and the nature of the data that are retrieved from them.

- Data Processing: A description of the processes and methodology used to process the data from instrument recordings to the final definitive values. This should include a description of data formats and how the numerical values are to be interpreted.

- Data Quality: A general assessment of the quality of the data set considering completeness, accuracy, quality control methods and so on.

- Data Distribution: A description of how and from where the data may be acquired, particularly through the WDCs.
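
These elements can be pictured as a structured record that software can validate and exchange. The class and field names below are hypothetical. They mirror the list above and are not drawn from any existing standard. JSON is used only as a convenient example serialisation.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Contact:
    institute: str
    address: str
    person: str
    email: str


@dataclass
class ObservatoryMetadata:
    contact: Contact
    data_description: str          # narrative summary and possible applications
    station_code: str
    latitude: float                # degrees north
    longitude: float               # degrees east
    elevation_m: float
    instruments: list = field(default_factory=list)
    processing: str = ""           # route from raw recordings to definitive values
    data_quality: str = ""         # completeness, accuracy, quality control methods
    distribution: list = field(default_factory=list)  # where the data can be obtained


record = ObservatoryMetadata(
    contact=Contact("Example Geophysical Institute", "1 Example Road",
                    "A. Observer", "obs@example.org"),
    data_description="Definitive one-minute and hourly mean values of X, Y, Z and F.",
    station_code="XYZ", latitude=52.0, longitude=1.0, elevation_m=40.0,
    instruments=["fluxgate variometer", "proton precession magnetometer"],
    distribution=["World Data Centre", "INTERMAGNET"],
)
text = json.dumps(asdict(record), indent=2)  # nested Contact becomes a nested object
print(text)
```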

To help us towards a metadata standard for geomagnetic observatory data, we must look to the standards currently in use for other, similar data sets. Metadata standards established for geospatial data may act as such a guide. While these standards address many of the basic requirements of our metadata (for data discoverability, distribution, etc.), they lack the scope to deal with parameters that change with time. For an observatory nothing is fixed for all time: instrumentation, processing techniques, responsible institutions, staff contacts, data types, data quality and even the observatory location can change. How to address this issue is one of the greatest challenges facing metadata standardisation.
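
One common way to handle parameters that change with time is to store each value with the interval over which it was valid, and then look values up by date. This is sketched below as an illustration, not as a feature of any existing metadata standard. The class, function and instrument history are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Versioned:
    value: str
    valid_from: date                  # first day the value applies
    valid_to: Optional[date] = None   # first day it no longer applies; None = still current

    def covers(self, day: date) -> bool:
        return self.valid_from <= day and (self.valid_to is None or day < self.valid_to)


def value_on(history, day):
    """Return the value in force on a given day, or None if no entry applies."""
    for entry in history:
        if entry.covers(day):
            return entry.value
    return None


variometer_history = [
    Versioned("suspended-magnet variometer", date(1960, 1, 1), date(1988, 7, 1)),
    Versioned("fluxgate variometer", date(1988, 7, 1)),
]
print(value_on(variometer_history, date(1975, 5, 20)))  # suspended-magnet variometer
print(value_on(variometer_history, date(2009, 8, 30)))  # fluxgate variometer
```

The same pattern applies to contacts, processing methods or coordinates. Each then has its own history, and a single record covers the whole life of the observatory.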

7.4.2 FGDC Standard

One of the first standards produced for geospatial data was developed by the Federal Geographic Data Committee (FGDC) in the US [28], which was designed primarily as the template for storing and distributing geographic spatial metadata. The FGDC standard consists of seven information sections:

1. Identification: Basic information about the data set and originator.

2. Data Quality: General assessment of the quality of the data set.

3. Spatial Data Organisation: Used to represent data set spatial information.

4. Spatial Reference: Reference frame for data set coordinates.

5. Entity and Attribute: Details about the information content.

6. Distribution: Distributor information and how to obtain the data set.

7. Metadata Reference: Metadata responsible parties and current status.

This standard is complex, requiring the data provider to fill in many metadata fields to comply, often in terminology that is unclear to non-experts in the standard. The FGDC standard was developed primarily for data products that have a single publication date, such as maps, and consequently has limitations with regard to parameters that change in time, an important factor for observatory metadata: one would have to write a separate record for each change that occurs.
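
The cost of one record per change shows up when an observatory's history is flattened into static, single-date snapshots, because a change in any parameter starts a new full record. The minimal sketch below illustrates this. The parameter names, dates and values are hypothetical, and the snapshot layout is not the FGDC schema.

```python
from datetime import date

# Each parameter maps to a list of (date the value took effect, value), sorted by date.
changes = {
    "instrument": [(date(1980, 1, 1), "suspended-magnet variometer"),
                   (date(1992, 6, 1), "fluxgate variometer")],
    "contact":    [(date(1980, 1, 1), "A. Observer"),
                   (date(1999, 1, 1), "B. Observer")],
    "sampling":   [(date(1980, 1, 1), "one-minute"),
                   (date(2009, 1, 1), "one-second")],
}


def static_records(changes):
    """Return one snapshot per distinct change date, each a full copy of every parameter."""
    change_dates = sorted({d for history in changes.values() for d, _ in history})
    records = []
    for start in change_dates:
        snapshot = {"valid_from": start}
        for name, history in changes.items():
            # Latest value that took effect on or before this date.
            current = [v for d, v in history if d <= start]
            snapshot[name] = current[-1] if current else None
        records.append(snapshot)
    return records


records = static_records(changes)
print(len(records))  # 4 snapshots for three parameters that each changed once
```

Every snapshot repeats all the unchanged values. As more parameters are tracked, the number of records grows with the total number of changes.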