Abstract

Uncertainty evaluation is one of the important areas that needs to be strengthened for effective implementation of the risk-based approach. At the outset, this chapter introduces the broad concepts of integrated risk-based engineering and examines the capability of current approaches for uncertainty modeling as applicable to integrated risk-based engineering. A brief overview of the state of the art in uncertainty analysis for nuclear plants and the limitations of current approaches in the quantitative characterization of uncertainties is presented. The role of qualitative or cognitive-based approaches is also discussed for scenarios where a quantitative approach is not adequate.

Keywords

- Uncertainty characterization

- Risk-based engineering

- Probabilistic safety assessment

- Nuclear plants

- Safety assessment

1 Introduction

Existing literature on safety assessment for nuclear plants uses two terms, viz., “risk-based decisions” and “risk-informed decisions,” for dealing with regulatory cases. In the context of nuclear plant safety evaluation, risk-based engineering deals with the evaluation of safety cases using probabilistic safety assessment (PSA) methods alone, while in the risk-informed approach decisions are based primarily on deterministic methods, including design and operational insights, and PSA results either complement or supplement the deterministic findings [1]. These approaches implicitly treat probabilistic and deterministic methods as two separate domains. Ideally, however, any problem or model requires deterministic and probabilistic methods to be considered together; otherwise, the solution is neither adequate nor complete. Even the risk-informed approach, which takes the deterministic approach as primary and the probabilistic approach as supplementary/complementary, treats the two in an explicit, separate manner. The fact is that even deterministic variables, like design, process, and nuclear parameters, are often random in nature and require probabilistic treatment. Defense-in-depth along with other principles, viz., redundancy, diversity, and fail-safe design, forms the basic framework of the deterministic approach. Probabilistic treatment helps to characterize the reliability of the various barriers of protection, the basic instrument of defense-in-depth. Similarly, probabilistic methods cannot work in isolation and require deterministic input in terms of plant configurations, failure criteria, design inputs, etc. Hence, it can be argued that a holistic approach is required in which deterministic and probabilistic methods work in an integrated manner in support of decisions related to design, operation, and regulatory review of nuclear plants. The objective should be to remove the overconservatism and prescriptive nature of the current approach, bring in rationale, and make the overall process of safety evaluation scientific, systematic, effective, and integrated in nature.

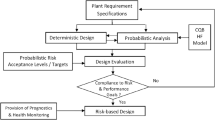

Integrated risk-based engineering is a new paradigm that is being introduced through this chapter. In this approach, the deterministic as well as probabilistic methods are integrated to form a holistic framework to address the safety issues. However, the key issues that need to be considered for applications are characterization of uncertainty, assessment of safety margins, and requirements of dynamic models for assessment of accident sequence evolution in time domain.

Another significant feature of this chapter is that, perhaps for the first time, the term “risk-based engineering” is used rather than the traditional “risk-based decisions.” The reason is that the terms “risk-based” and “risk-informed” have traditionally been associated with regulatory decisions. However, the knowledge base now available and the tools and methods developed over the years make the case for the risk-based approach to qualify as a discipline, “risk-based engineering.” Hence, it is proposed that the term “integrated risk-based engineering” has relevance to any area of engineering, be it design or operations, including regulatory reviews.

Keeping in view the theme of this conference, the aspects related to uncertainty are discussed. Included here is a brief overview of uncertainty evaluation methods in risk-based applications and of the requirements related to epistemic and aleatory uncertainty. Further, the qualitative or cognitive aspects of uncertainty are also discussed. This chapter treats the subject in a philosophical manner, and a conscious decision has been made not to cover the specifics that can be found in the referenced literature.

2 Integrated Risk-Based Engineering: A Historical Perspective

It is generally felt that the traditional approach to safety assessment is purely deterministic in nature. It is true that most of the cases evaluated as part of safety assessment employ deterministic models and methods. However, if we look at the assumptions, boundary conditions, factors of safety, data, and models, it can be argued that there is a good deal of probabilistic element even in traditional safety analysis. These elements or variables carried qualitative notions for bounding situations and often provided comparative or relative assessments of two or more propositions. To understand this point further, let us review the traditional safety analysis report(s) and take a fresh look at the broader aspects of this methodology. Traditional safety analysis was based on the maximum credible accident, which for nuclear plants was mainly the loss-of-coolant accident (LOCA), the loss-of-regulation accident, etc. It was assumed that plant design should consider LOCA and other scenarios, like station blackout, to demonstrate that the plant is safe enough. A reference to a safety report makes clear that there is an element of probability, expressed in a qualitative manner, for example, (a) the possibility of two-out-of-three train failure is very low, (b) the possibility of a particular scenario involving multiple failures is very unlikely or low, and (c) a series of assumptions that form bounding conditions. These aspects show that probabilistic considerations, though qualitative in nature, were part of deterministic methods. Together with the use of factors of safety in design as part of deterministic methods, they bring out the fact that the safety analysis approach has been integrated since inception. Against this background, current safety requirements call for a process of safety evaluation that should (a) be rational and not prescriptive in nature, (b) remove overconservatism, (c) be holistic in nature, (d) provide an improved framework for addressing uncertainty, (e) allow realistic safety margins, and (f) provide a framework for the dynamic aspects of accident scenario evaluation.

The integrated risk-based approach as mentioned above is expected to provide an improved framework for safety engineering. Here, the deterministic and probabilistic approaches treat the issues in an implicit manner, unlike the risk-informed approach, where the two approaches are employed in an explicit manner. In this approach, issues are addressed in an integrated manner employing deterministic and probabilistic approaches. From the point of view of uncertainty characterization, this approach addresses random phenomena as aleatory uncertainty and model- and data-related uncertainty as epistemic uncertainty. There is a host of issues in safety analysis where handling of the uncertain issue is more important than its quantification. These rather qualitative aspects of uncertainty, or cognitive uncertainty, need to be addressed through safety provisions in the plant. The integrated risk-based framework proposes to address these issues.

3 Major Issues for Implementation of Integrated Risk-Based Engineering Approach

One of the major issues that forms a bottleneck in realizing applications of risk-based engineering is the characterization of uncertainty associated with data and models. Even though reasonable data exist for internal events, characterization of external events poses major challenges. Apart from this, the availability of probabilistic criteria and safety margins as national policy for regulation, and issues related to new and advanced features of the plants, like passive system modeling, digital system reliability in general, and software system reliability, pose special challenges. The relatively large uncertainties associated with common cause failures of hardware systems and with human actions, particularly in accident scenarios, are another major issue.

It can be argued that reducing the uncertainty associated with data and models, and characterizing uncertainties, particularly for rare events where data and models are either not available or inadequate, is one of the major challenges in the implementation of integrated risk-based applications.

4 Uncertainty Analysis in Support of Integrated Risk-Based Decisions: A Brief Overview

Even though there are many definitions of uncertainty in the literature, the one that suits risk assessment, or rather risk-informed/risk-based decisions, has been given by ASME as “representation of the confidence in the state of knowledge about the parameter values and models used in constructing the PSA” [2]. Uncertainty characterization in the form of qualitative assessment and assumptions has been an inherent part of risk assessment. However, as data, tools, and statistical methods developed over the years, quantitative methods for risk assessment came into being. Uncertainty in estimates has been recognized as an inherent part of any analysis result. The actual need for uncertainty characterization was felt while addressing many real-time decisions related to the assessment of realistic safety margins.

The major development has been the classification of uncertainty based on its nature, viz., aleatory uncertainty and epistemic uncertainty. Uncertainty due to randomness (chance phenomena) in the system/process is referred to as aleatory uncertainty. This type of uncertainty arises from the inherent characteristics of the system/process and data. Aleatory uncertainty cannot be reduced, as it is an inherent part of the system; this is why it is also called irreducible uncertainty [3]. Its nature can be illustrated by examples: whether a flipped coin shows head or tail is a matter of chance, and a diesel set called upon to start on demand may either succeed or fail. On the other hand, uncertainty due to lack of knowledge is referred to as epistemic uncertainty. This uncertainty can be reduced by performing additional experiments or by acquiring more data and information about the system. This type of uncertainty is more subjective in nature.
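The distinction can be illustrated with a minimal sketch for the diesel start-on-demand example. It assumes a simple binomial model and an illustrative failure probability of 0.02; neither comes from the chapter. The spread of a single demand outcome (aleatory) does not depend on how much data have been collected, whereas the standard error of the estimated probability (epistemic) shrinks as demands accumulate.

```python
import math

def aleatory_variance(p: float) -> float:
    """Variance of a single start-on-demand outcome (Bernoulli); data cannot reduce it."""
    return p * (1.0 - p)

def epistemic_std_error(p_hat: float, n_demands: int) -> float:
    """Standard error of the estimated failure probability under a binomial model."""
    return math.sqrt(p_hat * (1.0 - p_hat) / n_demands)

P_HAT = 0.02  # assumed failure-to-start probability, illustrative only

for n in (50, 500, 5000):
    print(n, aleatory_variance(P_HAT), epistemic_std_error(P_HAT, n))
```

In this sketch, more test demands narrow the knowledge about the probability but leave the chance character of each individual start unchanged.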

If we look at the modeling and analysis methods based on statistical distributions, we note that probability distributions provide one of the important and fundamental mechanisms to characterize uncertainty in data by estimating upper and lower bounds of the data [4, 5]. Hence, probability distributions are central to the characterization of uncertainty. Apart from this, the fuzzy logic approach also provides an important tool to handle uncertainty where the information is imprecise and where the probability approach is not adequate to address the issues [6]. For example, in many situations the performance data on systems and components are not adequate, and the only input available is the opinion of domain experts. There are also many situations where linguistic variables have to be used as input. The fuzzy approach suits these requirements.
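As a hedged illustration of how a linguistic input can be turned into numbers, the sketch below represents one linguistic term as a triangular fuzzy number and extracts an interval from it with an alpha-cut. The triangular shape, the log10 failure-probability scale, and the breakpoints are assumptions made only for this example; the chapter does not prescribe any particular membership function.

```python
def triangular_membership(x: float, low: float, peak: float, high: float) -> float:
    """Degree of membership of x in a triangular fuzzy number (low < peak < high)."""
    if x <= low or x >= high:
        return 0.0
    if x <= peak:
        return (x - low) / (peak - low)
    return (high - x) / (high - peak)

def alpha_cut(low: float, peak: float, high: float, alpha: float) -> tuple:
    """Interval of values whose membership is at least alpha (0 < alpha <= 1)."""
    return (low + alpha * (peak - low), high - alpha * (high - peak))

# Hypothetical expert statement "failure likelihood is low", expressed on a
# log10(probability) scale; the breakpoints are illustrative assumptions.
LOW_LIKELIHOOD = (-5.0, -4.0, -3.0)

print(triangular_membership(-4.5, *LOW_LIKELIHOOD))
print(alpha_cut(*LOW_LIKELIHOOD, alpha=0.5))
```

The choice of breakpoints is exactly where analyst judgment enters, so a different membership design would give a different interval.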

There are many approaches for characterization/modeling of uncertainty. Keeping in view the nature of the problem being solved, a judicious selection of the applicable method has to be made [7]. Even though the list given here is not exhaustive, the following methods can commonly be reviewed as possible candidates for uncertainty modeling:

1. Probabilistic approach
   - Frequentist approach
   - Bayesian approach

2. Evidence theory – imprecise probability approach
   - Dempster-Shafer theory
   - Possibility theory – fuzzy approach

3. Structural reliability approach (application oriented)
   - First-order reliability method
   - Stochastic response surface method

4. Other nonparametric approaches (application specific)
   - Wilk’s method
   - Bootstrap method

Each of the above methods has its merits and limitations. The available literature shows that the general practice for uncertainty modeling in PSA is the probabilistic approach [8–11]. Application of probability distributions to address aleatory as well as epistemic uncertainty forms the foundation of this approach. There are two basic models: the classical model, also referred to as the frequentist model, and the subjective model. The frequentist model tends to characterize uncertainty using probability bounds at the component level. This approach has some limitations, such as providing no information on the shape of the distribution and the nonavailability of data and information for the tail ends. The most popular approach is the subjective approach implemented through Bayes' theorem, called the Bayesian approach, which allows the subjective opinion of the analyst, as “prior” knowledge, to be integrated with the available data or evidence to provide an estimate of the event, called the “posterior” estimate [12]. Even though this approach provides an improved framework for uncertainty characterization in PSA modeling compared to the frequentist approach, there are arguments against it. The subjectivity that it carries has become a topic of debate in respect of regulatory decisions. Hence, there are arguments in favor of methods based on evidence theory, which work with “imprecise probabilities” to characterize uncertainty [13–18]. Among the existing approaches to imprecise probability, the one involving “coherent imprecise probability,” which provides upper and lower bound reliability estimates, has been favored by many researchers [19].
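The way a prior is combined with evidence to give a posterior estimate can be sketched with a conjugate beta-binomial model for a failure-on-demand probability. The choice of a Beta prior, its parameters, and the evidence (1 failure in 100 demands) are illustrative assumptions, not values from the cited literature; the credible interval is approximated by sampling so that only the Python standard library is needed.

```python
import random

def beta_binomial_update(alpha_prior, beta_prior, failures, demands):
    """Conjugate update: Beta prior plus binomial evidence gives a Beta posterior."""
    if not 0 <= failures <= demands:
        raise ValueError("failures must lie between 0 and demands")
    return alpha_prior + failures, beta_prior + (demands - failures)

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

def beta_interval(alpha, beta, level=0.90, n_samples=20000, seed=0):
    """Approximate equal-tailed credible interval obtained by sampling."""
    rng = random.Random(seed)
    draws = sorted(rng.betavariate(alpha, beta) for _ in range(n_samples))
    lower = draws[int((1.0 - level) / 2.0 * n_samples)]
    upper = draws[int((1.0 + level) / 2.0 * n_samples) - 1]
    return lower, upper

# Assumed prior with mean 0.02 (e.g., from generic data or expert opinion).
prior = (1.0, 49.0)
# Assumed plant-specific evidence: 1 failure in 100 demands.
posterior = beta_binomial_update(*prior, failures=1, demands=100)

print("prior mean:", beta_mean(*prior))
print("posterior mean:", beta_mean(*posterior))
print("90% credible interval:", beta_interval(*posterior))
```

The posterior mean lies between the prior mean and the observed failure fraction, which shows how strongly the subjective prior can influence the result when evidence is sparse.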

Each of the other methods listed above has its merit for specific applications; for example, the response surface method and first-order reliability methods (FORM) are generally used for structural reliability modeling [20]. There are some application-specific requirements, like problems calling for nonparametric treatment, where it is not possible to assume any particular distribution (as is done in probabilistic methods); in such cases, the nonparametric bootstrap approach is employed [21]. Even though this method has certain advantages, such as the ability to draw inferences and estimate standard errors even from small samples, it is computationally intensive and may become prohibitive for the complex problems encountered in risk-based applications. Other nonparametric methods that find only limited application in risk-based engineering are outside the scope of this chapter.
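A minimal sketch of the nonparametric bootstrap follows, estimating the standard error of a sample mean without assuming any distribution. The eight data values are hypothetical and chosen only for illustration; the number of resamples and the seed are likewise assumptions.

```python
import random
import statistics

def bootstrap_standard_error(sample, statistic=statistics.mean,
                             n_resamples=2000, seed=0):
    """Standard error of a statistic, estimated by resampling with replacement."""
    rng = random.Random(seed)
    n = len(sample)
    estimates = [statistic(rng.choices(sample, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(estimates)

# Hypothetical small sample (e.g., observed repair times in hours).
repair_times = [4.1, 5.3, 2.8, 6.0, 3.9, 7.2, 4.6, 5.1]

se = bootstrap_standard_error(repair_times)
print("sample mean:", statistics.mean(repair_times))
print("bootstrap standard error of the mean:", se)
```

Each resample requires re-evaluating the statistic, so when the "statistic" is a full plant model rather than a mean, the cost grows quickly, which is the computational burden noted above.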

From the above, it can be concluded that probabilistic methods, which include classical statistical methods and the Bayesian approach, form the major approaches for uncertainty characterization in the risk-based approach. At times, a fuzzy-based approach is used where the data involve imprecise input in the form of linguistic variables. However, fuzzy logic applications need to be scrutinized for the methodology used to design the membership functions, as membership functions have been found to introduce subjectivity into the final estimates.

5 Major Features of the RB Approach Relevant to Uncertainty Characterization

Keeping in view the subject of this chapter, i.e., uncertainty characterization for the risk-based approach, it is necessary to understand the nature of the major issues that need to be addressed in risk-based characterization and to look for an appropriate approach accordingly. At the outset, there appears to be general consensus that, on a case-to-case basis, most of the approaches listed above may provide an efficient solution for a specific domain. However, the aim here is to focus on the approach that best suits risk-based applications. PSA in general, and Level 1 PSA in particular, as part of risk-based approaches, has the following major features [22–25]:

- (a) The probabilistic models basically characterize randomness in data and model, and hence, the model at the integrated level requires aleatory uncertainty characterization.

- (b) The probabilistic models are basically complex and relatively large in size compared to the models developed for other engineering applications.

- (c) The uncertainty characterization for PSA models requires an efficient simulation tool/method.

- (d) The approach should allow characterization of the epistemic component of data as well as model.

- (e) Confidence intervals for component failures, human errors, etc., estimated using statistical analysis, form the input for the probabilistic models.

- (f) A major part of the modeling is performed using fault tree and event tree approaches; hence, the uncertainty modeling approach should be effective for these models.

- (g) There should be provision to integrate prior knowledge about the event to obtain posterior estimates, i.e., the approach should be able to handle subjective probabilities.

- (h) Often, instead of quantitative data, analysts come across situations where it becomes necessary to derive quantitative estimates from “linguistic” inputs. Hence, the framework should enable estimation of variables based on qualitative inputs.

- (i) Evaluation of deterministic variables forms part of risk assessment. Hence, provision should exist to characterize uncertainty for structural, thermal hydraulic, and neutronics assessments.

- (j) Sensitivity analysis for verifying the impact of assumptions, data, etc., is a fundamental requirement.

- (k) PSA offers an improved framework for assessment of safety margins, a basic requirement for risk-based applications.

- (l) Even though PSA provides an improved methodology for assessment of common cause failure and human factor data, further consolidation of data and models is required, keeping in view the requirements of risk-based applications.

Apart from this, there are specific requirements, like chemical, environmental, geological, and radiological dose evaluation, which also need to be modeled. As can be seen above, uncertainty characterization for risk assessment is a complex issue.

5.1 Uncertainty Propagation

The other issue in characterizing uncertainty is the choice of an effective methodology for propagation of uncertainty. Here, the literature shows that Monte Carlo simulation and Latin hypercube sampling are the most appropriate approaches for uncertainty propagation [26]. Even though these approaches have primarily been used with probabilistic methods, there are applications where simulations have been performed within evidence theory, or where the prior has been represented as interval estimates [27]. Risk-based models are generally very complex in terms of (a) size of the model, (b) interconnections of nodes and links, (c) interpretation of results, etc. The available literature shows that Monte Carlo simulation is used extensively in many applications, although it also labels the method as computationally intensive and approximate in nature. Even with these complexities, both Latin hypercube and Monte Carlo approaches have been working well for risk-based applications. Even though higher efficiency in uncertainty modeling may be required for selected cases, for overall risk-based models these approaches can be termed adequate. In fact, we have developed a risk-based configuration system in which the uncertainty characterization for core damage frequency has been performed using Monte Carlo simulation [28].
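The two sampling schemes can be compared on a toy problem. The sketch below propagates basic event uncertainty through a hypothetical three-event fault tree, top = (A AND B) OR C, using plain Monte Carlo sampling and Latin hypercube sampling. The fault tree, the lognormal form defined by a median and an error factor (95th/50th percentile ratio), and all numerical values are assumptions for illustration; this is not the configuration system referred to above.

```python
import math
import random
from statistics import NormalDist, mean

STD_NORMAL = NormalDist()
Z95 = STD_NORMAL.inv_cdf(0.95)

def lognormal_quantile(u, median, error_factor):
    """Inverse CDF of a lognormal given its median and error factor."""
    u = min(max(u, 1e-12), 1.0 - 1e-12)  # keep u strictly inside (0, 1)
    sigma = math.log(error_factor) / Z95
    return median * math.exp(sigma * STD_NORMAL.inv_cdf(u))

def top_event(pa, pb, pc):
    """Top = (A AND B) OR C for independent basic events."""
    return 1.0 - (1.0 - pa * pb) * (1.0 - pc)

def monte_carlo_uniforms(n, dims, rng):
    """n rows of independent uniform random numbers."""
    return [[rng.random() for _ in range(dims)] for _ in range(n)]

def latin_hypercube_uniforms(n, dims, rng):
    """One draw per equal-probability stratum in each dimension, randomly paired."""
    columns = []
    for _ in range(dims):
        strata = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(strata)
        columns.append(strata)
    return [list(row) for row in zip(*columns)]

def propagate(uniform_rows, params):
    """Map uniform samples to basic event probabilities and evaluate the top event."""
    results = []
    for row in uniform_rows:
        probs = [lognormal_quantile(u, m, ef) for u, (m, ef) in zip(row, params)]
        results.append(top_event(*probs))
    return results

# Hypothetical (median, error factor) pairs for basic events A, B, C.
PARAMS = [(1e-2, 3.0), (1e-2, 3.0), (1e-4, 5.0)]
N = 2000

rng = random.Random(42)
mc_mean = mean(propagate(monte_carlo_uniforms(N, 3, rng), PARAMS))
lhs_mean = mean(propagate(latin_hypercube_uniforms(N, 3, rng), PARAMS))
print("Monte Carlo mean of top event:", mc_mean)
print("Latin hypercube mean of top event:", lhs_mean)
```

Latin hypercube sampling forces every probability stratum of each input to be visited once, which typically stabilizes estimates with fewer samples; for a real PSA model the top-event function would be replaced by the quantified cut set expression.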

6 Uncertainty Characterization: Risk-Informed/Risk-Based Requirements

The scope and objective of risk-informed/risk-based applications determine the major elements of Level 1 PSA. However, for the purpose of this chapter, let us consider the development of a base Level 1 PSA for regulatory review as the all-encompassing study. The scope of this study is a full-scope PSA, which means consideration of (a) internal events (including loss of off-site power, interfacing loss-of-coolant accident, internal floods, and internal fire); (b) external events, like seismic events, external impacts, and floods; (c) full-power and shutdown PSA; and (d) the reactor core as the source of radioactivity (fuel storage pool not included) [25].

The point to be remembered here is that uncertainty characterization should be performed keeping in view the nature of applications [2, 29]. For example, if the application deals with the estimation of surveillance test interval, then the focus will start right from uncertainty in initiating event that demands automatic action of a particular safety system, unavailability for safety significant component, human actions, deterministic parameters that determine failure/success criteria, assumptions which determine the boundary condition for the analysis, etc.

An important reference that deals with uncertainty modeling is the USNRC (United States Nuclear Regulatory Commission) document NUREG-1855 (USNRC, 2009), which provides guidance on the treatment of uncertainties in PSA as part of risk-informed decisions [29]. Though the scope of this document is limited to light water reactors, the guidelines can, with little modification, be adopted for uncertainty modeling in CANDU (Canada Deuterium Uranium)/PHWR (pressurized heavy water reactor) plants or any other Indian nuclear plants. In fact, even though this document provides guidelines on risk-informed decisions, requirements related to risk-based applications can easily be modeled, giving due consideration to the emphasis placed on the risk metrics used in PSA. A significant contribution of the ASME/ANS framework is the incorporation of the “state-of-knowledge correlation” [2], i.e., the correlation that arises between sample values when performing uncertainty analysis for cut sets consisting of basic events using a sampling approach such as the Monte Carlo method; when it is taken into account, the same value is used, for each sample, for all basic event probabilities to which the same data apply.
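The effect of the state-of-knowledge correlation can be seen in a small sketch for a cut set of two basic events whose probabilities are estimated from the same data. The lognormal uncertainty distribution, its median, and its spread are assumptions for illustration. Sampling the two probabilities independently ignores the correlation; using one sampled value for both events accounts for it and yields a larger mean cut set probability.

```python
import math
import random
from statistics import mean

def cut_set_means(median, sigma, n_samples=20000, seed=1):
    """Mean probability of a two-event cut set, without and with the
    state-of-knowledge correlation between the two basic events."""
    rng = random.Random(seed)
    mu = math.log(median)
    independent, correlated = [], []
    for _ in range(n_samples):
        p1 = rng.lognormvariate(mu, sigma)
        p2 = rng.lognormvariate(mu, sigma)
        independent.append(p1 * p2)  # separate draws: correlation ignored
        correlated.append(p1 * p1)   # same draw used for both events
    return mean(independent), mean(correlated)

# Assumed epistemic distribution shared by both identical components.
mean_independent, mean_correlated = cut_set_means(median=1e-3, sigma=0.7)
print("mean, correlation ignored:  ", mean_independent)
print("mean, correlation included: ", mean_correlated)
print("ratio:", mean_correlated / mean_independent)
```

For a lognormal distribution the ratio of the two means is exp(sigma^2) in theory, so the underestimate from ignoring the correlation grows with the uncertainty in the shared parameter.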

As far as the standardization of risk-assessment procedures and the treatment of uncertainty issues are concerned, the PSA community finds itself in a relatively comfortable position. The reason is that there is consensus at the international level as to which uncertainty aspects need to be addressed to meet certain quality criteria in PSA applications. The three major references that take care of this aspect are (a) the ASME (American Society of Mechanical Engineers)/ANS (American Nuclear Society) Standard on PSA Applications [2], (b) IAEA-TECDOC-1120 (International Atomic Energy Agency Technical Document) on Quality Attributes of PSA Applications [30], and (c) various NEA (Nuclear Energy Agency) documents on PSA [24]. Any PSA application, to qualify as a “Quality PSA,” needs to conform to the quality attributes laid out for the various elements of PSA. For example, the ASME/ANS standard provides a very structured framework in which there are higher level attributes for an element of PSA, followed by specific attributes that support the higher level attributes, etc. These attributes enable formulating a program in the form of checklists of required attributes that need to be fulfilled to achieve the conformance quality level for PSAs. The following examples from ASME/ANS illustrate quality attributes that assure uncertainty analysis requirements for the PSA element Initiating Event (IE) modeling.

Examples of some lower level specific attributes from ASME/ANS include:

ASME/ANS attribute IE-C4: “When combining evidence from generic and plant-specific data, USE a Bayesian update process or equivalent statistical process. JUSTIFY the selection of any informative prior distribution used on the basis of industry experience.”

Similarly,

ASME/ANS attribute IE-C3: CALCULATE the initiating event frequency accounting for relevant generic and plant-specific data unless it is justified that there are adequate plant-specific data to characterize the parameter value and its uncertainty.

Also, the lower support requirement IE-D3 documents the sources of model uncertainty and related assumptions.

The USNRC guide mentioned above systematically summarizes, in detail, the uncertainty-related supporting requirements of the ASME/ANS documents. These documents may be consulted for details. The availability of the ASME/ANS standard, NEA documents, and IAEA-TECDOC is an important milestone for risk-based/risk-informed applications, as these documents provide an important tool for standardization and harmonization of the risk-assessment process in general and for capturing the important uncertainty assessment aspects that impact the results and insights of risk assessment.

7 Decisions Under Uncertainty

At this point, it is important to understand that uncertainty in engineering systems arises basically from two sources, viz., noncognitive, generally referred to as quantitative uncertainty, and cognitive, referred to as qualitative uncertainty [31]. The major part of this chapter has so far dealt with the noncognitive part of uncertainty, i.e., uncertainty due to inherent randomness (aleatory) and uncertainty due to lack of knowledge (epistemic). This type of uncertainty has been discussed at length above. However, unless we address the sources of uncertainty due to cognitive aspects, the topic of uncertainty has not been fully addressed. Cognitive uncertainty is caused by inadequate definition of parameters, such as structural performance, safety culture, deterioration/degradation in system functions, and the level of skill, knowledge base, and experience of staff (design, construction, operation, and regulation) [32]. The fact is that statistical modeling, or any other evidence-based approach, including approaches that deal with precise or imprecise probabilities, has its limitations and cannot address issues involving vagueness of the problem arising from missing information and lack of intellectual abstraction of the real-time scenario, be it regulatory decisions, design-related issues, or operational issues. The reason is that traditional probabilistic and evidence-based methods mostly deal with subjectivities, perceptions, and assumptions that may not form part of the real-time scenarios in which the cognitive part of the uncertainty must be addressed. The following subsections bring out the various aspects of the cognitive/qualitative part of uncertainty and methods to address these issues.

7.1 Engineering Design and Analysis

The issues related to “uncertainty” have long been part of engineering design and analysis. The traditional working stress design (WSD)/allowable stress design (ASD) in civil engineering deals with uncertainty by defining a suitable factor of safety (FS). The same factor of safety is used in mechanical design to estimate the allowable stress (AS = Yield Stress/FS). This FS accounts for variation in material properties, quality-related issues, degradation during the design life, modeling issues, variation in life cycle loads, and lack of knowledge about the system being designed. The safety factor is essentially based on past experience but does not guarantee safety. Another issue is that this approach is highly conservative in nature.

It is expected that an effective design approach should facilitate a trade-off between maximizing safety and minimizing cost. Probabilistic- or reliability-based design allows this optimization in an efficient manner. Design problems require treatment of both cognitive and noncognitive sources of uncertainty. It should be recognized that the designer’s personal preferences or subjective choices can be a source of uncertainty, which brings in the cognitive aspect. Statistical aspects, like variability in the assessment of loads, variation in material properties, and extreme loading cycles, are the sources of noncognitive uncertainty.

In the probabilistic-based design approach, consideration of uncertainty, modeled as a stress-strength relation for reliability-based design, forms an integral part of the design methodology. Load and Resistance Factor Design (LRFD), first-order reliability methods (FORM), and second-order reliability methods (SORM) are some of the applications of the probabilistic approach to structural design and analysis. Many civil engineering codes are based on probabilistic considerations. The available literature shows that design and analysis using the probabilistic structural reliability approach have matured into an “engineering discipline” [20], and new advances and research have further strengthened this area [32].
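The stress-strength relation can be sketched for the simplest case. Assuming independent, normally distributed resistance R and load S with the linear limit state g = R - S (an assumption under which the first-order result is exact), the reliability index and failure probability follow directly. The numerical values in MPa are hypothetical and serve only to illustrate the calculation.

```python
import math
from statistics import NormalDist

def reliability_index(mean_r, sd_r, mean_s, sd_s):
    """Reliability index for g = R - S with independent normal R and S."""
    return (mean_r - mean_s) / math.sqrt(sd_r ** 2 + sd_s ** 2)

def failure_probability(beta):
    """P(g < 0) = Phi(-beta) for the normal linear limit state."""
    return NormalDist().cdf(-beta)

# Hypothetical resistance (strength) and load (stress) statistics, in MPa.
beta = reliability_index(mean_r=300.0, sd_r=30.0, mean_s=200.0, sd_s=40.0)
pf = failure_probability(beta)

print("reliability index:", beta)
print("failure probability:", pf)
print("central safety factor:", 300.0 / 200.0)
```

The same central safety factor of 1.5 would correspond to a different failure probability if the scatter in load or strength changed, which is the information a single deterministic factor of safety cannot convey.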

Level 1 PSA models are often utilized in support of design evaluation. During the design stage, complete information and data are often not available. This leads to a higher level of uncertainty in the estimates. On the other hand, the traditional approach using deterministic design methodology involves the use of relatively higher safety factors to compensate for the lack of knowledge. The strength of the PSA framework is that it provides a systematic framework that allows capturing of uncertainties in data and models as well as uncertainty due to missing or fuzzy inputs. Be it with probabilistic or evidence-based tools and methods, it provides an improved framework for the treatment of uncertainty. Another advantage of the PSA framework is that it allows propagation of uncertainty from component level to system level and further up to plant level, in terms of confidence bounds for system unavailability/initiating event frequency and core damage frequency, respectively.

7.2 Management of Operational Emergencies

If we take lessons from the history of nuclear accidents in general and the three major accidents, viz., TMI (Three Mile Island) in 1979, Chernobyl in 1986, and the recent one at Fukushima in 2011, it is clear that real-time scenarios always require some emergency aids that respond to the actual plant parameters in a given “time window.” Even though the probabilistic risk analysis framework may address these scenarios, it can only address the modeling part of the safety analysis. It is also necessary to consider the qualitative or cognitive uncertainty aspects and their characteristics for operational emergency scenarios.

The major characteristics of the operational emergencies can include:

- (a) Deviation of plant condition from normal operation that requires safety actions, such as plant shutdown, actuation of shutdown cooling, etc.

- (b) Flooding of plant parameters, which includes process parameters crossing their preset bounds, parameter trends, and indications

- (c) Available “time window” for the operator to grasp the situation and act toward correcting it

- (d) Feedback in terms of plant parameters regarding the improved/deteriorated situation

- (e) Decisions regarding restoration of systems and equipment status if the situation is moving toward normalcy

- (f) Decision regarding declaration of emergency, which requires a good understanding of whether the situation requires declaration of plant emergency, site emergency, or off-site emergency

- (g) Interpretation of available safety margins in terms of the time window that can be used for designing the emergency operator aids

As the literature shows, responding to accident or off-normal situations as characterized above calls for modeling that has the following attributes:

(a) Modeling of the anticipated transients and accident conditions in advance, such that the knowledge-based part is captured in terms of rules/heuristics as far as possible.

(b) Adequate provision to detect threats to safety functions and alert plant staff in advance.

(c) Unambiguous and automatic plant symptoms based on well-defined criteria, such as plant safety limits and emergency procedures, that guide the operators to take the action needed to arrest further degradation in plant condition.

(d) Assessment of the actual time window available for the applicable scenarios, considering the limits on plant parameters.

(e) The system for dealing with emergencies should take into account plant-specific attributes, distribution of manpower, and the laid-down lines of communication and, beyond the standard provisions, the tools, methods, and procedures that can be applied in planned, long-term, or extreme situations.

(f) Heuristics on system failure criteria using available safety margins.

Clearly, the problem now lies outside the “uncertainty modeling” domain and has to be addressed from the other side, i.e., by taking decisions such that the actions taken in the real-time scenario compensate for the missing knowledge base and bring the plant to a safe state.

The answer to the above situation is the development of knowledge-based systems that not only capture the available knowledge base but also provide advice for maintaining plant safety under uncertain situations by maintaining the plant safety functions. Note that no role is envisaged here for “risk-monitor” type systems. What is visualized is an operator support system that can fulfill the following requirements (the list is not exhaustive and presents only a few major requirements):

1. Detection of plant deviation based on plant symptoms.

2. The system should exhibit intelligent behavior, like reasoning, learning from new patterns, conflict resolution capability, pattern recognition capability, and parallel processing of input and information.

3. The system should be able to predict the time window that is available for the safety actions.

4. The system should take operator training into account and optimize the graphical user interface (GUI).

5. The system should be effective in assessment of plant transients, which calls for parallel processing of plant symptoms to present the correct plant deviation.

6. The system should have adequate provision to deal with uncertain and incomplete data.

7. The results of the reasoning should be presented with confidence limits.

8. It should have an efficient diagnostics capability for capturing the basic cause(s) of the failures/transients.

9. The advice should be presented with an adequate line of explanation.

10. The system should be interactive and use graphics to present the results.

11. Provision for presentation of results at various levels, from abstract-level advice (like “Open MV-3001 and start P-2”) to advice with reasonable detail (like “Open ECCS valve MV-3001 located in reactor basement area and start injection pump P-2, which can be started from control room L panel”).
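The requirement to predict the available time window can be illustrated with a very simple sketch: fit a linear trend to recent readings of one plant parameter and extrapolate to its upper safety limit. This is only an illustrative assumption about how such a prediction might be made; a real operator support system would rely on validated plant models. The function names, the readings, and the 320 °C limit are hypothetical.

```python
def fit_trend(times, values):
    """Least-squares slope and intercept of values against times.

    Needs at least two readings taken at distinct times.
    """
    n = len(times)
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, v_mean - slope * t_mean


def time_to_limit(times, values, upper_limit):
    """Estimated time left before the fitted trend reaches `upper_limit`.

    Returns 0.0 if the fitted value is already at or above the limit and
    None if the parameter is not rising toward the limit.
    """
    slope, intercept = fit_trend(times, values)
    current = slope * times[-1] + intercept   # fitted value at the latest reading
    if current >= upper_limit:
        return 0.0
    if slope <= 0.0:
        return None
    return (upper_limit - current) / slope


# Hypothetical readings: time in seconds, coolant temperature in deg C.
times_s = [0.0, 10.0, 20.0, 30.0]
coolant_temp_c = [300.0, 302.0, 304.0, 306.0]
window_s = time_to_limit(times_s, coolant_temp_c, upper_limit=320.0)
print(window_s)  # about 70 seconds for this linear trend
```

A linear extrapolation ignores feedback and changing plant dynamics, so in practice such an estimate would be reported together with confidence limits, in line with the requirement on presentation of reasoning results.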

There are many examples of R&D efforts on the development of intelligent operator advisory systems for plant emergencies; readers may refer to the paper by Varde et al. for further details [33]. In that work, the probabilistic safety assessment framework is used for knowledge representation. The fault tree models of the PSA are used for generating the diagnostics, while the event tree models are used for procedure synthesis for evolving emergencies. Intelligent tools like the artificial neural network approach are used for identification of transients, while the knowledge-based approach is used for performing diagnostics.
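One way a fault tree can support diagnostics is by ranking its minimal cut sets as candidate causes of an observed system failure. The sketch below is an illustrative assumption and is not the method of the cited paper: the cut sets, component names, and failure probabilities are hypothetical, components are treated as independent, and cut sets containing a component known to be working are simply excluded.

```python
from math import prod


def rank_causes(cut_sets, failure_prob, known_ok=frozenset()):
    """Rank minimal cut sets as candidate causes of an observed top event.

    cut_sets     : iterable of tuples of component names
    failure_prob : dict mapping component name to failure probability
    known_ok     : components confirmed to be working (rules out their cut sets)

    Returns (cut_set, relative_likelihood) pairs, most likely first.
    """
    candidates = [cs for cs in cut_sets if not set(cs) & set(known_ok)]
    # Probability of each cut set, assuming independent component failures.
    scores = {cs: prod(failure_prob[c] for c in cs) for cs in candidates}
    total = sum(scores.values())
    if total == 0.0:
        return []
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(cs, score / total) for cs, score in ranked]


# Hypothetical fault tree for "loss of injection flow".
cut_sets = [("valve",), ("pump_a", "pump_b"), ("power_supply",)]
failure_prob = {"valve": 1e-4, "pump_a": 2e-2, "pump_b": 2e-2, "power_supply": 5e-5}

# Plant symptoms confirm that the power supply is healthy.
ranked = rank_causes(cut_sets, failure_prob, known_ok={"power_supply"})
for cut_set, likelihood in ranked:
    print(cut_set, round(likelihood, 3))
```

The relative likelihoods give the operator an ordered list of causes to check, which can be refined as further plant symptoms confirm or rule out components.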

As can be seen above, uncertain scenarios involving anticipated events can be modeled by capturing the lessons learned from past records. Even for rare events, where the uncertainty could be higher, symptom-based models that focus on maintaining the plant safety functions can be used to model the plant knowledge base.

8 Regulatory Reviews

In fact, the available literature on decisions under uncertainty has often focused on the regulatory aspects [29]. One of the major differences between operational scenarios and regulatory reviews or risk-informed decisions is that in the latter there is generally no preset or specified time window for decisions that directly affect plant safety. The second difference is that regulatory or risk-informed decisions are collective and are reached through a deliberative process, unlike operational emergencies, where the decisions are often taken by individuals or by a limited set of plant management staff, and where the available time window, and sometimes resources, are the constraints. Expert elicitation and its treatment often form part of risk-informed decisions. Here, the major question is what upper limit on the spread of the confidence bounds can be tolerated in the decision process. In short, how much uncertainty in the estimates can be absorbed in the decision process? The decision problem should be evaluated using an integrated approach in which, apart from the probabilistic variables, the deterministic variables are also subjected to uncertainty analysis. One major aspect of risk assessment from the uncertainty point of view is the combination of plant-specific data with generic prior data available either in the literature or from other plants. The choice of prior brings subjectivity into the posterior estimates. Therefore, the prior inputs need to be justified and documented. The Bayesian method coupled with Monte Carlo simulation is the conventional approach for uncertainty analysis. Regulatory reviews often deal with inputs in the form of linguistic variables or “perceptions,” which require a perception-based theory of probabilistic reasoning with imprecise probabilities [13]. In such scenarios, the classical probabilistic approach alone does not work. The literature shows that fuzzy logic offers an effective tool for addressing qualitative and imprecise inputs [34].
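The Bayesian update of a generic prior with plant-specific data, followed by Monte Carlo sampling of the posterior, can be sketched as follows. The sketch assumes a gamma prior on a constant failure rate with a Poisson likelihood, which is a common conjugate choice but is not prescribed by the text; the prior parameters and the plant data are hypothetical.

```python
import random


def gamma_poisson_update(prior_shape, prior_rate, failures, exposure_hours):
    """Conjugate update of a gamma prior on a failure rate (per hour).

    With a Poisson likelihood, the posterior is again a gamma distribution
    with shape + failures and rate + exposure time.
    """
    return prior_shape + failures, prior_rate + exposure_hours


def sample_bounds(shape, rate, n_samples=20000, seed=7):
    """Monte Carlo estimate of the 5th percentile, mean, and 95th percentile."""
    rng = random.Random(seed)
    # random.gammavariate takes a scale parameter, i.e., 1 / rate.
    draws = sorted(rng.gammavariate(shape, 1.0 / rate) for _ in range(n_samples))
    p05 = draws[int(0.05 * (n_samples - 1))]
    p95 = draws[int(0.95 * (n_samples - 1))]
    return p05, sum(draws) / n_samples, p95


# Hypothetical generic prior: mean 0.5 / 5e4 = 1e-5 failures per hour.
prior_shape, prior_rate = 0.5, 5.0e4
# Hypothetical plant-specific evidence: 2 failures in 1e5 operating hours.
post_shape, post_rate = gamma_poisson_update(prior_shape, prior_rate, 2, 1.0e5)

p05, mean, p95 = sample_bounds(post_shape, post_rate)
print(post_shape, post_rate, p05, mean, p95)
```

Repeating the calculation with a different prior shows directly how much of the posterior spread is driven by the prior, which is why the prior inputs need to be justified and documented.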

Assumptions form part of any risk-assessment model. These assumptions should be validated by performing sensitivity analysis. Apart from assessing each parameter independently, sensitivity analysis should also be carried out for sets of variables varied together. Forming these sets requires a systematic study of the case under consideration.
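The difference between independent and joint sensitivity analysis can be shown with a small sketch. The system model (two redundant pumps and a common valve), the baseline probabilities, and the perturbation factor of 3 are hypothetical values chosen only for illustration.

```python
def system_unavailability(q):
    """Hypothetical system: fails if both pumps fail OR the common valve fails."""
    return 1.0 - (1.0 - q["pump_a"] * q["pump_b"]) * (1.0 - q["valve"])


def sensitivity(model, base, names, factor=3.0):
    """Ratio of the model output to its baseline when the parameters in
    `names` are multiplied together by `factor` (capped at probability 1)."""
    perturbed = dict(base)
    for name in names:
        perturbed[name] = min(1.0, base[name] * factor)
    return model(perturbed) / model(base)


base = {"pump_a": 1e-2, "pump_b": 1e-2, "valve": 1e-4}

# One-at-a-time sensitivity for each parameter.
single = {name: sensitivity(system_unavailability, base, [name]) for name in base}

# Joint sensitivity for a set of variables, here both pumps varied together.
joint = sensitivity(system_unavailability, base, ["pump_a", "pump_b"])

print(single)
print(joint)
```

In this example each single perturbation roughly doubles the system unavailability, while perturbing both pumps together raises it by a factor of about five. An effect of this kind is missed if only independent parameter assessment is carried out.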

The USNRC document NUREG-1855, “Guidance on the Treatment of Uncertainties Associated with PSAs in Risk-Informed Decision Making,” deals with the subject in detail, and readers are referred to it for further information [29].

9 Conclusions

The available literature shows that there is an increasing trend toward the use of risk assessment or PSA insights in support of decisions. This chapter proposes a new approach called integrated risk-based engineering for dealing with the safety of nuclear plants in an integrated and systematic manner. It is explained that this approach is a modification of the existing risk-informed/risk-based approach. Apart from the application of PSA models, the integrated risk-based approach includes probabilistic treatment of traditional deterministic variables and of success and failure criteria, assessment of safety margins in general, and treatment of uncertainties in particular.

There is a general consensus that strengthening of uncertainty evaluation is a must for realizing risk-based applications. It is expected that the integrated risk-based approach will provide the required framework for implementing the decisions. This chapter also argues that, apart from probabilistic methods, evidence-based approaches need to be used to deal with “imprecise probabilities,” which often form an important input for risk-based decisions.

A further issue discussed in this chapter is that the various methods, be they probabilistic or evidence based, cannot provide a complete solution for issues related to uncertainty. There are qualitative or cognitive issues that need to be addressed by incorporating management tools for handling real-time situations. This is true for operational applications.

Finally, this chapter drives home the point that both the qualitative and quantitative aspects need to be addressed to move toward more holistic solutions. Further research is needed to deal with imprecise probability, while cognitive aspects form the cornerstone of uncertainty evaluation.

References

Chapman JR et al (1999) Challenges in using a probabilistic safety assessment in risk-informed process. Reliab Eng Syst Saf 63:251–255

American Society of Mechanical Engineers/American Nuclear Society (2009) Standards for level 1 large early release frequency in probabilistic risk assessment for nuclear power plant applications. ASME/ANS RA-Sa-2009, March 2009

Parry GW (1996) The characterization of uncertainty in probabilistic risk assessments of complex systems. Reliab Eng Syst Saf 54:119–126

Modarres M (2006) Risk analysis in engineering – techniques, tools and trends. CRC-Taylor & Francis Publication, Boca Raton

Modarres M, Kaminskiy M, Krivtsov V (2010) Reliability engineering and risk analysis – a practical guide. CRC Press Taylor & Francis Group, Boca Raton

Misra KB, Weber GG (1990) Use of fuzzy set theory for level 1 studies in probabilistic risk assessment. Fuzzy Sets Syst 37(2):139–160

Kushwaha HS (ed) (2009) Uncertainty modeling and analysis. A Bhabha Atomic Research Centre Publication, Mumbai

Weisman J (1972) Uncertainty and risk in nuclear power plant. Nucl Eng Des 21:396–405

Lajtha G, Bareith A, Holló E, Karsa Z, Siklóssy P, Téchy Z. Uncertainty of the level 2 PSA for NPP Paks. VEIKI Institute for Electric Power Research, Budapest, Hungary

Pate-Cornell ME (1986) Probability and uncertainty in nuclear safety decisions. Nucl Eng Des 93:319–327

Nilsen T, Aven T (2003) Models and model uncertainty in the context of risk analysis. Reliab Eng Syst Saf 79:309–317

Siu NO, Kelly DL (1998) Bayesian parameter estimation in probabilistic risk assessment. Reliab Eng Syst Saf 62:89–116

Zadeh LA (2002) Towards a perception-based theory of probabilistic reasoning with imprecise probabilities. J Stat Plan Inference 105:233–264

Walley P, Gurrin L, Burton P (1996) Analysis of clinical data using imprecise prior probabilities. The Statistician 45(4):457–485

Walley P (2000) Towards a unified theory of imprecise probability. Int J Approx Reason 24:125–148

Troffaes MCM (2007) Decision making under uncertainty using imprecise probabilities. Int J Approx Reason 45:17–29

Klir GJ (1999) Uncertainty and information measures for imprecise probabilities: an overview. First international symposium on imprecise probabilities and their applications, Belgium, 29 June–2 July 1999

Caselton WF, Luo W (1992) Decision making with imprecise probabilities: Dempster-Shafer theory and application. Water Resour Res 28(12):3071–3083

Kozine IO, Filimonov YV (2000) Imprecise reliabilities: experience and advances. Reliab Eng Syst Saf 67(1):75–83

Ranganathan R (1999) Structural reliability analysis and design. Jaico Publishing House, Mumbai

Davison AC et al (1997) Bootstrap methods and their applications. Cambridge University Press, Cambridge

Winkler RL (1996) Uncertainty in probabilistic risk assessment. Reliab Eng Syst Saf 54:127–132

Daneshkhah AR. Uncertainty in probabilistic risk assessment: a Review

Nuclear Energy Agency (2007) Use and development of probabilistic safety assessment. Committee on Safety of Nuclear Installations, NEA/CSNI/R(2007)12, November 2007

IAEA (2010) Development and application of level 1 probabilistic safety assessment for nuclear power plants. IAEA safety standards – specific safety guide no. SSG-3, Vienna

Atomic Energy Regulatory Board (2005) Probabilistic safety assessment for nuclear power plants and research reactors. AERB draft manual. AERB/NF/SM/O-1(R-1)

Weichselberger K (2000) The theory of interval-probability as a unifying concept for uncertainty. Int J Approx Reason 24:149–170

Agarwal M, Varde PV (2011) Risk-informed asset management approach for nuclear plants. 21st international conference on structural mechanics in reactor technology (SMiRT-21), India, 6–11 Nov 2011

Drouin M, Parry G, Lehner J, Martinez-Guridi G, LaChance J, Wheeler T (2009) Guidance on the treatment of uncertainties associated with PSAs in risk-informed decision making (Main report). NUREG-1855 (Vol. 1). Office of Nuclear Regulatory Research, Office of Nuclear Reactor Regulation, USNRC, USA

International Atomic Energy Agency. Quality attributes for PSA applications. IAEA-TECDOC-1120. IAEA, Vienna

Assakkof I. Modeling for uncertainty: ENCE-627 decision analysis for engineering. Making hard decisions. Dept. of Civil Engineering, University of Maryland, College Park

Haldar A, Mahadevan S (2000) Probability, reliability and statistical methods in engineering design. Wiley, New York

Varde PV et al (1996) An integrated approach for development of operator support system for research reactor operations and fault diagnosis. Reliab Eng Syst Saf 56

Karimi I, Hüllermeier E (2007) Risk assessment system of natural hazards: a new approach based on fuzzy probability. Fuzzy Sets Syst 158:987–999

Acknowledgments

I sincerely thank Shri R. C. Sharma, Head, RRSD, BARC, for his constant support, guidance, and help without which Reliability and PSA activities in Life Cycle Reliability Engineering Lab would not have been possible. I also thank my SE&MTD Section colleagues Mr. Preeti Pal, Shri N. S. Joshi, Shri A. K. Kundu, Shri D. Mathur, and Shri Rampratap for their cooperation and help. I also thank Shri Meshram, Ms Nutan Bhosale, and Shri M. Das for their assistance in various R&D activities in the LCRE lab.

© 2013 Springer India

Varde, P.V. (2013). Uncertainty Evaluation in Integrated Risk-Based Engineering. In: Chakraborty, S., Bhattacharya, G. (eds) Proceedings of the International Symposium on Engineering under Uncertainty: Safety Assessment and Management (ISEUSAM - 2012). Springer, India. https://doi.org/10.1007/978-81-322-0757-3_13

DOI: https://doi.org/10.1007/978-81-322-0757-3_13

Publisher Name: Springer, India

Print ISBN: 978-81-322-0756-6

Online ISBN: 978-81-322-0757-3
