Abstract

In this paper, a robust and adaptive framework of finite volume solutions for steady Euler equations is introduced. On a given mesh, the numerical solutions evolve following the standard Godunov process and the algorithm consists of a Newton method for the linearization of the governing equations and a geometrical multigrid method for solving the derived linear system. To improve the simulations, an h-adaptive method is proposed for more efficient discretization by means of local refinement and coarsening of the mesh grids. Several numerical issues such as the regularization of the system, selection of the reconstruction patch, treatment of the curved boundary, as well as the design of the error indicator will be discussed in detail. The effectiveness of the proposed method is successfully examined on a variety of benchmark tests, and it is found that all simulations can be implemented well with one set of parameters, which shows the robustness of the method.


Keywords

- Steady Euler equations

- Finite volume method

- Adaptive method

- A posteriori error estimation

- Newton iteration

1 Introduction

In the study of compressible flow, the Euler equations are among the fundamental governing equations and play an important role in a variety of practical applications, such as the optimal design of vehicle shapes [15] and physically based simulation in animation [31].

Steady-state flow is a typical phenomenon in fluid dynamics in which the distributions of the physical quantities do not change in time. Such phenomena occur in several realistic applications. For example, when an aeroplane is in its cruise state in the stratosphere, the flow around the aeroplane can be described reasonably well by a steady state. Theoretical and numerical studies of steady-state flow are therefore important for applications such as the optimal design of vehicle shapes. In a classical optimization framework for optimal design, the objective functional is optimized with respect to several shape parameters. Over the whole simulation, dozens or even hundreds of steady-state flows need to be determined with different configurations. Hence, the efficiency of the steady-state solver becomes crucial in practical simulations.

Although a large body of work is available for solving steady Euler equations with finite difference methods [54], finite element methods [16], and spectral methods [28], the existence of discontinuous solutions such as shocks and contact discontinuities makes finite volume methods [29, 33], discontinuous Galerkin methods [10], and spectral volume methods [51] more competitive. Besides their ability to represent discontinuous solutions, these methods use numerical fluxes to preserve conservation, which makes them more attractive for delivering physically meaningful simulations. It is worth mentioning that the fast sweeping method [12, 13] was recently proposed to solve steady Euler equations, and excellent numerical results were obtained. In our previous works [21,22,23,24,25,26], an adaptive framework of finite volume solutions has been developed for solving steady Euler equations.

There are several challenges in developing quality high-order finite volume methods for solving the Euler equations. One of the most important is the solution reconstruction. In the original Godunov scheme, the cell average is used directly to evaluate the numerical flux. This has an attractive advantage: the maximum principle is preserved naturally. However, the piecewise constant approximation makes the scheme too dissipative to generate high-resolution solutions; hence, the variation of the solution within each cell needs to be recovered to deliver a high-order approximation of the exact solution. In the solution reconstruction, a nontrivial issue is to develop quality limiter functions that restrain possible nonphysical oscillations, which is listed in [52] as one of the challenges in developing high-order numerical methods for computational fluid dynamics. Another challenge is the efficiency of the algorithm. Propagating the time-dependent system for a sufficiently long time is not a good way to obtain the steady state because of its low efficiency. To accelerate the simulation, several classical techniques such as local time-stepping, enthalpy damping, residual smoothing, multigrid methods, and preconditioning techniques [6] have been developed and applied. Towards the efficient discretization of the governing equations, adaptive methods such as r-adaptive methods [37, 38, 46], h-adaptive methods [5, 18, 39, 43], and hp-adaptive methods [19, 50] have been developed and continue to attract research attention. With the rapid development of computer hardware, the capacity of high-performance computing clusters has also improved significantly. Hence, parallel algorithms based on OpenMP [1], OpenMPI [2], and GPUs [53] are becoming more and more popular in the computational fluid dynamics community [34].

In this paper, we introduce an adaptive framework of finite volume solutions for the steady Euler equations. On a given mesh, the solver consists of a Newton iteration for the linearization of the governing equations and a geometrical multigrid method for solving the linear system. To address the issue of quality high-order solution reconstruction, a non-oscillatory k-exact reconstruction is proposed, which provides a unified strategy for high-order reconstruction. To address the efficiency issue, an h-adaptive method is introduced and an adjoint-based a posteriori error estimation method is developed to generate a quality error indicator. Some numerical issues such as the regularization of the linearized system are also discussed. Numerical tests show the robustness and effectiveness of the proposed method.

The rest of the paper is organized as follows. In Sect. 2, the steady Euler equations and finite volume discretization are introduced. In Sect. 3, the solution reconstruction will be introduced and the non-oscillatory k-exact reconstruction method will be described in detail. In Sect. 4, our methods on partially resolving the efficiency issue of the simulations are summarized and the adjoint weighted residual indicator as well as implementation are introduced in detail. Three numerical tests are delivered in Sect. 5 in which the robustness and effectiveness of the proposed framework are successfully demonstrated. Finally, the conclusion is given.

2 Finite Volume Framework for Steady Euler Equations

2.1 Governing Equations

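The inviscid two-dimensional steady Euler equations are given as

\[ \nabla \cdot F(U) = 0, \tag{1} \]

where U and F(U) denote the conservative variables and flux given by

\[ U = \begin{pmatrix} \rho \\ \rho u \\ \rho v \\ E \end{pmatrix}, \qquad F(U) = \begin{pmatrix} \rho u & \rho v \\ \rho u^2 + p & \rho u v \\ \rho u v & \rho v^2 + p \\ u(E+p) & v(E+p) \end{pmatrix}, \tag{2} \]

respectively. Here \((u,v)^T\), \(\rho \), p, and E denote the velocity, density, pressure, and total energy, respectively. To close the system, we use the following equation of state in this paper,

\[ E = \frac{p}{\gamma - 1} + \frac{1}{2}\rho (u^2 + v^2), \tag{3} \]

where \(\gamma = 1.4\) is the ratio of the specific heats of the perfect gas.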
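As a concrete illustration of the conservative variables and the flux, the following minimal Python sketch evaluates the pressure and the two flux components from a state vector. It assumes the standard ordering \(U = (\rho , \rho u, \rho v, E)^T\) and the perfect-gas closure \(p = (\gamma - 1)(E - \rho (u^2+v^2)/2)\); the function names `pressure` and `euler_flux` are hypothetical and are not taken from the authors' library.

```python
from typing import List, Tuple

GAMMA = 1.4  # ratio of specific heats, as stated in the text


def pressure(U: List[float], gamma: float = GAMMA) -> float:
    """Pressure from conservative variables U = (rho, rho*u, rho*v, E).

    Assumes the perfect-gas closure p = (gamma - 1) * (E - kinetic energy).
    """
    rho, mx, my, E = U
    kinetic = 0.5 * (mx * mx + my * my) / rho
    return (gamma - 1.0) * (E - kinetic)


def euler_flux(U: List[float], gamma: float = GAMMA) -> Tuple[List[float], List[float]]:
    """Return the x- and y-components (F1, F2) of the inviscid flux F(U)."""
    rho, mx, my, E = U
    u, v = mx / rho, my / rho
    p = pressure(U, gamma)
    F1 = [mx, mx * u + p, mx * v, u * (E + p)]
    F2 = [my, my * u, my * v + p, v * (E + p)]
    return F1, F2
```

For a state with \(\rho = 1\), \((u,v) = (1,0)\) and \(p = 1\), the total energy is \(E = 3\), and the sketch returns the x-flux \((1, 2, 0, 4)^T\) and the y-flux \((0, 0, 1, 0)^T\).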

Before describing the numerical methods for solving (1), we introduce some notation. The computational domain is denoted by \(\varOmega \), and \(\mathscr {T} = \{\mathscr {K}_i\},i=1,2,\dots ,N_{tri}\) is its associated triangulation, in which \(\mathscr {K}_i\) is the ith triangle in the mesh and \(N_{tri}\) is the total number of triangular elements in the mesh.

2.2 Newton Linearization

Because the governing Eq. (1) is nonlinear, a linearization is needed, and the Newton iteration is employed in our work. Below we briefly summarize the implementation of the Newton iteration for our problem. We refer the reader to [21, 23, 24, 26, 39] for the details.

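The governing Eq. (1) is discretized as follows. First of all, the integral form of (1) on \(\varOmega \) is given by

\[ \int _{\varOmega } \nabla \cdot F(U)\,dxdy = \sum _{i}\int _{\mathscr {K}_i} \nabla \cdot F(U)\,dxdy = 0. \tag{4} \]

Then Green’s theorem gives the following equation,

\[ \sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} F(U)\cdot n_{i,j}\,ds = 0, \tag{5} \]

where \(e_{i,j}\) denotes the common edge of the element \(\mathscr {K}_i\) and its neighbour element \(\mathscr {K}_j\), and \(n_{i,j}\) denotes the unit outward normal vector of \(e_{i,j}\) with respect to the element \(\mathscr {K}_i\). In the simulation, the numerical flux \(\bar{F}(U_i, U_j)\) is used to replace the unknown flux F(U). Hence, the above equations are approximated by

\[ \sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \bar{F}(U_i, U_j)\cdot n_{i,j}\,ds = 0. \tag{6} \]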

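To resolve the nonlinearity of (6), the Newton method is employed here. We assume that the approximation of the solution at the kth step, \(U^{(k)}\), is known; then the approximation of the solution at the \((k+1)\)th step, \(U^{(k + 1)} = U^{(k)} + \varDelta U^{(k)}\), can be found by solving

\[ \sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \bar{F}\big (U^{(k)}_i + \varDelta U^{(k)}_i, U^{(k)}_j + \varDelta U^{(k)}_j\big )\cdot n_{i,j}\,ds = 0 \tag{7} \]

for \(\varDelta U^{(k)}_i\), which is the increment of the conserved quantity on the element \(\mathscr {K}_i\) with respect to the kth approximation of the solution. By Taylor's theorem, keeping only the linear part, the linear system for \(\varDelta U\) can be written as

\[ \sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \left( \frac{\partial \bar{F}}{\partial U_i}\varDelta U^{(k)}_i + \frac{\partial \bar{F}}{\partial U_j}\varDelta U^{(k)}_j \right) \cdot n_{i,j}\,ds = -\sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \bar{F}(U^{(k)}_i, U^{(k)}_j)\cdot n_{i,j}\,ds, \tag{8} \]

where the Jacobians of the numerical flux are evaluated at \((U^{(k)}_i, U^{(k)}_j)\).

Regularization is necessary to solve the linear system (8). The issue is resolved by introducing the local residual \(LR_i = \sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \bar{F}(U^{(k)}_i, U^{(k)}_j)\cdot n_{i,j}ds\), i.e. the regularized system is written as

\[ \alpha \sum _{i} ||LR_i||_1\, \varDelta U^{(k)}_i + \sum _{i}\sum _{e_{i,j}\in \partial \mathscr {K}_i}\int _{e_{i,j}} \left( \frac{\partial \bar{F}}{\partial U_i}\varDelta U^{(k)}_i + \frac{\partial \bar{F}}{\partial U_j}\varDelta U^{(k)}_j \right) \cdot n_{i,j}\,ds = -\sum _{i} LR_i, \tag{9} \]

where \(||\cdot ||_1\) is the \(l_1\) norm, and \(\alpha > 0\) is a parameter to weight the regularization.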
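The effect of the residual-based regularization can be illustrated on a toy problem. The sketch below is not the Euler solver: it applies a Newton iteration to a small algebraic system with two unknowns, and adds \(\alpha |R_i|\) to the ith diagonal entry of the Jacobian, mimicking the role of \(\alpha ||LR_i||_1\) in (9). The function name `regularized_newton`, the test system, and the default values of `alpha`, `tol` and `max_iter` are all assumptions made for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def regularized_newton(
    residual: Callable[[Sequence[float]], Vector],
    jacobian: Callable[[Sequence[float]], Matrix],
    u0: Sequence[float],
    alpha: float = 1.0,
    tol: float = 1e-12,
    max_iter: int = 50,
) -> Vector:
    """Newton iteration for a 2x2 system with residual-weighted diagonal damping.

    Each step solves (J + alpha * diag(|R_i|)) dU = -R, so the damping is
    strong where the local residual is large and vanishes near the solution.
    """
    u = list(u0)
    for _ in range(max_iter):
        r = residual(u)
        if sum(abs(ri) for ri in r) < tol:
            break
        J = jacobian(u)
        a11 = J[0][0] + alpha * abs(r[0])
        a12 = J[0][1]
        a21 = J[1][0]
        a22 = J[1][1] + alpha * abs(r[1])
        det = a11 * a22 - a12 * a21
        # Cramer's rule for the right-hand side -r
        du0 = (-r[0] * a22 + r[1] * a12) / det
        du1 = (-r[1] * a11 + r[0] * a21) / det
        u[0] += du0
        u[1] += du1
    return u


def toy_residual(u: Sequence[float]) -> Vector:
    # Hypothetical test system with a root at (1, 2)
    return [u[0] ** 2 + u[1] - 3.0, u[0] + u[1] ** 2 - 5.0]


def toy_jacobian(u: Sequence[float]) -> Matrix:
    return [[2.0 * u[0], 1.0], [1.0, 2.0 * u[1]]]
```

Because the added diagonal term is proportional to the residual, it disappears as the iteration converges, so the fast local convergence of the plain Newton method is retained in this toy setting.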

So far, the only unknown quantity in (9) is the numerical flux \(\bar{F}\). In the simulation, this quantity is obtained by solving a local Riemann problem in which the left and right states are determined by the solutions in the element \(\mathscr {K}_i\) and its neighbour \(\mathscr {K}_j\). Several Riemann solvers are available in the literature, and HLLC [48] is used in our simulations.

A natural choice for the left and right states for Riemann problem is the cell average of each conserved quantity. In this case, a piecewise constant approximation of the conserved quantity is supposed, and only first-order numerical accuracy can be expected. To improve the numerical accuracy, more accurate left and right states in Riemann problem are desired and this can be achieved by high-order solution reconstruction.

3 Solution Reconstruction

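With the assumption of sufficient regularity, Taylor's theorem gives the following expansion of the unknown function U(x, y) in the element \(\mathscr {K}_i\),

\[ U(x,y) = U(x_{\mathscr {K}_i}, y_{\mathscr {K}_i}) + \frac{\partial U}{\partial x}(x - x_{\mathscr {K}_i}) + \frac{\partial U}{\partial y}(y - y_{\mathscr {K}_i}) + \frac{1}{2}\frac{\partial ^2 U}{\partial x^2}(x - x_{\mathscr {K}_i})^2 + \frac{\partial ^2 U}{\partial x\partial y}(x - x_{\mathscr {K}_i})(y - y_{\mathscr {K}_i}) + \frac{1}{2}\frac{\partial ^2 U}{\partial y^2}(y - y_{\mathscr {K}_i})^2 + \cdots , \tag{10} \]

where \((x_{\mathscr {K}_i}, y_{\mathscr {K}_i})\) is the barycentre of the element \(\mathscr {K}_i\), at which all derivatives are evaluated. The task of the reconstruction is to recover the coefficients \(\partial ^\alpha U/(\partial x^{\alpha _1}\partial y^{\alpha _2})\), \(\alpha =\alpha _1 + \alpha _2\), from the cell averages \(\bar{U}_i =1/|\mathscr {K}_i|\int _{\mathscr {K}_i} U(x,y)dxdy\) of the conserved quantity U(x, y) in the element \(\mathscr {K}_i\), where \(|\mathscr {K}_i|\) is the area of the element \(\mathscr {K}_i\).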

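The most popular reconstruction in the literature is the linear reconstruction, i.e.

\[ U(x,y) = U(x_{\mathscr {K}_i}, y_{\mathscr {K}_i}) + \frac{\partial U}{\partial x}\Big |_{\mathscr {K}_i}(x - x_{\mathscr {K}_i}) + \frac{\partial U}{\partial y}\Big |_{\mathscr {K}_i}(y - y_{\mathscr {K}_i}). \tag{11} \]

It is noted that with the assumption of a linear distribution of U(x, y) in \(\mathscr {K}_i\), the constant term in (11) is the cell average, i.e. \(U(x_{\mathscr {K}_{i}},y_{\mathscr {K}_{i}}) = \bar{U}_i\). Hence, the task of the linear reconstruction is to recover the gradient of U(x, y) in \(\mathscr {K}_i\). There are two ways to evaluate the gradient \(\nabla U = (\partial U/\partial x, \partial U/\partial y)^T\). One is based on the Green–Gauss theorem [6],

\[ \int _{\mathscr {K}_i} \nabla U\,dxdy = \oint _{\partial \mathscr {K}_i} U n\,ds. \tag{12} \]

Since U is linear, \(\nabla U\) is a constant. Hence,

\[ \nabla U|_{\mathscr {K}_i} = \frac{1}{|\mathscr {K}_i|}\oint _{\partial \mathscr {K}_i} U n\,ds. \tag{13} \]

Replacing U on the edge \(e_{i,j}\) by the average \((\bar{U}_i + \bar{U}_j)/2\), the above integral can be approximated by

\[ \nabla U|_{\mathscr {K}_i} \approx \frac{1}{|\mathscr {K}_i|}\sum _{e_{i,j}\in \partial \mathscr {K}_i} \frac{1}{2}(\bar{U}_i + \bar{U}_j)\,|e_{i,j}|\,n_{i,j}, \tag{14} \]

where \(|e_{i,j}|\) is the length of the edge \(e_{i,j}\).

The implementation of the Green–Gauss approach is quite simple. However, the numerical accuracy of this approximation depends heavily on the regularity of the mesh. Also, it is not trivial to extend the method to high-order cases. We refer the reader to [11] for the quadratic reconstruction with the Green–Gauss method.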
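The Green–Gauss approximation of the gradient can be sketched in a few lines. The following Python function is an illustrative sketch rather than the authors' implementation: it assumes the triangle vertices are listed counter-clockwise, that the value on each edge is the arithmetic mean of the two adjacent cell averages, and that `u_neighbours[k]` is the average in the cell across the edge from vertex k to vertex k+1. The name `green_gauss_gradient` is hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def green_gauss_gradient(
    vertices: Sequence[Point],
    u_cell: float,
    u_neighbours: Sequence[float],
) -> Tuple[float, float]:
    """Approximate the gradient of U on a triangle by the Green-Gauss formula.

    vertices      : three vertices in counter-clockwise order
    u_cell        : cell average on the triangle itself
    u_neighbours  : cell averages across edges (v0,v1), (v1,v2), (v2,v0)
    """
    area = 0.0
    gx = 0.0
    gy = 0.0
    for k in range(3):
        x0, y0 = vertices[k]
        x1, y1 = vertices[(k + 1) % 3]
        # Shoelace contribution to the signed area (positive for CCW order)
        area += 0.5 * (x0 * y1 - x1 * y0)
        # Edge value: mean of the two adjacent cell averages
        u_face = 0.5 * (u_cell + u_neighbours[k])
        # For CCW vertices, (dy, -dx) equals edge length times outward normal
        gx += u_face * (y1 - y0)
        gy += u_face * (x0 - x1)
    return gx / area, gy / area
```

On an equilateral triangle whose neighbours are its mirror images, the midpoint between two adjacent barycentres coincides with the edge midpoint, and the sketch reproduces the gradient of a linear field exactly. On skewed meshes this coincidence is lost, which is one way to see the sensitivity to mesh regularity mentioned above.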

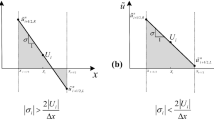

To overcome the above issues, the least-squares method is a very competitive candidate for solution reconstruction, owing to its ability to deliver accurate solutions even on skewed unstructured grids and its natural extension to high-order cases. To implement the least-squares reconstruction on the element \(\mathscr {K}_i\), a reconstruction patch \(\mathscr {P}_i\) is needed first. In the case of linear reconstruction, a natural choice for \(\mathscr {P}_i\) is \(\mathscr {K}_i\) itself together with its three Neumann neighbours, i.e. the elements sharing an edge with \(\mathscr {K}_i\). For example, for the element \(\mathscr {K}_{i,0} = \mathscr {K}_i\) in Fig. 1, the patch of the linear reconstruction can be chosen as \(\mathscr {P}_i = \{\mathscr {K}_{i,0},\mathscr {K}_{i,1},\mathscr {K}_{i,2},\mathscr {K}_{i,3}\}\).

With \(\mathscr {P}_i\) for \(\mathscr {K}_i\), the gradient \(\nabla U|_{\mathscr {K}_{i,0}} = (\partial U/\partial x|_{\mathscr {K}_{i,0}}, \partial U/\partial y|_{\mathscr {K}_{i,0}})^T\) can be solved from the following minimization problem,

\[ \min _{\nabla U|_{\mathscr {K}_{i,0}}} \sum _{j=1}^{3} \Big ( \bar{U}_{i,0} + \nabla U|_{\mathscr {K}_{i,0}}\cdot (x_{i,j} - x_{i,0}) - \bar{U}_{i,j} \Big )^2, \tag{15} \]

where \(x_{i,j}\) and \(\bar{U}_{i,j}\) denote the barycentre of \(\mathscr {K}_{i,j}\) and the cell average on \(\mathscr {K}_{i,j}\), respectively.
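For the linear case, the least-squares problem reduces to a 2-by-2 system of normal equations. The sketch below is a minimal illustration under the assumption that each neighbouring cell average is matched at the neighbour's barycentre; the function name `least_squares_gradient` and its argument layout are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def least_squares_gradient(
    centre: Point,
    u_centre: float,
    neighbours: Sequence[Tuple[Point, float]],
) -> Tuple[float, float]:
    """Least-squares gradient on a patch.

    centre      : barycentre of the element K_{i,0}
    u_centre    : cell average on K_{i,0}
    neighbours  : list of (barycentre, cell average) for the other patch elements
    """
    sxx = sxy = syy = bx = by = 0.0
    for (x, y), u in neighbours:
        dx = x - centre[0]
        dy = y - centre[1]
        du = u - u_centre
        # Accumulate the normal equations A^T A g = A^T b
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        bx += dx * du
        by += dy * du
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-14:
        raise ValueError("degenerate patch: neighbour barycentres are collinear")
    gx = (syy * bx - sxy * by) / det
    gy = (sxx * by - sxy * bx) / det
    return gx, gy
```

Unlike the Green–Gauss formula, this construction recovers the gradient of a linear field exactly for any non-degenerate placement of the neighbouring barycentres, which is consistent with the robustness on skewed grids noted in the text.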

The extension to high-order reconstruction is straightforward for the least-squares approach. In the case of quadratic reconstruction, a larger patch containing at least 6 elements is needed since there are more unknowns in (10). One way to enlarge \(\mathscr {P}_i\) is to include the Neumann neighbours of the Neumann neighbours of \(\mathscr {K}_i\). However, based on our numerical experience, generating \(\mathscr {P}_i\) by selecting \(\mathscr {K}_i\) and its Moore neighbours is a better choice, especially when the adaptive strategy is used in the simulation. In this case, the patch \(\mathscr {P}_i\) becomes

Now the unknown quantity U(x, y) is approximated by

To preserve the conservative property of the reconstructed polynomial, the minimization problem we need to solve becomes

Remark 1

The above method is the k-exact reconstruction [3]. To solve (17) directly, a large number of integrals need to be evaluated during the reconstruction. In [42], a numerical trick is introduced to save computational resources: several integrals are calculated beforehand, and the linear system is then assembled from those integrals by algebraic operations. Recently, a parallel k-exact reconstruction was developed [17], which significantly improves the efficiency of the reconstruction.

Remark 2

The conservation of U in \(\mathscr {K}_i\) cannot be guaranteed strictly by solving (17) in the least-squares sense. To preserve the conservation property rigorously, the constant term in \(P_i^2(x,y)\) is adjusted such that \(\frac{1}{|\mathscr {K}_{i,0}|}\int _{\mathscr {K}_{i,0}}P_i^2(x,y)dxdy = \bar{U}_{i}\).

For all high-order reconstructions (linear and above), a limiting process is necessary to restrain nonphysical oscillations, especially when there are shocks in the solution. For linear reconstruction, several mature limiters are available for unstructured meshes, such as the limiter of Barth and Jespersen [4] and the limiter of Venkatakrishnan [49]. Compared with the limiter of Barth and Jespersen, the limiter of Venkatakrishnan has better differentiability properties; hence, it performs better in steady-state convergence. Although these limiters work well for linear reconstruction, their extension to higher order is nontrivial. We refer the reader to [41] for a contribution in this direction. It is worth mentioning that a quality limiter for high-order methods was listed in [52] as one of the challenges in developing high-order numerical methods for computational fluid dynamics.

The weighted essentially non-oscillatory (WENO) scheme is well known for its ability to deliver high-order and non-oscillatory numerical solutions [30, 55]. For the WENO implementation on unstructured meshes, we refer the reader to [30] for details. Besides solution reconstruction, WENO has also been used as a limiter in the discontinuous Galerkin framework [40, 44, 45, 56]. In our works [21,22,23,24,25,26], WENO reconstruction is introduced for the solution reconstruction. Below is a brief summary of the WENO reconstruction under the assumption of a locally linear distribution of the solution.

In WENO reconstruction, besides the reconstruction patch \(\mathscr {P}_{i,0} = \mathscr {P}_{i}\) for \(\mathscr {K}_{i,0}\) in Fig. 1, we also solve the optimization problem (15) on patches \(\mathscr {P}_{i,1}=\{\mathscr {K}_{i,0},\mathscr {K}_{i,1},\mathscr {K}_{i,4},\mathscr {K}_{i,5}\}\), \(\mathscr {P}_{i,2}=\{\mathscr {K}_{i,0},\mathscr {K}_{i,2},\mathscr {K}_{i,6},\mathscr {K}_{i,7}\}\), and \(\mathscr {P}_{i,3}=\{\mathscr {K}_{i,0},\mathscr {K}_{i,3},\mathscr {K}_{i,8},\mathscr {K}_{i,9}\}\). Correspondingly, besides the polynomial \(P^1_{i,0} = P_i\) from \(\mathscr {P}_{i,0}\), we also have the candidate polynomials \(P^1_{i,1}\), \(P^1_{i,2}\), \(P^1_{i,3}\) from \(\mathscr {P}_{i,1}\), \(\mathscr {P}_{i,2}\) and \(\mathscr {P}_{i,3}\), respectively. For each candidate \(P^1_{i,j},j=0,1,2,3\), a smoothness indicator is defined by

Then the weight for each polynomial is calculated by

and the final polynomial for the element \(\mathscr {K}_i\) is given by

Remark 3

In the definition of \(\tilde{\omega }_j\) in (19), a parameter \(\gamma _j\) [20, 30] is used as the numerator. There, \(\gamma _j\) is designed to preserve the higher-order accuracy of \(P^1_i\), i.e. \(P^1_i(x_{GQ}, y_{GQ}) = P^2_i(x_{GQ},y_{GQ})\), where \(P^2_i(x,y)\) is a quadratic polynomial obtained by solving (16). With \(\gamma _j\) and the nonlinear weight \(\omega _j\), the reconstructed polynomial \(P_i\) can preserve third-order numerical accuracy and at the same time restrain nonphysical oscillations effectively [20, 30]. However, an extra quadratic reconstruction problem (16) as well as the parameters \(\gamma _j\) need to be calculated, which would reduce the efficiency of the simulation. In our algorithm, the numerator 1 is used instead of \(\gamma _j\) to avoid the extra calculations, and the h-adaptive method is introduced to remedy the accuracy issue.

The WENO reconstruction can be extended to higher order directly. We refer the reader to [25, 26] for our works on the non-oscillatory k-exact reconstruction.
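The weighting step with numerator 1 can be sketched as follows. Since the exact smoothness indicator and weight formula are not reproduced here, the sketch makes several assumptions that should be checked against [20, 30]: the unnormalized weight is taken as \(1/(\varepsilon + S_j)^2\) with a small \(\varepsilon \), and for a linear candidate the smoothness indicator is taken as the element area times the squared gradient magnitude. The names `weno_weights` and `weno_gradient` are hypothetical.

```python
from typing import List, Sequence, Tuple


def weno_weights(
    smoothness: Sequence[float],
    eps: float = 1.0e-6,
    power: int = 2,
) -> List[float]:
    """Normalized nonlinear weights with numerator 1 (no linear weights gamma_j).

    eps and power are assumed values, not taken from the paper.
    """
    raw = [1.0 / (eps + s) ** power for s in smoothness]
    total = sum(raw)
    return [w / total for w in raw]


def weno_gradient(
    gradients: Sequence[Tuple[float, float]],
    cell_area: float,
    eps: float = 1.0e-6,
) -> Tuple[float, float]:
    """Combine candidate gradients from several patches into one gradient.

    All linear candidates share the constant term (the cell average), so a
    convex combination of the polynomials is a convex combination of gradients.
    """
    # Assumed smoothness indicator for a linear polynomial: area * |grad|^2
    smoothness = [cell_area * (gx * gx + gy * gy) for gx, gy in gradients]
    weights = weno_weights(smoothness, eps)
    gx = sum(w * g[0] for w, g in zip(weights, gradients))
    gy = sum(w * g[1] for w, g in zip(weights, gradients))
    return gx, gy
```

In this sketch a candidate whose patch crosses a shock has a very large gradient and therefore receives a weight close to zero, so the combined gradient is dominated by the candidates built from smooth data.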

In traditional reconstructions, the polynomial is first obtained by a certain method, and then a limiter is introduced to remove or restrain possible oscillations. Recently, Chen and Li developed an integrated linear reconstruction (ILR) method [8] in which an optimization problem is posed and solved locally for each element to construct the polynomial. The advantages of ILR include (i) the reconstruction can be finished by solving a single problem, i.e. the reconstructing and limiting processes are combined, (ii) the local maximum principle is preserved theoretically by ILR, and (iii) no parameter is used in the reconstruction. An improved ILR method can be found in [7].

4 Towards Efficiency

Efficiency is crucial for an algorithm in practical applications. Since the Newton iteration is used for the linearization, a series of linearized systems needs to be solved for a single steady Euler system, which means that the efficiency of the linear solver is important for an efficient simulation. Furthermore, in one of the most important applications of the steady Euler solver, i.e. the optimal design of vehicle shapes, a series of steady Euler systems with different configurations needs to be solved in a single design process. Hence, how to improve the efficiency of the steady Euler solver itself is also worth studying in detail.

For the first issue, a geometrical multigrid solver is developed to solve the linearized system in our algorithm [21, 23,24,25,26, 39]. In this geometrical multigrid solver, the coarse meshes are generated by the volume agglomeration method [6, 32]. Then the error on the coarse meshes is smoothed by the block lower-upper Gauss–Seidel method proposed in [9]. We refer the reader to our works for the details of the implementation and performance of the solver.

To resolve the second issue mentioned above, the algorithm can be improved in the following aspects. The first is the acceleration of the convergence of the Newton iteration. In (9), the local residual of the system is used to regularize the system. This acceleration technique is similar to the local time-stepping method [6]: in both methods, local information is used to improve the simulation. In the local time-stepping method, the time-dependent Euler equations are solved and the Courant–Friedrichs–Lewy (CFL) number is chosen locally depending on the characteristic speed in the current control volume; hence, the evolution of the system is not uniform over the flow field. In regions with low characteristic speed, a larger CFL number can be chosen to speed up the convergence to the steady state. In our method, there is no temporal term in the equations and we use the local residual to regularize the system. If the system is locally far from the steady state, the local residual is large, which corresponds to solving the time-dependent problem with a small CFL number. On the other hand, the local residual is small when the system is locally close to the steady state, which corresponds to the large CFL number case. Based on our numerical experience, the local residual regularization works very well in all cases and the simulations are not sensitive to the selection of the parameter \(\alpha \) in (9).

The second way to improve the efficiency is to develop an efficient discretization. When there is large variation of the solution in the domain, especially when there are shocks in the solution, numerical discretization on a uniform mesh is not a good idea since too many mesh grids are wasted in regions where the solution varies gently. Adaptive mesh methods are popular tools for the efficient and nonuniform discretization of the governing equations. For example, r-adaptive methods have been successfully used in solving Euler equations [27, 36,37,38, 46, 47]. In our algorithm, h-adaptive methods are introduced for efficient numerical discretization [21, 22, 25, 26, 39]. To handle the local refinement or coarsening of the mesh grids efficiently, a hierarchy geometry tree (HGT) is developed. We refer the reader to [35] for the details of HGT. It is worth mentioning that, with HGT, the CPU time spent on local refinement or coarsening is negligible compared with the total CPU time of the simulation.

Another important component of the adaptive method is the error indicator. The quality of the error indicator determines the quality of the nonuniform discretization. There are basically two types of error indicators in the literature. One is the feature-based error indicators, which depend on the numerical solution, and the other is the error indicators based on a posteriori error estimation. In our works, several feature-based error indicators are tested in the h-adaptive framework, such as the gradient of the pressure [21, 26, 39] and the entropy [21, 26]. Recently, an adjoint-based a posteriori error estimation method was developed towards minimizing the numerical error of a quantity of interest [25]. Adjoint-based analysis is a very useful tool in applications such as the optimal design of vehicle shapes [15] and error estimation [14]. Below is a brief summary of our adjoint-based error indicator; we refer the reader to [25] for the details.

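Suppose that \(U^H\) is the solution on the mesh \(\mathscr {T}^H\), and \(J(U^H)\) is the quantity of interest. In practical applications, the quantity of interest \(J(U^H)\) could be the drag or lift in simulations of the flow around an airfoil, or other application-related quantities. Now, we are interested in the error of \(J(U^H)\), i.e. \(J(U) - J(U^H)\), where J(U) is the exact evaluation of the quantity of interest depending on the exact solution U. In most cases, J(U) is nonlinear. Then the linearization of the difference gives

\[ J(U) - J(U^H) \approx \frac{\partial J}{\partial U}(U - U^H). \tag{21} \]

By defining the residual \(R(U) := \nabla \cdot F(U)\), the linearization of the difference between the exact residual and the approximate residual gives

\[ R(U) - R(U^H) \approx \frac{\partial R}{\partial U}(U - U^H), \tag{22} \]

from which it follows that

\[ U - U^H \approx \left( \frac{\partial R}{\partial U}\right) ^{-1}\big (R(U) - R(U^H)\big ). \tag{23} \]

By plugging the above expression into (21), we get

\[ J(U) - J(U^H) \approx \psi ^T\big (R(U) - R(U^H)\big ), \tag{24} \]

where the adjoint \(\psi \) can be obtained by solving

\[ \left( \frac{\partial R}{\partial U}\right) ^T\psi = \left( \frac{\partial J}{\partial U}\right) ^T. \tag{25} \]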

The implementation in [25] is as follows. First, the mesh \(\mathscr {T}^H\) is uniformly refined once to obtain the new mesh \(\mathscr {T}^h\). Then the solution \(U^H\) on \(\mathscr {T}^H\) is interpolated onto \(\mathscr {T}^h\) to get an approximation \(U^H_h\), which is used in (24) to replace U. Since we assume that the system is solved completely on \(\mathscr {T}^H\), the quantity \(R(U^H)\) can be reasonably ignored in (24). Two ways to solve the adjoint problem (25) are mentioned in [25]. One is to evaluate the two Jacobian matrices in (25) on \(\mathscr {T}^h\) and then solve the equation on \(\mathscr {T}^h\). The other is to do the same on \(\mathscr {T}^H\). Compared with the former, the advantage of the latter strategy is that the system is much smaller, i.e. its size is only 25% of that in the former case. Furthermore, since \(U^H\) is a quality approximation to U on \(\mathscr {T}^H\), the linear problem (25) can be solved smoothly. Based on our numerical experience, direct evaluation of \(\partial J/\partial U\) and \(\partial R/\partial U\) on \(\mathscr {T}^h\) with the interpolated approximation \(U^H_h\) makes (25) difficult to solve, and several Newton iterations for (9) with \(U^H_h\) as the initial guess are necessary to remedy this. On the other hand, the disadvantage of the latter strategy is that the convergence order of the numerical method is slightly reduced. This is understandable since the information from the finer mesh would generate a more accurate error estimate.
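The structure of the adjoint-weighted residual estimate can be checked on a small linear model problem, for which the linearizations are exact. The sketch below is an illustration only, not the implementation in [25]: it uses a system with two unknowns, solves the transposed system for the adjoint with Cramer's rule, and returns both the global estimate \(\psi ^T r\) and the local quantities \(|\psi _i r_i|\), where using the latter as an elementwise indicator is an assumption. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def solve2(A: Matrix, b: Sequence[float]) -> Vector:
    """Solve a 2x2 linear system with Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if abs(det) < 1e-14:
        raise ValueError("singular matrix")
    x0 = (b[0] * A[1][1] - A[0][1] * b[1]) / det
    x1 = (A[0][0] * b[1] - A[1][0] * b[0]) / det
    return [x0, x1]


def transpose2(A: Matrix) -> Matrix:
    return [[A[0][0], A[1][0]], [A[0][1], A[1][1]]]


def adjoint_error_estimate(
    dRdU: Matrix,
    dJdU: Sequence[float],
    residual_difference: Sequence[float],
) -> Tuple[Vector, float, Vector]:
    """Adjoint-weighted residual estimate.

    Solves (dR/dU)^T psi = (dJ/dU)^T, then returns
    psi, the global estimate psi^T r, and the local indicators |psi_i r_i|,
    where r stands for R(U) - R(U^H).
    """
    psi = solve2(transpose2(dRdU), dJdU)
    estimate = sum(p * r for p, r in zip(psi, residual_difference))
    local = [abs(p * r) for p, r in zip(psi, residual_difference)]
    return psi, estimate, local
```

For a linear residual \(R(U) = AU - b\) and a linear functional \(J(U) = g^TU\), the estimate reproduces \(J(U) - J(U^H)\) exactly; for the nonlinear Euler system it is only a first-order approximation of the error in the quantity of interest.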

The third strategy to improve the efficiency of our steady Euler solver is parallel computing. Since the operations in the solution reconstruction, the evaluation of the numerical flux, and the update of the cell averages are local, OpenMP [1] has been introduced to parallelize these operations in [22], in which a reactive Euler system is solved to simulate detonation. To handle large-scale simulations, parallelization based on MPI becomes necessary. We are working on the parallelization of our algorithm based on the domain decomposition method and OpenMPI [2], and the results will be reported in a forthcoming paper.

5 Numerical Tests

In this section, the following three numerical tests will be implemented to demonstrate the effectiveness of our method,

- Subsonic flow around a circular cylinder,

- Inviscid flow through a channel with a smooth bump,

- Transonic flow around a NACA 0012 airfoil.

All simulations in this paper are supported by AFVM4CFD [21,22,23,24,25,26, 39] which is a C++ library developed and maintained by the authors and collaborators.

5.1 Subsonic Flow Through a Circular Cylinder

In this section, the subsonic flow past a circular cylinder is simulated. The computational domain is a ring, and the radii of the inner and outer circles are 0.5 and 20, respectively. The configuration of the flow in the far field is as follows. The density is 1, the Mach number is 0.38, and the velocity vector is \((\cos {\theta },\sin {\theta })^T\), where \(\theta \) is the angle of attack and \(\theta = 0^\circ \) in this case. The far field configuration is also used as the initial condition for our Newton iteration.

The method with non-oscillatory 2-exact reconstruction is implemented on five meshes with 240, 504, 800, 1776, and 3008 grid points, respectively. Since the flow in the domain is subsonic, inviscid, and vortex free, the entropy of the flow should be a constant equal to that in the far field. Hence, we use the \(L_2\) error of the entropy production to evaluate the convergence of the method, which is shown in Fig. 2. For comparison, the results obtained with linear reconstruction in [24] are also shown. It can be observed from the figure that both the linear and quadratic methods recover the theoretical convergence rates. The mesh grids around the inner circle as well as the isolines of the Mach number are shown in Fig. 3.
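A convergence curve of this kind is usually summarized by the observed order between successive meshes. The following sketch shows one way to compute it, assuming a two-dimensional mesh whose characteristic size scales as \(h \propto N^{-1/2}\) with the number of grid points N. The function name `observed_orders` is hypothetical, and the error values in the usage note are synthetic placeholders, not results from the paper.

```python
import math
from typing import List, Sequence


def observed_orders(
    num_points: Sequence[int],
    errors: Sequence[float],
    dim: int = 2,
) -> List[float]:
    """Observed convergence order between consecutive meshes.

    Assumes error ~ C * h**p with mesh size h ~ N**(-1/dim),
    so p = log(e_k / e_{k-1}) / log(h_k / h_{k-1}).
    """
    orders = []
    for k in range(1, len(errors)):
        h_ratio = (num_points[k] / num_points[k - 1]) ** (-1.0 / dim)
        orders.append(math.log(errors[k] / errors[k - 1]) / math.log(h_ratio))
    return orders
```

For example, with the mesh sizes listed above and synthetic errors generated as \(N^{-3/2}\), every entry of `observed_orders([240, 504, 800, 1776, 3008], errors)` equals 3 up to rounding, which is the rate expected of a third-order method.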

5.2 Inviscid Flow Through a Channel with a Smooth Bump

In this subsection, the inviscid flow through a channel with a smooth bump is simulated by adaptive method with non-oscillatory 2-exact reconstruction. This test is a benchmark test listed in [52] in which the detailed setup for the simulation can be found.

In Fig. 4, the following three results are shown. The first is the convergence curve generated on four successively and uniformly refined meshes. It can be observed that the theoretical curve is recovered very well. The second is the convergence curve generated by the adaptive method with the error indicator obtained from the local residual only. The adaptive method generates a much better convergence curve than uniform refinement of the mesh. The nonuniform distribution of the mesh grids with 5940 points as well as the corresponding isolines of the Mach number are shown in Fig. 5 (bottom). The third is the convergence curve generated by the adaptive method with the error indicator obtained from the adjoint weighted residual. In the simulation, the following functional is used as the quantity of interest,

where \(s_\infty = p_\infty /\rho _\infty ^\gamma \) is the far field entropy, and \(p_\infty \) and \(\rho _\infty \) are the far field pressure and density, respectively. From Fig. 4, it can be observed that the adjoint weighted residual gives the best convergence among the three results. In Fig. 5 (top), the distribution of the mesh grids with 3387 points and the isolines of the Mach number obtained with the adjoint weighted residual method are shown. It can be seen that the adjoint method assigns more mesh grids to the regions in which the entropy is more sensitive to the local residual. This explains why, with the adjoint weighted residual, a better result can be generated with fewer mesh grids than in the second result, in which only the local residual is used.

5.3 Transonic Flow Around a NACA 0012 Airfoil

The last numerical test is the transonic flow around a NACA 0012 airfoil. The purpose is to show the advantage of the adjoint weighted method in accurately calculating a quantity of interest in practical applications, which is the drag coefficient in this test, i.e.

\[ J(U) = \int _{\partial \varOmega _a} p\,\beta \cdot n\,ds, \]

where \(\partial \varOmega _a\) is the surface of the airfoil, and n is the unit outer normal vector with respect to \(\partial \varOmega _a\). The parameter \(\beta \) in the above formula is given as

\[ \beta = (\cos \theta , \sin \theta )^T/C_\infty , \]

where \(C_\infty = 0.5\gamma p_\infty Ma_\infty ^2 l\), and \(Ma_\infty \) and l are the far field Mach number of the flow and the chord length of the airfoil, respectively.
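A discrete evaluation of such a pressure-based drag coefficient can be sketched as below. The sketch rests on assumptions that are not confirmed by the text: the drag direction is taken as \(\beta = (\cos \theta , \sin \theta )^T/C_\infty \), the surface is a closed polygon listed counter-clockwise, the pressure is constant on each edge, and n points out of the flow domain, i.e. into the body. The function name `drag_coefficient` and its arguments are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def drag_coefficient(
    boundary: Sequence[Point],
    pressures: Sequence[float],
    theta_deg: float,
    p_inf: float,
    mach_inf: float,
    chord: float = 1.0,
    gamma: float = 1.4,
) -> float:
    """Sum p * (beta . n) * |e| over the edges of a closed surface polygon.

    boundary   : surface vertices in counter-clockwise order
    pressures  : one pressure value per edge (vertex k to vertex k+1)
    """
    c_inf = 0.5 * gamma * p_inf * mach_inf ** 2 * chord
    theta = math.radians(theta_deg)
    bx = math.cos(theta) / c_inf
    by = math.sin(theta) / c_inf
    total = 0.0
    n = len(boundary)
    for k in range(n):
        x0, y0 = boundary[k]
        x1, y1 = boundary[(k + 1) % n]
        # Normal pointing out of the flow domain (into the body), times edge length
        nx_ds = -(y1 - y0)
        ny_ds = x1 - x0
        total += pressures[k] * (bx * nx_ds + by * ny_ds)
    return total
```

A quick sanity check of the sign convention is that a uniform surface pressure gives zero drag on any closed polygon, while a higher pressure on the upstream face than on the downstream face gives a positive value.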

The far field flow is set up with the following configuration. The density is 1, the Mach number is 0.8, and the velocity vector is \((\cos {\theta },\sin {\theta })^T\) with the angle of attack \(\theta = 1.25^\circ \). The far field flow state is again used as the initial guess for the Newton iteration.

In Fig. 6 (left), the convergence history of the Newton iteration on 11 successively and adaptively refined meshes is shown. It can be observed that the residual is reduced efficiently towards machine epsilon on all meshes, which demonstrates the effectiveness of the algorithm. In Fig. 6 (right), the advantage of using the adaptive method with the error indicator generated by the adjoint weighted residual is clearly demonstrated: the convergence curve of the drag coefficient generated by the adaptive method is much superior to that generated by uniformly refining the mesh, and to reach almost the same numerical accuracy (around \(1.0e-05\)), the adaptive method needs only slightly more than 10% of the mesh grids required by the uniform refinement strategy. Figure 7 shows the mesh grids around the airfoil (left), the isolines of the Mach number (middle), and the isolines of the x-momentum from the adjoint problem (right). It can be seen that with the adjoint weighted residual, the upper and lower shocks as well as the leading edge and tail regions are successfully resolved, which supports the accurate calculation of the drag coefficient.

Fig. 6 Left: residual convergence history under successive adaptive mesh refinements for the NACA0012 airfoil at Mach number 0.8 and angle of attack 1.25\(^\circ \). Right: the corresponding convergence history of the drag coefficient (solid line); the dashed line shows the results obtained with uniform mesh refinement

Remark 4

It is worth mentioning that in all simulations in this paper and in our previous works [21, 23, 24, 25, 26], the convergence of the Newton iteration is smooth and efficient. Furthermore, the convergence is not sensitive to the selection of the parameters, which indicates the robustness of our method.

Remark 5

In simulations with a curved boundary, the direction of the outer normal vector at each Gauss quadrature point is adjusted according to the exact curve. With this correction, the performance of the method with high-order solution reconstruction can be significantly improved; readers may refer to the simulation of the Ringleb problem in [26] for details. However, errors remain in the other quadrature information, such as the position and the weight of the quadrature point. Moreover, to develop a framework for the optimal design of the vehicle shape, a flexible and powerful tool to handle the curved boundary approximation is desirable. In our forthcoming paper, nonuniform rational B-splines (NURBS) will be introduced into our method to handle the curved boundary issue, and preliminary results show the excellent performance of the new method.
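
The normal correction described in this remark can be illustrated on the simplest curved boundary, a circle. The sketch below is only an illustration under stated assumptions: the boundary is the unit circle, the edge is a straight chord between two boundary vertices, and the helper names (`chord_normal`, `exact_circle_normal`, `gauss_points_2`, `normal_deviation_deg`) are hypothetical. As noted above, only the normal direction is corrected; the positions and weights of the quadrature points still belong to the straight edge.

```python
import math


def chord_normal(p0, p1):
    """Unit normal of the straight edge p0 -> p1 (tangent rotated 90 degrees clockwise)."""
    tx, ty = p1[0] - p0[0], p1[1] - p0[1]
    length = math.hypot(tx, ty)
    return (ty / length, -tx / length)


def exact_circle_normal(q, center=(0.0, 0.0)):
    """Unit normal of the circle at the radial projection of q, pointing away from the centre."""
    dx, dy = q[0] - center[0], q[1] - center[1]
    r = math.hypot(dx, dy)
    return (dx / r, dy / r)


def gauss_points_2(p0, p1):
    """Two-point Gauss-Legendre nodes mapped onto the straight edge p0 -> p1."""
    s = 1.0 / math.sqrt(3.0)
    points = []
    for xi in (-s, s):
        t = 0.5 * (1.0 + xi)  # map [-1, 1] to [0, 1]
        points.append((p0[0] + t * (p1[0] - p0[0]), p0[1] + t * (p1[1] - p0[1])))
    return points


def normal_deviation_deg(n_a, n_b):
    """Angle in degrees between two unit vectors."""
    dot = max(-1.0, min(1.0, n_a[0] * n_b[0] + n_a[1] * n_b[1]))
    return math.degrees(math.acos(dot))


# An edge spanning 10 degrees of the unit circle, traversed counterclockwise.
a, b = 0.0, math.radians(10.0)
p0, p1 = (math.cos(a), math.sin(a)), (math.cos(b), math.sin(b))
n_chord = chord_normal(p0, p1)
for q in gauss_points_2(p0, p1):
    n_exact = exact_circle_normal(q)
    print("Gauss point", q, "deviation [deg]:", normal_deviation_deg(n_chord, n_exact))
```

The chord normal is constant along the edge, while the exact normal varies from one quadrature point to the next; the printed deviation shrinks as the edge is refined.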

6 Conclusion

In this paper, an efficient and robust framework of adaptive finite volume solutions for the steady Euler equations is introduced. The governing equations are discretized with the finite volume method, and the framework consists of a Newton iteration for the linearization of the Euler system and a geometrical multigrid method for solving the linearized system. A non-oscillatory k-exact reconstruction is developed to deliver quality solution reconstruction in both the linear and the higher-order cases. To improve the solver efficiency, an h-adaptive method is introduced, and an adjoint-based a posteriori error estimation method is developed to generate a quality error indicator for the adaptive method. Numerical results show the desired convergence behaviour of the method and the quality nonuniform meshes generated by the adaptive method.

References

OpenMP. http://www.openmp.org

OpenMPI. http://www.open-mpi.org

T.J. Barth, Recent developments in high order \(k\)-exact reconstruction on unstructured meshes, in 31st Aerospace Sciences Meeting American Institute of Aeronautics and Astronautics, pp. 1–15 (1993)

T.J. Barth, D.C. Jespersen, The design and application of upwind schemes on unstructured meshes. AIAA Paper 89-0366 (1989)

M.J. Berger, A. Jameson, Automatic adaptive grid refinement for the Euler equations. AIAA J. 23(4), 561–568 (1985)

J. Blazek, Computational Fluid Dynamics: Principles and Applications. (Elsevier Science, 2005)

L. Chen, G. Hu, R. Li, Integrated linear reconstruction for finite volume scheme on arbitrary unstructured grids. ArXiv e-prints, March 2017; Commun. Comput. Phys. 24(2), 454–480 (2018)

L. Chen, R. Li, An integrated linear reconstruction for finite volume scheme on unstructured grids. J. Sci. Comput. 68(3), 1172–1197 (2016)

R.F. Chen, Z.J. Wang, Fast, block lower-upper symmetric Gauss-Seidel scheme for arbitrary grids. AIAA J. 38(12), 2238–2245 (2000)

B. Cockburn, G.E. Karniadakis, C.-W. Shu (eds.), Discontinuous Galerkin Methods: Theory, Computation and Applications, Lecture Notes in Computational Science and Engineering, vol. 11 (Springer-Verlag, Heidelberg, 2000)

M. Delanaye, Polynomial reconstruction finite volume schemes for the compressible Euler and Navier-Stokes equations on unstructured adaptive grids. Ph.D. thesis, The University of Liege, Belgium, 1996

B. Engquist, B.D. Froese, Y.-H.R. Tsai, Fast sweeping methods for hyperbolic systems of conservation laws at steady state. J. Comput. Phys. 255, 316–338 (2013)

B. Engquist, B.D. Froese, Y.-H.R. Tsai, Fast sweeping methods for hyperbolic systems of conservation laws at steady state II. J. Comput. Phys. 286, 70–86 (2015)

K.J. Fidkowski, D.L. Darmofal, Review of output-based error estimation and mesh adaptation in computational fluid dynamics. AIAA J. 49(4), 673–694 (2011)

M.B. Giles, N.A. Pierce, An introduction to the adjoint approach to design. Flow Turbul. Combust. 65(3), 393–415 (2000)

M. Gurris, D. Kuzmin, S. Turek, Implicit finite element schemes for the stationary compressible Euler equations. Int. J. Numer. Meth. Fluids 69(1), 1–28 (2012)

F. Haider, P. Brenner, B. Courbet, J.-P. Croisille, Parallel Implementation of \(k\)-Exact Finite Volume Reconstruction on Unstructured Grids (Springer International Publishing, Cham, 2014), pp. 59–75

R.E. Harris, Z.J. Wang, High-order adaptive quadrature-free spectral volume method on unstructured grids. Comput. Fluids 38(10), 2006–2025 (2009)

P. Houston, E. Süli, hp-adaptive discontinuous Galerkin finite element methods for first-order hyperbolic problems. SIAM J. Sci. Comput. 23(4), 1226–1252 (2001)

C.Q. Hu, C.-W. Shu, Weighted essentially non-oscillatory schemes on triangular meshes. J. Comput. Phys. 150, 97–127 (1999)

G.H. Hu, An adaptive finite volume method for 2D steady Euler equations with WENO reconstruction. J. Comput. Phys. 252, 591–605 (2013)

G.H. Hu, A numerical study of 2D detonation waves with adaptive finite volume methods on unstructured grids. J. Comput. Phys. 331, 297–311 (2017)

G.H. Hu, R. Li, T. Tang, A robust high-order residual distribution type scheme for steady Euler equations on unstructured grids. J. Comput. Phys. 229(5), 1681–1697 (2010)

G.H. Hu, R. Li, T. Tang, A robust WENO type finite volume solver for steady Euler equations on unstructured grids. Commun. Comput. Phys. 9(3), 627–648 (2011)

G.H. Hu, X.C. Meng, N.Y. Yi, Adjoint-based an adaptive finite volume method for steady Euler equations with non-oscillatory \(k\)-exact reconstruction. Comput. Fluids 139, 174–183 (2016). 13th USNCCM International Symposium of High-Order Methods for Computational Fluid Dynamics, a special issue dedicated to the 60th birthday of Professor David Kopriva

G.H. Hu, N.Y. Yi, An adaptive finite volume solver for steady Euler equations with non-oscillatory \(k\)-exact reconstruction. J. Comput. Phys. 312, 235–251 (2016)

W.Z. Huang, R.D. Russell, Adaptive Moving Mesh Methods, vol. 174 of Applied Mathematical Sciences (Springer-Verlag New York, 2011)

M.Y. Hussaini, D.A. Kopriva, M.D. Salas, T.A. Zang, Spectral methods for the Euler equations. I – Fourier methods and shock capturing. AIAA J. 23(1), 64–70 (1985)

A. Jameson, W. Schmidt, E. Turkel, Numerical solution of the Euler equations by finite volume methods using Runge-Kutta time stepping schemes, in 14th Fluid and Plasma Dynamics Conference (1981)

G.-S. Jiang, C.-W. Shu, Efficient implementation of weighted ENO schemes. J. Comput. Phys. 126(1), 202–228 (1996)

N. Kwatra, J.T. Grétarsson, R. Fedkiw, Practical animation of compressible flow for shock waves and related phenomena, in Proceedings of the 2010 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA ’10 (Eurographics Association, Aire-la-Ville, Switzerland, 2010), pp. 207–215

M.H. Lallemand, H. Steve, A. Dervieux, Unstructured multigridding by volume agglomeration: current status. Comput. Fluids 21(3), 397–433 (1992)

R.J. LeVeque, Finite Volume Methods for Hyperbolic Problems. Cambridge Texts in Applied Mathematics (Cambridge University Press, 2002)

K. Li, Z. Xiao, Y. Wang, J.Y. Du, K.Q. Li (eds.), Parallel Computational Fluid Dynamics, vol. 405, Communications in Computer and Information Science (Springer, Berlin Heidelberg, 2013)

R. Li, On multi-mesh h-adaptive methods. J. Sci. Comput. 24(3), 321–341 (2005)

R. Li, T. Tang, Moving mesh discontinuous Galerkin method for hyperbolic conservation laws. J. Sci. Comput. 27(1), 347–363 (2006)

R. Li, T. Tang, P.W. Zhang, Moving mesh methods in multiple dimensions based on harmonic maps. J. Comput. Phys. 170(2), 562–588 (2001)

R. Li, T. Tang, P.W. Zhang, A moving mesh finite element algorithm for singular problems in two and three space dimensions. J. Comput. Phys. 177(2), 365–393 (2002)

R. Li, X. Wang, W.B. Zhao, A multigrid block LU-SGS algorithm for Euler equations on unstructured grids. Numer. Math. Theor. Methods Appl. 1, 92–112 (2008)

H. Luo, J.D. Baum, R. Löhner, A Hermite WENO-based limiter for discontinuous Galerkin method on unstructured grids. J. Comput. Phys. 225(1), 686–713 (2007)

K. Michalak, C. Ollivier-Gooch, Limiters for unstructured higher-order accurate solutions of the Euler equations, in 46th AIAA Aerospace Sciences Meeting and Exhibit, Reno, Nevada (2008)

C. Ollivier-Gooch, A. Nejat, K. Michalak, Obtaining and verifying high-order unstructured finite volume solutions to the Euler equations. AIAA J. 47(9), 2105–2120 (2009)

S. Popinet, Gerris: a tree-based adaptive solver for the incompressible Euler equations in complex geometries. J. Comput. Phys. 190(2), 572–600 (2003)

J.X. Qiu, C.-W. Shu, Hermite WENO schemes and their application as limiters for Runge-Kutta discontinuous Galerkin method: one-dimensional case. J. Comput. Phys. 193(1), 115–135 (2004)

J.X. Qiu, J. Zhu, RKDG with WENO Type Limiters (Springer, Heidelberg, 2010), pp. 67–80

H.Z. Tang, T. Tang, Adaptive mesh methods for one- and two-dimensional hyperbolic conservation laws. SIAM J. Numer. Anal. 41(2), 487–515 (2003)

T. Tang, Moving mesh methods for computational fluid dynamics. Contemporary Math. 383, 141–174 (2005)

E.F. Toro, M. Spruce, W. Speares, Restoration of the contact surface in the HLL-Riemann solver. Shock Waves 4(1), 25–34 (1994)

V. Venkatakrishnan, Convergence to steady-state solutions of the Euler equations on unstructured grids with limiters. J. Comput. Phys. 118, 120–130 (1995)

L. Wang, D.J. Mavriplis, Adjoint-based h-p adaptive discontinuous Galerkin methods for the compressible Euler equations, in 47th AIAA Aerospace Sciences Meeting including The New Horizons Forum and Aerospace Exposition, Orlando, Florida (2009)

Z.J. Wang, Evaluation of high-order spectral volume method for benchmark computational aeroacoustic problems. AIAA J. 43(2), 337–348 (2005)

Z.J. Wang, K. Fidkowski, R. Abgrall, F. Bassi, D. Caraeni, A. Cary, H. Deconinck, R. Hartmann, K. Hillewaert, H.T. Huynh, N. Kroll, G. May, P.-O. Persson, B. van Leer, M. Visbal, High-order CFD methods: current status and perspective. Int. J. Numer. Meth. Fluids 72(8), 811–845 (2013)

Wikipedia. Geforce–Wikipedia, the free encyclopedia (2016). Accessed 16 Dec 2016

X.X. Zhang, C.-W. Shu, Positivity-preserving high order finite difference WENO schemes for compressible Euler equations. J. Comput. Phys. 231(5), 2245–2258 (2012)

Y.-T. Zhang, C.-W. Shu, Chapter 5–ENO and WENO schemes, in Handbook of Numerical Methods for Hyperbolic Problems Basic and Fundamental Issues, Handbook of Numerical Analysis, ed. by R. Abgrall, C.-W. Shu, vol. 17 (Elsevier, 2016), pp. 103–122

J. Zhu, X.H. Zhong, C.-W. Shu, J.X. Qiu, Runge-Kutta discontinuous Galerkin method with a simple and compact Hermite WENO limiter. Commun. Comput. Phys. 19(4), 944–969 (2016)

Acknowledgements

The research of Guanghui Hu was partially supported by 050/2014/A1 from FDCT of the Macao S. A. R., MYRG2014-00109-FST and MRG/016/HGH/2013/FST from University of Macau and National Natural Science Foundation of China (Grant No. 11401608). The research of Tao Tang was partially supported by the Special Project on High-Performance Computing of the National Key R&D Program under No. 2016YFB0200604, the National Natural Science Foundation of China under No. 11731006, and the Science Challenge Project under No. TZ2018001.

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Hu, G., Meng, X., Tang, T. (2018). On Robust and Adaptive Finite Volume Methods for Steady Euler Equations. In: Klingenberg, C., Westdickenberg, M. (eds) Theory, Numerics and Applications of Hyperbolic Problems II. HYP 2016. Springer Proceedings in Mathematics & Statistics, vol 237. Springer, Cham. https://doi.org/10.1007/978-3-319-91548-7_2

DOI: https://doi.org/10.1007/978-3-319-91548-7_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91547-0

Online ISBN: 978-3-319-91548-7

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)