Abstract

Linear reconstruction based on local cell-averaged values is the most commonly adopted technique to achieve a second-order accuracy when one uses the finite volume scheme on unstructured grids. For solutions with discontinuities appearing in such as conservation laws, a certain limiter has to be applied to the predicted gradient to prevent numerical oscillations. We propose in this paper a new formulation for linear reconstruction on unstructured grids, which integrates the prediction of the gradient and the limiter together. By solving on each cell a tiny linear programming problem without any parameters, the gradient is directly obtained which satisfies the monotonicity condition. It can be shown that the resulting numerical scheme with our new method fulfils a discrete maximum principle with fair relaxed geometric constraints on grids. Numerical results demonstrate that our method achieves satisfactory numerical accuracy with theoretical guarantee of local discrete maximum principle.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

When one adopts the finite volume scheme to discretize one-dimensional conservation laws, a second-order accuracy can be achieved by local linear reconstructions. To prevent spurious oscillations where discontinuities may appear, a suitable limiter has to be applied on the gradient given by the linear reconstruction. One common approach in this fold is the Monotonic Upstream-Centered Scheme for Conservation Law (MUSCL) due to van Leer [1]. Later MUSCL-type finite volume schemes were developed for multiple dimension problems on both structured and unstructured grids. In one-dimensional case the TVD criterion can prevent spurious oscillations and achieve second-order accuracy at the meantime, while TVD schemes in multi-dimensional cases have been proved to be at most first-order accurate [2]. Moreover, the implementation of TVD condition on unstructured grids is much less obvious. As an alternative for TVD criterion to prevent spurious oscillations, the local maximum principle was considered [3] which makes it easier to generalize to multi-dimensional problems. To ensure a local maximum principle, a new class of monotone scheme was proposed in [3] based on positivity of coefficients. The positivity of coefficients can be achieved by certain monotonicity condition and some additional geometric constraint on grids. Much subsequent research has been directed towards multi-dimensional numerical schemes which satisfy certain form of monotonicity condition [4–6]. The second-order TVD scheme of [5] was modified in [7] such that the local maximum principle is fulfilled by selecting the gradient which satisfies the monotonicity condition. In [8], an appropriate maximum principle was attained by restricting the gradient to lie within a maximum principle region. During the study of the multi-dimensional limiting process (MLP), Park et al. [9] extended the MLP condition from structured to unstructured grids, which also leads to a maximum principle. The MUSCL-type methods were generalized to higher-order reconstruction by introducing the Hessian [10]. The ENO/WENO-type methods are alternative methods to achieve higher-order accuracy. Although these methods have been successfully applied on unstructured grids [11–14], they are not free from parameters.

The linear reconstructions above consist of two steps. First, a predicted gradient is computed for each cell of the mesh using the cell averages in the reconstruction patch of the cell. Then the predicted gradient is limited to give a corrected gradient on the cell to prevent numerical oscillations. There exists a large family of so-called scalar limiters, which are implemented by multiplying all components of the gradient by a scalar in [0, 1]. Scalar limiters, though very popular, are far more diffusive, since the constraint triggered by any neighbouring cell will degrade the gradient in one specific direction, resulting limiting in all directions. Several approaches have been suggested to improve the scalar limiter with more consideration on the nature of multi-dimensional problems. Therefore, instead of reducing the magnitude of the predicted gradient, one may try to limit the predicted gradient componentwisely [15, 16], as is done in the vector limiters. One essential difference between the scalar limiter and the vector limiter is that the limited gradient given by the scalar limiter has the same direction with the predicted gradient, while the vector limiter may not only modify the magnitude, but also the direction of the predicted gradient. Therefore, the vector limiter is actually trying to find a gradient in a multi-dimensional cone adjacent to the direction of the predicted gradient. Some authors then turned the vector limiter into the formulation of an optimization problem [17–19]. Taking [17] as an example, the limiter was formulated as a linear programming (LP) problem with the goal of retaining as much of the predicted gradient as possible while fulfilling the monotonicity condition.

Since the direction of the predicted gradient is modified, one actually has no obligation to take the predicted gradient as a priori information. Motivated by such an idea, we propose a new formulation of reconstruction. In this formulation, the gradient satisfying the monotonicity condition can be directly obtained without a gradient prediction. The prediction step and limiting step in the traditional reconstruction are therefore integrated into a single step. Our construction eventually gives rise to a tiny LP problem similar to that of [19], except with an alternative objective function that does not require gradient prediction any longer. Equipped with an efficient LP solver, the reconstructed gradient can be obtained directly with cheap computational cost. Moreover, the LP problem itself is free from the grid topology, and thus the method may be applied to those discretization with very flexible grids. More importantly, our method is completely parameter-free and can thereby be easily extended to various applications. With some mild assumptions on the grids, we can show that the local maximum principle is preserved on triangular meshes. Similar analysis to alternative meshes can be performed. We present some numerical tests by solving scalar equations to validate our method. It is observed that the discrete scheme achieves satisfactory numerical accuracy without violating the discrete maximum principle. The numerical results for some benchmark problems governed by Euler equations are then provided to demonstrate the capacity of this new exploration.

This paper is organized as follows. At first, we briefly introduce the finite volume methods in Sect. 2, and then in Sect. 3 we present our linear reconstruction procedures in detail. In Sect. 4 we discuss the properties of our reconstruction method. Numerical examples will be given in Sect. 5 to demonstrate the robustness and accuracy of our method. Finally, a short conclusion will be drawn in Sect. 6.

2 MUSCL-Type Finite Volume Method

We briefly introduce the MUSCL-type finite volume methods for hyperbolic conservation laws on unstructured grids below. Let us consider a hyperbolic conservation law as follows

equipped with appropriate boundary conditions. The problem domain is triangulated into a mesh \({\mathcal {T}}\) with cells \(T_i\). The cell-centered finite volume discretization is derived by first integrating (1) on the control volume \(T_i\)

where \({{\varvec{u}}}_i(t)\) denotes the cell average of \({{\varvec{u}}}\) on the cell \(T_i\) and \({{\varvec{n}}}\) is the unit outward normal over the boundary \(\partial T_i\), and then approximating the integral on the cell boundary appeared in (2) with the numerical quadrature

where \(e_{ij}\) represents the common edge between \(T_i\) and \(T_j\), \({{\varvec{x}}}^{(q)}_{ij}\) and \(w_q~(q=1,2,\cdots ,Q)\) are the numerical quadrature points and corresponding weights respectively. Since we are concerned with second-order schemes, we adopt the midpoint quadrature rule and omit the index q hereinafter.

Next we supplant the flux \({{\varvec{F}}}({{\varvec{u}}}({{\varvec{x}}}_{ij},t))\cdot {\varvec{n}}_{ij}\) at interfaces by a numerical flux function \({\mathcal {F}}({{\varvec{u}}}_{ij}^-,{{\varvec{u}}}_{ij}^+)\), which yields a semi-discrete scheme formulated as

where \({{\varvec{u}}}_{ij}^-\) (resp. \({{\varvec{u}}}_{ij}^+\)) is the trace value of the numerical solution inside (resp. outside) the cell \(T_i\).

As one of the most popular numerical flux functions, the Lax-Friedrichs flux can be expressed as

where \(\alpha \) is taken as an upper bound for the signal speed.

To achieve a higher than first-order numerical accuracy, the trace values \({{\varvec{u}}}_{ij}^\pm \) are evaluated on a piecewise polynomial numerical solution reconstructed from cell-averaged values \(\{ {\varvec{u}}_i\}\). This is the focus of this paper and we will give a method to reconstruct a piecewise linear numerical solution to calculate trace values appeared in the numerical flux.

So far we obtain a system of ordinary differential equations

The system of ODEs (5) are then discretized by a higher-order TVD Runge–Kutta methods [20]. For example, the first-order TVD Runge–Kutta scheme is simply the forward Euler scheme

and the second-order TVD Runge–Kutta scheme is given therein as

To match the second-order spatial discretization, we use the second-order TVD Runge–Kutta scheme (7). The time step length \(\Delta t_n\) can be determined from CFL condition

where \(h_i\) is the size of the cell \(T_i\) and \(c_i\) is the maximal signal speed over \(T_i\).

3 Integrated Linear Reconstruction

We present the procedures of our linear reconstruction in this section. The linear reconstruction requires a suitable gradient on each cell. In our method, the gradient is the solution of an LP problem. The LP problem is solved by an efficient algorithm. For simplicity, we will restrict ourselves to two-dimensional cases. The extension to three-dimensional is straightforward.

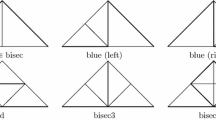

To start with, let us recall the typical procedure of the linear reconstructions in previous studies. Most of linear reconstructions consist of two steps: first construct a predicted gradient on each cell, and then correct the gradient to enforce some form of monotonicity condition. For clarity, we temporarily rename the relevant cell indices of a given cell within this section: the index 0 here stands for the current cell, whereas indices \(1, 2, \ldots , m\) stand for its neighbouring cells. The neighbourhood may consist of either cells sharing the edges of the given cell, or cells sharing at least one vertex of the given cell. We refer to the former as edge neighbours (EN) (Fig. 1), whereas the latter as vertex neighbours (VN) (Fig. 2). Note that both definitions exclude the cell 0 itself.

Let \(u_i\) and \({{\varvec{x}}}_i=(x_i,y_i)\) be the cell-averaged value and mass center of \(T_i\) respectively \((i=0,1,\ldots ,m)\). It is reasonable to assume that the mass centers \({{\varvec{x}}}_0, {\varvec{x}}_1,\ldots ,{{\varvec{x}}}_m\) are non-colinear. The reconstructed linear function on the cell \(T_0\) can be formulated as

where \({{\varvec{L}}}=[L_x, L_y]^\top \) is a limited form of the predicted gradient \({{\varvec{g}}}=[g_x,g_y]^\top \). The predicted gradient is taken as the Green–Gauss or least-squares approximations etc. [4, 8, 9]. For example, the least-square reconstruction gives a gradient prediction by fitting the cell-averaged values of the cell and its neighbourhood

The solution of (9) exists in the least-squares sense, i.e.

where

Next a monotonicity condition is enforced to prevent spurious oscillations. We present scalar limiters with two versions of monotonicity conditions [19] for comparison with our method. Following the construction of MUSCL schemes, the gradient is limited such that the reconstructed value evaluated at the mass center of any neighbouring cell is bounded by \(u_0\) and the cell-averaged value of that cell, i.e.

The constraints (11) may be viewed as a two-dimensional generalization of the minmod limiter for one-dimensional case. Later in Sect. 3.1 we will give a further remark on this point.

Instead of limiting using two cell-averaged values, a less diffusive approach is to limit the predicted gradient using the maximum and minimum over all neighbouring cells and the cell itself, i.e.

Following the terminology of [19], we refer to the monotonicity condition (11) as the standard formulation, whereas (12) as the relaxed formulation. As will be shown in Sect. 4, either (11) or (12) leads to a certain discrete maximum principle and subsequently \({\mathbb {L}}^\infty \)-stability with an additional geometric hypothesis on the grid.

The predicted gradient is then multiplied by a scalar factor \(\phi \in [0,1]\) as large as possible without violating the monotonicity condition

Explicit expressions are available for the scalar limiter by substituting (8) (13) into (11) or (12). The standard form of monotonicity condition (11) leads to the following upper bound of \(\phi \)

for \(i=1,2,\ldots ,m\), while the relaxed form (12) leads to the following upper bound of \(\phi \)

for \(i=1,2,\ldots ,m\), where

Finally the scalar factor \(\phi \) is selected as the minimum of all \(\Phi _i\)’s

Instead of a single factor in the scalar limiter, the vector limiter is implemented by multiplying different factors to each component of the predicted gradient. Since the direction of the predicted gradient is modified, one may be persuaded that the prediction of the gradient is actually unnecessary. As a further exploration of our method, the gradient is obtained without prediction at all. Since our reconstruction approach is not split into the prediction and limiting steps any more, we will refer it as the integrated linear reconstruction (ILR) from now on.

3.1 Reconstruction as an LP Problem

For better numerical accuracy and less numerical diffusion, the reconstructed values should fit the cell-averaged values as best as possible without violating any monotonicity conditions. In the following construction we will adopt the standard form of the monotonicity condition (11). Denote by \({\hat{u}}_i=u_0+L_x(x_i-x_0)+L_y(y_i-y_0)\) the reconstructed value at the mass center of neighbouring cell \(T_i~(i=1,2,\ldots ,m)\), then the inequality (11) becomes

We use the \(l^1\)-norm to measure the difference between the reconstructed and cell-averaged values when (16) holds. Define the total gaps \(\delta \) by

where \({\hat{v}}_i={\hat{u}}_i-u_0=(x_i-x_0)L_x+(y_i-y_0)L_y~(i=1,2,\ldots ,m)\).

Our goal is to minimize the total gaps \(\delta \) subjected to the monotonicity conditions (16) (see Fig. 3). This seems a non-linear objective function at first glance. However, one observes that, the difference \(v_i-{\hat{v}}_i\) has the same sign with \(v_i\), and consequently

As a result, \(\delta \) is actually an affine function of the limited gradient \((L_x,L_y)\).

So far we have formulated the linear reconstruction in the following LP problem

In particular the solution of (18) exactly recovers the linear data, since the exact gradient satisfies all the constraints and achieves minimal total gaps \(\delta = 0\).

Remark 1

In one-dimensional case, our approach actually degenerates to the minmod slope. Indeed, consider two neighbours assigned with labels 1 and 2 such that \(x_1<x_0<x_2\). Then the LP problem (18) reduces to

It is easy to derive the optimal solution of (19)

where the minmod function is defined by

A subsequent question is about the existence of the solution for LP problem (18). Since each double-sided constraint of (16) corresponds to a strip in the \((L_x,L_y)\)-plane, the feasible region of (18) is the intersection of m strips (see Fig. 4). Consider the region enclosed by any two of the m constraints (16), saying \(i=1\) and 2 for instance

This region is bounded as long as \({{\varvec{x}}}_0\), \({{\varvec{x}}}_1\) and \({{\varvec{x}}}_2\) are non-colinear. Since \({{\varvec{x}}}_0,{{\varvec{x}}}_1,\ldots ,{{\varvec{x}}}_m\) are non-colinear, the feasible region of (18) is always bounded. Another observation is that \((L_x,L_y)=(0,0)\) must be a feasible point. From the theory of LP, there exists a bounded optimal solution for (18).

3.2 LP Solver

The efficiency of LP solver is crucial since we need to solve an LP problem on each cell. Following [19], the all-inequality simplex method is taken as our LP solver. This variant of simplex method applies to LP problems consisting exclusively of inequality constraints. The idea is the same as the standard simplex algorithm: if there is an optimal solution for the LP, then there exists at least one vertex in the feasible region that achieves optimality. We search this vertex in an iterative manner. Compared to traditional simplex methods, this method is especially useful if the number of inequality constraints is much greater than the number of variables, which is the very case here. The algorithm of the all-inequality simplex method for the following LP problem

where \({{\varvec{c}}},{{\varvec{x}}}\in {\mathbb {R}}^{d},{{\varvec{A}}}\in {\mathbb {R}}^{N\times d}\) and \({{\varvec{b}}}\in {\mathbb {R}}^N\), can be found in “Appendix”.

In our case \(d=2\) and \(N=2m\). The corresponding variables become

Besides, it is known a priori that \({{\varvec{L}}}=[0,0]^\top \) is a valid starting point. We initialize the active matrix \({{\varvec{M}}}\) with the rows of \({{\varvec{A}}}\) corresponding to the following two constraints

The cost of the reconstruction is largely affected by the number of iterations needed to solve the LP. In all the two-dimensional cases we have run so far, the maximum number of iterations was 5. Moreover, one iteration of this simplex method is also very cheap, which requires only the solution of two \(2 \times 2\) linear systems to determine the Lagrange multiplier and search direction.

4 Discrete Maximum Principle

In this section we first give an a posteriori estimation of the gradient reduction, and then prove the local maximum principle for our ILR.

In [19], May et al. was aiming at retaining as much of the unlimited gradient as possible without violating the monotonicity conditions. The objective function then equals to the gradient reduction in \(l^1\)-norm: \(\Vert {{\varvec{g}}}- {\varvec{L}}\Vert _1=|g_x-L_x|+|g_y-L_y|\). Although optimal solutions of these two problems do not coincide, we claim that our optimal solution actually achieves quasi-optimality in the sense of minimal gradient reduction. This statement is made more clear in the following Theorem 1.

Theorem 1

(A posteriori estimation of gradient reduction). Let \({{\varvec{g}}}\) be the least-square predicted gradient given by (10) and \({{\varvec{L}}}\) be any feasible gradient satisfying (16), then the following \(l^1\)-estimation holds

where

is a geometry-dependent constant and \(\delta \) is the total gaps (17).

Proof

Denote

then we have the following two identities

Subtracting these two identities yields

By assumption \({{\varvec{X}}}{{\mathbf {X}}}^\top \) is invertible. Therefore

where C, given in (22), is the \(l^1\)-norm of the matrix \(({{\varvec{X}}}{{\varvec{X}}}^\top )^{-1}{{\varvec{X}}}\). \(\square \)

From Theorem 1 we conclude that by minimizing the total gaps \(\delta \), the quasi-optimality of the reduction of the resulting gradient \({{\varvec{L}}}\) with respect to \({{\varvec{g}}}\) is enforced.

We establish below the discrete maximum principle of linear reconstruction with either EN or VN for scalar conservation laws. The whole proof is sketched out in the following three stages: first we show that the solution is bounded from above and below by interpolants at the previous time level (Lemma 4); next we propose technical conditions of values at quadrature points that lead to a maximum principle (Theorem 5); finally we demonstrate that the monotonicity condition along with some geometric hypothesis results in the proposed technical conditions (Theorems 6, 7). The following notations of neighbouring indices are introduced to simplify the expressions (see Figs. 1, 2)

We also define the extremal trace values and extremal interior values at the quadrature points through

In the following argument we consider the fully discrete finite volume scheme

Definition 2

We say that the finite volume scheme (23) fulfils the local maximum principle, if the solution at a given cell is bounded by the minimum and maximum values within this cell and its VN at the previous time step, i.e.

where the local extrema

And we say that the finite volume scheme (23) fulfils the global maximum principle, if the global solution maximum is non-increasing and the global solution minimum is non-decreasing between two successive time levels, i.e.

where the global extrema

Of course the local maximum principle implies the global maximum principle, but the converse is not true. Before the proof of maximum principle, let us investigate some basic properties of linear reconstruction.

Lemma 3

With the notations defined above and a monotone Lipschitz continuous numerical flux function \({\mathcal {F}}\), the fully discrete finite volume scheme (23) satisfies the following inequality

where \(L_i\) denotes the perimeter of \(T_i\) and M is the Lipschitz constant of \({\mathcal {F}}\) with respect to the second argument, i.e.

Proof

Utilizing the consistency and monotonicity of numerical flux function, we have

In a similar manner we can prove the left hand side of the inequality (26). \(\square \)

Next we attempt to eliminate the extremal interior value terms \({\mathcal {U}}_i^{\min }\) and \({\mathcal {U}}_i^{\max }\) appeared in (26). In fact, utilizing the interpretation of Barth’s geometric shape parameter [21] one can prove that for linear reconstruction there exists a constant \(\Gamma \in (1,+\infty )\) such that

For triangular control volumes, the geometric shape parameter \(\Gamma =3\). As a result, the updated solution is bounded by interpolants of extremal trace values and the current solution. Precisely we have the following result.

Lemma 4

The fully discrete finite volume scheme (23) constructed with a monotone Lipschitz continuous numerical flux function and linear reconstruction exhibits the local interpolated interval bound

where the time-step-proportional interpolation parameter \(\sigma _i\) is defined by

Proof

By definition \(U_i^{\max }\ge {\mathcal {U}}_i^{\max }\ge {\mathcal {U}}_i^{\min }\ge U_i^{\min }\). Applying the inequalities (27) we conclude that

Consequently,

Inserting (28) into (26) and rearranging terms give rise to the desired results. \(\square \)

Given the two-sided bound, a discrete maximum principle can be established under a CFL-like time step restriction provided that the extremal trace values \(U_i^{\max }\) and \(U_i^{\min }\) can be bounded from both above and below by the neighbouring cell averages. Actually we have the following Theorem 5.

Theorem 5

The fully discrete finite volume scheme (23) constructed with a monotone Lipschitz continuous numerical flux function and linear reconstruction fulfils the global maximum principle (25) if on each cell \(T_i\) the following condition holds

Furthermore, the scheme fulfils the local maximum principle (24) if

In particular, the local maximum principle is satisfied if

Proof

Assuming the time step restriction

we have \(0\le \sigma _i\le 1\) and consequently

when (29) holds. The global maximum principle then follows.

Now we rewrite the condition (30) as

Then the extremal trace values can be bounded from above and below by the local extrema

and as a result,

which leads to the local maximum principle (24). \(\square \)

Let us recall the monotonicity conditions in Sect. 3 in a formal notation: the standard form

and the relaxed form

where either \(j\in \overline{{EN}}(i)\) or \(j\in \overline{{VN}}(i)\).

It is worth mentioning that these conditions all restrict values at the adjacent mass centers rather than the quadrature points. However, with an additional geometric hypothesis the quadrature points will be contained in the convex hull spanned by the adjacent mass centers, and the restriction on the quadrature points can thereby be obtained. Our results below rely on the following Hypothesis \({\mathcal {H}}_1\) or \({\mathcal {H}}_2\)

-

Hypothesis \({\mathcal {H}}_1\): Any vertex of the grid falls in the convex hull spanned by mass centers of cells sharing this vertex (Fig. 5);

-

Hypothesis \({\mathcal {H}}_2\): For each cell of the grid, the edge midpoints fall in the convex hull spanned by mass centers of EN (Fig. 6).

Hypothesis \({\mathcal {H}}_1\) is required in the proof of maximum principle for VN, while Hypothesis \({\mathcal {H}}_2\) is required in the proof of maximum principle for EN. Here we present two general results for linear reconstruction in Theorems 6 and 7, from which it can be shown that our ILR satisfies the local maximum principle.

Theorem 6

(Maximum principle for vertex neighbours). Consider the fully discrete finite volume scheme (23) with a monotone Lipschitz continuous numerical flux function and linear reconstruction from VN on a grid satisfying Hypothesis \({\mathcal {H}}_1\).

-

1.

If the linear reconstruction satisfies the standard form (11) of monotonicity condition, then the scheme satisfies a local maximum principle (24) under a proper CFL restriction.

-

2.

If the linear reconstruction satisfies the relaxed form (12) of monotonicity condition, then only a global maximum principle (25) can be guaranteed under a proper CFL restriction.

Proof

(1) Consider two adjacent cells \(T_i\) and \(T_j\) with a common edge \(e_{ij}={\overline{AB}}\). From Hypothesis \({\mathcal {H}}_1\) we know that the vertex A falls in the convex hull spanned by mass centers of cells from N(A), the set of indices of cells sharing the vertex A. As a result,

Utilizing the standard form of monotonicity condition

we conclude that

The inclusion relations \(N(A)\subset \overline{{VN}}(i)\) and \(N(A)\subset \overline{{VN}}(j)\) lead to

therefore

We can obtain the same bounds for vertex B. Therefore the interpolation \(u_{ij}^-\) satisfies (30) and the local maximum principle (24) follows.

(2) If we are using the relaxed form of monotonicity condition, then (34) should be replaced with

Therefore,

Similarly,

As a result,

Note that generally the inequality \(u_j^{\min }\le u_{ij}^-\le u_j^{\max }\) does not hold. Nevertheless we can still obtain a global maximum principle from (29). \(\square \)

Theorem 7

(Maximum principle for edge neighbours). Consider the fully discrete finite volume scheme (23) with a monotone Lipschitz continuous numerical flux function and linear reconstruction from EN on a grid satisfying Hypothesis \({\mathcal {H}}_2\). If the linear reconstruction satisfies either standard form (11) or relaxed form (12) of the monotonicity condition, then the scheme exhibits a local maximum principle (24) under a proper CFL restriction.

Proof

Again we consider two adjacent cells \(T_i\) and \(T_j\) with a common edge. From Hypothesis \({\mathcal {H}}_2\) we know that

Moreover, for any \(k\in EN(i)\), both standard and relaxed forms lead to

Note that \(\overline{{EN}}(i)\subset \overline{{VN}}(i)\) and \(\overline{{EN}}(i)\subset \overline{{VN}}(j)\). As a result,

The local maximum principle then follows from (30). \(\square \)

The geometry of the grid has to be examined for Hypothesis \({\mathcal {H}}_2\). Here we give a sufficient condition for Hypothesis \({\mathcal {H}}_2\) on a triangular grid, which is the case in our numerical experiments.

Lemma 8

For any triangle \(\Delta A_1A_2A_3\) in the grid. Let \(\Delta B_1A_2A_3,\Delta A_1B_2A_3\) and \(\Delta A_1A_2B_3\) be the edge neighbours of \(\Delta A_1A_2A_3\) (see Fig. 6) and assume the following constraint on the triangulation

where \(\lambda _j\) denotes the barycentric coordinate with respect to \(A_j(j=1,2,3)\). Then the grid satisfies Hypothesis \({\mathcal {H}}_2\).

Proof

Let \(C_1,C_2\) and \(C_3\) be the mass centers of three EN accordingly. Denote by \({\mathcal {A}}\) and \({\mathcal {C}}\) the barycentric coordinate systems of \(\Delta A_1A_2A_3\) and of \(\Delta C_1C_2C_3\). Then the matrix formed by \({\mathcal {A}}\)-coordinates of edge midpoints is

Let \({{\varvec{B}}}\) be the matrix formed by \({\mathcal {A}}\)-coordinates of \(B_1,B_2\) and \(B_3\). Then the matrix formed by \(\mathcal A\)-coordinates of \(C_1,C_2\) and \(C_3\) is \(({{\varvec{B}}}+2{{\varvec{M}}})/3\). Furthermore, let \({{\varvec{P}}}\) be the matrix formed by \(\mathcal C\)-coordinates of edge midpoints, then we have

Now Hypothesis \({\mathcal {H}}_2\) is equivalent to the entrywise non-negativity of matrix \({{\varvec{P}}}\). By assumption, the entry of matrix \({{\varvec{K}}}={{\varvec{B}}}+2{{\varvec{M}}}\) satisfies

By carrying out an algebraic manipulation we can show that all entries of \({{\varvec{P}}}=3{{\varvec{K}}}^{-1}{{\varvec{M}}}\) are non-negative. \(\square \)

For grids with alternative cell geometry, similar conditions may be required.

5 Numerical Results

We present several numerical examples to demonstrate the numerical performance of our ILR on unstructured grids. Here the comparison is made with standard scalar limiter (SSL) [cf. (14)] and relaxed scalar limiter (RSL) [cf. (15)].

5.1 Linear Advection Equations

We first consider the linear advection equation

with constant wave velocity \({{\varvec{a}}}=(1,2)\). The boundary conditions are periodic. The mesh is generated by a global refinement on an irregular Delaunay triangulation, with the ratio of maximum to minimum size being 1.47. The Lax-Friedrichs flux (4) is adopted as the numerical flux and CFL number is 0.4.

Double sine wave: The initial profile is given by the double sine wave function [8, 19]

on the domain \([0,1]\times [0,1]\). Errors in \({\mathbb {L}}^1\) and \({\mathbb {L}}^\infty \) norms for the solution at \(t=1\) as well as the CPU time are shown in Table 1. The results of ILR and RSL almost exhibit second-order accuracy, in contrast with the first-order accuracy of SSL. Although ILR seems slightly more time-consuming than SSL or RSL, it is still very efficient. In fact, the average number of iterations is approximately two. Moreover, ILR is free from the computation of predicted gradient. As a result, there is almost no extra cost in solving LP problems. Figure 7 shows the contour lines for the double sine wave with 3968 cells. Compared to SSL, both ILR and RSL are less sensitive to the grid orientation.

Square wave: Instead of a smooth function, we use the following square wave for initial profile [6]

Figure 8 shows the advection of square wave at \(t=1\). In Table 2 we examine the global maximum and minimum values on two levels of grids. One may see that ILR is, again similar as RSL, less dissipative than SSL, and resolves the discontinuity without spurious oscillations.

5.2 Solid Body Rotation

Another test to assess the capacity of the scheme to preserve the shape is the solid body rotation [22]. Consider the advection (38) with velocity \( {\varvec{a}}=(-(y-0.5),x-0.5)\) in the domain \([0,1]\times [0,1]\). The initial profile consists of three geometry shapes. Each shape is located within the circle of radius \(r_0=0.15\) whose center is \((x_0,y_0)\). Moreover, let \(r(x,y)=\dfrac{1}{r_0}\sqrt{(x-x_0)^2+(y-y_0)^2}\) be the normalized distance. The slotted cylinder is centered at \((x_0,y_0)=(0.5,0.75)\) and

The sharp cone is centered at \((x_0,y_0)=(0.5,0.25)\) and

The smooth hump is centered at \((x_0,y_0)=(0.25,0.5)\) and

For the rest of the domain, the initial value is zero. The homogeneous Dirichlet boundary conditions are prescribed. The snapshots presented in Fig. 9 show the shape of the solution on a Delaunay mesh with 15,872 cells at \(t = 2\pi \), which corresponds to one full rotation. Due to excessive numerical dissipation of SSL, the slotted cylinder is significantly smeared and distorted. On the other hand, the result of ILR almost preserves the shape of slotted cylinder without much distortion.

5.3 Euler Equations

For system of conservation laws, we apply the reconstruction directly to the conservative variables, mainly for the purposes of speed and simplicity, though in many aspects the characteristic limiting seems to be the most natural choice [8]. For Euler equations, the HLL flux is adopted as the numerical flux.

5.3.1 Shock Tube Problems

These tests are to examine the capability to resolve various linear and non-linear waves on unstructured grids. The computational domain is \([0,1]\times [0,0.1]\) with an irregular triangulation of 101 nodes in the x-direction and 11 nodes in the y-direction on the boundary. Initially the discontinuity is located at \(x=0.5\) with the prescribed left and right states as follows

- Sod’s problem :

-

$$\begin{aligned} (\rho _{\mathrm {L}},u_{\mathrm {L}},v_{\mathrm {L}},p_{\mathrm {L}})&=(1,0,0,1),\\ (\rho _{\mathrm {R}},u_{\mathrm {R}},v_{\mathrm {R}},p_{\mathrm {R}})&=(0.125,0,0,0.1). \end{aligned}$$

- Lax’s problem :

-

$$\begin{aligned} (\rho _{\mathrm {L}},u_{\mathrm {L}},v_{\mathrm {L}},p_{\mathrm {L}})&=(0.445,0.698,0,3.528),\\ (\rho _{\mathrm {R}},u_{\mathrm {R}},v_{\mathrm {R}},p_{\mathrm {R}})&=(0.5,0,0,0.571). \end{aligned}$$

- 123 problem :

-

$$\begin{aligned} (\rho _{\mathrm {L}},u_{\mathrm {L}},v_{\mathrm {L}},p_{\mathrm {L}})&=(1,-2,0,0.4),\\ (\rho _{\mathrm {R}},u_{\mathrm {R}},v_{\mathrm {R}},p_{\mathrm {R}})&=(1,2,0,0.4). \end{aligned}$$

Figures 10, 11 and 12 show the density and internal energy distributions for these test cases, which confirm the better performance of ILR. For 123 problem, the result of ILR captures expansion waves more accurately than SSL, and reduces the non-physical peak that appears in the middle of the domain considerably.

5.3.2 Isentropic Vortex Evolution

This test is to assess the performance of the proposed schemes for evolution of a two-dimensional inviscid isentropic vortex in a free stream [9]. The exact solution is simply a passive advection of the initial vortex with mean velocity. Assuming the free stream conditions \(\rho _\infty =p_\infty =T_\infty =1\) and \((u_\infty , v_\infty ) = (1,1)\), the initial condition is given by the mean flow field perturbed by

where the vortex strength \(\beta =5.0\) and adiabatic index \(\gamma =1.4\). The shifted coordinates \(({{\overline{x}}},{{\overline{y}}})=(x-x_0,y-y_0)\), where \((x_0,y_0)=(5,5)\) is the coordinates of the vortex center initially and \(r^2=\overline{x}^2+{{\overline{y}}}^2\). The entire flow field is required to be isentropic so, for an ideal gas, \(p/\rho ^\gamma =1\), and consequently, the resulting state is given by

The computational domain is \([0,10]\times [0,10]\) where the periodic boundary condition is applied. The vortex moves in the right-up direction with the free stream, and returns back to the initial location at every time interval \(\Delta T=10\).

Figure 13 shows the density contours on an irregular Delaunay mesh with 15,872 cells. Due to the numerical diffusion of the scalar limiter, the vortex shape is significantly distorted. However, ILR almost keeps the initial vortex shape. Figure 14 gives the comparison of density distribution across the vortex center. Again ILR exhibits less dissipation than SSL.

5.3.3 Double Mach Reflection

The double Mach reflection problem comes from [23]. Results at \(t = 0.2\) are plotted in Figs. 15 and 16 on two levels of Delaunay meshes. All pictures are the density contours with 30 equally spaced contour lines from 1.5 to 23.5. We can see that ILR captures the complicated flow structure under the Mach stem much better than SSL does, with a reasonable level of numerical diffusion.

In conclusion, ILR achieves better numerical resolution than SSL. While for RSL, the numerical dissipation may be on the similar level as ILR. Nevertheless, ILR satisfies the local maximum principle, while RSL only leads to a global maximum principle, which is much less sufficient to rule out spurious oscillations. Since we have not found any example in the literature where RSL produces numerical oscillations, the advantage of our method is mainly in the theoretical fold during the current stage.

6 Conclusion

We present an ILR under the framework of MUSCL-type schemes on unstructured grids. The reconstruction is formulated as an LP problem in every cell and contains no parameters at all. And the all-inequality simplex method used in this paper appears to be a very efficient algorithm to solve these LP problems. Moreover, the resulting schemes satisfy a local discrete maximum principle. Extension to higher-order reconstruction is possible, by increasing the number of variables and constraints in the LP problems. Additional constraints may be required to guarantee the local maximum principle. Fortunately, our framework is general enough to implement this with little extra cost.

References

van Leer, B.: Towards the ultimate conservative difference scheme. V. A second-order sequel to Godunov’s method. J. Comput. Phys. 32(1), 101–136 (1979)

Goodman, J.B., LeVeque, R.J.: On the accuracy of stable schemes for 2d scalar conversation laws. Math. Comput. 45(171), 15–21 (1985)

Spekreijse, S.: Multigrid solution of monotone second-order discretizations of hyperbolic conservation laws. Math. Comput. 49(179), 135–155 (1987)

Barth, T.J., Jespersen, D.C.: The design and application of upwind schemes on unstructured meshes. In: 27th AIAA Aerospace Sciences Meeting, vol. 366, Jan 1989

Durlofsky, L.J., Engquist, B., Osher, S.: Triangle based adaptive stencils for the solution of hyperbolic conservation laws. J. Comput. Phys. 98(1), 64–73 (1992)

Batten, P., Lambert, C., Causon, D.M.: Positively conservative high-resolution convection schemes for unstructured elements. Int. J. Numer. Methods Eng. 39(11), 1821–1838 (1996)

Liu, X.D.: A maximum principle satisfying modification of triangle based adapative stencils for the solution of scalar hyperbolic conservation laws. SIAM J. Numer. Anal. 30(3), 701–716 (1993)

Hubbard, M.E.: Multidimensional slope limiters for MUSCL-type finite volume schemes on unstructured grids. J. Comput. Phys. 155(1), 54–74 (1999)

Park, J.S., Yoon, S.H., Kim, C.: Multi-dimensional limiting process for hyperbolic conservation laws on unstructured grids. J. Comput. Phys. 229(3), 788–812 (2010)

Barth, T.J., Frederickson, P.O.: Higher order solution of the Euler equations on unstructured grids using quadratic reconstruction. In: 28th AIAA Aerospace Sciences Meeting, vol. 13, Jan 1990

Abgrall, R.: On essentially non-oscillatory schemes on unstructured meshes: analysis and implementation. J. Comput. Phys. 114(1), 45–58 (1994)

Friedrich, O.: Weighted essentially non-oscillatory schemes for the interpolation of mean values on unstructured grids. J. Comput. Phys. 144(1), 194–212 (1998)

Hu, C., Shu, C.W.: Weighted essentially non-oscillatory schemes on triangular meshes. J. Comput. Phys. 150(1), 97–127 (1999)

Liu, Y., Zhang, Y.T.: A robust reconstruction for unstructured WENO schemes. J. Sci. Comput. 54(2–3), 603–621 (2013)

Jawahar, P., Kamath, H.: A high-resolution procedure for Euler and Navier–Stokes computations on unstructured grids. J. Comput. Phys. 164(1), 165–203 (2000)

Li, W., Ren, Y.X., Lei, G., Luo, H.: The multi-dimensional limiters for solving hyperbolic conservation laws on unstructured grids. J. Comput. Phys. 230(21), 7775–7795 (2011)

Berger, M., Aftosmis, M.J., Murman, S.M.: Analysis of slope limiters on irregular grids. In: 43rd AIAA Aerospace Sciences Meeting, vol. 490, May 2005

Buffard, T., Clain, S.: Monoslope and multislope MUSCL methods for unstructured meshes. J. Comput. Phys. 229(10), 3745–3776 (2010)

May, S., Berger, M.: Two-dimensional slope limiters for finite volume schemes on non-coordinate-aligned meshes. SIAM J. Sci. Comput. 35(5), A2163–A2187 (2013)

Shu, C.W., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes. J. Comput. Phys. 77(2), 439–471 (1988)

Barth, T.J., Ohlberger, M.: Finite volume methods: foundation and analysis. In: Encyclopedia of Computational Mechanics. Citeseer, (2004)

LeVeque, R.J.: High-resolution conservative algorithms for advection in incompressible flow. SIAM J. Numer. Anal. 33(2), 627–665 (1996)

Woodward, P., Colella, P.: The numerical simulation of two-dimensional fluid flow with strong shocks. J. Comput. Phys. 54(1), 115–173 (1984)

Acknowledgments

The authors appreciate the financial supports provided by the National Natural Science Foundation of China (Grant Numbers 91330205 and 11325102).

Author information

Authors and Affiliations

Corresponding author

Appendix: All-Inequality Simplex Method

Appendix: All-Inequality Simplex Method

Consider a general LP problem in the all-equality form (20). The Lagrangian of (20) is

and the dual problem of (20) is

By the weak duality theorem, one knows that if \({{\varvec{x}}}\) is feasible for (20) and \(\varvec{\lambda }\) is feasible for (39), then

Moreover, \({{\varvec{x}}}\) is optimal for (20) provided that the equality above holds. Now we start from a vertex, and go from vertex to vertex until this equality holds. The iteration takes the form

where the step length \(\alpha \) and the searching direction \({{\varvec{p}}}\) need to be determined at each step.

Denote by \({{\varvec{a}}}_i^\top \) the i-th row of \({{\varvec{A}}}\). The active matrix \({{\varvec{M}}}\) consists of d rows of the matrix \({{\varvec{A}}}\), indicating that corresponding constraints at the current searching point \({{\varvec{x}}}\) are active. The active matrix \({{\varvec{M}}}\) needs to be non-singular.

Suppose that at a given step the active Lagrange multipliers \(\tilde{\varvec{\lambda }}\) satisfy

then \({{\varvec{c}}}^\top {{\varvec{x}}}={{\varvec{b}}}^\top \varvec{\lambda }\) with all the inactive Lagrange multipliers vanishing, and we end up with an optimal point \({{\varvec{x}}}\). Otherwise, we remove the constraint j such that the component \(\lambda _j<0\) and determine the searching direction \({\varvec{p}}\) through

where \({{\varvec{e}}}_j\) is the j-th coordinate vector.

Assuming that the point in the next step \({{\varvec{x}}}+\alpha _i{{\varvec{p}}}\) satisfies the constraint i, then we have

which yields the step length with regard to i to be

Since \(b_i-{{\varvec{a}}}_i^\top {{\varvec{x}}}\ge 0\), it suffices to consider those constraints satisfying \({{\varvec{a}}}_i^\top {{\varvec{p}}}>0\). The actual step length \(\alpha \) is then taken as the minimum of all the estimated step lengths

Finally we choose an index k satisfying \(\alpha _k=\alpha \) and update the active matrix by replacing j-th row of \({{\varvec{M}}}\) with k-th row of \({{\varvec{A}}}\). So far we finish one loop. The whole algorithm is illustrated in Algorithm 1. Note that we use the negation of both the searching direction \({{\varvec{p}}}\) and step length \(\alpha \).

Rights and permissions

About this article

Cite this article

Chen, L., Li, R. An Integrated Linear Reconstruction for Finite Volume Scheme on Unstructured Grids. J Sci Comput 68, 1172–1197 (2016). https://doi.org/10.1007/s10915-016-0173-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-016-0173-1