Abstract

High-dimensionality is one of the major challenges in kinetic modeling and simulation of realistic physical systems. The most appropriate numerical scheme needs to balance accuracy and computational complexity, and it also needs to address issues such as multiple scales, lack of regularity, and long-term integration. In this chapter, we review state-of-the-art numerical techniques for high-dimensional kinetic equations, including low-rank tensor approximation, sparse grid collocation, and ANOVA decomposition.

1 Introduction

Kinetic equations are partial differential equations involving probability density functions (PDFs). They arise naturally in many different areas of mathematical physics. For example, they play an important role in modeling rarefied gas dynamics [12, 13], semiconductors [68], stochastic dynamical systems [18, 63, 74–76, 103, 114], structural dynamics [9, 60, 100], stochastic partial differential equations (PDEs) [19, 57, 66, 111, 112], turbulence [35, 71, 72, 90], and systems biology [30, 85, 123]. Perhaps the best-known kinetic equation is the Fokker-Planck equation [74, 96, 107], which describes the evolution of the probability density function of Langevin-type dynamical systems subject to Gaussian white noise. Another well-known example of a kinetic equation is the Boltzmann equation [115], which describes a thermodynamic system involving a large number of interacting particles [13]. Other, less widely known examples are the Dostupov-Pugachev equations [26, 60, 103, 114], the reduced-order Nakajima-Zwanzig-Mori equations [24, 112, 127], and the Malakhov-Saichev PDF equations [66, 111] (see Table 1). Computing the numerical solution to a kinetic equation is a challenging task that needs to address issues such as:

1. High-dimensionality: Kinetic equations describing realistic physical systems usually involve many phase variables. For example, the Fokker-Planck equation of classical statistical mechanics is an evolution equation for a joint probability density function in n phase variables, where n is the dimension of the underlying stochastic dynamical system, plus time.

2. Multiple scales: Kinetic equations can involve multiple scales in space and time, which are difficult to resolve with conventional numerical methods. For example, the Liouville equation is a hyperbolic conservation law whose solution is purely advected (with no diffusion) by the underlying system’s flow map. This can easily yield mixing, fractal attractors, and all sorts of complex dynamics.

3. Lack of regularity: The solution to a kinetic equation is, in general, a distribution [50]. For example, it could be a multivariate Dirac delta function, a function with shock-type discontinuities [19], or even a fractal object (see Figure 1 in [112]). From a numerical viewpoint, resolving such distributions is not trivial, although in some cases it can be done by taking integral transformations or projections [120].

4. Conservation properties: There are several properties of the solution to a kinetic equation that must be conserved in time. The most obvious one is mass, i.e., the solution to a kinetic equation always integrates to one. Another property that must be preserved is the positivity of the joint PDF, together with the fact that a partial marginalization still yields a PDF.

5. Long-term integration: The flow map defined by nonlinear dynamical systems can yield large deformations, stretching, and folding of the phase space. As a consequence, numerical schemes for kinetic equations associated with such systems will generally lose accuracy in time. This is known as the long-term integration problem, and it can be mitigated by using adaptive methods.

Table 1 Examples of kinetic equations in different areas of mathematical physics

Over the years, many different techniques have been proposed to address these issues, with the most efficient ones being problem-dependent. For example, a widely used method in statistical fluid mechanics is the particle/mesh method [77, 89–91], which is based directly on stochastic Lagrangian models. Other methods are based on stochastic fields [109] or direct quadrature of moments [33]. For the Boltzmann equation, the literature is very rich. Both probabilistic approaches, such as direct simulation Monte Carlo [8, 97], and deterministic methods, e.g., discontinuous Galerkin and spectral methods [15, 16, 31], have been proposed to perform simulations. However, classical techniques such as finite-volume, finite-difference, or spectral methods are often prohibitive in terms of memory requirements and computational cost. Probabilistic methods such as direct Monte Carlo are extensively used instead because of their very low computational cost compared to the classical techniques. However, Monte Carlo usually yields fluctuating solutions of low accuracy, which need to be post-processed appropriately, for example through variance reduction techniques. We refer to Di Marco and Pareschi [67] for an excellent recent review.

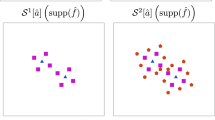

In this chapter, we review the state-of-the-art in numerical techniques to address the high-dimensionality challenge in both the phase space and the space of parameters of kinetic systems. In particular, we discuss the sparse grid method [84, 102], low-rank tensor approximation [6, 17, 29, 40, 59, 79, 80], and analysis of variance (ANOVA) decomposition [11, 36, 61, 125], including Bogoliubov-Born-Green-Kirkwood-Yvon (BBGKY) [73] closures. These methods have been established in recent years as new tools to address high-dimensional problems in scientific computing, and here we discuss them in the context of kinetic equations, particularly in the deterministic Eulerian approach. As we will see, most of these methods allow us to reduce the problem of computing high-dimensional PDF solutions to sequences of problems involving low-dimensional PDFs. The range of applicability of the numerical methods is sketched in Fig. 1 as a function of the number of phase variables n and the number of parameters m appearing in the kinetic equation.

Fig. 1 Range of applicability of different numerical methods for solving kinetic equations as a function of the number of phase variables and the number of parameters appearing in the equation. The first name refers to the numerical method we employ to discretize the phase variables, the second name to the method we employ to discretize the space of parameters. For example, DG-PCM refers to an algorithm in which we discretize the phase variables with discontinuous Galerkin methods (DG), and random parameters with tensor product probabilistic collocation (PCM). Other methods listed are: canonical tensor decomposition (CTD), tensor train (TT), high-dimensional model representation (ANOVA), sparse grids (SG), and quasi Monte Carlo (QMC). Reproduced with permission from [20]

2 Numerical Methods

This chapter discusses three classes of algorithms to compute the numerical solution of high-dimensional kinetic equations. The first class is based on sparse grids, and we discuss its construction in both the phase space and the space of parameters. The second class is based on low-rank tensor approximation and alternating direction methods, such as alternating least squares (ALS). The third class is based on ANOVA decomposition and BBGKY closures.

2.1 Sparse Grids

The sparse grid technique [10, 37] has been developed as a major tool to break the curse of dimensionality of grid-based approaches. The key idea relies on a tensor product hierarchical basis representation, which can reduce the degrees of freedom without losing much accuracy. Early work on sparse grid techniques can be traced back to Smolyak [102], in the context of high-dimensional numerical integration. The scheme is based on a proper balancing between the computational cost and the corresponding accuracy by seeking a proper truncation of the tensor product hierarchical bases, which can be formally derived by solving an optimization problem of cost/benefit ratios [41]. Sparse grid techniques have been incorporated in various numerical methods for high-dimensional PDEs, e.g., in finite element methods [10, 99], finite difference methods [42], spectral methods [38, 101], and collocation methods for stochastic differential equations [64, 78, 117]. More recently, sparse grids have been proposed within the discontinuous Galerkin (DG) framework to simulate elliptic and hyperbolic systems using wavelet bases [43, 116].

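The sparse grid formulation is based on a hierarchical set of basis functions in one dimension. For instance, we can consider basis functions in a space \(V_k\) of piecewise polynomials of degree at most q on the k-th level grid that consists of \(2^k\) uniform intervals, i.e.,

\[ V_k = \left\{ v : v|_{I_k^j} \in P^q(I_k^j), \quad I_k^j = [2^{-k} j,\, 2^{-k}(j+1)], \quad j = 0, \ldots, 2^k - 1 \right\} \]

on Ω = [0, 1], where \(P^q(I)\) denotes the polynomials of degree at most q on the interval I. Clearly, we have

\[ V_0 \subset V_1 \subset V_2 \subset \cdots \]

These basis functions are suitable for the discontinuous Galerkin framework. Then, we define \(W_k\) as the orthogonal complement of \(V_{k-1}\) in \(V_k\) with respect to the \(L^2\) inner product on Ω, that is,

\[ V_{k-1} \oplus W_k = V_k, \qquad W_k \perp V_{k-1}, \]

with \(W_0 = V_0\). This yields the hierarchical representation

\[ V_k = \bigoplus_{0 \le j \le k} W_j . \]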

Next, we define the multidimensional increment space as \(\mathbf {W}_l = W_{l_1,z_1} \otimes W_{l_2,z_2} \otimes \cdots \otimes W_{l_N,z_N} \), with \(l = (l_1, \ldots , l_N)\) as the multivariate mesh level. Accordingly, the standard tensor product space \(\mathbf {V}_{\ell }\) can be represented as

\[ \mathbf {V}_{\ell } = \bigoplus_{|l|_{\infty } \le \ell } \mathbf {W}_l \]

and the sparse grid approximation space as

\[ \widetilde {\mathbf {V}}_{\ell } = \bigoplus_{|l| \le \ell } \mathbf {W}_l , \]

where \(|l|{ }_{\infty } = {\max }_{i} l_i \) and \(|l| = \sum _{i=1}^N l_i \). Then, \(\widetilde {\mathbf {V}}_{\ell } \subset \mathbf {V}_{\ell }\). The number of degrees of freedom of \(\widetilde {\mathbf {V}}_{\ell }\) is significantly smaller than that of \(\mathbf {V}_{\ell }\). This set of basis functions is also called a multi-wavelet basis, and it has been employed with the discontinuous Galerkin method to study the Vlasov and the Boltzmann equations [43, 101]. In particular, for sufficiently smooth solutions, it was shown in [101, 116] that a semi-discrete \(L^2\) stability condition and an error estimate of the order \(O\left ((\log h)^N h^{q+1/2}\right )\) can be obtained. We emphasize that although the computational cost of the sparse grid formulation is significantly smaller than that of the full tensor product, the curse of dimensionality still remains as the sparse grid level ℓ increases. For this reason, the methods in [43, 101, 116] can handle problems with fewer than ten dimensions in the phase space.
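The gap between the full and the sparse space can be made concrete by counting degrees of freedom. The following is a minimal sketch, assuming that the one-dimensional space \(V_k\) has dimension \((q+1)2^k\), so that each increment space \(W_k\) with k ≥ 1 has dimension \((q+1)2^{k-1}\). The function names `increment_dim` and `space_dim` are illustrative and do not come from the chapter.

```python
from itertools import product


def increment_dim(level: int, q: int) -> int:
    """Dimension of the 1D increment space W_level (assumed: dim V_k = (q+1) * 2**k)."""
    # W_0 = V_0; for level >= 1, W_level complements V_{level-1} inside V_level.
    return (q + 1) if level == 0 else (q + 1) * 2 ** (level - 1)


def space_dim(ell: int, N: int, q: int, sparse: bool) -> int:
    """Degrees of freedom of the level-ell space in N dimensions.

    sparse=False keeps all multi-levels with max(l) <= ell (full tensor product),
    sparse=True keeps only those with sum(l) <= ell (sparse grid).
    """
    total = 0
    for l in product(range(ell + 1), repeat=N):
        if sparse and sum(l) > ell:
            continue
        dim = 1
        for li in l:
            dim *= increment_dim(li, q)
        total += dim
    return total


if __name__ == "__main__":
    for N in (2, 3, 4):
        full = space_dim(4, N, 1, sparse=False)
        reduced = space_dim(4, N, 1, sparse=True)
        print(f"N={N}: full={full}, sparse={reduced}")
```

The brute-force enumeration is only meant for small N and ℓ; it loops over all \((\ell+1)^N\) multi-levels.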

The application of the sparse grid technique in the space of parameters differs from the one we just described only in the choice of the basis functions. In fact, in this case, we are usually interested in computing multi-dimensional integrals of an integrand \(f\) in the form

\[ \int f(\mathbf {b})\, d\mathbf {b} \approx \sum_{k} w_k\, f(\mathbf {b}^{k}), \tag{3} \]

where \(\mathbf {b} = (b_1, \ldots , b_m)\). The collocation points \(\mathbf {b}^{k}=(b_1^{k},\ldots ,b_m^{k})\) and quadrature weights \(w_k\) are obtained by suitable cubature rules with high polynomial exactness, e.g., Clenshaw-Curtis or Gauss abscissae [118]. More recent sparse collocation techniques can increase the number of dimensions that can be handled in the space of parameters up to hundreds [119, 122].
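As a point of comparison for the collocation idea, the sketch below evaluates a multi-dimensional integral over \([-1,1]^m\) as a weighted sum of integrand values at collocation points. It uses a full tensor-product Gauss-Legendre rule, not a sparse grid, so the number of points grows as \(q^m\); this is the baseline that sparse collocation is designed to improve. The function name `tensor_gauss_cubature` is an illustrative choice.

```python
import itertools

import numpy as np


def tensor_gauss_cubature(f, m: int, q: int) -> float:
    """Approximate the integral of f over [-1, 1]^m with q Gauss-Legendre points per dimension."""
    nodes, weights = np.polynomial.legendre.leggauss(q)
    total = 0.0
    # Loop over all q**m collocation points of the tensor-product grid.
    for idx in itertools.product(range(q), repeat=m):
        idx = list(idx)
        b_k = nodes[idx]             # collocation point b^k
        w_k = np.prod(weights[idx])  # product weight w_k
        total += w_k * f(b_k)
    return total


if __name__ == "__main__":
    # Integrand with a known integral: prod_i b_i**2 integrates to (2/3)**m.
    value = tensor_gauss_cubature(lambda b: np.prod(b ** 2), m=3, q=2)
    print(value, (2.0 / 3.0) ** 3)
```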

2.2 Low-Rank Tensor Approximation

Low-rank tensor approximation has been established as a new tool to overcome the curse of dimensionality in representing high-dimensional functions and the solution to high-dimensional PDEs. The method has been recently applied to stochastic PDEs [25, 29, 56, 69, 79], approximation of high-dimensional Green’s functions [44], the Boltzmann equation [48, 55], and Fokker-Planck equation [2, 22, 49, 54]. The key idea of low-rank tensor approximation [17, 40, 81] is to represent a multivariate function in terms of series involving products of low-dimensional functions. This allows us to reduce the problem of computing the solution from high-dimensional PDEs to a sequence of low-dimensional problems that can be solved recursively and in parallel, e.g., by alternating direction algorithms such as alternating least squares [20, 25] and its parallel extension [52]. These algorithms are usually based on low-rank matrix techniques [39], and they have a convergence rate that depends on the type of kinetic equation and on its solution.

The simplest tensor format is a rank-one tensor of an N-dimensional function, \(p(z_1, \ldots , z_N) = p_1(z_1)p_2(z_2)\cdots p_N(z_N)\), where \(p_j(z_j)\) are one-dimensional functions. Upon discretization we can write p in tensor notation as

\[ \mathbf {p} = \mathbf {p}_1 \otimes \mathbf {p}_2 \otimes \cdots \otimes \mathbf {p}_N, \tag{4} \]

where \(\mathbf {p}_j\) is a vector of length \(q_z\) corresponding to the discretization of \(p_j(z_j)\) with \(q_z\) degrees of freedom (Footnote 1). More generally, we have a summation of rank-one tensors

\[ p(z_1, \ldots , z_N) \approx \sum_{r=1}^{R} \alpha_r\, p_1^r(z_1)\, p_2^r(z_2) \cdots p_N^r(z_N) \tag{5} \]

and

\[ \mathbf {p} \approx \sum_{r=1}^{R} \alpha_r\, \mathbf {p}_1^r \otimes \mathbf {p}_2^r \otimes \cdots \otimes \mathbf {p}_N^r, \tag{6} \]

where R is the tensor rank or separation rank. This representation is also known as separated series expansion or canonical tensor decomposition. The main advantage of using a representation in the form (5)–(6) to solve a high-dimensional kinetic PDE is that the algorithms to compute \(\mathbf {p}_j^r\) and the normalization factors \(\alpha_r\) involve operations with one-dimensional functions. In principle, the computational cost of such algorithms scales linearly with respect to the dimension N of the phase space, thus potentially avoiding the curse of dimensionality. The representation can be generalized to any combination of low-dimensional separated functions. Canonical tensor decompositions have been employed to compute the solution to the Malakhov-Saichev kinetic equation [20], the Vlasov-Poisson equation [27], and functional differential equations [110].
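To illustrate the storage argument, the following sketch stores a discretized canonical decomposition as a weight vector and one factor matrix per direction, and evaluates a single entry without ever forming the full array. The data layout (one `(q_z, R)` matrix per direction) and the names `cp_full`, `cp_entry`, and `storage` are assumptions made for this example.

```python
import numpy as np


def cp_full(alpha, factors):
    """Assemble sum_r alpha[r] * p_1^r (x) ... (x) p_N^r as a dense array.

    factors[j] has shape (q_z, R); its column r holds the vector p_j^r.
    """
    full = 0.0
    for r in range(alpha.shape[0]):
        term = alpha[r] * factors[0][:, r]
        for F in factors[1:]:
            term = np.multiply.outer(term, F[:, r])
        full = full + term
    return full


def cp_entry(alpha, factors, index):
    """Evaluate one tensor entry directly from the factors (cost O(N R))."""
    prod = np.array(alpha, dtype=float)
    for F, i in zip(factors, index):
        prod = prod * F[i, :]
    return float(prod.sum())


def storage(N, q, R):
    """Number of stored values: dense array versus canonical factors."""
    return {"full": q ** N, "canonical": R * N * q}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha = rng.random(3)
    factors = [rng.random((5, 3)) for _ in range(4)]
    dense = cp_full(alpha, factors)
    print(dense[1, 2, 3, 4], cp_entry(alpha, factors, (1, 2, 3, 4)))
    print(storage(N=10, q=50, R=20))
```

The dense assembly is included only to check the factorized evaluation; in high dimensions one would work with the factors alone.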

More advanced tensor decomposition techniques include the Tucker decomposition, the tensor train decomposition (TT), and the hierarchical Tucker decomposition (HT). In particular, the tensor train decomposition is of the form

\[ p(z_1, \ldots , z_N) \approx \sum_{r_1=1}^{R_1} \cdots \sum_{r_{N-1}=1}^{R_{N-1}} Q_1^{r_0,r_1}(z_1)\, Q_2^{r_1,r_2}(z_2) \cdots Q_N^{r_{N-1},r_N}(z_N), \qquad r_0 = r_N = 1, \tag{7} \]

where the tensor rank becomes a tuple \((R_1, \ldots , R_{N-1})\) with \(R_0 = R_N = 1\). In each direction j, the indices \(r_{j-1}\) and \(r_j\), which run up to \(R_{j-1}\) and \(R_j\), take care of the coupling to the (j − 1)-th and the (j + 1)-th dimension, respectively. A discretization of (7) with \(q_z\) degrees of freedom in each dimension yields

\[ \mathbf {p} \approx \sum_{r_1=1}^{R_1} \cdots \sum_{r_{N-1}=1}^{R_{N-1}} \mathbf {Q}_1^{r_0,r_1} \otimes \mathbf {Q}_2^{r_1,r_2} \otimes \cdots \otimes \mathbf {Q}_N^{r_{N-1},r_N}, \tag{8} \]

where \(\mathbf {Q}_j^{r_{j-1},r_j} \) is a vector of length \(q_z\). At the price of the additional rank indices, the problem of constructing a tensor train decomposition is closed and it can be solved to any given error tolerance or fixed rank [86]. The algorithm is based on a sequence of SVDs applied to the matricizations of the tensor, i.e., the so-called high-order singular value decomposition (HOSVD) [39]. Methods for reducing the computational cost of the tensor train format are discussed in [82, 87, 126]. Applications to the Vlasov kinetic equation can be found in [23, 46, 58].
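The sequence-of-SVDs construction can be sketched in a few lines for a tensor that fits in memory. This is a minimal illustration, assuming a relative truncation threshold `tol` on the singular values of each matricization; the function names `tt_svd` and `tt_full` are hypothetical, and practical implementations avoid forming the dense tensor at all.

```python
import numpy as np


def tt_svd(tensor, tol=1e-10):
    """Tensor train cores via sequential truncated SVDs.

    Returns a list of cores; core j has shape (R_{j-1}, q_j, R_j) with R_0 = R_N = 1.
    """
    shape = tensor.shape
    N = len(shape)
    cores = []
    rank_prev = 1
    C = tensor.reshape(rank_prev * shape[0], -1)
    for j in range(N - 1):
        U, s, Vt = np.linalg.svd(C, full_matrices=False)
        # Keep singular values above the relative threshold (at least one).
        rank = max(1, int(np.sum(s > tol * s[0])))
        cores.append(U[:, :rank].reshape(rank_prev, shape[j], rank))
        # Carry the remainder diag(s) V^T to the next direction.
        C = s[:rank, None] * Vt[:rank, :]
        rank_prev = rank
        C = C.reshape(rank_prev * shape[j + 1], -1)
    cores.append(C.reshape(rank_prev, shape[-1], 1))
    return cores


def tt_full(cores):
    """Contract the cores back into a dense array (for verification only)."""
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full[0, ..., 0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vecs = [[rng.random(n) for n in (4, 5, 6, 3)] for _ in range(2)]
    T = sum(np.einsum("i,j,k,l->ijkl", *v) for v in vecs)  # separation rank 2
    cores = tt_svd(T)
    print([c.shape for c in cores])
    print(np.max(np.abs(tt_full(cores) - T)))
```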

2.2.1 Temporal Dynamics

To include temporal dynamics in the low-rank tensor representation of a field we can simply add additional time-dependent functions \(p_t^r(t)\), i.e., represent \(p(t, z_1, \ldots , z_N)\) as

\[ p(t, z_1, \ldots , z_N) \approx \sum_{r=1}^{R} \alpha_r\, p_t^r(t)\, p_1^r(z_1) \cdots p_N^r(z_N). \tag{9} \]

This approach has been considered by several authors, e.g., [2, 17], and it was shown to be effective for problems dominated by diffusion. However, for complex transient problems (e.g., hyperbolic dynamics), such an approach is not practical as it requires a high resolution in the time domain. To address this issue, a discontinuous Galerkin method in time was proposed by Nouy in [79]. The key idea is to split the integration period into small intervals (finite elements in time) and then consider a space-time separated representation of the solution within each interval.

Alternatively, one can consider explicit or implicit time-integration schemes [20, 59]. In this case, the separated representation of the solution is computed at each time step. In such representations we look for expansions in the form

\[ p(\mathbf {z}, t_k) \approx \sum_{r=1}^{R} \alpha_r(t_k)\, p_1^r(z_1, t_k) \cdots p_N^r(z_N, t_k). \tag{10} \]

Here, we demonstrate the procedure with reference to the simple Crank-Nicolson scheme. To this end, we consider the linear kinetic equation in the form

\[ \frac{\partial p(\mathbf {z}, t)}{\partial t} = L(\mathbf {z})\, p(\mathbf {z}, t), \tag{11} \]

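where \(\mathbf {z} = (z_1, \ldots , z_N)\) is the vector of phase variables and \(L(\mathbf {z})\) is a linear operator. For instance, in the case of the Fokker-Planck equation with drift coefficients \(G_i(\mathbf {z})\) and diffusion coefficients \(b_{ij}(\mathbf {z})\) we have

\[ L(\mathbf {z})\, p = -\sum_{i=1}^{N} \frac{\partial}{\partial z_i}\left( G_i(\mathbf {z})\, p \right) + \frac{1}{2} \sum_{i,j=1}^{N} \frac{\partial^2}{\partial z_i \partial z_j}\left( b_{ij}(\mathbf {z})\, p \right). \]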

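We discretize (11) in time by using the Crank-Nicolson scheme with time step \(\Delta t = t_{k+1} - t_k\). This yields

\[ \frac{p(\mathbf {z}, t_{k+1}) - p(\mathbf {z}, t_k)}{\Delta t} = \frac{1}{2}\left[ L(\mathbf {z})\, p(\mathbf {z}, t_{k+1}) + L(\mathbf {z})\, p(\mathbf {z}, t_k) \right] + \tau_k(\mathbf {z}), \]

i.e.,

\[ \left( I - \frac{\Delta t}{2} L(\mathbf {z}) \right) p(\mathbf {z}, t_{k+1}) = \left( I + \frac{\Delta t}{2} L(\mathbf {z}) \right) p(\mathbf {z}, t_k) + \Delta t\, \tau_k(\mathbf {z}), \tag{12} \]

where \(\tau_k(\mathbf {z})\) is the truncation error arising from the temporal discretization. Assuming that \(p(\mathbf {z}, t_k)\) is known, (12) is a linear equation for \(p(\mathbf {z}, t_{k+1})\) which, neglecting the truncation error, can be written concisely (at each time step) as

\[ A(\mathbf {z})\, p(\mathbf {z}) = f(\mathbf {z}), \tag{13} \]

where

\[ A(\mathbf {z}) = I - \frac{\Delta t}{2} L(\mathbf {z}), \qquad f(\mathbf {z}) = \left( I + \frac{\Delta t}{2} L(\mathbf {z}) \right) p(\mathbf {z}, t_k). \]

Note that we dropped the time \(t_{k+1}\) in \(p(\mathbf {z}, t_{k+1})\) with the understanding that the linear system (13) has to be solved at each time step. We emphasize that other multi-step and time-splitting schemes [27, 58], including geometric integrators [45], can be used instead of the Crank-Nicolson method.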
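The structure of one Crank-Nicolson step, building \(A = I - \tfrac{\Delta t}{2}L\) and \(f = (I + \tfrac{\Delta t}{2}L)\,p(t_k)\) and then solving a linear system, can be sketched on a deliberately simple case. The example below assumes a one-dimensional Fokker-Planck equation with zero drift and constant diffusion D on a periodic domain, discretized with second-order centered differences; this test problem and the names `periodic_diffusion_operator` and `crank_nicolson` are illustrative choices, not the setting used in the chapter.

```python
import numpy as np


def periodic_diffusion_operator(n, length, D):
    """Matrix of L = D d^2/dz^2 on a periodic grid with n points (centered differences)."""
    h = length / n
    L = np.zeros((n, n))
    for i in range(n):
        L[i, i] = -2.0
        L[i, (i - 1) % n] = 1.0
        L[i, (i + 1) % n] = 1.0
    return D * L / h ** 2


def crank_nicolson(L, p0, dt, n_steps):
    """Advance dp/dt = L p by n_steps Crank-Nicolson steps of size dt."""
    I = np.eye(L.shape[0])
    A = I - 0.5 * dt * L   # system operator
    B = I + 0.5 * dt * L   # acts on the known solution at t_k
    p = p0.copy()
    for _ in range(n_steps):
        f = B @ p                   # right-hand side f
        p = np.linalg.solve(A, f)   # solve A p = f for the new time level
    return p


if __name__ == "__main__":
    n = 64
    z = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    h = 2.0 * np.pi / n
    p0 = (1.0 + 0.5 * np.cos(z)) / (2.0 * np.pi)   # a PDF on [0, 2*pi)
    L = periodic_diffusion_operator(n, 2.0 * np.pi, D=1.0)
    p = crank_nicolson(L, p0, dt=0.01, n_steps=100)
    print("mass:", p.sum() * h)
```

Because the columns of this particular discrete operator sum to zero, the scheme preserves the discrete mass, which mirrors the conservation requirement listed in the introduction.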

2.2.2 Alternating Direction Algorithms

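The low-rank tensor decomposition is particularly convenient when the system operator \(A(\mathbf {z})\) and the right-hand side \(f(\mathbf {z})\) are separable with respect to \(\mathbf {z}\), i.e.,

\[ A(\mathbf {z}) = \sum_{k=1}^{n_A} A_1^k(z_1) \cdots A_N^k(z_N), \qquad f(\mathbf {z}) = \sum_{k=1}^{n_f} f_1^k(z_1) \cdots f_N^k(z_N), \tag{14} \]

where \(n_A\) and \(n_f\) denote the corresponding separation ranks.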

Note that A(z) is separable if L(z) is separable. A simple example of a two-dimensional separable operator L(z) with separation rank n L = 3 is

Another example is the Liouville operator associated to nonlinear dynamical systems with polynomial nonlinearities. A substitution of the tensor representation (5) into (12) yields the residual (Footnote 2)

\[ W(\mathbf {z}) = A(\mathbf {z})\, p^R(\mathbf {z}) - f(\mathbf {z}), \tag{16} \]

where \(p^R\) denotes the rank-R representation (5). The residual depends on \(\mathbf {z}\) and on all degrees of freedom associated with \(p_j^r\). To determine such degrees of freedom we require that

\[ \left\| W(\mathbf {z}) \right\| \le \varepsilon \tag{17} \]

in an appropriately chosen norm, and for a prescribed target accuracy ε. Ideally, the optimal tensor rank can be defined as the minimal R such that the solution has an exact tensor decomposition with R terms, i.e., ε = 0. However, the storage requirements and the computational cost increase with R, which makes the tensor decomposition attractive for small R. Therefore, we look for a low-rank tensor approximation of the solution to (13), with a reasonable accuracy ε. Although there are at present no useful theorems on the size R needed for a general class of functions, there are examples where tensor expansions are exponentially more efficient than one would expect a priori (see [6]).

Many existing algorithms to determine the best low-rank approximation of the solution to (13) are based on alternating direction methods. The key idea is to construct the tensor expansion (5) iteratively by determining \({p}_j^r(z_j)\) one at a time while freezing the degrees of freedom associated with all other functions. This yields a sequence of low-dimensional problems that can be solved efficiently [5, 6, 59, 79, 80, 83], possibly in parallel [52]. Perhaps one of the first alternating direction algorithms to compute a low-rank representation of the solution of a high-dimensional PDE was the one proposed in [2]. To clarify how the method works in simple terms, suppose we have constructed an approximate solution to the system (12) in the form (5), i.e., suppose we have available \(p^R(\mathbf {z})\) with tensor rank R. Then we look for an enriched solution in the form

\[ p^R(\mathbf {z}) + r_1(z_1)\, r_2(z_2) \cdots r_N(z_N), \]

where \(\{r_1(z_1), \ldots , r_N(z_N)\}\) are N unknown functions to be determined. In the alternating direction method, such functions are determined iteratively, one at a time. Typical algorithms to perform such iterations are based on alternating least squares (ALS),

\[ \min_{r_j} \left\| A(\mathbf {z}) \left[ p^R(\mathbf {z}) + r_1(z_1) \cdots r_N(z_N) \right] - f(\mathbf {z}) \right\|^2, \tag{18} \]

or alternating Galerkin methods,

\[ \left< q,\; A(\mathbf {z}) \left[ p^R(\mathbf {z}) + r_1(z_1) \cdots r_N(z_N) \right] - f(\mathbf {z}) \right> = 0, \tag{19} \]

where \(\left <\cdot \right >\) is an inner product (multi-dimensional integral with respect to \(\mathbf {z}\)), and q is a test function, typically chosen as \(q(\mathbf {z}) = r_1(z_1) \cdots \phi_{j,k}(z_j) \cdots r_N(z_N)\) for \(k = 1, \ldots , q_z\). In a finite-dimensional setting, the minimization problem (18) reduces to the problem of finding the minimum of a scalar function in as many variables as the number of unknowns we consider in each basis function \(r_j(z_j)\), say \(q_z\). Similarly, the alternating direction solution to (19) yields a sequence of low-dimensional linear systems of size \(q_z \times q_z\). If \(A(\mathbf {z})\) is a nonlinear operator, then we can still solve (18) or (19), e.g., by using Newton iterations. Once the functions \(\{r_1(z_1), \ldots , r_N(z_N)\}\) are computed, they are normalized (yielding the normalization factor \(\alpha_{R+1}\)) and added to \(p^R(\mathbf {z})\) to obtain \(p^{R+1}(\mathbf {z})\). The tensor rank is increased until the norm of the residual (16) is smaller than the desired target accuracy ε (see Eq. (17)). We emphasize that it is possible to include additional constraints when solving the linear system (13) with alternating direction algorithms. For example, one can impose that the solution \(p(\mathbf {z})\) is positive and integrates to one [59], i.e., that it is a probability density function.

The enrichment procedure just described has been criticized in the literature due to its slow convergence rate, in particular for equations dominated by advection [79]. Depending on the criterion used to construct the tensor decomposition, the enrichment procedure might not even converge. To overcome this problem, Doostan and Iaccarino [25] proposed an alternating least-squares (ALS) algorithm with guaranteed convergence properties. The algorithm simultaneously updates the entire rank of the basis set in the j-th direction. In this formulation, the least-squares approach (18) becomes

\[ \min_{\{p_j^1, \ldots , p_j^R\}} \left\| \sum_{r=1}^{R} \alpha_r\, A(\mathbf {z})\, p_1^r(z_1) \cdots p_N^r(z_N) - f(\mathbf {z}) \right\|^2. \tag{20} \]

The computational cost of this method clearly increases compared to (18). In fact, in a finite-dimensional setting, the simultaneous determination of \(\{{p}^1_j,\ldots ,{p}^{R}_j \}\) requires the solution of an \(Rq_z \times Rq_z\) linear system. However, this algorithm usually results in a separated solution with a lower tensor rank R than the regular approach, which makes the algorithm more favorable for advection-dominated kinetic systems. The basic idea of updating the entire rank of functions depending on a specific variable can also be applied to the alternating Galerkin formulation (19) (see [20]). In Sect. 4 we provide a numerical example of such an algorithm (see also Algorithm 1).
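The idea of updating all R functions of one direction at once, while the other directions are frozen, can be illustrated on a simpler problem than (13): fitting a canonical decomposition to a given three-dimensional array. This is a sketch under that stated simplification (no operator \(A\), no time stepping, normalization factors absorbed into the factors), and the names `cp_reconstruct`, `als_sweep`, and `als` are hypothetical. For this fitting problem the least-squares system decouples into R × R normal equations, whereas the operator problem in the text leads to the larger coupled system.

```python
import numpy as np


def cp_reconstruct(factors):
    """Dense array sum_r A[:, r] (x) B[:, r] (x) C[:, r]."""
    A, B, C = factors
    return np.einsum("ir,jr,kr->ijk", A, B, C)


def als_sweep(T, factors):
    """One alternating sweep; each direction updates all R columns simultaneously."""
    A, B, C = factors
    # Direction 1: solve [(B^T B) * (C^T C)] A^T = rhs^T in the least-squares sense.
    A = np.linalg.lstsq((B.T @ B) * (C.T @ C),
                        np.einsum("ijk,jr,kr->ri", T, B, C), rcond=None)[0].T
    # Direction 2, with the updated A and the old C frozen.
    B = np.linalg.lstsq((A.T @ A) * (C.T @ C),
                        np.einsum("ijk,ir,kr->rj", T, A, C), rcond=None)[0].T
    # Direction 3, with the updated A and B frozen.
    C = np.linalg.lstsq((A.T @ A) * (B.T @ B),
                        np.einsum("ijk,ir,jr->rk", T, A, B), rcond=None)[0].T
    return [A, B, C]


def als(T, R, n_sweeps=30, seed=0):
    """Run ALS from a random initial guess; returns factors and relative errors per sweep."""
    rng = np.random.default_rng(seed)
    factors = [rng.standard_normal((n, R)) for n in T.shape]
    errors = []
    for _ in range(n_sweeps):
        factors = als_sweep(T, factors)
        errors.append(np.linalg.norm(T - cp_reconstruct(factors)) / np.linalg.norm(T))
    return factors, errors


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    exact = [rng.standard_normal((n, 2)) for n in (6, 7, 8)]
    T = cp_reconstruct(exact)
    _, errors = als(T, R=2)
    print(errors[0], errors[-1])
```

Each directional update is an exact least-squares minimizer with the other factors fixed, so the fitting error cannot increase from one sweep to the next; how fast it decreases depends on the tensor and the initial guess.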

Further developments and applications of low-rank tensor approximation methods can be found in the excellent review papers [3, 40, 81]. Gradient-based and Newton-like methods modifying and improving the basic ALS algorithm are discussed in [1, 14, 28, 34, 53, 88, 93, 105, 106]. The convergence of ALS and its parallel implementation have been studied in [21, 52, 70, 108].

Algorithm 1 Alternating least squares with canonical tensor decomposition

2.3 ANOVA Decomposition and BBGKY Hierarchies

Another typical approach to model high-dimensional functions is based on the truncation of interactions. Hereafter we discuss two different methods to perform such approximation, namely, the ANOVA decomposition [11, 36, 61, 125] and the BBGKY (Bogoliubov-Born-Green-Kirkwood-Yvon) technique. Both these methods rely on a representation of multivariate functions in terms of series expansions involving functions with a smaller number of variables. For example, a second-order ANOVA approximation of a multivariate PDF in N variables is a series expansion involving functions of at most two variables. As we will see, both the ANOVA decomposition and the BBGKY technique [73] yield a hierarchy of coupled PDF equations for each given stochastic dynamical system. These methods are especially appropriate for anisotropic problems where dimensional adaptivity can be pursued.

The ANOVA series expansion [11, 41, 121] involves a superimposition of functions with an increasing number of variables. Specifically, the ANOVA decomposition of an N-dimensional PDF takes the form

\[ p(z_1, \ldots , z_N) = p_0 + \sum_{i=1}^{N} p_i(z_i) + \sum_{i<j}^{N} p_{ij}(z_i, z_j) + \sum_{i<j<k}^{N} p_{ijk}(z_i, z_j, z_k) + \cdots \]

The function \(p_0\) is a constant. The functions \(p_i(z_i)\), which we shall call first-order interaction terms, give us the overall effects of the variables \(z_i\) in p as if they were acting independently of the other variables. The functions \(p_{ij}(z_i, z_j)\) represent the interaction effects of the variables \(z_i\) and \(z_j\), and therefore they will be called second-order interactions. Similarly, higher-order terms reflect the cooperative effects of an increasing number of variables, and the series is usually truncated at a certain interaction order. These terms can be computed in different ways [92, 124]; here we point out the following procedure,

\[ p_K(z_K) = \int p(\mathbf {z})\, d\mu (z_{K'}) - \sum_{S \subset K} p_S(z_S), \]

where S ⊂ K ⊂{1, ⋯ , N}, K′ is the complement of K in {1, ⋯ , N}, \(p_K(z_K) = p_{j_1,\ldots ,j_k}(z_{j_1},\cdots ,z_{j_k})\) for \(K = \{j_1, \ldots , j_k\}\), and μ is the Lebesgue measure. Due to its construction, this procedure generates ANOVA terms that are orthogonal with respect to μ, that is, \(\int p_K(z_K)\, p_S(z_S)\, d \mu (\mathbf {z}) = 0\) for all S ≠ K, which provides an effective criterion for dimensional adaptivity [65, 121].
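For two variables the recursive construction of the ANOVA terms is short enough to write out explicitly. The sketch below assumes the function is sampled on a uniform midpoint grid of the unit square, so that integrals with respect to the Lebesgue measure reduce to array means; the function name `anova_2d` and the test function are illustrative.

```python
import numpy as np


def anova_2d(p):
    """ANOVA terms of grid values p[i, j] on the unit square (midpoint rule, uniform grid)."""
    p0 = p.mean()                                # zeroth-order term (constant)
    p1 = p.mean(axis=1) - p0                     # first-order term in z_1
    p2 = p.mean(axis=0) - p0                     # first-order term in z_2
    p12 = p - p0 - p1[:, None] - p2[None, :]     # second-order interaction
    return p0, p1, p2, p12


if __name__ == "__main__":
    x = (np.arange(20) + 0.5) / 20
    p = np.exp(-x[:, None]) * (1.0 + x[None, :] ** 2) + np.sin(x[:, None] * x[None, :])
    p0, p1, p2, p12 = anova_2d(p)
    # Orthogonality of first- and second-order terms under the discrete measure.
    print((p1[:, None] * p12).mean(), (p2[None, :] * p12).mean())
    # Relative size of the interaction term, a simple indicator for dimensional adaptivity.
    print(np.linalg.norm(p12) / np.linalg.norm(p))
```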

The ANOVA expansion can be readily applied in the space of parameters of kinetic systems, since the parameters do not depend on time and each term computed at the initial time can be updated independently. To pursue a collocation approach similar to the sparse grid collocation method (3), we replace the Lebesgue measure with a Dirac measure dμ = δ(z −c) at an appropriate anchor point c, and consider the corresponding collocation scheme [118]. This method is called the anchored-ANOVA method (PCM-ANOVA) [7, 32, 36, 121]. The anchor points are often taken as the mean value of the random variable in each dimension [125]. Then, each PDF equation in Table 1 can be solved at the PCM-ANOVA collocation points in the space of parameters.

On the other hand, representing the dependence of the solution PDF on the phase variables through the ANOVA expansion yields a hierarchy of coupled PDF equations that resembles the BBGKY hierarchy of classical statistical mechanics. Let us briefly review the BBGKY technique with reference to a nonlinear dynamical system in the form

\[ \frac{d\mathbf {z}(t)}{dt} = \mathbf {G}(\mathbf {z}, t), \qquad \mathbf {z}(0) = \mathbf {z}_0, \]

where \(\mathbf {z}(t)\in \mathbb {R}^N\) is a multi-dimensional stochastic process including both phase and parametric variables, \(\mathbf {G}:\mathbb {R}^{N+1}\rightarrow \mathbb {R}^N\) is a Lipschitz continuous (deterministic) function, and \( \mathbf {z}_0\in \mathbb {R}^N\) is a random initial state. The joint PDF of \(\mathbf {z}(t)\) evolves according to the Liouville equation

\[ \frac{\partial p(\mathbf {z}, t)}{\partial t} + \nabla \cdot \left[ \mathbf {G}(\mathbf {z}, t)\, p(\mathbf {z}, t) \right] = 0, \tag{23} \]

whose solution can be computed numerically with standard discretization methods only for relatively small N. This leads us to look for PDF equations involving only a reduced number of phase variables, for instance, the PDF of each component \(z_i(t)\). Such equations can be formally obtained by marginalizing (23) with respect to different phase variables and discarding terms at infinity. This yields, for example,

\[ \frac{\partial p(z_i, t)}{\partial t} = -\frac{\partial}{\partial z_i} \int G_i(\mathbf {z}, t)\, p(\mathbf {z}, t) \prod_{k \ne i} dz_k, \tag{24} \]

\[ \frac{\partial p(z_i, z_j, t)}{\partial t} = -\frac{\partial}{\partial z_i} \int G_i(\mathbf {z}, t)\, p(\mathbf {z}, t) \prod_{k \ne i,j} dz_k - \frac{\partial}{\partial z_j} \int G_j(\mathbf {z}, t)\, p(\mathbf {z}, t) \prod_{k \ne i,j} dz_k. \tag{25} \]

Higher-order PDF equations can be derived similarly. The computation of the integrals in (24) and (25) requires the full joint PDF of \(\mathbf {z}(t)\), which is available only if we solve the full Liouville equation (23). Alternatively, we can solve (24) or (25) directly, provided we introduce approximations. The most common one is to assume that the joint PDF \(p(\mathbf {z}, t)\) can be written in terms of lower-order PDFs, e.g., as \(p(\mathbf {z}, t) = p(z_1, t) \cdots p(z_N, t)\) (mean-field approximation). By using integration by parts, this assumption reduces the Liouville equation to a hierarchy of low-dimensional PDF equations (see, e.g., [20, 112]). An example of such an approximation will be presented later in this chapter with an application to the Lorenz-96 model.

3 Computational Cost

Consider a kinetic partial differential equation with n phase variables and m parameters, i.e., a total number of N = n + m variables. Suppose that we represent the solution by using \(q_z\) degrees of freedom in each phase variable and \(q_b\) degrees of freedom in each parameter. If we employ a tensor product discretization, the number of degrees of freedom becomes \(q_z^n \cdot q_b^m\) and the computational cost grows exponentially as \(O(q_z^{2n}\cdot q_b^{m})\). Hereafter we compare the computational cost of the methods we discussed in the previous sections. Table 2 summarizes the main results.

3.1 Sparse Grids

The computational complexity of sparse grids grows logarithmically with the number of degrees of freedom in each dimension, i.e., \(O(q_z |\log_2(q_z)|^{n-1})\). If we employ the multi-wavelet basis we mentioned before in the context of the discontinuous Galerkin framework, then it can be shown that the computational complexity is \(O((q_z + 1)^n\, 2^{\ell }\, \ell ^{n-1})\), where ℓ is the element level and \(q_z\) is the polynomial order in each element (see [43]). In the space of parameters, the sparse grid collocation method yields \(2^{l}(m + l)!/(m!\,l!)\) points, where l is the sparse grid level and m is the number of parameters. Thus, if we consider sparse grids in both phase and parametric space, the total computational cost can be estimated as \(O(q_z^{2} | \log _2( q_z ) |{ }^{2n-2}) \cdot \sum _{l=0}^{\ell } 2^{l}(m+l)!/(m!l!)\).

3.2 Low-Rank Tensor Approximation

The total number of degrees of freedom in a low-rank tensor decomposition grows linearly with both n and m. For instance, we have \(R(nq_z + mq_b)\) in the canonical tensor decomposition (6), and \(R^2(nq_z + mq_b)\) in the tensor train approach (8). If the tensor rank R turns out to be relatively small, then the tensor approximation is far more efficient than full tensor product, sparse grid, or ANOVA approaches, in terms of memory requirements as well as computational cost. The classical alternating direction algorithm at the basis of the canonical tensor decomposition can be divided into two steps, i.e., the enrichment and the projection steps (see Algorithm 1). The computational cost of the projection step can be neglected with respect to that of the enrichment step, as it reduces to solving a linear system of rather small size (r × r). The enrichment step at tensor rank r requires \(O((rq_z)^2 + (rq_b)^2)\) operations, provided we employ appropriate iterative linear solvers. If we assume that the average number of iterations is \(n_{itr}\), and sum up the cost for r = 1, …, R, the overall computational cost of canonical tensor decomposition can be estimated as \(O\left ({R}^3 \left (n q_z^2 + m q_b^2\right ) \right )\cdot n_{itr}\). In the tensor train approach, the cost also depends on the matrix rank S that comes from the HOSVD procedure, and it becomes \(O\left (R^2 S^2 n q_z^2 + R^3 S^3 n q_z \right )\) [58].
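The degree-of-freedom counts quoted above are easy to tabulate. The following sketch simply evaluates the three formulas for a given choice of n, m, \(q_z\), \(q_b\), and R; the function names are illustrative, and the tensor train count uses a single rank R in every direction, as in the estimate above.

```python
def dof_full(n: int, m: int, qz: int, qb: int) -> int:
    """Full tensor product discretization: qz**n * qb**m."""
    return qz ** n * qb ** m


def dof_canonical(n: int, m: int, qz: int, qb: int, R: int) -> int:
    """Canonical tensor decomposition: R * (n*qz + m*qb)."""
    return R * (n * qz + m * qb)


def dof_tensor_train(n: int, m: int, qz: int, qb: int, R: int) -> int:
    """Tensor train with uniform rank R: R**2 * (n*qz + m*qb)."""
    return R ** 2 * (n * qz + m * qb)


if __name__ == "__main__":
    n, m, qz, qb, R = 3, 10, 50, 7, 20
    print("full tensor product:", dof_full(n, m, qz, qb))
    print("canonical:          ", dof_canonical(n, m, qz, qb, R))
    print("tensor train:       ", dof_tensor_train(n, m, qz, qb, R))
```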

3.3 ANOVA Decomposition

If we consider the ANOVA expansion or the BBGKY hierarchy, the computational complexity has a factorial dependency on the dimensionality n + m and the interaction orders of the variables [32]. In particular, the total number of degrees of freedom for a fixed interaction order ℓ and assuming q b = q z is

The computational cost of matrix-vector operations involving discretized variables in each level is \(O\left ( C(n+m, \ell , q_z^{2\ell } ) \right )\). It is possible to combine the BBGKY technique with the PCM-ANOVA approach to improve the accuracy, since the interaction order of the phase variables and the parameters, denoted as ℓ and ℓ′, can be controlled separately. In this case, the total number of degrees of freedom and the corresponding computational cost become, \((\sum _{l=0}^{\ell } C(n,l,q_z)) \cdot (\sum _{l=0}^{\ell '} C(m,l,q_b) )\) and \(O\left ( C(n, \ell , q_z^{2\ell }) \cdot (\sum _{l=0}^{\ell '} C(m,l,q_b) ) \right )\), respectively.

4 Applications

In this section, we present numerical examples to illustrate the performance and accuracy of the algorithms we discussed in this chapter. Specifically, we study the alternating Galerkin formulation (canonical tensor decomposition) of a kinetic model describing stochastic advection of a scalar field. We also study the BBGKY hierarchy of the Lorenz-96 model evolving from a random initial state.

4.1 Stochastic Advection of Scalar Fields

Let us consider the following stochastic advection equations

where x ∈ [0, 2π] and {ξ 1, …, ξ m } are i.i.d. uniform random variables in [−1, 1]. The kinetic equations governing the joint probability density function of {ξ 1, …, ξ m } and the solution to (27) or (28) are, respectively,

where p = p(x, t, a, b), b = {b 1, …, b m } (see [111] for a derivation). Note that this PDF depends on x, t, one phase variable a (corresponding to u(x, t)), and m parameters b (corresponding to {ξ 1, …, ξ m }). The analytical solutions to Eqs. (29) and (30) can be obtained by using the method of characteristics [95]. They are both in the form

where

in the case of Eq. (29) and

in the case of Eq. (30). Also, \(p_0\left (x,a,\boldsymbol {b}\right )\) is the joint PDF of \(u(x, t_0)\) and \(\{\xi_1, \ldots, \xi_m\}\). In our simulations we take

which has tensor rank \(R = 2\). Non-separable initial conditions can be approximated in the tensor format (5). Also, we consider different numbers of parameters in Eqs. (29) and (30), i.e., \(m = 3, 13, 24, 54, 84, 114\).

4.1.1 Finite-Dimensional Representations

Let us represent the joint probability density function (5) in terms of polynomial basis functions as

where \(q_z\) is the number of degrees of freedom in each variable. In particular, for (29) and (30), we consider a spectral collocation method in which \(\{\phi_{1,j}\}\) and \(\{\phi_{2,j}\}\) are trigonometric polynomials, while \(\{\phi _{n,j}\}_{n=3}^N\) (basis elements for the space of parameters) are Lagrange interpolants at Gauss-Legendre-Lobatto points. The finite-dimensional representation of the joint PDF admits the following canonical tensor form

where the vector

collects the (normalized) values of the solution at the collocation points. The fully discrete Galerkin formulation of our kinetic equations takes the form

where

By using a Gauss quadrature rule to evaluate the integrals, we obtain system matrices \(\mathbf {A}_n^k\) that are either diagonal or coincide with the classical differentiation matrices of spectral collocation methods [47]. For example, in the case of Eq. (29) we have

where \(\mathbf{q}_b\) denotes the vector of collocation points, \(w_x\), \(w_z\), and \(w_b\) are collocation weights, \(\mathscr {D}_x\) is the differentiation matrix, and \(\delta_{ij}\) is the Kronecker delta. In an alternating direction setting, we aim at solving the system (35) in a greedy way, by freezing all degrees of freedom except those representing the dimension n. This yields a sequence of linear systems

where \(\mathbf{B}_n\) is a block matrix with \(R \times R\) blocks of size \(q_z \times q_z\), and \(\mathbf{g}_n\) is a multi-component vector. Specifically, the hv-th block of \(\mathbf{B}_n\) and the h-th component of \(\mathbf{g}_n\) are obtained as

The solution vector

is normalized as \(\mathbf {p}_n^r/ \left \|\mathbf {p}_n^{r} \right \|\) for all \(r = 1, \ldots, R\) and \(n = 1, \ldots, N\). This operation yields the coefficients \(\boldsymbol {\alpha } = \left (\alpha _1,\ldots ,\alpha _R\right )\) as a solution to the linear systems

where the entries of the matrix D and the vector d are, respectively

The main steps of the computational scheme are summarized in Algorithm 1. We also refer the reader to [21, 70, 108] for a convergence analysis of the alternating direction algorithm.

The iterative procedure at each time step is terminated when the norm of the residual is smaller than a tolerance, i.e., when \(\|\mathbf{A}\mathbf{p}^R - \mathbf{f}\| \leq \varepsilon\). This usually involves the computation of an N-dimensional tensor norm, which can be expensive and compromise the computational efficiency of the whole algorithm. To avoid this problem, we replace the condition \(\|\mathbf{A}\mathbf{p}^R - \mathbf{f}\| \leq \varepsilon\) with the simpler convergence criterion

where \(\left \{\widetilde {\mathbf {p}}^R_1,\ldots ,\widetilde {\mathbf {p}}^R_N\right \}\) denotes the solution at the previous iteration. This criterion involves the computation of N vector norms instead of one N-dimensional tensor norm.
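The alternating sweep and the factor-wise stopping test described above can be illustrated with a minimal sketch. It is not Algorithm 1: instead of the Galerkin systems for \(\mathbf{B}_n\) and \(\mathbf{g}_n\), it fits a rank-R canonical decomposition to a given dense tensor by plain alternating least squares, which shares the same structure (freeze all dimensions but one, solve a small linear problem, normalize, and collect the norms in \(\boldsymbol{\alpha}\)). The function names `khatri_rao`, `cp_als`, and `cp_full`, and the use of the maximum change of the normalized factor vectors as the stopping quantity, are assumptions made for illustration.

```python
import numpy as np


def khatri_rao(mats):
    """Column-wise Kronecker product of matrices that all have R columns."""
    K = mats[0]
    for M in mats[1:]:
        K = (K[:, None, :] * M[None, :, :]).reshape(-1, K.shape[1])
    return K


def cp_als(T, R, tol=1e-10, max_iter=500, seed=0):
    """Rank-R canonical (CP) fit of a dense tensor T by alternating least squares.

    Returns (alpha, U, sweeps): weights alpha[r], unit-norm factor matrices
    U[n] of shape (q_n, R), and the number of sweeps performed.
    """
    rng = np.random.default_rng(seed)
    N = T.ndim
    U = [rng.standard_normal((q, R)) for q in T.shape]
    U = [u / np.linalg.norm(u, axis=0) for u in U]
    alpha = np.ones(R)
    sweeps = 0
    for sweeps in range(1, max_iter + 1):
        U_old = [u.copy() for u in U]
        for n in range(N):
            # Freeze every dimension except n and solve a least-squares problem.
            others = [U[m] for m in range(N) if m != n]
            K = khatri_rao(others)
            G = np.ones((R, R))
            for M in others:
                G *= M.T @ M                  # K.T @ K as a Hadamard product
            Tn = np.moveaxis(T, n, 0).reshape(T.shape[n], -1)
            V = Tn @ K @ np.linalg.pinv(G)
            # Normalize the new factor; the norms play the role of alpha.
            alpha = np.linalg.norm(V, axis=0)
            U[n] = V / np.where(alpha > 0.0, alpha, 1.0)
        # Stopping test on N sets of vector norms, not on a tensor norm.
        change = max(np.linalg.norm(U[n] - U_old[n], axis=0).max()
                     for n in range(N))
        if change <= tol:
            break
    return alpha, U, sweeps


def cp_full(alpha, U):
    """Assemble the dense tensor sum_r alpha[r] * u_1^r x ... x u_N^r."""
    return (khatri_rao(U) @ alpha).reshape([u.shape[0] for u in U])
```

The stopping test only touches the one-dimensional factors, so its cost grows linearly with the number of dimensions, which is the point of replacing the residual norm.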

4.1.2 Numerical Results: Low-Rank Tensor Approximation

We compute the solution to the kinetic equations (29) and (30) by using Algorithm 1. The PDF solution is represented in the canonical tensor format as

We chose the number of degrees of freedom of the expansion so as to balance the space and time discretization errors against the truncation error due to the finite rank R. In particular, x and a are each discretized with an interpolant on \(q_z = 50\) collocation points, while the parametric dependence on \(b_j\) (\(j = 1, \ldots, m\)) is represented with Legendre polynomials of order \(q_b = 7\).
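The one-dimensional building blocks of this discretization can be sketched as follows: a Fourier differentiation matrix on equispaced points in \([0, 2\pi)\) for the periodic variable, and Gauss-Legendre-Lobatto nodes and weights on \([-1, 1]\) for each parameter. The function names are hypothetical, the Fourier formula shown is the standard one for an even number of points, and reading \(q_b = 7\) as seven Lobatto nodes per parameter is an assumption rather than something stated in the text.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def fourier_diff_matrix(q):
    """Nodes and differentiation matrix for q (even) equispaced points in [0, 2*pi)."""
    if q % 2 != 0:
        raise ValueError("this formula assumes an even number of points")
    x = 2.0 * np.pi * np.arange(q) / q
    idx = np.arange(q)
    diff = idx[:, None] - idx[None, :]              # i - j
    sign = np.where(diff % 2 == 0, 1.0, -1.0)       # (-1)^(i-j)
    D = np.zeros((q, q))
    off = diff != 0
    # D_ij = 0.5 * (-1)^(i-j) * cot((x_i - x_j) / 2) for i != j, zero diagonal
    D[off] = 0.5 * sign[off] / np.tan(np.pi * diff[off] / q)
    return x, D


def gll_nodes_weights(q):
    """q Gauss-Legendre-Lobatto nodes and quadrature weights on [-1, 1], q >= 2."""
    N = q - 1
    PN = leg.Legendre.basis(N)
    interior = np.sort(PN.deriv().roots().real)     # roots of P_N'
    x = np.concatenate(([-1.0], interior, [1.0]))
    w = 2.0 / (N * (N + 1) * PN(x) ** 2)
    return x, w


x, Dx = fourier_diff_matrix(50)    # q_z = 50 points for the periodic variable
b, wb = gll_nodes_weights(7)       # assumed: 7 nodes per parameter b_j
```

Applying `Dx` to samples of a smooth periodic function returns samples of its derivative to spectral accuracy, and `wb` integrates polynomials up to degree 11 exactly on \([-1, 1]\).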

In Fig. 2 we plot the first few tensor modes \(p_r(x,a,t) \doteq p_x^r(x,t) p_a^r(a,t)\) of the solution to Eqs. (29) and (30) at time \(t = 2\). Specifically, we considered \(m = 54\) in (29) and \(m = 3\) in (30). Note that the tensor modes \(p_r\) obtained from Eq. (29) are very similar to each other for \(r \geq 2\), while in the case of Eq. (30) the modes are quite distinct, suggesting the presence of modal interactions and the need for a larger tensor rank to achieve a given accuracy. This is also observed in Fig. 3, where we plot the normalization coefficients \(\{\alpha_1, \ldots, \alpha_R\}\), which can be interpreted as the spectrum of the tensor solution. The stochastic advection problem with random forcing yields a stronger coupling between the tensor modes, i.e., a slower spectral decay, than the problem with random coefficients.

Tensor modes of the kinetic solution to the stochastic advection equations at t = 2. Reproduced with permission from [20]

Spectra of the canonical tensor decomposition of the stochastic advection problem at t = 2. Reproduced with permission from [20]

In Fig. 4 we plot the error of the low-rank tensor approximation of the solution versus the number of parameters m for different tensor ranks R. As predicted by the spectra shown in Fig. 3, the overall relative error of the solution in the random forcing case is larger than in the random coefficient case (see also Fig. 5 for the convergence with respect to R). This is due to the presence of the time-dependent forcing term in Eq. (28), which injects additional energy into the system and activates new modes. A higher tensor rank is therefore required for a prescribed level of accuracy. In addition, the plots suggest that the accuracy of the low-rank tensor approximation method depends primarily on the tensor rank rather than on the number of parameters of the problem. The choice of the tensor format that yields the smallest possible tensor rank for a specific problem is an open question. Recent studies suggest that the answer is usually problem-dependent. For instance, Kormann [58] has shown that a semi-Lagrangian solver for the Vlasov equation in tensor train format performs best if the phase variables are sorted as \((v_1, x_1, x_2, v_2, x_3, v_3)\).

Relative \(L_2\) errors of the low-rank tensor approximation of the solution with respect to the analytical solution (31). Shown are results for different numbers of random variables m in (27)–(28) and different tensor ranks R. It is seen that the accuracy of the tensor method mainly depends on the actual tensor rank rather than on the dimensionality. Reproduced with permission from [20]

4.1.3 Comparison Between Tensor Approximation and ANOVA

In this section we compare the accuracy and the computational cost of the low-rank alternating Galerkin method with the ANOVA expansion technique to compute the solution to Eqs. (29) and (30). The PCM-ANOVA representation of the solution is

For ℓ = 2 (level 2) and m parameters, the expansion (42) has 1 + m + m(m − 1)/2 terms.

In Fig. 5 we compare the accuracy of the low-rank tensor approximation and the PCM-ANOVA expansion in computing the solution to the kinetic equation (29). In particular, we demonstrate the convergence of the tensor solution with respect to R. Note that the tensor solution attains the same level of accuracy as the ANOVA decomposition with just five modes for \(t \leq 1\). Therefore, the low-rank tensor approximation is preferable to ANOVA, especially when \(m \geq 54\). However, this is not true in the case of Eq. (30) because of its relatively large tensor rank. To overcome this problem, we developed an adaptive algorithm that sets the separation rank of the solution based on a prescribed target accuracy on the residual of the kinetic equation, or other quantities related to it.

In Fig. 6 (left) we plot the temporal dynamics of the tensor rank \(R(t)\) obtained by setting a threshold on the spectral condition number, defined as the ratio between the smallest and the largest \(\alpha_i\). Specifically, we increase R by one at \(t = t^*\) whenever \(\alpha_R(t^*)/\alpha_1(t^*) > \theta\). For a small threshold \(\theta\), we notice that R can increase to 20 or more at later times. This result reveals two key requirements for efficient tensor algorithms in practical applications: a robust adaptive procedure that can identify the proper tensor rank on the fly, and an effective compression technique that can reduce the tensor rank in time. Both are critical when computing the long-term behavior of kinetic systems.
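The rank-increase rule can be written as a few lines of code. This is a minimal sketch of the test \(\alpha_R/\alpha_1 > \theta\) only; the function name `update_rank` and the optional cap `max_rank` are hypothetical, and the sketch does not include the compression step mentioned above.

```python
import numpy as np


def update_rank(alpha, theta, max_rank=None):
    """Return the new tensor rank given the current spectrum alpha.

    The rank is increased by one when the ratio between the smallest and
    the largest coefficient exceeds the threshold theta, i.e. when even the
    weakest retained mode is still significant.
    """
    a = np.sort(np.abs(np.asarray(alpha, dtype=float)))[::-1]
    R = a.size
    if a[0] == 0.0:
        return R                       # empty spectrum: nothing to resolve
    if a[-1] / a[0] > theta and (max_rank is None or R < max_rank):
        return R + 1
    return R


# Example with theta = 5e-4, the threshold quoted for Eq. (30)
R_new = update_rank([1.0, 0.1, 1e-3], theta=5e-4)
```

A smaller \(\theta\) makes the test easier to trigger, which is consistent with the larger ranks observed at later times for small thresholds.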

Comparison between the relative \(L_2\) errors of the adaptive tensor method and the ANOVA method of level \(\ell = 2\). Results are for the kinetic equation (30) with threshold \(\theta = 5 \times 10^{-4}\). It is seen that the error of the tensor solution is almost independent of m, while the error of ANOVA level 2 increases as we increase m. Reproduced with permission from [20]

In Fig. 6 (right) we plot the error of the adaptive tensor method and the level 2 ANOVA method versus time. It is seen that the error in the tensor method is almost independent of m, while the error of ANOVA increases with m. The accuracy can be improved either by increasing the tensor rank (canonical tensor decomposition) or by increasing the interaction order (ANOVA method). Before doing so, however, one should carefully examine the additional computational cost incurred by each method. For example, increasing the interaction order from two to three in the PCM-ANOVA expansion would increase the number of collocation points from 70,498 to 8,578,270 (case \(m = 54\)). In Fig. 7 we compare the computational cost of canonical tensor decomposition with different ranks, ANOVA of level two, and sparse grid of level three in computing the solution to Eq. (30). It is seen that the tensor method is the most efficient one, in particular for high dimensions and low tensor rank, e.g., \(m \geq 24\) and \(R \leq 8\).
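The collocation-point counts quoted above can be reproduced with a short calculation. The sketch assumes that every interaction term of order l is sampled on a tensor grid of \(q_b^l\) points with \(q_b = 7\), so that the total is \(\sum_{l=0}^{\ell} \binom{m}{l} q_b^l\); this assumption is ours, but it does return the two figures given for \(m = 54\). The function names are hypothetical.

```python
from math import comb


def anova_terms(m, level):
    """Number of terms in an ANOVA expansion truncated at the given interaction order."""
    return sum(comb(m, l) for l in range(level + 1))


def anova_points(m, level, q_b):
    """Collocation points when each order-l term uses a q_b**l tensor grid (assumed)."""
    return sum(comb(m, l) * q_b ** l for l in range(level + 1))


for m in (3, 13, 24, 54, 84, 114):
    print(m, anova_terms(m, 2), anova_points(m, 2, 7), anova_points(m, 3, 7))
```

For level 2 the term count reduces to \(1 + m + m(m-1)/2\), in agreement with the expression given for expansion (42).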

Computational time of the tensor decomposition, ANOVA level 2, and sparse grid (SG) level 3 with respect to the dimensionality m and the tensor rank R. The results are normalized with respect to the computing time of ANOVA when m = 3. Reproduced with permission from [20]

4.2 The Lorenz-96 Model

The Lorenz-96 model is a continuous-in-time, discrete-in-space model often used in atmospheric sciences to study fundamental issues related to forecasting and data assimilation [51, 62]. The basic equations are

Here we consider \(n = 40\), \(F = 1\), and assume that the initial state \([x_1(0), \ldots, x_{40}(0)]\) is jointly Gaussian with PDF

Without an additional parametric space, the dimensionality of this system is \(n = 40\). The kinetic equation governing the joint PDF of the phase variables \([x_1(t), \ldots, x_{40}(t)]\) is

Such a hyperbolic conservation law obviously cannot be solved in a classical tensor product representation because of its high dimensionality and the possible lack of regularity (for \(F > 10\)) related to the fractal structure of the attractor [51]. Thus, we are led to look for reduced-order PDF equations.
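To make the structure of this conservation law concrete, the following worked form assumes the standard Lorenz-96 velocity field \(G_i(\mathbf{z}) = (z_{i+1} - z_{i-2})\, z_{i-1} - z_i + F\) with periodic indices. This is our assumption about the model equations, written in the notation used later in this section. Since \(G_i\) depends on \(z_i\) only through the linear damping term, \(\partial G_i/\partial z_i = -1\), and the Liouville equation for the joint PDF expands as

$$\displaystyle \begin{aligned}\frac{\partial p}{\partial t} = -\sum_{i=1}^{n} \frac{\partial}{\partial z_i}\left[ G_i(\mathbf{z})\, p \right] = -\sum_{i=1}^{n} G_i(\mathbf{z})\, \frac{\partial p}{\partial z_i} + n\, p .\end{aligned}$$

Under this assumption the PDF grows like \(e^{nt}\) along each characteristic curve, reflecting the uniform contraction of phase-space volume, while the advection operator acts in all \(n = 40\) variables at once.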

4.2.1 Truncation of the BBGKY Hierarchy

In this section we illustrate how to compute low order probability density function equations by truncations of the BBGKY hierarchy. To this end, consider the dynamical system

where

With such a velocity field \(G_i(\mathbf{y}, t)\) we can calculate the integrals on the right-hand side of the one-point PDF equation (24) exactly as

where \(p_i = p(z_i, t)\) and \(p_{ik} = p(z_i, z_k, t)\). Similarly, the two-point PDF equations (25) can be approximated as

where we discarded all contributions from the three-point PDFs and from the two-point PDFs, except those interacting with the i-th variable. A variance-based sensitivity analysis in terms of Sobol indices [98, 104, 113] can be performed to identify the system variables with strong correlations. This allows us to determine whether it is necessary to add other two-point correlations or the three-point PDF equations for a certain triple \(\{x_k(t), x_i(t), x_j(t)\}\), and to further determine the equation for a general form of \(G_i\).

In the specific case of the Lorenz-96 system, we can write Eq. (46) as

where \( \langle f(\mathbf {z}) \rangle _{i|\,j} \doteq \int f(\mathbf {z}) p_{ij}(z_i,z_j,t) dz_i\). In order to close such a system at the level of one-point PDFs, \(\left < z_{i-1}\right >_{{i-1}|i}\) could be replaced, e.g., by \(\left < z_{i-1}\right > p_i(z_i,t)\), which is exact when \(z_{i-1}\) and \(z_i\) are statistically independent, i.e., when \(p_{i-1\,i} = p_{i-1}\, p_i\). Similarly, Eq. (47) can be written for the two adjacent nodes as

By adding the two-point closure for nodes one node apart, i.e., \(p_{i-1\,i+1}(z_{i-1},z_{i+1},t)\), the quantity \(\left < z_{i-2}\right > \left < z_{i-1} \right >_{i-1|i} p_{i+1}\) in the first row and \(\left < z_{i-1}\right > z_{i}\, p_{i\,i+1}\) in the second row can be substituted by \(\left < z_{i-2} \right >_{i-2|i} \left < z_{i-1} \right >_{i-1|i+1}\) and \(\left < z_{i-1} \right >_{i-1|i+1} z_{i}\, p_{i}\), respectively.

In Fig. 8, we compare the mean and the standard deviation of the solution to (43) as computed by the one- and two-point BBGKY closures (Eqs. (48) and (49), respectively) and by a Monte Carlo simulation with 50,000 solution samples. The means of both the one-point and the two-point BBGKY closures essentially coincide with the Monte Carlo results. The error in the standard deviation, on the other hand, differs slightly between the two closures and is reduced by the two-point BBGKY closure (Fig. 9).
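The Monte Carlo reference used in this comparison can be sketched as follows. The code assumes the standard Lorenz-96 right-hand side \((x_{i+1} - x_{i-2})\, x_{i-1} - x_i + F\) with periodic indices and integrates an ensemble with a classical fourth-order Runge-Kutta scheme. The initial distribution is a placeholder: independent Gaussian components with hypothetical mean `mean0` and standard deviation `std0`, which stand in for the jointly Gaussian initial PDF of the text. All function names, the time step, and the default sample count are likewise illustrative.

```python
import numpy as np


def lorenz96_rhs(x, F):
    """Standard Lorenz-96 right-hand side; x has shape (..., n), indices periodic."""
    x_next = np.roll(x, -1, axis=-1)    # x_{i+1}
    x_prev = np.roll(x, 1, axis=-1)     # x_{i-1}
    x_prev2 = np.roll(x, 2, axis=-1)    # x_{i-2}
    return (x_next - x_prev2) * x_prev - x + F


def rk4_step(x, F, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = lorenz96_rhs(x, F)
    k2 = lorenz96_rhs(x + 0.5 * dt * k1, F)
    k3 = lorenz96_rhs(x + 0.5 * dt * k2, F)
    k4 = lorenz96_rhs(x + dt * k3, F)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def monte_carlo_moments(n=40, F=1.0, t_end=1.0, dt=0.01, n_samples=2000,
                        mean0=1.0, std0=0.5, seed=0):
    """Sample mean and standard deviation of each x_i(t_end) over the ensemble."""
    rng = np.random.default_rng(seed)
    # ASSUMPTION: independent Gaussian components (placeholder initial PDF).
    x = mean0 + std0 * rng.standard_normal((n_samples, n))
    for _ in range(int(round(t_end / dt))):
        x = rk4_step(x, F, dt)           # all samples advance together
    return x.mean(axis=0), x.std(axis=0)
```

Calling `monte_carlo_moments(n_samples=50_000)` matches the ensemble size quoted above. The statistical error of the sample mean decays like the inverse square root of the number of samples, which is why such a large ensemble is needed as a reference for the closures.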

Mean (a, b) and standard deviation (c, d) of the Lorenz-96 system computed by the one-point (a) and two-point (c) BBGKY closures, compared to the Monte Carlo simulation (b, d). Reproduced with permission from [20]

Absolute error (log scale) of the mean (a) and standard deviation (c, d) of the Lorenz-96 system computed with the BBGKY closure, relative to the Monte Carlo simulation. Panels (c) and (d) show the results of the one- and two-point BBGKY closures, respectively, and panel (b) shows the \(L_1\) error. Reproduced with permission from [20]

5 Summary

In this chapter we reviewed state-of-the-art algorithms to compute the numerical solution of high-dimensional kinetic equations. The algorithms are based on low-rank tensor approximation, sparse grids, and ANOVA decomposition. A common feature of these methods is that they reduce the problem of computing the solution to a high-dimensional PDE to a sequence of low-dimensional problems. The range of applicability of the algorithms is sketched in Fig. 1 as a function of the number of phase variables and the number of parameters appearing in the kinetic equation. The computational complexity ranges from logarithmic (sparse grids) to linear (canonical tensor decomposition) with respect to the dimension of the system. The proposed algorithms can be extended in several directions. For example, adaptive procedures capable of resolving different phase variables with different accuracy may allow applications to kinetic systems with non-smooth solutions and scaling to extremely high dimensions. In the context of low-rank tensor approximation methods [20, 27, 58], a fundamental question is the development of effective techniques for rank reduction [4, 94]. This is especially challenging for hyperbolic PDEs, since such equations can yield a slow convergence rate when solved with canonical tensor decompositions [20, 79]. Future work should address the development of adaptive algorithms for the construction of controlled low-rank approximations and an adaptive selection of separation ranks and tensor formats.

Notes

1. For instance, if we represent \(p_j(z_j)\) in terms of an interpolant

$$\displaystyle \begin{aligned}p_j(z_j) = \sum_{k=1}^{q_z} \text{p}_{j,k} \phi_{j,k}(z_j),\end{aligned}$$

then \(\mathbf {p}_j = (\text{p}_{j,1},\cdots ,\text{p}_{j,q_z})\).

2. The residual \(W(\mathbf{z})\) incorporates both the truncation error arising from the time discretization and the error arising from the finite-dimensional expansion (5).

References

E. Acar, D.M. Dunlavy, T.G. Kolda, A scalable optimization approach for fitting canonical tensor decompositions. J. Chemom. 25, 67–86 (2011)

A. Ammar, B. Mokdad, F. Chinesta, R. Keunings, A new family of solvers for some classes of multidimensional partial differential equations encountered in kinetic theory modelling of complex fluids: part II: transient simulation using space-time separated representations. J. Non-Newtonian Fluid Mech. 144(2), 98–121 (2007)

M. Bachmayr, R. Schneider, A. Uschmajew, Tensor networks and hierarchical tensors for the solution of high-dimensional partial differential equations. Found. Comput. Math. 16, 1423–1472 (2016)

C. Battaglino, G. Ballard, T.G. Kolda, A practical randomized CP tensor decomposition (2017). arXiv: 1701.06600

G. Beylkin, M.J. Mohlenkamp, Algorithms for numerical analysis in high dimensions. SIAM J. Sci. Comput. 26(6), 2133–2159 (2005)

G. Beylkin, J. Garcke, M.J. Mohlenkamp, Multivariate regression and machine learning with sums of separable functions. SIAM J. Sci. Comput. 31(3), 1840–1857 (2009)

M. Bieri, C. Schwab, Sparse high order FEM for elliptic SPDEs. Comput. Methods Appl. Mech. Eng. 198, 1149–1170 (2009)

G.A. Bird, Molecular Gas Dynamics and Direct Numerical Simulation of Gas Flows (Clarendon Press, Oxford, 1994)

V.V. Bolotin, Statistical Methods in Structural Mechanics (Holden-Day, San Francisco, 1969)

H.J. Bungartz, M. Griebel, Sparse grids. Acta Numer. 13, 147–269 (2004)

Y. Cao, Z. Chen, M. Gunzburger, ANOVA expansions and efficient sampling methods for parameter dependent nonlinear PDEs. Int. J. Numer. Anal. Model. 6, 256–273 (2009)

C. Cercignani, The Boltzmann Equation and Its Applications (Springer, New York, 1988)

C. Cercignani, U.I. Gerasimenko, D.Y. Petrina, Many Particle Dynamics and Kinetic Equations, 1st edn. (Kluwer Academic Publishers, Dordrecht, 1997)

Y. Chen, D. Han, L. Qi, New ALS methods with extrapolating search directions and optimal step size for complex-valued tensor decompositions. IEEE Trans. Signal Process. 59, 5888–5898 (2011)

Y. Cheng, I.M. Gamba, A. Majorana, C.W. Shu, A discontinuous Galerkin solver for Boltzmann-Poisson systems in nano devices. Comput. Methods Appl. Mech. Eng. 198, 3130–3150 (2009)

Y. Cheng, I.M. Gamba, A. Majorana, C.W. Shu, A brief survey of the discontinuous Galerkin method for the Boltzmann-Poisson equations. SEMA J. 54, 47–64 (2011)

F. Chinesta, A. Ammar, E. Cueto, Recent advances and new challenges in the use of the proper generalized decomposition for solving multidimensional models. Comput. Methods. Appl. Mech. Eng. 17(4), 327–350 (2010)

H. Cho, D. Venturi, G.E. Karniadakis, Adaptive discontinuous Galerkin method for response-excitation PDF equations. SIAM J. Sci. Comput. 35(4), B890–B911 (2013)

H. Cho, D. Venturi, G.E. Karniadakis, Statistical analysis and simulation of random shocks in Burgers equation. Proc. R. Soc. A 260, 20140080(1–21) (2014)

H. Cho, D. Venturi, G.E. Karniadakis, Numerical methods for high-dimensional probability density function equations. J. Comput. Phys. 305, 817–837 (2016)

P. Comon, X. Luciani, A.L.F. de Almeida, Tensor decompositions, alternating least squares and other tales. J. Chemom. 23, 393–405 (2009)

S.V. Dolgov, B.N. Khoromskij, I.V. Oseledets, Fast solution of parabolic problems in the tensor train/quantized tensor train format with initial application to the Fokker-Planck equation. SIAM J. Sci. Comput. 34(6), A3016–A3038 (2012)

S.V. Dolgov, A.P. Smirnov, E.E. Tyrtyshnikov, Low-rank approximation in the numerical modeling of the Farley-Buneman instability in ionospheric plasma. J. Comput. Phys. 263, 268–282 (2014)

J. Dominy, D. Venturi, Duality and conditional expectations in the Nakajima-Mori-Zwanzig formulation. J. Math. Phys. 58, 082701(1–26) (2017)

A. Doostan, G. Iaccarino, A least-squares approximation of partial differential equations with high-dimensional random inputs. J. Comput. Phys. 228(12), 4332–4345 (2009)

B.G. Dostupov, V.S. Pugachev, The equation for the integral of a system of ordinary differential equations containing random parameters. Automatika i Telemekhanica (in Russian) 18, 620–630 (1957)

V. Ehrlacher, D. Lombardi, A dynamical adaptive tensor method for the Vlasov-Poisson system. J. Comput. Phys. 339, 285–306 (2017)

M. Espig, W. Hackbusch, A regularized Newton method for the efficient approximation of tensors represented in the canonical tensor format. Numer. Math. 122, 489–525 (2012)

M. Espig, W. Hackbusch, A. Litvinenko, H.G. Matthies, P. Wähnert, Efficient low-rank approximation of the stochastic Galerkin matrix in tensor formats. Comput. Math. Appl. 67(4), 818–829 (2014)

A. Fiasconaro, B. Spagnolo, A. Ochab-Marcinek, E. Gudowska-Nowak, Co-occurrence of resonant activation and noise-enhanced stability in a model of cancer growth in the presence of immune response. Phys. Rev. E 74(4), 041904 (2006)

F. Filbet, G. Russo, High-order numerical methods for the space non-homogeneous Boltzmann equations. J. Comput. Phys. 186, 457–480 (2003)

J. Foo, G.E. Karniadakis, Multi-element probabilistic collocation method in high dimensions. J. Comput. Phys. 229, 1536–1557 (2010)

R.O. Fox, Computational Models for Turbulent Reactive Flows (Cambridge University Press, Cambridge, 2003)

S. Friedland, V. Mehrmann, R. Pajarola, S.K. Suter, On best rank one approximation of tensors. Numer. Linear Algebra Appl. 20, 942–955 (2013)

U. Frisch, Turbulence: the legacy of A. N. Kolmogorov (Cambridge University Press, Cambridge, 1995)

Z. Gao, J.S. Hesthaven, On ANOVA expansions and strategies for choosing the anchor point. Appl. Math. Comput. 217(7), 3274–3285 (2010)

J. Garcke, M. Griebel, Sparse Grids and Applications (Springer, Berlin, 2013)

V. Gradinaru, Fourier transform on sparse grids: code design and the time dependent Schrödinger equation. Computing 80(1), 1–22 (2007)

L. Grasedyck, Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31, 2029–2054 (2010)

L. Grasedyck, D. Kressner, C. Tobler, A literature survey of low-rank tensor approximation techniques. GAMM Mitteilungen 36(1), 53–78 (2013)

M. Griebel, Sparse grids and related approximation schemes for higher dimensional problems, in Foundations of Computational Mathematics Santander 2005, vol. 331, ed. by L.M. Pardo, A. Pinkus, E. Süli, M.J. Todd (Cambridge University Press, Cambridge, 2006), pp. 106–161

M. Griebel, G. Zumbusch, Adaptive sparse grids for hyperbolic conservation laws, in Hyperbolic Problems: Theory, Numerics, Applications (Springer, Berlin, 1999), pp. 411–422

W. Guo, Y. Cheng, An adaptive multiresolution discontinuous Galerkin method for time-dependent transport equations in multi-dimensions. SIAM J. Sci. Comput. 38(6), 1–29 (2016)

W. Hackbusch, B.N. Khoromskij, Tensor-product approximation to multidimensional integral operators and Green’s functions. SIAM J. Matrix Anal. Appl. 30(3), 1233–1253 (2008)

E. Hairer, C. Lubich, G. Wanner, Geometric numerical integration illustrated by the Störmer-Verlet method. Acta Numer. 12, 399–450 (2003)

D.R. Hatch, D. del Castillo-Negrete, P.W. Terry, Analysis and compression of six-dimensional gyrokinetic datasets using higher order singular value decomposition. J. Comput. Phys. 22, 4234–4256 (2012)

J.S. Hesthaven, S. Gottlieb, D. Gottlieb, Spectral Methods for Time-Dependent Problems (Cambridge University Press, Cambridge, 2007)

I. Ibragimov, S. Rjasanow, Three way decomposition for the Boltzmann equation. J. Comput. Math. 27, 184–195 (2009)

T. Jahnke, W. Huisinga, A dynamical low-rank approach to the chemical master equation. Bull. Math. Biol. 70, 2283–2302 (2008)

R.P. Kanwal, Generalized Functions: Theory and Technique, 2nd edn. (Birkhäuser, Boston, 1998)

A. Karimi, M.R. Paul, Extensive chaos in the Lorenz-96 model. Chaos 20(4), 043105(1–11) (2010)

L. Karlsson, D. Kressner, A. Uschmajew, Parallel algorithms for tensor completion in the CP format. Parallel Comput. 57, 222–234 (2016)

V.A. Kazeev, E.E. Tyrtyshnikov, Structure of the Hessian matrix and an economical implementation of Newton’s method in the problem of canonical approximation of tensors. Comput. Math. Math. Phys. 50, 927–945 (2010)

V. Kazeev, M. Khammash, M. Nip, C. Schwab, Direct solution of the chemical master equation using quantized tensor trains. Semin. Appl. Math. 2013-04, 2283–2302 (2013)

B.N. Khoromskij, Structured data-sparse approximation to high order tensors arising from the deterministic Boltzmann equation. Math. Comput. 76(259), 1291–1315 (2007)

B.N. Khoromskij, I.V. Oseledets, Quantics-TT collocation approximation of parameter-dependent and stochastic elliptic PDEs. Comput. Methods Appl. Math. 10(4), 376–394 (2010)

V.I. Klyatskin, Dynamics of Stochastic Systems (Elsevier, Amsterdam, 2005)

K. Kormann, A semi-lagrangian Vlasov solver in tensor train format. SIAM J. Sci. Comput. 37(4), B613–B632 (2015)

G. Leonenko, T. Phillips, On the solution of the Fokker-Planck equation using a high-order reduced basis approximation. Comput. Methods Appl. Mech. Eng. 199(1-4), 158–168 (2009)

J. Li, J.B. Chen, Stochastic Dynamics of Structures (Wiley, Singapore, 2009)

G. Li, S.W. Wang, H. Rabitz, S. Wang, P. Jaffé, Global uncertainty assessments by high dimensional model representations (HDMR). Chem. Eng. Sci. 57(21), 4445–4460 (2002)

E.N. Lorenz, Predictability - a problem partly solved, in ECMWF Seminar on Predictability, Reading, vol. 1 (1996), pp. 1–18

D. Lucor, C.H. Su, G.E. Karniadakis, Generalized polynomial chaos and random oscillators. Int. J. Numer. Methods Eng. 60(3), 571–596 (2004)

X. Ma, N. Zabaras, An adaptive hierarchical sparse grid collocation method for the solution of stochastic differential equations. J. Comput. Phys. 228, 3084–3113 (2009)

X. Ma, N. Zabaras, An adaptive high-dimensional stochastic model representation technique for the solution of stochastic partial differential equations. J. Comput. Phys. 229, 3884–3915 (2010)

A.N. Malakhov, A.I. Saichev, Kinetic equations in the theory of random waves. Radiophys. Quantum Electron. 17(5), 526–534 (1974)

G.D. Marco, L. Pareschi, Numerical methods for kinetic equations. Acta Numer. 23, 369–520 (2014)

P. Markovich, C. Ringhofer, C. Schmeiser, Semiconductor Equations (Springer, Berlin, 1989)

H.G. Matthies, E. Zander, Solving stochastic systems with low-rank tensor compression. Linear Algebra Appl. 436(10), 3819–3838 (2012)

M.J. Mohlenkamp, Musings on multilinear fitting. Linear Algebra Appl. 438, 834–852 (2013)

A.S. Monin, A.M. Yaglom, Statistical Fluid Mechanics, vol. I (Dover, Mineola, 2007)

A.S. Monin, A.M. Yaglom, Statistical Fluid Mechanics, vol. II (Dover, Mineola, 2007)

D. Montgomery, A BBGKY framework for fluid turbulence. Phys. Fluids 19(6), 802–810 (1976)

F. Moss, P.V.E. McClintock (eds.), Noise in Nonlinear Dynamical Systems. Volume 1: Theory of Continuous Fokker-Planck Systems (Cambridge University Press, Cambridge, 1995)

F. Moss, P.V.E. McClintock (eds.), Noise in Nonlinear Dynamical Systems. Volume 2: Theory of Noise Induced Processes in Special Applications (Cambridge University Press, Cambridge, 1995)

F. Moss, P.V.E. McClintock (eds.), Noise in Nonlinear Dynamical Systems. Volume 3: Experiments and Simulations (Cambridge University Press, Cambridge, 1995)

M. Muradoglu, P. Jenny, S.B. Pope, D.A. Caughey, A consistent hybrid finite-volume/particle method for the PDF equations of turbulent reactive flows. J. Comput. Phys. 154, 342–371 (1999)

F. Nobile, R. Tempone, C. Webster, A sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal. 46(5), 2309–2345 (2008)

A. Nouy, A priori model reduction through proper generalized decomposition for solving time-dependent partial differential equations. Comput. Methods Appl. Mech. Eng. 199(23-24), 1603–1626 (2010)

A. Nouy, Proper generalized decompositions and separated representations for the numerical solution of high dimensional stochastic problems. Comput. Methods Appl. Mech. Eng. 17, 403–434 (2010)

A. Nouy, Low-rank tensor methods for model order reduction, in Handbook of Uncertainty Quantification (Springer International Publishing, Berlin, 2016), pp. 1–26

A. Nouy, Higher-order principal component analysis for the approximation of tensors in tree-based low rank formats. 1–43 (2017). arXiv:1705.00880

A. Nouy, O.P.L. Maître, Generalized spectral decomposition for stochastic nonlinear problems. J. Comput. Phys. 228, 202–235 (2009)

E. Novak, K. Ritter, Simple cubature formulas with high polynomial exactness. Constr. Approx. 15, 499–522 (1999)

D. Nozaki, D.J. Mar, P. Grigg, J.J. Collins, Effects of colored noise on stochastic resonance in sensory neurons. Phys. Rev. Lett. 82(11), 2402–2405 (1999)

I.V. Oseledets, Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

A.H. Phan, P. Tichavský, A. Cichocki, CANDECOMP/PARAFAC decomposition of high-order tensors through tensor reshaping. IEEE Trans. Signal Process. 61, 4847–4860 (2013)

A.H. Phan, P. Tichavský, A. Cichocki, Low complexity damped Gauss-Newton algorithms for CANDECOMP/ PARAFAC. SIAM J. Matrix Anal. Appl. 34, 126–147 (2013)

S.B. Pope, A Monte Carlo method for the PDF equations of turbulent reactive flow. Combust. Sci. Technol. 25, 159–174 (1981)

S.B. Pope, Lagrangian PDF methods for turbulent flows. Annu. Rev. Fluid Mech. 26, 23–63 (1994)

S.B. Pope, Simple models of turbulent flows. Phys. Fluids 23(1), 011301(1–20) (2011)

H. Rabitz, Ö.F. Aliş, J. Shorter, K. Shim, Efficient input–output model representations. Comput. Phys. Commun. 117(1-2), 11–20 (1999)

M. Rajih, P. Comon, R.A. Harshman, Enhanced line search: a novel method to accelerate PARAFAC. SIAM J. Matrix Anal. Appl. 30, 1128–1147 (2008)

M. Reynolds, G. Beylkin, A. Doostan, Optimization via separated representations and the canonical tensor decomposition. J. Comput. Phys. 348(1), 220–230 (2016)

H.K. Rhee, R. Aris, N.R. Amundson, First-Order Partial Differential Equations. Volume 1: Theory and Applications of Single Equations (Dover, New York, 2001)

H. Risken, The Fokker-Planck Equation: Methods of Solution and Applications (Springer, Berlin, 1989)

S. Rjasanow, W. Wagner, Stochastic Numerics for the Boltzmann Equation (Springer, Berlin, 2004)

A. Saltelli, K. Chan, M. Scott, Sensitivity Analysis (Wiley, New York, 2000)

C. Schwab, E. Süli, R.A. Todor, Sparse finite element approximation of high-dimensional transport-dominated diffusion problems. ESAIM: Math. Model. Numer. Anal. 42, 777–819 (2008)

M.F. Shlesinger, T. Swean, Stochastically Excited Nonlinear Ocean Structures (World Scientific, Singapore, 1998)

R. Shu, J. Hu, S. Jin, A stochastic Galerkin method for the Boltzmann equation with multi-dimensional random inputs using sparse wavelet bases. Numer. Math. Theor. Methods Appl. 10(2), 465–488 (2017)

S. Smolyak, Quadrature and interpolation formulas for tensor products of certain classes of functions. Sov. Math. Dokl. 4, 240–243 (1963)

K. Sobczyk, Stochastic Differential Equations: With Applications to Physics and Engineering (Springer, Berlin, 2001)

I.M. Sobol, Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math. Comput. Simul. 55, 271–280 (2001)

H.D. Sterck, A nonlinear GMRES optimization algorithm for canonical tensor decomposition. SIAM J. Sci. Comput. 34, A1351–A1379 (2012)

H.D. Sterck, K. Miller, An adaptive algebraic multigrid algorithm for low-rank canonical tensor decomposition. SIAM J. Sci. Comput. 35, B1–B24 (2012)

R.L. Stratonovich, Some Markov methods in the theory of stochastic processes in nonlinear dynamical systems, in Noise in Nonlinear Dynamical Systems, vol. 1, ed. by F. Moss, P.V.E. McClintock (Cambridge University Press, Cambridge, 1989), pp. 16–68

A. Uschmajew, Local convergence of the alternating least squares algorithm for canonical tensor approximation. SIAM J. Matrix Anal. Appl. 33, 639–652 (2012)

L. Valino, A field Monte Carlo formulation for calculating the probability density function of a single scalar in a turbulent flow. Flow Turbul. Combust. 60(2), 157–172 (1998)

D. Venturi, The numerical approximation of functional differential equations. 1–113 (2016). arXiv: 1604.05250

D. Venturi, G.E. Karniadakis, New evolution equations for the joint response-excitation probability density function of stochastic solutions to first-order nonlinear PDEs. J. Comput. Phys. 231(21), 7450–7474 (2012)

D. Venturi, G.E. Karniadakis, Convolutionless Nakajima-Zwanzig equations for stochastic analysis in nonlinear dynamical systems. Proc. R. Soc. A 470(2166), 1–20 (2014)

D. Venturi, M. Choi, G.E. Karniadakis, Supercritical quasi-conduction states in stochastic Rayleigh-Bénard convection. Int. J. Heat Mass Transfer 55(13–14), 3732–3743 (2012)

D. Venturi, T.P. Sapsis, H. Cho, G.E. Karniadakis, A computable evolution equation for the probability density function of stochastic dynamical systems. Proc. R. Soc. A 468, 759–783 (2012)

C. Villani, A review of mathematical topics in collisional kinetic theory, in Handbook of Mathematical Fluid Mechanics, vol. 1, ed. by S. Friedlander, D. Serre (North-Holland, Amsterdam, 2002), pp. 71–305

Z. Wang, Q. Tang, W. Guo, Y. Cheng, Sparse grid discontinuous Galerkin methods for high-dimensional elliptic equations. J. Comput. Phys. 314, 244–263 (2016)

D. Xiu, Efficient collocational approach for parametric uncertainty analysis. Commun. Comput. Phys. 2(2), 293–309 (2007)

D. Xiu, J. Hesthaven, High-order collocation methods for differential equations with random inputs. SIAM J. Sci. Comput. 27(3), 1118–1139 (2005)

L. Yan, L. Guo, D. Xiu, Stochastic collocation algorithms using ℓ1-minimization. Int. J. Uncertain. Quantif. 2, 279–293 (2012)

Y. Yang, C.W. Shu, Discontinuous Galerkin method for hyperbolic equations involving δ-singularities: negative-order norm error estimate and applications. Numer. Math. 124, 753–781 (2013)

X. Yang, M. Choi, G.E. Karniadakis, Adaptive ANOVA decomposition of stochastic incompressible and compressible fluid flows. J. Comput. Phys. 231, 1587–1614 (2012)

X. Yang, H. Lei, N.A. Baker, G. Lin, Enhancing sparsity of Hermite polynomial expansions by iterative rotations. J. Comput. Phys. 307, 94–109 (2016)

C. Zeng, H. Wang, Colored noise enhanced stability in a tumor cell growth system under immune response. J. Stat. Phys. 141(5), 889–908 (2010)

Z. Zhang, M. Choi, G.E. Karniadakis, Anchor points matter in ANOVA decomposition, in Proceedings of ICOSAHOM’09, ed. by E. Rønquist, J. Hesthaven (Springer, Berlin, 2010)

Z. Zhang, M. Choi, G.E. Karniadakis, Error estimates for the ANOVA method with polynomial chaos interpolation: tensor product functions. SIAM J. Sci. Comput. 34(2), 1165–1186 (2012)

G. Zhou, A. Cichocki, S. Xie, Accelerated canonical polyadic decomposition by using mode reduction. IEEE Trans. Neural Netw. Learn. Syst. 24, 2051–2062 (2013)

R. Zwanzig, Memory effects in irreversible thermodynamics. Phys. Rev. 124, 983–992 (1961)

Acknowledgements

We gratefully acknowledge support from DARPA grant N66001-15-2-4055, ARO grant W991NF-14-1-0425, and AFOSR grant FA9550-16-1-0092.

Copyright information

© 2017 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Cho, H., Venturi, D., Karniadakis, G.E. (2017). Numerical Methods for High-Dimensional Kinetic Equations. In: Jin, S., Pareschi, L. (eds) Uncertainty Quantification for Hyperbolic and Kinetic Equations. SEMA SIMAI Springer Series, vol 14. Springer, Cham. https://doi.org/10.1007/978-3-319-67110-9_3

DOI: https://doi.org/10.1007/978-3-319-67110-9_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-67109-3

Online ISBN: 978-3-319-67110-9

eBook Packages: Mathematics and Statistics (R0)