Abstract

Hierarchical tensors can be regarded as a generalisation, preserving many crucial features, of the singular value decomposition to higher-order tensors. For a given tensor product space, a recursive decomposition of the set of coordinates into a dimension tree gives a hierarchy of nested subspaces and corresponding nested bases. The dimensions of these subspaces yield a notion of multilinear rank. This rank tuple, as well as quasi-optimal low-rank approximations by rank truncation, can be obtained by a hierarchical singular value decomposition. For fixed multilinear ranks, the storage and operation complexity of these hierarchical representations scale only linearly in the order of the tensor. As in the matrix case, the set of hierarchical tensors of a given multilinear rank is not a convex set, but forms an open smooth manifold. A number of techniques for the computation of hierarchical low-rank approximations have been developed, including local optimisation techniques on Riemannian manifolds as well as truncated iteration methods, which can be applied for solving high-dimensional partial differential equations. This article gives a survey of these developments. We also discuss applications to problems in uncertainty quantification, to the solution of the electronic Schrödinger equation in the strongly correlated regime, and to the computation of metastable states in molecular dynamics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The numerical solution of high-dimensional partial differential equations remains one of the most challenging tasks in numerical mathematics. A naive discretisation based on well-established methods for solving PDEs numerically, such as finite differences, finite elements or spectral elements, suffers severely from the so-called curse of dimensionality. This notion refers to the exponential scaling \( \mathscr {O} ( n^d)\) of the computational complexity with respect to the dimension d of the discretised domain. For example, if \(d=10\) and we consider \(n=100\) basis functions in each coordinate direction, this leads to a discretisation space of dimension \(100^{10}\). Even for low-dimensional univariate spaces, e.g. \(n=2\), but with \(d=500\), one has to deal with a space of dimension \(2^{500}\). It is therefore clear that one needs to find additional structures to design tractable methods for such large-scale problems.

Many established methods for large-scale problems rely on the framework of sparse and nonlinear approximation theory in certain dictionaries [40]. These dictionaries are fixed in advance, and their appropriate choice is crucial. Low-rank approximation can be regarded as a related approach, but with the dictionary consisting of general separable functions—going back to one of the oldest ideas in applied mathematics, namely separation of variables. As this dictionary is uncountably infinite, the actual basis functions used for a given problem have to be computed adaptively.

On the level of matrix or bivariate approximation, the singular value decomposition (SVD) provides a tool to find such problem-adapted, separable basis functions. Related concepts underlie model order reduction techniques such as proper orthogonal decompositions and reduced bases [119]. In fact, one might say that low-rank matrix approximation is one of the most versatile concepts in computational sciences. Generalising these principles to higher-order tensors has proven to be a promising, yet nontrivial, way to tackle high-dimensional problems and multivariate functions [17, 65, 87]. This article presents a survey of low-rank tensor techniques from the perspective of hierarchical tensors, and complements former review articles [63, 67, 70, 87] with novel aspects. A more detailed review of tensor networks for signal processing and big data applications, with detailed explanations and visualisations for all prominent low-rank tensor formats can be found in [27]. For an exhaustive treatment, we also recommend the monograph [65].

Regarding low-rank decomposition, the transition from linear to multilinear algebra is not as straightforward and harmless as one might expect. The canonical polyadic format [76] represents a tensor \({\mathbf {u}}\) of order d as a sum of elementary tensor products, or rank-one tensors,

with \( {\mathbf {C}}^\mu _k \in {\mathbb {R}}^{n_\mu }\). For tensors of order two, the CP format simply represents the factorisation of a rank-r matrix, and therefore is a natural representation for higher-order tensors as well. Correspondingly, the minimal r required in (1.1) is called the canonical rank of \({\mathbf {u}}\).

If r is small, the CP representation (1.1) is extremely data-sparse. From the perspective of numerical analysis, however, it turns out to have several disadvantages in case \(d > 2\). For example, the set of tensors of canonical rank at most r is not closed [127]. This is reflected by the fact that for most optimisation problems involving tensors of low CP rank, no robust methods exist. For further results concerning difficulties with the CP representation and rank of higher-order tensors, we refer to [65, 75, 127, 136], and highlight the concise overview [100]. Many of these issues have also been investigated from the perspective of algebraic geometry, see the monograph [95].

The present article is intended to provide an introduction and a survey of a somehow alternative route. Instead of directly extending matrices techniques to analogous notions for tensors, the strategy here is to reduce questions of tensor approximation to matrix analysis. This can be accomplished by the hierarchical tensor (HT) format, introduced by Hackbusch and Kühn [69], and the tensor train (TT) format, developed by Oseledets and Tyrtyshnikov [110, 111, 113, 114]. They provide alternative data-sparse tensor decompositions with stability properties comparable to the SVD in the matrix case, and can be regarded as multi-level versions of the Tucker format [87, 133]. Whereas the data complexity of the Tucker format intrinsically suffers from an exponential scaling with respect to dimensionality, the HT and TT format have the potential of bringing this down to a linear scaling, as long as the ranks are moderate. This compromise between numerical stability and potential data sparsity makes the HT and TT formats promising model classes for representing and approximating tensors.

However, circumventing the curse of dimensionality by introducing a nonlinear (here: multilinear) parameterisation comes at the price of introducing a curse of nonlinearity, or more precisely, a curse of non-convexity. Our model class of low-rank hierarchical tensors is no longer a linear space nor a convex set. Therefore, it becomes notoriously difficult to find globally optimal solutions to approximation problems, and first-order optimality conditions remain local. In principle, the explicit multilinear representation of hierarchical tensors is amenable to block optimisation techniques like variants of the celebrated alternating least squares method, e.g. [17, 25, 34, 38, 44, 72, 78, 87, 91, 112, 132, 146], but their convergence analysis is typically a challenging task as the multilinear structure does not meet classical textbook assumptions on block optimisation. Another class of local optimisation algorithms can be designed using the fact that, at least for fixed rank parameters, the model class is a smooth embedded manifold in tensor space, and explicit descriptions of its tangent space are available [4, 35, 71, 79, 86, 89, 104, 105, 138, 139]. However, here one his facing the technical difficulty that this manifold is not closed: its closure only constitutes an algebraic variety [123].

An important tool available for hierarchical tensor representation is the hierarchical singular value decomposition (HSVD) [60], as it can be used to find a quasi-best low-rank approximation using only matrix procedures with full error control. The HSVD is an extension of the higher-order singular value decomposition [39] to different types of hierarchical tensor models, including TT [65, 109, 113]. This enables the construction of iterative methods based on low-rank truncations of iterates, such as tensor variants of iterative singular value thresholding algorithms [7, 10, 18, 68, 85, 90].

Historically, the parameterisation in a hierarchical tensor framework has evolved independently in the quantum physics community, in the form of renormalisation group ideas [55, 142], and more explicitly in the framework of matrix product and tensor network states [124], including the HSVD for matrix product states [140]. A further independent source of such developments can also be found in quantum dynamics, with the multilayer multi-configurational time-dependent Hartree (MCTDH) method [15, 102, 141]. We refer the interested reader to the survey articles [63, 99, 130] and to the monograph [65].

Although the resulting tensor representations have been used in different contexts, the perspective of hierarchical subspace approximation in [69] and [65] seems to be completely new. Here, we would like to outline how this concept enables one to overcome most of the difficulties with the parameterisation by the canonical format. Most of the important properties of hierarchical tensors can easily be deduced from the underlying very basic definitions. For a more detailed analysis, we refer to the respective original papers. We do not aim to give a complete treatment, but rather to demonstrate the potential of hierarchical low-rank tensor representations from their basic principles. They provide a universal and versatile tool, with basic algorithms that are relatively simple to implement (requiring only basic linear algebra operations) and easily adaptable to various different settings.

An application of hierarchical tensors of particular interest, on which we focus here, is the treatment of high-dimensional partial differential equations. In this article, we will consider three major examples in further detail: PDEs depending on countably many parameters, which arise in particular in deterministic formulations of stochastic problems; the many-particle Schrödinger equation in quantum physics; and the Fokker–Planck equation describing a mechanical system in a stochastic environment. A further example of an application of practical importance are chemical master equations, for which we refer to [41, 42].

This article is arranged as follows: Sect. 2 covers basic notions of low-rank expansions and tensor networks. In Sect. 3 we consider subspace-based representations and basic properties of hierarchical tensor representations, which play a role in the algorithms using fixed hierarchical ranks discussed in Sect. 4. In Sect. 5, we turn to questions of convergence of hierarchical tensor approximations with respect to the ranks, and consider thresholding algorithms operating on representations of variable ranks in Sect. 6. Finally, in Sect. 7, we describe in more detail the mentioned applications to high-dimensional PDEs.

2 Tensor Product Parameterisation

In this section, we consider basic notions of low-rank tensor formats and tensor networks and how linear algebra operations can be carried out on such representations.

2.1 Tensor Product Spaces and Multivariate Functions

We start with some preliminaries. In this paper, we consider the d-fold topological tensor product

of separable \({\mathbb {K}}\)-Hilbert spaces \({\mathscr {V}}_1,\dots ,{\mathscr {V}}_d\). For concreteness, we will focus on the real field \({\mathbb {K}} = {\mathbb {R}}\), although many parts are easy to extend to the complex field \({\mathbb {K}} = {\mathbb {C}}\). The confinement to Hilbert spaces constitutes a certain restriction, but still covers a broad range of applications. The topological difficulties that arise in a general Banach space setting are beyond the scope of the present paper, see [52, 65]. Avoiding them allows us to put clearer focus on the numerical aspect of tensor product approximation.

We do not give the precise definition of the topological tensor product of Hilbert spaces in (2.1) (see, e.g. [65]), but only recall the properties necessary for our later purposes. Let \(n_\mu \in {\mathbb {N}} \cup \{\infty \}\) be the dimension of \({\mathscr {V}}_\mu \). We set

and \({\mathscr {I}} = {\mathscr {I}}_1 \times \cdots \times {\mathscr {I}}_d\). Fixing an orthonormal basis \(\{ {\mathbf {e}}^\mu _{i_\mu } {:} i_\mu \in {\mathscr {I}}_\mu \}\) for each \({\mathscr {V}}_\mu \), we obtain a unitary isomorphism \(\varphi ^\mu :\ell ^2({\mathscr {I}}_\mu ) \rightarrow {\mathscr {V}}_\mu \) by

Then \(\{ {\mathbf {e}}^1_{i_1} \otimes \dots \otimes {\mathbf {e}}^d_{i_d}:i_1, \ldots ,i_d \in {\mathscr {I}} \} \) is an orthonormal basis of \({\mathscr {V}}\), and

is a unitary isomorphism from \(\ell ^2({\mathscr {I}})\) to \({\mathscr {V}}\).

Such a fixed choice of orthonormal basis allows us to identify the elements of \({\mathscr {V}}\) with their coefficient tensors \({\mathbf {u}} \in \ell ^2({\mathscr {I}})\),

often called hypermatrices, depending on discrete variables \(i_\mu \), usually called indices.

In conclusion, we will focus in the following on the space \(\ell ^2({\mathscr {I}})\), which is itself a tensor product of Hilbert spaces, namely,

The corresponding multilinear tensor product map of d univariate \(\ell ^2\)-functions is defined pointwise as \( ( {\mathbf {u}}^1 \otimes \dots \otimes {\mathbf {u}}^d )(i_1,\dots ,i_d) = {\mathbf {u}}^1(i_1) \cdots {\mathbf {u}}^d(i_d)\). Tensors of this form are called elementary tensors or rank-one tensors. Also the terminology decomposable tensors is used in differential geometry.

Let \( n= \max \{n_\mu {:} \mu =1, \ldots , d \} \) be the maximum dimension among the \({\mathscr {V}}_\mu \). Then the number of possibly nonzero entries in a pointwise representation (2.3) of \(\mathbf {u} \) is \( n_1 \cdots n_d = {\mathscr {O}} (n^d) \). This exponential scaling with respect to d is one aspect of what is referred to as curse of dimensionality, and poses a common challenge for the discretisation of the previously mentioned examples of high-dimensional PDEs. In the present paper we consider methods that aim to circumvent this core issue of high-dimensional problems using low-rank tensor decomposition. In very abstract terms, all low-rank tensor decompositions considered below ultimately decompose the tensor \({\mathbf {u}} \in \ell ^2({\mathscr {I}})\) such that

where \(\tau {:} {\mathscr {W}} {:=} {\mathscr {W}}_1 \times \dots {\mathscr {W}}_d \times {\mathscr {W}}_{d+1} \times \dots \times {\mathscr {W}}_D \rightarrow {\mathbb {R}}\) is multilinear on a Cartesian product of vector spaces \({\mathscr {W}}_\nu \), \(\nu =1,\dots ,D\). The choice of these vector spaces and the map \(\tau \) determines the format, and the tensors in its range are considered as “low-rank.” An example is the CP representation (1.1).

Remark 2.1

Since \(\varPhi \) is multilinear as well, we obtain representations of the very same multilinear structure (2.5) for the corresponding elements of \({\mathscr {V}}\),

For instance, if \({\mathscr {V}}\) is a function space on a tensor product domain \(\varOmega =\varOmega _1\times \cdots \times \varOmega _d\) on which point evaluation is defined, and \(({\mathbf {e}}^1 \otimes \cdots \otimes {\mathbf {e}}^d)(x_1,\dots ,x_d) = {\mathbf {e}}^1(x_1) \cdots {\mathbf {e}}^d(x_d)\) for \(x \in \varOmega \), then formally (dispensing for the moment with possible convergence issues), exploiting the multilinearity properties, we obtain

and the same applies to other tensor product functionals on \({\mathscr {V}}\). Since in the present case of Hilbert spaces (2.1), the identification with \(\ell ^2({\mathscr {I}})\) via \(\varPhi \) thus also preserves the considered low-rank structures, we exclusively work on basis representations in \(\ell ^2({\mathscr {I}})\) in what follows.

2.2 The Canonical Tensor Format

The canonical tensor format, also called CP (canonical polyadic) decomposition, CANDECOMP or PARAFAC, represents a tensor of order d as a sum of elementary tensor products \( {\mathbf {u}} = \sum _{k=1}^{r} \mathbf{c}_k^1 \otimes \cdots \otimes \mathbf{c}^d_k, \) that is

with \({\mathbf {c}}^\mu _k = \mathbf {C}^\mu (\cdot ,k) \in \ell ^2 ({\mathscr {I}}_\mu ) \) [25, 72, 76]. The minimal r such that such a decomposition exists is called the canonical rank (or simply rank) of the tensor \(\mathbf {u}\). It can be infinite.

Depending on the rank, the representation in the canonical tensor format has a potentially extremely low complexity. Namely, it requires at most r d n nonzero entries, where \(n = \max \left|{\mathscr {I}}_\mu \right|\). Another key feature (in the case \(d >2\)) is that the decomposition (2.6) is essentially unique under relatively mild conditions (assuming that r equals the rank). This property is a main reason for the prominent role that the canonical tensor decomposition plays in signal processing and data analysis, see [28, 87, 93] and references therein.

In view of the applications to high-dimensional partial differential equations, one can observe that the involved operator can usually be represented in the form of a canonical tensor operator, and the right-hand side is also very often of the form above. This implies that the operator and the right-hand sides can be stored in this data-sparse representation. This motivates the basic assumption in numerical tensor calculus that all input data can be represented in a sparse tensor form. Then there is a reasonable hope that the solution of such a high-dimensional PDE might also be approximated by a tensor in the canonical tensor format with moderate r. The precise justification for this hope is subject to ongoing research (see [36] for a recent approach and further references), but many known numerical solutions obtained by tensor product ansatz functions such as (trigonometric) polynomials, sparse grids, or Gaussian kernels are in fact low-rank approximations, mostly in the canonical format. However, the key idea in nonlinear low-rank approximation is to not fix possible basis functions in (2.6) in advance. Then we have an extremely large library of functions at our disposal. Motivated by the seminal papers [16, 17], we will pursue this idea throughout the present article.

From a theoretical viewpoint, the canonical tensor representation (2.6) may be seen a straightforward generalisation of low-rank matrix representation, and coincides with it when \(d=2\). As it turns out, however, the parameterisation of tensors via the canonical representation (2.6) is not as harmless as it seems to be. For example, for \(d>2\), the following difficulties appear:

-

The canonical tensor rank is (in case of finite-dimensional spaces) NP-hard to compute [75].

-

The set of tensors of the above form with canonical rank at most r is not closed when \(r \ge 2\) (border rank problem). As a consequence, a best approximation of a tensor by one of smaller canonical rank may not exist; see [127]. This is in strong contrast to the matrix case \(d=2\), see Sect. 3.1.

-

In fact, the set of tensors of rank at most r does not form an algebraic variety [95].

Further surprising and fascinating difficulties with the canonical tensor rank in case \(d>2\) with references are listed in [87, 94, 100, 136]. Deep results of algebraic geometry have been invoked for the investigation of these problems, see the monograph [95] for the state of the art. The problem of non-closedness can often be mitigated by imposing further conditions such as symmetry [95], nonnegativity [101] or norm bounds on factors [127].

In this paper we show a way to avoid all these difficulties by considering another type of low-rank representation, namely the hierarchical tensor representation [65], but at the price of a slightly higher computational and conceptual complexity. Roughly speaking, the principle of hierarchical tensor representations is to reduce the treatment of higher-order tensors to matrix analysis.

2.3 Tensor Networks

For fixed r, the canonical tensor format (2.6) is multilinear with respect to every matrix \({\mathbf {C}}^\mu {:=} \bigl ({\mathbf {C}}^\mu (i,k)\bigr )_{{i\in {\mathscr {I}}_\mu ,\,k=1,\ldots ,r}}\). A generalised concept of low-rank tensor formats is obtained by considering classes of tensors which are images of more general multilinear parameterisations. A very general form is

with an arbitrary number D of components \({\mathbf {C}}^\nu (i_1,\dots ,i_d, k_1, \ldots , k_E)\) that potentially depend on all variable \(i_1,\dots ,i_d\) and additional contraction variables \(k_1,\dots ,k_E\). For clarity, we will call the indices \(i_\mu \) physical variables. Again, we can regard \({\mathbf {u}}\) as elements of the image of a multilinear map \(\tau _{\mathfrak {r}}\),

parametrising a certain class of “low-rank” tensors. By \(\mathfrak {r} = (r_1,\dots ,r_E)\) we indicate that this map \(\tau \) depends on the representation ranks \(r_1,\dots ,r_E\).

The disadvantage compared to the canonical format is that the component tensors have order \(e_\nu \) instead of 2, where \(1 \le e_\nu \le d+E\) is the number of (contraction and physical) variables in \({\mathbf {C}}^\nu \) which are actually active.Footnote 1 In cases of interest introduced below (like the HT or TT format), this number is small, say at most three. More precisely, let p and q be bounds for the number of active contraction variables, and q a bound for the number of active physical variables per component. Let also \(n_\mu \le n\) for all \(\mu \), and \(r_\eta \le r\) for all \(\eta \), the data complexity of the format (2.7) is bounded by \( D n^q r^{p}\). Computationally efficient representations of multivariate functions arise by bounding p, q and r.

Without further restriction, the low-rank formats (2.7) form a too general class. An extremely useful subclass are tensor networks.

Definition 2.2

We call the multilinear parameterization (2.7) a tensor network, if

-

(i)

each physical variable \(i_\mu \), \(\mu =1, \ldots , d\), is active in exactly one component \({\mathbf {C}}^\nu \);

-

(ii)

each contraction variable \(k_\eta \), \(\eta = 1,\dots ,E\), is active in precisely two components \({\mathbf {C}}^\nu \), \(1 \le \nu \le D\),

see also [49, 124] and the references given there.

We note that the canonical tensor format (2.6) is not a tensor network, since the contraction variable k relates to all physical variables \(i_\mu \).

The main feature of a tensor network is that it can be visualised as a graph with D nodes, representing the components \({\mathbf {C}}^\nu \), \(\nu = 1,\dots ,D\), and E edges connecting those nodes with common active contraction variable \(k_\eta \), \(\eta = 1,\dots ,E\). In this way, the edges represent the summations over the corresponding contraction variables in (2.7). Among all nodes, the ones in which a physical variable \(i_\mu \), \(\mu = 1,\dots ,d\), is active play a special role and get an additional label, which in our pictures will be depicted by an additional open edge connected to the node. The graphical visualisation of tensor networks is a useful and versatile tool for describing decompositions of multivariate functions (tensors) into nested summations over contraction variables. This will be illustrated in the remainder of this section.

Plain vectors, matrices and higher-order tensors are trivial examples of tensor networks, since they contain no contraction variable at all. A vector \(i \mapsto \mathbf {u}(i)\) is a node with single edge i, a matrix \((i_1,i_2) \mapsto \mathbf {u}(i_1,i_2)\) is a node with two edges \(i_1,i_2\), and a d-th-order tensor is a node with d edges connected to it:

The simplest nontrivial examples of tensor networks are given by low-rank matrix decompositions like \(\mathbf {A} = \mathbf {U} \mathbf {V}^T\) or \(\mathbf {A} = \mathbf {U} \varvec{\varSigma } \mathbf {V}^T\), the latter containing a node with no physical variable:

Note that these graphs do not show which physical variables belong to which open edge. To emphasise a concrete choice one has to attach the labels \(i_\mu \) explicitly. Let us consider a more detailed example, in which we also attach contraction variables to the edges:

The graph on the right side represents the tensor network

Note that node \(\mathbf {C}^3\) depends on no physical variable, while \(\mathbf {C}^4\) depends on two. The sums have been nested to illustrate how the contractions are performed efficiently in practice by following a path in the graph.

As a further example, we illustrate how to contract and decontract a tensor of order \(d=4\) by a rank-r matrix decomposition that separates physical variables \((i_1,i_2)\) from \((i_3,i_4)\), using, e.g. SVD:

Diagrammatic representations of a similar kind are also common in quantum physics for keeping track of summations, for instance Feynman and Goldstein diagrams.

Remark 2.3

The representation of tensor networks by graphs can be formalised using the following definition as an alternative to Definition 2.2. A tensor network is a tuple \((G,E,\iota ,\mathfrak {r})\), where (G, E) is a connected graph with nodes G and edges E, \(\iota {:} \{1,\dots ,d\} \rightarrow G\) is an assignment of physical variable, and \(\mathfrak {r} {:} E \rightarrow {\mathbb {N}}\) are weights on the edges indicating the representation ranks for the contraction variables.

Remark 2.4

Tensor networks are related to statistical networks such as hidden Markov models, Bayesian belief networks, latent tree models, and sum-product networks. However, due to the probabilistic interpretation the components need to satisfy further constraints to ensure nonnegativity and appropriate normalisation. For further details on latent tree networks, we refer the reader to the recent monograph [149].

2.4 Tree Tensor Networks

The main subject of this article are tensor networks of a special type, namely those with a tree structure.

Definition 2.5

A tensor network is called a tree tensor network if its graph is a tree, that is, contains no loops.

Among the general tensor networks, the tree tensor networks have favourable topological properties that make them more amenable to numerical utilisation. For instance, tensors representable in tree networks with fixed rank bounds \(r_\nu \) form closed sets, and the ranks have clear interpretation as matrix ranks, as will be explained in Sect. 3. In contrast, it has been shown that the set of tensors represented by a tensor network whose graph has closed loops is not closed in the Zariski sense [96]. In fact, there is no evidence that the more general tensor network parameterisation with loops do not suffer from similar problems as the canonical tensor format, which is not even a tensor network. In the following, we therefore restrict ourselves to tree tensor networks.

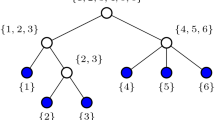

Some of the most frequent examples of tree tensor networks are the Tucker, hierarchical Tucker (HT), and tensor train (TT) formats. In case \(d=4\), they are represented by the tensor networks depicted in Fig. 1 and will be treated in detail in Sect. 3. By allowing large enough representation ranks, it is always possible to represent a d-th-order tensor in any of these formats, but the required values \(r = \max {r_\eta }\) can differ substantially depending on the choice of format. Let again p be a bound for the number of connected (active) contraction variables for a node, and \(n = \max n_\mu \). The three mentioned formats have storage complexity \(O(dnr^p)\). A potential disadvantage of the Tucker format is that \(p = d\), which implies a curse of dimension for large d. In contrast, \(p = 3\) in HT, and \(p=2\) in HT.

2.5 Linear Algebra Operations

For two tensors given in the same tree tensor network representation it is easy to perform standard linear algebra operations, such as summation, Hadamard (pointwise) product, and inner products. Also the application of a linear operator to such a tensor can be performed in this representation if the operator is in a “compatible” form.

For instance, a matrix-vector product \({\mathbf {b}} = {\mathbf {A}} {\mathbf {u}}\) results in a vector, and is obtained as a single contraction \({\mathbf {b}}(i) = \sum _{k=1}^n {\mathbf {A}}(i,k) {\mathbf {u}}(k)\). Hence it has the following tensor network representation.

As a next example, consider a fourth-order tensor represented in the TT format (see Fig. 1; Sect. 3.4):

Note that the ordering of physical and contraction variable in the components \(\mathbf {G}^\mu \) was adjusted to follow the linear structure of the tree. A linear operator \(\mathbf {A}\) in TT format has the following form:

The application of \(\mathbf {A}\) to \(\mathbf {u}\) is illustrated by:

Summing over connected edges \(i_\mu \) related to physical variables results again in a TT tensor \(\tilde{\mathbf {u}} = \mathbf {A} \mathbf {u}\):

The new ranges \(\tilde{r}_\eta \) for \(\tilde{k}_\eta \) are bounded by \(\tilde{r}_\eta \le r_\eta s_\eta \), compared to the initial \(r_\eta \). It can be seen that the overall complexity of computing \(\mathbf {A} \mathbf {u}\) is linear in d, quadratic in n and polynomial in the ranks.

To estimate the complexity of standard linear algebra operations, one observes that summing tensors in tree network representations leads to summation of ranks, while multiplicative operations like matrix-vector products or Hadamard products lead to multiplication of ranks. Fortunately, this is only an upper bound for the ranks. How to recompress the resulting parameterisation with and without loss of accuracy will be shown later. Details on linear algebra operations are beyond the scope of this paper, but can be found in [27, 65, 110].

3 Tree Tensor Networks as Nested Subspace Representations

In this section we explain how the deficiencies of the canonical format are cured using tree tensor network parameterisations. Tree tensor networks have the fundamental property that if one edge of the tree is removed, exactly two subtrees are obtained. This property enables the application of matrix techniques to tree tensor networks and constitutes the main difference to the canonical tensor format.

3.1 The Matrix Case \(d=2\) Revisited

An \(m \times n\) matrix can be either seen as an element of \({\mathbb {R}}^m \otimes {\mathbb {R}}^n\), as a bivariate function, or as a linear operator from \({\mathbb {R}}^n\) to \({\mathbb {R}}^m\). In the general, possibly infinite-dimensional case this corresponds to the fact that the topological tensor product of \({\mathscr {H}} = {\mathscr {H}}_1 \otimes {\mathscr {H}}_2\) is isometrically isomorphic to the Hilbert space \(\text {HS}({\mathscr {H}}_2,{\mathscr {H}}_1)\) of Hilbert–Schmidt operators from \({\mathscr {H}}_2\) to \({\mathscr {H}}_1\). This space consists of bounded linear operators \(T {:} {\mathscr {H}}_2 \rightarrow {\mathscr {H}}_1\) for which \( \Vert T \Vert _{\text {HS}}^2 = \langle T, T \rangle _{\text {HS}} < \infty , \) where the inner product is defined as \( \langle S, T \rangle _{\text {HS}} = \sum _{i_2=1}^{n_2} \langle S \mathbf {e}_{i_2}^2, T \mathbf {e}_{i_2}^2 \rangle . \) Here \(\{ {\mathbf {e}}^\mu _{i_2} {:} i_2 \in {\mathscr {I}}_\mu \}\) is any orthonormal basis of \({\mathscr {H}}_2\). It is an easy exercise to convince oneself that the choice of basis is irrelevant. The isometric isomorphism \(\mathbf {u} \mapsto T_{\mathbf {u}}\) between \({\mathscr {H}}\) and \(\text {HS}({\mathscr {H}}_2,{\mathscr {H}}_1)\) which we then consider is constructed by identifying

and linear expansion.

The relation to compact operators makes the case \(d=2\) unique as it enables spectral theory for obtaining tensor decompositions and low-rank approximations. The nuclear decomposition of compact operators plays the decisive role. It has been first obtained by Schmidt for integral operators [121]. A proof can be found in most textbooks on linear functional analysis or spectral theory. For matrices the decomposition (3.2) below is called the singular value decomposition (SVD), and can be traced back even further, see [128] for the history. We will use the same terminology. The best approximation property stated below was also obtained by Schmidt, and later also attributed to Eckart and Young [45]. We state the result in \(\ell ^2({\mathscr {I}}_1 \times {\mathscr {I}}_2)\); see [65] for a self-contained treatment from a more general tensor perspective.

Theorem 3.1

(E. Schmidt, 1907) Let \(\mathbf {u} \in \ell ^2({\mathscr {I}}_1 \times {\mathscr {I}}_2)\), then there exist orthonormal systems \(\{\mathbf {U}^1(\cdot ,k) {:} k \in {\mathscr {I}}_1 \}\) in \(\ell ^2({\mathscr {I}}_1)\) and \(\{\mathbf {U}^2(\cdot , k) {:} k \in {\mathscr {I}}_2 \}\) in \(\ell ^2({\mathscr {I}}_2)\), and \(\sigma _1 \ge \sigma _2 \ge \dots \ge 0\), such that

with convergence in \(\ell ^2({\mathscr {I}}_1 \times {\mathscr {I}}_2)\). A best approximation of \({\mathbf {u}} \) by a tensor of rank \(r \le \min (n_1,n_2)\) in the norm of \(\ell ^2({\mathscr {I}}_1 \times {\mathscr {I}}_2)\) is provided by

and the approximation error satisfies

The best approximation is unique in case \(\sigma _r > \sigma _{r+1}\).

The numbers \(\sigma _k\) are called singular values, the basis elements \(\mathbf {U}^1(\cdot ,k)\) and \(\mathbf {U}^2(\cdot ,k)\) are called corresponding left and right singular vectors. They are the eigenvectors of \(T_{\mathbf {u}}^{} T_{\mathbf {u}}^*\) and \(T_{\mathbf {u}}^* T_{\mathbf {u}}^{}\), respectively.

In matrix notation, let \(\mathbf {U}\) be the (possibly infinite) matrix with entries \(\mathbf {u}(i_1,i_2)\). Then, using (3.1), the singular value decomposition (3.2) takes the familiar form

where \(\mathbf {U}_\mu ^{} = [\mathbf {u}^\mu _1,\mathbf {u}^\mu _2,\dots ]\) have columns \(\mathbf {u}^\mu _k\), \(\mu = 1,2\), and \(\varvec{\varSigma } = {{\mathrm{diag}}}(\sigma _1,\sigma _2,\dots )\).

3.2 Subspace Approximation

The problem of finding the best rank-r approximation to a tensor of order two (a matrix) can be interpreted as a subspace approximation problem, and Schmidt’s theorem 3.1 provides a solution.

The problem is as follows: Find subspaces \({\mathscr {U}}_1 \subseteq \ell ^2({\mathscr {I}}_1)\) and \({\mathscr {U}}_2 \subseteq \ell ^2({\mathscr {I}}_2)\) of dimension r such that

Here \(\varPi _{{\mathscr {U}}_1 \otimes {\mathscr {U}}_2}\) denotes the orthogonal projection on \({\mathscr {U}}_1 \otimes {\mathscr {U}}_2\). The truncated singular value decomposition \(\mathbf {u}_r\) is the solution to this problem, more precisely the subspaces spanned by the dominating r left and right singular vectors, respectively, since one has that a tensor of order two is of rank at most r if and only if it is contained in such a subspaceFootnote 2 \({\mathscr {U}}_1 \otimes {\mathscr {U}}_2\). We highlight that the admissible set over which we minimise the distance \({{\mathrm{dist}}}(\mathbf {u}, {\mathscr {U}}_1 \otimes {\mathscr {U}}_2)\) is the closure of a Cartesian product of Grassmanians [1, 46, 95]. Note that the rank of \(\mathbf {u}\) can now be defined as the minimal r such that the minimal distance in (3.3) is zero.

In contrast to the representability in canonical tensor format, the interpretation of low-rank approximation as subspace approximation, which is possible in case \(d=2\), provides a different concept which offers advantageous mathematical properties also in the higher-order case. In the sequel we will pursue this concept. A direct generalisation of (3.3) to higher-order tensors leads to the by now classical Tucker format [65, 87, 133]. Given a tensor \(\mathbf {u} \in \ell ^2({\mathscr {I}})\) and dimensions \(r_1,\dots ,r_d\) one is searching for optimal subspaces \( {\mathscr {U}}_\mu \subseteq \ell ^2({\mathscr {I}}_\mu )\) of dimension \(r_\mu \) such that

The (elementwise) minimal tuple of \((r_1,\dots ,r_d)\) which minimises the distance to zero is called the Tucker rank of \(\mathbf {u}\). It follows from this definition that a tensor has Tucker rank at most \((r_1,\dots ,r_d)\) if and only if \(\mathbf {u} \in {\mathscr {U}}_1 \otimes \dots \otimes {\mathscr {U}}_d\) with \(\dim ({\mathscr {U}}_\mu ) \le r\). Note that this in turn is the case if and only if \(\mathbf {u}\) can be written as

For instance, one can choose \(\{ \mathbf {U}^\mu (\cdot ,1), \dots ,\mathbf {U}^d(\cdot ,r) \}\) to be a basis of \({\mathscr {U}}_\mu \). The multilinear representation (3.5) of tensors is called the Tucker format [77, 133]. Its tensor network representation is given in Fig. 1a.

The minimal \(r_\mu \) appearing in the Tucker rank of \({\mathbf {u}}\), as well as the corresponding subspaces \({\mathscr {U}}_\mu \), can be found constructively and independently from each other as follows. For \(\mu = 1,\dots ,d\), let \({\mathscr {I}}_\mu ^c = {\mathscr {I}}_1 \times \dots \times {\mathscr {I}}_{\mu - 1} \times {\mathscr {I}}_{\mu +1} \times \dots \times {\mathscr {I}}_{d}\). Then the spaces \(\ell ^2({\mathscr {I}}_\mu \times {\mathscr {I}}_\mu ^c) = \ell ^2({\mathscr {I}}_\mu ) \otimes \ell ^2({\mathscr {I}}_{\mu }^c)\), which are tensor product spaces of order two, are all isometrically isomorphic to \(\ell ^2({\mathscr {I}})\). The corresponding isomorphisms \(\mathbf {u} \mapsto \mathbf {M}_\mu (\mathbf {u})\) are called matricisations. The SVDs of \(\mathbf {M}_\mu (\mathbf {u})\) provide us with subspaces \({\mathscr {U}}_\mu \) of minimal dimension \(r_\mu \) such that \(\mathbf {M}_\mu (\mathbf {u}) \in {\mathscr {U}}_\mu \otimes \ell ^2({\mathscr {I}}_\mu ^c)\), that is,

Comparing with (3.4), this shows that this \(r_\mu \) cannot be larger than the corresponding Tucker rank. On the other hand, since (3.6) holds for \(\mu =1,\dots ,d\) simultaneously, we get (see, e.g. [65, Lemma 6.28])

which in combination yields that the \({\mathscr {U}}_\mu \) found in this way solve (3.4). These considerations will be generalised to general tree tensor networks in Sect. 3.5.

Similar to the matrix case one may pose the problem of finding the best approximation of a tensor \(\mathbf {u}\) by one of lower Tucker rank. This problem always has a solution [52, 134], but no normal form providing a solution similar to the SVD is currently available. The higher-order SVD [39] uses the dominant left singular subspaces from the SVDs of the matricisations \(\mathbf {M}_\mu (\mathbf {u})\), but they only provide quasi-optimal approximations. This will be explained in more detail Sect. 3.5, albeit somewhat more abstractly than required for the Tucker format.

There is a major drawback of the Tucker format, which motivates us to go beyond it: unless the core tensor \(\mathbf {C}\) in (3.5) is sparse, the low-rank Tucker representation is still affected by the curse of dimensionality. Since, in general, the core tensor contains \(r_1 \cdots r_d \) possibly nonzero entries, its storage complexity scales exponentially with the order as \({\mathscr {O}}(r^d)\), where \(r = \max \{ r_\mu {:} \mu =1,\dots ,d\}\). With \( n = \max \{ n_\mu {:} \mu =1, \ldots , d\}\), the overall complexity for storing the required data, including the basis vectors \(\mathbf {U}^\mu ( \cdot , k)\), is bounded by \({\mathscr {O}} ( ndr + r^d)\). Without further sparsity of the core tensor, the pure Tucker format is appropriate only for tensors of low order, say \(d \le 4\). Nonetheless, subspace-based tensor representation approximation as in the Tucker format is not a dead end. We will describe how it can be used in a hierarchical fashion to circumvent the curse of dimensionality, at least for a large class of tensors.

3.3 Hierarchical Tensor Representation

The hierarchical Tucker format or hierarchical tensor format (HT) was introduced by Hackbusch and Kühn [69], and extends the idea of subspace approximation to a hierarchical or multi-level framework. It is a tree tensor network corresponding to the diagram in Fig. 1b. Here we derive it from a geometric viewpoint.

Let us reconsider the subspace relation (3.7) with subspaces \({\mathscr {U}}_\mu \) of dimension \(r_\mu \). There exists a subspace \({\mathscr {U}}_{\{ 1,2 \} } \subseteq {\mathscr {U}}_1 \otimes {\mathscr {U}}_2\), of possibly lower dimension \(r_{\{1,2\}} \le r_1 r_2\), such that we actually have \(\mathbf {u} \in {\mathscr {U}}_{\{ 1,2 \} } \otimes {\mathscr {U}}_3 \otimes \dots \otimes {\mathscr {U}}_d\). Then \( {\mathscr {U}}_{\{ 1,2\}} \) is a space of “matrices”, and has a basis \(\{ \mathbf {U}^{\{1,2\}}(\cdot ,\cdot ,k_{\{1,2\}}) {:} k_{\{1,2\}} = 1,\dots ,r_{\{1,2\}} \}\), whose elements can be represented in the basis of \( {\mathscr {U}}_1 \otimes {\mathscr {U}}_2\):

One can now continue in several ways, e.g. by choosing a subspace \( {\mathscr {U}}_{\{ 1,2,3\} } \subseteq {\mathscr {U}}_{\{ 1,2\} } \otimes {\mathscr {U}}_3 \subseteq {\mathscr {U}}_1 \otimes {\mathscr {U}}_2 \otimes {\mathscr {U}}_3\). Another option is to find a subspace \( {\mathscr {U}}_{\{ 1,2,3,4\} } \subseteq {\mathscr {U}}_{\{ 1,2\} } \otimes {\mathscr {U}}_{\{3,4\}}\), where \( {\mathscr {U}}_{\{3,4\}}\) is defined analogously to \( {\mathscr {U}}_{\{1,2\}}\), and so on.

For a systematic treatment, this approach is cast into the framework of a partition tree \({\mathbb {T}}\) (also called dimension tree) containing subsets of \(\{1,\dots ,d\}\) such that

-

(i)

\(\alpha ^* {:}= \{1,\dots ,d\} \in {\mathbb {T}}\), and

-

(ii)

for every \(\alpha \in {\mathbb {T}}\) with \(\left|\alpha \right| > 1\) there exist \(\alpha _1,\alpha _2 \in {\mathbb {T}}\) such that \( \alpha = \alpha _1 \cup \alpha _2 \) and \( \alpha _1 \cap \alpha _2 = \emptyset \).

Such a set \({\mathbb {T}}\) forms a binary tree by introducing edges between fathers and sons. The vertex \(\alpha ^*\) is then the root of this tree, while the singletons \(\{\mu \}\), \(\mu =1,\dots ,d\) are the leaves. By agreeing that \(\alpha _1\) should be the left son of \(\alpha \) and \(\alpha _2\) the right son, a pre-order traversion through the tree yields the leaves \(\{\mu \}\) appearing according to a certain permutation \(\varPi _{{\mathbb {T}}}\) of \(\{1,\dots ,d\}\).

By \(\hat{{\mathbb {T}}}\) we denote the subset of \(\alpha \) which are neither the root, nor a leaf (inner vertices). In the HT format, to every \(\alpha \in {\mathbb {T}} {\setminus } \{\alpha ^*\}\) with sons \(\alpha _1,\alpha _2\) a subspace \({\mathscr {U}}_\alpha \subseteq \bigotimes _{j \in \alpha } \ell ^2({\mathscr {I}}_{j})\) of dimension \(r_\alpha \) is attached such that the nestedness properties

and \(\pi _{{\mathbb {T}}}(\mathbf {u}) \in {\mathscr {U}}_{\alpha _1^*} \otimes {\mathscr {U}}_{\alpha _2^*}\) hold true. Here \(\pi _{{\mathbb {T}}}\) denotes the natural isomorphismFootnote 3 between \(\bigotimes _{\mu =1}^d \ell ^2({\mathscr {I}}_\mu )\) and \(\bigotimes _{\mu =1}^d \ell ^2({\mathscr {I}}_{\varPi _{{\mathbb {T}}}(\mu )})\).

Corresponding bases \(\{ \mathbf {U}^\alpha (\cdot ,\ldots ,\cdot ,k_\alpha ) {:} k_\alpha = 1,\dots ,r_\alpha \}\) of \({\mathscr {U}}_\alpha \) are then recursively expressed as

where \({\mathbf {i}}_\alpha = \times _{\mu \in \alpha } \{i_\mu \}\) denotes, with a slight abuse of notation, the tuple of physical variables represented by \(\alpha \). Finally, \(\mathbf {u}\) is recovered as

It will be notationally convenient to set \(\mathbf {B}^\alpha = \mathbf {U}^\alpha \) for leaves \(\alpha = \{\mu \}\). If equation (3.9) is recursively expanded using (3.8), we obtain a multilinear low-rank format of the form (2.7) with \(E = \left|T\right|-1\), \(D = \left|T\right|\), \(r_\eta = r_\alpha \), and \(\mathbf {C}^\nu = \mathbf {B}^\alpha \) (in some ordering), that satisfies Definition 2.2. Its graphical representation takes the form of the tree in Fig. 1b, and has the same topology as the tree \({\mathbb {T}}\) itself, ignoring the edges with open ends which can be seen as labels indicating physical variables.

The tensors \(\mathbf {B}^{\alpha }\) will be called component tensors, the terminology transfer tensors is also common in the literature. In line with (2.8) the tensors which are representable in the HT format with fixed \(\mathfrak {r} = (r_\alpha )\) are the images

of a multilinear map \(\pi _{{\mathbb {T}}}^{-1} \circ \tau _{{\mathbb {T}},\mathfrak {r}}\).

For fixed \(\mathbf {u}\) and \({\mathbb {T}}\), the minimal possible \(r_\alpha \) to represent \(\mathbf {u}\) as image of \(\tau _{{\mathbb {T}},\mathfrak {r}}\) are, as for the Tucker format, given by ranks of certain matricisations of \(\mathbf {u}\). This will be explained in Sect. 3.5 for general tree tensor networks.

Depending on the contraction lengths \(r_\alpha \), the HT format can be efficient, as it only requires storing the tuple \((\mathbf {B}^\alpha )_{\alpha \in {\mathbb {T}}}\). Every \(\mathbf {B}^\alpha \) is a tensor of order at most three. The number of nodes in the tree \({\mathbb {T}} \) is bounded by \( 2 d -1 = {\mathscr {O}} (d) \), including the root node. Therefore, the data complexity for representing \(\mathbf {u}\) is \({\mathscr {O}} (ndr + d r^3) \), where \( n = \max \{n_\mu {:} \mu =1 , \ldots , d\}\), \(r = \max \{ r_{\alpha } {:} \alpha \in {\mathbb {T}}{\setminus } \{\alpha ^* \}\}\). In contrast to the classical Tucker format, the complexity formally no longer scales exponentially in d.

It is straightforward to extend the concept to partition trees such that vertices are allowed to have more than two sons, but the binary trees are the most common. Note that the Tucker format itself represents an extreme case where the root decomposes immediately into d leaves, as illustrated in Fig. 1a.

3.4 Tensor Trains and Matrix Product Representation

As a third example, we consider the tensor train (TT) format as introduced in [111, 113]. As it later turned out, this format plays an important role in physics, where it is known as matrix product states (MPS). The unlabelled tree tensor network of this format can be seen in Fig. 1c. When attaching the physical variable \(n_\mu \) in natural order from left to right, the pointwise multilinear representation is

The TT format is hence of the form (2.7) with \(D=d\) and \(E = d-1\), and satisfies Definition 2.2.

Introducing the matrices \( \mathbf {G}^\mu (i_\mu ) = [\mathbf {G}^\mu (k_{\mu -1},i_\mu ,k_\mu )] \in {\mathbb {R}}^{r_{\mu -1} \times r_{\mu } }\), with the convention \(r_0 =r_d =1\), \(\mathbf {G}^1(1,i_1,k_1) = \mathbf {G}^1(i_1,k_1)\), and \(\mathbf {G}^d(k_{d-1},i_1,1) = \mathbf {G}^d(k_{d-1},i_d)\), formula (3.10) becomes a matrix product,

which explains the name matrix product states used in physics. In particular, the multilinear dependence on the components \(\mathbf {G}^\mu \) is evident, and may be expressed as \(\mathbf {u} = \tau _{\text {TT}} (\mathbf {G}^1,\dots ,\mathbf {G}^d)\).

From the viewpoint of subspace representation, the minimal \(r_\eta \), \(\eta = 1,\dots ,d-1\), required for representing \(\mathbf {u}\) in the TT format are the minimal dimensions of subspaces \({\mathscr {U}}_{\{1,\dots ,\nu \}} \subseteq \bigotimes _{\mu = 1}^\nu \ell ^2({\mathscr {I}}_\mu )\) such that the relations

hold simultaneously. Again, these subspaces can be obtained as ranges of corresponding matricisations, as will be explained in the next subsection. Regarding nestedness, we will see that one even has

A tensor in canonical format

can be easily written in TT form, by setting all \(r_\eta \) to \(r_c\), \(\mathbf {G}^1 = \mathbf {C}^1\), \(\mathbf {C}^d = (\mathbf {G}^d)^T\), and

for \( \mu =2,\dots ,d-1\). From (3.11) we conclude immediately that a single point evaluation \(\mathbf {u}(i_1,\dots ,i_d)\) can be computed easily by matrix multiplication using \({\mathscr {O}} ( d r^2 ) \) arithmetic operations, where \(r = \max \{ r_\eta {:} \eta = 1,\dots ,d-1\}\). With \(n = \max \{n_\mu {:} \mu =1 , \ldots , d\}\), the data required for the TT representation is \({\mathscr {O}} (d n r^2)\), as the d component tensors \(\mathbf {G}^\mu \) need to be stored. Depending on r, the TT format hence offers the possibility to circumvent the curse of dimensionality.

Due to its convenient explicit representation (3.11) we will use the TT format frequently as a model case for explanation.

3.5 Matricisations and Tree Rank

After having discussed the most prominent examples of tree tensor networks in the previous sections, we return to the consideration of a general tree tensor network \(\tau _{\mathfrak {}} = \tau _{\mathfrak {r}}(\mathbf {C}^1,\dots ,\mathbf {C}^D)\) encoding a representation (2.7) and obeying Definitions 2.2 and 2.5. The nodes have indices \(1,\dots ,D\), and the distribution of physical variables \(i_\mu \) is fixed (nodes are allowed to carry more than one physical index).

The topology of the network is described by the set of its edges. Following [9], we now introduce a notion of effective edges, which may in fact comprise several lines in a graphical representation such as Fig. 1, and correspond precisely to the matricisations arising in the tensor format. The set of such edges will be denoted by \({\mathbb {E}}\). In slight deviation from (2.7), the contraction variable \((k_\eta )_{\eta \in {\mathbb {E}}}\) and the representation ranks \(\mathfrak {r} = (r_\eta )_{\eta \in {\mathbb {E}}}\) will now be indexed by the set \({\mathbb {E}}\).

Since we are dealing with a tree tensor network, along every contraction index we may split the tree into two disjoint subtrees. Both subtrees must contain vertices carrying physical variables. Hence such a splitting induces a partition

by gathering the \(\mu \) for which the physical index \(i_\mu \) is in the respective subtree. We then call the unordered pair \(\{ \alpha , \alpha ^c\}\) an edge.

For instance, for a given partition tree \({\mathbb {T}}\) in the HT case, we have

as used in [9], with each element of \({\mathbb {E}}\) corresponding to precisely one matricisation arising in the format. As a consequence of the definition, for each \(\eta \in {\mathbb {E}}\) we may pick a representative \([ \eta ] \in {\mathbb {T}}\). Note that in (3.12), the set \(\{ \alpha ^*_1,\alpha ^*_2\}\) appears twice as \(\alpha \) runs over \({\mathbb {T}} {\setminus } \{ \alpha ^*\}\), which is a consequence of the two children of \(\alpha ^*\) corresponding to the same matricisation; hence \(|{\mathbb {E}}| = 2d-3\).

In order to introduce the same notion for tree tensor networks, we first give a construction of a corresponding generalised partition tree \({\mathbb {T}}\) by assigning labels to the nodes in the tensor network as follows. Pick any node \(\nu ^*\) to be the root of the tree, for which we add \(\alpha ^*=\{1,\ldots ,d\}\) to \({\mathbb {T}}\). This induces a top–down (father–son) ordering in the whole tree. For all nodes \(\nu \), we have a partition of the physical variables in the respective subtree of the form

where \(\beta _\nu \) is the set of physical variables attached to \(\nu \) (of course allowing \(\beta _\nu =\emptyset \)). We now add all \(\alpha _\nu \) that are obtained recursively in this manner to the set \({\mathbb {T}}\). It is easy to see that such a labelling is possible for any choice of \(\nu ^*\).

For such a generalised partition tree \({\mathbb {T}}\) of a tree tensor network, we again obtain a set of effective edges \({\mathbb {E}}\) exactly as in (3.12), and again have a representative \([\eta ]\in {\mathbb {T}}\) for each \(\eta \in {\mathbb {E}}\).

The difference between this general construction and the particular case (3.12) of the HT format is that we now allow incomplete partitions (complemented by \(\beta _\nu \)), and in principle also further nodes with the same label. In the case of the TT format (3.10), which corresponds to the network considered in Fig. 1c with linearly arranged \(i_\mu \), starting from the rightmost node \(\nu ^*=\{d\}\), one obtains the \(d-1\) edges

which in this case comprise the set \({\mathbb {E}}\).

The main purpose of this section is to show how the minimal representation ranks \((r_{\eta })_{\eta \in {\mathbb {E}}}\) are obtained from matrix ranks. For every edge \(\eta \in {\mathbb {E}}\), we have index sets  and

and  , and, by (2.4), a natural isometric isomorphism

, and, by (2.4), a natural isometric isomorphism

called \(\eta \) -matricisation or simply matricisation. The second-order tensor \(\mathbf {M}_\eta (\mathbf {u})\) represents a reshape of the hyper-matrix (array) \(\mathbf {u}\) into a matrix in which the rows are indexed by \({\mathscr {I}}_\eta \) and the columns by \({\mathscr {I}}_\eta ^c\). The order in which these index sets are traversed is unimportant for what follows.

Definition 3.2

The rank of \(\mathbf {M}_\eta (\mathbf {u})\) is called the \(\eta \) -rank of \(\mathbf {u}\), and denoted by \({{\mathrm{rank}}}_\eta (\mathbf {u})\). The tuple \({{\mathrm{rank}}}_{{\mathbb {E}}}(\mathbf {u}) = ({{\mathrm{rank}}}_\eta (\mathbf {u}))_{\eta \in {\mathbb {E}}}\) is called the tree rank of \(\mathbf {u}\) for the given tree tensor network.

Theorem 3.3

A tensor is representable in a tree tensor network \(\tau _{\mathfrak {r}}\) with edges \({\mathbb {E}}\) if and only if \({{\mathrm{rank}}}_\eta (\mathbf {u}) \le r_\eta \) for all \(\eta \in {\mathbb {E}}\).

Proof

Assume \(\mathbf {u}\) is representable in the form (2.7). Extracting the edge \(\eta \) corresponding to (without loss of generality, only one) contraction index \(k_\eta \) from the tree we obtain two disjoint subtrees on both sides of \(\eta \), with corresponding contraction variables relabelled as \(k_1,\dots ,k_s\) and \(k_{s+1},\dots ,k_{E-1}\), respectively; the set of nodes for the components is partitioned into \(\{1,\dots ,D\} = \gamma _\eta ' \cup \gamma _\eta ''\). Since in every component \(\mathbf {C}^\nu \) at most two contraction variable are active, it follows that

The edge \(\eta \) is of the form \(\eta =\{\alpha ,\alpha ^c\}\), where all physical variables \(i_\mu \) with \(\mu \in \alpha \) are active in some \(\mathbf {C}^{\nu '}\) with \(\nu ' \in \gamma '\), and those in \(\alpha ^c\) are active in some \(\mathbf {C}^{\nu ''}\) with \(\nu '' \in \gamma ''\). Thus (3.14) implies \({{\mathrm{rank}}}_\eta (\mathbf {u}) \le r_\eta \).

To prove the converse statement it suffices to show that we can choose \(r_\eta = {{\mathrm{rank}}}_\eta (\mathbf {u})\). We assume a proper labelling with distinguished node \(\nu ^*\). To every edge \(\eta \) belongs a subspace \({\mathscr {U}}_\eta \subseteq \ell ^2({\mathscr {I}}_{[\eta ]})\), which is the Hilbert space whose orthonormal basis are the left singular vectors of \(\mathbf {M}_\eta (\mathbf {u})\) belonging to positive singular values. Its dimension is \(r_\eta \). In a slight abuse of notation (one has to involve an isomorphism correcting the permutation of factors in the tensor product) we note that

for every \(\eta \). Here our argumentation will be rather informal to avoid notational technicalities. One can show that (3.15) in combination with (3.13) yields (in intuitive notation)

and

by [65, Lemma 6.28]. Let \(\{ \mathbf {U}^\nu (\cdot ,\dots ,\cdot ,k_{\eta (\nu )}) {:} k_{\eta (\nu )} = 1,\dots , r_{\eta (\nu )}\}\) be a basis of \({\mathscr {U}}_{\alpha _\nu }\), with \(\eta (\nu )=\{\alpha _\nu ,\alpha _\nu ^c\}\). We also set \(\mathbf {U}^{\nu ^*} = \mathbf {u}\). Now if a node \(\nu \) has no sons, we choose \(\mathbf {C}^\nu = \mathbf {U}^{\nu }\). For other \(\nu \ne \nu ^*\), by (3.16) or (3.17), a tensor \(\mathbf {C}^\nu \) is obtained by recursive expansion. By construction, the final representation for \(\mathbf {u}\) yields a decomposition according to the tree network. \(\square \)

3.6 Existence of Best Approximations

We can state the result of Theorem 3.3 differently. Let \({\mathscr {H}}_{\le \mathfrak {r}} = {\mathscr {H}}_{\le \mathfrak {r}}({\mathbb {E}})\) denote the set of all tensors representable in a given tensor tree network with edges \({\mathbb {E}}\). For every \(\eta \in {\mathbb {E}}\) let

Then Theorem 3.3 states that

Using the singular value decomposition, it is relatively easy to show that for any finite \(r_\eta \), the set \({\mathscr {M}}_{\le r_\eta }^\eta \) is weakly sequentially compact [52, 65, 134, 136], and for \(r_\nu = \infty \), we have \({\mathscr {M}}_{\le r_\eta }^{\eta } = \ell ^2{({\mathscr {I}})}\). Hence the set \({\mathscr {H}}_{\le \mathfrak {r}}\) is weakly sequentially closed. Depending on the chosen norm, this is even true in tensor product of Banach spaces [52]. A standard consequence in reflexive Banach spaces like \(\ell ^2({\mathscr {I}})\) (see, e.g. [147]) is the following.

Theorem 3.4

Every \(\mathbf {u} \in \ell ^2({\mathscr {I}})\) admits a best approximation in \({\mathscr {H}}_{\le \mathfrak {r}}\).

For matrices we know that truncation of the singular value decomposition to rank r yields the best rank-r approximation of that matrix. The analogous problem to find a best approximation of tree rank at most \(\mathfrak {r}\) for a tensor \(\mathbf {u}\), that is, a best approximation in \({\mathscr {H}}_{\le \mathfrak {r}}\), has no such clear solution and can be NP-hard [75]. As we are able to project onto every set \({\mathscr {M}}^\eta _{\le r_\eta }\) via SVD, the characterisation (3.18) suggests to apply successive projections on these sets to obtain an approximation in \({\mathscr {H}}_{\le \mathfrak {r}}\). This works depending on the order of these projections, and is called hierarchical singular value truncation.

3.7 Hierarchical Singular Value Decomposition and Truncation

The bases of subspaces considered in the explicit construction used to prove Theorem 3.3 can be chosen arbitrarily. When the left singular vectors of \(\mathbf {M}_\eta (\mathbf {u})\) are chosen, the corresponding decomposition \(\mathbf {u} = \tau _{\mathfrak {r}}(\mathbf {C}^1,\dots ,\mathbf {C}^D)\) is called the hierarchical singular value decomposition (HSVD) with respect to the tree network with effective edges \({\mathbb {E}}\). It was first considered in [39] for the Tucker format, later in [60] for the HT and in [109, 111] for the TT format. It was also introduced before in physics for the matrix product representation [140]. The HSVD can be used to obtain low-rank approximations in the tree network. This procedure is called HSVD truncation.

Most technical details will be omitted. In particular, we do not describe how to practically compute an exact HSVD representation; see, e.g. [60]. For an arbitrary tensor given in full format this is typically prohibitively expensive. However, for \(\mathbf {u} = \tau _{\mathfrak {r}}(\tilde{\mathbf {C}}^1,\dots \tilde{\mathbf {C}}^D)\) already given in the tree tensor network format, the procedure is quite efficient. The basic idea is as follows. One changes the components from leaves to root to encode some orthonormal bases in every node except \(\nu ^*\), using e.g. QR decompositions that operate only on (matrix reshapes of) the component tensors. Afterwards, it is possible to install HOSV bases from root to leaves using only SVDs on component tensors. Many details are provided in [65].

In the following we assume that \(\mathbf {u}\) has tree rank \(\mathfrak {s}\) and \(\mathbf {u} = \tau _{\mathfrak {s}}(\mathbf {C}^1,\dots ,\mathbf {C}^D) \in {\mathscr {H}}_{\le \mathfrak {s}}({\mathbb {E}})\) is an HSVD representation. Let \(\mathfrak {r} \le \mathfrak {s}\) be given. We consider here the case that all \(r_\eta \) are finite. An HSVD truncation of \(\mathbf {u}\) to \({\mathscr {H}}_{\le \mathfrak {r}}\) can be derived as follows. Let

be the SVD of \(\mathbf {M}_\eta \), with \(\varSigma ^\eta = {{\mathrm{diag}}}(\sigma ^\eta _1(\mathbf {u}),\sigma ^\eta _2(\mathbf {u}),\dots )\) such that \(\sigma _1^\eta (\mathbf {u}) \ge \sigma _2^\eta (\mathbf {u}) \ge \dots \ge 0\). The truncation of a single \(\mathbf {M}_\eta (\mathbf {u})\) to rank \(r_\eta \) can be achieved by applying the orthogonal projection

where \(\tilde{\mathbf {P}}_{[\eta ],r_\mu }\) is the orthogonal projection onto the span of \(r_\eta \) dominant left singular vectors of \(\mathbf {M}_\eta (\mathbf {u})\). Then \(\mathbf {P}_{\eta ,r_\eta }(\mathbf {u})\) is the best approximation of \(\mathbf {u}\) in the set \({\mathscr {M}}_{\le r_\eta }^\eta \). Note that \(\mathbf {P}_{\eta ,r_\eta } = \mathbf {P}_{\eta ,r_\eta ,\mathbf {u}}\) itself depends on \(\mathbf {u}\).

The projections \((\mathbf {P}_{\eta ,r_\eta })_{\eta \in {\mathbb {E}}}\) are now applied consecutively. However, to obtain a result in \({\mathscr {H}}_{\le \mathfrak {r}}\), one has to take the ordering into account. Let \({\mathbb {T}}\) be a generalised partition tree of the tensor network. Considering a \(\alpha \in {\mathbb {T}}\) with son \(\alpha '\) we observe the following:

-

(i)

Applying \(\mathbf {P}_{\eta ,r_\eta }\) with \(\eta =\{\alpha ,\alpha ^c\}\) does not destroy the nestedness property (3.15) at \(\alpha \), simply because the span of only the dominant \(r_\eta \) left singular vectors is a subset of the full span.

-

(ii)

Applying \(\mathbf {P}_{\eta ',r_{\eta '}}\) with \(\eta '=\{\alpha ',\alpha '^c\}\) does not increase the rank of \(\mathbf {M}_\eta (\mathbf {u})\). This holds because there exists \(\beta \subseteq \{1,\dots ,d\}\) such that \({\mathrm {Id}}_{[\eta ']^c} \subseteq {\mathrm {Id}}_{[\eta ]^c} \otimes {\mathrm {Id}}_{\beta }\). Thus, since \(\mathbf {P}_{\eta ',r_{\eta '}}\) is of the form (3.19), it only acts as a left multiplication on \(\mathbf {M}_\eta (\mathbf {u})\).

Property (ii) by itself implies that the top-to-bottom application of the projections \(\mathbf {P}_{\eta ,r_\eta }\) will result in a tensor in \({\mathscr {H}}_{\le \mathfrak {r}}\). Property (i) implies that the procedure can be performed, starting at the root element, by simply setting to zero all entries in the components that relate to deleted basis elements in the current node or its sons, and resizing the tensors accordingly.

Let \({{\mathrm{level}}}\eta \) denote the distance of \([\eta ]\) in the tree to \(\alpha ^*\), and let L be the maximum such level. The described procedure describes an operator

called the hard thresholding operator. Remarkably, as the following result shows, it provides a quasi-optimal projection. Recall that a best approximation in \({\mathscr {H}}_{\le \mathfrak {r}}\) exists by Theorem 3.4.

Theorem 3.5

For any \(\mathbf {u} \in \ell ^2({\mathscr {I}})\), one has

The proof follows more or less immediately along the lines of [60], using the properties \(\Vert \mathbf {u} - P_1 P_2 \mathbf {u} \Vert ^2 \le \Vert \mathbf {u} - P_1 \mathbf {u} \Vert ^2 + \Vert \mathbf {u} - P_2 \mathbf {u} \Vert ^2\), which holds for any orthogonal projections \(P_1,P_2\), and \(\min _{\mathbf {v} \in {\mathscr {M}}^\eta _{\le r_\eta }} \Vert \mathbf {u} - \mathbf {v} \Vert \le \min _{\mathbf {v} \in {\mathscr {H}}_{\le \mathfrak {r}}} \Vert \mathbf {u} - \mathbf {v} \Vert \), which follows from (3.18).

There are sequential versions of hard thresholding operators which traverse the tree in a different ordering, and compute at edge \(\eta \) the best \(\eta \)-rank-\(r_\eta \) approximation of the current iterate by recomputing an SVD. These techniques can be computationally beneficial, but the error cannot easily be related to that of the direct HSVD truncation; see [65, Sect. 11.4.2] for corresponding bounds similar to Theorem 3.5.

3.8 Hierarchical Tensors as Differentiable Manifolds

We now consider geometric properties of \({\mathscr {H}}_{\mathfrak {r}} = {\mathscr {H}}_{\mathfrak {r}}({\mathbb {E}}) = \{ \mathbf {u} {:} {{\mathrm{rank}}}_{{\mathbb {E}}} (\mathbf {u}) = \mathfrak {r} \}\), that is, \({\mathscr {H}}_{\mathfrak {r}}= \bigcap _{\eta \in {\mathbb {E}}} {\mathscr {M}}^\eta _{r_\eta }\), where \({\mathscr {M}}^\eta _{r_\eta }\) is the set of tensors with \(\eta \)-rank exactly \(r_\eta \). We assume that \(\mathfrak {r}\) is such that \({\mathscr {H}}_{\mathfrak {r}}\) is not empty. In contrast to the set \({\mathscr {H}}_{\le \mathfrak {r}}\), it can be shown that \({\mathscr {H}}_{\mathfrak {r}}\) is a smooth embedded submanifold if all ranks \(r_\eta \) are finite [79, 138], which enables Riemannian optimisation methods on it as discussed later. This generalises the fact that matrices of fixed rank form smooth manifolds [74].

The cited references consider finite-dimensional tensor product spaces, but the arguments can be transferred to the present separable Hilbert space setting [136], since the concept of submanifolds itself generalises quite straightforwardly, see, e.g. [97, 148]. The case of infinite ranks \(r_\eta \), however, is more subtle and needs to be treated with care [52, 54].

We will demonstrate some essential features using the example of the TT format. Let \(\mathfrak {r} = (r_1,\dots ,r_{d-1})\) denote finite TT representation ranks. Repeating (3.11), the set \({\mathscr {H}}_{\le \mathfrak {r}}\) is then the image of the multilinear map

where \({\mathscr {W}}_\nu = \mathbb {R}^{r_{\nu -1}} \otimes \ell ^2({\mathscr {I}}_\nu ) \otimes \mathbb {R}^{r_{\nu }}\) (with \(r_0 = r_d = 1\)), and \(\mathbf {u} = \tau _{\text {TT}}(\mathbf {G}^1,\dots ,\mathbf {G}^d)\) is defined via

The set \({\mathscr {W}}\) is called the parameter space for the TT format with representation rank \(\mathfrak {r}\). It is not difficult to deduce from (3.21) that in this case \({\mathscr {H}}_{\mathfrak {r}} = \tau _{\text {TT}}({\mathscr {W}}^*)\), where \({\mathscr {W}}^*\) is the open and dense subset of parameters \((\mathbf {G}^1,\dots ,\mathbf {G}^d)\) for which the embeddings (reshapes) of every \(\mathbf {G}^\nu \) into the matrix spaces \(\mathbb {R}^{r_{\nu -1}} \otimes (\ell ^2({\mathscr {I}}_\mu ) \otimes \mathbb {R}^{r_{\nu }})\), respectively \((\mathbb {R}^{r_{\nu -1}} \otimes \ell ^2({\mathscr {I}}_\nu )) \otimes \mathbb {R}^{r_{\nu }}\), have full possible rank \(r_{\nu -1}\), respectively \(r_\nu \). Since \(\tau _{\text {TT}}\) is continuous, this also shows that \({\mathscr {H}}_{\le \mathfrak {r}}\) is the closure of \({\mathscr {H}}_{\mathfrak {r}}\) in \(\ell ^2({\mathscr {I}})\).

A key point that has not been emphasised so far is that the representation (3.21) is by no means unique. We can replace it with

with invertible matrices \(\mathbf {A}^\nu \), which yields new components \(\tilde{\mathbf {G}}^\nu \) representing the same tensor. This kind of non-uniqueness occurs in all tree tensor networks and reflects the fact that in all except one node only the subspaces are important, not the concrete choice of basis. A central issue in understanding the geometry of tree representations is to remove these redundancies. A classical approach, pursued in [136, 138], is the introduction of equivalence classes in the parameter space. To this end, we interpret the transformation (3.22) as a left action of the Lie group \({\mathscr {G}}\) of regular matrix tuples \(({\mathbf {A}}^1,\dots ,{\mathbf {A}}^{d-1})\) on the regular parameter space \({\mathscr {W}}^*\). The parameters in an orbit \({\mathscr {G}} \circ (\mathbf {G}^1,\dots ,\mathbf {G}^d)\) lead to the same tensor and are called equivalent. Using simple matrix techniques one can show that this is the only kind of non-uniqueness that occurs. Hence we can identify \({\mathscr {H}}_{\mathfrak {r}}\) with the quotient \({\mathscr {W}}^* / {\mathscr {G}}\). Since \({\mathscr {G}}\) acts freely and properly on \({\mathscr {W}}^*\), the quotient admits a unique manifold structure such that the canonical mapping \({\mathscr {W}}^* \rightarrow {\mathscr {W}}^* / {\mathscr {G}}\) is a submersion. One now has to show that the induced mapping \(\hat{\tau }_{\text {TT}}\) from \({\mathscr {W}}^* / {\mathscr {G}}\) to \(\ell ^2({\mathscr {I}})\) is an embedding to conclude that its image \({\mathscr {H}}_{\mathfrak {r}}\) is an embedded submanifold. The construction can be extended to general tree tensor networks.

The tangent space \({\mathscr {T}}_{\mathbf {u}} {\mathscr {H}}_{\mathfrak {r}}\) at \(\mathbf {u}\), abbreviated by \({\mathscr {T}}_{\mathbf {u}}\), is of particular importance for optimisation on \({\mathscr {H}}_{\mathfrak {r}}\). The previous considerations imply that the multilinear map \(\tau _{\text {TT}}\) is a submersion from \({\mathscr {W^*}}\) to \({\mathscr {H}}_{\mathfrak {r}}\). Hence the tangent space at \(\mathbf {u} = \tau _{\text {TT}}(\mathbf {C}^1,\dots ,\mathbf {C}^d)\) is the range of \(\tau '_{\text {TT}}(\mathbf {C}^1,\dots ,\mathbf {C}^d)\), and by multilinearity, tangent vectors at \(\mathbf {u}\) are therefore of the generic form

As a consequence, a tangent vector \(\delta u\) has TT-rank \(\mathfrak {s}\) with \(s_\nu \le 2r_\nu \).

Since \(\tau '_{\text {TT}}(\mathbf {G}^1,\dots ,\mathbf {G}^d)\) is not injective (tangentially to the orbit \({\mathscr {G}} \circ (\mathbf {G}^1,\dots ,\mathbf {G}^d)\), the derivative vanishes), the representation (3.23) of tangent vectors cannot be unique. One has to impose gauge conditions in the form of a horizontal space. Typical choices for the TT format are the spaces \(\hat{{\mathscr {W}}}_\nu = \hat{{\mathscr {W}}}_\nu (\mathbf {G}^\nu )\), \(\nu = 1,\dots ,d-1\), comprised of \(\delta \mathbf {G}^\nu \) satisfying

These \(\hat{{\mathscr {W}}}_\nu \) are the orthogonal complements in \({\mathscr {W}}_\nu \) of the space of \(\delta \mathbf {G}^\nu \) for which there exists an invertible \(\mathbf {A}^\nu \) such that \(\delta \mathbf {G}^\nu (i_\nu ) = \mathbf {G}^\nu (i_\nu ) \mathbf {A}^\nu \) for all \(i_\nu \). This can be used to conclude that every tangent vector of the generic form (3.23) can be uniquely represented such that

In fact, the different contributions in (3.23) then belong to linearly independent subspaces, see [79] for details. It follows that the derivative \(\tau '_{\text {TT}}(\mathbf {G}^1,\dots ,\mathbf {G}^d)\) maps the subspace \(\hat{{\mathscr {W}}}_\nu (\mathbf {G}^{1}) \times \dots \times \hat{{\mathscr {W}}}_{d-1}(\mathbf {G}^{d-1}) \times {\mathscr {W}}_d\) of \({\mathscr {W}}\) bijectively on \({\mathscr {T}}_{\mathbf {u}}\).Footnote 4 In our example, there is no gauge on the component \(\mathbf {G}^d\), but with modified gauge spaces, any component could play this role.

The orthogonal projection \(\varPi _{T_{\mathbf {u}}}\) onto the tangent space \({\mathscr {T}}_{\mathbf {u}}\) is computable in a straightforward way if the basis vectors implicitly encoded at nodes \(\nu =1,\dots ,d-1\) are orthonormal, which in turn is not difficult to achieve (using QR decomposition from left to right). Then the decomposition of the tangent space induced by (3.23) and the gauge conditions (3.24) is actually orthogonal. Hence the projection on \({\mathscr {T}}_{\mathbf {u}}\) can be computed by projecting on the different parts. To this end, let \(\mathbf {E}_\nu = \mathbf {E}_\nu (\mathbf {G}^1,\dots ,\mathbf {G}^d)\) be the linear map \(\delta \mathbf {G}^\nu \mapsto \tau _{\text {TT}}(\mathbf {G}^1,\dots ,\delta \mathbf {G}^\nu , \dots \mathbf {G}^d)\). Then the components \(\delta \mathbf {G}^\nu \) to represent the orthogonal projection of \(\mathbf {v} \in \ell ^2({\mathscr {I}})\) onto \({\mathscr {T}}_{\mathbf {u}}\) in the form (3.23) are given by

Here we denote by \(P_{\hat{{\mathscr {W}}}_\nu }\) is the orthogonal projection onto the gauge space \(\hat{{\mathscr {W}}}_\nu \), and \(\mathbf {E}_\nu ^+ = (\mathbf {E}_\nu ^T \mathbf {E}_\nu ^{})^{-1} \mathbf {E}_\nu ^T\) is the Moore–Penrose inverse of \(\mathbf {E}_\nu \). Indeed, the assumption that \(\mathbf {u}\) has TT-rank \(\mathfrak {r}\) implies that the matrix \(\mathbf {E}_\nu ^T \mathbf {E}_\nu ^{}\) is invertible. At \(\nu = d\) it is actually the identity by our assumption that orthonormal bases are encoded in the other nodes. In operator form, the projector \(\varPi _{{\mathscr {T}}_{\mathbf {u}}}\) can then be written as

The operators \(\mathbf {E}_\nu ^T\) are easy to implement, since they require only the computation of scalar product of tensors. Furthermore, the inverses \((\mathbf {E}_\nu ^T \mathbf {E}_\nu ^{})^{-1}\) are applied only to the small component spaces \({\mathscr {W}}_\nu \). This makes the projection onto the tangent space a flexible and efficient numerical tool for the application of geometric optimisation, see Sect. 4.2. Estimates of the Lipschitz continuity of \(\mathbf {\mapsto }P_{{\mathscr {T}}_{\mathbf {u}}}\) (curvature bounds) are of interest in this context, with upper bounds given in [4, 105].

The generalisation of these considerations to arbitrary tree networks is essentially straightforward, but can become notationally quite intricate, see [138] for the HT format.

4 Optimisation with Tensor Networks and Hierarchical Tensors and the Dirac–Frenkel Variational Principle

In this section, our starting point is the abstract optimisation problem of finding

for a given cost functional \( J: \ell ^2({\mathscr {I}})\rightarrow {\mathbb {R}} \) and an admissible set \( {\mathscr {A}} \subseteq \ell ^2({\mathscr {I}})\).

In general, a minimiser \({\mathbf {u}}^*\) will not have low hierarchical ranks in any tree tensor network, but we are interested in finding good low-rank approximations to \(\mathbf {u}^*\). Let \({\mathscr {H}}_{\le \mathfrak {r}}\) denote again a set of tensors representable in a given tree tensor network with corresponding tree ranks at most \(\mathfrak {r}\). Then we wish to solve the tensor product optimisation problem

By fixing the ranks, one fixes the representation complexity of the approximate solution. It needs to be noted that the methods discussed in this section yield approximations to \(\mathbf {u}_{\mathfrak {r}}\), but no information on the error with respect to \(\mathbf {u}^*\). Typically, one will not aim to approximate \(\mathbf {u}_{\mathfrak {r}}\) with an accuracy better than \(\Vert {\mathbf {u}_{\mathfrak {r}} - \mathbf {u}^*}\Vert \). In fact, finding an accurate approximation of the global minimiser \(\mathbf {u}_{\mathfrak {r}}\) of (4.1) is a difficult problem: The results in [75] show that even finding the best rank-one approximation of a given tensor, with finite \(\mathscr {I}\), up to a prescribed accuracy is generally NP-hard if \(d\ge 3\). This is related to the observation that (4.1) typically has multiple local minima.

In general, one thus cannot ensure a prescribed error in approximating a global minimiser \(\mathbf {u}_{\mathfrak {r}}\) of (4.1). In order to enable a desired accuracy with respect to \(\mathbf {u}^*\), one also needs in addition some means to systematically enrich \({\mathscr {C}}\) by increasing the ranks \(\mathfrak {r}\). Subject to these limitations, the methods considered in this section provide numerically inexpensive ways of finding low-rank approximations by hierarchical tensors.

Note that in what follows, the index set \({\mathscr {I}}\) as in (2.2) may be finite or countably infinite. In the numerical treatment of differential equations, discretisations and corresponding finite index sets need to be selected. This aspect is not covered by the methods considered in this section, which operate on fixed \({\mathscr {I}}\), but we return to this point in Sect. 5.

Typical examples of optimisation tasks (4.1) that we have in mind are the following, see also [49, 51].

-

(a)

Best rank-\(\mathfrak {r}\) approximation in \(\ell ^2({\mathscr {I}})\): for given \( \mathbf {v} \in \ell ^2({\mathscr {I}})\) minimise

$$\begin{aligned} J( \mathbf {u} ) {:=} \Vert \mathbf {u} - \mathbf {v} \Vert ^2 \end{aligned}$$over \({\mathscr {A}} = \ell ^2({\mathscr {I}})\). This is the most basic task we encounter in low-rank tensor approximation.

-

(b)

Solving linear operator equations: for elliptic self-adjoint \( {\mathbf {A}} : \ell ^2({\mathscr {I}})\rightarrow \ell ^2({\mathscr {I}})\) and \({\mathbf {b}} \in \ell ^2({\mathscr {I}})\), we consider \({\mathscr {A}} {:=} \ell ^2({\mathscr {I}})\) and

$$\begin{aligned} J( {\mathbf {u}} ) {:=} \frac{1}{2} \langle {\mathbf {A}} {\mathbf {u}},{\mathbf {u}} \rangle - \langle {\mathbf {b}} , {\mathbf {u}} \rangle \end{aligned}$$(4.2)to solve \(\mathbf {A} \mathbf {u} = \mathbf {b}\). For nonsymmetric isomorphisms \({\mathbf {A}}\), one may resort to a least squares formulation

$$\begin{aligned} J({\mathbf {u}}) {:=} \Vert {\mathbf {A}} {\mathbf {u}}- {\mathbf {b}} \Vert ^2. \end{aligned}$$(4.3)The latter approach of minimisation of the norm of a residual also carries over to nonlinear problems.

-

(c)

Computing the lowest eigenvalue of symmetric \({\mathbf {A}}\) by minimising the Rayleigh quotient

$$\begin{aligned} {\mathbf {u}}^* = \hbox {argmin} \{ J({\mathbf {u}})= \langle {\mathbf {A}} {\mathbf {u}} , {\mathbf {u}} \rangle {:} \Vert {\mathbf {u}} \Vert ^2 =1\} . \end{aligned}$$This approach can be easily extended if one wants to approximate the N lowest eigenvalues and corresponding eigenfunctions simultaneously, see, e.g. [43, 88, 108].

For the existence of a minimiser, the weak sequential closedness of the sets \({\mathscr {H}}_{\le \mathfrak { r}}\) is crucial. As mentioned before, this property can be violated for tensors described by the canonical format [65, 127], and in general no minimiser exists. However, it does hold for hierarchical tensors \( {\mathscr {H}}_{\le \mathfrak {r}} \), as was explained in Sect. 3.6. A generalised version of Theorem 3.4 reads as follows.

Theorem 4.1

Let \(J\) be strongly convex over \( \ell ^2({\mathscr {I}})\), and let \({\mathscr {A}} \subseteq \ell ^2({\mathscr {I}})\) be weakly sequentially closed. Then \(J\) attains its minimum on \({\mathscr {C}}={\mathscr {A}} \cap {{\mathscr {H}}_{\le \mathfrak { r}}}\).

Under the assumptions of example (b), due to ellipticity of \(\mathbf {A}\) in (4.2) or \(\mathbf {A}^* \mathbf {A}\) in (4.3), the functional \(J\) is strongly convex, and one obtains well-posedness of these minimisation problems with (a) as a special case.

Since in case (c) the corresponding set \({\mathscr {A}}\) (the unit sphere) is not weakly closed, such simple arguments do not apply there.

4.1 Alternating Linear Scheme

We are interested in finding a minimiser, or even less ambitiously, we want to decrease the cost functional along our model class when the admissible set is \( {\mathscr {A}}= \ell ^2({\mathscr {I}})\).