Abstract

Due to limited reliability testing resources (e.g., budget, time, and manpower etc.), the reliability of a sophisticated system may not be able to accurately estimated by insufficient reliability testing data. The book chapter explores the reliability testing resources allocation problem for multi-state systems, so as to improve the accuracy of reliability estimation of an entire system. The Bayesian reliability assessment method is used to infer the unknown parameters of multi-state components by merging subjective information and continuous/discontinuous inspection data. The performance of each candidate testing resources allocation scheme is evaluated by the proposed uncertainty quantification metrics. By introducing the surrogate model, i.e., kriging model, the computational burden in seeking the optimal testing resources allocation scheme can be greatly reduced. The effectiveness and efficiency of the proposed method are exemplified via two illustrative case.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

Keywords

- Multi-state system

- Reliability testing resources allocation

- Bayesian reliability assessment

- Surrogate model

1 Introduction

Multi-state is one of the characteristics of advanced manufacturing systems and complex engineered systems [1, 2]. Both systems and components may manifest multiple states ranging from perfect working, through deterioration, to completely failed over time [1, 2]. Multi-state system (MSS) reliability modeling has, therefore, received considerable attentions in recent years as it is able to characterize the complicated deteriorating process of systems in a finer fashion than that of traditional binary-state models [1,2,3]. In engineering practice, many systems can be regarded as multi-state systems, e.g., manufacturing systems, power generating systems, flow transmission systems, etc. Various tools, such as the stochastic models, the universal generating functions, the recursive algorithms, and the simulation-based methods etc., have been developed to assess reliability and performance of MSSs in a computationally efficient manner.

Nevertheless, all the reported studies on MSS reliability assessment are based on the critical premise that the transition intensities and/or the state distributions of components and systems are exactly known in advance. Very limited attention has been placed on the parameter inference, which is a preceding task before conducting reliability assessment and enhancement of MSSs. Lisnianski et al. [4] introduced the point estimation of the transition intensities of a multi-state power-generating unit by defining a special Markov chain embedded in the observed capacity process. However, the results of point estimation are biased when data are sparse. The parameter uncertainty due to limited data and/or vague information has been taken into account in several existing works. For example, the transition intensities or the state distributions of components in an MSS were treated as fuzzy numbers [5, 6], interval values [7], and belief function [8]. Although these methods can quantify the uncertainty associated with reliability measures of interest from various angles, such uncertainty cannot be progressively reduced by collecting additional data. The Bayesian approach, which treats the unknown parameters to be inferred as random variables, enables reliability engineers to systematically synthesize the subjective information from experts and intuitive judgements with actual observed data. The estimates can be progressively updated as more data and information become available. In our earlier work, a Bayesian framework has been proposed to assess reliability and performance of MSSs [9]. Two scenarios, i.e., components are continuously and discontinuously inspected, have been discussed. The uncertainty of estimates will eventually propagate to the reliability measures of interest via a simulation method. Yet, as demonstrated in our illustrative cases, adding the same amount of additional observations to each component of an MSS may not yield an even contribution to uncertainty reduction of system reliability function. Therefore, it raises a new research question: how to allocate the additional reliability testing resources strategically if reliability engineers aim at further reducing the uncertainty associated with the reliability measures of interest?

The testing resources allocation problem has been reported in the existing literature. A methodology for allocating additional testing resources across the fault tree events with the purpose of minimizing the uncertainty of the top event probability has been investigated in Hamada et al. [10]. The events in a fault tree were binary-state in their work. Anderson-Cook et al. [11] developed an approach to assess the relative improvement in system reliability estimation for additional data from various types of aging components. The data for components could be pass/fail observations, degradation data, and lifetime data, and components can be in one of only two states, either functioning or failed. The aforementioned optimization question has been extended to a multi-objective optimization problem, in which the widths of the credible intervals of system and two subsystem reliability estimates were maximally reduced simultaneously by allocating limited testing resources [12]. Nonetheless, to date, the reliability testing resources allocation problem has rarely been studied for MSSs, and this chapter serves this purpose.

The remainder of this chapter is organized as follows: In Sect. 2, the Bayesian reliability assessment method developed in our earlier work is briefly reviewed first. It is followed by the proposed reliability testing resources allocation approach in Sect. 3. The details of evaluating performance of candidate allocation schemes, the kriging metamodel, and the optimization algorithm are elaborated. Two illustrative examples are presented in Sect. 4 to demonstrate the effectiveness of the proposed approach. A brief conclusion is given in Sect. 5.

2 Review of Bayesian Reliability Assessment for MSS

The MSS with ordered states [13] in question is assumed to be composed of M non-repairable statistically independent multi-state components, each of which has \(k_{l}\) (\(l \in \{ 1,\,2, \ldots ,\,M\}\)) different states distinguished by the possible performance capacities \({\mathbf{g}}_{l} = \{ g_{(l,1)} ,\,g_{(l,2)} , \ldots ,g_{{(l,k_{l} )}} \}\), where \(g_{(l,i)} < g_{(l,j)}\) for \(i < j\). The stochastic deteriorating behaviors of components are governed by the homogenous continuous-time Markov model. In this case, the probability of component l remaining at any particular state in a future time is statistically independent of its previous state. Many engineering systems can be characterized by the aforementioned stochastic model, such as manufacturing systems [2], power systems [1], and flow transmission systems [3].

The state-space diagram of multi-state component l is given in Fig. 1, where \(\lambda_{(i,j)}^{l}\) (\(i,j \in \{ 1,\,2,\, \ldots ,\,k_{l} \} ,\;i \ne j\)) denotes the constant intensity of component l transitioning from state i to state j. Given the initial condition that component l is at state u at \(t = 0\), the corresponding Kolmogorov differential equations can be formulated as:

where \(p_{(u,i)}^{l} (t)\) is the probability of component l being at state i at time instant t if it is initially at state u at \(t = 0\). The initial condition is set to be \(p_{(u,u)}^{l} (0) = 1\) and \(p_{(u,i)}^{l} (0) = 0\) (\(i \ne u\)) (\(i,\,u \in \{ 1,\,2,\, \ldots ,\,k_{l} \}\)). By resolving the differential equations, one can get the state probability \(p_{(u,i)}^{l} (t)\) as a function of \({\varvec{\uplambda}}^{l}\) (a vector of \(\lambda_{(i,j)}^{l}\), \(i,j \in \{ 1,\,2,\, \ldots ,\,k_{l} \} ,\;i \ne j\)) and time. Nevertheless, in this work, the transition intensities \({\varvec{\uplambda}}^{l}\) are unknown parameters to be estimated by observations.

In our earlier work, a Bayesian framework has been developed to infer the unknown parameters \({\varvec{\uplambda}}^{l}\) of multi-state components and assess reliability measures of a multi-state system [2]. It enables reliability engineers to systematically synthesize the subjective information from experts and intuitive judgements with actual observations, thereby, obtaining a balanced estimate. On the other side, the estimates from the Bayesian approach can be progressively updated as more data become available, and the uncertainty due to the limited data can also be quantified. The proposed Bayesian reliability assessment method for MSSs is composed of six steps as illustrated in Fig. 2. In Step 1, data are collected by conducting inspections. According to the data collection strategy, two types of data, i.e., continuous inspection data and discontinuous inspection data, are involved. The likelihood functions of the two types of data are constructed in Step 2. By merging the collected data with the prior knowledge of the unknown parameters, i.e., \({\varvec{\uplambda}}^{l}\), from Step 3, the Bayesian inference can be performed in Step 4 to yield the posterior distributions of the unknown parameters. In Step 5, by the Monte Carlo (MC) simulation, a set of samples of \({\varvec{\uplambda}}^{l}\) is randomly generated to get the corresponding universal generating functions (UGFs) of component l. The UGFs of the entire system, representing the system state distribution, can be derived by aggregating the UGFs of all the components. Consequently, reliability measures, such as the reliability function, the state probabilities, the instantaneous expected performance capacity, etc., can be evaluated in Step 6 via the UGFs of the entire system. The ensuing sections will review the technical details of this method.

2.1 Bayesian Parameter Inference for Multi-state Components

Following the general framework of Bayesian inference, the posterior distribution of the unknown parameters, i.e., \({\varvec{\uplambda}}^{l}\), of component l can be readily estimated by [9]:

where \(f^{prior} ({\varvec{\uplambda}}^{l} )\) is the prior distribution of unknown parameters \({\varvec{\uplambda}}^{l}\), whereas \(f^{post} ({\varvec{\uplambda}}^{l} |data)\) is the posterior distribution of \({\varvec{\uplambda}}^{l}\) given observations. \(l(data|{\varvec{\uplambda}}^{l} )\) is the likelihood function.

In accordance to the data collection strategy, two common scenarios, i.e., continuous inspection data and discontinuous inspection data, are studied to construct the corresponding likelihood functions.

Scenario I: Continuous Inspection Data

In this scenario, components are continuously inspected all the time, and the exact times of components transitioning from one state to another can be recorded. Thus, one can evaluate the following quantities:

-

(1)

The number of the transitions from state i to state j among all the observations, denoted as \(m_{(i,j)} (i > j,i \in \{ 2,3, \ldots ,k_{l} \} ,j \in \{ 1,2, \ldots ,k_{l} - 1\} )\);

-

(2)

The total time that components are remaining at state i, denoted as \(T_{i} \,\,(i \in \{ 2,3, \ldots ,k_{l} \} )\);

-

(3)

The total number of the transitions from state i, denoted as \(m_{i} (i = 2,\,3, \ldots ,n)\), and it can be computed by \(m_{i} = \sum\nolimits_{j = 1}^{i - 1} {m_{(i,j)} }\).

Bear in mind that the deterioration of each component is assumed to comply with a homogenous continuous-time Markov model, \(m_{i}\) follows a Poisson process, i.e., \(m_{i} \sim {\text{Poisson}}(\sum\nolimits_{j = 1}^{i - 1} {\lambda_{(i,j)}^{l} } \cdot T_{i} )\), and its probability density function is expressed as:

If a transition occurs at state i, the conditional probability \(q_{(i,j)}^{l}\)(\(i > j\), \(i \in \{ 2,3, \ldots ,k_{l} \}\), \(j \in \{ 1,2, \ldots ,k_{l} - 1\}\)) that the transition is from state \(i\) to state j is given by:

Thereby, the set of quantities \((m_{(i,1)} ,m_{(i,2)} , \ldots ,m_{(i,i - 1)} )\) (\(i \in \{ 2,3, \ldots ,k_{l} \}\)) follows the multinomial distribution with parameters \(m_{i}\) and \({\mathbf{q}}_{i}^{l} = (q_{(i,1)}^{l} ,q_{(i,2)}^{l} , \ldots ,q_{(i,i - 1)}^{l} )\), and the corresponding probability mass function can be formulated as:

Hence, the likelihood function is the product of Eqs. 3 and 5, and written as [9]:

Scenario II: Discontinuous Inspection Data

Continuous inspections may be costly in engineering practice. Alternatively, components can be inspected periodically or non-periodically. However, in this case, only the state of components at each inspection time and the time interval between two adjacent inspections are recorded. The collected data cannot reflect the time duration that components resides in each state and the exact paths that components degrade from the best state to the worst. If components are inspected periodically with a time interval of \(\Delta t\), the following quantities can be derived from discontinuous observations:

-

(1)

The number of inspections in which components are observed at state i in the last inspection and at state j after an inspection interval \(\Delta t\), denoted as \(m_{(i,j)}^{\Delta t}\);

-

(2)

The number of inspections in which the time interval between two adjacent inspections is \(\Delta t\) and components are observed at state i in the last inspection, denoted as \(m_{(i,j)}^{\Delta t}\), and one has \(m_{i}^{\Delta t} = \sum\nolimits_{j = 1}^{i} {m_{(i,j)}^{{\Delta t}} }\).

Under the assumption that a component’s deterioration follows a homogenous continuous-time Markov model, all the observations with the same observed state in the last inspection and the same time interval \(\Delta t\) between two adjacent inspections can be regarded as repeated s-independent trials. It can be characterized by a multinomial distribution. According to Eq. 1, on the condition that component l is observed at state i in the last inspection, the probability of component l being at state \(j \in \{ 1,\,2, \ldots ,i\}\) after \(\Delta t\), denoted as \(p_{(i,j)}^{l} (\Delta t)\), is a function of the inspection interval \(\Delta t\) and \({\varvec{\uplambda}}^{l}\). Hence, the set of quantities \((m_{(i,1)}^{\Delta t} ,m_{(i,2)}^{\Delta t} , \ldots ,m_{(i,i)}^{\Delta t} )\) follows a multinomial distribution with parameters \(m_{i}^{\Delta t}\) and \({\mathbf{p}}_{i}^{l} (\Delta t) = (p_{(i,1)}^{l} (\Delta t),p_{(i,2)}^{l} (\Delta t),\) \(\ldots ,\) \(p_{(i,i)}^{l} (\Delta t))\), and it is written as:

To generalize this scenario to a non-periodical case, a vector \(\Delta {\mathbf{t}} = (\Delta t_{1} ,\) \(\Delta t_{2} , \ldots \Delta t_{n} )\) (\(n \in \{ 1,2, \ldots \}\)) with finite time intervals is used to represent the distinct time intervals between two adjacent inspections in the cases of non-periodical inspections. The quantities \(m_{i}^{{\Delta t_{v} }}\) (\(i \in \{ 2,3, \ldots ,k_{l} \}\)) and \(m_{(i,j)}^{{\Delta t_{v} }}\) (\(j \le i\), \(i \in \{ 2,3, \ldots ,k_{l} \}\), \(j \in \{ 1,2, \ldots ,k_{l} \}\)) for each individual time interval \(\Delta t_{v} (v\, \in \,\{ 1,2, \ldots ,n\} )\) can be evaluated based on all the collected data. Thus, the likelihood function of all the observations under non-periodical inspections can be formulated as [9]:

By plugging Eq. 6 or 8 into the Bayesian formula Eq. 2, together with the prior knowledge \(f^{prior} ({\varvec{\uplambda}}^{l} )\), one can get the posterior distributions \(f^{post} ({\varvec{\uplambda}}^{l} |data)\) of \({\varvec{\uplambda}}^{l}\) via Eq. 2. Depending on the experts’ knowledge or historical data, a particular distribution, such as the Gamma distribution, the Beta distribution, etc., can be chosen for the prior distribution \(f^{prior} ({\varvec{\uplambda}}^{l} )\) [14]. Alternatively, the uniform distribution can be used as a non-informative prior if the prior knowledge is unavailable. The methods to determine the prior distribution in the Bayesian framework can be found in Wang et al. [14], Smith [15], Hamada et al. [16], and Kelly [17].

However, it should be noted that the analytical solutions to the posterior distributions of \({\varvec{\uplambda}}^{l}\) may not exist. In our study, the Markov Chain Monte Carlo (MCMC) method is used, as an alternative, to generate the posterior distribution via simulation. The technical details of the MCMC method are available in Hamada et al. [16] and Kelly [17].

2.2 Bayesian Reliability Assessment for Multi-state Systems

Given a particular set of the transition intensities \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)), the state distributions of components and/or systems at any time instant can be evaluated. By using the UGF, the state distribution of a multi-state component can be written as a polynomial form as \(u_{l} (z,t) = \sum\nolimits_{i = 1}^{{k_{l} }} {p_{(u,i)}^{l} (t)z^{{g_{(l,i)} }} }\), where \(p_{(u,i)}^{l} (t)\) derived by Eq. 1 is a function of \({\varvec{\uplambda}}^{l}\) and time. In the same fashion, the state distribution of an MSS can also be represented by an UGF as \(U_{S} (z,t) = \sum\nolimits_{i = 1}^{{N_{S} }} {p_{i} (t)z^{{g_{i} }} }\), where \(p_{i} (t)\) is the probability of the system staying at state i at time t and it is a function of \(p_{(u,i)}^{l} (t)\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)). As such, \(p_{i} (t)\) is also a function of \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)). \(g_{i}\) is the performance capacity of the system at state i. \(N_{S}\) is the number of system states. The UGF of the system can be recursively derived by the UGFs of all the components via composition operators [2, 18].

The reliability of the studied MSS is defined as the probability of the system’s performance capacity being not less than a specified demand level \(W\). Hence, the system reliability function can be formulated as:

where \(w_{j}\) (\(j \in \{ 1,\,2, \ldots ,H\}\)) is the possible value of W with the associated probability mass function \(\Pr \{ W = w_{j} \} = q_{j}\). \(1( \cdot )\) is a unity function, i.e., \(1(\rm{TRUE}) = 1\) and \(1({\text{FALSE}}) = 0\). As \(p_{i} (t)\) is completely determined by \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)), \(R(t)\) is, therefore, a function of \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)).

However, unlike most reported works in which the transition intensities \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)) of all the components are assumed to be pre-specified precise values, in this work, the transition intensities are estimated from the proposed Bayesian framework and characterized by a set of posterior distributions. Such uncertainty associated with the parameter inference will eventually propagate to the reliability measures of interests, say \(R(t)\), which are functions of the estimates. Hence, at any time instant, the system reliability evaluated based on the posterior distributions of the transition intensities is a random quantity. In our earlier work, a simulation-based method was developed to approximate the posterior distributions of system reliability at any time [9]. The basic procedures of the simulation method are as follows:

-

(1)

\(N_{sa}\), say \(N_{sa} = 1000 \sim 5000\), samples of the transition intensities \({\varvec{\uplambda}}^{l}\) for each component are randomly generated based on the posterior distributions.

-

(2)

The state distribution of each component with respect to the ith sample of the transition intensities \({\varvec{\uplambda}}^{l}\) can be produced by solving Eq. 1.

-

(3)

The system reliability function can be obtained by aggregating all the components’ UGFs solved by the ith sample of the transition intensities \({\varvec{\uplambda}}^{l}\).

-

(4)

By putting all the results from the \(N_{sa}\) samples together, the posterior distributions of the system reliability at any time instant can be approximated by fitting all the \(N_{sa}\) results with either a parametric distribution (e.g., normal, Weibull) or non-parametric distribution (e.g., the empirical distribution).

3 Optimal Testing Resources Allocation Strategy

The testing resources allocation problem concerns the sequential experiments in the reliability field, with which the best strategy to allocate the future available resources for a new data collection can be determined [11]. The role of the testing resources allocation in the progressive reliability evaluation of a product is depicted in Fig. 3. Reliability analysis is conducted based on the available initial data collected at the present Phase 1. From the analysis, the system reliability can be estimated and predicted. If the results are not credible enough, Phase 2 will be, therefore, involved to collect more data to further update the estimates and predictions. The sequential experiment process of collecting new data will continue whenever additional testing resources are available until the results of interest are satisfactory. The testing resources allocation is a decision-making action bridging the two adjacent phases, and it can provide a cost-efficient allocation strategy which yields a maximum improvement to the reliability estimates and predictions.

In our particular study, due to the limited data collected from reliability tests, the uncertainty of the estimates, i.e., the transition intensities \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2,\, \ldots ,M\}\)), and the reliability measures, i.e., reliability function, cannot be completely eliminated. These uncertainties have been quantified by the corresponding posterior distributions in the Bayesian framework as introduced in Sect. 2. On the other hand, by conducting sequential reliability tests, the newly collected data can be further used to reduce the uncertainty associated with the estimates and the reliability measures of interest. The specific objective of this study is, therefore, to determine the optimal scheme for the reliability testing resources allocation of the next phase, so as to reduce the uncertainty of the estimated reliability measures of interest as much as possible.

As seeking the optimal testing resources allocation strategy is extremely time-consuming, a metamodel-based approach is developed in this study to alleviate the computational burden. The basic steps of the proposed metamodel-based approach to identify the optimal testing resources allocation strategy are plotted in Fig. 4.

In Step 1, a set of candidate schemes for reliability testing resources allocation is randomly generated by the design of experiment (DOE), such as the full factorial experimental design, the Latin Hypercube Design (LHD), the Improved distributed Hypercube Sampling (IHS). The candidate schemes produced by the DOE are required to evenly spread over the decision space. In Step 2, the performance of each scheme generated in Step 1 will be evaluated. In our particular study, we are concerned with the uncertainty associated with the reliability measures of interest. The improvement to the uncertainty of system reliability estimation/prediction will be quantified by a metric. As evaluating, the performance of all the candidate schemes is computationally unaffordable, the kriging model, as a surrogate model, will be constructed in Step 3 to approximate the implicit relationship between the decision variables and the performance of candidate schemes. New candidate schemes together with their performance evaluation may be added into the initial DOE to update the kriging model until the accuracy of the kriging model is acceptable. In Step 4, the optimization algorithm, such as the genetic algorithm, will be directly performed on the kriging model to seek the global optimal solution, and it corresponds to the optimal testing resources allocation strategy that decision-makers are looking for. The technical details of some steps will be elaborated in the ensuing sections.

3.1 Evaluating Performance of Candidate Allocation Schemes

To determine the optimal testing resources allocation scheme, it is necessary to define a criterion to evaluate the performance of each candidate scheme. In this study, we are concerned with the uncertainty associated with the reliability measures of interest, and the testing resources allocation scheme which can maximally reduce the uncertainty of the estimated reliability measures is preferable. As reported in Anderson-Cook et al. [11], several possible metrics can be used to quantify the uncertainty of the reliability measures of interest, such as the width of a particular \((1 - \alpha ) \times 100\%\) confidence bound and the entropy of the estimate. Although these metrics are all asymptotically equivalent as claimed by Wynn [19], the different metrics will lead to different relative rankings of the candidate allocation schemes. In our study, as the system reliability function is uncertain due to the uncertainty associated with the transition intensities \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2, \ldots ,\,M\}\)), we choose the width of the \((1 - \alpha ) \times 100\%\) confidence bound as a metric, denoted as \(R_{(1 - \alpha ) \times 100\% } (t|f^{post} ({\varvec{\uplambda}}^{l} |data))\), to quantify the uncertainty of the system reliability at a particular time instant, as shown in Fig. 5a. If decision-makers concern with the uncertainty of the system reliability in a period of time, the integration of the width of the \((1 - \alpha ) \times 100\%\) confidence bound over the particular period of time, as depicted in Fig. 5b, can be used as an alternative metric. In this study, we will only focus on the former case in the illustrative examples.

The performance of a candidate allocation scheme is evaluated by examining the expected uncertainty of the reliability measures of interest after conducting the sequential experiments. The scheme with smaller expected uncertainty is preferable. Nevertheless, the expected uncertainty of the reliability measures cannot be derived analytically for each candidate scheme. In this study, a simulation-based approach is developed to evaluate the expected uncertainty of the reliability measures after carrying out a testing resources allocation scheme. The flowchart of the simulation-based approach is shown in Fig. 6. In Step 1, \(N_{d}\) samples of transition intensities \({\varvec{\uplambda}}^{l}\) (\(l \in \{ 1,\,2, \ldots ,\,M\}\)) are randomly drawn from the posterior distributions \(f^{post} ({\varvec{\uplambda}}^{l} |data)\). The posterior distributions are the estimates of \({\varvec{\uplambda}}^{l}\) based on the initial data at Phase 1 as shown in Fig. 3. And then, set the index \(i = 1\). It is followed by Step 2 in which the deterioration paths of components are randomly generated based on the ith random sample of \({\varvec{\uplambda}}^{l}\), and a set of new artificial observations can be collected based on the candidate allocation schemes. By merging both the initial observations from Phase 1 and the new artificial observations, the Bayesian inference introduced in Sect. 2.1 can be executed to update the posterior distributions of \({\varvec{\uplambda}}^{l}\) in Step 3, and then, in Step 4, the reliability of the entire system can be evaluated by the proposed approach in Sect. 2.2.

The uncertainty associated with the reliability measures of interest is quantified by the metrics, such as the width of the \((1 - \alpha ) \times 100\%\) confidence bound, in Step 5. If \(i < N_{d}\), set \(i = i + 1\) and go to Step 2. Otherwise, go to Step 6 to compute the expected value of the metrics, e.g., the expected width of the \((1 - \alpha ) \times 100\%\) confidence bounds of the reliability measures, based on the \(N_{d}\) results.

It should be noted that,

-

(1)

as the true values of \({\varvec{\uplambda}}^{l}\) are unknown and the new observations are artificially generated based on the posterior distributions of \({\varvec{\uplambda}}^{l}\), the expected uncertainty of the reliability measures from the simulation-based approach is not a true value, but a predictive value;

-

(2)

the simulation-based approach is very time-consuming, and it is computationally unaffordable to enumerate the performance of all the candidate schemes.

3.2 Kriging Model

To mitigate the computational burden in enumerating the performance of candidate schemes, the metamodeling technique is adopted in this study to approximate the implicit relationship between the decision variables (corresponding a particular candidate allocation scheme) and the performance of schemes, i.e., the expected width of \((1 - \alpha ) \times 100\%\) confidence bound. Many metamodeling tools can be used here, such as the polynomial response surface, the radial basis function, the kriging, the artificial neural networks, and support vector machine, etc. It is desired that a metamodel is capable of capturing both global and local trends with a few training samples. A comparative study on the performance of various metamodels has been reported in Jin et al. [20, 21]. In this study, we choose the kriging model as a surrogate model because it has extremely widespread applications due to its flexibility and high accuracy [22,23,24].

In essence, the kriging model is a semi-parametric interpolation technique based on statistical theory. The kriging model is composed by a polynomial model and a stochastic model as follows:

where \(P\) is the number of basic functions; \({\mathbf{f}}({\mathbf{x}}) = [f_{1} ({\mathbf{x}}),\,f_{2} ({\mathbf{x}}), \ldots ,\,f_{P} ({\mathbf{x}})]^{T}\) and \({\varvec{\upbeta}} = [\beta_{1} ,\beta_{2} , \ldots ,\beta_{P} ]^{T}\) are polynomial function of inputs \({\mathbf{x}}\) and the corresponding coefficients, respectively, and they provide a global approximation. While \(z({\mathbf{x}})\) is the lack of fit and is represented by a realization of a random process with mean zero and non-zero covariance. The covariance of the residuals at any two sites, say \(z({\mathbf{x}}_{i} )\) and \(z({\mathbf{x}}_{j} )\), can be expressed by:

where \(\sigma^{2}\) is the variance of \(z({\mathbf{x}})\); \(R({\mathbf{x}}_{i} ,\,{\mathbf{x}}_{j} )\) is the spatial correlation function of any two sites, i.e., \(z({\mathbf{x}}_{i} )\) and \(z({\mathbf{x}}_{j} )\) in the sample space. The correlation function \(R({\mathbf{x}}_{i} ,\,{\mathbf{x}}_{j} )\) plays an important role in determining the accuracy of the model. Many correlation functions can be chosen, such as linear, spherical, exponential, and Gaussian correlation functions, etc. Among these options, the Gaussian correlation function is the most popular, and it is given by:

where \(x_{i}^{k}\) and \(x_{j}^{k}\) are the \(k\) th elements of \({\mathbf{x}}_{i}\) and \({\mathbf{x}}_{j}\), respectively; \({\varvec{\uptheta}} = [\theta_{1} ,\,\theta_{2} , \ldots ,\theta_{n} ]^{T}\) are the correlation parameters which measure how fast the correlation between \({\mathbf{x}}_{i}\) and \({\mathbf{x}}_{j}\) decays with the distance between these two sites. \(\{ {\varvec{\uptheta}},\,{\varvec{\upbeta}},\,\sigma^{2} \}\) are the unknown parameters of a kriging model and they can be estimated via the maximum likelihood estimations with existing training samples.

The predicted mean value of the estimated response \(y\) at any un-sampled site \({\mathbf{x}}\) is:

and

Where the column vector \({\mathbf{y}}_{{\mathbf{s}}}\) contains the response values at all sample sites; f are the values of the polynomial function at all of the sample sites; \({\mathbf{r}}({\mathbf{x}})\) are the correlations between the un-sampled site x and all of the sample sites; and R is a correlation matrix of all of the sample sites. The predicted variance of the estimated response y at the un-sampled site x is:

where

and \(\hat{\sigma }_{y}^{2} ({\mathbf{x}})\) quantifies the interpolation uncertainty associated with the un-sampled site x.

Given a set of training samples, the unknown parameters, i.e., \(\{ {\varvec{\uptheta}},\,{\varvec{\upbeta}},\,\sigma^{2} \}\), in a kriging model can be estimated, and then the responses ast un-sampled sites can be predicted by Eq. 13 with the associated predicted variance given in Eq. 15. In this particular study, the inputs x correspond to a candidate testing resources allocation scheme, e.g., the number of additional specimens and/or the inspection interval for each component, and the response y is the performance of each scheme, e.g. the expected width of \((1 - \alpha ) \times 100\%\) confidence bound of the concerned reliability measure. The implicit relationship between the inputs and the response can be built up by the initial training samples from the DOE. The global accuracy of the kriging model can be quantified by the metrics [20], such as the R square, the Relative Average Absolute Error (RAAE), the Relative Maximum Absolute Error (RMAE), etc. If the accuracy of the kriging model is not satisfactory, additional training samples could be further generated by the sequential DOE to update the kriging model [25, 26]. The kriging model with acceptable accuracy will be used in the next step to seek the optimal testing resources allocation strategy.

3.3 Optimization Model and Algorithm

In this study, the reliability testing resources allocation for MSSs can be formulated as an optimization problem as follows:

where \({\mathbf{s}} = \{ s_{1} ,\,s_{2} , \ldots ,s_{M} \}\) and \({\mathbf{\Delta t}} = \{ \Delta t_{1} ,\,\Delta t_{2} , \ldots ,\Delta t_{M} \}\) are two sets of decision variables, representing the number of additional specimens to be allocated to each component and the inspection intervals for collecting data, respectively. A setting for \(\{ {\mathbf{s}} , { }{\mathbf{\Delta t}}\}\) corresponds to a candidate scheme for the reliability testing resources allocation. If \(\Delta t_{l}\) (\(l \in \{ 1,\,2, \ldots ,\,M\}\)) is set to be zero, it is the case where component l will be continuously inspected to collect observations. \(C_{0}\) is the cost constraint for the testing resources; \(s_{l}^{0}\) is the constraint for the maximum number of additional specimens of component l. \(E[ \cdot ]\) is expectation. The objective function, i.e., \(E[R_{(1 - \alpha ) \times 100\% } (t|f^{post} ({\varvec{\uplambda}}^{l} |data))|({\mathbf{s}} , { }{\mathbf{\Delta t}})]\), is replaced by the kriging model introduced in Sect. 3.2 to mitigate the computational burden.

It should be noted that the optimization problem in Eq. 17 involves both integer and real decision variables and the number of decision variables increases linearly with respect to the types of components in a system. An exhaustive examination of all the candidate solutions is not realistic due to the limited computational capability. In this study, the genetic algorithm (GA) is utilized to search the global optimal solution owing to its flexibility in terms of representing mixed variables in various optimization problems [27, 28].

The main procedures of implementing the GA to solve the specific optimization problem are as follows:

-

(1)

Population initialization. For our specific problem, the chromosome is composed of two parts, and it can be denoted as a string \({\mathbf{c}} = \{ s_{1} ,\,s_{2} , \ldots ,s_{M} ,\,\Delta t_{1} ,\,\) \(\Delta t_{2} , \ldots ,\Delta t_{M} \}\). The first M elements are non-negative integers, corresponding to the additional specimens for components, whereas the last M elements are non-negative real numbers, representing the inspection intervals for data collection. A set of \(N_{g}\) chromosomes, as the initial population, are randomly generated in the first iteration.

-

(2)

Fitness evaluation. The expected width of 90% confidence bounds of reliability measures serves as the fitness value of each chromosome, and it is predicted by the kriging model introduced in Sect. 3.2. The smaller expected width of 90% confidence bound, the higher the fitness value. The infeasible solutions which violate the constraints are handled by the penalty function approach.

-

(3)

New population generation. The roulette-wheel selection strategy is used to select chromosomes based on their fitness values from the present population to form a new generation of population for the next iteration. The crossover and mutation operators are used to produce new chromosomes to explore the unsearched solution space, while maintaining the diversity of a population. As the chromosome is composed of mixed decision variables, the crossover and mutation operators are performed separately for each of the two parts to keep the digits within their allowable bounds. \(N_{r}\) chromosomes with the highest fitness values will be directly merge into the new generation.

-

(4)

Iterative process termination. The optimization procedure terminates when the iteration count reaches \(N_{c}\). Otherwise, go to Step 2 for the next iteration.

It is worth noting that many other advanced optimization algorithms, such as the Tabu search, the simulated annealing (SA), the ant colony optimization (ACO), etc., can also be used here, instead of the GA, to solve the resulting optimization problem.

4 Illustrative Examples

The illustrative example is a three-unit multi-state power generating system as shown in Fig. 7. Components 1 and 2 connected in parallel constitute a subsystem. The performance capacities and the transition intensities of all the components are tabulated in Tables 1 and 2, respectively. The transition intensities are assumed to be unknown to the reliability engineers, but can be inferred by the proposed Bayesian approach. The system and subsystem are viewed as failure if their performance capacities are less the required demand level. In Example 1, we only focus on allocating the limited testing resources for components 1 and 2 to improve the reliability estimation of the subsystem at a particular time, while the testing resources are allocated for all the three components in Example 2.

4.1 Example 1

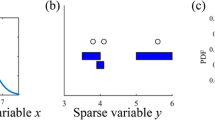

In this example, the testing resources allocation is only considered for components 1 and 2 to improve the reliability estimation of the subsystem at a specific time \(t = 3.0\) months. In other words, we expect to reduce the uncertainty of the subsystem reliability estimation at \(t = 3.0\) months. According to the transition intensities given in Table 2, 50 deterioration paths are randomly generated for components 1 and 2, respectively. Components 1 and 2 are supposed to be continuously inspected over time. The collected data are tabulated in Tables 3 and 4, and will be used to infer the posterior distributions of transition intensities. The prior distributions of transition intensities are set to be a uniform distribution in the range of [0, 0.5] month−1, together with the observations from 50 specimens, the posterior distributions of the transition intensities of the two components can be evaluated via the proposed Bayesian approach as shown in Figs. 8 and 9. Consequently, the subsystem reliability function can be estimated, as shown in Fig. 10, via the proposed simulation method in Sect. 2.2 if the required demand level W is given as tabulated in Table 5. The width of the 90% confidence bound of the subsystem reliability is 0.1087 at \(t = 3.0\) months. If the accuracy of the subsystem reliability function at \(t = 3.0\) months is not satisfactory, the inference results at the present stage can be viewed as Phase 1 shown in Fig. 3 and will facilitate the reliability testing resources allocation at Phase 2.

Suppose that the total budget for the reliability testing resources at Phase 2 is \(C_{0} = 29{,}500\) US dollars. The costs for conducting a test with continuous inspections for components 1 and 2 are 400 US dollars and 800 US dollars, respectively. A candidate testing resources allocation scheme, i.e., the additional number of reliability tests for each component, is denoted as \(\{ s_{1} ,\,s_{2} \}\) where \(s_{i} \in [0,\,50]\) (\(i \in \{ 1,\,2\}\)) is assumed as the decision space. By the Latin Hypercube Design (LHD), 15 candidate testing resources allocation schemes are randomly generated within the decision space. The performance, i.e., the expected width of \(90\%\) confidence bound of the subsystem reliability at \(t = 3.0\) months, of these candidate schemes are evaluated by the proposed approach in Sect. 3.1. However, it costs around 10 min to evaluate the performance for a candidate scheme, in which the sample size \(N_{d}\) is set to be 50. A kriging model, as depicted in Fig. 11, is therefore constructed to approximate the relationship between candidate schemes and the predicted performance of the schemes.

As seen in Fig. 11, with the increase of the numbers of reliability tests, the expected width of \(90\%\) confidence bound of the subsystem reliability at \(t = 3.0\) months declines. Additionally, adding the specimens for component 2 is more effective to reduce uncertainty than that of component 1, because the expected width of \(90\%\) confidence bound has a steeper decreasing trend along the \(s_{2}\) axis.

The resulting optimization problem with a budget constraint, i.e., \(400s_{1} + 800s_{2}\) \(\le 29{,}500\), can be resolved by the proposed GA algorithm. The optimal allocation strategy is \(\{ s_{1}^{*} = 17,\,s_{2}^{*} = 28\}\), and the corresponding expected width of confidence bound of the subsystem reliability at \(t = 3.0\) months is reduced to \(0.0852\). By replacing the time-consuming performance evaluation with a kriging model, the optimization algorithm takes less than 5.0 s via Matlab 2012 on a workstation with an Intel Xeon 2.10 GHz and 128 GB RAM when \(N_{g}\) and \(N_{c}\) are set to 50 and 1000, respectively.

4.2 Example 2

The proposed testing resources allocation approach is further validated in the entire system as shown in Fig. 7. The limited testing resources will be optimally distributed to the three components with the purpose of further reducing the uncertainty of the system reliability estimation at \(t = 3.0\) months. In addition to the data in Tables 3 and 4, 50 specimens of component 3 are supposed to be continuously inspected at Phase 1 and the collected data are tabulated in Table 6. The posterior distributions of transition intensities of the three components are evaluated by the proposed Bayesian method and depicted in Figs. 8, 9, and 12. They serve as the inputs for the decision-making at Phase 2. The possible values and the corresponding probabilities of the required demand W are given in Table 7. Therefore, the system reliability at any time instant can be evaluated via Eq. 9. The mean value and the 90% confidence bound of the estimated reliability function are shown in Fig. 13. At \(t = 3.0\) months, the width of the 90% confidence bound of the system reliability is 0.1368, and such uncertainty needs to be further reduced in Phase 2.

The budget for the reliability testing resources at Phase 2 is \(C_{0} = 97{,}500\) US dollars. The components can be continuously inspected or periodically inspected, and thus, a candidate testing resources allocation scheme can be represented by \(\{ s_{1} ,\,s_{2} ,\,s_{3} ,\Delta t_{1} ,\,\Delta t_{2} ,\,\Delta t_{3} \}\) where the number of additional specimens for each component \(s_{i} \in [0,\,50]\) (\(i \in \{ 1,\,2,\,3\}\)) and the inspection intervals for each component \(\Delta t_{i} \in [0,\,5]\) months (\(i \in \{ 1,\,2,\,3\}\)). The cost for conducting a test is associated with the inspection interval for data collection. In general, the cost of a test increases monotonically with the frequency of inspections. The following relationships are defined to link the inspection intervals with the cost for a single reliability test:

Component 1

Component 2

Component 3

where \(\Delta t_{i} = 0\) (\(i \in \{ 1,\,2,\,3\}\)) corresponds to the case of continuous inspections.

44 candidate allocation schemes are randomly generated via the LHD within the decision space and evaluated, and then, a kriging model is constructed to predict the performance, i.e., the expected width of \(90\%\) confidence bound of the system reliability at \(t = 3.0\) months, of a candidate scheme. The genetic algorithm with mixed decision variables is used to solve the optimal allocation scheme, and it takes around 30.0 s via Matlab 2012 on a workstation with an Intel Xeon 2.10 GHz and 128 GB RAM when \(N_{g}\) and \(N_{c}\) are set to 60 and 1000, respectively. The optimal testing resources allocation scheme is \(\{ s_{1}^{*} = 43,\,s_{2}^{*} = 39,\,s_{3}^{*} = 22,\,\Delta t_{1}^{*} =\,3.7,\) \(\Delta t_{2}^{*} = 1.1,\,\Delta t_{3}^{*} = 1.64\}\) with the expected width of \(90\%\) confidence bound equal to 0.0911 as predicted by the kriging model. By the flowchart in Fig. 6, the true performance of the optimal scheme is 0.0909 which is extremely close to the predicted value.

5 Conclusion

In this chapter, the testing resources allocation problem for MSSs is studied to optimally distribute the limited reliability testing resources to improve the accuracy of reliability estimation/prediction. The approach is on the base of the Bayesian reliability assessment method for MSSs with which both subjective information and actual continuous or discontinues inspection data can be merged to infer the unknown parameters, i.e., transition intensities \({\varvec{\uplambda}}^{l}\). The computational burden in the performance evaluation of candidate schemes is alleviated by introducing the kriging metamodel. The genetic algorithm is utilized to resolve the resulting optimization problem with mixed decision variables. Two illustrative examples are given to demonstrate the effectiveness and efficiency of the proposed method.

As reliability tests can be conducted at various physical levels of a system, allocating the limited testing resources across multiple levels of a system [29, 30], say system-level test, component-level test, is worth exploring in our future work.

References

Lisnianski A, Frenkel I, Ding Y (2010) Multi-state system analysis and optimization for engineers and industrial managers. Springer, London

Lisnianski A, Levitin G (2003) Multi-state system reliability: assessment. Optimization and applications. World Scientific, Singapore

Kuo W, Zuo MJ (2003) Optimal reliability modeling: principles and applications. Wiley, Hoboken, NJ

Lisnianski A, Elmakias D, Laredo D, Haim HB (2012) A multi-state Markov model for a short-term reliability analysis of a power generating unit. Reliab Eng Sys Saf 98(1):1–6

Ding Y, Zuo MJ, Lisnianski A, Tian Z (2008) Fuzzy multi-state systems: general definitions, and performance assessment. IEEE Trans Reliab 57(4):589–594

Liu Y, Huang HZ (2010) Reliability assessment for fuzzy multi-state systems. Int J Syst Sci 41(4):365–379

Li CY, Chen X, Yi XS, Tao JY (2011) Interval-valued reliability analysis of multi-state systems. IEEE Trans Reliab 60(4):595–606

Destercke S, Sallak M (2013) An extension of universal generating function in multi-state systems considering epistemic uncertainties. IEEE Trans Reliab 62(2):504–514

Liu Y, Lin P, Huang HZ (2015) Bayesian reliability and performance assessment for multi-state systems. IEEE Trans Reliab 64(1):394–409

Hamada M, Martz HF, Reese CS, Graves T, Johnson V, Wilson AG (2004) A fully Bayesian approach for combining multilevel failure information in fault tree quantification and optimal follow-on resource allocation. Reliab Eng Sys Saf 86(3):297–305

Anderson-Cook CM, Graves TL, Hamada MS (2009) Reliability allocation for reliability of a complex system with aging components. Qual Reliab Eng Int 25:481–494

Lu L, Chapman JL, Anderson-Cook CM (2013) A case study on selecting a best allocation of new data for improving the estimation precision of system and subsystem reliability using Pareto fronts. Technometrics 55(4):473–487

Yu K, Koren I, Guo Y (1994) Generalized multistate monotone coherent systems. IEEE Trans Reliab 43(2):242–250

Wang P, Youn BD, Xi Z, Kloess A (2009) Bayesian reliability analysis with evolving, insufficient, and subjective data sets. J Mech Des 131(11):111008

Smith JQ (1998) Decision analysis: a Bayesian approach. Chapman and Hall, London

Hamada MS, Wilson AG, Reese CS, Martz HF (2008) Bayesian reliability. Springer, London

Kelly D, Smith C (2011) Bayesian inference for probabilistic risk assessment. Springer, London

Levitin G (2005) The universal generating function in reliability analysis and optimization. Springer, London

Wynn HP (2004) Maximum entropy sampling and general equivalence theory. Advances in model-oriented design and analysis, contributions to statistics. Physica-Verlag, Heidelberg, pp 211–218

Jin R, Chen W, Simpson T (2001) Comparative studies of metamodeling techniques under multiple modelling criteria. Struct Multidiscip Optim 23:1–13

Jin R, Du X, Chen W (2003) The use of metamodeling techniques for optimization under uncertainty. Struct Multidiscip Optim 25:99–116

Cremona MA, Liu B, Hu Y, Bruni S, Lewis R (2016) Predicting railway wheel wear under uncertainty of wear coefficient, using universal kriging. Reliab Eng Sys Saf 154:49–59

Liu Y, Shi Y, Jiang T, Liu JZ, Wang WJ (2016) Metamodel-based direction guidance system optimization for improving efficiency of aircraft emergency evacuation. Comp Ind Eng 91:302–314

Zhang M, Gou W, Li L, Yang F, Yue Z (2016) Multidisciplinary design and multi-objective optimization on guide fins of twin-web disk using Kriging surrogate model. Struct Multidiscip Optim 55(1):361–373

Jin R, Chen W, Sudjianto A (2002) On sequential sampling for global metamodeling in engineering design. In: Proceedings of ASME 2002 design engineering technical conferences and computers and information in engineering conference, Montreal, Canada, pp 539–548

Wang GG, Shan S (2006) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129(4):370–380

Gen M, Yun YS (2006) Soft computing approach for reliability optimization: state-of-the-art survey. Reliab Eng Sys Saf 91(9):1008–1026

Levitin G (2006) Genetic algorithms in reliability engineering. Reliab Eng Sys Saf 91(9):975–976

Jiang T, Liu Y (2017) Parameter inference for non-repairable multi-state system reliability models by multi-level observation sequences. Reliab Eng Sys Saf 166:3–15

Liu Y, Chen CJ (2017) Dynamic reliability assessment for nonrepairable multistate systems by aggregating multilevel imperfect inspection data. IEEE Trans Reliab 66(2):281–297

Acknowledgements

The authors greatly acknowledge grant support from the National Natural Science Foundation of China under contract number 71371042 and the Fundamental Research Funds for the Central Universities under contract number ZYGX2015J082.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG

About this chapter

Cite this chapter

Liu, Y., Jiang, T., Lin, P. (2018). Optimal Testing Resources Allocation for Improving Reliability Assessment of Non-repairable Multi-state Systems. In: Lisnianski, A., Frenkel, I., Karagrigoriou, A. (eds) Recent Advances in Multi-state Systems Reliability. Springer Series in Reliability Engineering. Springer, Cham. https://doi.org/10.1007/978-3-319-63423-4_13

Download citation

DOI: https://doi.org/10.1007/978-3-319-63423-4_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-63422-7

Online ISBN: 978-3-319-63423-4

eBook Packages: EngineeringEngineering (R0)