Abstract

This chapter considers possible solutions for the adaptive attenuation of multiple sparse, unknown and time-varying narrow-band disturbances. The possible presence of low damped complex zeros in the vicinity of the attenuation region is also taken into account. The design of the underlying linear controller for the known disturbance case is itself a challenging problem and is discussed first. The adaptive schemes proposed are obtained by extending the linear solutions to the case of unknown disturbance characteristics. A comparative experimental evaluation of the various solutions on a test bench is given.

Keywords

- Central Controller

- Adaptive Regulation

- Task Execution Time

- Maximum Amplification

- Benchmark Specification

1 Introduction

In this chapter, the focus is on the strong attenuation of multiple sparsely located unknown and time-varying disturbances. It is assumed that the various tonal disturbances are separated from each other in the frequency domain by a distance in Hz at least equal to \(10\,\%\) of the frequency of the disturbance and that the frequencies of these disturbances vary over a wide frequency region.
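The sparsity assumption can be turned into a simple check. The sketch below is only an illustration: the text does not say which of two neighbouring frequencies the \(10\,\%\) refers to, so the function assumes the conservative reading (the higher of the two), and the name `sparsely_located` is hypothetical.

```python
def sparsely_located(freqs_hz, rel_gap=0.10):
    """Return True if every pair of adjacent tones is separated by at least
    rel_gap times the higher of the two frequencies (assumed reading)."""
    f = sorted(freqs_hz)
    return all(hi - lo >= rel_gap * hi for lo, hi in zip(f, f[1:]))


# Tones 15 Hz apart: the gap exceeds 10 % of each frequency.
print(sparsely_located([55.0, 70.0, 85.0]))
# Tones 4 Hz apart around 70 Hz: the gap is below 10 %.
print(sparsely_located([70.0, 74.0]))
```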

The problem is to assure in this context a certain number of performance indices such as global attenuation, disturbance attenuation at the frequency of the disturbances, a tolerated maximum amplification (water bed effect) and a good adaptation transient (see Sect. 12.3). The most difficult problem is to be sure that in all the configurations the maximum amplification is below a specified value. There is first a fundamental problem to solve: one has to be sure that in the known frequency case, for any combination of disturbances, the attenuation and the maximum amplification specifications are achieved. The adaptive approach can at best approach the performance of a linear controller designed for the case of known disturbances. So before discussing the appropriate adaptation schemes, one has to consider the design methods to be used in order to satisfy these constraints in the known frequencies case. This will be discussed in Sect. 13.2.

2 The Linear Control Challenge

In this section, the linear control challenge will be presented for the case of rejection of multiple narrow-band disturbances taking also into account the possible presence of low damped complex zeros in the vicinity of the border of the operational zone. Considering that in a linear context all the information is available, the objective is to set up the best achievable performance for the adaptive case.

Assuming that only one tonal vibration has to be cancelled in a frequency region far from the presence of low damped complex zeros and that the models of the plant and of the disturbance are known, the design of a linear regulator is relatively straightforward, using the internal model principle (see Chaps. 7 and 12).

The problem becomes much more difficult if several tonal vibrations (sinusoidal disturbances) have to be attenuated simultaneously, since the water bed effect may become significant without a careful shaping of the sensitivity function when using the internal model principle. Furthermore, if the frequencies of the disturbance may be close to those of some very low damped complex zeros of the plant, the internal model principle should be used with care even in the case of a single disturbance (see Sect. 12.5).

This section will examine the various aspects of the design of a linear controller in the context of multiple tonal vibrations and the presence of low damped complex zeros. It will review various linear controller strategies.

To be specific these design aspects will be illustrated in the context of the active vibration control system using an inertial actuator, described in Sect. 2.2 and which has been already used for the case of a single tonal disturbance.

In this system, the tonal vibrations are located in the range of frequencies between 50 and 95 Hz. The frequency characteristics of the secondary path are given in Sect. 6.2.

Assume that a tonal vibration (or a narrow-band disturbance) p(t) is introduced into the system affecting the output y(t). The effect of this disturbance is centred at a specific frequency. As mentioned in Sect. 12.2.3, the internal model principle (IMP) can be used to asymptotically reject the effects of a narrow-band disturbance at the system’s output if the system has enough gain in this region.

It is important also to take into account the fact that the secondary path (the actuator path) has no gain at very low frequencies and very low gain in high frequencies near \(0.5\,{f_s}\). Therefore, the control system has to be designed such that the gain of the controller be very low (or zero) in these regions (preferably 0 at 0 Hz and \(0.5\,{f_s}\)). Not taking into account these constraints can lead to an undesirable stress on the actuator.

In order to assess how good the controller is, it is necessary to define some control objectives that have to be fulfilled. For the remainder of this section, the narrow-band disturbance is assumed to be known and composed of 3 sinusoidal signals with frequencies of 55, 70 and 85 Hz. The control objective is to attenuate each component of the disturbance by a minimum of 40 dB, while limiting the maximum amplification to 9 dB within the frequency region of operation. Furthermore, it will be required that low values of the modulus of the input sensitivity function be achieved outside the operation region.

The use of the IMP completed with auxiliary real (aperiodic) poles, which has been used in Chap. 11 as a basic design for adaptive attenuation of one unknown disturbance, may not work satisfactorily for the case of multiple unknown disturbances even if it may provide good performance in some situations [1]. Even in the case of a single tonal disturbance, if low damped complex zeros near the border of the operation region are present, this simple design is not satisfactory and auxiliary low damped complex poles have to be added (see Chap. 12, Sect. 12.6).

One can say in general that the IMP does too much in terms of attenuation of tonal disturbances, which of course can generate in certain cases unacceptable water bed effects. In practice one does not need a full rejection of the disturbance, but just a certain level of attenuation.

Three linear control strategies for attenuation of multiple narrow-band disturbances will be considered:

1. Band-stop filters (BSF) centred at the frequencies of the disturbances

2. IMP combined with tuned notch filters

3. IMP with additional fixed resonant poles

The controller design will be done in the context of pole placement. The initial desired closed-loop poles for the design of the central controller defined by the characteristic polynomial \(P_0\) include all the stable poles of the secondary path model and the free auxiliary poles are all set at 0.3. The fixed part of the central controller numerator is chosen as \(H_R(z^{-1})=(1-z^{-1})\cdot (1+z^{-1})\) in order to open the loop at 0 Hz and 0.5 \({f_s}\).
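The effect of the fixed part \(H_R(z^{-1})=(1-z^{-1})\cdot (1+z^{-1})\) can be checked numerically. The sketch below evaluates \(H_R\) on the unit circle and shows that it vanishes at 0 Hz and at \(0.5\,{f_s}\), which is what opens the loop at these frequencies. The sampling frequency of 800 Hz and the helper names are assumptions made for the illustration only.

```python
import cmath
import math


def polymul(a, b):
    """Multiply two polynomials given as coefficient lists in powers of z^-1."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def polyval_zinv(coeffs, f_hz, fs):
    """Evaluate c0 + c1 z^-1 + ... at z = exp(j 2 pi f / fs)."""
    zinv = cmath.exp(-2j * math.pi * f_hz / fs)
    return sum(c * zinv ** k for k, c in enumerate(coeffs))


fs = 800.0  # assumed sampling frequency, not stated in this section
H_R = polymul([1.0, -1.0], [1.0, 1.0])  # coefficients of 1 - z^-2

for f in (0.0, 70.0, 0.5 * fs):
    print(f, abs(polyval_zinv(H_R, f, fs)))
```

The modulus is zero (up to rounding) at the two ends of the frequency axis and of order one inside the operation region, so the controller gain is only constrained where the secondary path has no gain.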

2.1 Attenuation of Multiple Narrow-Band Disturbances Using Band-Stop Filters

The purpose of this method is to allow the choice of the desired attenuation and of the bandwidth of attenuation for each narrow-band component of the disturbance. Choosing the level of attenuation and the bandwidth makes it possible to preserve acceptable characteristics of the sensitivity functions outside the attenuation bands, which is very useful in the case of multiple narrow-band disturbances. This is the main advantage with respect to the classical internal model principle which, in the case of several narrow-band disturbances, may lead to unacceptable values of the modulus of the output sensitivity function outside the attenuation regions as a consequence of the complete cancellation of the disturbances. The controller design technique uses the shaping of the output sensitivity function in order to impose the desired attenuation of narrow-band disturbances. This shaping technique has been presented in Sect. 7.2.

The process output can be written as (see Footnote 1)

where

is called the secondary path of the system.

As specified in the introduction, the hypothesis of constant dynamic characteristics of the AVC system is considered (similar to [2, 3]). The denominator of the secondary path model is given by

the numerator is given by

and d is the integer delay, i.e. the number of sampling periods (see Footnote 2).

The control signal is given by

with

where \(H_S(q^{-1})\) and \(H_R(q^{-1})\) represent fixed (imposed) parts in the controller and \(S'(q^{-1})\) and \(R'(q^{-1})\) are computed.

The basic tool is a digital filter \(S_{BSF_i}(z^{-1})/P_{BSF_i}(z^{-1})\) with the numerator included in the controller polynomial S and the denominator as a factor of the desired closed-loop characteristic polynomial, which will assure the desired attenuation of a narrow-band disturbance (index \(i\in \{1,\cdots ,n\}\)).

The BSFs have the following structure

resulting from the discretization of a continuous filter (see also [4, 5])

using the bilinear transformation. This filter introduces an attenuation of

at the frequency \(\omega _{i}\). Positive values of \(M_i\) denote attenuations (\(\zeta _{n_i}<\zeta _{d_i}\)) and negative values denote amplifications (\(\zeta _{n_i}>\zeta _{d_i}\)). Details on the computation of the corresponding digital BSF have been given in Chap. 7 (see Footnote 3).
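A minimal sketch of such a band-stop cell is given below. It assumes that the continuous filter is the usual second-order form \((s^2+2\zeta _{n}\omega s+\omega ^2)/(s^2+2\zeta _{d}\omega s+\omega ^2)\), so that the attenuation at \(\omega \) is \(M=20\log _{10}(\zeta _{d}/\zeta _{n})\), which agrees with the sign convention stated above. The frequency pre-warping, the sampling frequency of 800 Hz and the function names are assumptions of this illustration and may differ from the procedure of Chap. 7.

```python
import cmath
import math


def bsf_digital(f_hz, m_db, zeta_d, fs):
    """Digital BSF (num, den) in powers of z^-1 from the bilinear transform.

    zeta_n is deduced from the desired attenuation m_db (positive = attenuation).
    """
    zeta_n = zeta_d * 10.0 ** (-m_db / 20.0)
    k = 2.0 * fs
    w0 = k * math.tan(math.pi * f_hz / fs)  # pre-warped frequency (assumption)

    def quad(zeta):
        # (s^2 + 2 zeta w0 s + w0^2) with s = k (1 - z^-1)/(1 + z^-1),
        # multiplied by (1 + z^-1)^2
        return [k * k + 2 * zeta * w0 * k + w0 * w0,
                2 * w0 * w0 - 2 * k * k,
                k * k - 2 * zeta * w0 * k + w0 * w0]

    num, den = quad(zeta_n), quad(zeta_d)
    return [c / den[0] for c in num], [c / den[0] for c in den]


def gain_db(num, den, f_hz, fs):
    """Gain in dB of num/den at frequency f_hz."""
    zinv = cmath.exp(-2j * math.pi * f_hz / fs)
    n = sum(c * zinv ** i for i, c in enumerate(num))
    d = sum(c * zinv ** i for i, c in enumerate(den))
    return 20.0 * math.log10(abs(n / d))


fs = 800.0  # assumed sampling frequency
num, den = bsf_digital(70.0, 40.0, 0.1, fs)
print(gain_db(num, den, 70.0, fs))   # gain at the central frequency
print(gain_db(num, den, 200.0, fs))  # gain far from the central frequency
```

With pre-warping the gain at the central frequency equals \(-M\) dB exactly, while \(\zeta _{d}\) alone sets how quickly the gain returns to 0 dB away from it.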

Remark

The design parameters for each BSF are the desired attenuation (\(M_i\)), the central frequency of the filter (\(\omega _i\)) and the damping of the denominator (\(\zeta _{d_i}\)). The denominator damping is used to adjust the frequency bandwidth of the BSF. For very small values of the frequency bandwidth the influence of the filters on frequencies other than those defined by \(\omega _i\) is negligible. Therefore, the number of BSFs and subsequently that of the narrow-band disturbances that can be compensated can be large.

For n narrow-band disturbances, n BSFs will be used
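The cascade of n cells can be sketched as a product of second-order polynomials. The example below builds the combined numerator and denominator for the three tones of 55, 70 and 85 Hz and verifies that, with a small denominator damping, each cell barely affects its neighbours. The cell design repeats the assumptions of a standard bilinear discretization with pre-warping; the sampling frequency of 800 Hz, the damping value and all names are hypothetical.

```python
import cmath
import math


def polymul(a, b):
    """Multiply two polynomials given as coefficient lists in powers of z^-1."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def bsf_digital(f_hz, m_db, zeta_d, fs):
    """One band-stop cell (num, den), bilinear transform with pre-warping."""
    zeta_n = zeta_d * 10.0 ** (-m_db / 20.0)
    k = 2.0 * fs
    w0 = k * math.tan(math.pi * f_hz / fs)

    def quad(zeta):
        return [k * k + 2 * zeta * w0 * k + w0 * w0,
                2 * w0 * w0 - 2 * k * k,
                k * k - 2 * zeta * w0 * k + w0 * w0]

    num, den = quad(zeta_n), quad(zeta_d)
    return [c / den[0] for c in num], [c / den[0] for c in den]


def gain_db(num, den, f_hz, fs):
    zinv = cmath.exp(-2j * math.pi * f_hz / fs)
    n = sum(c * zinv ** i for i, c in enumerate(num))
    d = sum(c * zinv ** i for i, c in enumerate(den))
    return 20.0 * math.log10(abs(n / d))


fs = 800.0                  # assumed sampling frequency
tones = [55.0, 70.0, 85.0]  # disturbance frequencies used in this section
S_bsf, P_bsf = [1.0], [1.0]
for f in tones:
    num, den = bsf_digital(f, 40.0, 0.01, fs)  # 40 dB, narrow bandwidth
    S_bsf, P_bsf = polymul(S_bsf, num), polymul(P_bsf, den)

print(len(S_bsf) - 1, len(P_bsf) - 1)  # both polynomials have order 2n
for f in tones:
    print(f, gain_db(S_bsf, P_bsf, f, fs))
```

Each tone is attenuated by very nearly 40 dB in the cascade, which illustrates the remark that narrow cells have a negligible influence at the other frequencies.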

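As stated before, the objective is that of shaping the output sensitivity function. \(S(z^{-1})\) and \(R(z^{-1})\) are obtained as solutions of the Bezout equation

$$\begin{aligned} P(z^{-1}) = A(z^{-1})S(z^{-1}) + z^{-d}B(z^{-1})R(z^{-1}), \end{aligned}$$(13.12)

where

$$\begin{aligned} S(z^{-1}) = H_S(z^{-1})S'(z^{-1}), \quad R(z^{-1}) = H_{R_1}(z^{-1})R'(z^{-1}), \end{aligned}$$(13.13)

and \(P(z^{-1})\) is given by

$$\begin{aligned} P(z^{-1}) = P_0(z^{-1})P_{BSF}(z^{-1}). \end{aligned}$$(13.14)

In the last equation, \(P_{BSF}\) is the product of the denominators of all the BSFs, (13.11), and \(P_0\) defines the initial imposed poles of the closed-loop system in the absence of the disturbances (also allowing robustness constraints to be satisfied). The fixed part of the controller denominator \(H_S\) is in turn factorized into

$$\begin{aligned} H_S(z^{-1}) = S_{BSF}(z^{-1})H_{S_1}(z^{-1}), \end{aligned}$$(13.15)

where \(S_{BSF}\) is the combined numerator of the BSFs, (13.11), and \(H_{S_1}\) can be used if necessary to satisfy other control specifications. \(H_{R_1}\) is similar to \(H_{S_1}\) and allows fixed parts to be introduced in the controller’s numerator if needed (like opening the loop at certain frequencies). It is easy to see that the output sensitivity function becomes

$$\begin{aligned} \frac{A(z^{-1})S(z^{-1})}{P(z^{-1})} = \frac{A(z^{-1})S_{BSF}(z^{-1})H_{S_1}(z^{-1})S'(z^{-1})}{P_0(z^{-1})P_{BSF}(z^{-1})} \end{aligned}$$(13.16)

and the shaping effect of the BSFs upon the sensitivity functions is obvious. The unknowns \(S'\) and \(R'\) are solutions of

$$\begin{aligned} P_0(z^{-1})P_{BSF}(z^{-1}) = A(z^{-1})S_{BSF}(z^{-1})H_{S_1}(z^{-1})S'(z^{-1}) + z^{-d}B(z^{-1})H_{R_1}(z^{-1})R'(z^{-1}) \end{aligned}$$(13.17)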

and can be computed by putting (13.17) into matrix form (see also [5]). The size of the matrix equation that needs to be solved is given by

where \(n_A\), \(n_B\) and d are respectively the order of the plant’s model denominator, numerator and delay (given in (13.3) and (13.4)), \(n_{H_{S_1}}\) and \(n_{H_{R_1}}\) are the orders of \(H_{S_1}(z^{-1})\) and \(H_{R_1}(z^{-1})\) respectively and n is the number of narrow-band disturbances. Equation (13.17) has a unique minimal degree solution for \(S'\) and \(R'\), if \(n_P\le n_{Bez}\), where \(n_P\) is the order of the pre-specified characteristic polynomial \(P(q^{-1})\). Also, it can be seen from (13.17) and (13.15) that the minimal orders of \(S'\) and \(R'\) will be:

In Fig. 13.1, one can see the improvement obtained using BSF with respect to the case when IMP with real auxiliary poles is used. The dominant poles are the same in both cases. The input sensitivity function is tuned before introducing the BSFs.
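The matrix form of a Bezout equation used in this subsection can be sketched as follows. The example solves \(A\,S+B_d\,R=P\) for the minimal degree \(S\) and \(R\), where \(B_d\) stands for \(z^{-d}B\) with any fixed parts already multiplied into \(A\) and \(B_d\). The plant and the desired polynomial are toy values chosen for the illustration, not those of the test bench, and the small Gaussian elimination routine is only there to keep the sketch self-contained.

```python
def polymul(a, b):
    """Multiply two polynomials given as coefficient lists in powers of z^-1."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def solve_linear(M, rhs):
    """Gauss-Jordan elimination with partial pivoting for a small dense system."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("singular system: A and z^-d B are not coprime")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(n):
            if r != col:
                fac = aug[r][col] / aug[col][col]
                for c in range(col, n + 1):
                    aug[r][c] -= fac * aug[col][c]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def bezout(A, Bd, P):
    """Minimal degree S, R such that A*S + Bd*R = P (Sylvester matrix form)."""
    nA, nB = len(A) - 1, len(Bd) - 1
    n = nA + nB
    if len(P) > n:
        raise ValueError("degree of P too large for a minimal degree solution")
    M = [[0.0] * n for _ in range(n)]
    for j in range(nB):              # columns acting on the coefficients of S
        for i, a in enumerate(A):
            M[i + j][j] = a
    for j in range(nA):              # columns acting on the coefficients of R
        for i, b in enumerate(Bd):
            M[i + j][nB + j] = b
    x = solve_linear(M, list(P) + [0.0] * (n - len(P)))
    return x[:nB], x[nB:]


A = [1.0, -1.5, 0.7]   # toy plant denominator (hypothetical)
Bd = [0.0, 1.0, 0.5]   # toy z^-d B (hypothetical)
P = [1.0, -0.9, 0.2]   # toy desired closed-loop polynomial
S, R = bezout(A, Bd, P)
lhs = [x + y for x, y in zip(polymul(A, S), polymul(Bd, R))]
print(S, R, lhs)
```

The size of the matrix grows with the orders of all the factors, which is why the text later looks for a smaller equivalent equation in the adaptive case.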

2.2 IMP with Tuned Notch Filters

This approach is based on the idea of considering an optimal attenuation of the disturbance taking into account both the zeros and poles of the disturbance model. It is assumed that the model of the disturbance is a notch filter and the disturbance is represented by

$$\begin{aligned} p(t) = \frac{D_p(\rho z^{-1})}{D_p(z^{-1})}\,e(t), \end{aligned}$$

where e(t) is a zero mean white Gaussian noise sequence and

$$\begin{aligned} D_p(z^{-1}) = 1+\alpha z^{-1}+z^{-2} \end{aligned}$$(13.20)

is a polynomial with roots on the unit circle (see Footnote 4).

In (13.20), \(\alpha = -2\cos \left( 2\pi \omega _1 T_s\right) \), \(\omega _1\) is the frequency of the disturbance in Hz, and \(T_s\) is the sampling time. \(D_p(\rho z^{-1})\) is given by:

$$\begin{aligned} D_p(\rho z^{-1}) = 1+\rho \alpha z^{-1}+\rho ^2 z^{-2}, \end{aligned}$$

with \(0<\rho <1\). The roots of \(D_p(\rho z^{-1})\) are on the same radial lines as those of \(D_p(z^{-1})\) but inside the unit circle, and therefore stable [6].

This model is pertinent for representing narrow-band disturbances as shown in Fig. 13.2, where the frequency characteristics of this model for various values of \(\rho \) are shown.
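The geometric property of the roots can be verified with a few lines of code. The sketch below builds \(D_p(z^{-1})=1+\alpha z^{-1}+z^{-2}\) and \(D_p(\rho z^{-1})=1+\rho \alpha z^{-1}+\rho ^2z^{-2}\) with \(\alpha = -2\cos (2\pi \omega _1T_s)\) and compares the modulus and angle of their roots. The tone of 70 Hz, the sampling frequency of 800 Hz, the value of \(\rho \) and the function names are assumptions of the example.

```python
import cmath
import math


def dp_coeffs(f_hz, ts, rho=1.0):
    """Coefficients of D_p(rho z^-1) in powers of z^-1 (rho = 1 gives D_p)."""
    alpha = -2.0 * math.cos(2.0 * math.pi * f_hz * ts)
    return [1.0, rho * alpha, rho * rho]


def roots_in_z(c):
    """Roots in z of 1 + c1 z^-1 + c2 z^-2, i.e. of z^2 + c1 z + c2."""
    disc = cmath.sqrt(c[1] * c[1] - 4.0 * c[2])
    return (-c[1] + disc) / 2.0, (-c[1] - disc) / 2.0


ts = 1.0 / 800.0  # assumed sampling time
f1, rho = 70.0, 0.95

for r in (1.0, rho):
    z1, _ = roots_in_z(dp_coeffs(f1, ts, r))
    # modulus of the root and the frequency (Hz) given by its angle
    print(r, abs(z1), cmath.phase(z1) / (2.0 * math.pi * ts))
```

Both polynomials give the same angle, hence the same frequency, while the modulus of the roots goes from 1 to \(\rho \).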

Using the output sensitivity function, the output of the plant in the presence of the disturbance can be expressed as

or alternatively as

where

In order to minimize the effect of the disturbance upon y(t), one should minimize the variance of \(\beta (t)\). One has two tuning devices, \(H_S\) and \(P_{aux}\). Minimizing the variance of \(\beta (t)\) is equivalent to searching for \(H_S\) and \(P_{aux}\) such that \(\beta (t)\) becomes a white noise [5, 7]. The obvious choices are \(H_S=D_p\) (which corresponds to the IMP) and \(P_{aux}=D_p(\rho z^{-1})\). Of course this development can be generalized for the case of multiple narrow-band disturbances. Figure 13.1 illustrates the effect of this choice upon the output sensitivity function. As can be seen, the results are similar to those obtained with BSF.
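With the choices \(H_S=D_p\) and \(P_{aux}=D_p(\rho z^{-1})\), the output sensitivity function contains the factor \(D_p(z^{-1})/D_p(\rho z^{-1})\). The sketch below evaluates the modulus of this factor alone, which is only one part of the complete sensitivity function, to show that it is zero at the disturbance frequency and close to one elsewhere. The numerical values (70 Hz tone, 800 Hz sampling, \(\rho =0.95\)) and the function name are assumptions.

```python
import cmath
import math


def notch_factor_gain(f_eval, f_tone, fs, rho):
    """Modulus of D_p(z^-1)/D_p(rho z^-1) at frequency f_eval (Hz)."""
    alpha = -2.0 * math.cos(2.0 * math.pi * f_tone / fs)
    zinv = cmath.exp(-2j * math.pi * f_eval / fs)
    num = 1.0 + alpha * zinv + zinv ** 2
    den = 1.0 + rho * alpha * zinv + (rho ** 2) * zinv ** 2
    return abs(num / den)


fs, f_tone, rho = 800.0, 70.0, 0.95  # assumed values
for f in (20.0, 65.0, 70.0, 75.0, 200.0):
    print(f, notch_factor_gain(f, f_tone, fs, rho))
```

Taking \(\rho \) closer to 1 narrows the region where the factor departs from one, at the price of poles closer to the unit circle.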

2.3 IMP Design Using Auxiliary Low Damped Complex Poles

The idea is to add a number of fixed auxiliary resonant poles which will act effectively as \(\rho \)-filters for a few frequencies and as an approximation of the \(\rho \)-filters at the other frequencies. This means that a number of the real auxiliary poles used in the basic IMP design will be replaced by a number of resonant complex poles. The basic ad hoc rule is that the number of these resonant poles is equal to the number of the low damped complex zeros located near the border of the operation region plus \(n-1\) (n is the number of tonal disturbances).

For the case of 3 tonal disturbances located in the operation region 50 to 95 Hz taking also into account the presence of the low damped complex zeros, the locations and the damping of these auxiliary resonant poles are summarized in Table 13.1. The poles at 50 and 90 Hz are related to the presence in the neighbourhood of low damped complex zeros. The poles at 60 and 80 Hz are related to the 3 tonal disturbances to be attenuated. The effect of this design with respect to the basic design using real auxiliary poles is illustrated in Fig. 13.3.
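As an illustration of the counting rule and of how such poles enter the characteristic polynomial, the sketch below converts a (frequency, damping) pair into a second-order factor in \(z^{-1}\) through the usual mapping \(z=e^{sT_s}\). The damping values are placeholders chosen for the example since the entries of Table 13.1 are not reproduced here, and the sampling frequency of 800 Hz is also an assumption.

```python
import math


def resonant_pole_pair(f_hz, zeta, fs):
    """Factor 1 + p1 z^-1 + p2 z^-2 with poles at exp(s Ts), where
    s = -zeta w0 +/- j w0 sqrt(1 - zeta^2) and w0 = 2 pi f_hz."""
    w0 = 2.0 * math.pi * f_hz
    ts = 1.0 / fs
    radius = math.exp(-zeta * w0 * ts)
    angle = w0 * math.sqrt(1.0 - zeta * zeta) * ts
    return [1.0, -2.0 * radius * math.cos(angle), radius * radius]


n_tones = 3          # tonal disturbances to attenuate
n_border_zeros = 2   # low damped complex zeros near the border (50 and 90 Hz)
n_resonant = n_border_zeros + n_tones - 1

fs = 800.0  # assumed sampling frequency
# (frequency in Hz, damping): dampings are hypothetical placeholders
specs = [(50.0, 0.1), (60.0, 0.3), (80.0, 0.3), (90.0, 0.1)]
factors = [resonant_pole_pair(f, z, fs) for f, z in specs]

print(n_resonant, len(factors))
for (f, z), c in zip(specs, factors):
    print(f, z, c, math.sqrt(c[2]))  # last value: modulus of the pole pair
```

The rule gives four resonant poles for this configuration, in agreement with the four frequencies quoted in the text.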

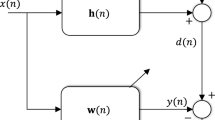

3 Interlaced Adaptive Regulation Using Youla–Kučera IIR Parametrization

The adaptive algorithm developed in Chap. 12 uses an FIR structure for the Q-filter. In this section, a new algorithm is developed, using an IIR structure for the Q filter in order to implement the linear control strategies using tuned notch filters (tuned auxiliary resonant poles). The use of this strategy is mainly dedicated to the case of multiple unknown tonal disturbances.

As indicated previously, since \(D_p(\rho z^{-1})\) will define part of the desired closed-loop poles, it is reasonable to consider an IIR Youla–Kučera filter of the form \(B_Q(z^{-1})/A_Q(z^{-1})\) with \(A_Q(z^{-1})=D_p(\rho q^{-1})\) (which will automatically introduce \(D_p(\rho q^{-1})\) as part of the closed-loop poles). \(B_Q\) will introduce the internal model of the disturbance. In this context, the controller polynomials R and S are defined by

and the poles of the closed-loop are given by:

\(R_0(z^{-1})\), \(S_0(z^{-1})\) are the numerator and denominator of the central controller

and the closed-loop poles defined by the central controller are the roots of

It can be seen from (13.25) and (13.26) that the new controller polynomials conserve the fixed parts of the central controller.

Using the expression of the output sensitivity function (AS / P) the output of the system can be written as follows:

where the closed-loop poles are defined by (13.27) and where w(t) is defined as:

Comparing (13.32) with (12.20) from Chap. 12, one can see that they are similar except that \(S_0\) is replaced by \(A_QS_0\) and \(P_0\) by \(A_QP_0\). Therefore if \(A_Q\) is known, the algorithm given in Chap. 12 for the estimation of the Q FIR filter can be used for the estimation of \(B_Q\). In fact this will be done using an estimation of \(A_Q\). A block diagram of the interlaced adaptive regulation using the Youla–Kučera parametrization is shown in Fig. 13.4. The estimation of \(A_Q\) is discussed next.

3.1 Estimation of \(A_Q\)

Assuming that the plant model equals the true plant in the frequency range where the narrow-band disturbances are introduced, it is possible to get an estimation of p(t), named \(\hat{p}(t)\), using the following expression

where w(t) was defined in (13.33). The main idea behind this algorithm is to consider the signal \(\hat{p}(t)\) as

where \(\left\{ c_i,\omega _i,\beta _i\right\} \ne 0\), n is the number of narrow-band disturbances and \(\eta \) is a noise affecting the measurement. It can be verified that, after two steps of transient, \(\left( 1-2\cos (2\pi \omega _i T_s)q^{-1}+q^{-2}\right) \cdot c_i\sin \left( \omega _i t+\beta _i\right) =0\) [8]. The objective is then to find the parameters \(\left\{ \alpha _i\right\} _{i=1}^{n}\) that make \(D_p(q^{-1})\hat{p}(t)=0\).

The previous product can be equivalently written as \(D_p(q^{-1})\hat{p}(t+1)=0\) and its expression is

where n is the number of narrow-band disturbances.
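The annihilation property on which this estimation relies can be checked numerically for a single sinusoid. In the sketch below the amplitude, phase, tone frequency and sampling frequency are arbitrary values chosen for the illustration.

```python
import math

fs = 800.0                 # assumed sampling frequency
ts = 1.0 / fs
f1 = 70.0                  # tone frequency in Hz (example value)
alpha = -2.0 * math.cos(2.0 * math.pi * f1 * ts)

# Sampled sinusoid with arbitrary amplitude and phase
p = [1.3 * math.sin(2.0 * math.pi * f1 * k * ts + 0.4) for k in range(50)]

# Apply (1 + alpha q^-1 + q^-2); the first two samples are the transient
x = [p[k] + alpha * p[k - 1] + p[k - 2] for k in range(2, len(p))]
print(max(abs(v) for v in x))

# The same filter tuned to a wrong frequency leaves a residual
alpha_bad = -2.0 * math.cos(2.0 * math.pi * 75.0 * ts)
x_bad = [p[k] + alpha_bad * p[k - 1] + p[k - 2] for k in range(2, len(p))]
print(max(abs(v) for v in x_bad))
```

The residual is at rounding level only when \(\alpha \) matches the tone, which is what makes it usable as an adaptation error.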

Defining the parameter vector as

and the observation vector at time t as:

where

Equation (13.37) can then be simply represented by

Assuming that an estimation of \(\hat{D}_p(q^{-1})\) is available at the instant t, the estimated product is written as follows:

where \(\hat{\theta }_{D_p}(t)\) is the estimated parameter vector at time t. Then the a priori prediction error is given by

and the a posteriori adaptation error using the estimation at \(t+1\)

Equation (13.46) has the standard form of an a posteriori adaptation error [9], which allows the use of the standard parameter adaptation algorithm (PAA) introduced in Chap. 4 (Eqs. (4.121)–(4.123)):

The PAA defined in (4.121)–(4.123) is used with \(\phi (t) = \phi _{D_p}(t)\), \(\hat{\theta }(t)=\hat{\theta }_{D_p}(t)\) and \(\varepsilon ^\circ (t+1)=\varepsilon ^\circ _{D_p}(t+1)\). For implementation, since the objective is to make \(x(t+1)\rightarrow 0\), the implementable a priori adaptation error is defined as follows:

Additional filtering can be applied on \(\hat{p}(t)\) to improve the signal-to-noise ratio. Since a frequency range of interest was defined, a bandpass filter can be used on \(\hat{p}(t)\). Once an estimation of \(D_p\) is available, \(A_Q=D_p(\rho q^{-1})\) is immediately generated. Since the estimated \(\hat{A}_Q\) will be used for the estimation of the parameters of \(B_Q\) one needs to show that: \(\lim _{t\rightarrow \infty } \hat{A}_Q(z^{-1})=A_Q(z^{-1 })\). This is shown in Appendix C.
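For a single tone, the estimation of \(D_p\) and the generation of \(A_Q\) can be sketched with a scalar recursive least squares update. This is an illustration under simplifying assumptions (one noise-free sinusoid, constant unit forgetting factors, hypothetical function and variable names), not the exact PAA of Chap. 4.

```python
import math


def estimate_alpha(p_hat, f0=1000.0):
    """Scalar RLS estimate of alpha such that
    p(t+1) + alpha p(t) + p(t-1) = 0 (single-tone case)."""
    theta, gain = 0.0, f0
    for t in range(1, len(p_hat) - 1):
        phi = p_hat[t]
        # a priori error: the product D_p p(t+1) computed with the current estimate
        eps0 = p_hat[t + 1] + p_hat[t - 1] + theta * phi
        denom = 1.0 + phi * gain * phi
        theta -= gain * phi * eps0 / denom
        gain -= (gain * phi) ** 2 / denom
    return theta


fs = 800.0   # assumed sampling frequency
f1 = 70.0    # true tone frequency (example value)
rho = 0.95   # assumed value of rho
p_hat = [math.sin(2.0 * math.pi * f1 * k / fs + 0.3) for k in range(400)]

alpha_hat = estimate_alpha(p_hat)
f_hat = math.acos(-alpha_hat / 2.0) * fs / (2.0 * math.pi)
A_Q_hat = [1.0, rho * alpha_hat, rho ** 2]  # coefficients of D_p(rho q^-1)
print(alpha_hat, f_hat, A_Q_hat)
```

Once \(\hat{\alpha }\) is available, \(\hat{A}_Q\) follows by scaling the coefficients with powers of \(\rho \), with no root computation involved.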

3.2 Estimation of \(B_Q(q^{-1})\)

Taking into account (13.12), (13.15), (13.16), and (13.17), it remains to compute \(B_Q(z^{-1})\) such that

Turning back to (13.26) one obtains

and taking into consideration also (13.29) it results

Once an estimation algorithm is developed for polynomial \(\hat{A}_Q(q^{-1})\), the next step is to develop the estimation algorithm for \(\hat{B}_Q(q^{-1})\). Assuming that the estimation \(\hat{A}_Q(t)\) of \(A_Q(z^{-1})\) is available, one can incorporate this polynomial into the adaptation algorithm defined in Sect. 12.2.2. Using (13.32) and (13.27) and assuming that an estimation of \(\hat{B}_Q(q^{-1})\) is available at the instant t, the a priori error is defined as the output of the closed-loop system written as follows (see Footnote 5)

where the notations (see Footnote 6)

have been introduced.

Substituting (13.53) in (13.55) one gets:

where

tends asymptotically to zero since it is the output of an asymptotically stable filter whose input is a Dirac pulse.

The equation for the a posteriori error takes the form (see Footnote 7)

where

and n is the number of narrow-band disturbances. The convergence towards zero for the signal \(\upsilon _1(t+1)\) is assured by the fact that \(\lim _{t\rightarrow \infty } \hat{A}_Q(t,z^{-1})=A_Q(z^{-1 })\) (see Appendix C). Since \(\upsilon ^{f}(t+1)\) and \(\upsilon _1(t+1)\) tend towards zero, (13.63) has the standard form of an adaptation error equation (see Chap. 4 and [9]), and the following PAA is proposed:

There are several possible choices for the regressor vector \(\varPhi _1(t)\) and the adaptation error \(\nu (t+1)\), because there is a strictly positive real condition for stability related to the presence of the term \(\frac{1}{A_Q}\) in (13.63). For the case where \(\nu (t+1)=\varepsilon (t+1)\), one has \(\nu ^\circ (t+1)=\varepsilon ^\circ (t+1)\), where

For the case where \(\nu (t+1)=\hat{A}_Q\varepsilon (t+1)\):

These various choices result from the stability analysis given in Appendix C. They are detailed below and summarized in Table 13.2.

- \(\varPhi _1(t)=\phi _1(t)\). In this case, the prediction error \(\varepsilon (t+1)\) is chosen as adaptation error \(\nu (t+1)\) and the regressor vector is \(\varPhi _1(t) = \phi _1(t)\). Therefore, the stability condition is: \(H'=\frac{1}{A_Q}-\frac{\lambda _2}{2}\) (\(\max _t \lambda _2(t)\le \lambda _2<2\)) should be strictly positive real (SPR).

- \(\nu (t+1) = \hat{A}_Q\varepsilon (t+1)\). The adaptation error is the prediction error \(\varepsilon (t+1)\) filtered through \(\hat{A}_Q\). The regressor vector is \(\varPhi _1(t) = \phi _1(t) \) and the stability condition is modified to: \(H' = \frac{\hat{A}_Q}{A_Q}-\frac{\lambda _2}{2}\) (\(\max _t \lambda _2(t)\le \lambda _2<2\)) should be SPR where \(\hat{A}_Q\) is a fixed estimation of \(A_Q\).

- \(\varPhi _1(t) = \phi _1^f(t)\). Instead of filtering the adaptation error, the observations can be filtered to relax the stability condition (see Footnote 8). By filtering the observation vector \(\phi _1(t)\) through \(\frac{1}{\hat{A}_Q}\) and using \(\nu (t+1) = \varepsilon (t+1)\), the stability condition is: \(H'=\frac{\hat{A}_Q}{A_Q}-\frac{\lambda _2}{2}\) (\(\max _t \lambda _2(t)\le \lambda _2<2\)) should be SPR, where \(\phi _1^f(t) = \frac{1}{\hat{A}_Q}\phi _1(t)\) (\(\hat{A}_Q\) is a fixed estimation of \(A_Q\)).

- \(\varPhi _1(t) = \phi _1^f(t)= \frac{1}{\hat{A}_Q(t)}\phi _1(t)\) where \(\hat{A}_Q=\hat{A}_Q(t)\) is the current estimation of \(A_Q\). When filtering through a current estimation \(\hat{A}_Q(t)\) the condition is similar to the previous case except that it is only valid locally [9].

It is this last option which is used in [10] and in Sect. 13.5.
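The difference between the stability conditions listed above can be explored numerically by checking the sign of the real part of \(H'\) on a frequency grid. The sketch below does this for a single tone, assuming \(A_Q=D_p(\rho z^{-1})\) with roots inside the unit circle (so only the positivity of the real part remains to be checked), \(\lambda _2=1\), a sampling frequency of 800 Hz and hypothetical function names. A grid test is an indication, not a proof.

```python
import cmath
import math


def polyval_zinv(coeffs, w):
    """Evaluate a polynomial in z^-1 at z = exp(j w)."""
    zinv = cmath.exp(-1j * w)
    return sum(c * zinv ** k for k, c in enumerate(coeffs))


def min_real_part(num, den, lam2, n_grid=2000):
    """Minimum over [0, pi] of Re{num/den - lam2/2}; positive suggests SPR."""
    worst = float("inf")
    for i in range(n_grid + 1):
        w = math.pi * i / n_grid
        h = polyval_zinv(num, w) / polyval_zinv(den, w) - lam2 / 2.0
        worst = min(worst, h.real)
    return worst


def a_q(f_hz, fs, rho):
    """Coefficients of D_p(rho z^-1) for a tone at f_hz."""
    alpha = -2.0 * math.cos(2.0 * math.pi * f_hz / fs)
    return [1.0, rho * alpha, rho * rho]


fs, rho, lam2 = 800.0, 0.95, 1.0   # assumed values
A_Q = a_q(70.0, fs, rho)           # "true" denominator
A_Q_hat = a_q(71.0, fs, rho)       # estimate with a 1 Hz frequency error

print(min_real_part([1.0], A_Q, lam2))    # H' = 1/A_Q - lam2/2
print(min_real_part(A_Q, A_Q, lam2))      # H' with a perfect estimate
print(min_real_part(A_Q_hat, A_Q, lam2))  # H' with an imperfect estimate
```

In this example the unfiltered condition fails, while filtering with an estimate close to \(A_Q\) keeps the real part positive over the whole grid, which is the motivation for the filtered options.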

The following procedure is applied at each sampling time for adaptive operation:

1. Get the measured output \(y(t+1)\) and the applied control u(t) to compute \(w(t+1)\) using (13.33).

2. Obtain the filtered signal \(\hat{p}(t+1)\) from (13.35).

3. Compute the implementable a priori adaptation error with (13.48).

4. Estimate \(\hat{D}_p(q^{-1})\) using the PAA and compute at each step \(\hat{A}_Q(q^{-1})\).

5. Compute \(w^{f}(t)\) with (13.69).

6. Compute \(w_1(t+1)\) with (13.58).

7. Put the filtered signal \(w_2^f(t)\) in the observation vector, as in (13.68).

8. Compute the a priori adaptation error defined in (13.74).

9. Estimate the \(B_Q\) polynomial using the parametric adaptation algorithm (13.70)–(13.72).

10. Compute and apply the control (see Fig. 13.4):

$$\begin{aligned} S_0u(t) = -R_0y(t+1) - H_{S_0}H_{R_0}\left( \hat{B}_Q(t)w(t+1)-\hat{A}_Q^{*}\hat{u}_Q(t)\right) . \end{aligned}$$(13.76)

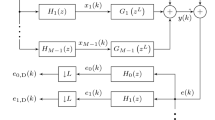

4 Indirect Adaptive Regulation Using Band-Stop Filters

In this section, an indirect adaptive regulation scheme will be developed for implementing the attenuation of multiple unknown narrow-band disturbances using band-stop filters centred at the frequencies corresponding to spikes in the spectrum of the disturbance. The principle of the linear design problem has been discussed in Sect. 13.2.1.

The design of the BSF for narrow-band disturbance attenuation is further simplified by considering a Youla–Kučera parametrization of the controller [2, 11–13]. By doing this, the dimension of the matrix equation that has to be solved is reduced significantly and therefore the computation load will be much lower in the adaptive case.

In order to implement this approach in the presence of unknown narrow-band disturbances, one needs to estimate in real time the frequencies of the spikes contained in the disturbance. System identification techniques can be used to estimate the ARMA model of the disturbance [3, 14]. Unfortunately, finding the frequencies of the spikes from the estimated model of the disturbance requires the computation in real time of the roots of an equation of order \(2\cdot n\), where n is the number of spikes. Therefore, this approach is applicable in the case of one or possibly two narrow-band disturbances [1, 2]. What is needed is an algorithm which can directly estimate the frequencies of the various spikes of the disturbance. Several methods have been proposed [15]. The adaptive notch filter (ANF) is particularly interesting and has been reviewed in a number of articles [6, 16–21]. In this book, the estimation approach presented in [22, 23] will be used. Combining the frequency estimation procedure and the control design procedure, an indirect adaptive regulation system for attenuation of multiple unknown and/or time-varying narrow-band disturbances is obtained.

In the present context, the hypothesis of constant dynamic characteristics of the AVC system is made (like in [3]). Furthermore, the corresponding control model is supposed to be accurately identified from input/output data.

4.1 Basic Scheme for Indirect Adaptive Regulation

The equation describing the system has been given in Sect. 13.2. The basic scheme for indirect adaptive regulation is presented in Fig. 13.5. In the context of unknown and time-varying disturbances, a disturbance observer followed by a disturbance model estimation block has to be used in order to obtain the information on the disturbance characteristics needed to update the controller parameters.

With respect to Eq. (13.1), it is supposed that

represents the effect of the disturbance on the measured output (see Footnote 9).

Under the hypothesis that the plant model parameters are constant and that an accurate identification experiment can be run, a reliable estimate \(\hat{p}(t)\) of the disturbance signal can be obtained using the following disturbance observer

A disturbance model estimation block can then be used to identify the frequencies of the sines in the disturbance. With this information, the control parameters can directly be updated using the procedure described in Sect. 13.2.1. To deal with time-varying disturbances, the Bezout equation (13.17) has to be solved at each sampling instant in order to adjust the output sensitivity function. Nevertheless, given the size of this equation (see (13.18)), a significant part of the controller computation time would be consumed to solve this equation. To reduce the complexity of this equation, a solution based on the Youla–Kučera parametrization is described in the following section.

4.2 Reducing the Computational Load of the Design Using the Youla–Kučera Parametrization

The attenuation of narrow-band disturbances using band-stop filters (BSF) has been presented in Sect. 13.2.1 in the context of linear controllers.

In an indirect adaptive regulation scheme, the Diophantine equation (13.17) has to be solved either at each sampling time (adaptive operation) or each time when a change in the narrow-band disturbances’ frequencies occurs (self-tuning operation). The computational complexity of (13.17) is significant (in the perspective of its use in adaptive regulation). In this section, we show how the computation load of the design procedure can be reduced by the use of the Youla–Kučera parametrization.

As before, a multiple band-stop filter, (13.11), should be computed based on the frequencies of the multiple narrow-band disturbance (the problem of frequencies estimation will be discussed in Sect. 13.4.3).

Suppose that a nominal controller is available, as in (13.28) and (13.29), that assures nominal performances for the closed-loop system in the absence of narrow-band disturbances. This controller satisfies the Bezout equation

Since \(P_{BSF}(z^{-1})\) will define part of the desired closed-loop poles, it is reasonable to consider an IIR Youla–Kučera filter of the form \(\frac{B_Q(z^{-1})}{P_{BSF}(z^{-1})}\) (which will automatically introduce \(P_{BSF}(z^{-1})\) as part of the closed-loop poles). For this purpose, the controller polynomials are factorized as

where \(B_Q(z^{-1})\) is an FIR filter that should be computed in order to satisfy

for \(P(z^{-1})=P_0(z^{-1})P_{BSF}(z^{-1})\), and \(R_0(z^{-1})\), \(S_0(z^{-1})\) given by (13.28) and (13.29), respectively. It can be seen from (13.80) and (13.81), using (13.28) and (13.29), that the new controller polynomials conserve the fixed parts of the nominal controller.

Equation (13.18) gives the size of the matrix equation to be solved if the Youla–Kučera parametrization is not used. Using the previously introduced YK parametrization, it will be shown here that a smaller size matrix equation can be found that allows to compute the \(B_Q(z^{-1})\) filter so that the same shaping be introduced on the output sensitivity function (13.16). This occurs if the controller denominator \(S(z^{-1})\) in (13.81) is the same as the one given in (13.13), i.e.,

where \(H_S(z^{-1})\) has been replaced by (13.15).

Replacing \(S(z^{-1})\) in the left term with its formula given in (13.81) and rearranging the terms, one obtains

and taking into consideration also (13.29) it results

which is similar to (13.54) except that band-stop filters are used instead of notch filters.

In the last equation, the left side of the equal sign is known and on its right side only \(S'(z^{-1})\) and \(B_Q(z^{-1})\) are unknown. This is also a Bezout equation which can be solved by finding the solution to a matrix equation of dimension

As can be observed, the size of the new Bezout equation is reduced in comparison to (13.18) by \(n_A+n_{H_{S_0}}\). For systems with large dimensions, this has a significant influence on the computation time. Taking into account that the nominal controller is a unique and minimal degree solution of the Bezout equation (13.79), we find that the left hand side of (13.85) is a polynomial of degree

which is equal to the quantity given in (13.86). Therefore, the solution of the simplified Bezout equation (13.85) is unique and of minimal degree. Furthermore, the order of the \(B_Q\) FIR filter is equal to \(2\cdot n\).

Figure 13.6 summarizes the implementation of the Youla–Kučera parametrized indirect adaptive controller.

4.3 Frequency Estimation Using Adaptive Notch Filters

In order to use the presented control strategy in the presence of unknown and/or time-varying narrow-band disturbances, one needs an estimation in real time of the frequencies of the spikes in the spectrum of the disturbance. Based on this real-time estimation, the band-stop filters will be designed in real time.

In the framework of narrow-band disturbance rejection, it is usually supposed that the disturbances are in fact sinusoidal signals with variable frequencies. It is assumed that the number of narrow-band disturbances is known (similar to [2, 3, 8]). A technique based on ANFs (adaptive notch filters) will be used to estimate the frequencies of the sinusoidal signals in the disturbance (more details can be found in [6, 23]).

The general form of an ANF is

where the polynomial \(A_{f}(z^{-1})\) is such that the zeros of the transfer function \(H_{f}(z^{-1})\) lie on the unit circle. A necessary condition for a monic polynomial to satisfy this property is that its coefficients have a mirror symmetric form

Another requirement is that the poles of the ANF should be on the same radial lines as the zeros but slightly closer to the origin. Using filter denominators of the general form \(A_{f}(\rho z^{-1})\) with \(\rho \) a positive real number smaller than but close to 1, the poles have the desired property and are in fact located on a circle of radius \(\rho \) [6].
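The two properties above can be checked numerically on a single second-order cell. The sketch below assumes a numerator \(1+a^{f}z^{-1}+z^{-2}\) (the simplest mirror symmetric polynomial) with \(a^{f}=-2\cos (2\pi f/f_s)\), and a denominator obtained by replacing \(z^{-1}\) with \(\rho z^{-1}\). The function name `anf_cell` and the values 70 Hz, 800 Hz and \(\rho =0.95\) are illustrative assumptions, not taken from the text.

```python
import numpy as np

def anf_cell(freq_hz, fs_hz, rho):
    """Return (numerator, denominator) of one notch cell, in powers of z^-1."""
    a = -2.0 * np.cos(2.0 * np.pi * freq_hz / fs_hz)
    num = np.array([1.0, a, 1.0])              # A_f(z^-1): mirror symmetric coefficients
    den = np.array([1.0, rho * a, rho ** 2])   # A_f(rho z^-1): same polynomial, scaled argument
    return num, den

num, den = anf_cell(freq_hz=70.0, fs_hz=800.0, rho=0.95)

# 1 + c1 z^-1 + c2 z^-2 = 0 is equivalent to z^2 + c1 z + c2 = 0,
# so np.roots on the same coefficient vector gives the roots in the z-plane.
zeros = np.roots(num)
poles = np.roots(den)
```

With these values the zeros should lie on the unit circle at the angles \(\pm 2\pi \cdot 70/800\) rad, and the poles on the same radial lines at radius \(\rho \).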

The estimation algorithm will be detailed next. It is assumed that the disturbance signal (or a good estimation) is available.

A cascade construction of second-order ANF filters is considered. Their number is given by the number of narrow-band signals, whose frequencies have to be estimated. The main idea behind this algorithm is to consider the signal \(\hat{p}(t)\) as having the form

where \(\eta (t)\) is a noise affecting the measurement and n is the number of narrow-band signals with different frequencies.

The ANF cascade form will be given by (this is an equivalent representation of Eqs. (13.88) and (13.89))

Next, the estimation of one spike’s frequency is considered, assuming convergence of the other \(n-1\), which can thus be filtered out of the estimated disturbance signal, \(\hat{p}(t)\), by applying

The prediction error is obtained from

and can be computed based on one of the \(\hat{p}^j(t)\) to reduce the computational complexity. Each cell can be adapted independently after prefiltering the signal by the others. Following the Recursive Prediction Error (RPE) technique, the gradient is obtained as

The parameter adaptation algorithm can be summarized as

where \(\hat{a}^{f_j}\) are estimates of the true \(a^{f_j}\), which are related to the frequencies of the narrow-band signals by \(\omega _{f_j}=f_s\cdot \arccos (-\frac{a^{f_j}}{2})\) (in rad/s), where \(f_s\) is the sampling frequency (in Hz).
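A minimal sketch of the estimation for a single cell (\(n=1\)) is given below. It is not a transcription of (13.92)–(13.96): it assumes a noise-free sinusoid, a cell of the form \((1+a z^{-1}+z^{-2})/(1+\rho a z^{-1}+\rho ^2 z^{-2})\), a scalar adaptation gain with forgetting factor, and it keeps the a priori prediction error in the filter memory. The projection of \(\hat{a}\) inside \((-2,2)\) is an added safeguard. The function name `anf_rpe_single` and all numerical values (800 Hz sampling, 70 Hz tone, 60 Hz initial guess) are illustrative assumptions.

```python
import numpy as np

def anf_rpe_single(x, fs_hz, f_init_hz, rho=0.9, lam=0.98, p0=1.0):
    """Track the frequency (Hz) of one sinusoid in x with a second-order ANF cell."""
    a = -2.0 * np.cos(2.0 * np.pi * f_init_hz / fs_hz)   # initial parameter estimate
    p = p0                                               # scalar adaptation gain
    eps1 = eps2 = 0.0                                    # past prediction errors
    psi1 = psi2 = 0.0                                    # past gradients
    f_hat = np.full(len(x), float(f_init_hz))
    for t in range(2, len(x)):
        # Prediction error: output of the notch cell driven by x.
        eps = x[t] + a * x[t - 1] + x[t - 2] - rho * a * eps1 - rho ** 2 * eps2
        # Gradient psi = -d(eps)/d(a), i.e. -(x(t-1) - rho*eps(t-1)) filtered by 1/A_f(rho q^-1).
        psi = -(x[t - 1] - rho * eps1) - rho * a * psi1 - rho ** 2 * psi2
        # Gain update with forgetting factor lam, then parameter update.
        p = p / (lam + p * psi ** 2)
        a = a + p * psi * eps
        a = min(max(a, -1.999), 1.999)                   # safeguard: keep |a| < 2
        eps2, eps1 = eps1, eps
        psi2, psi1 = psi1, psi
        # omega = fs * arccos(-a/2) in rad/s, converted here to Hz.
        f_hat[t] = fs_hz * np.arccos(-a / 2.0) / (2.0 * np.pi)
    return f_hat

fs = 800.0
time = np.arange(4000) / fs
x = np.sin(2.0 * np.pi * 70.0 * time)        # synthetic disturbance estimate
f_hat = anf_rpe_single(x, fs, f_init_hz=60.0)
```

For several tones, one such cell would be run per frequency, each cell being fed by the signal prefiltered by the other cells, as described above.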

4.3.1 Implementation of the Algorithm

The design parameters that need to be provided to the algorithm are the number of narrow-band spikes in the disturbance (n), the desired attenuations and damping of the BSFs, either as unique values (\(M_i=M,~\zeta _{d_i}=\zeta _{d},~\forall i \in \{1,\ldots , n\}\)) or as individual values for each of the spikes (\(M_i\) and \(\zeta _{d_i}\)), and the central controller (\(R_0\), \(S_0\)) together with its fixed parts (\(H_{R_0}\), \(H_{S_0}\)) and of course the estimation of the spikes’ frequencies. The control signal is computed by applying the following procedure at each sampling time:

1. Get the measured output \(y(t+1)\) and the applied control \(u(t)\) to compute the estimated disturbance signal \(\hat{p}(t+1)\) as in (13.78).

2. Estimate the disturbances’ frequencies using adaptive notch filters, Eqs. (13.92)–(13.96).

3. Calculate \(S_{BSF}(z^{-1})\) and \(P_{BSF}(z^{-1})\) as in (13.8)–(13.11).

4. Find \(Q(z^{-1})\) by solving the reduced order Bezout equation (13.85).

5. Compute and apply the control using (13.5) with R and S given respectively by (13.80) and (13.81) (see also Fig. 13.6):

$$\begin{aligned} S_0u(t) = -R_0y(t+1) - H_{S_0}H_{R_0}\left( B_Q(t)w(t+1)-P_{BSF}^{*}u_Q(t)\right) . \end{aligned}$$(13.97)

4.4 Stability Analysis of the Indirect Adaptive Scheme

The stability analysis of this scheme can be found in [24].

5 Experimental Results: Attenuation of Three Tonal Disturbances with Variable Frequencies

Samples of the experimental results obtained with the direct adaptive regulation scheme (see Sect. 13.2.3 and [25]), with the interlaced adaptive regulation scheme (see Sect. 13.3) and with the indirect adaptive regulation scheme (see Sect. 13.4) on the test bench described in Chap. 2, Sect. 2.2 are given in this section. A step change in the frequencies of three tonal disturbances is considered (with return to the initial values of the frequencies). Figures 13.7, 13.8 and 13.9 show the time responses of the residual force. Figures 13.10, 13.11 and 13.12 show the difference between the PSD in open-loop and in closed-loop as well as the estimated output sensitivity function. Figure 13.13 shows the evolution of the parameters of the FIR adaptive Youla–Kučera filter used in the direct adaptive regulation scheme. Figures 13.14 and 13.15 show the evolution of the estimated parameters of \(D_p\) (used to compute \(A_Q\)—the denominator of the IIR Youla–Kučera filter) and of the numerator \(B_Q\) of the IIR Youla–Kučera filter used in the interlaced adaptive regulation scheme. Figure 13.16 shows the evolution of the estimated frequencies of the three tonal disturbances used to compute the band-stop filters in the indirect adaptive regulation scheme.

For this particular experiment, the interlaced adaptive regulation scheme offers the best compromise between disturbance attenuation and maximum amplification. Nevertheless, a global evaluation requires comparing the experimental results in a number of situations, and this is done in the next section.

6 Experimental Results: Comparative Evaluation of Adaptive Regulation Schemes for Attenuation of Multiple Narrow-Band Disturbances

6.1 Introduction

Three schemes for adaptive attenuation of single and multiple sparsely located unknown and time-varying narrow-band disturbances have been presented in Chap. 12, Sect. 12.2.2 and in Sects. 13.3 and 13.4 of this chapter. They can be summarized as follows:

(1) Direct adaptive regulation using FIR Youla–Kučera parametrization

(2) Interlaced adaptive regulation using IIR Youla–Kučera parametrization

(3) Indirect adaptive regulation using band-stop filters

The objective is to comparatively evaluate these three approaches in a relevant experimental environment.

An international benchmark on adaptive regulation of sparsely distributed unknown and time-varying narrow-band disturbances was organized in 2012–2013. A summary of the results can be found in [26]. The various contributions can be found in [25, 27–32]. Approaches 1 and 3 were evaluated in this context. Approach 2, which postdates the publication of the benchmark results, has also been evaluated in the same context. Detailed results can be found in [33]. Approaches 1 and 3 provided some of the best results for the fulfilment of the benchmark specifications (see [26]). Therefore a comparison of the second approach with the first and third approaches is relevant for assessing its potential.

In what follows a comparison of the three approaches will be made in the context of the mentioned benchmark. The objective will be to assess their potential using some of the global indicators used in benchmark evaluation.

In Chap. 12, Sect. 12.3, some of the basic performance indicators have been presented. In the benchmark evaluation process, several protocols for testing the performance under various environmental conditions have been defined. Based on the results obtained for the various protocols, global performance indicators have been defined and they will be presented in the next section. This will later allow the real-time performance of the three approaches considered in Chap. 12 and in this chapter to be compared in a compact form. Further details can be found in [25, 28, 33].

The basic benchmark specifications are summarized in Table 13.3 for the three levels of difficulty (range of frequency variations: 50 to 95 Hz):

- Level 1: Rejection of a single time-varying sinusoidal disturbance.

- Level 2: Rejection of two time-varying sinusoidal disturbances.

- Level 3: Rejection of three time-varying sinusoidal disturbances.

6.2 Global Evaluation Criteria

Evaluation of the performance will be done for both simulation and real-time results. The simulation results provide information on the potential of the design methods under the assumption: design model \(=\) true plant model. The real-time results indicate, in addition, the robustness of the design with respect to plant model uncertainties and real noise.

Steady-State Performance (Tuning capabilities)

As mentioned earlier, these are the most important performances. Only if a good tuning for the attenuation of the disturbance can be achieved does it make sense to examine the transient performance of a given scheme. For the steady-state performance, which is evaluated only for the simple step change in frequencies, the variable k, with \(k=1,\ldots ,3\), indicates the level of the benchmark. In several criteria a mean of certain variables is considered. The number of distinct experiments, M, is used to compute the mean. This number depends upon the level of the benchmark (\(M=10\) if \(k=1\), \(M=6\) if \(k=2\), and \(M=4\) if \(k=3\)).

The performances can be evaluated with respect to the benchmark specifications. The benchmark specifications will be in the form: XXB, where XX will denote the evaluated variable and B will indicate the benchmark specification. \(\varDelta XX\) will represent the error with respect to the benchmark specification.

Global Attenuation—GA

The benchmark specification corresponds to \(GAB_k=30\) dB, for all the levels and frequencies, except for 90 and 95 Hz at \(k=1\), for which \(GAB_1\) is 28 and 24 dB respectively.

Error:

with \(i=1,\ldots ,M\).

Global Attenuation Criterion:

Disturbance Attenuation—DA

The benchmark specification corresponds to \(DAB=40\) dB, for all the levels and frequencies.

Error:

with \(i=1,\ldots ,M\) and \(j=1,\ldots ,j_{max}\), where \(j_{max}=k\).

Disturbance Attenuation Criterion

Maximum Amplification—MA

The benchmark specifications depend on the level, and are defined as

Error:

with \(i=1,\ldots ,M\).

Maximum Amplification Criterion

Global Criterion of Steady-State Performance for One Level

Benchmark Satisfaction Index for Steady-State Performance

The Benchmark Satisfaction Index is a performance index computed from the average criteria \(J_{\varDelta GA_{k}}\), \(J_{\varDelta DA_{k}}\) and \(J_{\varDelta MA_{k}}\). The Benchmark Satisfaction Index is \(100\,\%\) if these quantities are “0” (full satisfaction of the benchmark specifications), and it is \(0\,\%\) if the corresponding quantities are half of the specifications for GA and DA, or twice the specifications for MA. The corresponding reference error quantities are summarized below:

The computation formulas are

Then the Benchmark Satisfaction Index (BSI), is defined as

The results for \(BSI_k\) obtained both in simulation and real-time for each approach and all the levels are summarized in Tables 13.4 and 13.5 respectively and represented graphically in Fig. 13.17. The YK IIR scheme provides the best results in simulation for all the levels but the indirect approach provides very close results. In real time it is the YK IIR scheme which gives the best results for level 1 and the YK FIR which gives the best results for levels 2 and 3. Nevertheless, one has to mention that the results of the YK FIR scheme are highly dependent on the design of the central controller.
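The computation chain described above (errors, average criteria, indices, BSI) can be sketched as follows for one level. Several points are assumptions, since the corresponding formulas are not reproduced here: the errors are taken as one-sided (only a shortfall in attenuation or an excess of amplification counts), each index is taken as a linear interpolation between \(100\,\%\) (zero error) and \(0\,\%\) (reference error), and the BSI is taken as the unweighted mean of the three indices. The function names and the measurement values are hypothetical, and the value used for the MA specification is a placeholder.

```python
import numpy as np

def satisfaction_index(j_err, ref_err):
    """Linear index: 100 % when the mean error is 0, 0 % when it equals ref_err."""
    return 100.0 * (ref_err - j_err) / ref_err

def steady_state_bsi(ga, da, ma, gab, dab, mab, ref_ga, ref_da, ref_ma):
    """Return (J_GA, J_DA, J_MA, BSI) for one benchmark level; all inputs in dB."""
    ga, da, ma = (np.asarray(v, dtype=float) for v in (ga, da, ma))
    # One-sided errors, averaged over the M experiments (and the k tones for DA).
    j_ga = float(np.mean(np.maximum(gab - ga, 0.0)))
    j_da = float(np.mean(np.maximum(dab - da, 0.0)))
    j_ma = float(np.mean(np.maximum(ma - mab, 0.0)))
    indices = [satisfaction_index(j_ga, ref_ga),
               satisfaction_index(j_da, ref_da),
               satisfaction_index(j_ma, ref_ma)]
    return j_ga, j_da, j_ma, sum(indices) / 3.0

# Hypothetical data: M = 2 experiments with one tone each.
j_ga, j_da, j_ma, bsi = steady_state_bsi(
    ga=[30.0, 27.0],            # measured global attenuations
    da=[[41.0], [40.0]],        # measured disturbance attenuations (M x k)
    ma=[5.0, 8.0],              # measured maximum amplifications
    gab=30.0, dab=40.0,         # specifications quoted in the text
    mab=6.0,                    # placeholder MA specification (assumption)
    ref_ga=15.0, ref_da=20.0,   # half of the GA and DA specifications
    ref_ma=6.0)                 # assumption: error reached when MA is twice its specification
```

Passing the reference errors as arguments keeps the sketch independent of the level-dependent values, which should be taken from the benchmark definition.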

The results obtained in simulation allow the characterization of the performance of the proposed design under the assumption that design model = true plant model. Therefore, in terms of the capabilities of a design method to meet the benchmark specifications, the simulation results are fully relevant. It is also important to recall that Level 3 of the benchmark is the most important. The difference between the simulation results and the real-time results allows one to characterize the robustness in performance with respect to uncertainties on the plant and noise models used for design.

To assess the performance loss in passing from simulation to real-time results, the Normalized Performance Loss and its associated global index are used. For each level one defines the Normalized Performance Loss as

and the global NPL is given by

where \(N=3\).
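As an illustration, the sketch below computes a normalized loss per level and its mean over the \(N\) levels. The exact expression is an assumption: the loss is taken here as the difference between the simulation and real-time BSI, divided by the simulation BSI and expressed in percent. The function name and the BSI values are hypothetical and are not taken from Tables 13.4 and 13.5.

```python
def normalized_performance_loss(bsi_sim, bsi_rt):
    """Return (per-level losses in %, global loss in %) from per-level BSI values."""
    npl_k = [100.0 * (sim - rt) / sim for sim, rt in zip(bsi_sim, bsi_rt)]
    return npl_k, sum(npl_k) / len(npl_k)

# Made-up BSI values (in %) for levels 1 to 3.
npl_k, npl = normalized_performance_loss(bsi_sim=[100.0, 80.0, 50.0],
                                         bsi_rt=[90.0, 80.0, 40.0])
```

A value of 0 for a level means that the real-time experiment reproduces the simulated satisfaction index for that level.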

Table 13.6 gives the normalized performance loss for the three schemes. Figure 13.18 summarizes these results in a bar graph. The YK IIR scheme assures a minimum loss for level 1, while the YK FIR scheme assures the minimum loss for levels 2 and 3.

Global Evaluation of Transient Performance

For evaluation of the transient performance an indicator has been defined by Eq. 12.46. From this indicator, a global criterion can be defined as follows

where \(M=10\) if \(k=1\), \(M=6\) if \(k=2\), and \(M=4\) if \(k=3\).

Transient performance is summarized in Table 13.7. All the schemes achieve, in most cases, \(100\,\%\) of the satisfaction index for transient performance, which means that the adaptation transient duration is less than or equal to 2 s in most cases (except the indirect scheme for level 2 in simulation).

Evaluation of the Complexity

For complexity evaluation, the measure of the Task Execution Time (TET) in the xPC Target environment will be used. This is the time required to perform all the calculations on the host target PC for each method. This process has to be done at each sampling time. The more complex the approach, the larger the TET. One can argue that the TET depends also on the programming of the algorithm. Nevertheless, this may change the TET by a factor of 2 to 4 but not by an order of magnitude. The xPC Target MATLAB environment delivers an average of the TET (ATET). It is however interesting to assess the TET specifically associated with the controller by subtracting the average TET in open-loop operation from the TET measured in closed-loop operation.

The following criteria are defined to compare the complexity of the approaches.

where \(k=1,\ldots ,3\). The symbols Simple, Step and Chirp are associated respectively with the Simple Step Test (application of the disturbance), Step Changes in Frequency and Chirp Changes in Frequency. The global \(\varDelta TET_{k}\) for one level is defined as the average of the above computed quantities:

where \(k=1,\ldots ,3\). Table 13.8 and Fig. 13.19 summarize the results obtained for the three schemes. All the values are in microseconds. Higher values indicate higher complexity. The lowest values (lower complexity) are highlighted.
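The complexity measure for one level can be sketched as follows, under the reading given in the text: for each of the three tests the open-loop average TET is subtracted from the closed-loop average TET, and the global value is the mean of the three differences. The function name, the dictionary layout and the timing values are hypothetical.

```python
def delta_tet(atet_closed_loop, atet_open_loop):
    """Return (per-test controller TET, global TET) in microseconds for one level."""
    tests = ("Simple", "Step", "Chirp")
    # Controller-only execution time: closed-loop minus open-loop average TET.
    per_test = {name: atet_closed_loop[name] - atet_open_loop[name] for name in tests}
    return per_test, sum(per_test.values()) / len(tests)

# Made-up average TET values in microseconds.
closed = {"Simple": 12.0, "Step": 13.0, "Chirp": 14.0}
opened = {"Simple": 10.0, "Step": 10.0, "Chirp": 10.0}
per_test, global_tet = delta_tet(closed, opened)
```

Subtracting the open-loop value removes the overhead of the real-time environment that is common to all schemes, so that only the cost of the controller itself is compared.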

As expected, the YK FIR algorithm has the smallest complexity. The YK IIR scheme has a higher complexity than the YK FIR scheme (this is due to the incorporation of the estimation of \(A_Q(z^{-1})\)) but is still significantly less complex than the indirect approach using BSF.

Tests with a different experimental protocol have also been done. The results obtained are consistent with the tests presented above. Details can be found in [10, 34].

7 Concluding Remarks

It is difficult to decide what is the best scheme for adaptive attenuation of multiple narrow-band disturbances. There are several criteria to be taken into account:

- If an individual attenuation level should be fixed for each spike, the indirect adaptive scheme using BSF is the most appropriate, since it allows a specific attenuation to be achieved for each spike.

- If the objective is to have a very simple design of the central controller, the YK IIR scheme and the indirect adaptive scheme have to be considered.

- If the objective is to have the simplest scheme requiring the minimum computation time, clearly the YK FIR scheme has to be chosen.

- If the objective is to make a compromise between the various requirements mentioned above, it is the YK IIR adaptive scheme which has to be chosen.

Notes

1. The complex variable \(z^{-1}\) is used to characterize the system’s behaviour in the frequency domain and the delay operator \(q^{-1}\) will be used for the time domain analysis.

2. As indicated earlier, it is assumed that a reliable model identification is achieved and therefore the estimated model is assumed to be equal to the true model.

3. For frequencies below \(0.17\,{f_S}\) (\({f_S}\) is the sampling frequency) the design can be done with a very good precision directly in discrete time [5].

4. Its structure in a mirror symmetric form guarantees that the roots are always on the unit circle.

5. The argument \((q^{-1})\) will be dropped in some of the following equations.

6. For the development of the equation for the adaptation error one assumes that the estimated parameters have constant values, which allows the commutativity property of the various operators to be used.

7. The details of the developments leading to this equation are given in Appendix C.

8. Neglecting the non-commutativity of the time-varying operators.

9. The disturbance passes through a so-called “primary path” which is not represented in Fig. 13.5.

- 10.

References

1. Landau ID, Alma M, Constantinescu A, Martinez JJ, Noé M (2011) Adaptive regulation - rejection of unknown multiple narrow band disturbances (a review on algorithms and applications). Control Eng Pract 19(10):1168–1181. doi:10.1016/j.conengprac.2011.06.005

2. Landau I, Constantinescu A, Rey D (2005) Adaptive narrow band disturbance rejection applied to an active suspension - an internal model principle approach. Automatica 41(4):563–574

3. Landau I, Alma M, Martinez J, Buche G (2011) Adaptive suppression of multiple time-varying unknown vibrations using an inertial actuator. IEEE Trans Control Syst Technol 19(6):1327–1338. doi:10.1109/TCST.2010.2091641

4. Procházka H, Landau ID (2003) Pole placement with sensitivity function shaping using 2nd order digital notch filters. Automatica 39(6):1103–1107. doi:10.1016/S0005-1098(03)00067-0

5. Landau I, Zito G (2005) Digital control systems - design, identification and implementation. Springer, London

6. Nehorai A (1985) A minimal parameter adaptive notch filter with constrained poles and zeros. IEEE Trans Acoust Speech Signal Process ASSP-33:983–996

7. Astrom KJ, Wittenmark B (1984) Computer controlled systems. Theory and design. Prentice-Hall, Englewood Cliffs

8. Chen X, Tomizuka M (2012) A minimum parameter adaptive approach for rejecting multiple narrow-band disturbances with application to hard disk drives. IEEE Trans Control Syst Technol 20(2):408–415. doi:10.1109/TCST.2011.2178025

9. Landau ID, Lozano R, M’Saad M, Karimi A (2011) Adaptive control, 2nd edn. Springer, London

10. Castellanos-Silva A, Landau ID, Dugard L, Chen X (2016) Modified direct adaptive regulation scheme applied to a benchmark problem. Eur J Control 28:69–78. doi:10.1016/j.ejcon.2015.12.006

11. Tsypkin Y (1997) Stochastic discrete systems with internal models. J Autom Inf Sci 29(4&5):156–161

12. de Callafon RA, Kinney CE (2010) Robust estimation and adaptive controller tuning for variance minimization in servo systems. J Adv Mech Des Syst Manuf 4(1):130–142

13. Tay TT, Mareels IMY, Moore JB (1997) High performance control. Birkhauser, Boston

14. Airimitoaie TB, Landau I, Dugard L, Popescu D (2011) Identification of mechanical structures in the presence of narrow band disturbances - application to an active suspension. In: 2011 19th mediterranean conference on control automation (MED), pp 904–909. doi:10.1109/MED.2011.5983076

15. Tichavský P, Nehorai A (1997) Comparative study of four adaptive frequency trackers. IEEE Trans Signal Process 45(6):1473–1484

16. Rao D, Kung SY (1984) Adaptive notch filtering for the retrieval of sinusoids in noise. IEEE Trans Acoust Speech Signal Process 32(4):791–802. doi:10.1109/TASSP.1984.1164398

17. Regalia PA (1991) An improved lattice-based adaptive IIR notch filter. IEEE Trans Signal Process 39(9):2124–2128. doi:10.1109/78.134453

18. Chen BS, Yang TY, Lin BH (1992) Adaptive notch filter by direct frequency estimation. Signal Process 27(2):161–176. doi:10.1016/0165-1684(92)90005-H

19. Li G (1997) A stable and efficient adaptive notch filter for direct frequency estimation. IEEE Trans Signal Process 45(8):2001–2009. doi:10.1109/78.611196

20. Hsu L, Ortega R, Damm G (1999) A globally convergent frequency estimator. IEEE Trans Autom Control 44(4):698–713

21. Obregon-Pulido G, Castillo-Toledo B, Loukianov A (2002) A globally convergent estimator for n-frequencies. IEEE Trans Autom Control 47(5):857–863

22. Stoica P, Nehorai A (1988) Performance analysis of an adaptive notch filter with constrained poles and zeros. IEEE Trans Acoust Speech Signal Process 36(6):911–919

23. M’Sirdi N, Tjokronegoro H, Landau I (1988) An RML algorithm for retrieval of sinusoids with cascaded notch filters. In: 1988 International conference on acoustics, speech, and signal processing, 1988. ICASSP-88, vol 4, pp 2484–2487. doi:10.1109/ICASSP.1988.197147

24. Airimitoaie TB, Landau ID (2014) Indirect adaptive attenuation of multiple narrow-band disturbances applied to active vibration control. IEEE Trans Control Syst Technol 22(2):761–769. doi:10.1109/TCST.2013.2257782

25. Castellanos-Silva A, Landau ID, Airimitoaie TB (2013) Direct adaptive rejection of unknown time-varying narrow band disturbances applied to a benchmark problem. Eur J Control 19(4):326–336. doi:10.1016/j.ejcon.2013.05.012 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

26. Landau ID, Silva AC, Airimitoaie TB, Buche G, Noé M (2013) An active vibration control system as a benchmark on adaptive regulation. In: Control conference (ECC), 2013 European, pp 2873–2878

27. Aranovskiy S, Freidovich LB (2013) Adaptive compensation of disturbances formed as sums of sinusoidal signals with application to an active vibration control benchmark. Eur J Control 19(4):253–265. doi:10.1016/j.ejcon.2013.05.008 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

28. Airimitoaie TB, Silva AC, Landau ID (2013) Indirect adaptive regulation strategy for the attenuation of time varying narrow-band disturbances applied to a benchmark problem. Eur J Control 19(4):313–325. doi:10.1016/j.ejcon.2013.05.011 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

29. de Callafon RA, Fang H (2013) Adaptive regulation via weighted robust estimation and automatic controller tuning. Eur J Control 19(4):266–278. doi:10.1016/j.ejcon.2013.05.009 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

30. Karimi A, Emedi Z (2013) H\(_\infty \) gain-scheduled controller design for rejection of time-varying narrow-band disturbances applied to a benchmark problem. Eur J Control 19(4):279–288. doi:10.1016/j.ejcon.2013.05.010 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

31. Chen X, Tomizuka M (2013) Selective model inversion and adaptive disturbance observer for time-varying vibration rejection on an active-suspension benchmark. Eur J Control 19(4):300–312 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

32. Wu Z, Amara FB (2013) Youla parameterized adaptive regulation against sinusoidal exogenous inputs applied to a benchmark problem. Eur J Control 19(4):289–299 (Benchmark on Adaptive Regulation: Rejection of unknown/time-varying multiple narrow band disturbances)

33. Castellanos-Silva A (2014) Compensation adaptative par feedback pour le contrôle actif de vibrations en présence d’incertitudes sur les paramètres du procédé. Ph.D. thesis, Université de Grenoble

34. Landau ID, Silva AC, Airimitoaie TB, Buche G, Noé M (2013) Benchmark on adaptive regulation - rejection of unknown/time-varying multiple narrow band disturbances. Eur J Control 19(4):237–252. doi:10.1016/j.ejcon.2013.05.007

Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Landau, I.D., Airimitoaie, TB., Castellanos-Silva, A., Constantinescu, A. (2017). Adaptive Attenuation of Multiple Sparse Unknown and Time-Varying Narrow-Band Disturbances. In: Adaptive and Robust Active Vibration Control. Advances in Industrial Control. Springer, Cham. https://doi.org/10.1007/978-3-319-41450-8_13

DOI: https://doi.org/10.1007/978-3-319-41450-8_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41449-2

Online ISBN: 978-3-319-41450-8

eBook Packages: Engineering, Engineering (R0)