Abstract

In the words of Wehner (J Comp Physiol A 161:511–531, 1987) who first coined the term “matched filter” in the context of sensory systems, matched filters “severely limit the amount of information the brain can pick up from the outside world, but they free the brain from the need to perform more intricate computations to extract the information finally needed for fulfilling a particular task”. In other words, by matching the properties of neurons, circuits and sensory structures to the characteristics of the most crucial sensory stimuli that need to be detected, these stimuli can be rapidly and reliably extracted for further processing, thus drastically improving the efficiency of sensing. And by “severely limiting information picked up by the brain”, the energetic costs that would have been associated with coding superfluous information are effectively eliminated. Thus, “freeing the brain” not only frees it from the need to perform intricate computations, it also frees it from significant (and unnecessary) energetic costs. Not surprisingly, with their small eyes and brains and severely limited energy budgets, visual matched filtering is particularly well developed in small animals like insects. It is most obvious at the visual periphery, in the morphology and physiology of the compound eyes, but remarkable matched filters also occur at higher levels of visual processing. Using a number of case studies, I will show how visual matched filters have evolved for all aspects of insect life, including the detection and pursuit of mates and prey and for locomotion and navigation in the natural habitat.

1 Energy, Performance and Matched Filtering

The arthropods, arguably one of the most successful groups of animals on our planet, owe much of their success to the seemingly endless range of adaptations permitted by their hard cuticular exoskeletons. Due to its variable thickness and hardness, from heavy and armour-like to thin and rubber-like, and due to its variable opaqueness, where even transparent windows and lenses are possible, the arthropod cuticle has evolved far beyond being a simple skeletal element. All manner of cuticular appendages have evolved for locomotion, prey capture, defence and courtship, and the cuticle has been fashioned over millions of years into exquisitely sensitive sensory organs for vision, hearing, olfaction and mechanoreception. But despite its obvious evolutionary success, the arthropod exoskeleton is also a constraint – due partly to the finite mechanical strength of cuticular structures, arthropods tend to be limited in size, with the force of gravity in terrestrial environments favouring internal skeletons in larger animals such as vertebrates (although the buoyancy provided by water has in part allowed marine arthropods to grow larger than their terrestrial relatives). However, this typically small body size has by no means constrained the sophistication of arthropods (Eberhard 2007) – some species of bees and wasps are less than half a millimetre in length and yet still retain most of the locomotive and sensory capacities of their much larger relatives, a testament to the remarkable performance of their miniaturised musculature and nervous systems (Niven and Farris 2012).

To provide this sophistication in such a diminutive package (Chittka and Niven 2009), the comparatively small brains and nervous systems of arthropods have been honed by the forces of natural selection to provide neural circuits with functional repertoires that closely match a limited range of ecologically relevant behavioural tasks. Which circuits evolve – and which behavioural tasks take precedence – is determined not only by external ecological factors, such as the physical nature of the habitat or the presence of predators or prey, but also by various internal factors. One of the most important of these is undoubtedly the animal’s finite metabolic energy budget (Niven and Laughlin 2008), the size of which tends to decrease as animals become smaller. As with all finite budgets, resources need to be allocated wisely – overinvestment in one area may be detrimental to others and thus negative for the system as a whole (and in the case of an animal, possibly fatally so). Thus, in response to the external forces of natural selection, an animal’s finite energy budget – determined largely by the availability of food and the ease with which it is attained – is carefully allocated for the development and maintenance of the animal’s various organ systems. How much energy is allocated to a given organ system reflects the importance of that system for the animal’s chances of survival and reproduction, with the benefits of the system weighed against its energetic cost. The ultimate currency of this evolutionary cost-benefit analysis is defined in terms of the number of ATP molecules that is required to perform specific tasks (Laughlin et al. 1998; Niven et al. 2007) and involves an evolutionary process whereby the benefit of an improved performance is weighed against the energetic cost of achieving it (Niven and Laughlin 2008). It turns out that this process invariably involves a law of diminishing returns – each unit increment in performance tends to cost disproportionately more than the previous increment (i.e. performance is not a linear function of cost). This means that the evolution of a high performance organ comes only at a significant energetic cost, a cost that is likely to be a substantial fraction of the animal’s total energy budget (Niven et al. 2007; Niven and Laughlin 2008).

Nowhere is this truer than in the evolution of nervous systems, whose building blocks – neurons – are among the most energetically expensive cells in an animal’s body. The main reason for this expense is the cost of maintaining the neuron’s resting potential in readiness for electrical signalling. The resting potential, which is usually many tens of millivolts negative relative to the external cellular medium, is maintained (and restored following signalling) by active ion pumps that use energy from ATP molecules to pump sodium and potassium ions across the neuronal membrane against their passive electrical and concentration gradients. This energetic cost is substantial and is incurred even in the absence of signalling. The extra cost of signalling is simply added to this (Niven et al. 2007).

Due to their typical possession of a dense matrix of receptor neurons, sensory organs tend to be particularly expensive, but as with any organ, their cost can be weighed against the performance benefits they provide. One measure of this performance can be defined by the amount of information (in bits) gained by executing a particular sensory task, such as the transduction of photons of light by a photoreceptor, or of odour molecules by an olfactory receptor. This performance can be measured against its energetic cost, that is, the number of ATP molecules that are consumed to generate one bit of sensory information (Laughlin et al. 1998). For a light-adapted photoreceptor in a fly, this energetic cost is significant – depending on the species, between one million and ten million ATP molecules are consumed to generate a single bit of information, and a large fraction of this cost (around 20 %) is simply used to maintain the resting potential in the absence of signalling, that is, to maintain it in darkness (Laughlin et al. 1998; Niven et al. 2007). In fact, the “dark cost” of the entire retina is about 2 % of the fly’s total resting metabolic rate! A significant dark cost has likewise been estimated for the vertebrate retina (Okawa et al. 2008). The photoreceptors of flies also demonstrate the law of diminishing returns mentioned above – even though the larger photoreceptors of larger fly species provide their owners with a greater maximum number of bits of information per second, they do so only at a disproportionately high cost (Niven et al. 2007). In fact, across the flies, the total energy cost (in ATP molecules consumed per photoreceptor per second) rises almost with the square of the maximum information rate (in bits per second) coded by the photoreceptor (the actual exponent is 1.7).
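
The diminishing returns described above can be made concrete with a little arithmetic. The following is a minimal sketch that assumes a pure power law, cost ∝ (information rate)^1.7, using the exponent quoted in the text. The function names are illustrative, and the prefactor of the relationship is deliberately left out, so only ratios between two photoreceptors are meaningful.

```python
# Exponent quoted in the text for fly photoreceptors: cost ~ rate ** 1.7.
COST_EXPONENT = 1.7


def relative_cost(rate_ratio: float, exponent: float = COST_EXPONENT) -> float:
    """Factor by which total energy cost (ATP per photoreceptor per second)
    grows when the maximum information rate grows by `rate_ratio`.

    Assumes a pure power law with no offset (an idealisation)."""
    if rate_ratio <= 0:
        raise ValueError("rate_ratio must be positive")
    return rate_ratio ** exponent


def relative_cost_per_bit(rate_ratio: float, exponent: float = COST_EXPONENT) -> float:
    """Factor by which the cost of each bit grows: cost / rate ~ rate ** (exponent - 1)."""
    if rate_ratio <= 0:
        raise ValueError("rate_ratio must be positive")
    return rate_ratio ** (exponent - 1.0)


if __name__ == "__main__":
    for ratio in (1.0, 2.0, 5.0):
        print(
            f"info rate x{ratio:.0f}: "
            f"total cost x{relative_cost(ratio):.2f}, "
            f"cost per bit x{relative_cost_per_bit(ratio):.2f}"
        )
```

Under this assumption, doubling the maximum information rate raises the total cost by a factor of a little more than three, so each bit becomes more expensive as the photoreceptor becomes more capable.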

The conclusion from the energetic arguments presented above is that larger eyes, with a greater number of larger photoreceptors, are likely to cost a disproportionate fraction of an animal’s energy budget compared to smaller eyes with fewer and/or smaller photoreceptors. For this reason alone, larger eyes – especially those larger than expected from the size of the animal – would normally be selected against during evolution. When they do appear in the animal kingdom, they are invariably critically important for their owner’s chances of survival, with the sizable energy commitment required being crucially necessary (as in the disproportionately large eyes of the giant deep-sea squid: Nilsson et al. 2012).

Not surprisingly, a number of strategies have evolved to make vision more efficient (Niven and Laughlin 2008), many of which are most obvious in small animals like arthropods with their small and limited energy budgets. Of these, matched sensory filtering is one of the most effective. By matching the properties of neurons, circuits and sensory structures to the characteristics of the most crucial visual stimuli that need to be detected, these stimuli can be directly and reliably extracted for further processing. All other visual stimuli – having little consequence for the animal’s chances of reproduction and survival – are simply suppressed or filtered out altogether. To see “the world through such a matched filter”, to quote Rüdiger Wehner, who first coined the term in 1987, “severely limits the amount of information the brain can pick up from the outside world, but it frees the brain from the need to perform more intricate computations to extract the information finally needed for fulfilling a particular task” (Wehner 1987). By “severely limiting information picked up by the brain”, the energetic costs that would have been associated with coding superfluous information are effectively eliminated. And “freeing the brain” not only frees it from the need to perform intricate computations, it also frees it from the significant energetic costs that would have arisen by possessing the neural circuits necessary to make these computations. Simply put, matched filtering saves energy by stripping away unnecessary energetic investments and efficiently redirecting the remaining energy to where it is needed most. With their miniature brains and nervous systems orchestrating complex behaviours on a small and limited energy budget, it should come as no surprise that the arthropods are richly endowed with visual matched filters.

The best understood matched filters are undoubtedly those found among the insects, and these have evolved in response to almost every aspect of their ecology, from locomotion and navigation to predator avoidance, food acquisition and courtship. These matched filters provide some of the most beautiful and remarkable products of natural selection that can be found in the natural world. Indeed, a few seem so “ingenious” to human observers that engineers have directly used them to create “smart solutions” for some of our latest electronic devices. In the pages that follow, I will showcase a selection of visual matched filters in insects, described within the ecological contexts in which they evolved. But before I do, it is first necessary to briefly describe the principal eye type possessed by insects – compound eyes.

2 Compound Eyes

Compound eyes are found in insects, crustaceans and some chelicerates (e.g. the horseshoe crab Limulus) and are composed of identical units called “ommatidia” (Fig. 6.1a). Each ommatidium consists of a lens element – the “corneal lens” and “crystalline cone” – that focuses light incident from a narrow region of space onto the “rhabdom”, a photoreceptive structure built from the contributions of at least eight photoreceptor (retinula) cells, each of which apportions a region of membranous microvilli that house the rhodopsin molecules. A compound eye may contain as many as 30,000 ommatidia, as in large dragonflies, or as few as 6, as in some ants. Each ommatidium is responsible for reading the average intensity, colour and (in some cases) plane of polarisation within the small region of space that it views. Two neighbouring ommatidia view two neighbouring regions of space. Thus, each ommatidium supplies a “pixel” of information to a larger image of pixels that the entire compound eye constructs. Larger compound eyes with more ommatidia thus have the potential for greater spatial resolution.

Arthropod eye designs. (a) A schematic longitudinal section (and an inset of a transverse section) through a generalised Hymenopteran ommatidium, showing the corneal lens (c), the crystalline cone (cc), the primary pigment cells (pc), the secondary pigment cells (sc), the rhabdom (rh), the retinular cells (rc), the basal pigment cells (bp) and the basement membrane (bm). The left half of the ommatidium shows screening pigment granules in the dark-adapted state, while the right half shows them in the light-adapted state (Redrawn from Stavenga and Kuiper (1977)). (b) Apposition compound eye (in this case a focal apposition eye). (c) Superposition compound eye (in this case a refracting superposition eye). cz clear zone. (d) The definition of the interommatidial angle Δϕ. D distance between the centres of two adjacent corneal facet lenses (equal to the facet diameter) and R local radius of the eye surface (From Cronin et al. 2014. Images in B-D courtesy of Dan-Eric Nilsson)

Compound eyes come in two main forms: “apposition eyes” and “superposition eyes”. Each of these forms comes in various subforms, but these I will avoid describing here for the sake of brevity (for further details see Land 1981; Nilsson 1989; Land and Nilsson 2012).

Apposition eyes (Fig. 6.1b) are typical of (but not restricted to) animals living in bright habitats. Insects with apposition eyes include day-active butterflies, flies, bees, wasps, ants, dragonflies and grasshoppers. Many shrimps and shallow-living and terrestrial crabs also have apposition eyes. Each ommatidium in an apposition eye is isolated from its neighbours by a sleeve of light-absorbing screening pigment, thus preventing light reaching the photoreceptors from all but its own small corneal lens. This tiny lens – typically a few tens of microns across – represents the pupil of the apposition eye. Such a tiny pupil allows very little light to be captured.

Superposition eyes (Fig. 6.1c) are typical for (but not restricted to) animals living in dimmer habitats. These include nocturnal moths and beetles and deeper living marine crustaceans, such as lobsters and krill. In superposition eyes, the pigment sleeve is withdrawn, and a wide optically transparent area, the clear zone, is interposed between the lenses and the retina. This clear zone (cz in Fig. 6.1c) – and specially modified crystalline cones – allows light from a narrow region of space to be collected by a large number of ommatidia (comprising the superposition aperture) and focussed onto a single photoreceptor. Unlike the crystalline cones of apposition eyes, those of superposition eyes have evolved refractive index gradients or reflecting surfaces, which allow as many as around 2,000 lenses to collect the light for a single photoreceptor (as in some large nocturnal moths). This represents a massive improvement in sensitivity while still producing a reasonably sharp image.

As mentioned above, the spatial resolution of a compound eye is (in part) set by the density of its ommatidia. This is nicely illustrated by considering two extreme examples: large aeshnid dragonflies that may possess as many as 30,000 ommatidia in each of their apposition eyes and some groups of primitive ants that may possess fewer than ten. If the eyes of both insects view the same solid angular region of visual space, then the dragonfly will sample that region with vastly greater spatial resolution, simply because of its much higher density of sampling stations (i.e. ommatidia). This density is directly related to the local “interommatidial angle” Δϕ, the angular separation of two neighbouring ommatidia (Fig. 6.1d): smaller values of Δϕ indicate a greater sampling density and a higher spatial resolution. The interommatidial angle depends primarily on two anatomical parameters, the facet diameter D and the eye radius R:

Δϕ = D/R (with Δϕ in radians)

A larger local eye radius (i.e. a flatter eye surface), or a smaller facet, produces a smaller interommatidial angle. However, there is a limit to how much Δϕ can be narrowed by decreasing the size of the facet – smaller facets sacrifice sensitivity to light (and degrade image quality due to diffraction). Nevertheless, it is possible to have a region of the eye that has such a large radius of curvature that an extremely small Δϕ is still possible without having to sacrifice facet size. In fact, in many apposition eyes, the facets in these regions can actually be much larger than in other regions of the eye having double the Δϕ (which is better for sensitivity)! Thus, particularly in apposition eyes, facet diameter and eye radius can both vary dramatically within a single eye, which means that the local interommatidial angle can also vary considerably – some directions of visual space can thereby be sampled much more densely than others. Such high-resolution “acute zones” (Horridge 1978) are common among insects and crustaceans and their size, shape and placement in an eye tend to reflect the habitat and ecological needs of the eye’s owner. As we will see below, these acute zones are the basis for many different types of visual matched filters.
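
The dependence of Δϕ on facet diameter and eye radius is usually written as the small-angle relation Δϕ ≈ D/R, with Δϕ in radians. The sketch below evaluates it for a few facet and radius combinations. The numerical values are hypothetical, chosen only to illustrate the trend, and are not measurements from any species discussed here.

```python
import math


def interommatidial_angle_deg(facet_diameter_um: float, eye_radius_um: float) -> float:
    """Local interommatidial angle in degrees from the small-angle
    relation delta_phi = D / R (radians). D and R must share the same unit."""
    if facet_diameter_um <= 0 or eye_radius_um <= 0:
        raise ValueError("facet diameter and eye radius must be positive")
    return math.degrees(facet_diameter_um / eye_radius_um)


if __name__ == "__main__":
    # Hypothetical (D, R) pairs in micrometres, for illustration only.
    examples = [
        ("curved region, 25 um facets", 25.0, 1000.0),
        ("flatter region, 25 um facets", 25.0, 2000.0),
        ("flat acute zone, 40 um facets", 40.0, 4000.0),
    ]
    for label, d_um, r_um in examples:
        angle = interommatidial_angle_deg(d_um, r_um)
        print(f"{label}: delta_phi = {angle:.2f} deg")
```

In the last of these illustrative cases the facet is larger than in the first, yet the interommatidial angle is smaller, because the local radius has increased by a greater factor. This is the geometric basis of an acute zone that does not sacrifice sensitivity.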

In the absence of all other effects (such as the quality of the optical image focused on the retina), Δϕ would set the finest spatial detail that could be seen. In reality, however, the finest spatial detail is determined by the size of the photoreceptor’s “receptive field”, that is, the size of the region of visual space from which the photoreceptor is capable of receiving photons. The diameter of this roughly circular receptive field is sometimes called the photoreceptor’s “acceptance angle” Δρ, and smaller values indicate sharper spatial resolution. In most compound eyes, Δρ is typically larger than Δϕ since eyes often possess one or more optical limitations (e.g. aberrations) that blur the image formed in the retina. This blurring broadens Δρ and this in turn coarsens spatial resolution to a value below that predicted by the photoreceptor matrix.

3 Visual Matched Filtering in Insects

Variation in the size, location and organisation of acute zones provides one of the most important routes via which visual matched filters have been created in insect compound eyes. There are acute zones matched to the physical features of the terrain, to the locations and visual characteristics of mates and prey and to the way the visual world appears during locomotion. However, they are by no means the only types of matched filters. Some matched filters can also be found at the level of single cells in the sensory periphery (particularly for the pursuit of mates and prey), while others are manifested in the properties of entire circuits of cells in central areas of visual processing (some of which have important roles in flight control and navigation). However, no matter what their use or origin, all these matched filters fulfil their primary evolutionary role, as so elegantly shown by Rüdiger Wehner more than a quarter of a century ago – to free the brain from the need (and substantial energy cost) of performing intricate computations to extract the information needed for fulfilling a particular task.

We will begin our discussion of matched filters by considering those that are most obvious at the visual periphery, namely, those manifested in the optical design and physiological properties of the compound eye itself. Back in 1987, when Wehner first coined the term “matched filter”, these were by and large the only types of visual matched filters known. But since then we have come to realise that matched filtering also occurs at more central levels of visual processing, notably by visual interneurons in the lobula of the optic lobe and in the central brain. By having very large visual fields receiving inputs from enormous numbers of ommatidia, and by spatially integrating signals generated locally from each, higher-order visual cells can function as highly efficient matched filters for specific features of the visual world (Krapp 2014). It turns out, as we will see below, that these matched filters are of crucial importance for locomotion and navigation.

3.1 Peripheral Visual Matched Filtering in Insects

Peripheral visual matched filtering has manifested itself in three very important aspects of insect ecology: in the detection, attraction and pursuit of mates, in the detection and pursuit of prey and in the insect’s relationship to its physical environment. Of these, possibly the most spectacular matched filters are those concerned with sex.

3.1.1 Matched Filters for Sex

The urgency to reproduce has led to some of the most extraordinary visual adaptations found in nature, particularly among insects, where males can sometimes possess entirely separate eyes exclusively devoted to sex. In march flies and mayflies, for instance, the male eye has become bi-lobed, with the upper lobe heavily flattened to drastically increase the retinal sampling density within a narrow upward field of view (Zeil 1983a), within which, silhouetted against the brighter sky, females and rivals will appear as small dark moving spots (Zeil 1983b). The optical structure of their eyes and the physiology of the underlying neural circuitry that processes the visual image of a passing female together form an impressive matched filter for detecting, pursuing and intercepting mates.

Sexual dimorphism in eye design need not necessarily result in the evolution of entirely separate eyes in males. In many species of brachyceran flies, for instance, the males instead have extended areas of compound eye that are missing in females. Whereas in females, the eyes remain widely separated, in males the eyes nearly (or completely) touch along the midline of the head (Fig. 6.2a, b). This extra piece of eye – the so-called love spot – is used by males exclusively for the detection and high-speed pursuit of females (Land and Collett 1974; Land and Eckert 1985). Love spot ommatidia are distinguished by their extra large facet lenses, and in the blowfly Calliphora erythrocephala (Fig. 6.2a, b) and the hoverfly Volucella pellucens (Fig. 6.2c), these collectively constitute an acute zone. This acute zone is clearly seen in male Volucella – each eye has a large love spot located frontally, 20° above the equator, within which the interommatidial angle Δϕ falls to just 0.7° (Fig. 6.2c). The size of the acute zone (the eye region where, say, Δϕ < 1.1°) occupies 2,230 deg² of the visual field (shaded area in Fig. 6.2c). In females, there is also an acute zone, but instead directed frontally (Fig. 6.2d). Δϕ only falls to 0.9°, and the area of the acute zone (Δϕ < 1.1°) is a mere 23 % as large as that of males (510 deg²: shaded area in Fig. 6.2d).

Optical sexual dimorphism in the apposition eyes of flies. (a, b) Male (a) and female (b) blowfly heads (Calliphora erythrocephala). Note how the eyes of males almost touch, whereas those of females are quite separated. The extra eye surface – or “love spot” – of males (dotted white line) provides the input to a sophisticated neural pathway for detecting and chasing females (Images from Strausfeld 1991, with kind permission from Springer Science + Business Media). (c, d) In the hoverfly Volucella pellucens the male love spot is a large dorso-frontal acute zone, where interommatidial angles are small (c) and facet diameters are large. The visual fields of the left eyes of the two sexes, and interommatidial angles shown by isolines, are projected onto spheres. The females have a much smaller frontal acute zone (compare the shaded regions, where Δϕ <1.1°). D dorsal, V ventral, A anterior, L lateral (From Warrant 2001. Entire figure from Cronin et al. 2014)

Interestingly, the male’s matched filter is not restricted to the optics of the compound eyes. The photoreceptors of love spot ommatidia in the male housefly Musca domestica are 60 % faster than those of females and, due to the presence of the acute zone, spatially almost twice as acute (with acceptance angles Δρ around half those found in females: Hornstein et al. 2000). These properties make love spot photoreceptors especially well suited for detecting and pursuing small high-speed targets like females. The improved response speed is achieved by a faster transduction mechanism and a tuned voltage-activated conductance that enhances the membrane’s frequency response – in the blowfly Calliphora vicina, this translates into an information rate (in bits/s) in male photoreceptors that is up to 40 % higher than that in females (Burton et al. 2001). Not surprisingly, all these improvements come at a cost – the extra tuned conductance (which involves the passage of ions through dedicated channels in the photoreceptor membrane) is energetically expensive.

The visual matched filtering seen in the male’s eye is preserved in the visual processing circuits of the optic lobe, particularly in the lobula. Here, large male-specific visual cells – which are entirely lacking or highly modified in females – respond maximally to small dark objects moving across the frontal-dorsal visual field corresponding to the love spots (Strausfeld 1991; Gilbert and Strausfeld 1991; Gronenberg and Strausfeld 1991). When stimulated with larger objects, the responses of these cells rapidly decline, a clear demonstration of matched filtering for small targets (Nordström and O’Carroll 2009).

3.1.2 Matched Filters for Prey Detection and Pursuit

In insects, the known visual matched filters for detecting and pursuing prey turn out to be very similar to those for detecting and pursuing mates. The reason for this is quite straightforward – the visual stimulus is nearly identical. Like the typical mates of flies, the prey items of many insects turn out to be small dark silhouettes moving rapidly across the bright background of the sky.

Dragonflies, for instance, have a highly developed dorsal acute zone, with huge facets and narrow interommatidial angles (Fig. 6.3a, b) – in the dragonfly Anax junius, Δϕ falls to a phenomenally low 0.24° (Sherk 1978)! This acute zone scans the sky above and in front of the dragonfly, on the lookout for flying insect prey. And just as in the love spot photoreceptors of flies, the signals generated in the acute zone photoreceptors of dragonflies eventually feed into specialised neurons at higher levels of visual processing which collectively create a visual matched filter for detecting prey (Olberg 1981, 1986). One such neuron is the CSTMD4 cell, a large-field small-target-detecting cell from the lobula of the large Australian dragonfly Hemicordulia tau (O’Carroll 1993). This neuron has a response that is tuned to very small moving targets (Fig. 6.3c), around 1 square degree in size. When the target sizes increase, the response of CSTMD4 drops dramatically.

The dorsal acute zone of dragonflies. (a) An unknown species of dragonfly with eyes possessing distinct dorsal acute zones with larger facet lenses (Photo credit: Tanya Consaul (123RF.com photo agency)). (b) A map of spatial resolution in the eye of the dragonfly Anax junius (expressed as the number of ommatidial axes per square degree). The density of ommatidia (and thus spatial resolution) increases rapidly in the dorsal (D) visual field. V ventral, L lateral, A anterior (Redrawn from Land and Nilsson 2012, with data from Sherk 1978). (c) The responses (peristimulus time histograms) of CSTMD4, a large-field small-target-detecting cell from the lobula of the dragonfly Hemicordulia tau. In the part of the cell’s receptive field corresponding to its dorsal acute zone, the cell is most sensitive to small, dark, and reasonably slow-moving targets (as a fly might appear during a highly fixated pursuit). It is insensitive to large bars, edges and gratings (From O’Carroll 1993, reprinted with permission from Macmillan Publishers Ltd. Entire figure from Cronin et al. 2014)

3.1.3 Matched Filters for the Physical Terrain

The physical environments where animals live have profoundly influenced the evolution of their senses, not least vision. Despite what appear to be enormous differences in the appearance of different habitats – especially with regard to their topology and structural complexity – there are also some notable constants that are common to almost all natural scenes and to which most visual systems have adapted. For instance, the probability distribution of visual contrasts in the terrestrial world is remarkably similar from habitat to habitat (Laughlin 1981), as are the probability distributions of spatial and temporal frequencies (Atick and Redlich 1992; Dong and Atick 1995; Field 1999). Not surprisingly, this predictability in the structure of natural scenes has strongly steered the evolution of early visual processing (Srinivasan et al. 1982; Atick 1992) – because features in the environment that are predictable are also redundant, maximum coding efficiency arises by ignoring or eliminating this redundancy and concentrating on unpredictable (and thus visually interesting) features. By using a variety of neural mechanisms to remove redundancy, notably lateral inhibition or spatiotemporal summation, early visual processing (van Hateren 1992, 1993) – whether in a fly (Laughlin 1981) or a mammal (Field 1987; Atick and Redlich 1992) – is thereby matched to the predictable nature of visual scenes.

Nonetheless, despite these structural similarities, natural scenes also manifest distinct differences. Some environments, like an intertidal beach or the immense open grass plains of the African veldt, are wide and flat and dominated by a single visual feature – the sharp border between the ground and the sky provided by the horizon. It is here that almost everything of importance to an animal occurs – the courtship displays of mates, the sudden appearance and flight of prey or the unforeseen attacks of predators. Other environments are much more complex, like the tangled understorey of a tropical rainforest, a maze of tree trunks, bushes and vines that spread in every direction. At the other extreme is the vast and featureless dim blue world of the mesopelagic deep sea. All of these environments are visually very different, and not surprisingly eyes and neural processing have evolved to match these habitats as closely as possible. For instance, desert ants (Wehner 1987), dance flies (Zeil et al. 1988) and fiddler crabs (Zeil et al. 1986; Zeil and Hemmi 2006; Smolka and Hemmi 2009) that inhabit flat and relatively featureless terrains or patrol water surfaces have evolved compound eyes whose sampling stations are densest – and resolution highest – in a narrow strip around the equator of the eye, thus creating a matched filter for sampling objects along the horizon, where the greatest density of visual information occurs. These so-called visual streaks of high resolution are a common evolutionary response to the demands of vision in a flat world (Hughes 1977).

Visual streaks are also found in the eyes of two water-dwelling bugs, both of which take advantage of the surface tension of water to position themselves at the water surface, one of them above it (the water strider Gerris lacustris, Fig. 6.4a) and the other below (the backswimmer Notonecta glauca, Fig. 6.4b). To detect their prey, both bugs are highly dependent on their eyes and on their ability to detect water ripples produced by small animals trapped at the water surface. With its long slender legs, the water strider is able to skate across the water film and to hold station on a slowly moving water surface by leaping upstream to counteract its displacement away from familiar shoreline landmarks (Junger and Varju 1990). To view this flat water surface, and the visual landmarks at its edge, the water strider has an extremely sharp visual streak aligned with this horizon, with vertical interommatidial angles falling at its centre to values close to the smallest recorded in insect eyes – 0.55° (Fig. 6.4a, Dahmen 1991).

Adaptations for vision at a flat water surface. (a) The sharp horizontal visual streak of the surface-dwelling water strider Gerris lacustris, where a vertical transect through the frontal eye reveals a dramatic decrease in vertical interommatidial angle Δϕᵥ towards the equator of the eye (the eye region viewing the horizon). Here Δϕᵥ falls to a minimum value of 0.55° (Adapted from data taken from Dahmen 1991. Photo credit for the unknown species of Gerris: Ernie Cooper, www.macrocritters.wordpress.com). (b) Vision through Snell’s window in the backswimmer Notonecta glauca, where the 180° view of the world above the water surface is compressed by refraction into a 97.6° wide cone below the water surface. The backswimmer hangs upside down at the water surface, with the ommatidia in the ventral regions of its apposition eyes looking upwards (positive directions of view in the left panel). At precisely the boundary of Snell’s window (red dashed lines), there is a sudden decrease in Δϕᵥ, indicating enhanced spatial resolution for objects (prey) on the horizontal water surface above. In the horizontal direction below the water surface (0°: green dashed lines) Δϕᵥ is also minimal, indicating the presence of a second horizontal acute zone. In both panels, negative directions of view indicate ventral regions of the visual world, whereas positive directions indicate dorsal regions (note however that since the backswimmer hangs upside down, dorsal directions are viewed by the ventral eye and vice versa) (Adapted from Wehner 1987 (with kind permission from Springer Science + Business Media), with data and images from Schwind 1980. Photo credit: Eric Isselee (123RF.com photo agency). Panel B from Cronin et al. 2014)

The optical world experienced by a backswimmer that hangs suspended from the underside of the water surface is quite different to that experienced by a water strider. The fact that water has a higher refractive index than air means that the entire 180° dome of the sky is compressed to a 97° cone of light underwater. Within this cone of light – called “Snell’s window” – all the features of the terrestrial world above can be found, including the flat water surface, which is located at the edge of the cone (Walls 1942, p. 378). By looking upwards along the edge of the cone, a suspended backswimmer is able to have a periscopic view of the outside water surface and see anything, including prey, which might be trapped on it. The water surface is an important horizon for the backswimmer, and the ventral part of the eye possesses a well-developed visual streak (Fig. 6.4b) that watches the surface along the edge of Snell’s window (Schwind 1980). This matched filtering doesn’t stop at the optics of the eye: in the optic lobe, there are cells which have their visual fields coincident with the visual streak, and which respond maximally to prey-sized objects on the water surface (Schwind 1978). But the water surface is not the backswimmer’s only horizon. Frontwards, the backswimmer can also see the environment of the pond and any item of interest that might be located there. There is a second visual streak that views this direction as well (Schwind 1980).
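The compression of the sky into Snell's window follows directly from Snell's law, and the size of the cone can be checked with a few lines of arithmetic. The sketch below is illustrative only: the refractive index of 1.33 for water is an assumed round value, and the function names are hypothetical.

```python
import math


def snell_window_full_angle_deg(n_water: float = 1.33, n_air: float = 1.0) -> float:
    """Full apex angle (degrees) of the underwater cone containing the whole sky.

    A ray grazing the water surface (90 degrees from the vertical in air) is
    refracted to the critical angle asin(n_air / n_water) under water.
    The refractive index of 1.33 is an assumed value for illustration.
    """
    critical_angle = math.asin(n_air / n_water)
    return 2.0 * math.degrees(critical_angle)


def underwater_angle_from_vertical_deg(
    elevation_deg: float, n_water: float = 1.33, n_air: float = 1.0
) -> float:
    """Underwater angle from the vertical for light arriving from a given
    elevation above the horizon (0 = water surface, 90 = zenith)."""
    incidence = math.radians(90.0 - elevation_deg)
    return math.degrees(math.asin(n_air * math.sin(incidence) / n_water))


if __name__ == "__main__":
    print("Full width of Snell's window (deg):", snell_window_full_angle_deg())
    for elevation in (0, 10, 30, 90):
        print(elevation, underwater_angle_from_vertical_deg(elevation))
```

Objects on the water surface (elevation 0°) land at the very edge of the cone, which is where the backswimmer's ventral visual streak is directed.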

3.2 Central Visual Matched Filtering in Insects

3.2.1 Matched Filters for Locomotion

Insects display all the forms of locomotion that have evolved in the animal kingdom, from walking and swimming, to flying and gliding. Some, like water striders, are even able to walk on water. But irrespective of its form, a characteristic of all modes of locomotion is its speed, and the speed of locomotion – or more particularly, the speed with which the visual contrasts of the world traverse the receptive fields of visual cells – has led to the evolution of fundamental matched filters in the photoreceptors, the cells responsible for recording the very first impressions of the moving visual world.

Sixty-five years ago, the great German sensory physiologist Hansjochem Autrum measured extracellular responses to light flashes in the eyes of insects (so-called electroretinograms or ERGs) and discovered that the eyes of insects are either “fast” or “slow”, with fast eyes being correlated with rapidly moving (and often diurnal) insects and slow eyes with slowly moving (and often nocturnal) insects (Autrum 1950). Later intracellular recordings from the photoreceptors of a range of different insects confirmed this notion (Howard et al. 1984; de Souza and Ventura 1989): the voltage responses of photoreceptors to brief dim flashes of light (known as “impulse responses”) had slower time courses in slowly moving species and faster time courses in rapidly moving species (Fig. 6.5), indicating fundamental differences in the membrane properties of the two speed classes of photoreceptors. These differences, it turns out, are largely due to differences in the numbers and types of potassium (K+) channels found in the photoreceptor membranes of fast and slow eyes (Laughlin and Weckström 1993; Weckström and Laughlin 1995; Salmela et al. 2012), the exact complement of channels creating a sensory filter matched to the speed of locomotion (Laughlin 1996). Among the flies, species that fly rapidly (such as the hoverfly Epistrophe eligans) have photoreceptors possessing delayed-rectifier K+ channels that allow a rapid response by reducing the membrane time constant. In contrast, the photoreceptors of slowly moving flies (like the crane fly Tipula paludosa) lack delayed-rectifier channels, but instead express an inactivating K+ current (Laughlin and Weckström 1993). Due to their large currents and conductances, the fast delayed rectifiers of rapidly flying flies are energetically more expensive than the K+ currents of slowly moving flies, which inactivate quickly (Laughlin and Weckström 1993), indicating that fast vision comes at a cost.
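The link between K+ conductance and response speed can be illustrated with the simplest possible membrane model, a passive resistor and capacitor in parallel, for which the time constant is τ = C/g. This is a deliberately crude sketch: the capacitance and conductance values are made up for illustration and are not measurements from any of the species discussed, and real photoreceptor membranes are voltage dependent rather than passive.

```python
import math


def membrane_time_constant_ms(capacitance_pf: float, conductance_ns: float) -> float:
    """Passive membrane time constant tau = C / g.

    With C in picofarads and g in nanosiemens the result is in milliseconds.
    """
    return capacitance_pf / conductance_ns


def corner_frequency_hz(tau_ms: float) -> float:
    """Corner (3 dB) frequency of a first-order low-pass filter with time constant tau."""
    return 1000.0 / (2.0 * math.pi * tau_ms)


if __name__ == "__main__":
    capacitance_pf = 100.0      # hypothetical membrane capacitance
    resting_g_ns = 10.0         # hypothetical conductance without extra K+ channels
    extra_k_g_ns = 40.0         # hypothetical added delayed-rectifier conductance

    slow_tau = membrane_time_constant_ms(capacitance_pf, resting_g_ns)
    fast_tau = membrane_time_constant_ms(capacitance_pf, resting_g_ns + extra_k_g_ns)
    print("slow membrane:", slow_tau, "ms,", corner_frequency_hz(slow_tau), "Hz")
    print("fast membrane:", fast_tau, "ms,", corner_frequency_hz(fast_tau), "Hz")
```

In this toy model a larger open conductance shortens the time constant and raises the corner frequency, but it also means larger ionic currents that must be pumped back, which is the qualitative origin of the energetic cost noted above.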

Fast and slow photoreceptors in flies, as revealed by the light-adapted impulse response (the response to a very dim and brief flash of light delivered at time = 0). (a, b) Impulse responses in two species of crane flies (Nephrotoma quadrifaria a; Tipula paludosa b), slowly flying crepuscular insects that possess typical “slow” photoreceptors. (c, d) Impulse responses in the tachinid Eriothrix rufomaculata (c) and the hoverfly Epistrophe eligans (d), fast aerobatic diurnal insects that possess typical “fast” photoreceptors (Adapted from Laughlin and Weckström 1993. Photo credits (www.naturespot.org.uk): a, d Graham Calow; b, c David Nicholls)

Interestingly, the “speed” of an eye can vary within the same animal, for example, during the transition from day to night. At night, the demands of seeing well in dim light tend to result in slower vision (van Hateren 1993; Warrant 1999; Frederiksen et al. 2008), and in the locust Schistocerca gregaria – an insect known to fly both day and night – the membrane filters are modulated accordingly. During the day, locust eyes are “fast”, with membrane filters functioning as delayed rectifiers, while at night their eyes are “slow”, with filters exhibiting inactivating K+ currents (Cuttle et al. 1995). This daily transformation from fast to slow eyes (with a corresponding change in energy costs) appears to be under the control of the neuromodulator serotonin (Cuttle et al. 1995). Interestingly, the same transition from day to night also causes changes in the morphology of the photoreceptors that broadens their spatial receptive fields (Williams 1983) and thus decreases spatial resolution. Thus, in locust photoreceptors, the visual matched filters are plastic, changing from faster and more acute vision that is well matched to life in bright light during the day, to slower and coarser vision that is well matched to dim conditions at night.

Matched filters for locomotion are not restricted to the photoreceptors. Flying insects – such as butterflies, flies, bees, grasshoppers and dragonflies – have equatorial gradients of spatial resolution that are adaptations for forward flight through a textured environment (Land 1989). When an insect (or any animal) moves forward through its surroundings, its eyes experience an optic flow of moving features (Gibson 1950; Wehner 1981). Features directly ahead appear to be almost stationary, while closer features to the side of this forward “pole” appear to move with a velocity which becomes maximal when they are located at the side of the eye, 90° from the pole. If we assume for simplicity that all photoreceptors within the eye sample photons during a fixed integration time Δt (which may not be the case, as in some flies: Burton et al. 2001), the motion of flow-field images from front to back across the eye will cause blurring. An object moving past the side of the eye (with velocity v deg/s) will appear as a horizontal spatial smear whose angular size will be approximately vΔt degrees. This effectively widens the local optical acceptance angle Δρ to a new value of √(Δρ² + (vΔt)²) (Srinivasan and Bernard 1975). The extent of this widening is greater at the side of the eye (higher v) than at the front (lower v). In order to maintain an optimum sampling ratio of Δρ/Δϕ (Snyder 1977), the equatorial increase in Δρ posteriorly should be matched by an increase in Δϕ. This indeed seems to be the case in many flying insects, such as the Empress Leilia butterfly Asterocampa leilia (Rutowski and Warrant 2002). In Asterocampa Δϕ increases smoothly along the equator from the front of the eye to the side, from 0.9 to 2.0° in males and from 1.3 to 2.2° in females.
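The motion-blur argument can be made concrete with a short calculation. The sketch below assumes, for illustration only, that all objects lie at the same distance, so that angular speed scales with the sine of the angle from the forward pole; the peak speed, integration time, acceptance angle and frontal Δϕ are hypothetical inputs, not data from the studies cited.

```python
import math


def flow_speed_deg_per_s(angle_from_pole_deg: float, peak_speed_deg_per_s: float) -> float:
    """Angular image speed at a given angle from the forward pole.

    Assumes equidistant objects, so speed is zero straight ahead and
    maximal at 90 degrees (the side of the eye).
    """
    return peak_speed_deg_per_s * math.sin(math.radians(angle_from_pole_deg))


def blurred_acceptance_angle_deg(
    delta_rho_deg: float, speed_deg_per_s: float, integration_time_s: float
) -> float:
    """Effective acceptance angle sqrt(delta_rho^2 + (v * dt)^2)."""
    smear_deg = speed_deg_per_s * integration_time_s
    return math.hypot(delta_rho_deg, smear_deg)


def matched_delta_phi_deg(
    delta_phi_front_deg: float,
    delta_rho_deg: float,
    speed_deg_per_s: float,
    integration_time_s: float,
) -> float:
    """Interommatidial angle that keeps delta_rho / delta_phi constant
    once motion blur has widened the acceptance angle."""
    widening = (
        blurred_acceptance_angle_deg(delta_rho_deg, speed_deg_per_s, integration_time_s)
        / delta_rho_deg
    )
    return delta_phi_front_deg * widening


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        v = flow_speed_deg_per_s(angle, peak_speed_deg_per_s=100.0)
        print(angle, matched_delta_phi_deg(1.0, 1.5, v, integration_time_s=0.02))
```

Under these assumptions the predicted Δϕ grows smoothly from the front of the eye to the side, which is the qualitative trend reported for Asterocampa.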

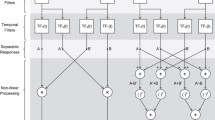

Remarkably, the significant extent of matched filtering occurring in the optics and photoreceptors of the compound eye is even more evident at higher levels of processing in the optic lobe, notably in the wide-field motion detecting neurons of the lobula and lobula plate. The lobula plate of the blowfly Calliphora erythrocephala (Fig. 6.6a) has long been known to contain cells – known as “horizontal” (H) and “vertical” (V) cells – which respond to wide-field motion (Hausen 1982a, b). Some cells apparently prefer upward or downward motion, others leftward or rightward. By examining very small regions of the visual fields of these cells, Holger Krapp and colleagues (Krapp and Hengstenberg 1996; Krapp et al. 1998) discovered that the local direction preference was usually very different to the global preference. In fact, they found that the local preferred direction changed in a predictable manner from one region to the next, building up an orderly map of directions across the entire visual field of the cell (Fig. 6.6c). The most remarkable feature of these maps is that they are astonishingly good matches to the maps of motion vectors that describe flow fields (Fig. 6.6b). Cell VS6 (Fig. 6.6c) has a map of preferred motion directions that is extremely similar to the map of motion vectors that describes “roll”, the field of rotating features that results from a turn around the body axis (rotation: Fig. 6.6b). This means that if a fly makes a roll turn, VS6 will be maximally stimulated. Signals from these cells can then be used to activate muscles in the fly’s neck, compensating for the roll by returning the head to a horizontal position and maintaining the fly’s course. Similar matching can be found in other cells, including VS1 which matches “pitch”, the field of upwardly moving features resulting from a nose dive. The cell VS8 matches a field consisting of both pitch and roll. In other words, taken as a group, these cells respond vigorously whenever the fly experiences rotational optic flow and behave as impressive matched filters for a predictable and invariant feature of the visual world.
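The idea of a neuron acting as a matched filter for a flow field can be sketched as a template comparison. The toy model below is an assumption-laden illustration, not a model of any real VS cell: it builds the idealised rotational flow field for a chosen axis on a coarse grid of viewing directions, uses the roll field as a "template" of local preferred directions, and sums the local agreement (dot product) between template and stimulus. The coordinate frame (x forward along the body axis, y to the left, z up), the grid and all function names are hypothetical choices.

```python
import math

Vec = tuple[float, float, float]


def direction(azimuth_deg: float, elevation_deg: float) -> Vec:
    """Unit viewing direction; x forward (body axis), y left, z up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def rotational_flow(view: Vec, rotation_axis: Vec) -> Vec:
    """Apparent image motion at a viewing direction during self-rotation.

    Equal to -(omega x d) = d x omega; it vanishes where the viewing
    direction coincides with the rotation axis (the "pole").
    """
    return cross(view, rotation_axis)


# Coarse, symmetric sampling of the visual field (an arbitrary choice).
GRID = [direction(az, el) for az in range(-180, 180, 30) for el in range(-60, 61, 30)]


def flow_field(rotation_axis: Vec) -> list[Vec]:
    return [rotational_flow(d, rotation_axis) for d in GRID]


def matched_filter_response(template: list[Vec], stimulus: list[Vec]) -> float:
    """Sum over the visual field of local agreement between template and stimulus."""
    return sum(dot(t, s) for t, s in zip(template, stimulus))


ROLL: Vec = (1.0, 0.0, 0.0)    # rotation about the body axis
PITCH: Vec = (0.0, 1.0, 0.0)   # rotation about the transverse axis

if __name__ == "__main__":
    roll_template = flow_field(ROLL)
    print("roll stimulus :", matched_filter_response(roll_template, flow_field(ROLL)))
    print("pitch stimulus:", matched_filter_response(roll_template, flow_field(PITCH)))
```

In this idealised setting a roll-matched template responds strongly to roll and essentially not at all to pitch, which is the sense in which a cell with a roll-like map of local preferences signals rotation about one particular axis.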

Wide-field motion-sensitive cells in the lobula plate of the blowfly Calliphora erythrocephala (a) act as matched filters for optic flow during flight. (b) Local motion flow-field vectors at various azimuths Θ and elevations Ψ experienced during rotation about an axis of rotation Aᵣ, in this case the body axis. At a lateral position with Ψ = 90°, the local vectors are ventrally (v) oriented. At the opposite position (Ψ = −90°), the local vectors are dorsally (d) oriented. Frontally at the “pole” (Ψ = 0°, Θ = 0°: circled “f”), the local vector is zero. Between these extremes, the local vectors swirl in a clockwise direction around the frontal pole, and anti-clockwise around the caudal (c) pole. (c) The local directional preferences of optomotor cell VS6 for small moving black spots at different azimuths Θ and elevations Ψ. Spots were presented to the cell at various Θ and Ψ, and the preferred direction of movement measured electrophysiologically. The local directional preferences show a striking similarity to the motion vectors experienced by the fly during rotation (b), and this cell would respond maximally to such a flow field. In a similar manner, other optomotor cells respond maximally to translation and pitch (a Photo credit: Zhang Yuan Geng (123rf.com); b, c Modified from Krapp and Hengstenberg (1996))

3.2.2 Matched Filters for Navigation

Despite their small brains, many insects are remarkable navigators. Some, like bees, wasps and ants, are able to learn visual landmarks around their nest and along a foraging route and then use them to successfully navigate to and from the nest in search of food (Zeil 2012). Others – like the North African desert ant Cataglyphis bicolor – are in addition able to continuously update a homebound vector of correct length and direction (via a process known as path integration) while foraging far from the nest in near featureless terrain, thus ensuring their safe return upon finding food (Müller and Wehner 1988). Several species of butterflies and moths (such as the Monarch butterfly Danaus plexippus) are even capable of migrating over thousands of kilometres to specific destinations – sites typically favoured by endless generations of their ancestors – only to return months later to where they began (Williams 1965). All of these insects rely on a suite of visual (and other sensory) cues to ensure successful navigation, and many depend on reliable visual compass cues present in the sky (Wehner 1984) – the disc of the sun or moon (Perez et al. 1997; Byrne et al. 2003; Ugolini et al. 2003; Heinze and Reppert 2011), the distributions of stars (Dacke et al. 2013) or the celestial pattern of polarised light (Wehner and Labhart 2006). Of these, the last is particularly widely used by insect navigators, largely because the celestial polarisation pattern – which is distributed across the entire dome of the sky – is often still visible when other celestial cues have become hidden by cloud or vegetation. Even though we ourselves are unable to see this pattern, most invertebrates (and probably even some vertebrates) see it clearly and can potentially use it as a celestial compass during navigation. It turns out that a remarkable neural matched filter for the celestial polarisation pattern exists in the insect’s central brain. However, before describing this filter, it is first necessary to describe the pattern of celestial polarised light and how polarised light is detected in the retina.

The polarisation properties of light arise from its electromagnetic nature: the plane of polarisation of a light wave is defined as the plane in which its electric field wave (or E-vector) oscillates. Because of Rayleigh scattering of unpolarised sunlight in the atmosphere, each point in the sky emits light rays with a certain plane (direction) of polarisation. For scattered sunlight or moonlight, the exact direction of each light ray’s electric field vector, and its degree of polarisation, varies systematically across the dome of the sky. Scattering thus creates a distinct pattern of skylight polarisation, within which the E-vectors are approximately arranged in concentric circles around the sun or moon (Fig. 6.7a). The pattern has a symmetry plane defined by the celestial meridian, the semicircular line that traverses the entire dome of the sky (from horizon to horizon) and contains both the sky’s zenith (the point directly above the observer) and the sun or moon. This symmetry is the reason why many nocturnal and diurnal insects are able to use the polarisation pattern as a visual celestial compass during navigation.

Polarised light detection in insects. (a) The celestial polarisation pattern. The E-vectors of scattered plane-polarised light (bars) have directions that are arranged tangentially on concentric circular loci centred on the sun or moon. The degree of polarisation (bar thickness) is maximal at 90° from the sun. (b–c) Transverse sections of rhabdoms in the dorsal rim (b), and remainder of the eye (c), in the ant Polyrhachis sokolova. In the dorsal rim, the rhabdoms are dumbbell shaped and the rhabdomeres each have one of two possible perpendicular microvillar directions (white “T”). In the remainder of the eye, the rhabdoms are round and the rhabdomeres have microvilli oriented in one of several possible directions. Scale bar for both parts: 2 μm (Sections taken from Narendra et al. (2013))

Insects can see plane-polarised light because of the structure of their rhabdoms, which are formed from tube-like membranous microvilli. These microvilli – which are all highly aligned – each anchor and constrain the orientation of their resident rhodopsin molecules, so that they are aligned along the microvillar axis. Since each rhodopsin molecule is a linear absorption dipole, and the dipole orientation is constrained by the microvillus (and is identical to that for every other rhodopsin molecule), the rhabdom as a whole becomes highly polarisation sensitive in a direction parallel to the microvilli (Snyder and Laughlin 1975). The photoreceptors responsible for the detection and analysis of polarised skylight are housed within the “dorsal rim area” (or DRA), a narrow strip of ommatidia along the dorsal-most margin of the compound eye (reviewed by Wehner and Labhart 2006; Homberg and el Jundi 2014). The analysis of plane-polarised light requires each rhabdom of the DRA to house two “polarisation classes” of these photoreceptors – each with microvilli oriented in only one of two possible perpendicular directions (Fig. 6.7b, c) – thus forming two orthogonal analysis components for any direction of plane-polarised light. The neural signals generated in each class are then compared, via a neural opponency mechanism, at a subsequent (higher) level of the visual system.
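Why two orthogonal analysers and an opponent comparison are useful can be shown with a simple model. The sketch below assumes a cosine-squared (Malus-type) dependence of each receptor's photon catch on E-vector angle, a polarisation sensitivity of 10, and a log-ratio comparison between the two receptor classes; all three are common modelling conventions adopted here for illustration, not details given in the text, and the function names are hypothetical.

```python
import math


def receptor_signal(
    intensity: float,
    e_vector_deg: float,
    preferred_deg: float,
    polarisation_sensitivity: float = 10.0,
    degree: float = 1.0,
) -> float:
    """Photon catch of a receptor whose microvilli point along preferred_deg.

    The modulation depth is degree * (PS - 1) / (PS + 1), so a receptor with
    PS = 1, or unpolarised light (degree = 0), gives no modulation.
    """
    depth = degree * (polarisation_sensitivity - 1.0) / (polarisation_sensitivity + 1.0)
    return intensity * (1.0 + depth * math.cos(2.0 * math.radians(e_vector_deg - preferred_deg)))


def opponent_signal(
    intensity: float,
    e_vector_deg: float,
    preferred_deg: float = 0.0,
    polarisation_sensitivity: float = 10.0,
    degree: float = 1.0,
) -> float:
    """Log ratio of two receptor classes with perpendicular microvilli (assumed opponency)."""
    a = receptor_signal(intensity, e_vector_deg, preferred_deg, polarisation_sensitivity, degree)
    b = receptor_signal(
        intensity, e_vector_deg, preferred_deg + 90.0, polarisation_sensitivity, degree
    )
    return math.log(a / b)


if __name__ == "__main__":
    for angle in (0, 45, 90, 135):
        dim = opponent_signal(1.0, angle)
        bright = opponent_signal(1000.0, angle)
        print(angle, dim, bright)
```

Because the comparison is a ratio, the opponent signal in this model depends on E-vector angle and degree of polarisation but not on overall light intensity, which is one reason such a scheme is attractive for reading a sky compass under changing illumination.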

How these signals are actually used as a celestial compass has, until very recently, remained unknown. It now turns out, however, that the insect central complex (CX), a sophisticated structure in the central brain that functions as a control centre for motor coordination and spatial orientation, seems to play a central role. Many neurons of the central complex are highly sensitive to polarised light – the protocerebral bridge, the uppermost region of the CX, even has a columnar architecture reminiscent of the mammalian cortex, in which each column houses interneurons tuned to a specific direction of polarised light (Heinze and Homberg 2007). Recent recordings from TL neurons in the lower division of the central body region of the CX (Fig. 6.8: Bech et al. 2014) not only indicate a sensitivity to polarised light but also reveal that the directional preference for the plane of polarised light, as well as the response strength, both change in a systematic fashion across the cell’s enormous receptive field (which covers the entire dome of the sky). In one such cell, the directional preference and response strength (Fig. 6.8a) vary in such a way as to mimic the systematic variation in the direction and degree of polarisation across the dome of the sky when the sun is at an elevation of 10° (Fig. 6.8b), leading to a remarkably good match between the receptive field properties of the cell and the polarised light properties of the sky (Fig. 6.8c). At this sun elevation, and indeed for most elevations up to around 50°, as the locust rotates around its body axis under the sky, the receptive field of this cell would provide such a good match to the celestial polarisation pattern that its response would be strongest only for a single azimuthal direction (as determined by modelling: Fig. 6.8d). Recordings from other TL cells show best matches to the celestial polarisation pattern for other elevations of the sun and strongest responses at other azimuths. Together the responses of all of these cells – each of them an impressive matched filter to a specific celestial polarisation pattern – are likely to be integrated in the brain to provide a robust compass system that allows the locust to unambiguously signal a chosen compass bearing.

Visual matched filtering to the celestial polarisation pattern. (a) The tuning of a TL neuron in the locust central complex in different parts of its receptive field (which occupies the entire celestial hemisphere), shown relative to the locust’s body axis. The orientation of each red arrow shows the direction of polarised light that the TL neuron responded to best at that location in its visual field, while the length and thickness of each arrow represent the strength of the response. (b, c) The celestial polarisation pattern (b) that closely matched (c) the receptive field properties of the TL neuron shown in (a) has a sun elevation and azimuth of 10° and 0° (directly anterior of the locust), respectively (where blue arrows represent the angle and degree of polarisation of light at each point in the celestial hemisphere and the yellow spot represents the sun – see Fig. 6.7a). (d) Modelled neural responses of this TL neuron in response to the entire celestial polarisation pattern as a function of its position in the sky (as determined by the azimuth and elevation of the sun relative to the locust). For most sun elevations up to about 50°, there is a unique azimuth that leads to a maximal response in the TL neuron (and thus gives an unambiguous compass bearing). For elevations near 0° (sunrise/sunset), the neuron has greater difficulty signalling a unique azimuth (and compass bearing), although spectral gradients in the sky are likely used to overcome this ambiguity (Pfeiffer and Homberg 2007). For sun elevations above around 60° (approaching midday), the neuron also has difficulty signalling a unique azimuth, but other TL cells have receptive fields better matched to these elevations (From Bech et al. 2014)

4 Conclusions

From the optical structure of the compound eyes and the physiological properties of the photoreceptors, to the neural circuits that process visual information in the brain, visual matched filtering has constituted a major evolutionary strategy in insects. One reason for this is the necessity for a small visual system, like that of an insect, to be matched to the most pressing visual challenges that the species faces, at the expense of less pressing challenges. A second but no less important reason is that matched filtering undoubtedly saves energy, particularly in the brain, and in small animals like insects, with complex lifestyles but strictly limited energy budgets, this could be of critical benefit for freeing up energy that can be used for other vital functions. Both of these factors – pressing visual challenges and overriding energy constraints – have led to the enormous variety and sophistication of visual matched filters that we see among the insects today.

References

Atick JJ (1992) Could information theory provide an ecological theory of sensory processing? Network 3:213–251

Atick JJ, Redlich AN (1992) What does the retina know about natural scenes? Neural Comput 4:196–210

Autrum H (1950) Die Belichtungspotentiale und das Sehen der Insekten (Untersuchungen an Calliphora und Dixippus). Z Vergl Physiol 32:176–227

Bech M, Homberg U, Pfeiffer K (2014) Receptive fields of locust brain neurons are matched to polarization patterns of the sky. Curr Biol 24:2124–2129

Burton BG, Tatler BW, Laughlin SB (2001) Variations in photoreceptor response dynamics across the fly retina. J Neurophysiol 86:950–960

Byrne M, Dacke M, Nordström P, Scholtz C, Warrant EJ (2003) Visual cues used by ball-rolling dung beetles for orientation. J Comp Physiol A 189:411–418

Chittka L, Niven JE (2009) Are bigger brains better? Curr Biol 19:R995–R1008

Cronin TW, Johnsen S, Marshall NJ, Warrant EJ (2014) Visual ecology. Princeton University Press, Princeton

Cuttle MF, Hevers W, Laughlin SB, Hardie RC (1995) Diurnal modulation of photoreceptor potassium conductance in the locust. J Comp Physiol A 176:307–316

Dacke M, Baird E, Byrne M, Scholtz C, Warrant EJ (2013) Dung beetles use the milky way for orientation. Curr Biol 23:298–300

Dahmen H (1991) Eye specialisations in waterstriders: an adaptation to life in a flat world. J Comp Physiol A 169:623–632

de Souza JM, Ventura DF (1989) Comparative study of temporal summation and response form in hymenopteran photoreceptors. J Comp Physiol A 165:237–245

Dong DW, Atick JJ (1995) Statistics of natural time-varying images. Netw Comput Neur Syst 6:345–358

Eberhard WG (2007) Miniaturized orb-weaving spiders: behavioural precision is not limited by small size. Proc Roy Soc B 274:2203–2209

Field DJ (1987) Relations between the statistics of natural images and the response properties of cortical cells. J Opt Soc Am A 4:2379–2394

Field DJ (1999) Wavelets, vision and the statistics of natural scenes. Phil Trans R Soc A 357:2527–2542

Frederiksen R, Wcislo WT, Warrant EJ (2008) Visual reliability and information rate in the retina of a nocturnal bee. Curr Biol 18:349–353

Gibson JJ (1950) The perception of the visual world. Houghton Mifflin, Boston

Gilbert C, Strausfeld NJ (1991) The functional organization of male-specific visual neurons in flies. J Comp Physiol A 169:395–411

Gronenberg W, Strausfeld NJ (1991) Descending pathways connecting the male-specific visual system of flies to the neck and flight motor. J Comp Physiol A 169:413–426

Hausen K (1982a) Motion sensitive interneurons in the optomotor system of the fly. 1. The horizontal cells – structure and signals. Biol Cybern 45:143–156

Hausen K (1982b) Motion sensitive interneurons in the optomotor system of the fly. 2. The horizontal cells – receptive-field organization and response characteristics. Biol Cybern 46:67–79

Heinze S, Homberg U (2007) Maplike representation of celestial E-vector orientations in the brain of an insect. Science 315:995–997

Heinze S, Reppert SM (2011) Sun compass integration of skylight cues in migratory monarch butterflies. Neuron 69:345–358

Homberg U, el Jundi B (2014) Polarization vision in arthropods. In: Werner JS, Chalupa LM (eds) The new visual neurosciences. MIT Press, Cambridge, MA, pp 1207–1218

Hornstein EP, O'Carroll DC, Anderson JC, Laughlin SB (2000) Sexual dimorphism matches photoreceptor performance to behavioural requirements. Proc R Soc Lond B 267:2111–2117

Horridge GA (1978) The separation of visual axes in apposition compound eyes. Phil Trans Roy Soc Lond B 285:1–59

Howard J, Blakeslee B, Laughlin SB (1984) The dynamics of phototransduction in insects: a comparative study. J Comp Physiol A 154:707–718

Hughes A (1977) The topography of vision in mammals of contrasting life style: comparative optics and retinal organisation. In: Crescitelli F (ed) Handbook of sensory physiology, vol VII/5. Springer, Berlin, pp 613–756

Junger W, Varju D (1990) Drift compensation and its sensory basis in waterstriders (Gerris paludum F.). J Comp Physiol A 167:441–446

Krapp HG (2014) Sensory integration: neuronal filters for polarized light patterns. Curr Biol 24:R840–R841

Krapp HG, Hengstenberg R (1996) Estimation of self-motion by optic flow processing in single visual neurons. Nature 384:463–466

Krapp HG, Hengstenberg B, Hengstenberg R (1998) Dendritic structure and receptive field organization of optic flow processing interneurons in the fly. J Neurophysiol 79:1902–1917

Land MF (1981) Optics and vision in invertebrates. In: Autrum H (ed) Handbook of sensory physiology, vol VII/6B. Springer, Berlin, pp 471–592

Land MF (1989) Variations in the structure and design of compound eyes. In: Stavenga DG, Hardie RC (eds) Facets of vision. Springer, Berlin, pp 90–111

Land MF, Collett TS (1974) Chasing behaviour of houseflies (Fannia canicularis): a description and analysis. J Comp Physiol 89:331–357

Land MF, Eckert H (1985) Maps of the acute zones of fly eyes. J Comp Physiol A 156:525–538

Land MF, Nilsson DE (2012) Animal eyes. Oxford University Press, Oxford

Laughlin S (1981) A simple coding procedure enhances a neuron’s information capacity. Z Naturforsch 36C:910–912

Laughlin SB (1996) Matched filtering by a photoreceptor membrane. Vision Res 36:1529–1541

Laughlin SB, Weckström M (1993) Fast and slow photoreceptors – a comparative study of the functional diversity of coding and conductances in the Diptera. J Comp Physiol A 172:593–609

Laughlin SB, de Ruyter van Steveninck RR, Anderson JC (1998) The metabolic cost of neural information. Nat Neurosci 1:36–41

Müller M, Wehner R (1988) Path integration in desert ants, Cataglyphis fortis. Proc Natl Acad Sci U S A 85:5287–5290

Narendra A, Alkaladi A, Raderschall CA, Robson SKA, Ribi WA (2013) Compound eye adaptations for diurnal and nocturnal lifestyle in the intertidal ant, Polyrhachis sokolova. PLoS One 8(10):e76015

Nilsson DE (1989) Optics and evolution of the compound eye. In: Stavenga DG, Hardie RC (eds) Facets of vision. Springer, Berlin, pp 30–73

Nilsson DE, Warrant EJ, Johnsen S, Hanlon R, Shashar N (2012) A unique advantage for giant eyes in giant squid. Curr Biol 22:683–688

Niven JE, Farris SM (2012) Miniaturization of nervous systems and neurons. Curr Biol 22:R323–R329

Niven JE, Laughlin SB (2008) Energy limitations as a selective pressure on the evolution of sensory systems. J Exp Biol 211:1792–1804

Niven JE, Anderson JC, Laughlin SB (2007) Fly photoreceptors demonstrate energy-information trade-offs in neural coding. PLoS Biol 5(4):e91

Nordström K, O’Carroll DC (2009) Feature detection and the hypercomplex property in insects. TINS 32:383–391

O’Carroll DC (1993) Feature-detecting neurons in dragonflies. Nature 362:541–543

Okawa H, Sampath AP, Laughlin SB, Fain GL (2008) ATP consumption by mammalian rod photoreceptors in darkness and in light. Curr Biol 18:1917–1921

Olberg RM (1981) Object- and self-movement detectors in the ventral cord of the dragonfly. J Comp Physiol A 141:327–334

Olberg RM (1986) Identified target-selective visual interneurons descending from the dragonfly brain. J Comp Physiol A 159:827–840

Perez SM, Taylor OR, Jander R (1997) A sun compass in monarch butterflies. Nature 387:29

Pfeiffer K, Homberg U (2007) Coding of azimuthal directions via time-compensated combination of celestial compass cues. Curr Biol 17:960–965

Rutowski RL, Warrant EJ (2002) Visual field structure in a butterfly Asterocampa leilia (Lepidoptera, Nymphalidae): dimensions and regional variation in acuity. J Comp Physiol A 188:1–12

Salmela I, Immonen EV, Frolov R, Krause S, Krause Y, Vähäsöyrinki M, Weckström M (2012) Cellular elements for seeing in the dark: voltage-dependent conductances in cockroach photoreceptors. BMC Neurosci 13:93

Schwind R (1978) Visual system of Notonecta glauca: a neuron sensitive to movement in the binocular visual field. J Comp Physiol 123:315–328

Schwind R (1980) Geometrical optics of the Notonecta eye: adaptations to optical environment and way of life. J Comp Physiol 140:59–68

Sherk TE (1978) Development of the compound eyes of dragonflies (Odonata). III Adult compound eyes. J Exp Zool 203:61–80

Smolka J, Hemmi JM (2009) Topography of vision and behavior. J Exp Biol 212:3522–3532

Snyder AW (1977) Acuity of compound eyes: physical limitations and design. J Comp Physiol 116:161–182

Snyder AW, Laughlin SB (1975) Dichroism and absorption by photoreceptors. J Comp Physiol 100:101–116

Srinivasan MV, Bernard GD (1975) The effect of motion on visual acuity of the compound eye: a theoretical analysis. Vision Res 15:515–525

Srinivasan MV, Laughlin SB, Dubs A (1982) Predictive coding: a fresh view of inhibition in the retina. Proc R Soc Lond B 216:427–459

Stavenga DG, Kuiper JW (1977) Insect pupil mechanisms. I. On the pigment migration in the retinula cells of Hymenoptera (suborder Apocrita). J Comp Physiol 113:55–72

Strausfeld NJ (1991) Structural organization of male-specific visual neurons in calliphorid optic lobe. J Comp Physiol A 169:379–393

Ugolini A, Fantini T, Innocenti R (2003) Orientation at night: an innate moon compass in sandhoppers (Amphipoda: Talitridae). Proc R Soc Lond B 270:279–281

van Hateren JH (1992) Real and optimal neural images in early vision. Nature 360:68–70

van Hateren JH (1993) Spatiotemporal contrast sensitivity of early vision. Vision Res 33:257–267

Walls GL (1942) The vertebrate eye and its adaptive radiation. The Cranbrook Press, Bloomfield Hills

Warrant EJ (1999) Seeing better at night: life style, eye design and the optimum strategy of spatial and temporal summation. Vision Res 39:1611–1630

Warrant EJ (2001) The design of compound eyes and the illumination of natural habitats. In: Barth FG, Schmid A (eds) Ecology of sensing. Springer, Berlin, pp 187–213

Weckström M, Laughlin SB (1995) Visual ecology and voltage-gated ion channels in insect photoreceptors. Trends Neurosci 18:17–21

Wehner R (1981) Spatial vision in arthropods. In: Autrum H (ed) Handbook of sensory physiology, vol VII/6C. Springer, Berlin, pp 287–616

Wehner R (1984) Astronavigation in insects. Annu Rev Entomol 29:277–298

Wehner R (1987) “Matched filters” – neural models of the external world. J Comp Physiol A 161:511–531

Wehner R, Labhart T (2006) Polarisation vision. In: Warrant EJ, Nilsson DE (eds) Invertebrate vision. Cambridge University Press, Cambridge, pp 291–348

Williams CB (1965) Insect migration. Collins, London

Williams DS (1983) Changes of photoreceptor performance associated with the daily turnover of photoreceptor membrane in locusts. J Comp Physiol 150:509–519

Zeil J (1983a) Sexual dimorphism in the visual system of flies: the compound eyes and neural superposition in Bibionidae (Diptera). J Comp Physiol 150:379–393

Zeil J (1983b) Sexual dimorphism in the visual system of flies: the free flight behaviour of male Bibionidae (Diptera). J Comp Physiol 150:395–412

Zeil J (2012) Visual homing: an insect perspective. Curr Opin Neurobiol 22:285–293

Zeil J, Hemmi JM (2006) The visual ecology of fiddler crabs. J Comp Physiol A 192:1–25

Zeil J, Nalbach G, Nalbach H-O (1986) Eyes, eye stalks and the visual world of semi-terrestrial crabs. J Comp Physiol A 159:801–811

Zeil J, Nalbach G, Nalbach H-O (1988) Spatial vision in a flat world: optical and neural adaptations in arthropods. In: Singh RH, Strausfeld N (eds) Neurobiology of sensory systems. Plenum Press, New York, pp 123–137

Acknowledgements

This review was written during 2015 while I was a Visiting Fellow at the Research School of Biology at the Australian National University in Canberra, Australia. I am deeply indebted to Prof. Jochen Zeil who generously hosted me in his research group and provided critical comments on the manuscript.

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Warrant, E.J. (2016). Matched Filtering and the Ecology of Vision in Insects. In: von der Emde, G., Warrant, E. (eds) The Ecology of Animal Senses. Springer, Cham. https://doi.org/10.1007/978-3-319-25492-0_6

DOI: https://doi.org/10.1007/978-3-319-25492-0_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-25490-6

Online ISBN: 978-3-319-25492-0

eBook Packages: Biomedical and Life Sciences (R0)