Abstract

The backtracking search algorithm (BSA) is a recently developed evolutionary algorithm for real-valued optimization problems. Its main feature vis-à-vis other known evolutionary algorithms is that it has a single control parameter. It has also been shown to exhibit better convergence behavior. In this chapter, the authors deal with the application of BSA to the optimal design of RF circuits, namely low-noise amplifiers. BSA performance, viz. robustness and speed, is checked against the widely used particle swarm optimization technique and other published approaches. ADS simulation results are given to show the viability of the obtained results.

Keywords

- Low Noise Amplifier (LNA)

- Particle Swarm Optimization Technique

- Backtracking Search Algorithm (BSA)

- Real-valued Optimization Problems

- Single Control Parameter

1 Introduction

Radio-frequency (RF) circuit design is a laborious, iterative task that mainly relies on the experience of skilled designers. The literature offers a plethora of papers dealing with techniques, approaches, and algorithms aimed at assisting the designer in this task; see for instance [22, 47].

Mathematical approaches have been used to alleviate the sizing task of such circuits, but it has been shown that classical approaches are ineffective on these NP-hard optimization problems [23].

Metaheuristics offer interesting and arguably efficient tools for overcoming the limitations of the classical techniques. Briefly, their stochastic nature ensures an ‘efficient’ sweep of large-dimension search spaces. Furthermore, metaheuristics can handle problems with several objectives as well as constrained ones [15, 51, 52, 57].

Evolutionary metaheuristics have been used to deal with the optimal design of RF circuits, as well as analog circuits, and a large number of algorithms have been tested [2, 19, 24, 25, 29, 32, 40–44, 47, 50, 53, 54].

Swarm intelligence (SI) techniques have also been used, such as particle swarm optimization (PSO) [20, 21, 38, 55, 56], ant colony optimization (ACO) [3, 5], and bacterial foraging optimization (BFO) [10, 31]. SI metaheuristics are nowadays widely adopted for solving similar optimization problems. It has been shown that, compared to well-known optimization algorithms, mainly genetic algorithms (GA) [26, 33] and simulated annealing (SA) [35], SI techniques can be more attractive because they can be more robust, faster, and require much less tuning of control parameters; see for instance [48].

Very recently, an enhanced evolutionary algorithm called the backtracking search optimization algorithm (BSA or BSOA) has been proposed. It has been shown, via mathematical test functions and a few engineering problems, that BSA offers superior qualities [11].

Thus, in this work, we put BSA to the test. It was used for the optimal sizing of low-noise amplifiers (LNAs), namely a UMTS LNA and a multistandard LNA.

BSA performance was compared with that obtained using the conventional PSO algorithm and with published results (for the same circuits) obtained using the ACO and BA-ACO techniques [42], as highlighted in the following sections.

The rest of this chapter is structured as follows. In Sect. 14.2, we offer a brief introduction to the considered RF circuits. In Sect. 14.3, the BSA technique is detailed, and a concise overview of the PSO technique is given. Section 14.4 presents the results obtained with BSA and compares them with the performances of the other techniques. ADS simulation results are also given in this section. Finally, Sect. 14.5 discusses the obtained results and concludes this chapter.

2 Low-Noise Amplifiers

Despite the tremendous efforts on RF circuit design automation, this realm remains very challenging. This is due to the complexity of the domain and its strong interaction with, and dependency on, other disciplines, as depicted in Fig. 14.1 [45].

It is to be stressed that one of the most difficult tasks in this design is the handling of various trade-offs, summarized by the well-known hexagon introduced in [45]; see Fig. 14.2.

The most important block of a front-end receiver is arguably the low-noise amplifier, whose principal role is to amplify the weak RF input signal fed from the external antenna with sufficient gain while adding as little noise as possible, hence its name [1].

Advances in CMOS technology have resulted in deep submicron transistors with high transit frequencies. Such advances have already been investigated for the design of CMOS RF circuits, particularly LNAs [39].

In this work, we deal with two CMOS LNAs, namely a wideband LNA and a multistandard LNA. Both architectures are chosen to allow comparison with already published work [4] regarding performance optimization, as detailed in Sect. 14.4.

- A multistandard LNA

The CMOS transistor-level schematic of the LNA is shown in Fig. 14.3. It is intended for multistandard applications in the frequency range 1.5–2.5 GHz [8].

In short, this LNA uses a cascode architecture to reduce the Miller effect and improve the reverse isolation. \( M_3 \), \( R_2 \), and \( R_1 \) form the biasing circuitry of the input transistor; \( L_2 \), \( C_1 \), and \( C_2 \) provide the input matching.

- A UMTS-dedicated LNA

Figure 14.4 presents a CMOS LNA whose topology was optimized for UMTS applications. \( R_1 \), \( R_2 \), and \( M_3 \) form the bias circuitry. \( M_2 \) forms the isolation stage between the input and the output of the circuit. \( L_L \), \( R_L \), and \( C_L \) form the circuit’s output impedance.

In Sect. 14.4, we deal with the optimal sizing of these circuits. The most important performances of such LNAs are considered, i.e., the voltage gain and the noise figure. Note that the voltage gain is handled via the scattering parameter ‘S21’ [8]. The corresponding expression (generated using a symbolic analyzer [18]), as well as the expressions of the noise figure and the input/output matching conditions, are not reproduced here; we refer the reader to [8] for details.

3 PSO and BSA Metaheuristics

As introduced in Sect. 14.1, metaheuristics exhibit a wide spectrum of advantages when compared to conventional mathematical optimization techniques. Metaheuristics are intrinsically stochastic techniques. They ensure random exploration of the parameter search space, which allows convergence to the neighborhood of the global optimum within a reasonable computing time. According to [49], the name ‘metaheuristics’ was attributed to nature-inspired algorithms by Fred Glover [28].

Genetic algorithms [26, 33], which belong to the family of evolutionary algorithms, are the oldest and best-known metaheuristics. A large number of GA variants have been proposed since the introduction of the basic GA (see for instance [15, 51]).

More recently, a new discipline called swarm intelligence (SI) was proposed. SI is an artificial reproduction of the collective behavior of individuals, based on decentralized control and self-organization [6].

A large number of such systems have been studied in swarm intelligence, such as schools of fish, flocks of birds, colonies of ants, and groups of bacteria, to name a few [7, 9, 27, 34, 46]. Nowadays, particle swarm optimization may be the best-known and most widely used technique, particularly in analog and RF circuit and system design; see for instance [13, 20, 21, 37, 48, 55].

More recently, a new improved variant of GA was proposed, called the backtracking search optimization algorithm (BSA) [11]. It offers some interesting features, mainly its robustness (vis-à-vis GA), its speed, and its low number of control parameters.

BSA is being used in the fields of analog and RF design; see for instance [14, 16, 17, 30, 36]. Here, BSA is used for optimizing the performances of both LNAs presented in Sect. 14.2.

As introduced above, PSO is widely used in design fields; it is therefore also considered here for comparison with BSA.

Furthermore, the obtained results are compared to those published in [3], which were obtained using the ant colony optimization (ACO) and backtracking ACO (BA-ACO) techniques.

- The PSO technique is inspired by the observation of the social behavior of animals, particularly birds and fish. It is a population-based approach with the particularity that the decision within the group is not centralized [12, 34]. In short, the PSO algorithm can be presented as follows.

The group, which is formed of potential solutions called particles, moves (flies) within the search hyperspace, seeking the best location of food (the best fitness value).

Movements of the group are guided by two quantities, the particle velocity and the particle position, which are updated according to Eqs. (14.1) and (14.2).

$$ \vec{v}_{i}(t+1) = \omega\,\vec{v}_{i}(t) + C_{1}\,\text{rand}(0,1)\left(\vec{x}_{P\text{best}\,i}(t) - \vec{x}_{i}(t)\right) + C_{2}\,\text{rand}(0,1)\left(\vec{x}_{G\text{best}\,i}(t) - \vec{x}_{i}(t)\right) \tag{14.1} $$

$$ \vec{x}_{i}(t+1) = \vec{x}_{i}(t) + \vec{v}_{i}(t+1) \tag{14.2} $$

where \( \vec{x}_{P\text{best}\,i} \) is the best position reached so far by particle i, and \( \vec{x}_{G\text{best}\,i} \) is the best position reached by the neighborhood of particle i.

\( \omega \), \( C_1\,\text{rand}(0,1) \), and \( C_2\,\text{rand}(0,1) \) are weighting coefficients. \( \omega \), known as the inertia weight, controls the diversification feature of the algorithm. It is a critical parameter that acts on the balance between diversification and intensification: a large value of \( \omega \) makes the algorithm unnecessarily slow, whereas small values of \( \omega \) promote the local search ability. \( C_1 \) and \( C_2 \), known as the cognitive parameter and the social parameter, respectively, control the intensification feature of the algorithm.

PSO algorithm is given in Fig. 14.5.
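As an illustration of Eqs. (14.1) and (14.2), the update rules can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the implementation used in this chapter: the whole swarm is taken as the neighborhood of each particle, the position update uses the freshly computed velocity (as is usual in PSO), positions are clamped to the search box, and the names `pso_step` and `pso_minimize` as well as the default values of `omega`, `c1`, and `c2` are illustrative choices.

```python
import random


def pso_step(x, v, p_best, g_best, omega=0.7, c1=1.5, c2=1.5, rng=random):
    """Apply the velocity and position updates once to every particle.

    x, v, p_best are lists of N particles, each a list of M floats;
    g_best is a single list of M floats (best position of the swarm).
    """
    new_x, new_v = [], []
    for xi, vi, pi in zip(x, v, p_best):
        vi_new = [
            omega * vij                          # inertia term
            + c1 * rng.random() * (pij - xij)    # cognitive pull (own best)
            + c2 * rng.random() * (gj - xij)     # social pull (swarm best)
            for xij, vij, pij, gj in zip(xi, vi, pi, g_best)
        ]
        new_v.append(vi_new)
        new_x.append([xij + vij for xij, vij in zip(xi, vi_new)])
    return new_x, new_v


def pso_minimize(f, lower, upper, n_particles=20, n_iter=200, seed=0):
    """Minimize f over the box [lower, upper]; returns (best_x, best_value)."""
    rng = random.Random(seed)
    dim = len(lower)
    x = [[lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
         for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p_best = [xi[:] for xi in x]
    p_val = [f(xi) for xi in x]
    for _ in range(n_iter):
        g_best = p_best[min(range(n_particles), key=p_val.__getitem__)]
        x, v = pso_step(x, v, p_best, g_best, rng=rng)
        # Assumed boundary rule: clamp positions to the search box.
        x = [[min(max(xij, lo), hi) for xij, lo, hi in zip(xi, lower, upper)]
             for xi in x]
        # Update each particle's personal best if it improved.
        for i, xi in enumerate(x):
            val = f(xi)
            if val < p_val[i]:
                p_best[i], p_val[i] = xi[:], val
    best = min(range(n_particles), key=p_val.__getitem__)
    return p_best[best], p_val[best]
```

A call such as `pso_minimize(lambda z: sum(t * t for t in z), [-5.0, -5.0], [5.0, 5.0])` minimizes a two-dimensional quadratic bowl; in a sizing problem, `f` would instead evaluate the circuit performance for a given parameter vector.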

As shown above, the PSO algorithm is simple to implement and computationally inexpensive: it is fast and does not require a large memory space. These properties underlie its popularity.

- BSA (or BSOA) is a new population-based evolutionary algorithm for the global minimization of real-valued numerical optimization problems [11]. BSA offers some enhancements over other evolutionary algorithms, mainly a reduced sensitivity to control parameters and improved convergence performance.

The classic genetic operators, namely selection, crossover, and mutation, are used in BSA, but in a novel way.

BSA encompasses five main processes: (i) initialization, (ii) selection ①, (iii) mutation, (iv) crossover, and (v) selection ②. The structure of BSA is simple, which gives it a low computational cost, high speed, and low memory requirements. Moreover, the power of BSA lies in its control of the search directions within the parameter hyperspace.

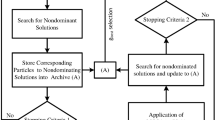

BSA algorithm is given in Fig. 14.6.

- Initialization: The population \( P = (p_{ij})_{(N,M)} \) is initialized via a uniform random selection of the particles’ values within the search hypervolume, as shown by expression (14.3):

$$ p_{ij} = p_{j\min} + \text{rand}(0,1)\,(p_{j\text{Max}} - p_{j\min}), \quad (i,j) \in \{1, \ldots, N\} \times \{1, \ldots, M\} \tag{14.3} $$

BSA benefits from the previous experiences of the particles; thus, it uses a memory where the best position visited so far by each particle is stored. The corresponding matrix, noted \( P_{\text{best}} = (p_{\text{best}\,ij})_{(N,M)} \), is initialized in the same way as matrix \( P \):

$$ p_{\text{best}\,ij} = p_{j\min} + \text{rand}(0,1)\,(p_{j\text{Max}} - p_{j\min}), \quad (i,j) \in \{1, \ldots, N\} \times \{1, \ldots, M\} \tag{14.4} $$

- Selection ① consists of the update of the \( P_{\text{best}} \) matrix.

- Mutation: A mutant matrix \( \text{MUTANT} = (\text{mutant}_{ij})_{(N,M)} \) is generated as shown in Eq. (14.5):

$$ \text{mutant}_{ij} = p_{ij} + \mathcal{F}\,(p_{\text{best}\,ij} - p_{ij}), \quad (i,j) \in \{1, \ldots, N\} \times \{1, \ldots, M\} \tag{14.5} $$

\( \mathcal{F} \) is a normally distributed factor that is used to control the search path, i.e., the direction.

- Crossover: It consists in generating a uniformly distributed integer-valued matrix \( \text{MAP} = (\text{map}_{ij})_{(N,M)} \). The values of the MAP elements are controlled via a strategy that defines the number of particle components that mutate; this number is set via the ‘dimension-rate’ coefficient. Matrix MAP is then used to determine which components of matrix \( P \) are handled, which yields the offspring matrix.

- Selection ② consists of the update of the trial population via the \( P_{\text{best}} \) matrix.
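The five processes listed above can be sketched in plain Python as follows. The text does not spell out every rule, so several details are assumptions borrowed from the usual presentation of BSA [11]: in selection ① the memory matrix is refreshed at random with the current population and its rows are shuffled, the factor \( \mathcal{F} \) is drawn as three times a standard normal variate, out-of-range components are resampled uniformly, and selection ② is greedy (a trial individual replaces its parent only if it is better). The names `bsa_minimize` and `dim_rate` are illustrative.

```python
import math
import random


def bsa_minimize(f, lower, upper, n_pop=20, n_gen=300, dim_rate=1.0, seed=0):
    """Minimize f over the box [lower, upper]; returns (best_x, best_value)."""
    rng = random.Random(seed)
    m = len(lower)

    def sample():
        # Uniform draw inside the search box, as in Eqs. (14.3) and (14.4).
        return [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]

    # Initialization of the population P and of the memory matrix P_best.
    p = [sample() for _ in range(n_pop)]
    p_best = [sample() for _ in range(n_pop)]
    fit = [f(ind) for ind in p]

    for _ in range(n_gen):
        # Selection 1 (assumed rule): sometimes copy P into the memory,
        # then shuffle the rows of the memory.
        if rng.random() < rng.random():
            p_best = [ind[:] for ind in p]
        rng.shuffle(p_best)

        # Mutation, Eq. (14.5); scale = 3 * N(0, 1) is an assumed choice.
        scale = 3.0 * rng.gauss(0.0, 1.0)
        mutant = [[pij + scale * (bij - pij) for pij, bij in zip(pi, bi)]
                  for pi, bi in zip(p, p_best)]

        # Crossover strategy: either up to dim_rate * m components are
        # taken from the mutant, or exactly one component.
        many = rng.random() < rng.random()
        for i in range(n_pop):
            if many:
                k = min(m, math.ceil(dim_rate * rng.random() * m))
                picked = set(rng.sample(range(m), k))
            else:
                picked = {rng.randrange(m)}
            trial = [mutant[i][j] if j in picked else p[i][j]
                     for j in range(m)]
            # Assumed boundary control: resample out-of-range components.
            trial = [t if lo <= t <= hi else lo + rng.random() * (hi - lo)
                     for t, lo, hi in zip(trial, lower, upper)]
            # Selection 2 (assumed greedy rule).
            val = f(trial)
            if val < fit[i]:
                p[i], fit[i] = trial, val

    best = min(range(n_pop), key=fit.__getitem__)
    return p[best], fit[best]
```

In this sketch `dim_rate` is the only algorithm-specific control parameter; the population size and the number of generations are ordinary budget settings.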

4 Experimental Results and Comparisons

In this section, we first deal with the application of the BSA technique to some mathematical test functions and compare the results with those obtained by means of PSO regarding robustness and algorithm execution time. Then, we consider the case of both LNAs introduced in Sect. 14.2. Note that a PC with a Core™2 Duo T5800 processor (2 MB cache, 2.00 GHz) and 4.00 GB of memory was used for that purpose.

- Test functions

Five test functions were considered: DeJong’s, Easom 2D, Griewank, Parabola, and Rosenbrock.

The corresponding expressions are given by (14.6)–(14.10), respectively. Figure 14.7 shows a plot of these functions.
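Expressions (14.6)–(14.10) are not reproduced in this text. For orientation only, the sketch below gives the forms under which such benchmark functions are commonly defined in the literature; the variants actually used in this chapter may differ, for instance in scaling or offsets. In particular, the sphere form is what the names ‘De Jong’ (first function) and ‘Parabola’ often denote, and its use for either name here is an assumption.

```python
import math


def sphere(x):
    """Sum of squares; common form of De Jong's first function."""
    return sum(t * t for t in x)


def easom_2d(x):
    """Two-dimensional Easom function: a narrow well around (pi, pi)."""
    x1, x2 = x
    return (-math.cos(x1) * math.cos(x2)
            * math.exp(-((x1 - math.pi) ** 2 + (x2 - math.pi) ** 2)))


def griewank(x):
    """Griewank function: a quadratic bowl modulated by a cosine product."""
    s = sum(t * t for t in x) / 4000.0
    p = math.prod(math.cos(t / math.sqrt(i)) for i, t in enumerate(x, start=1))
    return 1.0 + s - p


def rosenbrock(x):
    """Rosenbrock function: a curved valley; x must be a list or tuple."""
    return sum(100.0 * (b - a * a) ** 2 + (1.0 - a) ** 2
               for a, b in zip(x, x[1:]))
```

Each of these forms has a known global minimum: 0 at the origin for the sphere and Griewank functions, 0 at (1, …, 1) for Rosenbrock, and −1 at (π, π) for Easom.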

Both algorithms, i.e., PSO and BSA, were run 100 times. The algorithms’ parameters are given in Table 14.1.

Figure 14.8 gives box-and-whisker plots of the results of the 100 executions of both algorithms.

Table 14.2 gives the mean execution time of both algorithms with respect to the five considered functions.
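The protocol described above (repeated independent runs, a box-and-whisker summary of the results, and a mean execution time) can be sketched as follows. The `random_search` routine is only a placeholder optimizer that keeps the example self-contained; it is neither PSO nor BSA, and all names are illustrative.

```python
import random
import statistics
import time


def random_search(f, lower, upper, n_eval=2000, seed=0):
    """Placeholder optimizer: best value among n_eval uniform samples."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(n_eval):
        x = [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        best = min(best, f(x))
    return best


def benchmark(optimizer, f, lower, upper, n_runs=100):
    """Run the optimizer n_runs times with different seeds and summarize."""
    values, times = [], []
    for run in range(n_runs):
        start = time.perf_counter()
        values.append(optimizer(f, lower, upper, seed=run))
        times.append(time.perf_counter() - start)
    # Quartiles are the statistics a box-and-whisker plot is built from.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "min": min(values),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(values),
        "mean_time_s": statistics.mean(times),
    }
```

A narrow interquartile range (`q3 - q1`) over the runs indicates a robust algorithm, while `mean_time_s` corresponds to the kind of figure reported as mean execution time.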

- LNAs

The PSO and BSA algorithms were used for optimally sizing both LNAs presented in Sect. 14.2, under the same conditions as above. Tables 14.3 and 14.4 give the circuits’ optimized parameters. Moreover, simulations were performed using ADS software to check the viability of these results. The obtained performances are given in Tables 14.5 and 14.6. In addition, these tables present the results published in [4], obtained by application of the ACO and BA-ACO techniques. The mean execution times for both problems are given in Table 14.7. Robustness results are shown in Figs. 14.9 and 14.10.

Simulation results obtained using the ‘a priori’ optimal parameters for both circuits are depicted in Figs. 14.11–14.26.

5 Discussion and Conclusion

This chapter investigated the application of BSA, a very recently proposed EA technique, to the resolution of RF sizing problems. For comparison, the PSO technique was also applied to optimize these circuits (namely two LNAs). Furthermore, the obtained performances were compared with already published results dealing with the same circuits but using ACO and BA-ACO. Both algorithms were also applied to some test functions.

The obtained results show that BSA outperforms the other optimization techniques in terms of computing time. However, PSO was found to be relatively more robust. Nonetheless, the speed of BSA and its good performances make it an interesting technique to consider within a computer-aided design approach or tool.

References

Allstot, D.J., Choi, K., Park, J. (eds.): Parasitic-Aware Optimization of CMOS RF Circuits. Kluwer academic publishers, New York (2003)

Barros, M., Guilherme, J., Horta, N.: Analog Circuits and Systems Optimization Based on Evolutionary Computation Techniques. Springer, New York (2010)

Benhala, B., Ahaitouf, A., Kotti, M., Fakhfakh, M., Benlahbib, B., Mecheqrane, A., Loulou, M., Abdi, F., Abarkane, E.: Application of the ACO technique to the optimization of analog circuit performances. In: Tlelo-Cuautle, E. (ed.) Analog Circuits: Applications, Design and Performances, pp. 235–255. Nova Science Publishers Inc, New York (2011)

Benhala, B., Kotti, M., Ahaitouf, A., Fakhfakh, M.: Backtracking ACO for RF-circuit design optimization. In: Fakhfakh, M., et al. (eds.) Performance Optimization Techniques in Analog, Mixed-Signal, and Radio-Frequency Circuit Design, pp. 1–22. IGI-Global, USA (2014)

Benhala, B., Bouattane, O.: GA and ACO techniques for the analog circuits design optimization. J. Theor. Appl. Inf. Technol. 64(2), 413–419 (2014)

Blum, C., Merkle, D. (eds.): Swarm intelligence: introduction and applications. Springer, New York (2008)

Bonabeau, E., Theraulaz, G., Dorigo, M.: Swarm intelligence: from natural to artificial systems. Oxford University Press, New York (1999)

Boughariou, M., Fakhfakh, M., Loulou, M.: Design and optimization of LNAs through the scattering parameters. In: The IEEE Mediterranean Electrotechnical Conference, Malta, 26–28 Apr 2010

Chan, F.T.S., Tiwari, M.K.: Swarm Intelligence: Focus on Ant and Particle Swarm Optimization. I-Tech education and publishing, Croatia (2007)

Chatterjee, A., Fakhfakh, M., Siarry, P.: Design of second-generation current conveyors employing bacterial foraging optimization. Microelectron. J. 41(10), 616–626 (2010)

Civicioglu, P.: Backtracking search optimization algorithm for numerical optimization problems. Appl. Math. Comput. 219(15), 8121–8144 (2013)

Clerc, M.: Particle Swarm Optimization. ISTE Ltd., London (2006)

Datta, R., Dutta, A., Bhattacharyya, T.K.: PSO-based output matching network for concurrent dual-band LNA. In: International Conference on Microwave and Millimeter Wave Technology, Chengdu, China, 8–11 May 2010

de Sá, A.O., Nedjah, N., Mourelle, L.-M.: Genetic and backtracking search optimization algorithms applied to localization problems. In: Murgante, B. et al. (eds.) Computational Science and Its Applications. Lecture notes in computer science (8583), pp. 738–746. Springer, Heidelberg (2014)

Dréo, J., Pétrowski, A., Siarry, P., Taillard, E.: Metaheuristics for Hard Optimization: Methods and Case Studies. Springer, New York (2006)

Duan, H., Luo, Q.: Adaptive backtracking search algorithm for induction magnetometer optimization. IEEE Trans. Magn. 50(12), 1–6 (2014)

El-Fergany, A.: Optimal allocation of multi-type distributed generators using backtracking search optimization algorithm. Electr. Power Energy Syst. 64, 1197–1205 (2015)

Fakhfakh, M., Loulou, M.: Live demonstration: CASCADES. 1: a flow-graph-based symbolic analyzer. In: The IEEE International Symposium on Circuits and Systems, Paris, France, 30 May 2010–02 June 2010

Fakhfakh, M., Sallem, A., Boughariou, M., Bennour, S., Bradai, E., Gaddour, E., Loulou, M.: Analogue circuit optimization through a hybrid approach. In: Koeppen, M., Schaefer, G., Abraham, A. (eds.) Intelligent Computational Optimization in Engineering: Techniques and Applications, pp. 297–327. Springer, New York (2010)

Fakhfakh, M., Cooren, Y., Sallem, A., Loulou, M., Siarry, P.: Analog circuit design optimization through the particle swarm optimization technique. Analog Integr. Circ. Sig. Process 63(1), 71–82 (2010)

Fakhfakh, M., Siarry, P.: MO-TRIBES for the optimal design of analog filters. In: Fornarelli, G., Mescia, L. (eds.) Swarm Intelligence for Electric and Electronic Engineering, pp. 57–70. IGI-Global, USA (2013)

Fakhfakh, M., Tlelo-Cuautle, E., Castro-Lopez, R. (eds.): Analog/RF and Mixed-Signal Circuit Systematic Design. Springer, New York (2013)

Fakhfakh, M., Tlelo-Cuautle, E., Fino, M.H. (eds.): Performance Optimization Techniques in Analog, Mixed-Signal, and Radio-Frequency Circuit Design. IGI-Global, Hershey, Pennsylvania (2014)

Fallahpour, M.B., Hemmati, K.D., Pourmohammad, A.: Optimization of a LNA using genetic algorithm. Electr. Electron. Eng. 2(2), 38–42 (2012)

Fathianpour, A., Seyedtabaii, S.: Evolutionary search for optimized LNA components geometry. J. Circ. Syst. Comput. 23(1), 1–16 (2014)

Fisher, R.A.: The Genetical Theory of Natural Selection. Oxford University Press, New York (1958)

Fornarelli, G., Mescia, L.: Swarm Intelligence for Electric and Electronic Engineering. IGI-Global, USA (2013)

Glover, F.: http://leeds-faculty.colorado.edu/glover/. Accessed Nov 2014

Grimbleby, J.B.: Automatic analogue circuit synthesis using genetic algorithms. IEE Proc. Circ. Devices Syst. 147(6), 319–323 (2000)

Guney, K., Durmus, A., Basbug, S.: Backtracking search optimization algorithm for synthesis of concentric circular antenna arrays. Int. J. Antennas Propag. 2014, 1–11 (2014)

Gupta, N., Saini, G.: Performance analysis of BFO for PAPR reduction in OFDM. Int. J. Soft Comput. Eng. 2(5), 127–133 (2012)

Hemmati, K.D., Fallahpour, M.B., Golmakani, A.: Design and optimization of a new LNA. Majlesi J. Telecommun. Devices 1(3), 91–96 (2012)

Holland, J.H.: Hidden Order: How Adaptation Builds Complexity. Addison-Wesley, Redwood City (1995)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: IEEE International Conference on Neural Networks, Perth, 27 Nov 1995–01 Dec 1995

Kirkpatrick, S., Gelatt, C.D., Vecchi, M.P.: Optimization by simulated annealing. Science 220, 671–680 (1983)

Kolawole, S.O., Duan, H.: Backtracking search algorithm for non-aligned thrust optimization for satellite formation. In: The IEEE International Conference on Control and Automation, Taichung, Taiwan, 18–20 June 2014

Kotti, M., Sallem, A., Bougharriou, M., Fakhfakh, M., Loulou M.: Optimizing CMOS LNA circuits through multi-objective meta-heuristics. The international workshop on symbolic and numerical methods, modeling and applications to circuit design, Gammarth, Tunisia, 4–6 Oct 2010

Kumar, P., Duraiswamy, K.: An optimized device sizing of analog circuits using particle swarm optimization. J. Comput. Sci. 8(6), 930–935 (2012)

Li, Y., Yu, S.-M., Li, Y.-L.: A simulation-based hybrid optimization technique for low noise amplifier design automation. In: Shi, Y., van Albada, G.D., Dongarra, J., Sloot, P.M.A. (eds.) Proceedings of the 7th International Conference on Computational Science, Part IV: ICCS 2007, May 2007. Lecture Notes in Computer Science, vol. 4490, pp. 259–266. Springer, Heidelberg (2007)

Li, Y.: A simulation-based evolutionary approach to LNA circuit design optimization. Appl. Math. Comput. 209(1), 57–67 (2009)

Li, Y.: Simulation-based evolutionary method in antenna design optimization. Math. Comput. Model. 51(7–8), 944–955 (2010)

Liu, Z., Liu, T., Gao, X.: An improved ant colony optimization algorithm based on pheromone backtracking. In: The IEEE International Conference on Computational Science and Engineering, Dalian, China, 24–26 Aug 2011

Lourenço, N., Martins, R., Barros, M., Horta, N.: Analog circuit design based on robust POFs using an enhanced MOEA with SVM models. In: Fakhfakh, M., Tlelo-Cuautle, E., Castro-Lopez, R. (eds.) Analog/RF and Mixed-Signal Circuit Systematic Design. Springer, New Jersey (2013)

Neoh, S.C., Marzuki, A., Morad, N., Lim, C.P., Abdul Aziz, Z.: An interactive evolutionary algorithm for MMIC low noise amplifier design. ICIC Express Lett. 3(1), 15–19 (2009)

Razavi, B.: RF Microelectronics. Prentice Hall, Canada (2012)

Reyes-Sierra, M., Coello-Coello, C.A.: Multi-objective particle swarm optimizers: a survey of the state-of-the-art. Int. J. Comput. Intell. Res. 2(3), 287–308 (2006)

Roca, E., Fakhfakh, M., Castro-Lopez, R., Fernandez, F.V.: Applications of evolutionary computation techniques to analog, mixed-signal and RF circuit design—an overview. In: The IEEE International Conference on Electronics, Circuits, and Systems, Yasmine Hammamet, Tunisia, 13–16 Dec 2009

Sallem, A., Benhala, B., Kotti, M., Fakhfakh, M., Ahaitouf, A., Loulou, M.: Application of swarm intelligence techniques to the design of analog circuits: evaluation and comparison. Analog Integr. Circ. Sig. Process 75(3), 499–516 (2013)

Scholarpedia.org http://www.scholarpedia.org/article/Metaheuristic_Optimization. Accessed Nov 2014

Shin, L.W., Chin, N.S, Marzuki, A.: 5 GHz MMIC LNA design using particle swarm optimization. Inf. Manag. Bus. Rev. 5(6), 257–262 (2013)

Siarry, P., Michalewicz, Z.: Advances in Metaheuristics for Hard Optimization. Springer, New York (2007)

Siarry, P. (ed.): Heuristics, Theory and Applications. Nova publisher, USA (2013)

Silva, L.G., Jr A.C.S., da Silva S.C.H.: Development of tri-band RF filters using evolutionary strategy. AEU Int. J. Electron. Commun. 68(12), 1156–1164 (2014)

Sönmez, Ö.S., Dündar, G.: Simulation-based analog and RF circuit synthesis using a modified evolutionary strategies algorithm. Integr. VLSI J. 44(2), 144–154 (2011)

Tripathi, J.N., Mukherjee, J., Apte, P.R.: Design automation, modeling, optimization, and testing of analog/RF circuits and systems by particle swarm optimization. In: Fornarelli, G., Mescia, L. (eds.) Swarm Intelligence for Electric and Electronic Engineering, pp. 57–70. IGI-Global, USA (2013)

Ushie, O.J., Abbod, M.: Intelligent optimization methods for analogue electronic circuits: GA and PSO case study. In: The International Conference on Machine Learning, Electrical and Mechanical Engineering, Dubai 8–9 Jan 2014

Valadi, J., Siarry, P. (eds.): Applications of Metaheuristics in Process Engineering. Springer, New York (2014)

© 2015 Springer International Publishing Switzerland

Cite this chapter

Garbaya, A., Kotti, M., Fakhfakh, M., Siarry, P. (2015). The Backtracking Search for the Optimal Design of Low-Noise Amplifiers. In: Fakhfakh, M., Tlelo-Cuautle, E., Siarry, P. (eds) Computational Intelligence in Analog and Mixed-Signal (AMS) and Radio-Frequency (RF) Circuit Design. Springer, Cham. https://doi.org/10.1007/978-3-319-19872-9_14

DOI: https://doi.org/10.1007/978-3-319-19872-9_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-19871-2

Online ISBN: 978-3-319-19872-9

eBook Packages: Computer Science, Computer Science (R0)