Abstract

The age of multi-core computers is upon us, yet current programming languages, typically designed for single-core computers and adapted post hoc for multi-cores, remain tied to the constraints of a sequential mindset and are thus in many ways inadequate. New programming language designs are required that break away from this old-fashioned mindset. To address this need, we have been developing a new programming language called Encore, in the context of the European Project UpScale. The paper presents a motivation for the Encore language, examples of its main constructs, several larger programs, a formalisation of its core, and a discussion of some future directions our work will take. The work is ongoing and we started more or less from scratch. That means that a lot of work has to be done, but also that we need not be tied to decisions made for sequential language designs. Any design decision can be made in favour of good performance and scalability. For this reason, Encore offers an interesting platform for future exploration into object-oriented parallel programming.

Partly funded by the EU project FP7-612985 UpScale: From Inherent Concurrency to Massive Parallelism through Type-based Optimisations (http://www.upscale-project.eu).


1 Introduction

Nowadays the most feasible way for hardware manufacturers to produce processors with higher performance is by putting more parallel cores onto a single chip. This means that virtually every computer produced these days is a parallel computer. This trend is only going to continue: machines sitting on our desks are already parallel computers, and massively parallel computers will soon be readily at our disposal.

Most current programming languages were defined to be sequential-by-default and do not always address the needs of the multi-core era. Writing parallel programs in these languages is often difficult and error prone due to race conditions and the challenges of exploiting the memory hierarchy effectively. But because every computer will be a parallel computer, every programmer needs to become a parallel programmer supported by general-purpose parallel programming languages. A major challenge in achieving this is supporting scalability, that is, allowing execution times to remain stable as both the size of the data and the number of available parallel cores increase, without obfuscating the code with arbitrarily complex synchronisation or memory layout directives.

To address this need, we have been developing the parallel programming language Encore in the context of the European Project UpScale. The project has one ambitious goal: to develop a general purpose parallel programming language (in the object-oriented vein) that supports scalable performance. Because message-based concurrency is inherently more scalable, UpScale takes actor-based concurrency, asynchronous communication, and guaranteed race freedom as the starting points in the development of Encore.

Encore is based on (at least) four key ingredients: active object parallelism for coarse-grained parallelism, unshared local heaps to avoid race conditions and promote locality, capabilities for concurrency control to enable safe sharing, and parallel combinators for expressing high-level coordination of active objects and low-level data parallelism. The model of active object parallelism is based on that of languages such as Creol [21] and ABS [20]. It requires sequentialised execution inside each active object, but parallel execution of different active objects in the system. The core of the local heaps model is a careful treatment of references to passive objects so that they remain within an active object boundary. This is based on Joëlle [13] and involves so-called sheep cloning [11, 29] to copy arguments passed to methods of other active objects. Sheep cloning is a variant of deep cloning that does not clone references to futures and active objects. Capabilities allow these restrictions to be lifted in various ways to help unhinge internal parallelism while still guaranteeing race free execution. This is done using type-based machinery to ensure safe sharing, namely that no unsynchronised mutable object is shared between two different active objects. Finally, Encore includes parallel combinators, which are higher-order coordination primitives, derived from Orc [22] and Haskell [30], that sit both on top of objects providing high-level coordination and within objects providing low-level data parallelism.
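The difference between sheep cloning and ordinary deep cloning can be sketched outside Encore. The following Python fragment is only an illustration under stated assumptions: the marker class ActiveObject, the function sheep_clone, and the example classes Logger and Order are hypothetical names, and Python's Future stands in for an Encore future. Passive data is copied recursively, while references to active objects and futures are passed through unchanged.

```python
from concurrent.futures import Future


class ActiveObject:
    """Hypothetical marker base class standing in for an active object."""


def sheep_clone(value, _memo=None):
    """Deep-copy passive data but share active objects and futures by reference."""
    memo = {} if _memo is None else _memo
    if isinstance(value, (ActiveObject, Future)):
        return value                       # never cloned, only the reference is copied
    if isinstance(value, (int, float, str, bool, type(None))):
        return value                       # immutable primitives need no copy
    if id(value) in memo:
        return memo[id(value)]             # preserve aliasing and handle cycles
    if isinstance(value, list):
        clone = []
        memo[id(value)] = clone
        clone.extend(sheep_clone(item, memo) for item in value)
        return clone
    if isinstance(value, dict):
        clone = {}
        memo[id(value)] = clone
        for key, item in value.items():
            clone[key] = sheep_clone(item, memo)
        return clone
    if not hasattr(value, "__dict__"):
        return value                       # simplification: other values are treated as immutable
    clone = object.__new__(type(value))    # passive object: copy field by field
    memo[id(value)] = clone
    for name, field in vars(value).items():
        setattr(clone, name, sheep_clone(field, memo))
    return clone


class Logger(ActiveObject):
    pass


class Order:                               # plays the role of a passive class
    def __init__(self, items, logger, receipt):
        self.items = items
        self.logger = logger
        self.receipt = receipt


order = Order(["tea", "milk"], Logger(), Future())
copy_of_order = sheep_clone(order)
```

After the call, copy_of_order has its own items list, but its logger and receipt fields refer to the same active object and future as the original.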

This work describes the Encore language in a tutorial fashion, covering coarse-grained parallel computations expressible using active objects, and fine-grained computations expressible using higher-order functions and parallel combinators. We describe how these integrate together in a safe fashion using capabilities and present a formalism for a core fragment of Encore.

Currently, the work on Encore is ongoing and our compiler already achieves good performance on some benchmarks. Development started more or less from scratch, which means not only that we have to build a lot of infrastructure, but also that we are free to experiment with different implementation possibilities and choose the best one. We can modify anything in the software stack, such as the memory allocation strategy, and information collected about the program at higher levels can readily be carried down to lower levels. Contrast this with languages compiled to the Java VM: source level information is effectively lost in translation and VMs typically do not offer much support in controlling memory layout, etc.

Encore has only been under development for about a year and a half; consequently, anything in the language design and its implementation can change. This tutorial therefore can only give a snapshot of what Encore aspires to be.

Structure. Section 2 covers the active object programming model. Section 3 gently introduces Encore. Section 4 discusses active and passive classes in Encore. Section 5 details the different kinds of methods available. Section 6 describes futures, one of the key constructs for coordinating active objects. Section 7 enumerates many of the commonplace features of Encore. Section 8 presents a stream abstraction. Section 9 proposes parallel combinators as a way of expressing bulk parallel operations. Section 10 advances a particular capability system as a way of avoiding data races. Section 11 illustrates Encore in use via examples. Section 12 formalises a core of Encore. Section 13 explores some related work. Finally, Sect. 14 concludes.

2 Background: Active Object-Based Parallelism

Encore is an active object-based parallel programming language. Active objects (Fig. 1), and their close relation, actors, are similar to regular object-oriented objects in that they have a collection of encapsulated fields and methods that operate on those fields, but the concurrency model is quite different from what is found in, for example, Java [16]. Instead of threads trampling over all objects, hampered only by the occasional lock, the active-object model associates a thread of control with each active object, and this thread is the only one able to access the active object’s fields. Active objects communicate with each other by sending messages (essentially method calls). The messages are placed in a queue associated with the target of the message. The target active object processes the messages in the queue one at a time. Thus at most one method invocation is active at a time within an active object.

Method calls between active objects are asynchronous. This means that when an active object calls a method on another active object, the method call returns immediately—though the method does not run immediately. The result of the method call is a future, which is a holder for the eventual result of the method call. The caller can do other work immediately, and when it needs the result of the method call, it can get the value from the future. If the value is not yet available, the caller blocks.
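The model described above can be approximated in a few lines of Python. This is a minimal sketch, not Encore code and not the Encore run-time: the class ActiveCounter and its methods are hypothetical, a standard-library thread plays the role of the object's thread of control, and concurrent.futures.Future plays the role of an Encore future.

```python
import queue
import threading
from concurrent.futures import Future


class ActiveCounter:
    """Hypothetical active object: one thread owns the state and drains a message queue."""

    def __init__(self):
        self._count = 0                     # touched only by the object's own thread
        self._mailbox = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        # Messages are processed one at a time, so at most one method is active.
        while True:
            message = self._mailbox.get()
            if message is None:             # shutdown sentinel
                break
            method, args, future = message
            try:
                future.set_result(method(*args))
            except Exception as error:
                future.set_exception(error)

    def _send(self, method, *args):
        future = Future()
        self._mailbox.put((method, args, future))
        return future                       # returned before the method has run

    def add(self, amount):
        """Asynchronous method call: enqueue a message and return a future."""
        return self._send(self._add, amount)

    def stop(self):
        self._mailbox.put(None)
        self._thread.join()

    def _add(self, amount):
        # Method body, executed by the object's own thread.
        self._count += amount
        return self._count


counter = ActiveCounter()
futures = [counter.add(1) for _ in range(100)]   # 100 asynchronous calls
total = futures[-1].result()                     # the caller blocks only here
counter.stop()
```

Because a single thread processes the queue in order, the field _count needs no lock even though the calls are issued without waiting for each other.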

Futures can be passed around, blocked on (in various ways), or have additional functionality chained on them. This last feature, available in JavaScript for instance, allows the programmer to chain multiple asynchronous computations together in a way that makes the program easy to understand by avoiding callbacks.

Actors are a similar model to active objects (though often the terminology for describing them differs). Two features are more commonly associated with active objects. Firstly, active objects are constructed from (active) classes, which typically are composed using inheritance and other well-known object-oriented techniques. This arguably makes active objects easier to program with as they are closer to what many programmers are used to. Secondly, message sends (method calls) in active objects generally return a result, via a future, whereas message sends in actors are one-way and results are obtained via a callback. Futures are thus key to making asynchronous calls appear synchronous and avoid the inversion of control associated with callbacks.

Weakness of Active Objects. Although active objects have been selected as the core means for expressing parallel computation in Encore, the model is not without limitations. Indeed, much of our research will focus on ways of overcoming these.

Although futures alleviate the problem of inversion of control described above, they are not without cost. Waiting on a future that has not been fulfilled can be expensive as it involves blocking the active object’s thread of control, which may in turn cause other calls that depend on the active object to block. Indeed, the current implementation of blocking on a future in Encore is costly.

A second weakness of active objects is that, at least in the original model, it is impossible to execute multiple concurrent method invocations within an active object, even if these method invocations would not interfere. Some solutions to this problem have been proposed [19] allowing a handful of method invocations to run in parallel, but these approaches do not unleash vast amounts of parallelism and they lack any means for structuring and composing the non-interfering method invocations. For scalability, something more is required. Our first ideas in this direction are presented in Sect. 9.

3 Hello Encore

Encore programs are stored in files with the suffix .enc by convention and are compiled to C. The generated C code is compiled and linked with the Encore run-time system, which is also written in C. The compiler itself is written in Haskell, and the generated C is quite readable, which significantly helps with debugging.

To get a feeling for how Encore programs look, consider the following simple program (in file hello.enc) that prints “Hello, World!” to standard output.

The code is quite similar to modern object-oriented programming languages such as Scala, Python or Ruby. It is statically typed, though many type annotations can be omitted, and, in many cases, the curly braces { and } around classes, method bodies, etc. can also be omitted.

Ignoring the first line for now, this file defines an active class Main that has a single method main that specifies its return type as void. The body of the method calls print on the string “Hello, World!”, and the behaviour is as expected.

Every legal Encore program must have an active class called Main, with a method called main; this is the entry point to an Encore program. The run-time allocates one object of class Main and begins execution in its main method.

The first line of hello.enc is optional and allows the compiler to automatically compile and run the program (on Unix systems such as Mac OS X). The file hello.enc has to be runnable, which is done by executing chmod u+x hello.enc in the shell. After making the program executable, entering ./hello.enc in the shell compiles and executes the generated binary, as follows:

An alternative to the #! /usr/bin/env encorec -run line is to call the compiler directly, and then run the executable:

4 Classes

Encore offers both active and passive classes. Instances of active classes, that is, active objects, have their own thread of control and message queue (cf. Sect. 2). Making all objects active would surely consume too many system resources and make programming difficult, so passive objects are also included in Encore. Passive objects, instances of passive classes, do not have a thread of control. Passive classes are thus analogous to (unsynchronised) classes in mainstream object-oriented languages like Java or Scala. Classes are active by default: class A. The keyword passive added to a class declaration makes the class passive: passive class P. Valid class names must start with an uppercase letter. (Type parameters start with a lower case letter.) Classes in Encore have fields and methods; there is a planned extension to include traits and interfaces integrating with capabilities (cf. Sect. 10).

A method call on an active object will result in a message being placed in the active object’s message queue, and the method invocation possibly runs in parallel with the caller. The method call immediately results in a future, which will hold the eventual result of the invocation (cf. Sect. 6). A method call on a passive object will be executed synchronously by the calling thread of control.

4.1 Object Construction and Constructors

Objects are created from classes using new, the class name and an optional parameter list: new Foo(42). The parameter list is required if the class has an init method, which is used as the constructor. This constructor method cannot be called on its own in other situations.

4.2 Active Classes

The following example illustrates active classes. It consists of a class Buffer that wraps a Queue data structure constructed using passive objects (omitted). The active object provides concurrency control to protect the invariants of the underlying queue, enabling the data structure to be shared. (In this particular implementation, taking an element from the Buffer is implemented using suspend semantics, which is introduced in Sect. 7.)

Fields of an active object are private; they can only be accessed via this, so the field queue of Buffer is inaccessible to an object holding a reference to a Buffer object.

4.3 Passive Classes

Passive classes in Encore correspond to regular (unsynchronised) classes in other languages. Passive classes are used for representing the state of active objects and data passed between active objects. Passive classes are indicated with the keyword passive.

In passive classes, all fields are public:

4.4 Parametric Classes

Classes can take type parameters. This allows, for example, parameterised pairs to be implemented:

This class can be used as follows:

Currently, type parameters are unbounded in Encore, but this limitation will be removed in the future.

4.5 Traits and Inheritance

Encore is being extended with support for traits [14] to be used in place of standard class-based inheritance. A trait is a composable unit of behaviour that provides a set of methods and requires a set of fields and methods from any class that wishes to include it. The exact nature of Encore traits has not yet been decided at time of writing.

A class may be self-contained, which is the case for classes shown so far and most classes shown in the remainder of this document, or be constructed from a set of pre-existing traits. The inclusion order of traits is insignificant, and multiple ways to combine traits are used by the type system to reason about data races (cf. Sect. 10). Below, the trait Comparator implementation requires that the including class defines a cmp method, and provides five more high-level methods all relying on the required method.

Traits enable trait-based polymorphism—it is possible, for instance, to write a method that operates on any object whose class includes the Comparator trait:

For more examples of traits, see Sect. 10.

5 Method Calls

Method calls may run asynchronously (returning a future) or synchronously depending primarily on whether the target is active or passive. The complete range of possibilities is given in the following table:

| | Synchronous | Asynchronous |
|---|---|---|
| Active objects | get o.m() | o.m() |
| Passive objects | o.m() | — |
| this (in Active) | this.m() | let that = this in that.m() |

Self calls on active objects can be run synchronously—the method called is run immediately—or asynchronously—a future is immediately returned and the invocation is placed in the active object’s queue.

Sometimes the result of an asynchronous method call is not required, and savings in time and resources can be gained by not creating the data structure implementing the future. To inform the compiler of this choice, the . in the method call syntax is replaced by a !, as in the following snippet:

6 Futures

Method calls on active objects run asynchronously, meaning that the method call is run potentially by a different active object and that the current active object does not wait for the result. Instead of returning a result of the expected type, the method call returns an object called a future. If the return type of the method is t, then a value of type Fut t is returned to the caller. A future of type Fut t is a container that at some point in the future will hold a value of type t, typically when some asynchronous computation finishes. When the asynchronous method call finishes, it writes its result to the future, which is said to be fulfilled. Futures are considered first class citizens, and can be passed to and returned from methods, and stored in data types. Holding a value of type Fut t gives a hint that there is some parallel computation going on to fulfil this future. This view of a future as a handle to a parallel computation is exploited further in Sect. 9.

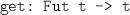

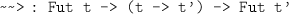

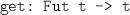

Several primitive operations are available on futures:

- get: waits for the future to be fulfilled, blocking the current active object until it is; returns the value stored in the future.

- await: waits for the future to be fulfilled, without blocking the current active object, thus other methods can run; does not return a value.

- chaining: takes a closure to run on the result when the future is fulfilled; returns another future that will contain the result of running the closure.

These operations will be illustrated using the following classes as a basis. These classes model a service provider that produces a certain product:

The next subsections provide several implementations of clients that call on the service provider, create an instance of class Handle to deal with the result, and pass the result provided by the service provider to the handler.

6.1 Using the get operation

When the get operation is applied to a future, the current active object blocks until the future is fulfilled, and when it has been, the call to get returns the value stored in the future.

Consider the following client code.

In method run of Client, the call to service.provide() results in a future of type Fut Product (line 6). In line 9, the actual Product object is obtained using a call to get. If the future had already been fulfilled, the Product object would be returned immediately. If not, both the method and the active object block, preventing any progress locally until the future is fulfilled.
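The blocking behaviour of get has a close analogue in Python's concurrent.futures, where Future.result() blocks the calling thread until the value is available. The sketch below is an analogy only: the functions provide and handle are hypothetical stand-ins for the service provider and the handler, and a one-thread executor stands in for the service's active object.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def provide():
    """Hypothetical service method that takes a while to produce a product."""
    time.sleep(0.05)
    return "product"


def handle(product):
    """Hypothetical handler applied to the finished product."""
    return f"handled {product}"


with ThreadPoolExecutor(max_workers=1) as service:
    fut = service.submit(provide)    # returns a future immediately
    # ... the client is free to do unrelated work here ...
    product = fut.result()           # analogue of get: blocks until fulfilled
    outcome = handle(product)
```

While the caller is inside fut.result() it can do nothing else, which is the cost that the await operation is designed to avoid.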

6.2 Using the await command

One of the problems with calling get on a future is that it can result in the entire active object being blocked. Sometimes this is desirable to ensure that internal invariants hold, but it can result in costly delays, for example, if the method called involves a time-consuming calculation. During that time, the whole active object can make no progress, which would also block other active objects that need its services.

An alternative, when it makes sense, is to allow the active object to process other messages from its message queue and resume the current method call sometime after the future has been fulfilled. This is exactly what calling await on a future allows.

Command await applies to a future and waits for it to be fulfilled, blocking the current method call but without blocking the current active object. The call to await does not return a value, so a call to get is required to get the value. This call to get is guaranteed to succeed without blocking.

The following code provides an alternative implementation to the method run from class Client above using await:

In this code, the call await fut on line 5 will block if the future fut is unfulfilled; other methods could run in the same active object between lines 5 and 6. When control returns to this method invocation, execution will resume on line 6 and the call to get fut is guaranteed to succeed.
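Python's asyncio offers a comparable distinction, and can serve as a rough analogy: a single-threaded event loop plays the role of the active object, each coroutine plays the role of a method invocation, and awaiting a future suspends only the current coroutine. The names provide, run and other_message below are hypothetical; the log records the order in which things happen.

```python
import asyncio

log = []


async def provide():
    """Hypothetical service call that completes a little later."""
    await asyncio.sleep(0.01)
    return "product"


async def run():
    fut = asyncio.ensure_future(provide())
    log.append("run: waiting")
    await fut                        # suspends this invocation only
    product = fut.result()           # cannot block: fut is already fulfilled
    log.append(f"run: got {product}")


async def other_message():
    # Another message for the same "active object", processed while run waits.
    log.append("other message processed")


async def main():
    await asyncio.gather(run(), other_message())


asyncio.run(main())
```

The second message is processed between the two log entries of run, mirroring the way other methods may execute between the await and the subsequent get.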

6.3 Using Future Chaining

The final operation on futures is future chaining [24]. Instead of waiting for the future fut to be fulfilled, as is the case for get and await, future chaining attaches a closure g to the future to run when the future is fulfilled. Future chaining immediately returns a future that will store the result of applying the closure to the result of the original future.

The terminology comes from the fact that one can add a further closure onto the future returned by future chaining, and add an additional closure onto that, and so forth, creating a chain of computations to run asynchronously. If the code is written in a suitably stylised way (e.g., one of the ways of writing monadic code such as Haskell’s do-notation [30]), then the code reads in sequential order, with no inversion of control.
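The chaining behaviour described above can be imitated with Python's concurrent.futures. The helper chain below is a hypothetical function written for this illustration, not part of any library: it attaches a closure to a future and immediately returns a new future for the closure's result. Note that the callback runs on whichever thread fulfils the original future, so the sketch says nothing about which active object would execute the closure in Encore.

```python
from concurrent.futures import Future, ThreadPoolExecutor


def chain(fut, g):
    """Return a new future that will hold g(result of fut); never blocks."""
    chained = Future()

    def on_done(done):
        try:
            chained.set_result(g(done.result()))
        except Exception as error:
            chained.set_exception(error)   # failures propagate along the chain

    fut.add_done_callback(on_done)
    return chained


with ThreadPoolExecutor(max_workers=1) as pool:
    fut = pool.submit(lambda: 20)
    # Two closures chained one after the other; the code reads top to bottom.
    final = chain(chain(fut, lambda x: x + 1), lambda x: x * 2)
    answer = final.result()
```

Only the final call to result() waits; building the chain itself returns at once.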

Consider the following alternative implementation of the run method from class Client above using future chaining:

In the example above, the closure defined on line 5 will be executed as soon as the future from service.provide() (line 2) is fulfilled.

A chained closure can run in one of two modes, depending on what is accessed within the closure. If the closure accesses fields or passive objects from the surrounding context, which would create the possibility of race conditions, then it must be run in attached mode, meaning that the closure when invoked will be run by the active object that lexically encloses it. The closure in the example above needs to run in attached mode as it accesses the local variable handle. In contrast, a closure that cannot cause race conditions with the surrounding active object can be run in detached mode, which means that it can be run independently of the active object. To support the specification of detached closures, the notion of spore [26], which is a closure with a pre-specified environment, can be used (cf. Sect. 7.6). Capabilities (Sect. 10) will also provide means for allowing safe detached closures.

7 Expressions, Statements, and so Forth

Encore has many of the language features one expects from a general purpose programming language. Some of these features are described (briefly) in the following subsections.

7.1 Types

Encore has a number of built in types. The following table presents these, along with typical literals for each type:

| Type | Description | Literals |
|---|---|---|
| void | The unit value | () |
| string | Strings | “hello” |
| int | Fixed-precision integers | 1, -12 |
| uint | Unsigned, fixed-precision integers | 42 |
| real | Floating point numbers | 1.234, -3.141592 |
| bool | Booleans | true, false |
| Fut t | Futures of type t | — |
| Par t | Parallel computations producing type t | — |
| Stream t | Functional streams of type t | — |
| t -> t’ | Functions from type t to type t’ | |
| [t] | Arrays of type t | [1,2,3,6], but not [] |

The programmer can also introduce two new kinds of types: active class types and passive class types, both of which can be polymorphic (cf. Sect. 4).

7.2 Expression Sequences

Syntactically, method bodies, while bodies, let bodies, etc. consist of a single expression:

In this case, curly braces are optional.

If several expressions need to be sequenced together, this is done by separating them by semicolons and wrapping them in curly braces. The value of a sequence is the value of its last expression. A sequence can be used wherever an expression is expected.

7.3 Loops

Encore has two kinds of loops: while and repeat loops. A while loop takes a boolean loop condition, and evaluates its body expression repeatedly, as long as the loop condition evaluates to true:

This prints:

The repeat loop is syntactic sugar that makes iterating over integers simpler. The following example is equivalent to the while loop above:

In general,

evaluates expr for values \(i=0,1,\ldots , n-1\).

7.4 Arrays

The type of arrays of type T is denoted [T]. An array of length n is created using new [T](n). Arrays are indexed starting from \(0\). Arrays are fixed in size and cannot be dynamically extended or shrunk.

Array elements are accessed using the bracket notation: a[i] accesses the \(i\)th element. The length of an array is given by |a|. Arrays can be constructed using the literal notation [1, 2, 1+2].

The following example illustrates the features of arrays:

The expected output is

7.5 Formatted Printing

The print statement allows formatted output. It accepts a variable number of parameters. The first parameter is a format string, which has a number of holes marked with {} into which the values of the subsequent parameters are inserted. The number of occurrences of {} must match the number of additional parameters.
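The hole-filling rule can be stated precisely with a small Python function. This is a sketch of the rule as described in the text, not of Encore's implementation; the name fill_holes is hypothetical, and it returns the formatted string rather than printing it.

```python
def fill_holes(fmt, *args):
    """Replace each {} in fmt with the corresponding argument, in order."""
    parts = fmt.split("{}")
    holes = len(parts) - 1
    if holes != len(args):
        # The number of holes must match the number of extra parameters.
        raise ValueError(f"{holes} holes but {len(args)} arguments")
    pieces = [parts[0]]
    for arg, rest in zip(args, parts[1:]):
        pieces.append(str(arg))
        pieces.append(rest)
    return "".join(pieces)


line = fill_holes("{} + {} = {}", 1, 2, 3)
```

A call such as fill_holes("{} and {}", 1) raises an error, since one hole would remain unfilled.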

The following example illustrates how it works.

The output is:

7.6 Anonymous Functions

In Encore, an anonymous function is written as follows:

This function multiplies its input \(i\) by 10.

The backslash \(\backslash \) (syntax borrowed from Haskell, resembling a lambda) is followed by a comma separated list of parameter declarations, an arrow ->, and an expression, the function body. The return type does not need to be declared as it is always inferred from the body of the lambda.

In the example below, the anonymous function is assigned to the variable tentimes and then later applied—it could also be applied directly.

Anonymous functions are first-class citizens and can be passed as arguments, assigned to variables and returned from methods/functions. The type of a function is declared by specifying its argument types, an arrow ->, and the return type. For example, the type of the function above is int -> int. Multi-argument functions have types such as (int, string) -> bool.

The following example shows how to write a higher-order function update that takes a function f of type int -> int, an array of int’s and applies the function f to the elements of the array data, updating the array in-place.
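For readers more familiar with Python, the same higher-order pattern looks as follows. This is an analogue rather than Encore code; the names update and tentimes follow the text, while the list values is an arbitrary example.

```python
def update(f, data):
    """Apply f to every element of data, overwriting the elements in place."""
    for i in range(len(data)):
        data[i] = f(data[i])


tentimes = lambda i: 10 * i     # the anonymous function from the earlier example
values = [1, 2, 3]
update(tentimes, values)        # values is modified; nothing is returned
```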

Closures as specified above can capture variables appearing in their surrounding lexical context. If a closure is run outside of the context in which it is defined, then data races can occur. A variation on closures exists that helps avoid this problem.

Encore provides a special kind of anonymous function called a spore [26]. A spore must explicitly specify the elements from its surrounding context that are captured in the spore. The captured elements can then, more explicitly, be controlled using types, locks or cloning to ensure that the resulting closure can be run outside of the context in which the spore is defined. Spores have an environment section binding the free variables of the spore to values from the surrounding context, and a closure, which can access only those free variables and its parameters.

In this code snippet, the only variables in scope in the closure body are x and y. The field service, which would normally be visible within the closure (in Scala or in Java if it were final), is not accessible. It is made accessible (actually, a clone of its contents), via variable x in the environment section of the spore.
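The idea of an explicit, cloned environment can be mimicked in Python. The function spore below is a hypothetical helper written for this illustration; it clones the environment when the spore is created and passes it to the body as an explicit argument. Unlike Encore, Python cannot prevent the body from also reaching other variables, so the restriction is by convention only.

```python
import copy


def spore(env, body):
    """Build a closure whose captured state is a clone of env, taken now."""
    captured = copy.deepcopy(env)      # the creator's objects are not shared

    def run(*args):
        return body(captured, *args)   # body sees only its environment and parameters

    return run


service = {"price": 10}
quote = spore({"x": service}, lambda env, y: env["x"]["price"] * y)

service["price"] = 99                  # later mutation by the creating context
result = quote(3)                      # uses the cloned value of 10
```

Because the spore works on its own clone, running it later, possibly elsewhere, cannot race with updates to service.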

7.7 Polymorphism and Type Inference

At the time of writing, Encore offers some support for polymorphic classes, methods and functions. Polymorphism in Encore syntactically resembles other well-established OOP languages, such as Java. Type variables in polymorphic classes, methods and/or functions must be written using lower case.

The following example shows how to write a polymorphic list:

7.8 Module System

Currently, Encore supports a rudimentary module system. The keyword import followed by the name of a module imports the corresponding module. The name of the module must match the name of the file, excluding the .enc suffix. The compiler looks for the corresponding module in the current directory plus any directories specified using the -I pathlist compiler flag.

Assume that a library module Lib.enc contains the following code:

This module can be imported using import Lib as illustrated in the following (file Bar.enc):

Here Bar.enc imports module Lib and can thus access the class Foo.

Currently, the module system has no notion of namespaces, so all imported objects need to have unique names. There is also no support for cyclic imports or qualified imports, so it is up to the programmer to ensure that each file is only imported once.

7.9 Suspending Execution

The suspend command supports cooperative multitasking. It suspends the currently running method invocation on an active object and schedules the invocation to be resumed after all messages in the queue have been processed.

The example computes a large number of digits of \(\pi \). The method calculate_digits calls suspend to allow other method calls to run on the Pi active object. This is achieved by suspending the execution of the current method call, placing a new message in its message queue, and then releasing control. The message placed in the queue is the continuation of the suspended method invocation, which in this case will resume the suspended method invocation at line 6.
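The scheduling effect of suspend can be imitated with Python generators, where yield hands control back to a scheduler. The sketch below is a simplified model, not the Encore run-time: run_active_object, calculate_digits and report are hypothetical names, and the mailbox is an ordinary deque processed by a single loop.

```python
import inspect
from collections import deque

log = []


def calculate_digits(chunks):
    """Long-running method that suspends after each chunk of work."""
    for step in range(chunks):
        log.append(f"digits: chunk {step}")
        yield                              # analogue of suspend


def report():
    """Ordinary short method that runs to completion."""
    log.append("report")


def run_active_object(messages):
    mailbox = deque(messages)
    while mailbox:
        message = mailbox.popleft()
        # A fresh message is called; a continuation is resumed as is.
        invocation = message() if callable(message) else message
        if not inspect.isgenerator(invocation):
            continue                       # ordinary method: already finished
        try:
            next(invocation)               # run until the next suspend
        except StopIteration:
            continue                       # the method has completed
        mailbox.append(invocation)         # continuation goes to the back of the queue


run_active_object([lambda: calculate_digits(3), report])
```

The report message is served after the first chunk of the long computation rather than after all of it, which is the point of cooperative suspension.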

7.10 Embedding of C Code

Encore supports the embedding of C code. This is useful for wrapping C libraries to import into the generated C code and for experimenting with implementation ideas before incorporating them into the language, code generator, or run-time. Two modes are supported: top-level embed blocks and embedded expressions.

Note that we do not advocate the extensive use of embed. Code using embed is quite likely to break with future updates to the language.

Top-Level Embed Blocks. Each file can contain at most one top-level embed block, which has to be placed before the first class definition in the file. This embed block consists of a header section and an implementation section, as in the following example:

The header section will end up in a header file that all class implementations will include. The implementation section will end up in a separate C file. The sq function declaration must be included in the header section, otherwise the definitions in the implementation section would not be accessible in the generated C code.

Embedded Expressions. An embed block can appear anywhere where an expression can occur. The syntax is:

When embedding an expression, the programmer needs to assign an Encore type to the expression. Encore will assume that this type is correct. The value of an embedded expression is the value of the last C-statement in the embedded code.

Encore variables can be accessed from within an embed block by wrapping them with #{ }. For instance, local variable x in Encore code is accessed using #{x} in the embedded C. Accessing fields of the current object is achieved using C’s arrow operator. For instance, this->foo accesses the field this.foo.

The following example builds upon the top-level embed block above:

The embedded expression in this example promises to return an int. It calls the C-function sq on the local Encore variable x.

Embedding C Values as Abstract Data Types. The following pattern allows C values to be embedded into Encore code and treated as an abstract type, in a sense, where the only operations that can manipulate the C values are implemented in other embedded blocks. In the following code example, a type D is created with no methods or fields. Values of this type cannot be manipulated in Encore code, only passed around and manipulated by the corresponding C code.

Mapping Encore Types to C Types. The following table documents how Encore’s types are mapped to C types. This information is useful when writing embedded C code, though ultimately having some detailed knowledge of how Encore compiles to C will be required to do anything advanced.

| Encore type | C type |
|---|---|
| string | (char *) |
| real | double |
| int | int64_t |
| uint | uint64_t |
| bool | int64_t |
| \(\langle \)an active class type\(\rangle \) | (encore_actor_t *) |
| \(\langle \)a passive class type\(\rangle \) | (CLASSNAME_data *) |
| \(\langle \)a type parameter\(\rangle \) | (void *) |

8 Streams

A stream in Encore is an immutable sequence of values produced asynchronously. Streams are abstract types, but metaphorically, the type Stream a can be thought of as the Haskell type:

That is, a stream is essentially a future, because at the time the stream is produced it is unknown what its contents will be. When the next part of the contents is known, it will correspond to either the end of the stream (Nothing in Haskell) or essentially a pair (Just (St e s)) consisting of an element e and the rest of the stream s.

In Encore this metaphor is realised, imperfectly, by making the following operations available for the consumer of a stream:

- get: Stream a -> a. Gets the head element of the (non-empty) stream, blocking if it is not available.

- getNext: Stream a -> Stream a. Returns the tail of the (non-empty) stream. A non-destructive operator.

- eos: Stream a -> Bool. Checks whether the stream is empty.

Streams are produced within special stream methods. Calling such methods results immediately in a handle to the stream (of type Stream a). Within such a method, the command yield becomes available to produce values on the stream. yield takes a single expression as an argument and places the corresponding value on the stream being produced. When the stream method finishes, stream production finishes and the end of the stream marker is placed in the stream.

The following code illustrates an example stream producer that produces a stream whose elements are of type int:

The following code gives an example stream consumer that processes a stream stored in variable str of type Stream int.

Notice that the variable str is explicitly updated with a reference to the tail of the stream by calling getNext. Because streams are immutable, getNext returns a reference to the tail rather than updating the object in str in place.
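The consumer interface can be modelled in a few lines of Python. This is a sketch under stated assumptions, not Encore's implementation: each stream cell is a future that eventually holds either `None` (end of stream) or a pair of head and tail, mirroring the Haskell metaphor above. The names `Stream`, `stream_method`, `countdown` and `consume_all` are hypothetical, and a Python generator's `yield` stands in for Encore's `yield`.

```python
import threading
from concurrent.futures import Future


class Stream:
    """Immutable stream cell: a future holding None (end) or (head, tail)."""

    def __init__(self):
        self._cell = Future()

    def eos(self):
        # Blocks until it is known whether this cell is the end of the stream.
        return self._cell.result() is None

    def get(self):
        return self._cell.result()[0]   # head element

    def get_next(self):
        return self._cell.result()[1]   # tail; this cell is left unchanged


def stream_method(gen_fn, *args):
    """Run a generator in its own thread; each yield fills one stream cell."""
    head = Stream()

    def produce():
        cur = head
        for value in gen_fn(*args):
            nxt = Stream()
            cur._cell.set_result((value, nxt))
            cur = nxt
        cur._cell.set_result(None)      # end-of-stream marker

    threading.Thread(target=produce, daemon=True).start()
    return head                          # the handle is returned immediately


def countdown(n):
    while n > 0:
        yield n
        n -= 1


def consume_all(s):
    out = []
    while not s.eos():
        out.append(s.get())
        s = s.get_next()                 # rebind the variable to the tail
    return out
```

Because cells are never mutated after being filled, a handle to the head of the stream can be traversed any number of times and always yields the same elements.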

9 Parallel Combinators

Encore offers preliminary support for parallel types, essentially an abstraction of parallel collections, and parallel combinators that operate on them. The combinators can be used to build pipelines of parallel computations that integrate well with active object-based parallelism.

9.1 Parallel Types

The key ingredient is the parallel type Par t, which can be thought of as a handle to a collection of parallel computations that will eventually produce zero or more values of type t—for convenience we will call such an expression a parallel collection. (Contrast with parallel collections that are based on a collection of elements of type t manipulated using parallel operations [31].) Values of Par t type are first class, thus the handle can be passed around, manipulated and stored in fields of objects.

Parallel types are analogous to future types in a certain sense: an element of type Fut t can be thought of as a handle to a single asynchronous (possibly parallel) computation resulting in a single value of type t; similarly, an element of type Par t can be thought of as a handle to a parallel computation resulting in multiple values of type t. Pushing the analogy further, Par t can be thought of as a “list” of elements of type Fut t: thus, Par t \(\approx \) [ Fut t].

Values of type Par t are assumed to be ordered, thus ultimately a sequence of values as in the analogy above, though the order in which the values are produced is unspecified. Key operations on parallel collections typically depend neither on the order the elements appear in the structure nor the order in which they are produced.Footnote 3

9.2 A Collection of Combinators

The operations on parallel types are called parallel combinators. These adapt functionality from programming languages such as Orc [22] and Haskell [30] to express a range of high-level typed coordination patterns, parallel dataflow pipelines, speculative evaluation and pruning, and low-level data parallel computations.

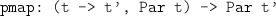

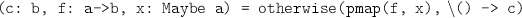

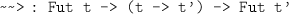

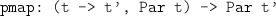

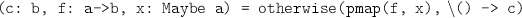

The following is a representative collection of operations on parallel types.Footnote 4 Note that all operations are functional.

- empty: Par t. A parallel collection with no elements.

- par: (Par t, Par t) -> Par t. The expression par(c, d) runs c and d in parallel and results in the values produced by c followed (spatially, but not temporally) by the values produced by d.

- pbind: (Par t, t -> Par t’) -> Par t’. The expression pbind(c, f) applies the function f to all values produced by c. The resulting nested parallel collection (of type Par (Par t’)) is flattened into a single collection (of type Par t’), preserving the order among elements at both levels.

- pmap is a parallel map. The expression pmap(f, c) applies the function f to each element of parallel collection c in parallel, resulting in a new parallel collection.

- filter: (t -> bool, Par t) -> Par t filters elements. The expression filter(p, c) removes from c elements that do not satisfy the predicate p.

- select: Par t -> Maybe t returns the first available result from the parallel type wrapped in tag Just, or Nothing if it has no results.Footnote 5

- selectAndKill: Par t -> Maybe t is similar to select except that it also kills all other parallel computations in its argument after the first value has been found.

- prune: (Fut (Maybe t) -> Par t’, Par t) -> Par t’. The expression prune(f, c) creates a future that will hold the result of selectAndKill(c) and passes this to f. This computation is run in parallel with c. The first result of c is passed to f (via the future), after which c is terminated.

- otherwise: (Par t, () -> Par t) -> Par t. The expression otherwise(c, f) evaluates c until it is known whether it will be empty or non-empty. If it is not empty, return c, otherwise return f().

A key omission from this list is any operation that actually treats a parallel collection in a sequential fashion. For instance, getting the first (leftmost) element is not possible. This limitation is in place to discourage sequential programming with parallel types.
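To make the shape of these operations concrete, the following Python sketch models a parallel collection as a list of futures, in the spirit of the Par t \(\approx \) [ Fut t] analogy. It is an illustration under stated assumptions, not Encore's library: each future resolves to a list of zero or more values (so that filtering needs no blocking), `from_values` and `pfilter` are hypothetical names, `sync` follows the description given in Sect. 9.4, and `None` plays the role of Nothing.

```python
from concurrent.futures import Future, ThreadPoolExecutor, as_completed

_pool = ThreadPoolExecutor(max_workers=4)

EMPTY = []  # the empty parallel collection


def from_values(values):
    """Hypothetical helper: one already-completed future per value."""
    futures = []
    for v in values:
        f = Future()
        f.set_result([v])
        futures.append(f)
    return futures


def _then(fut, g):
    # Schedule g to run on the result of fut once it is available.
    return _pool.submit(lambda: g(fut.result()))


def par(c, d):
    # Spatial order only: the values of c come before the values of d.
    return c + d


def pmap(f, c):
    return [_then(fut, lambda xs: [f(x) for x in xs]) for fut in c]


def pfilter(p, c):
    return [_then(fut, lambda xs: [x for x in xs if p(x)]) for fut in c]


def select(c):
    # First available value, or None if the collection turns out to be empty.
    for fut in as_completed(c):
        values = fut.result()
        if values:
            return values[0]
    return None


def sync(c):
    # Wait for everything and return the values in spatial order.
    return [x for fut in c for x in fut.result()]


c = par(from_values([1, 2, 3]), from_values([10, 20]))
big = pfilter(lambda x: x > 4, pmap(lambda x: x * 2, c))
```

Here `sync(big)` yields the doubled values above 4 in the order in which their sources appear in `c`, while `select(big)` may return any one of them depending on which computation completes first.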

9.3 From Sequential to Parallel Types

A number of functions lift sequential types to parallel types to initiate parallel computation or dataflow.

-

converts a value to a singleton parallel collection.

-

converts a future to a singleton parallel collection (following the Par t \(\approx \) [ Fut t] analogy above).

-

converts an array to a parallel collection.

One planned extension, intended to better integrate active objects and parallel collections, is to allow fields to store collections directly, not as a data type but, effectively, as a parallel type. Applying parallel operations to such fields would then be more immediate.

9.4 ... and Back Again

A number of functions are available for getting values out of parallel types. Here is a sample:

- select :: Par t -> Maybe t, described in Sect. 9.2, provides a way of getting a single element from a collection (if present).

- sum :: Par Int -> Int and other fold/reduce-like functions provide operations such as summing the collection of integers.

- sync :: Par t -> [t] synchronises the parallel computation and produces a sequential array of the results.

- wsync :: Par t -> Fut [t] is the same as sync, but instead creates a computation to do the synchronisation and returns a future to that computation.

9.5 Example

The following code illustrates parallel types and combinators. It computes the total sum of all bank accounts in a bank that contain more than 10,000 euros.

The program starts by converting the sequential array of customers into a parallel collection. From this point on it applies parallel combinators to get the accounts, then the balances for these accounts, and to filter the corresponding values. The program finishes by computing a sum of the balances, thereby moving from the parallel setting back to the sequential one.
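The same pipeline can be approximated with a thread pool in Python. This is only a sketch of the dataflow described above, not a translation of the Encore program: the classes `Customer` and `Account`, the helper `big_balances`, and the function `total_large_balances` are hypothetical names, and the per-customer work is deliberately trivial.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Account:
    balance: int


@dataclass
class Customer:
    accounts: list = field(default_factory=list)


def big_balances(customer, threshold):
    # Accounts -> balances -> keep only those strictly above the threshold.
    return [a.balance for a in customer.accounts if a.balance > threshold]


def total_large_balances(customers, threshold=10_000):
    with ThreadPoolExecutor() as pool:
        # Each customer is processed as an independent parallel task.
        per_customer = pool.map(lambda c: big_balances(c, threshold), customers)
        # Summing moves from the parallel setting back to the sequential one.
        return sum(sum(balances) for balances in per_customer)


customers = [
    Customer([Account(5_000), Account(20_000)]),
    Customer([Account(10_000), Account(15_000), Account(30_000)]),
    Customer([]),
]
total = total_large_balances(customers)
```

An account holding exactly 10,000 is excluded, since the description asks for accounts that contain more than that amount.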

9.6 Implementation

At present, parallel types and combinators are implemented in Encore as a library and the implementation does not deliver the desired performance. In the future, Par t will be implemented as an abstract type to give the compiler room to optimise how programs using parallel combinators are translated into C. Beyond making the implementation efficient, a key research challenge that remains to be addressed is achieving safe interaction between the parallel combinators and the existing active object model using capabilities.

10 Capabilities

The single thread of control abstraction given by active objects enables sequential reasoning inside active objects. This simplifies programming as there is no interleaving of operations during critical sections of a program. However, unless proper encapsulation of passive objects is in place, mutable objects might be shared across threads, effectively destroying the single thread of control.

A simple solution to this problem is to enforce deep copying of objects when passing them between active objects, but this can increase the cost of message sending. (This is the solution adopted in the formal semantics of Encore presented in Sect. 12.) Copying is, however, not ideal as it eliminates cases of benign sharing of data between active objects, such as when the shared data is immutable. Furthermore, with the creation of parallel computation inside active objects using the parallel combinators of Sect. 9, more fine-grained ways of orchestrating access to data local to an active object are required to avoid race conditions.

These are the problems addressed by the capability type system in Encore.Footnote 6

10.1 Capabilities for Controlling of Sharing

A capability is a token that governs access to a certain resource [27]. In an attempt to re-think the access control mechanisms of object-oriented programming systems, Encore uses capabilities in place of references and the resources they govern access to are objects, and often entire aggregates. In contrast to how references normally behave in object-oriented programming languages, capabilities impose principles on how and when several capabilities may govern access to a common resource. As a consequence, different capabilities impose different means of alias control, which is statically enforced at compile-time.

In Encore, capabilities are constructed from traits, the units of reuse from which classes can be built (cf. Sect. 4.5). Together with a kind, each trait forms a capability, from which composite capabilities can be constructed. A capability provides an interface, essentially the methods of the corresponding trait. The capability’s kind controls how this interface can be accessed with respect to avoiding data-races. Capabilities are combined to form classes, just as traits are, which means that the range of capabilities is specified at class definition time, rather than at object creation time.

From an object’s type, it is immediately visible whether it is exclusive to a single logical thread of control (which trivially implies that accesses to the object are not subject to data races), shared between multiple logical threads (in which case freedom from data races must be guaranteed by some concurrency control mechanism), or subordinate to some other object which protects it from data races—this is the default capability of a passive class in Encore. The active capability is the capability kind of active classes in Encore. Figure 2 shows the different capabilities considered, which will be discussed below.Footnote 7

10.2 Exclusive Capabilities

Exclusive capabilities are exclusive to a single thread of control. They implement a form of external uniqueness [12] where a single pointer is guaranteed to be the only external pointer to an entire aggregate. The uniqueness of the external variable is preserved by destructive reads, that is, the variable must be nullified when read unless it can be guaranteed that the two aliases are not visible to any executing threads at the same time.

Exclusive capabilities greatly simplify ownership transfer: passing an exclusive capability as an argument to a method on another active object requires the nullification of the source variable, which means that all aliases at the source to the entire aggregate are dead and that the receiver has sole access to the transferred object.

10.3 Shared Capabilities

A shared capability expresses that the object is shared and, in contrast to exclusive capabilities, some dynamic means is required to guarantee data-race freedom.

The semantics of concurrent accesses via a shared capability is governed by the sharing kind. The list below overviews the semantics of concurrent accesses of safe (first six) and unsafe capabilities.

Active. Active capabilities denote Encore’s active classes. They guarantee race-freedom through dynamic, pessimistic concurrency control by serialising all computation inside them.

Atomic. Atomic capabilities denote object references whose access is managed by a transaction. Concurrent operations either commit or rollback in a standard fashion. From a race-freedom perspective, atomic capabilities can be freely shared across Encore active objects.

An interesting question arises when considering the interaction between transactions and asynchronous message passing: can asynchronous messages “escape” a transaction? Since asynchronous messages are processed by other logical threads of control, they may be considered a side-effect that is impossible to roll back. Some ways of resolving this conundrum are:

1. Forbidding asynchronous message sends inside transactions. Problem: Restricts expressivity.

2. Delaying the delivery of asynchronous message sends to commit-time of a transaction. Problem: Reduces throughput/increases latency.

3. Accepting this problem and leaving it up to programmers to ensure the correctness of their programs when transactions are mixed with asynchronous message passing. Problem: Not safe.
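The second option in the list above, delaying delivery until commit, can be sketched as follows. This is a toy Python model rather than a design for Encore: the class `Transaction`, its `outbox`, and the use of a plain list as the receiver's mailbox are all assumptions made for illustration.

```python
class Transaction:
    """Toy transaction that buffers outgoing messages until commit."""

    def __init__(self, mailbox):
        self.mailbox = mailbox  # the receiver's message queue (a plain list)
        self.outbox = []        # messages sent inside the transaction

    def send(self, message):
        # Nothing escapes the transaction yet; the send is only recorded.
        self.outbox.append(message)

    def commit(self):
        # Only now do the buffered messages become visible to the receiver.
        self.mailbox.extend(self.outbox)
        self.outbox.clear()

    def rollback(self):
        # Discarding the buffer undoes the sends without any side effect.
        self.outbox.clear()


mailbox = []

aborted = Transaction(mailbox)
aborted.send("withdraw 100")
aborted.rollback()

committed = Transaction(mailbox)
committed.send("deposit 100")
committed.commit()
```

The cost noted in the list is visible even in this sketch: the receiver cannot start working on a message until the sending transaction has committed.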

Immutable. Immutable capabilities describe data that is always safely accessible without concurrency control. Immutability is “deep”, meaning that state cannot be observably modified through an immutable reference, though a method in an immutable object can mutate state created within the method or of its arguments. Immutable capabilities can be freely shared across Encore active objects without any need for copying.

Locked. Each operation via a locked capability requires prior acquisition of a lock specific for the resource. The lock can be reentrant (analogous to a synchronised method in Java), a readers-writer lock, etc. depending on desired semantics.

LockFree. The implementations of behaviours for this capability must follow a certain protocol for coordinating updates in a lock-free manner. Lock-free programming is famously subtle, because invariants must be maintained at all times, not just at select commit-points. As part of the work on Encore, we are implementing a type system that enforces such protocol usage on lock-free capabilities [8].

Stable. Stable capabilities present an immutable view of otherwise mutable state. There are several different possible semantics for stable capabilities: read-only references—capability cannot be used to modify state, but it may witness changes occurring elsewhere; fractional permissions—if a stable capability is available, no mutable alias to an overlapping state will be possible, thereby avoiding read-write races; or readers-writer locks—a static guarantee that a readers-writer lock is in place and used correctly.

Unsafe. As the name suggests, unsafe capabilities come with no guarantees with respect to data races. Allowing unsafe capabilities is optional, but they may be useful to give a type to embedded C code.

10.4 Subordinate Capabilities

A subordinate capability is a capability that is dominated by an exclusive or shared capability, which means that the dominating capability controls access to the subordinate. In Encore, passive objects are subordinate capabilities by default, meaning they are encapsulated by their enclosing active object. This corresponds to the fact that there can be no “free-standing” passive objects in Encore; they all live on the local heap of some active object.

Encapsulation of subordinate objects is achieved by disallowing them to be passed to non-subordinate objects. A subordinate capability is in this respect similar to the owner annotation from ownership types [10, 38].

Some notion of borrowing can be used to safely pass subordinate objects around under some conditions [13].

10.5 Polymorphic Concurrency Control

Capabilities allow for polymorphic concurrency control through the abstract capabilities shared, safe, optimistic, pessimistic and oblivious. This allows a library writer to request that a value is protected from data races by some means, but not specify those means explicitly. For example:

This expresses that the calls on from and to are safe from a concurrency stand-point. However, whether this arises from the accounts using locks, transactions or immutability is not relevant here.

Accesses through safe capabilities are interesting because the semantics of the different forms of concurrency control require a modicum of extra work at run-time. For example, if from is active, then from.withdraw() should (implicitly) be turned into get from.withdraw(), or if we are inside an atomic capability and to is a locked capability, then the transfer transaction should be extended to also include to.deposit(), and committing the transaction involves being able to grab the lock on to and release it once the transaction’s log has been synchronised with the object.

The exact semantics of the combinations are currently being worked out.

10.6 Composing Capabilities

A single capability is a trait plus a mode annotation. Mode annotations are the labels in Fig. 2. Leaves denote concrete modes, i.e., modes that can be used in the definition of a capability or class. Remaining annotations such as safe, pessimistic etc. are valid only in types to abstract over concrete annotations, or combinations of concrete annotations.

Capabilities can be composed in three different ways: conjunction \(C_1 \mathop {\otimes }{} C_2\), disjunction \(C_1 \mathop {\oplus }{}C_2\), and co-encapsulation \(C_1\langle {C_2}\rangle \).

A conjunction or a disjunction of two capabilities \(C_1\) and \(C_2\) creates a composite capability with the union of the methods of \(C_1\) and \(C_2\). In the case of a disjunction, \(C_1\) and \(C_2\) may share state without concurrency control. As a result, the same guard (whether it is linearity, thread-locality, a lock, etc.) will preserve exclusivity of the entire composite. In the case of a conjunction, \(C_1\) and \(C_2\) must not share state, except for state that is under concurrency control. For example, they may share a common field holding a shared capability, as long as neither capability can write the field. The conjunction of \(C_1\) and \(C_2\), \(C_1\mathop {\otimes }{}C_2\), can be unpacked into its two sub-capabilities \(C_1\) and \(C_2\), creating two aliases to the same object that can be used without regard for the other.

In contrast to conjunction and disjunction, co-encapsulation denotes a nested composition, where one capability is buried inside the other, denoted \(C_1\langle {C_2}\rangle \). The methods of the composite \(C_1\langle {C_2}\rangle \) are precisely those of \(C_1\), but by exposing the nested type \(C_2\) in the interface of the composite capability, additional operations on the type-level become available. Co-encapsulation is useful to preserve linearity of nested capabilities. For example, unless \(C_3\) is exclusive, the capability \(C_3\langle {C_1\mathop {\otimes }C_2}\rangle \) can be turned into \(C_3\langle {C_1}\rangle \mathop {\otimes }C_3\langle {C_2}\rangle \) which introduces aliases to \(C_3\) but in a way that only disjoint parts of the nested capability can be reached.

Capabilities of different kinds may be used in disjunctions and conjunctions. A capability with at least one exclusive component must be treated linearly to guarantee race-freedom of its data. A capability with at least one subordinate component will be contained inside its enclosing class. There are vast possibilities to create compositions of capabilities, and we are currently investigating their possible uses and interpretations. For example, combinations of active and exclusive capabilities allow operating on an active object as if it were a passive object until the exclusive capability is lost, after which the active object can be freely shared. This gives powerful control over the initialisation phase of an object. As another example, conjunctions of active capabilities could be used to express active objects which are able to process messages in parallel.

10.7 Implementing a Parallel Operation on Disjoint Parts of Shared State

Figures 3, 4, 5, and 6 show how the capabilities can be used to construct a simple linked list data structure of exclusive pairs, which is subsequently “unpacked” into two (logical) immutable lists of disjoint cells which are passed to different objects, and later re-packed into a single mutable list of pairs again. Support for unstructured packing and unpacking is important in Encore as communication across active objects has a more flexible control flow than calls on a single stack, or fork-join style parallelism.

The trait keyword introduces a new trait which requires the presence of zero or more fields in any class that includes it. Figure 3 illustrates a trait Cell that requires a mutable field value in any including class.

The compositions of cells into WeakPair and StrongPair have different reuse stories for the Cell trait. The cells of a WeakPair may be independently updated by different threads whereas the cells of a StrongPair always belong to the same thread and are accessed together.

For simplicity, we employ a prime notation renaming scheme for traits to avoid name clashes when a single trait is included more than once.

Figure 4 shows how three capabilities construct a singly linked list. The links in the list are subordinate objects, and the elements in the list are typed by some exclusive parameter P. The capabilities of the List class are Add, Del and Get. The first two are exclusive and the last is stable.

The Add and Del capabilities can add and remove exclusive P objects from the list. (Since these objects are exclusive, looking them up removes them from the list to maintain linear access.) Since Add and Del share the same field first with the same type, they are not safe to use separately in parallel, so their combination must be a disjunction. If they had been, for example, locked capabilities instead, they would have protected their internals dynamically, so in this case, a conjunction would be allowed.

Linearity of exclusive capabilities is maintained by an explicit destructive read, the keyword consume. The expression consume x returns the value of x, and updates x with null, logically in one atomic step.
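The effect of a destructive read can be mimicked in Python as a rough illustration. The class `Var` and the function `consume` below are hypothetical stand-ins, and nothing here is checked statically as it would be in Encore; the sketch also assumes a single thread of control, so the read and the nullification are not interleaved with anything else.

```python
class Var:
    """A mutable variable holding an exclusive reference, or None."""

    def __init__(self, value=None):
        self.value = value


def consume(var):
    # Read the variable and nullify it, so that no alias is left behind.
    value, var.value = var.value, None
    return value


source = Var(["payload"])
received = consume(source)  # ownership moves from source to received
```

After the call, `source` no longer refers to the object, so the holder of `received` has the only reference to it.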

The Get capability overlaps with the others, but the requirement on the field first is different: it considers the field immutable and its type stable through the Iterator capability (cf. Fig. 5). As the Get capability’s state overlaps with the other capabilities, their composition must be in the form of a disjunction.

Inside the Get trait, the list will not change—the first field cannot be reassigned and the Iterator type does not allow changes to the chain of links. The Get trait however is able to perform reverse borrowing, which means it is allowed to read exclusive capabilities on the heap non-destructively and return them, as long as they remain stack bound. The stack-bound reference is marked by a type wrapper, such as S(P).

The link capabilities used to construct the list are shown in Fig. 5; they are analogous to the capabilities in List, and are included for completeness.

Finally, Fig. 6 shows the code for unpacking a list of WeakPairs into two logical, stable lists of cells that can be operated on in parallel. The stable capability allows multiple (in this case two) active objects to share part of the list structure with a promise that the list will not change while they are looking.

On a call to start() on a Worker, the list of WeakPairs is split into two in two steps. Step one (lines 10–11) turns the List disjunction into a conjunction by jailing the Add and Del components which prevents their use until the list is reassembled again on line 35. Step two (line 12) turns the iterator into two by unpacking the pair into two cells.

The jail construct is used to temporarily render part of a disjunction inaccessible. In the example, the disjunction Add \(\mathop {\oplus }\) Del \(\mathop {\oplus }\) Get is turned into a conjunction, joined by \(\mathop {\otimes }\), of the jailed Add \(\mathop {\oplus }\) Del component and Get. The latter type allows unpacking the exclusive reference into two, but since one cannot be used while it is jailed, the exclusivity of the referenced object is preserved.

The lists of cells are passed to two workers (itself and other) that perform some work, before passing the data back for reassembly (line 32).

11 Examples

A good way to get a grip on a new programming language is to study how it is applied in larger programs. To this end, three Encore programs, implementing a thread ring (Sect. 11.1), a parallel prime sieve (Sect. 11.2), and a graph generator following the preferential attachment algorithm (Sect. 11.3), are presented.

11.1 Example: Thread Ring

Thread Ring is a benchmark for exercising the message passing and scheduling logic of parallel/concurrent programming languages. The program is completely sequential, but deceptively parallel. The corresponding Encore program (Fig. 7) creates 503 active objects, links them forming a ring and passes a message containing the remaining number of hops to be performed from one active object to the next. When an active object receives a message containing 0, it prints its own id and the program finishes.

In this example, the active objects forming the ring are represented by the class Worker, which has the fields id, for the worker’s id, and next, for the next active object in the ring. Method init is the constructor and the ring is set up using method setNext. The method run receives the number of remaining hops and checks whether this is larger than 0. If it is, it sends an asynchronous message to the next active object with the number of remaining hops decremented by 1. Otherwise, the active object has finished and prints its id.
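Since the benchmark is sequential at heart, its logic can be simulated with a single message queue. The following Python sketch mirrors the description above but is not the program of Fig. 7: the function `thread_ring`, the explicit `mailbox`, and the choice to number workers from 1 and to hand the first message to worker 1 are assumptions made for illustration.

```python
from collections import deque


class Worker:
    def __init__(self, id):
        self.id = id
        self.next = None

    def set_next(self, nxt):
        self.next = nxt

    def run(self, hops, mailbox, result):
        if hops > 0:
            # Asynchronous send: enqueue a message for the next worker.
            mailbox.append((self.next, hops - 1))
        else:
            # Finished: record the id instead of printing it.
            result.append(self.id)


def thread_ring(n_workers, hops):
    workers = [Worker(i) for i in range(1, n_workers + 1)]
    for i, w in enumerate(workers):
        w.set_next(workers[(i + 1) % n_workers])  # close the ring

    mailbox = deque([(workers[0], hops)])  # first message goes to worker 1
    result = []
    while mailbox:
        target, remaining = mailbox.popleft()
        target.run(remaining, mailbox, result)
    return result[0]
```

Under these assumptions the worker that receives 0 is the one at offset `hops % n_workers` from the first worker, so `thread_ring(503, 1000)` returns 498.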

11.2 Example: Prime Sieve

This example considers an implementation of the parallel Sieve of Eratosthenes in Encore. Recall that the Sieve works by filtering out all non-trivial multiples of 2, 3, 5, etc., thereby revealing the next prime, which is then used for further filtering. The parallelisation is straightforward: one active object finds all primes in \([2,\sqrt{N}]\) and uses \(M\) filter objects to cancel out all non-primes in (chunks of) the interval \([\sqrt{N},N]\). An overview of the program is found in Fig. 8 and the code is spread over Figs. 9, 10, 11 and 12. When each filter object finally receives a “done” message, it scans its range for remaining (prime) numbers and reports these to a special reporter object that keeps a tally of the total number of primes found.

Fig. 8. Overview of the parallel prime sieve. The root object finds all primes in \([2,\sqrt{N}]\) and broadcasts these to filter objects that cancel all multiples of these in some ranges. When a filter receives the final “done” message, it will scan its range for remaining (prime) numbers and report these to an object that keeps a tally.

The listing of the prime sieve program starts by importing libraries. The most important library component is a bit vector data type, implemented as a thin Encore wrapper around a few lines of C to flip individual bits in a vector. Figure 9 shows the class of the reporter object that collects and aggregates the results of all filter active objects. When it is created, it is told how many candidates are considered (e.g., all the primes in the first 1 billion natural numbers), and as every filter reports in, it reports the number of primes found in the number of candidates considered.

The main logic of the program happens in the Filter class. The filter objects form a binary tree, each covering a certain range of the candidate numbers considered. The lack of a math library requires a home-rolled power function (pow() below) and an embedded C-level sqrt() function (Lines 105–106).

The main filter calls the found_prime() function with a prime number. This causes the program to propagate the number found to its children (line 77). This allows them to process the number in parallel with the active object doing a more lengthy operation in cancel_one(), namely iterating over its bit vector and cancelling out all multiples of the found prime.

Once the main active object has found all the primes up to \(\sqrt{N}\), it calls root_done() which is propagated in a similar fashion as found_prime(). Finally, the done() method is called on each filter active object, which scans the bit vector for any remaining numbers that have not been cancelled out. Those are the prime numbers which are sent to the reporter.
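The overall structure (a root sieve up to \(\sqrt{N}\), followed by independent filters over chunks of the remaining interval) can be sketched in Python. This is a simplified illustration and not the program of Figs. 9 to 12: the filters here form a flat list rather than a binary tree, primes are handed over all at once rather than one message at a time, and the names `small_primes`, `filter_range` and `count_primes` are hypothetical. Python threads also do not give real CPU parallelism; the point is only the decomposition.

```python
from concurrent.futures import ThreadPoolExecutor
from math import isqrt


def small_primes(limit):
    """Root object: sequential sieve for all primes in [2, limit]."""
    flags = [True] * (limit + 1)
    primes = []
    for p in range(2, limit + 1):
        if flags[p]:
            primes.append(p)
            for m in range(p * p, limit + 1, p):
                flags[m] = False
    return primes


def filter_range(lo, hi, primes):
    """One filter: cancel multiples in [lo, hi) and count the survivors."""
    flags = [True] * (hi - lo)
    for p in primes:
        first = ((lo + p - 1) // p) * p       # first multiple of p >= lo
        for m in range(max(p * p, first), hi, p):
            flags[m - lo] = False
    return sum(flags)                          # remaining numbers are prime


def count_primes(n, n_filters=4):
    root = isqrt(n)
    primes = small_primes(root)
    lo = root + 1
    chunk = max(1, -(-(n + 1 - lo) // n_filters))  # ceiling division
    ranges = [(a, min(a + chunk, n + 1)) for a in range(lo, n + 1, chunk)]
    with ThreadPoolExecutor(max_workers=n_filters) as pool:
        counts = pool.map(lambda r: filter_range(r[0], r[1], primes), ranges)
        # The sum plays the role of the reporter object's tally.
        return len(primes) + sum(counts)
```

For example, `count_primes(100)` returns 25, the number of primes below 100.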

11.3 Example: Preferential Attachment

Preferential attachment is a model of graph construction that produces graphs whose node degrees have a power law distribution. Such graphs model a number of interesting phenomena such as the growth of the World Wide Web or social networks [3, 6].

The underlying idea of preferential attachment is that nodes are added incrementally to a graph by establishing connections with existing nodes, such that edges are added from each new node to a random existing node with probability proportional to the degree distribution of the existing nodes. Consequently, the better connected a node is, the higher the chance new nodes will be connected to it (thereby increasing the chance in the future that more new nodes will be connected to it).

The preferential attachment algorithm is based on two parameters: \(n\), the total number of nodes in the final graph, and \(k\), the number of unique edges connecting each newly added node to the existing graph. A sequential algorithm for preferential attachment is:

1. Create a fully connected graph of size \(k\) (the clique). This step is completely deterministic, and all the nodes in the initial graph will be equally likely to be connected to by new nodes.

2. For \(i=k+1,\ldots ,n\), add a new node \(n_i\), and randomly select \(k\) distinct nodes from \(n_1,\dots ,n_{i-1}\) with probability proportional to the degree of the selected node and add the edges from \(n_i\) to the selected nodes to the graph.

One challenge is handling the probabilities correctly. This can be done by storing the edges in an array of size \(\approx n\times k\), where every pair of adjacent elements in the array represents an edge. As an example, consider the following graph and its encoding.

The number of times a node appears in this array divided by the size of the array is precisely the required probability of selecting the node. Thus, when adding new edges, performing a uniform random selection from this array is sufficient to select target nodes.
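The sequential algorithm and the array encoding described above can be sketched in Python as follows. This is only an illustrative sketch, not the Encore implementation from the paper: the function name `preferential_attachment`, the `seed` parameter, and the choice to store both endpoints of every edge are assumptions made for the example.

```python
import random

def preferential_attachment(n, k, seed=0):
    """Return a flat edge array; elements 2j and 2j+1 are the endpoints of edge j.

    Hypothetical helper: nodes are numbered 1..n and k >= 2 is assumed so that
    the initial clique contributes at least one edge to sample from.
    """
    if k < 2 or n < k:
        raise ValueError("this sketch assumes 2 <= k <= n")
    rng = random.Random(seed)
    edges = []
    # Step 1: fully connected clique on nodes 1..k (deterministic).
    for u in range(1, k + 1):
        for v in range(u + 1, k + 1):
            edges += [u, v]
    # Step 2: attach each new node to k distinct existing nodes.
    for i in range(k + 1, n + 1):
        limit = len(edges)  # only positions written before node i was added
        targets = set()
        while len(targets) < k:
            # A uniform pick from the array selects a node with probability
            # proportional to its degree; a duplicate is simply drawn again.
            targets.add(edges[rng.randrange(limit)])
        for t in sorted(targets):
            edges += [i, t]
    return edges
```

Because a node with degree \(d\) occupies \(d\) positions in the array, no explicit degree table or weighted sampling is needed.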

In the implementation a simple optimisation is made. Half of the edge information is statically known—that is (ignoring the clique), for each \(n>k\), the array will look like the following:

The indices where the edges for node \(n\) will be stored can be calculated in advance, and thus these occurrences of \(n\) need not be stored, and the amount of space required can be halved.
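One way to picture this optimisation is as a lookup into a virtual full array in which only the targets are actually stored. The sketch below is an assumption about the layout, not the paper's code: it supposes that the clique is stored in full, that every later edge occupies a (source, target) pair of adjacent positions, and that the \(k\) edges of each node are contiguous. The names `node_at`, `clique`, and `targets` are hypothetical.

```python
def node_at(index, k, clique, targets):
    """Return the node at position `index` of the virtual full edge array.

    clique:  flat array holding both endpoints of every clique edge
    targets: stored targets only, k entries per node added after the clique
    """
    c = len(clique)
    if index < c:
        return clique[index]
    # e is the number of the edge past the clique; odd positions hold targets.
    e, is_target = divmod(index - c, 2)
    if is_target:
        return targets[e]
    # Even positions hold the source, which is implied by the position alone.
    return k + 1 + e // k

# Tiny example with k = 2: node 3 connects to 1 and 2, node 4 to 1 and 3.
clique = [1, 2]
targets = [1, 2, 1, 3]
full = [node_at(i, 2, clique, targets) for i in range(len(clique) + 2 * len(targets))]
```

Under these assumptions a uniform draw over the virtual indices behaves like a uniform draw over the full array, while only half of the non-clique entries are kept in memory.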

Parallelising preferential attachment is non-trivial due to the inherent temporality in the problem: the correctness (with respect to distinctness) of all random choices for an addition depends on the values selected for earlier nodes. However, even though a node may not yet appear in some position in the array, it is possible to compute in parallel a candidate for the desired edges of all future nodes. These candidates can then gradually be fulfilled (out of order), and the main challenge is ensuring that distinctness of edges is preserved. This is done by checking whenever new edges are added and randomly selecting again when a duplicate edge is added.

The naive implementation shown here attempts to parallelise the algorithm by creating \(A\) active objects each responsible for the edges of some nodes in the graph. Every active object proceeds according to the algorithm above, but with non-overlapping start and stop indexes. If a random choice picks an index that is not yet filled in, a message is sent to the active object that owns that part of the array with a request to be notified when that information becomes available.

The requirement that edges are distinct needs to be checked whenever a new edge is added. If a duplicate is found, the algorithm just picks another random index. With reasonably large graphs (say 1 million nodes with 20 edges each), duplicate edges are rare, but the scanning is still necessary. This check is more costly in the parallel implementation than in the sequential one: in the sequential algorithm all edges are available at the time the test for duplicates is made, which is not the case in the parallel algorithm.

Figure 13 shows a high-level overview of the implementation. The colour-coded workers own the write rights to the equi-coloured part of the array. A green arrow denotes read rights, a red arrow denotes write rights. The middle worker attempts to read the edge at index 3 in the array, which is not yet determined. This prompts a request to the (left) worker that owns the array chunk to provide this information once the requested value becomes available. Once this answer is provided, the middle active object writes this edge into the correct place, provided it is not a duplicate, and forwards the result to any active object that has requested it before this point.
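The request-and-forward protocol can be illustrated with a single-threaded Python simulation of the message flow. This is a rough sketch under several assumptions and is not the Encore code of Appendix A: ownership of array chunks by separate workers is not modelled, only targets are stored (sources are implied by position), \(k \ge 2\) is assumed, and the names `simulate`, `waiters`, and `work` are hypothetical.

```python
import random
from collections import defaultdict, deque

def simulate(n, k, seed=0):
    rng = random.Random(seed)
    clique = [v for a in range(1, k + 1) for b in range(a + 1, k + 1) for v in (a, b)]
    c = len(clique)
    targets = [None] * ((n - k) * k)   # slot s belongs to node k + 1 + s // k
    waiters = defaultdict(list)        # unfilled slot -> slots waiting for its value
    work = deque()                     # pending (slot, candidate) messages

    def draw(s):
        """Pick a random earlier position; resolve it now or wait for its value."""
        limit = c + 2 * k * (s // k)   # positions before this node's own edges
        idx = rng.randrange(limit)
        if idx < c:
            work.append((s, clique[idx]))
            return
        e, is_target = divmod(idx - c, 2)
        if not is_target:
            work.append((s, k + 1 + e // k))  # source node is implied by position
        elif targets[e] is not None:
            work.append((s, targets[e]))
        else:
            waiters[e].append(s)              # ask to be notified once slot e is filled

    order = list(range(len(targets)))
    rng.shuffle(order)                 # slots are attempted out of order
    for s in order:
        draw(s)
    while work:
        s, cand = work.popleft()
        first = s - s % k              # first slot of the node that owns s
        if any(targets[t] == cand for t in range(first, first + k)):
            draw(s)                    # duplicate edge: pick another random index
            continue
        targets[s] = cand
        for w in waiters.pop(s, []):
            work.append((w, cand))     # forward the answer to every requester
    return clique, targets
```

Every request points to a slot of a strictly earlier node, so the waiting relation is acyclic and all slots are eventually filled, even though they are resolved out of order.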

The Encore implementation of preferential attachment is fairly long and can be found in Appendix A.

12 Formal Semantics

This section presents the semantics of a fragment of Encore via a calculus called \(\mu \) Encore. The main aim of \(\mu \) Encore is to formalise the proposed concurrency model of Encore, and thereby establish a formal basis for the development of Encore and for research on type-based optimisations in the UpScale project. \(\mu \) Encore maintains a strong notion of locality by ensuring that there is no shared data between different active objects in the system.

12.1 The Syntax of \(\mu \) Encore

The formal syntax of \(\mu \) Encore is given in Fig. 14. A program \(P\) consists of interface and class declarations followed by an expression \(e\) which acts as the main block (Footnote 8). The types \(T\) include Booleans \(\mathsf{bool}\) (ignoring other primitive types), a type \(\mathsf{void}\) (for the () value), type \(\mathsf{Fut}\ T\) for futures, interfaces \(I\), passive classes \(C\), and function types \(\overline{T}\rightarrow T\). In \(\mu \) Encore, objects can be active or passive. Active objects have an independent thread of execution. To store or transfer local data, an active object uses passive objects. For this reason, interfaces \( IF \) and classes \( CL \) can be declared as passive. In addition, an interface has a name \(I\) and method signatures \( Sg \), and a class has a name \(C\), fields \(\overline{x}\) of types \(\overline{T}\), and methods \(\overline{M}\). A method signature \( Sg \) declares the return type \(T\) of a method with name \(m\) and formal parameters \(\overline{x}\) of types \(\overline{T}\). \(M\) defines a method with signature \( Sg \) and expressions \(e\). When constructing a new object of a class \(C\) by a statement new \(C(\overline{e})\), the new object may be either active or passive (depending on the class).

Expressions include variables (local variables and fields of objects), values, sequential composition \(e_1;e_2\), assignment, skip (to make semantics easier to write), if, let, and while constructs. Expressions may access the fields of an object, the method’s formal parameters and the fields of other objects. Values \(v\) are expressions in normal form, and let-expressions introduce local variables. Cooperative scheduling in \(\mu \) Encore is achieved by explicitly suspending the execution of the active stack. The statement suspend unconditionally suspends the execution of the active method invocation and moves it to the queue. The statement \({{\mathbf {\mathtt{{await}}}}}\ e\) conditionally suspends the execution; the expression \(e\) evaluates to a future \(f\), and execution is suspended only if \(f\) has not been fulfilled.

Communication and synchronisation are decoupled in \(\mu \) Encore. In contrast to Encore, \(\mu \) Encore makes explicit in the syntax the two kinds of method call: \(e\diamond m(\overline{e})\) corresponds to a synchronous call and \(e!m(\overline{e})\) corresponds to an asynchronous call. Communication between active objects is based on asynchronous method calls \(o!m(\overline{e})\) whose return type is \(\mathsf{Fut}\ T\), where \(T\) corresponds to the return type of the called method \(m\). Here, \(o\) is an object expression, \(m\) a method name, and \(\overline{e}\) are expressions providing actual parameter values for the method invocation. The result of such a call is a future that will hold the eventual result of the method call. The caller may proceed with its execution without blocking. Two operations on futures control synchronisation in \(\mu \) Encore. The first is await \(f\), which was described above. The second is get \(f\), which retrieves the value stored in the future when it is available, blocking the active object until it is. Futures are first-class citizens of \(\mu \) Encore. Method calls on passive objects may be synchronous or asynchronous. Synchronous method calls \(o\diamond m(\overline{e})\) have a Java-like reentrant semantics. Self calls are written \({{\mathbf {\mathtt{{this}}}}}!m(\overline{e})\) or \({{\mathbf {\mathtt{{this}}}}}\diamond m(\overline{e})\).
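The decoupling of communication and synchronisation can be loosely illustrated with Python's standard `concurrent.futures` module. This is only an analogy, not \(\mu \) Encore: the class `Counter` is a hypothetical stand-in for an active object, a single worker thread plays the role of its independent thread of execution, and `result()` plays the role of get. Cooperative suspension with await has no direct counterpart in this sketch.

```python
from concurrent.futures import ThreadPoolExecutor

class Counter:
    """Stand-in for an active object: one worker thread serialises its calls."""

    def __init__(self):
        self._pool = ThreadPoolExecutor(max_workers=1)
        self._value = 0

    def _add(self, n):
        # Runs on the object's own thread, so no lock is needed here.
        self._value += n
        return self._value

    def add(self, n):
        # Asynchronous call: returns a future immediately, without blocking.
        return self._pool.submit(self._add, n)

    def shutdown(self):
        self._pool.shutdown()

counter = Counter()
f1 = counter.add(2)    # the caller proceeds without waiting
f2 = counter.add(3)
total = f2.result()    # like get: block until the future is fulfilled
first = f1.result()
counter.shutdown()
```

The futures `f1` and `f2` are ordinary values here as well: they can be stored, passed around, and synchronised on later, which is the sense in which communication and synchronisation are separate steps.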

Anonymous functions and future chaining. Anonymous functions are available in \(\mu \) Encore in the form of spores [26]. A spore \({{\mathbf {\mathtt{{spore}}}}}\ \overline{x}'=\overline{e}'\ {{\mathbf {\mathtt{{in}}}}}\ \lambda (\overline{x}:\overline{T})\rightarrow e:T\) is a form of closure in which the dependencies on local state \(\overline{e}'\) are made explicit; i.e., the body \(e\) of the lambda-function does not refer directly to variables outside the spore. Spores are evaluated to create closures by binding the variables \(\overline{x}'\) to concrete values which are controlled by the closure. This ensures race-free execution even when the body \(e\) is not pure. The closure is evaluated by function application \(e(\overline{e})\) where the arguments \(\overline{e}\) are bound to the variables \(\overline{x}\) of the spore, before evaluating the function body \(e\). Closures are first class values. Future chaining \(e_1\leadsto e_2\) allows the execution of a closure \(e_2\) to be spawned into a parallel task, triggered by the fulfilment of a future \(e_1\).
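The idea behind spores and future chaining can be approximated in Python as follows. This is a loose sketch under stated assumptions: the helpers `spore` and `chain` are hypothetical, `copy.deepcopy` stands in for the cloning of captured state, and the chained closure runs in whichever thread fulfils the future rather than in a separately spawned parallel task.

```python
import copy
from concurrent.futures import Future

def spore(captured, body):
    """Build a closure whose only access to outside state is a private copy."""
    env = copy.deepcopy(captured)   # the closure now controls its captured values
    return lambda *args: body(env, *args)

def chain(fut, closure):
    """Return a new future fulfilled with closure(value of fut)."""
    out = Future()
    # The callback fires once fut is fulfilled (error handling is omitted).
    fut.add_done_callback(lambda f: out.set_result(closure(f.result())))
    return out

offsets = [10]
add_offset = spore({"offsets": offsets}, lambda env, x: x + env["offsets"][0])
offsets[0] = 99          # a later mutation does not reach the closure's copy

source = Future()
result = chain(source, add_offset)
source.set_result(1)     # fulfilling the source triggers the chained closure
```

Because the closure body only sees `env`, it cannot race with the code that later mutates `offsets`, which mirrors the reason the text gives for making dependencies explicit.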

12.2 Typing of \(\mu \) Encore

Typing judgements are of the form \(\varGamma \vdash e:T\), where the typing context \(\varGamma \) maps variables \(x\) to their types. (For typing the constructs of the dynamic semantics, \(\varGamma \) will be extended with values and their types.) Write \(\varGamma \vdash \overline{e}:\overline{T}\) to denote that \(\varGamma \vdash e_i:T_i\) for \(1\le i\le |\overline{e}|\), assuming that \(|\overline{e}|=|\overline{T}|\). Types are not assigned to method definitions, class definitions and the program itself; the corresponding type judgements simply express that the constructs are internally well-typed by a tag “ok”.

Auxiliary definitions. Define function \(\textit{typeOf}(T,m)\) such that: (1) \(\textit{typeOf}(T,m)\) \(=\overline{T}\rightarrow T'\) if the method \(m\) is defined with signature \(\overline{T}\rightarrow T'\) in the class or interface \(T\); (2) \(\textit{typeOf}(T,x)=T'\) if a class \(T\) has a field \(x\) declared with type \(T'\); and (3) \(\textit{typeOf}(C)=\overline{T}\rightarrow C\) where \(\overline{T}\) are the types of the constructor arguments. Further define a predicate \(active(T)\) to be true for all active classes and interfaces \(T\) and false for all passive classes and interfaces. By extension, let \(active(o) = active(C)\) if \(o\) is an instance of \(C\).

Subtyping. Let class names \(C\) of active classes also be types for the type analysis and let \(\preceq \) be the smallest reflexive and transitive relation such that

- \(T\preceq \mathsf{void}\) for all \(T\),
- \(C\preceq I\iff \forall m\in I \cdot \textit{typeOf}(C,m)\preceq \textit{typeOf}(I,m)\),
- \(\overline{T}\preceq \overline{T}'\iff n=length(\overline{T})=length(\overline{T}')\) and \(T_i\preceq T'_i\) for \(1\le i\le n\),
- \(T_1\rightarrow T_2 \preceq T_1'\rightarrow T_2' \iff T_1'\preceq T_1 \wedge T_2 \preceq T_2'\).

A type \(T\) is a subtype of \(T'\) if \(T\preceq T'\). The typing system of \(\mu \) Encore is given in Fig. 15 and is mostly standard. Note that rule T-Spore enforces that all dependencies on the local state are explicitly declared in the spore.
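The subtyping rules can be read as a recursive check, sketched here in Python. The encoding is an assumption made for illustration: base types and class or interface names are strings, a function type is a tuple `("fun", argument_types, return_type)`, and the dictionary `methods` plays the role of \(\textit{typeOf}\) for method signatures. The sketch does not compute a transitive closure explicitly and assumes signatures are not mutually recursive.

```python
def subtype(s, t, methods):
    """Return True if s is a subtype of t under the rules listed above."""
    if s == t or t == "void":              # reflexivity, and T <= void for all T
        return True
    if isinstance(s, tuple) and isinstance(t, tuple):
        _, s_args, s_ret = s
        _, t_args, t_ret = t
        return (len(s_args) == len(t_args)
                # arguments are contravariant, results are covariant
                and all(subtype(b, a, methods) for a, b in zip(s_args, t_args))
                and subtype(s_ret, t_ret, methods))
    if isinstance(s, str) and isinstance(t, str) and s in methods and t in methods:
        # C <= I iff every method of I is matched by a method of C
        return all(m in methods[s] and subtype(methods[s][m], sig, methods)
                   for m, sig in methods[t].items())
    return False

# Hypothetical interface Printer and class Console.
methods = {
    "Printer": {"show": ("fun", ("bool",), "void")},
    "Console": {"show": ("fun", ("bool",), "bool"),
                "clear": ("fun", (), "void")},
}
console_is_printer = subtype("Console", "Printer", methods)
printer_is_console = subtype("Printer", "Console", methods)
```

In this example `Console` provides `show` with a more specific return type, so it is accepted where a `Printer` is expected, while the reverse fails because `Printer` lacks `clear`.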

12.3 Semantics of \(\mu \) Encore

The semantics of \(\mu \) Encore is presented as an operational semantics in a context-reduction style [15], using a multi-set of terms to model parallelism (from rewriting logic [25]).

Run-Time Configurations. The run-time syntax of \(\mu \) Encore is given in Fig. 16. A configuration \( cn \) is a multiset of active objects (plus a local heap of passive objects per active object), method invocation messages and futures. The associative and commutative union operator on configurations is denoted by whitespace and the empty configuration by \(\varepsilon \).

An active object is given as a term \(g( active , hp ,q)\) where \( active \) is the method invocation that is executing or \( idle \) if no method invocation is active, \( hp \) a multiset of objects, and \(q\) is a queue of suspended (or not yet started) method invocations.

An object \( obj \) is a term \(o(\sigma )\) where \(o\) is the object’s identifier and \(\sigma \) is an assignment of the object’s fields to values. Concatenation of such assignments is denoted \(\sigma _1\circ \sigma _2\).

In an invocation message \(m(o,\overline{v}, hp , f)\), \(m\) is the method name, \(o\) the callee, \(\overline{v}\) the actual parameter values, \( hp \) a multiset of objects (representing the data transferred with the message), and \(f\) the future that will hold the eventual result. For simplicity, the futures of the system are represented as a mapping \( fut \) from future identifiers \(f\) to either values \(v\) or \(\bot \) for unfulfilled futures.

The queue \(q\) of an active object is a sequence of method invocations (\( task \)). A task is a term \(t( fr \circ sq )\) that captures the state of a method invocation as a sequence of stack frames \(\{\sigma | e\}\) or \(\{\sigma | E\}\) (where \(E\) is an evaluation context, defined in Fig. 18), each consisting of bindings for local variables plus either the expression being run for the active stack frame or a continuation (represented as an evaluation context) for blocked stack frames. The term eos indicates the bottom of the stack. Local variables also include a binding for this, the target of the method invocation. The bottommost stack frame also includes a binding for the variable \(\textit{destiny}\) to the future in which the result of the current call will be stored.

Expressions \(e\) are extended with a polling operation \(e?\) on futures that evaluates to \(\mathtt{true}\) if the future has been fulfilled or \(\mathtt{false}\) otherwise. Values \(v\) are extended with identifiers for the dynamically created objects and futures, and with closures. A closure is a dynamically created value obtained by reducing a spore-expression (after sheep cloning the local state). Further assume for simplicity that \(\textit{default}(T)\) denotes a default value of type \(T\); e.g., null for interface and class types. Also, classes are not represented explicitly in the semantics, but may be seen as static tables of field types and method definitions. Finally, the run-time type \(\mathsf{Closure}\) marks the run-time representation of a closure. To avoid introducing too many new run-time constructs, closures are represented as active objects with an empty queue and no fields.

The initial configuration of a program reflects its main block. Let \(o\) be an object identifier. For a program with main block \(e\) the initial configuration consists of a single dummy active object with an empty queue and a task executing the main block itself: \(g(t(\{ this \mapsto \langle \mathsf{Closure},o\rangle |e\}\circ \text {eos}),o(\varepsilon ),\emptyset )\).