Abstract

Experimental recordings of cortical activity often exhibit narrowband oscillations, at various center frequencies ranging in the order of 1–200 Hz. Many neuronal mechanisms are known to give rise to oscillations, but here we focus on a population effect known as sparsely synchronised oscillations. In this effect, individual neurons in a cortical network fire irregularly at slow average spike rates (1–10 Hz), but the population spike rate oscillates at gamma frequencies (greater than 40 Hz) in response to spike bombardment from the thalamus. These cortical networks form recurrent (feedback) synapses. Here we describe a model of sparsely synchronized population oscillations using the language of feedback control engineering, where we treat spiking as noisy feedback. We show, using a biologically realistic model of synaptic current that includes a delayed response to inputs, that the collective behavior of the neurons in the network is like a distributed bandpass filter acting on the network inputs. Consequently, the population response has the character of narrowband random noise, and therefore has an envelope and instantaneous frequency with lowpass characteristics. Given that there exist biologically plausible neuronal mechanisms for demodulating the envelope and instantaneous frequency, we suggest there is potential for similar effects to be exploited in nanoscale electronics implementations of engineered communications receivers.


1 Introduction and Background

Neuronal information processing relies on the dynamical electrical properties of a neuron’s membrane, such as its conductance, capacitance, and the various ionic currents that flow across it through ion channels, and which give rise to a time varying membrane potential. These currents are constantly changing over time, due to external input into a neuron at synaptic junctions. This external input occurs when an adjacent neuron ‘spikes,’ i.e. when its membrane potential reaches a large enough size to cause a short duration but large amplitude pulse, called an action potential, to propagate along cable-like structures called axons. When the action potential reaches the end of an axon it can cause chemical neurotransmitters to be released and then diffuse across a gap between neurons called a synapse. These neurotransmitters cause a change in conductance in the membrane of the neuron on the far side of the synapse, thus resulting in an ionic current flow across it [1].

Given that a neuron’s membrane can be modelled in terms of currents, conductances and capacitance, it is no surprise that equivalent electrical circuits for them can be studied using frequency domain methods favoured in electronic engineering [2] or in the analysis of stochastic noise [3]. In particular, neuronal membranes can be studied as if they were electrical filters, and models of neuronal low, high and bandpass filters, have been discussed in terms of neuronal ‘resonance’ [4]. There are several biophysical mechanisms for achieving this, as reviewed by [5]. Examples of single cell mechanisms include slow potassium ion currents [6], synaptic short-term plasticity [7], and subthreshold membrane oscillations [1]. Mechanisms due to interactions between cells also can cause band-pass filtering [8], while the basilar membrane in the inner ear provides mechanical bandpass filtering of sounds prior to transduction by inner hair cells [9].

In this paper we focus on neurons in a population that each achieve a bandpass filtering characteristic solely through delayed distributed feedback, rather than their intrinsic properties. Such a network has previously been studied and understood using approaches favoured in nonlinear physics [10] in order to explain the phenomenon of ‘sparsely synchronised population oscillations’ [11, 12]. These oscillations are often observed in recordings of the overall electrical field produced in small volumes of cortical region V1 when an experimental animal is awake and has their visual field stimulated [13, 14]. The label ‘sparse synchronisation’ describes the fact that individual neurons in the region spike in an irregular fashion with an average rate much slower than the frequency of the population oscillation.

The novelty in the approach presented here is to recast the problem as one where it is assumed that the network’s function is to act like a multivariate feedback system operating close to instability, thus producing a bandpass filter like response. This perspective leads us to posit that neuronal population spike rates, in the context of our assumption, can be treated as both a noisy version of a feedback control signal, as well as a compressed representation of the synaptic conductance or current.

Surprisingly, we find that the central assumption employed in electronic engineering design, namely that electrical dynamics is governed by linear differential equations, can also be employed for studying filtering in such a population of neurons, despite the obviously highly nonlinear behaviour that gives rise to the crucial aspect of ‘spiking.’

The remainder of this paper articulates these ideas as follows.

- Section 2 describes how a single neuron with specified linear synaptic dynamics would ideally implement negative feedback, with gain, in order to produce a bandpass response.
- Section 3 introduces the concept of distributed feedback within a population of redundant neurons, as a means of implementing feedback gain without requiring active amplifiers. This Section also shows how distributed feedback can have the additional benefit of reducing additive noise on feedback signals via the averaging effects inherent in redundancy. The Section next extends the analysis of feedback noise by assuming that feedback is only possible via quantized signals. We study this, as quantization is highly analogous to spiking in the real cortical network. It is shown that performance close to the ideal bandpass filter response is readily achievable by a population of neurons with distributed quantized feedback, particularly in the presence of stochastic noise.
- Section 4 discusses the potential for exploiting effects like those discussed here in bio-inspired engineering, such as in frequency demodulation.

Unlike the model of [10], here we do not consider sparsely connected networks where neurons only rarely and asynchronously contribute feedback. This is an extension left for future work, as sparse connectivity has a significant impact on stability analysis. However, the work discussed here is expected to be readily extendible to the sparse connectivity scenario, as well as to randomly distributed delays.

2 Cortical Synapses as Filters: Open-Loop and Feedback Responses

2.1 Low Pass Filtering Due to Synaptic Current Dynamics

Our starting point is the so-called ‘difference of two exponentials’ model that describes how current flow across a neuron’s membrane changes over time in response to a single synaptic event (i.e. a spike arrival). This model has been used many times in computational neuroscience. However, we study a variation of the model that includes a biophysically realistic delayed response, as in [10, 13], i.e. the neuron responds to the arrival of a presynaptic spike only after a delay of \(\tau _l>0\) ms.

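The model for the change in current in response to a single incoming spike at time \(t=0\) is parameterised by two constants, the rise time \(\tau _r\) and the fall time \(\tau _d\), where \(\tau _r<\tau _d\), and is expressed as

\[ i(t) = \frac{1}{\tau _d-\tau _r}\left[ \exp \left( -\frac{t-\tau _l}{\tau _d}\right) -\exp \left( -\frac{t-\tau _l}{\tau _r}\right) \right] u(t-\tau _l), \tag{1} \]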

where \(u(\cdot )\) is the Heaviside unit step function. It can easily be verified that Eq. (1) is the solution to a pair of first order differential equations, which can be rewritten compactly in state-space form as

\[ \frac{d\mathbf{z}}{dt} = A\mathbf{z} + \mathbf{u}, \tag{2} \]

where \(\mathbf{u} = [0,\delta (t-\tau _l)/\tau _r]^T\) is the input vector, \(\mathbf{z}=[z_1(t),z_2(t)]^T\) is the state vector, \(A=[-1/\tau _d,1/\tau _d;0,-1/\tau _r]\), and the synaptic current is \(i(t)=z_1(t)\). We write the input as \(\delta (t-\tau _l)\), which means we model the single input spike as a Dirac delta function, i.e. a single event (the spike arrival) occurs at time \(t=0\), but its influence is seen only from time \(t=\tau _l\) onwards.

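Equation (1) is also the solution to the following second order differential equation

\[ \tau _r\tau _d\frac{d^2i(t)}{dt^2} + (\tau _r+\tau _d)\frac{di(t)}{dt} + i(t) = x(t), \tag{3} \]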

where \(x(t)=\delta (t-\tau _l)\). Because there is no feedback in this system, we can for the time being ignore \(\tau _l\) when analysing the system itself: the delay can be absorbed into the input signal by letting \(x(t)=\delta (t-\tau _l)\). We can therefore consider the response of the system to arbitrary inputs \(x(t)\). The fact that \(0<\tau _r<\tau _d\) ensures that the system only has damped solutions in response to bounded inputs, as expressed in Eq. (1).

Inspection of Eq. (3) when \(x(t)=\delta (t-\tau _l)\) suggests that the current \(i(t)\) can be interpreted as the impulse response of a linear time invariant filter, after a delay of \(\tau _l\). In the language of analog filtering or feedback control system design, the transfer function [15] of the system, \(G(s)\), is given by the ratio of the Laplace transform of \(i(t)\) to the Laplace transform of \(x(t)\). For an arbitrary bounded input signal, \(x(t)\), with Laplace transform \(X(s)\), the Laplace transform of the response of the system can be written as \(Y(s)=G(s)X(s)\), and in the time domain, the response \(y(t)\) is the inverse Laplace transform of \(Y(s)\).

For the system described by Eq. (3), the transfer function is

\[ G(s) = \frac{I(s)}{X(s)} = \frac{1}{(\tau _rs+1)(\tau _ds+1)}, \tag{4} \]

which has the form of a typical ‘two pole’ analog low pass filter.

If \(x(t)\) is a stationary stochastic process with a power spectral density \(S_{xx}(\omega )\), the transfer function can also be expressed in terms of Fourier transforms by substitution of \(s=i\omega \), and it can be shown that the power spectral density of the response is related to the power spectral density of \(x(t)\) as \(S_{yy}(\omega ) = |G(i\omega )|^2S_{xx}(\omega )\). From Eq. (4), we obtain

\[ S_{yy}(\omega ) = \frac{S_{xx}(\omega )}{(1+\tau _r^2\omega ^2)(1+\tau _d^2\omega ^2)}, \tag{5} \]

and note that if \(x(t)\) is white noise (i.e. its power spectral density is constant for all frequencies) then low frequencies \(\omega \ll \frac{1}{\tau _d}\) will be reproduced at the output without attenuation, but frequencies \(\omega \gg \frac{1}{\tau _d}\) will be heavily attenuated, i.e. filtered.

Inhibitory neurons in the cortex tend to have short rise times, \(\tau _r\sim 0.5\) ms, and decay times \(\tau _d\sim 5\) ms [10], and thus the model expressed by Eq. (1) will, if isolated from feedback, enable the membrane current to encode unattenuated the frequency content of the input only up to about \(f= 1/(2\pi \tau _d) \simeq 30\) Hz.
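The corner frequency quoted above can be checked numerically. The following is a minimal sketch, assuming the open loop response is the two-pole, unit-DC-gain low pass filter \(G(s)=1/[(\tau _rs+1)(\tau _ds+1)]\) implied by the state-space description; the function and variable names are illustrative and not taken from the original work.

```python
import numpy as np

TAU_R = 0.5e-3  # rise time in seconds (value quoted in the text)
TAU_D = 5.0e-3  # decay time in seconds (value quoted in the text)

def open_loop_gain_sq(f_hz, tau_r=TAU_R, tau_d=TAU_D):
    """|G(i*omega)|^2 for the assumed two-pole low pass filter with unit DC gain."""
    omega = 2.0 * np.pi * np.asarray(f_hz, dtype=float)
    return 1.0 / ((1.0 + (omega * tau_r) ** 2) * (1.0 + (omega * tau_d) ** 2))

# Corner frequency set by the slower (decay) time constant.
f_corner = 1.0 / (2.0 * np.pi * TAU_D)

for f in (1.0, f_corner, 100.0, 1000.0):
    print(f"{f:8.1f} Hz   |G|^2 = {float(open_loop_gain_sq(f)):.4g}")
```

With these time constants the corner frequency evaluates to roughly 32 Hz, and the power gain there is close to one half, consistent with the "about 30 Hz" figure above.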

2.2 The Impact of Negative Feedback due to Inhibition, With Delays

Introducing negative feedback into a system with a low pass filtering characteristic is well known to enable the possibility of inducing either a resonant response, or unstable oscillations. When a delay is included in the feedback path, such a negative feedback system is a simple model of an inhibitory neuron that connects to itself via an autapse. Inhibitory neurons provide negative feedback because the neurotransmitters they release after spiking have an inhibitory response on the neurons they synapse with. If a neuron forms a synapse with itself, then the synaptic connection is known as an autapse [16].

Consider, for example, a system with an open loop transfer function given by \(G(s)\) and negative feedback with gain \(K\). When considering the model of Eq. (1), we must also explicitly take into account that the synaptic response to the feedback will be delayed relative to the response due to \(x(t)\). The feedback system is shown in Fig. 1, and its closed loop transfer function is given by

\[ H(s) = \frac{G(s)}{1+KG(s)e^{-s\tau _l}} = \frac{1}{(\tau _rs+1)(\tau _ds+1)+Ke^{-s\tau _l}}. \tag{6} \]

Fig. 1 Closed loop feedback system consisting of an external drive with Laplace transform \(X(s)\), that is operated on by system \(G(s)\), along with negative feedback. The output response has Laplace transform \(I(s)\), and the feedback signal has Laplace transform \(F(s)\). The feedback path consists of a delay relative to \(X(s)\), a proportional gain \(K\), and a subtraction from the input, \(X(s)\). The overall closed loop transfer function, \(H(s)\), is defined such that \(I(s)=H(s)X(s)\)

Studying the transfer function with \(s=i\omega \) enables analysis of the steady-state frequency response of the system when the input is either sinusoidal (or the sum of sinusoids) or random noise. For an input \(x(t)=A\cos {(\omega _xt+\phi )}\) with constant phase \(\phi \), the steady state response of any stable linear time invariant system with transfer function \(H(s)\) is given by \(i(t)=A|H(i\omega _x)|\cos {(\omega _x t + \phi +\arg {H(i\omega _x)})}\) [15].

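Moreover, the power spectral density (PSD) of the response, \(S_{yy}(\omega )\), can be obtained for an input with arbitrary PSD, \(S_{xx}(\omega )\), via the relationship \(S_{yy}(\omega ) = |H(i\omega )|^2S_{xx}(\omega )\). From Eq. (6) we obtain

\[ S_{yy}(\omega ) = \frac{S_{xx}(\omega )}{\left[ 1-\tau _r\tau _d\omega ^2+K\cos (\omega \tau _l)\right] ^2+\left[ (\tau _r+\tau _d)\omega -K\sin (\omega \tau _l)\right] ^2}. \tag{7} \]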

Note that \(|H(0)|^2=1/(K+1)^2\), and thus the DC value of the output is \(y_\mathrm{DC}(t) = x_\mathrm{DC}/(K+1)\), so the feedback signal will have a DC component of \(Kx_\mathrm{DC}/(K+1)\).

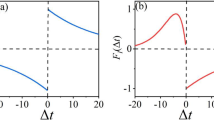

Fig. 2 a Frequency response of the closed loop negative feedback system, \(H(s)\), for \(\tau _l=0\) ms (left panel) and \(\tau _l=1\) ms (right panel). b Illustration of resonant bandpass-filter like response, and stability, for the closed loop negative feedback system with delay. The maximum value of \(K\) that provides closed loop stability is shown with a circle for each value of \(\tau _l\). The time constants are \(\tau _r=0.5\) ms, and \(\tau _d=5\) ms.

The frequency response, \(|H(i\omega )|^2\), is shown in Fig. 2a for both \(\tau _l=0\) ms and \(\tau _l=1\) ms with \(\tau _r=0.5\) ms, \(\tau _d=5\) ms and various values of \(K\). Clearly, for \(\tau _l=1\) ms, as \(K\) increases the closed loop system begins to show a bandpass filter characteristic, with a resonant frequency near 200 Hz. The maximum response and the frequency of maximum response increase with \(K\). However, there is a limit to how much \(K\) can be increased, because if it is too large, then the closed loop system becomes unstable. On the other hand, in the absence of a delay, although the system exhibits a bandpass characteristic for sufficiently large \(K\), the resonant frequency is much higher than with the delay present. Moreover, the input is highly attenuated at all frequencies with respect to the open loop system (\(K=0\)), which is not the case when \(\tau _l=1\) ms.

The closed loop system \(H(s)\) has an unstable oscillatory mode if any root of its denominator has positive real part (it may also have transient damped oscillatory modes even though \(G(s)\) does not). Given that stability depends on the roots of the denominator, whether an unstable solution exists depends on the values of both \(K\) and \(\tau _l\). There is no closed form solution for the roots, but they can be obtained numerically as a function of \(K\) and \(\tau _l\).
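One way to locate the stability boundary numerically is sketched below. It assumes the loop transfer function is \(KG(s)e^{-s\tau _l}\) with the two-pole \(G(s)\), and uses the standard gain-margin argument rather than a direct root search: the largest stable \(K\) is the reciprocal of \(|G(i\omega )|\) at the frequency where the total loop phase lag first reaches \(\pi \). This is not necessarily the numerical procedure used for Fig. 2b, and the delay values in the loop are illustrative.

```python
import math

def phase_lag(omega, tau_r, tau_d, tau_l):
    """Total loop phase lag (radians) at angular frequency omega (rad/s)."""
    return math.atan(omega * tau_r) + math.atan(omega * tau_d) + omega * tau_l

def max_stable_gain(tau_r, tau_d, tau_l, iterations=200):
    """Return (K_max, crossover frequency in Hz) for the assumed delayed loop.

    Without a delay the lag only approaches pi, so every K is stable.
    """
    if tau_l <= 0.0:
        return math.inf, math.inf
    lo, hi = 0.0, math.pi / tau_l  # the delay alone already gives a lag of pi at hi
    for _ in range(iterations):    # bisection on the monotonically increasing lag
        mid = 0.5 * (lo + hi)
        if phase_lag(mid, tau_r, tau_d, tau_l) < math.pi:
            lo = mid
        else:
            hi = mid
    omega_c = 0.5 * (lo + hi)
    # At the crossover, K_max * |G(i*omega_c)| = 1.
    k_max = math.sqrt((1 + (omega_c * tau_r) ** 2) * (1 + (omega_c * tau_d) ** 2))
    return k_max, omega_c / (2.0 * math.pi)

for tau_l_ms in (0.0, 0.5, 1.0, 2.0):  # illustrative delays in milliseconds
    k_max, f_c = max_stable_gain(0.5e-3, 5e-3, tau_l_ms * 1e-3)
    print(f"tau_l = {tau_l_ms} ms: K_max = {k_max:.2f}, crossover = {f_c:.1f} Hz")
```

For \(\tau _r=0.5\) ms, \(\tau _d=5\) ms and \(\tau _l=1\) ms this gives a maximum stable gain of about 7 with a crossover near 190 Hz.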

Note that due to the physical constraint that the time constants \(\tau _r\) and \(\tau _d\) are both positive, the open loop system, \(G(s)\) cannot have a bandpass characteristic. For the closed loop system without a delay (i.e. \(\tau _l=0\)), the system will resonate and have a bandpass filtering characteristic if \(K\) is sufficiently large, and also be stable for all \(K\). However, as suggested by Fig. 2a (left panel), the resonant frequency will be much larger than that of oscillations encountered in recordings of cortical activity, and moreover, the damping ratio, and therefore the peak response, grows very slowly with feedback gain.

These model deficiencies with respect to known biophysics are readily overcome by the inclusion of non-zero time delays. When these are included, as suggested by Fig. 2a (right panel), the closed loop transfer function can exhibit a bandpass filter characteristic with a resonant frequency at much lower (and therefore biophysically plausible) frequencies, and with a higher damping ratio.

However, non-zero delays make the closed loop system unstable when \(K\) is sufficiently large. Therefore, we will seek an appropriately small value of \(K\), such that there is a large resonant (and therefore bandpass filter like) response, but a stable system.

To illustrate the resonant or bandpass filter-like behaviour of the closed loop system, Fig. 2b shows the maximum response of \(|H(i\omega )|^2\), and the frequency of the maximum response (in hertz) as a function of \(K\), up to the maximum stable \(K\), for four values of delay, \(\tau _l\). The other time constants are \(\tau _r=0.5\) ms, and \(\tau _d=5\) ms. The figure shows that although the system is always stable for \(\tau _l=0\), it only shows a bandpass response at high frequencies, and with very large attenuation with respect to \(K=0\). As \(\tau _l\) increases, however, the frequency of the maximum response also decreases, while still enabling a gain in amplitude response with respect to the system without delay or with respect to \(K=0\) with delay.
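The trend described here can be reproduced with a short frequency sweep. This is a sketch under the assumption that the closed loop response is \(H(s)=1/[(\tau _rs+1)(\tau _ds+1)+Ke^{-s\tau _l}]\); the frequency grid and the gain values are illustrative choices.

```python
import numpy as np

def closed_loop_gain_sq(f_hz, K, tau_r=0.5e-3, tau_d=5e-3, tau_l=1e-3):
    """|H(i*omega)|^2 for the assumed delayed negative feedback loop."""
    s = 2j * np.pi * np.asarray(f_hz, dtype=float)
    denom = (tau_r * s + 1.0) * (tau_d * s + 1.0) + K * np.exp(-s * tau_l)
    return 1.0 / np.abs(denom) ** 2

freqs = np.linspace(0.0, 1000.0, 20001)  # 0.05 Hz resolution
peaks = {}
for K in (0, 2, 4, 6):
    resp = closed_loop_gain_sq(freqs, K)
    k_max = int(np.argmax(resp))
    peaks[K] = (freqs[k_max], resp[k_max])
    print(f"K = {K}: peak |H|^2 = {resp[k_max]:.3f} at {freqs[k_max]:.1f} Hz")
```

With \(K=0\) the maximum sits at DC, as expected for a low pass filter, while for \(K=6\) and \(\tau _l=1\) ms the peak moves to roughly 180 Hz and exceeds unity.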

2.3 Example Simulations

We consider \(\tau _r=0.5\) ms, \(\tau _d=5\) ms and \(\tau _l=1\) ms. With these values, it can easily be shown numerically that the closed loop system is unstable for \(K\gtrsim 7\), as illustrated in Fig. 2b. As shown in Fig. 2a, the system has a bandpass filter characteristic with a frequency of maximum response approaching \(180\,\text {Hz}\) as \(K\) approaches its maximum stable value, near \(K=7\). To illustrate the bandpass response for a non-random signal we use a swept chirp input of the form \(x(t) = \sin {(\pi kt^2)}\), which is a signal with instantaneous frequency \(f_\mathrm{i}=kt\,\text {Hz}\) that linearly increases with time.

Fig. 3 a Simulated response of the closed loop system with delay \(\tau _l=1\) ms, in response to a chirp input, for three values of feedback gain \(K\). b Simulated response of the same closed loop system, in response to white noise, for three values of \(K\).

Fig. 3a shows the input chirp when turned on after allowing transient response to a step DC input to die away. The figure also shows the results of simulations of system response, \(i(t)\), for \(K=0,\,K=2\) and \(K=6\). For \(K=6\), the closed loop gain exceeds unity in the neighbourhood of the resonant frequency, and therefore the feedback can be interpreted as amplifying frequencies within the passband. We also illustrate the bandpass response using white noise. Simulated closed loop responses are shown in Fig. 3b for three values of \(K\). When the input is white noise, the power in the system response is many times smaller than that of the input, and its peak amplitude is much smaller than transient damped oscillations, and therefore we have not shown the input noise, or the transient responses. This example shows that narrowband oscillations are clearly observable in the case of \(K=6\).
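A chirp experiment of this kind can be sketched in a few lines. The code below is an illustration only, not the simulation used for Fig. 3: it integrates the two first order state equations with a forward Euler scheme, feeds back \(K\,i(t-\tau _l)\) through a sample buffer, starts from rest without the preceding DC step, and uses an assumed chirp rate of 500 Hz/s.

```python
import numpy as np

def simulate_closed_loop(x, dt, K, tau_r=0.5e-3, tau_d=5e-3, tau_l=1e-3):
    """Forward-Euler simulation of the delayed negative feedback loop.

    x is the sampled input, dt the time step in seconds; returns i(t) = z1(t).
    """
    n_delay = int(round(tau_l / dt))      # feedback delay in samples
    z1 = z2 = 0.0                         # start from rest
    i_out = np.zeros(len(x))
    for n in range(len(x)):
        i_out[n] = z1
        feedback = K * i_out[n - n_delay] if n >= n_delay else 0.0
        drive = x[n] - feedback           # negative feedback subtracts from the input
        z2_next = z2 + dt * (drive - z2) / tau_r
        z1 = z1 + dt * (z2 - z1) / tau_d  # uses the old z2 (explicit Euler)
        z2 = z2_next
    return i_out

dt = 1e-5                                 # 0.01 ms step (illustrative)
t = np.arange(0.0, 0.6, dt)
chirp_rate = 500.0                        # Hz per second (assumed value)
x = np.sin(np.pi * chirp_rate * t ** 2)   # instantaneous frequency = chirp_rate * t

responses = {K: simulate_closed_loop(x, dt, K) for K in (0, 2, 6)}
for K, i_t in responses.items():
    n_peak = int(np.argmax(np.abs(i_t)))
    print(f"K = {K}: peak |i| = {abs(i_t[n_peak]):.2f} "
          f"near {chirp_rate * t[n_peak]:.0f} Hz")
```

Because the sweep is finite in rate, the largest response for \(K=6\) appears slightly after the chirp passes the steady-state resonant frequency; a slower sweep brings the two closer together.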

A source of energy is required for the feedback amplification factor \(K\), and in the biophysical system it is not clear how this amplification factor may be realised. In the following section we consider one biophysically plausible mechanism for enabling proportional gain, \(K\). We then study the impact of feedback noise.

3 Feedback Amplification and Noise Reduction Via Redundancy

We now compare the ‘autapse’ model—a single neuron with ideal noiseless negative feedback (with gain)—with several scenarios where the feedback is noisy: (i) distributed feedback with stochastic additive noise; (ii) distributed feedback with quantization noise; (iii) distributed feedback with quantization and stochastic noise. We find that when distributed feedback is quantized, stochastic noise significantly enhances overall performance compared with the absence of stochastic noise.

3.1 Ideal Distributed Feedback Equivalent to Feedback Gain of \(K\)

We consider now the case where feedback amplification is due to redundancy. The ideal scenario is that \(K\) parallel and identical systems receive the same input, but as well as self-feedback, each system receives feedback from all the other systems. To enable more general analysis below, we write the feedback signal from system \(i\) to system \(j\) as \(F_{ij}(s)=f_{ij}[I_i(s)]\), where \(f_{ij}(\cdot )\) is an arbitrary function, and also write the overall feedback to system \(j\) as \(F_{j}(s)\)—see Fig. 4.

Fig. 4 Parallel redundant network of systems receiving the same input (left panel). The feedback signal paths to each system are shown in the right panel: system \(j\) receives feedback from all systems including itself, and as in the original stand-alone system, the feedback path is delayed with respect to the output of the system

Without any noise, we have \(f_{ij}[I_i(s)]=I_i(s)\ \forall j\). Therefore if we set the number of parallel systems, \(N\), equal to \(K\), then \(F_{j}(s)=\sum _{i=1}^{K}I_i(s)\ \forall j\). Given that each system receives the same input, it will also produce the same output and feedback signal, \(I(s)\), and therefore, once the delay in the feedback path is included, all feedback signals are identical with \(F_j(s)=KI(s)\exp {(-\tau _ls)}\). This illustrates that redundancy achieves feedback gain \(K\) in the overall system.
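As a brief check of this equivalence, assume each of the \(N\) identical systems has open loop transfer function \(G(s)\), unit gain on every feedback path, and a common feedback delay \(\tau _l\). Then for each system \(j\),

\[ I_j(s) = G(s)\left[ X(s) - e^{-s\tau _l}\sum _{i=1}^{N}I_i(s)\right] . \]

Since all systems are identical and share the input, \(I_i(s)=I(s)\) for every \(i\), so that

\[ I(s) = G(s)\left[ X(s) - Ne^{-s\tau _l}I(s)\right] \quad \Rightarrow \quad I(s) = \frac{G(s)}{1+NG(s)e^{-s\tau _l}}X(s), \]

which is the closed loop response of a single system with proportional feedback gain \(K=N\).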

3.2 Distributed Feedback with Additive White Noise

Now we consider the same scenario but suppose each output signal, \(I_i(s)\), acquires independent additive Gaussian white noise, \(\eta _i(t)\), with mean zero and variance \(\sigma ^2\), prior to being fed back. We write the Laplace transform of the noise as \(N_i(s)\), and thus \(F_{ij}(s)=f_{ij}[I_i(s)]=I_i(s)+N_i(s)\ \forall j\). Due to the independence of the noise from \(x(t)\), and the linearity of the system, each feedback noise is equivalent to input noise that subtracts from \(x(t)\). The subtraction is equivalent to an addition, due to the symmetry of Gaussian noise about its mean. Therefore, since there are \(K\) feedback signals, the total equivalent input noise for system \(j\) is \(\xi _j(t)\), where \(\xi _j(t)\) is white noise with zero mean and variance \(K\sigma ^2\). This compares very favourably with the situation in the ideal non-redundant ‘autapse’ model. If there were feedback noise in that system, the feedback noise would be amplified, resulting in variance \(K^2\sigma ^2\). Therefore, the distributed feedback model will have a signal-to-noise ratio \(K\) times larger than the noisy autapse model, and is equivalent to the autapse model with additive input noise with variance \(K\sigma ^2\). We can write the feedback signal into each system \(j\) as \(F_j(s) = KI(s)+\sum _{i=1}^{K}N_i(s)\), and thus the noise signal is identical in each output as well as the input.
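The variance argument can be illustrated with a small Monte Carlo check. This sketch treats the white noise as independent Gaussian samples (a discrete-time stand-in for the continuous-time noise in the text), and the values of \(K\), \(\sigma \) and the sample count are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
K = 6            # number of parallel systems / equivalent feedback gain (illustrative)
sigma = 0.2      # standard deviation of each feedback noise source (illustrative)
n = 200_000      # number of noise samples

# Distributed feedback: K independent noise sources are summed.
distributed_noise = rng.normal(0.0, sigma, size=(K, n)).sum(axis=0)

# Noisy autapse: a single noise source is amplified by the gain K.
autapse_noise = K * rng.normal(0.0, sigma, size=n)

var_distributed = distributed_noise.var()
var_autapse = autapse_noise.var()
print(f"distributed: {var_distributed:.3f}  (K * sigma^2   = {K * sigma**2:.3f})")
print(f"autapse:     {var_autapse:.3f}  (K^2 * sigma^2 = {K**2 * sigma**2:.3f})")
print(f"variance ratio = {var_autapse / var_distributed:.2f}  (expected about K = {K})")
```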

Increasing redundancy (more parallel systems) whilst retaining only \(K\) feedback signals would potentially enable the noise at the output to be non-identical for each system, and thus allow noise reduction by averaging the outputs. However as soon as the feedback signals become sparse rather than dense, this introduces the possibility of instability, since some systems will impact on other systems after longer delays. Even in the case of \(K+1\) systems with only autapses forbidden, this leads to positive feedback loops, and quite complex equivalent transfer functions. Therefore, we leave study of this for future work.

3.3 Distributed Quantized Feedback

In many engineered systems, feedback is only available in a digitized form. This means the feedback signal has been quantized in amplitude, and this quantization can be considered as a form of noise. Often quantization noise is modelled as additive white noise, but this is only an approximation, and it becomes less accurate as the number of quantization levels becomes small. We now consider a scenario similar to that of the previous subsection, except that instead of additive white feedback noise, each feedback signal is quantized. As in the additive white noise case, we can expect that each system \(j\) will have identical outputs, due to the redundancy. The overall output noise should decrease as the number of quantization levels increases. However, unlike the additive stochastic noise case, the overall output variance will be of the order of \(K^2\) rather than \(K\), since the noise signals will not be independent, and redundancy does not provide a benefit in terms of noise reduction compared with the noisy autapse model.

3.4 Distributed Quantized Feedback with Independent Stochastic White Noise Prior to Quantisation

If the feedback signal is corrupted by white noise prior to quantization, then this can make the quantization noise largely independent for each feedback signal. This again enables the possibility of noise reduction due to averaging where the feedback signals enter each system. See [17, 18] for discussion.
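The averaging benefit can be seen in a static example, separate from the feedback loop itself. The sketch below assumes a uniform rounding quantizer without saturation, \(K\) parallel copies of the same signal, and independent Gaussian noise added before each quantizer; all parameter values are illustrative rather than taken from the simulations reported here.

```python
import numpy as np

def quantize(v, step):
    """Uniform rounding quantizer with no saturation (an illustrative choice)."""
    return step * np.round(v / step)

rng = np.random.default_rng(1)
K = 6            # number of parallel quantized feedback signals
step = 0.5       # quantizer step size (coarse on purpose)
sigma = 0.25     # noise standard deviation before quantization
n = 100_000      # number of signal samples

signal = rng.uniform(-1.0, 1.0, size=n)

# Without stochastic noise all K quantized copies are identical,
# so averaging them gives no improvement over a single quantizer.
plain = quantize(signal, step)

# With independent noise before each quantizer the errors decorrelate,
# and averaging the K outputs reduces the overall error.
noise = rng.normal(0.0, sigma, size=(K, n))
pooled = quantize(signal + noise, step).mean(axis=0)

mse_plain = np.mean((plain - signal) ** 2)
mse_pooled = np.mean((pooled - signal) ** 2)
print(f"quantization only:       MSE = {mse_plain:.4f}")
print(f"noise then quantization: MSE = {mse_pooled:.4f}")
```

The single noisy quantizer is individually worse than the noiseless one; the gain comes entirely from averaging independent errors across the \(K\) paths.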

3.5 Example Results

Figure 5 shows how well the models described above perform in comparison with the ideal autapse model described in Sect. 2. In each case we simulate the system for a variety of noise levels, and then calculate the output signal-to-noise ratio in comparison with the ideal response.

These results show that the distributed feedback model with stochastic noise performs much better than the autapse model with feedback noise, as expected. They also show that when the feedback is quantized, stochastic noise significantly enhances performance over quantization alone. This is in line with theoretical work presented in [17], and implies that the stochastic and quantized feedback noise model can be described as a stochastic pooling network [18].

Fig. 5 Comparison of output signal-to-noise ratios for five different levels of feedback noise. For each model except the quantization feedback noise model, the feedback is corrupted by stochastic additive white Gaussian noise with variances \(0.02,0.04,0.06,0.08,0.1\), corresponding to Noise level ID 1–5. For the two quantization models, the feedback is quantized to \(8,7,6,5,4\) bits, corresponding to Noise level ID 1–5. Error bars indicate the standard deviation from 20 repeats, and the lines are the mean signal-to-noise ratio. No error bars are shown for the quantization feedback noise case, since in this case there is no stochastic noise, and therefore no variance

4 Possibilities for Bio-Inspired Engineering

We have discussed a model that plausibly explains why narrowband oscillations are often observed in recordings of cortical activity [10]. Models of this type have received much attention in neuroscience and computational neuroscience [10, 13, 14]. What is not known, however, is how cortical networks may utilize such bandpass filter-like characteristics, if at all. From an engineering perspective, however, is it possible that a designed system may benefit from mimicking some aspect of how the cortical network achieves a resonant characteristic?

One common use for bandpass filters is in modulated communication. Their use enables frequency multiplexing, and they are also useful in demodulation of FM signals. Given that in the first case an ideal filter has a flat passband, it seems unlikely that one would wish to design a filter like the cortical network. However, there is more potential for demodulation of FM signals, since a filter with a linear increase in gain with frequency is required in a frequency discriminator.

We therefore propose in future work to compare performance achieved by each model described in Sect. 3, when the input is a linearly swept chirp, or other FM signal, and the output of the system is used to estimate instantaneous frequency as a function of time. We also propose that the distributed noisy feedback model may be adapted in designs of distributed communication systems consisting of small, cheap and redundant nodes, similar to models discussed in [18].

References

[1] E.M. Izhikevich, N.S. Desai, E.C. Walcott, F.C. Hoppensteadt, Trends Neurosci. 26, 161 (2003)

[2] W.J. Freeman, Mass Action in the Nervous System (Academic Press, New York, 1975)

[3] A. Destexhe, M. Rudolph-Lilith, Neuronal Noise (Springer, New York, 2012)

[4] G. Buzsáki, A. Draguhn, Science 304, 1926 (2004)

[5] B. Hutcheon, Y. Yarom, Trends Neurosci. 23, 216 (2000)

[6] Y. Gai, B. Doiron, J. Rinzel, PLoS Comp. Bio. 6, e1000825 (2010)

[7] B.P. Graham, C. Stricker, in Proceedings of the 2008 International Conference on Artificial Neural Networks (ICANN 2008), ed. by V. Kůrková et al. (Lecture Notes in Computer Science LNCS 5164. Springer-Verlag, Berlin Heidelberg, Germany, 2008), pp. 268–276

[8] T. Sasaki, R. Kimura, M. Tsukamoto, N. Matsuki, Y. Ikegaya, J. Physiol. (London) 574, 195 (2006)

[9] C.D. Geisler, From Sound to Synapse (Oxford University Press, New York, 1998)

[10] N. Brunel, X.J. Wang, J. Neurophysiol. 90, 415 (2003)

[11] N. Brunel, V. Hakim, Chaos 18, 015113 (2008)

[12] X.J. Wang, Physiol. Rev. 90, 1195 (2010)

[13] A. Mazzoni, S. Panzeri, N.K. Logothetis, N. Brunel, PLoS Comp. Bio. 4, e1000239 (2008)

[14] K. Kang, M. Shelley, J.A. Henrie, R. Shapley, J. Comput. Neurosci. 29, 495 (2010)

[15] G.F. Franklin, J.D. Powell, A. Emami-Naeini, Feedback Control of Dynamic Systems, 5th edn. (Pearson Prentice Hall, New Jersey, 2006)

[16] J.M. Bekkers, Current Biology 19, R296 (2009)

[17] M.D. McDonnell, N.G. Stocks, C.E.M. Pearce, D. Abbott, Stochastic Resonance: From Suprathreshold Stochastic Resonance to Stochastic Signal Quantisation (Cambridge University Press, Cambridge, 2008)

[18] M.D. McDonnell, P.O. Amblard, N.G. Stocks, J. Stat. Mech.: Theory Exp. P01012 (2009)

Acknowledgments

M. D. McDonnell was supported by the Australian Research Council under ARC grant DP1093425 (including an Australian Research Fellowship), and an Endeavour Research Fellowship from the Australian Government.


© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

McDonnell, M.D. (2014). Distributed Bandpass Filtering and Signal Demodulation in Cortical Network Models. In: In, V., Palacios, A., Longhini, P. (eds) International Conference on Theory and Application in Nonlinear Dynamics (ICAND 2012). Understanding Complex Systems. Springer, Cham. https://doi.org/10.1007/978-3-319-02925-2_14


DOI: https://doi.org/10.1007/978-3-319-02925-2_14


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02924-5

Online ISBN: 978-3-319-02925-2

eBook Packages: Physics and Astronomy, Physics and Astronomy (R0)