Abstract

It is always possible to find an integral representation for initial value problems of ordinary differential equations whenever they are explicit in the n-th derivative of some variable y with respect to some other variable t. Consequently, this chapter starts with simple integrators and improves these single-step methods by Taylor series methods, linear multi-step methods, and Runge–Kutta methods. The introduction of Butcher tableaus makes the algorithmic description of these methods more transparent. Symplectic integrators are discussed as the methods of choice for solving the equations of motion of Hamiltonian systems with energy conservation. The Kepler problem then serves as a benchmark to test simple as well as symplectic integrators. The methodological error of non-symplectic integrators accumulates from step to step. As a result, these integrators severely violate energy conservation in Hamiltonian systems.




1 Introduction

In this chapter we will introduce common numerical methods designed to solve initial value problems. In our discussion of the Kepler problem in the previous chapter we introduced three methods: the implicit Euler method, the explicit Euler method, and the implicit midpoint rule. We also mentioned a fourth, the symplectic Euler method. In this chapter we plan to put these methods into a more general context and to discuss more advanced techniques.

Let us define the problem: We consider initial value problems of the form

$$\begin{aligned} \dot{y} = f(y, t), \qquad y(t_0) = y_0, \end{aligned} \tag{5.1}$$

where \(y(t) \equiv y\) is an \(n\)-dimensional vector and \(y_0\) is referred to as the initial value of \(y\). Let us make some remarks about the form of Eq. (5.1).

(i) We note that by posing Eq. (5.1), we assume that the differential equation is explicit in \(\dot{y}\); i.e. initial value problems of the form

are only considered if \(G(\dot{y})\) is analytically invertible. For instance, we will not deal with differential equations of the form

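(ii) We note that Eq. (5.1) is a first order differential equation in \(y\). However, this is in fact not a restriction, since we can transform every explicit differential equation of order \(n\) into a coupled set of explicit first order differential equations. Let us demonstrate this. We regard an explicit differential equation of the form

$$\begin{aligned} y^{(n)} = f\left(y^{(n-1)}, y^{(n-2)}, \ldots , \dot{y}, y, t\right), \end{aligned} \tag{5.4}$$

where we defined \(y^{(k)} \equiv \frac{\mathrm{{d}}^k}{\mathrm{{d}}t^k} y\). This equation is equivalent to the set

$$\begin{aligned} \frac{\mathrm{d}}{\mathrm{d}t} y^{(k)} &= y^{(k+1)}, \qquad k = 0, 1, \ldots , n-2, \\ \frac{\mathrm{d}}{\mathrm{d}t} y^{(n-1)} &= f\left(y^{(n-1)}, \ldots , \dot{y}, y, t\right), \end{aligned} \tag{5.5}$$

which, with the vector \(Y = \left(y, \dot{y}, \ldots , y^{(n-1)}\right)^T\), can be written in the form of Eq. (5.1). Hence, we can relax the criterion discussed in point (i), namely that the differential equation has to be explicit in \(\dot{y}\). It suffices that the differential equation of order \(n\) is explicit in the \(n\)-th derivative of \(y\), namely \(y^{(n)}\).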
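The reduction to a first-order system can also be written generically. The following Python sketch makes several assumptions of our own. The unknown \(y\) is a scalar, and the right-hand side is passed as a function `g(t, y, y', ..., y^(n-1))`. The hypothetical example is \(\ddot y = -y\) with \(y(0)=1\), \(\dot y(0)=0\). It is advanced with the explicit Euler method (5.9) only to show that the resulting system can be fed to any first-order integrator.

```python
import numpy as np

def to_first_order(g, order):
    """Turn the scalar ODE y^(order) = g(t, y, y', ..., y^(order-1)) into the
    first-order system Y' = F(Y, t) with Y = (y, y', ..., y^(order-1))."""
    def F(Y, t):
        # the derivative of each component is the next component; the last one is g
        return np.append(Y[1:], g(t, *Y))
    return F

# Hypothetical example: harmonic oscillator y'' = -y, y(0) = 1, y'(0) = 0.
F = to_first_order(lambda t, y, dy: -y, order=2)

Y = np.array([1.0, 0.0])
dt, n_steps = 1e-4, 10_000          # explicit Euler up to t = 1
for n in range(n_steps):
    Y = Y + dt * F(Y, n * dt)
print(Y, np.cos(1.0), -np.sin(1.0))  # numerical vs. exact (y, y') at t = 1
```

Any of the integrators discussed below can be used in place of the explicit Euler loop; only the function `F` encodes the original higher-order equation.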

There is another point required to be discussed before moving on. The numerical treatment of initial value problems is of inestimable value in physics because many differential equations, which appear unspectacular at first glance, cannot be solved analytically. For instance, consider a first order differential equation:

Although this equation appears to be simple, one has to rely on numerical methods in order to obtain a solution. Moreover, Eq. (5.6) is not well posed as it stands: its solution is ambiguous as long as no initial value is given, and a numerical solution is only possible if the problem is completely defined. In many cases, numerical methods are used even though the problem could be solved analytically, simply because the analytic solution would be too complicated. Such a numerical approach may be justified. However, one should always remember the advice of Ref. [1]:

“Numerical methods are no excuse for poor analysis.”

This chapter is complemented by two further chapters. A chapter on the double pendulum will demonstrate the applicability of Runge-Kutta methods, and a chapter on molecular dynamics will demonstrate the applicability of the leap-frog algorithm.

2 Simple Integrators

We start by reintroducing the methods already discussed in the previous chapter. Again, we discretize the time coordinate \(t\) via the relation \(t_n = t_0 + n \varDelta t\) and define \(f_n \equiv f(t_n)\) accordingly. For a more compact notation, we will not state the initial condition explicitly in the following. We investigate Eq. (5.1) at some particular time \(t_n\):

$$\begin{aligned} \dot{y}_n = f(y_n, t_n). \end{aligned} \tag{5.7}$$

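Integrating both sides of the differential equation \(\dot{y} = f(y,t)\) over the interval \([t_n, t_{n+1}]\) gives

$$\begin{aligned} y_{n+1} = y_n + \int _{t_n}^{t_{n+1}} \mathrm{d}t\, f\left[y(t), t\right]. \end{aligned} \tag{5.8}$$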

Note that Eq. (5.8) is exact and it will be our starting point in the discussion of several paths to a numeric solution of initial value problems. These solutions will be based on an approximation of the integral on the right hand side of Eq. (5.8) with the help of the methods already discussed in Chap. 3.

In the following we list four of the best known simple integration methods for initial value problems:

(1)

Applying the forward rectangular rule (3.9) to Eq. (5.8) yields

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f(y_n, t_n), \end{aligned} \tag{5.9}$$

which is the explicit Euler method we already encountered in Sect. 4.3. This method is also referred to as the forward Euler method. In accordance with the forward rectangular rule, the leading term of the local error of this method is proportional to \(\varDelta t^2\), as was pointed out in Sect. 3.2.

(2)

We use the backward rectangular rule (3.10) in Eq. (5.8) and obtain

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f(y_{n+1}, t_{n+1}), \end{aligned} \tag{5.10}$$

which is the implicit Euler method, also referred to as the backward Euler method. As already highlighted in Sect. 4.3, it may be necessary to solve Eq. (5.10) numerically for \(y_{n+1}\). Some notes on the numerical solution of non-linear equations can be found in Appendix B.

(3)

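The central rectangular rule (3.13) approximates Eq. (5.8) by

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f\left(y_{n+\frac{1}{2}}, t_{n+\frac{1}{2}}\right), \end{aligned} \tag{5.11}$$

where \(t_{n+\frac{1}{2}} = t_n + \frac{\varDelta t}{2}\). Applying the same rule to the interval \([t_{n-1}, t_{n+1}]\) of width \(2\varDelta t\), we rewrite this equation in the form:

$$\begin{aligned} y_{n+1} = y_{n-1} + 2 \varDelta t\, f(y_n, t_n). \end{aligned} \tag{5.12}$$

This method is sometimes referred to as the leap-frog routine or Störmer-Verlet method. We will come back to this point in Chap. 7. Note that the approximation

$$\begin{aligned} y_{n+\frac{1}{2}} \approx \frac{y_n + y_{n+1}}{2} \end{aligned} \tag{5.13}$$

in Eq. (5.11) gives the implicit midpoint rule as it was introduced in Sect. 4.3.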

(4)

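Employing the trapezoidal rule (3.15) in an approximation to Eq. (5.8) yields

$$\begin{aligned} y_{n+1} = y_n + \frac{\varDelta t}{2} \left[ f(y_n, t_n) + f(y_{n+1}, t_{n+1}) \right]. \end{aligned} \tag{5.14}$$

This is an implicit method which has to be solved for \(y_{n+1}\). It is generally known as the Crank-Nicolson method or simply as the trapezoidal method.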
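The one-step formulas (1), (2) and (4) can be compared directly in code. The following Python sketch is a minimal illustration, not the authors' implementation, and it rests on three assumptions of our own:

- the problem is scalar;
- the implicit updates are solved with a plain Newton iteration (Appendix B discusses such solvers, and its details may differ);
- the test problem is the hypothetical \(\dot{y} = -y^2\), \(y(0) = 1\), with exact solution \(y = 1/(1+t)\).

All function names are our own.

```python
def newton(g, dg, x0, tol=1e-12, max_iter=50):
    """Scalar Newton iteration for g(x) = 0, started at x0."""
    x = x0
    for _ in range(max_iter):
        dx = g(x) / dg(x)
        x -= dx
        if abs(dx) < tol:
            break
    return x

def explicit_euler_step(f, dfdy, y, t, dt):
    # (1) forward rectangular rule: f evaluated at the left end of the interval
    return y + dt * f(y, t)

def implicit_euler_step(f, dfdy, y, t, dt):
    # (2) backward rectangular rule: solve z - y - dt f(z, t + dt) = 0 for z = y_{n+1}
    g = lambda z: z - y - dt * f(z, t + dt)
    dg = lambda z: 1.0 - dt * dfdy(z, t + dt)
    return newton(g, dg, y)

def crank_nicolson_step(f, dfdy, y, t, dt):
    # (4) trapezoidal rule: solve z - y - dt/2 [f(y, t) + f(z, t + dt)] = 0
    g = lambda z: z - y - 0.5 * dt * (f(y, t) + f(z, t + dt))
    dg = lambda z: 1.0 - 0.5 * dt * dfdy(z, t + dt)
    return newton(g, dg, y)

def integrate(step, f, dfdy, y0, t_end, n_steps):
    dt, y = t_end / n_steps, y0
    for n in range(n_steps):
        y = step(f, dfdy, y, n * dt, dt)
    return y

f = lambda y, t: -y * y        # hypothetical test problem y' = -y^2
dfdy = lambda y, t: -2.0 * y   # its derivative with respect to y (needed by Newton)

# Halving dt should reduce the global error at t = 1 (exact value 1/2) by about
# 2 for a globally first-order and by about 4 for a globally second-order method.
ratios = {}
for step in (explicit_euler_step, implicit_euler_step, crank_nicolson_step):
    e_coarse = abs(integrate(step, f, dfdy, 1.0, 1.0, 100) - 0.5)
    e_fine = abs(integrate(step, f, dfdy, 1.0, 1.0, 200) - 0.5)
    ratios[step.__name__] = e_coarse / e_fine
print(ratios)
```

The local error of the Euler methods, \(\mathcal{O}(\varDelta t^2)\), accumulates over \(\mathcal{O}(1/\varDelta t)\) steps into a global error of \(\mathcal{O}(\varDelta t)\). The Crank-Nicolson method is globally second order.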

Methods (1), (2), and (4) are also known as one-step methods, since only function values at times \(t_n\) and \(t_{n+1}\) are used to propagate in time. In contrast, the leap-frog method is already a multi-step method since three different times appear in the expression. Basically, there are three different strategies to improve these rather simple methods:
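The leap-frog formula of method (3), \(y_{n+1} = y_{n-1} + 2\varDelta t\, f(y_n,t_n)\), involves three time levels. It therefore needs a second starting value \(y_1\) besides \(y_0\). In the Python sketch below, \(y_1\) is generated by a single explicit Euler step. This start-up choice, the function name and the test problem \(\dot y = -y\) are assumptions made for illustration.

```python
import math

def leapfrog(f, y0, t0, dt, n_steps):
    """Two-step leap-frog scheme y_{n+1} = y_{n-1} + 2 dt f(y_n, t_n).

    The second starting value y_1 is taken from one explicit Euler step.
    Its O(dt^2) local error enters only once, so the scheme stays second order.
    """
    y_prev, y = y0, y0 + dt * f(y0, t0)       # y_0 and y_1
    for n in range(1, n_steps):
        y_prev, y = y, y_prev + 2.0 * dt * f(y, t0 + n * dt)
    return y                                  # y_{n_steps}

f = lambda y, t: -y                           # hypothetical test problem, y(t) = exp(-t)
err_coarse = abs(leapfrog(f, 1.0, 0.0, 0.01, 100) - math.exp(-1.0))
err_fine = abs(leapfrog(f, 1.0, 0.0, 0.005, 200) - math.exp(-1.0))
print(err_coarse / err_fine)                  # close to 4: second order
```

For decaying problems such as this one, the leap-frog scheme carries a weakly growing parasitic solution. For that reason it is usually applied to oscillatory problems rather than to damped ones.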

- Taylor series methods: Use more terms in the Taylor expansion of \(y_{n+1}\).
- Linear multi-step methods: Use data from previous time steps \(y_k\), \(k < n\), in order to cancel terms in the truncation error.
- Runge-Kutta methods: Use intermediate points within one time step.

We will briefly discuss the first two alternatives and then turn our attention to the Runge-Kutta methods in the next section.

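Taylor Series Methods

From Chap. 2 we are already familiar with the Taylor expansion (2.7) of the function \(y_{n+1} \equiv y(t_n + \varDelta t)\) around the point \(t_n\),

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, \dot{y}_n + \frac{\varDelta t^2}{2} \ddot{y}_n + \mathcal{O}(\varDelta t^3). \end{aligned} \tag{5.15}$$

We insert Eq. (5.7) into Eq. (5.15) and obtain

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f(y_n, t_n) + \mathcal{O}(\varDelta t^2). \end{aligned} \tag{5.16}$$

So far nothing has been gained. This is just the explicit Euler method (5.9), and the truncation error is still proportional to \(\varDelta t^2\). However, calculating \(\ddot{y}_n\) with the help of Eq. (5.7) gives

$$\begin{aligned} \ddot{y}_n = \left. \frac{\mathrm{d}}{\mathrm{d}t} f(y,t) \right|_{t_n} = f'(y_n,t_n)\, f(y_n,t_n) + \dot{f}(y_n,t_n), \end{aligned} \tag{5.17}$$

where \(f' \equiv \partial f / \partial y\) and \(\dot{f} \equiv \partial f / \partial t\). For vector-valued \(y\), \(f'\) is the Jacobian matrix. Inserting this result into Eq. (5.15) yields

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f(y_n,t_n) + \frac{\varDelta t^2}{2} \left[ f'(y_n,t_n)\, f(y_n,t_n) + \dot{f}(y_n,t_n) \right] + \mathcal{O}(\varDelta t^3). \end{aligned} \tag{5.18}$$

This manipulation reduces the local truncation error to order \(\varDelta t^3\). If an analytic differentiation is not feasible, the partial derivatives \(f'(y_n,t_n)\) and \(\dot{f}(y_n,t_n)\) can be approximated with the methods discussed in Chap. 2.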

The above procedure can be repeated up to arbitrary order in the Taylor expansion (5.15).
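The second-order Taylor update derived above can be illustrated with a minimal Python sketch for a scalar equation. The partial derivatives \(f' = \partial f/\partial y\) and \(\dot f = \partial f/\partial t\) are supplied analytically. The test problem \(\dot y = t - y\), \(y(0)=1\), has the exact solution \(y = t - 1 + 2e^{-t}\). The test problem and the function names are assumptions.

```python
import math

def taylor2_step(f, f_y, f_t, y, t, dt):
    """y_{n+1} = y_n + dt f + dt^2/2 (f' f + fdot), all evaluated at (y_n, t_n)."""
    fn = f(y, t)
    return y + dt * fn + 0.5 * dt**2 * (f_y(y, t) * fn + f_t(y, t))

f = lambda y, t: t - y      # hypothetical right-hand side
f_y = lambda y, t: -1.0     # partial derivative with respect to y
f_t = lambda y, t: 1.0      # partial derivative with respect to t

def solve(n_steps, t_end=1.0):
    dt, y = t_end / n_steps, 1.0
    for n in range(n_steps):
        y = taylor2_step(f, f_y, f_t, y, n * dt, dt)
    return y

exact = 2.0 * math.exp(-1.0)   # y(1) = 1 - 1 + 2/e
e_coarse, e_fine = abs(solve(100) - exact), abs(solve(200) - exact)
print(e_coarse / e_fine)       # about 4: the global error is O(dt^2)
```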

Linear Multi-Step Methods

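A linear \(k\)-step method is defined by the approximation

$$\begin{aligned} \sum _{j=0}^{k} a_j\, y_{n+1-j} = \varDelta t \sum _{j=0}^{k} b_j\, f\left(y_{n+1-j}, t_{n+1-j}\right) \end{aligned} \tag{5.19}$$

of Eq. (5.8). The coefficients \(a_j\) and \(b_j\) have to be determined in such a way that the truncation error is reduced. Two of the best known techniques are the second-order Adams–Bashforth method

$$\begin{aligned} y_{n+1} = y_n + \frac{\varDelta t}{2} \left[ 3 f(y_n, t_n) - f(y_{n-1}, t_{n-1}) \right] \end{aligned} \tag{5.20}$$

and the second-order backward differentiation formula

$$\begin{aligned} y_{n+1} - \frac{4}{3} y_n + \frac{1}{3} y_{n-1} = \frac{2}{3} \varDelta t\, f(y_{n+1}, t_{n+1}). \end{aligned} \tag{5.21}$$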

We note in passing that the backward differentiation formula of arbitrary order can easily be obtained with the help of the operator technique introduced in Sect. 2.4, Eq. (2.30). One simply replaces the first derivative on the left hand side by the function \(f(y_n,t_n)\) according to Eq. (5.7) and calculates the backward difference series on the right hand side to arbitrary order.
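The operator recipe can be carried out mechanically. The Python sketch below rests on one assumption about the operator technique of Sect. 2.4: we take it to amount to the identity \(\varDelta t\, \frac{\mathrm{d}}{\mathrm{d}t} = -\ln(1 - \nabla) = \sum_{m \ge 1} \nabla^m / m\), with the backward difference \(\nabla y_{n+1} = y_{n+1} - y_n\). Truncating the series after \(m = k\) and replacing the derivative by \(f(y_{n+1}, t_{n+1})\) gives the \(k\)-th order formula. The sketch uses exact rational arithmetic, so the coefficients of Eq. (5.21) should come out exactly.

```python
from fractions import Fraction
from math import comb

def bdf_coefficients(k):
    """Coefficients alpha_0..alpha_k of the k-th order backward differentiation formula
        sum_j alpha_j * y_{n+1-j} = dt * f(y_{n+1}, t_{n+1}),
    obtained by truncating dt * d/dt = sum_{m>=1} nabla^m / m after m = k."""
    alpha = [Fraction(0)] * (k + 1)
    for m in range(1, k + 1):
        # binomial expansion: nabla^m y_{n+1} = sum_j (-1)^j C(m, j) y_{n+1-j}
        for j in range(m + 1):
            alpha[j] += Fraction((-1) ** j * comb(m, j), m)
    return alpha

bdf2 = bdf_coefficients(2)
bdf2_normalized = [a / bdf2[0] for a in bdf2]   # scale so that y_{n+1} has coefficient 1
rhs_factor = 1 / bdf2[0]                        # multiplies dt * f(y_{n+1}, t_{n+1})
print(bdf2_normalized, rhs_factor)
```

For \(k = 1\) the same routine reproduces the implicit Euler method (5.10).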

In many cases, multi-step methods are based on the interpolation of previously computed values \(y_k\) by Lagrange polynomials. This interpolation is then inserted into Eq. (5.8) and integrated. However, a detailed discussion of such procedures is beyond the scope of this book. The interested reader is referred to Refs. [2, 3].

Nevertheless, let us make one last point. We note that Eq. (5.19) is explicit for \(b_0 = 0\) and implicit for \(b_0 \ne 0\). In many numerical realizations one combines implicit and explicit multi-step methods in such a way that the explicit result (solve Eq. (5.19) with \(b_0 = 0\)) is used as a guess to solve the implicit equation (solve Eq. (5.19) with \(b_0 \ne 0\)). Hence, the explicit method predicts the value \(y_{n+1}\) and the implicit method corrects it. Such methods yield very good results and are commonly referred to as predictor–corrector methods [4].
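The predictor–corrector idea can be sketched with two methods from this section:

- Predictor: the explicit Adams–Bashforth method (5.20).
- Corrector: the trapezoidal rule (5.14). It is the one-step instance of Eq. (5.19) with \(a_0 = 1\), \(a_1 = -1\) and \(b_0 = b_1 = 1/2 \ne 0\).

The Python sketch below is an illustration under our own assumptions, not a method prescribed by the text. The corrector is applied once per step, and the missing starting value \(y_1\) comes from a Heun step. The test problem \(\dot y = -y^2\) is hypothetical.

```python
def ab2_trapezoid_pc(f, y0, t0, dt, n_steps):
    """Predictor: y* = y_n + dt/2 [3 f_n - f_{n-1}]           (explicit, b_0 = 0)
    Corrector: y_{n+1} = y_n + dt/2 [f_n + f(y*, t_{n+1})]     (trapezoidal rule)"""
    f_prev = f(y0, t0)                                   # f_0
    # start-up (assumption): one Heun step supplies y_1
    y = y0 + 0.5 * dt * (f_prev + f(y0 + dt * f_prev, t0 + dt))
    for n in range(1, n_steps):
        t = t0 + n * dt
        f_n = f(y, t)
        y_pred = y + 0.5 * dt * (3.0 * f_n - f_prev)     # predict
        y = y + 0.5 * dt * (f_n + f(y_pred, t + dt))     # correct, using the prediction
        f_prev = f_n
    return y

f = lambda y, t: -y * y    # hypothetical test problem, exact y(1) = 1/2
e_coarse = abs(ab2_trapezoid_pc(f, 1.0, 0.0, 0.01, 100) - 0.5)
e_fine = abs(ab2_trapezoid_pc(f, 1.0, 0.0, 0.005, 200) - 0.5)
print(e_coarse / e_fine)   # about 4: second order
```

Iterating the corrector several times per step would approach the fully implicit trapezoidal solution. A single correction already makes the combined scheme explicit and second order.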

3 Runge-Kutta Methods

In contrast to linear multi-step methods, the idea of Runge-Kutta methods is to improve the accuracy by calculating intermediate grid-points within the interval \([t_n,t_{n+1}]\). We note that the approximation (5.11) resulting from the central rectangular rule is already such a method. It takes into account the function value \(y_{n+\frac{1}{2}}\) at the grid-point \(t_{n+\frac{1}{2}} = t_n + \frac{\varDelta t}{2}\). We investigate this in more detail and rewrite Eq. (5.11):

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f\left(y_{n+\frac{1}{2}}, t_{n+\frac{1}{2}}\right). \end{aligned} \tag{5.22}$$

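We now have to find appropriate approximations to the unknown value \(y_{n+\frac{1}{2}}\) which retain the accuracy of Eq. (5.11). Our first choice is to replace \(y_{n+\frac{1}{2}}\) with the help of the explicit Euler method, Eq. (5.9),

$$\begin{aligned} y_{n+\frac{1}{2}} = y_n + \frac{\varDelta t}{2} f(y_n, t_n), \end{aligned} \tag{5.23}$$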

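which, inserted into Eq. (5.22), yields

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f\left(y_n + \frac{\varDelta t}{2} f(y_n, t_n), t_n + \frac{\varDelta t}{2}\right). \end{aligned} \tag{5.24}$$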

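We note that Eq. (5.24) is referred to as the explicit midpoint rule. By analogy, we could have approximated \(y_{n+\frac{1}{2}}\) with the help of the implicit Euler method (5.10), \(y_{n+\frac{1}{2}} = y_n + \frac{\varDelta t}{2} f\left(y_{n+\frac{1}{2}}, t_{n+\frac{1}{2}}\right)\). Combined with Eq. (5.22), this implies \(y_{n+\frac{1}{2}} = \left(y_n + y_{n+1}\right)/2\) and yields

$$\begin{aligned} y_{n+1} = y_n + \varDelta t\, f\left(\frac{y_n + y_{n+1}}{2}, t_n + \frac{\varDelta t}{2}\right). \end{aligned} \tag{5.25}$$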

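This equation is referred to as the implicit midpoint rule. Let us explain how we obtain an estimate for the error in Eqs. (5.24) and (5.25). In the case of Eq. (5.24) we investigate the term

$$\begin{aligned} \frac{y_{n+1} - y_n}{\varDelta t} - f\left(y_n + \frac{\varDelta t}{2} f(y_n, t_n), t_n + \frac{\varDelta t}{2}\right), \end{aligned} \tag{5.26}$$

where \(y_n\) and \(y_{n+1}\) are values of the exact solution.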

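The Taylor expansion of \(y_{n+1}\) and \(f(\cdot )\) around the point \(\varDelta t = 0\) yields

$$\begin{aligned} \left[ \dot{y}_n - f(y_n,t_n) \right] + \frac{\varDelta t}{2} \left[ \ddot{y}_n - f'(y_n,t_n)\, f(y_n,t_n) - \dot{f}(y_n,t_n) \right] + \mathcal{O}(\varDelta t^2). \end{aligned}$$

We observe that the first bracket vanishes because of Eq. (5.7), and the second because of its time derivative, Eq. (5.17). Consequently, the term (5.26) is of order \(\varDelta t^2\), which corresponds to a local error of order \(\varDelta t^3\) per step. A similar argument holds for Eq. (5.25).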

Let us introduce a more convenient notation for the above examples before we turn to a more general topic. The notation is algorithmic, i.e. it defines the sequence in which the various terms should be calculated. This is convenient for two reasons. First, it increases the readability of complex methods such as Eq. (5.25). Second, it makes it easy to identify which part of the method involves an implicit step that has to be solved separately for the corresponding variable. For this purpose we introduce variables \(Y_i\) with index \(i \ge 1\) and use a simple example to illustrate this notation. Consider the explicit Euler method (5.9). It can be written as

$$\begin{aligned} Y_1 &= y_n, \\ y_{n+1} &= y_n + \varDelta t\, f(Y_1, t_n). \end{aligned} \tag{5.27}$$

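In a similar fashion we write the implicit Euler method (5.10) as

$$\begin{aligned} Y_1 &= y_n + \varDelta t\, f(Y_1, t_n + \varDelta t), \\ y_{n+1} &= y_n + \varDelta t\, f(Y_1, t_n + \varDelta t). \end{aligned} \tag{5.28}$$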

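It is understood that the first equation of (5.28) has to be solved for \(Y_1\) first, and this result is then plugged into the second equation in order to obtain \(y_{n+1}\). As a further example, the Crank–Nicolson method (5.14) can be rewritten as

$$\begin{aligned} Y_1 &= y_n, \\ Y_2 &= y_n + \frac{\varDelta t}{2} \left[ f(Y_1, t_n) + f(Y_2, t_n + \varDelta t) \right], \\ y_{n+1} &= y_n + \frac{\varDelta t}{2} \left[ f(Y_1, t_n) + f(Y_2, t_n + \varDelta t) \right], \end{aligned} \tag{5.29}$$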

where the second equation is to be solved for \(Y_2\) in the second step.

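By analogy, the algorithmic form of the explicit midpoint rule (5.24) is defined as

$$\begin{aligned} Y_1 &= y_n, \\ Y_2 &= y_n + \frac{\varDelta t}{2} f(Y_1, t_n), \\ y_{n+1} &= y_n + \varDelta t\, f\left(Y_2, t_n + \frac{\varDelta t}{2}\right), \end{aligned} \tag{5.30}$$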

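and we find for the implicit midpoint rule (5.25):

$$\begin{aligned} Y_1 &= y_n + \frac{\varDelta t}{2} f\left(Y_1, t_n + \frac{\varDelta t}{2}\right), \\ y_{n+1} &= y_n + \varDelta t\, f\left(Y_1, t_n + \frac{\varDelta t}{2}\right). \end{aligned} \tag{5.31}$$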

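The above algorithms are all examples of the so-called Runge-Kutta methods. We introduce the general representation of a \(d\)-stage Runge-Kutta method:

$$\begin{aligned} Y_i &= y_n + \varDelta t \sum _{j=1}^{d} a_{ij}\, f\left(Y_j, t_n + c_j \varDelta t\right), \qquad i = 1, \ldots , d, \\ y_{n+1} &= y_n + \varDelta t \sum _{j=1}^{d} b_j\, f\left(Y_j, t_n + c_j \varDelta t\right). \end{aligned} \tag{5.32}$$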

We note that the method (5.32) is completely determined by the coefficients \(a_{ij}\), \(b_j\) and \(c_j\). In particular, \(a = \{a_{ij} \}\) is a \(d \times d\) matrix, while \(b = \{b_j \}\) and \(c = \{ c_j \}\) are \(d\)-dimensional vectors.

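Butcher tableaus are a very useful tool to characterize such methods. They provide a structured representation of the coefficient matrix \(a\) and the coefficient vectors \(b\) and \(c\):

$$\begin{aligned} \begin{array}{c|ccc} c_1 & a_{11} & \cdots & a_{1d} \\ \vdots & \vdots & \ddots & \vdots \\ c_d & a_{d1} & \cdots & a_{dd} \\ \hline & b_1 & \cdots & b_d \end{array} \end{aligned} \tag{5.33}$$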

We note that the Runge-Kutta method (5.32) or (5.33) is explicit if the matrix \(a\) is zero on and above the diagonal, i.e. \(a_{ij} = 0\) for \( j \ge i\). Let us rewrite all the methods described here in the form of Butcher tableaus:

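Explicit Euler:

$$\begin{aligned} \begin{array}{c|c} 0 & 0 \\ \hline & 1 \end{array} \end{aligned}$$

Implicit Euler:

$$\begin{aligned} \begin{array}{c|c} 1 & 1 \\ \hline & 1 \end{array} \end{aligned}$$

Crank-Nicolson:

$$\begin{aligned} \begin{array}{c|cc} 0 & 0 & 0 \\ 1 & \frac{1}{2} & \frac{1}{2} \\ \hline & \frac{1}{2} & \frac{1}{2} \end{array} \end{aligned}$$

Explicit Midpoint:

$$\begin{aligned} \begin{array}{c|cc} 0 & 0 & 0 \\ \frac{1}{2} & \frac{1}{2} & 0 \\ \hline & 0 & 1 \end{array} \end{aligned}$$

Implicit Midpoint:

$$\begin{aligned} \begin{array}{c|c} \frac{1}{2} & \frac{1}{2} \\ \hline & 1 \end{array} \end{aligned}$$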

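With the help of Runge–Kutta methods of the general form (5.32) one can develop methods of arbitrary order. One of the most popular methods is the explicit four-stage method (we will call it e-RK-4), which is defined by the algorithm:

$$\begin{aligned} Y_1 &= y_n, \\ Y_2 &= y_n + \frac{\varDelta t}{2} f(Y_1, t_n), \\ Y_3 &= y_n + \frac{\varDelta t}{2} f\left(Y_2, t_n + \frac{\varDelta t}{2}\right), \\ Y_4 &= y_n + \varDelta t\, f\left(Y_3, t_n + \frac{\varDelta t}{2}\right), \\ y_{n+1} &= y_n + \frac{\varDelta t}{6} \left[ f(Y_1, t_n) + 2 f\left(Y_2, t_n + \frac{\varDelta t}{2}\right) + 2 f\left(Y_3, t_n + \frac{\varDelta t}{2}\right) + f(Y_4, t_n + \varDelta t) \right]. \end{aligned} \tag{5.39}$$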

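This method is an analogue of the Simpson rule of numerical integration as discussed in Sect. 3.4. However, a detailed derivation of the coefficient matrix \(a\) and the coefficient vectors \(b\) and \(c\) is rather involved. A closer inspection reveals that the local methodological error of this method behaves as \(\varDelta t^5\), i.e. the global error behaves as \(\varDelta t^4\). The algorithm e-RK-4, Eq. (5.39), is represented by a Butcher tableau of the form

$$\begin{aligned} \begin{array}{c|cccc} 0 & 0 & 0 & 0 & 0 \\ \frac{1}{2} & \frac{1}{2} & 0 & 0 & 0 \\ \frac{1}{2} & 0 & \frac{1}{2} & 0 & 0 \\ 1 & 0 & 0 & 1 & 0 \\ \hline & \frac{1}{6} & \frac{1}{3} & \frac{1}{3} & \frac{1}{6} \end{array} \end{aligned} \tag{5.40}$$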
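A Runge–Kutta method is fully specified by its tableau, so one routine can execute any explicit tableau. The following Python sketch uses the stage-value form (5.32) with a strictly lower triangular coefficient matrix, as required for explicit methods. It then plugs in the e-RK-4 coefficients. The function names and the test problem \(\dot y = -y^2\) are assumptions. Halving \(\varDelta t\) should reduce the global error by roughly \(2^4 = 16\).

```python
def explicit_rk_step(A, b, c, f, y, t, dt):
    """One step of an explicit Runge-Kutta method given by its Butcher tableau:
    Y_i     = y_n + dt * sum_{j<i} a_ij f(Y_j, t_n + c_j dt),
    y_{n+1} = y_n + dt * sum_j  b_j  f(Y_j, t_n + c_j dt).
    A must be strictly lower triangular (a_ij = 0 for j >= i)."""
    F = []                                   # F[j] = f(Y_j, t_n + c_j dt)
    for i in range(len(b)):
        Y_i = y + dt * sum(A[i][j] * F[j] for j in range(i))
        F.append(f(Y_i, t + c[i] * dt))
    return y + dt * sum(b_j * F_j for b_j, F_j in zip(b, F))

# Butcher tableau of e-RK-4
A_RK4 = [[0.0, 0.0, 0.0, 0.0],
         [0.5, 0.0, 0.0, 0.0],
         [0.0, 0.5, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0]]
B_RK4 = [1/6, 1/3, 1/3, 1/6]
C_RK4 = [0.0, 0.5, 0.5, 1.0]

def integrate(A, b, c, f, y0, t_end, n_steps):
    dt, y = t_end / n_steps, y0
    for n in range(n_steps):
        y = explicit_rk_step(A, b, c, f, y, n * dt, dt)
    return y

f = lambda y, t: -y * y                       # hypothetical test problem, exact y(1) = 1/2
e_coarse = abs(integrate(A_RK4, B_RK4, C_RK4, f, 1.0, 1.0, 20) - 0.5)
e_fine = abs(integrate(A_RK4, B_RK4, C_RK4, f, 1.0, 1.0, 40) - 0.5)
print(e_coarse / e_fine)                      # roughly 16: fourth order
```

Passing the one-line tableau of the explicit Euler method (`[[0.0]], [1.0], [0.0]`) to the same routine reproduces Eq. (5.9).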

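Another quite popular method is given by the Butcher tableau

$$\begin{aligned} \begin{array}{c|cc} \frac{1}{2} - \frac{\sqrt{3}}{6} & \frac{1}{4} & \frac{1}{4} - \frac{\sqrt{3}}{6} \\ \frac{1}{2} + \frac{\sqrt{3}}{6} & \frac{1}{4} + \frac{\sqrt{3}}{6} & \frac{1}{4} \\ \hline & \frac{1}{2} & \frac{1}{2} \end{array} \end{aligned} \tag{5.41}$$

We note that this method is implicit. It corresponds to the two-point Gauss-Legendre quadrature of Sect. 3.6 and is of order four.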
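Implicit methods such as this one require solving the stage equations of (5.32) in every step. In the Python sketch below, which is our own illustration, the two stage values are obtained by simple fixed-point iteration. This converges only when \(\varDelta t\) is small enough; Newton's method would be the more robust choice. The harmonic oscillator \(\dot q = p\), \(\dot p = -q\) serves as a hypothetical test. Gauss–Legendre methods conserve quadratic invariants, so the energy \((q^2+p^2)/2\) should stay constant up to round-off and the iteration tolerance.

```python
import numpy as np

S3 = np.sqrt(3.0)
A_GL = np.array([[0.25, 0.25 - S3 / 6.0],
                 [0.25 + S3 / 6.0, 0.25]])
B_GL = np.array([0.5, 0.5])
C_GL = np.array([0.5 - S3 / 6.0, 0.5 + S3 / 6.0])

def gauss2_step(f, y, t, dt, tol=1e-14, max_iter=100):
    """One step of the two-stage Gauss-Legendre method. The implicit stage
    equations Y_i = y + dt sum_j a_ij f(Y_j, t + c_j dt) are solved by
    fixed-point iteration (assumes dt is small enough for convergence)."""
    Y = np.array([y, y], dtype=float)            # initial guess: Y_1 = Y_2 = y_n
    for _ in range(max_iter):
        F = np.array([f(Y[j], t + C_GL[j] * dt) for j in range(2)])
        Y_new = y + dt * A_GL @ F                # row i: y_n + dt sum_j a_ij F_j
        converged = np.max(np.abs(Y_new - Y)) < tol
        Y = Y_new
        if converged:
            break
    F = np.array([f(Y[j], t + C_GL[j] * dt) for j in range(2)])
    return y + dt * B_GL @ F

def oscillator(y, t):
    return np.array([y[1], -y[0]])               # q' = p, p' = -q, H = (q^2 + p^2)/2

y = np.array([1.0, 0.0])
dt = 0.1
for n in range(100):                             # integrate up to t = 10
    y = gauss2_step(oscillator, y, n * dt, dt)
energy = 0.5 * (y @ y)
print(energy, y[0], np.cos(10.0))
```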

A further improvement of implicit Runge-Kutta methods can be achieved by choosing the \(Y_i\) in such a way that they correspond to solutions of the differential equation (5.7) at intermediate time steps. The intermediate time steps at which one wants to reproduce the function are referred to as collocation points. At these points the functions are approximated by interpolation on the basis of Lagrange polynomials, which can easily be integrated analytically. However, the discussion of such collocation methods [4] is far beyond the scope of this book.

In general, Runge-Kutta methods are very useful. However, one always has to keep in mind that there could be better methods for the problem at hand. Let us close this section with a quote from the book by Press et al. [5]:

“For many scientific users, fourth-order Runge-Kutta is not just the first word on ODE integrators, but the last word as well. In fact, you can get pretty far on this old workhorse, especially if you combine it with an adaptive step-size algorithm. Keep in mind, however, that the old workhorse’s last trip may well take you to the poorhouse: Bulirsch-Stoer or predictor-corrector methods can be very much more efficient for problems where high accuracy is a requirement. Those methods are the high-strung racehorses. Runge-Kutta is for ploughing the fields.”

4 Hamiltonian Systems: Symplectic Integrators

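Let us define a symplectic integrator as a numerical integration scheme in which the mapping

$$\begin{aligned} \varPhi _{\varDelta t}: y_n \mapsto y_{n+1} = \varPhi _{\varDelta t}(y_n) \end{aligned} \tag{5.42}$$

is symplectic. Here \(\varPhi _{\varDelta t}\) is referred to as the numerical flow of the method. If we regard the initial value problem (5.1), we can define the flow of the system \(\varphi _t\) in an analogous way as

$$\begin{aligned} \varphi _t: y_0 \mapsto y(t) = \varphi _t(y_0). \end{aligned} \tag{5.43}$$

For instance, if we consider the initial value problem

$$\begin{aligned} \dot{y} = A y, \qquad y(0) = y_0, \end{aligned} \tag{5.44}$$

where \(y \in {\mathbb R}^n\) and \(A \in {\mathbb R}^{n \times n}\), then the flow of the system \(\varphi _t\) is given by:

$$\begin{aligned} \varphi _t(y_0) = \exp (A t)\, y_0. \end{aligned} \tag{5.45}$$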

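On the other hand, if we regard two vectors \(v, w \in {\mathbb R}^2\), we can express the (oriented) area \(\omega \) of the parallelogram spanned by these vectors as

$$\begin{aligned} \omega (v, w) = \det \begin{pmatrix} a & c \\ b & d \end{pmatrix} = ad - bc, \end{aligned} \tag{5.46}$$

where we put \(v = (a, b)^T\) and \(w = (c,d)^T\). More generally, if \(v, w \in {\mathbb R}^{2d}\), we have

$$\begin{aligned} \omega (v, w) = v^T J w, \qquad J = \begin{pmatrix} 0 & I \\ -I & 0 \end{pmatrix}, \end{aligned} \tag{5.47}$$

where \(I\) is the \(d \times d\) identity matrix. Hence, (5.47) represents the sum of the projected areas of the form

$$\begin{aligned} \omega (v, w) = \sum _{i=1}^{d} \det \begin{pmatrix} v_i & w_i \\ v_{d+i} & w_{d+i} \end{pmatrix}. \end{aligned} \tag{5.48}$$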

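If we regard a linear mapping \(M: {\mathbb R}^{2d} \mapsto {\mathbb R}^{2d}\) and require that

$$\begin{aligned} \omega (M v, M w) = \omega (v, w) \qquad \text {for all } v, w \in {\mathbb R}^{2d}, \end{aligned} \tag{5.49}$$

i.e. the area is preserved, we obtain the condition that

$$\begin{aligned} M^T J M = J. \end{aligned} \tag{5.50}$$

In the two-dimensional case \(d = 1\), this is equivalent to \(\det (M) = 1\). For \(d > 1\), it is a stronger requirement than \(\det (M) = 1\). Finally, a differentiable mapping \(f: {\mathbb R}^{2d} \mapsto {\mathbb R}^{2d}\) is referred to as symplectic if the linear mapping \(f'(x)\) (Jacobi matrix) conserves \(\omega \) for all \(x \in {\mathbb R}^{2d}\). One can easily prove that the flow of Hamiltonian systems (energy conserving) is symplectic, i.e. area preserving in phase space. Every Hamiltonian system is characterized by its Hamilton function \(H(p,q)\) and the corresponding Hamilton equations of motion:

$$\begin{aligned} \dot{p} = -\nabla _q H(p,q), \qquad \dot{q} = \nabla _p H(p,q). \end{aligned} \tag{5.51}$$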
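The condition (5.50) can be tested directly for linear maps. The Python sketch below rests on our own assumptions:

- the hypothetical example is the harmonic oscillator \(H = (p^2+q^2)/2\);
- phase space is ordered as \((q,p)\);
- \(J\) takes the form \(\begin{pmatrix} 0 & I \\ -I & 0 \end{pmatrix}\) of Eq. (5.47), with \(I = 1\) for \(d = 1\).

Over one step, the exact flow is a rotation, while the explicit Euler and a symplectic Euler step are the matrices built below. Only the explicit Euler map violates \(M^T J M = J\). Its determinant is \(1 + \varDelta t^2\), so it inflates phase-space areas in every step.

```python
import numpy as np

J = np.array([[0.0, 1.0],
              [-1.0, 0.0]])          # J for d = 1, ordering (q, p)

def is_symplectic(M, tol=1e-12):
    """Check the condition M^T J M = J for a 2 x 2 linear map M."""
    return np.allclose(M.T @ J @ M, J, atol=tol)

dt = 0.1
# One-step maps (q_n, p_n) -> (q_{n+1}, p_{n+1}) for H = (p^2 + q^2)/2, i.e. q' = p, p' = -q.
exact_flow = np.array([[np.cos(dt), np.sin(dt)],
                       [-np.sin(dt), np.cos(dt)]])
explicit_euler = np.array([[1.0, dt],        # q_{n+1} = q_n + dt p_n
                           [-dt, 1.0]])      # p_{n+1} = p_n - dt q_n
# symplectic Euler: p_{n+1} = p_n - dt q_n, then q_{n+1} = q_n + dt p_{n+1}
symplectic_euler = np.array([[1.0 - dt**2, dt],
                             [-dt, 1.0]])

for name, M in [("exact", exact_flow), ("explicit Euler", explicit_euler),
                ("symplectic Euler", symplectic_euler)]:
    print(name, is_symplectic(M), np.linalg.det(M))
```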

We define the flow of the system via

where

Hence we rewrite (5.51) as

and note that \(x \equiv x(t,x_0)\) is a function of time and initial conditions. In a next step we define the Jacobian of the flow via

and calculate

Hence, \(P_t\) is given by the solution of the equation

Symplecticity ensures that the area

which can be verified by calculating \(\frac{{\mathrm {d}}}{{\mathrm {d}} t} \left( P_t^T J P_t \right) \) where we keep in mind that \(J^T = -J\). Hence,

if the Hamilton function is conserved, i.e.

This means that the flow of a Hamiltonian system is symplectic, i.e. area preserving in phase space.

Methods like e-RK-4 or the explicit Euler method violate this conservation law. As a remedy, one introduces so-called symplectic integrators, which have been designed specifically to overcome this shortcoming. A detailed investigation of these techniques is beyond the scope of this book. The interested reader is referred to Refs. [6–9].

However, we provide a list of the most important integrators.

Symplectic Euler

Here \(a(p,q) = \nabla _p H(p,q)\) and \(b(p,q) = - \nabla _q H(p,q)\) have already been defined in Sect. 4.3.

Symplectic Runge–Kutta

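It can be demonstrated that a Runge-Kutta method is symplectic if its coefficients fulfill

$$\begin{aligned} b_i a_{ij} + b_j a_{ji} = b_i b_j \end{aligned} \tag{5.62}$$

for all \(i,j\) [7]. This is a property of the collocation methods based on Gauss points \(c_i\), such as the implicit midpoint rule and the two-stage Gauss-Legendre method (5.41).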
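The condition on the coefficients is easy to test for a given Butcher tableau. The Python sketch below evaluates \(b_i a_{ij} + b_j a_{ji} - b_i b_j\) for all \(i, j\); the function name is our own. The implicit midpoint rule and the two-stage Gauss–Legendre tableau pass the test. The explicit Euler method and e-RK-4 do not.

```python
import numpy as np

def satisfies_symplectic_condition(A, b, tol=1e-14):
    """True if b_i a_ij + b_j a_ji - b_i b_j vanishes for all i, j."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    BA = b[:, None] * A                      # (BA)_ij = b_i a_ij
    return bool(np.all(np.abs(BA + BA.T - np.outer(b, b)) < tol))

s3 = np.sqrt(3.0)
A_gauss = [[0.25, 0.25 - s3 / 6.0], [0.25 + s3 / 6.0, 0.25]]
b_gauss = [0.5, 0.5]
A_rk4 = [[0, 0, 0, 0], [0.5, 0, 0, 0], [0, 0.5, 0, 0], [0, 0, 1, 0]]
b_rk4 = [1/6, 1/3, 1/3, 1/6]

print(satisfies_symplectic_condition([[0.5]], [1.0]))      # implicit midpoint rule
print(satisfies_symplectic_condition(A_gauss, b_gauss))    # two-stage Gauss-Legendre
print(satisfies_symplectic_condition([[0.0]], [1.0]))      # explicit Euler
print(satisfies_symplectic_condition(A_rk4, b_rk4))        # e-RK-4
```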

5 An Example: The Kepler Problem, Revisited

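It has already been discussed in Sect. 4.3 that the Hamilton function of this system takes on the form

$$\begin{aligned} H(p,q) = \frac{1}{2} \left( p_1^2 + p_2^2 \right) - \frac{1}{\sqrt{q_1^2 + q_2^2}}, \end{aligned} \tag{5.63}$$

and Hamilton’s equations of motion read

$$\begin{aligned} \dot{q}_i = p_i, \qquad \dot{p}_i = - \frac{q_i}{\left( q_1^2 + q_2^2 \right)^{3/2}}, \qquad i = 1, 2. \end{aligned} \tag{5.64}$$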

We now introduce the time instances \(t_n = t_0 + n \varDelta t\) and define \(q_i^n \equiv q_i(t_n)\) and \(p_i^n \equiv p_i(t_n)\) for \(i = 1,2\). In the following we give the discretized recursion relation for three different methods, namely explicit Euler, implicit Euler, and symplectic Euler.

Explicit Euler

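In the case of the explicit Euler method we have the simple recursion relations

$$\begin{aligned} q_i^{n+1} &= q_i^n + \varDelta t\, p_i^n, \\ p_i^{n+1} &= p_i^n - \varDelta t\, \frac{q_i^n}{\left[ \left(q_1^n\right)^2 + \left(q_2^n\right)^2 \right]^{3/2}}, \qquad i = 1, 2. \end{aligned} \tag{5.65}$$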

Implicit Euler

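We obtain the implicit equations

$$\begin{aligned} q_i^{n+1} &= q_i^n + \varDelta t\, p_i^{n+1}, \\ p_i^{n+1} &= p_i^n - \varDelta t\, \frac{q_i^{n+1}}{\left[ \left(q_1^{n+1}\right)^2 + \left(q_2^{n+1}\right)^2 \right]^{3/2}}, \qquad i = 1, 2. \end{aligned} \tag{5.66}$$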

These implicit equations can be solved, for instance, by the use of the Newton method discussed in Appendix B.

Symplectic Euler

Employing Eqs. (5.61) gives

These implicit equations can be solved analytically and we obtain

A second variant of the symplectic Euler method is given by Eq. (4.41). It reads

The trajectories calculated using these four methods are presented in Figs. 5.1 and 5.2, and the time evolution of the total energy of the system is plotted in Fig. 5.3. The initial conditions were [7]

$$\begin{aligned} q_1(0) = 1 - e, \qquad q_2(0) = 0 \end{aligned}$$

and

$$\begin{aligned} p_1(0) = 0, \qquad p_2(0) = \sqrt{\frac{1+e}{1-e}}, \end{aligned}$$

with \(e = 0.6\), which gives \(H = -1/2\). Furthermore, we set \(\varDelta t = 0.01\) for the symplectic Euler methods and \(\varDelta t = 0.005\) for the forward and backward Euler methods in order to reduce the methodological error. The implicit equations were solved with the help of the Newton method as discussed in Appendix B. The Jacobi matrix was calculated analytically. Hence, no additional methodological error enters through approximated derivatives.

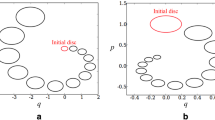

Fig. 5.1 Kepler trajectories in position space for the initial values defined in Eqs. (5.70) and (5.71). The initial position is indicated by a solid square. Solutions have been generated (a) by the explicit Euler method (5.65), (b) by the implicit Euler method (5.66), (c) by the symplectic Euler method (5.68), and (d) by the symplectic Euler method (5.69)

According to theory, the \(q\)-space and \(p\)-space projections of the phase space trajectory are ellipses. Furthermore, energy and angular momentum are conserved. Thus, the numerical solutions of Hamilton’s equations of motion (5.64) should reflect these properties. Figures 5.1a, b and 5.2a, b present the results of the explicit Euler method, Eq. (5.65), and the implicit Euler method, Eq. (5.66), respectively. Clearly, the results do not agree with the theoretical expectation: the trajectories are open instead of closed. The reason for this behavior is the methodological error of these methods. This error accumulates from step to step and thus causes a violation of energy conservation. The violation becomes apparent in Fig. 5.3, where the total energy \(H(t)\) is plotted versus time \(t\). Neither the explicit Euler method (dashed line) nor the implicit Euler method (short dashed line) satisfies energy conservation. We also observe step-like structures in \(H(t)\). At the center of these steps, an open diamond marks the time at which the point-mass passes the perihelion, the point of closest approach to the center of attraction. In the case of the implicit Euler method, an additional open circle marks this time. The same symbols mark the perihelion in Fig. 5.1a, b. At this point the point-mass reaches its maximum velocity, the pericenter velocity, and it covers the largest distance along its trajectory per time interval \(\varDelta t\). Consequently, the methodological error is largest in this part of the trajectory, which manifests itself in the steps in \(H(t)\). The point-mass moves faster when the implicit Euler method is applied, so the distances covered per time interval are again greater than in the explicit Euler case. It is therefore not surprising that the implicit Euler method also produces the larger error in \(H(t)\).

Fig. 5.2 Kepler trajectories in momentum space for the initial values defined in Eqs. (5.70) and (5.71). The initial momentum is indicated by a solid square. Solutions have been generated (a) by the explicit Euler method (5.65), (b) by the implicit Euler method (5.66), (c) by the symplectic Euler method (5.68), and (d) by the symplectic Euler method (5.69)

Fig. 5.3 Time evolution of the total energy \(H\) calculated with the help of the four methods discussed in the text. The initial values are given by Eqs. (5.70) and (5.71). Solutions have been generated (a) by the explicit Euler method (5.65) (dashed line), (b) by the implicit Euler method (5.66) (dotted line), (c) by the symplectic Euler method (5.68) (solid line), and (d) by the symplectic Euler method (5.69) (dashed-dotted line)

These results are in strong contrast to the numerical solutions of Eqs. (5.64) obtained with the symplectic Euler methods, which are presented in Figs. 5.1c, d and 5.2c, d. The trajectories are almost perfect ellipses for both symplectic methods, Eqs. (5.68) and (5.69). Moreover, the total energy \(H(t)\) (solid and dashed-dotted lines in Fig. 5.3) varies very little as a function of \(t\). Deviations from the mean value can only be observed around the perihelion, which is indicated by a solid square. These deviations compensate each other because of the symplectic nature of the method, so that no systematic drift builds up. This demonstrates that symplectic integrators are the appropriate technique for solving the equations of motion of Hamiltonian systems.
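The qualitative behavior of Fig. 5.3 can be reproduced with a few lines of code. The Python sketch below integrates Hamilton’s equations (5.64) with the initial conditions given above. It uses the explicit Euler method and one of the two symplectic Euler variants (momentum update first); we do not claim that this is the variant labelled (5.68). Unlike the text, the sketch uses the same step \(\varDelta t = 0.005\) for both methods. The run covers \(t \in [0, 10\pi ]\), about five periods of the exact orbit. These choices and all names are assumptions.

```python
import numpy as np

ECC = 0.6  # eccentricity e used in the text

def hamiltonian(q, p):
    return 0.5 * (p @ p) - 1.0 / np.sqrt(q @ q)

def grad_potential(q):
    # gradient of V(q) = -1/|q|; Hamilton's equations read q' = p, p' = -grad V(q)
    return q / np.sqrt(q @ q) ** 3

def explicit_euler(q, p, dt):
    return q + dt * p, p - dt * grad_potential(q)

def symplectic_euler(q, p, dt):
    # one of the two symplectic Euler variants: momentum first, then position
    p_new = p - dt * grad_potential(q)
    return q + dt * p_new, p_new

def energy_errors(step, dt, t_end):
    """Return H(0), the final and the maximal |H(t) - H(0)| along the run."""
    q = np.array([1.0 - ECC, 0.0])
    p = np.array([0.0, np.sqrt((1.0 + ECC) / (1.0 - ECC))])
    H0 = hamiltonian(q, p)
    max_err = 0.0
    for _ in range(int(round(t_end / dt))):
        q, p = step(q, p, dt)
        max_err = max(max_err, abs(hamiltonian(q, p) - H0))
    return H0, abs(hamiltonian(q, p) - H0), max_err

t_end = 10.0 * np.pi   # about five periods of the exact orbit (period 2*pi for H = -1/2)
H0, ee_final, ee_max = energy_errors(explicit_euler, 0.005, t_end)
_, se_final, se_max = energy_errors(symplectic_euler, 0.005, t_end)
print(H0, ee_final, se_max)
```

The symplectic Euler energy error stays bounded, with its largest excursions near the perihelion. The explicit Euler error keeps growing from one perihelion passage to the next.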

Summary

We concentrated on numerical methods to solve the initial value problem. The methods discussed here rely heavily on the methods developed for numerical integration, because we can always find an integral representation of this kind of ordinary differential equation. The simple integrators known from Chap. 4 were augmented by the Crank-Nicolson method, which is based on the trapezoidal rule introduced in Sect. 3.3. The methodological error of these simple single-step methods was reduced by Taylor series methods, linear multi-step methods, and Runge-Kutta methods. The latter take intermediate points within the time interval \([t_n,t_{n+1}]\) into account. In principle, it is possible to achieve almost arbitrary accuracy with such methods. Nevertheless, all these non-symplectic methods share a disadvantage: because of their methodological error, they violate energy conservation when applied to Hamiltonian systems. Symplectic integrators remedy this problem, so a short introduction to this topic was provided and the most important symplectic integrators were presented. The final discussion of Kepler’s two-body problem illustrated the various points discussed throughout this chapter.

Problems

1. Write a program to solve the Kepler problem numerically. The Hamilton function of the problem is defined as

$$\begin{aligned} H(p,q) = \frac{1}{2} \left( p_1^2 + p_2^2 \right) - \frac{1}{\sqrt{q_1^2 + q_2^2}}, \end{aligned}$$

and the initial conditions are given by

$$\begin{aligned} p_1(0) = 0, \quad q_1(0) = 1-e, \quad p_2(0) = \sqrt{\frac{1+e}{1-e}}, \quad q_2(0) = 0, \end{aligned}$$

where \(e = 0.6\). Derive Hamilton’s equations of motion and implement an algorithm which solves these equations based on the following methods:

(a) Explicit Euler,

(b) Symplectic Euler.

2. Plot the trajectories and the total energy as a function of time. You can use the results presented in Figs. 5.1 and 5.2 to check your code. Modify the initial conditions and discuss the results! Try to confirm Kepler’s laws of planetary motion with the help of your algorithm.

References

[1] Dorn, W.S., McCracken, D.D.: Numerical Methods with Fortran IV Case Studies. Wiley, New York (1972)

[2] Collatz, L.: The Numerical Treatment of Differential Equations. Springer, Berlin (1960)

[3] van Winckel, G.: Numerical Methods for Differential Equations. Lecture Notes, Karl-Franzens-Universität Graz, Austria (2012)

[4] Ascher, U.M., Petzold, L.R.: Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations. Society for Industrial and Applied Mathematics, Philadelphia (1998)

[5] Press, W.H., Teukolsky, S.A., Vetterling, W.T., Flannery, B.P.: Numerical Recipes in C++, 2nd edn. Cambridge University Press, Cambridge, UK (2002)

[6] Guillemin, V., Sternberg, S.: Symplectic Techniques in Physics. Cambridge University Press, Cambridge, UK (1990)

[7] Hairer, E.: Geometrical Integration: Symplectic Integrators. Lecture Notes, TU München, Germany (2010)

[8] Scheck, F.: Mechanics, 5th edn. Springer, Berlin (2010)

[9] Levi, D., Olver, P., Thomova, Z., Winternitz, P. (eds.): Symmetries and Integrability of Difference Equations. London Mathematical Society Lecture Note Series. Cambridge University Press, Cambridge, UK (2011)


Copyright information

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Stickler, B.A., Schachinger, E. (2014). Ordinary Differential Equations: Initial Value Problems. In: Basic Concepts in Computational Physics. Springer, Cham. https://doi.org/10.1007/978-3-319-02435-6_5


DOI: https://doi.org/10.1007/978-3-319-02435-6_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02434-9

Online ISBN: 978-3-319-02435-6

eBook Packages: Physics and Astronomy (R0)