Abstract

We discuss several strategies for the time integration of first order initial value problems. The explicit Euler forward difference has low error order. The much more accurate symmetric difference quotient can be used as the corrector step in combination with an explicit Euler predictor step and is often used for the time integration of partial differential equations. Methods with higher error order can be obtained from a Taylor series expansion, like the Nordsieck and Gear predictor-corrector methods which have been often applied in molecular dynamics calculations. Runge-Kutta methods are very important for ordinary differential equations. They are robust and allow an adaptive control of the step size. Very accurate results can be obtained for ordinary differential equations with extrapolation methods like the famous Gragg-Bulirsch-Stör method. Multistep methods use information from several points. Best known are Adams-Bashforth-Moulton methods and Gear methods (also known as backward differentiation methods), which are especially useful for stiff problems. The class of Verlet methods has been developed for molecular dynamics calculations. These are symplectic and time reversible and conserve energy over long trajectories.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Verlet Method

- Lower Order Error

- Gear Predictor-corrector Method

- Backward Differentiation Methods

- Predictor Step

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

Simulation of a physical system means to calculate the time evolution of a model system in many cases. We consider a large class of models which can be described by a first order initial value problem

where Y is the state vector (possibly of very high dimension) which contains all information about the system. Our goal is to calculate the time evolution of the state vector Y(t) numerically. For obvious reasons this can be done only for a finite number of values of t and we have to introduce a grid of discrete times t n which for simplicity are assumed to be equally spaced:Footnote 1

Advancing time by one step involves the calculation of the integral

which can be a formidable task since f(Y(t),t) depends on time via the time dependence of all the elements of Y(t). In this chapter we discuss several strategies for the time integration. The explicit Euler forward difference has low error order but is useful as a predictor step for implicit methods. A symmetric difference quotient is much more accurate. It can be used as the corrector step in combination with an explicit Euler predictor step and is often used for the time integration of partial differential equations. Methods with higher error order can be obtained from a Taylor series expansion, like the Nordsieck and Gear predictor-corrector methods which have been often applied in molecular dyamics calculations. Runge-Kutta methods are very important for ordinary differential equations. They are robust and allow an adaptive control of the step size. Very accurate results can be obtained for ordinary differential equations with extrapolation methods like the famous Gragg-Bulirsch-Stör method. If the solution is smooth enough, multistep methods are applicable, which use information from several points. Most known are Adams-Bashforth-Moulton methods and Gear methods (also known as backward differentiation methods), which are especially useful for stiff problems. The class of Verlet methods has been developed for molecular dynamics calculations. They are symplectic and time reversible and conserve energy over long trajectories.

1 The State Vector

The state of a classical N-particle system is given by the position in phase space, or equivalently by specifying position and velocity for all the N particles

The concept of a state vector is not restricted to a finite number of degrees of freedom. For instance a diffusive system can be described by the particle concentrations as a function of the coordinate, i.e. the elements of the state vector are now indexed by the continuous variable x

Similarly, a quantum particle moving in an external potential can be described by the amplitude of the wave function

Numerical treatment of continuous systems is not feasible since even the ultimate high end computer can only handle a finite number of data in finite time. Therefore discretization is necessary (Chap. 11), by introducing a spatial mesh (Sects. 11.2, 11.3, 11.6), which in the simplest case means a grid of equally spaced points

or by expanding the continuous function with respect to a finite set of basis functions (Sect. 11.5). The elements of the state vector then are the expansion coefficients

If the density matrix formalism is used to take the average over a thermodynamic ensemble or to trace out the degrees of freedom of a heat bath, the state vector instead is composed of the elements of the density matrix

2 Time Evolution of the State Vector

We assume that all information about the system is included in the state vector. Then the simplest equation to describe the time evolution of the system gives the change of the state vector

as a function of the state vector (or more generally a functional in the case of a continuous system). Explicit time dependence has to be considered for instance to describe the influence of an external time dependent field.

Some examples will show the universality of this equation of motion:

-

N-particle system

The motion of N interacting particles is described by

where the acceleration of a particle is given by the total force acting upon this particle and thus depends on all the coordinates and eventually time (velocity dependent forces could be also considered but are outside the scope of this book)

-

Diffusion

Heat transport and other diffusive processes are described by the diffusion equation

which in its simplest spatially discretized version for 1-dimensional diffusion reads

-

Waves

Consider the simple 1-dimensional wave equation

which by introducing the velocity \(g(\mathbf{x})=\frac{\partial}{\partial t}f(\mathbf{x})\) as an independent variable can be rewritten as

Discretization of space gives

-

Two-state quantum system

The Schrödinger equation for a two level system (for instance a spin-\(\frac{1}{2}\) particle in a magnetic field) reads

3 Explicit Forward Euler Method

The simplest method which is often discussed in elementary physics textbooks approximates the integrand by its value at the lower bound (Fig. 12.1):

The truncation error can be estimated from a Taylor series expansion

The explicit Euler method has several serious drawbacks

-

Low error order

Suppose you want to integrate from the initial time t 0 to the final time t 0+T. For a time step of Δt you have to perform N=T/Δt steps. Assuming comparable error contributions from all steps the global error scales as NΔt 2=O(Δt). The error gets smaller as the time step is reduced but it may be necessary to use very small Δt to obtain meaningful results.

-

Loss of orthogonality and normalization

The simple Euler method can produce systematic errors which are very inconvenient if you want, for instance, to calculate the orbits of a planetary system. This can be most easily seen from a very simple example. Try to integrate the following equation of motion (see Example 1.5 on page 12):

The exact solution is obviously given by a circular orbit in the complex plane:

Application of the Euler method gives

and you find immediately

which shows that the radius increases continually even for the smallest time step possible (Fig. 12.2).

The same kind of error appears if you solve the Schrödinger equation for a particle in an external potential or if you calculate the rotational motion of a rigid body. For the N-body system it leads to a violation of the conservation of phase space volume. This can introduce an additional sensitivity of the calculated results to the initial conditions. Consider a harmonic oscillator with the equation of motion

Application of the explicit Euler method gives

The change of the phase space volume (Fig. 12.3) is given by the Jacobi determinant

In this case the phase space volume increases continuously.

4 Implicit Backward Euler Method

Alternatively let us make a step backwards in time

which can be written as (Fig. 12.4)

Taylor series expansion gives

which shows that the error order again is O(Δt 2). The implicit method is sometimes used to avoid the inherent instability of the explicit method. For the examples in Sect. 12.3 it shows the opposite behavior. The radius of the circular orbit as well as the phase space volume decrease in time. The gradient at future time has to be estimated before an implicit step can be performed.

5 Improved Euler Methods

The quality of the approximation can be improved significantly by employing the midpoint rule (Fig. 12.5)

The error is smaller by one order of Δt:

The future value \(Y(t+\frac{\Delta t}{2})\) can be obtained by two different approaches:

-

Predictor-corrector method

Since \(f(Y(t+\frac{\Delta t}{2}),t_{n}+\frac{\Delta t}{2})\) is multiplied with Δt, it is sufficient to use an approximation with lower error order. Even the explicit Euler step is sufficient. Together the following algorithm results:

-

Averaging (Heun method)

The average of f(Y(t n ),t n ) and f(Y(t n +Δt),t+Δt) is another approximation to the midpoint value of comparable quality (Fig. 12.6).

Expansion around t n +Δt/2 gives

Inserting the average in (12.36) gives the following algorithm, which is also known as improved polygon method and corresponds to the trapezoidal rule for the integral (4.13) or to a combination of explicit and implicit Euler step:

In the special case of a linear function f(Y(t),t)=FY(t) (for instance rotational motion or diffusion) this can be solved formally by

Numerically it is not necessary to perform the matrix inversion. Instead a linear system of equations is solved:

In certain cases the Heun method conserves the norm of the state vector, for instance if F has only imaginary eigenvalues (as for the 1-dimensional Schrödinger equation, see page 393).

In the general case a predictor step has to be made to estimate the state vector at t n +Δt before the Heun expression (12.40) can be evaluated:

6 Taylor Series Methods

Higher order methods can be obtained from a Taylor series expansion

The total time derivative can be expressed as

where the partial derivatives have been abbreviated in the usual way by \(\frac{\partial f}{\partial t}=\dot{f}\) and \(\frac{\partial f}{\partial Y}=f'\). Higher derivatives are given by

6.1 Nordsieck Predictor-Corrector Method

Nordsieck [184] determines an interpolating polynomial of degree m. As variables he uses the 0th to mth derivativesFootnote 2 evaluated at the current time t, for instance for m=5 he uses the variables

Taylor expansion gives approximate values at t+Δt

where the next term of the Taylor series \(e(t)=\frac{\Delta t^{5}}{6!}\frac{\mathrm {d}^{6}}{\mathrm {d}t^{6}}Y(t)\) has been introduced as an approximation to the truncation error of the predicted values Y p,g p, etc. It can be estimated from the second equation

This predictor-corrector method turns out to be rather unstable. However, stability can be achieved by slightly modifying the coefficients of the corrector step. Nordsieck suggested to use

6.2 Gear Predictor-Corrector Methods

Gear [101] designed special methods for molecular dynamics simulations (Chap. 14) where Newton’s law (12.15) has to be solved numerically. He uses again a truncated Taylor expansion for the predictor step

to calculate new coordinates etc. \(\mathbf{r}_{n+1}^{p},\mathbf{v}_{n+1}^{p},\mathbf{a}_{n+1}^{p}\dots\) (Fig. 12.7). The difference between the predicted acceleration and that calculated using the predicted coordinates

is then used as a measure of the error to correct the predicted values according to

The coefficients c i were determined to optimize stability and accuracy. For instance the fourth order Gear corrector reads

Gear methods are generally not time reversible and show systematic energy drifts. A reversible symplectic predictor-corrector method has been presented recently by Martyna and Tuckerman [169].

7 Runge-Kutta Methods

If higher derivatives are not so easily available, they can be approximated by numerical differences. f is evaluated at several trial points and the results are combined to reproduce the Taylor series as close as possible [48].

7.1 Second Order Runge-Kutta Method

Let us begin with two function values. As common in the literature we will denote the function values as K 1,K 2,… . From the gradient at time t n

we estimate the state vector at time t n +Δt as

The gradient at time t n +Δt is approximately

which has the Taylor series expansion

and application of the trapezoidal rule (4.13) gives the 2nd order Runge-Kutta method

which in fact coincides with the improved Euler or Heun method. Taylor series expansion shows how the combination of K 1 and K 2 leads to an expression of higher error order:

7.2 Third Order Runge-Kutta Method

The accuracy can be further improved by calculating one additional function value at mid-time. From (12.76) we estimate the gradient at mid-time by

The gradient at time t n +Δt is then estimated as

Inserting the expansion (12.82) gives the leading terms

Applying Simpson’s rule (4.14) we combine the three gradients to get the 3rd order Runge-Kutta method

where the Taylor series

recovers the exact Taylor series (12.44) including terms of order O(Δt 3).

7.3 Fourth Order Runge-Kutta Method

The 4th order Runge-Kutta method (RK4) is often used because of its robustness and accuracy. It uses two different approximations for the midpoint

and Simpson’s rule (4.14) to obtain

Expansion of the Taylor series is cumbersome but with the help of an algebra program one can easily check that the error is of order Δt 5.

8 Quality Control and Adaptive Step Size Control

For practical applications it is necessary to have an estimate for the local error and to adjust the step size properly. With the Runge-Kutta method this can be achieved by a step doubling procedure. We calculate y n+2 first by two steps Δt and then by one step 2Δt. This needs 11 function evaluations as compared to 8 for the smaller step size only (Fig. 12.8). For the 4th order method we estimate the following errors:

The local error can be estimated from

The step size Δt can now be adjusted to keep the local error within the desired limits.

9 Extrapolation Methods

Application of the extrapolation method to calculate the integral \(\int_{t_{n}}^{t_{n+1}}f(t)\,\mathrm{d}t\) produces very accurate results but can also be time consuming. The famous Gragg-Bulirsch-Stör method [244] starts from an explicit midpoint rule with a special starting procedure. The interval Δt is divided into a sequence of N sub-steps

First a simple Euler step is performed

and then the midpoint rule is applied repeatedly to obtain

Gragg [111] introduced a smoothing procedure to remove oscillations of the leading error term by defining

He showed that both approximations (12.91, 12.92) have an asymptotic expansion in powers of h 2 and are therefore well suited for an extrapolation method. The modified midpoint method can be summarized as follows:

The number of sub-steps N is increased according to a sequence like

or

After each successive N is tried, a polynomial extrapolation is attempted. This extrapolation returns both the extrapolated values and an error estimate. If the error is still too large then N has to be increased further. A more detailed discussion can be found in [233, 234].

10 Linear Multistep Methods

All methods discussed so far evaluated one or more values of the gradient f(Y(t),t) only within the interval t n ⋯t n +Δt. If the state vector changes sufficiently smooth then multistep methods can be applied. Linear multistep methods use a combination of function values Y n and gradients f n from several steps

where the coefficients α, β are determined such, that a polynomial of certain order r is integrated exactly. The method is explicit if β 0=0 and implicit otherwise. Multistep methods have a small local error and need fewer function evaluations. On the other hand, they have to be combined with other methods (like Runge-Kutta) to start and end properly and it can be rather complicated to change the step size during the calculation. Three families of linear multistep methods are commonly used: explicit Adams-Bashforth methods, implicit Adams-Moulton methods and backward differentiation formulas (also known as Gear formulas [102]).

10.1 Adams-Bashforth Methods

The explicit Adams-Bashforth method of order r uses the gradients from the last r−1 steps (Fig. 12.9) to obtain the polynomial

and to calculate the approximation

which is generally a linear combination of f n ⋯f n−r+1. For example, the Adams-Bashforth formulas of order 2, 3, 4 are:

10.2 Adams-Moulton Methods

The implicit Adams-Moulton method also uses the yet not known value Y n+1 (Fig. 12.10) to obtain the polynomial

The corresponding Adams-Moulton formulas of order 2 to 4 are:

10.3 Backward Differentiation (Gear) Methods

Gear methods [102] are implicit and usually combined with a modified Newton method. They make use of previous function values Y n ,Y n−1… and the gradient f n+1 at time t+Δt. Only methods of order r≤6 are stable and useful. The general formula (12.95) is

For r=1 this becomes

and all linear polynomials

are integrated exactly if

which is the case for

Hence the first order Gear method is

which coincides with the implicit Euler method. The higher order stable Gear methods are given by

This class of algorithms is useful also for stiff problems (differential equations with strongly varying eigenvalues).

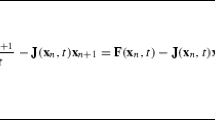

10.4 Predictor-Corrector Methods

The Adams-Bashforth-Moulton method combines the explicit method as a predictor step to calculate an estimate \(y_{n+1}^{p}\) with a corrector step using the implicit method of same order. The general class of linear multistep predictor-corrector methods [100] uses a predictor step

which is corrected using the formula

and further iterations

The coefficients α,β have to be determined to optimize accuracy and stability.

11 Verlet Methods

For classical molecular dynamics simulations it is necessary to calculate very long trajectories. Here a family of symplectic methods often is used which conserve the phase space volume [3, 116, 193, 255, 257, 265]. The equations of motion of a classical interacting N-body system are

where the force acting on atom i can be calculated once a specific force field is chosen. Let us write these equations as a system of first order differential equations

where x(t) and v(t) are functions of time and the forces m a(x(t)) are functions of the time dependent coordinates.

11.1 Liouville Equation

We rewrite (12.117) as

where the Liouville operator \(\mathcal{L}\) acts on the vector containing all coordinates and velocities:

The Liouville equation (12.118) can be formally solved by

For a better understanding let us evaluate the first members of the Taylor series of the exponential:

But since

we recover

11.2 Split-Operator Approximation

We introduce a small time step Δt=t/N and write

For the small time step Δt the split-operator approximation can be used which approximately factorizes the exponential operator. For example, write the Liouville operator as the sum of two terms

and make the approximation

Each of the two factors simply shifts positions or velocities

since these two steps correspond to either motion with constant velocities or constant coordinates and forces.

11.3 Position Verlet Method

Often the following approximation is used which is symmetrical in time

The corresponding algorithm is the so called position Verlet method (Fig. 12.11):

11.4 Velocity Verlet Method

If we exchange operators A and B we have

which produces the velocity Verlet algorithm (Fig. 12.12):

11.5 Störmer-Verlet Method

The velocity Verlet method is equivalent to Störmer’s version [208] of the Verlet method which is a two step method given by

To show the equivalence we add two consecutive position vectors

which simplifies to

This can be expressed as the difference of two consecutive velocities:

Now we substitute

to get

which simplifies to

Thus the equations of the velocity Verlet algorithm have been recovered. However, since the Verlet method is a 2-step method, the choice of initial values is important. The Störmer-Verlet method starts from two coordinate sets x 0,x 1. The first step is

The velocity Verlet method, on the other hand, starts from one set of coordinates and velocities x 1, v 1. Here the first step is

The two methods give the same resulting trajectory if we choose

If, on the other hand, x 0 is known with higher precision, the local error order of Störmer’s algorithm changes as can be seen from addition of the two Taylor series

which gives

11.6 Error Accumulation for the Störmer-Verlet Method

Equation (12.153) gives only the local error of one single step. Assume the start values x 0 and x 1 are exact. The next value x 2 has an error with the leading term Δx 2=αΔt 4. If the trajectory is sufficiently smooth and the time step not too large the coefficient α will vary only slowly and the error of the next few iterations is given by

This shows that the effective error order of the Störmer-Verlet method is only O(Δt 3) similar to the velocity Verlet method.

11.7 Beeman’s Method

Beeman and Schofield [17, 229] introduced a method which is very similar to the Störmer-Verlet method but calculates the velocities with higher precision. This is important if, for instance, the kinetic energy has to be calculated. Starting from the Taylor series

the derivative of the acceleration is approximated by a backward difference

This equation can be used as an explicit step to update the coordinates or as a predictor step in combination with the implicit corrector step

which can be applied repeatedly (usually two iterations are sufficient). Similarly, the Taylor series of the velocity is approximated by

Inserting the velocity from (12.158) we obtain the corrector step for the velocity

In combination with (12.157) this can be replaced by

Together, (12.157) and (12.161) provide an explicit method which is usually understood as Beeman’s method. Inserting the velocity (12.160) from the previous step

into (12.157) gives

which coincides with the Störmer-Verlet method (12.138). We conclude that Beeman’s method should produce the same trajectory as the Störmer-Verlet method if numerical errors can be neglected and comparable initial values are used. In fact, the Störmer-Verlet method may suffer from numerical extinction and Beeman’s method provides a numerically more favorable alternative.

11.8 The Leapfrog Method

Closely related to the Verlet methods is the so called leapfrog method [116]. It uses the simple decomposition

but introduces two different time grids for coordinates and velocities which are shifted by Δt/2 (Fig. 12.13).

The leapfrog algorithm is given by

Due to the shifted arguments the order of the method is increased as can be seen from the Taylor series:

One disadvantage of the leapfrog method is that some additional effort is necessary if the velocities are needed. The simple expression

is of lower error order than (12.168).

12 Problems

Problem 12.1

(Circular orbits)

In this computer experiment we consider a mass point moving in a central field. The equation of motion can be written as the following system of first order equations:

For initial values

the exact solution is given by

The following methods are used to calculate the position x(t),y(t) and the energy

-

The explicit Euler method (12.3)

-

The 2nd order Runge-Kutta method (12.7.1)

which consists of the predictor step

and the corrector step

To start the Verlet method we need additional coordinates at time −Δt which can be chosen from the exact solution or from the approximation

-

The leapfrog method (12.11.8)

$$\begin{aligned} &x(t_{n+1})=x(t_{n})+v_{x}(t_{n+\frac{1}{2}})\Delta t \end{aligned}$$(12.189)$$\begin{aligned} &y(t_{n+1})=y(t_{n})+v_{y}(t_{n+\frac{1}{2}})\Delta t \end{aligned}$$(12.190)$$\begin{aligned} &v_{x}(t_{n+\frac{1}{2}})=v_{x}(t_{n-\frac{1}{2}})- \frac{x(t_{n})}{R(t_{n})^{3}}\Delta t \end{aligned}$$(12.191)$$\begin{aligned} &v_{y}(t_{n+\frac{1}{2}})=v_{y}(t_{n-\frac{1}{2}})- \frac{y(t_{n})}{R(t_{n})^{3}}\Delta t \end{aligned}$$(12.192)

where the velocity at time t n is calculated from

To start the leapfrog method we need the velocity at time \(t_{-\frac{1}{2}}\) which can be taken from the exact solution or from

Compare the conservation of energy for the different methods as a function of the time step Δt. Study the influence of the initial values for leapfrog and Verlet methods.

Problem 12.2

(N-body system)

In this computer experiment we simulate the motion of three mass points under the influence of gravity. Initial coordinates and velocities as well as the masses can be varied. The equations of motion are solved with the 4th order Runge-Kutta method with quality control for different step sizes. The local integration error is estimated using the step doubling method. Try to simulate a planet with a moon moving round a sun!

Problem 12.3

(Adams-Bashforth method)

In this computer experiment we simulate a circular orbit with the Adams-Bashforth method of order 2⋯7. The absolute error at time T

is shown as a function of the time step Δt in a log-log plot. From the slope

the leading error order s can be determined. For very small step sizes rounding errors become dominating which leads to an increase Δ∼(Δt)−1.

Determine maximum precision and optimal step size for different orders of the method. Compare with the explicit Euler method.

Notes

- 1.

Control of the step width will be discussed later.

- 2.

In fact the derivatives of the interpolating polynomial which exist even if higher derivatives of f do not exist.

References

M.P. Allen, D.J. Tildesley, Computer Simulation of Liquids (Oxford University Press, London, 1989). ISBN 0-19-855645-4

D. Beeman, J. Comp. Physiol. 20, 130 (1976)

J.C. Butcher, The Numerical Analysis of Ordinary Differential Equations: Runge-Kutta and General Linear Methods (Wiley, New York, 1987)

C.W. Gear, Math. Comput. 21, 146 (1967)

C.W. Gear, Numerical Initial Value Problems in Ordinary Differential Equations (Prentice Hall, Englewood Cliffs, 1971)

C.W. Gear, IEEE Trans. Circuit Theory 18, 89–95 (1971)

W.B. Gragg, SIAM J. Numer. Anal. 2, 384 (1965)

E. Hairer, C. Lubich, G. Wanner, Acta Numer. 12, 399 (2003)

G.J. Martyna, M.E. Tuckerman, J. Chem. Phys. 102, 8071 (1995)

A. Nordsieck, Math. Comput. 16, 22 (1962)

I.P. Omelyan, I.M. Mryglod, R. Folk, Comput. Phys. Commun. 151, 272 (2003)

W.H. Press, S.A. Teukolsky, W.T. Vetterling, B.P. Flannery, Second-order conservative equations, in Numerical Recipes: The Art of Scientific Computing, 3rd edn. (Cambridge University Press, Cambridge, 2007), Sect. 17.4

P. Schofield, Comput. Phys. Commun. 5, 17 (1973)

L.F. Shampine, IMA J. Numer. Anal. 3, 383 (1983)

L.F. Shampine, L.S. Baca, Numer. Math. 41, 165 (1983)

J. Stör, R. Bulirsch, Introduction to Numerical Analysis, 3rd revised edn. (Springer, New York, 2010). ISBN 978-1441930064

S.-H. Tsai et al., Braz. J. Phys. 34, 384 (2004)

M. Tuckerman, B.J. Berne, J. Chem. Phys. 97, 1990 (1992)

L. Verlet, Phys. Rev. 159, 98 (1967)

Author information

Authors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Scherer, P.O.J. (2013). Equations of Motion. In: Computational Physics. Graduate Texts in Physics. Springer, Heidelberg. https://doi.org/10.1007/978-3-319-00401-3_12

Download citation

DOI: https://doi.org/10.1007/978-3-319-00401-3_12

Publisher Name: Springer, Heidelberg

Print ISBN: 978-3-319-00400-6

Online ISBN: 978-3-319-00401-3

eBook Packages: Physics and AstronomyPhysics and Astronomy (R0)