Abstract

Understanding how cooperation spreads across social groups is fundamental in predicting how people will interact with each other in relation to the use and exploitation of the resources they are provided with. When social interactions can be mapped to a network, questions arise about the impact of the connection structure which can benefit from the literature developed for a dynamical systems. One model that is widely used as a model to understand the dynamics of cooperation is the replicator equation. While research has been proposed to adapt that equation to a structured graph, we offer a straightforward approach by benefiting from the networked SI diffusion model and replicator equation to create a replicator equation on a network with state-dependent diffusive constant. This approach can be applied to any network structure and features separation of the game and the information diffusion mechanism. The equilibria towards which the system evolves are here characterised and discussed.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Promoting cooperation in populations of selfish individuals is an extensive field of research. Numerous studies have used well-mixed or fully connected networks as a model [15]. At the beginning of the 21st century, it was recognised that some systems are well suited to be modelled as networks, which prompted the investigation of the evolution of cooperation in structured networks [8].

A completely connected network, where individuals represented by the vertices of the graph and the edges specify who interacts with whom, can also represent a well-mixed population in which everyone can interact with everyone else [5]. However, this does not exploit the flexibility of using a network to capture the variety of social connections [13] influencing the game. Because of that, other kinds of simple networks were proposed in this context, including trees, star networks [16], bipartite and line graphs [3].

A lattice network was proposed in [18, 22] as the model, which was appropriate to capture emerging behaviour in the classical prisoner dilemma [10], as also mentioned in [4]. The more frequent use of lattice networks followed from using a uniform, yet not fully mixed, environment in which most of the models originating in statistical physics can be used to describe the emergence and evolution of cooperation [12]. Despite their dissimilarity to real social networks, they offer a beneficial starting point to investigate how structure affects the development of cooperation [12]. Beyond regular graphs [4], more complex network structures have been considered, including the random geometric network [5] and the scale-free network [17]. Although more rare, some games dynamics and control studies have considered generic network structures [16].

Replicator dynamics is widely used in the evolution of the strategy and the literature offers a number of enhancement and variation to the original formulation. It works on the assumption that the strategies adopted by players who are successful in the game are adopted by players who are less successful. The probability of switching strategy is a function of the difference in player’s payoff average between each strategy in the game. The strategy of players with greater fitness or reward is replicated by those with lower achievement in the game as the game is played repeatedly [20].

Related to structure, the dynamics of the conventional replicator assumes that the players are engaged in a game and the adoption of global strategies. Each player engages with a representative sample of the population, and if a strategy produces a payoff that is higher than the average, the strategy will be chosen and spread[6]. Understanding that the game could be played on a structured population with a general network, the individual best strategy may be different from player to player, as each engage in games with a different set of neighbours. This, motivates us to consider a multiplier, function of the perceived payoff from choosing a strategy, to model its dynamics in a general network.

Recently, there has been an increasing interest in the research community in studying replicator equation on graph. One of the graph structures is the regular graph [11] which has been considered also in multiplex graphs [14]. Another approach is to modify the regular graph into a modular regular graph [2]. Beyond that, the replicator equation has been used in conjunction with different types of network, including random regular networks, Erdos-Rényi networks, Watts-Strogatz small world networks, and Barabási-Albert scale-free networks[19].

Application to even more general graphs has been attempted in [7][21]. This offers some benefit to understanding the impact of network structure on evolutionary dynamics. However, It is quite popular to assume the strategy change happens simultaneously, with consequent overlooking of dynamic aspects of the game and information diffusion. Understanding this potential for contribution, we develop a model to capture evolutionary dynamics that can be implemented in any population structure with some additional features.

The model mimic the shape of the usual susceptible-infected (SI) diffusion model on a network. Relating it to the structure of the replicator equation, the SI model’s infection rate is defined based on the payoff matrix of the replicator model and the payoffs the players acquire from it. This combination of the replicator equation and the susceptible-infected (SI) model hints at mechanisms of information diffusion in the evolution of the strategy on a general network.

There are some novel benefits of the combination. First, it becomes possible to separate the game and information diffusion process using this new model. The effect of the game and the diffusion process toward cooperation can be better understood separately. As this can also be extended into multilayer network games, it opens up the development of a novel model that uses other diffusion processes or opinion dynamics and other game types.

2 The Model

The network is modelled as a graph \(G=(V,E)\). The node \(v_i\) is in the set of nodes V. It can be written as

E is a set of ordered pair of nodes, also known as edges, that is \(\epsilon \subseteq \upsilon \times \upsilon \).

Every node will have specific interactions with other nodes based on the connection structure, as captured by the adjacency matrix \(A=[a_{ij}]\). It is necessary to add the new variable of the neighbour set \(H_{i}\), which indicates the neighbour of i.

We consider a Public Goods game with continuous strategy set \(x:= [0,1]\) mapping the extent by which each node is contributing (hence cooperating) to the public good. With \(x_i\) indicating the amount by which a node cooperates, \(1-x_i\) will be the proportion of defection. This approach is often referred to as individual-based mean field [9], where the state variable can be intended as a continuous variable or as the probability that the node is in one of the binary states 0 (defection) or 1 (cooperation). In each round of the game, interactions will incur some payoff for each player or agent, which is the function of \(x_i(t)\) and \(x_j(t)\) as written in Equation

After receiving the payoff, each player will update their strategy. This change is modelled in the concept of strategy update dynamics, which depend on strategy and payoff in the neighbourhood. It is written as follows;

where the initial condition is \(x_i(0)\) and the control is \(u_i(t)\) for each agent \( i \in V\).

3 Replicator Equation Model Development

3.1 Related Model

Before the game dynamics is introduced, let us introduce the dynamics of a well-known epidemic model, known as Susceptible-Infected (SI) model. In fact, we shall note how the diffusion of cooperation within the network can be modelled as a pandemic, and in particular by means of the SI model. For a constant size of the population, only the fraction of infected can be considered, with the susceptible being the complement to 1 to it. The differential model in scalar and vector form is [1]

The \(a_{ij}^k\) has a value 0 or 1, which 1 means that there is an edge between \(v_i\) and \(v_j\) in the layer k. This is the networked version of the basic SI model in a well-mixed population, which is expressed as

It comes from the idea that the cooperation condition is transformed from its fraction of defection multiplied by its interaction with the neighbour’s fraction of cooperation, summed up.

Understanding the nature of replicator equation and network SI model, there is an opportunity to combine these two functions to model replicator equation in a general network. The connection between two models is as follows:

-

Differential equations can represent the replicator and SI epidemic models with continuous time dynamics,

-

The population is divided into many segments or groups in both models, often called compartments in the SI model,

-

In both models, the rates of change depend on how people in the population interact with each other,

-

Both models have equilibrium points that represent stable or steady-state conditions.

-

Both models can be examined using related mathematical methods, such as stability analysis, to determine how the system behaves around equilibrium points.

Considering the similarity and connection between two models, the following section will explain the combination process of the two models.

3.2 Combining the SI Model and Replicator Equation

The payoff matrix that will be used for cooperation and defection is

The basic equation of replicator is [5]

where,

The equation introduces new variables \(f_c\), \(f_d\), and \(\bar{f}\) which mean the average payoff of the cooperator, the defector, and the population, respectively. Based on Eqs. 9 and 8, the modified replicator equation will be:

or,

The equivalence between an SI model in Eq. 6 and the replicator Eq. 11 becomes evident when considering

Understanding the similarity between the networked SI model in Eq. 6 and the replicator equation for the evolutionary game in Eq. 11, the networked evolutionary game can be modelled as a cooperation contagion process. Rather than representing infectious, the variables x represent cooperation and \(1-x\) represent defection. The \(\beta \) value is probability of contagion of cooperation, which represent how superior cooperation compared to defection, and influenced by the amount of cooperator (namely, x)

3.3 The Network Structure of Information Diffusion

Using the concept from [1], \((1-x)\cdot x\) can be a diffusion process in the SI model. Therefore, it means that \(1-x\) is a percentage of defection in the nodes. It may change by the proportion of cooperation x with its neighbour, multiplied by the contagion factor \(\beta \). The new equation will be

where \(\bar{\textbf{x}}(t)\) is the average value of \(\textbf{x}\) according to its neighbour at time t or

Because the information dissemination process will include not only the neighbour but also self-reinforcing belief loops A will be given by the adjacency matrix added with the identity matrix or \(A= A^* + I\).

3.4 Network Structure on Game

As has been introduced in Eq. 12, we assume that the rate at which cooperation spreads depends on the expected payoff difference between cooperation and defection. In particular, in well-mixed population, a player will expect a payoff from cooperating equal to \(f_c= x \cdot P + (1-x) \cdot Q\), which x considers the fraction of population expected to cooperate and \(1-x\) expected to defect. Likewise, the expected reward from defection will be \(f_d= x \cdot R + (1-x) \cdot S\).

Introducing the role of network structure on \(\beta \), will modify the equation to become:

This can be simplified to become

Equation 16 shows the possible role of the network structure in the game. The variable \(\beta \) represents the cooperator payoff minus the defector payoff. Equation \(x_j(P-R)\) means that the player i has the value \(\beta \) which is the result of payoff of cooperation (P) minus payoff of defection (R), multiplied by \(x_j\). The variable \(x_j\) is the fraction of cooperation of the neighbour of i. The same applies for \((1-x_j)(Q-S)\) but for the defecting neighbour. When the neighbour is related to the network structure, Eq. 16 can be modified into

In the matrix form it will become,

Then the complete equation from 18 and 13 will be

where \(\odot \) is the hadamard product between two vectors or two matrices.

4 Results

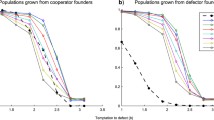

We can then offer some results about the system’s equilibrium through numerical simulations. After experimenting with other kinds of networks which produce consistent results, We present a result from the Erdos-Renyi network with ten nodes. The initial value of \(\textbf{x}\) are equally spread between 0 and 0.9 A key difference with the classical SI dynamics is in the value of \(\beta =f_c-fd\), which in this case is between -1 and 1. This is because in the SI model, \(\beta \) is a probability of transformation \(1-x\) into x. Therefore, it should not be greater than 1 for positive value of \(\dot{x}\) (Increasing x) and should not be less than 1 for negative value of \(\dot{x}\) (decreasing x). In other words \(-1\le \beta \le 1\). Therefore, \(|Q-S|\le 1\) and \(|P-R|\le 1\).

The simulation is set as an anticoordination game. For the anticoordination game, Q and R in the payoff matrix should be the highest value. Therefore, \(0\le \max \{Q,R\}-\min \{P,S\} \le 2\)

Looking at Eq. 19, the payoff matrix component that affects \(\dot{x}\) is not P,Q,R, or S individually, but \(P-R\) and \(Q-S\). It is consistent that if the value of P and R is changed but the value of \(P-R\) remains the same, the equilibrium point will not change. The same applies for \(Q-S\). The equilibrium point will remain the same even if the pay-off matrix is changed, as long as \(P-R\) and \(Q-S\) are the same.

Thus, we suggest that the equilibrium point is related to the ratio between the difference of P,R and the difference of Q,S. Or in other words,

The simulation also shows that the higher \(|\frac{Q-S}{P-R}|\) will make the equilibrium of \(\textbf{x}\) higher. The result of the simulation using some value of \(|\frac{Q-S}{P-R}|\) is shown in Fig. 1.

Value of \(\textbf{x}\) using dynamical equation Eq. 19 where value of \(|\frac{Q-S}{P-R}|\) equal to (a) 0.1,(b) 1, and (c) 10.

5 Conclusion

The replicator dynamics, originally implemented in a well-mixed population, is one of the most widely used methods as is or developed with some enhancement and variation. We propose a new approach to model the replicator equation in a general graph. Although some of the previous findings merge the game and strategy diffusion process, we try to separate those. Taking advantage of the replicator equation and SI model, the mechanism of the game and strategy diffusion process can be better understood.

This modelling approach opens up possibilities for future development. With the separate mechanism of game and information diffusion, it can be extended into a two-layer network. The diffusion process can also be exchanged with another spreading mechanism in the network, such as the opinion dynamic and another more complex model and mechanism.

References

Bullo, F.: Lectures on Network Systems (2018)

Cassese, D.: Replicator equation on networks with degree regular communities. Appl. Network Sci. 3, 29 (2018). https://doi.org/10.1007/s41109-018-0083-2

Das, S., Eksin, C.: Approximate submodularity of maximizing anticoordination in network games. In: 2022 IEEE 61st Conference on Decision and Control (CDC), pp. 3151–3157 (2022). https://doi.org/10.1109/CDC51059.2022.9993180

Du, J., Wu, Z.: Evolutionary dynamics of cooperation in dynamic networked systems with active striving mechanism. Appl. Math. Comput. 430, 127295 (2022). https://doi.org/10.1016/J.AMC.2022.127295

Ghivarello, S., Antonioni, A.: Coevolution of individual perception and cooperative behavior in the norm compliance dilemma (2022)

Hilbe, C.: Local replicator dynamics: a simple link between deterministic and stochastic models of evolutionary game theory. Bull. Math. Biol. 73, 2068–2087 (2011). https://doi.org/10.1007/s11538-010-9608-2

Li, J.Y., Wu, W.H., Li, Z.Z., Wang, W.X., Zhang, B.: Data-driven evolutionary game models for the spread of fairness and cooperation in heterogeneous networks. Front. Psychiatry 14, 1131769 (2023). https://doi.org/10.3389/fpsyt.2023.1131769

Lleberman, E., Hauert, C., Howak, M.A.: Evolutionary dynamics on graphs. Nature 433, 312–316 (2005). https://doi.org/10.1038/nature03204

Mieghem, P.V., Omic, J., Kooij, R.: Virus spread in networks. IEEE/ACM Trans. Networking 17, 1–14 (2009). https://doi.org/10.1109/TNET.2008.925623

Nowak, M.A., May, R.M.: Evolutionary games and spatial chaos. Nature 359, 826–829 (1992). https://doi.org/10.1038/359826a0

Ohtsuki, H., Nowak, M.A.: The replicator equation on graphs. J. Theor. Biol. 243, 86–97 (2006). https://doi.org/10.1016/j.jtbi.2006.06.004

Perc, M., Gómez-Gardeñes, J., Szolnoki, A., Floría, L.M., Moreno, Y.: Evolutionary dynamics of group interactions on structured populations: a review. J. R. Soc. Interface 10, 20120997 (2013). https://doi.org/10.1098/rsif.2012.0997

Perc, M., Jordan, J.J., Rand, D.G., Wang, Z., Boccaletti, S., Szolnoki, A.: Statistical physics of human cooperation. Phys. Rep. 687, 1–51 (2017). https://doi.org/10.1016/j.physrep.2017.05.004

Requejo, R.J., Díaz-Guilera, A.: Replicator dynamics with diffusion on multiplex networks. Phys. Rev. E 94, 022301 (2016). https://doi.org/10.1103/PhysRevE.94.022301

Riehl, J.R., Cao, M.: Towards control of evolutionary games on networks. In: 53rd IEEE Conference on Decision and Control, pp. 2877–2882 (2014). https://doi.org/10.1109/CDC.2014.7039831

Riehl, J.R., Cao, M.: Towards optimal control of evolutionary games on networks. IEEE Trans. Autom. Control 62, 458–462 (2017). https://doi.org/10.1109/TAC.2016.2558290

Santos, F.C., Pacheco, J.M.: Scale-free networks provide a unifying framework for the emergence of cooperation. Phys. Rev. Lett. 95, 098104 (2005). https://doi.org/10.1103/PhysRevLett.95.098104

Szolnoki, A., Perc, M.: Reward and cooperation in the spatial public goods game. Europhys. Lett.=Europhys. Lett. 92, 38003 (2010). https://doi.org/10.1209/0295-5075/92/38003

Tan, S., Wang, Y.: Graphical nash equilibria and replicator dynamics on complex networks. IEEE Trans. Neural Networks Learn. Syst. 31, 1831–1842 (2020). https://doi.org/10.1109/TNNLS.2019.2927233

Taylor, P.D., Jonker, L.B.: Evolutionary stable strategies and game dynamics. Math. Biosci. 40, 145–156 (1978). https://doi.org/10.1016/0025-5564(78)90077-9

Veelen, M.V.: The replicator dynamics with n players and population structure. J. Theor. Biol. 276, 78–85 (2011). https://doi.org/10.1016/j.jtbi.2011.01.044

Wang, S., Chen, X., Xiao, Z., Szolnoki, A.: Decentralized incentives for general well-being in networked public goods game. Appl. Math. Comput. 431, 127308 (2022)

Acknowledgement

This work funding was supported by Puslapdik BPI (Pusat Layanan Pendidikan Beasiswa Pendidikan Indonesia) Ministry of Education, Culture, Research and Technology of Indonesia, LPDP (Lembaga Pengelola Dana Pendidikan) Ministry of Finance of Indonesia, and Telkom University.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Aurachman, R., Punzo, G. (2024). On the Relation Between Replicator Evolutionary Dynamics and Diffusive Models on General Networks. In: Cherifi, H., Rocha, L.M., Cherifi, C., Donduran, M. (eds) Complex Networks & Their Applications XII. COMPLEX NETWORKS 2023. Studies in Computational Intelligence, vol 1142. Springer, Cham. https://doi.org/10.1007/978-3-031-53499-7_29

Download citation

DOI: https://doi.org/10.1007/978-3-031-53499-7_29

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-53498-0

Online ISBN: 978-3-031-53499-7

eBook Packages: EngineeringEngineering (R0)