Abstract

Digital hardware Trojans are integrated circuits whose implementation differs from the specification in an arbitrary and malicious way. For example, the circuit can differ from its specified input/output behavior after some fixed number of queries (known as “time bombs”) or on some particular input (known as “cheat codes”).

To detect such Trojans, countermeasures using multiparty computation (MPC) or verifiable computation (VC) have been proposed. On a high level, to realize a circuit with specification \(\mathcal{F}\) one has more sophisticated circuits \(\mathcal{F}^\diamond \) manufactured (where \(\mathcal{F}^\diamond \) specifies an MPC or VC of \(\mathcal{F}\)), and then embeds these \(\mathcal{F}^\diamond \)’s into a master circuit which must be trusted but is relatively simple compared to \(\mathcal{F}\). Those solutions impose a significant overhead, as \(\mathcal{F}^\diamond \) is much more complex than \(\mathcal{F}\), and the master circuits are not exactly trivial either.

In this work, we show that in restricted settings, where \(\mathcal{F}\) has no evolving state and is queried on independent inputs, we can achieve a relaxed security notion using very simple constructions. In particular, we do not change the specification of the circuit at all (i.e., \(\mathcal{F}=\mathcal{F}^\diamond \)). Moreover, the master circuit basically just queries a subset of its manufactured circuits and checks whether their outputs are all the same.

The security we achieve guarantees that, if the manufactured circuits are initially tested on up to T inputs, the master circuit will catch Trojans that try to deviate on significantly more than a 1/T fraction of the inputs. This bound is optimal for the type of construction considered, and we provably achieve it using a construction where 12 instantiations of \(\mathcal{F}\) need to be embedded into the master. We also discuss an extremely simple construction with just 2 instantiations for which we conjecture that it already achieves the optimal bound.

T. Lizurej—Stefan Dziembowski, Małgorzata Gałązka, and Tomasz Lizurej were supported by the 2016/1/4 project carried out within the Team program of the Foundation for Polish Science co-financed by the European Union under the European Regional Development Fund.

K. Pietrzak—Suvradip and Krzysztof have received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (682815 - TOCNeT).


1 Hardware Trojans

Preventing attacks on cryptographic hardware that are based on leakage and tampering has been a popular topic in both the theoretical and the practical research communities [6,7,8,9,10, 15]. Despite being very powerful, the models considered in this area are restricted in the sense that it is typically assumed that a given device has been manufactured correctly, i.e., the adversary is present during the execution of the device, but not when it is produced. As it turns out, this assumption is not always justifiable, and in particular in some cases the adversary may be able to modify the device at production time. This is because, for economic reasons, private companies and government agencies are often forced to use hardware that they did not produce themselves. Contemporary, highly specialized digital technology requires components that are produced by many different enterprises, usually operating in different geographic locations. Even a single chip is often manufactured in a production cycle that involves different entities. In a very popular method of hardware production, called the foundry model, the product designer only develops the abstract description of a device. The actual hardware fabrication happens in a foundry. Only a few major companies (like Intel) still manufacture chips by themselves [16].

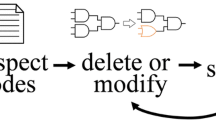

Modifications to the original circuit specification introduced during the manufacturing process (in a way that is hard to detect by inspection and simple testing) are called hardware Trojans, and can be viewed as the extreme version of hardware attacks. For more on the practical feasibility of such attacks the reader may consult, e.g., books [13, 16], or popular-science articles [1, 12]. Hardware Trojans can be loosely classified into digital and physical ones. Physical hardware Trojans can be triggered and/or communicate via a physical side-channel, while digital hardware Trojans only use the regular communication interfaces. In this paper we only consider digital hardware Trojans.

1.1 Detecting Digital Hardware Trojans

A simple non-cryptographic countermeasure to detect whether a circuit \(\mathsf{F}\) contains a hardware Trojan or follows the specification \(\mathcal{F}\) is testing: one samples inputs \(x_1,\ldots ,x_T\), queries \(y_i\leftarrow \mathsf{F}(x_i)\) and checks whether \(y_i=\mathcal{F}(x_i)\) for all i. Two types of digital hardware Trojans discussed in the literature that evade detection by such simple testing are time bombs and cheat codes (see, e.g., [5]). A time bomb is a hardware Trojan where the circuit starts deviating after a fixed number of queries. Cheat codes refer to hardware Trojans where the circuits deviate on a set of hard-coded inputs. To achieve some robustness against all digital hardware Trojans, solutions using cryptographic tools, in particular verifiable computation (VC) [2, 17] and multiparty computation (MPC) [5] were suggested. In both cases the idea is to take the specification \(\mathcal{F}\) of the desired circuit and replace it with a more sophisticated construction of one or more circuits \(\mathcal{F}^\diamond \). The circuit(s) \(\mathsf{F}^\diamond \) that (presumably) are manufactured according to specification \(\mathcal{F}^\diamond \) are then embedded into a master circuit \(\mathsf{M}\) to get a circuit \(\mathsf{M}^{\mathsf{F}^\diamond }\) which is proven to follow specification \(\mathcal{F}\) with high probability as long as it produces outputs. The master circuit must be trusted, but hopefully can be much simpler than \(\mathcal{F}\). We elaborate on these two methods below.
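To illustrate why random testing alone misses such Trojans, the following minimal Python sketch models the test described above together with a cheat-code Trojan. The names `spec`, `CheatCodeTrojan` and `random_test`, the toy functionality, and the 32-bit input domain are assumptions made purely for illustration.

```python
import random

def spec(x: int) -> int:
    """Toy specification F (an assumption for illustration)."""
    return (x * x + 1) % 2**32

class CheatCodeTrojan:
    """Follows the specification except on one hard-coded input."""
    def __init__(self, cheat_code: int):
        self.cheat_code = cheat_code

    def __call__(self, x: int) -> int:
        y = spec(x)
        # Deviate only on the cheat code by flipping the lowest output bit.
        return y ^ 1 if x == self.cheat_code else y

def random_test(circuit, T: int, rng: random.Random) -> bool:
    """Query the circuit on T random inputs; True iff every output matches spec."""
    for _ in range(T):
        x = rng.randrange(2**32)
        if circuit(x) != spec(x):
            return False
    return True

trojan = CheatCodeTrojan(cheat_code=0xDEADBEEF)
passed = random_test(trojan, T=1000, rng=random.Random(0))
```

In this toy setting a single test query hits the cheat code with probability \(2^{-32}\), so the test only fails with probability at most about \(T/2^{32}\), while the Trojan still deviates whenever the cheat code is supplied.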

Using Verifiable Computation. Here the idea is to let \(\mathcal{F}^\diamond (x)\) output a tuple \((y,\pi )\) where \(y=\mathcal{F}(x)\) and \(\pi \) is a succinct zero-knowledge proof (see [3]) that y is the correct output. In the compiled circuit \(\mathsf{M}^{\mathsf{F}^\diamond }\) the master \(\mathsf{M}\) on input x invokes \((y,\pi )\leftarrow \mathsf{F}^\diamond (x)\), then verifies the proof \(\pi \) and only outputs y if the check passes. If verification fails, the master aborts with a warning. As long as the compiled circuit provides outputs, they are guaranteed to be correct. If there are no Trojans, the number of outputs is unbounded; but if there is a Trojan, it can make the compiled circuit abort already at the first query. See [2, 17] for the details.

Using Multiparty Computation. In this case, the idea is to use secure multiparty computation protocols (MPCs, see, e.g., [4]). The compiled circuit \(\mathsf{M}^{\mathsf{F}^\diamond }\) contains some number of sub-components \(\mathsf{F}^\diamond \) that communicate only via the master circuit. In [5], this number is 3k (where k is a parameter). The sub-components are grouped in triples, each of them executing a 3-party protocol. In order to avoid the “cheat code” attacks, the master secret shares the input between the 3 parties. To get assurance that the sub-components are not misbehaving they are tested before deployment. In order to avoid the “time bomb” attacks, the number of times each sub-component is tested is an independently chosen random number from 1 to T. The output of each triple is secret-shared between its sub-components. Each of them sends its share to the master circuit, who reconstructs the k secrets, and outputs the value that is equal to the majority of these secrets. For the details see [5].

Simple Schemes. In this work we consider compilers as discussed above, but only particularly simple ones which have the potential of being actually practical. In particular, we require that \( \mathcal{F}\equiv \mathcal{F}^\diamond \). That is, the specification \(\mathcal{F}^\diamond \) of the functionality given to the untrusted manufacturer is the actual functionality \(\mathcal{F}:\mathcal{X}\rightarrow \mathcal{Y}\) we want to implement. Moreover, our master just invokes (a random subset of) the circuits on the input and checks if the outputs are consistent.

This restricted model has very appealing properties. For example, it means one can use our countermeasures with circuits that have already been manufactured. But there are also limitations on what type of security one can achieve. Informally, the security we prove for our construction roughly states that for any constant \(c>0\) there exists a constant \(c'\) such that no malicious manufacturer can create Trojans which (1) will not be detected with probability at least c, and (2) if not detected, will output a \(\ge c'/T\) fraction of wrong outputs. Here T is an upper bound on the number of test queries we can make to the Trojans before they are released.

In particular, we only guarantee that most outputs are correct, and we additionally require that the inputs are iid. Unfortunately, it’s not hard to see that for the simple class of constructions considered these assumptions are not far from necessary.Footnote 1 We will state the security of the VC and MPC solutions using our notion of Trojan-resilience in Sect. 2.7.

It’s fair to ask whether our notion has any interesting applications at all. Two settings in which Trojan resilience might be required are (1) in settings where a computation is performed where false (or at least too many false) outputs would have serious consequences, and (2) cryptographic settings where the circuit holds a key or other secret values that should not leak.

For (1) our compiler would only be provably sufficient if the inputs are iid, and only useful if a small fraction of false outputs can be tolerated. This is certainly a major restriction, but as outlined above, if one doesn’t have the luxury to manufacture circuits that are much more sophisticated than the required functionality, it’s basically the best one can get. Depending on the setting, one can potentially use our compiler in some mode – exploiting redundancy or using repetition – to fix those issues. We sketch some measures in the cryptographic setting below.

For the cryptographic setting (2) our notion seems even less useful: if the adversary can learn outputs of the Trojans, he can use the \(\varTheta (1/T)\) fraction of wrong outputs to embed (and thus leak) its secrets. While using the compiler directly might not be a good idea, we see it as a first but major step towards simple and Trojan-resilient constructions in the cryptographic setting. As an example, consider a weak PRF \(\mathcal{F}:\mathcal{K}\times \mathcal{X}\rightarrow \mathcal{Y}\) (a weak PRF is defined like a regular PRF, but the outputs are only pseudorandom if queried on random inputs). While implementing \(\mathcal{F}(k,\cdot )\) using our compiler directly is not a good idea as discussed above,Footnote 2 we can compile \(t>1\) weak PRFs with independent keys and inputs and finally XOR the outputs of the t master circuits to implement a weak PRF \(\mathcal{F}_3((k_1,\ldots ,k_t),(x_1,\ldots ,x_t))=\oplus _{i=1}^t\mathcal{F}(k_i,x_i)\). Intuitively, the output can only leak significant information about the keys if all t outputs are wrong, as otherwise at least one pseudorandom output will mask everything. If each output is wrong with probability, say, 1/T for a modest \(T=2^{30}\) and we use \(t=3\), then for each query we only have a probability of \(1/T^3=2^{-90}\) that all \(t=3\) outputs deviate, which we can safely assume will never happen. Unfortunately, at this point we can’t prove the above intuition and leave this for future work. For one thing, while we know that the XOR will not leak much if at least one of the t values is correct when the weak PRFs are modelled as ideal ciphers [11], we don’t have a similar result in the computational setting.
More importantly, we only prove that at most a 1/T fraction of the outputs is wrong once a sufficiently large number of queries was made, but to conclude that in the above construction all t instances fail at the same time with probability at most \(1/T^t\) we need a stronger statement saying that for each individual query the probability of failure is 1/T (we believe that this is indeed true for our construction, but the current proof does not imply this).
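The arithmetic behind the \(2^{-90}\) figure can be checked with exact rationals. This small Python sketch assumes, as the intuition above does (and as the text stresses is not proven), that each of the t instances fails on a given query with probability 1/T and that these failures are independent; the variable names are illustrative.

```python
from fractions import Fraction

T = 2**30   # number of test queries, as in the example above
t = 3       # number of independently keyed weak PRFs whose outputs are XORed

per_instance_failure = Fraction(1, T)   # assumed per-query failure probability
all_fail = per_instance_failure ** t    # assumes the t failures are independent

print(all_fail == Fraction(1, 2**90))   # True
```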

Weak PRFs are sufficient for many basic symmetric-key cryptographic tasks like authentication or encryption.Footnote 3 Even if a fraction of outputs can be wrong, as long as they don’t leak the key (as seems to be the case for the construction just sketched), this will only affect completeness, but not security. An even more interesting construction, and the original motivation for this work, is a Trojan-resilient stream cipher. This could then be used, e.g., to generate the large amount of randomness required in side-channel countermeasures like masking schemes. The appealing property of a stream cipher in this setting is that we don’t care about correctness at all, we just want the output to be pseudorandom. It’s not difficult to come up with a candidate for such a stream cipher based on our compiler, but again, a proof will require more ideas. One such construction would start with the weak PRF construction just discussed, and then use two instantiations of it in the leakage-resilient mode from [14].

2 Definition and Security of Simple Schemes

For \(m\in \mathbb {N}\), an m-redundant simple construction \( \varPi _m=(\mathsf{T}^*,\mathsf{M}^*) \) is specified by a master circuit \(\mathsf{M}^*:{\mathcal{X}}\rightarrow {\mathcal{Y}}\cup \{\mathsf{abort}\}\) and a test setup \(\mathsf{T}^*:\mathbb {N}\rightarrow \{\mathsf{fail},\mathsf{pass}\}\).Footnote 4 The \(*\) indicates that they expect access to some “oracles”. The following oracles will be used: (a) \(\mathsf{F}_1,\ldots ,\mathsf{F}_m\): the Trojan circuits that presumably implement the functionality \(\mathcal{F}:\mathcal{X}\rightarrow \mathcal{Y}\), (b) \(\mathsf{F}\): a trusted implementation of \(\mathcal{F}\) (only available in the test phase), and (c) \(\$\): a source of random bits (sometimes we will provide the randomness as input instead).

2.1 Test and Deployment

The construction \(\varPi _m\) which implements \(\mathcal{F}\) in a Trojan-resilient way using the untrusted \(\mathsf{F}_1,\ldots ,\mathsf{F}_m\) is tested and deployed as follows.

- Lab Phase (test): In this first phase we execute \(\{\mathsf{pass},\mathsf{fail}\}\leftarrow \mathsf{T}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\mathsf{F},\$}(T)\). The input T specifies that each \(\mathsf{F}_i\) may be queried at most T times. If the output is \(\mathsf{fail}\), a Trojan was detected. Otherwise (i.e., the output is \(\mathsf{pass}\)) we move to the next phase.

- Wild Phase (deployment): If the test outputs \(\mathsf{pass}\), the \(\mathsf{F}_i\)’s are embedded into the master to get a circuit \( \mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}:\mathcal{X}\rightarrow \mathcal{Y}\cup \{\mathsf{abort}\}. \)
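The two phases can be sketched in Python as follows. This is only a generic illustration, not one of the constructions analyzed later: the names `lab_phase` and `make_master`, the integer input domain, and the choice that the master queries all circuits on every input are assumptions of the sketch.

```python
import random
from typing import Callable, Sequence, Union

Circuit = Callable[[int], int]
ABORT = "abort"

def lab_phase(trojans: Sequence[Circuit], trusted: Circuit,
              T: int, domain_size: int, rng: random.Random) -> str:
    """Test phase: query each F_i at most T times and compare with the trusted F."""
    for f in trojans:
        for _ in range(T):
            x = rng.randrange(domain_size)
            if f(x) != trusted(x):
                return "fail"
    return "pass"

def make_master(trojans: Sequence[Circuit]) -> Callable[[int], Union[int, str]]:
    """Wild phase: embed the F_i's into a master that only compares their outputs."""
    def master(x: int) -> Union[int, str]:
        outputs = [f(x) for f in trojans]
        if any(y != outputs[0] for y in outputs):
            return ABORT        # inconsistency detected
        return outputs[0]       # forward the agreed output
    return master

# Usage with two honest copies of a toy functionality.
honest = lambda x: x % 7
if lab_phase([honest, honest], honest, T=10, domain_size=1000,
             rng=random.Random(0)) == "pass":
    master = make_master([honest, honest])
```

Note that the trusted implementation is only passed to `lab_phase`; the master never sees it, matching the oracle access described above.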

2.2 Completeness

The completeness requirement states that if every \(\mathsf{F}_i\) correctly implements \(\mathcal{F}\), then the test phase outputs \(\mathsf{pass}\) with probability 1 and the master truthfully implements the functionality \(\mathcal{F}\). That is, for every sequence \(x_1,x_2,\ldots ,x_q\) (of arbitrary length and potentially with repetitions) we have

$$ \Pr \left[ \forall i\in [q] : \mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}(x_i)=\mathcal{F}(x_i)\right] =1 $$

The reason we define completeness this way, and not simply by requiring that for all x we have \(\Pr [\mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}(x)=\mathcal{F}(x)]=1\), is that the Trojan \(\mathsf{F}_i\) can be stateful, so the order in which queries are made does matter.

2.3 Security of Simple Schemes

We consider a security game \(\mathsf{TrojanGame}(\varPi ,T,Q)\) where, for some \(T,Q\in \mathbb {Z}\), an adversary \(\mathsf{Adv}\) can choose the functionality \(\mathcal{F}\) and the Trojan circuits \(\mathsf{F}_1,\ldots ,\mathsf{F}_m\). We first run the test phase \(\tau \leftarrow \mathsf{T}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\mathsf{F},\$}(T)\). We then run the wild phase by querying the master on Q iid inputs \(x_1,\ldots ,x_Q\), obtaining outputs \(y_i\leftarrow \mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}(x_i)\) for \(i\in [Q]\).

The goal of the adversary is two-fold:

1. They do not want to be caught. If either \(\tau =\mathsf{fail}\) or \(y_i=\mathsf{abort}\) for some \(i\in [Q]\), we say the adversary was detected, and we define the predicate

$$ \mathsf{detect}=\mathsf {false}\iff (\tau =\mathsf{pass}) \wedge (\forall i\in [Q] : y_i\ne \mathsf{abort}) $$

2. They want the master to output as many wrong outputs as possible. The number of wrong outputs is

$$ |\{i\in [Q] : y_i\ne \mathcal{F}(x_i)\}| $$

Informally, we call a compiler (like our simple schemes) \((\mathsf{win},\mathsf{wrng})\)-Trojan resilient, or simply \((\mathsf{win},\mathsf{wrng})\)-secure, if for every Trojan, the probability that it causes the master to output \(\ge \mathsf{wrng}\) fraction of wrong outputs without being detected is at most \(\mathsf{win}\). In the formal definition \(\mathsf{win}\) and \(\mathsf{wrng}\) are allowed to be a function of the number of test queries T.

Definition 1

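(\((\mathsf{win},\mathsf{wrng})\)-Trojan resilience). An adversary \((\mathsf{win},\mathsf{wrng})\)-wins in \(\mathsf{TrojanGame}(\varPi ,T,Q)\) if, with probability at least \(\mathsf{win}\), the master outputs at least a \(\mathsf{wrng}\) fraction of wrong values without the Trojans being detected, i.e.,

$$ \Pr \left[ (\mathsf{detect}=\mathsf{false}) \wedge \left( \frac{|\{i\in [Q] : y_i\ne \mathcal{F}(x_i)\}|}{Q}\ge \mathsf{wrng}\right) \right] \ge \mathsf{win} $$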

For \(\mathsf{win}:\mathbb {N}\rightarrow [0,1],\mathsf{wrng}:\mathbb {N}\rightarrow [0,1],q:\mathbb {N}\rightarrow \mathbb {N}\), we say that \(\varPi \) is \((\mathsf{win}(T),\mathsf{wrng}(T),q(T))\)-Trojan-resilient (or simply “secure”) if there exists a constant \(T_0\), such that for all \(T\ge T_0\) and \(Q\ge q(T)\) no adversary \((\mathsf{win}(T),\mathsf{wrng}(T))\)-wins in \(\mathsf{TrojanGame}(\varPi ,T,Q)\).

We say \(\varPi \) is \((\mathsf{win}(T),\mathsf{wrng}(T))\) Trojan-resilient if it is \((\mathsf{win}(T),\mathsf{wrng}(T),q(T))\)-Trojan-resilient for some (sufficiently large) polynomial \(q(T)\in poly(T)\).
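A single run of the game can be sketched in Python as below. The function `trojan_game`, its parameters, and the uniform integer input distribution are assumptions of this sketch; the test and the master are passed in as callables that already have their oracles embedded.

```python
import random
from typing import Callable, Tuple

ABORT = "abort"

def trojan_game(test: Callable[[int], str],
                master: Callable[[int], object],
                spec: Callable[[int], int],
                T: int, Q: int, domain_size: int,
                rng: random.Random) -> Tuple[bool, float]:
    """Run one TrojanGame; return (detect, fraction of wrong outputs)."""
    tau = test(T)                                          # lab phase
    xs = [rng.randrange(domain_size) for _ in range(Q)]    # Q iid inputs
    ys = [master(x) for x in xs]                           # wild phase
    detect = (tau != "pass") or any(y == ABORT for y in ys)
    wrong = sum(1 for x, y in zip(xs, ys) if y != spec(x))
    return detect, wrong / Q

# Usage: an honest scheme is never detected and produces no wrong outputs.
spec = lambda x: x % 5
result = trojan_game(lambda T: "pass", spec, spec,
                     T=10, Q=50, domain_size=1000, rng=random.Random(0))
```

Estimating the winning probability of a concrete adversary would amount to repeating this run with fresh randomness and counting how often `detect` is false while the wrong fraction reaches the target \(\mathsf{wrng}\).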

In all our simple constructions the test and master only use the outputs of the \(\mathsf{F}_i\) (and for the test also \(\mathsf{F}\)) oracles to check for equivalence. This fact will allow us to consider somewhat restricted adversaries in the security proof.

Definition 2

(Generic Simple Scheme). A generic simple scheme \(\mathsf{T}^*,\mathsf{M}^*\) treats the outputs of the \(\mathsf{F}_i\) (and for \(\mathsf{T}^*\) additionally \(\mathsf{F}\)) oracles like variables. Concretely, two or more oracles can be queried on the same input, and then one checks if the outputs are identical. Moreover the master can use the output of an \(\mathsf{F}_i\) as its own output.

By the following lemma, to prove security of generic simple schemes, it will be sufficient to consider restricted adversaries that always choose to attack the trivial functionality \(\mathcal{F}(x)=0\) and where the output range of the Trojans is a bit.

Lemma 1

For any generic simple scheme \(\varPi _m\), assume an adversary \(\mathsf{Adv}\) exists that \((\mathsf{win},\mathsf{wrng})\)-wins in \(\mathsf{TrojanGame}(\varPi _m,T,Q)\) and let \(\mathcal{F}:\mathcal{X}\rightarrow \mathcal{Y}\ ,\ \mathsf{F}_1,\ldots ,\mathsf{F}_m:\mathcal{X}\rightarrow \mathcal{Y}\) denote its choices for the attack. Then there exists an adversary \(\mathsf{Adv}'\) who also \((\mathsf{win},\mathsf{wrng})\)-wins in \(\mathsf{TrojanGame}(\varPi _m,T,Q)\) and chooses \(\mathcal{F}':\mathcal{X}\rightarrow \{0,1\}\ ,\ \mathsf{F}'_1,\ldots ,\mathsf{F}'_m:\mathcal{X}\rightarrow \{0,1\}\) where moreover \(\forall x\in \mathcal{X}:\mathcal{F}'(x)=0\).

Proof

\(\mathsf{Adv}'\) firstly runs \(\mathsf{Adv}\) to learn (i) the functionality \(\mathcal{F}:\mathcal{X}\rightarrow \mathcal{Y}\) which it wants to attack and (ii) its Trojans \(\mathsf{F}_1,\ldots ,\mathsf{F}_m\). It then outputs (as its choice of function to attack) an \(\mathcal{F}'\) where \(\forall x\in \mathcal{X}:\mathcal{F}'(x)=0\) and, for every \(i\in [m]\), it chooses the Trojan \(\mathsf{F}'_i\) to output 0 if \(\mathsf{F}_i\) would output the correct value, and 1 otherwise. More formally, \(\mathsf{F}'_i(x)\) invokes the original Trojan \(y\leftarrow \mathsf{F}_i(x)\) and outputs 0 if \(\mathcal{F}(x)=y\) and 1 otherwise.

By construction, whenever one of the \(\mathsf{F}'_i\)’s deviates (i.e., outputs 1), also the original \(\mathsf{F}_i\) would have deviated. And whenever the test or master detect an inconsistency in the new construction, they would also have detected an inconsistency with the original \(\mathcal{F}\) and \(\mathsf{F}_i\).Footnote 5 \(\square \)
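The reduction in this proof is a simple wrapper around the original Trojans. The Python sketch below uses the hypothetical helper names `binarize` and `trivial_spec` and models circuits as callables on integers.

```python
from typing import Callable

def binarize(trojan: Callable[[int], int],
             spec: Callable[[int], int]) -> Callable[[int], int]:
    """Build F'_i from F_i: output 0 where F_i is correct, 1 where it deviates."""
    def trojan_prime(x: int) -> int:
        y = trojan(x)                      # invoke the original (possibly stateful) Trojan
        return 0 if y == spec(x) else 1
    return trojan_prime

def trivial_spec(x: int) -> int:
    """The trivial functionality F'(x) = 0 attacked by Adv'."""
    return 0

# Usage: a Trojan that deviates only on input 3.
spec = lambda x: 2 * x
trojan = lambda x: 7 if x == 3 else 2 * x
trojan_prime = binarize(trojan, spec)
```

Because each call to `trojan_prime` triggers exactly one call to the original Trojan, any internal state of the original evolves in the same way in both games.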

2.4 Lower Bounds

By definition, \((\mathsf{win},\mathsf{wrng})\)-security implies \((\mathsf{win}',\mathsf{wrng}')\)-security for any \(\mathsf{win}'\ge \mathsf{win},\mathsf{wrng}'\ge \mathsf{wrng}\). The completeness property implies that no scheme is (1, 0)-secure (as by behaving honestly an adversary can (1, 0)-win). And also no scheme is (0, 1)-secure (as \(\Pr [E]\ge 0\) holds for every event E). Thus our \((\mathsf{win},\mathsf{wrng})\)-security notion is only interesting if both \(\mathsf{win}\) and \(\mathsf{wrng}\) are \(>0\). We will prove the following lower bound:

Lemma 2

(Lower bound for simple schemes). For any \(c>0\) and \(m\in \mathbb {N}\) there exists a constant \(c'=c'(c,m)>0\) such that no m-redundant simple scheme \(\varPi _m\) is \((c,\frac{c'}{T})\)-Trojan-resilient.

Proof

\(\mathsf{Adv}\) chooses the constant functionality \(\mathcal{F}(x)=0\) with a sufficiently large input domain \(|\mathcal{X}|\gg (m\cdot T)^2\) (so that sampling \(m\cdot T\) elements at random from \(\mathcal{X}\) with or without repetition is basically the same). Now \(\mathsf{Adv}\) samples a random subset \(\mathcal{X}'\subset \mathcal{X}\ ,\ {|\mathcal{X}'|}/{|\mathcal{X}|}=\frac{1.1\cdot c'}{T}\) (for \(c'\) to be determined) and then defines Trojans \(\mathsf{F}_1,\ldots ,\mathsf{F}_m\) which deviate exactly on the inputs from \(\mathcal{X}'\).

Should the test pass, the master will deviate on each input with probability \(1.1\cdot c'/T\). If we set the number of queries Q large enough, the fraction of wrong outputs will be close to its expectation \(1.1\cdot c'/T\), and thus almost certainly larger than \(c'/T\).

It remains to prove that the test passes with probability \(\ge c\). By correctness, the testing procedure \(\mathsf{T}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\mathsf{F},\$}\) must output pass unless one of the total \(\le m\cdot T\) queries it made to the \(\mathsf{F}_i\)s falls into the random subset \(\mathcal{X}'\). The probability that no such query is made is at least

$$ \left( 1-\frac{1.1\cdot c'}{T}\right) ^{m\cdot T} $$

and this expression goes to 1 as \(c'\) goes to 0. We now choose \(c'>0\) sufficiently small so the expression becomes \(>c\). To get a quantitative bound one can use the well-known limit \(\lim _{T\rightarrow \infty } (1-1/T)^T=1/e\approx 0.367879\). \(\square \)
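An explicit admissible choice of \(c'\) can also be read off from Bernoulli's inequality rather than from the limit. The derivation below is a sketch under the assumptions of the proof: the test makes at most \(m\cdot T\) queries, each query falls into \(\mathcal{X}'\) with probability \(1.1\cdot c'/T\le 1\), and \(c<1\).

```latex
\left(1-\frac{1.1\cdot c'}{T}\right)^{m\cdot T}
\;\ge\; 1-m\cdot T\cdot \frac{1.1\cdot c'}{T}
\;=\; 1-1.1\cdot m\cdot c'
```

Hence any \(0<c'<\frac{1-c}{1.1\cdot m}\) makes the test pass with probability larger than c. The same bound follows from a union bound over the at most \(m\cdot T\) test queries.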

The (proof of the) previous lemma also implies the following.

Corollary 1

If a simple scheme \(\varPi _m\) is \((\mathsf{win}(T),\mathsf{wrng}(T))\) secure with

1. \(\mathsf{win}(T)\in 1-o(1)\) then \(\mathsf{wrng}(T)\in o(1/T)\).

2. \(\mathsf{wrng}(T)\in \omega (1/T)\) then \(\mathsf{win}(T)\in o(1)\).

The first item means that if \(\mathsf{Adv}\) wants to make sure the Trojan is only detected with sub-constant probability, then he can only force the master to output a o(1/T) fraction of wrong outputs during deployment. The second item means that if \(\mathsf{Adv}\) wants to deviate on an asymptotically larger than 1/T fraction of outputs, it will be detected with a probability going to 1.

Not Interesting Security for 1-Redundant Schemes. For \(m=1\) redundant circuits a much stronger lower bound compared to Lemma 2 holds. The following Lemma implies that no 1-redundant scheme is \((\epsilon (T),\delta (T))\)-Trojan-resilient for any \(\epsilon (T)>0\) and \(\delta (T)=1/\mathsf{poly}(T)\) (say \(\epsilon (T)=2^{-T},\delta (T)=T^{-100}\)).

Lemma 3

(Lower bound for \(m=1\)). For any 1-redundant scheme \(\varPi _1\) and any polynomial \(p(T)>0\), there is an adversary that \((1,1-1/p(T))\)-wins in the \(\mathsf{TrojanGame}(\varPi _1,T,Q)\) game for \(Q\ge p(T)\cdot T\).

Proof

Consider an adversary who chooses a “time bomb” Trojan \(\mathsf{F}_1\) which correctly outputs \(\mathcal{F}(x)\) for the first T queries and also stores those queries, so it can output the correct value if one of those queries is repeated in the future. From query \(T+1\) the Trojan outputs wrong values unless it is given one of the first T queries as input, in which case it outputs the correct value. This Trojan will pass any test making at most T invocations, while the master will deviate on almost all queries, i.e., all except the first T.

To see why we store the first T queries and do not deviate on them when they repeat in the future, consider a master which stores the outputs it observes on the first T queries so it can later detect inconsistencies. \(\square \)
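The time bomb used in this proof can be sketched in Python as follows. The class name `TimeBombTrojan` and the choice of "correct value plus one" as the wrong output are assumptions of the sketch; any wrong value would do.

```python
from typing import Callable, Set

class TimeBombTrojan:
    """Correct on its first T queries (which it remembers); wrong afterwards
    unless one of the remembered queries repeats."""
    def __init__(self, spec: Callable[[int], int], T: int):
        self.spec = spec
        self.T = T
        self.count = 0                 # number of queries answered so far
        self.seen: Set[int] = set()    # inputs of the first T queries

    def __call__(self, x: int) -> int:
        self.count += 1
        if self.count <= self.T:
            self.seen.add(x)
            return self.spec(x)        # behave honestly during the first T queries
        if x in self.seen:
            return self.spec(x)        # stay consistent on repeated early queries
        return self.spec(x) + 1        # deviate on everything else

# Usage with a toy functionality and T = 2.
spec = lambda x: 3 * x
bomb = TimeBombTrojan(spec, T=2)
outputs = [bomb(1), bomb(2), bomb(5), bomb(1)]
```

Here the third query is the first one after the bomb goes off and is answered incorrectly, while the repeated query 1 is still answered correctly.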

2.5 Efficiency of Lower Bound vs. Constructions

For the lower bounds in the previous section, the only restriction on the test \(\mathsf{T}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\mathsf{F},\$}(T)\) is that each \(\mathsf{F}_i\) can only be queried at most T times. There are no restrictions on the master \(\mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}(\cdot )\) at all. In particular, it can be stateful, computationally unbounded, use an arbitrary amount of randomness, and query the \(\mathsf{F}_i\)s on an unbounded number of inputs (as the Trojan \(\mathsf{F}_i\)s can be stateful this is not the same as learning the function table of the \(\mathsf{F}_i\)’s).

While the lack of any restrictions makes the lower bound stronger, we want our upper bounds, i.e., the actual constructions, to be as efficient (in terms of computational, query and randomness complexity) and simple as possible, and they will indeed be very simple.

Let us stress that one thing the definition does not allow is for the test to pass a message to the master. If we allowed a message of unbounded length to be passed this way, no non-trivial lower bound would hold, as \(\mathsf{T}\) could send the entire function table of \(\mathcal{F}\) to \(\mathsf{M}\), which then could perfectly implement \(\mathcal{F}\). Of course such a “construction” would go against the entire motivation for simple schemes, where \(\mathsf{M}^*\) should be much simpler than and independent of \(\mathcal{F}\). Still, constructions where the test phase sends a short message to the master (say, a few correct input/output pairs of \(\mathcal{F}\) which the master could later use to “audit” the Trojans) could be an interesting relaxation to be considered.

2.6 Our Results and Conjectures

Our main technical result is a construction of a simple scheme which basically matches the lower bound from Lemma 2. Of course for any constant \(c>0\), the constant \(c'\) in the theorem below must be larger than in Lemma 2 so there’s no contradiction.

Fig. 2. Construction \(\varPi _2^\star \) (discussed in Sect. 2.9), which is \((c,\frac{c'}{T})\) secure for history-independent Trojans.

Fig. 3. Construction \(\varPi _{12}\) for which we prove optimal Trojan-resilience as stated in Theorem 1. Very informally, the security proof is by contradiction: via a sequence of hybrids an attack against \(\varPi _{12}\) is shown to imply an attack where the yellow part basically corresponds to \(\varPi _2^\star \) with two history-independent circuits. This attack contradicts the security of \(\varPi _2^\star \) as stated in Theorem 2.

Theorem 1

(Main, optimal security of \(\varPi _{12}\)). For any constant \(c>0\) there is a constant \(c'\) such that the simple construction \(\varPi _{12}\) from Fig. 3 is \((c,\frac{c'}{T})\)-Trojan resilient.

While \((c,\frac{c'}{T})\)-Trojan-resilience matches our lower bound, the construction is \(m=12\)-redundant (recall this means we need 12 instantiations of \(\mathcal{F}\) manufactured to instantiate the scheme). While \(m=1\) redundancy is not sufficient to get any interesting security, as we showed in Lemma 3, we conjecture that \(m=2\) is sufficient to match the lower bound, and give a candidate construction.

Conjecture 1

(Optimal security of \(\varPi _2^\phi \)). For any \(0<\phi <1\) and any constant \(c>0\) there is a constant \(c'=c'(c,\phi )\) such that the simple construction \(\varPi _2^\phi \) from Sect. 3 is \((c,\frac{c'}{T})\)-Trojan resilient.

The parameter \(\phi \) in this construction basically specifies that the master will query both oracles \(\mathsf{F}_1\) and \(\mathsf{F}_2\) on a (random) \(T^{-\phi }\) fraction of the inputs, and check consistency in this case. While the conjecture is wrong for \(\phi =0\) and \(\phi \ge 1\), the \(\phi =0\) case (i.e., when we always query both \(\mathsf{F}_1\) and \(\mathsf{F}_2\)) will be of interest to us, as security of this construction against a limited adversary (termed history-independent and discussed in Sect. 2.9 below) will be a crucial step towards the proof of our main theorem.
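The role of \(\phi \) can be illustrated with the following Python sketch of a 2-redundant master. It is only a hypothetical rendering of the informal description above; the actual construction is defined in Sect. 3 and may differ. In particular, the name `make_master_phi` and the choice to forward the output of \(\mathsf{F}_1\) when no cross-check is made are assumptions.

```python
import random
from typing import Callable, Union

ABORT = "abort"

def make_master_phi(f1: Callable[[int], int], f2: Callable[[int], int],
                    T: int, phi: float,
                    rng: random.Random) -> Callable[[int], Union[int, str]]:
    """Hypothetical master: cross-check F_1 against F_2 with probability T**(-phi)."""
    check_prob = T ** (-phi)
    def master(x: int) -> Union[int, str]:
        y1 = f1(x)
        if rng.random() < check_prob:   # on a random T^{-phi} fraction of the inputs
            if f2(x) != y1:
                return ABORT            # the two circuits disagree
        return y1                       # assumption: otherwise forward F_1's output
    return master

# Usage: with phi = 0 both circuits are queried on every input.
honest = lambda x: x + 1
always_check = make_master_phi(honest, honest, T=1024, phi=0.0, rng=random.Random(0))
```

With \(\phi =0\) the check probability is 1, so every disagreement between the two circuits leads to an abort; larger \(\phi \) makes cross-checks rarer.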


2.7 Comparison with VC and MPC

Let us briefly compare the security we achieve with the more costly solutions based on verifiable computation (VC) [2, 17] and multiparty computation (MPC) [5] discussed in the introduction. We can consider \((\mathsf{win},\mathsf{wrng})\)-security as in Definition 1 also for the VC and MPC constructions; here one would need to change the \(\mathsf{TrojanGame}(\varPi ,T,Q)\) from Sect. 2.3 to allow the Trojans \(\mathsf{F}_i\) to implement a different functionality than the target \(\mathcal{F}\) (for VC one needs to compute an extra succinct proof, for MPC the Trojans implement the players in an MPC computation). For VC there’s no test (so \(T=0\)) and only one (\(m=1\)) Trojan, and for MPC and VC we can drop the requirement that the inputs are iid.

In the VC construction the master will catch every wrong output (except with negligible probability), so for any polynomial poly there is a negligible function negl (in the security parameter of the underlying succinct proof system), such that the scheme is (1/poly, negl, Q) secure for any polynomial Q.

For the MPC construction the master will provide \(Q<c_0 T\) outputs with probability \(c_1^m\) (where \(c_0 \in [0,1/2]\) and \(c_1 \in [0,1]\) are some constants), but while outputs are provided they are most likely correct, so for any polynomial Q, T we have \((1-c_1^m, negl, Q)\) security.

2.8 Stateless Trojans

In our security definition we put no restriction on the Trojans \(\mathsf{F}_i\) provided by the adversary (other than being digital hardware Trojans as discussed in the introduction). In particular, the \(\mathsf{F}_i\)’s can have arbitrarily complex evolving state while honestly manufactured circuits could be stateless. We can consider a variant of our security definition (Definition 1) where the adversary is only allowed to choose stateless Trojan circuits \(\mathsf{F}_i\). Note that the lower bound from Lemma 2 still holds, as in its proof we only considered stateless \(\mathsf{F}_i\)’s. There’s an extremely simple 1-redundant construction that matches the lower bound when the adversary is only allowed to choose stateless Trojans.

Consider a construction \(\varPi _1=(\mathsf{T}^*,\mathsf{M}^*)\) where the master is the simplest imaginable: it just forwards inputs/outputs to/from its oracle; if \(\mathsf{F}_1\) is stateless this simply means \(\mathsf{M}^{\mathsf{F}_1}(\cdot )=\mathsf{F}_1(\cdot )\). The test \(\mathsf{T}^{\mathsf{F}_1,\mathsf{F},\$}(T)\) queries \(\mathsf{F}_1\) and the trusted \(\mathsf{F}\) on T random inputs and outputs \(\mathsf{fail}\) iff there is a mismatch.

Proposition 1

(Optimal security for 1-redundant scheme for stateless Trojans). For any constant \(c>0\) there is a constant \(c'>0\) such that \(\varPi _1\) is \((c,\frac{c'}{T})\)-Trojan resilient if the adversary is additionally restricted to choose a stateless Trojan.

Proof

If \(\mathsf{wrng}'\) denotes the fraction of inputs on which the Trojan \(\mathsf{F}_1\) differs from the specification \(\mathcal{F}\) (both chosen by an adversary \(\mathsf{Adv}\); note that \(\mathsf{wrng}'\) is only well defined here as \(\mathsf{F}_1\) is stateless), then the test passes with probability \((1-\mathsf{wrng}')^T\). So \(\mathsf{wrng}'\) must satisfy \((1-\mathsf{wrng}')^T\ge c\) if the adversary wants to \((c,\mathsf{wrng})\)-win for any \(\mathsf{wrng}\), as otherwise the test alone already catches the Trojan with probability \(1-(1-\mathsf{wrng}')^T> 1-c\). Using \(1-\mathsf{wrng}'\le e^{-\mathsf{wrng}'}\), the condition \((1-\mathsf{wrng}')^T\ge c\) forces \(\mathsf{wrng}'\in O(1/T)\), in particular, \(\mathsf{wrng}'\le c'/T\) for some constant \(c'>0\) (e.g., \(c'=\ln (1/c)\) when \(c<1\)). \(\square \)
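As a quick numerical sanity check of the quantities in this proof, the following sketch computes the probability that a stateless Trojan deviating on a given fraction of inputs passes T independent random tests, and the largest deviation fraction for which that probability is still at least c. The function names are illustrative and do not come from the paper.

```python
import math


def pass_probability(wrong_fraction: float, num_tests: int) -> float:
    """Probability that all num_tests uniformly random test inputs miss
    the inputs on which a stateless Trojan deviates."""
    return (1.0 - wrong_fraction) ** num_tests


def max_wrong_fraction(c: float, num_tests: int) -> float:
    """Largest deviation fraction w with (1 - w)^T >= c, i.e. w = 1 - c^(1/T)."""
    return 1.0 - c ** (1.0 / num_tests)


if __name__ == "__main__":
    c = 0.5
    for T in (10, 100, 1000, 10000):
        w = max_wrong_fraction(c, T)
        # w * T never exceeds ln(1/c), so the tolerable fraction shrinks like 1/T.
        print(T, w, w * T, math.log(1.0 / c))
```

The product `w * T` staying below `ln(1/c)` is the numerical counterpart of the 1/T scaling in Proposition 1.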

2.9 History-Independent Trojans

A notion in-between general (stateful) Trojans and stateless Trojans will play a central role in our security proof. We say a Trojan \(\mathsf{F}_i\) is history-independent if its only state is a counter which is incremented by one on every invocation, so its answer to the i’th query can depend on the current index i, but not on any inputs it saw in the past.

We observe that Lemma 3 stating that no 1-redundant simple scheme can be secure still holds if we restrict the choice of the adversary to history-independent Trojans as the “time-bomb” Trojan used in the proof is history-independent. We will show a 2-redundant construction \(\varPi _2^\star \) that achieves optimal security against history-independent Trojans.

Theorem 2

(History-Independent Security of \(\varPi _2^\star \)). For any constant \(c>0\) there is a constant \(c'=c'(c)>0\) such that \(\varPi _2^\star \) from Fig. 2 is \((c,\frac{c'}{T})\)-Trojan resilient if the adversary is additionally restricted to choose history-independent Trojans.

The technical Lemma 4, which we prove and which implies this theorem, actually implies a stronger statement: for any positive integer k, the above holds even if we relax the security notion and declare the adversary a winner as long as a Trojan is detected by the test or master at most \(k-1\) times. What this exactly means is explained in Sect. 4.2. Note that this notion coincides with the standard notion for \(k=1\).

The \(\varPi _2^\star \) scheme is just the \(\varPi _2^\phi \) scheme from Conjecture 1 for \(\phi =0\), where we conjecture that \(\varPi _2^\phi \) is (in some sense) optimally secure for \(0<\phi <1\). For \(\phi =0\) the conjecture is wrong, but somewhat ironically we are only able to prove security against history-independent Trojans for \(\phi =0\), and this result will be key towards proving the security of \(\varPi _{12}\) as stated in our main Theorem 1.

2.10 Proof Outline

The proof of our main Theorem 1 stating that \(\varPi _{12}\) is optimally Trojan-resilient is done in two steps. As just discussed, we first prove security of \(\varPi _2^\star \) against history-independent Trojans, and in a second step we reduce the security of \(\varPi _{12}\) against general Trojans to the security of \(\varPi _2^\star \) against history-independent Trojans. We outline the main ideas of the two parts below.

Part 1: Security of \(\varPi _2^\star \) Against History-Independent Trojans (Theorem 2, Lemma 4). \(\varPi _2^\star \) is a very simple scheme where the test \(\mathsf{T}^{\mathsf{F}_1,\mathsf{F}_2,\mathsf{F},\$}\) just queries \(\mathsf{F}_1\) on a random number \(\varDelta ,0\le \varDelta <T\) of inputs and checks if they are correct (the test ignores \(\mathsf{F}_2\)). The master \(\mathsf{M}^{\mathsf{F}_1,\mathsf{F}_2,\$}(x)\) queries \(y\leftarrow \mathsf{F}_1(x)\) and \(y'\leftarrow \mathsf{F}_2(x)\) on x and aborts if they disagree.
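The test and master just described can be sketched in a few lines. This is a minimal illustration, assuming the constant-zero specification used in the proofs; the `TimeBomb` class and the helper names are hypothetical and only serve to show why the random shift desynchronizes two identical time bombs.

```python
import random


class TimeBomb:
    """Hypothetical history-independent Trojan for F(x) = 0:
    answers correctly until its query counter exceeds `fuse`."""

    def __init__(self, fuse):
        self.fuse = fuse
        self.count = 0

    def __call__(self, x):
        self.count += 1
        return 0 if self.count <= self.fuse else 1


def spec(x):
    """Trusted specification F: the constant-zero function."""
    return 0


def run_test(f1, T, rng):
    """Test phase: query F_1 on Delta random inputs, Delta uniform in {0..T-1}."""
    delta = rng.randrange(T)
    for _ in range(delta):
        x = rng.getrandbits(32)
        if f1(x) != spec(x):
            return False
    return True


def master(f1, f2, x):
    """Master: query both circuits on x; abort (None) on disagreement."""
    y, y2 = f1(x), f2(x)
    return y if y == y2 else None


def play(f1, f2, T, Q, rng):
    """Returns 'fail', 'abort', or the number of wrong outputs released."""
    if not run_test(f1, T, rng):
        return "fail"
    wrong = 0
    for _ in range(Q):
        x = rng.getrandbits(32)
        y = master(f1, f2, x)
        if y is None:
            return "abort"
        wrong += int(y != spec(x))
    return wrong
```

With two time bombs of the same fuse, the pair stays synchronized only when the random number of test queries happens to be 0; for any other value the counter of \(\mathsf{F}_1\) runs ahead and the master sees a mismatch.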

In the proof of Lemma 4 we define \(p_i\) and \(q_i\) as the probability that \(\mathsf{F}_1\) and \(\mathsf{F}_2\), respectively, output a wrong value in the ith query on a random input. As \(\mathsf{F}_1,\mathsf{F}_2\) are history-independent, this is well defined, as this probability only depends on i (but not on previous queries).

Let the variable \(\varPhi _\varDelta \) denote the number of times the Trojans will be detected conditioned on the random number of test queries being \(\varDelta \). This value is (below Q is the number of queries to the master and we use the convention \(q_i=0\) for \(i\le 0\))

In this sum, the first \(\varDelta \) terms account for the test, and the last Q terms for the wild-phase. Moreover let \(Y_\varDelta \) denote the number of times \(\mathsf{F}_1\) deviates (and thus the master outputs a wrong value), its expectation is

To prove Trojan-resilience of \(\varPi _2^\star \) as stated in Lemma 4 boils down to proving that, for most \(\varDelta \), whenever the probability of \(\varPhi _\varDelta =0\) (i.e., the Trojan is not detected) is constant, the fraction of wrong outputs \(Y_\varDelta /Q\) must be “small” (concretely, O(1/T)).
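Written out, one form of the two expectations that is consistent with the description above is the following. It is a sketch under two assumptions that are not spelled out in this outline: the i-th query to \(\mathsf{F}_1\) overall is compared with the \((i-\varDelta )\)-th query to \(\mathsf{F}_2\), and the adversary lets the two Trojans deviate on maximally overlapping inputs, so that a master round exposes them with probability \(|p_i-q_{i-\varDelta }|\). With \(q_i=0\) for \(i\le 0\), the first \(\varDelta \) terms reduce to \(p_i\), the detection probability of a test query.

```latex
\[
  \mathbb{E}[\varPhi_\varDelta] \;=\; \sum_{i=1}^{Q+\varDelta} \bigl| p_i - q_{i-\varDelta} \bigr| ,
  \qquad
  \mathbb{E}[Y_\varDelta] \;=\; \sum_{i=\varDelta+1}^{\varDelta+Q} p_i .
\]
```

For instance, for \(p_i=q_i=\delta \) only the \(\varDelta \) test terms survive in the first sum, giving \(\varDelta \cdot \delta \).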

The core technical result establishing this fact is Lemma 5. Unfortunately, this Lemma only establishes this fact for the expectation, i.e., whenever \(\mathop {\mathbb {E}}[\varPhi _\varDelta ]\) is small, also \(\mathop {\mathbb {E}}[Y_\varDelta ]\) is small. Here is where we use the fact that the \(\mathsf{F}_1,\mathsf{F}_2\) are history independent: in the history independent case \(\varPhi _\varDelta \) and \(Y_\varDelta \) can be written as the sum of independent boolean variables, so using a Chernoff bound it follows that their actual value will be close to their expectation with high probability.

It is instructive to see why for example setting \(p_i=q_i=\delta \) for some fixed \(\delta >0\) does not contradict Theorem 2. To contradict it, the fraction of wrong outputs (which here is simply \(\delta \)) must be \(\omega (1/T)\). In this case, \(\mathop {\mathbb {E}}[\varPhi _\varDelta ]=\varDelta \cdot \delta =\omega (\varDelta /T)\), which to contradict the lemma must be at most constant, which in turn means \(\varDelta \in o(T)\) must hold. As \(\varDelta ,0\le \varDelta <T\) is uniform, it’s o(T) only with o(1) probability, but for a contradiction we would need this probability to be constant.

Part 2: Reducing the Security of \(\varPi _{12}\) Against General Trojans to the Security of \(\varPi _2^\star \) Against History Independent Trojans (Theorem 1). While the random shift \(\varDelta \) makes \(\varPi _2^\star \) secure against history-independent attacks (like time-bombs, where a Trojan starts deviating after some fixed number of queries), it succumbs to cheat codes: as the master always queries \(\mathsf{F}_1,\mathsf{F}_2\) on the same inputs, a Trojan can specify some set of trigger inputs, and after receiving such a trigger the Trojans will deviate forever. By making the fraction of inputs that are triggers sparse, the Trojans will likely not be detected during testing (a 1/T fraction will survive testing with constant probability).

To prevent such a coordination via the inputs, in \(\varPi _{12}\) inputs are somewhat randomly assigned to the different Trojans. In particular, as emphasized in the yellow area in Fig. 3, the \(\mathsf{F}_1\) is always queried on the input x, and then the random bit b determines whether \(\mathsf{F}_2\) or \(\mathsf{F}_3\) are queried on x. If an input x were to trigger the Trojans to always deviate, \(\mathsf{F}_1\) and one of \(\mathsf{F}_2\) and \(\mathsf{F}_3\) will be triggered, say it’s \(\mathsf{F}_2\). But now, as soon as \(\mathsf{F}_3\) is queried in a future round the master will abort as \(\mathsf{F}_1\) will deviate, but \(\mathsf{F}_3\) will not (except if this query also happens to be a trigger, which is unlikely as triggers must be sparse to survive the testing phase).

This just shows why a particular attack does not work on \(\varPi _{12}\). But we want a proof showing security against all possible Trojans. Our proof proceeds by a sequence of hybrids, where we start with assuming a successful attack on \(\varPi _{12}\), and then, by carefully switching some circuits and redefining them by hard coding “fake histories”, we arrive at a hybrid game where there is still a successful attack, but now the circuits in the yellow area basically correspond to the \(\varPi _2^\star \) construction instantiated with history-independent Trojans. Such an attack contradicts our security proof for \(\varPi _2^\star \) as stated in Lemma 4.

3 Conjectured Security of 2-Redundant Schemes

While the main technical result in this paper is a simple scheme \(\varPi _{12}\) that provably achieves optimal security as stated in Theorem 1, this construction is not really practical as it is 12-redundant. Recall that k-redundant means the master needs k instantiations of the functionality \(\mathcal{F}\), so it’s in some sense the hardware cost. For this section let us also define a computational cost: the rate of a simple construction is the average number of invocations to its \(\mathsf{F}_i\) oracles the master \(\mathsf{M}^{\mathsf{F}_1,\ldots ,\mathsf{F}_m,\$}(\cdot )\) makes with any query.

3.1 A 2-Redundant Scheme \(\varPi _2^\phi \)

We will now define a scheme \(\varPi _2^\phi \) which in terms of redundancy and rate is as efficient as we possibly could hope for a scheme with non-trivial security: it’s 2-redundant and has a rate of slightly above (the trivial lower bound of) 1. The construction \(\varPi ^\phi _2=(\mathsf{M}^*,\mathsf{T}^*)\), where \(\phi \in \mathbb {R},\phi \ge 0\) is illustrated in Fig. 4.

- test: In the test phase, \(\mathsf{T}^{\mathsf{F}_1,\mathsf{F}_2,\mathsf{F}}(T)\) picks a random \(\varDelta ,0\le \varDelta \le T-1\), then queries \(\mathsf{F}_1\) on \(\varDelta \) random inputs and checks if the outputs are correct by comparing with the trusted \(\mathsf{F}\).

- master: With probability \(1-T^{-\phi }\) the master \(\mathsf{M}^{\mathsf{F}_1,\mathsf{F}_2,\$}(x)\) picks either \(\mathsf{F}_1\) or \(\mathsf{F}_2\) at random, queries it on x and uses the output as its output. Otherwise, with probability \(T^{-\phi }\), the master queries both oracles and outputs abort if their outputs don’t match, and forwards the output of \(\mathsf{F}_1\) otherwise.

Fig. 4. Construction \(\varPi _2^\phi \) from Conjecture 1.
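The master of \(\varPi _2^\phi \) can be sketched as follows. This is an illustrative reading of the description above, not code from the paper; the names `master_phi`, `expected_rate` and `ABORT` are made up for the example, and circuits are modeled as plain Python callables.

```python
import random

ABORT = "abort"


def master_phi(f1, f2, x, T, phi, rng):
    """One master query of the 2-redundant scheme with check probability T**(-phi)."""
    check_prob = T ** (-phi)
    if rng.random() < check_prob:
        # "Check" query: both circuits are queried and compared.
        y1, y2 = f1(x), f2(x)
        return y1 if y1 == y2 else ABORT
    # Otherwise query just one circuit, chosen uniformly at random.
    chosen = f1 if rng.random() < 0.5 else f2
    return chosen(x)


def expected_rate(T, phi):
    """Average number of oracle calls per master query: 1 + T**(-phi)."""
    p = T ** (-phi)
    return 2 * p + 1 * (1 - p)
```

For \(\phi =0\) every query is a check (rate 2), which is the \(\varPi _2^\star \) master; for \(\phi =0.5\) and \(T=10^4\) the rate is about 1.01.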

Our Conjecture 1 states that this construction achieves optimal security (optimal in the sense of matching the lower bound from Lemma 2) for any \(0<\phi <1\), i.e.,

For any \(0<\phi <1\) and any constant \(c>0\) there is a constant \(c'\) such that \(\varPi _2^\phi \) is \((c,\frac{c'}{T})\)-Trojan resilient.

We discuss how \(\varPi _2^\phi \) performs against typical Trojans like time-bombs and cheat codes. Our conjecture only talks about \((\mathsf{win}(T),\mathsf{wrng}(T))\)-security where the winning probability \(\mathsf{win}(T)=c\) is a constant, and here the exact value of \(\phi \) does not seem to matter much as long as it is bounded away from 0 and 1. For \(\mathsf{win}(T)=o(1)\) the parameter \(\phi \) will matter, as the attacks below illustrate. (Here o(1) always denotes any value that goes to 0 as \(T\rightarrow \infty \).)

Proposition 2

(Time Bomb against \(\varPi _2^\phi \)). For any \(\phi \), there exists an adversary that \((\varTheta (T^{-\phi }),1-o(1))\)-wins in \(\mathsf{TrojanGame}(\varPi ^\phi _2,T,\omega (T))\).

Proof

(sketch). Let \(\mathsf{Adv}\) choose the constant functionality \(\forall x\in \mathcal{X}:\mathcal{F}(x)=0\), and a Trojan \(\mathsf{F}_1\) which outputs the correct value 0 for the first T queries, and 1 for all queries \(>T\), while \(\mathsf{F}_2\) always outputs 1.

\(\mathsf{F}_1\) will always pass the test. The master will abort iff one of its first \(T-\varDelta \) queries to \(\mathsf{F}_1\) (where the output is 0) is a “\(b=2\)” query, i.e., a query in which the master queries both circuits (as then \(\mathsf{F}_1(x)=0\ne 1=\mathsf{F}_2(x)\)). With probability \(T^{-\phi }\) we have \(\varDelta \ge T-T^{\phi }\), and in this case such a bad event only happens with constant probability (using \((1-\epsilon )^{1/\epsilon }\approx 1/e=0.368\ldots \)). So the Trojan will not be detected with probability \(T^{-\phi }/e\), and in this case also almost all outputs will be wrong. \(\square \)
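The approximation \((1-\epsilon )^{1/\epsilon }\approx 1/e\) used in this sketch can be checked numerically for \(\epsilon =T^{-\phi }\). The helper below is illustrative only (its name is not from the paper); it evaluates the probability that none of \(T^{\phi }\) consecutive master queries is a two-circuit check.

```python
import math


def no_check_probability(T: int, phi: float) -> float:
    """Probability that none of round(T**phi) consecutive master queries is a
    two-circuit check, when each query is a check independently with
    probability T**(-phi)."""
    eps = T ** (-phi)
    n = round(T ** phi)
    return (1.0 - eps) ** n


if __name__ == "__main__":
    for T in (10**2, 10**4, 10**6):
        print(T, no_check_probability(T, 0.5), 1.0 / math.e)
```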

Proposition 3

(Cheat Code against \(\varPi _2^\phi \)). For any \(\phi \), there exists an adversary that \((\varTheta (T^{\phi -1}),1-o(1))\)-wins in \(\mathsf{TrojanGame}(\varPi ^\phi _2,T,\omega (T))\).

Proof

(sketch). Let \(\mathsf{Adv}\) choose the constant functionality \(\forall x\in \mathcal{X}:\mathcal{F}(x)=0\). The Trojans \(\mathsf{F}_1,\mathsf{F}_2\) output 0 until they get a query from a “trigger set” \(\mathcal{X}'\subset \mathcal{X}\), after this query they always deviate and output 1.

If we set \(|\mathcal{X}'|/|\mathcal{X}|=1/T\), then the test will pass with constant probability \((1-1/T)^\varDelta \ge (1-1/T)^T \approx 1/e\). Assuming the Trojans passed the test phase, the master will not catch the Trojans if the first trigger query to \(\mathsf{F}_1\) and \(\mathsf{F}_2\) happen in-between the same \(b=2\) queries (or in such a query). This happens with probability \(\approx T^{\phi -1}\). \(\square \)

The two propositions above imply that the adversary can always \((T^{\max \{-\phi ,\phi -1\}},1-o(1))\)-win by either using a time-bomb or cheat-code depending on \(\phi \). The winning probability is minimized if \(-\phi =\phi -1\) which holds for \(\phi =0.5\). We conjecture that the above two attacks are basically all one can do to attack \(\varPi _2^{\phi }\).
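The trade-off between the two attacks can be made concrete with a small grid search over \(\phi \). The function names below are illustrative; the code only evaluates the exponent \(\max \{-\phi ,\phi -1\}\) stated above.

```python
def attack_exponent(phi: float) -> float:
    """Exponent e such that the better of the two attacks wins with
    probability about T**e."""
    return max(-phi, phi - 1.0)


def best_phi(grid_size: int = 1001) -> float:
    """Value of phi in [0, 1] (on a uniform grid) minimizing the exponent."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    return min(grid, key=attack_exponent)


if __name__ == "__main__":
    print(best_phi(), attack_exponent(best_phi()))
```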

Conjecture 2

(Security of \(\varPi _2^{0.5}\) for low winning probabilities). For \(\mathsf{win}(T)\in \omega (T^{-0.5})\), \(\varPi _2^{0.5}\) is \((\mathsf{win}(T),\mathsf{wrng}(T))\)-Trojan resilient for some \(\mathsf{wrng}(T)\in o(1)\).

4 A Scheme for History-Independent Trojans

In this section we define the simple scheme \(\varPi _2^\star \) and prove its security as claimed in Theorem 2. Recall that a history-independent Trojan circuit is a stateless circuit, except that it maintains a counter which is incremented by one on every invocation, so its answer to the i’th query can depend on the current index i and current input \(x_i\), but not on any inputs it saw in the past.

4.1 Notation

For an integer n we define \([n]=\{1,2,\ldots ,n\}\) and \([n]_0=\{0,1,\ldots ,n\}\). We will also use the Chernoff bound.

4.2 Security of \(\varPi _2^\star \)

Relaxing the Winning Condition. We can think of the security experiment \(\mathsf{TrojanGame}(\varPi _2^\star ,T,Q)\) as proceeding in rounds. First, for a random \(\varDelta \in [T-1]_0\), we run the test for \(\varDelta \) rounds (in each querying \(\mathsf{F}_1\) and \(\mathsf{F}\) on a random input and checking equivalence), and then run Q rounds of queries to the master (in each round querying \(\mathsf{F}_1\) and \(\mathsf{F}_2\) and checking for equivalence). The adversary immediately loses the game if a comparison fails (outputs 0 in the test or abort in the master) in any round.

We consider a relaxed notion of \((\mathsf{win},\mathsf{wrng})\)-winning, “relaxed” as we make it easier for the adversary, and thus proving security against this adversary gives a stronger statement. We define \((\mathsf{win},\mathsf{wrng})\)-k-winning like \((\mathsf{win},\mathsf{wrng})\)-winning, but the adversary is allowed to be detected in up to \(k-1\) rounds, so \((\mathsf{win},\mathsf{wrng})\)-1-winning is \((\mathsf{win},\mathsf{wrng})\)-winning.

This relaxed notion is not of practical interest, as one would immediately abort the moment a Trojan is detected. We consider this notion as we need it for the security proof of our main Theorem 1, where we will only be able to reduce security of \(\varPi _{12}\) to the security of \(\varPi _2^\star \) (against history-independent Trojans) if \(\varPi _2^\star \) satisfies this stronger notion.

Proof of Theorem 2. The following lemma implies Theorem 2 for \(k=1\). As discussed after the statement of the theorem, the lemma below is somewhat more general, as we’ll need the stronger security for any k.

Lemma 4

For any constant \(c>0\) and positive integer k, there exist a constant \(c'\), an integer \(T_0\) and a polynomial q(.) such that no adversary \(\mathsf{Adv}\) exists that only chooses history-independent Trojans and that for any \(T\ge T_0\) and \(Q\ge q(T)\) can \((c,c'/T)\)-k-win \(\mathsf{TrojanGame}(\varPi _2^\star ,T,Q)\).

Proof

For a given \(c>0\) define

we then set \(c',q(T)\) and \(T_0\) as

These values are just chosen so that later our inequalities work out nicely, we did not try to optimise them.
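For orientation, the choices that the rest of this proof refers back to can be collected as below. This listing is assembled only from the values quoted later in the proof (the choices of \(c''\), \(c'\) and q(T)); the value of \(T_0\) is not quoted there and is therefore left out.

```latex
\[
  c'' = \max\{64k,\; -256\ln(c/2)\}, \qquad
  c' = \frac{c''}{c^{2}}, \qquad
  q(T) = \frac{5\,T^{2}\,c}{c''} + 5T .
\]
```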

By Lemma 1 we can consider the security experiment where an adversary \(\mathsf{Adv}\) chooses the constant functionality \(\mathcal{F}:\mathcal{X}\rightarrow 0\) as target and the two (history-independent) Trojans \(\mathsf{F}_1,\mathsf{F}_2:\mathcal{X}\rightarrow \{0,1\}\) output a bit (so they can either correctly output 0 or deviate by outputting 1). As the \(\mathsf{F}_1,\mathsf{F}_2\) are history-independent, we can think of \(\mathsf{F}_1\) as a sequence \(\mathsf{F}_1^1,\mathsf{F}_1^2,\ldots \) of functions where \(\mathsf{F}_1^i\) behaves like \(\mathsf{F}_1\) on the ith query. Let \(p_i\) and \(q_i\) denote the probability that \(\mathsf{F}_1\) and \(\mathsf{F}_2\), respectively, deviate on the ith query on a random input x, i.e., \(p_i=\Pr _x[\mathsf{F}_1^i(x)=1]\) and \(q_i=\Pr _x[\mathsf{F}_2^i(x)=1]\) (with \(\mathsf{F}_2^i\) defined analogously to \(\mathsf{F}_1^i\)).

In \(\mathsf{TrojanGame}(\varPi _2^\star ,T,Q)\), for \(\delta \in [T-1]_0\) let the variable \(Y_\delta \) denote the number of wrong outputs by \(\mathsf{F}_1\) conditioned on the number of test queries \(\varDelta \leftarrow _\$[T-1]_0\) being \(\varDelta =\delta \). The expectation is \(\mathop {\mathbb {E}}[Y_\delta ]=\sum _{i=\delta +1}^{\delta +Q}p_i\), as the Q queries of the master are the queries number \(\delta +1,\ldots ,\delta +Q\) to \(\mathsf{F}_1\).

Let the variables \(\varPhi ^T_\delta \) and \(\varPhi ^M_\delta \) denote the number of times the test and the master “catch” a Trojan conditioned on \(\varDelta =\delta \)

Let \(\varPhi _\delta =\varPhi ^T_\delta +\varPhi ^M_\delta \) denote the total number of times the Trojans are detected, and let \(\varPhi '_\delta \) be the same except that we ignore the last \(\delta \) queries. With the convention that \(q_i=0\) for \(i<1\)

As we consider history-independent Trojans, the \(Y_\delta ,\varPhi _\delta \) variables are sums of independent Bernoulli random variables. Using a Chernoff bound we will later be able to use the fact that such variables are close to their expectation with high probability.

Claim

For any \(\delta \in [T-1]_0,\tau \in [T-1-\delta ]\) (so \(\delta +\tau \le T-1\))

Proof

(of Claim). Assume for a moment that \(p_1,\ldots ,p_\tau =0\) as required to apply Lemma 5, then

The last step used \( \mathop {\mathbb {E}}[Y_0]+\delta \ge \mathop {\mathbb {E}}[Y_\delta ]\) and \(\delta \le T\).

We now justify our assumption \(p_1,\ldots ,p_\tau =0\). For this, change the security experiment and replace the Trojans \(\mathsf{F}_1,\mathsf{F}_2\) chosen by the adversary with Trojans that behave correctly for the first T inputs, and only then start behaving like \(\mathsf{F}_1,\mathsf{F}_2\) (technically, the new Trojans deviate with probabilities \(p'_i,q'_i\) satisfying \(p'_1,\ldots ,p'_T=0,q'_1,\ldots ,q'_T=0\) and for \(i>T:\ p'_i=p_{i-T}\) and \(q'_i=q_{i-T}\)). At the same time, we increase Q to \(Q+T\). This change leaves \(\mathop {\mathbb {E}}[Y_\delta ]\) unaffected, while \(\mathop {\mathbb {E}}[\varPhi '_\delta ], \mathop {\mathbb {E}}[\varPhi '_{\delta +\tau }]\) can only increase. This proves the claim; note that in (5) the denominator is \(Q+T\), not Q as in (9), to account for this shift. \(\bigtriangleup \)

Claim

For all but at most a c/2 fraction of the \(\delta \in [T]_0\)

Proof

(of Claim). We use Eq. (5) which can be understood as stating that if \(\mathop {\mathbb {E}}[\varPhi _\delta ]\) is “small” for some \(\delta \), then all \(\mathop {\mathbb {E}}[\varPhi _{\delta '}]\) with \(|\delta -\delta '|\) large enough can’t be small too. Concretely, consider any \(\delta \) for which (if no such \(\delta \) exists the claim already follows)

then for all \(\delta '\in [T]_0\) with \(|\delta -\delta '|\ge \frac{c\cdot T}{4}\) (note this is all but at most a c/2 fraction) by Eq. (5)

the two equations above now give

as claimed. \(\bigtriangleup \)

To prove the lemma we need to show that when Q is sufficiently large, any adversary that deviates on at least a \(c'/T\) fraction of the queries can k-win with probability less than c. Since the duration \(\delta \) of the test phase is chosen uniformly at random from the set \(\{0, ..., T-1\}\), we start with the following equation:

Let \(c_\delta \) denote the probability the adversary k-wins conditioned on \(\varDelta =\delta \)

With this notation we need to show

which follows from the claim below

Claim

\(c_\delta <c/2\) holds for all \(\delta \), except (the at most c/2 fraction of) the \(\delta \in [T]_0\) for which (10) does not hold

Proof

(of Claim). Consider any \(\delta \) for which (10) holds. If for this \(\delta \) \(\Pr [Y_\delta \ge Q\cdot c'/T]< c/2\) we’re done as by (12) also \(c_\delta <c/2\) (using that \(\Pr [a\wedge b]\le \Pr [a]\) for all events a, b). To finish the proof of the claim we need to show that otherwise, i.e., if

then

as this again implies \(c_\delta <c/2\). Equation (13) (using \(\Pr [V \ge x]\ge p \Rightarrow \mathop {\mathbb {E}}[V]\ge x\cdot p\) which follows from Markov’s inequality) gives

Plugging this into (10), then using our choice (2) of \(c'=c''/c^2\) and in the last step \(Q\ge q(T)=5\cdot T^2\cdot c/c''+5T\) (this bound for q(T) was just chosen so the last inequality below works out nicely).

Using the Chernoff bound with \(\epsilon =1/2\) and \(c''\ge -256\ln (c/2)\) (refer to Appendix in the extended technical report for details).

With our choice (2) of \(c''=\max \{64k,-256\ln (c/2)\}\) we get the bound \( \Pr [\varPhi _\delta <k] \le c/2 \) claimed in (14). \(\bigtriangleup \)

\(\square \)

4.3 A Technical Lemma

Consider any \(t,z\in \mathbb {N}\), \(z>t\), \(t=0\bmod 2\) and \(p_1,\ldots ,p_z\in [0,1]\). Let \(\bar{p}\) denote the average value of the \(p_i\).

Lemma 5

For any \(q_1,\ldots ,q_z\in [0,1]\) (defining \(q_i=0\) for \(i\le 0\)) and integers \(\varDelta ',\tau \) where \(\varDelta '\ge 0,\tau \ge 0\), if \(p_1=p_2=\ldots =p_{\tau }=0\) then

We refer the reader to our technical report for the full proof, but let us observe that for example it implies, that if \(p_1=p_2=\ldots =p_t=0\), then

Looking ahead, the lhs. of Eq. (17) will denote the expected number of times the master circuit detects an inconsistency in the experiment, while \(\bar{p}\) denotes the fraction of outputs where \(\mathsf{F}_1\) deviates. So if the fraction of wrong outputs is larger than 4/t, the master circuit will on average raise an alert once. To get a bound on the security, the expected number of alerts is not what matters, only the probability that at least one alert is raised, as this means that a Trojan was detected. The more fine-grained statement Eq. (16) will be more useful to argue this.

Illustration of the main variables used in the proof of Lemma 5.

5 A 12-Redundant Scheme \(\varPi _{12}\)

In this section we define a scheme \(\varPi _{12}\) and we will show that the lower bound for achievable security for very simple schemes (shown in Lemma 2) is asymptotically tight. Our proof is constructive - the analysis of our \(\varPi _{12}\) construction shows that it is \((c, \frac{c'}{T})\)-Trojan resilient for suitable constants.

Our \(\varPi _{12}\) scheme operates with three independent input streams and one independent bit stream. On each query, every circuit in \(\varPi _{12}\) receives one of the three inputs and produces an output. The master circuit then checks the consistency of the outputs, i.e. verifies if there is no mismatch between any pair of circuits receiving the same input.

As stated in Sect. 1, digital Trojans mainly employ two types of strategies: time bombs (where time is measured in the number of usages) and cheat codes (as a part of the input). To counter these strategies, \(\varPi _{12}\) desynchronizes the circuits in two ways. First, some of the circuits are tested in the test phase for a randomly chosen time (as already done in the \(\varPi _2^\star \) scheme). This makes it difficult for time bomb Trojans to coordinate the time at which they start deviating. In \(\varPi _{12}\), half of the circuits are tested \(\varDelta \) times, where \(\varDelta \) is a random variable with uniform distribution on \([T-1]_0\).

The second method of desynchronization involves using the value of the aforementioned input bit to alternate the way inputs are distributed among the circuits. Consequently, cheat code Trojans are rendered ineffective as only a subset of the circuits share the same input. Moreover, at any given point in time a circuit never “knows” which alternating state it is in (i.e. it does not know whether its output would be compared with deviating circuits or not).

The main building block of the \(\varPi _{12}\)-scheme is a group of four circuits: two outer ones and two inner ones (see Fig. 6). On each query, every group of circuits receives two inputs - the first is given to the outer circuit on the left and the second to the outer circuit on the right. Additionally, in every step a fresh decision/alternation bit b is sampled. According to its value these two inputs are given to the inner circuits. \(\varPi _{12}\) consists of three such groups. Crosschecks are performed whenever two distinct circuits receive the same input (both within a group and among groups).

The proof that the construction \(\varPi _{12}\) is actually Trojan-resilient starts with assuming that it is not secure, goes via a hybrid argument and leads to a contradiction with the security of the \(\varPi _2^\star \) construction. In every hybrid we change the construction slightly by swapping some pairs of circuits, arguing that the advantage of the adversary does not change much between successive hybrids. In the final hybrid we show that the modified construction contains \(\varPi _2^\star \) as a sub-construction. It turns out that any adversary who wins with reasonably good probability in the final hybrid can be used to build an adversary who breaks the security of \(\varPi _2^\star \), which contradicts Theorem 2.

5.1 The \(\varPi _{12}\) Scheme

We will now define our \(\varPi _{12}\) construction. It is illustrated in Fig. 3. We view our 12-circuit construction as three groups of four circuits each. Group 1 consists of circuits \(\mathsf{F}_1, \dots , \mathsf{F}_4\), group 2 consists of \(\mathsf{F}_5, \dots , \mathsf{F}_8\), and group 3 consists of \(\mathsf{F}_9, \dots , \mathsf{F}_{12}\). At the beginning, three independent and identically distributed sequences of inputs are sampled. Moreover, an independent sequence of bits is sampled (it is used to alternate the distribution of the inputs in the wild). For every query in the wild, the construction performs two steps: (i) the querying step, where the inputs are distributed to all the 12 circuits depending on the value of the corresponding bit, and (ii) the cross-checking step, where the master circuit checks the consistency of the outputs of the circuits that receive the same inputs.

Now we can take a closer look at our construction. There are three pairs of circuits that share the same input throughout the course of the game regardless of the value of the random bit (see Fig. 3): the circuit pairs \((\mathsf{F}_2, \mathsf{F}_6)\), \((\mathsf{F}_3, \mathsf{F}_{11})\) and \((\mathsf{F}_7, \mathsf{F}_{10})\). The outer two circuits within each circuit group (circuits \(\mathsf{F}_i\) for \(i \equiv 0, 1 \textsf { mod } 4\)) are uniquely paired with exactly one of the middle circuits, i.e. given the same input, depending on the value of the random bit \(b_i\) sampled by the master circuit at each step of the game. For instance, in circuit group 1 if \(b_i=0\), \(\mathsf{F}_1\) is paired with \(\mathsf{F}_2\) and both circuits are given \(x^1_i\) as input, and \(\mathsf{F}_4\) is paired with \(\mathsf{F}_3\) and both are given \(x^2_i\) as input. After the querying phase, the master cross-checks the outputs of the circuits which share the same input streams. If any of the cross checks in any round fails, then the master aborts and the adversary loses. We now provide a more detailed description of the construction as follows:

- test: In the test phase, \(\mathsf{T}^{\mathsf{F}_1,\cdots ,\mathsf{F}_{12},\mathsf{F}}(T)\) picks a random \(\varDelta \) such that \(0\le \varDelta \le T-1\), then queries \(\mathsf{F}_1, \mathsf{F}_4, \mathsf{F}_5, \mathsf{F}_8, \mathsf{F}_9\) and \(\mathsf{F}_{12}\) on \(\varDelta \) random and independent inputs \(x_i^1, x_i^4, x_i^5, x_i^8, x_i^9\) and \(x_i^{12}\) respectively and checks if the outputs of the corresponding circuits are correct by comparing them with the trusted \(\mathsf{F}\).

- master: The master samples three independent input streams \(\vec {x_1} = (x_1^1, x_2^1, x_3^1,\cdots ), \vec {x_2} = (x_1^2, x_2^2, x_3^2, \cdots ), \vec {x_3} = (x_1^3, x_2^3, x_3^3,\cdots )\) and an independent bit string \(\vec {b} = (b_1, b_2, \cdots )\). The operation of the master circuit is split into two phases: (i) querying phase and (ii) cross-checking phase.

Querying step. For all \(i \in [Q]\), it queries the functions \(\mathsf{F}_1, \mathsf{F}_2 \cdots , \mathsf{F}_{12}\) as follows:

1. If \(b_i=0\),

   - The functions \(\mathsf{F}_1, \mathsf{F}_2, \mathsf{F}_5, \mathsf{F}_6\) get \(x_i^1\) as input,

   - The functions \(\mathsf{F}_3, \mathsf{F}_{4}, \mathsf{F}_{11}, \mathsf{F}_{12}\) get \(x_i^2\) as input, and

   - The functions \(\mathsf{F}_7, \mathsf{F}_8, \mathsf{F}_{9}, \mathsf{F}_{10}\) get \(x_i^3\) as input.

2. If \(b_i=1\),

   - The functions \(\mathsf{F}_1, \mathsf{F}_3, \mathsf{F}_9, \mathsf{F}_{11}\) get \(x_i^1\) as input,

   - The functions \(\mathsf{F}_2, \mathsf{F}_{4}, \mathsf{F}_{6}, \mathsf{F}_{8}\) get \(x_i^2\) as input, and

   - The functions \(\mathsf{F}_5, \mathsf{F}_7, \mathsf{F}_{10}, \mathsf{F}_{12}\) get \(x_i^3\) as input.

Cross-Checking Step. For all \(i \in [Q]\), the master circuit pairwise compares the outputs of the circuits that receive the same inputs (refer to the technical report for the details of the cross-checking phase). If at any round any of the checks fails, the master outputs \(\mathsf{abort}\) and the adversary loses.

Output. If all the checks succeed in the cross-checking phase, the master outputs the output of the circuit \(\mathsf{F}_1\), i.e., \(\vec {y} = \mathsf{F}_1(\vec {x_1})\) as the output of \(\varPi _{12}\).
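The querying and cross-checking steps can be sketched as follows. The assignment table is copied from the two cases above; everything else is an illustrative assumption: circuits are modeled as Python callables, the names `GROUPS`, `assignment`, `master_round` and `always_paired` are made up, and the cross-check simply compares all circuits that share an input (the exact set of comparisons is deferred to the technical report).

```python
# Input-stream index (1, 2 or 3) -> circuits F_1..F_12 receiving it, per bit value b.
GROUPS = {
    0: {1: (1, 2, 5, 6), 2: (3, 4, 11, 12), 3: (7, 8, 9, 10)},
    1: {1: (1, 3, 9, 11), 2: (2, 4, 6, 8), 3: (5, 7, 10, 12)},
}


def assignment(b):
    """Map circuit index -> stream index for decision bit b."""
    return {circ: stream for stream, circs in GROUPS[b].items() for circ in circs}


def master_round(circuits, xs, b):
    """One round. `circuits` maps 1..12 to callables; xs = (x1, x2, x3).
    Returns F_1's output, or None (abort) if circuits on the same input disagree."""
    assign = assignment(b)
    outputs = {i: circuits[i](xs[assign[i] - 1]) for i in range(1, 13)}
    for circs in GROUPS[b].values():
        if len({outputs[i] for i in circs}) > 1:
            return None
    return outputs[1]


def always_paired():
    """Pairs of circuits that receive the same input for both values of b."""
    a0, a1 = assignment(0), assignment(1)
    return sorted(
        (i, j)
        for i in range(1, 13)
        for j in range(i + 1, 13)
        if a0[i] == a0[j] and a1[i] == a1[j]
    )
```

Evaluating `always_paired()` on this table recovers the three pairs \((\mathsf{F}_2,\mathsf{F}_6)\), \((\mathsf{F}_3,\mathsf{F}_{11})\) and \((\mathsf{F}_7,\mathsf{F}_{10})\) named in the text.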

5.2 Security of \(\varPi _{12}\)

In this section we prove Theorem 1, which states that the construction presented in Sect. 5.1 is \((c,\frac{c'}{T})\)-secure for appropriate choice of constants c and \(c'\). More precisely, we show that the security of the 12-circuit construction presented above can be reduced to the security of the 2-circuit construction from Sect. 2.9. Before proceeding with the proof, we introduce some useful definitions and notations.

5.2.1 History Hardcoded Circuits and Plaits

We observe that the notation \(\mathsf{F}(x)\) for stateful circuits is ambiguous, since its value depends also on the history of queries to \(\mathsf{F}\) (which is not provided as a parameter). We can thus assume that each \(\mathsf{F}\) is associated with some stream \(\mathbf{x} = (x_1,x_2,...)\) and that \(\mathsf{F}(x_i):=\mathsf{F}(x_i| x_1,x_2,...,x_{i-1})\). This notation uniquely describes the i-th query to \(\mathsf{F}\) given the stream \(\mathbf{x} \).

In our proof we will however need a slightly different notion called history-hardcoded circuits. Given any stateful circuit \(\mathsf{F}\) and two arbitrary streams \(\mathbf{x} = (x_1, x_2, x_3,...)\) and \(\mathbf{w} = (w_1, w_2, w_3,...)\), we say \(\mathsf{F}^\mathbf{x }\) is an \(\mathbf{x} \)-history-hardcoded circuit if at the i-th query it hardcodes the stream values \(x_1, \dots , x_{i-1}\) as its history and takes \(w_i\) from the stream \(\mathbf{w} \) as the input to query i. Thus \(\mathsf{F}^\mathbf{x }\) on the i-th query with input \(w_i\) returns the value: \(\mathsf{F}^\mathbf{x ,i} (w_i) = \mathsf{F}(w_i|x_1, x_2,...,x_{i-1})\) and on the \(i+1\)-th query returns \(\mathsf{F}^\mathbf{x ,i+1} (w_{i+1}) = \mathsf{F}(w_{i+1}|x_1, x_2,...,x_{i-1}, x_i)\). We call the stream \(\mathbf{x} \) the hardcoded history stream and \(\mathbf{w} \) the input stream.

For a random variable \(\mathbf{X} \) which takes values from \(\{X_1, X_2,...\}\) and a circuit \(\mathsf{F}\) we define another random variable \(\mathsf{F}^\mathbf{X }\) as follows. Its value for \(\mathbf{X} = \mathbf{x} \) is simply \(\mathsf{F}^\mathbf{x }\). We will call this random variable an \(\mathbf{X} \)-history-hardcoded circuit. Note that as long as \(\mathsf{F}^\mathbf{X }\) receives inputs from a stream \(\mathbf{W} \) independent from \(\mathbf{X} \), we can say that \(\mathsf{F}^\mathbf{X }\) is a history-independent circuit.

We emphasize that when the hardcoded history stream is equal to the actual input stream, the history-hardcoded circuit returns the same results as the original stateful circuit receiving the same input stream. In other words:

\(\mathsf{F}^{\mathbf{X} ,i}(X_i) = \mathsf{F}(X_i|X_1, X_2,...,X_{i-1})\) (18)

for all \(i \in \mathbb {N}\) with probability 1.
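The distinction between a stateful circuit and its history-hardcoded version can be illustrated with a small sketch. The model below is an assumption made for illustration only: a circuit is represented as a Python function of the current input and the tuple of earlier inputs, and `cheat_code` is a hypothetical toy Trojan, not a circuit from the construction.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical model (not from the paper): a stateful circuit is a function
# of the current input and the tuple of all earlier inputs, i.e. F(x | history).
Circuit = Callable[[int, Tuple[int, ...]], int]


def cheat_code(x: int, history: Tuple[int, ...]) -> int:
    """Toy Trojan: acts as the identity until 7 has been queried, then flips the low bit."""
    return x ^ 1 if 7 in history else x


def run_stateful(circuit: Circuit, stream: Sequence[int]) -> List[int]:
    """i-th output is F(x_i | x_1, ..., x_{i-1}): the history is the real input stream."""
    return [circuit(x, tuple(stream[:i])) for i, x in enumerate(stream)]


def run_history_hardcoded(
    circuit: Circuit, history: Sequence[int], inputs: Sequence[int]
) -> List[int]:
    """i-th output is F^{x,i}(w_i) = F(w_i | x_1, ..., x_{i-1})."""
    return [circuit(w, tuple(history[:i])) for i, w in enumerate(inputs)]


x = [1, 7, 3, 4]  # hardcoded history stream
w = [5, 6, 8, 9]  # independent input stream

same = run_history_hardcoded(cheat_code, x, x)   # history equals the inputs
other = run_history_hardcoded(cheat_code, x, w)  # history is independent of the inputs
```

With the history stream equal to the input stream, `same` coincides with `run_stateful(cheat_code, x)`. With the independent input stream `w`, the deviation is triggered by the hardcoded history rather than by the inputs the circuit actually receives.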

Another idea exploited in our construction is the concept of alternating inputs depending on the values of random bits. We will express this idea using the notion of b-plaits, where \(\mathbf{b} \) is a stream of random bits. A b-plait of two streams \(\mathbf{a} ^0\) and \(\mathbf{a} ^1\) is a new stream \((\mathbf{a} ^0 \mathbf{a} ^1)_\mathbf{b }\), where its i-th value is either \(a^0_i\) from stream \(\mathbf{a} ^0\) or \(a^1_i\) from stream \(\mathbf{a} ^1\) depending on the i-th value \(b_i\) of the decision stream \(\mathbf{b} \). More precisely:

\(((\mathbf{a} ^0 \mathbf{a} ^1)_\mathbf{b })_i = a^{b_i}_i\), that is, \(a^0_i\) if \(b_i = 0\) and \(a^1_i\) if \(b_i = 1\).

In our construction, there is only one decision stream used for every plait, therefore the \(\mathbf{b} \) will be omitted for simplicity. Thus to express the plait of two streams \(\mathbf {a}^0\), \(\mathbf {a}^1\) we will simply write \(\mathbf {a}^0\mathbf {a}^1\). A plait of two identical streams of say \(\mathbf {s}\) will simply be written as \(\mathbf {s}\), rather than \(\mathbf {s}\mathbf {s}\).
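As a minimal sketch of a plait of two streams, the function below selects the i-th element from one of two finite lists according to a decision bit. The convention that bit 0 selects the first stream and bit 1 the second is an assumption, and the name `plait` is introduced here only for illustration.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def plait(a0: Sequence[T], a1: Sequence[T], b: Sequence[int]) -> List[T]:
    """Return the b-plait of a0 and a1 (finite prefixes of the streams).

    Assumed convention: position i takes a0[i] when b[i] == 0 and a1[i] when b[i] == 1.
    """
    return [a1[i] if bit else a0[i] for i, bit in enumerate(b)]


a0 = [1, 2, 3, 4]
a1 = [10, 20, 30, 40]
b = [0, 1, 1, 0]

mixed = plait(a0, a1, b)
identical = plait(a0, a0, b)  # plaiting a stream with itself changes nothing
```

The second call reflects the shorthand used in the text: a plait of two identical streams is just that stream, whatever the decision bits are.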

Similarly to b-plaits of streams we can define the plaits of history-hardcoded circuits. Let \(\mathsf G_0^{\mathbf {x}^0}\) be an \(\mathbf{x} ^0\)-history-hardcoded circuit and \(\mathsf G_1^{\mathbf {x}^1}\) be an \(\mathbf{x} ^1\)-history-hardcoded circuit. We say \((\mathsf G_0^{\mathbf {x}^0} \mathsf G_1^{\mathbf {x}^1})_{\mathbf {b}}\) is the b-plait of \(\mathsf G_0^{\mathbf {x}^0}, \mathsf G_1^{\mathbf {x}^1}\) iff, for every i, on the i-th query with input \(w_i\) it returns

\(\mathsf G_{b_i}^{\mathbf {x}^{b_i},i}(w_i) = \mathsf G_{b_i}(w_i|x^{b_i}_1, x^{b_i}_2,...,x^{b_i}_{i-1})\). (19)

Note that the plaited circuit \((\mathsf G_0^{\mathbf {x}^0} \mathsf G_1^{\mathbf {x}^1})_{\mathbf {b}}\) can be expressed as a function of \(\mathsf G_0, \mathsf G_1\) and streams \(\mathbf {x}^0, \mathbf {x}^1\). Looking ahead, this notion of plaited circuits will be crucial in our final reduction of the security of \(\varPi _{12}\) to that of \(\varPi _2^\star \).
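A plait of two history-hardcoded circuits can be sketched in the same spirit. The code assumes the natural reading that, on the i-th query, the decision bit selects which circuit (together with its own hardcoded history) answers the input. The circuit model and the toy circuits `honest` and `cheat_code` are hypothetical and serve only as an illustration.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical model: circuit(input, history) -> output.
Circuit = Callable[[int, Tuple[int, ...]], int]


def honest(x: int, history: Tuple[int, ...]) -> int:
    """Toy specification: the identity function, ignoring the history."""
    return x


def cheat_code(x: int, history: Tuple[int, ...]) -> int:
    """Toy Trojan: flips the low bit once 7 appears in its history."""
    return x ^ 1 if 7 in history else x


def run_plaited(
    g0: Circuit, x0: Sequence[int],
    g1: Circuit, x1: Sequence[int],
    b: Sequence[int], w: Sequence[int],
) -> List[int]:
    """Query i is answered by g_{b_i} with hardcoded history x^{b_i}_1..x^{b_i}_{i-1}."""
    out = []
    for i, (bit, w_i) in enumerate(zip(b, w)):
        circuit, hist = (g1, x1) if bit else (g0, x0)
        out.append(circuit(w_i, tuple(hist[:i])))
    return out


x0 = [7, 7, 7]  # history hardcoded into g0
x1 = [0, 0, 0]  # history hardcoded into g1
b = [0, 1, 0]   # decision stream
w = [2, 4, 6]   # input stream, independent of both histories

outputs = run_plaited(cheat_code, x0, honest, x1, b, w)
```

As the text notes, the result depends only on the two underlying circuits, the two history streams, the decision bits and the input stream.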

Finally, we define an operation on history-hardcoded circuits in the context of our construction:

\(\mathsf{Swap}(\mathsf{F}^{\mathbf {x}},\mathsf G^{\mathbf {t}})\): Given two history-hardcoded circuits \(\mathsf{F}^{\mathbf {x}}\) and \(\mathsf G^{\mathbf {t}}\) in our construction, this operation physically exchanges the positions of the two circuits. That is, \(\mathsf{F}^{\mathbf {x}}\) physically replaces \(\mathsf G^{\mathbf {t}}\) and vice versa. Swapped circuits keep their histories, but since they change their place in the construction, they now receive potentially different inputs and are crosschecked with different circuits.

An important notion related to the \(\mathsf{Swap}\) operation, which we will exploit in the proof, is that of a red edge. We say there is a red edge in the k-th query between two history-hardcoded circuits \(\mathsf{F}^{\mathbf {x}}\) and \(\mathsf G^{\mathbf {t}}\) iff performing the \(\mathsf{Swap}(\mathsf{F}^{\mathbf {x}}, \mathsf G^{\mathbf {t}})\) operation changes the output of at least one of the swapped circuits on the k-th query, compared to the outputs the circuits would give if the \(\mathsf{Swap}\) operation were not performed. Looking ahead, the notions of swaps and red edges will be used in our proof to show that modifying the original \(\varPi _{12}\) construction by some \(\mathsf{Swap}\) operations does not change the security parameters by much.

Now, given these definitions, we are ready to present the intuition behind our construction. One might (and should) ask: “but why 12 circuits?”. The reason is simple: it is hard to give a direct proof for history-dependent circuits, as things become too complicated. Fortunately, there exist reductions. As long as we have a valid proof of Theorem 2 for history-independent circuits, we can try to find some construction of history-dependent circuits which can be reduced to it. The main goal is to design the crosschecks in such a way that, informally speaking, making the circuits more history-dependent makes the whole construction more secure. Such a statement is plausible: thanks to the alternating random bit, one never knows which of two given circuits will receive a specific input. If these two circuits are very history-dependent and have independent histories, there is a high probability that they would answer differently on the given input. Thanks to the crosschecks, the master may detect such an inconsistency with high probability. To take practical advantage of this remark, we need to perform many \(\mathsf{Swap}\) operations and analyze the behaviour of various parameters describing our construction. We were able to carry out such a design and analysis for the 12-circuit construction.

Now we give a more detailed description of the intuition. As mentioned above, the main idea of the proof is to reduce the construction, which consists of (possibly) history-dependent circuits, to \(\varPi _2^\star \), which consists of 2 history-independent circuits (alternatively speaking, pairs of circuits with different hardcoded histories, independent of the inputs that they receive). The \(\mathsf{Swap}\) operation \(\mathsf{Swap}(\mathsf{F}^{\mathbf {x}},\mathsf G^{\mathbf {t}})\) is legitimate whenever either of the following conditions holds: the circuits \(\mathsf{F}\) and \(\mathsf G\) are engaged in the cross-checking process as pictured in Fig. 6 (e.g. circuits \(\mathsf{F}_1\) and \(\mathsf{F}_4\), or circuits \(\mathsf{F}_6\) and \(\mathsf{F}_7\), in Fig. 7 (\(\mathsf {Hyb}_0\))), or the circuits received the same inputs before performing any swaps (e.g. circuits \(\mathsf{F}_2\) and \(\mathsf{F}_7\) swapped in \(\mathsf {Hyb}_2\), which are placed at the positions of \(\mathsf{F}_2\) and \(\mathsf{F}_6\) from \(\mathsf {Hyb}_0\) in Fig. 7). The main idea of the proof is that by performing a series of \(\mathsf{Swap}\) operations on the setting with 3 rows of 4-circuit groups, we are able to end up with a setting \(\mathsf {Hyb}_2\) that contains 2 pairs of history-independent circuits in the place of the cross-checked circuit pairs (\(\mathsf{F}_1\), \(\mathsf{F}_2\)) and (\(\mathsf{F}_3\), \(\mathsf{F}_4\)). We need just 1 \(\mathsf{Swap}\) operation in the middle row to have history-independent circuits in the place of \(\mathsf{F}_1\) and \(\mathsf{F}_4\), but for \(\mathsf{F}_2\) (and \(\mathsf{F}_3\)) we will need an additional input stream that comes with a new row.

We are now ready to proceed to the proof of Theorem 1.

5.2.2 Proof of Theorem 1

The proof of Theorem 1 proceeds in two parts. We ultimately want to prove a reduction from the security of \(\varPi _{12}\) to that of \(\varPi ^\star _2\). Nevertheless, recall from Lemma 4 that the security of \(\varPi _2^\star \) crucially depends on history independent circuits. Thus the first part of our proof constructs a sequence of three hybrids, \(\mathsf {Hyb}_0\), \(\mathsf {Hyb}_1\), \(\mathsf {Hyb}_2\), to get a pair of history independent circuits, \(\mathsf{F}_4^{\mathbf {2}}\) and \(\mathsf{F}_7^{\mathbf {3}} \mathsf{F}_{10}^{\mathbf {3}}\), in the final hybrid. \(\mathsf {Hyb}_0\) is the original construction. To get from \(\mathsf {Hyb}_0\) to \(\mathsf {Hyb}_1\), we perform the \(\mathsf{Swap}\) operation on three pairs of circuits in \(\mathsf {Hyb}_0\). To get from \(\mathsf {Hyb}_1\) to \(\mathsf {Hyb}_2\), we perform the \(\mathsf{Swap}\) operation on two pairs of circuits in \(\mathsf {Hyb}_1\) (refer to Fig. 7 for the swapped pairs). Note that in the final hybrid \(\mathsf {Hyb}_2\), it is crucial that \(\mathsf{F}_4^{\mathbf {2}}\) and \(\mathsf{F}_7^{\mathbf {3}} \mathsf{F}_{10}^{\mathbf {3}}\) are not just history independent, but also take in the same inputs from input stream \(\mathbf {1}\) regardless of the value of the random bit (\(\mathsf{F}_7^{\mathbf {3}} \mathsf{F}_{10}^{\mathbf {3}}\) takes inputs from stream \(\mathbf {1}\) due to the definition of plaited circuit in (19)). This will be necessary for the second part of our proof, which uses \(\mathsf{F}_4^{\mathbf {2}}\) and \(\mathsf{F}_7^{\mathbf {3}} \mathsf{F}_{10}^{\mathbf {3}}\) in the final hybrid as the two history independent circuits needed for the \(\varPi _2^\star \) construction and uses the \(\varPi _2^\star \) construction with these circuits as a subroutine.

Fig. 7. Hybrids with the circuits and their corresponding plaited hardcoded history and input streams (above and below each circuit in black respectively). In \(\mathsf {Hyb}_2\), \(\mathsf{F}_4^{\mathbf {2}}\) and the plaited circuit \(\mathsf{F}_7^{\mathbf {3}} \mathsf{F}_{10}^{\mathbf {3}}\) (in red) are history independent. (Color figure online)

Proof

For a given \(\mathsf{F}_1,...,\mathsf{F}_{12}\), we can define some random variables as follows. Let \(\phi _{\mathsf{F}_j^{\mathbf {A}};\mathbf {B}}\) be the total number of queries, where \(\mathsf{F}_j^{\mathbf {A}}\) gets input from a stream \(\mathbf {B}\) and has a mismatch with any other circuit getting input from the same stream in this query. We will refer to random variables related to the i-th hybrid by adding a superscript i. For example, \(\phi ^0_{\mathsf{F}_1^{\mathbf {1}};\mathbf {2}}=0\), since in \(\mathsf {Hyb}_0\) no crosschecks are made between \(\mathsf{F}_1^{\mathbf {1}}\) and the circuits receiving inputs from stream \(\mathbf {2}\). Let \(\varPhi \) be the total number of mismatches detected by the master circuit. Recall from Sect. 2.3 that Y is the total number of mistakes the master circuit makes. The probability space of these random variables is the set of all choices of a stream of random bits b and streams of random inputs \(\mathbf {1}, \mathbf {2}, \mathbf {3}\) and a number of tests \(\varDelta \).

We prove our statement by contradiction. To this end, we assume that

Therefore for some c and for all \(c'\) there exists an infinite set \(\mathcal {T} \subset \mathbb {N}\) such that for every \(t \in \mathcal {T}\) there exists an infinite set \(\mathcal {Q}_t \subset \mathbb {N}\) such that for every \(t\in \mathcal {T}, z \in \mathcal {Q}_t\) there exists an adversary \(\mathsf{Adv}= \mathsf{Adv}(c,c',z,t)\) such that the following formula is true:

Now we examine what happens to inequality (21) as we move through the hybrids:

\(\mathsf {Hyb}_0\): Hybrid 0 corresponds to the original construction due to equality (18). Hence, the probability that the adversary \(\mathsf{Adv}(c,c',z,t)\) wins in this hybrid is precisely that in Eq. (21).

\(\mathsf {Hyb}_1\): In this hybrid we simply perform three \(\mathsf{Swap}\) operations on pairs of circuits of \(\mathsf {Hyb}_0\) (refer to Fig. 7).

Claim

Proof of the claim is in the technical report.

\(\mathsf {Hyb}_2\): In this hybrid we simply perform two \(\mathsf{Swap}\) operations on pairs of circuits of \(\mathsf {Hyb}_1\) (refer to Fig. 7).

Claim

Proof

Every \(\mathsf{Swap}\) operation performed in \(\mathsf {Hyb}_2\) changes each of the values \(Y^2\) and \(\phi ^2_{\mathsf{F}_4^{\mathbf {2}};\mathbf {1}}\) by at most k (refer to our extended technical report for the inequality that justifies this bound). The inequality is explicit. \(\bigtriangleup \)

Claim

For every \(k \in \mathbb {N}\) and every adversary \(\mathsf{Adv}\) who \((c,\frac{c'}{t})\)-wins \((\varPi _{12}, T,Q)-\mathsf{TrojanGame}\) there exists an adversary \(\mathsf{Adv}'\) who \((c-3 \cdot 2^{-k}, \frac{c'}{t}-\frac{5k}{z})\)-\((3k+1)\)-wins the game \(\mathsf{TrojanGame}(\varPi _2^\star , T,Q)\).

We want to conclude that the existence of such an adversary \(\mathsf{Adv}'\) contradicts Lemma 4. To this end, we show that it follows from our assumption on \(\varPi _{12}\).

Let \(k = 2+\log (\frac{1}{c}) \) and \(\tilde{c} = c - 3 \cdot 2^{-k} = \frac{c}{4} > 0\). Choose \(\tilde{c'}\) arbitrarily and let \(c' = \cdot \tilde{c'}\). Let \(\widetilde{\mathcal {T}} = \mathcal {T}\). Let

Obviously \(\widetilde{\mathcal {Q}_t}\) is infinite. As a result, for every \(q \in poly\) there exists \(z \in \widetilde{\mathcal {Q}_t}\) such that \(z>q(t)\).
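The value of \(\tilde{c}\) fixed above can be verified by a short computation. This check assumes that \(\log \) denotes the base-2 logarithm, which the section does not state explicitly:

\(2^{-k} = 2^{-2-\log (\frac{1}{c})} = \frac{1}{4} \cdot 2^{\log c} = \frac{c}{4}\),

\(\tilde{c} = c - 3 \cdot 2^{-k} = c - \frac{3c}{4} = \frac{c}{4} > 0\).

Under this assumption, the success probability of \(\mathsf{Adv}'\) in the last Claim stays within a constant factor of c.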