Abstract

The non-linear analysis of the performance of engineering structures generally requires a huge computational effort, and in some cases a model updating procedure is also needed. In this contribution, a model updating procedure has been applied to the simulation of pre-stressed reinforced concrete (RC) beams. The combined ultimate shear and flexure capacity of the beams is affected by many complex phenomena, such as the multi-axial state of stress, the anisotropy induced by diagonal concrete cracking, the interaction between concrete and reinforcement (bond), and the brittleness of the failure mode. The spatial distribution of material properties may be considered by random fields. Furthermore, statistical and energetic size effects may influence the analysis. When all of these effects are incorporated in a probabilistic analysis using Monte Carlo simulation, the limits of feasibility are reached quickly. Therefore, the aim was to improve the sampling technique used to generate the realizations of the basic variables for general, computationally complex analysis tasks. The target was a method comparable in effort to a simplified probabilistic method, e.g. the Estimation of Coefficient of Variation (ECoV) method. To this end, the so-called fractile based sampling procedure (FBSP), which builds on Latin Hypercube Sampling (LHS), has been developed. It drastically reduces the computational effort and allows the correlations between the individual basic variables (BV) to be considered. A fundamental aspect of the presented procedure is the selection of a leading basic variable (LBV): the appropriate choice of the LBV among the defined BVs is essential for mapping the correct correlation. Three methods for the determination of the LBV were investigated in this paper.

Keywords

- Fractile based sampling

- Non-linear finite element analysis

- Probabilistic analysis

- Sensitivity and reliability

1 Introduction

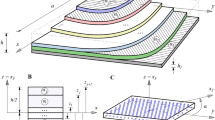

The development of a non-linear numerical model that reproduces experimental data and can then be used for reliability assessment is a complex challenge, and an updating procedure is generally needed. The load bearing capacity of a reinforced concrete beam is affected by several influences, e.g. multi-axial stress states, the anisotropy of the material matrix induced by diagonal cracking, the bond interaction between concrete and reinforcement, and brittle failure of the structural system. In addition, material properties vary spatially and may be described by random fields. Furthermore, statistical and energetic size effects may influence the analysis. To incorporate all these influences on the bearing capacity, a non-linear finite element analysis (NLFEA) can be used.

For the analysis of the load bearing capacity in terms of shear and bending interaction, limit state functions are needed in which the aforementioned phenomena can be incorporated in more or less detail. The governing parameters are generally not deterministic but probabilistic, so that uncertainties can be taken into account. Traditional code-based approaches simplify the problem by treating the uncertain parameters as deterministic and using partial safety factors to account for the uncertainties. Such an approach does not strictly guarantee the required reliability level, and the influence of individual parameters on the reliability cannot be determined. Alternatively, a fully probabilistic (FP) approach can be applied [1]. A structure is verified with respect to a particular limit state via a model that describes the limit state as a function whose value depends on all relevant design parameters. The verification of the limit states shall be realized by a probability-based method. The Model Code 2010 recommends different safety formats for the verification of the limit state, see [2, 3]. A review of these safety formats can be found e.g. in [4,5,6]. The most common are the following:

- Semi-probabilistic approach: The computational requirements are significantly reduced because the design value of the response R is evaluated instead of the probability of failure.

- Global safety factor approach: It is defined in EN 1992-2 and covers only compressive types of failure. However, the study presented in [5] extended its application to shear failure modes.

- Estimation of Coefficient of Variation (ECoV) method: This is based on the semi-probabilistic approach; the difference lies in the procedure adopted to estimate the coefficient of variation and the mean value of the response [7]. Only two NLFEA simulations are required: the first is carried out with the mean values of the basic variables (BV) and the second with their characteristic values.

- Extended ECoV method: Proposed in [4, 8], it allows not only the material uncertainties but also the model and geometrical uncertainties to be evaluated.
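The two-simulation idea behind the ECoV method can be illustrated with a short sketch. The formulas below follow the commonly cited ECoV formulation (lognormal resistance, 5 % characteristic fractile); they are not stated in the text above, and the sensitivity factor `alpha_r = 0.8`, the reliability index `beta = 3.8` and the two resistance values are assumptions chosen purely for illustration.

```python
import math

def ecov_design_resistance(r_mean, r_char, beta=3.8, alpha_r=0.8):
    """Design resistance from two NLFEA runs (mean and characteristic input)."""
    # Coefficient of variation of the resistance, assuming a lognormal
    # distribution and a 5 % characteristic fractile (factor 1.65).
    v_r = math.log(r_mean / r_char) / 1.65
    # Global resistance factor for the assumed target reliability index.
    gamma_r = math.exp(alpha_r * beta * v_r)
    return r_mean / gamma_r, v_r, gamma_r

# Hypothetical peak loads (kN) from the two simulations.
r_d, v_r, gamma_r = ecov_design_resistance(r_mean=500.0, r_char=420.0)
print(f"V_R = {v_r:.3f}, gamma_R = {gamma_r:.3f}, R_d = {r_d:.1f} kN")
```

The sketch makes the limitation visible: only one scalar, the ratio of the two responses, carries the whole uncertainty information, so correlations between individual BVs cannot be represented.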

The probabilistic safety format, sometimes referred to as the fully probabilistic (FP) method, allows the reliability requirements to be included explicitly in terms of the reliability index β and the reference period. This safety format may be used for structures to be designed and for existing structures in cases where the increased effort is economically justified. However, the FP approach is less often used for the design of new structures because of the lack of statistical data. It is often used in the assessment of existing structures to determine the residual service life. For the FP approach, different simulation procedures, e.g. Monte Carlo (MC) or Latin Hypercube Sampling (LHS), can be used to generate realizations of the probabilistic variables. With MC, a large number of simulations is needed to obtain an accurate result, so in combination with complex calculations this method is often not applicable [9]. A remedy is to use LHS instead. This technique is an advanced MC sampling procedure, first described in [10]. LHS significantly reduces the required number of realizations and also allows the correlation between the BVs to be incorporated [11].

In addition to these classical methods, the objective of this research was to develop a more efficient sampling method which approximates the ECoV method in terms of computational effort but includes the features of the well-established LHS. To this end, the so-called Fractile-Based Sampling Procedure (FBSP) has been developed.

2 Experimental Data

In a comprehensive research project, the behavior of pre-stressed reinforced T-shaped concrete beams was investigated. Experimental investigations to characterize the material properties were carried out, as well as large-scale proof-loading tests on the concrete beams accompanied by a comprehensive monitoring program. Based on this research, Table 1 shows the material characteristics in terms of mean value, coefficient of variation (cov) and an appropriate probability density function (PDF) obtained from experiments or code information. Further details on the research project can be found in [12, 13].

3 Probabilistic Sampling Procedure

The failure probability depends mainly on the proper characterization of the input BVs, on the computational models, and on the sampling technique used to create the input samples of the BVs for the NLFEA.

For structural engineering systems, typical BVs for capturing uncertainties are (a) material properties such as the elastic modulus of concrete or steel, (b) geometrical properties such as the cross section dimensions or the concrete cover of the reinforcement and (c) the environmental properties such as the chloride content in the air or the humidity.

For the probabilistic sampling procedure itself, the MC or the LHS technique can be used. LHS allows a significant reduction of the required number of realizations of the BVs owing to a “controlled” random generation process [14]. FReET, a multipurpose probabilistic software for statistical, sensitivity and reliability analyses of engineering problems, is based on the LHS technique. FReET can be used for the estimation of statistical parameters of the structural response, the estimation of the theoretical failure probability, sensitivity analysis, response approximation and reliability-based optimization.

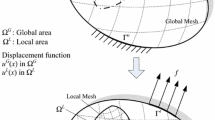

To combine the low computational effort of the ECoV method with the significance of an FP approach with respect to sensitivity and reliability, the FBSP is proposed. The BVs are defined by PDFs. Following the LHS strategy, the range of each variable is divided into N intervals of equal probability, the intervals are assigned to the simulations by random permutations of the integers k = 1, 2, …, N, and each interval is represented by its mean value [15, 16].
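The LHS strategy described above can be sketched in a few lines. This is a minimal illustration, not the FReET implementation: the midpoint probability of each interval is used as a simple stand-in for the interval mean, no correlation control is applied, and the two normal BVs with their parameters are hypothetical.

```python
import random
from statistics import NormalDist

def latin_hypercube(dists, n, rng):
    """Return n realizations (rows) of the variables described by dists."""
    columns = []
    for dist in dists:
        # One probability per stratum; the midpoint (k - 0.5) / n is a
        # simplification of the interval mean mentioned in the text.
        probs = [(k - 0.5) / n for k in range(1, n + 1)]
        rng.shuffle(probs)  # random permutation of the strata k = 1..n
        columns.append([dist.inv_cdf(p) for p in probs])
    return [list(row) for row in zip(*columns)]

rng = random.Random(0)
# Hypothetical BVs: compressive strength and tensile strength in MPa.
bvs = [NormalDist(mu=50.0, sigma=5.0), NormalDist(mu=3.5, sigma=0.5)]
samples = latin_hypercube(bvs, n=10, rng=rng)
for fc, fct in samples:
    print(f"fc = {fc:6.2f} MPa, fct = {fct:5.2f} MPa")
```

Because every stratum of every variable is used exactly once, the marginal distributions are covered evenly even for small N, which is the property that allows LHS to work with far fewer realizations than plain MC.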

In the FBSP, a set of 300 realizations of the BVs was generated by LHS in a first step, and sub-sample fields were extracted in a second step. The extracted sub-sample fields are chosen by means of a so-called leading basic variable (LBV). After selecting a LBV X_i, the randomization sets k for which the realization X_i,k of the LBV is closest to the predefined fractiles X_i,p% = {X_i,5%, X_i,15%, X_i,30%, X_i,70%, X_i,85%, X_i,95%} are extracted. The selection procedure showed that a very good mapping of the target correlation of the BVs can be achieved already for a small number of simulation sets k. The values of the remaining BVs are coupled differently with respect to the LBV while the correlations are maintained; e.g. the set associated with the LBV fractile X_i,5% is not necessarily related to the set associated with X_i,15%. The choice of the LBV therefore significantly influences the sample sets k, and it may also significantly influence the simulation process, e.g. the crack initiation and the crack pattern development in the NLFEA of concrete structures. In the following, three approaches are suggested for the appropriate determination of the LBV.
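The extraction step can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the basic sample field is generated here by plain correlated Gaussian sampling instead of LHS, the fractile targets are taken from the assumed PDF of the LBV, and the distribution parameters and the correlation `rho` are hypothetical.

```python
import math
import random
from statistics import NormalDist

FRACTILES = (0.05, 0.15, 0.30, 0.70, 0.85, 0.95)

def extract_fbsp_subsample(samples, lbv_index, lbv_dist, fractiles=FRACTILES):
    """For each fractile, keep the realization whose LBV value is closest."""
    subsample = []
    for p in fractiles:
        target = lbv_dist.inv_cdf(p)  # fractile value of the LBV
        closest = min(samples, key=lambda row: abs(row[lbv_index] - target))
        subsample.append(closest)  # the whole set k, i.e. all BVs, is kept
    return subsample

rng = random.Random(1)
fc_dist = NormalDist(mu=50.0, sigma=5.0)  # hypothetical LBV: fc in MPa
rho = 0.7                                 # hypothetical fc-fct correlation
basic_field = []
for _ in range(300):
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    fc = fc_dist.mean + fc_dist.stdev * z1
    fct = 3.5 + 0.5 * (rho * z1 + math.sqrt(1.0 - rho**2) * z2)
    basic_field.append((fc, fct))

sub_field = extract_fbsp_subsample(basic_field, lbv_index=0, lbv_dist=fc_dist)
for p, (fc, fct) in zip(FRACTILES, sub_field):
    print(f"{p:4.0%}: fc = {fc:6.2f} MPa, fct = {fct:5.2f} MPa")
```

Each extracted row keeps the accompanying BV values of the original realization, which is how the correlation structure of the basic sample field is carried over to the six-member sub-sample field.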

The whole FBSP was carried out with two different sources of information: (1) FBSPMC, based on Model Code 2010 information, and (2) FBSPTP, based on information gathered from experimental data (proof loading of reinforced concrete beams). Figure 1 shows the extraction process of the FBSP specific sub-sample fields.

3.1 Determination of the LBV Using the “FBSP Based LBV” Procedure

In this approach, the reference is the structural response obtained from an FP analysis. Simulations are then performed for n FBSP sub-sample fields, one for each of the n selected LBVs. The appropriate LBV can be determined from the consistency of the structural response generated by the FBSP with the structural response obtained by the FP analysis. Within the investigation of the pre-stressed reinforced T-shaped concrete beam, predefined fractiles (a) of the compressive strength fc as LBV, (b) of the tensile strength fct as LBV and (c) of the specific fracture energy Gf as LBV were extracted from the basic sample field together with their accompanying parameters. The computed PDFs of the structural responses are shown in Fig. 2. The comparison with the FP results using lognormal PDFs showed that fc is an appropriate LBV. The major disadvantage of the “FBSP based LBV” procedure for determining the LBV is that the complete FP analysis has to be processed.

3.2 Determination of the LBV Using the “Target Correlation Matrix” Procedure

In this approach, the target correlation matrix defined for the BVs of the FP analysis serves as the reference against which the correlation matrices obtained from the considered FBSP sub-sample fields are compared (e.g. for n LBVs, the correlation matrices extracted from the n FBSP sub-sample fields are analyzed and compared with the reference one).

The correlation matrix coefficients of the basic sample field and of the FBSP specific sub-sample fields can be computed with the Pearson methodology. The big advantage of this procedure is that no analysis of the structural response is necessary for the determination of the LBV, since only the BVs are used for the comparison of the correlations. The Pearson correlation coefficient is evaluated for each pair of BVs, and the correlation matrix of the FBSP with a specific LBV is set up. Hence, an error matrix E can be derived between the exact correlation matrix Tx and the target correlation matrix T by using Eq. 2.

To evaluate how the error changes with the LBV, the second-order norm of the matrix E is calculated. Denoting A as a generic matrix, nA is the second-order norm of A and is derived according to Eq. 3.

Here f(.) is a function that returns the maximum eigenvalue of the matrix in square brackets, which is the product of A and its transposed matrix.
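The comparison described in this subsection can be sketched without any structural analysis. Since Eq. 2 and Eq. 3 are not reproduced here, the sketch assumes the error matrix is the difference E = Tx − T and the second-order norm is the square root of the largest eigenvalue of the product of the transposed matrix and the matrix itself (the usual spectral norm). The power iteration, the sub-sample values and the target matrix are illustrative choices, not taken from the paper.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def correlation_matrix(samples):
    cols = list(zip(*samples))
    return [[pearson(ci, cj) for cj in cols] for ci in cols]

def spectral_norm(a, iterations=200):
    """sqrt of the largest eigenvalue of A^T A, found by power iteration."""
    m = len(a[0])
    g = [[sum(row[i] * row[j] for row in a) for j in range(m)] for i in range(m)]
    v = [1.0 + 0.1 * i for i in range(m)]  # arbitrary non-zero start vector
    lam = 0.0
    for _ in range(iterations):
        w = [sum(g[i][j] * v[j] for j in range(m)) for i in range(m)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0  # zero matrix
        v = [x / norm for x in w]
        lam = norm  # converges to the largest eigenvalue of A^T A
    return math.sqrt(lam)

def lbv_error(sub_field, target):
    """Norm of E = Tx - T for one FBSP sub-sample field (assumed form of Eq. 2)."""
    tx = correlation_matrix(sub_field)
    size = len(target)
    e = [[tx[i][j] - target[i][j] for j in range(size)] for i in range(size)]
    return spectral_norm(e)

# Hypothetical sub-sample field (fc, fct, Gf) and hypothetical target matrix.
sub_field = [(42.0, 3.9, 150.0), (45.5, 3.1, 120.0), (48.0, 3.6, 170.0),
             (52.5, 3.3, 135.0), (55.0, 4.1, 160.0), (58.5, 3.4, 165.0)]
target = [[1.0, 0.7, 0.6], [0.7, 1.0, 0.8], [0.6, 0.8, 1.0]]
print(f"error norm = {lbv_error(sub_field, target):.3f}")
```

Repeating `lbv_error` for the sub-sample field of each candidate LBV and choosing the smallest norm would implement the selection rule of this subsection.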

3.3 Determination of the LBV Using the “Sensitivity Analyses” Procedure

In this approach, the simulation of the structural response with the basic sample field or with the FBSP specific sub-sample field serves as the basis for the sensitivity analysis. For instance, if the sensitivity analysis is carried out for the maximum bearing capacity, those BVs that have the largest impact on the bearing capacity are defined as the LBV. The big advantage of this procedure is that an FP analysis of the structural response is not necessary for the determination of the LBV, because the sensitivity analysis can also be performed on the FBSP specific sub-sample fields.
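A sensitivity ranking of this kind can be sketched as follows. The text does not name the sensitivity measure; the sketch assumes the Spearman rank correlation between each BV and the response, which is a common choice in LHS-based tools. The sample values and the bearing capacities are hypothetical, and ties are not handled.

```python
def ranks(values):
    """Rank positions 1..n of the values (ties are not averaged)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def spearman(x, y):
    """Spearman rank correlation for data without ties."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))

def rank_bvs_by_sensitivity(samples, responses, names):
    """Sort the BVs by the absolute rank correlation with the response."""
    cols = list(zip(*samples))
    sens = {name: spearman(col, responses) for name, col in zip(names, cols)}
    return sorted(sens.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical sub-sample field (fc, fct, Gf) and bearing capacities in kN.
samples = [(42.0, 3.9, 150.0), (45.5, 3.1, 120.0), (48.0, 3.6, 170.0),
           (52.5, 3.3, 135.0), (55.0, 4.1, 160.0), (58.5, 3.4, 165.0)]
responses = [410.0, 430.0, 455.0, 470.0, 500.0, 515.0]

ranking = rank_bvs_by_sensitivity(samples, responses, ["fc", "fct", "Gf"])
for name, rho in ranking:
    print(f"{name:>3}: {rho:+.3f}")
```

The first entry of `ranking` is the candidate LBV. With only six members in a sub-sample field, such rank correlations are coarse, so the result should be read as an indication rather than a precise measure.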

4 Comparison of Basic Sampling and FBSP

The LBV plays an important role in the context of the FBSP. The LBV defines the composition of the sample sets in the FBSP sub-sample field on the basis of the predefined fractile values. Figure 3a shows a comparison of the PDFs of fct and Fig. 3b shows the comparison of the PDFs of Gf. In both cases fc was considered as LBV and the PDFs are generated from (a) the basic sample field, (b) the FBSPTP sub-sample field, and (c) the FBSPMC sub-sample field. Figure 3c shows a comparison of the PDFs of fc and Fig. 3d shows the comparison of the PDFs of Gf. In both cases, fct was considered as LBV and the PDFs are generated as before.

Fig. 3. PDFs for different mechanical parameters in which the probabilistic distribution parameters were obtained from the basic sample field (experimental in the legend), FBSPTP (by using the target correlation matrix) and FBSPMC (by using Model Code 2010 correlations): (a) and (b) show the results if fc is the LBV, (c) and (d) show the results if fct is the LBV

According to [1, 13], a Gumbel Maximum distribution (GMB MAX EVI) has been used for fct and Gf for the comparison of the PDFs. The comparison of the PDFs of fct and Gf generated from the FBSPTP sub-sample field using fc as LBV with the PDF based on the basic sample field shows a very good agreement and provides a very strong argument for the proposed FBSP. On the other hand, the comparison of the PDFs generated based on the FBSPMC sub-sample fields shows a significant deviation in the mean as well as the standard deviation with respect to the PDFs of the basic sample field.

As can be seen from Fig. 3a, the mean value of fct is significantly higher and the standard deviation is significantly smaller for the FBSPMC-PDF than for the FBSPTP-PDF or the experimentally based PDF. Figure 3b shows a smaller mean and standard deviation for Gf when using FBSPMC compared with the experimentally based PDFs. It is evident that the Model Code 2010 formulations produce a higher value of fct and a lower value of Gf than the experimentally derived PDFs. As can be seen from Fig. 3c, the mean value of fc is significantly lower and the standard deviation is significantly higher for FBSPMC than for FBSPTP or the experimentally based PDF. Figure 3d shows significantly smaller mean and standard deviation values of Gf for FBSPMC compared with the experimentally based PDFs.
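A comparison of fitted PDFs such as the one discussed above can be sketched with a method-of-moments fit of the Gumbel Maximum distribution. The fitting method actually used for Fig. 3 is not stated, so the moment fit is an assumption, and the two sets of fct values below are hypothetical placeholders rather than data from the study.

```python
import math
from statistics import mean, stdev

EULER_GAMMA = 0.5772156649015329

def fit_gumbel_max(values):
    """Method-of-moments estimate of (location, scale) of a Gumbel Max PDF."""
    scale = stdev(values) * math.sqrt(6.0) / math.pi
    location = mean(values) - EULER_GAMMA * scale
    return location, scale

def gumbel_max_pdf(x, location, scale):
    z = (x - location) / scale
    return math.exp(-(z + math.exp(-z))) / scale

# Hypothetical tensile strengths fct in MPa (placeholders, not study data).
fct_basic = [3.1, 3.3, 3.4, 3.6, 3.7, 3.9, 4.1, 4.4, 4.8]
fct_subsample = [3.2, 3.4, 3.6, 3.9, 4.2, 4.7]

for label, data in (("basic field", fct_basic), ("sub-sample", fct_subsample)):
    loc, scale = fit_gumbel_max(data)
    print(f"{label:>11}: mean = {mean(data):.2f}, std = {stdev(data):.2f}, "
          f"location = {loc:.2f}, scale = {scale:.2f}")
```

Comparing the mean and standard deviation, or equivalently the location and scale, of the sub-sample fit with those of the basic sample field gives the kind of agreement check described for Fig. 3.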

5 Conclusions

Numerous simulation methods, which originated from the MC method and were subsequently adapted for advanced probabilistic analyses and for reliability and safety considerations, are already established in the scientific community. These methods include e.g. the LHS method as well as the ECoV method. For time-consuming analyses, e.g. the NLFEA of structural components including shear and normal force interaction as well as pre-stressed reinforcement, up to about 300 LHS simulations are required for a sound probabilistic statement. Conversely, if the ECoV method is adopted, only 2 simulations are necessary, but this method cannot map correlations among the BVs and the failure modes.

Therefore, the FBSP method as presented can be located between the well-established LHS and the ECoV method. It could be shown that a proper selection of the LBV reduces the number of necessary simulations while still providing reliable predictions. However, the appropriate choice of the LBV among the defined BVs is essential for mapping the correct correlation.

Three methods for the determination of the LBV were investigated, of which the sensitivity-based correlation analysis provided the best results.

References

1. Vořechovská, D., Teplý, B., & Chromá, M. (2010). Probabilistic assessment of concrete structure durability under reinforcement corrosion attack. Journal of Performance of Constructed Facilities, 24(6), 571–579.

2. Belletti, B., Damoni, C., Hendriks, M. A. N., & De Boer, A. (2014). Analytical and numerical evaluation of the design shear resistance of reinforced concrete slabs. Structural Concrete, 15(3), 317–330.

3. Belletti, B., Pimentel, M., Scolari, M., & Walraven, J. C. (2015). Safety assessment of punching shear failure according to the level of approximation approach. Structural Concrete, 16(3), 366–380.

4. Schlune, H., Plos, M., & Gylltoft, K. (2011). Comparative study of safety formats for nonlinear finite element analysis of concrete structures. In Applications of Statistics and Probability in Civil Engineering, Proceedings of the 11th International Conference on Applications of Statistics and Probability in Civil Engineering (pp. 2542–2548).

5. Cervenka, V. (2013). Reliability-based non-linear analysis according to fib Model Code 2010. Structural Concrete, 14(1), 19–28.

6. Holický, M., & Sykora, M. (2010). Global resistance factors for reinforced concrete structures. In Advances and Trends in Structural Engineering, Mechanics and Computation - Proceedings of the 4th International Conference on Structural Engineering, Mechanics and Computation, SEMC (pp. 771–774).

7. Holický, M. (2006). Global resistance factors for reinforced concrete members. Presented at the ACTA POLYTECHNICA.

8. Schlune, H., Plos, M., & Gylltoft, K. (2011). Safety formats for nonlinear analysis tested on concrete beams subjected to shear forces and bending moments. Engineering Structures, 33(8), 2350–2356.

9. Hurd, C. C. (1985). A note on early Monte Carlo computations and scientific meetings. Annals of the History of Computing, 7(2), 141–155.

10. McKay, M. D., Beckman, R. J., & Conover, W. J. (1979). Comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics, 21(2), 239–245.

11. Vořechovský, M., & Novák, D. (2003). Efficient random fields simulation for stochastic FEM analyses. Computational Fluid and Solid Mechanics, 2003, 2383–2386.

12. Strauss, A., Krug, B., Slowik, O., & Novák, D. (2017). Combined shear and flexure performance of prestressing concrete T-shaped beams: Experiment and deterministic modeling. Structural Concrete, 19(1), 16–35.

13. Zimmermann, T., & Lehký, D. (2015). Fracture parameters of concrete C40/50 and C50/60 determined by experimental testing and numerical simulation via inverse analysis. International Journal of Fracture, 192(2), 179–189.

14. Novák, D., Vořechovský, M., & Teplý, B. (2014). FReET: Software for the statistical and reliability analysis of engineering problems and FReET-D: Degradation module. Advances in Engineering Software, 72, 179–192.

15. Keramat, M., & Kielbasa, R. (1997). Efficient average quality index estimation of integrated circuits by modified Latin hypercube sampling Monte Carlo (MLHSMC). In Proceedings - IEEE International Symposium on Circuits and Systems (Vol. 3, pp. 1648–1651).

16. Huntington, D. E., & Lyrintzis, C. S. (1998). Improvements to and limitations of Latin hypercube sampling. Probabilistic Engineering Mechanics, 13(4), 245–253.

Acknowledgments

Austrian Research Promotion Agency (FFG) and the National Foundation for Research, Technology and Development supported this work by the project OMZIN [FFG-N° 836472]. This paper also reports on the scientific results obtained by the University of Parma within the project PRIN (Project of Prominent National Interest, Italian Research) and financially co-supported by MIUR (the Italian Ministry of Education, University and Research). Finally, the support of DASSAULT SYSTEMES is also strongly acknowledged.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Strauss, A., Belletti, B., Zimmermann, T. (2021). Fractile Based Sampling Procedure for the Effective Analysis of Engineering Structures. In: Matos, J.C., et al. 18th International Probabilistic Workshop. IPW 2021. Lecture Notes in Civil Engineering, vol 153. Springer, Cham. https://doi.org/10.1007/978-3-030-73616-3_27

DOI: https://doi.org/10.1007/978-3-030-73616-3_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-73615-6

Online ISBN: 978-3-030-73616-3

eBook Packages: Engineering, Engineering (R0)