Abstract

In this paper, we use the rational Lanczos method to approximate Toeplitz matrix functions in which the matrices are symmetric positive semidefinite (SPSD). To reduce the computational cost, we exploit the explicit inverse of the Toeplitz matrix together with the fast Fourier transform (FFT). We then apply the method to the solution of a heat equation. Numerical examples demonstrate the effectiveness of the rational Lanczos method.

Supported by the “Peiyu” Project from Xuzhou University of Technology (Grant Number XKY2019104).



1 Introduction

Recently, many authors have been interested in exponential integrators which are widely used in various fields [15, 16, 27, 32]. In the exponential integrators, one needs to compute some products of \(\varphi _{i}\) matrix functions and vectors:

where \(A_{m}\) is an \(m\times m\) matrix, \(s_1\) and \(t\) are given parameters, and \(\text {v}\) is a vector. The \(\varphi _{i}\)-functions are of the following form

Furthermore, the \(\varphi _{i}\)-functions satisfy the following relations
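For reference, the definitions below are the ones commonly used in the exponential integrator literature (see, e.g., [16]). They are recalled here as the usual convention, assuming the paper follows it, and are given for a scalar argument \(z\); the matrix versions are obtained by replacing \(z\) with \(-tA_{m}\).

\[
\begin{aligned}
\varphi _{0}(z) &= e^{z}, \\
\varphi _{i}(z) &= \int _{0}^{1} e^{(1-\theta )z}\,\frac{\theta ^{i-1}}{(i-1)!}\,d\theta = \sum _{k=0}^{\infty }\frac{z^{k}}{(k+i)!}, \qquad i\ge 1, \\
\varphi _{i+1}(z) &= \frac{\varphi _{i}(z)-\varphi _{i}(0)}{z}, \qquad \varphi _{i}(0)=\frac{1}{i!}.
\end{aligned}
\]

For example, \(\varphi _{1}(z)=(e^{z}-1)/z\) and \(\varphi _{2}(z)=(e^{z}-1-z)/z^{2}\).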

Toeplitz matrices have various applications [5, 6]. Given their importance, we want to approximate the products of the \(\varphi _{i}\) matrix functions and vectors in the case where the matrix is an SPSD Toeplitz matrix (TMF); that is, the matrix \(A_{m}\) in (1) is an SPSD Toeplitz matrix. The TMF arises in practical computations; see [12, 36] for example. Recently, some new techniques have been proposed to improve network routing and performance measurement [17, 39]. Based on effective user behavior and traffic analysis approaches [19, 20], one can design more effective scheduling strategies to raise resource utilization [22, 26] and energy efficiency [23, 24]. To test new scheduling strategies, traffic must be reconstructed in a test bed [18, 21, 25, 34, 38]. The fluid model is an effective model for reconstructing bursty data traffic, and the TMF can also be used to build the fluid model.

Classical methods for computing \(\varphi _{i}\) matrix functions have very high computational complexity [2]. Recently, Krylov subspace methods have been widely studied for large-scale sparse matrices because of their high efficiency [1, 3, 4, 7, 8, 9, 29, 30, 40]. In these methods, one only needs to compute functions of a much smaller matrix instead of the large matrix functions. Moreover, rational techniques can be exploited to speed up Krylov subspace methods [10, 11].

It is known that we can calculate Toeplitz matrix-vector products by the fast Fourier transform [5, 6], and one can calculate the explicit inverse of the Toeplitz matrix by the Gohberg-Semencul formula (GS) [13, 14]. These important properties can be used to accelerate the rate of convergence of the computation of TMF. In this work, we use the rational Lanczos method to compute the TMF and reduce the computational cost by using the GS.

2 Toeplitz Matrix

An \(m\times m\) Toeplitz matrix \(T_{m}\) satisfies \((T_{m})_{i,j}=t_{i-j}\) for \(1\le i,j \le m\). A circulant matrix \(C_{m}\), with \((C_{m})_{i,j}=c_{i-j}\), satisfies \(c_{i}=c_{i-m}\) for \(1\le i \le m-1\). According to [5], the products \(C_{m}\text {u}\) and \(C^{-1}_{m}\text {u}\) for a given vector \(\text {u}\) can be computed by the FFT in \(\mathcal {O}(m\log m)\) operations.

A skew-circulant matrix \(S_{m}\), with \((S_{m})_{i,j}=s_{i-j}\), satisfies \(s_{i}=-s_{i-m}\) for \(1\le i \le m-1\). Similarly, the products \(S_{m}\text {u}\) and \(S^{-1}_{m}\text {u}\) can be computed by the FFT in \(\mathcal {O}(m\log m)\) operations.
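The FFT-based products with circulant and skew-circulant matrices can be sketched as follows. This is a minimal illustration for real data, not the authors' code; the function names are hypothetical, and each matrix is assumed to be given by its first column. The skew-circulant case uses the standard diagonal scaling by \(e^{\mathbf {i}\pi k/m}\), which turns the product into a circular convolution.

```python
import numpy as np

def circulant_matvec(c, u):
    """Return C @ u, where C is the circulant matrix with first column c."""
    # A circulant product is a circular convolution, diagonalized by the FFT.
    return np.real(np.fft.ifft(np.fft.fft(c) * np.fft.fft(u)))

def circulant_solve(c, u):
    """Return C^{-1} @ u for a nonsingular circulant matrix with first column c."""
    return np.real(np.fft.ifft(np.fft.fft(u) / np.fft.fft(c)))

def skew_circulant_matvec(s, u):
    """Return S @ u, where S is the skew-circulant matrix with first column s."""
    m = len(s)
    # Scaling by omega_k = exp(i*pi*k/m) maps the skew-circulant product
    # to a circular convolution of the scaled vectors.
    omega = np.exp(1j * np.pi * np.arange(m) / m)
    conv = np.fft.ifft(np.fft.fft(omega * s) * np.fft.fft(omega * u))
    return np.real(np.conj(omega) * conv)
```

Each routine uses a fixed number of length-\(m\) FFTs, which is where the \(\mathcal {O}(m\log m)\) cost comes from.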

In addition, by constructing a proper circulant matrix, we can compute \(T_{m}\text {u}\) in \(\mathcal {O}(2m\log ( 2m))\) complexity by the FFT; see [5, 6].
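The circulant embedding mentioned above can be sketched as follows for real data. The function name and the (first column, first row) calling convention are assumptions made for this illustration.

```python
import numpy as np

def toeplitz_matvec(col, row, u):
    """Return T @ u for the m-by-m Toeplitz matrix with first column `col`
    and first row `row` (row[0] is ignored; col[0] is the diagonal entry)."""
    m = len(u)
    # First column of a 2m-by-2m circulant matrix whose leading m-by-m block is T:
    # [col_0, ..., col_{m-1}, 0, row_{m-1}, ..., row_1]
    c = np.concatenate([col, [0.0], row[:0:-1]])
    padded = np.concatenate([u, np.zeros(m)])
    prod = np.fft.ifft(np.fft.fft(c) * np.fft.fft(padded))
    # The first m entries of the circulant product equal T @ u.
    return np.real(prod[:m])
```

For a symmetric Toeplitz matrix one can simply call `toeplitz_matvec(t, t, u)` with the first column `t`.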

For an SPD Toeplitz matrix \(T_{m}\), the GS formula for the inverse reads as follows [13]

where the matrices \(A_{m}\) and \(\hat{A}_{m}\) are of the following forms

and

Denote \(\mathbf {a}=[a_{1},a_{2},\ldots ,a_{m}]^\intercal \). The vector \(\mathbf {a}\) is obtained by solving the following linear system

According to [31, 33], by using (4), one can obtain

and

where \(\mathbf {i}\) is the imaginary unit, \(\hat{J}\) is the anti-identity matrix, and \(\mathrm {Re}(\text {p})\) and \(\mathrm {Im}(\text {p})\) are the real and imaginary parts of \(\text {p}\), respectively. Thus, we can compute \(T_{m}^{-1}\text {u}\) in \({\mathcal {O}}(m \log m)\) operations. To construct \(T_{m}^{-1}\) by the GS, we need to solve the Toeplitz linear system (5). In this paper, we solve (5) by the preconditioned conjugate gradient (PCG) method with Strang’s preconditioner.
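As an illustration of how the GS is applied, the sketch below assumes the commonly stated symmetric form \(T_{m}^{-1}=\frac{1}{a_{1}}(LL^\intercal -\hat{L}\hat{L}^\intercal )\), where \(L\) and \(\hat{L}\) are lower triangular Toeplitz matrices with first columns \((a_{1},\ldots ,a_{m})^\intercal \) and \((0,a_{m},\ldots ,a_{2})^\intercal \), and \(T_{m}\mathbf {a}=\text {e}_{1}\). It departs from the paper in two labeled ways: the generating vector is obtained with SciPy's Levinson-type solver `solve_toeplitz` instead of PCG with Strang's preconditioner, and the four triangular products use plain circulant embedding rather than the circulant and skew-circulant splitting of (6) and (7). All function names are hypothetical.

```python
import numpy as np
from scipy.linalg import solve_toeplitz

def toeplitz_matvec(col, row, u):
    """T @ u via 2m-point circulant embedding (row[0] is ignored)."""
    m = len(u)
    c = np.concatenate([col, [0.0], row[:0:-1]])
    prod = np.fft.ifft(np.fft.fft(c) * np.fft.fft(np.concatenate([u, np.zeros(m)])))
    return np.real(prod[:m])

def gs_generator(t):
    """Solve T a = e_1 for the symmetric Toeplitz matrix with first column t.
    Assumption: a direct Levinson solve stands in for the PCG method."""
    e1 = np.zeros(len(t))
    e1[0] = 1.0
    return solve_toeplitz(t, e1)

def gs_apply(a, u):
    """Return T^{-1} @ u from the generating vector a, using four FFT-based
    triangular Toeplitz products."""
    m = len(a)
    zero = np.zeros(m)
    first = np.zeros(m)
    first[0] = a[0]                              # (a_1, 0, ..., 0)
    a_hat = np.concatenate([[0.0], a[:0:-1]])    # (0, a_m, ..., a_2)
    # L has first column a; L^T has first row a and first column (a_1, 0, ..., 0).
    w1 = toeplitz_matvec(a, first, toeplitz_matvec(first, a, u))
    # L_hat has first column a_hat; its diagonal is zero.
    w2 = toeplitz_matvec(a_hat, zero, toeplitz_matvec(zero, a_hat, u))
    return (w1 - w2) / a[0]
```

Once `a` is available, every further application of the inverse costs only a fixed number of FFTs, so no inner iteration is needed.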

3 Rational Lanczos Method

In this section, we first introduce the Lanczos method for solving \(y_{i}(t)=\varphi _{i}(-tT_{m})\text {v}\). By using the Lanczos algorithm for a symmetric matrix \(T_{m}\), we can get a basis of a Krylov subspace

Please see [35] for the details of this algorithm.

The following formulation can be obtained by the Lanczos algorithm [35]

where \(U_n=[\text {u}_{1},\text {u}_{2},\ldots ,\text {u}_{n}]\) is an \(m\times n\) matrix, \(H_n\) is an \(n\times n\) symmetric tridiagonal matrix, and \(\text {e}_n \) is the n-th column of the identity matrix. We can then give the following approximation

Hence, the computation of the large matrix function \(\varphi _{i}(-tT_{m})\) is replaced by the computation of the small matrix function \(\varphi _{i}(-tH_{n})\). In addition, \(\varphi _{i}(-tH_{n})\) can be calculated efficiently by the function “phipade” in the software package EXPINT [2].
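The Lanczos approximation \(\varphi _{i}(-tT_{m})\text {v}\approx \beta U_{n}\varphi _{i}(-tH_{n})\text {e}_{1}\), with \(\beta =\Vert \text {v}\Vert _{2}\), can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the small function \(\varphi _{i}(-tH_{n})\text {e}_{1}\) is evaluated through the exponential of an augmented matrix with SciPy's `expm` instead of EXPINT's “phipade”, full reorthogonalization is added for numerical robustness, breakdown (a zero off-diagonal entry) is not handled, and the function names are hypothetical.

```python
import numpy as np
from scipy.linalg import expm

def phi_times_e1(H, i):
    """Return phi_i(H) @ e_1 for a small dense matrix H.
    Uses the identity that the last column of expm([[H, E], [0, J]]) holds
    phi_i(H) e_1 when E = [e_1, 0, ..., 0] and J is the i-by-i shift matrix."""
    n = H.shape[0]
    if i == 0:
        return expm(H)[:, 0]
    W = np.zeros((n + i, n + i))
    W[:n, :n] = H
    W[0, n] = 1.0                      # first extra column is e_1
    for k in range(i - 1):
        W[n + k, n + k + 1] = 1.0      # ones on the superdiagonal of J
    return expm(W)[:n, n + i - 1]

def lanczos_phi(matvec, v, t, n, i):
    """Approximate phi_i(-t T) v from the Krylov subspace K_n(T, v),
    where `matvec` applies the symmetric matrix T to a vector."""
    m = len(v)
    beta = np.linalg.norm(v)
    U = np.zeros((m, n))
    alpha = np.zeros(n)
    off = np.zeros(n - 1)
    U[:, 0] = v / beta
    for j in range(n):
        w = matvec(U[:, j])
        if j > 0:
            w = w - off[j - 1] * U[:, j - 1]
        alpha[j] = U[:, j] @ w
        w = w - alpha[j] * U[:, j]
        # Full reorthogonalization against the basis built so far.
        w = w - U[:, :j + 1] @ (U[:, :j + 1].T @ w)
        if j < n - 1:
            off[j] = np.linalg.norm(w)
            U[:, j + 1] = w / off[j]
    H = np.diag(alpha) + np.diag(off, 1) + np.diag(off, -1)
    return beta * (U @ phi_times_e1(-t * H, i))
```

Only the \(n\times n\) tridiagonal matrix \(H_{n}\) enters the function evaluation; the large matrix is touched solely through `matvec`, which for a Toeplitz matrix can be an FFT-based product.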

According to [35], for approximating \(\varphi _{i}(-tT_{m})\text {v}\), the Lanczos algorithm converges very slowly when the 2-norm of \(tT_m\) is large. To overcome this drawback, the rational Krylov subspace method was proposed [10, 11, 30, 40].

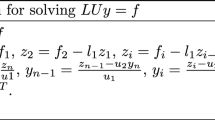

Let \(I_m\) be the identity matrix and let \(\hat{\sigma }\) be a parameter. The rational Lanczos algorithm is given as follows:

Similar to (8), we have the following formulation

Therefore, we can approximate \(\varphi _{i}(-tT_{m})\text {v}\) by

where

In [11], the following error bound for approximating (10) is given.

Theorem 1

Let \(A_{m}=P^\intercal _mT_mP_m\), where \(P_m\) is the projection operator of \(T_m\) on the subspace \(\mathcal {K}_{n}((I_m+\hat{\sigma } T_{m})^{-1},\mathrm {v})\). Then the approximation of \(\varphi _{i}(-tT_{m})\mathrm {v}\) on the subspace \(\mathcal {K}_{n}((I_m+\hat{\sigma } T_{m})^{-1},\mathrm {v})\) has the following error bound

where D is a constant which depends on \(\hat{\sigma }\) and i.

For the rational Lanczos algorithm, \(P_m=U_nU^\intercal _n\), and

The error bound of Theorem 1 shows two things. First, the error bound (11) of the rational Lanczos method does not depend on the 2-norm of the matrix \(tT_m\). Second, as \(i\) increases, the rate of convergence of the approximation to \(\varphi _{i}(-tT_{m})\text {v}\) increases.

3.1 Implementation for the TMF Algorithm

In this section, we give the implementation of the algorithm for approximating the TMF. We note that if a Toeplitz matrix \(T_{m}\) is SPSD, then \(I_m+\hat{\sigma } T_{m}\) (\(\hat{\sigma }>0\)) is an SPD Toeplitz matrix. Therefore, the GS can be used to obtain the inverse of the Toeplitz matrix \(I_m+\hat{\sigma } T_{m}\). For the computation of the TMF, the rational Lanczos algorithm using the GS is as follows:

Algorithm 2: Rational Lanczos algorithm for the TMF

1. Solve \((I_m+\hat{\sigma } T_{m})\mathbf {a}=\text {e}_{1}\)

2. Run Algorithm 1, where \((I_m+\hat{\sigma } T_{m})^{-1}\text {u}_j\) is computed by (6) and (7)

3. Calculate \(\tilde{y}_i(t)=\hat{\beta } U_n \varphi _{i}(-tB_{n})\text {e}_1\)

In step 1 of Algorithm 2, the cost of solving \((I_m+\hat{\sigma } T_{m})\mathbf {a}=\text {e}_{1}\) is \({\mathcal {O}}(m \log m)\) [5, 6]. The matrix-vector products \((I_m+\hat{\sigma } T_{m})^{-1}\text {u}_j\) in step 2 of Algorithm 2 are computed by using (6) and (7), at a cost of \({\mathcal {O}}(m \log m)\) each. In step 3 of Algorithm 2, we need to approximate \(\varphi _{i}(-tB_{n})\text {e}_1\). From [37], we know that \(n \ll m\) in general. Therefore, \(\varphi _{i}(-tB_{n})\text {e}_1\) can be approximated quickly by the function “phipade” in the software package EXPINT [2], at a cost of \({\mathcal {O}}(n^{3})\). As a consequence, the total cost of Algorithm 2 is \({\mathcal {O}}(nm \log m)\).
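The overall structure of Algorithm 2 can be sketched as follows. This is a dense illustration under stated assumptions rather than the authors' implementation: a Cholesky factorization of \(I_m+\hat{\sigma } T_{m}\) stands in for the GS and FFT-based inverse (so the \({\mathcal {O}}(m \log m)\) cost per step is not reproduced), the small matrix is taken as \(B_{n}=(H_{n}^{-1}-I_{n})/\hat{\sigma }\), which is the usual shift-and-invert relation and is assumed here to match the paper's definition, SciPy's `expm` replaces “phipade”, and all function names are hypothetical.

```python
import numpy as np
from scipy.linalg import expm, cho_factor, cho_solve

def phi_times_e1(H, i):
    """phi_i(H) @ e_1 via the exponential of an augmented matrix."""
    n = H.shape[0]
    if i == 0:
        return expm(H)[:, 0]
    W = np.zeros((n + i, n + i))
    W[:n, :n] = H
    W[0, n] = 1.0
    for k in range(i - 1):
        W[n + k, n + k + 1] = 1.0
    return expm(W)[:n, n + i - 1]

def lanczos(op, v, n):
    """n Lanczos steps (with full reorthogonalization) for a symmetric operator."""
    m = len(v)
    beta = np.linalg.norm(v)
    U = np.zeros((m, n))
    H = np.zeros((n, n))
    U[:, 0] = v / beta
    for j in range(n):
        w = op(U[:, j])
        if j > 0:
            w = w - H[j - 1, j] * U[:, j - 1]
        H[j, j] = U[:, j] @ w
        w = w - H[j, j] * U[:, j]
        w = w - U[:, :j + 1] @ (U[:, :j + 1].T @ w)
        if j < n - 1:
            H[j + 1, j] = H[j, j + 1] = np.linalg.norm(w)
            U[:, j + 1] = w / H[j + 1, j]
    return U, H, beta

def rational_lanczos_phi(T, v, t, n, i, sigma):
    """Approximate phi_i(-t T) v from K_n((I + sigma T)^{-1}, v)."""
    m = len(v)
    # Assumption: dense Cholesky solve in place of the GS-based inverse.
    factor = cho_factor(np.eye(m) + sigma * T)
    U, H, beta = lanczos(lambda x: cho_solve(factor, x), v, n)
    # Map the projected shift-and-invert operator back to an approximation of T.
    B = (np.linalg.inv(H) - np.eye(n)) / sigma
    B = (B + B.T) / 2.0                # remove rounding asymmetry
    return beta * (U @ phi_times_e1(-t * B, i))
```

A call such as `rational_lanczos_phi(T, v, t, 25, 1, t / 10)` uses the shift \(\hat{\sigma }=t/10\) adopted in the numerical examples of this paper.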

4 Numerical Examples

In this section, we demonstrate the effectiveness of the rational Lanczos algorithm for approximating \(\varphi _{i}(-tT_{m})\text {v}\) by two numerical examples. In Example 1, we use the MATLAB function “phipade” to calculate the exact solution \(\hat{y}(t)\). In the tables of numerical examples, “m” is the size of the matrix \(T_{m}\), and “Itol” is the required accuracy of the error

where \(\hat{y}_n(t)\) is the approximation of \(\hat{y}(t)\). “IStand” and “IRL” denote the Lanczos method and rational Lanczos method, respectively. The parameter \(\hat{\sigma }\) in Algorithm 2 is \(\hat{\sigma }=\frac{t}{10}\) [28].

Example 1

In the first example, we study the SPD Toeplitz matrix. The elements of the SPD Toeplitz matrix are as follows [6].

The elements of the vector \(\text {v}\) are all 1. We approximate \(\varphi _{i}(-tT_{m})\text {v}\) (\(i=1,2,3\)). In this example, the order of the matrix \(T_{m}\) is \(2^{10}\) and the value of t changes.

It can be seen from Tables 1, 2 and 3 that the IRL needs far fewer iterations than the IStand, especially when the 2-norm of \(tT_{m}\) is large. In addition, the number of iterations of the IRL does not change. This indicates that, in contrast to the IStand, the rate of convergence of the IRL does not depend on the 2-norm of \(tT_{m}\).

To compare the computational time of the IStand and the IRL, Table 4 reports the numbers of iterations and the computational times in seconds of both methods, where \(Itol=10^{-9}\) and \(m=2^{10}\). Two observations can be made from Table 4. First, the computational times and the numbers of iterations of the IRL are much smaller than those of the IStand. Furthermore, if the size of the matrix \(T_{m}\) gets larger, the superiority of the IRL will become more obvious. Second, if t is fixed, the number of iterations of the IRL decreases as i increases, which also validates the result (11) of Theorem 1.

Example 2

In the second example, we study a heat equation [12]. Please refer to [12] for the detailed equation. Numerically solving the heat equation leads to a matrix function problem

where

is an approximate solution, \(T_m\) is an SPD Toeplitz matrix, and \(v_0\) is an initial vector. We solve for \(\hat{v}(t)\) by the IStand and the IRL, respectively. Table 5 lists the numbers of iterations and computational times of the IStand and the IRL for different m and t.
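To make the setting concrete, the sketch below uses a hypothetical model problem, which is not necessarily the equation of [12]: the one-dimensional heat equation \(u_t=u_{xx}\) on \((0,1)\) with homogeneous Dirichlet boundary conditions, discretized by second-order central differences on \(m\) interior points. Under these assumptions the semi-discrete system is \(v'(t)=-T_{m}v(t)\) with the SPD Toeplitz matrix \(T_{m}=(m+1)^{2}\,\mathrm {tridiag}(-1,2,-1)\), and its solution is \(\exp (-tT_{m})v_{0}\). The function names are hypothetical, and a dense `expm` is used purely as a reference computation.

```python
import numpy as np
from scipy.linalg import expm

def heat_toeplitz(m):
    """Return the SPD Toeplitz matrix of the 1D Laplacian and the interior grid."""
    h = 1.0 / (m + 1)
    T = (2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)) / h**2
    x = h * np.arange(1, m + 1)
    return T, x

def heat_solution(T, v0, t):
    """Reference solution exp(-t T) v0 of the semi-discrete heat equation."""
    return expm(-t * T) @ v0

# Usage: the discrete sine mode is an eigenvector of T, so it simply decays in time.
T, x = heat_toeplitz(31)
v0 = np.sin(np.pi * x)
v = heat_solution(T, v0, 0.05)
```

Since the 2-norm of \(tT_{m}\) grows like \(4t(m+1)^{2}\) in this model problem, refining the grid is exactly the regime in which the standard Lanczos method slows down.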

According to Table 5, the IRL needs fewer iterations and less computational time than the IStand to reach the final accuracy. In addition, for large matrix sizes, the IStand becomes impractical because of the large number of iterations it requires, while the IRL still works well.

5 Conclusion and Future Work

In this work, we use the rational Lanczos algorithm to approximate the TMF and apply the method to the numerical solution of a heat equation. By using the GS, we avoid inner iterations in the implementation of the rational Lanczos algorithm. In addition, the Toeplitz structure reduces the amount of computation. Numerical results show the advantage of the new method.

References

Al-Mohy, A., Higham, N.J.: Computing the action of matrix exponential, with an application to exponential integrators. SIAM J. Sci. Comput. 33, 488–511 (2011)

Berland, H., Skaflestad, B., Wright, W.: EXPINT: a MATLAB package for exponential integrators. ACM Trans. Math. Softw. 33(4), 4-es (2007)

Botchev, M., Grimm, V., Hochbruck, M.: Residual, restarting and Richardson iteration for the matrix exponential. SIAM J. Sci. Comput. 35, A1376–A1397 (2013)

Caliari, M., Kandolf, P., Zivcovich, F.: Backward error analysis of polynomial approximations for computing the action of the matrix exponential. BIT Numer. Math. 58, 907–935 (2018)

Chan, R., Jin, X.: An Introduction to Iterative Toeplitz Solvers. SIAM, Philadelphia (2007)

Chan, R., Ng, M.: Conjugate gradient methods for Toeplitz systems. SIAM Rev. 38, 427–482 (1996)

Eiermann, M., Ernst, O.: A restarted Krylov subspace method for the evaluation of matrix functions. SIAM J. Numer. Anal. 44, 2481–2504 (2006)

Frommer, A., Güttel, S., Schweitzer, M.: Efficient and stable Arnoldi restarts for matrix functions based on quadrature. SIAM J. Matrix Anal. Appl. 35, 661–683 (2014)

Frommer, A., Simoncini, V.: Stopping criteria for rational matrix functions of hermitian and symmetric matrices. SIAM J. Sci. Comput. 30, 1387–1412 (2008)

Göckler, T., Grimm, V.: Uniform approximation of \(\varphi \)-functions in exponential integrators by a rational Krylov subspace method with simple poles. SIAM J. Matrix Anal. Appl. 35, 1467–1489 (2014)

Grimm, V.: Resolvent Krylov subspace approximation to operator functions. BIT Numer. Math. 52, 639–659 (2012)

Gockenbach, M.: Partial Differential Equations-Analytical and Numerical Methods. SIAM, Philadelphia (2002)

Gohberg, I., Semencul, A.: On the inversion of finite Toeplitz matrices and their continuous analogs. Matem. Issled. 2, 201–233 (1972)

Heinig, G., Rost, K.: Algebraic Methods for Toeplitz-like Matrices and Operators. Birkhäuser, Basel (1984). https://doi.org/10.1007/978-3-0348-6241-7

Higham, N.J., Kandolf, P.: Computing the action of trigonometric and hyperbolic matrix functions. SIAM J. Sci. Comput. 36, A613–A627 (2017)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Huo, L., Jiang, D., Lv, Z., et al.: An intelligent optimization-based traffic information acquirement approach to software-defined networking. Comput. Intell. 36, 1–21 (2019)

Huo, L., Jiang, D., Qi, S., Song, H., Miao, L.: An AI-based adaptive cognitive modeling and measurement method of network traffic for EIS. Mob. Net. Appl., 1–11 (2019). https://doi.org/10.1007/s11036-019-01419-z

Jiang, D., Huo, L., Song, H.: Rethinking behaviors and activities of base stations in mobile cellular networks based on big data analysis. IEEE Trans. Netw. Sci. Eng. 1(1), 1–12 (2018)

Jiang, D., Wang, Y., Lv, Z., et al.: Big data analysis-based network behavior insight of cellular networks for industry 4.0 applications. IEEE Trans. Ind. Inf. 16(2), 1310–1320 (2020)

Jiang, D., Huo, L., Li, Y.: Fine-granularity inference and estimations to network traffic for SDN. PLoS One 13(5), 1–23 (2018)

Jiang, D., Huo, L., Lv, Z., et al.: A joint multi-criteria utility-based network selection approach for vehicle-to-infrastructure networking. IEEE Trans. Intell. Transp. Syst. 19(10), 3305–3319 (2018)

Jiang, D., Li, W., Lv, H.: An energy-efficient cooperative multicast routing in multi-hop wireless networks for smart medical applications. Neurocomputing 220, 160–169 (2017)

Jiang, D., Wang, Y., Lv, Z., et al.: Intelligent Optimization-based reliable energy-efficient networking in cloud services for IIoT networks. IEEE J. Sel. Areas Commun. Online available (2019)

Jiang, D., Wang, W., Shi, L., et al.: A compressive sensing-based approach to end-to-end network traffic reconstruction. IEEE Trans. Netw. Sci. Eng. 5(3), 1–12 (2018)

Jiang, D., Zhang, P., Lv, Z., et al.: Energy-efficient multi-constraint routing algorithm with load balancing for smart city applications. IEEE Internet Things J. 3(6), 1437–1447 (2016)

Kooij, G.L., Botchev, M.A., Geurts, B.J.: An exponential time integrator for the incompressible Navier-Stokes equation. SIAM J. Sci. Comput. 40, B684–B705 (2018)

Lee, S., Pang, H., Sun, H.: Shift-invert Arnoldi approximation to the Toeplitz matrix exponential. SIAM J. Sci. Comput. 32, 774–792 (2010)

Lopez, L., Simoncini, V.: Analysis of projection methods for rational function approximation to the matrix exponential. SIAM J. Numer. Anal. 44, 613–635 (2006)

Moret, I.: On RD-rational Krylov approximations to the core-functions of exponential integrators. Numer. Linear Algebra Appl. 14, 445–457 (2007)

Ng, M., Sun, H., Jin, X.: Recursive-based PCG methods for Toeplitz systems with nonnegative generating functions. SIAM J. Sci. Comput. 24, 1507–1529 (2003)

Noferini, V.: A formula for the Fréchet derivative of a generalized matrix function. SIAM J. Matrix Anal. Appl. 38, 434–457 (2017)

Pang, H., Sun, H.: Shift-invert Lanczos method for the symmetric positive semidefinite Toeplitz matrix exponential. Numer. Linear Algebra Appl. 18, 603–614 (2011)

Qi, S., Jiang, D., Huo, L.: A prediction approach to end-to-end traffic in space information networks. Mob. Netw. Appl. Online available (2019)

Saad, Y.: Analysis of some Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 29, 209–228 (1992)

Tangman, D.Y., Gopaul, A., Bhuruth, M.: Exponential time integration and Chebychev discretisation schemes for fast pricing of options. Appl. Numer. Math. 58, 1309–1319 (2008)

Van Den Eshof, J., Hochbruck, M.: Preconditioning Lanczos approximations to the matrix exponential. SIAM J. Sci. Comput. 27, 1438–1457 (2006)

Wang, Y., Jiang, D., Huo, L., et al.: A new traffic prediction algorithm to software defined networking. Mob. Netw. Appl. Online available (2019)

Wang, F., Jiang, D., Qi, S.: An adaptive routing algorithm for integrated information networks. China Commun. 7(1), 196–207 (2019)

Wu, G., Feng, T., Wei, Y.: An inexact shift-and-invert Arnoldi algorithm for Toeplitz matrix exponential. Numer. Linear Algebra Appl. 22, 777–792 (2015)


© 2021 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering


Cite this paper

Chen, L., Zhang, L., Wu, M., Zhao, J. (2021). Fast Rational Lanczos Method for the Toeplitz Symmetric Positive Semidefinite Matrix Functions. In: Song, H., Jiang, D. (eds) Simulation Tools and Techniques. SIMUtools 2020. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 369. Springer, Cham. https://doi.org/10.1007/978-3-030-72792-5_15


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-72791-8

Online ISBN: 978-3-030-72792-5
