Abstract

We describe how to perform the backward error analysis for the approximation of \(\exp (A)v\) by \(p(s^{-1}A)^sv\), for any given polynomial p(x). The result of this analysis is an optimal choice of the scaling parameter s which assures a bound on the backward error, i.e. the equivalence of the approximation with the exponential of a slightly perturbed matrix. Thanks to the SageMath package expbea we have developed, one can optimize the performance of the given polynomial approximation. On the other hand, we employ the package for the analysis of polynomials interpolating the exponential function at so called Leja–Hermite points. The resulting method for the action of the matrix exponential can be considered an extension of both Taylor series approximation and Leja point interpolation. We illustrate the behavior of the new approximation with several numerical examples.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We are interested in approximating the action of the matrix exponential to a vector, i.e. \(\exp (A)v\), where \(A\in \mathbb {C}^{n\times n}\) and \(v\in \mathbb {C}^{n\times 1}\). When A is sparse and of large scale it is not favorable to compute \(\exp (A)\) and then multiply by v, since the matrix exponential is, in general, full. It is instead preferable to approximate the exponential function directly by a polynomial. Ideally this should only require matrix-vector products. This allows the computation of \(\exp (A)V\), for a matrix \(V\in \mathbb {C}^{n\times n_0}\) with \(1\le n_0\ll n\), by the same method. Moreover, the process is easily parallelizable, since it does not require solving linear systems (see [19]).

The starting point of our analysis is the consideration that for every polynomial p(x) of degree m such that \(p(0)=1\), there exist a positive integer s and a value \(\theta _m\ge 0\) such that \(p(s^{-1}A)^s v\) is sufficiently close to \(\exp (A)v\) if \(s^{-1}\left\| A\right\| _{}\le \theta _m\). In Sect. 2 we extend the backward error analysis introduced in [2] for truncated Taylor series to any given polynomial \(p(x)=1+a_1x+\cdots +a_mx^m\). This allows us to determine an appropriate value for s (if it exists) that guarantees a priori a satisfying approximation. This general result can be used for existing polynomial approximations whose parameters are currently determined only in a heuristic or a posteriori ways. Moreover, any new polynomial interpolation will benefit from the analysis carried out here.

Then in Sect. 3 we analyse the important case of interpolation polynomials. In particular, we show how to compute in a stable way the divided differences needed for the polynomial interpolation in Newton form (best suited for computing the action of the matrix exponential), how to perform interpolation at complex points in real arithmetic for real input matrices, and how to sort the interpolation points in order to facilitate the triggering of an early termination criterion. In Sect. 4 we introduce and perform the backward error analysis of newly defined approximation polynomials for \(\exp (A)v\). They are based on polynomials which interpolate the exponential function at the so called Leja–Hermite points (see [5]) and extend both Taylor series approximation and Leja point interpolation [7]. In particular, they allow us to select the scaling parameter s by considering not only \(\left\| A\right\| _{}\), but also the values \(\left\| A^q\right\| _{}^{1/q}\le \left\| A\right\| _{}\), \(q\ge 1\), in order to reduce the risk of over-scaling the input matrix. The main advantage of interpolation at Leja–Hermite points with respect to existing polynomial methods is its flexibility. In fact, it can be adapted to matrices with (nearly) real or purely imaginary spectra, to matrices with different behaviors of the sequence \(\{\left\| A^q\right\| _{}^{1/q}\}_q\), and to different requirements by the user, such as best expected performance or best worst case scenario.

In Sect. 4, moreover, we briefly describe the SageMath code expbea we have developed in order to perform the backward error analysis for arbitrary polynomials and tolerances. Finally in Sect. 5 we perform several numerical experiments and compare different approximation or interpolation polynomials in the task of approximating \(\exp (A)v\) and draw some conclusions in Sect. 6.

2 Backward error analysis

We consider a polynomial

of degree m. In this section we show how to compute the value \(\theta _m\). To a first approximation, this value represents the maximal norm that a matrix A can have so that its exponential can be accurately approximated by the given polynomial. If p(x) allows for \(\theta _m>0\), then any matrix A with norm larger than \(\theta _m\) has to be scaled down by a positive integer s, so that \(s^{-1}\Vert A\Vert \le \theta _m\). Consequently, the approximation \(v^{(s)}\) of \(\exp (A)v\) is computed by the iterative scheme

This recursion can be carried out with only two auxiliary vectors, as it is illustrated by the SageMath codeFootnote 1 reported in Algorithm 1.

The main cost of such an algorithm is the number of performed matrix-vector products. Therefore, for given scaling parameter s and degree of approximation m, the cost is \(s\cdot m\). For a specific vector \(v^{(l)}\) it might not be necessary to perform m iterations of the inner loop to achieve a given tolerance \(\mathrm {tol}\). For instance, if \(v^{(l)}\) is the zero vector, it is enough to perform only the initial assignment. To detect such cases an early termination criterion is employed. Common (heuristic) early termination criteria (see [2, 7]) allow to break the inner loop at step \(k<m\) whenever the following estimate of the norm of the tail

is smaller than

We note that such an early termination, during the construction of the approximation polynomial, is not available if Horner’s scheme is used for the evaluation of the polynomial.

In order to reduce the risk of over-scaling, the parameter s should not be chosen too large (see [1, 2]). We refer to [1, §1] for an explicit \(2\times 2\) matrix A for which choosing s too large deteriorates the approximation of \(\exp (A)\). We therefore follow the backward error analysis of Al-Mohy and Higham [1]. The main idea is to assume that the interpolation polynomial p provides the exact solution to a slightly perturbed matrix, i.e.

We consider ourselves satisfied whenever the relative backward error does not exceed the prescribed tolerance \(\mathrm {tol}\), i.e.

In order to achieve this we represent \(\varDelta A\) as a function of A by exploiting the relation

and by setting

Let us now investigate the relation between A and \(\varDelta A\) in more depth: for every matrix X in the set

where \(\rho \) denotes the spectral radius, the function h(X) has the power series expansion

where \(\ell \ge 0\) is the largest integer such that

This means that the coefficients of the polynomial p(x) satisfy

Hence, for those values of s such that \(s^{-1}A \in \varOmega \), we obtain the inequality

Therefore, inequality (2.3) holds true if

In order to verify this inequality for a given polynomial, we solve for the non-negative real root of the scalar equation

and define the largest real solution as \(\theta _m\). The equation above has at least the trivial solution \(\theta =0\). We observe that \(\tilde{h}(\theta )/\theta \) is a strictly increasing function in \(\theta \). If \(\ell >0\), then there exists one additional positive solution \(\theta _m\). If instead \(\ell =0\), this property holds provided that \(|c_1|< \mathrm {tol}\). Moreover, \(\tilde{h}(\theta )\le \mathrm {tol}\cdot \theta \) for \(0\le \theta \le \theta _m\). Therefore, it is possible to find the integer scaling parameter \(s\ge 1\) such that

A possible weakness of this approach is that the powers \(\left\| s^{-1}A\right\| _{}^k\) might be much larger than \(\left\| (s^{-1}A)^k\right\| _{}\) in inequality (2.6). This could lead to a huge over-estimate of \(\left\| h(s^{-1}A)\right\| _{}\) and consequently to an over-estimate of the scaling parameter s. In order to tackle this issue, following [1, Thm. 4.2(a)], it is possible to derive the inequality

valid for \(X\in \varOmega \) and

Clearly, we have

The constraint \(q(q-1)\le \ell +1\) can be rewritten as

Therefore, in order to assure inequality (2.3) for \(q\le q_\ell \) it is enough to verify

This is certainly true if

As result we get

Since \(\alpha _q(A)\le \left\| A\right\| _{}\), the condition for s required in (2.10) is usually less restrictive than in (2.8). Hence s is less likely over-estimated. Since the evaluation of (2.2) requires \(s\cdot m\) matrix-vector products, the minimum cost for a given m corresponds to

As \(\alpha _2(A)\le \alpha _1(A)\), the smallest value for q can be assumed to be 2, if \(\ell \ge 1\). We note, that we did not take the cost of evaluating or approximating \(\alpha _q(A)\) into account. Hence, it is not mandatory to minimize up to \(q_\ell \).

Remark 2.1

In order to further reduce s, it may be convenient to work with a shifted matrix \(B=A-\mu I\), \(\mu \in \mathbb {C}\). This is certainly true if the values \(\alpha _q(B)\) are smaller then the corresponding values \(\alpha _q(A)\). In [2] it was empirically found that the shift \(\mu =\mathrm {trace}(A)/n\) often produces smaller values \(\alpha _q(B)\).

A sufficient condition on the shift \(\mu \) in order to preserve the backward error anaylysis discussed above is that \(\left\| B\right\| _{}\le \left\| A\right\| _{}\). In fact, if the scaling parameter s is chosen in order to guarantee

then the approximation of \(\exp (A)\) is recovered by

The first expression has to be preferred in order to avoid \(p(s^{-1}B)^s\) to overflow (see [13, sect. 10.7.3]). In exact arithmetic, both expressions coincide with

and

as desired.

2.1 Power expansion of the function h

In order to find the positive root solving (2.7), we first need to compute the coefficients \(c_k\) of the power series expansion of h in (2.4). First of all, we consider the truncated polynomial of degree M of the series, for a suitable choice of \(M>m\), and denote it by \(h(x)|_M\). We found that \(M=3m\) is a reasonable choice. We have

Since the polynomial \((\mathrm {e}^{-x}p(x))|_M-1\) starts from degree \(\ell +1\), its \(\lceil M/(\ell +1)\rceil \)-th power has no monomials of degree less than or equal to M. Therefore,

The coefficients of the polynomial

can be obtained by the convolution of the sequences \(((-1)^j/j!)_{j=0}^M\) and \((a_j)_{j=0}^m\). By convolving \((\mathrm {e}^{-x}p(x))|_M-1\) and the coefficients of the \((j-1)\)-th power of \((\mathrm {e}^{-x}p(x))|_M-1\) the coefficients of the j-th power of \((\mathrm {e}^{-x}p(x))|_M-1\) can be computed iteratively. Finally, with the help of the leading coefficients \(|c_k|\) of the power series of \(\tilde{h}\) we can approximate the positive zero of \(\tilde{h}(\theta )|_M-\mathrm {tol}\cdot \theta \). This allows us to obtain our bound \(\theta _m\). In the next sections we compute the bounds \(\theta _m\) for various approximation polynomials up to degree \(m=55\).

2.2 Example: truncated Taylor series approximation

As example to illustrate the backward error analysis outlined above we recall the results for the truncated Taylor series presented in [2]. The polynomial p(x) of degree m is

that is the Taylor series expansion of the exponential function about 0. Since all the coefficients \(a_i\) of p(x) are exactly 1 / i!, the value \(\ell \) in (2.4) is precisely m. Therefore, we get \(p(s^{-1}A)^s=\exp (A+\varDelta A)\) with

where

In Table 1 we report some values of \(\theta _m\) up to \(m=55\) for double precision tolerance (\(\mathrm {tol}=2^{-53}\)) and the corresponding value \(q_m\). The smallest maximum cost, i.e. no early termination criterion in place, is

where we took into consideration that only the values of \(\alpha _q(A)\) for \(1\le q\le \bar{q}\) might be available, for a user defined \(\bar{q}\) (because, for instance, it is considered too expensive to compute or approximate them up to \(q_m\)). We finally remark that the shift strategy described in Remark 2.1 corresponds to a Taylor expansion about the complex point \(\mu \).

3 Hermite polynomial interpolation of the exponential function

Although interpolation can be considered a special case of approximation, we reserve it a proper treatment. The above mentioned truncated Taylor series approximation can be interpreted as a special case of Hermite interpolation, where all interpolation points coincide with \(x_0=0\) and the polynomial p(x) satisfies the following conditions

Moreover, the matrix exponential \(\exp (A)\) itself can be represented through the polynomial that interpolates the exponential function at the eigenvalues \(\{\lambda _i\}_{i=0}^{n-1}\) of A (see [13]). In Newton form it writes as

where \(\exp [\lambda _0,\lambda _1,\ldots ,\lambda _i]\) denotes the divided difference of order i of the function \(\exp \) on the set \(\{\lambda _j\}_{j=0}^i\). Of course one is interested in a polynomial of degree m much smaller than \(n-1\), which still gives a sufficiently accurate approximation of \(\exp (A)\).

In the general case, we consider a finite set of distinct complex interpolation points \(P=\{x_0,x_1,\ldots ,x_k\}\), with \(x_0=0\). Then there exists a unique polynomial of degree m as in (2.1), called Hermite interpolation polynomial, satisfying

for any choice of the indexes \(i=0,1,\ldots ,k\) and \(j=0,1,\ldots ,m_i\) such that the degree condition

holds true. Here \(m_i+1\) corresponds to the number of copies of \(x_i\) used for the interpolation. The equivalence of the leading coefficients with the inverse of the factorials (2.5) now depends on the multiplicity \(m_0+1\) of the interpolation point \(x_0=0\), which we therefore also denote by \(\ell +1\).

With confluent or nearly confluent points the computation of the coefficients of p(x) through the Vandermonde matrix or the evaluation of the elementary Lagrange polynomials are highly unstable ways to evaluate the polynomial (see [4, § 2.]). The barycentric formula should also be discarded since, in the matrix case, it requires the solution of linear systems (see [4, end of § 3.]). Hence we consider the Newton form, which is also an elegant way for considering information on derivatives (see, again, [4, end of § 3.]). For any permutation \((z_0,z_1,\ldots ,z_m)\) of the elements of the vector

the polynomial p(x) can be written in terms of the Newton basis as

where the coefficient \(d_i\) denotes the divided difference of order i. When the points \(\{z_j\}_{j=0}^i\) are ordered such that equal points are adjacent (see [17]), there are simple recursive formulas for the explicit computation of the divided differences. Otherwise, the Genocchi–Hermite formula can be used (see [13, § B.16]). But there is another representation of divided differences, which turns out to be extremely important for the approximation of the matrix exponential.

Theorem 3.1

(Opitz’s divided differences [20, 24]) Let f be a function for which

exist and let \((z_0,z_1,\ldots ,z_m)\) be as above. Let \(f_{i,j}\), \(j\le i\) be the divided difference of order \(i-j\) for the function f on the set \(\{z_j,z_{j+1},\ldots ,z_i\}\) and let finally

Then

In particular, the vector \((d_0,d_1,\ldots ,d_m)^T\) is the first column of \(\exp (Z)\). Due to the special structure of Z there is an efficient and accurate implementation of Taylor series approximation for the matrix exponential, see algorithm TS(II) in [21]. In fact, classical formulas for the computation of the divided differences suffer from severe cancellation errors and are not suitable for matrix interpolation, see [6]. Furthermore, it is not enough to approximate \(\exp (Z)\) by standard Padé formulas [1] or directly \(\exp (Z)e_1\) by the truncated Taylor series method [2], since these algorithms only bound the norm of the backward error, while here each coefficient \(d_i\) is required to have a relative error of the order of machine precision. In fact, if we use the function expm in Matlab R2017a to compute \(\exp (Z)\) for the case \(z_0=z_1=\cdots =z_m=0\) and extract the first column, we see in Table 2 that for \(m=30\) the result \(d_m\) is four orders of magnitude different from the exact value 1 / 30!. Finally, the algorithm described in [21] works for any permutation of the sequence of interpolation points.

Once the divided differences are accurately computed, the matrix polynomial \(p(s^{-1}A)^sv\) is computed by a simple two-term recurrence which is sketched in Algorithm 2. As in the case of polynomial approximation, we can break the inner loop at step \(k<m\) if

is smaller than

A suitable choice for the scaling parameter s is made by means of the backward error analysis framework introduced above. In order to do so, the coefficients \(\{a_i\}_{i=0}^m\) of the polynomial p(x) in the monomial basis have to be recovered. This is possible using Stetekluh’s algorithm [27] written in Algorithm 3.

We remark that the computation of the coefficients \(\{a_i\}_{i=0}^m\) is only meant for the backward error analysis which should be performed a priori and once and for all. In fact, if the early termination at step k is triggered, the monomial form

does not interpolate the exponential function at the set \(\{z_0,z_1,\ldots ,z_{k}\}\), in general, whereas the Newton form

does.

3.1 Real interpolation at complex points

When A and v are real, so is \(\exp (A)v\). In such a case we would like to employ real arithmetic only. This is, in general, not possible when the interpolation points are complex. On the other hand, if for each complex interpolation point \(z_j\) the conjugate \(\overline{z_j}\) point is also among the interpolation points, it is possible to rewrite Newton interpolation in real arithmetic (see [28]). In order to do that it is necessary to reorder the points in such a way that every complex point is followed by its complex conjugate. If we consider the sequence

ordered in such a way that the first \(r+1\) points are real, r and m have the same parity, and the next satisfy \(z_{r+j+1}=\overline{z_{r+j}}\), for \(j=1,3,5,\ldots ,m-1-r\), then the Newton interpolation can again be written as a two-term recurrence in real arithmetic, see Algorithm 4.

We note that the divided differences \(\{d_i\}_{i=0}^r\) are real, and so are \(\{d_{r+2i}\}_{i=1}^{(m-r)/2}\). Moreover, from the updating of the polynomial in the second last line, it is clear that an early termination criterion similar to that described above is available. For the above sequences of points, this evaluation scheme coincides with the classical form of the Newton interpolation in exact arithmetic, even if A or v are complex. It turns out to be faster when A and v are real, since it does not use complex arithmetic. Moreover, it reduces roundoff errors in the imaginary parts when A or v are complex. Therefore, this scheme is our default choice when dealing with such interpolation points.

3.2 Ordering of the interpolation points

Even though we can accurately compute divided differences for any permutation of the interpolation points, a good order of the points in the sequence \((z_0,z_1,\ldots ,z_m)\) is a crucial requirement for triggering an early termination criterion in the above scheme. In [25] Reichel suggests the following ordering, called Leja ordering, of the points of \(P=\{x_0,x_1,\ldots ,x_k\}\): for fixed initial point \(y_0\in P\), \(y_{i+1}\) is recursively chosen as

and the sequence of interpolation points is given by

Since the interpolation error \(\mathrm {e}^x-p(x)\) for p(x) of degree i is proportional to \(\pi _{i}(x)=(x-z_0)(x-z_1)\cdot \cdots \cdot (x-z_i)\), when \(z_0\), \(z_1\), ..., \(z_i\) are all distinct, the procedure above selects a point \(z_{i+1}\) which maximizes \(|\pi _{i}(x)|\) among the points in P. This should greedily reduce the interpolation error. For repeated points the above procedure diverges from this idea, that we would like to pursue in the following. Hence, fixing the first point \(z_0\in P=\{x_0,x_1,\ldots ,x_k\}\), \(z_{i+1}\) is recursively picked from the set

Of course, when i equals k then

since all the points in P have been already selected once. In order to complete the sequence of interpolation points up to m, let us suppose that we can look for the next point in a perturbed set \(P+\nu \), \(\nu \in \mathbb {C}\), that is

We can express \(\pi _{k}(x+\nu )\) by means of Taylor’s formula

and therefore

Finally, letting \(\nu \) tend to 0, our suggested choice for \(z_{k+1}\) is therefore

Now, let \(P_1\subseteq P\) be the set of points with multiplicity at least two and \(k_1\) its cardinality. Then, we can define

If for \(k+k_1<m\) it is

following the reasoning above, we select

where \(P_2\subseteq P_1\) is the set of points with multiplicity at least three and \(k_2\) is its cardinality. We iterate this reasoning until we complete the sequence \((z_0,z_1,\ldots ,z_m)\). In case of complex conjugate pairs of points, such an ordering algorithm has to be slightly adjusted in order to keep them contiguous.

4 Examples of sets of interpolation points

In this section we consider some sets of interpolation points suited for the approximation of the matrix exponential through a polynomial interpolation. In particular, we extend some sets already used in the literature and analyse their properties. From formula (3.1) above, it is clear that it is desirable that the interpolation points \(\{z_j\}_{j=0}^m\) lie in a neighbourhood of the convex hull of the spectrum \(\sigma (A)\). If we shift the matrix A by \(\mu =\mathrm {trace}(A)/n\), then the eigenvalues of the matrix \(B=A-\mu I\) have arithmetic average 0. Another possible shift which requires some information about the field of values of A is used in [7], with the purpose to have the field of values of B inscribed into a rectangle symmetric with respect to the origin of the complex plane. Therefore, without loss of generality, we may assume that the interpolation points \(\{z_j\}_{j=0}^m\) are distributed around the origin. Since we would like to work in real arithmetic whenever the input matrix A is real, we consider only sets of real interpolation points or sets with real and complex conjugate pairs of interpolation points.

We start with interpolation at real Leja points, already used and analysed in [7]. Because of the shift strategy described above, it is not restrictive to consider a real interval \([-c,c]\) symmetric with respect to the origin. A sequence of Leja points for the real interval \([-c,c]\) is defined by \(z_0=0\) and

We select the first three points as \(z_1=c\), \(z_2=-c\), and \(z_3=c/\sqrt{3}\). The next points are univocally determined. The related unique polynomial p(x) of degree m satisfying

is called Leja interpolation polynomial. Differently from the truncated Taylor series method, here there is the interpolation interval as a free parameter to be chosen. Therefore, for given m and c it is possible to compute, by means of the backward error analysis, the corresponding value \(\theta _{m,c}\). For each m, the curve \(c\mapsto \theta _{m,c}\) has been drawn for m up to 100 in [7]. For some values of m up to 55, we report in Table 3, the value

computed by taking the maximum of \(\theta _{m,c}\) over 200 values of c between 0 and \(\bar{c}_m\).Footnote 2 It is an approximation of the maximum of the curve \(c\mapsto \theta _{m,c}\) before its first intersection with the curve \(c\mapsto c\). Additionally, we obtain the interpolation interval \([-c_m,c_m]\) corresponding to the maximum \(\theta _m\). Since c is allowed to range from 0 (in which case Leja interpolation does coincide with the truncated Taylor approximation) to \(\bar{c}_m\), for each degree m the value found for \(\theta _m\) is never smaller than the corresponding value for the truncated Taylor approximation. It turns out that for degree m up to 16 and degrees 19 and 20, the maximum in (4.1) is in fact attained at \(c_m=0\). Therefore, Leja interpolation does coincide with Taylor approximation and it is in fact a Hermite interpolation. For these degrees m the interpolation points are \(\ell _m+1=m+1\) copies of 0 and the value

is greater than 1. For the degrees larger than 20, the value \(\theta _m\) is larger than the corresponding value in the Taylor method. For instance, for degree \(m=50\), it is about 8.78 versus 8.55. Therefore, if p(x) is the polynomial of degree m which interpolates the exponential function at the Leja points of \([-c_m,c_m]\), then \(p(s^{-1}B)^s=\exp (B+\varDelta B)\) with

The Leja points in the intervals \([-c_m,c_m]\) (for any degree m) as well as the corresponding divided differences can be computed once and for all and stored for an actual implementation of the method. Therefore, the method has no extra cost with respect to a standard two-terms recurrence algorithm, such as Taylor approximation.

Despite the slightly improved boundaries \(\theta _m\) presented by this method, there exists a drawback given by the fact that the interpolation point \(z_0=0\) has in general multiplicity 1 (i.e. \(\ell =0\)). Therefore, it is not possible to employ the values of \(\alpha _q(B)\) for q different from 1. Nevertheless, this interpolation method can be effective whenever the matrix B has a thin spectrum distributed along the real axis. This property has been already exploited in [7].

In order to combine all the interesting properties of the truncated Taylor series and the Leja interpolation method it appears logical to literally mix them. This is done in our second example, the so called Leja–Hermite points. In fact, we consider sets of points P obtained as Leja extension of a core set composed by \(\ell +1\) points at the origin (see [5]). Namely, we take the sequence of points \((z_0,z_1,\ldots ,z_\ell )=(0,0,\ldots ,0)\) and choose

We note that it is possible to extend any set of \(\ell +1\) given points in a similar fashion. For the case of interest, we select \(z_{\ell +1}=c\), \(z_{\ell +2}=-c\), and \(z_{\ell +3}=c\sqrt{(\ell +1)/(\ell +3)}\). Consequently, the polynomial interpolating the exponential function at such a sequence of Leja–Hermite points satisfies

That gives us the possibility to employ the values \(\alpha _q(B)\) for some suitable \(q\ge 1\) together with new boundaries \(\theta _m\). Now, for each degree of interpolation m, there are two free parameters, namely the multiplicity \(\ell +1\) of 0, \(\ell \le m\) and the interpolation interval \([-c,c]\). Therefore, it is possible to compute the boundaries \(\theta _{m,\ell ,c}\) for each \(\ell \) and each c. A possible choice for the determination of a unique interpolation polynomial of a given degree m is the following. We first consider

[see formula (2.9)] and select \(\theta _m\) as

requiring

This choice allows us to use the values \(\alpha _q(B)\), while keeping \(m-\ell \) points distributed in the interval \([-c_m,c_m]\). In our experience this is beneficial if an early termination criterion is used. Moreover, we obtain values for \(\theta _m\) not smaller than the corresponding value for the truncated Taylor series. For instance, for degree \(m=50\), the corresponding value \(\theta _m\) is about 8.64. We note that in some circumstances the above maximum is attained for \(c_m=0\). In these cases, the number of zeros among the interpolation points is exactly \(\ell _m+1=m+1\ge q_m(q_m-1)\). In Table 4 we report some values \(c_m\), \(\theta _m\), and \(q_m\) for degree m up to 55.

Another possible choice in order to associate a unique interpolation polynomial to a given degree m is the one which maximizes the value \(\theta _m\), namely

For instance, for degree \(m=50\) the value \(\theta _m\) is about 8.84 obtained with an interpolation set with \(\ell _m=37\). In Table 5 we report some values \(c_m\), \(\theta _m\), and \(q_m\) for degree m up to 55. This choice is particularly appealing when the early termination is not necessary and the information about the values \(\alpha _q(B)\) for \(q\ge 2\) is not available. In this case, in formula (2.10) \(q_\ell \) has to be replaced by \(q_1\). These features can be useful for some exponential integrators (see [16, 18]), which may require the evaluation in parallel of large matrix exponentials.

As a final example, we analyse an extension of the sequence of complex conjugate Leja points introduced in [5, 7, 8] with a single initial point \(z_0=0\). Given the initial sequence \((z_0,z_1,\ldots ,z_\ell )=(0,0,\ldots ,0)\) we define them in the complex interval \(\mathrm {i}[-c,c]\) as

The first four points after the initial sequence are \(z_{\ell +1}=\mathrm {i}c\), \(z_{\ell +2}=-\mathrm {i}c\), \(z_{\ell +3}=\mathrm {i}c\sqrt{(\ell +1)/(\ell +3)}\), and \(z_{\ell +4}=-\mathrm {i}c\sqrt{(\ell +1)/(\ell +3)}\). This set of interpolation points is beneficial for those matrices B with eigenvalues whose convex hull is well described by a vertical skinny rectangle. In order to use a set containing an even number of points, the value \(\ell \) has to be taken odd. Therefore, in order to allow the use of the largest number of values \(\alpha _q(B)\), we select the number of repeated initial zeros as

In [7] it was observed that the maximum of the curve \(c\mapsto \theta _{m,0,c}\) (which corresponds to \(\ell =0\)) is attained at \(c=0\). This fact now turns out to be true for any number of repeated initial zeros in the sequence. We moreover observed the same phenomenon with pure complex Leja–Hermite points, without any artificial conjugation. Therefore, a maximization like (4.2) produces nothing else than the truncated Taylor series of degree m. However, as previously observed, the presence of some point in the interpolation interval generally helps to trigger the early termination criterion. Therefore, unless \(\ell _m\) turns out to be equal to m from formula (4.4) and all the interpolation points concide with zero, we follow the path traced in [7] by taking

The values of \(\ell _m\), \(\theta _m\) (obtained by a fixed point iteration of the equation \(\gamma =\theta _{m,\ell _m,\gamma }\)), and \(q_{\ell _m}\) are reported in Table 6. For \(m=50\) we get \(\theta _m\) about 8.17.

For comparison, we also computed the values \(\theta _m\), which correspond to sets of complex conjugate Leja points with the smallest number of repeated zeros \(\ell _m+1\) (one or two), see Table 7.

4.1 SageMath code for the backward error analysis

All the previous tables were obtained with the help of the SageMath code mentioned above and described in this section. The code is a very flexible and powerful tool for the analysis of interpolation polynomials for the exponential function. In the following we will highlight some of its main features as well as some possible applications. We will mainly do this by showing how the \(\theta _m\) values in the above tables can be computed with the help of the code.

The package is essentially comprised of two functions, namely expbea.sage, which performs the backward error analysis for a polynomial and intpol.sage which computes the coefficients of the polynomial which interpolates the exponential at certain given points. Moreover, we prepared a list containing Leja–Hermite points (lejaptslist.sage) up to degree 109 with any number of leading zeros and the corresponding symmetric version (lejasymptslist.sage) suitable for interpolation at complex points. Finally, the function leja.sage has to be invoked in order to select the proper set of points and scale them to the wanted interval.

In order to get started with the package the above mentioned functions need to loaded into SageMath by:

Now the function

is available. The three arguments are the polynomial (2.1), the desired tolerance \(\mathrm {tol}\) in (2.7), and the binary digits used for evaluation. The result, if it exists, is the largest real solution \(\theta _m\) fulfilling (2.7). As a possible application, not yet mentioned, suppose you have a problem where you are interested in obtaining a result for a certain tolerance, e.g. quadruple or specifically \(2^{-10}\). Currently the Taylor as well as Leja method do support the precisions half, single, and double. In addition to the early termination you can obtain the best performance for your example by using the function expbea to compute the required parameters \(\theta _m\) tailored to your tolerance. These values can easily be computed and used for your problem.

To illustrate the functionality of expbea, as well as the other methods, we recompute the value \(\theta _{50}\) for Taylor, Leja, Leja–Hermite, and complex conjugate Leja–Hermite interpolation in several different ways. Furthermore, we also show how parameters for other popular approximation methods can be computed with our code.

For the truncated Taylor method the value \(\theta _{50}\) (compare with Table 1) can be computed by

resulting in

The function leja selects the set of 51 Leja–Hermite points with \(\ell =50\) from the list lejapts (therefore, a set containing 51 repetitions of zero). The coefficients of the interpolation polynomials and the backward error analysis were performed with 165 binary digits. The tolerance \(2^{-53}\) corresponds to double precision. The computational time for the above computation (obtained by the timeit functions) is 351 ms on a standard laptop. For such an example, a simpler way to reach the same result is to directly give the coefficient of Taylor series expansion for the exponential function, i.e.

with has a computational time of 42.9 ms and provides the same result as before. Now suppose we are interested in quadruple precision, invoking

computes the value \(\theta _{50}\) for \(\mathrm {tol}=2^{-\,113}\), i.e.

with a computational time of 69.8 ms.

Now let us extend this example to the Leja interpolation. For this method we need to include a interpolation interval and obtain the necessary polynomial. Again we recompute the value \(\theta _{50}\) found in Table 3 and therefore the interpolation interval \([-4.2,4.2]\) is used. By calling

we compute the Leja polynomial of degree 50 with \(\ell =0\) in the interval \([-\,4.2,4.2]\) as input for expbea and obtain

with a computational time of 1.97 s. Again we used 165 binary digits and the unite roundoff for double. To obtain the same coefficient \(\theta _{50}\) for the Leja–Hermite interpolation in the interval \([-\,6.3,6.3]\) (compare with Table 4), we invoke

and obtain

Finally, the value \(\theta _{50}\) for the Leja–Hermite interpolation at complex conjugate points in the interval \(\mathrm {i}\cdot [-\,8.2,8.2]\) (compare with Table 6) is computed by,

and results in

Other direct polynomial methods are used in the literature for the matrix exponential approximation, see, for instance [3, 11, 22, 23, 26]. We briefly look at Chebyshev approximation in the interval \([-c,c]\) and show how expbea can be used here. The truncated Chebyshev series approximation can be written as

where \(I_i\) is the modified Bessel function of the first kind of order i and \(T_i\) the Chebyshev polynomial of the fist kind of degree i. The truncated polynomial of degree m has the representation

and does not fulfill the property \(p(0)=1\). Therefore, the above analysis cannot directly be applied. Nevertheless, it is possible to consider the polynomial

which trivially fulfills the required property. In SageMath it is possible to perform the backward error analysis in the following way:

The result

is very similar to that of the Leja interpolation in the same interval.

With the framework compiled in expbea.sage it is also possible to compute the value \(\theta _m\) for other approximations, such as the rational Padé approximation. For instance, starting from

which is the Padé approximant of order [3 / 3] to the exponential, we get

which corresponds up to 16 digits with the corresponding value used in the Matlab R2017a function expm. The relation between the order of the Padé approximant and the order of the Talyor expansion used for the computation (14 in this example) is not further investigated here.

This little demonstration outlines the main features of or SageMath code. The whole code for the backward error analysis is available at the web page https://bitbucket.org/expleja/expbea.

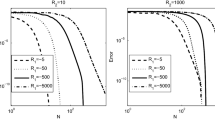

5 Numerical experiments

In this section we report on several numerical experiments. For all experiments we use Matlab R2017a. Furthermore, we denote the truncated Taylor series method by “T”, the interpolation at Leja–Hermite points by “L–H”, and the Leja–Hermite method with the reordering of the points as suggested at the end of Sect. 3.2 by “L–H reord.”. If complex conjugate Leja–Hermite points are used we indicate this by adding “cplx”, in this case choice (4.5) is used. Furthermore, A denotes the original matrix and \(B=A-\mathrm {trace}(A)/n\). For each of the following experiments, we report the scaling parameter s, the degree m, the interval endpoint \(c_m\), the value \(\theta _m\), and the number \(\ell _m\) of repetitions of zero among interpolation points. Here m corresponds to the maximum number of iterations for each scaling parameter, selected by the optimization strategy (2.11). Moreover, the total maximum number of iterations (which corresponds to \(s\cdot m\)), the actual number of iterations (if the early termination criterion is enabled), and the relative error in the 1-norm, with respect to the solution computed by expm(A)*v, are reported. The global accuracy of expm(A)*v is not known. On the other hand, for Hermitian, skew-Hermitian, or real and essentially nonnegative matrices the Taylor method approximates the action of the matrix exponential in a forward stable way [12] and the amplification of rounding errors is governed by the condition number of the \(\exp (A)v\) problem (which can be estimated as described in [14] or in [10], for large and sparse matrices). Not all of the matrices used in our experiments have the above properties. Nevertheless, we decided to report in parenthesis the error of Leja–Hermite interpolation with respect to the Taylor approximation. We also note that the computational cost of evaluating the sequence \(\{\alpha _q(B)\}_{q=1}^{\bar{q}}\) through the estimation algorithm described in [15], which is \(4\bar{q}(\bar{q}+3)\) matrix-vector products (see [2]), is not reported in the tables below. In all experiments \(\mathrm {tol}\) is set to \(2^{-53}\) (which corresponds to the double precision unit roundoff) if required.

We group the experiments, depending on the behavior of the sequence \(\{\alpha _q(B)\}_q\).

5.1 Matrices with non-decreasing \(\alpha _q\) values

In the following we are going to present several experiments where the sequence \(\{\alpha _q(B)\}_q\) is constant. On the one hand, it means that it is not necessary to waste matrix-vector products to estimate the sequence. On the other hand, applying [1, Thm. 4.2(a)] to the estimation of s provides no additional savings.

5.1.1 Advection–diffusion matrices

We consider the method of lines applied to the partial differential equation

equipped with homogeneous Dirichlet boundary conditions in the spatial domain \([0,1]^2\). The parameters are chosen as \(d=1/100\), b non-negative, and we discretise in space by standard central second-order finite differences. The space step size h is chosen equal to 1 / 50. The grid Péclet number is thus

The resulting matrix A has size \(n\times n=2401\times 2401\) (we used a discretization with inner nodes). We are interested in the solution at time \(t=1\), where v is the space discretization of \(u_0\). After applying the shift \(\mu =\mathrm {trace}(A)/n\), the equality \(\left\| B\right\| _{1}=\alpha _q(B)=4d/h^2=100\) holds for any value of \(q\le 8\) and non-negative b. For \(b=0\) the matrix is symmetric and all the eigenvalues are real and distributed in the interval \([-\,100,100]\). The results for this experiment are collected in Table 8. For such an example, interpolation at pure Leja points, without repeated zeros, outperforms Taylor method, since the interpolation points are well spread in the convex hull of the matrix of interest and Taylor method cannot take advantage of the values \(\alpha _q(B)\).

For the case \(b=0.5\), results are reported in Table 9. In this case the convex hull of the spectrum resembles an ellipse inscribed in the skinny rectangle \([-\,100,100]+\mathrm {i}[-\,15,15]\) (see [9]). The results are comparable to the previous case. Finally, for \(b=1\) the matrix B is lower triangular with zero in the diagonal and the results are reported in Table 10. In this case, the set of Leja–Hermite points was chosen in order to maximize \(\theta _m\) according to (4.3), independently of the number of repeated zeros (since the sequence \(\{\alpha _q(B)\}_q\) does not decrease). As a result, it was selected a smaller s with respect to Taylor method and the number of iterations is smaller. The reordering of the points slightly improves the result.

5.1.2 Advection matrices

We consider the discretization of the one-dimensional advection operator \(\partial _x\) in the space domain [0, 1] with periodic boundary conditions. We consider the first order upwind and the second order central schemes

with \(h=1/70\) (\(n=70\)). Both matrices are normal, the second is skew-symmetric. After applying the standard shift, the values for \(\alpha _q(B)\) are constant and equal to \(1/h=70\), for \(q\le 8\). For \(k=0,1,\ldots ,n-1\) the eigenvalues are \(\lambda _k=\mathrm {e}^{2\pi \mathrm {i}kh}/h\) and \(\lambda _k=\mathrm {i}\cdot \sin (2\pi kh)/h\), respectively. The initial vector has the components \(v_i=\mathrm {e}^{-10(ih-1/2)^2/2}\). For the upwind scheme, we can see in Table 11 that interpolation at Leja–Hermite points outperforms Taylor method, thanks again to the possibility to select a smaller scaling parameter s. For the central scheme, we tested complex conjugate Leja points. Due to the distribution of the eigenvalues on the complex interval \(\mathrm {i}\cdot [-70,70]\), one can observe in Table 12 that real interpolation at complex conjugate points needs the fewer number of iterations.

5.1.3 Schrödinger matrix

We finally consider the discretization of the free Schrödinger equation

equipped with homogeneous Dirichlet boundary conditions in the spatial domain \([-\,1,1]\). The space step size h is 1 / 35. The resulting matrix A has size \(n\times n=69\times 69\) and is skew-symmetric. After the usual shift, the matrix B has \(\alpha _q(B)=2/h^2=2450\) for any value of \(q\le 8\) and the eigenvalues are distributed in the complex interval \(\mathrm {i}[-\,2450,2450]\). As initial vector v the discretization of u(0, x) is used. We compute the solution at time \(t=1\). Once again, interpolation at complex conjugate Leja points gives the smallest number of actual iterations. We note that this outcome could not be predicted by the maximum number of iterations given after the initial parameter computation. Moreover, the relative error with respect to the reference solution is two orders of magnitude smaller, see Table 13. Due to the large difference of the computed errors with respect to the expm(A)*v solution, we decided to compute the reference solution in two other different ways. In the first, we explicitely computed the eigenvectors \(\{v_j\}_{j=1}^n\) and the eigenvalues \(\{\lambda _j\}_{j=1}^n\) of the matrix A, i.e. without using eig, and built the reference solution as \(V\exp (\varLambda )V^T\). Then, we used the variable precision arithmetic in Matlab R2017a and computed the reference solution by expm with 32 decimal digits. In both cases, we found an error of 7.3e−11 for the Taylor approximation and of 3.0e−13 for the complex Leja–Hermite interpolation. A larger error in the Taylor approximation with respect to the interpolation at complex Leja points for a diagonal matrix with pure imaginary eigenvalues was already observed in [7, Section 4.3].

5.2 Matrices with decreasing \(\alpha _q\) values

In the following we are going to present several experiments where the sequence \(\alpha _q(B)\) is decreasing. In particular this means applying [1, Thm. 4.2(a)] can be used to reduce the over-estimation of s.

5.2.1 lesp matrix

We consider the tridiagonal matrix A of size \(n\times n=20\times 20\), with \((-2k-3)_{k=1}^{20}\) on the main diagonal, \((1/k)_{k=2}^{20}\) on the lower diagonal, and \((k)_{k=2}^{20}\) on the upper diagonal, multiplied by 100. It can be obtained by the MATLAB command 100*gallery(’lesp’,20). After the standard shift, the values of \(\alpha _q(B)\) range from \(\left\| B\right\| _{1}=\alpha _1(B)=3900\) to \(\alpha _8(B)\approx 3383\). The eigenvalues of B lie in the real interval \([-\,2000,2000]\). The initial vector has components \(v_j=j\), \(j=1,2,\ldots ,n\). The results are collected in Tables 14, and 15. In this example, the best performance is reached by interpolation at reordered Leja–Hemite points selected as in (4.2), since the very efficient early termination criterion takes place.

Now we consider the same matrix scaled by 1 / 25. The scaling parameter and the maximum number of iterations roughly scale accordingly. After the standard shift, we have \(\alpha _1(B)=156\) and \(\alpha _2(B)\approx 152.9\). One may think it is not worth to compute further values in the \(\alpha _q\) sequence, since they seem to decrease too slowly to give a clear advantage and cost hundreds of matrix-vector products. So, we force the methods to use only these two values. In such a situation, a large number of zeros among interpolation points would probably not help and the choice like (4.3) would at least minimize the maximum number of iterations. This is confirmed as it can be seen in Table 15. Both methods optimize their performance with the value \(\alpha _2(B)\) and interpolation at Leja–Hermite points allows smaller maximum and actual numbers of iterations.

5.2.2 \(\mathrm {i}\cdot \) lesp matrix

Additionally, we consider the previous matrix multiplied by the complex identity \(\mathrm {i}\). In this case, the optimization stratery (2.11) selects degree \(m=55\) which, for the interpolation at complex Leja–Hermite points according to (4.4), means 56 repeated zeros. Therefore, the method coincides with truncated Taylor series. Among all our numerical experiments, in this one we obtained the largest errors with respect to the reference solution. On the other hand, the condition condition number of the \(\exp (A)v\) problem, computed as in [2, (4.2)], is very large and takes value 1.0e10.

For this example, we report in Table 16 also the result (denoted by “L cplx”) obtained with interpolation at complex conjugate Leja points as introduced in [7]. As it can be seen, interpolation at pure complex Leja points performs very bad. In fact, the eigenvalues of B / s are in the complex interval \(\mathrm {i}[-\,4.4,4.4]\), while the interpolation points are spread in \(\mathrm {i}[-\,8.1,8.1]\), since \(\left\| B{/}s\right\| _{1}\approx 8.1\) and it is not possible to take advantage of \(\alpha _q(B/s)\) for \(q>1\).

5.2.3 triw matrix

Our last example consists of the matrix A of size \(n\times n=20\times 20\) with \(-\,1\) on the main diagonal and \(-\,4\) on the remaining upper triangular part (see [2, Experiment 6]). It corresponds to the MATLAB matrix -gallery(’triw’,20,4). The non-normality of the matrix, measured as

after applying the standard shift, is about 0.5. This is in fact not much different from \(\kappa _1(B)\) for the shifted matrix B from Sect. 5.1.1, with \(b=1\). However, the sequence of \(\alpha _q(B)\) strongly decays, ranging from \(\left\| B\right\| _{1}=\alpha _1(B)=76\) to \(\alpha _8(B)\approx 16.29\). The initial vector has components \(v_j=\cos j\), for \(j=1,2,\ldots ,n\). The matrix B is nilpotent and therefore it is preferable to have enough zeros among the interpolation points and keep them in the leading positions. The results can be found in Table 17. Taylor truncated series and interpolation at Leja–Hermite points (both real and complex) give the same number of actual iterations, which is the minimum required by the exit criterion in order do discover the nilpotency of the matrix. Also in this case, interpolation at pure complex conjugate Leja points as in [7] would lead to a very bad result, as indicated in the last row of Table 17.

6 Discussion and conclusions

In this paper we aim to create a common framework for all those researchers who are approaching the problem of approximating the action of the matrix exponential \(\exp (A)v\) through a polynomial. In fact, the effort spent to generalise and to make the backward error analysis tool so elastic and customisable can be particularly useful to people working on the matrix exponential. To this purpose, we developed a SageMath package called expbea which is publicly available at https://bitbucket.org/expleja/expbea. This code can be used for the analysis of interpolation polynomials for the exponential function, the computation of additional \(\theta _m\) values for other tolerances or help tailor existing methods to a specific example for best performance.

In this work we consider classical Taylor approximation and several examples of polynomial interpolation. Besides the already known Leja points, we present Leja–Hermite points. They combine key features of both Taylor and Leja approximation. In addition, we describe a new way to extend the Leja ordering for repeated interpolation points. In particular, the Leja–Hermite interpolation has never been used before for matrix exponential approximation. In the following we summarize the results from the presented examples.

-

1.

Interpolation at real Leja–Hermite points allows to fully use the values \(\alpha _q(B)\). Moreover, the estimated cost is not larger than the cost predicted by Taylor approximation (compare Table 1 with Tables 4 and 5). For complex conjugate Leja–Hermite points, however, it is not possible to improve the boundaries \(\theta _m\) of the Taylor approximation. Anyway, choice (4.5) allows to use the values \(\alpha _q(B)\). Furthermore, it can outperform Taylor approximation, both in terms of total number of iterations and global accuracy. This is true whenever the spectrum of B has a vertical skinny shape and \(\left\| B\right\| _{1}\) is a good estimate of the spectral radius (see Tables 12 and 13).

-

2.

The effectiveness of sets of interpolation points containing some zeros depends more on the behavior of the sequence of values \(\alpha _q(B)\), than on the normality of the matrix B.

-

If the sequence \(\{\alpha _q(B)\}_q\) is flat, then the sets of Leja and Leja–Hermite points with reordering (both real and complex conjugate) lead in general to less iterations, thanks to the possibility of early termination. In fact, see Tables 8, 11, 12, and 13 (normal matrices), and Tables 9 and 10 (non-normal matrices), respectively. Of course, the choice between real or complex interpolation points should be based on some information about the spectrum of B. If not available from theory, it can be recovered in a cheap way by Gershgorin disks (see [7]).

-

If the sequence \(\{\alpha _q(B)\}_q\) strongly decreases, Taylor series approximation or interpolation at Leja–Hermite points lead to the best results (see Table 17). In this case the use of pure Leja points results in a much larger number of iterations.

-

In the intermediate cases, real Leja–Hermite reordered point interpolation pays off (see Table 14). Concerning complex points, Leja–Hermite point interpolation avoids a quite huge amount of wasted iteration with respect to the use of pure complex conjugate Leja points (see Table 16). If the sequence of \(\{\alpha _q(B)\}_q\) values is considered too slowly decreasing, the choice (4.3) of Leja–Hermite points which maximizes the value \(\theta _m\) allows to reduce the maximum number of iterations and, in our example, also the actual number (see Table 15).

-

We therefore conclude that interpolation at Leja–Hermite points, being an extension of both Taylor truncated series and Leja point interpolation, can be considered a reliable, powerful and flexible method for the approximation of the matrix exponential applied to a vector. In fact, it has an a priori backward error estimate, a cost proportional to the degree of approximation without requiring the solution of linear systems, and the possibility to select proper sets of interpolation depending on some readly available information on the matrix.

References

Al-Mohy, A.H., Higham, N.J.: A new scaling and squaring algorithm for the matrix exponential. SIAM J. Matrix Anal. Appl. 31(3), 970–989 (2009)

Al-Mohy, A.H., Higham, N.J.: Computing the action of the matrix exponential with an application to exponential integrators. SIAM J. Sci. Comput. 33(2), 488–511 (2011)

Bergamaschi, L., Caliari, M., Vianello, M.: Efficient approximation of the exponential operator for discrete 2D advection–diffusion problems. Numer. Linear Algebra Appl. 10(3), 271–289 (2003)

Berrut, J.P., Trefethen, N.: Barycentric Lagrange Interpolation. SIAM Rev. 46(3), 501–517 (2004)

Bos, L.P., Caliari, M.: Application of modified Leja sequences to polynomial interpolation. Dolomites Res. Notes Approx. 8, 66–74 (2015)

Caliari, M.: Accurate evaluation of divided differences for polynomial interpolation of exponential propagators. Computing 80(2), 189–201 (2007)

Caliari, M., Kandolf, P., Ostermann, A., Rainer, S.: The Leja method revisited: backward error analysis for the matrix exponential. SIAM J. Sci. Comput. 38(3), A1639–A1661 (2016)

Caliari, M., Ostermann, A., Rainer, S.: Meshfree exponential integrators. SIAM J. Sci. Comput. 35(1), A431–A452 (2013)

Caliari, M., Vianello, M., Bergamaschi, L.: Interpolating discrete advection–diffusion propagators at Leja sequences. J. Comput. Appl. Math. 172(1), 79–99 (2004)

Deadman, E.: Estimating the condition number of \(f(A)b\). Numer. Algorithms 70, 287–308 (2015)

Druskin, V.L., Knizhnerman, L.A.: Two polynomial methods of calculating functions of symmetric matrices. USSR Comput. Math. Math. Phys. 29(6), 112–121 (1989)

Fischer, T.M.: On the algorithm by Al-Mohy and Higham for computing the action of the matrix exponential: a posteriori roundoff error estimation. Linear Algebra Appl. 531, 141–168 (2017)

Higham, N.J.: Functions of Matrices. SIAM, Philadelphia (2008)

Higham, N.J., Relton, S.D.: Higher order Fréchet derivatives of matrix functions and the level-2 condition number SIAM. J. Matrix Anal. Appl. 35(3), 1019–1037 (2014)

Higham, N.J., Tisseur, F.: A block algorithm for matrix 1-norm estimation, with an application to 1-norm pseudospectra. SIAM J. Matrix Anal. Appl. 21(4), 1185–1201 (2000)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Kalantari, B.: Generalization of Taylor’s theorem and Newton’s method via a new family of determinantal interpolation formulas and its applications. J. Comput. Appl. Math. 126, 287–318 (2000)

Luan, V.T., Ostermann, A.: Parallel exponential Rosenbrock methods. Comput. Math. Appl. 71, 1137–1150 (2016)

Martínez, A., Bergamaschi, L., Caliari, M., Vianello, M.: A massively parallel exponential integrator for advection–diffusion models. J. Comput. Appl. Math. 231(1), 82–91 (2009)

McCurdy, A.: Accurate computation of divided differences. Technical report, University of California—ERL (1980)

McCurdy, A., Ng, K.C., Parlett, B.N.: Accurate computation of divided differences of the exponential function. Math. Comput. 43(168), 501–528 (1984)

Moret, I., Novati, P.: An interpolatory approximation of the matrix exponential based on Faber polynomials. J. Comput. Appl. Math. 131, 361–380 (2001)

Novati, P.: A polynomial method based on Fejèr points for the computation of functions of unsymmetric matrices. Appl. Numer. Math. 44, 201–224 (2003)

Opitz, V.G.: Steigungsmatrizen. Z. Angew. Math. Mech. 44, T52–T54 (1964)

Reichel, L.: Newton interpolation at Leja points. BIT 30, 332–346 (1990)

Schaefer, M.J.: A polynomial based iterative method for linear parabolic equations. J. Comput. Appl. Math. 29, 35–50 (1990)

Stetekluh, J.: http://stetekluh.com/NewtonPoly.html. Accessed 4 Oct 2017

Tal-Ezer, H.: High degree polynomial interpolation in Newton form. SIAM J. Sci. Stat. Comput. 12(3), 648–667 (1991)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Daniel Kressner.

Rights and permissions

About this article

Cite this article

Caliari, M., Kandolf, P. & Zivcovich, F. Backward error analysis of polynomial approximations for computing the action of the matrix exponential. Bit Numer Math 58, 907–935 (2018). https://doi.org/10.1007/s10543-018-0718-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-018-0718-9