Abstract

Twin support vector machine (TSVM) has been widely applied to classification problems. But TSVM is sensitive to outliers and is not efficient enough to realize feature selection. To overcome the shortcomings of TSVM, we propose a novel sparse twin support vector machine with the correntropy-induced loss (C-STSVM), which is inspired by the robustness of the correntropy-induced loss and the sparsity of the \(\ell _1\)-norm regularization. The objective function of C-STSVM includes the correntropy-induced loss that replaces the hinge loss, and the \(\ell _1\)-norm regularization that can make the decision model sparse to realize feature selection. Experiments on real-world datasets with label noise and noise features demonstrate the effectiveness of C-STSVM in classification accuracy and confirm the above conclusion further.

This work was supported in part by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant No. 19KJA550002, by the Six Talent Peak Project of Jiangsu Province of China under Grant No. XYDXX-054, by the Priority Academic Program Development of Jiangsu Higher Education Institutions, and by the Collaborative Innovation Center of Novel Software Technology and Industrialization.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Recently, feature selection has been a hot area of research to address the curse of dimensionality. Feature selection refers to select an optimal subset of original features, which retains valuable features and eliminates redundant features [14]. This procedure can reduce the complexity of processing data and improve the prediction performance [2]. Many methods about feature selection have been proposed, which can be broadly classified into three types: filter, wrapper, and embedded methods [6, 17, 29]. In this paper, we focus on feature selection using support vector machine (SVM) that is the most representative one of embedded methods.

SVM, based on the principle of structural risk minimization and the theory of VC dimension, has been used to solve classification and regression problems and has a broad variety of application in real-word tasks [1, 3, 13, 16, 19, 24, 27]. For a binary classification problems, SVM aims at seeking a separating hyperplane to maximize the margin between positive and negative samples, which has excellent generalization performance [10]. Unfortunately, SVM has a high-computational complexity because it needs to solve an entire quadratic programming problem (QPP).

Lately, twin support vector machine (TSVM) has been proposed inspired by the idea of SVM [15]. Compared to SVM, TSVM attempts to find two hyperplanes by solving a pair of smaller QPPs for a binary classification task. Thus, TSVM works faster than SVM in theory and becomes a popular classifier. Many variants of TSVM have been proposed, such as twin bounded support vector machine (TBSVM) [20], least squares twin support vector machine (LSTSVM) [18], v-projection twin support vector machien (v-PTSVM) [9], locality preserving projection least squares twin support vector machine (LPPLSTSVM) [8], and new fuzzy twin support vector machine (NFTSVM) [7]. However, these methods do not have sparse representative models or cannot implement feature selection.

In order to improve the feature selection ability or the sparseness performance of TSVM-like methods, many scholars have proposed same improved methods. For example, \(\ell _p\)-norm least square twin support vector machine (\(\ell _p\)-LSTSVM) was proposed by Zhang et al. [30], which can realize feature selection by introducing an adaptive learning procedure with the \(\ell _p\)-norm (\(0< p <1\)). Sparse non-parallel support vector machine (SNSVM) was proposed in [23]. By replacing the hinge loss with both the \(\epsilon \)-insensitive quadratic loss and the soft margin quadratic loss, SNSVM has better sparseness performance than TSVM. Tanveer [22] proposed a new linear programming twin support vector machines (NLPTSVM) that uses the \(\ell _1\)-norm regularization, distance and loss term, which causes the robustness and sparsity of NLPTSVM.

In real applications, data often contains noises or outliers, which would have a negative effect on the generalization performance of the learned model. To remedy it, Xu et al. [26] proposed a novel twin support vector machine (Pin-TSVM) inspired by the pinball loss. Pin-TSVM has favorable noise insensitivity, but it does not consider the sparseness of model. That is to say, the feature selection performance of Pin-TSVM is poor.

In this paper, we propose a novel sparse twin support vector machine with the correntropy-induced loss, called C-STSVM. The correntropy-induced loss function is a smooth, nonconvex and bounded loss, which was designed for both classification and regression tasks in [21]. For a classification task, the correntropy-induced loss measures the similarity between the prediction value and the true value from the perspective of correntropy. In addition, we know that the \(\ell _1\)-norm regularization can induce a sparse model from [28] and [31]. C-STSVM is to minimize three terms: the distance term, the correntropy-induced loss term and the \(\ell _1\)-norm regularization term. In doing so, we expect that C-STSVM have the ability to perform feature selection and the robustness to outliers. To find the solution to C-STSVM in the primal space, we design an alternating iterative method with the help of the half-quadratic (HQ) optimization. Experimental results verify the validity of the these theories.

The paper is organized as follows. Section 2 dwells on the proposed C-STSVM, including the introduction of the correntropy-induced loss and the description of the optimization problems and the solutions of C-STSVM. Section 3 discusses experimental results. The last section contains the conclusions.

2 Sparse Twin Support Vector Machine with Correntropy-Induced Loss

In this paper, we consider a binary classification problem and define a normal training set \(X = \{(\mathbf {x}_{1},y_{1}), \cdots ,(\mathbf {x}_{n_{1}},y_1),(\mathbf {x}_{n_{1}+1},y_2),\cdots ,(\mathbf {x}_{n_{1}+n_{2}},y_{2})\}\) where \(y_1=1\) and \(y_2=-1\) are the positive and negative labels, respectively. Let \(\mathbf{X}_1 = \left[ \mathbf{x}_1,\cdots ,\mathbf{x}_{n_1}\right] ^T \in \mathbb {R}^{{n_1}\times m}\) be the positive sample matrix, \(\mathbf{X}_2 = \left[ \mathbf{x}_{n_1+1},\cdots ,\mathbf{x}_{n_1+n_2}\right] \in \mathbb {R}^{{n_2}\times m}\) be the negative sample matrix, \(\mathbf{e}_1\) and \(\mathbf{e}_2\) be vectors of all ones with appropriate size.

2.1 Correntropy-Induced Loss

For any sample \((\mathbf{x}_i,y_i)\) in the given training set X, the predicted value of \(\mathbf{x}_i\) is defined as \(f(\mathbf{x}_i)\). A loss function can be used to measure the difference between the predicted value \(f(\mathbf{x}_i)\) and the true value \(y_i\). Different loss functions would result in various learners.

Here, we introduce the correntropy-induced loss function. Correntropy is a nonlinear measure of the similarity between two random variables. Inspired by that, Singh [21] presented the correntropy-induced loss function that is to maximize the similarity between the predicted values and the true values for classification tasks. The correntropy-induced loss function has the form:

where \(\beta =[1-\exp (-\frac{1}{2\sigma ^2})]^{-1}\) and \(\sigma >0\) is the parameter. Note that \(L_{C}(0)=1\) when \(y_if(\mathbf{x}_i)=0\).

As an mean square error in reproducing kernel Hilbert space, the correntropy-induced loss can further approximate a transition from like the \(\ell _2\)-norm to like the \(\ell _0\)-norm. The curve of the correntropy-induced loss is shown in Fig. 1. We can see that the correntropy-induced loss is smooth, non-convex and bounded. For its boundedness, the correntropy-induced loss is robust to outliers.

2.2 Optimization Problems

Similar to TSVM [15], the aim of C-STSBM is to seek two optimal hyperplanes: positive and negative ones, where the positive hyperplane is closer to the positive samples and as far as possible from the negative samples, and the same goes for the negative hyperplane. The two optimal hyperplanes are defined by the following discrimination functions:

where \(\mathbf{w}_1\) and \(\mathbf{w}_2\) are the weight vectors for positive and negative classes, respectively, \(b_1\) and \(b_2\) are the thresholds for two classes, respectively. To represent these weight vectors and thresholds, we construct four non-negative vectors and four non-negative variables, which is \(\varvec{\beta }^*_+\), \(\varvec{\beta }_+^{ }\), \(\varvec{\beta }_-^{*}\), \(\varvec{\beta }_-\), \(\gamma ^*_+\), \(\gamma _+\), \(\gamma _-^*\) and \(\gamma _-^{ }\), and let

Then, (2) can be rewritten as

C-STSVM can be described as the following pair of QPPs:

where \(C_i>0,i=1,2,3,4\) are parameters chosen a priori and \(||\cdot ||_i,~i=1,2\) is the \(\ell _i\)-norm. The first term of the first QPP in (5) is to minimize the distance between the outputs of positive samples and the hyperplane \(f_1(\mathbf{x}) = 0\). The second term is the \(\ell _1\)-norm regularization term that can reduce a sparseness solution of C-STSVM. The third term is to minimize the sum of correntropy-induced loss function, which makes the negative samples as far as from the positive hyperplane. Note that QPPs in (5) are similar to each other in form. Thus, for the second QPP in (5), we have a similar explanation.

We denote the first two terms of QPPs in (5) by

The third term in the first QPP of (5) can be expressed as:

Let

Then minimizing (7) is identical to maximizing \(L_1(\varvec{\beta }^*_+, \varvec{\beta }_+^{ }, \gamma ^*_+, \gamma _+^{ })\).

Thus, we can represent the first QPP in (5) as

Similarly, the second QPP in (5) can be rewritten as follows:

where

2.3 Solutions

In this subsection, we turn the optimization problems (8) and (9) into two HQ optimization ones and use the alternating iterative method to find their solutions.

We first define two auxiliary variables \(\mathbf {v}=[v_1,\cdots ,v_{n_2}]^T\) and \(\mathbf {v}'=[v'_1,\cdots ,v'_{n_1}]^T\), where \(v_i<0\) and \(v'_i<0\), and then construct two convex functions

Because each \(g(v_i),i=1,\cdots ,n_2\) and \(g(v'_i),i=1,\cdots ,n_1\) is independent of others, we analyze each \(g(v_i)\) and \(g(v'_i)\) separately.

Based on [4, 5], we can derive the conjugate function \(g^*(u_i)\) and \(g^*(u'_i)\) of \(g(v_i)\) and \(g(v'_i)\) respectively:

where \(v_i\) and \(v'_i\) are the optimization variables of the right hand of (11) respectively, and the supremums can be achieved at \(v_i=-exp(-u_i)\) and \(v'_i=-exp(-u'_i)\) respectively. Substituting \(v_i=-exp(-u_i)\) and \(v'_i=-exp(-u'_i)\) into (11), we have

Hence, we have the conjugate function \(G_1^*(\mathbf{u})\) and \(G_2^*(\mathbf{u}')\) of \(G_1(\mathbf{v})\) and \(G_2(\mathbf{v}')\) respectively, their forms as

where \(\sup \{\cdot \}\) represents the upper bounded of a variable.

Let \(u_i=\frac{\left( 1+(\varvec{\beta }^*_+-\varvec{\beta }_+^{ })^T\mathbf {x}_{2i}+(\gamma _+^* -\gamma _+^{ })\right) ^2}{2\sigma ^2}\), \(u'_i=\frac{\left( 1-(\varvec{\beta }^*_--\varvec{\beta }_-^{ })^T\mathbf {x}_{1i}-(\gamma _+^* -\gamma _+^{ })\right) ^2}{2\sigma ^2}\), (13) can be described as

where \(h(v_i|\varvec{\beta }^*_+, \varvec{\beta }_+^{ }, \gamma ^*_+, \gamma _+^{ })=\left( v_i \frac{\left( 1+(\varvec{\beta }^*_+-\varvec{\beta }_+^{ })^T\mathbf {x}_{2i}+(\gamma _+^* -\gamma _+^{ })\right) ^2}{2\sigma ^2} -g(v_i)\right) \), \(i=1,\cdots ,n_2\) and \(h(v'_i|\varvec{\beta }^*_-, \varvec{\beta }_-^{ }, \gamma ^*_-, \gamma _-^{ })=\left( v'_i \frac{\left( 1-(\varvec{\beta }^*_--\varvec{\beta }_-^{ })^T\mathbf {x}_{1i}-(\gamma _+^* -\gamma _+^{ })\right) ^2}{2\sigma ^2} -g(v' _i)\right) \), \(i=1,\cdots ,n_1\).

Obviously, we have

where the supremums of \(L_1(\varvec{\beta }^*_+, \varvec{\beta }_+^{ }, \gamma ^*_+, \gamma _+)\) and \(L_2(\varvec{\beta }^*_-, \varvec{\beta }_-^{ }, \gamma ^*_-, \gamma _-^{ })\) are achieved at

respectively. Thus, the optimization problems (8) and (9) with four variables can be turned to a HQ optimization problem with five variables:

From (17), we use the alternating iterative method to solve the optimization problem (17).

First, given \((\varvec{\beta }^*_+, \varvec{\beta }_+^{ }, \gamma ^*_+, \gamma _+^{ })\) and \((\varvec{\beta }^*_-, \varvec{\beta }_-^{ }, \gamma ^*_-, \gamma _-^{ })\) to optimize \(\mathbf {v}\) and \(\mathbf {v}'\) respectively. So that, (17) can be reduced to two independent functions with only respect to \(v_i\) or \(v'_i\):

Second, given \(\mathbf {v}\) and \(\mathbf {v}'\) to optimize \((\varvec{\beta }^*_+, \varvec{\beta }_+^{ }, \gamma ^*_+, \gamma _+^{ })\) and \((\varvec{\beta }^*_-, \varvec{\beta }_-^{ }, \gamma ^*_-, \gamma _-^{ })\) respectively. The optimization problems (17) can be rewritten as:

To solve the optimization problem (19) easily, we rewrite it as follows:

where \(\varvec{\upxi }=[\xi _1,\cdots ,\xi _{n_2}]^T\) and \(\varvec{\upxi }'=[\xi '_1,\cdots ,\xi '_{n_1}]^T\) are the slack variables, \(\xi _i=1+(\varvec{\beta }^*_+-\varvec{\beta }_+^{ })^T\mathbf {x}_{2i}+(\gamma _+^* -\gamma _+^{ })\), \(i=1,\cdots ,n_2\), \(\xi '_i=1-(\varvec{\beta }^*_--\varvec{\beta }_-^{ })^T\mathbf {x}_{1i}-(\gamma _-^* -\gamma _-^{ })\), \(i=1,\cdots ,n_1\), \(C'_2=C_2/\left( 2n_2 \sigma ^2 \right) \), \(C'_4=C_4/\left( 2n_1 \sigma ^2 \right) \) and \(\varvec{\varOmega }=\frac{1}{n_2}diag(-\mathbf {v})\in \mathbb {R}^{n_2\times n_2}\), \(\varvec{\varOmega }'=\frac{1}{n_1}diag(-\mathbf {v}')\in \mathbb {R}^{n_1\times n_1}\).

Further, let \(\varvec{\upalpha }_1=\left[ \begin{array}{cccc} {\varvec{\beta }_+^{*T}}, &{}{\varvec{\beta }_+^T},&{}\gamma _+^*,&{}\gamma _+^{ } \\ \end{array} \right] ^T\) , \(\varvec{\upalpha }_2=\left[ \begin{array}{ccccc} {\varvec{\beta }_-^{*T}} , &{}{\varvec{\beta }_-^T}, &{} \gamma _-^* , &{} \gamma _-^{ }\\ \end{array} \right] ^T \) , \(\varvec{\zeta }_1=\left[ \begin{array}{ccccc} C_1 \mathbf{1}_{m}^T, &{} C_1 \mathbf{1}_{m}^T, &{} C_1, &{} C_1\\ \end{array}\right] ^T\) , \(\varvec{\zeta }_2 = \left[ \begin{array}{ccccc} C_3 \mathbf{1}_{m}^T ,&{} C_3 \mathbf{1}_{m}^T ,&{} C_3, &{}C_3 \\ \end{array} \right] ^T\) , \(\mathbf{M}_1=[\mathbf {X}_2, -\mathbf {X}_2, 1, -1]\) , \(\mathbf{M}_2=[-\mathbf {X}_1,\mathbf {X}_1,-1,1]\) , and

Then substitute the equality constraints into the objective function in (20), we can derive that

where \(\mathbf{H}_1=2\lambda '_1\mathbf{M}_1\varvec{\varOmega }\mathbf{M}_1+\mathbf{Q}_1\), \(\mathbf{H}_2=(2\lambda '_2\mathbf{M}_2^T\varvec{\varOmega }'\mathbf{M}_2+\mathbf{G})\), \(\mathbf {f}_1^T=2C'_21^T\varvec{\varOmega }\mathbf{M}_1+\varvec{\zeta }_1^T\), and \(\mathbf{f}_2^T=2C'_41^T\varvec{\varOmega }'\mathbf{M}_2+\varvec{\zeta }_2^T\).

Once we have the values of \(\varvec{\upalpha }_1\) and \(\varvec{\upalpha }_2\), the hyperplanes \(f_1(\mathbf{x})=0\) and \(f_2(\mathbf{x})=0\) can be obtained. Hence, given a new sample \(\mathbf{x}\), its class \(i (i=1,2)\) is

3 Numerical Experiments

In this section, we carry out experiments to testify the validity of the novel algorithm C-STSVM. We compare C-STSVM with the state-of-the-art methods, including SVM [10], TSVM [15], TBSVM [20], KCC [25], Pin-TSVM [26], LSTSVM [18], \(\ell _p\)LSTSVM [30], NLPTSVM [22] and SNSVM [23], where SVM, TSVM, and TBSVM are three traditional algorithms, KCC is a linear classifier with the correntropy-induced loss, Pin-TSVM and LSTSVM introduce the pinball loss and the square loss into TSVM, respectively, and the others were proposed for obtaining sparse models.

For all SVM-like methods, the linear kernel or the linear version is adopted. All experiments are implemented in MATLAB R2016a on Windows 10 running on a PC with a 3.0 GHz Intel Core and 8 GB of memory.

3.1 Data Description and Experimental Setting

We carry out experiments on nine UCI datasets [11]: Australian (690 samples and 14 features), Breast (288 samples and 9 features), German (1000 samples and 24 features), Heart (270 samples and 13 features), Pima (768 samples and 8 features), Sonar (208 samples and 60 features), Tic\(\_\)tac\(\_\)toe (958 samples and 9 features), Vote (435 samples and 16 features) and Wdbc (569 samples and 30 features).

The repeated double cross validation [12] was used to select parameters and give the final average result. The five-fold cross validation method is used in twice. First, each dataset is randomly divided into five parts, where one part is taken as the test set at a time and the remaining four parts are used as the calibration set. Next, each calibration set is randomly split into five parts, where we take four parts as training set and the rest part as the validation set. In this process, we train models on the training set using the regularization parameters in the range of \(\{2^{-3},\cdots , 2^3\}\) one by one, and apply the trained-model to the validation set to select optimal parameters. Then we train a model on the calibration set using the optimal parameters and apply the trained-model to the test set to obtain the result.

This process is repeated five times, and the average results of five trials are reported. In addition, the parameter \(\sigma \) in both KCC and C-STSVM is fixed at \(\sigma =0.5\) empirically.

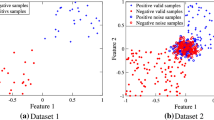

3.2 Robustness to Outliers

In order to demonstrate the robustness to outlier of C-STSVM clearly, we test our method C-STSVM and the other comparison methods on the nine UCI datasets with \(0\%\) and \(10\%\) label noise.

Table 1 shows the results for the original UCI datasets and the best classification accuracy is highlighted in bold. It can be seen that C-TSVM has satisfying results. The C-TSVM method obtains the highest classification accuracy on five out of nine datasets, while SVM obtain the best classification accuracy on Heart and Wdbc dataset, and \(\ell _p\)LSTSVM and Pin-TSVM has the best performance on Tic_tac_toe and Sonar dataset respectively. Then, we corrupt the label of each calibration set. For each calibration set, the ratio of label noise is \(10\%\). In this case, the average accuracy and standard deviation are presented on Table 2. As shown in Table 2, C-TSVM has the best performance on six out of nine datasets in terms of classification accuracy. TSVM and Pin-TSVM has the best accuracy on Australian and Sonar dataset respectively.

From the comparison between Table 1 and 2, we can state the following points: (1) The accuracy of C-STSVM has a small change by increasing the number of label noise and has the best classification accuracy on the most of datasets; (2) The accuracy of Pin-TSVM on German and Pima dataset have a substantial reduction by increasing the number of label noise; (3) SVM has the best accuracy on two out of original nine datasets. However, as the number of label noise increases, its classification advantage does not seem to be maintained. (4) The performances of TSVM, TBSVM, KCC, LSTSVM, \(\ell _p\)LSTSVM, NLPTSVM and SNSVM method are average. In summary, C-STSVM has the better robustness and classification accuracy to outlier.

3.3 The Ability of Feature Selection

We add 50 noise features to each UCI dataset to validate the ability of feature selection of these methods, where the last 50 features are noise features and the others are valid features. These noise features are drawn from the Gaussian distribution with 0 mean and 0.01 variance. Table 3 summarizes the results of numerical experiments. It is easy to see that the accuracy of C-STSVM is significantly better than other methods on most of datasets. Pin-TSVM and NLPTSVM algorithm has the best on Sonar and Wdbc dataset respectively, followed by C-STSVM. Compare Table 1 and Table 3, we can find that the increase in noise features does not negatively affect classification accuracy of C-STSVM, and it still maintains better classification performance than other methods.

In order to clearly show the feature selection performance, we calculated the ratio of sum of the absolute values of the \(\mathbf{w}_1\) (\(\mathbf{w}_2\)) corresponding to the valid features to the sum of the absolute values of total \(\mathbf{w}_1\) (\(\mathbf{w}_2\)) and Table 4 shows the results of some TSVM-like methods. In theory, the greater the contribution of a feature to the classification result, the greater the weight value corresponding to this feature. Hence, for a weight value obtained by training some algorithm, the greater the proportion of weight values corresponding to the valid features, the better the feature selection performance of this algorithm. In Table 4, the highest proportion is shown in bold figures. Table 4 depicts NLPTSVM and C-STSVM has the best feature selection performance on the most of datasets, followed by Pin-TSVM. But the accuracy of C-STSVM is higher than NLPTSVM and Pin-TSVM. The feature selection performance of TSVM, TBSVM, LSTSVM, \(\ell _p\)LSTSVM, and SNSVM are unsatisfactory. The results in Table 3 and Table 4 undoubtedly prove that C-STSVM is significantly better than other compared methods in feature selection performance and the classification accuracy.

4 Conclusion

This paper proposes a novel sparse twin support vector machine with the correntropy-induced loss (C-STSVM). Because the correntropy-induced loss has favorable robustness to outliers and the \(\ell _1\)-norm regularization can induce a sparse model, we expect that C-STSVM has a satisfactory robustness to outliers and a sparseness solution to implement feature selection. To validate the robustness of C-STSVM, we carry out experiments on nine UCI datasets with \(0\%\) and \(10\%\) label noise. To validate the performance of C-STSVM to select features, we conduct experiments on nine UCI datasets with 50 noise features. Experiments results confirm that C-STSVM is significantly better than other compared methods in robustness to outliers, feature selection performance, and classification accuracy.

Since C-STSVM achieves a good performance in the binary classification tasks, we plan to extend C-STSVM to regression estimation and multi-class classification tasks in future.

References

Adankon, M.M., Cheriet, M.: Model selection for LS-SVM: application to handwriting recognition. Pattern Recogn. 42(12), 3264–3270 (2009)

Jain, A.K., Robert, P.W., Duin, J.M.: Statistical pattern recognition: a review. IEEE Trans. Pattern Anal. Mach. Intell. 22(1), 4–37 (2000)

Arjunan, S.P., Kumar, D.K., Naik, G.R.: A machine learning based method for classification of fractal features of forearm sEMG using twin support vector machines. In: Proceedings of the IEEE Annual International Conference of the Engineering in Medicine and Biology Society, pp. 4821–4824 (2010)

Borwein, J., Lewis, A.: Convex Analysis and Nonlinear Optimization: Theory and Examples, 2nd edn. Springer, New York (2006). https://doi.org/10.1007/978-0-387-31256-9

Boyd, S.P., Vandenberghe, L.: Convex Optimization. Cambridge University, Cambridge (2004)

Chandrashekar, G., Sahin, F.: A survey on feature selection methods. Comput. Electr. Eng. 40, 16–28 (2014)

Chen, S., Wu, X.: A new fuzzy twin support machine for pattern classification. Int. J. Mach. Learn. Cybernet. 9, 1553–1564 (2018)

Chen, S., Wu, X., Xu, J.: locality preserving projection least squares twin support vector machine for pattern classification. Pattern Anal. Appl. 23, 1–13 (2020)

Chen, W., Shao, Y., Li, C., Liu, M., Wang, Z., Deng, N.: \( v\)-projection twin support vector machine for pattern classification. Neurocomputing 376, 10–24 (2020)

Cristianini, N., Shawe-Taylor, J.: An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods. Cambridge University, Cambridge (2000)

Dheeru, D., Karra Taniskidou, E.: UCI machine learning repository (2017). http://archive.ics.uci.edu/ml

Filzmoser, P., Liebmann, B., Varmuza, K.: Repeated double cross validation. J. Chemom. 23(4), 160–171 (2008)

Hua, X., Ding, S.: Locality preserving twin support vector machines. J. Comput. Res. Dev. 51(3), 590–597 (2014)

Isabelle Guyon, A.E.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003)

Jayadeva, Khemchandani, R., Chandra, S.: Twin support vector machine for pattern classification. IEEE Trans. Pattern Anal. Mach. Intell. 29(5), 905–910 (2007)

Khan, N., Ksantini, R., Ahmad, I., Oufama, B.: A novel SVM+NDA model for classification with an application to face recognition. Pattern Recogn. 45(1), 66–79 (2012)

Kohavi, R., John, G.H.: Wrappers for feature subset selection. Artif. Intell. 97, 273–324 (1997). https://doi.org/10.1016/S0004-3702(97)00043-X

Kumar, M.A., Gopall, M.: Least squares twin support vector machine for pattern classification. Expert Syst. Appl. 36, 7535–7543 (2009)

Liu, M., Dai, B., Xie, Y., Ya, Z.: Improved GMM-UBM/SVM for speaker verification. In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 1, pp. 1925–1928 (2006)

Shao, Y., Zhang, C., Wang, X., Deng, N.: Improvements on twin support vector machine. IEEE Trans. Neural Netw. 22(6), 962–968 (2011)

Singh, A., Principe, J.C.: A loss function for classification based on a robust similarity metric. In: Proceedings of IEEE International Joint Conference on Neural Network, pp. 1–6 (2010)

Tanveer, M.: Robust and sparse linear programming twin support vector machines. Cogn. Comput. 7(1), 137–149 (2015)

Tian, Y., Ju, X., Qi, Z.: Efficient sparse nonparallel support vector machines for classification. Neural Comput. Appl. 24(5), 1089–1099 (2013). https://doi.org/10.1007/s00521-012-1331-5

Wang, X., Wang, T., Bu, J.: Color image segmentation using pixel wise support vector machine classification. Pattern Recogn. 44(4), 777–787 (2011)

Xu, G., Hu, B., Principe, J.C.: Robust C-loss kernel classifiers. IEEE Trans. Neural Netw. Learn. Syst. 29(3), 510–522 (2018)

Xu, Y., Yang, Z., Pan, X.: A novel twin support-vector machine with pinball loss. IEEE Trans. Neural Netw. Learn. Syst. 28(2), 359–370 (2017)

Xue, Z., Ming, D., Song, W., Wan, B., Jin, S.: Infrared gait recognition based on wavelet transform and support vector machine. Pattern Recogn. 43(8), 2904–2910 (2010)

Zhang, L., Zhou, W.: On the sparseness of 1-norm support vector machines. Neural Netw. 23(3), 373–385 (2010)

Zhang, X., Wu, G., Dong, Z., Curran, C.: Embedded feature-selection support vector machine for driving pattern recognition. J. Franklin Inst. 352, 669–685 (2015)

Zhang, Z., Zhen, L., Deng, N., Tan, J.: Sparse least square twin support vector machine with adaptive norm. Appl. Intell. 41(4), 1097–1107 (2014). https://doi.org/10.1007/s10489-014-0586-1

Zhu, J., Rosset, S., Hastie, T., Tibshirani, R.: 1-norm support vector machines. In: Proceedings of the 16th International Conference on Neural Information Processing Systems, vol. 16, no. 1, pp. 49–56 (2003)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zheng, X., Zhang, L., Yan, L. (2020). Feature Selection Using Sparse Twin Support Vector Machine with Correntropy-Induced Loss. In: Li, G., Shen, H., Yuan, Y., Wang, X., Liu, H., Zhao, X. (eds) Knowledge Science, Engineering and Management. KSEM 2020. Lecture Notes in Computer Science(), vol 12274. Springer, Cham. https://doi.org/10.1007/978-3-030-55130-8_38

Download citation

DOI: https://doi.org/10.1007/978-3-030-55130-8_38

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-55129-2

Online ISBN: 978-3-030-55130-8

eBook Packages: Computer ScienceComputer Science (R0)