Abstract

We approach the task of computing a carefully synchronizing word of minimum length for a given partial deterministic automaton, encoding the problem as an instance of SAT and invoking a SAT solver. Our experimental results demonstrate that this approach gives satisfactory results for automata with up to 100 states even if very modest computational resources are used.

Supported by the Ministry of Science and Higher Education of the Russian Federation, projects no. 1.580.2016 and 1.3253.2017, and the Competitiveness Enhancement Program of Ural Federal University.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Nondeterministic automaton

- Deterministic automaton

- Partial deterministic automaton

- Careful synchronization

- Carefully synchronizing word

- SAT

- SAT solver

1 Introduction

A nondeterministic finite automaton (NFA) is a triple \(\langle Q,\varSigma ,\delta \rangle \), where Q and \(\varSigma \) are finite non-empty sets called the state set and the input alphabet respectively, and \(\delta \) is a subset of \(Q\times \varSigma \times Q\). The elements of Q and \(\varSigma \) are called states and letters, respectively, and \(\delta \) is referred to as the transition relationFootnote 1. For each pair \((q,a)\in Q\times \varSigma \), we denote by \(\delta (q,a)\) the subset \(\{q'\mid (q,a,q')\in \delta \}\) of Q; this way \(\delta \) can be viewed as a function \(Q\times \varSigma \rightarrow \mathcal {P}(Q)\), where \(\mathcal {P}(Q)\) is the power set of Q. When we treat \(\delta \) as a function, we refer to it as the transition function.

Let \(\varSigma ^*\) stand for the collection of all finite words over the alphabet \(\varSigma \), including the empty word \(\varepsilon \). The transition function extends to a function \(\mathcal {P}(Q)\times \varSigma ^*\rightarrow \mathcal {P}(Q)\), still denoted \(\delta \), in the following inductive way: for every subset \(S\subseteq Q\) and every word \(w\in \varSigma ^*\), we set

(Here the set \(\delta (S,v)\) is defined by the induction assumption since v is shorter than w.) We say that a word \(w\in \varSigma ^*\) is undefined at a state \(q\in Q\) if the set \(\delta (q,w)\) is empty; otherwise w is said to be defined at q.

When we deal with a fixed NFA, we suppress the sign of the transition relation, introducing the NFA as the pair \(\langle Q,\varSigma \rangle \) rather than the triple \(\langle Q,\varSigma ,\delta \rangle \) and writing \(q.w\) for \(\delta (q,w)\) and \(S.w\) for \(\delta (S,w)\).

A partial (respectively, complete) deterministic automaton is an NFA \(\langle Q,\varSigma \rangle \) such that \(|q.a|\le 1\) (respectively, \(|q.a|=1\)) for all \((q,a)\in Q\times \varSigma \). We use the acronyms PFA and CFA for the expressions ‘partial deterministic automaton’ and ‘complete deterministic automaton’, respectively.

A CFA \({\mathscr {A}}=\langle Q,\varSigma \rangle \) is called synchronizing if there exists a word \(w\in \varSigma ^*\) whose action leaves the automaton in one particular state no matter at which state in Q it is applied: \(q.w=q'.w\) for all \(q,q'\in Q\). Any w with this property is said to be a synchronizing word for the automaton.

Synchronizing automata serve as simple yet adequate models of error-resistant systems in many applied areas (system and protocol testing, information coding, robotics). At the same time, synchronizing automata surprisingly arise in some parts of pure mathematics and theoretical computer science (symbolic dynamics, theory of substitution systems, formal language theory). We refer to the survey [39] and the chapter [20] of the forthcoming ‘Handbook of Automata Theory’ for a discussion of synchronizing automata as well as their diverse connections and applications. From both applied and theoretical viewpoints, the key question is to find the optimal, i.e., shortest reset word for a given synchronizing automaton. Under standard assumptions of complexity theory, this optimization question is known to be computationally hard; see [20, Section 2] for a summary of various hardness results in the area. As it is quite common for hard problems of applied importance, there have been many attempts to develop practical approaches to the question. These approaches have been based on certain heuristics [1, 17, 18] and/or popular techniques, including (but not limiting to) binary decision diagrams [29], genetic and evolutionary algorithms [19, 32], satisfiability solvers [38], answer set programming [12], hierarchical classifiers [30], and machine learning [31].

The present authors [36, 37] have initiated an extension to the realm of NFAs of the approach of [38]. Here we consider a more restricted class, namely, that of PFAs, where studying synchronization issues appears to be much better motivated. While we follow the general strategy of and re-use some technical tricks from [36, 37], our present constructions heavily depend on the specifics of partial automata and have not been obtained via specializing the constructions of those earlier papers.

The rest of the paper is structured as follows. In Sect. 2 we describe and motivate the version of PFA synchronization that we have studied. In Sect. 3 we first outline the approach based on satisfiability solvers and then explain in detail how we encode PFAs and their synchronization problems as instances of the Boolean satisfiability problem. In Sect. 4 we provide samples of our experimental results and conclude in Sect. 5 with a brief discussion of the future work.

We have tried to make the paper, to a reasonable extent, self-contained, except for a few discussions that involve some basic concepts of computational complexity theory. These concepts can be found, e.g., in the early chapters of the textbook [28].

2 Synchronization of NFAs and PFAs

The concept of synchronization of CFAs as defined in Sect. 1 was extended to NFAs in several non-equivalent ways. The following three nowadays popular versions were suggested and analyzed in [13] in 1999 (although, in an implicit form, some of them appeared in the literature much earlier, see, e.g., [5, 11]). For \(i\in \{1,2,3\}\), an NFA \(\mathscr {A}=\langle Q,\varSigma \rangle \) is called \(D_i\)-synchronizing if there exists a word \(w\in \varSigma ^*\) that satisfies the condition \((D_i)\) from the list below:

-

\((D_{1})\): \(|q.w|=|Q.w|=1\) for all \(q\in Q\);

-

\((D_{2})\): \(q.w=Q.w\) for all \(q\in Q\);

-

\((D_{3})\): \(\bigcap _{q\in Q}q.w\ne \varnothing \).

Any word satisfying \((D_i)\) is called \(D_{i}\)-synchronizing for \(\mathscr {A}\). The definition readily yields the following properties of \(D_{i}\)-synchronizing words:

Lemma 1

-

(a)

A \(D_1\)- or \(D_3\)-synchronizing word is defined at each state.

-

(b)

A \(D_2\)-synchronizing word is either defined at each state or undefined at each state.

-

(c)

Every \(D_1\)-synchronizing word is both \(D_2\)- and \(D_3\)-synchronizing; every \(D_2\)-synchronizing word defined at each state is \(D_3\)-synchronizing.

In [37] we adapted the approach based on satisfiability solvers to finding \(D_3\)-synchronizing words of minimum length for NFAs. The first-named author used a similar method in the cases of \(D_1\)- and \(D_2\)-synchronization; results related to \(D_2\)-synchronization were reported in [36].

Yet another version of synchronization for NFAs was introduced in [15] and systematically studied in [23,24,25,26,27], which terminology we adopt. An NFA \(\mathscr {A}=\langle Q,\varSigma \rangle \) is called carefully synchronizing if there is a word \(w=a_1\cdots a_\ell \), with \(a_1,\dots ,a_\ell \in \varSigma \), that satisfies the condition (C), being the conjunction of (C1)–(C3) below:

-

(C1): the letter \(a_1\) is defined at every state in Q;

-

(C2): the letter \(a_t\) with \(1<t\le \ell \) is defined at every state in \(Q.a_1\cdots a_{t-1}\),

-

(C3): \(|Q.w|=1\).

Any w satisfying (C) is called a carefully synchronizing word (c.s.w., for short) for \(\mathscr {A}\). Thus, when a c.s.w. is applied at any state in Q, no undefined transition occurs during the course of application. Every carefully synchronizing word is clearly \(D_{1}\)-synchronizing but the converse is not true in general; moreover, a \(D_{1}\)-synchronizing NFA may admit no c.s.w.

In this paper we focus on carefully synchronizing words for PFAs. There are several theoretical and practical reasons for this.

On the theoretical side, it is easy to see that each of the conditions (C), \((D_{1})\), \((D_{3})\) leads to the same notion when restricted to PFAs. As for \(D_2\)-synchronization, if a word w is \(D_{2}\)-synchronizing for a PFA \(\mathscr {A}\), then w carefully synchronizes \(\mathscr {A}\) whenever w is defined at each state. Otherwise w is nowhere defined by Lemma 1b, and such ‘annihilating’ words are nothing but usual synchronizing words for the CFA obtained from \(\mathscr {A}\) by adding a new sink state and making all transitions undefined in \(\mathscr {A}\) lead to this sink state. Synchronization of CFAs with a sink state is relatively well understood (see [35]), and therefore, we may conclude that \(D_2\)-synchronization also reduces to careful synchronization in the realm of PFAs. On the other hand, there exists a simple transformation that converts every NFA \(\mathscr {A}=\langle Q,\varSigma \rangle \) into a PFA \(\mathscr {B}=\langle Q,\varSigma '\rangle \) such that \(\mathscr {A}\) is \(D_{3}\)-synchronizing if and only if so is \(\mathscr {B}\) and the minimum lengths of \(D_{3}\)-synchronizing words for \(\mathscr {A}\) and \(\mathscr {B}\) are equal; see [14, Lemma 8.3.8] and [16, Lemma 3]. These observations demonstrate that from the viewpoint of optimal synchronization, studying carefully synchronizing words for PFAs may provide both lower and upper bounds applicable to arbitrary NFAs and all aforementioned kinds of synchronization.

Probably even more important is the fact that careful synchronization of PFAs is relevant in numerous applications. Due to the page limit, we mention only two examples here.

In industrial robotics, synchronizing automata are widely used to design feeders, sorters, and orienters that work with flows of certain objects carried by a conveyer. The goal is achieved by making the flow encounter passive obstacles placed appropriately along the conveyer belt; see [21, 22] for the origin of this automata approach and [2] for an illustrative example. Now imagine that the objects to be oriented or sorted have a fragile side that could be damaged if hitting an obstacle. In order to prevent any damage, we have to forbid ‘dangerous’ transitions in the automaton modelling the orienter/sorter so that the automaton becomes partial and the obstacle sequences must correspond to carefully synchronizing words. (Actually, the term ‘careful synchronization’ has been selected with this application in mind.)

Our second example relates to so-called synchronized codesFootnote 2. Recall that a prefix code over a finite alphabet \(\varSigma \) is a set X of words in \(\varSigma ^*\) such that no word of X is a prefix of another word of X. Decoding of a finite prefix code X over \(\varSigma \) can be implemented by a finite deterministic automaton \(\mathscr {A}_X\) whose state Q is the set of all proper prefixes of the words in X (including the empty word \(\varepsilon \)) and whose transitions are defined as follows: for \(q\in Q\) and \(a\in \varSigma \),

In general, \(\mathscr {A}_X\) is a PFA (it is complete if and only if the code X is not contained in another prefix code over \(\varSigma \)). It can be shown that if \(\mathscr {A}_X\) is carefully synchronizing, the code X has a very useful property: whenever a loss of synchronization between the decoder and the coder occurs (because of a channel error), it suffices to transmit a c.s.w. w of \(\mathscr {A}_X\) such that w sends all states in Q to the state \(\varepsilon \) to ensure that the following symbols will be decoded correctly.

We may conclude that the problems of determining whether or not a given PFA is carefully synchronizing and of finding its shortest carefully synchronizing words are both natural and important. The bad news is that these problems turn out to be quite difficult: it is known that the first problem is PSPACE-complete and that the minimum length of carefully synchronizing words for carefully synchronizing PFAs can be exponential as a function of the number of states. (These results were found in [33, 34] and later rediscovered and strengthened in [25].) Thus, one has to use some tools that have proved to be efficient for dealing with computationally hard problems. As mentioned in Sect. 1, in this paper we make an attempt to employ a satisfiability solver as such a tool.

3 Encoding

For completeness, recall the formulation of the Boolean satisfiability problem (SAT). An instance of SAT is a pair (V, C), where V is a set of Boolean variables and C is a collection of clauses over V. (A clause over V is a disjunction of literals and a literal is either a variable in V or the negation of a variable in V.) Any truth assignment on V, i.e., any map \(\varphi :V\rightarrow \{0,1\}\), extends to a map \(C\rightarrow \{0,1\}\) (still denoted by \(\varphi \)) via the usual rules of propositional calculus: \(\varphi (\lnot x)=1-\varphi (x)\), \(\varphi (x\vee y)=\max \{\varphi (x),\varphi (y)\}\). A truth assignment \(\varphi \) satisfies C if \(\varphi (c)=1\) for all \(c\in C\). The answer to an instance (V, C) is YES if (V, C) has a satisfying assignment (i.e., a truth assignment on V that satisfies C) and NO otherwise.

By Cook’s classic theorem (see, e.g., [28, Theorem 8.2]), SAT is NP-complete, and by the very definition of NP-completeness, every problem in NP reduces to SAT. On the other hand, over the last score or so, many efficient SAT solvers, i.e., specialized programs designed to solve instances of SAT have been developed. Modern SAT solvers can solve instances with hundreds of thousands of variables and millions of clauses within a few minutes. Due to this progress, the following approach to computationally hard problems has become quite popular nowadays: one encodes instances of such problems into instances of SAT that are then fed to a SAT solverFootnote 3. It is exactly the strategy that we want to apply.

We start with the following problem:

CSW (the existence of a c.s.w. of a given length):

Input: a PFA \(\mathscr {A}\) and a positive integer \(\ell \) (given in unary);

Output: YES if \(\mathscr {A}\) has a c.s.w. of length \(\ell \);

NO otherwise.

We have to assume that the integer \(\ell \) is given in unary because with \(\ell \) given in binary, a polynomial time reduction from CSW to SAT is hardly possible. (Indeed, it easily follows from [25] that the version of CSW in which the integer parameter is given in binary is PSPACE-hard, and the existence of a polynomial reduction from a PSPACE-hard problem to SAT would imply that the polynomial hierarchy collapses at level 1.) In contrast, the version of CSW with the unary integer parameter is easily seen to belong to NP: given an instance \((\mathscr {A}=\langle Q,\varSigma \rangle ,\ell )\) of CSW in this setting, guessing a word \(w\in \varSigma ^*\) of length \(\ell \) is legitimate. Then one just checks whether or not w is carefully synchronizing for \(\mathscr {A}\), and time spent for this check is clearly polynomial in the size of \((\mathscr {A},\ell )\).

Now, given an arbitrary instance \((\mathscr {A},\ell )\) of CSW, we construct an instance (V, C) of SAT such that the answer to \((\mathscr {A},\ell )\) is YES if and only if so is the answer to (V, C). Our encoding follows general patterns presented in [6, Chapters 2 and 16] but has some specific features so that we describe it in full detail and provide a rigorous proof of its adequacy. In the following presentation of the encoding, precise definitions and statements are interwoven with less formal comments explaining the ‘physical’ meaning of variables and clauses.

So, take a PFA \(\mathscr {A}=\langle Q,\varSigma \rangle \) and an integer \(\ell >0\). Denote the sizes of Q and \(\varSigma \) by n and m respectively, and fix some numbering of these sets so that \(Q=\{q_1,\dots ,q_n\}\) and \(\varSigma =\{a_1,\dots ,a_m\}\).

We start with introducing the variables used in the instance (V, C) of SAT that encodes \((\mathscr {A},\ell )\). The set V consists of two sorts of variables: \(m\ell \) letter variables \(x_{i,t}\) with \(1\le i\le m\), \(1\le t\le \ell \), and \(n(\ell +1)\) state variables \(y_{j,t}\) with \(1\le j\le n\), \(0\le t\le \ell \). We use the letter variables to encode the letters of a hypothetical c.s.w. w of length \(\ell \): namely, we want the value of the variable \(x_{i,t}\) to be 1 if and only if the t-th letter of w is \(a_i\). The intended meaning of the state variables is as follows: we want the value of the variable \(y_{j,t}\) to be 1 whenever the state \(q_j\) belongs to the image of Q under the action of the prefix of w of length t, in which situation we say that \(q_j\) is active after t steps. We see that the total number of variables in V is \(m\ell +n(\ell +1)=(m+n)\ell +n\).

Now we turn to constructing the set of clauses C. It consists of four groups. The group I of initial clauses contains n one-literal clauses \(y_{j,0}\), \(1\le j\le n\), and expresses the fact that all states are active after 0 steps.

For each \(t=1,\dots ,\ell \), the group L of letter clauses includes the clauses

Clearly, the clauses (1) express the fact that the t-th position of our hypothetical c.s.w. w is occupied by exactly one letter in \(\varSigma \). Altogether, L contains \(\ell \left( \frac{m(m-1)}{2}+1\right) \) clauses.

For each \(t=1,\dots ,\ell \) and each triple \((q_j,a_i,q_k)\) in the transition relation of \(\mathscr {A}\), the group T of transition clauses includes the clause

Invoking the basic laws of propositional logic, one sees that the clause (2) is equivalent to the implication \( y_{j,t-1}\mathop { \& } x_{i,t}\rightarrow y_{k,t},\) that is, (2) expresses the fact that if the state \(q_j\) has been active after \(t-1\) steps and \(a_i\) is the t-th letter of w, then the state \(q_k=q_j.a_i\) becomes active after t steps. Further, for each \(t=1,\dots ,\ell \) and each pair \((q_j,a_i)\) such that \(a_i\) is undefined at \(q_j\) in \(\mathscr {A}\), we add to T the clause

The clause is equivalent to the implication \(y_{j,t-1}\rightarrow \lnot x_{i,t},\) and thus, it expresses the requirement that the letter \(a_i\) should not be occur in the t-th position of w if \(q_j\) has been active after \(t-1\) steps. Obviously, this corresponds to the conditions (C1) (for \(t=0\)) and (C2) (for \(t>0\)) in the definition of careful synchronization. For each \(t=1,\dots ,\ell \) and each pair \((q_j,a_i)\in Q\times \varSigma \), exactly one of the clauses (2) or (3) occurs in T, whence T consists of \(\ell mn\) clauses.

The final group S of synchronization clauses includes the clauses

The clauses (4) express the requirement that at most one state remains active when the action of the word w is completed, which corresponds to the condition (C3) from the definition of careful synchronization. The group S contains \(\frac{n(n-1)}{2}\) clauses.

Summing up, the number of clauses in \(C:=I\cup L\cup T\cup S\) is

In comparison with encodings used in our earlier papers [36, 37], the encoding suggested here produces much smaller SAT instances. Since in the applications the size of the input alphabet is a (usually small) constant, the leading term in (5) is \(\varTheta (\ell n)\) while the restriction to PFAs of the encodings from [36, 37] has \(\varTheta (\ell n^2)\) clauses.

Theorem 2

A PFA \(\mathscr {A}\) has a c.s.w. of length \(\ell \) if and only if the instance (V, C) of SAT constructed above is satisfiable. Moreover, the carefully synchronizing words of length \(\ell \) for \(\mathscr {A}\) are in a 1-1 correspondence with the restrictions of satisfying assignments of (V, C) to the letter variables.

Proof

Suppose that \(\mathscr {A}\) has a c.s.w. of length \(\ell \). We fix such a word w and denote by \(w_t\) its prefix of length \(t=1,\dots ,\ell \). Define a truth assignment \(\varphi :V\rightarrow \{0,1\}\) as follows: for \(1\le i\le m\), \(0\le j\le n\), \(1\le t\le \ell \), let

In view of (6) and (7), \(\varphi \) satisfies all clauses in L and respectively I. As \(w_\ell =w\) and \(|Q.w|=1\), we see that (8) ensures that \(\varphi \) satisfies all clauses in S. It remains to analyze the clauses in T. For each fixed \(t=1,\dots ,\ell \), these clauses are in a 1-1 correspondence with the pairs in \(Q\times \varSigma \). We fix such a pair \((q_j,a_i)\), denote the clause corresponding to \((q_j,a_i)\) by c and consider three cases.

Case 1: the letter \(a_i\) is not the t-th letter of w. In this case \(\varphi (x_{i,t})=0\) by (6), and hence, \(\varphi (c)=1\) since the literal \(\lnot x_{i,t}\) occurs in c, independently of c having the form (2) or (3).

Case 2: the letter \(a_i\) is the t-th letter of w but it is undefined at \(q_j\). In this case the clause c must be of the form (3). Observe that \(t>1\) in this case since the first letter of the c.s.w. w must be defined at each state in Q. Moreover, the state \(q_j\) cannot belong to the set \(Q.w_{t-1}\) because \(a_i\) must be defined at each state in this state. Hence \(\varphi (y_{j,t-1})=0\) by (8), and \(\varphi (c)=1\) since the literal \(\lnot y_{j,t-1}\) occurs in c.

Case 3: the letter \(a_i\) is the t-th letter of w and it is defined at \(q_j\). In this case the clause c must be of the form (2), in which the literal \(y_{k,t}\) corresponds to the state \(q_k=q_j.a_i\). If the state \(q_j\) does not belong to the set \(Q.w_{t-1}\), then as in the previous case, we have \(\varphi (y_{j,t-1})=0\) and \(\varphi (c)=1\). If \(q_j\) belongs to \(Q.w_{t-1}\), then the state \(q_k\) belongs to the set \((Q.w_{t-1}).a_i=Q.w_t\), whence \(\varphi (y_{k,t})=1\) by (8). We conclude that \(\varphi (c)=1\) since the literal \(y_{k,t}\) occurs in c.

Conversely, suppose that \(\varphi :V\rightarrow \{0,1\}\) is a satisfying assignment for (V, C). Since \(\varphi \) satisfies the clauses in L, for each \(t=1,\dots ,\ell \), there exists a unique \(i\in \{1,\dots ,m\}\) such that \(\varphi (x_{i,t})=1\). This defines a map \(\chi :\{1,\dots ,\ell \}\rightarrow \{1,\dots ,m\}\). Let \(w:=a_{\chi (1)}\cdots a_{\chi (\ell )}\). We aim to show that w is a c.s.w. for \(\mathscr {A}\), i.e., to verify that w fulfils the conditions (C1)–(C3) from the definition of a c.s.w. For this, we first prove two auxiliary claims. Recall that a state is said to be active after t steps if it lies in \(Q.w_t\), where, as above, \(w_t\) is the length t prefix of the word w. (By the length 0 prefix we understand the empty word \(\varepsilon \)).

Claim 1. For each \(t=0,1,\dots ,\ell \), there are states active after t steps.

Claim 2. If a state \(q_k\) is active after t steps, then \(\varphi (y_{k,t})=1\).

We prove both claims simultaneously by induction on t. The induction basis \(t=0\) is guaranteed by the fact that all states are active after 0 steps and \(\varphi \) satisfies the clauses in I. Now suppose that \(t>0\) and there are states active after \(t-1\) steps. Let \(q_r\) be such a state. Then \(\varphi (y_{r,t-1})=1\) by the induction assumption. Let \(i:=\chi (t)\), that is, \(a_i\) is the t-th letter of the word w. Then \(\varphi (x_{i,t})=1\), whence \(\varphi \) cannot satisfy the clause of the form (3) with \(j=r\). Hence this clause cannot appear in T as \(\varphi \) satisfies the clauses in T. This means that the letter \(a_i\) is defined at \(q_r\) in \(\mathscr {A}\), and the state \(q_s:=q_r.a_i\) is active after t steps. Claim 1 is proved.

Now let \(q_k\) be an arbitrary state that is active after \(t>0\) steps. Since \(a_i\) is the t-th letter of w, we have \(Q.w_t=(Q.w_{t-1}).a_i\), whence \(q_k=q_j.a_i\) for same \(q_j\in Q.w_{t-1}\). Therefore the clause (2) occurs in T, and thus, it is satisfied by \(\varphi \). Since \(q_j\) is active after \(t-1\) steps, \(\varphi (y_{j,t-1})=1\) by the induction assumption; besides that, \(\varphi (x_{i,t})=1\). We conclude that in order to satisfy (2), the assignment \(\varphi \) must fulfil \(\varphi (y_{k,t})=1\). This completes the proof of Claim 2.

We turn to prove that the word w fulfils (C1) and (C2). This amounts to verifying that for each \(t=1,\dots ,\ell \), the t-th letter of the word w is defined at every state \(q_j\) that is active after \(t-1\) steps. Let, as above, \(a_i\) stand for the t-th letter of w. If \(a_i\) were undefined at \(q_j\), then by the definition of the set T of transition clauses, this set would include the corresponding clause (3). However, \(\varphi (x_{i,t})=1\) by the construction of w and \(\varphi (y_{j,t-1})=1\) by Claim 2. Hence \(\varphi \) does not satisfy this clause while the clauses from T are satisfied by \(\varphi \), a contradiction.

Finally, consider (C3). By Claim 1, some state is active after \(\ell \) steps. On the other hand, the assignment \(\varphi \) satisfies the clauses in S, which means that \(\varphi (y_{j,\ell })=1\) for at most one index \(j\in \{1,\dots ,n\}\). By Claim 2 this implies that at most one state is active after \(\ell \) steps. We conclude that exactly one state is active after \(\ell \) steps, that is, \(|Q.w|=1\). \(\square \)

4 Experimental Results

We have successfully applied the encoding constructed in Sect. 3 to solve CSW instances with the help of a SAT solver. As in [12, 36,37,38], we have used MiniSat 2.2.0 [8, 9]. In order to find a c.s.w. of minimum length for a given PFA \(\mathscr {A}\), we have considered CSW instances \((\mathscr {A},\ell )\) with fixed \(\mathscr {A}\) and performed binary search on \(\ell \). Even though our encoding is different from those we used in [36, 37], it shares with them the following useful feature: when presented in DIMACS CNF format, the ‘primary’ SAT instance that encodes the CSW instance \((\mathscr {A},1)\) can be easily scaled to the SAT instances that encode the CSW instances \((\mathscr {A},\ell )\) with any value of \(\ell \). Due to this feature, one radically reduces time needed to prepare the input data for the SAT solver; we refer the reader to [36, Sect. 3] for a detailed explanation of the trick and an illustrative example. Thus, we encode \((\mathscr {A},1)\), write the corresponding SAT instance in DIMACS CNF format, and scale the instance to the instances encoding \((\mathscr {A},\ell )\) with \(\ell =2,4,8,\dots \) until we reach an instance on which the SAT solver returns YESFootnote 4. The corresponding value of \(\ell \) serves as the right border \(\ell _{\max }\) of the binary search while the previous value of \(\ell \) serves as the left border \(\ell _{\min }\). Then we test the SAT instance corresponding to \((\mathscr {A},\frac{\ell _{\max }+\ell _{\min }}{2})\), etc.

We implemented the algorithm outlined above in C++ and compiled with GCC 4.9.2. In our experiments we used a personal computer with an Intel(R) Core(TM) i5-2520M processor with 2.5 GHz CPU and 4 GB of RAM. The code can be found at https://github.com/hananshabana/SynchronizationChecker.

As a sample of our experimental findings, we present here our results on synchronization of PFAs with a unique undefined transition. Observe that the problem of deciding whether or not a given PFA is carefully synchronizing remains PSPACE-complete even if restricted to this rather special case [25]. We considered random PFAs with \(n\le 100\) states and two input letters. The condition (C1) in the definition of a carefully synchronizing PFA implies that such a PFA must have an everywhere defined letter. We denoted this letter by a and the other letter, called b, was chosen to be undefined at a unique state. Further, it is easy to see that for a PFA \(\langle Q,\{a,b\}\rangle \) with a, b so chosen to be carefully synchronizing, it is necessary that \(|Q.a|<|Q|\). Therefore, we fixed a state \(q_a\in Q\) and then selected a uniformly at random from all \(n^{n-1}\) maps \(Q\rightarrow Q\setminus \{q_a\}\). Similarly, to ensure there is a unique undefined transition with b, we fixed a state \(q_b\in Q\) (not necessarily different from \(q_a\)) and then selected b uniformly at random from all \((n-1)^n\) maps \(Q\setminus \{q_b\}\rightarrow Q\). For each fixed n, we generated up to 1000 random PFAs this way and calculated the average length \(\ell (n)\) of their shortest carefully synchronizing words. We used the least squares method to find a function that best reflects how \(\ell (n)\) depends on n, and it turned out that our results are reasonably well approximated by the following expression:

The next graph shows the relation between the relative standard deviation of our datasets and the number of states. We see that the relative standard deviation gradually decreases as the number of states grows.

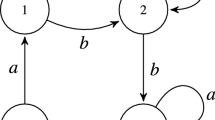

We performed similar experiments with random PFAs that have two or three undefined transition. We also tested our algorithm on PFAs from the series \(\mathscr {P}_n\) suggested in [7]. The state set of \(\mathscr {P}_n\) is \(\{1,2,\dots ,n\}\), \(n\ge 3\), on which the input letters a and b act as follows:

We examined all automata \(\mathscr {P}_n\) with \(n=4,5,\dots ,11\), and for each of them, our result matched the theoretical value predicted by [7, Theorem 3]. The time consumed ranges from 0.301 s for \(n=4\) to 4303 s for \(n=11\). Observe that in the latter case the shortest c.s.w. has length 116 so that the “honest” binary search started with \((\mathscr {P}_{11},1)\) required 14 calls of MiniSat, namely, for the encodings of \((\mathscr {P}_{11},\ell )\) with \(\ell =1,2,4,8,16,32,64,128,96,112,120,116,114,115\). Of course, if one just wants to confirm (or to disprove) a theoretical prediction \(\ell \) for the minimum length of carefully synchronizing words for a given PFA \(\mathscr {A}\), two calls of a SAT solver—on the encodings of \((\mathscr {A},\ell )\) and \((\mathscr {A},\ell -1)\)—suffice.

In our experiments, we kept track of “slowly synchronizing” PFAs, that is, PFAs with the minimum length of carefully synchronizing words close to the square of the number of states. Whenever we encountered such examples, we made an attempt to generalize them in order to get infinite series of provably “slowly synchronizing” PFAs. The following statements present two of the results we found this way.

Proposition 3

For each \(n>4\), let \({\mathscr {H}}'_n\) be the PFA with the state set \(\{0,1,\dots ,n-1\}\) on which the input letters a and b act as follows:

The automaton \({\mathscr {H}}'_n\) is carefully synchronizing and the minimum length of carefully synchronizing words for \({\mathscr {H}}'_n\) is equal to \((n-2)^2\).

Proposition 4

For each \(n>4\), let \({\mathscr {H}}''_n\) be the PFA with the state set \(\{0,1,\dots ,n-1\}\) on which the input letters a and b act as follows:

The automaton \({\mathscr {H}}''_n\) is carefully synchronizing and the minimum length of carefully synchronizing words for \({\mathscr {H}}'_n\) is equal to \(n^2-3n+3\).

We omit the proofs of Propositions 3 and 4 due to space constraints. The proofs (which are not difficult) can be obtained by a suitable adaptation of the approach developed for the case of CFAs in [3, Section 4].

From the viewpoint of our studies, the series \({\mathscr {H}}'_n\) and \({\mathscr {H}}''_n\) are of interest as they exhibit two extremes with respect to amenability of careful synchronization to the SAT solver approach. The series \({\mathscr {H}}'_n\) has turned to be a hard nut to crack for our algorithm: the maximum n for which the algorithm was able to find a c.s.w. of minimum length is 13, and computing this word (of length 121) took almost 4 h. In contrast, automata in the series \({\mathscr {H}}''_n\) turn out to be quite amenable: for instance, the algorithm found a c.s.w. of length 343 for \({\mathscr {H}}''_{20}\) in 13.38 s. At present, we have no explanation for what causes such a strong contrast: is this an intrinsic structure of the PFAs under consideration, or the nature of the algorithm built in MiniSat, or just a peculiarity of our implementation?

We made also a comparison with the only approach to computing carefully synchronizing words of minimum length that we had found in the literature, namely, the approach based on partial power automata; see [27, p. 295]. Given a PFA \(\mathscr {A}=\langle Q,\varSigma \rangle \), its partial power automaton \(\mathcal {P}(\mathscr {A})\) has the subsets of Q as the states, the same input alphabet \(\varSigma \), and the transition function defined as follows: for each \(a\in \varSigma \) and each \(P\subseteq Q\),

It is easy to see that \(w\in \varSigma ^*\) is a c.s.w. of minimum length for \(\mathscr {A}\) if and only if w labels a minimum length path in \(\mathcal {P}(\mathscr {A})\) starting at Q and ending at a singleton. Such a path can be found by breadth-first search in the underlying digraph of \(\mathcal {P}(\mathscr {A})\).

The result of the comparison is presented in the picture on the next page. In this experiment we had to restrict to PFAs with at most 16 states since beyond this number of states, our implementation of the method based on partial power automata could not complete the computation due to memory restrictions (recall that we used rather modest computational resources). However, we think that the exhibited data suffice to demonstrate that the approach based on SAT solvers shows a by far better performance.

5 Conclusion and Future Work

We have presented an attempt to approach the problem of computing a c.s.w. of minimum length for a given PFA via the SAT solver method. For this, we have developed a new encoding, which, in comparison with encodings used in our earlier papers [36, 37], requires a more sophisticated proof but leads to more economic SAT instances. In our future experiments, we plan to employ more advanced SAT solvers. Using more powerful computers constitutes other obvious direction for improvements. Clearly, the approach is amenable to parallelization since calculations needed for different automata are completely independent so that one can process in parallel as many automata as many processors are available.

Now we are designing new experiments. For instance, it appears to be interesting to compare the minimum lengths of a synchronizing word for a synchronizing CFA and of carefully synchronizing words for PFAs that can be obtained from the CFA by removing one or more of its transitions. We also plan to extend the SAT solver approach to so-called exact synchronization of PFAs which is of interest for certain applications.

Notes

- 1.

The conventional concept of an NFA includes distinguishing two non-empty subsets of Q consisting of initial and final states. As these play no role in our considerations, the above simplified definition well suffices for the purpose of this paper.

- 2.

We refer the reader to [4, Chapters 3 and 10] for a detailed account of profound connections between codes and automata.

- 3.

- 4.

In principle, it may happen that we never reach such an instance (which indicates that either \(\mathscr {A}\) is not carefully synchronizing or the minimum length of carefully synchronizing words for \(\mathscr {A}\) is too big so that MiniSat cannot handle the resulting SAT instance) but we have not observed such “bad” cases in our experiments with randomly generated PFAs.

References

Altun, Ö.F., Atam, K.T., Karahoda, S., Kaya, K.: Synchronizing heuristics: speeding up the slowest. In: Yevtushenko, N., Cavalli, A.R., Yenigün, H. (eds.) ICTSS 2017. LNCS, vol. 10533, pp. 243–256. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67549-7_15

Ananichev, D.S., Volkov, M.V.: Some results on Černý type problems for transformation semigroups. In: Araújo, I.M., Branco, M.J.J., Fernandes, V.H., Gomes, G.M.S. (eds.) Semigroups and Languages, pp. 23–42. World Scientific, Singapore (2004). https://doi.org/10.1142/9789812702616_0002

Ananichev, D.S., Volkov, M.V., Gusev, V.V.: Primitive digraphs with large exponents and slowly synchronizing automata. J. Math. Sci. 192(3), 263–278 (2013). https://doi.org/10.1007/s10958-013-1392-8

Berstel, J., Perrin, D., Reutenauer, C.: Codes and Automata. Cambridge University Press, Cambridge (2009). https://doi.org/10.1017/CBO9781139195768

Burkhard, H.-D.: Zum Längenproblem homogener Experimente an determinierten und nicht-deterministischen Automaten. Elektronische Informationsverarbeitung und Kybernetik 12, 301–306 (1976)

Biere, A., Heule, M., van Maaren, H., Walsh, T. (eds.): Handbook on Satisfiability. IOS Press, Amsterdam (2009)

de Bondt, M., Don, H., Zantema, H.: Lower bounds for synchronizing word lengths in partial automata. Int. J. Foundations Comput. Sci. 30(1), 29–60 (2019). https://doi.org/10.1142/S0129054119400021

Eén, N., Sörensson, N.: An extensible SAT-solver. In: Giunchiglia, E., Tacchella, A. (eds.) SAT 2003. LNCS, vol. 2919, pp. 502–518. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-24605-3_37

Eén, N., Sörensson, N.: The MiniSat Page. http://minisat.se

Gomes, C.P., Kautz, H., Sabharwal, A., Selman, B.: Satisfiability solvers. In: van Harmelen, F., Lifschitz, V., Porter, B. (eds.) Handbook of Knowledge Representation, vol. I, pp. 89–134. Elsevier, Amsterdam (2008). https://doi.org/10.1016/S1574-6526(07)03002-7

Goralčík, P., Hedrlín, Z., Koubek, V., Ryšlinková, J.: A game of composing binary relations. RAIRO Inform. Théor. 16(4), 365–369 (1982)

Güniçen, C., Erdem, E., Yenigün, H.: Generating shortest synchronizing sequences using answer set programming. In: Fink, M., Lierler, Y. (eds.) Answer Set Programming and Other Computing Paradigms, 6th International Workshop, ASPOCP 2013, pp. 117–127 (2013). https://arxiv.org/abs/1312.6146

Imreh, B., Steinby, M.: Directable nondeterministic automata. Acta Cybernetica 14, 105–115 (1999)

Ito, M.: Algebraic Theory of Automata and Languages. World Scientific, Singapore (2004). https://doi.org/10.1142/4791

Ito, M., Shikishima-Tsuji, K.: Some results on directable automata. In: Karhumäki, J., Maurer, H., Păun, G., Rozenberg, G. (eds.) Theory Is Forever. LNCS, vol. 3113, pp. 125–133. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-27812-2_12

Ito, M., Shikishima-Tsuji, K.: Shortest directing words of nondeterministic directable automata. Discrete Math. 308(21), 4900–4905 (2008). https://doi.org/10.1016/j.disc.2007.09.010

Karahoda, S., Erenay, O.T., Kaya, K., Türker, U.C., Yenigün, H.: Parallelizing heuristics for generating synchronizing sequences. In: Wotawa, F., Nica, M., Kushik, N. (eds.) ICTSS 2016. LNCS, vol. 9976, pp. 106–122. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-47443-4_7

Karahoda, S., Kaya, K., Yenigün, H.: Synchronizing heuristics: speeding up the fastest. Expert Syst. Appl. 94, 265–275 (2018). https://doi.org/10.1016/j.eswa.2017.10.054

Kowalski, J., Roman, A.: A new evolutionary algorithm for synchronization. In: Squillero, G., Sim, K. (eds.) EvoApplications 2017. LNCS, vol. 10199, pp. 620–635. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-55849-3_40

Kari, J., Volkov, M.V.: Černý’s conjecture and the road coloring problem. In: Pin, J.-É (ed.) Handbook of Automata Theory, vol. I. EMS Publishing House (in print)

Natarajan, B.K.: An algorithmic approach to the automated design of parts orienters. In: Proceedings 27th Annual Symposium Foundations Computer Science, pp. 132–142. IEEE Press (1986). https://doi.org/10.1109/SFCS.1986.5

Natarajan, B.K.: Some paradigms for the automated design of parts feeders. Int. J. Robot. Res. 8(6), 89–109 (1989). https://doi.org/10.1177/027836498900800607

Martyugin, P.V.: Lower bounds for the length of the shortest carefully synchronizing words for two- and three-letter partial automata. Diskretn. Anal. Issled. Oper. 15(4), 44–56 (2008)

Martyugin, P.V.: A lower bound for the length of the shortest carefully synchronizing words. Russian Math. 54(1), 46–54 (2010). https://doi.org/10.3103/S1066369X10010056. (Iz. VUZ)

Martyugin, P.V.: Synchronization of automata with one undefined or ambiguous transition. In: Moreira, N., Reis, R. (eds.) CIAA 2012. LNCS, vol. 7381, pp. 278–288. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-31606-7_24

Martyugin, P.V.: Careful synchronization of partial automata with restricted alphabets. In: Bulatov, A.A., Shur, A.M. (eds.) CSR 2013. LNCS, vol. 7913, pp. 76–87. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-38536-0_7

Martyugin, P.V.: Complexity of problems concerning carefully synchronizing words for PFA and directing words for NFA. Theor. Comput. Syst. 54(2), 293–304 (2014). https://doi.org/10.1007/s00224-013-9516-6

Papadimitriou, C.H.: Computational Complexity. Addison-Wesley, Boston (1994)

Pixley, C., Jeong, S.-W., Hachtel, G.D: Exact calculation of synchronization sequences based on binary decision diagrams. In: Proceedings 29th Design Automation Conference, pp. 620–623. IEEE Press (1992)

Podolak, I.T., Roman, A., Jȩdrzjczyk, D.: Application of hierarchical classifier to minimal synchronizing word problem. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2012. LNCS, vol. 7267, pp. 421–429. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-29347-4_49

Podolak, I.T., Roman, A., Szykuła, M., Zieliński, B.: A machine learning approach to synchronization of automata. Expert Syst. Appl. 97, 357–371 (2018). https://doi.org/10.1016/j.eswa.2017.12.043

Roman, A.: Genetic algorithm for synchronization. In: Dediu, A.H., Ionescu, A.M., Martín-Vide, C. (eds.) LATA 2009. LNCS, vol. 5457, pp. 684–695. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-00982-2_58

Rystsov, I.K.: Asymptotic estimate of the length of a diagnostic word for a finite automaton. Cybernetics 16(1), 194–198 (1980). https://doi.org/10.1007/bf01069104

Rystsov, I.K.: Polynomial complete problems in automata theory. Inf. Process. Lett. 16(3), 147–151 (1983). https://doi.org/10.1016/0020-0190(83)90067-4

Rystsov, I.K.: Reset words for commutative and solvable automata. Theor. Comput. Sci. 172(1), 273–279 (1997). https://doi.org/10.1016/s0304-3975(96)00136-3

Shabana, H.: \(D_2\)-synchronization in nondeterministic automata. Ural Math. J. 4(2), 99–110 (2018). https://doi.org/10.15826/umj.2018.2.011

Shabana, H., Volkov, M.V.: Using Sat solvers for synchronization issues in nondeterministic automata. Siberian Electron. Math. Rep. 15, 1426–1442 (2018). https://doi.org/10.17377/semi.2018.15.117

Skvortsov, E., Tipikin, E.: Experimental study of the shortest reset word of random automata. In: Bouchou-Markhoff, B., Caron, P., Champarnaud, J.-M., Maurel, D. (eds.) CIAA 2011. LNCS, vol. 6807, pp. 290–298. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-22256-6_27

Volkov, M.V.: Synchronizing automata and the Černý Conjecture. In: Martín-Vide, C., Otto, F., Fernau, H. (eds.) LATA 2008. LNCS, vol. 5196, pp. 11–27. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88282-4_4

Acknowledgements

The authors are very much indebted to the referees for their valuable remarks.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Shabana, H., Volkov, M.V. (2019). Using Sat Solvers for Synchronization Issues in Partial Deterministic Automata. In: Bykadorov, I., Strusevich, V., Tchemisova, T. (eds) Mathematical Optimization Theory and Operations Research. MOTOR 2019. Communications in Computer and Information Science, vol 1090. Springer, Cham. https://doi.org/10.1007/978-3-030-33394-2_9

Download citation

DOI: https://doi.org/10.1007/978-3-030-33394-2_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33393-5

Online ISBN: 978-3-030-33394-2

eBook Packages: Computer ScienceComputer Science (R0)