Abstract

There are many collaborative studies where the data are discrepant while uncertainty estimates reported in each study cannot be relied upon. The classical commonly used random effects model explains this phenomenon by additional noise with a constant heterogeneity variance. This assumption may be inadequate especially when the smallest uncertainty values correspond to the cases which are most deviant from the bulk of data. An augmented random effects model for meta-analysis of such studies is offered. It proposes to think about the data as consisting of different classes with the same heterogeneity variance only within each cluster. The choice of the classes is to be made on the basis of the classical or restricted likelihood. We discuss the properties of the corresponding procedures which indicate the studies whose heterogeneity effect is to be enlarged. The conditions for the convergence of several iterative algorithms are given.

1 INTRODUCTION: HETEROSCEDASTIC META-ANALYSIS MODEL

In a research synthesis problem one has to combine several estimates of the quantity of interest \(\mu\). The popular random effects model (REM) postulates the following form of these estimators \(x_{i},i=1,\ldots,N\),

\[x_{i}=\mu+\lambda_{i}+\epsilon_{i}.\qquad(1)\]

Here, the parameter of primary focus \(\mu\) is the common mean representing the treatment effect in biostatistics or the reference value in metrology. The measurement error \(\epsilon_{i}\) of the \(i\)th study is supposed to have zero mean and the variance representing the within-study variability. The protocol requires that the participants who possibly use different measuring techniques report not only their estimates of \(\mu\), but also the estimates \(s_{i}^{2}\) of this variance (within study uncertainty).

The term \(\lambda_{i}\) is commonly taken to have zero mean with some heterogeneity variance traditionally denoted by \(\tau^{2}\). One can view \(\lambda_{i}\) as the additional noise imposed by Nature on the experiment, where one observes the results of independent individual studies all measuring the same overall effect \(\mu\). This variance can be estimated by one of the developed procedures [8, 10]. The classical fixed effects model (FEM) corresponding to the situation when \(\tau^{2}=0\) provides a poor fit in many practical situations [1]. Indeed the heterogeneity in most research synthesis studies can be quite substantial (Thompson and Sharp, 1999). Moreover, some medical researchers believe that ‘‘examination of heterogeneity is perhaps the most important task in meta-analysis’’ [7, p. 30]. In many heterogeneous studies the assumption that all \(\lambda\)’s have the same dispersion seems to be violated if the smallest reported uncertainties correspond to the cases which are most deviant from the rest of data. Indeed this condition appears to be due mainly to mathematical expediency and limits the applicability of REM. We advocate a more detailed analysis by admitting several distinct heterogeneity variances whose larger values are assigned to the aberrant, outlying cases. The most homogeneous data subset gets zero heterogeneity variance.

By independence of \(\lambda_{i}\) and \(\epsilon_{i}\), the variance of \(x_{i}\) cannot be smaller than the variance of \(\epsilon_{i}\) typically estimable by \(s_{i}^{2}\) although it commonly includes non-statistically derived components. In many situations there is doubt about the validity of these estimates especially when they are identified with the unknown uncertainties. Hoaglin [10] discusses possible dire consequences of such identification in the homogeneity hypothesis testing problem \(\tau^{2}=0\). The case of REM allowing \(\tau^{2}\) to depend on the study was investigated by Rukhin (2019a) who suggested using \(s_{i}\) only as a lower bound on the unknown \(i\)th uncertainty so that \(\tau^{2}_{i}\geq s_{i}^{2}\). In this work \(s_{i}^{2}\) also serves as the lower bound for the unknown variance of \(x_{i}\).

More generally one can entertain an augmented random effects model (AREM) which puts the studies into different classes or clusters according to the several values of the unknown heterogeneity variance, say, \(\tau^{2}=\tau^{2}_{k}>0,k=1,\ldots,K.\) Then the cluster corresponding to \(\tau^{2}_{0}=0\) plays a special role: it includes all conforming studies satisfying FEM. This class represents the largest consistent subset of all studies, which allows one to identify cases affected by ‘‘excess-variation’’ from likelihood calculations. Its definition is a controversial issue in metrology [3, 20, 4]. We illustrate our definition of this concept by two practical examples in Section 6. The situation when each heterogeneous cluster consists of just one element corresponds to the mentioned setting with \(s_{i}^{2}\) representing a lower bound on the variance of \(x_{i}\).

There is a body of work aimed at extending REM for meta-analysis needs. See Ohlssen et al. (2007), Lee and Thompson (2008), or Kulinskaya and Olkin (2014) for more flexible parametric and nonparametric models. The main objective of this paper is to suggest a methodology for selecting one of the AREM models with distinct heterogeneity variances. We use maximum likelihood estimators of the mean and variances which provide likelihood-based information criteria studied numerically in Section 7. For this purpose the next section discusses iterative algorithms to find the maximum likelihood procedures and the conditions for their convergence. Section 3 contains a necessary condition for the global extremum, and Section 4 gives an example where \(s_{i}^{2}\) are equal to illustrate that the traditional REM has a smaller likelihood than some other AREM models. Section 5 discusses the algebraic difficulty of the likelihood equations. The proofs are collected in the Appendix.

2 MAXIMUM LIKELIHOOD ESTIMATORS

Assume that the data are represented by a series of independent but not equally distributed random variables \(x_{i}\sim N(\mu,\sigma^{2}_{i})\) with the unknown common mean \(\mu\) and variances \(\sigma^{2}_{i},\sigma^{2}_{i}=\tau^{2}_{i}+s^{2}_{i},\) where \(\tau^{2}_{i}\geq 0\) is unknown and \(s_{i}^{2}\) represents the within-study variance, \(i=1,\ldots,N\). Supposing that there are no more than \(K\) different positive \(\tau\)’s, the variance of \(x_{i}\) for \(i\) in the class \(I_{k}\) is \(s^{2}_{i}+\tau^{2}_{k}\). Clusters \(I_{0},I_{1},\ldots,I_{K}\) define a partition of the set \(\{1,\ldots,N\}\) with \(I_{k}\) containing \(n_{k}\) elements, \(n_{0}+n_{1}+\cdots+n_{K}=N.\) The total number of heterogeneous clusters \(K\) cannot exceed \(N-n_{0}\), and there is no generality loss in assuming that \(n_{1}\geq\cdots\geq n_{K}\). The special cluster \(I_{0}\) corresponds to the vanishing heterogeneity variance \(\tau^{2}_{0}=0\).

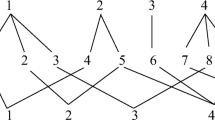

For a given homogeneous cluster \(I_{0}\) of size \(n_{0}\) and given \(K\), the number of ways to split the remaining \(N-n_{0}\) studies into \(K\) non-empty heterogeneity clusters is the Stirling number of the second kind \(\Big{\{}\begin{matrix}N-n_{0}\\ K\end{matrix}\Big{\}}\) (Graham et al., 1994). Thus the total number of all different partitions is

\[\sum_{n_{0}=0}^{N-K}\binom{N}{n_{0}}\Big{\{}\begin{matrix}N-n_{0}\\ K\end{matrix}\Big{\}}=\Big{\{}\begin{matrix}N+1\\ K+1\end{matrix}\Big{\}},\]

which allows \(I_{0}\) to be empty. When \(K=1\), this number is \(2^{N}-1\), for \(K=2\) it is \((3^{N}-2^{N+1}+1)/2\), for \(K=N-1\), it is \(N(N+1)/2.\) An implication is that in practice \(K\) should be chosen either small or close to \(N\).
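The counts quoted above can be checked numerically. The following is a minimal sketch, not part of the original derivation: the helper names `stirling2` and `count_arem_partitions` are hypothetical, and the sum over \(n_{0}\) assumes that \(I_{0}\) may be empty while every heterogeneity cluster is non-empty.

```python
from math import comb


def stirling2(n, k):
    """Stirling number of the second kind via S(i,j) = j*S(i-1,j) + S(i-1,j-1)."""
    if k > n:
        return 0
    table = [[0] * (k + 1) for _ in range(n + 1)]
    table[0][0] = 1
    for i in range(1, n + 1):
        for j in range(1, min(i, k) + 1):
            table[i][j] = j * table[i - 1][j] + table[i - 1][j - 1]
    return table[n][k]


def count_arem_partitions(N, K):
    """Choose the n0 members of I_0, then split the rest into K non-empty clusters."""
    return sum(comb(N, n0) * stirling2(N - n0, K) for n0 in range(N - K + 1))


if __name__ == "__main__":
    N = 5
    for K in (1, 2, N - 1):
        print(K, count_arem_partitions(N, K), stirling2(N + 1, K + 1))
```

For \(N=5\) both columns agree for each \(K\), which illustrates why exhaustive enumeration is only feasible for a small number of studies.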

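For given clusters \(I_{0},I_{1},\ldots,I_{K}\) the (classical) log-likelihood function (times \(-2\)) is, up to an additive constant,

\[L(\mu,\tau_{1}^{2},\ldots,\tau_{K}^{2})=\sum_{k=0}^{K}\sum_{i\in I_{k}}\left[\frac{(x_{i}-\mu)^{2}}{\tau_{k}^{2}+s_{i}^{2}}+\log(\tau_{k}^{2}+s_{i}^{2})\right],\quad\tau_{0}^{2}=0.\qquad(2)\]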

We call (2) the augmented random effects model (AREM.CL.\(K\)) likelihood. A standard argument shows that for given clusters the model AREM.CL.\(K\) is identifiable.

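The form of the restricted likelihood function \(\tilde{L}\) (AREM.RL.\(K\)) is also well known:

\[\tilde{L}(\tau_{1}^{2},\ldots,\tau_{K}^{2})=\sum_{k=0}^{K}\sum_{i\in I_{k}}\left[\frac{(x_{i}-\tilde{\mu})^{2}}{\tau_{k}^{2}+s_{i}^{2}}+\log(\tau_{k}^{2}+s_{i}^{2})\right]+\log\sum_{k=0}^{K}\sum_{i\in I_{k}}(\tau_{k}^{2}+s_{i}^{2})^{-1}.\qquad(3)\]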

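Here the cluster weighted mean

\[\tilde{\mu}=\tilde{\mu}(\tau_{1}^{2},\ldots,\tau_{K}^{2})=\frac{\sum_{k=0}^{K}\sum_{i\in I_{k}}x_{i}(\tau_{k}^{2}+s_{i}^{2})^{-1}}{\sum_{k=0}^{K}\sum_{i\in I_{k}}(\tau_{k}^{2}+s_{i}^{2})^{-1}}\qquad(4)\]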

is the best linear unbiased estimator of \(\mu\) which also minimizes (2) in \(\mu\) for given \(\tau\)’s.
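To make the roles of the clusters concrete, here is a small sketch of the two quantities just described. It assumes the usual Gaussian form of (2), namely the sum over studies of \((x_{i}-\mu)^{2}/(\tau_{k}^{2}+s_{i}^{2})+\log(\tau_{k}^{2}+s_{i}^{2})\) with \(\tau_{0}^{2}=0\); the function names and the `labels` encoding (cluster index per study) are illustrative choices, not notation from the paper.

```python
import math


def weighted_mean(x, s2, labels, tau2):
    """Cluster weighted mean: weights are 1 / (tau2[k] + s2[i]) for study i in cluster k.

    tau2[0] is expected to be 0.0 (the homogeneous cluster I_0).
    """
    w = [1.0 / (tau2[k] + v) for k, v in zip(labels, s2)]
    return sum(wi * xi for wi, xi in zip(w, x)) / sum(w)


def neg2_loglik(mu, x, s2, labels, tau2):
    """Assumed form of (2): -2 * Gaussian log-likelihood, up to an additive constant."""
    total = 0.0
    for xi, v, k in zip(x, s2, labels):
        var = tau2[k] + v
        total += (xi - mu) ** 2 / var + math.log(var)
    return total


if __name__ == "__main__":
    x, s2 = [1.0, 2.0, 4.0], [1.0, 1.0, 1.0]
    labels, tau2 = [0, 1, 1], [0.0, 2.0]  # study 0 in I_0, studies 1 and 2 in I_1
    mu = weighted_mean(x, s2, labels, tau2)
    print(mu, neg2_loglik(mu, x, s2, labels, tau2))
```

For fixed variances the criterion is a quadratic in \(\mu\), so moving \(\mu\) away from the weighted mean in either direction can only increase it.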

Thus to find strictly positive maximum likelihood estimates \(\hat{\tau}^{2}_{k}\), one has to solve equations

with positive definite matrix of second derivatives. These equations can be written in the form

The following Theorem 2.1 gives an explicit form of the Hessian and offers an iterative algorithm motivated by (5).

Let \(a\) and \(d\) be \(K\)-dimensional vectors with the coordinates

and

Theorem 2.1. For AREM.CL.\(K\) the Hessian \(H=(\partial^{2}L/[\partial\tau_{k}^{2}\partial\tau^{2}_{\ell}])_{k,\ell=1}^{K}\) has the form

With \(\hat{\tau}^{2}_{k}\) substituted for \(\tau_{k}^{2}\) in the definition of \(a,d\), and \(\tilde{\mu}\) to get \(\hat{a}\), \(\hat{d}\), \(\hat{\mu}\), the sufficient condition for the minimum of (2) to be attained is:

A necessary condition is that \(\sum_{k=1}^{K}\hat{a}_{k}^{2}/\hat{d}_{k}\leq 1\).

Provided that (7) holds and

the positive maximum likelihood estimators \(\hat{\tau}^{2}_{k},k=1,\ldots,K\) exist and can be determined by iterations as

There are many numerical methods like the Newton–Raphson rule which need only condition (7) for convergence. They are more reliable than the EM algorithm and can be used instead of (9) or (14) in the following Theorem 2.2. An attractive feature of iterations in Theorem 2.1 is that they decrease the value of (2) at each step (Rukhin, 2011). This fact matters since the likelihood may not be a unimodal function of \(\tau^{2}\); indeed the (polynomial) Eq. (5) can have several positive solutions. Therefore the choice of a good starting value is important.

To find the global optimizers \(\hat{I}_{0},\ldots,\hat{I}_{K}\), enumerate all \(\Big{\{}\begin{matrix}N+1\\ K+1\end{matrix}\Big{\}}\) partitions \(I_{0},\ldots,I_{K}\) of the index set \(\{1,\ldots,N\}\) and for each partition determine the solution of (5) via Theorem 2.1. Failure to converge is interpreted as non-existence of solutions satisfying (8) resulting in rejection of the candidate clustering. The partition \(\hat{I}_{0},\ldots,\hat{I}_{K}\) which provides the overall minimizer is taken as the final choice delivering the maximum likelihood estimators \(\hat{\tau}^{2}_{1},\ldots,\hat{\tau}^{2}_{K}\) and \(\hat{\mu}\). A necessary optimality condition is provided in Section 3.
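The enumeration described above can be sketched for \(K=1\) as follows. This is only an illustration under stated assumptions: it uses the standard Gaussian form of the \(-2\) log-likelihood, and it replaces the iterations of Theorem 2.1 by a plain grid search with golden-section refinement. The search range \([0,(\max x-\min x)^{2}]\) is a heuristic, and all function names are hypothetical.

```python
import math
from itertools import combinations


def profile_neg2_loglik(tau2, x, s2, hetero):
    """-2 log-likelihood at the weighted mean; studies in `hetero` get variance s2 + tau2."""
    var = [v + (tau2 if i in hetero else 0.0) for i, v in enumerate(s2)]
    w = [1.0 / v for v in var]
    mu = sum(wi * xi for wi, xi in zip(w, x)) / sum(w)
    value = sum((xi - mu) ** 2 / v + math.log(v) for xi, v in zip(x, var))
    return value, mu


def minimize_tau2(x, s2, hetero, grid=400, iters=60):
    """Grid search plus golden-section refinement; None if the minimum sits at tau2 = 0."""
    upper = (max(x) - min(x)) ** 2  # heuristic upper bound for tau2
    f = lambda t: profile_neg2_loglik(t, x, s2, hetero)[0]
    ts = [upper * j / grid for j in range(grid + 1)]
    j = min(range(grid + 1), key=lambda idx: f(ts[idx]))
    if j == 0:
        return None  # no strictly positive solution: reject this clustering
    lo, hi = ts[j - 1], ts[min(j + 1, grid)]
    g = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        a, b = hi - g * (hi - lo), lo + g * (hi - lo)
        if f(a) < f(b):
            hi = b
        else:
            lo = a
    return 0.5 * (lo + hi)


def best_k1_partition(x, s2):
    """Try every non-empty heterogeneity cluster I_1 (2^N - 1 candidates)."""
    best = None
    for m in range(1, len(x) + 1):
        for hetero in combinations(range(len(x)), m):
            t = minimize_tau2(x, s2, set(hetero))
            if t is None:
                continue
            value, mu = profile_neg2_loglik(t, x, s2, set(hetero))
            if best is None or value < best[0]:
                best = (value, hetero, t, mu)
    return best


if __name__ == "__main__":
    # Made-up data: the last study is far from the rest and reports a tiny variance.
    x = [0.0, 0.1, -0.1, 0.05, 5.0]
    s2 = [1.0, 1.0, 1.0, 1.0, 0.01]
    print(best_k1_partition(x, s2))
```

In this made-up data set the deviant study is the only one assigned to \(I_{1}\). Because the likelihood may be multimodal, a grid search is used here instead of a single local iteration; it trades speed for robustness to the starting value.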

In the simplest but important case, \(K=1\) (AREM.CL.\(K=1\)), we have to choose two clusters, one with zero heterogeneity \(I_{0}\), another its heterogeneous complement \(I_{1}\). Then one has to determine the likelihood defined by the conditions: for \(i\in I_{1}\), \(\tau^{2}_{i}=\tau^{2}\) with unknown but positive \(\tau^{2}\), while \(I_{0}\) corresponds to \(j\)’s for which \(\tau^{2}_{j}=0\). The maximum likelihood estimator of \(\mu\) is of the form (4) with \(\tau^{2}=\hat{\tau}^{2}\),

and \(\hat{\tau}^{2}=\hat{\tau}^{2}(I_{1})\) is a solution of the equation

To get the \(\mu\)-estimator for a fixed \(I_{1}\), it suffices to solve (10) choosing the true minimizer of \(\min_{\mu,\tau^{2}}L(\mu,\tau^{2})\) out of possibly several solutions with positive second derivative, i.e.,

When \(I_{0}=\emptyset\), \(I_{1}=\{1,\ldots,N\}\), one gets the setting of traditional REM with just one \(\tau^{2}\) and (9) presents a commonly used procedure to determine this parameter (e.g., Rukhin, 2019b). However in all examples the likelihood of this model cannot exceed that of models with non-empty \(I_{0}\) (see Section 4). The seemingly new convergence conditions (7) and (8) mean that in this situation \(\hat{a}_{1}^{2}<\hat{d}_{1}<2\sum_{I_{1}}(\hat{\tau}^{2}+s_{i}^{2})^{-2}+\hat{a}_{1}^{2}\). The likelihood of this case typically is smaller than that of some other AREM.CL.\(K=1\) models.

Another special case is the model AREM.CL.\(K=N-n_{0}\), with lower bounded variances, \(n_{k}\equiv 1,k\not\in I_{0}\). In this situation

Then the iteration scheme of Theorem 2.1 can be reduced to a one-dimensional problem involving only \(\hat{\mu}.\)

Theorem 2.2. A sufficient condition for the minimum in AREM.CL.\(K=N-n_{0}\) is that for any \(k\not\in I_{0},\)

A non-strict version of (12) and inequalities

form necessary conditions. The iteration scheme to find the maximum likelihood estimator

converges if (12) holds. Under condition (13) the maximum likelihood estimators \(\hat{\tau}^{2}_{k}=(x_{k}-\hat{\mu})^{2}-s_{k}^{2},k\not\in I_{0},\) are positive.
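The closed form \(\hat{\tau}^{2}_{k}=(x_{k}-\hat{\mu})^{2}-s_{k}^{2}\) is easy to evaluate once \(\hat{\mu}\) and \(I_{0}\) are available. The sketch below takes both as given inputs, since the iteration that produces \(\hat{\mu}\) is not reproduced here; the function name and the convention of returning only positive estimates as valid are assumptions made for illustration.

```python
def lower_bounded_tau2(x, s2, mu_hat, homogeneous):
    """Heterogeneity estimates for studies outside I_0 in the K = N - n_0 model.

    Returns {study index: tau2_hat}. A non-positive value signals that the
    positivity requirement fails for that study at this mu_hat.
    """
    estimates = {}
    for k, (xk, vk) in enumerate(zip(x, s2)):
        if k in homogeneous:
            continue  # studies in I_0 keep tau2 = 0
        estimates[k] = (xk - mu_hat) ** 2 - vk
    return estimates


if __name__ == "__main__":
    x = [0.0, 0.1, 5.0]
    s2 = [1.0, 1.0, 0.01]
    # mu_hat = 0.05 is an illustrative value, not a fitted estimate
    print(lower_bounded_tau2(x, s2, mu_hat=0.05, homogeneous={0, 1}))
```

With these estimates the fitted variance of each heterogeneous study equals its squared distance from \(\hat{\mu}\), which is why \(s_{k}^{2}\) acts as a lower bound.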

Similar results can be derived for restricted maximum likelihood estimators of \(\tau^{2}\) with \(K\) simultaneous equations

Define the \(K\)-dimensional vectors \(b\) and \(c\) by their coordinates

and

\(k=1,\ldots,K\). By substituting the restricted maximum likelihood estimators \(\tilde{\tau}_{k}^{2}\), one gets vectors \(\tilde{b},\tilde{c}\) as well as \(\tilde{a}\).

Theorem 2.3. For AREM.RL.\(K\) the Hessian corresponding to (15) has the form

\(C=\textrm{diag}(\tilde{c})\). A sufficient condition for the minimum of \(\widetilde{L}(\tau^{2}_{1},\ldots,\tau^{2}_{K})\) in (3) to be attained at \(\tilde{\tau}_{k}^{2},k=1,\ldots,K\) is that for all \(k\)

and

For any minimizer non-strict inequalities in (17) and (18) must hold. Under these conditions an iteration scheme to find positive restricted maximum likelihood estimators \(\tilde{\tau}^{2}_{k},\) \(k=1,\ldots,K,\)

\(\tilde{\mu}=\tilde{\mu}(\tilde{\tau}_{1}^{2},\ldots,\tilde{\tau}_{K}^{2})\), converges if (17) and (18) are valid.

Specification of Theorem 2.3 for AREM.RL.\(K=1\) and AREM.RL.\(K=N-n_{0}\) is given in Section 9.4; heuristic discussion of the conditions in Theorems 2.1–2.3 is postponed until Section 4.

3 CONDITION FOR GLOBAL EXTREMUM

In this section we give a necessary condition for attaining the global extremum of (2) over all clusters \(I_{0},I_{1},\ldots,I_{K}\).

Given the partition \(I_{0},I_{1},\ldots,I_{K}\), consider \(L(\tilde{\mu},\tau_{1}^{2},\ldots,\tau_{K}^{2})\) as a function of \(\tau_{1}^{2},\ldots,\tau_{K}^{2}\). Let

\[S=\sum_{m=0}^{K}\sum_{i\in I_{m}}(\tau_{m}^{2}+s_{i}^{2})^{-1},\qquad(19)\]

so that \(\tilde{\mu}=\sum_{m}\sum_{I_{m}}x_{i}(\tau_{m}^{2}+s_{i}^{2})^{-1}/S\).

For a fixed \(q=0,\ldots,K\), choose any \(n\in I_{q}\) which is moved from \(I_{q}\) to \(I_{p}\) and put \(\bar{I}_{q}=I_{q}\!\setminus\!\{n\}\) with the set \(I_{p}\) (\(p\neq q\)) replaced by \(\bar{I}_{p}=I_{p}\,\bigcup\,\{n\}\). Let \(\bar{I}_{k}=I_{k},k\neq p,q\), and denote the similar modification of \(\tilde{\mu}\) by

\(\Delta=(\tau_{p}^{2}+s_{n}^{2})^{-1}-(\tau_{q}^{2}+s_{n}^{2})^{-1}.\) In this notation one has for any \(\tau^{2}_{k},k=1,\ldots,K\)

To prove (21) write the sum in its left-hand side as

and employ (20) to simplify.

This formula and the representation (2) of the likelihood function lead to the following result.

Theorem 3.1. If in AREM.CL.\(K\) the clusters \(I_{0},I_{1},\ldots,I_{K}\) with estimates \(\hat{\tau}_{1}^{2},\ldots,\hat{\tau}_{K}^{2}\) and \(\hat{\mu}\) provide the global minimum of (2), then for any \(n\in I_{q}\) and \(p,0\leq p\neq q\leq K\), one must have

Here \(S\) is defined by (19) with \(\hat{\tau}_{1}^{2},\ldots,\hat{\tau}_{K}^{2}\) replacing \(\tau_{1}^{2},\ldots,\tau_{K}^{2}\).

According to Theorem 3.1 the distance between elements of heterogeneity clusters \(I_{k},k\geq 1\) and \(\hat{\mu}\) measured relative to \(\tau_{k}^{2}\) cannot be too small. Therefore the optimal homogeneity cluster \(I_{0}\) must consist of data points which are fairly close to the consensus estimate \(\hat{\mu}\).

4 EQUAL VARIANCES

To elucidate the conditions of Theorems 2.1–2.3 in this section we look at the simplest case \(K=1\) when all heterogeneity is in \(x\)’s, i.e., \(s_{i}^{2}\) are equal, \(s_{i}^{2}\equiv s^{2}\). Then \(\hat{\mu}=\bar{x}=\sum x_{i}/N\), \(\hat{\tau}^{2}=\max[0,\sum_{i}(x_{i}-\bar{x})^{2}/N-s^{2}]\). Provided that \(\hat{\tau}^{2}>0\),

which can be assumed smaller than \(\mathcal{L}(\{1,\ldots,N\},\emptyset)\). For a heterogeneity cluster \(I=I_{1}\) of cardinality \(M\),

where \(\omega=M(\tau^{2}+s^{2})^{-1}/[(N-M)s^{-2}+M(\tau^{2}+s^{2})^{-1}],\omega\leq\omega_{0}=M/N,\bar{x}_{I}=\sum_{i\in I}x_{i}/M,\bar{x}_{I^{c}}=\sum_{i\notin I}x_{i}/(N-M),N\geq 3\).

By putting \(\alpha_{I}=\sum_{i\in I}(x_{i}-\bar{x}_{I})^{2}/(Ms^{2})\), \(\beta_{I}=\sum_{i\notin I}(x_{i}-\bar{x}_{I^{c}})^{2}/[(N-M)s^{2}]\), \(\gamma_{I}=(\bar{x}_{I}-\bar{x}_{I^{c}})^{2}/s^{2}\), it is convenient to work with the difference between likelihoods

Here

The quantities \(a_{1}\) and \(d_{1}\) from Theorem 2.1 are such that

The condition (7) there means that at maximum likelihood solution

which means that \(\alpha_{I}\geq[\omega_{0}(2\omega-1)(1-\omega)][2(1-\omega_{0})\omega^{2}]\) and then the iteration algorithm is convergent. Condition (12) of Theorem 2.2 is much simpler, \(\omega<\frac{1}{2},\) indicating that the relative weight of increased variance cases cannot exceed one-half.
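The summary quantities of this section can be computed directly from their definitions. The sketch below evaluates the closed-form estimates \(\hat{\mu}=\bar{x}\), \(\hat{\tau}^{2}=\max[0,\sum_{i}(x_{i}-\bar{x})^{2}/N-s^{2}]\) and the statistics \(\omega,\omega_{0},\alpha_{I},\beta_{I},\gamma_{I}\) for a cluster \(I\) with \(1\leq M<N\). The function names are hypothetical, and the reading \(v=\sum_{i}(x_{i}-\bar{x})^{2}/(Ns^{2})\) is an assumption inferred from the restricted likelihood expression in this section.

```python
import math


def rem_equal_variance_mle(x, s2):
    """Closed-form ML estimates of (mu, tau2) in the traditional REM with equal s_i^2 = s2."""
    n = len(x)
    xbar = sum(x) / n
    tau2 = max(0.0, sum((xi - xbar) ** 2 for xi in x) / n - s2)
    return xbar, tau2


def cluster_summaries(x, s2, hetero, tau2):
    """Return (omega, omega0, alpha, beta, gamma) for heterogeneity cluster `hetero`."""
    n = len(x)
    inside = sorted(hetero)
    outside = [i for i in range(n) if i not in hetero]
    m = len(inside)
    mean_in = sum(x[i] for i in inside) / m
    mean_out = sum(x[i] for i in outside) / (n - m)
    alpha = sum((x[i] - mean_in) ** 2 for i in inside) / (m * s2)
    beta = sum((x[i] - mean_out) ** 2 for i in outside) / ((n - m) * s2)
    gamma = (mean_in - mean_out) ** 2 / s2
    w_het = m / (tau2 + s2)  # total weight of the heterogeneous studies
    omega = w_het / ((n - m) / s2 + w_het)
    return omega, m / n, alpha, beta, gamma


if __name__ == "__main__":
    r3 = math.sqrt(3.0)
    x = [-5 / (2 * r3), 1 / (2 * r3), 2 / r3]  # centered data with N = 3, s^2 = 1
    print(rem_equal_variance_mle(x, 1.0))
    print(cluster_summaries(x, 1.0, {0, 2}, tau2=0.73))
```

A useful sanity check is the analysis-of-variance identity \(v=\omega_{0}\alpha_{I}+(1-\omega_{0})\beta_{I}+\omega_{0}(1-\omega_{0})\gamma_{I}\), which holds for every cluster \(I\) under the stated reading of \(v\).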

The restricted likelihoods (up to a constant term) are \(\tilde{{\mathcal{L}}}(\emptyset,\{1,\ldots,N\})=(N-1)[1+\log(Nv/(N-1))],\) \(v>(N-1)/N\), and

In Theorem 2.3 one has \(b_{1}=\omega^{2}(\tau^{2}+s^{2})^{-2}\) and

so that the condition (17) means that

Since \(K=1\), (18) is equivalent to (17) and under this condition the iteration algorithm converges.

The situation with equal uncertainties is also helpful to find out if the classical random effects model (\(I_{0}=\emptyset\)) can have a higher likelihood than AREM.CL.\(K\) with a non-empty homogeneous data set. Define for any \(K\)

Conjecture. If \(\mathcal{L}\) is defined by (25) and \(K=1\), then

This conjecture holds in many practical examples. It is a feature of the model with lower bounded variances (Rukhin, 2019a). We confirm (26) here for the equal variances case for all \(N\geq 3,K=1\).

For \(0<\omega\leq\omega_{0},\) and \(v\geq 1\) given in (24) we define \(F_{I}(\omega;\alpha_{I},\beta_{I},\gamma_{I})\) as the left hand side of (23),

The goal is to show that for some \(M,1\leq M<N\) there is \(I\) such that \(\min_{0<\omega\leq\omega_{0}}F_{I}(\omega;\alpha_{I},\beta_{I},\gamma_{I})<0\). One has \(F_{I}(\omega_{0};\alpha_{I},\beta_{I},\gamma_{I})=v-\log(v)-1>0\) and \(\lim_{\omega\to 0}F_{I}(\omega;\alpha_{I},\beta_{I},\gamma_{I})=+\infty\). Thus the sought minimum cannot be attained close to the boundary.

For fixed \(I\) the desired minimizer \(\omega\) satisfies the cubic equation

If (28) does not have a solution \(\omega\) in the interval \((0,\omega_{0})\), for which the second derivative is positive then \(\min F_{I}(\omega;\alpha_{I},\beta_{I},\gamma_{I})=0\) and the homogeneity cluster \(I\) cannot have larger likelihood than \(\emptyset\). The existence of a solution implies that \(\alpha_{I}+\gamma_{I}(1-\omega_{0})^{2}>1\).

One can express \(\alpha_{I}\) through \(\omega\) and \(\gamma_{I}\) to get the inequality in terms of these two variables

which for fixed \(\omega\) allows one to find the region of \(\alpha_{I},\beta_{I},\gamma_{I}\), where (27) holds.

Indeed it suffices to look at the simplest situation when \(M=N-1\) in which case \(\beta_{I}=0\). If \(\min_{\omega}\sum_{I}F_{I}(\omega,v)\leq 0\) then \(\sum_{I}\min_{\omega}F_{I}(\omega,v)\leq 0\) so that for some \(I\), \(F_{I}(\omega,v)\leq 0\). To evaluate \(\min_{\omega}\sum_{I}F_{I}(\omega,v)\) averages of \(\alpha_{I}\) and \(\gamma_{I}\) are needed. These quantities can be obtained by summing over all \(M\)-element sets \(I\),

which vanishes when \(M=N-1\), and

Thus for \(M=N-1\) the inequality \(\min_{\omega}\sum_{I}F_{I}(\omega,\alpha_{I},0,\gamma_{I})<0\) means that (27) holds with \(\gamma_{I}=v/[(N-1)\omega_{0}(1-\omega_{0})]=v[N/(N-1)]^{2}\), \(\alpha_{I}=(N-2)v/[(N-1)\omega_{0}]=vN(N-2)/(N-1)^{2}\). The direct calculation given in Section 9.4 implies that this inequality holds for all \(N\geq 3\). Indeed the region where (29) does not hold is convex and \(\alpha_{I}^{0}=N(N-2)/(N-1)^{2}\) and \(\gamma_{I}^{0}=[N/(N-1)]^{2}\) provide its extreme point. Thus if \(\alpha_{I}-\alpha_{I}^{0}\geq\lambda_{N}(\gamma_{I}-\gamma_{I}^{0})\), (29) holds. Here \(\lambda_{N}\) denotes the slope of the boundary at \((\alpha_{I}^{0},\gamma_{I}^{0})\). It is shown in the Appendix that \(\lambda_{N}=(N^{2}-2N-2+(N-1)\sqrt{N^{2}-N-2})[N(N+2)]^{-1}\). Thus for \(N\geq 3\), \(\max_{I}\alpha_{I}-\alpha_{I}^{0}\geq\lambda_{N}(\min_{I}\gamma_{I}-\gamma_{I}^{0})\), and one can determine the optimal cluster \(I\) from the condition \(I^{c}=\{\arg\min_{k}\gamma_{k}\}\).

The counterpart of (27) for the restricted likelihood (up to a constant term) is

where

However this inequality typically does not hold. The simplest example is given by a centered data set: \(N=3,x_{1}-\bar{x}=-5/(2\sqrt{3}),x_{2}-\bar{x}=1/(2\sqrt{3}),x_{3}-\bar{x}=2/\sqrt{3},s^{2}=1\). Then the cluster \(\{1,2,3\}\) provides the restricted likelihood solution with \(\tilde{\tau}^{2}=7/4\). In contrast the maximum likelihood method chooses heterogeneity cluster \(I_{1}=\{2,3\}\) with a smaller variance estimate \(\hat{\tau}^{2}=0.73\).

Now we formulate the main results of this section.

Theorem 4.1. When \(s_{i}^{2}\equiv s^{2}\), for the optimal cluster \(I\), \(\omega=M(\tau^{2}+s^{2})^{-1}/[(N-M)s^{-2}+M(\tau^{2}+s^{2})^{-1}]\) solves cubic equation (28). For all \(N\geq 3\), (26) holds when \(M=N-1\), with \(I^{c}=\{i\},i=\arg\min_{k}\gamma_{k}\). For the restricted likelihood function the corresponding inequality does not hold.

The estimates of \(\tau^{2}\) obtained for \(I_{0}\neq\emptyset\) minimizing \(\mathcal{L}(I_{0},I_{0}^{c})\) are typically (much) larger than those derived from AREM.CL.\(K=1\). Indeed the inequality (27) cannot be true for \(\omega_{1}=\omega_{0}/[\omega_{0}+(1-\omega_{0})v]<\omega_{0}\), as \(\omega_{0}(1-\omega_{1})/[(1-\omega_{0})\omega_{1}]=v.\) Thus the minimizer \(\omega\) in (27) cannot exceed \(\omega_{1}\) which corresponds to \(\hat{\tau}^{2}\).

In the general case of arbitrary uncertainties one cannot restrict attention to the case \(M=N-1\). As a matter of fact the cluster leading to a larger likelihood value can correspond to any \(M,M=1,\ldots,N-1\). According to Theorem 3.1 all elements of the heterogeneity class must be far away from the consensus mean estimate.

5 MAXIMUM LIKELIHOOD DEGREE

The goal here is to give the formula for the degree of (polynomial) likelihood equations (11) representing the algebraic complexity of the problem. In the situation of the previous section this degree is three. Our formula is based on a different representation of the likelihood function (2),

which follows from the Lagrange identity. It will be assumed that all \(x\)’s in each cluster and all \(s\)’s are distinct (i.e., that the data is generic). This is a usual condition imposed when studying the maximum likelihood degree (cf. Gross et al., 2012) for a homogeneous variance component problem.

To represent the solutions of likelihood equations as those of polynomial equations let

be a polynomial of degree \(n_{1}+\cdots+n_{K}=N-n_{0}>0\). Then with \(S_{0}=\sum_{j\in I_{0}}s_{j}^{-2}\),

We also define two polynomials

\(\tau^{2}_{0}=0\), and

The degree of \(Q\) is \(N-\max(n_{0},2)\), that of \(R\) is \(N-\max(n_{0},1).\)

The scaled likelihood function \(L\) from (2) takes the form

and the likelihood equations for \(\tau^{2}_{\ell},\ell=1,\ldots,K\) become

For the restricted likelihood function \(\widetilde{L}=Q/R+\log R,\) these equations are simpler

Combining these facts with information about the degree of \(P,Q\), and \(R\), one gets the next result.

Theorem 5.1. The degree \(DL\) of the polynomial Eqs. (30) has the form

the degree \(RL\) of the Eqs. (31) is

6 TWO PRACTICAL EXAMPLES

In many applications the smallest reported values \(s_{k}^{2}\) often seem to belong to the outlying cases. Two examples are provided in this section; many more can be found in metrology literature.

6.1 Key Comparison CCL-K1

Length key comparison CCL-K1 was carried out to compare the deviations from nominal length of steel and tungsten carbide gauge blocks (Thalmann, 2002). The measurement results for one of these blocks, namely, the deviations from nominal length of a tungsten carbide gauge block of nominal length \(1\) mm reported by eleven participating national institutes, are given in Table 1.

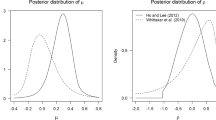

When \(K=1\), both maximum likelihood estimators give \(\hat{I}_{1}=\tilde{I}_{1}=\{6,7,8\}\), increasing the uncertainties of these three labs to \(23.61\), \(23.95\), and \(24.27\) nm (or to \(23.81\), \(24.12\), and \(24.47\) nm) with \(\hat{\tau}=22.75\) nm, \(\hat{\mu}=18.07\) nm. For the restricted maximum likelihood estimator \(\tilde{\tau}=22.76\) nm, \(\tilde{\mu}=18.09\) nm.

Cox (2007) analyzed the same data set with the conclusion that two laboratories \(6\) and \(7\) are to be excluded from the largest consistent subset which he defined to be formed by the studies for which \(\sum_{j}(x_{j}-\tilde{\mu})^{2}/s_{j}^{2}\) does not exceed the critical point of \(\chi^{2}\)-distribution for \(0.05\) significance level. This approach which gives the consensus deviation as \(20.3\) nm has been criticized by Toman and Possolo, 2009 and Elster and Toman, 2010.

The maximum of the likelihood function is not attained at \(I_{1}=\{6,7\}\), so that a better definition of the largest consistent subset is \(\hat{I}_{0}=\hat{I}_{1}^{c}=\{1,2,3,4,5,9,10,11\}\). The same homogeneity cluster persists when \(K=2\). The estimate \(\hat{\tau}_{1}=22.55\) nm is then replaced by \(\hat{\tau}_{1}=11.07\) nm and \(\hat{\tau}_{2}=26.70\) nm. The classical maximum likelihood estimator of \(\tau\) based on the cluster \(\hat{I}_{0}\) above is positive, but small: \(0.056\) nm; the commonly used DerSimonian–Laird estimator of this parameter vanishes so that \(\hat{I}_{0}\) looks homogeneous.

Larger values of \(K\) do not lead to substantial gains in the likelihood functions. The best (classical likelihood) choice for \(K=3\) recommends to remove from \(\hat{I}_{0}\) lab \(3\), i.e., to take \(\{1,2,4,5,9,10,11\}\) as a new consistent subset; that for the restricted likelihood is \(\{1,2,3,4,5,9,11\}\).

6.2 CIPM CCM.FF-K3 Study

Another example of discrepant data is the international fluid flow comparison of air speed measurement (CCM.FF-K3) (Terao et al., 2007). An ultrasonic anemometer chosen as a transfer standard was circulated between four national metrology institutes which reported calibration results at certain speeds. The (dimensionless) data given in Table 2 represent the ratio of the laboratory’s reference air speed to the one measured by the transfer standard.

Since the data is aberrant, the organizers of this study decided to use the median as an estimator of \(\mu\). When \(K=1\), the maximum likelihood estimator is \(\hat{I}_{0}=\{3\},\hat{I}_{1}=\{1,2,4\},\hat{\mu}=1.0193\) while the restricted maximum likelihood estimator gives \(\tilde{I}_{0}=\emptyset,\tilde{I}_{1}=\{1,2,3,4\},\tilde{\mu}=1.0138\). All increased uncertainties are about \(0.0137\) (MLE) (\(\hat{\tau}^{2}=1.81\times 10^{-4})\) or \(0.0118\) (REML) (\(\tilde{\tau}^{2}=1.49\times 10^{-4}\)), respectively. If \(K=2\), the maximum likelihood estimator practically remains the same, \(\hat{\mu}=1.020\), but the negative likelihood (2) is \(-36.35\) (attained at \(\hat{I}_{0}=\emptyset,\hat{I}_{1}=\{1,2\},\hat{I}_{2}=\{3,4\}\)) while the corresponding value is \(-35.45\) for \(K=1\).

For the restricted maximum likelihood estimator \(\tilde{I}_{0}=\{3\},\tilde{I}_{1}=\{2\},\tilde{I}_{2}=\{1,4\}\), \(\tilde{\mu}=1.020,\tilde{\tau}_{1}=0.0199,\tilde{\tau}_{2}=0.0087\), with the first increased uncertainty larger than that for \(K=1\), but the second one smaller. The negative restricted likelihood (3) decreases from \(-22.89\) to \(-23.52\). For \(K=2\) both \(\mu\)-estimators evaluated according to different likelihood methods turn out to be practically equal to \(1.0196\) (larger than the median \(1.0143\)).

Table 3 summarizes the results. It indicates that from the point of view of the information criteria AREM with \(K=2\) provides the best fit to the data. Then maximum likelihood estimators coincide, and \(\hat{I}_{0}=\{3\},\hat{I}_{1}=\{2\},\hat{I}_{2}=\{1,4\}.\) The value \(K=3\) gives only small gains in the likelihood.

7 SIMULATION RESULTS FOR INFORMATION QUANTITIES

The quantities (25) provide statistics for Akaike’s information criterion (AIC) or for the Bayesian information criterion (BIC) based on the classical or restricted likelihood (Claeskens and Hjort, 2008). Thus one can get the information criteria numbers for these models, e.g.,

and

In the corresponding formulas for \(AIC_{\textrm{AREM.RL}}\) and \(BIC_{\textrm{AREM.RL}}\) through \(\tilde{{\mathcal{L}}}(I_{0},\ldots,I_{K})\) one has to replace in the second term \(K+1\) by \(K\). These numbers are employed in Section 6 in two practical examples. Here we compare them numerically for AREM.\(K=1\) and AREM.\(K=N-n_{0}\).

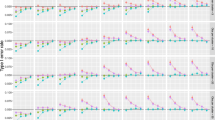

To compare the properties of the considered likelihood procedures we performed Monte Carlo simulations involving AREM when \(I_{0}^{c}=\{1,\ldots,M\}\) with \(M=1\) or \(2\), \(N=5\), \(K=1\). In these models \(x_{i}\sim N(0,\tau^{2}+s_{i}^{2}),i=1,\ldots,M\) and \(x_{j}\sim N(0,s_{j}^{2})\) for \(j\geq M+1\). The variances \(s_{k}^{2}\) were obtained from realizations of standard exponential random variables for \(50\,000\) Monte Carlo runs.
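One Monte Carlo draw from the design just described can be generated as in the sketch below. It is an illustration only: the function name, the seeding, and the use of Python's standard `random` module are choices made here and say nothing about how the reported simulations were implemented.

```python
import math
import random


def simulate_arem(N=5, M=1, tau2=1.0, rng=None):
    """Draw one data set: the first M studies are heterogeneous, the rest satisfy FEM.

    s_k^2 are standard exponential; x_i ~ N(0, tau2 + s_i^2) for i < M and
    x_j ~ N(0, s_j^2) otherwise (indices are zero-based here).
    """
    rng = rng or random.Random()
    s2 = [rng.expovariate(1.0) for _ in range(N)]
    x = [
        rng.gauss(0.0, math.sqrt(s2[i] + (tau2 if i < M else 0.0)))
        for i in range(N)
    ]
    return x, s2


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed so the draw is reproducible
    x, s2 = simulate_arem(N=5, M=2, tau2=3.0, rng=rng)
    print(x)
    print(s2)
```

Repeating the draw and recording how often a selection rule recovers the first \(M\) indices as the heterogeneity cluster gives the kind of identification frequency discussed in this section.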

Figure 1 displays the percentage of correct identification by the information criteria \(AIC_{\textrm{AREM.CL}}\) and \(AIC_{\textrm{AREM.RL}}\). The Bayesian information criteria \(BIC_{\textrm{AREM.CL}}\) and \(BIC_{\textrm{AREM.RL}}\) are not reported as their values differ only by a constant.

This quantity ranges from \(0.12\) (\(\tau^{2}=0)\) to \(0.31\) (\(\tau^{2}=3)\) for AREM.CL when \(M=1\) which is reasonable in view of the total number of models \((2^{N}-1=31)\). When \(M=2\) it grows from \(0.02\) to \(0.17\). The probabilities of the correct choice behave similarly for \(K=N-n_{0}\) but are somewhat smaller e.g., when \(M=1\) they increase from \(0.09\) to \(0.21\), and from \(0.02\) to \(0.16\) (\(M=2\)).

Neither classical nor restricted likelihood procedures perform well in the case of the traditional REM (\(M=N,K=1\)) favoring AREM with smaller \(M\).

8 DISCUSSION

This work proposes a class of models to handle discrepant heterogeneous data in research synthesis. The traditional random effects model is extended to allow for different heterogeneity variance values. The algorithms for determination of these values along with the convergence conditions are presented. The procedures are based on the Gaussian likelihood although this distribution can be replaced by a non-normal location/scale parameter family. However the underlying density cannot be well estimated because usually the data is too scarce.

The suggested approach prescribes additional error to some outlying studies whose summary results are given larger uncertainties but still enter the final answer. Typically the uncertainty enlargements apply only to a few cases. The straightforward iterative numerical algorithms to evaluate the maximum likelihood estimators can be employed for a small or moderate number of studies. If there are many studies, the likelihood calculations become impractical but the present methodology still can be used provided that the sizes \(n_{k}\) of heterogeneity clusters \(I_{k},k=1,\ldots,K\) are small.

We do not recommend more than two clusters unless there are good practical reasons to believe in so many categories. Then the \(K=N-n_{0}\) model can be implemented. The largest consistent subset obtained when \(K=1\) or \(2\) as a rule gets smaller if \(K\) increases while the chance of the correct model identification diminishes.

REFERENCES

M. Borenstein, L. Hedges, J. Higgins, and H. Rothstein, Introduction to Meta-Analysis (Wiley, New York 2009).

G. Claeskens and N. Hjort, Model Selection and Model Averaging (Cambridge University Press, Cambridge, UK, 2008).

M. G. Cox, ‘‘The evaluation of key comparison data: determining the largest consistent subset,’’ Metrologia 44, 187–200 (2007).

C. Elster and B. Toman, ‘‘Analysis of key comparisons: estimating laboratories biases by a fixed effects model using Bayes model averaging,’’ Metrologia 47, 113–119 (2010).

R. L. Graham, D. E. Knuth, and D. Patashnik, Concrete Mathematics: A Foundation for Computer Science (2nd ed. Addison Wesley, Reading, MA 1994).

E. Gross, M. Drton, and S. Petrovic, ‘‘Maximum likelihood degree of variance component models,’’ EJS 6, 983–1016 (2012).

A. B. Haidich, ‘‘Meta-analysis in medical research,’’ Hippokratia 14 (Suppl. 1), 29–37 (2010).

J. Higgins, S. Thompson, and D. J. Spiegelhalter, ‘‘A re-evaluation of random effects meta-analysis,’’ J. Royal Statist. Soc. Ser. A 172, 137–159 (2009).

D. C. Hoaglin, ‘‘Misunderstandings about Q and ‘Cochran’s Q test’ in meta-analysis,’’ Statist. Med. 35, 485–495 (2016).

E. Kulinskaya and I. Olkin, ‘‘An overdispersion model in meta-analysis,’’ Statist. Model. 14, 49–76 (2014).

D. Langan, J. Higgins, D. Jackson, J. Bowden, A. A. Veroniki, W. Kontopantelis, W. Viechtbauer, and M. Simmonds, ‘‘A comparison of heterogeneity variance estimators in simulated random-effects meta-analyses,’’ Res. Synth. Meth. 10, 83–98 (2019).

K. Lee and S. Thompson, ‘‘Flexible parametric models for random-effects distributions,’’ Statist. Med. 27, 418–34 (2008).

D. I. Ohlssen, L. D. Sharpless, and D. J. Spiegelhalter, ‘‘Flexible random-effects models using Bayesian semi-parametric models: Applications to institutional comparisons,’’ Statist. Med. 26, 2088–112 (2007).

J. M. Ortega and W. C. Rheinboldt, Iterative Solutions of Nonlinear Equations in Several Variables (SIAM, Philadelphia 2000). https://doi.org/10.1137/1.9780898719468

A. L. Rukhin, ‘‘Maximum likelihood and restricted maximum likelihood solutions in multiple-method studies,’’ J. Res. Nat. Inst. Stand. Techn. 116, 539–56 (2011).

A. L. Rukhin, ‘‘Estimating heterogeneity variances to select a random effects model,’’ J. Statist. Plan. Inf. 202, 1–13 (2019a).

A. L. Rukhin, ‘‘Homogeneous data clusters in interlaboratory studies,’’ Metrologia 56, 874–882 (2019b).

Y. Terao, M. van der Beek, T. T. Yeh, and H. Müller, ‘‘Final report on the CIPM air speed key comparison (CCM.FF-K3),’’ Metrologia 44, Tech. Supp. 07009 (2007).

R. Thalmann, ‘‘CCL key comparison: calibration of gauge blocks by interferometry,’’ Metrologia 39, 165–177 (2002).

S. Thompson and S. Sharp, ‘‘Explaining heterogeneity in meta-analysis: a comparison of methods,’’ Statist. Med. 18, 2693–708 (1999).

B. Toman and A. Possolo, ‘‘Laboratory effects models for interlaboratory comparisons,’’ Accred. Qual. Assur. 14, 553–563 (2009).

Appendices

PROOF OF THEOREM 2.1

Proof. Since for \(\tilde{\mu}\) defined by (4)

the diagonal elements \(h_{kk}\) of the Hessian are \(\partial^{2}L/\partial\tau_{k}^{4}=d_{k}-a_{k}^{2},\) its off-diagonal elements have the form: \(h_{k\ell}=\partial^{2}L/[\partial\tau_{k}^{2}\partial\tau^{2}_{\ell}]=-a_{k}a_{\ell}\), \(k\neq\ell.\) Thus (6) holds, and the matrix

is positive definite if and only if (7) is valid.
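The structure of this Hessian, a diagonal matrix with entries \(d_{k}\) minus the rank-one term \(aa^{T}\), can be checked numerically. The sketch below assumes that (7) plays the role of the standard criterion for such matrices, namely that all \(d_{k}>0\) and \(\sum_{k}a_{k}^{2}/d_{k}<1\). It compares this scalar test with a direct Cholesky test of positive definiteness. The function names and the numerical values are hypothetical.

```python
def is_positive_definite(matrix):
    """Attempt a Cholesky factorization; it succeeds only for positive definite input."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][m] * lower[j][m] for m in range(j))
            if i == j:
                pivot = matrix[i][i] - partial
                if pivot <= 0.0:
                    return False
                lower[i][i] = pivot ** 0.5
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    return True

def hessian(d, a):
    """Matrix with diagonal d_k - a_k^2 and off-diagonal entries -a_k * a_l."""
    n = len(d)
    return [[(d[k] if k == l else 0.0) - a[k] * a[l] for l in range(n)]
            for k in range(n)]

def rank_one_criterion(d, a):
    """Scalar test: all d_k > 0 and sum of a_k^2 / d_k below 1 (assumed form of (7))."""
    return all(dk > 0.0 for dk in d) and sum(ak * ak / dk for ak, dk in zip(a, d)) < 1.0

# Hypothetical values: the first pair satisfies the criterion, the second violates it.
good = ([4.0, 9.0], [1.0, 1.5])
bad = ([1.0, 1.0], [0.8, 0.8])
results = [(rank_one_criterion(d, a), is_positive_definite(hessian(d, a)))
           for d, a in (good, bad)]
```

In both cases the scalar test and the factorization agree, so only \(K\) ratios need to be evaluated instead of a \(K\times K\) factorization.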

The vector function \(\psi=(\psi_{1},\ldots,\psi_{K})\) of \(\tau^{2}_{1},\ldots,\tau^{2}_{K},\) with

defines iterations (9): \(\psi(\tau^{2}_{1},\ldots,\tau^{2}_{K})\) \(=(\tau^{2}_{1},\ldots,\tau^{2}_{K}).\) In these iterations \(\mu=\tilde{\mu}(\tau^{2}_{1},\ldots,\tau^{2}_{K})\), as in (4). The conditions (7) and (8) mean that the spectral radius of the Jacobian \(\psi^{\prime}\) evaluated at \(\hat{\tau}^{2}_{1},\ldots,\hat{\tau}^{2}_{K}\) is smaller than \(1\), which is a sufficient condition for the convergence of the iteration process (9) defined by \(\psi\) (cf. Ortega and Rheinboldt, 2000). \(\Box\)

PROOF OF THEOREM 2.2

Proof. According to (5) one has for stationary points \((x_{k}-\hat{\mu})^{2}=\hat{\tau}^{2}_{k}+s_{k}^{2}.\) It follows that \(\hat{d}_{k}=(\hat{\tau}_{k}^{2}+s_{k}^{2})^{-2}\) and \(\hat{a}_{k}^{2}/\hat{d}_{k}=2(\hat{\tau}^{2}_{k}+s_{k}^{2})^{-1}[\sum_{\ell}(\hat{\tau}_{\ell}^{2}+s_{\ell}^{2})^{-2}+\sum_{j}s_{j}^{-2}]^{-1}\). Therefore condition (7) in this situation means that (12) is valid.

The (scalar) iteration function is now given by (14) and its (positive) derivative at \(\hat{\mu}\),

is smaller than \(1\) if and only if the first inequality in (12) holds. \(\Box\)
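A scalar iteration of this kind can be sketched from the stationarity relation \((x_{k}-\hat{\mu})^{2}=\hat{\tau}^{2}_{k}+s_{k}^{2}\) used in the proof. The code below is an illustration under assumptions and is not claimed to reproduce (14) exactly. Each study in the enlarged-variance set receives the weight \(1/(x_{k}-\mu)^{2}\), truncated so that \(\tau_{k}^{2}\) stays nonnegative, and the remaining studies keep the weights \(1/s_{j}^{2}\). The function name, the truncation and the toy data are hypothetical.

```python
def iterate_mu(x_out, s2_out, x_in, s2_in, mu0, tol=1e-12, max_iter=200):
    """Fixed-point iteration on mu; returns (mu, list of tau_k^2)."""
    mu = mu0
    for _ in range(max_iter):
        # Stationarity gives tau_k^2 + s_k^2 = (x_k - mu)^2; truncating at s_k^2
        # keeps tau_k^2 >= 0 (an assumption of this sketch).
        w_out = [1.0 / max((xk - mu) ** 2, vk) for xk, vk in zip(x_out, s2_out)]
        w_in = [1.0 / vj for vj in s2_in]
        num = (sum(w * xk for w, xk in zip(w_out, x_out))
               + sum(w * xj for w, xj in zip(w_in, x_in)))
        mu_new = num / (sum(w_out) + sum(w_in))
        converged = abs(mu_new - mu) < tol
        mu = mu_new
        if converged:
            break
    tau2 = [max((xk - mu) ** 2 - vk, 0.0) for xk, vk in zip(x_out, s2_out)]
    return mu, tau2

# Toy data (hypothetical): one deviant study with a small reported variance.
mu_hat, tau2_hat = iterate_mu([12.0], [0.0025], [10.0, 10.1, 9.9], [0.01] * 3, mu0=10.0)
```

With these values the iteration settles after a few steps close to the weighted mean of the consistent studies, and the heterogeneity variance of the deviant study absorbs almost all of its squared deviation.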

PROOF OF THEOREM 2.3 AND COMMENTS

Proof. Now the elements of the Hessian \(\tilde{H}\) have the form

where \(\delta_{k\ell}\) is the Kronecker symbol. Thus (16) holds. Provided that \(\tilde{c}_{k}>0,\) \(\tilde{H}\) is positive definite if and only if the similar matrix \(I-C^{-1/2}\tilde{a}\tilde{a}^{T}C^{-1/2}-C^{-1/2}\tilde{b}\tilde{b}^{T}C^{-1/2}\) is. The two eigenvalues \(\lambda\) of this matrix, which are different from \(1,\) solve the equation

and they are positive when and only when (18) holds. \(\Box\)

For AREM.RL.\(K=1\) the restricted maximum likelihood estimator of \(\tau^{2}\) satisfies (15) and the inequality \(\tilde{a}_{1}^{2}+\tilde{b}_{1}^{2}<\tilde{c}_{1}\) which implies (18).

For AREM.RL.\(K=N-n_{0}\) all stationary points \(z_{k}=z_{k}(\mu,\zeta)=[(x_{k}-\mu)^{2}+1/(\zeta+\sum s_{j}^{-2})]^{-1}\) depend only on two variables \(\mu\) and \(\zeta=\sum(\tilde{\tau}_{k}^{2}+s_{k}^{2})^{-1}\). The two-dimensional iteration function \(\Psi=(\mu,\zeta)\) in these variables,

defines an iteration method which converges under conditions (17) and (18). Indeed in this case \(\tilde{c}_{k}=(\tilde{\tau}_{k}^{2}+s_{k}^{2})^{-2}\), and for the \(2\times 2\) Jacobian \(\Psi^{\prime}\) evaluated at \(\tilde{\tau}^{2}_{1},\ldots,\tilde{\tau}^{2}_{K}\) one has

Therefore (17) implies that the (positive) diagonal elements of \(\Psi^{\prime}\) cannot exceed \(1\), while (18) means that \(\textrm{tr}(\Psi^{\prime})<1+\det(\Psi^{\prime})\). It follows that the spectral radius of \(\Psi^{\prime}\) is smaller than \(1\).
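The role of the trace and determinant inequality can be illustrated for a generic \(2\times 2\) matrix. The sketch below uses the standard Jury (Schur-Cohn) test, \(|\textrm{tr}|<1+\det\) together with \(\det<1\), which is equivalent to both eigenvalues lying inside the unit disc. This is a general criterion brought in for illustration, and the argument above relies in addition on the specific form of \(\Psi^{\prime}\). The matrices and function names are hypothetical.

```python
import cmath

def spectral_radius_2x2(m):
    """Largest eigenvalue modulus of a 2x2 matrix, from its characteristic polynomial."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    root = cmath.sqrt(tr * tr - 4.0 * det)
    return max(abs((tr + root) / 2.0), abs((tr - root) / 2.0))

def jury_stable(m):
    """Jury test for a 2x2 matrix: |tr| < 1 + det and det < 1."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return abs(tr) < 1.0 + det and det < 1.0

# Both matrices have positive diagonal elements below 1, but only the first
# satisfies tr < 1 + det.
contracting = [[0.5, 0.2], [0.1, 0.4]]
expanding = [[0.9, 0.5], [0.5, 0.9]]
radii = (spectral_radius_2x2(contracting), spectral_radius_2x2(expanding))
```

The second matrix shows that a bound on the diagonal elements alone does not suffice: its spectral radius exceeds \(1\) because the trace inequality fails.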

PROOF OF THEOREM 4.1

Proof. For fixed \(N,M=N-1,I\) let \(\Phi(\alpha,\gamma)=F_{I}(\omega,\alpha,0,\gamma)\) where \(\omega=\omega(\alpha,\gamma)\) is the solution of (28). We start with the derivatives

Since \(\partial F_{I}(\omega,\alpha,0,\gamma)/\partial\omega=0\), one has

The second derivatives depend on \(\omega^{\prime}_{\alpha},\omega^{\prime}_{\gamma},\)

If the function \(\phi(\gamma)\) is defined by the equation \(\Phi(\phi(\gamma),\gamma)=0\) then

and \(\phi^{\prime}(\gamma)\) vanishes when \(\omega=\omega_{0}/v\). Thus \(\phi(\gamma)\) increases when \(\gamma\leq\tilde{\gamma}\) and then (sharply) decreases until it vanishes at \(\bar{\gamma}\) such that

where

(As \(N\) increases \(\bar{\gamma}\) grows very fast: \(\log\bar{\gamma}\sim N\log N\). The same asymptotic behavior governs \(\tilde{\gamma}\).)

The second derivative

is negative when \(\gamma^{0}=N/(N-1)^{2}\leq\gamma\leq\tilde{\gamma}\). Because of the concavity of the function \(\phi(\gamma)\), the region where \(\Phi(\alpha,\gamma)\geq 0,\gamma^{0}\leq\gamma\leq\tilde{\gamma}\), is convex.

It remains to evaluate the Hessian of \(\Phi\) at \((\alpha^{0},\gamma^{0})\),

which leads to the formula for the slope

\(\Box\)

Rukhin, A.L. Selecting an Augmented Random Effects Model. Math. Meth. Stat. 29, 197–212 (2020). https://doi.org/10.3103/S1066530720040043
