Abstract

Report 1 of a three-part review examines the conceptual formulation and relevance of the problem, including the weak penetration of specific methodologies for proving causation into the experimental and descriptive disciplines that study the effects of the radiation factor on living organisms. The philosophical and scientific concepts needed to understand the meaning, essence, and practical applicability of the criteria (rules, principles) for establishing the truth of associations revealed in medical and biological disciplines are presented. Five types of definitions of causes and causality were found, ranging from the simplest explanatory one (“by production”) to more complex ones for deterministic and stochastic effects (necessary and sufficient causes, component causes, probabilistic causes, and counterfactual causes). Many of these definitions originate from famous philosophers (mostly D. Hume). A selection of statements revealing the scientific, practical, and social goals of epidemiology and other causality studies important for human life and activity is presented. These goals are primarily related to proving the truth of the revealed dependences of effects on agents and impacts; however, the methods for achieving them can rest on different rules and ethical foundations, depending on whether the task is scientific or social. In the second case, the “precautionary principle” is used, and the research norms developed for the scientific community are simplified, in many respects being replaced by the prevention or at least reduction of risks, even if the reality of those risks lacks strict scientific evidence. Examples of false but statistically significant associations from various biomedical and social spheres (including estimates of the effects of radiation exposure) caused by confounding factors are presented. These examples indicate the need for standardized criteria for assessing the truth of causality.

INTRODUCTION

The essence of the biomedical concept of causality is not well known and is rarely considered in experimental and descriptive disciplines related to the effects of ionizing and nonionizing radiation (radiation biophysics, radiobiology, radiation medicine, radiation epidemiology, radiation hygiene, etc.). Besides the extensive experience of the author of this study, this is evidenced by the content of voluminous domestic specialized manuals on the listed radiation disciplines. Of note is the small number of sources identified through PubMed using specific thematic keyword combinations (for example, “Bradford-Hill criteria & radiation” or “Hill’s criteria & radiation”). The basic epidemiological concept of causality and the criteria for the truth of statistical associations remain little in demand in areas that could have a cardinal effect even on the very existence of society (due to the spread of the radiation factor during peacetime and, probably, during wartime). Moreover, they are apparently little known to most researchers (at least in Russia).

This three-part review attempts to fill the indicated gap. Report 1 considers the general concept that determined the formulation of the problem, well-known philosophical and scientific definitions of causes and causality, and the scientific and social goals of research, and lists examples of false associations due to confounding factors.

CONCEPTUAL FORMULATION OF THE PROBLEM

The ultimate goal of descriptive and experimental natural science disciplines is to confirm the existence of certain events and/or to establish their relationship with the causes that produced them [1, 2]. In this view of the philosophy of science, the task of biomedical areas is to identify the causes of phenomena (cases) such as a disease [2], or, more generally, of health-related states or events in populations [3, 4]. The principle of causality seems obvious in everyday terms; it underlies the first steps of science (de nihilo nihil, “nothing comes from nothing”) (note 1) [5] (the list of notes follows the main text).

However, what is an event, or a case? The question may seem strange, and it is hardly considered in the literature on the causality of biomedical effects (‘health effects’). According to an explanatory dictionary, an “event” or “case” is what has happened or taken place; it is a fact [6]. Let us try to imagine different events in medicine and biology. On the one hand, an event and fact is, for example, a case of an infectious disease or a radiation burn in a particular individual who has undergone the corresponding exposure. A case is also a certain change at the molecular/cellular level if the magnitude of the impact exceeds a certain threshold. In other words, a case in this sense is what has happened to a specific, discrete biological object. On the other hand, if we take a population of people exposed to, for example, radiation or chemical agents in significant doses, we can observe an increase in the incidence of cancer, yet cancer does not necessarily occur in any specific individual [2–5]. It is also possible to irradiate a cell culture with a very large dose and observe the death of most cells, but individual cells will still survive due to interscreening and other unpredictable effects [7]. As with the human population, it will never be possible to say exactly which cells will die and which will survive. Is this an event equivalent to those mentioned above, given that it does not necessarily happen to any single observable object under any conditions? Probably yes, since it is also a fact, i.e., a consequence of what is now a stochastic effect. The causes of these two kinds of events may turn out to be of different natures. According to, for example, the study [8], they may be deterministic or probabilistic (randomness is also assumed).

Another logical question arises: what can be called the cause of an event? Again, from the everyday point of view everything seems clear and, at the same time, nothing is clear. According to the same explanatory dictionary, “a cause is a phenomenon that produces, determines the appearance of another phenomenon” [6]. It is noted in [9], with reference to [10], that “… most researchers would find it difficult to define the words in any but a circular fashion; causes are conditions and events that produce effects, and effects are conditions and events produced by causes” (note 2).

In previous centuries, famous philosophers nevertheless tried to define cause and causality more precisely [2, 5, 11]. The results were far from unambiguous (more details are given below). A special 2001 study [8] of sources containing definitions, covering the period from 1966 onward, demonstrated, first, that “causality” appeared as a MeSH term in PubMed only in 1990 (although the similar term ‘causation’ can already be found in scientific sources of the 19th century [12]). Second, there are as many as five categories of causality [5, 8] (they are discussed below). However, as indicated in [5], the definitions found in the literature are not enough on their own to provide a basis for understanding the causes of pathology. In his 1965 publication on the criteria of causality, which remains seminal to this day (for example, [2, 4, 5, 10, 12]), the English statistician Sir Austin Bradford Hill (1897–1991) stated at the outset, “I have no wish, nor the skill, to embark upon a philosophical discussion of the meaning of ‘causation’” [13] (note 3).

Another founder of causality principles, Mervyn Wilfred Susser (1921–2014; the United States) (note 4), defined the cause as “… any factor, whether event, characteristic, or other definable entity, so long as it brings about change for better or worse in a health condition” [17] (cited from [9]) (note 5). Later, by 1991 [11], M. Susser rethought the definition of a cause in a pragmatic sense as simply “… something that makes a difference” (note 6). Thus, everything returned to the level of the explanatory dictionary.

The next question is as follows: is it always clear which is the cause and which is the effect? Is it always obvious “which came first, the chicken or the egg?” At first glance, this is mere sophistry; however, the situation changes if we turn to the phenomenon of “reverse causation” [18]: a phenomenon that appears to be the result of a certain cause is not actually produced by it but stems from its own earlier sources, which themselves determined this very “cause” (this definition is ours) (note 7). Although there are plenty of examples, the relevant ones here are those associated with the radiation factor. More than a dozen epidemiological studies conducted over the past five to six years have shown an increased risk of cancer and/or leukemia after computed tomography (CT; see the list of sources in [19, 20]). However, an analysis of the specifics by John Boice, Jr., apparently the leading US radiation epidemiologist, led to the conclusion that the confounding factor of “reverse causation” played the leading role: CT was more often performed in those already suspected of having malignant neoplasms [19]. This viewpoint is also shared by UNSCEAR [21, 22]. Similarly, cases of thyroid cancer after therapeutic radioiodine are attributed not to the radiation factor but to the initial noncancerous pathologies that prompted the therapy (since hyperthyroid states, benign neoplasms, etc., increase the risk of thyroid cancer) [23].

Finally, how can we not only simply determine as strictly and scientifically as possible, but also prove that something (an event, factor, impact) was the cause of one or another phenomenon, which is important in the biological, epidemiological, medical, environmental, or social respects [2, 5, 8–11]? The common scientific idea implies that one should identify the association, establish the magnitude and statistical significance of correlation, and also show the presence of a dose-effect relationship (rarely something more). The world of experimental biology and medicine and descriptive and statistical epidemiology (together with sociology, which contributes to epidemiology [2, 9, 11, 17]) is a world of associations and correlations; they fill scientific journals, often as the last word of evidence. This problem concerns molecular disciplines and population studies. It is unlikely to be a mistake to say that the vast majority of researchers in the field of biomedical effects of various exposures (including radiation) imagine the scientific evidence of causality just in this way. This applies, not only to Russia and neighboring countries, but also to a significant part of the rest of the world, which was shown, in particular, by mass alarmist conclusions based only on identified associations in the period after the Chernobyl nuclear power plant accident [24, 25].

Meanwhile, association does not necessarily mean causation (causality) [13]. Everyone has an intuitive sense of this; however, intuition often fails to help in scientific research. The sun does not rise because the rooster has crowed. The frequency of deaths from drowning [26, 27] or from homicide [28] is in no way causally related to the level of ice cream consumption, no matter how strong, statistically significant, and distinct the correlations are, perhaps even with a “dose dependence” [26–28]. Male sex does not determine mortality from lung cancer, whatever the statistics may suggest [5, 29] (note 8). And there may be more than a dozen associations of this type (a number of them are discussed below).
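
The ice cream example can be made concrete with a small simulation. The sketch below is only an illustration under stated assumptions: air temperature is taken as a hypothetical common cause (confounder) of both ice cream consumption and drownings, and all coefficients, noise levels, and function names are invented for the example rather than taken from [26–28]. The crude correlation between the two outcomes is strong, but it disappears once the linear effect of temperature is removed from both.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def residuals(y, z):
    """Residuals of y after a least-squares straight-line fit on z."""
    n = len(y)
    mz, my = sum(z) / n, sum(y) / n
    slope = (sum((a - mz) * (b - my) for a, b in zip(z, y))
             / sum((a - mz) ** 2 for a in z))
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]


def simulate(n=5000, seed=0):
    """Return (crude, adjusted) correlations of ice cream vs. drownings.

    Assumption: temperature drives both variables; neither affects the other.
    """
    rng = random.Random(seed)
    temperature = [rng.uniform(0.0, 35.0) for _ in range(n)]
    ice_cream = [2.0 * t + rng.gauss(0.0, 5.0) for t in temperature]
    drownings = [0.5 * t + rng.gauss(0.0, 2.0) for t in temperature]
    crude = pearson(ice_cream, drownings)
    # Adjust for the confounder: correlate what is left after removing it.
    adjusted = pearson(residuals(ice_cream, temperature),
                       residuals(drownings, temperature))
    return crude, adjusted


if __name__ == "__main__":
    crude, adjusted = simulate()
    print(f"crude r = {crude:.2f}, temperature-adjusted r = {adjusted:.2f}")
```

In this toy setting even a clear “dose dependence” of drownings on ice cream consumption would be observed, although by construction there is no causal link between them.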

Besides philosophers [2, 5, 11], researchers in the field of biomedical effects have long been aware of this problem (recall the establishment of a “causal relationship” in the above-cited 19th-century publication [12]). A set of simple criteria appeared for establishing the causation of the infectious diseases that were then particularly significant (the Henle–Koch criteria, or postulates; F.G. Jacob Henle, R. Koch, 1877, 1882), although these did not prove to be absolute [2, 9, 16, 32–34]. As for noninfectious pathologies, especially stochastically induced ones, medicine and biology spent centuries in the dark: generally accepted approaches to establishing the truth of associations were absent until the 1950s–early 1960s, when strong evidence of at least the harm of smoking was at long last obtained.

Conjectures that smoking might lead to lung cancer appeared in the late 19th century; in 1912, they took shape in a specific hypothesis about smokers (Isaac Adler; the United States), which gained strength in the 1920s–1940s, when the first evidence-based, though still imperfect, studies were carried out using the case-control method (1939 and 1943; Germany). By the early 1960s, all doubts had disappeared: dozens of retrospective and prospective studies had been accumulated all over the world [35] (note 9). This led to the realization that certain rules, criteria, or guiding principles were needed to confirm the truth and plausibility of detected associations for noninfectious pathologies. In the 1950s, a number of authors formulated individual principles or criteria [11] (note 10); five of them were included in the seminal 1964 report of the Surgeon General (Chief Physician [33]) of the United States, with its accompanying document, on the consequences of smoking [43] (note 11). Then, in 1965, the already mentioned brief seminal publication of A.B. Hill appeared [13], which contained nine criteria (the well-known “Hill criteria” or “Bradford–Hill criteria” [2, 5, 11, 15, 16, 29, 32–34, 36–42, 44–46]). These criteria (or “guidelines”) will be analyzed in the following reports; here we note only that Hill added just one criterion, and the rest can be found in the works of earlier authors.

The first above-mentioned monograph on the criteria of causality [17] was published in 1973; author M. Susser claimed to have developed these criteria independently [11]. An exhaustive set of 10 criteria (later they began to be called “postulates” [15, 16]) of causality for pathologies of all types appeared in 1976 (‘unified concept’ [34]). These postulates were formulated by Alfred Evans (Alfred Spring Evans; 1917–1996; the United States) [34, 45].

Since then, as stated in [9], “these guidelines have generated a talmudic literature on their nature, logic, and application” (note 12). Our study has fully confirmed this statement. One can find sources listing these criteria (but without much analysis, i.e., not “talmudically”) even in the Russian-language literature, both translated and original [32, 33], and fairly widely in RuNet. Over the many decades since the 1960s, the clarity of using the criteria has somewhat faded owing to attempts to narrowly regulate and specify various concepts, as well as to sometimes scholastic criticism of specific issues, etc. (for example, [2, 5, 9–11, 17, 29, 34, 36, 38–40, 44–46]).

Meanwhile, it is obvious that research on biomedical problems that makes no allowance for the principles of causality is almost blind, whether it concerns radiation effects at any level or the effects of other factors. A molecular radiobiologist reveals certain effects and associations and believes that he has obtained evidence important for medicine. A researcher of in vitro effects, setting up experiments, also considers them evidence-based and extends them to human health, rarely thinking about the obvious abnormality of almost any long-lasting cell culture (note 13). However, what is the potential for transferring the revealed patterns even from animals to humans, not to mention from experiments at the molecular-cellular level [47] (note 14)? A specialist in clinical medicine, in turn, considers his own observations final and suitable for practice, but does he have a sufficient sample and adequate “controls” to draw conclusions about the true cause of the discovered relationships? Finally, an epidemiologist, looking down on them all, believes that only his field makes it possible to obtain data for calculating risks [8, 11, 17, 45] and for using them in, for example, radiation safety [50, 51]. However, from a formal point of view, an epidemiologist only identifies associations similar to the aforementioned relationship between ice cream consumption and mortality from drowning or homicide.

At what levels of evidence of causality do these specialists operate? Are they all needed, and can they replace one another here? At one time, we tried to clarify this issue [50, 51], although not in full. By all indications, the concept of causality criteria and principles of evidence is still almost absent from the Russian scientific field that studies the effects of the radiation factor. Apparently, it is also absent from other near-medical areas in Russia, even though the Hill criteria [13] and the corresponding developments of K. Rothman (the United States) [10, 44] are analyzed in a manual on epidemiology (2006) [33] and are widely represented in RuNet (note 15).

One review, even one consisting of three reports, cannot cover all the literature on this topic accumulated over more than half a century. However, we hope that our study has covered the main sources (including most of the basic originals) and, most importantly, the provisions worked out over this period, together with the cardinal historical and critical issues. The presented cycle of reports aims to reproduce the main provisions and significant stages in the formation of the principles of causality of effects, their essence, limitations, and the possibility and breadth of their application. Although the factual material (examples) often comes from the radiation field, the conceptual importance of the information goes far beyond its borders.

HISTORICAL AND PHILOSOPHICAL FOUNDATIONS OF THE CONCEPT OF CAUSES AND CAUSALITY

The very concept of “causality” and the developed criteria (principles) of establishing causal relationships in biomedical disciplines go back to the philosophical developments of past centuries. Therefore, almost all relevant sources that consider the theory of causality mention and even consider the provisions of a number of philosophers (Table 1).

As follows from Table 1 (note 24), the greatest contribution to the concept of causality in biomedical disciplines was made by the ideas of the subjective idealist and agnostic D. Hume, on whose provisions the empirio-criticism of E. Mach and R. Avenarius was once based [71] (note 25). D. Hume is mentioned in 17 of 22 specialized sources (Table 1). Second place is taken by J.S. Mill (seven of 22 sources), who is more inclined to recognize reality, including on the basis of experience.

On the whole, when getting acquainted with philosophical and natural science literature on causality, one gets the impression of the complete domination of David Hume’s ideas, which are notable for an unprecedented breadth of coverage for all eras [59]. Some provisions of D. Hume are considered in almost every specialized source.

At first glance, it seems that an affinity with the provisions of a subjective idealist, and moreover one who denies any reality in the nature of causal relationships, is not a very good starting point for developing scientific rules for establishing these relationships. However, as with the still memorable development of dialectical materialism, which is based on, in particular, Hegel’s idealistic provisions, success has been achieved here as well [38].

Like Sir Austin B. Hill in 1965 [13] (see the previous section), we lack not only the “wish or skill” but also sufficient space in this review for an in-depth historical and philosophical discussion of the concept of “causality.” In addition, as noted in [8], philosophers and epidemiologists have different objects of study. While philosophers develop general principles of causality by seeking definitions, epidemiologists are usually interested in specific examples of causal relationships and in building causal models [8].

Therefore, it is proposed not to go beyond the information on the mentioned topic, which is presented in Table 1, and the corresponding “Hume Rules” and other relevant points from philosophical ideas will be described below, with reference to modern criteria (Report 2). Here, we will consider only the main publications, which analyze the concept of “causality” in natural sciences.

CONCEPTS OF CAUSALITY IN MEDICAL AND BIOLOGICAL DISCIPLINES

Making a preamble to the section, we cannot but mention statements such as “Physics and chemistry have completely abandoned the concepts of cause and effect. These terms are no longer used in these sciences [since the end of the 19th century]” [5]. This state of affairs has developed in connection with the introduction of the quantum theory, which has led physics to accept indeterminism and to abandon the classical concepts of cause and effect [72, 73].

Although the well-known statistician Karl Pearson (1857–1936) rejected the concept of causality as a “secret fetish” as early as 1892 [73] (cited from [72]), the situation remains somewhat different for medicine and biology. Thus, in 1934, the no less famous statistician Ronald Fisher (1890–1962) noted that uncertainty need not be incompatible with causality: an action (cause) simply influences the corresponding probabilities “as if it predetermined some of them, excluding others” (cited from [72]).

As already mentioned in the previous section, biomedical and, specifically, epidemiological sources offer no single formulation of the concepts of “cause” and “causality.” The 2001 study by M. Parascandola and D.L. Weed [8] reviewed the literature to identify and systematize the definitions in use. These definitions fit into five categories, each with strengths and weaknesses depending on the situation and on the scope and type of effects (pathologies). It was noted [8] that a scientific definition of causality should, on the one hand, make it possible to differentiate causality from simple correlation (association) and, on the other hand, should not be so narrow as to exclude obvious causal phenomena from consideration. The studies by M. Kundi (2006, 2007) [5, 29] discussed and supplemented the approaches from [8], citing its data with additions and remarks. Table 2 presents the combined information from [5, 8, 29].

The five definition categories given in Table 2 correspond to the main approaches to causality in the philosophical literature. Although these definitions are not mutually exclusive, there is a fundamental difference between deterministic and probabilistic concepts of causality, and preferring one definition over another has consequences. For example, if the philosophical deterministic definition of causality is taken as the basis, only deterministic models will be recognized as causal in epidemiology. Hence, it is important that epidemiologists understand the practical consequences of adopting a specific type of definition of causality [8].
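
The contrast between the deterministic and probabilistic readings can be written compactly. The notation below is ours and is only a sketch: C denotes the presence of the putative cause, E the effect, and P(· | ·) a conditional probability in the population under study. It is one common way of formalizing these notions and is not taken verbatim from [8].

```latex
\begin{aligned}
\text{sufficient cause (deterministic):} \quad & P(E \mid C) = 1, \\
\text{necessary cause (deterministic):} \quad & P(E \mid \neg C) = 0, \\
\text{probabilistic cause:} \quad & P(E \mid C) > P(E \mid \neg C).
\end{aligned}
```

Under the first two conditions, an agent that merely raises the frequency of an effect would not qualify as a cause; under the third it would, provided that the inequality is not produced by confounding.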

None of these categories is absolute or complete, as can be seen from the material below.

Cause “by Production”

According to D. Hume, “a cause is when one object (event) follows another, and when all objects similar to the first are accompanied by objects similar to the second” (the “strong concept”) [9, 40, 59, 61]. The “working” definition of M. Susser has already been mentioned above [11]: “… something that makes a difference” (see note 6). Thus, a cause is what produces, makes, or creates something, or leads to something, “leads to a result(s)” [8].

The foregoing shows an ontological difference between causal and noncausal associations. However, there is no clarity as to what “production” or “creation” means. Causality is defined in terms of another equally elusive concept [8, 75]. It is in connection with this that philosophers such as D. Hume [2, 11, 38, 44, 57–59, 61] and B. Russell [2, 8, 11] rejected the concept of causality. The defectiveness of this definition was noted in epidemiological sources [8, 75].

Necessary and Sufficient Causes

A necessary cause is a condition without which an effect cannot occur, and a sufficient cause is a condition under which an effect must occur [2, 5, 8, 10, 11, 29, 32, 33, 41, 44] (a “sufficient cause is a cause that inevitably produces an effect” [10]). Four types of causal relationships are derived from the following:

(1) necessary and sufficient,

(2) necessary, but insufficient,

(3) sufficient, but not necessary, and

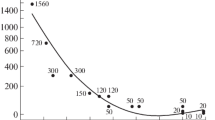

(4) neither necessary nor sufficient [2, 8, 33] (Table 3 and Fig. 1).

Fig. 1. Schemes of necessary and sufficient causes in “2 × 2 tables.” The general meaning is presented in [36, 64]. Example (a) is widespread in the sources on the causality of biomedical effects [2, 8, 10, 15, 33, 41] (etc.); (b) and (c) are our examples. In the ablation of thyroid tissue in hyperthyroid states (b), therapeutic administration of 131I in an appropriate dose (according to [76], the necessary dose) is a sufficient cause; however, a similar effect can also be achieved by surgical removal of organ tissue [76]. Local exposure of the skin to, for example, β‑radiation in doses of 5–10 Gy leads to radiation burns (c), which differ from other types of burns in their development, therapy, and prognosis [7, 21, 77]. That is, when a threshold absorbed dose is reached, radiation burns always occur, and the radiation exposure cannot be fully replaced by another physical or chemical factor that also causes burns.
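
The four types of relationship can be read off the cells of a 2 × 2 table. The following minimal sketch assumes a purely deterministic reading with no sampling error: a factor is called necessary if the effect never occurs without it, and sufficient if the effect always occurs in its presence. The function name `classify_cause`, its parameters, and the counts in the usage example are hypothetical and serve only as an illustration.

```python
def classify_cause(exposed_effect, exposed_no_effect,
                   unexposed_effect, unexposed_no_effect):
    """Classify a factor from the four cell counts of a 2 x 2 table.

    Deterministic reading only: any non-zero count in the relevant cell
    is treated as a counterexample (sampling error is ignored).
    """
    if exposed_effect + exposed_no_effect == 0:
        raise ValueError("no objects with the factor were observed")
    if unexposed_effect + unexposed_no_effect == 0:
        raise ValueError("no objects without the factor were observed")
    if exposed_effect + unexposed_effect == 0:
        raise ValueError("the effect was never observed")

    # Necessary: the effect never occurs in the absence of the factor.
    necessary = unexposed_effect == 0
    # Sufficient: the effect always occurs in the presence of the factor.
    sufficient = exposed_no_effect == 0

    if necessary and sufficient:
        return "necessary and sufficient"
    if necessary:
        return "necessary, but insufficient"
    if sufficient:
        return "sufficient, but not necessary"
    return "neither necessary nor sufficient"


if __name__ == "__main__":
    # Hypothetical counts: every exposed object shows the effect,
    # but some unexposed objects show it too.
    print(classify_cause(20, 0, 5, 15))
```

With real data the cells are rarely exactly zero, which is one reason why the deterministic scheme is hard to apply to stochastic effects.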

Discussions about which types of cause-and-effect relationships should be given priority in epidemiology have been and are still being conducted, and, according to [8], there is even an opinion that the term “cause” should be limited to specific necessary conditions (this approach originates from the microbial theory of diseases). Some authors have also extended these patterns to noninfectious diseases. Thus, the 1985 publication of W.E. Stehbens [78] states that each pathology has its own specific, unique cause, and that multicausal models simply indicate gaps in scientific knowledge, i.e., that the desired cause has not yet been discovered (some confirmation of this approach is, for example, the discovery in the early 1980s of the etiology of gastric ulcer caused by Helicobacter pylori infection [79]). B.G. Charlton noted in his 1996 study that, to be considered a scientific discipline, epidemiology, like other disciplines, must search for the single necessary cause of an effect (“… risk factor epidemiology cannot be considered as a scientific discipline because it aims at concrete usefulness rather than abstract truthfulness”) [80] (note 27).

Of course, this strict determinism that requires an unambiguous correspondence between cause and effect, when the same cause must invariably lead to a similar effect, without the role of chance, is not applicable to the field of radiation effects. It follows from the summary of sources given in [8] that the causes of complex chronic diseases such as cancer and cardiovascular pathologies usually do not fall under the type of either necessary or sufficient causality.

Component Causes

The general idea of necessary and sufficient components of a single cause of a disease arose spontaneously many decades ago (see the 1962 study by I.V. Davydovskii [74]); however, the formulation of a definition of causality on this basis is associated with the name of Kenneth Rothman, who has already been mentioned in the previous section (from the first publication on the topic in 1976 [10] to a number of fundamental subsequent studies up to 2008 [44, 82–84] (note 28)). This author’s model, invariably attributed to him by name, is now analyzed or presented in various English-language [8, 11, 15, 46, 57, 58, 72, 86–94] and Russian-language sources (including RuNet) [33, 95, 96] (note 29). Apparently, Kenneth Rothman is currently one of the main authorities on the rules for the multicausality of effects in epidemiology, although his main provisions do not seem that original; they are perhaps more detailed from the epidemiological standpoint. The manual on epidemiology (V.V. Vlasov, 2006 [33]) says: “Modern epidemiology uses a causality model called a component. Its most developed version belongs to K. Rothman.”

The idea of the totality and interaction of various causes of a single effect was put forward slightly earlier, in its philosophical aspect, in the 1973 work of D. Lewis [97]. In 1974, the philosopher J.L. Mackie formulated the now well-known [2, 8, 41, 57] concept of the INUS condition (‘Insufficient and Non-redundant part of an Unnecessary but Sufficient condition’) in the monograph [98] (note 30). In addition, as noted in [99], J.L. Mackie first developed a model of component causality in that same year, 1974 [98], i.e., before K. Rothman.

Finally, it was indicated [8, 68] that the concept of multicausal phenomena goes back to the philosophy of John Stuart Mill [62]: the real cause of an effect (a sufficient condition) is a combination of conditions, each of which is necessary but not sufficient when taken separately [68].

Figure 2a presents our conditional modification (note 31) of Rothman’s “Causal Pie Model” [10, 44, 82].

The concept is based on the fact that most of the causes that matter in the medical and epidemiological sense are components of sufficient causes; however, they are not sufficient in themselves. K. Rothman speaks of a “constellation” of phenomena (sectors in Fig. 2) that makes up a sufficient cause (circles in Fig. 2). Moreover, both the entire “constellation” and its components are called “causes.” As a result, the term “cause” alone does not indicate whether the phenomenon is a sufficient cause or a component of a sufficient cause [10, 44].

Developing the concept in a 2005 publication [44], K.J. Rothman and S. Greenland give figurative examples of what should be understood by component causes. Since the first assessment of causality is based on direct observations, it is often limited by their scope. For example, when a light switch is in the “on” position, a light effect is usually observed. However, it is clear that the causal mechanism for generating light includes more components than just turning the switch. Nevertheless, the tendency to identify the “switch” as the unique and sole cause follows from its usual, observable role as the final factor acting in the causal mechanism. Electrical wiring and other components, starting with the production of electricity at a remote station, can be considered part of the causal mechanism, but once they are put into operation, they rarely require additional attention. Thus, the switch may appear to be the only part of the mechanism that must be activated to switch on a light. The inadequacy of assuming that the switch is sufficient becomes apparent when a light bulb burns out or something happens to the power supply [44].

There are many examples of component causes associated with infectious and noninfectious diseases. Merely drinking contaminated water is not enough to fall ill with cholera (which Max Pettenkofer partially demonstrated at one time), and smoking alone is not enough to induce lung cancer; however, both factors are necessary components of sufficient causes, as indicated in [10]. No single component (“sector”) of the constellation is sufficient, although, as can be seen from Fig. 2a, one component (A) is included in all causes. This is a necessary component (cause), such as, for example, infection with the tuberculosis bacterium in all sufficient component causes of this disease (Fig. 2b), or infection with the corresponding agent in other infectious pathologies, such as the cholera pathogen mentioned above.
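
The logic of the “causal pie” can be sketched in a few lines. In the illustration below, each sufficient cause is modeled as a set of component labels; apart from component A, which the text describes as present in every pie, the labels B to G and the function names are hypothetical. A necessary component is then simply one that belongs to every sufficient cause, and the effect occurs only when all sectors of at least one pie are present.

```python
def necessary_components(sufficient_causes):
    """Components shared by every sufficient cause (empty set if none)."""
    pies = [set(pie) for pie in sufficient_causes]
    if not pies:
        return set()
    return set.intersection(*pies)


def effect_occurs(present_factors, sufficient_causes):
    """True if at least one sufficient cause is complete."""
    present = set(present_factors)
    return any(set(pie) <= present for pie in sufficient_causes)


# Hypothetical constellations: three pies that all contain component A.
PIES = [
    {"A", "B", "C"},
    {"A", "D", "E"},
    {"A", "F", "G"},
]

if __name__ == "__main__":
    print(necessary_components(PIES))             # {'A'}
    print(effect_occurs({"A", "B", "C"}, PIES))   # True: first pie complete
    print(effect_occurs({"A", "B"}, PIES))        # False: no pie complete
    print(effect_occurs({"B", "C", "D"}, PIES))   # False: A is missing
```

In this representation no single component is sufficient, since every pie has more than one sector, while A is necessary because the intersection of all pies contains it.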

The following question arises in light of the biomedical effects of radiation: can radiation be a necessary component of the “constellation” of a sufficient cause of certain radiation effects, at the level of an organism or at least of a cell? Apparently, it cannot, because it is known that radiation effects lack specificity at these levels. There is no pathology or change that would be induced solely by irradiation and not also by some other factor (at least reactive oxygen species), just as there is no corresponding radiation biomarker [7, 21]. As already mentioned, in our opinion, one possible exception is radiation burns, which differ from other burns in their development, therapy, and prognosis [7, 21, 77] (Fig. 1c); another exception is probably radiation syndrome. The lack of radiation specificity of the effects is illustrated by the schemes of sufficient component causes of radiogenic cancers (a similar composition can be built for leukemias) and radiation-induced cardiovascular pathologies (Fig. 3).

From the diagrams in Fig. 3, a completely similar pathology, the component cause of which nevertheless does not include irradiation, can be theoretically found for a seemingly radiogenic cancer. The situation is the same for cardiovascular and cerebrovascular pathologies, the attribution of which to radiation exposure is difficult to establish even at doses less than 0.5–1 Gy of radiation with low LET [101–103] (the list of components of causes can be continued in both cases considered).

The identification of all components of one or another sufficient cause is not necessary to prevent an effect, since blocking of the causal role of even one component makes the joint action of other components already insufficient and, therefore, eliminates the entire action [10]. In his original study [10] K. Rothman gives an example of removing the smoking component from a sufficient cause that provokes lung cancer, which can completely neutralize the effect of the entire constellation of causes, many of which cannot be recognized.
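The logic of sufficient component causes, including the preventive effect of blocking a single component, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three sufficient causes and the component labels A to G are hypothetical and merely mimic the layout of Fig. 2a; they are not data from [10].

```python
# Hypothetical "causal pies": each sufficient cause is a set of component causes.
# The labels are illustrative assumptions, not data from the cited studies.
SUFFICIENT_CAUSES = [
    frozenset({"A", "B", "C"}),
    frozenset({"A", "D", "E"}),
    frozenset({"A", "F", "G"}),
]


def effect_occurs(present, sufficient_causes=SUFFICIENT_CAUSES):
    """Return True if at least one sufficient cause is fully assembled."""
    present = set(present)
    return any(cause <= present for cause in sufficient_causes)


def necessary_components(sufficient_causes=SUFFICIENT_CAUSES):
    """Components shared by every sufficient cause (like A in Fig. 2a)."""
    return set.intersection(*(set(cause) for cause in sufficient_causes))


everything = set().union(*SUFFICIENT_CAUSES)
print(effect_occurs(everything))          # all components present
print(effect_occurs(everything - {"A"}))  # the necessary component is blocked
print(effect_occurs({"A", "C"}))          # one pie left incomplete (B removed)
print(necessary_components())
```

In this sketch, removing any one component of a given pie disables that pie without the other components having to be identified, which is the point made in [10] about prevention.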

The presented model also illustrates concepts such as “strong” and “weak” component causes. A component cause that can complete the whole complex of a sufficient cause (that is, 1–3 in Fig. 2), but only in combination with other components that have a low prevalence, is a “weak cause.” The presence of this component cause changes the probability of an outcome only slightly, from zero to a value slightly above zero, which reflects the rarity of the additional components. However, if these additional causes are not rare but almost ubiquitous, then the component cause that completes the entire causal complex together with them becomes “strong.” A weak cause brings only a small increase in the risk of a disease, while a strong cause significantly increases the risk [10].32

Thus, the “strength of a causal risk factor” depends on the prevalence of the additional components in the same sufficient cause. However, this prevalence often depends on customs, circumstances, or chance and is not a scientifically based characteristic [10]. At this point, K. Rothman considers an example of a sufficient component cause from the study by B. MacMahon, 1968 [104], which is related to phenylketonuria. In a population where most people have a diet with a high content of phenylalanine, the inheritance of the rare phenylketonuria gene is a “strong” risk factor for mental retardation, and phenylalanine in the diet is a weak risk factor. However, in another population in which the phenylketonuria gene is very common but few people have a diet with a high level of phenylalanine, gene inheritance will be a weak risk factor, whereas phenylalanine in the diet will be a strong risk factor. Thus, the strength of a causal risk factor, as measured by relative risk, depends on the distribution of the other causal factors within the same sufficient cause in the population. The term “strength of a causal risk factor” is meaningful as a description of the importance of this indicator for healthcare, although it is devoid of biological meaning in describing the etiology of the disease [10, 44].
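The dependence of “strength” on the prevalence of the complementary components can be illustrated numerically. The sketch below is not taken from [10] or [104]: the prevalences, the background risk of 0.01 from other sufficient causes, and the assumption that the pathways act independently are all hypothetical choices made only for illustration.

```python
def risk_if_component_present(complement_prevalence, background_risk):
    """Risk of the outcome for a person who carries the component.

    The sufficient cause is completed when the complementary components are
    also present (probability = complement_prevalence); otherwise only the
    background risk from other sufficient causes applies. Independence of
    the two pathways is an assumption of this sketch.
    """
    return 1.0 - (1.0 - background_risk) * (1.0 - complement_prevalence)


def relative_risk(complement_prevalence, background_risk=0.01):
    """Relative risk of carrying the component versus not carrying it."""
    exposed = risk_if_component_present(complement_prevalence, background_risk)
    return exposed / background_risk


# Hypothetical prevalences for the two populations in the phenylketonuria example.
populations = {
    "rare gene, common diet": {"gene": 0.0001, "diet": 0.95},
    "common gene, rare diet": {"gene": 0.95, "diet": 0.0001},
}

for name, prevalence in populations.items():
    rr_gene = relative_risk(prevalence["diet"])  # the diet completes the gene's pie
    rr_diet = relative_risk(prevalence["gene"])  # the gene completes the diet's pie
    print(f"{name}: RR(gene) = {rr_gene:.2f}, RR(diet) = {rr_diet:.2f}")
```

With these illustrative numbers, the same biological component switches from a strong to a weak risk factor solely because the prevalence of its complement changes, while the underlying mechanism stays the same.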

In relation to sufficient component causes that include the radiation factor, many situations similar to the one described for phenylketonuria can be proposed [10, 104]. For example, when radioactive iodine was deposited in the early period after the Chernobyl accident, the sufficient component cause of thyroid cancer could include, among other things, an individual’s age and the dietary supply of stable iodine as components. As a result, the component associated with the radioiodine dose to the thyroid gland could be a weak or strong causative factor, depending on the prevalence of the mentioned additional causes. With a low prevalence of normal iodine supply, this could be a strong factor, and with a high prevalence, it could be a weak factor; the same is true for an individual’s age.

Another example is some hereditary pathology associated with DNA repair defects [105]. When there is a significant level of radiation exposure on the population, for example, in a high natural radiation background and/or medical care that involves many X-ray diagnostic procedures (an additional cause), the causative factor of the repair defect will be strong, otherwise it will be weak.

Finally, based on his model, K. Rothman considers synergy, i.e., the interaction of causal components. Synergy means that two component causes are parts of the same sufficient cause [10]. Although this author is of the opinion that synergistic components have no effect separately [10], many analogies can be found in the field of radiation exposure. For example, the UNSCEAR-2000 (Annex H), which is devoted to the combined action of radiation and other agents [106], reflects the synergistic interactions of radiation with chemicals, smoking, asbestos exposure, etc. There are also recent domestic monographs on this subject (V.I. Legeza et al., 2015 [107], V.G. Petin, J.K. Kim, 2016 [108]).

In light of the foregoing, of particular relevance is K. Rothman’s proposition that small doses of an agent, in comparison with large doses, may require a more complex set of additional components to complete a sufficient cause [10]. In 1988, K. Rothman et al. [82] proposed epidemiological approaches to enhance weak associations. The first approach is to limit the study population to a subgroup of individuals with a deliberately low background risk, and the second is to reduce nondifferential misclassification (by increasing the specificity of exposure measurement, of pathology classification, and of the estimated length of the latency period).33

In recent years, the “Causal Pie” model has been moving from theory to practice, and not only in medicine and epidemiology [58]. However, at the beginning of 2018, a PubMed search for ‘Causal Pie’ found only 12 sources devoted specifically to the topic (starting from 2011), five of which were theoretical developments.

We know the following examples (not only from PubMed).

—Risk assessment for myocardial infarction in the ‘European Prospective Investigation into Cancer and Nutrition-Potsdam Study’ [86].

—A study of the dependence of the frequency of multiple sclerosis on lifestyle factors and the environment [109].

—Analysis of cervical cancer risk in papilloma virus infection [110].

—Assessment of liver cancer risk factors (hepatitis viruses; alcohol) [111].

—Finding the genetic determinants of hypertension at young age [112].

—Risk of bipolar disorders depending on heritable factors and family conditions [113].

—Determination of the mite-related causation of rosacea [114].

—Construction of sufficient component causes of oral cavity cancer [92].

—The same task for flu (H1N1 virus) [115].

—The same task for tuberculosis [91] (Fig. 2b).

—Use of the “Causal Pie” in evolutionary biology and ecology [88].

Of the listed works, five are Chinese (including Taiwan) and one is Korean (i.e., more than half). The list can be supplemented with our developments for radiation effects (Fig. 3).

Thus, although cases of direct application of the model, developed as early as 1976 [10], clearly exist, they are few. Perhaps this is due to a certain triviality of the idea, which leads authors not to mention the specific term with ‘pie’. Nevertheless, a similar strategy “according to Rothman” has been called “powerful” [58].

To conclude the subsection, we should briefly consider the “Web of Causation” (or “Web of Causal Determination” according to the translation [15]), with which K. Rothman’s scheme is somewhat similar in idea. Since multicausality is the main canon of modern epidemiology [5, 8–11, 13–17, 29, 32–34, 41, 43–46, 68, 72, 75, 78, 80, 82–95], a “metaphor” of “Web of Causation” [15, 58, 87, 91, 93] was introduced for the description of a complex, interdependent, and multifactorial causation of effects, as stated in [15, 87, 93]. Probably, the term first appeared in the 1960s [15, 93].34

The “Web of Causation” is usually an extensive diagram of the conditions that are necessary for the occurrence (and/or prevention) of a disease in a particular person, as well as in an epidemiological study of the causes of pathology in a population. A web usually looks like rows of rectangles that represent factors and have highly branched and interconnected causal relationships between them (lines) [87, 91, 94]. According to [58], the model is applied to empirical data, including in the WHO.

The web includes direct and indirect causes. Direct causes are “proximal” to pathogenic events (i.e., they are closer to them). Indirect causes are “distal” to pathogenic events (they are more distant, ‘upstream’). For example, obstruction of the coronary artery is a direct cause of myocardial infarction, while the social and environmental factors that lead to hyperlipidemia, obesity, sedentary lifestyle, atherosclerosis, and coronary stenosis are indirect causes. In considering this disease, indirect and direct causes form a hierarchical causal web, which often has reciprocal relationships between factors. The web is divided into levels: the macro-level (social, economic, and cultural determinants), individual level (personal, behavioral, and physiological determinants), and micro-level (organ, tissue, cellular, and molecular causes) [87, 93].

The manuals [87, 94] present an extremely complex scheme of the “Web of Causation” for myocardial infarction, which, in our opinion, can compete in complexity with the scheme of signal transduction of mitogenic and damaging stimuli in a cell (for example, [49]). A no less impressive web is illustrated [91] for the causes of obesity. Persons interested can also find many complex ‘Webs of Causation’ on the English-language Internet.35

It remains unclear how this complex model of the often hardly quantifiable, interpenetrating causal dependences can be used in healthcare practice; unless it is used in a very simplified form or just as an illustration/teaching aid (which is very illustrative, for example, for representing the dubious radiation attribution of cardiovascular and cerebrovascular pathologies after irradiation in small doses [77, 101–103]). The idea arises that there is no adequate verification of the operation of these models for biology and medicine, or even that they are difficult to falsify according to K. Popper’s criterion [11, 52, 64–67].

Probabilistic Cause

This concept was already considered by D. Hume (“probability arising from causes”) [59].

According to the already discussed study by M. Parascandola and D.L. Weed, 2001 [8], on the definitions of causality in the epidemiological literature, the “probabilistic” or “statistical” definition of causality, in which a cause increases the likelihood of an effect (for example, the development of cancer), is widely used. The occurrence of cancer in an individual is partly random (a stochastic, or “nondeterministic,” process). In this regard, a probabilistic cause need be neither necessary nor sufficient for the manifestation of the effect, although this definition does not exclude either necessary or sufficient causes. According to [8], a sufficient cause is one that raises the probability of an effect to unity, and a necessary cause is one that merely makes the probability greater than zero.

The probabilistic definition of causality is more constructive than the definition of a cause based on sufficient components. Necessary and sufficient causes can be described in probabilistic terms, whereas probabilistic causes cannot be expressed in deterministic terms. It is also important that the probabilistic definition reduces the number of assumptions about biological mechanisms, since it does not require belief in countless hidden effect modifiers for each less than perfect correlation [8].

The concept of probability can also be applied to K. Rothman’s “Causal Pie,” provided one keeps in mind that components contribute to the probability of an effect rather than determine it. If one of the components is absent, then the probability of the effect decreases [8]. In 2005, K.J. Rothman and S. Greenland [44] suggested that the presence or absence of one component could be determined by a random process; however, in this approach, the relationship between cause and effect nevertheless remained deterministic, although more difficult to predict. Causal relationships often seem probabilistic in practice; however, according to these authors (cited from [72]), this may be due to ignorance of the complete set of components that make up the complex sufficient cause of a pathology.

One of the problems that seems to be against the probabilistic definition is that it cannot explain why, for example, some smokers develop lung cancer, while others do not. That is, “probability” is a kind of euphemism of ignorance. The study [8] resolves this issue, stating that the probabilistic determination also admits the possibility of action of some yet unknown causes, giving the example of a discovered genetic factor that sharply increases the risk of developing lung cancer. Therefore, there is no reason to believe that some cause increases the risk of an effect for each person by the same amount: due to the mathematical continuum of probability, the model allows a wide range of possible effects [8] (see note 32).

Since, as already noted, the probabilistic definition of causality does not by itself make it possible to distinguish between causal and noncausal associations, a counterfactual element is added to the definition to improve the situation [8].

Counterfactual Cause

Counterfactual causality is based on the contrast between an effect in the presence of a cause and an effect in its absence. We again find a similar construction in the works of D. Hume: a cause is determined on the basis of whether the second object in a temporal sequence of two objects would have existed if the first object had not [9, 40, 59, 99] (this identifies the necessary cause in “the strong sense” [99]).

Such causes can be deterministic or probabilistic [8] (Table 2). For example, according to the definition of N. Cartwright [119] cited in [8], factor C causes E if the probability of E given C is greater than the probability of E in the absence of C, with all other conditions remaining constant. As noted in Table 2, the counterfactual determination of a cause is, in the strictest case, unprovable, because time does not reverse, and no one can observe the same event, the same individual, or the same population twice, with a specific condition (“cause”) and without it [2, 8, 37, 53, 89]. In the strict sense, this problem cannot be solved by experimental approaches involving seemingly completely adequate controls: no two cells or organisms are absolutely identical, even in the case of identical twins. Similarly, a cell or an organism will not be completely identical to itself at different points in time. An absolutely adequate control for an exposed cell or organism can only be the same cell or organism without exposure, but in the same time period [89, 91].

Theoretically, even seemingly correct counterfactual “causality” may not be true causality. The S. Greenland and J.M. Robins, 1986, publication [120] provides a speculative example (“bivariant counterfactual” [9]), which, although it seems puzzling, is nevertheless not completely implausible. Let us assume that half of the individuals in a population are sensitive to exposure and can die from it (i.e., they will live only if they are not exposed), and the other half can die precisely because of the absence of exposure (i.e., they will live only if they are exposed). If the exposure is distributed randomly across the population, then the expected average causal effect will be zero: there will be no relationship between exposure and mortality in an infinitely large population. Nevertheless, the observed result for each individual will be due to exposure or nonexposure [9, 120].
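The arithmetic of this speculative example can be checked with a small Python sketch. It rests on simplifying assumptions of our own: a finite population of 1000 hypothetical individuals, and a perfectly balanced allocation of exposure that stands in for random exposure in an infinitely large population. The type labels are illustrative names, not terms from [120].

```python
from itertools import product


def dies(response_type, exposed):
    """Deterministic outcome for the two hypothetical response types."""
    if response_type == "harmed_by_exposure":
        return exposed       # dies only if exposed
    return not exposed       # "needs_exposure": dies only if unexposed


# Balanced allocation stands in for random exposure in a very large population.
# Each entry is (response_type, exposed).
types = ("harmed_by_exposure", "needs_exposure")
population = [
    (response_type, exposed)
    for response_type, exposed in product(types, (True, False))
    for _ in range(250)
]


def mortality(people, exposed):
    """Observed proportion of deaths in the exposed or unexposed group."""
    outcomes = [dies(t, e) for t, e in people if e == exposed]
    return sum(outcomes) / len(outcomes)


average_effect = mortality(population, True) - mortality(population, False)

# Counterfactual check: would each person's outcome change under the other exposure?
everyone_affected = all(dies(t, True) != dies(t, False) for t, _ in population)

print(average_effect, everyone_affected)
```

The mortality difference between the exposed and unexposed groups is zero, although the counterfactual check shows that the outcome of every individual depends on exposure.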

All of this looks somewhat abstract and even scholastic from a practical biomedical standpoint; nevertheless, counterfactual causality is used quite widely, starting with the well-known experiment of one of the founders of the epidemiological approach, J. Snow, in 1854 in London (when the closure of water taps in specific areas of the city eliminated cases of cholera) [15, 33, 94, 95].

Attempts have also been made to use the counterfactual approach in radiation epidemiological studies. For example, a group of authors (J.J. Mangano, J.M. Gould, and others) from the nonprofit organization Radiation and Public Health Project (New York) published several works that cite data on a decrease in child mortality, including cancer mortality [121] and leukemia mortality [122], near American nuclear power plants after they were shut down (according to J.J. Mangano et al., during the operation of the nuclear power plants, these indicators were elevated compared to regions without nuclear power plants [121, 122]). Although the studies of these authors were criticized (“junk” works [123]) and were not confirmed in any way by other epidemiological studies (for example, [124]), the very fact of using the counterfactual approach in radiation epidemiology is important36 (another example is a comparison of various indicators in patients who have undergone radiotherapy and, for example, chemotherapy [126]).

Summing up their review, M. Parascandola and D.L. Weed, 2001 [8], conclude that the probabilistic definition of a cause in combination with counterfactual conditions is the most promising for epidemiology. Such an approach is consistent with both deterministic and probabilistic causal models, considering the former as an extreme case of the latter.37 In addition, the mentioned combined model implies fewer assumptions about unobservable natural phenomena, eliminating the need to always establish hidden determinants that make up causes. The definition of causality must allow for the possibility that randomness is inherent in some natural processes [8].

Probably, the presented complex causality model has not become universal. Ten years have passed since the publication of the review [8]; a new review by the same author on a similar topic has been published (M. Parascandola, 2011 [72]), and its summary includes the same words: “… epidemiology lacks an explicit, shared theoretical account of causation. Moreover, some epidemiologists exhibit discomfort with the concept of causation, concerned that it creates more confusion than clarity.”38

Nevertheless, the probabilistic causality model is the most acceptable for radiation epidemiological studies, which are currently concentrated mainly on the stochastic effects of radiation in the low-dose range [21, 22, 24, 25, 50, 51, 77, 101–103, 124]. As for the counterfactual supplement, it is likely to remain the province of disciplines in which controlled experiments can be set up (radiobiology and radiation medicine).

Causality at Different Levels of Biological Organization

According to the authors of [8], there is no reason to argue that causality at one level (for example, molecular) is more significant than causality at other levels, including the social level. This standpoint is not trivial, since the priority of causality at different levels of organization has previously been the subject of discussion (see, for example, the work by M. Susser, 1973 [17]). Over the past decades, the question of the importance of the “black box” strategy for the study of causal relationships in epidemiology has been raised repeatedly [99, 130–132]. On the one hand, critics of this strategy believe that it is necessary to constantly reduce the volume of the “black box,” minimizing the yet unclear mechanisms of causality at the biological level, which are considered basic. On the other hand, proponents of the primary consideration of social factors in the development of pathologies argue that causal phenomena at the social level cannot be completely reduced to biology or to the determinants of individual behavior, such as smoking [8].

The two indicated standpoints generally coincide with the two categories of causality (deterministic and probabilistic) [8]. The differences correspond to different types of scientific explanations. According to [8], the tendency to give priority to knowledge at the molecular level in developing a causal explanation of an observed association is present both among researchers and in nonscientific circles. For example, in the 1950s, an attempt was made to find a specific necessary cause of lung cancer from smoking (such as a specific molecule in cigarette smoke), which would provide a single strict correlation with the occurrence of this pathology [133]. A similar situation arose in the radiobiology of the 1950s–1960s, when researchers of radioprotectors searched, figuratively speaking, for an “atomic bomb pill,” an expression that later spread in the relevant circles. Among biologists, this reductionism has been criticized; moreover, it has been assumed that causal conclusions in epidemiology should integrate all multilevel events, from molecular to social [8, 134].

SCIENTIFIC, PRACTICAL, AND SOCIAL OBJECTIVES OF EPIDEMIOLOGY AND STUDIES OF CAUSALITY

Some authors point to the difference between the scientific or “logical” definition of causality and its more flexible practical definition, since it is believed that the goals of science and healthcare are different [8, 39]. The main goal of healthcare is “to intervene to reduce morbidity and mortality from pathology” [118] (cited from [8]). The sociological area of epidemiology was developed mainly by M. Susser [134]. As is usually believed, the main goal of science is to explain the world [1, 2, 8] (“reduction of uncertainty” [2]). In opposition to M. Susser, K. Rothman et al. believed that epidemiologists should strive for scientific objectivity and not allow themselves to be guided by the specific goals of healthcare [135].

Therefore, scientific research, especially fundamental research, may or may not serve as the basis for effective health protection strategies [8].

Based on the latter fact, the recommendation was developed that epidemiology should abandon the traditional scientific concept of necessary and sufficient causes in favor of a broader concept that is not related to strict determinism and has a higher practical significance [8, 11] (such as the concept of risk; see note 27). Although probabilistic causality models are not completely accurate, they can provide a faster description of phenomena, which is important in terms of benefits [136] (cited from [8]).

At this point, it is appropriate to briefly consider the question of what can be considered scientific from a public and social point of view. Sociological research in the field of science has become the basis for developing the concept of the “Sociology of Knowledge” (SoK), according to which science is also a social activity [2]. The question of what the objects of research in the world are cannot be resolved without taking into account how a social group consisting of scientific researchers (“scientists”) imagines these objects [2]. Entities, whether electrons or DNA, cannot be assigned a role in our world independently of symbols and the meanings attached to them, and the specifics of the symbolism depend, in particular, on the social status of those who developed it [2, 9]. Therefore, while the traditional philosophy of science had special criteria for distinguishing between the scientific and the unscientific, such as K. Popper’s criterion of falsifiability [2, 11, 52, 64–67], the “Sociology of Knowledge” applies social criteria for these purposes [2].

As noted by a famous philosopher of science Thomas Samuel Kuhn (T.S. Kuhn; 1922–1996) [137], when researchers must choose between competing theories, two people can come to different conclusions, although they may profess the same standard evaluation criteria. This depends on the inclinations, personality, biography, and other characteristics of the individual. The following examples can be added: a psychometric study (2001) by Australian and New Zealand epidemiologists on which causality determination methods are prioritized [138], as well as a survey (2009) of specialists from different countries regarding the preference of a particular “dose-effect” model for radiation safety (linear nonthreshold, superlinear or sublinear threshold safety) [139].

To eliminate reliance only on the subjective opinions of authorities and experts, “evidence-based medicine” was developed in the 1990s [55].

A foreign academic manual on the philosophy of science published in 2007 [2]39 says the following: “There is no question about what the theory represents (either nature or culture), but rather it is a question of negotiation between different scientific groups with regard to what will be considered to compromise facts. Hence, … the issues are not the relationship between theory and nature/culture (epistemological and representational), but what scientists regard and treat as real (ontological and processual).”40

Similar positions can be partly found in the documents of the UNSCEAR, for example, in the UNSCEAR-2012 published in 2015 (highlighted by the author below) [21]:

“The scientific method does not operate in isolation, but is conducted by the scientific community, which has specific internal norms to guide the activities of scientists in applying the scientific method. These norms include truthfulness, consistency, coherence, testability, reproducibility, validity, reliability, openness, impartiality and transparency [140]… Incorporation of nonscientific concerns …—may or may not use conditional predictions. In this case, account is taken of norms external to science such as social responsibility, ethics, utility, prudence, precaution and practicality of application … The precautionary approach41 can be described in the following way: “When human activities may lead to morally unacceptable harm that is scientifically plausible but uncertain, actions shall be taken to avoid or diminish that harm””42 [21].

In our opinion, this principle may have disadvantages of a significant conjuncture of unscientific approaches in the formation of practical conclusions on an allegedly scientific basis, but beyond the scope of scientific ethics. So, criteria are not always available for an accurate assessment of the social significance of a problem under study, and they clearly will not be, so to speak, understated by the authors.

In the widely acclaimed lecture by Michael Crichton, which was later published in The Wall Street Journal [151] (see also an in-depth discussion of this issue in [55]), the author considered consensus science “as an extremely pernicious development that ought to be stopped.” He emphasized, “Let’s be clear: the work of science has nothing whatsoever to do with consensus. Consensus is the business of politics. Science, on the contrary, requires only one investigator who happens to be right, which means that he or she has results that are verifiable by reference to the real world… There is no such thing as consensus science. If it’s consensus, it isn’t science. If it’s science, it isn’t consensus.”43

CONFOUND FACTORS AND FALSE ASSOCIATIONS

Confound factors,44 i.e., “confounding” factors in the main translation from English [15, 32, 33, 153] (sometimes also “confounding” [94]), with the already widespread slang calque “confounders” or “confoundings” [33, 153, etc.], represent one of the deviations from true causality, which can lead to systematic subjective deviations or biases [154] (sometimes the term “systematic error, confounding bias” is encountered [15, 155]).

According to the definition from the fundamental dictionary of epidemiology [15], a confounder is “a variable that can cause or prevent a studied outcome, but is not intermediate in the causal chain and is associated with a studied effect.” The manual [153] addresses “strengthening, weakening, perversion” instead of “cause or prevent.”

At this point, we will not dwell on the theory of confound factors, which is fairly common material in manuals on epidemiology [3–5, 15, 29, 50, 51, 83, 87, 89, 91, 94, 95, 153] (see also reviews on definitions, theories, and history of the formation of the concept together with a retrospective look at relevant studies [9, 120, 152, 154]). Likewise, we will not dwell on the methodological and statistical approaches that can eliminate the effect of potential confounders (randomization, stratification, standardization, etc. [33, 120, 153]).
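Although the theory is not reproduced here, the way a confounder produces a false association, and the way stratification reveals it, can be shown with a minimal Python sketch. All counts below are invented for illustration and do not come from any cited study; no test of statistical significance is included.

```python
# Hypothetical counts: (cases, persons) by exposure status within each stratum
# of a confounder. The exposure has no effect inside either stratum.
STRATA = {
    "confounder present": {"exposed": (160, 800), "unexposed": (40, 200)},
    "confounder absent": {"exposed": (10, 200), "unexposed": (40, 800)},
}


def relative_risk(exposed, unexposed):
    """Risk in the exposed divided by risk in the unexposed."""
    cases_e, n_e = exposed
    cases_u, n_u = unexposed
    return (cases_e / n_e) / (cases_u / n_u)


def pooled(strata, group):
    """Sum cases and persons over all strata, ignoring the confounder."""
    cases = sum(s[group][0] for s in strata.values())
    persons = sum(s[group][1] for s in strata.values())
    return cases, persons


crude_rr = relative_risk(pooled(STRATA, "exposed"), pooled(STRATA, "unexposed"))
stratum_rr = {
    name: relative_risk(s["exposed"], s["unexposed"]) for name, s in STRATA.items()
}

print(f"crude RR = {crude_rr:.3f}")
for name, rr in stratum_rr.items():
    print(f"{name}: RR = {rr:.3f}")
```

In this constructed data set, the crude relative risk exceeds 2, while within each stratum of the confounder the relative risk equals 1: the apparent effect arises only because the confounder is both more frequent among the exposed and associated with the outcome.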

We give only a summary of examples of the influence of confound factors leading to statistically significant but false associations in various fields of the natural sciences and sociological disciplines (Table 4: nonradiation factors; Table 5: radiation exposure).45 Although the items in Table 4 may suggest some sensationalism, all the relevant references are strictly scientific (on the Internet, one can find many examples of graphs with false associations; however, almost none indicate their sources).

Data summaries with similar false associations can be made, not only for confound factors, but also for the most varied types of subjective biases. These (more than a dozen types can be found) are also considered in all epidemiology manuals and have been identified in many relevant studies [2, 15, 18, 20–22, 24, 25, 29, 32, 33, 43, 44, 46, 50, 51, 89, 91, 94, 95, 101–103, 106, 153, 172, 173]; we will not dwell on them.

Many biologically unrelated associations can occur when searching for genetic markers.46 The A.V. Rubanovich and N.N. Khromov-Borisov, 2013 [195], study concluded that the predictive and classification efficiency of the results of most published associative genetic studies was low.

The mass of false associations listed in Tables 4 and 5, which often includes even statistically significant trends for dependences on radiation dose (!), unequivocally indicates the need to use certain epidemiological criteria or at least generally accepted rules of assessment that would make it possible to determine the degree of truthfulness of the identified relationships. The criteria for determining the causation of effects from exposures as well as the relative contribution of epidemiology and experimental disciplines towards them are planned for presentation in the following reports.

CONCLUSIONS

The presented Report 1 has considered, first, the conceptual formulation of the problem and the purpose of the report cycle, as well as preliminary philosophical and scientific concepts that are necessary for the subsequent understanding of the main problem— the meaning, essence, and possibility of practical application of the criteria (rules, principles) for establishing the truth of associations detected in biomedical disciplines. The idea and relevance of our study was determined, first of all, by the weak penetration of specific methodologies for proving causality into experimental and descriptive disciplines related to the influence of the radiation factor (especially in Russia).

The formulation of a comprehensive definition of causes and causality is not a simple task, despite its apparent clarity. Five types of definitions, varying from the simplest explanatory definition to complex integrated scientific definitions for deterministic and stochastic effects, have been identified. These five types of concepts, many of which go back to the positions of famous philosophers (mainly David Hume [59] and others, in particular John Stuart Mill [62]), were fragmented in epidemiology as early as the early 2000s [8]. However, judging by the publications of researchers specializing in this problem [5, 29, 44, 72, 84], they had not become a universal base platform for studying biomedical effects from various agents and impacts even 10 or more years later. Certain types of definitions are criticized because of restrictions on their use in specific areas of the natural sciences.

Second, this report has presented a set of provisions from significant sources (publications of researchers of the theory of causality, manuals on the philosophy of natural sciences, and UNSCEAR documents) that reveal the scientific, practical, and social goals of epidemiology and, in fact, of any scientific research that is important for human life and activity. These goals are primarily associated with evidence of the truth of the revealed dependences of effects on agents and impacts; however, the methods for achieving them can be based on different rules and ethical foundations, depending on whether the underlying task is scientific or social. In the second case, the “precautionary principle” is used, and research standards developed for scientific application are significantly narrowed and simplified, being largely replaced by one thing: the prevention or, at least, reduction of risks, even if the reality of the latter does not have rigorous scientific evidence.

This fragment of Report 1 is necessary for ascertaining the areas in which a researcher's work on proving the reality of effects may be of primary importance: only in scientific areas, or also in public and social ones. This article will help establish what limits of scientific evidence should be reached depending on the particular socially significant situation.

Finally, third, this publication provides examples of false, but statistically significant, associations from a wide variety of biomedical as well as public and social spheres. The falsity was caused by third (confounding) factors. Some of the information presented looks sensational, and some is absurd. Some associations are also perceived as very plausible (for example, the relationship between smoking and suicides, coffee consumption and miscarriages, hormone replacement therapy and a decrease in the risk of cardiovascular pathologies, the dependence of the frequency of malignant neoplasms in nuclear workers and their offspring on irradiation dose, etc.; Tables 4 and 5). Nevertheless, all of them turned out to be false, although by external signs they do not seem to be “worse” than many true associations published in scientific sources.

The given examples of the effects of confounding factors that form false associations indicate the need to use standard criteria for assessing the truth of causal relationships. Several sets of these criteria are known: one for infectious pathologies (initially the Henle–Koch criteria with further modern modifications by other authors, up to the universal Evans set), and the other two for noninfectious pathologies (the Hill and Susser criteria). This material is planned for consideration in subsequent reports.

Conflict of Interests and Possibility of Subjective Biases

There is no conflict of interest. This study, which was conducted within the broader budget topic of research development at Russia’s Federal Medical Biological Agency, was not supported by any other sources of funding. There were no time constraints, official requirements, restrictions, or other external objective or subjective interfering factors in carrying out the study.

NOTES

(1) We can add to this the words of King Lear: “Nothing will come of nothing.” The source of these phrases is the treatise of Titus Lucretius Carus “On the Nature of Things”, which reproduces the teachings of Epicurus.

(2) “… most researchers would find it difficult to define the words in anything but a circular fashion; causes are conditions and events that produce effects, and effects are conditions and events produced by causes” [9] (hereinafter, the translations were presented by the author—A.K.).

(3) “I have no wish, nor the skill, to embark upon a philosophical discussion of the meaning of “causation”” [13].

(4) M.W. Susser, who, in fact, made probably one of the largest contributions to the rules for establishing causality [9, 14] (for more detail, see Report 3), is not mentioned in any Russian-language manuals on epidemiology and evidence-based medicine known to us. The exception is the Epidemiological Dictionary [15], which is a translation of the famous Oxford publication edited by J.M. Last (2001). The domestic publication by V.V. Shkarin and O.V. Kovalishena, 2013 [16] was found to mention the surname of M. Susser in the context of “modifications of Hill’s criteria of causality” (which is incorrect) with reference only to the indicated translation of the dictionary [15].

(5) “… any factor, whether event, characteristic, or other definable entity, so long as it brings about change for better or worse in a health condition” [17] (cited from [9]).

(6) “… something that makes a difference” [11].

(7) In the majority of relevant studies and documents, the essence of the term “reverse causation” is assumed to be clear and is not explained in any way; only specific examples are given. The few definitions of “reverse causation” that are known to us are not clear, and this is probably why they are also always accompanied by a real example. So, in [18], the phenomenon has the following explanation: “Pre-existing symptoms of the outcome that influence the exposure could generate the observed associations.” A well-known example is that the curve of alcohol-related mortality is J-shaped, i.e., complete nondrinkers live shorter lives than moderate drinkers (it is understood that nondrinkers may not drink because of their initially worse health status).

(8) The assumption that the male sex determines the occurrence of lung cancer, which seems anecdotal from the standpoint of modern knowledge, did not seem so relatively recently. For example, in 1947, Dr. Evarts Graham in Missouri treated this pathology by administering female sex hormones to patients, since it was assumed that women were protected from lung cancer (the therapy was unsuccessful) [30, 31].

(9) There are many reviews on the history of establishing a causal relationship between smoking and lung cancer. Here, we present only a single source: the work of one of the main researchers of this problem in the 1950s [35]. A more detailed review is planned in Report 2.

(10) Information that the main criteria (rules) of causality for noninfectious pathologies were proposed by several authors as early as the 1950s is not presented in most known English-language sources on the topic, including monographs, reviews, study guides (in particular, online), and fundamental dictionaries on epidemiology (for example, [3, 15]), not to mention Russian-language publications. Information of this type is found only in studies specifically devoted to the historical aspects of the formation of causality criteria, and such studies are also few. We can mention the relatively recent reviews of the main researchers: A.S. Evans, 1976 [34]; D.L. Weed, 1988 [36]; and M. Susser, 1991 [11] (the original 1973 monograph of the last author [17] is not available to us). Some information with reference to the study by M. Susser (1991) [11] is presented in one of the latest official reports of the US Department of Health on the health effects of smoking (2004) [37]. This information is also available in relatively few reviews and manuals of later authors who discussed the philosophical and epidemiological problems of causality in the 2000s–2010s [38–41]. We will discuss the deplorable state of priorities in this area further, especially in Report 2. The “criteria of (Bradford) Hill” formally are not Hill’s criteria. Besides us, the question of inexplicable noncompliance with copyright ethics by the most famous founders of the causation theory was also raised in the historical study by H. Blackburn and D. Labarthe (2012) [42].

(11) Again, without reference to sources, i.e., to the authors who proposed those principles. This can be seen from the document [43] and is also emphasized in studies by M. Susser, 1991 [11] and H. Blackburn, D. Labarthe, 2012 [42].

(12) “… these guidelines have generated a talmudic literature on their nature, logic, and application” [9].

(13) Advertising of nutritional supplements, etc., often limits itself to this “evidence.”

(14) At this point, we can recall the situation in the field of aging genetics. The authors receive Nobel Prizes for extending the life of the nematode and fruit fly, while the discovered genes that may be responsible for life expectancy are rarely significant when studying the genomes of “long livers,” if they are significant at all (at least, this was the state of affairs in 2012 [48, 49]). Of course, extending the life of the nematode and fruit fly could also be regarded as helping nature; however, in our opinion, the awarding of Nobel Prizes for this is still somewhat premature.

(15) In 2016 and 2017, the author of this publication gave two nearly identical reports at the FSBI SSC Burnazyan Federal Medical Biophysical Center of Russia’s Federal Medical Biological Agency (the Academic Council) and at the FSBIS “Federal Research Center of Food and Biotechnology” (the Council of Young Scientists and Specialists). The topic was the criteria of causality and the hierarchy of biomedical disciplines in giving evidence of effects from impacts. In the second organization, only one representative of a fairly young audience knew about the existence of causality criteria. In the first, in short, the situation also left much to be desired.

(16) “… deductive logic could never be predictive without the fruits of inductive inference” [57].

(17) “… argued that ‘true causes’ are both necessary and sufficient to produce a given effect. It further followed from Galileo’s analysis that true causes are universal” [58].

(18) “… reasoning was to imply the principle of causality from the assumption that it is among the conditions of every experience” [5].

(19) “Rules by which to judge of causes and effects” [38, 59].

(20) Mill’s Eliminative Methods of Induction (System of Logic, 1843) [63].

(21) “… alluding to Hume, stressed the psychological nature of these concepts [causality] and pointed out that “in nature there is no cause and no effect” and that these concepts are results of an economical processing of perceptions by the human mind” [5].

(22) “… that causation ‘is a relic from a bygone age, surviving, like the monarchy, only because it is erroneously supposed to do no harm’” (Russell, 1959, p. 180) [2].

(23) “… refuted Hume’s subjective view of the world by the demonstration, which he attributed to Immanuel Kant, that a priori knowledge exists independent of experience. Russell himself showed that relationships, too, can exist independent of experience” [11].

(24) We note that we found a consistent and complete summary of the development of the concept of causality over the centuries in almost none of the manuals on the philosophy of science (English-language manuals and many voluminous Russian-language manuals, for example, those cited above [1, 2, 52, 54, 56, 60, 63] and others [69, 70]). The information presented is discrete and fragmentary, often mixed; individual thinkers are covered inconsistently across sources (sometimes they are included; sometimes they are not); a complete summary was not found anywhere (this caused an excessive number of general references in Table 1, since no single manual considered the views of all philosophers involved in the problem of causality). As mentioned, the only exception known to us is a very brief chapter in the English monograph of 2002 [68]; however, it is of a philosophical rather than natural-scientific nature and does not contain provisions regarding modern philosophers, ending with the views of J. Mill.

(25) Perhaps, due to V.I. Lenin’s [71] stereotypes, even the mention of D. Hume is missing in some voluminous Russian manuals on the philosophy of science (for example: [69]—2006, 136 pages; [70]—2007, 731 pages).

(26) As indicated, Table 2 presents the data from the review [8] (additions from [5, 29]) with a fairly in-depth search for relevant sources in PubMed. Of course, the definitions of causality in biomedical disciplines have been the subject of consideration in all epochs (we recall once again the reference to the question of “causation” of fever in connection with the arrival of a frigate in Liverpool in 1861 [12]). This problem was not neglected in domestic medicine either; it is enough to recall the monograph The Problem of Causality in Medicine (Etiology) by I.V. Davydovskii, which was published in 1962 [74]. Its author noted as early as that time that “a huge philosophical and natural-historical literature that covers the problems of causality in biology has been accumulated”; moreover, the cited sources (which were, however, few) included the whole collection The Problem of Causality in Modern Biology (Moscow: Izd. SSSR, 1961). Thus, large-scale specialized discussions took place long before the “talmudic period” [9] after 1965 [13]. For our time, one cannot but mention a very comprehensive section on the causality of effects in the manual on epidemiology by the leading Russian expert in the field of evidence-based medicine, V.V. Vlasov (2006) [33].