Abstract

This review considers various aspects of the causality criterion “Biological Plausibility,” which is sometimes replaced by the criterion “Coherence” (consistency with well-established medical and biological knowledge). The criterion is important for epidemiological evidence of causation, especially in disciplines such as ecology, toxicology, and carcinogenesis, in which it is difficult not only to perform experiments but even to observe the effect. Statistical approaches alone cannot prove that an association in epidemiology is truly causal, because it may result from chance, confounders, biases, or reverse causation. Without knowledge of the biological mechanism and a plausible model, such an association (especially a weak one) cannot be regarded as confirmation that the factor truly causes the effect. The essence of the criterion is the integration of data from various biomedical disciplines, including molecular studies and experiments on animals and in vitro. Three levels (D.L. Weed and S.D. Hursting, 1998) and four levels (M. Susser, 1977 and 1986) of attaining biological plausibility and coherence with existing knowledge have been proposed. Methodologies for integrating data from various disciplines are considered: Bayesian meta-analysis, based on Weight of Evidence (WoE), and teleoanalysis. The latter combines data from different types of studies to quantify the causal link between two associations, each of which can be proved separately, when it is difficult for several reasons, including ethical ones, to determine directly the relationship between the first cause and the final effect of the second. The teleoanalysis approach seems doubtful. Although the “Biological Plausibility” criterion is needed, it is, like almost all of Hill’s criteria (except for “Temporality”), neither necessary nor sufficient for evidence of causality.
Examples are presented (including the effects of radiation) that show that, first, “Biological Plausibility” depends upon the biological knowledge of the day and, second, there are real though seemingly implausible associations, and vice versa. This is the basis for criticism by some authors (A.R. Feinstein; K.J. Rothman and S. Greenland; B.G. Charlton; K. Goodman and C.V. Phillips), directed both at the criterion “Biological Plausibility” specifically and at the entire inductive approach based on causal criteria. However, the “Biological Plausibility” criterion remains important for proving causality in epidemiological studies, especially for those disciplines that public health relies on in making preventive decisions and developing safety standards.

To date, our series of publications in the journal “Radiation Biology: Radioecology,” devoted to causality issues in observational disciplines, including radiation-related ones, has expanded from the originally planned three reviews to at least four. Two reviews have already been published [1, 2], and two are planned. They are devoted to causation models and to a description of the historical origins, essence, limitations, breadth of application, and radiation aspect of the guidelines/criteria by which causality is established [1, 2], starting from the Henle–Koch postulates for infectious diseases (J. Henle and R. Koch, 1877–1893, Germany) with their subsequent improvements and ending with the criteria of Hill (A.B. Hill, 1965, Great Britain; M. Susser, 1973, United States), Evans (A.S. Evans, 1976, United States), and other authors who are less well known today [1, 2] (and subsequent communications).

The material published on the topic since the early 1950s (almost entirely from the United States [2]), of both an epidemiological and a scientific–philosophical nature, including articles and voluminous Western textbooks on epidemiology and carcinogenesis (for the hundreds of sources and more than 40 textbooks used by us, see [3]), is so ample that, in addition to the unifying Reports nos. 1–4 per se, separate detailed publications on the most important criteria were needed. Two reviews on the “Strength of Association” [4, 5] and one review on “Temporality,” with a description of evidence of reverse causality [3], have already been published. This work is devoted to another criterion, “Biological Plausibility,” because the corresponding material, given its volume, importance, and relevance (including for experimental radiobiology), is difficult to cover in a single section of a report.

Thus, reviews [3–5] and the review presented below are detailed preambles to Reports 3 and 4.Footnote 1

The importance of the problems under consideration lies in the fact that, in all descriptive disciplines, which include not only the natural sciences but also retrospective (history), socioeconomic, psychological, and other disciplines (see [3, 4]), evidence can be based on identifying statistically significant associations between cause and effect, between exposure and effect, between the characteristics of a group and its subsequent behavioral features, etc. In experimental disciplines, where the conditions of the experiment can be set, obtaining such evidence is straightforward (an approach is called experimental when at least one of the many variable factors can be controlled [8]). In an experiment, identification of a statistically significant association or correlation is the final stage of evidence [9] (which also applies to randomized controlled trials (RCTs) in medicine [8, 10]). However, for descriptive/observational disciplines, association does not mean causality, whatever the statistical significance of the correlation [1–7, 9–12].

In observational studies, where the variable(s) are not controlled, an identified association can be explained by the following unaccounted factors [13] (and other sources; see [1–5]):

• chance;

• interfering factors (“third factor,” “confounder”);

• systematic errors (“bias”);

• reverse causation.

All these factors with numerous examples were considered by us earlier [1–5] (except for the biases, a list of which can be found in many textbooks, and more than three dozen of which are given in the Oxford Epidemiological Dictionary [14]).

In this regard, for epidemiology, the establishment of a statistically significant association between two phenomena, in contrast to experimental disciplines, is only the very first, initial stage of proving causality [9] (and other works, see [3, 4]).

To confirm the causality of an association, following the Henle–Koch postulates of the 19th century for infectious diseases, a number of “precautions,” “viewpoints,” “guidelines,” “judgments,” “criteria,” “postulates,” etc. (these are essentially synonyms; see sources in [1–3]) were developed in epidemiology in the 1950s–1970s to assess the causality of chronic, noninfectious pathologies. The best known are Hill’s nine causality criteria, eight of which this authoritative English medical statistician merely brought together, having taken them from other authors [11]. Nevertheless, the criteria of causality in epidemiology [1–5, 14] and even in ecoepidemiology [7, 15, 16] are now almost always called Hill’s criteria or Hill’s guidelines.

Hill himself emphasized that, with the exception of “Temporality” (the exposure should precede the effect) [3], these are not strict rules-criteria but some principles, guidelines for assessing the degree of probability that the association is causal (“viewpoints”) [11].

According to the analysis of authoritative authors (Weed and Gorelic [17], etc.), as well as the data of our study of publications for 2013–2019 (we plan to consider these materials in more detail in Reports 3 and 4), some criteria seem to be the most important and are used more often.Footnote 2 Therefore, following the preamble reviews [3–5] mentioned above, which were devoted to two main criteria, in this work we consider the third of them, “Biological Plausibility.”

HISTORY OF THE “BIOLOGICAL PLAUSIBILITY” CRITERION: OVERLAPPING MEANINGS WITH “COHERENCE”

A suitable point, which was later called a “postulate,” first appeared in the work by E.L. Wynder in 1956 [18], containing the rules for establishing the causality of cancer: the agent must be shown to be carcinogenic in some animal species (not essential).Footnote 3 But in 1961 Wynder and Day [19] removed this point from the list when the postulates were extended to all chronic pathologies (i.e., the author seemed to change his mind).

However, A.M. Lilienfeld developed this point in 1957 [20], introducing the term “Biological Plausibility [of a causal hypothesis]” as such. The point was supported by experiments on animals and had gradations of magnitude. Then, in 1959, Lilienfeld [21] wrote about the biological plausibility of an association, which depends on our basic knowledge of the biology of the specific pathologies.Footnote 4 In 1960, P.E. Sartwell [22] listed five “points”: the strength of the association, its reproducibility, the correlation itself (for some reason, in third place), the temporal dependence (for some reason, in fourth place), and, last, “The Biological Reasonableness [of the association].” The logical order of the points was probably not the concern of Sartwell’s short paper [22]; only their meaning was important.

In the Report of the Surgeon General of the United States on the consequences of smoking, published in 1964 [23], this point is absent; it was replaced by “Coherence,” i.e., agreement with the known facts of the natural history and biology of the disease.Footnote 5 It can be seen that this point almost literally coincides with the “Biological Plausibility” of Lilienfeld from 1959 [21].

In 1965, Hill [11] supplemented “Biological Plausibility” (probably from the works by Lilienfeld from 1957 and 1959 [20, 21]) with the point “Coherence,” probably from the Report of 1964 [23] (there are no references in [11] to anyone [2]). However, Hill’s first criterion received a slightly different meaning, namely, the actual plausibility of dependence in a broad biological sense (not only in relation to a specific pathology).

M. Susser, at least from 1977 [25], developed the “Coherence” criterion, which essentially included “Biological Plausibility”.Footnote 6 In the widely cited textbook “Pharmacoepidemiology” (edited in 2000), “Coherence” and “Biological Plausibility” also completely coincide [27].

Thus, the “Coherence” criterion has supplanted “Biological Plausibility” in a number of sources. It should also be noted that the meaning of “Biological Plausibility” sometimes coincides with what is now understood under the criterion “Experiment” [16, 27].

In Hill’s criteria modified for ecoepidemiology, “Biological Plausibility” and “Coherence” are replaced by “Biological Concordance” [16].

According to the aforementioned study by Weed and Gorelic [17], the “Biological Plausibility” criterion was mentioned in 8 of the 14 reviews on the topic (fourth place among the nine criteria). In our analysis of publications for 2013–2019 (35 papers in which Hill’s criteria were used as a methodology), this criterion ranked third in frequency of use (first place went to “Strength of Association”; second place was shared, with equal counts, by “Temporality,” “Consistency,” and “Biological Gradient,” i.e., the “dose–effect” relationship).

ESSENCE OF THE CRITERION: INTEGRATION OF DATA FROM VARIOUS DISCIPLINES

The topic of introducing data from biology, medicine (“biomedical knowledge” [28]), toxicology, pharmacology, ecology, and other disciplines [2, 11, 15, 16, 23–59] into epidemiological evidence, as well as the reverse approach, the verification of biological facts by epidemiological patterns (this also takes place) [52], is very broad and indeed deserves a separate review. Such a review was carried out before us, though long ago, by Weed and Hursting in 1998 [29].Footnote 7

“Epidemiology, molecular pathology (including chemistry, biochemistry, molecular biology, molecular virology, molecular genetics, epigenetics, genomics, proteomics, and other molecular approaches), and in vitro animal and cell experimentation should be considered an important integration of evidence into determination of carcinogenic effects for humans” (Cancer Institute, United States, 2012) [53].Footnote 8 From the first paragraph, we can add here medicine, toxicology, pharmacology, and ecology.

This list shows that data from almost every area of the biomedical and molecular–cellular disciplines can make an important contribution to the search for evidence in epidemiological research of any practical importance. This, clearly, lends practical significance even to research of a seemingly fundamental and theoretical nature.

The essence of the criterion is generally clear; it is verbosely, but vaguely, explained in numerous works (“No clear rule-of-thumb exists for this criterion” [51]). This is probably why the available material sometimes resembles a “stream of consciousness” [29, 54, 60, 61].Footnote 9 Such material is difficult to systematize and categorize according to meanings and levels of significance.

Compliance with general scientific knowledge. The latter include scientific facts and laws that relate to the alleged causation (general scientific) [55]. The criterion refers to the scientific plausibility of the association [43].

Support by in vitro and animal laboratory experiments [27, 62, 63]. A question arises as to what extent the models obtained for cells and animals are applicable to humans? If the effect is observed in rats or monkeys, then how is it known that it will be observed in humans? Conversely, just because the effect is not found in animals, does it follow that it cannot occur in humans [62]? (The deplorable example of thalidomide taken by pregnant women is given below.)

“Despite these uncertainties, experience has shown that animal evidence can matter and, where it exists, should be taken into account” (2019 handbook) [62].Footnote 10 For example, teratogens in humans were also found to be teratogens in at least one animal species and exhibit some toxicity in the majority of the species tested. However, as a rule, the extrapolation of effects between species requires knowledge of their physiology [15] (the issues of the applicability of the laboratory approach, within the framework of the “Experiment” criterion, are planned to be considered in our further works).

The presence of biological and social models and mechanisms that explain the relationship [13, 34, 38, 62, 64, 65]. They are considered at different levels of biological and social organization, from molecular–cellular to population (for example, at the level of behavioral reactions contributing to the carcinogenicity of the factor (“social”)) [29]. “A causal relationship is considered biologically plausible when evidence of a probable causal mechanism is found in the scientific literature” [66] (based on [66], the idea arises that it is not necessary to carry out experiments for confirmation, because synthetic studies are sufficient).Footnote 11 T. Hartung in 2007 called it “mechanistic validation” [56].

Biological plausibility reflects the consistency/inconsistency of the theory explaining how or why exposure causes a disease, with other known mechanisms of causation of that disease [31]. For example, was it shown that the agent or metabolite can reach the target organ at all? [67]. “Scientific or pathophysiological theory” [27]. “Biological common sense” [15]. In medicine, it is customary to begin the study of a condition, for example, a rare congenital disease, with observations and epidemiological data, and end it with the study of the mechanisms of its inheritance and the essence of metabolic “breakdown” [24].

International and national agencies have compiled lists of agents and effects that are considered carcinogenic to humans. The criteria are, in addition to the actual epidemiological studies, data from animal experiments and ideas about the mechanisms. However, data on the mechanism alone can rarely be considered adequate for establishing the carcinogenicity of an agent in relation to humans, despite all the advances in understanding the molecular basis of this process [47] and all the “precautionary principles” [1]. According to [68], to make a conclusion about causality, in addition to the existence of a plausible mechanism, epidemiological data on the frequency of the effect are, nevertheless, required. Likewise, review [54] indicates that mutual support of mechanisms and dependencies is required to establish causal relationships. The same principle is followed by the International Agency for Research on Cancer (IARC) for carcinogenic factors: the assessment of causality depends on the presence of a plausible mechanism and probabilistic data, i.e., on the increased incidence of cancer in the population or on the relative risk [54, 57].Footnote 12

Three levels of achievement of biological plausibility according to D.L. Weed (cited in the thematic review by Weed and Hursting [29]; see also [51]):

(1) When a reasonable mechanism for association can be assumed, but there is no biological evidence (the beginning was made, according to [29], in [11] and repeated by subsequent authors, for example, [13]).

(2) When facts from the field of molecular biology and molecular epidemiology can be added to the mechanism (we add the following: including data on “surrogate endpoints,” i.e., biomarkers [7]). In [29], the IARC monograph from 1990 is given as an illustration; however, there are similar constructions in later documents of this organization. Particular attention is given to molecular and cellular indicators: adducts and DNA lesions, their repair, proliferation disorders, level of cell death, changes in intercellular connections, etc. (IARC-2006) [57].

(3) If there is sufficient evidence of how the causative factor affects the known mechanism of pathology [69, 70]. This is the most rigorous of the three approaches to biological plausibility [29]. Weed [39] indicates that, although it is difficult to propose a rule of thumb for determining the biological plausibility, if the factor of interest is the key factor in a biological mechanism or in its pathways, then it is more likely that the dependence will be causal. Mechanisms or pathways are demonstrated using laboratory experiments and molecular epidemiology [39].

For these three levels, Weed and Hursting [29] developed scholastic reasoning, not devoid of theoretical meaning but difficult to translate into practice. They point out that it is unclear how much evidence, and what kind, will turn the “suggested” (Hill and other authors) [11, 13] or “hypothesized” (Susser [71]) mechanism into a “coherent” mechanism (Report of the Surgeon General of the United States on the consequences of smoking from 1964) [23], i.e., one that not only “makes sense” [72] but is also defined by our detailed understanding of each step in the chain of events [23].Footnote 13 The question also arises as to what is required to assert that we “know” the mechanism [69, 70]. The point is that an explanation (i.e., a mechanism) is understood in a broad sense, because it is difficult to define what a “good explanation” is and how to take into account the “success” of mechanisms [54].

After all, it is all a matter of judgment whether there is enough evidence to rule out alternative explanations (“Weight of Evidence,” WoE [16, 34, 38, 40, 54, 56, 67]) [30]. Earlier, the Committee on Diet and Health of the National Research Council’s Commission on Life Sciences for a complex of six of Hill’s criteria (strength of association, dose dependence, temporal dependence, consistency of association, specificity, and biological plausibility) gave equal weight to all criteria except plausibility, which was downgraded as this provision depends on subjective interpretation [73], which later was considered “very subjective” [74].

Four levels of acquisition of biological plausibility according to M. Susser. This author, as mentioned above, had been developing the concept of the “Coherence” criterion, including “Biological Plausibility,” since 1977 [25] (if not earlier; see note 6), and in 1986 [36, 37] he outlined his concepts in detail. The criterion reflects the pre-existing theory and knowledge (the “Coherence” of other authors [11, 23]) and is interpreted broadly, including the following elements of coherence: (1) with theoretical plausibility (also named in [54]), (2) with facts, (3) with biological knowledge (i.e., “Biological Plausibility”), and (4) with statistical patterns including the dose–effect relationship (we plan to consider the set of criteria proposed by Susser in more detail in Report 4).

THE IMPORTANCE OF INTEGRATION OF BIOLOGICAL AND OTHER DISCIPLINES WITH EPIDEMIOLOGY (RADIATION EPIDEMIOLOGY): QUOTES

It is appropriate to provide statements by other authors (mainly from significant sources) reflecting this provision. They can be useful to experimenters in substantiating their work (for this purpose, the originals are also presented in the section “Notes”).

— Advances in the biological sciences and their integration into health care through molecular epidemiology make one causal criterion, “Biological Plausibility” (sometimes called “Biological Coherence”), an increasingly important consideration in causal inference (1998) [29].Footnote 14

— Biological plausibility represents fundamental concepts of data integration—the criterion suggests that epidemiology and biology must interact (2015) [34].Footnote 15

— Correlations and data must have biological and epidemiological sense (1983) [75].Footnote 16 Causality must have biological meaning (2016) [33].Footnote 17

— Biological plausibility is a criterion where biology and epidemiology merge (2016) [46].Footnote 18

— Demonstrating biological plausibility is not part of epidemiological methods. This, however, does not mean that epidemiologists can forget about it. Epidemiologists should understand the biology of the diseases they are studying, explain their hypotheses in biological terms, and propose and promote (sometimes even conduct) biological research to test hypotheses (2016) [33].Footnote 19

— Ultimately, biological processes regulate all pathologies and adverse health effects, without exception. This also applies to social and physiological processes, so that the harmful effects of economic losses must ultimately be carried out through biology (2016) [33].Footnote 20

— Data integration refers to the combination of data, knowledge, or reasoning from different disciplines or from different approaches in order to generate a level of understanding or knowledge that no discipline has achieved alone (2015) [34].Footnote 21

— Today, researchers considering causal inference must pool data from various scientific disciplines (2015) [34].Footnote 22

— Medical progress develops best when disciplines that focus on subcellular and molecular basic research work in tandem with epidemiology (2003) [31].Footnote 23

In the US judicial system, causality in toxic tort cases is sometimes judged on the basis of a wide variety of evidence of varying scientific value, from epidemiology, animal studies, chemical analogies, case reports, regulations, and other secondary sources (2004) [32], although epidemiology is of paramount importance (see [4]).Footnote 24

The Guidelines for Weight of Evidence analysis (WoE; see sources above) used by the International Programme on Chemical Safety (WHO; toxicology, chemistry, environment, including biota) for assessing the impact of agents include a modified version of Hill’s criteria, with “Biological Plausibility” listed first (2017) [40]. Notably, environmental protection agencies (the USEPA in particular) sometimes form their conclusions on the basis of animal experiments alone [31].

Data integration also applies to radiation disciplines:

— Radiation protection experts, both legislators and practitioners, maintain an understanding of current knowledge in radiobiology and epidemiology to support appropriate decisions (2009) [76].Footnote 25

— When epidemiology reaches its limits, it calls on radiobiology for help (2000) [77].Footnote 26

— “Radiation epidemiology and radiobiology work in tandem for the practice of radiation protection” (2010) [78].

— Integration of fundamental radiobiology and epidemiological research: why and how. Great importance is given to the use of basic radiobiological data in the development of methods for radiation risk assessment (2015) [79].Footnote 27

The meaning of the material presented is clear if we remember that epidemiology itself, like all descriptive/observational disciplines, establishes almost nothing but associations [1–5, 78]. This is how the absurd but statistically significant direct or inverse correlations cited previously arise: between the consumption of ice cream and mortality from drowning [1], between the export of lemons to the United States and the number of car accidents there [3], and between the average penis length of a country’s male population and its gross domestic product (GDP) [80].Footnote 28 Correlations from medicine and epidemiology that look more realistic may only seem so, because the absurdity is perhaps merely better hidden and more intricate.
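How a third factor can produce such a correlation is easy to illustrate with a small simulation. The sketch below is only an illustration under stated assumptions: the confounder is called "temperature" (the sources cited above do not name it here), all data are synthetic, and the effect sizes are arbitrary. Both variables depend on the confounder and not on each other, yet they correlate strongly until the confounder is regressed out.

```python
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def residuals(y, x):
    """Residuals of y after a least-squares straight-line fit on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return [b - my - slope * (a - mx) for a, b in zip(x, y)]

rng = random.Random(0)   # fixed seed so the run is repeatable
n = 5000

# Assumed confounder (hypothetical): standardized daily temperature.
temperature = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Each outcome depends on temperature plus its own noise, not on the other.
ice_cream = [t + rng.gauss(0.0, 0.5) for t in temperature]
drownings = [t + rng.gauss(0.0, 0.5) for t in temperature]

raw = pearson(ice_cream, drownings)
adjusted = pearson(residuals(ice_cream, temperature),
                   residuals(drownings, temperature))

print(f"raw correlation:               {raw:.2f}")
print(f"after adjusting for confounder: {adjusted:.2f}")
```

The raw coefficient is large, whereas the adjusted one is close to zero. In real observational data the confounder is usually unknown or unmeasured, which is why the statistical association by itself settles nothing.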

V.V. Vlasov [24] writes in his manual that “Biological Plausibility” is, in fact, a variant of the attribute “explainability of correlation.”

Most likely, this definition is the most concise and accurate. It can be used as a guide in everyday practice: allowing for all exceptions, it is unlikely that an absurd and inexplicable correlation will prove true or that a true correlation will prove inexplicable.

BAYESIAN ANALYSIS (META-ANALYSIS)

A systematic approach based on the integration of data from various disciplines is referred to in some works as “Bayesian analysis” [31] (also called the Confidence Profile Method or the Bayesian method [81, 82]) and sometimes as “Bayesian meta-analysis” [82–84].Footnote 29 It relies on the Weight of Evidence rather than on one specific study, following the same principles as Bayesian decision making [31, 81–84]. The method implies the inclusion of all available biomedical data (from case reports to animal studies) in one updated and standardized estimate that determines the overall strength of causation (see [31]).
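The idea of folding heterogeneous evidence into one updated estimate can be sketched with the simplest Bayesian tool, a conjugate normal-normal update. This is a generic illustration, not the Confidence Profile Method of [81, 82] itself; the function names, the prior, and every number below are hypothetical, and treating each source as an independent, unbiased estimate of the same log relative risk is a strong simplifying assumption.

```python
def update_normal(prior_mean, prior_var, estimate, estimate_var):
    """One conjugate normal-normal update.

    The posterior mean is the precision-weighted average of the prior
    mean and the new estimate; the precisions (1 / variance) add up.
    """
    precision = 1.0 / prior_var + 1.0 / estimate_var
    mean = (prior_mean / prior_var + estimate / estimate_var) / precision
    return mean, 1.0 / precision

def pool(prior, evidence):
    """Fold a list of (label, estimate, variance) items into the prior."""
    mean, var = prior
    for _label, estimate, estimate_var in evidence:
        mean, var = update_normal(mean, var, estimate, estimate_var)
    return mean, var

# Hypothetical log relative risks with variances. A larger variance
# stands for weaker or less directly relevant evidence (an assumption).
evidence = [
    ("case reports",   0.60, 1.00),
    ("animal studies", 0.45, 0.25),
    ("cohort study",   0.30, 0.04),
]

# Sceptical prior centred on "no effect" (log RR = 0), also hypothetical.
posterior_mean, posterior_var = pool((0.0, 1.0), evidence)
print(f"posterior log RR: {posterior_mean:.3f}, variance: {posterior_var:.3f}")
```

The result does not depend on the order in which the sources are added, and the most precise source dominates. How much weight animal or case-report data deserve relative to epidemiological data is precisely the judgment that such a scheme cannot supply on its own.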

According to authors from the United States [31], the majority of clinicians in their daily practice use Bayesian analysis in conjunction with inductive reasoning, a practice known as differential diagnosis.

TELEOANALYSIS: A QUESTIONABLE KNOWLEDGE LINKAGE

Meta- and pooled analyses are used in a wide range of disciplines [85] (and many others), whereas the term “teleoanalysis” is little known. Three sources for this term can be retrieved from PubMed, and the first of them, by N.J. Wald and J.K. Morris (2003) [86], is foundational. The authors of [86] thank J. Aronson for proposing the term (from “teleos,” which means “complete”). The literal meaning is a combination of data from various types of studies [86]. The difference from meta- and pooled analyses is that data from different classes of evidence are combined. The purpose is to link quantitatively, in terms of the final causation, two associations each of which can be proven, when the relationship between the first cause and the final effect of the second is difficult to establish directly for a number of reasons, including ethical concerns (experiments on people).

The following example is given in [86]. Consumption of saturated fat is known to increase the risk of coronary heart disease, but the magnitude of this effect cannot be established experimentally, because long-term trials based on dietary habits are impractical. To estimate the overall magnitude of the effect, it is proposed to pool data using the cholesterol concentration as an intermediate factor in the causal pathway. Two effects are investigated: (a) that of reduced fat consumption on the serum cholesterol concentration (which can be done in small experimental studies) and (b) that of the cholesterol concentration on the risk of coronary heart disease (which can be done in epidemiological studies). These data are then combined quantitatively to assess the effect of dietary fats on the pathology.
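The arithmetic that such a linkage implies can be sketched as follows. This is not the procedure of [86]: it is a minimal illustration that assumes a log-linear relation between cholesterol and risk, independence of the two estimates, and a first-order (delta-method) approximation for the combined standard error. The function name and all input values are hypothetical.

```python
import math

def linked_relative_risk(delta_chol, se_delta, log_rr_per_unit, se_log_rr,
                         z=1.96):
    """Chain step (a) and step (b) into one relative risk.

    delta_chol      -- change in serum cholesterol caused by the diet
                       change, from step (a), in mmol/L
    log_rr_per_unit -- log relative risk of disease per 1 mmol/L of
                       cholesterol, from step (b)
    Returns (relative risk, lower bound, upper bound) of an approximate
    interval, assuming the two estimates are independent.
    """
    log_rr = delta_chol * log_rr_per_unit
    # Delta method for a product of two independent estimates.
    se = math.sqrt((log_rr_per_unit * se_delta) ** 2
                   + (delta_chol * se_log_rr) ** 2)
    return (math.exp(log_rr),
            math.exp(log_rr - z * se),
            math.exp(log_rr + z * se))

# Hypothetical inputs: the diet lowers cholesterol by 0.5 mmol/L (SE 0.1);
# each 1 mmol/L corresponds to a log relative risk of 0.4 (SE 0.05).
rr, lower, upper = linked_relative_risk(-0.5, 0.1, 0.4, 0.05)
print(f"relative risk {rr:.2f} (approx. interval {lower:.2f} to {upper:.2f})")
```

The sketch propagates sampling error only. It says nothing about confounding in step (b) or about whether the intermediate factor carries the whole effect of the diet.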

The teleoanalytic approach, as far as we can judge, is not widespread. In addition to [86], we encountered the term in three other papers (one of which PubMed did not identify) [87–89]. Its application (mainly to studying the effect of drugs) was found only in [86], although a similar approach (without the term) can be found in studies assessing the toxicity of compounds for humans (see, e.g., [34]). However, teleoanalysis differs from the simple integration of data to reveal biological plausibility in that it gives a quantitative estimate linking two separate associations, which is questionable. No one can know what quantitative biological patterns hold in vivo precisely where they are difficult or impossible to measure. The conclusion that a certain diet will, through the cholesterol concentration, lead to a certain frequency of coronary heart disease, without a direct study of the diet–pathology correlation, seems far-fetched and even partly unfalsifiable, because the influence of confounders is likely.

For example, the authors of [90] give a hypothetical causal chain of events consisting of five links, each of which has a probability of 90%. In this case, the probability of the whole linkage, from the first link to the last, will be 0.9⁵ ≈ 0.59, or 59%. If we take a 75% probability per link (which is also quite high), the output is only 0.75⁵ ≈ 0.24, or 24%. One may wonder what would remain of the teleoanalysis of the correlation between diet and coronary heart disease in this case.
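The arithmetic of this example can be checked with a few lines of code. The sketch assumes, as the multiplication in [90] implies, that the links are independent and equally probable; the function name is illustrative.

```python
def chain_probability(link_probability: float, links: int) -> float:
    """Probability that every link of a causal chain holds.

    Assumes the links are independent and share the same probability,
    so the probabilities simply multiply.
    """
    if not 0.0 <= link_probability <= 1.0:
        raise ValueError("link_probability must lie in [0, 1]")
    if links < 0:
        raise ValueError("links must be non-negative")
    return link_probability ** links

for p in (0.90, 0.75):
    print(f"{p:.2f} per link, 5 links -> {chain_probability(p, 5):.2f}")
# prints:
# 0.90 per link, 5 links -> 0.59
# 0.75 per link, 5 links -> 0.24
```

Under the same assumptions, a two-link chain such as diet, cholesterol, and heart disease loses less, but the loss grows with every intermediate step that is added.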

Possibly, this approach is justified only for the “precautionary principle” [1, 51], when at least some data are needed for making decisions.

On the other hand, ideologically, the method of teleoanalysis allows various speculations on the joining of refined and clear fragments of generally unfinished and unclear studies. In addition, the term appears impressive, like meta- and pooled analysis and like “speaking in prose” in Moliere.

NONABSOLUTE NATURE OF THE CRITERION: WHAT IS BIOLOGICALLY PLAUSIBLE DEPENDS ON THE CURRENT BIOLOGICAL KNOWLEDGE

This statement by Hill from 1965 [11] (“What is biologically plausible depends upon the biological knowledge of the day”) is often treated as an original thought [28, 29, 49, 87, 91], but it can already be found in the works of earlier researchers.

In a publication by A.M. Lilienfeld from 1957 [20], the following is stated:

(a) There are historical examples in which a statistical association did not initially conform to current biological concepts. The advancement of knowledge changed the concepts, which then became consistent with the previously identified associations.

(b) On the contrary, there are examples where an association was interpreted as consistent with existing biological concepts, but a later interpretation of the association revealed an error.Footnote 30

Similar provisions are expressed in the article by Lilienfeld from 1959 [21] with reference to the previous article of 1957 [20]: the interpretation of any correlation is limited by our biological knowledge, and it may be that an association that does not seem biologically plausible today will turn out to be such when our knowledge expands.Footnote 31

In 1960, P.E. Sartwell [22] noted about his point “the biological reasonableness of an association” that one must be careful with this provision, because judgments made on its basis are supported by imperfect knowledge existing at a particular time.Footnote 32

After Hill, a repetition of this thesis can also be found in serious sources (1991–2018) [15, 30, 43, 50, 67, 92]. Once again, the danger of the situation should be noted: there is a possibility that incorrect and absurd associations declared by someone, as well as data of this kind, can be justified by the fact that the time has not yet come and that science does not yet know.

Historical examples illustrating the two points cited by Lilienfeld (1957, 1959) [20, 21] are of interest:

• The haberdasher J. Graunt investigated the risk factors for plague in London in 1662. All four points of his advice (to avoid contaminated air brought by ships in the port, crowding, and contacts with animals and sick people) turned out to be constructive, despite the absence of any biological theories [48, 93].

• An extreme increase in scrotal cancer in English chimney sweeps was identified by P. Pott in 1776 [11, 15, 48, 93], 150 years before the start of research on chemical carcinogens. However, after the work by Pott, the Danish parliament issued a decree on “prevention”: chimney sweeps were ordered to wash every day [15].

• In 1842, D. Rigoni-Stern, having analyzed the statistics for Verona, discovered that mortality from cervical cancer was characteristic of married rather than single women, indicating the influence of sexual or reproductive activity. The fact was forgotten until the early 1950s, when the rarity of this pathology among Catholic nuns was noted. In the 1960s, a search for a disease-causing factor associated with sexual behavior was carried out, and in the 1970s the herpes virus (HSV II) was proposed as such a factor, which at that time was regarded as a biologically plausible hypothesis. However, subsequent epidemiological studies have not confirmed this correlation. In the 1980s, with the development of the DNA hybridization method, the true factor was discovered. It turned out to be human papillomavirus (HPV), although of specific genetic subtypes [70]. The author of the review [70] noted that it is difficult to imagine how the evidence would have been obtained without a fruitful intersection of epidemiological and biological approaches.

• In 1848–1849, W. Farr found an inverse association between the elevation of residence above sea level and cholera mortality in London [94]. The phenomenon was consistent with the theory of “miasmas,” which prevailed at that time, and was interpreted as plausible. Later it turned out that the association also holds under the microbial theory: the elevation of water sources was inversely correlated with the etiological factor (in publications on the topic, the example is given in [20]).

• In 1849–1854, J. Snow identified a correlation between drinking water pollution and cholera incidence in London [95]. Snow’s findings did not appear biologically plausible in light of the miasma theory. However, when the microbial theory became generally accepted, the correlation between water pollution and cholera became scientifically substantiated (in publications on the topic, an example is given in [20] and later repeated in [11, 68, 96, 97]).

• In 1861, D.W. Cheever (United States), warning about the danger of “meaningless correlations,” pointed out that it would be ridiculous to attribute typhus, which someone caught while spending the night on an emigrant ship, to parasites on the bodies of sick people. In his opinion, this was just a coincidence [98] (in publications on the topic, the example was originally given by Sartwell in 1960 [22] and later repeated by Hill [11] and then by Rothman and Greenland [43, 45, 99]).

• At the dawn of AIDS research, its causes were associated with the use of amyl nitrites (“poppers,” the inhalation of which temporarily increases, so to speak, temperament) by homosexuals [100].

These are the most popular examples (apart from the second and the last one) regarding the temporal conjuncture of plausibility, which are given in the textbooks on epidemiology and reviews. However, we can recall more cases from the history of medicine, at least with the therapy of all diseases by bloodletting or with the drinking of a living culture of Vibrio cholerae by M. von Pettenkofer without consequences, which “confirmed” the hygienic theory of “miasmas” [101].

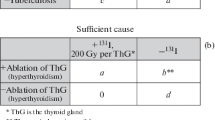

The concepts of biological plausibility have evolved greatly in both radiation epidemiology and radiobiology. Figure 1 shows the development of global radiation safety standards (RSS) based on data from [102].

Figure 1. The evolution of radiation protection standards (RPSs). Plotted (Statistica, ver. 10) according to U.S. Department of Energy (DOE-1995) [102], in abbreviation. For 1958, 1990, and 1999, RPSs are presented for nuclear workers and the public; for 1990, RPSs are presented from BEIR-V (50 mSv) and ICRP (20 and 10 mSv), supplemented by RPS-99 (Russia). The initial doses in [102] were presented in rem/year (rem is roentgen equivalent man; 1 rem = 0.01 Sv [103]). The values of dose standards are shown on the plot.

The decrease in the permissible dose by two orders of magnitude in less than 70 years fully illustrates the evolution of ideas about the biological plausibility of the effect of radiation. At first, the concepts of “tolerance doses” (until 1942) and then the “maximum permissible exposure” (until 1950), meaning something “harmless,” were used. In 1950, the concept changed to the harm of irradiation at any dose, followed by a reduction of the dose standard to a level “as low as reasonably achievable” [102].

While the concept of the threshold radiation harmlessness prevailed (note that that “threshold” was very high), the ideas about its effects were, in the modern view, anecdotal in nature. Radiologists during the First World War scanned the patient’s chest standing directly behind him and holding the X-ray film in their hands [104].

“Healthy” radium-containing water “Radithor” (2 µCi in 16.5 mL per bottle; half 226Ra and half 228Ra [105, 106]), which in 1932 killed the famous celebrity E.M. Byers (entrepreneur and golfer) [105–108]Footnote 33, as well as rectal suppositories with radium (1930), which “charge like a battery” [114, 115] (recall the advertisement for the “Energizer” bunny), are not the most egregious examples.Footnote 34 Popular in France in the 1930s were face creams and other cosmetics “enriched in thorium and radium,” manufactured under the Tho-Radia brand, as well as the similar Doramad toothpaste in Germany [105, 116], surprising, though not overly so, after all that happened in those days. Radium was then added everywhere: in chocolates, in eye drops, in Christmas tree decorations, and in children’s books with luminous letters. Peculiar siphons to enrich drinking water with radium were widely used. Many such examples are given in the manual on radiation ecology by G.N. Belozersky published in 2019 [116], and photos of all these wonders can be found in Russian and English-language Internet blogs.

However, most impressive is the relatively recent (1950–1951) children’s construction game “Gilbert U-238 Atomic Energy Lab” (there are also other similar games), with teasers like “Build yourself a nuclear power plant, buddy!” (“Showing you the real atomic decay of radioactive material!” [117]).Footnote 35 The box with the game, in addition to the electroscope, etc., included containers with four types of uranium ores, as well as with 210Pb, 106Ru, 65Zn, and 210Po [117].

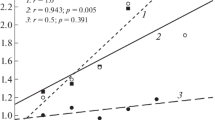

The evolution of ideas about hereditary genetic changes in humans after radiation exposure (i.e., about defects, pathologies, and anomalies in unirradiated children of irradiated parents) is also very illustrative. Such changes were found first in Drosophila (1927) and then in mice and other animals (1950s) [118, 119]. The world froze in anticipation of masses of radiation mutants among people as a result of nuclear weapons tests, radioactive pollution of the environment from nuclear energy, the progressive use of radiation in medicine, etc. All this was reflected in the mass media, cinema, and science fiction literature of the 1960s–1970s (and later in computer games), where horrible mutants with incredible abilities appeared [119].

As time passed, mutants were born neither to the victims of Hiroshima and Nagasaki nor to tens of thousands of survivors after radiotherapy. Finally, it was concluded that the hereditary genetic effects in irradiated people cannot be detected in real studies, because even the theoretical increase in the natural mutational background is so small at any plausible doses that do not eliminate fertility. If at present someone detects radiation mutants in humans, this does not meet the plausibility criterion (more precisely, coherence with the available epidemiological data), as well as the provisions of the UNSCEAR, ICRP, and BEIR [8, 118–122].

In fact, according to recent data of Russian authors, at the level of microsatellite polymorphisms, gene exons, and mtDNA heteroplasmy, such a possibility was apparently shown for irradiation with high doses (2–3 Gy) shortly before conception (workers of the Mayak PA) [123, 124].Footnote 36 However, in other pilot studies by the groups headed by A.V. Rubanovich (Kuzmina N.S. et al., 2014; 2016 [125, 126]), no transgenerational transmission of even epigenetic changes (hypermethylation of CpG islands in the promoters of a number of genes) was detected in the descendants of the liquidators of the Chernobyl accident and workers in the nuclear industry, which fits into the big picture. But, for the parental contingent itself, including the workers at the Mayak PA, the above-mentioned epigenetic changes depending on radiation exposure and age were clearly identified [125–127].Footnote 37

Thus, the influence of temporal conjuncture on the biological plausibility is evident in many disciplines. Nevertheless, according to Daubert’s rule [4, 31, 32], expert testimony in US courts should be judged on the basis of current scientific knowledge only, and not on the possibility that additional information may become available in the future.Footnote 38 No guesswork as to what new discoveries might reveal is allowed: the courtroom is not a place for scientific guesswork, not even as inspiration. The law lags behind science, but does not lead it [32].Footnote 39

NON-ABSOLUTION OF THE CRITERION: REAL BUT IMPLAUSIBLE ASSOCIATIONS AND PLAUSIBLE BUT NOT REAL ASSOCIATIONS

Earlier, we gave examples of the effects of confounders [1, 4], chances, and reverse causality [3] in the formation of associations. Correlations such as ice cream consumption–drowning frequency, the correlation between alcoholism and lung cancer, between smoking and liver cirrhosis, between the use of amyl nitrites and the incidence of AIDS, between smoking cessation and an increase in pneumonia and lung cancer, as well as other correlations considered are all examples of the first part of the heading—the associations seem to be real, but their biological (and sometimes logical) plausibility is absent.

More similar facts can be added.

(1) There was a deplorable situation with thalidomide, which was not a teratogen for most experimental animals, but proved teratogenic in 40% of cases when taken by pregnant women, causing severe pathologies such as underdevelopment of the limbs, etc. (report of 1961) [93, 128].

(2) The second tragedy (the authors of [129] call it the “intellectual pathology of physicians”) is associated with the rather long-term use (1942–1954) of oxygenation for premature infants (high-dose oxygen therapy). Although the biological basis and a plausible mechanism were well understood, nobody could have foreseen the consequences: retrolental fibroplasia (overgrowth of connective tissue behind the lens) leading to blindness [35, 129]. The procedure blinded approximately 10 000 children. Evidence of causality was difficult to obtain, because the defect was initially assumed to be of neonatal origin. Associations were also found with fetal abnormalities, multiple gestations, maternal infections, light, vitamin and iron supply, vitamin E deficiency, and hypoadrenalism. In addition, animal experiments yielded no data confirming a corresponding effect of either hyperoxia or hypoxia. However, some time later, the reality of the correlation was demonstrated both by a cohort study, which showed that the defect developed in children with initially normal eyes, and by a counterfactual experiment involving the cancellation of exposure (for the concept, see [1, 11], etc.), which led to a sharp decline in the iatrogenic epidemic (however, with the cessation of oxygenation, an increase in the mortality of premature infants from respiratory distress syndrome was observed) [129].Footnote 40

(3) The recommendation by Dr. B.M. Spock to put infants to sleep prone to reduce the risk of sudden infant death syndrome is based on the plausible explanation that it prevents suffocation with vomiting. However, it turned out that mortality in the supine position was two times lower than in other positions [33, 90].

In a number of cases, the results of randomized controlled trials (RCTs) fundamentally contradicted the data of numerous epidemiological studies, which significantly and systematically reveal certain associations (by the way, biologically plausible). The publication by C. Lacchetti et al. in 2008 [130], which is included in the American Medical Association guidelines on the use of medical literature in evidence-based medicine, gives 22 such examples.

In principle, RCTs are regarded as the “gold standard” of evidence [58, 67, 93, 96]. Although they have many limitations [131–133] (and many others), so that the “gold standard” of RCTs is directly denied in a number of works [134–136], the official doctrine assigns this purely experimental approach the highest rank among the methods of evidencing causality for humans (see manuals for the past two decades [58, 93, 96]). Below are the most well-known examples of the discrepancy between the results of RCTs and observational studies.

(4) Potassium fluoride reduced spinal fractures in an observational study in 1989, but not in the subsequent RCT in 1990 (see [131]).

(5) Epidemiological and laboratory data led to the conclusion that β-carotene reduces the risk of cancer (article in “Nature” in 1981 [137]). However, two RCTs in the 1990s demonstrated that β-carotene increased lung cancer mortality in smokers, although this contradicted the entire body of epidemiological and biological evidence (see [51]).

(6) Vitamin E in observational studies in 1994 reduced the risk of coronary heart disease, but an RCT in 1994 did not confirm this effect (see [131]).

(7) The most well-known example of the inconsistency between the results of RCTs and observational epidemiological studies is hormone replacement therapy (HRT) in menopause. This example, mainly with an emphasis on the absoluteness of RCT data (with few exceptions [96]), is discussed in textbooks and guidelines on epidemiology and evidence-based medicine [24, 35, 93, 96, 131, 138]. Early observational studies demonstrated the beneficial effects of estrogen therapy, including a reduction in the incidence of coronary and cardiovascular pathologies, as well as in overall mortality (six case-control studies, three cross-sectional studies, and 15 prospective studies; see the review by M.J. Stampfer and G.A. Colditz from 1991 [139]). It should have been alarming, however, that mortality from homicide and suicide in women receiving HRT was also reduced: this implausible dependence serves as a negative control indicating the presence of confounders (for example, lifestyle, habits, and social status) [142]. Indeed, the very first RCT in 1998, as well as the subsequent RCTs (2002–2012; see [138, 143]), did not confirm the effects. Moreover, an increased risk of coronary diseases, strokes, thrombosis, pulmonary embolism, and breast cancer was found [35, 138, 143, 144], so that one of the RCTs had to be stopped ahead of schedule [144]. All this was reflected in the media, sometimes in a distorted form (according to [143], the relative risk of breast cancer (1.24) was transformed into a 24-fold increase in the risk of breast cancer after HRT). Nevertheless, the positive effects of HRT prevail over the negative ones for women aged 50–60 years, whereas at an older age mainly adverse consequences become significant (see [96, 143]).
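The scale of the media distortion mentioned in [143] is easy to see in a minimal sketch that converts a relative risk into a percentage excess over the baseline. The function name `excess_risk_percent` is hypothetical and used only for illustration; the value 1.24 is the relative risk quoted above, and 24.0 is the relative risk that a genuine 24-fold increase would correspond to.

```python
def excess_risk_percent(relative_risk: float) -> float:
    """Excess risk over the unexposed baseline, in percent.

    A relative risk of 1.0 means no difference between the exposed and
    the unexposed, so the excess is (RR - 1) * 100.
    """
    if relative_risk < 0.0:
        raise ValueError("relative_risk cannot be negative")
    return (relative_risk - 1.0) * 100.0


reported_rr = 1.24      # relative risk of breast cancer quoted in the text
misreported_rr = 24.0   # what a "24-fold increase" would actually mean

print(f"RR {reported_rr:.2f} -> {excess_risk_percent(reported_rr):.0f}% excess risk")
print(f"RR {misreported_rr:.2f} -> {excess_risk_percent(misreported_rr):.0f}% excess risk")
```

A relative risk of 1.24 is thus a 24% increase over the baseline, about two orders of magnitude less than the 24-fold increase reported in the distorted version.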

There are other, no less spectacular facts from other areas.

(8) For example, the lack of a correlation between psychoemotional stress (including severe stress, such as the death of children and loved ones [145]), including depression, and an increase in the risk of various types of cancer [145–149] seems strange. Associations between psychological stress and malignant breast tumors, found in some studies [145, 150], do not meet the criterion “consistency of association” (not found in a large Danish study [151]; see also [145, 147, 150]). However, the biological plausibility here reaches a high degree of significance: depression of the immune system, hormonal (neuroendocrine) shifts [93, 146, 152, 153], and oxidative stress associated with psychoemotional stress, which leads to DNA damage and cytogenetic disorders [121, 150, 152–154]. Moreover, experiments on animals have repeatedly demonstrated that psychoemotional stress in various models actually increases the level of DNA damage (see [121, 150, 154]).

However, epidemiological studies have failed to demonstrate this association [145–149]. Nevertheless, it is indicated that stress can act indirectly, reinforcing bad habits and unfavorable lifestyle, which may cause cancer [146, 148]. Theoretically, stress can also promote tumor growth and progression through decreased immunity and changes in hormonal status [148, 152, 155, 156]. However, stress correction in cancer patients did not change their survival [148, 157].

It can be seen that all of the listed grounds for biological plausibility [93, 121, 146, 150, 152–154] do not necessarily indicate epidemiologically real consequences [145–149]. On the website of the National Cancer Institute of the United States, the answer to the question as to whether psychological stress can cause cancer states that the evidence is weak and inconsistent [148]. On another official website, that of Cancer Research UK, the world’s largest cancer research charity from England, it is also written that the majority of studies did not show an increase in cancer risk due to stress [149]. Finally, in the IARC document (IARC 2014; updated 2018), it is noted (probably with reference to [147]) that meta-analysis of data for 12 European cohorts did not reveal an increase in cancer incidence (including major cancer types) under various types of stress at work [158].

(9) The mechanism of the anesthetic effect of acupuncture is unclear. Many researchers consider it biologically implausible that the insertion of needles into the body and their rotation there can relieve pain and, therefore, do not believe in the effectiveness of acupuncture [58].

(10) Similarly, the effect of homeopathy is regarded as not having a biological mechanism [87] (except for the weak and long exaggerated [159] placebo effect [87]).

NON-ABSOLUTION OF THE CRITERION: A BIOLOGICALLY PLAUSIBLE MECHANISM CAN EASILY BE FOUND FOR EXPLANATION OF EACH ASSOCIATION

This is a statement from the editorial by G. Davey Smith in 2002 [160], which is a response to a certain “standard argument” according to which hypotheses based on a good scientific understanding of pathogenesis can hardly be false. Here, it is appropriate to draw an analogy with the same statement for the criterion “Analogy,” about which K. Rothman (including with various coauthors) said that “analogies abound” [74], since they are associated with the imagination of researchers [42, 43, 45, 99].

Such statements, in our opinion, hardly make sense, because they contradict the above thesis reproduced in many epidemiological sources: proof of causality is ultimately a matter of judgment [16, 37, 38, 67].

CRITICISM OF THE CRITERION: LACK OF SCIENTIFIC STANDARDIZATION, SUBJECTIVISM, DOGMATISM, STATEMENT THAT THE COMBINATION OF EVIDENCE FROM VARIOUS DISCIPLINES CANNOT BE AN INDEPENDENT DISCIPLINE, AND OTHER DISAGREEMENTS

We are aware of only a few authors who have criticized the inductive approach to proving causality using guiding principles or criteria. In fact, among the hundreds of sources of different levels that we examined, no others were found. Therefore, most likely, there are no other such authors, at least among those that are known.

(1) Chronologically first is the study of 1979 [161] performed by a wide-profile critic, creator of the term “protopathic bias” (see [3]), one of the “fathers” of clinical epidemiology [162], A.R. Feinstein (1925–2001, United States). In the previous section, we mentioned the work by Jacobson and Feinstein from 1992 [129], in which the “intellectual pathology of physicians” who biblically blinded 10 000 infants by oxygenation, was considered. We also know the publication by Feinstein about meta-analysis, where this approach (but not pooled analysis) is called “statistical alchemy of the 21st century,” because it is “the idea of getting something for nothing, while simultaneously ignoring established scientific principles” [163]. Feinstein also did not pass over Hill’s criteria [161]. As applied to “Biological Plausibility” (in the form of “Coherence”), in [161] he noted that this demand provides for biological logic. The latter, however, is based on paradigmatic appropriateness rather than on rigorous evidence. The author concluded that there are no scientific considerations or scientific standards when applying the criteria of causality, because scientific principles are mentioned neither among the statistical nor among the logical components of the complex [161].Footnote 41

According to Feinstein, the inclusion of some experimental “standards,” similar to those contained in Henle–Koch’s postulates of infectious diseases, will lead to the appearance of scientificity [161]. However, as can be seen in Report 2 [2], Henle–Koch’s postulates are also not amenable to standardization.

In general, Feinstein’s criticism of the “Biological Plausibility” (and “Coherence”) criterion can be formulated as follows: since the criterion is based only on biological logic, it requires acceptance of the initial paradigms of appropriateness and requires judgment, but not rigorous proof. Therefore, the criterion really does not work. This conclusion by Feinstein, however, abolishes the acceptability in practice of the evidence-based methodologies used by environmental agencies [7, 15, 38, 50, 164] and IARC [57, 165, 166], as well as by other organizations that use weight-of-evidence approaches (WoE; see sources above) and Bayesian analysis (meta-analysis) discussed here earlier.

(2) The second is Professor of Epidemiology K.J. Rothman (born in 1945) [41, 42, 44, 74, 167] and his frequent coauthor, no less famous researcher of causality, Professor of Biostatistics and Epidemiology S. Greenland (born in 1951) [43, 45, 65, 99, 168], both from the United States, whose works were mentioned by us many times. We hope to consider their platform in more detail in Report 4. Here, we will only mention briefly that these authors completely deny the inductive approach and, judging by all the signs, the probabilistic causality, reducing everything to a finite multifactorial determinism [41–43, 45, 74, 99] (we have already discussed this in part in [2]). For Rothman, such views are found at least since the first edition of his “Modern Epidemiology” in 1986 [41] (information is taken from the manual [169]) and are then reproduced in the second edition of 1998 [42] (guiding principles are burdened with reservations and exceptions; cited by [170]) and in the third edition of 2008 [43].Footnote 42 We had only the latter at hand.

Rothman and Greenland found a certain exception, sometimes speculative, for each criterion, including the need for the association itself.Footnote 43 As a result, it turned out that no criterion (except for “Temporality,” which was recognized) is even a weak “point” that allows any judgments [41–43, 45, 74, 99]. In other words, Rothman and Greenland always offered a rebuttal to eight of Hill’s nine propositions, somewhat similar to the argument of a character in Soviet cinema “What if he was carrying rifle cartridges?” (or, even worse, the character by V.M. Shukshin who “cut off”; the references are to a Russian film and a Russian book, respectively).

However, the authority of Rothman and Greenland in a kind of “theoretical epidemiology” (the current system of epidemiology is largely determined by Rothman’s thinking; manual of 2016 [169]), that is, in epidemiology materials reflected not in the methodological documents of organizations that make decisions and are responsible for them but in textbooks or reviews, is quite large.Footnote 44 Rothman is often referred to in the latter as having buried causal criteria. Based only on manuals and reviews, sometimes one can make the erroneous conclusion that today no one uses any causal criteria. We plan to consider in detail the issue of the breadth of application of Hill’s criteria and, in our opinion, their logical inseparability from any evidence in descriptive disciplines in Report 4.

As for “Biological Plausibility,” the authors mentioned call it an “important problem.” However, in their opinion, this criterion is both biased and non-absolute, since it is often based only on previous beliefs rather than on logic or data. According to Rothman and Greenland, attempts to quantify on a scale from 0 to 1 the probability of what is based on previous beliefs and what is based on new hypotheses using a Bayesian approach demonstrate dogmatism or adherence to the current public fashion. This leads to bias in evaluating the hypothesis [45, 99]. Neither Bayesian [45, 99] nor any other [43] approach can turn plausibility into an objective causal criterion [43, 45, 99].Footnote 45 In assessing a new hypothesis, this criterion can be used only in a negative sense (in order to indicate the difficulty of its application) [45, 99].Footnote 46

In the last publication by Rothman on this topic known to us (2012), the characteristic of the criterion of interest is very brief: “very subjective” [74].

(3) The next in time (after the first edition of “Modern Epidemiology” by Rothman in 1986 [41]) is the publication by Professor of Theoretical Medicine B.G. Charlton in 1996 [171] (England). In 2019, according to data from the Internet, Charlton was still teaching. Judging by PubMed (last publication in 2012), he is also a wide-profile critic with rather unusual works: “The Zombie Science of Evidence-Based Medicine…,” 2009 [172]; “Are you an Honest Scientist?”, 2009 [173]; “The Cancer of Bureaucracy: How It Will Destroy Science, Medicine …,” 2010 [174], etc. For example, the following statements are impressive: “it is obvious that Evidence-Based Medicine was, from its very inception, a zombie science: reanimated from the corpse of clinical epidemiology” [172].

Regarding the significance of the criteria of causality, Charlton, similarly to the above-mentioned researchers, takes quite extreme positions of denial (like the firemaster of the Russian classics, who was so right-wing that he did not even know which party he belonged to).

Speaking about multidisciplinary and interdisciplinary approaches in epidemiology, Charlton notes their mosaic nature. Each piece of the mosaic consisting of specific data can be assessed for scientific validity, but the method by which these elements are connected (glued together) is not scientific, because, according to [175, 176], a combination of evidence from different disciplines cannot be an independent scientific discipline. If gaps in evidence for one discipline are replaced or circumvented using data from another discipline, and vice versa [177], then incompatibility is combined. Indeed, since data obtained using a number of “incommensurable” (or qualitatively different) approaches, methodologies, and methods of evidence are integrated, epidemiology should rely on judgment, on “common sense,” to a greater extent than other sciences [171].

Charlton compared evidence in epidemiology with a “network” of related data from various disciplines. Insufficiency or incorrectness in any cell does not break and does not eliminate the entire network, but only weakens it, because it is a network. That is, epidemiological hypotheses are very weakly falsifiable (according to the provisions by K. Popper [1, 37]): conflicting results can only change the equilibrium probability of multifactorial causation. This explains the long life and stability of some epidemiological hypotheses even in the face of conflicting facts (see the examples above in the previous section). On the contrary, “true” scientific hypotheses are built on a chain of evidence and separate evidential links and, therefore, the elimination of any link as incorrect immediately and completely eliminates the hypothesis itself [171].

Among the sources cited by Charlton on the unscientific integration of disciplines, publication [175] is a 1987 monograph entitled “Molecules and Mind” and work [176] published in 1990 belongs to Charlton himself; it is devoted to criticism of “biological psychiatry.”

In general, Charlton’s reasoning is not devoid of sense when it is not about epidemiology, but, for example, about psychology or, say, about retrospective disciplines unverifiable in practice. This also applies to the cases when pretensions for quantitative connections between fragments of epidemiological evidence are criticized, as declared for teleoanalysis (see above). However, in the case of an approach predominantly in a qualitative sense, which provides, as said, “the Weight of Evidence,” such absolutist reasoning renders senseless many practical steps of international organizations aimed at preserving health and the environment.

The considered fairly old publication by Charlton from 1996 [171] is cited by a number of authors dealing with the philosophy of epidemiological causality. It seems appropriate (see why below) to provide a summary of such sources with an indication of the meaning of the citation:

• D.L. Weed, 1997 [59]. Information about the authority of Weed has been given by us several times earlier [2, 3] (see also note 3). Charlton, on the other hand, stated that the use of causal criteria diminishes the validity of causal inferences (in fact, the statements in the original [171] are not so unambiguous; see the original quote in [2]).Footnote 47

• M. Parascandola and Weed, 2001 [178]. The first author is also very authoritative for his publications on the topic. “Charlton also states that basic science is built on the concept of necessary causes and that epidemiology, in order to be scientific, must follow this model.”Footnote 48

• J.C. Arnett, 2006 [179]. Author of the Competitive Enterprise Institute (CEI), discussion of USEPA approaches. OMB [The Office of Management and Budget] just aggregates or compiles estimates from various agencies, but never reviews or endorses all the “different methodologies” used and does not provide valuable and successful estimates (Charlton, 1996).Footnote 49

• A.Z. Bluming, 2009 [180]. Presentation from the University of California at the Symposium on HRT, as well as an accompanying publication in the journal. An excerpt from Charlton [171] about the “true” hypothesis from a chain of causes and about the epidemiological hypothesis, which is a “network” of connected data from many disciplines is presented (see above).Footnote 50

• A. Morabia, 2013 [181]. Again, a highly cited author; see [1, 2]. Charlton [171] is cited in the context of the discussion of criteria after 1965.

• US manual on statistics and causality published in 2016 [169]. So far Rothman (1986) did not criticize all assumptions [about criteria] as inconclusive (see also Charlton in 1996).Footnote 51

• P.J.H.L. Peeters, 2016 [182]. A voluminous (246 pp.) report of a study on European programs. A total of 13 citations of Charlton 1996 [171], most on the topic and without discussion. For example, the way by which epidemiological evidence is weighed was criticized by Charlton (1996, p. 106).Footnote 52

We found seven more sources (1997–2011) in which the provisions from [171] are mentioned or cited, but these works are either older or appear less sound.

Thus, there are 14 sources (half of them definitely sound) in which Charlton’s sharply negative provisions from 1996 [171] are considered without any criticism. Once again it turns out that, when it suits their purposes, an interested party relying on authoritative sources, supplemented by the publications of Rothman and Greenland, can refute not only the criterion “Biological Plausibility” but also the majority of Hill’s “logical” criteria, and can cast doubt on the adequacy of the approaches and conclusions of USEPA, IARC, etc., or of other studies that synthesize data from different disciplines or lie at their intersection.

(4) The last of the critics known to us are Professors K.J. Goodman and C.V. Phillips from Canada, who are frequent authors of articles on causality in various kinds of encyclopedias and textbooks [30, 183]. It should be noted that Phillips has ties to tobacco companies [184, 185].

A 2005 article by these researchers stated that, with the exception of Temporality, none of the criteria is necessary or sufficient, and that it is not clear how to quantify the importance of each criterion, let alone how to generalize such an approach to the judgment of causality [183]. Regarding the “Biological Plausibility” criterion, it can be noted that Goodman (with another co-author) considers it together with “Coherence” and “Analogy” [30].

An important statement is the following: there appears to be no empirical assessment to date of the validity or usefulness of causal criteria (e.g., retrospective studies of how the use of criteria improves analytical conclusions). In short, the value of the set of criteria for causal inference is strictly limited and has not been tested [183].Footnote 53

Similar provisions can be found in other authors, for example, in G.B. Gori from 2004 [186]: deprived of both quantitative and qualitative benchmarks, these criteria remained subjective and unrelated to independent experimental verification.Footnote 54

This, so to speak, is the quintessence of the entire criticism of the set of criteria: it is asserted that the success of their application has never been tested in practice by comparison with other approaches. However, articles [183, 186] were published in 2005 and 2004, respectively, and in 2009 Dutch authors proposed a methodology for weighing the criteria and successfully tested its validity on known carcinogens (G. Swaen and L. van Amelsvoort [67]). We plan to consider data of this kind on the gradation and weighting of criteria, as well as other approaches to establishing causation [187], in Report 4. We should only note that Rothman and Greenland [42, 43, 99], as well as Goodman and Phillips [188, 189], attempted to propose such approaches (in our subjective opinion, only in theory).

Summing up this section, we will formulate only the following indisputable statement: the criterion “Biological Plausibility” is not absolute. It is neither necessary, despite all its importance, nor sufficient [11, 13, 27, 29, 38, 49, 58, 64], despite its role in the set of rules of causality for environmental protection and toxicology agencies [16]. And yet, without it, any proof appears both incomplete and flawed. Something like a relationship between ice cream consumption and drowning deaths [1] or between the linear size of the penis and the reciprocal of the country’s GDP [80] will always come to mind.

CONCLUSIONS

In this section, the main array of references is not provided; they can be found above.

In the absence of direct experiments, biological plausibility has been an important component of causality hypotheses in the biomedical disciplines since at least the 19th century. During the formation of the guidelines/criteria for assessing the plausibility of a causal relationship between exposure and effect (1950–1960s), the point “Biological Plausibility” was one of the first to be proposed (Lilienfeld in 1957). Later, this statement was sometimes replaced with the similar criterion “Coherence” (agreement with the known facts of natural history and biology of the disease, 1964); however, in 1965 Hill introduced both points into his list.

Since then, the guideline “Biological Plausibility” has gained increasing importance, especially in disciplines (ecology, toxicology, the study of carcinogens) in which there are difficulties not only in setting up adequate experiments, but even in observing the effect at all. In such cases, to assess the probable effects of exposure for subsequent decision-making in the healthcare sector, one also has to rely on molecular epidemiology (“surrogate endpoints,” biomarkers), as well as on animal and in vitro experiments. This situation is observed, among other things, in US courts when making decisions on compensation for various impacts (“Daubert’s rule”).

The criterion “Biological Plausibility” remains important in classical epidemiology; adherence to it is highly desirable and sometimes necessary even when the correlations are statistically established. Epidemiological approaches alone are not able to prove the true causality of the relationship (possibly the influence of chance, confounders, biases, and reverse causality). Statistical approaches are aimed only at proving the reality and persistence of associations. Without knowledge of the biological mechanism and plausible model, such correlations (especially weak ones) cannot be regarded as confirmation of a proposed hypothesis about the true causality of the effect of exposure.

The essence of this criterion is the integration of data from various medical and biological disciplines. This approach is so important in establishing causality that authors specifically emphasize its necessity in a number of sources, in specialized publications, and in textbooks on epidemiology. This review provides relevant citations, including for radiobiology and radiation epidemiology. It turns out that data from almost every area of the biomedical and molecular–cellular disciplines can make an important contribution to the search for evidence in epidemiological research. This imparts practical significance to almost any experimental research, no matter how fundamental and theoretical it may seem.

Several leading authors have detailed the levels of attaining biological plausibility. In the work by Weed and Hursting from 1998 [29], three levels are proposed: the first, when there is an assumption about the mechanism but no biological evidence; the second, when evidence is provided by molecular biology and molecular epidemiology; and the third and highest, when there are data on the influence of the particular factor of interest on the mechanism. Susser in 1977 and 1986 [25, 36, 37] formulated four levels of similar meaning for the “Coherence” criterion. Attaining it involves consistency with (1) theoretical plausibility, (2) biological knowledge (i.e., “Biological Plausibility”), (3) facts, and (4) statistical patterns, including the dose–effect dependence.

Ultimately, it is all a matter of judgment: is there enough evidence to rule out alternative explanations (“Weight of Evidence”)? In addition, the decision on causality is largely based on the intuition of researchers [33, 163], which was mentioned by Hill in 1965 [11]. The last statement fully applies to attaining an acceptable level of biological plausibility. However, the aforementioned Phillips and Goodman in 2004 [190] noted that the correct approach to intuitive assessment is now almost completely lost. For example, studies performed in the 1970s and 1980s demonstrated that both ordinary people and experts have poor quantitative intuition and, therefore, intuition cannot eliminate the need for modern methods of quantitative estimation of uncertainty [190].

One of the well-known methodologies for integrating data from different disciplines is Bayesian analysis (Bayesian meta-analysis). It relies on the Weight of Evidence (proofs) rather than on any single study, following the same principles as Bayesian decision-making. This method implies the integration of all available biomedical data into one updated and standardized estimate that determines the overall strength of causation.
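The idea of folding several lines of evidence into one updated estimate can be illustrated with a minimal sketch. It assumes a simple conjugate normal model on the log relative risk, in which each source contributes an estimate weighted by its precision (inverse variance). The function names, the prior, and all numbers below are hypothetical and chosen only for illustration; they are not taken from the cited works. The sketch also assumes that all sources measure the same quantity and are independent, which is precisely the assumption criticized by Charlton.

```python
import math


def update_normal(prior_mean, prior_var, estimate, estimate_var):
    """One conjugate normal update: precision-weighted average of prior and estimate."""
    prior_precision = 1.0 / prior_var
    estimate_precision = 1.0 / estimate_var
    post_var = 1.0 / (prior_precision + estimate_precision)
    post_mean = post_var * (prior_precision * prior_mean + estimate_precision * estimate)
    return post_mean, post_var


def pool_evidence(prior, estimates):
    """Update the prior sequentially with each (estimate, variance) pair."""
    mean, var = prior
    for estimate, estimate_var in estimates:
        mean, var = update_normal(mean, var, estimate, estimate_var)
    return mean, var


# Hypothetical log relative risks as (estimate, variance); illustrative values only.
evidence = [
    (0.40, 0.04),  # epidemiological cohort (assumed)
    (0.60, 0.09),  # animal experiment (assumed)
    (0.30, 0.16),  # in vitro study (assumed)
]
prior = (0.0, 1.0)  # weakly informative prior centered on "no effect" (assumed)

mean, var = pool_evidence(prior, evidence)
print(f"pooled log RR = {mean:.3f}, variance = {var:.4f}, RR = {math.exp(mean):.2f}")
```

In this sketch the pooled variance is smaller than that of any single source, and the result does not depend on the order in which the sources are added. A real Bayesian meta-analysis would typically allow for heterogeneity between study types rather than treat them as interchangeable.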

Regardless of the importance and necessity of the “Biological Plausibility” criterion, it, similarly to almost all of Hill’s criteria (eight out of nine, except for Temporality), is neither necessary nor sufficient for evidence. There are a number of examples (including radiation exposure) given in this publication that show, first, that “plausibility” depends on the current biological knowledge and, second, that there are real but seemingly implausible associations, and vice versa.

From this follows the criticism by some authors of both the “Biological Plausibility” criterion as such and the entire inductive approach based on causal criteria. They point to the insufficient scientific rigor and accuracy of the approach (Feinstein); to the subjectivity, dogmatism, and non-absoluteness of the criteria (Rothman and Greenland); to the incorrectness of methods that integrate data from disciplines that differ in approach, methodology, and evidence (Charlton); and to the fact that the criteria of causality have never been tested in practice (Goodman and Phillips). The last statement was made in 2005; however, since then the situation has changed: in 2009, Hill’s criteria were modified by determining the weight of each, and the methodology was tested on standard carcinogenic agents (Swaen and van Amelsvoort [67]).

Despite the expressed criticism and doubts and despite the fact that the criterion “Biological Plausibility” was said to be not absolute, any epidemiological evidence without it looks both incomplete and flawed. As noted in [191], the fact that epidemiology is a biological science related to human diseases and based on clinical disciplines cannot be neglected.

This publication, together with the previous special reviews on the “Strength of Association” [4, 5] and “Temporality” [3], constitutes a detailed preamble to the planned Reports 3 and 4 of our cycle on the essence, limitations, breadth of application, and radiation aspect of the guiding principles/criteria for causality.

Notes

It would be useful to present the material on the problem of causality and the methodologies for establishing it (criteria, etc.) in the form of a monograph, similarly to the first edition of this kind by M. Susser in 1973 [6] and, for example, the 2015 collection (497 pp.) on causality in ecoepidemiology edited by the leading authors from the US Environmental Protection Agency (USEPA) S.B. Norton, S.M. Cormier, and G.W. Suter II [7]. A monograph on this topic in Russian may be relevant because the corresponding concepts are weakly developed in Russian natural science, medicine, and epidemiology, as well as in everyday scientific practice and everyday life in Russia (see [1–5]). However, there is no incentive to create such a monograph.

D.L. Weed (United States), who is still active, has for decades paid great attention to the theory and practice of causation (many publications and synthetic studies), working as a consulting expert on problems at the intersection of biomedical disciplines, law, commerce, and public policy (see also [2]). In our works on causality [1–4], Weed’s publications are probably cited more often than the works of other authors.

“The agent should be shown to be carcinogenic to some animal species (not obligatory)” [18].

“… biological plausibility of the association, which is dependent upon our general knowledge of the biology of these specific diseases” [21]. The conceptual combination “biologically implausible” took place much earlier, for example, in the decision of the British public health authority in 1854, according to which epidemiological evidence of cholera infection through London water (researcher J. Snow) was not supported by significant laboratory evidence (cited from [22]).

The 1964 report of the US Surgeon General (Chief Physician [24]) on the consequences of smoking [23], which had wide international significance, is the first key milestone in the formalization of methods for proving the causality of chronic pathologies, including cancer. It is regarded as the official date of the final establishment of the association between smoking and lung cancer (see details in [2]).

All relevant references used in this review are provided.

“Epidemiology, molecular pathology (including chemistry, biochemistry, molecular biology, molecular virology, molecular genetics, epigenetics, genomics, proteomics, and other molecular-based approaches), and animal and tissue culture experiments should all be seen as important integrating evidence in the determination of human carcinogenicity” (National Cancer Institute (United States), workshop 2003) [53].

Particularly impressive is the review by Ward [61], where the 19 pages of the PDF text (preceding the list of references) have only the headings “Abstract,” “Introduction,” “Analysis,” and “Conclusion.”

“Despite these uncertainties, experience has shown that animal evidence can be relevant, and where it exists it should be taken into account” [62].

“The causal relationship was considered biologically plausible when evidence for a probable cause–effect mechanism was found in the scientific literature” [66].

The provision from [54, 57, 68], according to which, beyond the very fact of association and time dependence, only “Biological Plausibility” in the form of an experimentally demonstrated mechanism is needed for a conclusion about causality, can be invoked when the occasion requires (the sources are significant). This, however, should be done only with caution, since, as will be shown below, there are enough exceptions, and the very concept of “mechanism” is limited by the level of knowledge achieved.