Abstract

Single image dehazing has received a lot of concern and achieved great success with the help of deep-learning models. Yet, the performance is limited by the local limitation of convolution. To address such a limitation, we design a novel deep learning dehazing model by combining the transformer and guided filter, which is called as Deep Guided Transformer Dehazing Network. Specially, we address the limitation of convolution via a transformer-based subnetwork, which can capture long dependency. Haze is dependent on the depth, which needs global information to compute the density of haze, and removes haze from the input images correctly. To restore the details of dehazed result, we proposed a CNN sub-network to capture the local information. To overcome the slow speed of the transformer-based subnetwork, we improve the dehazing speed via a guided filter. Extensive experimental results show consistent improvement over the state-of-the-art dehazing on natural haze and simulated haze images.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Image dehazing is a hot topic in classic computer vision, whose goal is to restore a clean image from the input. The quality of the captured image is affected by the air particle, which absorbs the ray emitted from objects and reflects other light into the camera. We can describe the hazing process as:

where I is the input hazy image, and J is the corresponding clean image, t represents how much the light reflected from objects is received by the camera, A is the air-light.

In the traditional image dehazing, computing the transmission map and air-light is a highly ill-posed problem if there is no extra information available. To address dehazing problem, a lot of dehazing methods are designed based on the various types of priors1,2,3,4,5,6 or additional information7,8,9. Requiring additional information restricts the application scope of these methods. The priors used for dehazing maybe fail in some cases, such as images containing white objects or the sky. To boost the robustness of dehazing methods, deep learning-based methods10,11 are introduced to predict the transmission map. But the dehazing performance of these methods is influenced by the precision of the estimated transmission map. To overcome this problem, some End-to-End deep learning dehazing methods12,13,14,15,16,17,18,19,20 are proposed. Li et al. fuse the transmission map and airlight into a new parameter and design a low-time consumption dehazing method. Qu et al. designed a dehazing method, which transfers the dehazing problem into a transferring problem. Liu et al.employ the attention mechanism and multi-scale network to boost dehazing performance. Dong et al. employ boosting strategy and dense features fusion21 to design a dehazing network. Zhang et al. propose a transmission map guided dehazing network22. Song et al. propose a wavelet-based dehazing method23. Although these methods have their great power in dehazing, we note the performance can be further boosted by introducing a model which can capture long dependency.

CNN has shown its effectiveness in low-level computer vision tasks, while transformers have shown great power ability for high-level computer vision tasks. Recently some works although introduce it into low-level computer vision tasks24. The prior work25 introduced a transformer into a computer vision task and achieved an impressive performance, which shows the potential of computer vision tasks. However, the computational burden of the transformer is very high, which limits its application of transformer. To boost the dehazing performance, Dehamer26 propose a transformer-based module to estimate the density of haze, and then combine it with CNN features to obtain the final dehazed results. However, Dehamer ignores the information in hazy images, and cannot achieve a high dehazing for dense hazy images. Furthermore, Dehamer inherits the problem of the transformer, which has a high time complexity. Zhao et al. propose a Pyramid dehazing network27, which can extract large contextual information. However, this work also inherits the limitation of CNN. The proposed model extracts the large contextual information using a transformer and reduces the consumption time using the deep guided filter.

To address this issue, we propose a novel highly efficient dehazing method based on the transformer and guided filter, which is called Deep Guided Transformer Dehazing Network (DGTDN). Haze is depend on the distance between the camera and the objects, which results in the haze density being different from pixel to pixel. The distribution of haze is global, which is hard for CNN to capture the long distance. To capture the long dependency, we design a transformer-based model to capture the global information of haze. However, the transformer cannot capture the local information well. To deal with this case, we propose a lightweight CNN sub-network to capture the local information. Based on the advance of the transformer and CNN, we propose to restore the global haze-free image with the transformer and then refine the details with the CNN sub-network. To achieve the goal of further improving the dehazing speed, we introduce the guided filter to reduce the dehazing time. The contributions of the DGTDN can be summered as follows:

-

1.

We introduce transformer-based sub-network to restore the coarse haze-free image and then the details are refined via a CNN-based sub-network. Restoring the coarse haze-free image depends on global information while refining the details needs more local information, which encourages us to design such a dehazing model using CNN and a transformer.

-

2.

We introduce the guided filter to improve the dehazing speed. Transformer is time-consuming, which may limit the application of transformer-based dehazing methods. We address this issue by introducing the guided filter into the proposed model. We reduce the input size of the transformer, which reduces the execution time of the transformer-based model.

-

3.

We do extensive experiments to show the superiority of the proposed method on natural hazy images and simulated hazy images. We also conduct ablation studies to show the effectiveness of the proposed modules.

Related work

We show some previous works related to dehazing. In this paper, we divide the related dehazing works into two groups, which include learning-based and prior-based methods.

Learning-based dehazing methods

The CNN-based methods have swept the computer vision tasks28,29,30. With the development of CNNs, a lot of works10,11,12,13,14,15,17,31,32,33,34,35,36 attempt to solve dehazing using deep learning models. These dehazing methods often attempt to compute the key factor of the physical model or the corresponding haze-free image directly. The works10,11 employ CNN model to compute the transmission map. However, these methods may boost the error of the transmission map and result in poor dehazing results. To deal this problem, End-to-End dehazing methods12,13,14,15,17,31,32,33,34,37,38,39,40 are proposed. For example, Zhang et al. design a CNN model that incorporates the physical model. Li et al. propose an all-in-one dehazing model13, which fuses the transmission map and airlight into a new parameter. Liu et al. design a novel dehazing model20 based on attention and multi-scale network. However, all these dehazing methods are based on CNNs, which are limited by the local property of convolution. To capture the long dependency of hazy images, Guo et al. propose a transformer-based dehazing method26, which employs the transformer-based encoder to capture the density of haze. Different from the above-mentioned methods, we overcome the problem of CNN by introducing the transformer block into the dehazing model, which can capture the long-range dependency. Some works note the difference between the simulated hazy and real hazy images, which results in a drop of dehazing performance on real hazy images when the model is trained with simulated hazy images. To address these issues, PSD17 proposes to combine the traditional priors to improve the dehazing quality of real hazy images. Domain adaptation dehazing method (DA)19 improves the dehazing quality on real hazy images by converting simulated hazy images into real hazy images. We note that these methods are hard to train. Furthermore, the proposed method focuses on improving the learning ability on simulated hazy images, which has a different goal from PSD and DA.

Prior-based dehazing methods

To address the ill-posed of single image dehazing, a lot of prior-based dehazing methods1,2,3,4,5,6 or additional information7,8,9 has been proposed. These methods discover the prior based on the statistical analysis of clean images or hazy ones. The famous work is Dark Channel Prior (DCP), which is derived from the observation that a clean image patch contains at least one pixel that has a channel value close to zero. Zhu et al. discover a color attenuation prior5, which is that the divergence between intensity and saturation positively is correlated to the depth. Fattal et al.2 use a color-line prior to removing haze. Berman et al. find a haze-line prior4 based on the observation that one haze-free image can be presented by a small number of color clusters. However, all these priors are simple, and cannot be held in real word complex scenes.

Transformer for vision tasks

Natural language processing (NLP) has applied Transformer41 to capture long dependency and improved the performance of learned models. Transformer shows its effectiveness in NLP and image classification task25 also employs Transformer to improve the performance. With the success of Vision Transformer (ViT)25 and its follow-ups42,43, researchers have shown the potential of transformers to image segmentation43 and object detection42. Although visual transformers have shown their success in visual tasks, it is hard to directly apply it in single image dehazing. First, Transformers often depend on large-scale datasets. However, there is no existing large-scale dataset to train a transformer-based for image dehazing. Second, it is hard to capture local representation for transformers, which may result in the loss of image details. To overcome this issue, we proposed combining the advantage of CNN and transformer to capture the local texture and global structure jointly to boost the dehazing quality.

The rough structure of the Deep Guided Transformer Dehazing Network. The proposed network contains main three parts: BaseNet, DetailNet, and GuidedFilterNet. Swin represents the Swin-Block, which is used to enlarge the receptive field of the proposed model. Bilinear represents the bilinear downsampling. LI is the output of the bilinear downsampling. LO represents the output of the haze remove network, which is a low-resolution dehazing result.

Methodology

In this section, we explain the motivation behind Deep Guided Transformer Dehazing Network (DGTDN) and then show the details of DGTDN. The structure of the proposed DGTDN is shown in Fig. 1, which consists of three parts. The first part is BaseNet, which is used to estimate the baselayer of the low-resolution dehazed result. The second part is DetailNet, which is used to estimate the missed details of base layer. The low-resolution dehazed result is generated by adding the base layer to the detail layer. The third part is GuidedFilerNet, which obtains the final high-quality dehazed result by upsamping the low-resolution dehazed result.

Motivation

The thickness of the haze is dependent on the depth of the objects, which results in the distribution of haze is global information. Based on the fact that the dehazing task needs to restore the image details, which is dependent on the local features. Single image dehazing is dependent on global and local features44. The transformer has shown its ability to capture long-range dependency, which is critical to improve the dehazing quality. However, the transformer cannot capture the local feature details which leads to coarse details for dehazing. According to the prior works45, CNN can provide local connections and capture local features. It is known to all that transformer-based methods are time-consuming. To reduce the inference time, we propose to introduce the deep guided filter into the dehazing network. Based on the above analysis, we combine the advantages of CNN, transformer, and deep guided filter to boost the dehazing quality and reduce the running time. In this paper, we propose a Deep Guided Transformer Dehazing Network (DGTDN). DGTDN consists of BaseNet, DetailNet, and GuidedFilerNet. BaseNet is designed to capture long-rang dependency and restore the coarse haze-free image. DetailNet is designed to capture the local features and restore the image details. GuidedFilterNet is designed to enlarge the low-resolution dehazed result and reduce the dehazing time.

The structure of the proposed model

Based on the motivation in subsection 3.1, we introduce the CNN, transformer, and guided filter into the proposed dehazing network. As shown in Fig. 1, we propose a model containing three parts: BaseNet, DetailNet, and GuidedFilerNet. We enlarge the details of haze remove network, which consists of BaseNet and DetailNet. As shown, the proposed model process a hazy image and outputs a high-resolution dehazed result via series steps: (1) Downsampling the input hazy image via bilinear downsampling, and obtaining a low-resolution haze image, we mark it as LI; (2) Feeding the LI into haze remove network, and obtaining a low-resolution dehazed result, we mark it as LO; (3) Feeding the LI, input hazy image, and LO into the GuidedFilterNet, and obtain the final high-resolution deazed result. Next, we introduce the BaseNet, DetailNet, and GuidedFilterNet in detail.

BaseNet

The BaseNet consists of an encoder that extracts features and a decoder that restores the haze-free image. The encoder contains four stages, and the decoder also contains four stages. Specifically, each encoder stage contains one transformer block, which followed one down-sampling layer. Similar to the encoder stage, each decoder stage contains one transformer block, which is followed by one up-sampling layer. The down-sampling layer is designed to downscale the size of feature maps, which is implemented by \(3 \times 3\) convolution with stride 2. The up-sampling layer is designed to enlarge the size of feature maps, which is implemented by \(2 \times 2\) transposed convolution operation with stride 2. The input of the BaseNet is a low-resolution version of a hazy image. The low-resolution hazy image is generated by using a bilinear, which is used to obtain a hazy image with half the size of the original input. We define the output of BaseNet as follows:

where BaseNet is the BaseNet, the \(I_l\) is the low-resolution of input hazy image, \(\hat{B}\) is the base layer of a dehazed result.

DetailNet

The DetailNet is designed to restore missed details. The DetailNet contains four Residual Dilation Blocks (RDBs), whose structure is shown in Fig. 2. Each RDB contains two common convolution layers and two dilation convolution layers. We pass the low-resolution input hazy into the DetailNet and obtain the detail layer.

where DetailNet is the DetailNet, \(\hat{D}\) is the detail layer of a dehazed result.

After obtaining the structure layer of dehazed result and the image detail layer, we can obtain the dehazed result as follow:

where \(\hat{H}_l\) represents the predicted low-resolution haze-free image.

GuidedFilterNet

GuidedFilterNet is based on the guided filter, which is based on the local linear model. We can express the local linear model as:

where \(q_o\) is the output, \(I_g\) is the guidance image, l is the location in \(I_g\), \(\omega \) is a local window in \(I_g\) with radius r, \((A_{\omega }, B_{\omega })\) are the linear const coefficients in a local window. This model can preserve the edges in \(q_o\) if \(I_g\) has the edges, because that \(\nabla q_o= \nabla I_g\). To obtain the \((A_{\omega }\) and \( B_{\omega })\), we solve the problem (5) that reduces the difference between the output \(q_o\) and the filtering input p. To solve the problem (5), we minimizes the error:

where \(\epsilon \) is used to penalize large \(A_{\omega }\), p is the filtering input.

We employ guided filter to perform joint upsampling, which receives a low-resolution hazy image, the corresponding low-resolution dehazed result, and the original hazy image as input, obtaining the final high-resolution dehazed result. Based on the local linear model, the relation between a low-resolution hazy image and the corresponding low-resolution haze-free image can be expressed:

where \(H_l\) is the low-resolution dehazed result and \(I_l\) is the low-resolution hazy image, i is the index of the \(I_l\). To obtain \(A_{\omega }^l\) and \( B_{\omega }^l\), we reduce the error between \(\hat{H}_l\) and the \(H_l\):

After obtaining \(A_{\omega }^l\) and \( B_{\omega }^l\), we simple the Eq. (7) to :

where \(.*\) is element-wise multiplication. Based on the local linear model, we also can express the relation between a high-resolution hazy image and the corresponding haze-free image as:

Based on Eq. (10) and (9), we can construct the relation between the high-resolution and the low-resolution hazy images. According to46, we can obtain the high-resolution \(A^h\) and \(B^h\) via bilinearly upsample:

Algorithm 1 lists the main steps of the guided filter in DGTDN. U is a bilinearly upsample operation, Box represents the box filtering. As shown in Fig. 1, GuidedFilterNet receives the output of haze remove network as input and enlarges the low-resolution dehazed result according to the original hazy image. In the proposed model, GuidedFilterNet interacts with haze remove network and bilinear downsampling, and performs a joint upsampling function. GuidedFilterNet is designed to enlarge the dehazed result and reduce the dehazing time of the proposed model.

Loss functions

Loss functions are critical to obtaining high quality dehazing results. The proposed method can obtain two-scale dehazed results. To utilize this useful information, we propose a multi-scale content loss function:

where N denotes the number of training samples, \(\Vert \cdot \Vert _1\) denotes \(L_1\) norm, \(H_h\) is the ground-truth haze-free image, and \(H_l\) is the low-resolution ground-truth. To make the predicted base layer similar to the low-resolution ground truth, we employ a \(L_1\) loss between the low-resolution ground truth and the predicted base layer:

where \(\mathscr {L}_{\text {baseloss}}\) is defined as a base loss. To further boost the quality of dehazed result, we introduce perceptual loss to train the proposed model:

where VGG represents the VGG-16 model, which is a classic model trained on ImageNet, and j indicates which layer is used to estimate the perceptual loss.

Finally, we combine the perceptual loss, the multi- scale content loss, the base loss, and the perceptual loss to train the whole network, which can be defined as:

where \(\lambda _1\) is used to determine the contribution of the base loss, and \(\lambda _2\) is used to determine the contribution of the perceptual loss.

Experimental results

In this section, we focus on showing the high performance of the proposed method. First, we introduce the implementation details of the proposed method and dataset. Second, we compare the proposed method with other dehazing methods on simulated haze images and real haze images. Third, we show the effectiveness of the proposed modules and loss functions.

Implementation details

In this subsection, we show the details of the proposed model. The proposed BaseNet is implemented based on the Swin-Transformer block. The configurations of the proposed RDB are listed in Table 1. The proposed DGTDN is implemented in a popular deep learning tool (PyTorch) using a single GPU ( TITAN V ) with 12GB memory. When training, we crop the training dataset into image patches with size \(240\times 240\). The learning rate is set to 0.001 and then is decreased by 0.8 every 10000 steps. We set the batch size to 16. We employ the adam to train the proposed model and initialize the \(\beta _1\) and \(\beta _2\) to 0.5 and 0.999, respectively. We set \(\lambda _1\) and \(\lambda _2\) to 1.0 and 0.01, respectively.

According to the strategy adopted by20,21,36, ITS from RESIDE is chosen to train the proposed model and indoor hazy images from SOTS subset are used to evaluate the dehazing performance. In addition, we evaluate the performance on NH-HAZE.

Experimental results on simulated hazy images

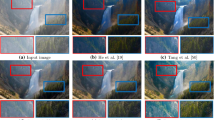

In this part, we show the dehazing performance of the proposed DGTDN and other dehazing methods on the simulated indoor hazy images. Due to the fact, it is hard to find a ground truth haze-free image for a real haze image, simulated indoor hazy images are used to evaluate the dehazing performance. We show quantitative and visual dehazing results in Table 2 and Fig. 3. As shown in Table 2, traditional dehazing methods can obtain low quantitative results. Traditional dehazing methods derive prior from haze-free images, which may not be held by some hazy images. This is the main reason why traditional dehazing cannot achieve a high dehazing performance. The learning based dehazing methods include two kinds. The first is learning to predict transmission map, such as MSCNN10 and DehazeNet11. The second kind is learning to predict clean images directly, such as DCPDN15, GFN12, MSBDN21, and Dehamer26. The learning-based methods10,11 that learn the relationship between transmission map and hazy images. However, the relationship between transmission maps and dehazing quality is not highly correlated, which results in a low dehazing performance. End-to-end dehazing methods12,15,21,26 construct the relationship between hazy images and dehazed results. However, the dehazing ability of these models depends on the model capacity. The transformer-based dehazing method has a high model capacity and achieves the second dehazing performance. To summarize, the proposed method achieves outstanding performance among famous dehazing methods. As shown in Fig. 3, we note that traditional dehazing methods, such as DCP, NLD, and BCCR often have the problem of color distortion. The learning-based methods10,11,12,15 have the problem of retaining haze. Other leaning-based methods21,26 can obtain dehazed results that are similar to ground truth. The proposed can obtain high-quality visual dehazing results, which are more similar to ground truth.

Visual results of dehazing methods on the dense non-homogeneous haze images48. The proposed method restores more haze-free images with clearer structures and textures.

We also test the dehazing performance on NH-HAZE48, which is a widely used dataset. NH-HAZE is a famous dehazing dataset, which contains non-homogeneous haze. The non-homogeneous haze is much harder to remove than the traditional homogeneous haze. The dehazing performance tested on non-homogeneous haze can show the model’s capability well. We listed the dehazing quantitative performance of dehazing methods in Table 3. As shown in Table 3, DCP, BCCR, and NLD achieve a low quantitative dehazing performance. We note that DehazeNet achieves lower quantitative dehazing performance than DCP, BCCR, and NLD. learning-based methods10,12,13,15,21 achieve higher dehazing performance. Dehamer achieves the second-best quantitative dehazing performance. The proposed method demonstrates the best PSNR and SSIM among the listed dehazing methods. The results demonstrate the effectiveness of the proposed method, which benefits from the combination of CNN and transformer. We also show visual dehazed results of the proposed method and other state-of-the-art methods. As shown in Fig. 4, we can see that the traditional dehazing methods often over-enhance the dehazed results, which contain obvious color distortion. The learning-based methods tend to retain haze in dehazed results. In contrast to these methods, the proposed method often obtains visually pleasing dehazed results, which are vivid color and contain rich image details.

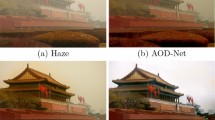

Visual results of dehazing methods. The dehazed result obtained by other state-of-the-art methods tends to show a hazed or dark appearance. The dehazed results of MSCNN and AOD-Net lose some details. In contrast, the proposed method often shows a sharp dehazed result and removes haze more completely.

Visual results of some recently dehazing methods and the proposed method. The dehazed results obtained by other state-of-the-art methods tend to show a dark or hazed appearance. DA is designed for natural image dehazing with domain adaption. However, we note that the area marked with a black rectangle retains a lot of haze. In contrast, the proposed method often shows a colorful and sharp dehazed result and removes haze more completely.

Experimental results on real-world haze images

To further show the performance, we choose some typical real-world hazy images. The density and distribution of haze in real hazy images are more multiplicative than in synthetic images. Hence, the real-world hazy image dehazing is a more challenging problem. In this part, we choose three hazy images, which include dense haze, large haze distribution, and dark haze images. These haze images can show the generalization and dehazing performance of deep-learning-based models.

Firstly, we conduct an experiment on a dense haze image. The dehazed results of state-of-the-art methods and the proposed method are shown in Fig. 5. As shown, we can see that the image tends to show dense haze over the whole image, which is hard for CNN-based dehazing methods. The dehazed results of AOD-Net13 and DCPDN15 tend to retain haze. The dehazed result of GFN12 contains visible color distortion and haze. The dehazed result of cGAN49 contains less color distortion than GFN and can remove haze better than AOD-Net, DCPDN, and GFN. We note that the dehazed results of EPDN, Dehamer, and the proposed method are better than other learning-based methods. We note that the area in the lake is not well dehazed in a result of EPDN. The proposed method can remove haze more completely than EPDN and Dehamer. Due to the fact the transformer can capture long dependency, which can boost the dehazing quality. The proposed method and Dehamer remove haze from dense haze images. The proposed method employs CNN to restore the image details, which makes the proposed method can restore more image details than Dehamer.

Secondly, we conduct an experiment on a hazy image with large haze distribution. This hazy image is a typical image, which has been employed to evaluate the dehazing performance widely. This image contains dense haze areas, a middle haze area, and light haze areas, which are marked using black, red, and green circles, respectively. Due to its large haze distribution, the learning-based methods often fail to remove haze well. As shown in Fig. 6, we note that the traditional methods1,4 often show a better dehazed results than learning-based methods12,13,15. The dehazed result of Non-local dehazing tends to lose image details and shows a dark appearance. The dehazed result of DCP1 tends to retain a small amount of haze. The dehazed results of CAP, DCPDN, FFA-Net, and AOD-Net tend to retain a large amount of haze. The dehazed results of GDN20 and GFN12 contain color distortion. Dehazenet and MSCNN are based on deep learning and Koschmieder’s law. We note that the dehazed result of MSCNN is better than DehazeNet, which can remove more haze. We also note that the dehazed result of MSCNN losses some image details. The dehazed result of PGAN34. However, we note the dehazed result of PGAN still contains haze. The dehazed results of EPDN and Dehamer can remove haze better. However, these methods tend to generate a dark dehazed result and tend to show some haze around the green circle area. The proposed method can remove haze more completely and keep the image details well.

Thirdly, we conduct an experiment on a more challenging image, which looks dark. The dehazed results of this image often have the problem of losing image details and retaining haze. As shown in Fig. 7, we can see that the dehazed result of DCP, AOD-Net, AECR, AirNet14, EPDN, and Dehamer tend to show a dark appearance. The dehazed result of DCPDN, FFA, and PGAN looks brighter. However, the dehazed results of these methods tend to retain haze in the dehazed result. The dehazed result of DA, PSD, and DGTDN can generate a much brighter dehazing result. However, the dehazed result of PSD tend to retain haze in the whole image while the result of DA tends to leave haze in a black rectangle and show a blur dehazed result. AirNet is based on the assumption that the whole image shares similar degradation. In contrast, the proposed method can remove haze more completely and obtain a sharp dehazed result. To show the quality of dehazed results obtained by the proposed method and other dehazing methods quantitatively, we use the metric proposed in50. As shown in Table 4, we can see that the proposed method can remove haze better than other dehazing methods.

Ablation studies

To the effectiveness of the proposed module in DGTDN, we design a series of experiments. Firstly, we design a model to show the effectiveness of the transformer. We remove the transformer from the proposed model, and keep other parts unchanged, we term it as model1. Secondly, we show the effectiveness of the DetailNet. We remove the DetailNet from the proposed model, and keep other parts unchanged, we term it as model2. Finally, we show the effectiveness of the GuidedFilterNet, which can boost the dehazing speed of the proposed model. To show the influence of the GuidedFilterNet, we design a model which removes the GuidedFilterNet and keeps other parts unchanged, we term it as model3. We show the quantitative comparison in Table 5 and a visual example in Fig. 8. As shown in Table 5, we can see that the model1 achieves the lowest dehazing performance due to the limitation of the receptive field. As we can see that the BaseNet can boost the dehazing performance dramatically, which shows the transformer module is necessary for dehazing. The transformer module can improve the dehazing performance by enlarging the receptive field. We note that the application of guided filter reduces the dehazing performance. However, it is necessary to improve the dehazing speed while only reducing the dehazing performance slightly. We show the difference dehazed result of model1, model2, model3, and the proposed model in Fig 8. We can see that model1 cannot remove haze in remote areas, which are dense haze. The transformer module is necessary for removing dense haze areas. By adding the DetaiNet, we can see that the model can remove haze more completely. The guided filter improve the dehazing quality in remote areas.

To show the influence of loss functions, we design an ablation that involves the models are trained with different losses. First, we train the model without \(\mathscr {L}_{\text {perc}}\). Second, we train the proposed model without \(\mathscr {L}_{\text {baseloss}}\). Third, we train the proposed model without \(\mathscr {L}_{\text {con}}\). We show the quantitative results in Table 6. As shown, \(\mathscr {L}_{\text {con}}\) is critical to obtain a high quantitative dehazing result. \(\mathscr {L}_{\text {con}}\) is designed to boost the details of the dehazed results. \(\mathscr {L}_{\text {con}}\) is designed to make the dehazed results similar to the ground truths. \(\mathscr {L}_{\text {baseloss}}\) is used to reduce the difficulty of dehazing problem, which can boost the dehazing quality. We also show dehazed results of the model trained with different loss functions in Fig. 9. As shown, we note that the model trained without \(\mathscr {L}_{\text {con}}\) obtains a dehazed result that losses image details. The dehazed results obtained by models trained without \(\mathscr {L}_{\text {baseloss}}\) or \(\mathscr {L}_{\text {perc}}\) generate results with color distortion or over-enhancement. As shown in Fig. 9, the model trained with all losses can generate high quality dehazing results.

Analysis of run states

We test the dehazing speed of dehazing methods on 500 images with size \(256\times 256\). The test hazy images are from the outdoor part of RESIDE, we resize these images into a fixed size (\(256\times 256\)). We conduct the experiment on a notebook, which is equipped with an Intel(R) Core i5 CPU@2.3GH, 8GB memory, and a 3GB RTX 1060 GPU. The average running times of state-of-the-art dehazing methods and the proposed method is shown in Table 7. The traditional dehazing methods1,4 are slower than learning-based methods. These methods are executed without parallelization technology, which increases the execution time. The early learning-based method11 is faster. However, the dehazing performance of this method is poor. The proposed method achieves state-of-the-art dehazing performance while keeping a lower execution time. In addition, we show the rum states of each method in Table 7. The running states include language, platform, execution time, parameters, and consumption of GPU memory.

As shown in Table 7, the proposed model has a suitable parameter number and consumes suitable GPU memory, while achieving the highest quantitative performance. We also show the effectiveness of the GuidedFilterNet, which can reduce the execution time and GPU memory compared with model3. As shown in Table 5, the proposed method is with almost no visible degradation compared with model3. We can obtain the conclusion the GuidedFilterNet can improve the execution speed while avoiding performance degradation.

Extended applications

Based on the fact the proposed model can capture the local and global features jointly, we can apply the proposed model to solve the problem, such as underwater enhancement51,52,53,54,55, detain56, and human image generation57. Single image underwater enhancement is a challenging problem due to its ill-posed nature. The global information and local details of underwater images are degraded by water, which results in the degeneration of each pixel may be different. Based on this observation, the high-performance model requires global features to capture the degeneration. The underwater enhancement also needs to restore the fine details, which requires the local features. The underwater enhancement is similar to dehazing, which also needs global and local features jointly and a low compute resource requirement. The proposed model can capture the global and local features jointly, which also can be applied to underwater enhancement.

Conclusion

Deep Guided Transformer Dehazing Network (DGTDN) is proposed based on the transformer and guided filter, which boosts the speed of transformer-based dehazing methods and the image quality of dehazed result. The proposed model consists of BaseNet, DetailNet, and GuidedFilerNet. BaseNet and DetailNet are proposed to capture the local and global features jointly. To boost the advantages of the transformer module and the CNN module, we employ the transformer module to predict the base layer of a clean image, and the CNN module to predict the detail layer. To address the dehazing speed problem of the transformer module, we employ the guided filter model to perform a joint up-sampling, which can improve the dehazing speed while keeping the quality of dehazed result. We show the effectiveness of the proposed method by comparing it with state-of-the-art dehazing methods on real and simulated haze images. We also show the effectiveness of the novel modules by comparing the performance of the different architectures and loss functions. In the future, we will study strategies of combining CNN and the transformer, which is critical to capture the local and global features. We also will study the domain shift of simulated-haze and real-haze images, which is critical to boost the dehazing performance on real haze images.

Data availability

The datasets generated and/or analyzed during the current study are available in the RESIDE repository, which can be found at: https://sites.google.com/view/reside-dehaze-datasets/reside-standard. The natural hazy images are from: http://live.ece.utexas.edu/research/fog/fade_defade.html and https://www.cs.huji.ac.il/w~raananf/projects/dehaze_cl/results/.

References

He, K., Sun, J. & Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353 (2011).

Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 34, 13 (2014).

Meng, G., Wang, Y., Duan, J., Xiang, S. & Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In IEEE International Conference on Computer Vision (2013).

Berman, D., Avidan, S. et al. Non-local image dehazing. In IEEE Conference on Computer Vision and Pattern Recognition (2016).

Zhu, Q., Mai, J. & Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 24, 3522–3533 (2015).

Fattal, R. Single image dehazing. ACM Trans. Graph. 27, 72 (2008).

Schechner, Y. Y., Narasimhan, S. G. & Nayar, S. K. Instant dehazing of images using polarization. In IEEE Conference on Computer Vision and Pattern Recognition (2001).

Narasimhan, S. G. & Nayar, S. K. Chromatic framework for vision in bad weather. In IEEE Conference on Computer Vision and Pattern Recognition (2000).

Shwartz, S., Namer, E. & Schechner, Y. Y. Blind haze separation. IEEE Conf. Comput. Vis. Pattern Recogn. 2, 1984–1991 (2006).

Ren, W. et al. Single image dehazing via multi-scale convolutional neural networks. In European Conference on Computer Vision (2016).

Cai, B., Xu, X., Jia, K., Qing, C. & Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 25, 5187–5198 (2016).

Ren, W. et al. Gated fusion network for single image dehazing. In IEEE Conference on Computer Vision and Pattern Recognition (2018).

Li, B., Peng, X., Wang, Z., Xu, J. & Feng, D. An all-in-one network for dehazing and beyond. In IEEE International Conference on Computer Vision (2017).

Li, B. et al. All-In-One Image Restoration for Unknown Corruption. In IEEE Conference on Computer Vision and Pattern Recognition (New Orleans, LA, 2022).

Zhang, H. & Patel, V. M. Densely connected pyramid dehazing network. In IEEE Conference on Computer Vision and Pattern Recognition (2018).

Chen, W.-T., Ding, J.-J. & Kuo, S.-Y. Pms-net: Robust haze removal based on patch map for single images. In IEEE Conference on Computer Vision and Pattern Recognition (2019).

Chen, Z., Wang, Y., Yang, Y. & Liu, D. Psd: Principled synthetic-to-real dehazing guided by physical priors. In IEEE Conference on Computer Vision and Pattern Recognition, 7180–7189 (2021).

Qu, Y., Chen, Y., Huang, J. & Xie, Y. Enhanced pix2pix dehazing network. In IEEE Conference on Computer Vision and Pattern Recognition (2019).

Shao, Y., Li, L., Ren, W., Gao, C. & Sang, N. Domain adaptation for image dehazing. In IEEE Conference on Computer Vision and Pattern Recognition (2020).

Liu, X., Ma, Y., Shi, Z. & Chen, J. Griddehazenet: Attention-based multi-scale network for image dehazing. In IEEE International Conference on Computer Vision (2019).

Dong, H. et al. Multi-scale boosted dehazing network with dense feature fusion. In IEEE Conference on Computer Vision and Pattern Recognition (2020).

Zhang, H., Sindagi, V. & Patel, V. M. Joint transmission map estimation and dehazing using deep networks. IEEE Trans. Circuits Syst. Video Technol. 30, 1975–1986. https://doi.org/10.1109/TCSVT.2019.2912145 (2020).

Song, X. et al. Wsamf-net: Wavelet spatial attention-based multistream feedback network for single image dehazing. IEEE Trans. Circuits Syst. Video Technol. 33, 575–588. https://doi.org/10.1109/TCSVT.2022.3207020 (2023).

Wang, Z. et al. Uformer: A general u-shaped transformer for image restoration. In IEEE Conference on Computer Vision and Pattern Recognition, 17683–17693 (2022).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. Preprint at arXiv:2010.11929 (2020).

Guo, C.-L. et al. Image dehazing transformer with transmission-aware 3d position embedding. In IEEE Conference on Computer Vision and Pattern Recognition, 5812–5820 (2022).

Zhao, D., Xu, L., Ma, L., Li, J. & Yan, Y. Pyramid global context network for image dehazing. IEEE Trans. Circuits Syst. Video Technol. 31, 3037–3050. https://doi.org/10.1109/TCSVT.2020.3036992 (2021).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. In ECCV, 286–301 (2018).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In IEEE Conference on Computer Vision and Pattern Recognition (2017).

Fu, X. et al. Removing rain from single images via a deep detail network. In IEEE Conference on Computer Vision and Pattern Recognition (2017).

Ren, W., Pan, J., Zhang, H., Cao, X. & Yang, M.-H. Single image dehazing via multi-scale convolutional neural networks with holistic edges. Int. J. Comput. Vision 128, 240–259 (2020).

Bai, H., Pan, J., Xiang, X. & Tang, J. Self-guided image dehazing using progressive feature fusion. IEEE Trans. Image Process. 31, 1217–1229 (2022).

Deng, Z. et al. Deep multi-model fusion for single-image dehazing. In IEEE International Conference on Computer Vision, 2453–2462 (2019).

Pan, J. et al. Physics-based generative adversarial models for image restoration and beyond. IEEE Trans. Pattern Analy. Mach. Intell.https://doi.org/10.1109/TPAMI.2020.2969348 (2020).

Zhang, J. & Tao, D. Famed-net: A fast and accurate multi-scale end-to-end dehazing network. IEEE Trans. Image Process. 29, 72–84 (2020).

Wu, H. et al. Contrastive learning for compact single image dehazing. In IEEE Conference on Computer Vision and Pattern Recognition, 10551–10560 (2021).

Zhang, J. et al. Hierarchical density-aware dehazing network. IEEE Transactions on Cybernetics (2021).

Zhang, S. et al. Semantic-aware dehazing network with adaptive feature fusion. IEEE Trans. Cybern. 53, 454–467 (2021).

Ren, W., Sun, Q., Zhao, C. & Tang, Y. Towards generalization on real domain for single image dehazing via meta-learning. Control. Eng. Pract. 133, 105438. https://doi.org/10.1016/j.conengprac.2023.105438 (2023).

Liu, Y., Yan, Z., Tan, J. & Li, Y. Multi-purpose oriented single nighttime image haze removal based on unified variational retinex model. IEEE Trans. Circuits Syst. Video Technol. 33, 1643–1657. https://doi.org/10.1109/TCSVT.2022.3214430 (2023).

Vaswani, A. et al. Attention is all you need. Advances in neural information processing systems30 (2017).

Carion, N. et al. End-to-end object detection with transformers. In European Conference on Computer Vision (ed. Carion, N.) 213–229 (Springer, 2020).

Xie, E. et al. Segformer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural. Inf. Process. Syst. 34, 12077–12090 (2021).

Li, H., Zhang, Y., Liu, J. & Ma, Y. Gtmnet: A vision transformer with guided transmission map for single remote sensing image dehazing. Sci. Rep. 13, 9222 (2023).

Zamir, S. W. et al. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 5728–5739 (2022).

He, K., Sun, J. & Tang, X. Guided image filtering. In European Conference on Computer Vision, 1–14 (2010).

Li, B. et al. Benchmarking single image dehazing and beyond. IEEE Transactions on Image Processing (2018).

Ancuti, C. O., Ancuti, C. & Timofte, R. Nh-haze: An image dehazing benchmark with non-homogeneous hazy and haze-free images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (2020).

Li, R., Pan, J., Li, Z. & Tang, J. Single image dehazing via conditional generative adversarial network. In IEEE Conference on Computer Vision and Pattern Recognition (2018).

Choi, L. K., You, J. & Bovik, A. C. Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans. Image Process. 24, 3888–3901 (2015).

Zhou, J. et al. Ugif-net: An efficient fully guided information flow network for underwater image enhancement. IEEE Transactions on Geoscience and Remote Sensing (2023).

Zhang, D. et al. Rex-net: A reflectance-guided underwater image enhancement network for extreme scenarios. Expert Systems with Applications 120842 (2023).

Zhou, J., Sun, J., Zhang, W. & Lin, Z. Multi-view underwater image enhancement method via embedded fusion mechanism. Eng. Appl. Artif. Intell. 121, 105946 (2023).

Zhou, J., Pang, L., Zhang, D. & Zhang, W. Underwater image enhancement method via multi-interval subhistogram perspective equalization. IEEE Journal of Oceanic Engineering (2023).

Zhou, J., Zhang, D. & Zhang, W. Cross-view enhancement network for underwater images. Eng. Appl. Artif. Intell. 121, 105952 (2023).

Zhang, H. & Patel, V. M. Density-aware single image de-raining using a multi-stream dense network. In IEEE Conference on Computer Vision and Pattern Recognition, 695–704 (2018).

Wu, H., He, F., Duan, Y. & Yan, X. Perceptual metric-guided human image generation. Integr. Comput.-Aided Eng. 29, 141–151 (2022).

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 62101387, 62271321). This work is partially supported by the Science Project of Shaoxing University (Nos. 20205048, 20210026, and 2022LG006), and in part by the Science and Technology Plan Project in Basic Public Welfare class of Shaoxing city (No.2022A11002).

Author information

Authors and Affiliations

Contributions

S.Z.: Conceptualization, Methodology, Software, Writing – original draft. L.Z., K.H. and S.F.: Visualization, Methodology, Software, Writing – original draft. E.F.: Writing – review and editing. L.Z.: Supervision, Writing – review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, S., Zhao, L., Hu, K. et al. Deep guided transformer dehazing network. Sci Rep 13, 15333 (2023). https://doi.org/10.1038/s41598-023-41561-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-41561-z

- Springer Nature Limited