Abstract

Computer-aided detection of brain lesions from volumetric magnetic resonance imaging (MRI) is in demand for fast and automatic diagnosis of neural diseases. The template-matching technique can provide satisfactory outcome for automatic localization of brain lesions; however, finding the optimal template size that maximizes similarity of the template and the lesion remains challenging. This increases the complexity of the algorithm and the requirement for computational resources, while processing large MRI volumes with three-dimensional (3D) templates. Hence, reducing the computational complexity of template matching is needed. In this paper, we first propose a mathematical framework for computing the normalized cross-correlation coefficient (NCCC) as the similarity measure between the MRI volume and approximated 3D Gaussian template with linear time complexity, \({\mathbf{\mathcal{O}}}\left( {{\varvec{a}}_{{{\varvec{max}}}} {\varvec{N}}} \right)\), as opposed to the conventional fast Fourier transform (FFT) based approach with the complexity \({\mathbf{\mathcal{O}}}\left( {{\varvec{a}}_{{{\varvec{max}}}} {\varvec{N}}\log {\varvec{N}}} \right)\), where \({\varvec{N}}\) is the number of voxels in the image and \({\varvec{a}}_{{{\varvec{max}}}}\) is the number of tried template radii. We then propose a mathematical formulation to analytically estimate the optimal template radius for each voxel in the image and compute the NCCC with the location-dependent optimal radius, reducing the complexity to \({\mathbf{\mathcal{O}}}\left( {\varvec{N}} \right)\). We test our methods on one synthetic and two real multiple-sclerosis databases, and compare their performances in lesion detection with FFT and a state-of-the-art lesion prediction algorithm. We demonstrate through our experiments the efficiency of the proposed methods for brain lesion detection and their comparable performance with existing techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

A lesion in the brain represents a diseased area or one with abnormal tissue due to many factors such as infection, traumatic brain injury, tumors, multiple sclerosis (MS), stroke, etc1. The complications resulting from brain lesions are numerous including neurodegeneration, memory or vision loss, and behavioral changes2. The presence of a lesion in the brain often demands prompt clinical investigation to initiate the treatment process. Magnetic resonance imaging (MRI) contributes to the diagnosis significantly by visualizing the neuroanatomy with high soft-tissue contrast in vivo. Detection of the exact location of lesion is essential for clinical implications and thus assists in diagnosis and treatment planning. Manual assessment (i.e. visual screening) of individual two-dimensional (2D) slices from the whole MRI volume is the conventional practice of identifying the presence of lesions. This is a cumbersome task, especially in the presence of multiple lesions (e.g., MS, metastasis, etc.) or when performing it on a large dataset. Inter- and intra-rater variabilities are other caveats of manual lesion detection. The limitations of manual screening and volumetric analysis by trained experts have led to the development of semi-automatic and automatic segmentation algorithms in the last two decades, which can assist the clinician in identifying the region of interest (ROI) from the magnetic resonance (MR) image. The study conducted by Egger et al.3 on fluid attenuation inversion recovery (FLAIR) MRI of MS lesions over 50 cases, reported that the segmentation methods such as lesion growth4 and lesion prediction algorithm (LPA)5 underperformed for quantifying the number of lesions on the subjects with high lesion load. According to them, the difficulties in the evaluation of the confluent lesions in periventricular region might be the cause of poor performance, which emphasizes the importance of prior detection of lesions in MRI volume, especially when multiple lesions are present in brain.

Machine learning, especially deep learning, has been successfully applied for automatic segmentation of brain lesions6. Deep neural networks are popular because of their high performance over conventional machine-learning techniques. For instance, Lu et al.7 adopted transfer learning using AlexNet convolutional architecture to determine the pathological brain slices (images) of various diseases, such as Glioma, MS, Alzheimer’s, Huntington’s, etc., from a T2 MR image database. They employed one layer from each of the fully connected, softmax, and classification layers as the replacement for the last three layers in the original AlexNet, and with this modified network, achieved cent percentage accuracy for detection of the pathological brain from normal slices. In the following year, Lu et al.8 proposed a deep neural architecture comprising different steps, such as: batch normalization to improve pre-trained AlexNet, training on normal and abnormal brain MR images, and implementation of extreme learning machine classifier to replace several layers at the end of the network (in which chaotic bat algorithm was used to optimize the number of end layers for replacement). This methodology outperformed different variants of AlexNet with comprehensive accuracy for binary classification of abnormal MR images from healthy samples. The present-day deep learning requires a large amount of training data as well as high computational power. In the context of MRI, different scanners use different acquisition parameters leading to variations in image characteristics. This might create difficulty for supervised approaches, for instance when testing on a previously unseen image that deviates from the training data. The key advantage of using unsupervised approaches is that they do not require any prior knowledge or training data. Cabezas et al.9 developed a two-stage unsupervised method consisting of modified expectation–maximization (EM) for initial soft-tissue segmentation from T1-weighted (T1), T2-weighted (T2), and proton-density (PD) images and thresholding of FLAIR images for hyperintense-lesion segmentation. They reported 55% accuracy as the best mean sensitivity obtained in hyperintense lesion detection. Generally, thresholding-based approaches depend on intensity histogram to find the cutoff intensity, based on which the target lesion (hyper- or hypo-intense) could be differentiated. This technique faces difficulties in classifying non-lesion regions having similar intensity profile as lesions, where voxel intensity values are influenced by intensity inhomogeneity or artifacts10. Template matching, a fully unsupervised technique, has been employed for automatic lesion detection from medical images because of its satisfactory performance in localization of lesions. A general choice for the template could be a radially-symmetric shape such as a circle in 2D or a sphere in 3D, where the template with varying radii is matched at each image voxel. In other words, the image is locally compared with the template by some similarity measure, and this operation is repeated for a set of radii until the optimal radius is found11,12. Tourassi et al.13 developed a template-matching technique with a mutual-information similarity measure to detect mass from digital mammograms. Later on, Lochanambal et al.14 defined the template based on the shape and the brightness of target lesion and micro calcifications for lesion detection in mammographic images. Template matching has also been employed for detection of lung nodules; for instance, Farag et al.15 proposed deformable 2D and 3D templates for spiral computed tomography (CT) scan images, Osman et al.16 developed a 3D template to perform convolution with 3D ROI image for serial CT images, and Wang et al.17 proposed a 3D spherical template with varying size to detect lesions having a diameter of 4–20 mm for typical CT scan images. Later, Moltz et al.18 developed a generalized method based on template matching for detection of various types of lesions, such as lung nodules, liver metastasis, and lymph nodes, from CT images. Next, we discuss the applications of template matching in MRI.

Warfield et al.19 developed an “adaptive, template moderated, spatially varying statistical classification” framework for segmentation of brain anatomy of neonates, MS lesions, brain tumors, and damaged knee cartilage from MR images by using an anatomical template to moderate the statistical classification. Ambrosini et al.10 proposed a 3D spherical template and used the normalized cross-correlation coefficient (NCCC) as the similarity measure between templates (with varying radii) and the brain volume for automatic detection of metastatic brain tumor from post-contrast T1 MRI (spoiled gradient echo pulse sequence). Later, Farjam et al.20 used 3D post-Gd-DTPA T1 volumes to localize hyperintense metastatic lesions with a 3D spherical-shell template. In a different study, Yang et al.21 considered contrast-enhanced MRI black-blood pulse sequence for metastatic lesion detection using 3D template. Wang et al.22 performed another study with an adaptive template using NCCC as similarity measure for tumor detection using T1 MR images. We found that an exhaustive search was a common choice to find the optimal size of the template in the literature. Such an exhaustive search is responsible for the elevated computational cost of the algorithm, as it increases the runtime proportionally to the number of tried template sizes12. Another factor affecting the computational complexity is the choice of FFT for convolution to compute the NCCC; however, its overall complexity of \({\mathcal{O}}\left( {a_{max} N\log N} \right)\) is still costly (\(a_{max}\) is the number of tried template radii), especially when processing large MRI datasets.

In this work, we develop a new computational framework for the NCCC with the 3D Gaussian template, where we approximate the convolution with the Gaussian as multiple convolutions with a box kernel, per the principle of central limit theorem (inspired by a fast method of computing continuous wavelet transform23), which follows linear time, \({\mathcal{O}}\left( N \right)\), for a fixed radius with respect to the number of voxels in the MRI volume, \(N\). This method is fast with the overall complexity of \({\mathcal{O}}\left( {a_{max} N} \right)\), and produces equivalent results compared to the FFT-based algorithm (Eqs. 1–15), which have been presented in our preliminary work12). We then extend this approach by developing a new template-matching algorithm to compute the NCCC with the optimal-size template in linear time, \({\mathcal{O}}\left( N \right)\), for automatic detection of brain lesions from volumetric MR images. To accomplish this, we propose a mathematical derivation to analytically optimize the maximum template radius for each voxel in the MRI volume, leading to a computational cost that is independent of the radius of template (lesion size). The addition of such a radius-optimized template-matching approach is possible via extending our previous algorithm, but not with the FFT-based method. We tested the performances of our two methods [with overall complexities of \({\mathcal{O}}\left( {a_{max} N} \right)\) and \({\mathcal{O}}\left( N \right)\)] on synthetic data and real MR image databases of MS lesions, and then compared them with those of the conventional FFT-based method and the LPA. Experimental results over synthetic data validate the proposed approach, and lesion-detection results on real MRI volumes show its effectiveness and applicability.

Methods

Template matching in \({\mathbf{\mathcal{O}}}\left( {a_{max} N} \right)\)

Let \(f\left( {\mathop{X}\limits^{\rightharpoonup} } \right)\) be the \(D\)-dimensional input image the lesions in which we intend to detect. In this work, the \(D\)-dimensional Gaussian is proposed as the template, which is approximated—following the central limit theorem—by convolving a \(D\)-dimensional symmetric and normalized box kernel, \(h_{D} \left( \cdot \right)\), with itself \(n - 1\) times, denoted as \(h_{D}^{\left( n \right)} \left( \cdot \right)\) (aka B-splines24). In our experiments, a small value of \(n = 2\) or \(n = 3\) turned out to be sufficient. The sizes of the box kernel and the engulfing template are \(2a\) and \(2b\), respectively, in all dimensions; i.e. \(h_{D} \left( {\mathop{X}\limits^{\rightharpoonup} } \right)\) is \(1/\left( {2a} \right)^{D}\) if \(\mathop{X}\limits^{\rightharpoonup} \in {\Omega }_{a}\), and \(0\) otherwise, where \({\Omega }_{l} { := }\left\{ {\mathop{X}\limits^{\rightharpoonup} {|}\left| {X_{1} } \right|, \ldots ,\left| {X_{D} } \right| \le l} \right\}\). To ensure that the box kernel fits in the engulfing template after the convolutions, we restrict its size as: \(0 < a \le a_{max} < b/n\). The similarity between the given image and the symmetric template with a varying \(a\) can be computed from the following formula for the NCCC:

where \(\overline{f}\left( {\mathop{X}\limits^{\rightharpoonup} } \right)\) and \(\overline{{h_{D}^{\left( n \right)} }}\) represent the mean of the image inside the template centered at \(\mathop{X}\limits^{\rightharpoonup}\), and the mean of the template, respectively. Our goal is to maximize NCCC with respect to both \(a\) and \(\mathop{X}\limits^{\rightharpoonup}\), to accurately localize the lesions. In the following, we proceed to calculate different terms in Eq. (1).

Since by definition \(\int_{{\Omega_{b} }} {\left( {h_{D}^{\left( n \right)} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right) - \overline{{h_{D}^{\left( n \right)} }} } \right)d\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = 0}\), we can omit \(\overline{f}\left( {\mathop{X}\limits^{\rightharpoonup} } \right)\) from the numerator of Eq. (1). Noting that \(h_{D}^{\left( n \right)} \left( { - \mathop{X}\limits^{\rightharpoonup} } \right) = h_{D}^{\left( n \right)} \left( {\mathop{X}\limits^{\rightharpoonup} } \right)\), we compute \(\int_{{\Omega_{b} }} {f\left( {\mathop{X}\limits^{\rightharpoonup} + \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right)h_{D}^{\left( n \right)} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right)d\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } = \int_{{{\mathbb{R}}^{D} }} {f\left( {\mathop{X}\limits^{\rightharpoonup} + \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right)h_{D}^{\left( n \right)} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right)d\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \left( {f*h_{D}^{\left( n \right)} } \right)\left( {\mathop{X}\limits^{\rightharpoonup} } \right)}\). Thanks to the separability property of the template, i.e. \(h_{D}^{\left( n \right)} \left( {\mathop{X}\limits^{\rightharpoonup} } \right) = \prod\nolimits_{j = 1}^{D} {h_{1}^{\left( n \right)} } \left( {X_{j} } \right)\), we can first find the solution to this convolution for \(D = 1\) and then apply it sequentially for each dimension. For \(D = 1\), we note that:

We assume (and then verify) the following solution for the convolution:

where \(F_{n} \left( X \right): = \int_{ - \infty }^{X} {F_{n - 1} } \left( {X^{\prime}} \right){\text{d}}X^{\prime}\), with \(F_{0} { := }f\). Let \(\gamma_{n,k} { := }0\) for \(\left| k \right| > n\), and also \(\gamma_{0,0} { := }1\) for the case with no convolution. Now, to verify that Eq. (3) is indeed a solution and to compute \(\gamma_{n,k}\), we substitute Eq. (3) in Eq. (2):

According to Eq. (3), replacing \(n\) with \(n + 1\) should result in:

This validates our assumption in Eq. (3) by induction, as Eq. (3) is true for the base case \(n = 0\), and provided that it is true for \(n\), it holds for \(n + 1\) [thanks to similarities between Eqs. (4) and (5)] with the following recursive relationship for \(\gamma\), which is obtained by coefficient matching between Eqs. (4) and (5):

For example, \(\gamma_{{1,\left\{ { - 1,0,1} \right\}}} = \left\{ { - 1,0,1} \right\}\) and \(\gamma_{{2,\left\{ { - 2, \ldots ,2} \right\}}} = \left\{ {1, 0, - 2, 0, 1} \right\}\). Being closely related to Pascal’s triangle, Eq. (6) is solved as follows:

where \(\left( {\begin{array}{*{20}c} n \\ s \\ \end{array} } \right) = n!/s!\left( {n - s} \right)!\) is the binomial coefficient. We now extend this to \(D\) dimensions and solve the first numerator term of Eq. (1) with the help of \(\gamma_{n,k}\):

where \(\sum\nolimits_{{\mathop{k}\limits^{\rightharpoonup} = - n}}^{n} {\left( \cdot \right)}\) is short for \(\sum\nolimits_{{k_{1} , \ldots ,k_{D} = - n}}^{n} {\left( \cdot \right)}\). The operator \({\mathcal{S}}_{n,D} \left\{ f \right\}\) facilitates integration of \(f\) in all dimensions, and is defined recursively as:

Using the fact that \(\overline{{h_{D}^{\left( n \right)} }} = 1/\left( {2b} \right)^{D}\) (see below), the remainder of the numerator of Eq. (1) can be computed similarly to Eq. (8) (by fixing \(n = 1\)):

Next, we rewrite the first factor of the denominator of Eq. (1) using the common expansion of the variance as \(\int_{{{\Omega }_{b} }} {\left( {f\left( {\mathop{X}\limits^{\rightharpoonup} + \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right) - \overline{f}\left( {\mathop{X}\limits^{\rightharpoonup} } \right)} \right)^{2} {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \left( {2b} \right)^{D} \left( {\overline{{f^{2} }} \left( {\mathop{X}\limits^{\rightharpoonup} } \right) - \overline{f}\left( {\mathop{X}\limits^{\rightharpoonup} } \right)^{2} } \right)}\), where \(\overline{{f^{p} }} \left( {\mathop{X}\limits^{\rightharpoonup} } \right)\), \(p = 1,2\), is calculated similarly to Eq. (8) (by fixing \(n = 1\)) as:

Lastly, we calculate the second factor in the denominator of Eq. (1), again using the common expansion of the variance, as: \(\mathop \int \nolimits_{{\Omega _{b} }} \left( {h_{D}^{{\left( n \right)}} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right) - ~\overline{{h_{D}^{{\left( n \right)}} }} } \right)^{2} d\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \left( {2b} \right)^{D} \left( {\overline{{h_{D}^{{\left( n \right)^{2} }} }} - \overline{{h_{D}^{{\left( n \right)}} }} ^{2} } \right).\) To that end, we calculate the Fourier transform of the box function as \({\mathcal{F}}\left\{ {h_{D} } \right\} = \prod\nolimits_{j = 1}^{D} {{\text{sinc}}} \left( {a\omega_{j} } \right)\), where \({\text{sinc}}\theta = \left( {\sin \theta } \right)/\theta\), which leads to the Fourier transform of the kernel \({\mathcal{F}}\left\{ {h_{D}^{\left( n \right)} } \right\} = \, {\mathcal{F}}\left\{ {h_{D} } \right\}^{n} = \prod\nolimits_{j = 1}^{D} {{\text{sinc}}^{n} } \left( {a\omega_{j} } \right)\) via the convolution theorem. The integral of the template then is \(\int_{{{\Omega }_{b} }} {h_{D}^{\left( n \right)} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right){\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } = \int_{{{\mathbb{R}}^{D} }} {h_{D}^{\left( n \right)} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} } \right){\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \left. {{\mathcal{F}}\left\{ {h_{D}^{\left( n \right)} } \right\}} \right|_{{\mathop{\omega }\limits^{\rightharpoonup} = 0}} = \prod\nolimits_{j = 1}^{D} {{\text{sinc}}^{n} } \left( 0 \right) = 1}\), from which the template mean is computed as \(\overline{{h_{D}^{\left( n \right)} }} = 1/\left( {2b} \right)^{D}\). As for the mean of the square of the template, we use Parseval’s theorem as follows:

We now define and compute25,26:

Therefore, \(\overline{{h_{D}^{{\left( n \right)^{2} }} }} = \left( {\frac{{\beta _{n} }}{{2ab}}} \right)^{D}\), and subtracting \(\left( {2b} \right)^{D} \overline{{h_{D}^{\left( n \right)} }}^{2}\) from it leads to:

Substituting all the above in Eq. (1) will result in the following formula for the proposed fast approach of NCCC computation:

NCCC values range from \(- 1\) to \(1\). In this subsection, we compute the NCCC for several different values of \(a\). However, note that \({\mathcal{S}}_{n,D} \left\{ {f^{p} } \right\}\) are volumes independent of \(a\) and can be pre-computed, along with the second term in the numerator and the first factor in the denominator of Eq. (15). Furthermore, the second factor in the denominator is a scalar that is computed fast in \({\mathcal{O}}\left( 1 \right)\) for each \(a\). Thus, in the proposed approach to estimate NCCC with the Gaussian template, the bulk of the computational cost is only due to the first term in the numerator of Eq. (15), which can be computed in \({\mathcal{O}}\left( N \right)\) for each \(a\), with \(N\) the number of voxels in the image. On the contrary, the computational complexity of FFT is \({\mathcal{O}}\left( {N\log N} \right)\) for each \(a\)27, making the overall computational cost of lesion detection noticeably higher for the FFT-based algorithm (template has to be zero-padded to the size of the image) than the proposed approach. This difference in computational cost is particularly amplified given that the NCCC needs to be repeatedly computed for many values of \(a = 1,2, \ldots , a_{max}\).

Template matching in \({{\mathcal{O}}}\left( {\text{N}} \right)\)

Defining NCCC

We intend to optimize the NCCC with respect to the template radius; however, analytical maximization of Eq. (15) with respect to the template radius is difficult. Therefore, we aimed at redefining NCCC, which would be suitable for voxel-wise maximization of template radius. A flowchart of the proposed method is illustrated in Fig. 1. Let us assume the template, \(g_{D} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right){\text{: = exp}}\left( { - \frac{1}{{2a^{2} }}\left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} } \right)\), and the ROI are both Gaussian, where \(\Vert \bullet \Vert\) denotes the Euclidean norm. As in the previous section, we solve the NCCC by considering all terms of numerator and denominator, individually. We begin by focusing on the first term of the numerator of Eq. (1). For a Gaussian-shaped lesion, this term can be solved as:

where, \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {\mu } (\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) and \(\sigma(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) are the mean (with respect to \((\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\)) and standard deviation (SD) of the ROI, i.e. the location and the radius of the lesion, respectively. We now simplify Eq. (16) by reducing the power of exponential via completing the square as,

A flowchart depicting the proposed \(\mathcal{O}\left(\mathrm{N}\right)\) method. We first calculate a closed-form formula for the optimal radius by Gaussian modeling of the lesion, which we then apply to the local image statistics (estimated from a smoothed version of the MRI volume) to compute the optimal radius at each voxel. We then use an approximated Gaussian template (by consecutive convolutions with the boxcar kernel) to compute the NCCC at each voxel, from which we keep a pre-determined number of top detected lesions.

Now we can rewrite Eq. (16) as,

We define \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t} { := }\frac{{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} ~ - ~\frac{{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {\mu } \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)}}{{1 + \frac{{\sigma ^{2} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)}}{{a^{2} }}}}}}{{a\sigma \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)\sqrt {\frac{2}{{a^{2} ~ + ~\sigma ^{2} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)}}} }}\); hence, \({\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \left( {a\sigma \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)\sqrt {\frac{2}{{a^{2} ~ + ~\sigma ^{2} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)}}} } \right)^{{\text{D}}} {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t}\). After the change of variables, and assuming the radius of engulfing template to be sufficiently large, i.e. \(b\to \infty\), Eq. (18) can be reduced by using the properties of the error function (\(\mathrm{erf}\left(v\right)=\frac{1}{\sqrt{\pi }}{\int }_{-v}^{v}{e}^{{-t}^{2}}\mathrm{d}t;\mathrm{erf}\left(\infty \right)=1\)) to the following, which completes the calculation of the first term of the numerator:

We now focus on the first term of denominator, i.e. image variance inside the template. We use the expansion of variance, \(\int_{{\Omega _{b} }} {\left( {f\left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} + \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} ^{\prime}} \right) - \bar{f}\left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)} \right)^{2} } {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} ^{\prime} = \left( {2b} \right)^{D} \left( {\overline{{f^{2} }} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right) - \bar{f}\left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)^{2} } \right)\), and compute \(\overline{{f }^{2}}(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) and \({\overline{f }(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )}^{2}\) as follows.

Again, for a large \(b\to \infty\), Eq. (20) can be simplified with the help of the error function as:

Similarly, \({\overline{f }(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )}^{2}\) is computed in the same way as in Eq. (20), and for a large \(b\), one can see that,

Subtracting Eq. (22) from Eq. (21), we get an approximation for the first term of denominator of NCCC for a large \(b\) as,

Next, we took on the second term of denominator, i.e. the template variance. We followed the expansion solution to initiate its computation. First, the mean of the square of the template can be computed as,

Like previous steps, for a large \(b\to \infty\), Eq. (24) can be simplified as, \({\left(2b\right)}^{D}\overline{{{g }_{D}}^{2}}= {\left(\sqrt{\pi }a\right)}^{D}.\) Similarly to above, the mean of the template, \({\left(2b\right)}^{D}{\overline{{g }_{D}}}^{2}\), is zero for \(b\to \infty\), and we obtain the approximate solution (for large \(b\)) of the second term of denominator of NCCC as,

Next, we compute the second term of numerator of NCCC. We have, \(\overline{{g }_{D}}=0\); therefore, \(\int_{{\Omega _{b} }} f \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} + \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} ^{\prime}} \right)\overline{{g_{D} }} {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} ^{\prime} = 0\).

Substituting the obtained solutions of all terms associated with NCCC in Eq. (1), and simplifying it further, results in the following formula as the final solution for computing NCCC, when both image and template are Gaussian.

Optimization of template radius

The present form of NCCC in Eq. (26) is suitable for analytical maximization with respect to the template radius, \(a\). The voxel-wise maximizer, \(a\), would allow the computation of NCCC in a single step, thus reducing the computational complexity to the linear time, given that the NCCC no longer needs to be computed for a set of template radii. Let us define \(\alpha = a/\sigma \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)\). With this notation, Eq. (26) can be simplified as,

where, \(\rho := \frac{{\left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {\mu } \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)} \right\|^{2} }}{{2\sigma ^{2} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)}}\). After substitution, we maximize the NCCC by equating the derivative of Eq. (27) with respect to \(\alpha\), \(\frac{{{\text{d}}Y}}{{{\text{d}}\alpha }} = e^{{ - \frac{\rho }{{\alpha ^{2} + 1}}}} \left( {\frac{{2\alpha }}{{\alpha ^{2} + 1}}} \right)^{{D/2}} \left( {\frac{{2\alpha \rho }}{{\left( {\alpha ^{2} + 1} \right)^{2} }}{-}\frac{{D\left( {\alpha ^{2} - 1} \right)}}{{2\alpha \left( {\alpha ^{2} + 1} \right)}}} \right)\), to zero, resulting in the following analytically optimized voxel-wise template radius,

Local statistics of image volume

The local statistics, mean \(\mathop{\mu }\limits^{\rightharpoonup} (\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) and SD \(\sigma (\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) above, are the key parameters to compute the voxel-wise optimized radius. We measured these two parameters from Gaussian smoothed image to counter the small intensity variations in MRI. Gaussian smoothing was performed on the MRI volume with a 3D Gaussian filter of size \(W\times W\times W\), where \(W\) = 2 \({b}^{\mathrm{^{\prime}}}+1\) and \({b}^{\mathrm{^{\prime}}}<b\), and an SD of 2 voxels. We then computed the background minimum image, \({f}_{BG}\), via moving minimum filtering over the smoothed image with a kernel of the same size to record the local minimum value inside the sliding window, which we then used in computing local statistics. The time complexity of this step is still kept \(\mathcal{O}(N)\) by sequentially applying 1D filters in each dimension in both filtering steps.

Mean

We compute the local (moving) mean at each voxel in the smoothed image. The moving minimum is locally subtracted from the local image, in order to remove the effect of the background in the local neighborhood. We define the D-dimensional mean as described below.

where \(f_{s} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right)\) is the Gaussian-smoothed image. The second term of the numerator is zero due to symmetry. To simplify the calculation of the above expression, we make use of a linear change of variables. Assume, \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t} - \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X}\), so \({\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t}\). Hence, we can rewrite Eq. (29) as,

The above equation can be computed in linear time using our proposed solution for convolution in D dimensions [refer to Eq. (8)] to solve all the integrals (with n = 1) as shown below.

Standard deviation

SD is computed for each voxel by using a \(W\times W\times W\) kernel in Gaussian-smoothed background-subtracted image. From the definition of variance, the SD (\(\sigma\)) can be written as,

The covariance of a vector is defined as a \(D\times D\) matrix. However, given that the template is a radially symmetric Gaussian, we consider a scalar variance here as \(1/D\) of the trace of the covariance matrix. We proceed by computing \(\overline{{\left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} }}\) as,

The denominator of \(\mathop{\mu }\limits^{\rightharpoonup} (\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\) and \(\overline{{\left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} }}\) are the same, which is computed in Eq. (31). We compute the first term of numerator of \(\overline{{\left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} }}\), by a change of variables, \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t} - \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X}\), so \({\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{X^{\prime}}} = {\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t}\),

Similarly to the case of the mean, we apply our approach to computation of convolution in the above equation (with n = 1) as described below,

We compute the second term in the numerator of Eq. (33) the same way as in Eq. (35), by replacing \(f_{s} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {t} } \right)\) with the constant \(1\). Finally, we have the solution of the terms necessary to calculate local SD, \(\sigma (\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} )\), from Eq. (32) in linear time.

Template matching with maximized radius

In this step, the mean and SD are substituted in Eq. (28) to obtain the “\(a\)”-maximized volume. Further, the optimized \({a}^{*}\) volume should be thresholded as \({a}^{*}\leftarrow \mathrm{min}\left({a}^{*},b/n-1\right)\) before being substituted in the proposed solution of NCCC as described in Eq. (15). This is how the NCCC computational complexity can be reduced form \(\mathcal{O}\left({a}_{max}N\right)\) to \(\mathcal{O}(N)\), i.e. we no longer need to use a set of templates with varying radii. In contrast, the FFT approach cannot make use of an optimal radius map to reduce its complexity any lower than \(\mathcal{O}\left({a}_{max}N\mathrm{log}N\right)\), given that FFT-based convolution cannot be performed with a locally varying kernel.

Results

In this section, we present experimental results comparing the proposed methods and other existing techniques, on 3D synthetic and real MRI data (\(D=3\)). We first describe the FFT-based NCCC computation, to which we will compare our methods next.

FFT-based template matching

We describe here how to use FFT to calculate the NCCC via Eq. (1). We define a truncated Gaussian kernel (\(n\to \infty\)) as, \(h_{D}^{{\left( \infty \right)}} \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right) = \exp \left( { - \left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} /\left( {2a^{2} } \right)} \right) \times Box_{{kernel}}\) for \(a=1, . . . , {a}_{max}\), which is used as the template. \(Bo{x}_{kernel}\) is a volume of equal size to the image, with the value of 1 inside \({\Omega }_{b}\) and 0 outside. We solve the convolution in the frequency domain by multiplying the FFT of \(f\) by that of \({h}_{D}^{(\infty )}\) and inverse Fourier transforming (IFFT) it, resulting in the first term of numerator of Eq. (1). The remainder of the numerator is computed similarly by using \(Bo{x}_{kernel}\) instead of \({h}_{D}^{(\infty )}\). The mean of the template is calculated as,\(~\overline{{h_{D}^{{\left( \infty \right)}} }} = \mathop \smallint \nolimits_{{\Omega _{b} }} \exp \left( { - \left\| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} } \right\|^{2} /\left( {2a^{2} } \right)} \right){\text{d}}\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}} {X} /\left| {\Omega _{b} } \right|\). The denominators can be similarly computed. In the FFT-based approach, NCCC needs to be computed for a set of template radii, resulting in the computational complexity of \(\mathcal{O}\left({a}_{max}N {\text {log}}N\right)\).

Experiment on synthetic data

We first evaluated the proposed methods on synthetic data and compared their performances with that of FFT. We compared the detection accuracy and runtime of the three methods. We created twenty artificial volumes of size \(513\times 513\times 513\) voxels, where each volume contained an enhancing sphere located at a random location with a random radius (varying in between 8 to 21 pixels) to generate the synthetic data. In our first experiment, we aimed to determine how accurately the enhancing spheres could be detected with their approximated radii from the respective volumes. During the experiments, we used the same input parameters for all methods such as \(b=50\) and \({a}_{max}=24\) (except for the \(\mathcal{O}(N)\) method, instead \({b}^{^{\prime}}=24\)). Note that \(n\) is \(\infty\) for FFT and 2 for our methods. \({a}_{max}\) was calculated as \({a}_{max}=b/n-1\). During this experiment, we observed that both the FFT-based and our \(\mathcal{O}\left({a}_{max}N\right)\) template matching accurately identified the centers of the enhancing spheres in the respective volumes. For the proposed \(\mathcal{O}(N)\) method, we calculated the analytically optimized \({a}^{*}\) for each voxel, using which we computed the NCCC in linear time over the entire image. We then simply looked for the voxel with maximal NCCC value to identify the center of the detected enhancing sphere, and the \({a}^{*}\) at that location was considered to be the detected radius. Since the SD of the Gaussian is proportional—but not necessarily equal—to the radius of the detected binary sphere, we conducted a linear regression analysis to reveal the ratio of the gold-standard binary radius to the detected Gaussian SD. From the experiment, we found their ratio to be 1.61 ± 0.05 and 1.74 ± 0.06 for the \(\mathcal{O}\left({a}_{max}N\right)\) and the FFT methods, respectively, both values significant (\(p=9\times {10}^{-18}\))12.

As for the \(\mathcal{O}\left(N\right)\) method, we first obtained the abovementioned ratio as 1.96 ± 0.11 (\(p=5\times {10}^{-16}\)). The centers of the enhancing spheres, however, were not detected by the \(\mathcal{O}\left(N\right)\) method with pinpoint accuracy; the mean distance between the gold-standard and detected centers was 1.6 ± 1.8 voxels. Consequently, we looked up the values of \({a}^{*}\) at the known centers of the spheres, and recomputed the gold-standard-to-detected ratio as 2.12 ± 0.11. Next, we repeated the \(\mathcal{O}\left(N\right)\) experiment with the corrected \({a}^{*}\leftarrow 2.12\times {a}^{*}\). This helped us to reach absolute localization accuracy, with the new gold-standard-to-detected ratio of 1.01 ± 0.03 (\(p=8\times {10}^{-21}\)).

We performed another experiment on the synthetic data to analyze the runtime. The data were generated by creating 20 different volumes with linearly increasing number of voxels from \(N={10}^{6} (100\times 100\times 100)\) to \(N=1.25\times {10}^{8} (500\times 500\times 500)\), each of which containing a homogeneous bright sphere (all ones) of radius 17 pixels at the center. We ran the scripts of the algorithms 10 times for each synthetic volume for sphere detection with the same parameters as above. We measured the mean and SD of the 10 runtimes and compared them among the different methods, as depicted in Fig. 2. We ran the experiments in MATLAB on a Linux compute cluster with each node including 8 processors with 56 GB shared virtual memory (cluster allots 1 CPU with 7 GB of virtual memory to a single submitted job). To run the scripts on single thread for an unbiased comparison, we used the singleCompThread option, but we found that MATLAB still sometimes multithreaded the codes on multiple cores. Therefore, we report both the wall time (the real-world time elapsed between the start and end of the code) and the CPU time (the total time spent by all cores to execute the script). The \(\mathcal{O}\left(N\right)\) method (green curve in Fig. 2) was respectively 3.3 ± 0.7 and 1.4 ± 0.1 times faster than the FFT and \(\mathcal{O}\left({a}_{max}N\right)\) methods in terms of CPU time, and 2.2 ± 0.6 and 1.2 ± 0.2 times faster in terms of wall time. Additionally, the \(\mathcal{O}\left({a}_{max}N\right)\) approach was 2.5 ± 0.4 and 1.9 ± 0.4 times faster than the FFT method in terms of CPU and wall times, respectively. The non-monotonicity seen in the FFT runtime is partially because the radix-2 implementation is faster when each dimension is a power of 2. We also observed that the \(\mathcal{O}\left({a}_{max}N\right)\) method required 1.7 ± 0.3 and 2.5 ± 0.3 times less resident memory then the FFT and \(\mathcal{O}\left(N\right)\) methods, respectively, and that the FFT-based template matching used 1.5 ± 0.3 times less memory than the \(\mathcal{O}\left(N\right)\) method.

Analysis of runtime (CPU and wall time) and resident memory of \(\mathcal{O}\left(N\right)\) (green), \(\mathcal{O}\left({a}_{max}N\right)\) (red), and FFT-based (blue) approaches on synthetic data. As expected, the \(\mathcal{O}\left(N\right)\) approach is the fastest of the three, with a linear trend with respect to the number of voxels. The runtime of the FFT method fluctuates due to the necessary zero-padding to the nearest power of 2.

Experiments on clinical MRI

We tested all three methods on in vivo MRI data of human brain for automatic detection of MS lesions. MS is a chronic demyelinating disease that generally affects the central nervous system, with lesions appearing in various regions of the brain, predominantly the white matter (WM)4,28. Given that the cerebrospinal fluid (CSF) is attenuated in FLAIR [though sometime lesions are iso-intense to the hyper-intense gray matter (GM)], FLAIR MRI is suitable for understanding the lesion burden and measuring the amount of damage due to demyelination3,6. We tested the methods over the FLAIR sequence of two different MS lesion MRI databases; the first one is the training data of ‘MS lesion segmentation challenge 2008’ (MSLS 2008), which was conducted in conjunction with MICCAI 2008 (http://www.ia.unc.edu/MSseg), and the other dataset is the training data of the ‘MS segmentation challenge using a data management and processing infrastructure’ (MSSEG 2016), which was conducted in MICCAI 2016 (https://portal.fli-iam.irisa.fr/msseg-challenge/overview).

MRI scans of MS subjects

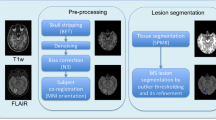

We used 20 cases of training data of MSLS 2008 to evaluate the methods using the gold-standard annotation from the Boston Children’s Hospital (BCH) rater as the reference. Isotropic-voxel (0.5 × 0.5 × 0.5 mm3) FLAIR images of size 512 × 512 × 51229 were preprocessed through N4 bias field correction (https://www.slicer.org), brain extraction from T1 images using the volBrain tool (http://volbrain.upv.es), and then the resulting brain masks were multiplied with bias-corrected FLAIR images to remove the skulls.

The 15 cases of preprocessed (nonlocal means (NLM) filtering, rigid registration, skull stripping, and N4 bias field correction) MSSEG 2016 training data, as provided in the challenge website, were used in this study6. We sampled the 3D FLAIR images in the slice direction of each volume (with linear interpolation for FLAIR and nearest-neighbor for the gold-standard lesion and brain masks) to obtain isotropic-voxel (1 × 1 × 1 mm3) data. After sampling, images were transformed into the new sizes, including 315 × 512 × 512, 154 × 224 × 256, and 245 × 336 × 336. The consensus segmentation obtained from all seven delineations (by seven expert raters) was used as the gold-standard for the performance evaluation of all methods implemented in this study6.

We min–max normalized the images of both databases before all experiments, and also kept the same input parameters during the experiments, such as \(b=18\) and \({a}_{max}=8\) for the \(\mathcal{O}\left({a}_{max}N\right)\) and FFT-based methods, whereas the \(\mathcal{O}\left(N\right)\) method dealt with all similar parameters, except \({a}_{max}\); instead we had \({b}^{^{\prime}}=8\). We chose \(n=\infty\) for the FFT methods and \(n=2\) for the other two methods, but eventually following a similar approach to find \({a}_{max}\) in the FFT and \(\mathcal{O}\left({a}_{max}N\right)\) algorithms.

Performance evaluation on clinical MRI data

The performance of all methods applied for lesion detection were quantified with respect to the expert-made manual annotation of MS lesions in MRI volumes by evaluating the popular statistical metrics such as the true positive fraction (TPF, or sensitivity) and false positive fraction (FPF, or false discovery rate)9 as defined below,

where \({L}_{A}\) and \({L}_{M}\) are the label volumes corresponding to automatic detection and manual annotation, respectively, and \(\neg\) denotes negation. A TPF value close to 100% signifies that all voxels representing lesions in the annotated gold-standard are correctly detected, while the \(0\) value indicates absolutely no detection. Conversely, an FPF value of 100% means no detection, and a value of 0 means that all detected voxels were correctly inside the label.

We now discuss how we used the above metrics to evaluate the performance of the \(\mathcal{O}\left({a}_{max}N\right)\), \(\mathcal{O}\left(N\right)\), and FFT approaches. We first computed the \(a\)-maximized NCCC from the FLAIR volume using each method. Many methods in the literature threshold the NCCC to keep putative lesion voxels, on which various performance metrics are evaluated10,17,20. In this work, instead of selecting a threshold, we considered \({N}_{Top}\) detected voxels with the highest NCCC value. We found the voxel with maximal NCCC from the resulting \(a\)-maximized NCCC volume, masked a sphere around it with a radius twice the optimal value of \(a\), updated the NCCC volume by filling the sphere with the value of -∞, then repeated this process to find all \({N}_{Top}\) maxima.

Next, as described in Algorithm 1, we created a sphere of all ones with the radius 1.61 times the optimally detected \(a\), centered at the location of top detection, inside an all-zero volume, \(M\), with the same size as the input image. We compared \(M\) with the gold-standard label to calculate the TPF and FPF for the case of \({N}_{Top}=1\). We then added an enhancing sphere in M for the next detection to compute the TPF and FPF for \({N}_{Top}=2\) and so on.

The mean TPF vs. FPF graphs over the 20 subjects of MSLS 2008 and the 15 subjects of MSSEG 2016 are presented in Fig. 3 for the three methods. We also recorded the computation times and resident memory usage of the three algorithms while running them over the real data as summarized in Table 1. The runtimes and required memory while executing the entire MATLAB scripts (termed as Algorithm) and those of the script till getting the \(a\)-maximized NCCC volume (termed as NCCC), are shown in Table 1. All the experiments over real data were performed within the same computational setup as used in the synthetic data analysis. The MATLAB scripts of all three methods were run only once on each image. The visualization of the top 30 detected lesions resulting from all three template matching algorithms are presented in Figs. 4 and 5.

Performance analysis (TPF vs. FPF) of proposed \(\mathcal{O}\left(N\right)\) (green), \(\mathcal{O}\left({a}_{max}N\right)\) (red), and other FFT-based (blue) and LPA (magenta) approaches over real MRI image database of (a) MSLS 2008 and (b) MSSEG 2016. The \(\mathcal{O}\left(N\right)\) method has the highest accuracy among the three NCCC-based approaches. Note that the superior performance of the LPA approach is expected, given that it is supervised segmentation, as opposed to unsupervised template-matching lesion detection.

The visualization of the top 30 detected lesions resulting from the proposed \(\mathcal{O}\left(N\right)\)-based template matching algorithm on the first case of MSSEG 2016 training data. The green and cyan circles indicate the true and false positives, respectively. Thick and thin circles are detected in the shown and nearby slices, respectively. The top-30 detections are mostly true positives and in the white matter region. False positives are often hyperintense regions mimicking lesions.

The top 30 detected lesions resulting from the \(\mathcal{O}\left({a}_{max}N\right)\)- (left) and FFT-based (right) template matching algorithms on the first case of MSSEG 2016 training data. The red (\(\mathcal{O}\left({a}_{max}N\right)\)) and blue (FFT) circles indicate the true lesions, and the false lesions are identified with cyan colored circles. Thick lesions are those centered at the shown slice, whereas thin circles represent the intersections with other detected spheres in the nearby slices. The performance of the two methods are similar, given that they both exhaustively search for the lesion size that maximizes the same NCCC, albeit computed with different algorithms. However, as seen in Fig. 3, these two methods do not achieve the accuracy of the \(\mathcal{O}\left(N\right)\) method.

Comparison with a state-of-the-art technique

We compared our methods with the lesion prediction algorithm (LPA)5, which is a logistic-regression based supervised learning technique (fundamentally different from our methods) and requires only the FLAIR image with no input parameters for MS lesion detection. We performed binary thresholding over the resulting volume of lesion probability map to compute the TPF and FPF. To maintain the parity with the proposed method in the performance evaluation, we chose the threshold in between 0 and 1 with an interval of \(2\times {10}^{-4}\). The LST toolbox (https://www.applied-statistics.de/lst.html) was utilized in statistical parametric mapping (SPM12; https://www.fil.ion.ucl.ac.uk/spm) for the implementation of LPA. The mean TPF vs. FPF plots over the MS lesion databases are illustrated in Fig. 3. We can see the better performance of LPA especially on the MSSEG 2016 data compared to the proposed and other methods used in this study.

Discussion

This paper focuses on fast template-matching based automatic brain lesion detection from MRI volumes. The proposed methods have complexities of \(\mathcal{O}\left(N\right)\) and \(\mathcal{O}\left({a}_{max}N\right)\), compared to \(\mathcal{O}\left({a}_{max}N\mathrm{log}N\right)\) of the FFT approach. From the results of time complexity experiment over synthetic data (see Fig. 2a,b), it is clear that \(\mathcal{O}(N)\) method is the fastest of the three template-matching algorithms for sphere detection. We derived a new analytical solution to obtain the voxel-wise optimal template radius from local image statistics. The proposed analytical solution is a computational alternative to exhaustive search for the template size. The \(\mathcal{O}(N)\) method could detect the bright spheres at their exact centers in the synthetic data (after using the appropriate multiplier). On the other hand, the FFT and \(\mathcal{O}\left({a}_{max}N\right)\) based template matching with exhaustive search possess similar accuracy for detection of the bright spheres as expected. The only small deviation persisting between them for the prediction of the radius of the sphere is due to employment of approximated Gaussian template in the \(\mathcal{O}\left({a}_{max}N\right)\) technique. The results on synthetic data validate the theoretical prediction of achieving the linear complexity in template matching by our methods (Fig. 2a,b). Regarding the resident memory usage, the \(\mathcal{O}\left({a}_{max}N\right)\) method appears to be quite efficient holding less memory than the other two methods. The \(\mathcal{O}\left(N\right)\) method, however, uses high memory. The analytically optimized template radius can be computed from mean and SD, which are computed from the local 3D neighborhood. The \(\mathcal{O}\left(\mathrm{N}\right)\) method, therefore, requires storing auxiliary variables (such as mean and variance volumes) in the memory, thereby using more memory than the other two methods. In the exhaustive-search cases, we performed loop operation inside the MATLAB script to execute the similarity measure computation step for a set of template radii. We reduced the memory requirement by updating NCCC with the voxel-wise maximized values, thereby avoiding the storage of a separate NCCC volume for each tried radius. Figure 2c shows that that the memory requirement increases linearly with the number of voxels in the volumes. In case of real data, the results follow a similar trend. The FFT method requires more memory than the \(\mathcal{O}\left({a}_{max}N\right)\) method does, because during template matching with the set of varying radii (loop operation) in the FFT method, we need to create a convolution kernel (template) that is as large as the input image, for every tried radius (so they can be multiplied elementwise in the frequency domain).

Regarding the runtimes of the methods on real MR images, Table 1 shows that the CPU time of the \(\mathcal{O}\left(N\right)\) algorithm is higher than that of the \(\mathcal{O}\left({a}_{max}N\right)\) method, and close (till NCCC computation) to that of the FFT-based template matching on the MSLS 2008 data, and that \(\mathcal{O}\left({a}_{max}N\right)\) is the fastest of the three on the MSLS 2008 data. These results, which contradict those on the synthetic data, are because NCCC was computed for a set of only 8 different radii for both the \(\mathcal{O}\left({a}_{max}N\right)\) and FFT methods and this loop-based operation was proven to be faster than the computation of 10 more convolutions for optimizing the template radius in the \(\mathcal{O}\left(N\right)\) algorithm. In contrast, for a large set of template radii as considered in the experiments on synthetic data, the \(\mathcal{O}\left(N\right)\) method outperformed the others. The CPU times of the MSSEG 2016 experiments were very close for the three algorithms, since for small images, the gap between \(N\mathrm{log}N\) and \(N\) becomes narrow. Overall, the \(\mathcal{O}\left(N\right)\) method is expected to run faster for large MRI volumes, or for the detection of larger lesions. Ex vivo imaging of human brain, which has attracted researchers to explore microstructural neuroanatomy, can produce brain images with (0.1 mm)3 isometric voxel resolution30. As the resolution increases, the ROI volume size increases proportionally, necessitating the reduction in computational complexity of the algorithms for processing very large MRI volumes to automatically detect lesions. Note that in real clinical scenario, we do not need to test the detection for top 5000 lesions, instead it would be for fewer numbers depending on the characteristics of the lesions and the clinician’s needs. Also note that the computation of the \(a\)-maximized NCCC can be seen to take up only a small fraction of the entire runtime (see Table 1), and has more variability.

Prior studies on MS lesions, as discussed in the next section, however, generally segment the lesions and report the detection accuracy from their automatic delineation. Therefore, our approach of lesion detection is different from most available tools. During the performance evaluation, we observed the monotonically increasing characteristics of both TPF and FPF with \({N}_{Top}\) for all three methods (except LPA). Thus, a high sensitivity can be achieved with large \({N}_{Top}\), which can be interpreted from Fig. 3. The mean TPF vs. FPF plots (Fig. 3) show very similar performance by FFT and our \(\mathcal{O}\left({a}_{max}N\right)\) methods over both MRI databases, similar to synthetic data. We can also infer from Fig. 3 that the performance graph of the \(\mathcal{O}\left(N\right)\) method is slightly better than FFT and \(\mathcal{O}\left({a}_{max}N\right)\), making this method a coherent assistive tool. In addition, the practicality of linear-time template matching can be well appreciated in processing of large 3D volumes with a high b (e.g., b = 50 showed the efficiency of \(\mathcal{O}\left(N\right)\) in synthetic data). Throughout the experiment with the \(\mathcal{O}\left({a}_{max}N\right)\) and \(\mathcal{O}\left(N\right)\) methods, we used an approximated 3D Gaussian template, which is almost radially symmetric, and expected to work robustly with spherical or close-to-spherical lesions. On the contrary, the MS lesions are arbitrary in shape and size (largely varying)31. Therefore, the algorithms might not detect the exact centers of such arbitrarily shaped lesions in FLAIR images. This reduced the overlap between the detected region and the gold standard, even when the lesions were correctly localized. This may be one of the reasons behind the rise of false positives. In the context of having large false positives, we would like to mention the work of Cabezas et al.9. Their unsupervised methodology deeply depends on tissue priors to estimate the mean and SD of the GM distribution in FLAIR images, for defining a threshold in order to segment the MS lesions. They reported a mean FPF higher than 90% (with close to 40% of mean TPF), as accuracy of lesion detection, without using any false detection refinement step. So, the presence of high FPF seems to be common among the performance of the algorithms, especially targeted to lesion detection from MS subjects, which we will discuss more in next section. Contrary to the unsupervised approaches, the prior knowledge learnt by LPA during the training phase seems to have been effective in improving the performance of this supervised-learning method. This analysis helps in tallying the performance of supervised and unsupervised techniques (Fig. 3). In template-matching techniques, a general trend is to fix a threshold over the spectrum of NCCC values so as to evaluate the performance. However, the optimal NCCC threshold may depend on acquisition parameters, and fixing it might lead to the detection performance strongly depending on the threshold. In contrast, our strategy of using maximum NCCC from \({N}_{Top}\) detection is not threshold-dependent.

Visualization of the detected lesions

We display the results for top 30 out of 5000 detections in Figs. 4 and 5. Blue, red, and green circles represent the FFT, \(\mathcal{O}\left({a}_{max}N\right)\) and \(\mathcal{O}\left(N\right)\) methods, respectively, on the 2D axial slices of the FLAIR volumes. We present the slices in order of lesion detection. (There may be slice repetition due to multiple detections in the same slice.) Thick circles indicate that the centers of the detected spheres are located in the shown slice. Thin circles represent the intersections between the shown slice and the spheres representing top-30 detected lesions centered in nearby slices. Therefore, the lack of a circle around a lesion means that it has not been in the top 30 (but the algorithm may still have detected it). A limitation that we observed for all techniques is the multiple detection of parts of a large lesion, such as those scattered around the lateral ventricles or on the corpus callosum, which are common in MS31. When lesions of various sizes and shapes appeared mainly in the WM31, multiple lesions were detected over the same large lesion because of the small b that we used. This issue may not be expected to arise in metastatic-lesion detection. The selection of a large b, on the other hand, may cause the algorithm to miss smaller lesions. We can observe the presence of false lesions (cyan circles in Figs. 4 and 5) included in the top 30 detection for all methods. False positives are mostly in the GM, which is hyper-intense in FLAIR images and sometimes confused with lesion by the algorithms. This is the main cause of high FPF in template-matching algorithms. We also found false positives in other brain regions such as the splenium of corpus callosum, hippocampus, and medulla oblongata. The estimated lesion radius in the \(\mathcal{O}\left(N\right)\) method is comparatively less than FFT and \(\mathcal{O}\left({a}_{max}N\right)\) methods, which is expected from the gold-standard-to-detected ratio radius (1.96 ± 0.11) obtained on synthetic data experiment.

Comparison with existing literature

In this section, we first compare the performance among the existing state-of-the-art methods dedicated for MS lesion segmentation with MSLS 2008 data. We have considered some of the well performing techniques of the last decade (summarized in Tables 2 and 3). Challenge organizers evaluated true positive rate (TPR) and false positive rate (FPR) (with the same definition as TPF and FPF used here) to determine the lesion detection ability29 on the test data. Note that the FPR is defined differently here from its standard definition32. The lesion voxels are only a small fraction of the MRI volume, thus the standard formula makes the FPR negligible (impactful for small \({N}_{Top}\)) due to uninformatively high true negatives. In this study, our methods were evaluated on the MSLS 2008 training data with respect to the BCH gold-standards only (performance of existing methods are presented in Table 3 against the BCH gold-standards) due to the unavailability of UNC (University of North Carolina, NC, USA) delineations for all 20 cases. The positive predictive value (PPV) signifies the ratio of the number of true detections to all detections33.

In this study, we intended to develop an algorithm for fast and automatic detection of brain lesions, as opposed to determining lesion contours (but still estimating the radius of the detected lesions). In most of the existing works, multi-contrast MR images were considered to delineate lesions6, with the exception of some methods that used only FLAIR images (as we did). Using multiple MRI sequences is attractive as it provides a larger feature space than using a single sequence, therefore multi-channel MR images are widely used in machine leaning and deep neural networks. In this context, our template matching algorithms, focusing on estimating lesion center with an approximated radius, are fundamentally different from the segmentation approaches. During literature review, we observed that Weiss et al. and Manjon et al. avoided computing the whole image to possibly reduce the computational burden, instead they downsampled the image38 or used candidate ROIs36. We found that MS lesions were detected as regions with outlier intensity values compared to the healthy brain tissue in many works40,41. Some research groups designed two-stage segmentation tasks; they first determine the putative lesion voxels with the help of various techniques such as unsupervised mixture models42, estimation of lesion and tissue label using Markov random field (MRF)43, cascaded convolutional neural network (CNN)44, etc., and then segment the lesions by classifying the voxels. In another two-step approach, brain tissues, mainly WM, GM, and CSF, were segmented using different techniques such as atlas-based EM37, Gaussian mixture model (GMM)45, SPM8/12 segmentation algorithm46, etc., before delineating the lesion while making use of the segmented tissues. These multistage approaches generally require multi-contrast MRI. Our proposed techniques compute the image at the original resolution and no spatial prior information on the tissue or lesion or candidate ROIs needs to be extracted; instead, it can directly localize the lesion region based on the NCCC measures, making it a single-stage method. Another key point we observed in the existing literature is the inclusion of post-processing operations, which is a common practice to refine the results and reduce false positives. The presence of flow artifacts in the FLAIR sequence creates hyperintensity in the CSF space, thus sometimes mimicking the lesion53,56. In addition, the hyperintense border voxels at the GM-WM junction may result in some false positives53,57. As a remedy, various post-processing operators have been adopted, such as morphological operations37, setting a minimum lesion volume46,53,58, connectivity rules, neighborhood similarity46, etc., to reduce false detections and improve FPR and PPV. In this study, we did not perform any post-processing, and only evaluated the raw outcome of the proposed algorithms. The performance of various supervised34,35,36 (Sup. Seg.) and unsupervised37,38,39 (UnSup. Seg.) segmentation techniques, evaluated on MSLS 2008 training data, are shown in Table 2. We also report the results of the proposed methods and the FFT baseline approach for four different values of TPF. One can see that \(\mathcal{O}\left({a}_{max}N\right)\) method’s performance is similar to the FFT approach, consistent with the synthetic-data experiments. The \(\mathcal{O}\left(N\right)\) method seems to perform slightly better than the other two template matching methods. But all three methods yield poor PPV (due to high FPF). Without the post-processing step for MS lesion detection, unsupervised detection has previously shown to result in high FPF9. Besides the fact that a single image (as opposed to three varying-contrast images) was used here, overlapping lesion intensity, flow artifacts, and typical shape and size of MS lesions are likely causes of high FPF, when lesions are detected from FLAIR images without refinement strategies. Having no user tunable learning parameters, as opposed to (supervised or unsupervised) segmentation approaches, the tested unsupervised detection approaches are not meant to be directly compared to the segmentation approaches presented in Tables 2 and 4 (discussed below), especially given that a detection method is designed to locate—but not delineate—a lesion. Nonetheless, to paint a broad picture of the performances and applicability of existing works, we present all methods in the tables side by side. We also observe from Table 2 that both TPR and PPV are low for all methods, which emphasizes the presence of high false positive with poor lesion detection ability. Table 3 presents a review of the performance of existing supervised36,42,43,44,47,48,49,50,51,52 (Sup. Seg.) and unsupervised37,45,46,53,54,55 (UnSup. Seg.) segmentation techniques on the test dataset. The values presented in Tables 2 and 3 were retrieved from the literature and leaderboard results (http://www.ia.unc.edu/MSseg/re-sults_table.php), and show that high FPR is very common among the methods even when the computation is done with multi-contrast MRI. Ghribi et al.45 pointed out the poor quality of MR images in MSLS 2008 as the reason behind the high FPR of their method. As expected, supervised learning methods performed well in both tables compared to unsupervised techniques.

The performance summary of some of the existing works on 15 training images of MSSEG 2016 is presented in Table 4. The table includes both supervised44,59,60,61,62,63,64,65,66,67,68,69 and unsupervised58,70,71 methods. In the MSSEG 2016 challenge, the lesion detection capability was evaluated using various performance metrics, among which we have chosen to compare the Dice coefficient (DICE), TPR, and PPV6 in Table 4. From the table, we can see that different groups explored both multi-channel and single FLAIR MRI for processing. Beaumont et al.58 did not consider lesions appearing at the edges of the brain mask, not dominantly located in the WM, etc., for final refinement of lesion boundaries. In their following work, Beaumont et al.70 reported augmented accuracy after implementing post-processing technique. Knight and Khademi71 showed 3D Gaussian filtering with 0.5 SD to be efficient for noise removal in FLAIR. In this work, we also used the Gaussian filter, however with a higher SD (2.0) in computing local image statistics. They also used three false-positive reduction strategies based on lesion volume, distance from the edge of the brain, and distance from midline of the brain. In other works59,60, handcrafted features and post-processing were used for MS lesion segmentation. Most groups favored working with multi-contrast MRI6 for either designing machine learning algorithms or obtaining tissue prior, and followed with rule-based or morphological-operator-driven post-processing. Table 4 also includes the performances of the proposed and the baseline FFT detection approaches, similarly to MSLS 2008. The results on MSSEG 2016 show the same trend, i.e. the performance of the \(\mathcal{O}\left({a}_{max}N\right)\) and FFT methods are very close, and the \(\mathcal{O}\left(N\right)\) method achieves slightly better accuracy. In general, the sensitivity (TPR) of lesion detection seems moderate with a high false detection in both databases. The lower DICE in the three detection methods that we tested (compared to segmentation methods in the literature) is mainly attributed to the facts that: (1) we used a single image (as opposed to multiple images with different contrasts), (2) we performed no post-processing, (3) we employed no supervision, but most importantly (4) our goal was to detect (find the rough location of) as opposed to segment (delineate) the lesion. As such, the segmentation performance metrics used for the three detection methods should be compared among themselves, but not directly to those of the segmentation methods. The organizers of MSSEG 2016, Commowick et al.6, summarized that the deep-learning based approaches showed promise in narrowing the gap between manual and automatic segmentation of MS lesions. As our proposed method is a fully automatic unsupervised detection technique, the comparison with machine learning (deep neural networks) is not so informative due to the fundamental differences with supervised methods.

In summary, the proposed template matching algorithms do not require tissue prior or any lesion-specific information. They are fully automatic and unsupervised models that can detect lesions from the FLAIR sequence with a lower computational complexity than the conventional FFT-based template matching. We also did not apply any post-refinement approaches to remove the false positives; however, false lesions can be ruled out during the review by the clinician. In lesion detection, we give a higher priority to avoiding false negatives than false positives.

Conclusions

We have proposed a new mathematical framework to compute a radius-optimized normalized cross-correlation coefficient (NCCC) similarity measure for 3D template matching, with application for automatic lesion detection. The key feature of our method is its low linear-time computational complexity, allowing for fast detection. Our alternative way to compute the NCCC exploits the approximation of Gaussian smoothing with multiple convolutions with a box kernel. We further proposed an analytical solution for the template radius that replaces the costly exhaustive search, thereby achieving the computational complexity of \(\mathcal{O}\left(N\right)\), which is not possible with the FFT-based method. The proposed technique provides a new approach to implementing fast template matching for object detection from images. We tested our lesion-detection method on synthetic images as well as two real MRI databases of multiple sclerosis lesions, and compared its performance with existing state-of-the-art methods and the conventional FFT-based template matching algorithm. Future work includes extending our approach for anisotropic-voxel images, and also imaging modalities beyond MRI.

Data availability

The proposed methods were implemented in the MATLAB platform. The codes can be made available upon request. The datasets used in this study for evaluating the proposed methods are available to public from http://www.ia.unc.edu/MSseg/index.html (MICCAI MSLS 2008) and https://portal.fli-iam.irisa.fr/msseg-challenge/data (MICCAI MSSEG 2016).

References

Clinic, C. Brain Lesions, (Accessed 12 September 2019); https://my.clevelandclinic.org/health/diseases/17839-brain-lesions (2019).

WebMD. Brain Lesions: Causes, Symptoms, Treatments, (Accessed 12 September 2019); https://www.webmd.com/brain/brain-lesions-causes-symptoms-treatments#1 (2019).

Egger, C. et al. MRI FLAIR lesion segmentation in multiple sclerosis: Does automated segmentation hold up with manual annotation?. NeuroImage Clin. 13, 264–270 (2017).

Schmidt, P. et al. An automated tool for detection of FLAIR-hyperintense white-matter lesions in multiple sclerosis. Neuroimage 59, 3774–3783 (2012).

Schmidt, P. Bayesian inference for structured additive regression models for large-scale problems with applications to medical imaging, PhD dissertation, IMU (2017).

Commowick, O. et al. Objective evaluation of multiple sclerosis lesion segmentation using a data management and processing infrastructure. Sci. Rep. 8, 1–17 (2018).

Lu, S., Lu, Z. & Zhang, Y.-D. Pathological brain detection based on AlexNet and transfer learning. J. Comput. Sci. 30, 41–47 (2019).

Lu, S., Wang, S.-H. & Zhang, Y.-D. Detection of abnormal brain in MRI via improved AlexNet and ELM optimized by chaotic bat algorithm. Neural Comput. Appl. 1–13. https://doi.org/10.1007/s00521-020-05082-4 (2020).

Cabezas, M. et al. Automatic multiple sclerosis lesion detection in brain MRI by FLAIR thresholding. Comput. Methods Programs Biomed. 115, 147–161 (2014).

Ambrosini, R. D., Wang, P. & O’Dell, W. G. Computer-aided detection of metastatic brain tumors using automated 3-D template matching. J. Magn. Reson. Imaging 31, 85–93. https://doi.org/10.1002/jmri.22009 (2010).

Narasimha, R. et al. Evaluation of denoising algorithms for biological electron tomography. J. Struct. Biol. 164, 7–17 (2008).

Koley, S., Chakraborty, C., Mainero, C., Fischl, B. & Aganj, I. A fast approach to automatic detection of brain lesions. International Workshop on Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries 52–61 (Springer, 2016).

Tourassi, G. D., Vargas-Voracek, R., Catarious, D. M. & Floyd, C. E. Computer-assisted detection of mammographic masses: A template matching scheme based on mutual information. Med. Phys. 30, 2123–2130 (2003).

Lochanambal, K., Karnan, M. & Sivakumar, R. In 2010 Second International Conference on Communication Software and Networks. 339–342 (IEEE).

Farag, A. A., El-Baz, A., Gimel'farb, G. & Falk, R. In Proceedings of the 17th International Conference on Pattern Recognition, 2004. ICPR 2004. 738–741 (IEEE).

Osman, O., Ozekes, S. & Ucan, O. N. Lung nodule diagnosis using 3D template matching. Comput. Biol. Med. 37, 1167–1172 (2007).

Wang, P., DeNunzio, A., Okunieff, P. & O’Dell, W. G. Lung metastases detection in CT images using 3D template matching. Med. Phys. 34, 915–922 (2007).

Moltz, J. H., Schwier, M. & Peitgen, H.-O. In 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 843–846 (IEEE).

Warfield, S. K., Kaus, M., Jolesz, F. A. & Kikinis, R. Adaptive, template moderated, spatially varying statistical classification. Med. Image Anal. 4, 43–55 (2000).

Farjam, R., Parmar, H. A., Noll, D. C., Tsien, C. I. & Cao, Y. An approach for computer-aided detection of brain metastases in post-Gd T1-W MRI. Magn. Reson. Imaging 30, 824–836 (2012).

Yang, S. et al. Computer-aided detection of metastatic brain tumors using magnetic resonance black-blood imaging. Investig. Radiol. 48, 113–119 (2013).

Wang, X.-F., Gong, J., Bu, R.-R. & Nie, S.-D. Life System Modeling and Simulation 50–61 (Springer, 2014).

Muñoz, A., Ertlé, R. & Unser, M. Continuous wavelet transform with arbitrary scales and O (N) complexity. Signal Process. 82, 749–757 (2002).

Hou, H. & Andrews, H. Cubic splines for image interpolation and digital filtering. IEEE Trans. Acoust. Speech Signal Process. 26, 508–517 (1978).

http://mathworld.wolfram.com/SincFunction.html. (Accessed 24 August 2019); Sinc Function. (2016).

Kogan, S. A note on definite integrals involving trigonometric functions. (1999). (Accessed 18 January 2016)

Cooley, J. W. & Tukey, J. W. An algorithm for the machine calculation of complex fourier series. Math. Comput. 19, 297–301. https://doi.org/10.2307/2003354 (1965).

Johnston, B., Atkins, M. S., Mackiewich, B. & Anderson, M. Segmentation of multiple sclerosis lesions in intensity corrected multispectral MRI. IEEE Trans. Med. Imaging 15, 154–169 (1996).

Styner, M. et al. 3D segmentation in the clinic: A grand challenge II: MS lesion segmentation. Midas J. 2008, 1–6 (2008).

Edlow, B. L. et al. 7 Tesla MRI of the ex vivo human brain at 100 micron resolution. BioRxiv. 649822 (2019).

Barkhof, F. & Scheltens, P. Imaging of white matter lesions. Cerebrovasc. Dis. 13, 21–30 (2002).

Ruz, G. A., Estevez, P. A. & Perez, C. A. A neurofuzzy color image segmentation method for wood surface defect detection. For. Prod. J. 55, 52–58 (2005).

Fletcher, R. H., Fletcher, S. W. & Fletcher, G. S. Clinical Epidemiology: The Essentials. (Lippincott Williams & Wilkins, 2012).

Geremia, E. et al. In International Conference on Medical Image Computing and Computer-Assisted Intervention. 111–118 (Springer).

Brosch, T. et al. In International Conference on Medical Image Computing and Computer-Assisted Intervention. 3–11 (Springer).

Manjón, J. V. et al. MRI white matter lesion segmentation using an ensemble of neural networks and overcomplete patch-based voting. Comput. Med. Imaging Graph. 69, 43–51 (2018).

Souplet, J.-C., Lebrun, C., Ayache, N. & Malandain, G. In MICCAI-Multiple Sclerosis Lesion Segmentation Challenge Workshop (2008).

Weiss, N., Rueckert, D. & Rao, A. In International Conference on Medical Image Computing and Computer-Assisted Intervention. 735–742 (Springer).

Wang, R. et al. Automatic segmentation and volumetric quantification of white matter hyperintensities on fluid-attenuated inversion recovery images using the extreme value distribution. Neuroradiology 57, 307–320 (2015).

Van Leemput, K., Maes, F., Vandermeulen, D., Colchester, A. & Suetens, P. Automated segmentation of multiple sclerosis lesions by model outlier detection. IEEE Trans. Med. Imaging 20, 677–688 (2001).

Prastawa, M. & Gerig, G. Automatic MS lesion segmentation by outlier detection and information theoretic region partitioning. Grand Chall. Work. Mult. Scler. Lesion Segm. Chall. 1–8 (2008).

Jerman, T., Galimzianova, A., Pernuš, F., Likar, B. & Špiclin, Ž. BrainLes 2015. 45–56 (Springer, 2015).