Abstract

In this note we give a combinatorial solution to the general case of the puzzle known as “bridge and torch problem”. It is about finding the shortest time needed for n people to cross a bridge knowing that at most two persons can walk together on the bridge (at the speed of the slowest) and, because it is dark, a torch is needed for each crossing (and they have only one torch). The puzzle can be seen (and solved) as a discrete optimization problem.

1 Introduction

The bridge and torch problem is a well-known puzzle. Four people have to cross a narrow bridge at night. The bridge can be crossed by at most two people at the same time. Because it is dark, a torch must be used at every crossing and they have only one, which must be walked back and forth (it cannot be thrown). Each person walks at a different speed, and, when two people cross the bridge together, they must walk at the slower person’s pace. We suppose that person i takes time ti to cross the bridge alone. Given the times ti for each i = 1, … , 4, we have to find the shortest time needed to get all the people across the bridge. In this form, with concrete values ti, the problem first appeared in 1981. An elementary approach in a combinatorial context is given in [5]. Erwig [3] used the problem to illustrate that a modern functional programming language like Haskell is at least as suitable as Prolog for programming search problems. Rote [4] gives an extensive bibliography and provides a method for solving the general case with n people by transforming the problem into a special kind of weighted degree-constrained subgraph problem.

Backhouse [1] considered the most general case, in which not only the number of people and the times ti are input parameters, but also the capacity C of the bridge (C is the maximum number of people that are allowed to walk together on the bridge; C = 2 in the classical problem). In [1] and [2], dynamic-programming and integer-programming algorithms for solving the “capacity-C torch problem” are developed. It is also proved there that the worst-case time complexity of the dynamic-programming algorithm is proportional to the square of the number of people.

In the present paper we propose a combinatorial solution for the standard capacity case C = 2 and arbitrary number of people, n ≥ 3. The main result of the paper is Theorem 1 which states a closed formula for the minimum time needed for crossing. In order to complete the proof, we define a preorder relation on the set of possible solutions (represented as (0,1) matrices) and find the general form of an optimal solution. Then, the appropriate solution for each set of parameters ti,i = 1, … , n is given.

2 The Optimization Problem

Our integer constrained optimization problem can be formulated as follows.

Given the parameters t1,t2, … , tn, find the minimum of the function

$$\alpha_{1}t_{1}+\alpha_{2}t_{2}+\cdots+\alpha_{n}t_{n},$$

where α1,α2, … , αn are nonnegative integers which must satisfy certain constraints.

For simplicity, we can assume that all the times ti are distinct, and

$$t_{1}<t_{2}<\cdots<t_{n}.$$

By continuity, the results also hold when the times are not all distinct.

As Backhouse [1] noticed, the interesting part of the puzzle is that what seems to be “obvious” may be wrong. For example, our intuition says that the optimal solution would be to let the fastest person accompany the others, one by one, and return with the torch, but this is not true for all values of ti. For n = 4, this solution corresponds to the sequence (1, 2)(1)(1, 3)(1)(1, 4), which has a total crossing time of

$$t_{2}+t_{1}+t_{3}+t_{1}+t_{4}=2t_{1}+t_{2}+t_{3}+t_{4}.$$

On second thought, however, we may try to minimize the total time of the forward trips instead of the time lost in bringing the torch back. So we may wonder whether the sequence (1, 2)(1)(3, 4)(2)(1, 2), with the total time

$$t_{2}+t_{1}+t_{4}+t_{2}+t_{2}=t_{1}+3t_{2}+t_{4},$$

would be a better choice. The answer is that the first solution is optimal if t1 + t3 < 2t2 and the second one is the best when t1 + t3 > 2t2. If t1 + t3 = 2t2, then both solutions are optimal.
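The comparison can be checked numerically. The following is a minimal sketch (the names `sequence_time`, `ESCORT`, `PAIRED` and `compare` are illustrative and do not come from the paper) that evaluates both sequences for n = 4 by charging each move the time of its slowest walker.

```python
def sequence_time(moves, t):
    """Total time of a sequence of moves.

    `moves` is a list of tuples of 1-based person indices and `t` maps a
    person index to that person's crossing time. Each move costs the time
    of its slowest participant.
    """
    return sum(max(t[i] for i in move) for move in moves)


# The two candidate sequences for n = 4 discussed in the text.
ESCORT = [(1, 2), (1,), (1, 3), (1,), (1, 4)]  # fastest person escorts everyone
PAIRED = [(1, 2), (1,), (3, 4), (2,), (1, 2)]  # the two slowest cross together


def compare(t1, t2, t3, t4):
    """Return (time of ESCORT, time of PAIRED) for the given crossing times."""
    t = {1: t1, 2: t2, 3: t3, 4: t4}
    return sequence_time(ESCORT, t), sequence_time(PAIRED, t)


print(compare(1, 2, 5, 10))  # t1 + t3 > 2*t2: the second sequence is faster
print(compare(1, 4, 5, 10))  # t1 + t3 < 2*t2: the first sequence is faster
print(compare(1, 3, 5, 10))  # t1 + t3 = 2*t2: both take the same time
```

The difference between the two totals is t1 + t3 − 2t2 with the sign reversed, which is why the threshold depends only on t1, t2 and t3.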

3 Optimal Matrices

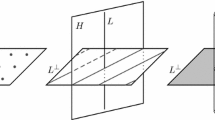

A very intuitive result which is true, as Rote proved ([4], Lemma 1), is that an optimal solution consists of n − 1 forward moves (made by two persons) and n − 2 backward moves (a single person returns with the torch). In what follows, we will write a solution as a (0,1) matrix A of dimensions n × (2n − 3), with all the odd columns of the form A(2k− 1) = ei + ej with i < j and all the even columns of the form A(2k) = eh (e1,e2, … , en denote the columns of the identity matrix In). For instance, in the case n = 4, the solutions (1, 2)(1)(1, 3)(1)(1, 4) and (1, 2)(1)(3, 4)(2)(1, 2) are represented, respectively, by the matrices

$$\begin{pmatrix}1&1&1&1&1\\1&0&0&0&0\\0&0&1&0&0\\0&0&0&0&1\end{pmatrix}\quad\text{and}\quad\begin{pmatrix}1&1&0&0&1\\1&0&0&1&1\\0&0&1&0&0\\0&0&1&0&0\end{pmatrix}.$$

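Of course, not every (0, 1) matrix of the above form is a solution. In order to characterize the set of possible solutions we define the column vectors

$$\Sigma_{A}(k)=\sum\limits_{j=1}^{k}(-1)^{j+1}A^{(j)},\quad k=1,2,\ldots,2n-3,$$

so that the i-th entry of ΣA(k) records whether person i is on the far side of the bridge after the first k moves. A possible solution must verify the condition

$$\Sigma_{A}(k)\in\{0,1\}^{n},\quad k=1,2,\ldots,2n-3. \tag{1}$$

We denote by \(\mathcal {A}\) the set of all the matrices as above that verify the condition (1).

For k = 1, 2, … , 2n − 3, if ΣA(k) = (s1,s2, … , sn)T, we denote by SA(k) the set of all i ∈ {1, … , n} such that si = 1 and by \(\bar {S}_{A}(k)\) the complementary set. By mathematical induction,

$$|S_{A}(2k-1)|=k+1,\ k=1,\ldots,n-1,\qquad |S_{A}(2k)|=k,\ k=1,\ldots,n-2, \tag{2}$$

hence ΣA(2n − 3) = (1,1, … , 1)T.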
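The feasibility condition can be illustrated with a short sketch. It assumes that ΣA(k) is the alternating partial sum of the first k columns (forward moves add a column, backward moves subtract it), so that a schedule is admissible exactly when every partial sum has entries in {0, 1}. The function names are hypothetical and chosen only for this example.

```python
def columns_from_moves(moves, n):
    """Columns of the (0,1) matrix: column k is the indicator vector of move k."""
    return [[1 if i in move else 0 for i in range(1, n + 1)] for move in moves]


def partial_sums(columns):
    """Alternating partial sums (assumed form of Sigma_A(k)).

    Odd columns (forward trips) are added, even columns (returns) subtracted.
    """
    state = [0] * len(columns[0])
    sums = []
    for k, col in enumerate(columns, start=1):
        sign = 1 if k % 2 == 1 else -1
        state = [s + sign * c for s, c in zip(state, col)]
        sums.append(state)
    return sums


def is_feasible(moves, n):
    """Check the shape (2n-3 moves, pairs forward, singles back) and the 0/1 condition."""
    if len(moves) != 2 * n - 3:
        return False
    for k, move in enumerate(moves, start=1):
        size = 2 if k % 2 == 1 else 1
        if len(move) != size or len(set(move)) != size:
            return False
    sums = partial_sums(columns_from_moves(moves, n))
    return all(s in (0, 1) for vec in sums for s in vec)


paired = [(1, 2), (1,), (3, 4), (2,), (1, 2)]
print(is_feasible(paired, 4))
# Number of people on the far side after each move.
print([sum(v) for v in partial_sums(columns_from_moves(paired, 4))])
# Person 3 cannot return before having crossed, so this schedule is rejected.
print(is_feasible([(1, 2), (3,), (3, 4), (1,), (1, 2)], 4))
```

An entry equal to 2 would mean that someone crosses forward twice without returning, and an entry equal to −1 that someone returns without having crossed, which is why the 0/1 test suffices in this sketch.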

For any matrix \(A=(a_{i,j})\in \mathcal {A}\), we denote by \(\mu _{A}(k)=\max \limits \{i:a_{i,k}=1\}\), k = 1, 2, … , 2n − 3. Given a vector T = (t1,t2, … , tn) such that t1 < t2 < … < tn, we define

$$\varphi_{T}(A)=\sum\limits_{k=1}^{2n-3}t_{\mu_{A}(k)}. \tag{3}$$

Our optimization problem is to minimize the sum φT(A) for \(A\in \mathcal {A}\). Let \(\mathcal {T}\) be the set of all the vectors \((t_{1},t_{2},\ldots ,t_{n})\in \mathbb {R}^{n}\) with t1 < … < tn.
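As a small illustration, the sketch below evaluates the objective for the two n = 4 matrices that represent the sequences (1, 2)(1)(1, 3)(1)(1, 4) and (1, 2)(1)(3, 4)(2)(1, 2). It assumes that φT(A) is the sum over all columns k of the time of the slowest person in that column, that is, of t indexed by μA(k). The names `mu`, `phi`, `A_ESCORT` and `A_PAIRED` are illustrative.

```python
def mu(column):
    """Largest 1-based row index holding a 1, i.e. the slowest walker of the move."""
    return max(i for i, a in enumerate(column, start=1) if a == 1)


def phi(T, A):
    """Assumed objective: sum over the columns k of A of T[mu_A(k)].

    `A` is given as a list of rows; `T` is the increasing tuple (t1, ..., tn).
    """
    columns = zip(*A)  # transpose the rows into columns
    return sum(T[mu(col) - 1] for col in columns)


# Columns: e1+e2, e1, e1+e3, e1, e1+e4
A_ESCORT = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1],
]

# Columns: e1+e2, e1, e3+e4, e2, e1+e2
A_PAIRED = [
    [1, 1, 0, 0, 1],
    [1, 0, 0, 1, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]

T = (1, 2, 5, 10)
print(phi(T, A_ESCORT), phi(T, A_PAIRED))
```

Because the times are increasing, the largest row index in a column always identifies the slowest person of that move, so only μA(k) is needed to evaluate a column.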

Definition 1

A matrix \(A\in \mathcal {A}\) is said to be optimal if there exists \(T\in \mathcal {T}\) such that φT(A) ≤ φT(B) for all \(B\in \mathcal {A}\).

For \(A\in \mathcal {A}\) and a permutation σ ∈ S2n− 3, we denote by Aσ the matrix obtained by permuting the columns of A: \(A_{\sigma }^{(k)}=A^{(\sigma (k))}\) for k = 1, 2, … , 2n − 3. We define the following binary relations on the set \(\mathcal {A}\):

1. We say that A is better than B, A ≺ B, if there exists a permutation σ ∈ S2n− 3 such that \(A_{\sigma }\in \mathcal {A}\),

$$\mu_{A}(\sigma(k))\le\mu_{B}(k),\quad k=1, 2,\ldots,2n-3,$$

and there exists p ∈ {1, … , 2n − 3} such that μA(σ(p)) < μB(p).

2. We say that A is similar to B, \(A\sim B\), if there exists a permutation σ ∈ S2n− 3 such that \(A_{\sigma }\in \mathcal {A}\) and

$$\mu_{A}(\sigma(k))=\mu_{B}(k),\quad \text{for } k=1, 2,\ldots,2n-3.$$

3. If μA(k) ≤ μB(k) for all k = 1, 2, … , 2n − 3, we say that A is better than or similar to B, A ≼ B.

As one can easily notice, “≼” is a preorder relation (reflexive and transitive) and “∼” is an equivalence relation (reflexive, symmetric and transitive).

Remark 1

If \(A\sim B\) then φT(A) = φT(B) for all \(T\in \mathcal {T}\). If A ≺ B then φT(A) < φT(B) for all \(T\in \mathcal {T}\).

As a consequence, if there exists \(B\in \mathcal {A}\) such that B ≺ A, then A is not an optimal matrix.

Remark 2

If \(A\in \mathcal {A}\) is an optimal matrix such that A(2k) ∈ {e1,e2} for every k = 1, 2, … , n − 2, then all the columns of A (except the last one) come either in pairs of the form A(2k− 1) = ej + e1 and A(2k) = e1, or in quartets of the form A(2k− 1) = e1 + e2, A(2k) = e1, A(2k+ 1) = ei + ej (i > j > 2), A(2k+ 2) = e2 (here e1 and e2 may be swapped). The last column has the form A(2n− 3) = e1 + ej with j ≥ 2.

The next lemma shows that, in an optimal matrix, only e1 or e2 can occur as even columns.

Lemma 1

In an optimal matrix, \(A\in \mathcal {A}\), the even columns are of the form A(2k) = e1 or A(2k) = e2, for every k = 1, 2, … , n − 2.

Proof

We prove that in an optimal matrix, A(2n− 2k) ∈ {e1,e2} by mathematical induction on k = 2, 3, … , n − 1.

For k = 2, A(2n− 4) represents the last backward trip. By Eq. (2), |S2n− 5| = n − 1, so S2n− 5 contains at least one i ∈ {1, 2}. If A(2n− 4) = ej with j > 2, we consider the matrix B obtained by replacing ej by ei in the last two columns of A. Obviously, \(B\in \mathcal {A}\) and B ≺ A, so, by Remark 1, A would not be an optimal matrix. Hence, A(2n− 4) ∈ {e1,e2}.

Now, we suppose that any optimal matrix has A(2n− 2h) ∈ {e1,e2} for h = 2, … , k − 1 and prove that this is true for A(2n− 2k) as well. Suppose that A(2n− 2k) = ej with j > 2. If S2n− 2k− 1 contains at least one i ∈ {1, 2}, we look at the columns A(p), p = 2n − 2k + 1, … , 2n − 3 to find out which one of ei and ej comes first. If ej is the first one (of course, it appears in an odd column A(2p+ 1)), we consider the matrix B obtained by replacing ej with ei in the columns (2n − 2k) and (2p + 1) of A. Then \(B\in \mathcal {A}\) and B ≺ A, which is impossible since A is an optimal matrix. If ei is the first one (A(2p) = ei), we consider the matrix B obtained by interchanging ej and ei in the columns (2n − 2k) and (2p) of A. Then \(B\in \mathcal {A}\) and \(B\sim A\), so B is an optimal matrix whose column B(2p)∉{e1,e2}, which is not possible, by the induction hypothesis.

In the other case, when \(\{1,2\}\subseteq \bar {S}_{2n-2k-1}\), A(2n− 2k− 1) = er + es with r,s > 2. Since A is optimal, it follows that the columns A(2n− 2k+ 1), … , A(2n− 3) form an optimal matrix for the set \(\bar {S}_{2n-2k}\). By the induction hypothesis and Remark 2, since \(j\in \bar {S}_{2n-2k}\), we have either A(2m− 1) = ej + e1, A(2m) = e1, or A(2m− 1) = e1 + e2, A(2m) = e1(e2), A(2m+ 1) = ej + el, A(2m+ 2) = e2(e1) for some m > n − k. In the first situation we consider the matrix B with the columns B(p) = A(p) for all p ≤ 2n − 2k − 2 or p > 2m, B(2n− 2k− 1) = e1 + e2, B(2n− 2k) = e1, B(2n− 2k+ 1) = A(2n− 2k− 1), B(2n− 2k+ 2) = e2 and B(p) = A(p− 2) for all p = 2n − 2k + 3, … , 2m. Since \(B\in \mathcal {A}\) and B ≺ A, it follows that A is not optimal. In the second situation we can also construct a matrix \(B\in \mathcal {A}\) such that B ≺ A, so the conclusion is that A(2n− 2k) ∈ {e1,e2} and the lemma is proved. □

Lemma 1 proves that any optimal matrix has the structure from Remark 2. Therefore, in order to give a complete description of optimal matrices, it is sufficient to specify the form of the odd columns. Obviously, at least one odd column is of the form e1 + e2. All the other elements e3,e4, … , en have a unique occurrence in A, in an odd column which may be either of the form ei + ej with 3 ≤ i < j ≤ n, or of the form e1 + ej with 3 ≤ j ≤ n.

Lemma 2

If \(A\in \mathcal {A}\) is an optimal matrix that contains an odd column of the form A(2k+ 1) = e1 + ej with j ≥ 3, then it contains all the columns e1 + ei with i = 3,4, … , j.

Proof

We suppose that there exists an odd column of the form A(2p+ 1) = ei + eh with i ∈ {3, … , j − 1} and h ≥ 3. If h > j, we consider the matrix \(B\in \mathcal {A}\) such that B(2k+ 1) = e1 + ei, B(2p+ 1) = ej + eh and B(r) = A(r) for any r≠ 2k + 1, 2p + 1. Since μB(2k + 1) = i < j = μA(2k + 1) and μB(r) = μA(r) for any r≠ 2k + 1, it follows that B ≺ A. If i < h < j, we take the same matrix B as above, but in this case we have: μB(2k + 1) = i < h = μA(2p + 1), μB(2p + 1) = j = μA(2k + 1) and μB(r) = μA(r) for any r≠ 2k + 1, so B ≺ A, which contradicts the optimality of A. □

Remark 3

By Lemma 2 it follows that if \(A\in \mathcal {A}\) is an optimal matrix containing an odd column of the form e1 + en, then all the odd columns of A are of the form e1 + ei with i = 2,3, … , n.

Lemma 3

If \(A\in \mathcal {A}\) is an optimal matrix that contains an odd column of the form A(2k+ 1) = en + ej with j ≥ 3, then j = n − 1.

Proof

We suppose that j < n − 1. Let A(2p+ 1) = en− 1 + ei be the column containing en− 1. Consider the matrix \(B\in \mathcal {A}\) such that B(2k+ 1) = en + en− 1, B(2p+ 1) = ej + ei and B(r) = A(r) for any r≠ 2k + 1, 2p + 1. Since μB(2p + 1) < n − 1 = μA(2p + 1) and μB(r) = μA(r) for any r≠ 2p + 1, it follows that B ≺ A, which contradicts the optimality of A. Hence the lemma is proved. □

4 The Main Result

Suppose that \(A\in \mathcal {A}\) is an optimal matrix that contains the odd columns e3 + e1, e4 + e1, …, ep + e1 and has all the other odd columns either of the form e1 + e2, or ei + ej with i > j > p. It follows that n − p is even and, by repeatedly applying Lemma 3, that the columns containing ep+ 1, … , en are en + en− 1, en− 2 + en− 3, …, ep+ 2 + ep+ 1.

Now, given T = (t1,t2, … , tn) such that t1 < t2 < … < tn, the question is which value of p makes the optimal matrix A constructed as above minimize (3). More precisely, what is the condition under which the time corresponding to the set of columns e1 + e2, e1, ei + ei+ 1, e2 is shorter than the time needed for the columns e1 + ei, e1, e1 + ei+ 1, e1? Since the time in the first case is t1 + 2t2 + ti+ 1 and, in the second case, 2t1 + ti + ti+ 1, the condition is:

$$t_{1}+2t_{2}+t_{i+1}<2t_{1}+t_{i}+t_{i+1},\quad\text{that is,}\quad t_{i}>2t_{2}-t_{1}. \tag{4}$$

Let m ∈ {1, 2, … , n} be the greatest value such that tm ≤ 2t2 − t1 (obviously, m ≥ 2) and let \(k=\left \lfloor \frac {n-m}{2}\right \rfloor \), where ⌊x⌋ denotes the greatest integer smaller than or equal to x. Since the condition (4) is verified for every i = n − 1,n − 3, … , n − 2k + 1, any optimal matrix corresponding to T is formed by:

- k quartets of columns e1 + e2, e1, en− 2i + en− 2i− 1, e2, i = 0,1, … , k − 1, corresponding to the time

$$ kt_{1}+2kt_{2}+\sum\limits_{i=0}^{k-1}{t_{n-2i}} \tag{5}$$

(note that this contribution is 0 if k = 0).

- n − 2k − 2 pairs of columns e1 + ej, e1, j = 3, 4, … , n − 2k and one last column which may be e1 + e2, corresponding to the time

$$ (n-2k-2)t_{1}+t_{2}+\sum\limits_{j=3}^{n-2k}{t_{j}}. \tag{6}$$
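The construction described by the two items above can be sketched as a schedule builder. This is an illustrative sketch only: the function names are hypothetical, the input is assumed to be sorted increasingly with n ≥ 3, and the quartets are placed first, although other orderings of the same blocks give the same total time.

```python
def optimal_schedule(T):
    """Build one schedule of the form described above.

    `T` is the increasing tuple (t1, ..., tn). People are numbered from 1.
    Returns a list of moves; odd positions are forward trips, even positions returns.
    """
    n = len(T)
    t1, t2 = T[0], T[1]
    # m: greatest index with t_m <= 2*t2 - t1 (m >= 2 always holds).
    m = max(i for i in range(1, n + 1) if T[i - 1] <= 2 * t2 - t1)
    k = (n - m) // 2

    moves = []
    # k quartets: 1 and 2 cross, 1 returns, the two slowest remaining cross, 2 returns.
    for i in range(k):
        moves += [(1, 2), (1,), (n - 2 * i - 1, n - 2 * i), (2,)]
    # n - 2k - 2 pairs: person 1 escorts person j and returns.
    for j in range(3, n - 2 * k + 1):
        moves += [(1, j), (1,)]
    # Last column: persons 1 and 2 cross together.
    moves.append((1, 2))
    return moves


def schedule_time(moves, T):
    """Each move costs the time of its slowest participant."""
    return sum(max(T[i - 1] for i in move) for move in moves)


T = (1, 2, 5, 10)
plan = optimal_schedule(T)
print(plan, schedule_time(plan, T))
```

For T = (1, 2, 5, 10) the threshold 2t2 − t1 equals 3, so m = 2 and k = 1, and the schedule consists of a single quartet followed by the final crossing of persons 1 and 2.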

Hence, by adding (5) and (6), the following theorem is proved:

Theorem 1

Given T = (t1,t2, … , tn) such that t1 < t2 < … < tn, the minimum of the function (3) is

$$(n-k-2)t_{1}+(2k+1)t_{2}+\sum\limits_{j=3}^{n-2k}{t_{j}}+\sum\limits_{i=0}^{k-1}{t_{n-2i}},$$

where \(k=\left \lfloor \frac {n-m}{2}\right \rfloor \) and m ∈ {1, 2, … , n} is the greatest integer such that tm ≤ 2t2 − t1.
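The closed formula can be compared against an exhaustive search on small instances. The sketch below assumes that the minimum is the sum of the expressions (5) and (6), and checks it with a shortest-path search (Dijkstra's algorithm) over all states of the puzzle. The search is a generic verification device and not the method of the paper; the function names are illustrative.

```python
import heapq
from itertools import combinations


def closed_form(T):
    """Sum of (5) and (6) for an increasing tuple T = (t1, ..., tn), n >= 3."""
    n = len(T)
    t1, t2 = T[0], T[1]
    m = max(i for i in range(1, n + 1) if T[i - 1] <= 2 * t2 - t1)
    k = (n - m) // 2
    return ((n - k - 2) * t1 + (2 * k + 1) * t2
            + sum(T[j - 1] for j in range(3, n - 2 * k + 1))
            + sum(T[n - 2 * i - 1] for i in range(k)))


def brute_force(T):
    """Minimum crossing time by Dijkstra over (people on the start bank, torch side)."""
    n = len(T)
    full = (1 << n) - 1
    dist = {(full, 0): 0}          # torch side 0 = start bank, 1 = far bank
    heap = [(0, full, 0)]
    while heap:
        d, near, side = heapq.heappop(heap)
        if near == 0:              # everybody has crossed
            return d
        if d > dist[(near, side)]:
            continue               # stale queue entry
        bank = near if side == 0 else full & ~near
        people = [i for i in range(n) if bank >> i & 1]
        # One or two people walk with the torch to the other bank.
        groups = [(p,) for p in people] + list(combinations(people, 2))
        for g in groups:
            mask = sum(1 << i for i in g)
            state = (near ^ mask, 1 - side)
            nd = d + max(T[i] for i in g)
            if nd < dist.get(state, float("inf")):
                dist[state] = nd
                heapq.heappush(heap, (nd, state[0], state[1]))
    return None


for T in [(1, 2, 5), (1, 2, 5, 10), (1, 4, 5, 10), (2, 3, 4, 7, 8), (1, 2, 5, 10, 12, 20)]:
    print(T, closed_form(T), brute_force(T))
```

The search visits at most 2^(n+1) states, so it is only practical for small n, whereas the closed formula needs a single pass over the sorted times.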

References

1. Backhouse R (2008) The Capacity-C torch problem. In: Audebaud P, Paulin-Mohring C (eds) Mathematics of program construction, MPC 2008, LNCS, Springer, vol 5133, pp 57–78

2. Backhouse R, Truong H (2015) The Capacity-C torch problem. Sci Comput Program 102:76–107

3. Erwig M (2004) Escape from Zurg: an exercise in logic programming. J Funct Program 14(3):253–261

4. Rote G (2002) Crossing the bridge at night. BEATCS 78:241–246

5. Steinegger R (2016) A systematic solution to the bridge and torch riddle. https://digitalcollection.zhaw.ch/bitstream/11475/1902/1/Steinegger_Bridge_and_Torch_Riddle_Combinatorics.pdf

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Cite this article

Jianu, M., Jianu, M. & Popescu, S.A. A Combinatorial Solution for Bridge and Torch Problem. SN Oper. Res. Forum 1, 21 (2020). https://doi.org/10.1007/s43069-020-00022-3

DOI: https://doi.org/10.1007/s43069-020-00022-3