Abstract

High-order discretizations of partial differential equations (PDEs) necessitate high-order time integration schemes capable of handling both stiff and nonstiff operators efficiently. Implicit-explicit (IMEX) integration based on general linear methods (GLMs) offers an attractive solution because these methods combine high stage and method orders with excellent stability properties. The IMEX characteristic allows stiff terms to be treated implicitly and nonstiff terms to be efficiently integrated explicitly. This work develops two systematic approaches for constructing IMEX GLMs of arbitrary order with stages that can be solved in parallel. The first approach is based on diagonally implicit multi-stage integration methods (DIMSIMs) of types 3 and 4. The second is a parallel generalization of IMEX Euler and has the interesting feature that the linear stability is independent of the order of accuracy. Numerical experiments confirm the theoretical rates of convergence and reveal that the new schemes are more efficient than serial IMEX GLMs and IMEX Runge–Kutta methods.

1 Introduction

In this work, we consider the autonomous, additively partitioned system of ordinary differential equations (ODEs)

$$ y' = f(y) + g(y), \tag{1.1} $$

where f is nonstiff, g is stiff, and \(y \in \mathbb {R}^d\). Such systems frequently arise from applying the method of lines to semidiscretize a partial differential equation (PDE). For example, processes such as diffusion, advection, and reaction all have different stiffnesses, CFL conditions, and optimal integration schemes. Implicit-explicit (IMEX) methods offer a specialized approach for solving Eq. (1.1) by treating f with an inexpensive explicit method and limiting the application of an implicit method, which is generally more expensive, to g.
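The IMEX idea can be illustrated with the simplest such scheme, IMEX Euler, which advances the solution with an explicit Euler update for f and an implicit Euler update for g. The following is a minimal sketch, assuming a hypothetical scalar linear splitting \(f(y) = \xi y\), \(g(y) = {\widehat{\xi }} y\); the names `imex_euler_step` and `solve_implicit` are illustrative and not taken from the paper.

```python
def imex_euler_step(y, h, f, solve_implicit):
    """One IMEX Euler step: y_new = y + h*f(y) + h*g(y_new)."""
    rhs = y + h * f(y)              # explicit treatment of the nonstiff part
    return solve_implicit(rhs, h)   # solves y_new - h*g(y_new) = rhs


# Hypothetical linear test splitting (an assumption for illustration only):
# f(y) = xi*y is nonstiff and g(y) = xi_hat*y is stiff.
xi, xi_hat = -1.0, -1000.0
f = lambda y: xi * y
# For linear g the implicit equation has a closed-form solution.
solve_implicit = lambda rhs, h: rhs / (1.0 - h * xi_hat)

h = 0.1
y_imex, y_explicit = 1.0, 1.0
for _ in range(10):
    y_imex = imex_euler_step(y_imex, h, f, solve_implicit)
    # Forward Euler applied to the full right-hand side f + g, for comparison.
    y_explicit = y_explicit + h * (xi + xi_hat) * y_explicit
```

With this step size the fully explicit iteration grows without bound, while the IMEX iterate decays, which reflects why only the stiff term needs the more expensive implicit treatment.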

The IMEX strategy has a relatively long history in the context of Runge–Kutta methods [2, 4, 17, 25, 28] and linear multistep methods [3, 19, 21]. Zhang et al. proposed IMEX schemes based on two-step Runge–Kutta (TSRK) methods and general linear methods (GLMs) [33, 34, 36], with further developments reported in [5, 6, 12–14, 22, 24, 35]. Similarly, peer methods, a subclass of GLMs, have been utilized for IMEX integration; see, for example, [18, 27, 31, 32].

High-order IMEX GLMs do not face the stability barriers that constrain their multistep counterparts and have much simpler order conditions than IMEX Runge–Kutta methods. Moreover, they can attain high stage order, making them resilient to the order reduction phenomena seen in very stiff problems and PDEs with time-dependent boundary conditions.

A major challenge when deriving high-order IMEX GLMs is ensuring the stability region is large enough to be competitive with IMEX Runge–Kutta schemes. One can directly optimize for the area of the stability region under the constraints of the order conditions, but this is quite challenging as the objective and constraint functions are highly nonlinear and expensive to evaluate. In addition, this optimization is not scalable, with sixth order appearing to be the highest order achieved with this strategy [24].

Parallelism for IMEX schemes is scarcely explored [16, 18], but it is well studied for traditional, unpartitioned GLMs [8–10, 23]. One step of a GLM is

Methods are frequently categorized into one of four types to characterize the suitability for stiff problems and parallelism [7]. Types 1 and 2 are serial and have the structure

When \(\lambda = 0\), the method is of type 1, and of type 2 for \(\lambda > 0\). Of interest to this paper are methods of types 3 and 4, which have \(A = \lambda \, {{\mathbf {I}}_{{s}\times {s}}}\), so that all internal stages are independent and can be computed in parallel. Type 3 methods are explicit with \(\lambda = 0\), while type 4 methods are implicit with \(\lambda > 0\).

This work extends traditional, parallel GLMs into the IMEX setting and proposes two systematic approaches for designing stable methods of arbitrary order. The first uses the popular DIMSIM framework for the base methods. In particular, we use a family of type 4 methods proposed by Butcher [9] for the implicit base and show an explicit counterpart is uniquely determined. This eliminates the need to perform a sophisticated optimization procedure to determine coefficients. The second approach can be interpreted as a generalization of the simplest IMEX scheme: IMEX Euler. It starts with an ensemble of states each approximating the ODE solution at different points in time. In parallel, they are propagated one timestep forward using IMEX Euler, which is only first-order accurate. A new, highly accurate ensemble of states is computed by taking linear combinations of the IMEX Euler solutions. This scheme, which we call parallel ensemble IMEX Euler, can be described in the framework of IMEX GLMs. Notably, it maintains the exact same stability region and roughly the same runtime in a parallel setting as IMEX Euler while achieving arbitrarily high orders of consistency. Again, coefficients are determined uniquely, and we show that they are very simple to compute using basic matrix operations.

To assess the quality of the two new families of parallel IMEX GLMs, we apply them to a PDE with time-dependent forcing and boundary conditions, as well as to a singularly perturbed PDE. Convergence is verified up to eighth order for these challenging problems, which can cause order reduction for methods of low stage order. For the performance tests, the parallel methods were run on several nodes in a cluster using MPI and compared to existing, high-quality, serial IMEX Runge–Kutta methods and IMEX GLMs run on a single node. The best parallel methods could reach a desired solution accuracy approximately two to four times faster.

The structure of this paper is as follows. Section 2 reviews the formulation, order conditions, and stability analysis of IMEX GLMs. This is then specialized in Sect. 3 for parallel IMEX GLMs. Sections 4 and 5 present and analyze two new families of parallel IMEX GLMs. The convergence and performance of these new schemes are compared to other IMEX GLMs and IMEX Runge–Kutta methods in Sect. 6. We summarize our findings and provide final remarks in Sect. 7.

2 Background on IMEX GLMs

An IMEX GLM [34] computes s internal and r external stages using timestep h according to:

Using the matrix notation for the coefficients

the IMEX GLM can be represented in the Butcher tableau

Assuming the incoming external stages to a step satisfy

an IMEX GLM is said to have stage order q if

and order p if

The Taylor series weights for the external stages are also described in the matrix form

with \(w_{i,0} = {\widehat{w}}_{i,0}\) for \(i = 1, \cdots , r\).

The order conditions for IMEX GLMs are discussed in detail in [34]. Notably, a preconsistent IMEX GLM has order p and stage order \(q \in \{p, p-1\}\) if and only if the base methods have order p and stage order \(q \in \{p, p-1\}\). Here, we present the order conditions in a compact matrix form. First, we define the Toeplitz matrices

and the scaled Vandermonde matrix

Powers of a vector are understood to be component-wise, and \(\mathbb {1}_s\) represents the vector of ones of dimension s.

Theorem 2.1

(Compact IMEX GLM order conditions [34]) Assume \(y^{[n-1]}\) satisfies Eq. (2.3). The IMEX GLM Eq. (2.1) has order p and stage order \(q \in \{p, p-1\}\) if and only if

where \({\mathbf {W}}_{:,0:q}\) is the first \(q+1\) columns of \({\mathbf {W}}\), and \(\widehat{{\mathbf {W}}}_{:,0:q}\) is defined analogously.

Remark 2.1

The first column in each of the matrix conditions in Eq. (2.6) corresponds to a preconsistency condition.

2.1 Linear Stability of IMEX GLMs

The standard test problem used to analyze the linear stability of an IMEX method is the partitioned problem

$$ y' = \xi \, y + {\widehat{\xi }} \, y, \tag{2.7} $$

where \(\xi \, y\) is considered nonstiff and \({\widehat{\xi }} \, y\) is considered stiff. Applying the IMEX GLM Eq. (2.1) to Eq. (2.7) yields the stability matrix

where \(w=h \, \xi \) and \({\widehat{w}}=h \, {\widehat{\xi }}\). The set of \((w, {\widehat{w}}) \in \mathbb {C}\times \mathbb {C}\) for which \({\mathbf {M}}(w,{\widehat{w}})\) is power bounded, and thus, the IMEX GLM is stable, is a four-dimensional region that can be difficult to analyze and visualize. Following [34], we also consider the simpler stability regions

where \({\mathcal {S}}\) and \(\widehat{{\mathcal {S}}}\) are the stability regions of the explicit and implicit base methods, respectively. Equation (2.8a) is referred to as the desired stiff stability region and Eq. (2.8b) as the constrained nonstiff stability region.

3 Parallel IMEX GLMs

An IMEX GLM formed by pairing a type 3 GLM with a type 4 GLM has stages of the form

The only shared dependencies among the internal stages are the previously computed external stages \(y^{[n-1]}_j\). This allows the IMEX method to inherit the parallelism of the base methods.
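The independence of the stages can be sketched in code. The example below assumes that, with \({\mathbf {A}}= {\mathbf {0}}\) and \(\widehat{{\mathbf {A}}}= \lambda \, {\mathbf {I}}\), each stage satisfies a decoupled equation of the form \(Y_i - h \, \lambda \, g(Y_i) = r_i\), where \(r_i\) depends only on the incoming external stages. The scalar function `g`, the values in `rhs`, and the helper `solve_stage` are hypothetical, and a thread pool merely stands in for the MPI parallelism used in the experiments.

```python
from concurrent.futures import ThreadPoolExecutor


def solve_stage(r, h, lam, g, dg, tol=1e-12, max_iter=50):
    """Newton iteration for the scalar stage equation Y - h*lam*g(Y) = r."""
    Y = r  # initial guess: the known right-hand side
    for _ in range(max_iter):
        residual = Y - h * lam * g(Y) - r
        if abs(residual) < tol:
            break
        Y -= residual / (1.0 - h * lam * dg(Y))
    return Y


# Hypothetical stiff nonlinearity and its derivative (illustration only).
g = lambda y: -50.0 * y**3
dg = lambda y: -150.0 * y**2

h, lam = 0.1, 1.0
# Stand-ins for the combinations of external stages entering each stage.
rhs = [0.5, 1.0, 1.5, 2.0]

# No stage needs the result of another, so they can be solved concurrently.
with ThreadPoolExecutor() as pool:
    stages = list(pool.map(lambda r: solve_stage(r, h, lam, g, dg), rhs))
```

Because each solve touches only its own right-hand side, the work maps naturally onto one process per stage; communication is needed only when the external stages are formed.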

The tableau for a parallel IMEX GLM is of the form

We note that one could more generally define \(\widehat{{\mathbf {A}}}= {{\,\mathrm{diag}\,}}{(\lambda _1, \cdots , \lambda _s)}\); however, this introduces additional complexity and degrees of freedom that are not needed for the purposes of this paper.

3.1 Simplified Order Conditions

In this paper, we will consider methods with \(p=q=r=s\), distinct \({\mathbf {c}}\) values (nonconfluent method), and an invertible \({\mathbf {U}}\). By transforming the base methods into an equivalent formulation, we can then assume without loss of generality that \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\). With these assumptions, we start by determining the structure of the external stage weights \({\mathbf {W}}\) and \(\widehat{{\mathbf {W}}}\).

Lemma 3.1

For a parallel IMEX GLM with \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\) and \(p=q\), the internal stage order conditions (2.6a) and (2.6b) are equivalent to

respectively.

Proof

This follows directly from substituting \({\mathbf {A}}= {{\mathbf {0}}_{{s}\times {s}}}\), \(\widehat{{\mathbf {A}}}= \lambda \, {{\mathbf {I}}_{{s}\times {s}}}\), and \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\) into Eqs. (2.6a) and (2.6b).

Our main theoretical result on parallel IMEX GLMs is presented in Theorem 3.1 and provides a practical strategy for method derivation.

Theorem 3.1

(Parallel IMEX GLM order conditions) Consider a nonconfluent parallel IMEX GLM with \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\). All of the following are equivalent:

i) the method satisfies \(p=q=r=s\);

ii) the explicit base method satisfies \(p=q=r=s\) and

$$ \widehat{{\mathbf {W}}} = {{\mathbf {C}}_{s+1}} - \lambda \, {{\mathbf {C}}_{s+1}} \, {\mathbf {K}}_{s+1}, \tag{3.4a} $$

$$ \widehat{{\mathbf {B}}} = {\mathbf {B}}- \lambda \, {{\mathbf {C}}_{s}} \, {\mathbf {E}}_{s} \, {{\mathbf {C}}_{s}^{-1}} + \lambda \, {\mathbf {V}}; \tag{3.4b} $$

iii) the implicit base method satisfies \(p=q=r=s\) and

$$ {\mathbf {W}} = {{\mathbf {C}}_{s+1}}, \tag{3.5a} $$

$$ {\mathbf {B}} = \widehat{{\mathbf {B}}}+ \lambda \, {{\mathbf {C}}_{s}} \, {\mathbf {E}}_{s} \, {{\mathbf {C}}_{s}^{-1}} - \lambda \, {\mathbf {V}}. \tag{3.5b} $$

Remark 3.1

With Theorem 3.1, once the implicit base method has been chosen, all coefficients for the explicit counterpart are uniquely determined by the order conditions. Conversely, if the explicit base is fixed, then all implicit method coefficients are uniquely determined, but parameterized by \(\lambda \).

Proof

To start, we will show the first statement of Theorem 3.1 is equivalent to the second. Assume that a nonconfluent parallel IMEX GLM with \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\) has \(p=q=r=s\). By Theorem 2.1, the explicit (and implicit) base method also has \(p=q=r=s\) and satisfies the order conditions in Eq. (2.6). Furthermore, by Lemma 3.1, Eq. (3.4a) holds. Subtracting Eq. (2.6d) from Eq. (2.6c) gives

The three terms summed on the left-hand side of Eq. (3.6) have zeros in the leftmost column. Removing this yields the following equivalent statement:

A bit of algebraic manipulation recovers the desired result of Eq. (3.4b).

Now, assume that a nonconfluent parallel IMEX GLM with \({\mathbf {U}}= {{\mathbf {I}}_{{s}\times {s}}}\) satisfies the properties of the second statement of Theorem 3.1. Condition (3.4a) ensures that the implicit method has stage order q, and Eq. (3.4b) ensures that it has order p,

Now, both base methods have \(p=q=r=s\), so by Theorem 2.1, the combined IMEX scheme also has \(p=q=r=s\).

Showing that statement one is equivalent to statement three follows nearly identical steps and is therefore omitted. This completes the proof.

3.2 Stability

Applying parallel IMEX GLMs to linear stability test Eq. (2.7) gives

where \({\mathbf {M}}(w)\) and \(\widehat{{\mathbf {M}}}({\widehat{w}})\) are the stability matrices of the explicit and implicit base methods, respectively. When the implicit partition becomes infinitely stiff,

Stability matrices evaluated at \(\infty \) are understood to be the value in the limit.

3.3 Starting Procedure

The starting procedure for nontrivial IMEX GLMs is more complex than for traditional GLMs because the external stages of IMEX GLMs weight time derivatives of f and g differently. When computing \(y^{[0]}\), the high-order time derivatives are usually not readily available, but can be approximated by finite differences [11, 34]. A one-step method can be used to get very accurate approximations to y, and, consequently, f and g, at a grid of time points around \(t_0\) to construct these finite-difference approximations. While this generic approach is applicable to parallel IMEX GLMs, we also describe a specialized strategy that is simpler and more accurate.

Based on the \({\mathbf {W}}\) and \(\widehat{{\mathbf {W}}}\) weights derived in Eq. (3.3),

Now, a one-step method can be used to get approximations to y and g at times \(t_0 + h \, c_i\) to compute \(y^{[0]}\). This eliminates the need to use finite differences and the error associated with them. Note that negative abscissae would require integrating backwards in time. Although the interval of integration may be quite short, this could still lead to stability issues; fortunately, it is easily remedied. If \(c_\text {min}\) is the smallest abscissa, then the one-step method can produce an approximation to \(y^{[\ell ]}\), where \(\ell = \lceil -c_\text {min}\rceil \), instead of \(y^{[0]}\). Note that \(t_\ell + c_i \, h \geqslant t_0\), and the IMEX GLM will start with \(y^{[\ell ]}\) to compute \(y^{[\ell +1]}\) and so on.
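The shift of the starting index can be sketched as follows. This minimal example assumes a uniform grid \(t_n = t_0 + n \, h\) and uses the equally spaced abscissae \(2-s, \cdots , 1\) that appear in Sect. 4; the function name `starting_index` and the values of `s`, `h`, and `t0` are illustrative.

```python
import math


def starting_index(c):
    """Index ell = ceil(-c_min) of the first external stage vector to build."""
    return math.ceil(-min(c))


s, h, t0 = 4, 0.1, 0.0
c = [2 - s + i for i in range(s)]   # abscissae [-2, -1, 0, 1]

ell = starting_index(c)
# Times at which the one-step method must supply approximations to y and g.
start_times = [t0 + (ell + ci) * h for ci in c]
```

For these abscissae the one-step method only integrates forward from \(t_0\), and the IMEX GLM takes over from step \(\ell \).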

3.4 Ending Procedure

We will consider the ending procedure for an IMEX GLM to be of the form

Frequently, IMEX GLMs have the last abscissa set to 1, which allows for a particularly simple ending procedure for high stage order methods. The final internal stage \(Y_s\) can be used as an \({\mathcal {O}}\left( {h^{\min (p, q+1)}}\right) \) accurate approximation to \(y(t_n)\). One can easily verify that the coefficients for such an ending procedure are

where \(e_i\) is the ith column of \({{\mathbf {I}}_{{s}\times {s}}}\). Indeed, all parallel IMEX GLMs tested in this paper have \(c_s=1\); however, we present an alternative strategy to approximate \(y(t_n)\). Suppose a parallel IMEX GLM has \(c_i=0\) for some \(i \in \left\{ 1, \cdots , s - 1 \right\} \) and \(c_s=1\). Then, based on the relation in Eq. (3.8), we have that

This ending procedure has the coefficients

For the parallel ensemble IMEX Euler methods of Sect. 5, numerical tests revealed that this new ending procedure is substantially more accurate. For the parallel IMEX DIMSIMs, the coefficients in Eqs. (3.10) and (3.11) gave similar results in tests as the accumulated global error dominated the local truncation error of the ending procedure.

4 Parallel IMEX DIMSIMs

Diagonally implicit multi-stage integration methods (DIMSIMs) have become a popular choice of the base method to build high-order IMEX GLMs. IMEX DIMSIMs are characterized by the following structural assumptions:

i) \({\mathbf {A}}\) is strictly lower triangular, and \(\widehat{{\mathbf {A}}}\) is lower triangular with the same element \(\lambda \) on the diagonal as in Eq. (1.2);

ii) \({\mathbf {V}}\) is rank one with the single nonzero eigenvalue equal to one to ensure preconsistency;

iii) \(q \in \{p, p-1\}\) and \(r \in \{s, s+1\}\).

Based on Theorem 3.1, to build a parallel IMEX DIMSIM with \(p=q=r=s\), we only need to choose one of the base methods, and the rest of the coefficients will follow. If we start by picking an explicit base, it may be difficult to ensure that the resulting implicit method has acceptable stability properties, ideally L-stability. Instead, we start by picking a stable, type 4 DIMSIM for the implicit base method.

In [8, 9], Butcher developed a systematic approach to construct DIMSIMs of type 4 with “perfect damping at infinity”. One of his primary results is presented in Theorem 4.1.

Theorem 4.1

(Type 4 DIMSIM coefficients [9, Theorem 4.1]) For the type 4 DIMSIM,

with \(p=q=r=s\) and \({\mathbf {V}}\, \mathbb {1}_s = \mathbb {1}_s\), the transformed coefficients

satisfy

where

and

Theorem 4.1 fully determines the \(\widehat{{\mathbf {B}}}\) coefficient for a type 4 DIMSIM, but \({\mathbf {c}}\), \(\lambda \), and most of \({\mathbf {V}}\) remain undetermined. Fortunately, this offers sufficient degrees of freedom to ensure that \(\widehat{{\mathbf {M}}}(\infty )\) is nilpotent. In [9, Theorem 5.1], Butcher proved \(\lambda \) must be a solution to

and

Here, \(L_n(x) = \sum\limits_{i=0}^n \left( {\begin{array}{c}n\\ i\end{array}}\right) (-x)^i / i!\) is the Laguerre polynomial and \(L_n^{(m)}(x)\) is its mth derivative.
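The Laguerre polynomial defined above is straightforward to evaluate numerically. The sketch below builds its coefficients from the stated sum and then, as a consistency check, computes candidate values of \(\lambda \) under the assumption that Eq. (4.2a) takes the form \(L_{s+1}'\left( (s+1)/\lambda \right) = 0\). That form is our reading of [9] and is an assumption here; the function names are illustrative. For \(s=2\), the smaller candidate agrees with the value \(\lambda = (3 - \sqrt{3})/2\) quoted for the second-order method in this section.

```python
import math

import numpy as np


def laguerre_coeffs(n):
    """Coefficients of L_n in ascending powers: sum_i C(n, i) (-x)^i / i!."""
    return [math.comb(n, i) * (-1) ** i / math.factorial(i) for i in range(n + 1)]


def lambdas_from_laguerre(s):
    """Candidate lambdas, ASSUMING Eq. (4.2a) reads L'_{s+1}((s+1)/lambda) = 0."""
    dpoly = np.polynomial.Polynomial(laguerre_coeffs(s + 1)).deriv()
    roots = dpoly.roots()
    # Keep the real roots x and map them back through lambda = (s+1)/x.
    return sorted((s + 1) / x.real for x in roots if abs(x.imag) < 1e-12)


lams = lambdas_from_laguerre(2)   # candidates for a second-order method
```

Whether a given candidate also yields L-stability is a separate question that this check does not address.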

With the implicit base method determined, we now turn to the explicit method. Indeed, Theorem 3.1 could be applied to recover \({\mathbf {B}}\), but Theorem 4.1 provides a more direct approach. Equation (4.1b), which is normally used for type 4 methods, remains valid when \(\lambda =0\), and Eq. (4.1a) is fulfilled, because the implicit and explicit base methods share \({\mathbf {V}}\).

In summary, the coefficients for a parallel IMEX DIMSIM with \(p=q=r=s\) are given by

with \({\mathbf {c}}\) remaining as free parameters. The two most “natural” and frequently used choices are \({\mathbf {c}}= [0,1/(s-1),2/(s-1),\cdots ,1]^{\rm{T}}\) and \({\mathbf {c}}= [2-s,3-s,\cdots ,1]^{\rm{T}}\). This presents a trade-off where the first option has smaller local truncation errors, but the second option results in coefficients that grow more slowly with order, thus reducing the accumulation of finite precision cancellation errors. Table 1 presents the magnitudes of the largest coefficients for both strategies.

Before proceeding to the stability analysis, we present two examples of parallel IMEX DIMSIMs. A second-order method has the tableau

where \(\lambda = (3 - \sqrt{3}) / 2\). In a more compact form, a third-order method has the coefficients:

4.1 Stability

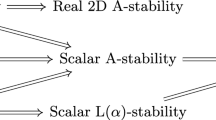

While Eq. (4.2) ensures \(\rho ( \widehat{{\mathbf {M}}}(\infty ) ) = 0\), it is not a sufficient condition for L-stability of a type 4 DIMSIM. In [9], appropriate values of \(\lambda \) for L-stability are provided for orders 2 through 10, excluding 9. If the weaker condition of L\((\alpha )\)-stability is acceptable, smaller values of \(\lambda \) may be used as well.

With \({\mathbf {c}}\) available as the free parameters, it is natural to see if they can be used to optimize the stability of parallel IMEX DIMSIMs. It is easy to verify that the stability is, in fact, independent of \({\mathbf {c}}\),

The stability matrix is similar to a matrix completely independent of \({\mathbf {c}}\), and thus, \({\mathbf {c}}\) has no effect on the power boundedness of \({\mathbf {M}}\).

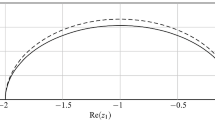

Plots of the constrained nonstiff stability region for several methods appear in Fig. 1. Roughly speaking, the area of the stability region shrinks as the order increases. Furthermore, the smaller values of \(\lambda \) satisfying Eq. (4.2a) tend to provide larger stability regions for a fixed order.

5 Parallel Ensemble IMEX Euler Methods

If one seeks to minimize communication costs for parallel IMEX GLMs, the choice \({\mathbf {U}}= {\mathbf {V}}= {{\mathbf {I}}_{{s}\times {s}}}\) is attractive, as it eliminates the need to share external stages among parallel processes. As we will show in this section, this choice of coefficients also leads to particularly favorable structures for the order conditions and stability matrix.

Theorem 5.1

(Parallel ensemble IMEX Euler order conditions) A nonconfluent parallel ensemble IMEX Euler method, which starts with the structural assumptions

has \(p=q=r=s\) if and only if the remaining method coefficients are

where

Remark 5.1

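An alternative representation for Eq. (5.2) is \({\mathbf {F}}_{n} = \varphi _1({\mathbf {K}}_{n})\), where \(\varphi _1\) is the entire function

$$ \varphi _1(z) = \frac{\mathrm {e}^z - 1}{z} = \sum \limits _{k=0}^{\infty } \frac{z^k}{(k+1)!}. $$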

Proof

With Theorem 3.1, we only need to show that the explicit base method for parallel ensemble IMEX Euler satisfies \(p=q=r=s\) and \({\mathbf {B}}\) and \(\widehat{{\mathbf {B}}}\) are related by Eq. (3.4b). By Lemma 3.1, the internal stage order condition for the explicit method, given in Eq. (2.6a), holds. For the external stage order conditions,

Therefore, the explicit method satisfies all order conditions and has \(p=q=r=s\). Finally,

which completes the proof.

While the parallel IMEX DIMSIMs of Sect. 4 require symbolic tools to derive and have coefficients that can be expressed as roots of polynomials, ensemble methods have simple, rational coefficients that can be derived with basic matrix multiplication. The following parallel ensemble IMEX Euler method, for example, is second order,

A third-order method is given by

and a fourth-order method is given by

When the order of the method increases, so does the magnitude of the method coefficients: a phenomenon previously described for parallel IMEX DIMSIMs. Similarly, the distribution of abscissae can limit the growth of coefficients, and thus, the floating-point errors associated with them. Table 2 lists these maximum coefficients for abscissae \({\mathbf {c}}\) evenly spaced on [0, 1], as well as on \([2-s,1]\).

5.1 Stability

An interesting property of parallel ensemble IMEX Euler methods is that \({\mathbf {B}}\), \(\widehat{{\mathbf {B}}}\), \({\mathbf {A}}\), and \(\widehat{{\mathbf {A}}}\) all simultaneously triangularize. The stability matrix Eq. (3.7) can, therefore, be put into an upper triangular form with a simple similarity transformation,

The diagonal entries of Eq. (5.3) are all \(1 + (w+{\widehat{w}})/(1 - \lambda \, {\widehat{w}}) \) and are therefore the eigenvalues of the stability matrix. Note that the geometric multiplicity of this repeated eigenvalue is r when \(w = {\widehat{w}} = 0\) and 1 otherwise. To ensure the L-stability of the implicit base method as well as \(\rho ({\mathbf {M}}(w, \infty )) = 0\), we set \(\lambda = 1\). In this case, the eigenvalues simplify to \((1+w)/(1-{\widehat{w}})\), matching the stability function of the IMEX Euler scheme

$$ y_{n} = y_{n-1} + h \, f(y_{n-1}) + h \, g(y_{n}). $$

There are several other interesting stability features for parallel ensemble IMEX Euler methods. First, stability is independent of the order and the choice of abscissae, allowing a systematic approach to develop stable methods of arbitrary order. The constrained nonstiff stability region has the simple form

when \(s > 1\). Except for the origin, the boundary of this circular stability region is excluded, because the repeated eigenvalue of \({\mathbf {M}}\) has unit modulus and is defective at those points, so \({\mathbf {M}}\) is not power bounded there. This family of methods is stability decoupled in the sense that linear stability of the base methods for their respective partitions implies linear stability of the IMEX scheme.

We note that aside from the origin, \({\mathcal {S}}_{\alpha }\) does not contain any of the imaginary axis, indicating potential stability issues when f is oscillatory. This analysis is a bit pessimistic, however, as \({\mathcal {S}}_{\alpha }\) represents the explicit stability when \({\widehat{w}}\) is chosen in a worst-case scenario. Only when \({\widehat{w}}=0\) is there instability for all purely imaginary w. As the modulus of \({\widehat{w}}\) grows, the range of imaginary w for which the IMEX method is stable also grows.
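The behavior on the imaginary axis can be checked directly from the repeated eigenvalue \(1 + (w+{\widehat{w}})/(1 - \lambda \, {\widehat{w}})\) given in this section. The following minimal sketch treats a point as stable when this eigenvalue lies strictly inside the unit disk, or when \(w = {\widehat{w}} = 0\); the function names and the sample values of w and \({\widehat{w}}\) are illustrative.

```python
def ensemble_eigenvalue(w, w_hat, lam=1.0):
    """Repeated eigenvalue 1 + (w + w_hat)/(1 - lam*w_hat) of the stability matrix."""
    return 1.0 + (w + w_hat) / (1.0 - lam * w_hat)


def is_stable(w, w_hat, lam=1.0):
    """Power boundedness test for s > 1, where the eigenvalue is defective."""
    if w == 0 and w_hat == 0:
        return True   # eigenvalue 1 with full geometric multiplicity
    return abs(ensemble_eigenvalue(w, w_hat, lam)) < 1.0


w = 0.5j                                  # purely imaginary nonstiff eigenvalue
stable_without_stiff = is_stable(w, 0.0)  # implicit part switched off
stable_with_stiff = is_stable(w, -10.0)   # strongly damped implicit part

# With lam = 1 the eigenvalue reduces to the IMEX Euler stability function.
gap = abs(ensemble_eigenvalue(w, -10.0) - (1.0 + w) / (1.0 + 10.0))
```

The same imaginary w is unstable when \({\widehat{w}} = 0\) and stable once \({\widehat{w}}\) is sufficiently negative, consistent with the discussion above.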

6 Numerical Experiments

We provide numerical experiments to confirm the order of convergence and to study the performance of our methods compared to other IMEX methods. We use the CUSP and Allen–Cahn problems in our experiments.

6.1 CUSP Problem

The CUSP problem [20, Chapter IV.10] is associated with the equations

where \(v = \frac{u}{u+ 0.1}\) and \(u = (y-0.7) \, (y-1.3)\). The timespan is \(t \in [0,1.1]\), the spatial domain is \(x \in [0,1]\), and the parameters are chosen as \(\sigma = \frac{1}{144}\) and \(\varepsilon = 10^{-4}\). Spatial derivatives are discretized using second-order central finite differences on a uniform mesh with \(N=32\) points and periodic boundary conditions. The initial conditions are

for \(i=1,\cdots ,N\). Note that the problem is singularly perturbed in the y component and the stiffness of the system can be controlled using \(\varepsilon \). Following the splitting used in [24], the diffusion terms and the term scaled by \(\varepsilon ^{-1}\) form g, while the remaining terms form f. The MATLAB implementation of the CUSP problem is available in [15, 29].
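The spatial discretization of the diffusion terms can be sketched with a periodic second-order central difference. The example below is not the implementation from [15, 29]; it assumes grid points \(x_i = i/N\) on [0, 1) and applies the stencil to a hypothetical smooth test profile to show the expected accuracy.

```python
import numpy as np


def periodic_laplacian(u, dx):
    """Second-order central difference for u_xx with periodic wrap-around."""
    return (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2


N = 32
dx = 1.0 / N
x = np.arange(N) * dx            # assumed grid: x_i = i/N on [0, 1)

u = np.sin(2.0 * np.pi * x)      # hypothetical smooth periodic test profile
lap = periodic_laplacian(u, dx)
exact = -(2.0 * np.pi) ** 2 * u

# Relative error of the stencil in the maximum norm.
rel_err = np.max(np.abs(lap - exact)) / np.max(np.abs(exact))
```

On this mesh the relative error for the test profile is well below one percent, in line with the second-order accuracy of the stencil.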

We performed a fixed time-stepping convergence study of the new methods. Figure 2 shows the error of the final solution versus the number of timesteps. The error is computed in the \(\ell ^2\) norm using a high-accuracy reference solution. In all cases, the parallel IMEX GLMs converge at a rate at least as high as the theoretical order of accuracy.

Fig. 2 Convergence of parallel IMEX DIMSIM and parallel ensemble IMEX Euler methods for the CUSP problem Eq. (6.1)
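In a fixed-step study of this kind, the observed order between two runs is commonly estimated from the ratio of the errors and the ratio of the step counts. The following minimal sketch uses synthetic errors that decay exactly at fourth order; these numbers are made up for illustration and are not results from the paper.

```python
import math


def observed_orders(steps, errors):
    """Estimated convergence order between consecutive runs."""
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(steps[i + 1] / steps[i])
        for i in range(len(steps) - 1)
    ]


steps = [100, 200, 400, 800]
# Synthetic errors behaving like C * N^(-4); NOT data from the experiments.
errors = [3.0 * n ** -4 for n in steps]

orders = observed_orders(steps, errors)
```

Applied to measured errors, values of `orders` at or above the theoretical order indicate that no order reduction is occurring.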

6.2 Allen–Cahn Problem

We also consider the two-dimensional Allen–Cahn problem described in [35]. It is a reaction-diffusion system governed by the equation

where \(\alpha = 0.1\) and \(\beta = 3\). The time-dependent Dirichlet boundary conditions and source term s(t, x, y) are derived using the method of manufactured solutions, such that the exact solution is

We discretize the PDE on a unit square domain using degree two Lagrange finite elements and a uniform triangular mesh with \(N=32\) points in each direction. The diffusion term and forcing associated with the boundary conditions are treated implicitly, while the reaction and source term are treated explicitly.

The problem is implemented using the FEniCS package [1], leveraging OpenMP parallelism to speed up f and g evaluations, as well as MPI parallelism of stage computations made possible by the structure of the parallel IMEX GLMs. All tests were run on the Cascades cluster maintained by Virginia Tech’s Advanced Research Computing center (ARC). Parallel experiments were performed on s nodes (recall that \(p=q=r=s\)), each using 12 cores. Serial experiments were done on a single node with the same number of cores. The error was computed using the \(\ell ^2\) norm by comparing the nodal values of the numerical solution against a high-accuracy reference solution.

Figure 3 summarizes the results of this experiment by comparing several additive Runge–Kutta (ARK) methods and IMEX DIMSIMs with parallel IMEX GLMs derived in this paper. At order 3, serial methods are ARK3(2)4L[2]SA from [25] and IMEX-DIMSIM3 from [14]. At order 4, comparisons are done against ARK4(3)7L[2]SA1 from [26] and IMEX-DIMSIM4 from [35]. Order 5 serial methods are ARK5(4)8L[2]SA2 from [26] and IMEX-DIMSIM5 from [35]. Finally, the order 6 baseline is IMEX-DIMSIM6(\({\mathcal {S}}_{\pi /2}\)) from [24]. The results show that parallel ensemble IMEX Euler methods are the most efficient in all cases. Parallel IMEX DIMSIMs are competitive at orders 3 and 6 and surpass the efficiency of serial schemes at orders 4 and 5.

Figure 4 plots convergence of the methods used in the experiment. We can see that the ARK methods exhibit order reduction for this problem, which explains their poor efficiency results. All other methods achieve the expected order of accuracy. For a fixed number of steps, the parallel IMEX GLMs are less accurate than the serial IMEX GLMs, which indicates that parallel methods have larger error constants and are not the most efficient when limited to serial execution. This is to be expected given parallel methods have a more restrictive structure and fewer coefficients available for optimizing the principal error and stability.

Fig. 3 Work-precision diagrams for the Allen–Cahn problem Eq. (6.2)

Fig. 4 Convergence diagrams for the Allen–Cahn problem Eq. (6.2)

7 Conclusion

This paper studies parallel IMEX GLMs and provides a methodology to derive and solve simple order conditions for methods of arbitrary order. Using this framework, we construct two families of methods, based on existing DIMSIMs and on IMEX Euler, and provide linear stability analyses for them.

Our numerical experiments show that parallel IMEX GLMs can outperform existing serial IMEX schemes. Between parallel IMEX DIMSIMs and parallel ensemble IMEX Euler methods, the latter proved to be the most competitive. The error for the ensemble methods is generally smaller than that of the DIMSIMs, due in part to the improved ending procedure. Moreover, the magnitude of method coefficients grows more slowly for ensemble methods, as documented in Tables 1 and 2, reducing the impact of accumulated floating-point errors. For orders 5 and higher, we have to carefully select the method and distribution of the abscissae to control these errors. In addition, parallel ensemble IMEX Euler methods tend to have smaller values of \(\lambda \), which improves convergence of iterative linear solvers used in the Newton iterations.

Owing to their excellent stability properties, the ensemble family shows great potential for constructing other types of partitioned GLMs. Of particular interest are alternating direction implicit (ADI) GLMs [30], as well as multirate GLMs. The authors hope to study these in future work.

References

Alnæs, M.S., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The FEniCS project version 1.5. Arch. Numer. Softw. 3(100), 9–23 (2015). https://doi.org/10.11588/ans.2015.100.20553

Ascher, U.M., Ruuth, S.J., Spiteri, R.J.: Implicit-explicit Runge–Kutta methods for time-dependent partial differential equations. Appl. Numer. Math. 25(2/3), 151–167 (1997)

Ascher, U.M., Ruuth, S.J., Wetton, B.T.: Implicit-explicit methods for time-dependent partial differential equations. SIAM J. Numer. Anal. 32(3), 797–823 (1995)

Boscarino, S., Russo, G.: On a class of uniformly accurate IMEX Runge–Kutta schemes and applications to hyperbolic systems with relaxation. SIAM J. Sci. Comput. 31(3), 1926–1945 (2009)

Braś, M., Cardone, A., Jackiewicz, Z., Pierzchała, P.: Error propagation for implicit-explicit general linear methods. Appl. Numer. Math. 131, 207–231 (2018). https://doi.org/10.1016/j.apnum.2018.05.004

Braś, M., Izzo, G., Jackiewicz, Z.: Accurate implicit-explicit general linear methods with inherent Runge–Kutta stability. J. Sci. Comput. 70(3), 1105–1143 (2017)

Butcher, J.C.: Diagonally-implicit multi-stage integration methods. Appl. Numer. Math. 11(5), 347–363 (1993)

Butcher, J.C.: General linear methods for the parallel solution of ordinary differential equations. In: Contributions in Numerical Mathematics, pp. 99–111. World Scientific, Singapore (1993)

Butcher, J.C.: Order and stability of parallel methods for stiff problems. Adv. Comput. Math. 7(1/2), 79–96 (1997)

Butcher, J.C., Chartier, P.: Parallel general linear methods for stiff ordinary differential and differential algebraic equations. Appl. Numer. Math. 17(3), 213–222 (1995). https://doi.org/10.1016/0168-9274(95)00029-T

Califano, G., Izzo, G., Jackiewicz, Z.: Starting procedures for general linear methods. Appl. Numer. Math. 120, 165–175 (2017). https://doi.org/10.1016/J.APNUM.2017.05.009

Cardone, A., Jackiewicz, Z., Sandu, A., Zhang, H.: Extrapolated IMEX Runge–Kutta methods. Math. Model. Anal. 19(2), 18–43 (2014). https://doi.org/10.3846/13926292.2014.892903

Cardone, A., Jackiewicz, Z., Sandu, A., Zhang, H.: Extrapolation-based implicit-explicit general linear methods. Numer. Algorithms 65(3), 377–399 (2014). https://doi.org/10.1007/s11075-013-9759-y

Cardone, A., Jackiewicz, Z., Sandu, A., Zhang, H.: Construction of highly stable implicit-explicit general linear methods. In: AIMS proceedings, vol. 2015. Dynamical Systems, Differential Equations, and Applications, pp. 185–194. Madrid, Spain (2015). https://doi.org/10.3934/proc.2015.0185

Computational Science Laboratory: ODE test problems (2020). https://github.com/ComputationalScienceLaboratory/ODE-Test-Problems

Connors, J.M., Miloua, A.: Partitioned time discretization for parallel solution of coupled ODE systems. BIT Numer. Math. 51(2), 253–273 (2011). https://doi.org/10.1007/s10543-010-0295-z

Constantinescu, E., Sandu, A.: Extrapolated implicit-explicit time stepping. SIAM J. Sci. Comput. 31(6), 4452–4477 (2010). https://doi.org/10.1137/080732833

Ditkowski, A., Gottlieb, S., Grant, Z.J.: IMEX error inhibiting schemes with post-processing. arXiv:1912.10027 (2019)

Frank, J., Hundsdorfer, W., Verwer, J.: On the stability of implicit-explicit linear multistep methods. Appl. Numer. Math. 25(2/3), 193–205 (1997)

Hairer, E., Wanner, G.: Solving ordinary differential equations II: stiff and differential-algebraic problems, 2 edn. No. 14. In: Springer Series in Computational Mathematics. Springer, Berlin (1996)

Hundsdorfer, W., Ruuth, S.J.: IMEX extensions of linear multistep methods with general monotonicity and boundedness properties. J. Comput. Phys. 225(2), 2016–2042 (2007)

Izzo, G., Jackiewicz, Z.: Transformed implicit-explicit DIMSIMs with strong stability preserving explicit part. Numer. Algorithms 81(4), 1343–1359 (2019)

Jackiewicz, Z.: General Linear Methods for Ordinary Differential Equations. Wiley, Amsterdam (2009)

Jackiewicz, Z., Mittelmann, H.: Construction of IMEX DIMSIMs of high order and stage order. Appl. Numer. Math. 121, 234–248 (2017). https://doi.org/10.1016/j.apnum.2017.07.004

Kennedy, C.A., Carpenter, M.H.: Additive Runge–Kutta schemes for convection-diffusion-reaction equations. Appl. Numer. Math. 44(1/2), 139–181 (2003). https://doi.org/10.1016/S0168-9274(02)00138-1

Kennedy, C.A., Carpenter, M.H.: Higher-order additive Runge–Kutta schemes for ordinary differential equations. Appl. Numer. Math. 136, 183–205 (2019). https://doi.org/10.1016/j.apnum.2018.10.007

Lang, J., Hundsdorfer, W.: Extrapolation-based implicit-explicit peer methods with optimised stability regions. J. Comput. Phys. 337, 203–215 (2017)

Pareschi, L., Russo, G.: Implicit-explicit Runge–Kutta schemes and applications to hyperbolic systems with relaxation. J. Sci. Comput. 25(1), 129–155 (2005)

Roberts, S., Popov, A.A., Sandu, A.: ODE test problems: a MATLAB suite of initial value problems (2019). arXiv:1901.04098

Sarshar, A., Roberts, S., Sandu, A.: Alternating directions implicit integration in a general linear method framework. J. Comput. Appl. Math., 112619 (2019). https://doi.org/10.1016/j.cam.2019.112619

Schneider, M., Lang, J., Hundsdorfer, W.: Extrapolation-based super-convergent implicit-explicit peer methods with A-stable implicit part. J. Comput. Phys. 367, 121–133 (2018)

Soleimani, B., Weiner, R.: Superconvergent IMEX peer methods. Appl. Numer. Math. 130, 70–85 (2018)

Zhang, H., Sandu, A.: A second-order diagonally-implicit-explicit multi-stage integration method. In: Proceedings of the International Conference on Computational Science, ICCS 2012, vol. 9, pp. 1039–1046 (2012). https://doi.org/10.1016/j.procs.2012.04.112

Zhang, H., Sandu, A., Blaise, S.: Partitioned and implicit-explicit general linear methods for ordinary differential equations. J. Sci. Comput. 61(1), 119–144 (2014). https://doi.org/10.1007/s10915-014-9819-z

Zhang, H., Sandu, A., Blaise, S.: High order implicit-explicit general linear methods with optimized stability regions. SIAM J. Sci. Comput. 38(3), A1430–A1453 (2016). https://doi.org/10.1137/15M1018897

Zharovsky, E., Sandu, A., Zhang, H.: A class of IMEX two-step Runge–Kutta methods. SIAM J. Numer. Anal. 53(1), 321–341 (2015). https://doi.org/10.1137/130937883

Acknowledgements

The authors acknowledge Advanced Research Computing at Virginia Tech for providing computational resources and technical support that have contributed to the results reported within this paper. URL: http://www.arc.vt.edu.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

This work was funded by awards NSF CCF1613905, NSF ACI1709727, AFOSR DDDAS FA9550-17-1-0015, and by the Computational Science Laboratory at Virginia Tech.

About this article

Cite this article

Roberts, S., Sarshar, A. & Sandu, A. Parallel Implicit-Explicit General Linear Methods. Commun. Appl. Math. Comput. 3, 649–669 (2021). https://doi.org/10.1007/s42967-020-00083-5

DOI: https://doi.org/10.1007/s42967-020-00083-5