Abstract

Automated and highly automated vehicles still need to interact with the driver at different cognitive levels. Those at SAE level 1 or 2 keep the human in the loop all the time and require strong participation of the driver at the control level. Yet, to increase safety, trust, and driver comfort with this mode of automation, systems with a strong cooperative component are needed. In this sense, this paper introduces the design of a vehicle controller based on shared control, together with an arbitration system, and the design of a visual human-machine interface (HMI) to foster mutual understanding between driver and automation while sharing the driving task. The driver-automation cooperation is achieved through incremental support, in a continuous spectrum from manual to full automation. Additionally, the design of an HMI to support the driver in a takeover maneuver is presented, considering that this functionality is a key component of vehicles at SAE levels 3 and 4. The work presented in this paper represents the latest advances in the integration being carried out within the framework of the PRYSTINE project.

1 Introduction

Autonomous vehicles (AV) arise as a technological solution to mitigate the shortcomings of manual driving. The primary motivations are the reduction of human-caused accidents and a more efficient driving task in terms of energy consumption, traffic flow, and driver workload. However, this technology is not yet mature enough for large-scale deployment in commercial vehicles (Brown and Laurier 2017), as assigning the human a passive role in the driving task presents technical, social, and legal issues (Marchant and Lindor 2012). A technical limitation is that AVs cannot handle all unexpected driving situations, while a social issue is that humans like to drive and have so far proven to be better drivers than machines.

A different approach has been to increase the automated driving functionalities (ADF) to achieve a higher level of automation (LoA) while considering the driver as an active agent, that is, human and machine are both members of a single team. This corresponds to vehicles with LoA 1, 2, and 3 according to the standard SAE J3016 (SAE-International 2018), where the driver remains partially or fully responsible for the dynamic driving task. From this perspective, rather than being a matter of LoAs, it is important to understand which resources are available and who, human or machine, can accomplish a given task. This approach assumes constant and dynamic human-machine interaction at different cognitive levels between the driver and the automated system, which goes beyond warning alerts and instead involves more intrusive and continuous support from the automation. According to the level of cooperation, the mode of operation can be classified into two categories: shared control (Sheridan and Verplank 1978), where driver and automation execute the same task at the same time (e.g., a lane-keeping assistance system with the driver in the control loop), and traded control, where both execute the same task but at different times (e.g., an autopilot limited to highways that alerts the driver to resume control).

The main design challenge of the shared control mode is to achieve a comfortable control interaction in which the system supports the driver without overloading him/her, while adapting the level of assistance to the driver's needs and prioritizing the safety of the driving task, correcting the driver's intention if necessary. On the other hand, the challenge of the traded control mode is handling the transitions between the driver’s different roles (i.e., driver and passenger), as drivers commonly over-trust automation and fail to execute an appropriate take-over maneuver. Future automated driving systems (ADS) will leverage the potential of both approaches by developing gradual and incremental support that adapts to the ever-changing cognitive and physical state and needs of the driver during the dynamic driving task.

The human-machine interaction strategy has a key role in these future systems, combining haptic steering continuous support at the control level, with complementary assistance of other HMI modalities (e.g., non-control haptic devices, audio alerts and tutoring, and visual interfaces) to improve the usability, trust, and acceptance. This collaborative HMI concept aims to exploit the potential of the technologies and the driver, i.e., to get the most out of the existing driver's skills and cognitive resources according to the different roles he/she can have while the ADS is engaged.

In traditional systems, the role of the HMI is to inform/warn the driver while minimizing the impact on distraction. Conversely, in highly automated systems that consider the driver, the HMI should emphasize the authority on each task, to make the driver always aware of his/her role. Moreover, the HMI should be designed to increase the trust in automation and reduce potential anxiety arising from the unexpected variation of roles. The success of these interaction strategies relies less on extraordinary intelligence and more on sophisticated negotiation of changing context and subsequent behavior (Ju 2015).

Based on these premises, this article, as part of the work carried out within the PRYSTINE (Programmable Systems for Intelligence in Automobiles) project (Druml et al. 2019; Marcano et al. 2020a), presents an initial design and integration between the haptic continuous support (shared controller) and the complementary human-machine interface that will help in the driver-automation collaboration, specifically a visual interface that will give the driver a better understanding of the system behavior. This article is organized as follows: Section 2 gives an overview of the topic with a compilation of related works in shared control and visual interfaces; Section 3 presents a technical explanation of the shared control system, including the lane-keeping controller and the arbitration module description; Section 4 explains the design of the visual interface for both shared and traded control operation modes; Section 5 closes with the conclusions of the work and future research lines.

2 Current systems and related works

The current state of technology in automated vehicles with automatic lateral control that considers the driver as an active agent shows two variants. On the one hand, there are vehicles with ADF supporting the driver at the steering wheel, which is the case of the Blind Spot Active System (Mercedes-Benz 2020) and the Lane Keeping Assistant System. These functionalities activate audio warnings and vibration on the steering wheel when reaching the system limits (possible collision with a vehicle in the adjacent lane or reaching the lane border), and if the driver does not react properly, the system applies a braking force to correct the course. However, although both driver and automation are acting on the steering, the assistance is momentary, and not continuous as the shared control paradigm proposes. On the other hand, there are the SAE L2 vehicles with autopilots with Lane Tracing Systems, which require the driver to put the hands on the wheel at regular intervals to ensure that he/she is attentive and ready to take control when needed (traded control mode). In this case, the automation fully supports the driver by controlling the whole driving task, removing almost all possibility for driver-automation control cooperation. Once the driver exerts even a low force on the steering, the autopilot is deactivated. This shows a gap between the two types of automated vehicles, with space for a more cooperative approach at the control and tactical level. Such an approach is not yet available on the market, but it has been investigated by the research community in an increasing number of works in recent years (refer to Marcano et al. (2020b) for a complete review of shared control techniques in automated driving).

2.1 The haptic steering controller for lane-keeping

Lane-keeping controllers have been designed to allow the vehicle to follow a predefined trajectory using the steering wheel as the control mechanism. This mechanism can be controlled in three different modes according to the control signal: (1) position, (2) speed, and (3) torque. Most works agree that using the torque as the control signal for the steering wheel is the best option to improve the driver-automation cooperation at the control level, as both are using the same input to the system (Nagai et al. 2002). This makes it possible to model the cooperation and conflicts on the steering in order to design human-centered systems. The first algorithms for shared control changed the level of intervention based on the tracking performance: the lower the driver performance (w.r.t. lateral and angular error), the higher the control intervention from the automation (Abbink et al. 2011). These haptic steering controllers were based on classical control methods (e.g., proportional/derivative strategies). Later, more advanced techniques were implemented using an optimization framework with the inclusion of driver models (Nguyen et al. 2018), showing several advantages such as reduced conflict and workload and positive driver acceptance, while at the same time improving the safety of the driving task when the driver does not perform properly. Shared control algorithms have also included driver monitoring systems, which make it possible to obtain the state of the driver and change the level of assistance in proportion to this variable, as different works have proposed, considering driver distraction and drowsiness (Nguyen et al. 2018; Tran et al. 2019). Other works have considered the risk of collision as the main factor for changing the level of assistance to increase safety (Ercan et al. 2017).

2.2 The visual interface

These previous developments have focused on the control design aspects and benefits but have not studied the integration with complementary HMI strategies. Specifically for shared control, only one work has presented a draft (with no implementation on a real device) of a visual interface, with an emphasis on the availability of the side lane to perform a lane change (Benloucif et al. 2016). Nonetheless, the driver interaction with this new generation of ADAS requires HMI strategies that improve the understanding and bidirectional communication between human and vehicle automation.

In this sense, this work presents an initial design and integration between the shared controller and the complementary visual human-machine interface which will help in the driver-automation collaboration, adding a better understanding of the system behavior to the driver, and also helping in the acceptance of such system towards the implementation in commercial vehicles. This is the goal of the work being developed under the PRYSTINE project.

2.3 The PRYSTINE project

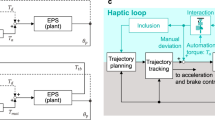

PRYSTINE (Druml et al. 2019) has been realizing Fail-operational Urban Surround perceptION (FUSION), which is based on robust Radar and LiDAR sensor fusion and control functions to enable safe automated driving in urban and rural environments. The ADS developed in the project and described in this paper demonstrates the potential of the FUSION hardware/software architecture and reliable components to handle safety-critical situations that are out of reach for non-reliable state-of-the-art approaches. The reference architecture for the integration of the modules of decision, control, and HMI used in this project is shown in Fig. 1.

In the PRYSTINE project, a novel ADS is proposed to assist the driver in a lane-keeping task with variable authority considering the driver state and the potential risk of collision with external agents. The system increases the level of intervention if it detects that the driver is distracted or if there is a risk of collision. It also alerts the driver to take full control of the vehicle in a fluid, comfortable, and safe manner. The design of the system requires an adaptive decision and control system able to continuously adapt to the context changes, together with a visual HMI that supports the mutual understanding of the driver-automation system. The functionalities of the system are described in the next sections.

3 Shared control system

The shared control system is composed of two specific submodules as shown in Fig. 1. First, the shared controller, which is a lane tracing system designed to cooperate without conflicting with the driver through the steering. Secondly, the arbitration module, which has the responsibility to distribute the control authority between driver and automation. Both combined represent the system that supports the driver with an adaptive level of assistance. The specific functionalities of the system are described below:

1. A controller to assist the driver in the lane following task, with enough freedom to move along the lane, but with enough force to guide the driver towards the lane center considering comfort in control cooperation.

2. Border protection to avoid departing from the lane, using vibration at the steering wheel as a human-machine interface that communicates to the driver the limits of the road or the risk of performing a lane change.

3. A high-level decision system that increases the controller authority over the driver to avoid unsafe actions, especially dangerous lane-change maneuvers.

3.1 Control system

The design of the lateral controller is based on a constrained model predictive control (MPC) for a lane following task. To consider the driver-automation interaction, two parameters are included within the problem formulation: (1) the strength of haptic feedback (SoHF), which is the maximum guidance force felt by the driver and is part of the MPC design, and (2) the level of haptic authority (LoHA), which is related to the stiffness of the system around the optimal command and is implemented as a proportional-derivative (PD) controller. These parameters make it possible to vary the authority of the system and therefore share the control of the vehicle with the driver under different scenarios. The design of the controller requires a model of the system and a representation through differential equations. This is done through the description of three sub-models: (1) vehicle dynamic model, (2) lane-keeping model, and (3) inertial steering model. These three are joined to represent the road-vehicle model (for more details refer to Marcano et al. (2020c)). The optimization problem is divided into three optimization functions:

1. Tracking performance, to follow the reference trajectory, based on the minimization of lateral and angular errors (z_tra = [e_y, e_φ])

2. Driving comfort, minimizing vehicle lateral velocity, steering wheel angular velocity, and yaw rate, respectively (z_com = [v_y, w, r])

3. Control conflicts, to reduce the driver control effort, by minimizing the control torque and the torque rate of change (z_tor = [T, ∆T]).
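As an illustration of how the three objectives above could be combined, the following minimal sketch evaluates a weighted quadratic stage cost over z_tra, z_com, and z_tor. The quadratic form, the function names, and all weight values are assumptions made for illustration; the actual formulation and tuning are given in the cited reference, not here.

```python
from typing import Sequence


def weighted_sq(z: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of squares: sum_i w_i * z_i^2."""
    if len(z) != len(weights):
        raise ValueError("z and weights must have the same length")
    return sum(w * v * v for v, w in zip(z, weights))


def stage_cost(
    z_tra: Sequence[float],  # [e_y, e_phi]: lateral and angular errors
    z_com: Sequence[float],  # [v_y, w, r]: lateral velocity, steering rate, yaw rate
    z_tor: Sequence[float],  # [T, dT]: control torque and its rate of change
    q_tra: Sequence[float] = (1.0, 1.0),       # hypothetical weights
    q_com: Sequence[float] = (0.1, 0.1, 0.1),  # hypothetical weights
    q_tor: Sequence[float] = (0.01, 0.01),     # hypothetical weights
) -> float:
    """Cost of one prediction step as the sum of the three objectives."""
    return (
        weighted_sq(z_tra, q_tra)
        + weighted_sq(z_com, q_com)
        + weighted_sq(z_tor, q_tor)
    )
```

In an MPC setting, a cost of this kind would be summed over the prediction horizon and minimized subject to the model and constraints; raising the torque weights relative to the tracking weights would trade tracking accuracy for less conflict with the driver.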

The lane tracking controller is complemented with a level of haptic authority (LoHA) controller. The former assists the driver in the path following task while leaving freedom to move along the lane. The latter increases the authority of the controller when the risk of the driving task increases (either through driver distraction or a possible collision with a vehicle in the adjacent lane). This controller is designed so that the force increases without losing stability, resulting in a PD controller that depends on the difference between the optimal steering wheel angle (θ_opt) and the steering wheel position commanded by the driver (θ), as shown in Eq. 1, where K_LoHA is the LoHA gain, B_eq is the equivalent steering wheel damping that ensures stability, and w is the angular velocity of the steering wheel.
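Eq. 1 is not reproduced in this text. Based only on the description above, a PD law of the following form would be consistent with the stated symbols. The name of the left-hand side and the sign convention of the damping term are assumptions, so the cited reference should be consulted for the exact expression.

```latex
% Assumed form of the LoHA torque: stiffness around the optimal angle
% plus a damping term opposing the steering wheel angular velocity.
\[
  T_{\mathrm{LoHA}} = K_{\mathrm{LoHA}}\,\bigl(\theta_{\mathrm{opt}} - \theta\bigr) - B_{\mathrm{eq}}\, w
\]
```

Under this reading, a larger K_LoHA makes the steering wheel stiffer around the optimal command, while B_eq keeps the added stiffness from causing oscillations.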

Figure 2 shows the performance of the controller with different values of LoHA. Results indicate good tracking performance, with a lateral error under 20 cm for all gains and an angular error below 0.05 rad. The tests were performed at a speed of 70 km/h. The yaw rate and lateral speed are low in zones with high curvature, and the time to obtain the MPC solution is kept under 1.5 ms, which is a positive indicator for a control loop of 10 ms. Results also show that high lateral acceleration occurs where the curvature changes sharply, which is expected to improve with a real-time planner that produces smoother curvature.

Additionally, this controller is managed by a high-level decision system which indicates the adaptive level of intervention according to the different scenarios. In the present article, the risk during the driving task (either time to lateral collision (TTLC) or the driver state) will be the main factor to change the assistance of the controller in a fluid manner. The decision system is described below.

3.2 Decision system

When both the driver and the automation are part of the dynamic driving task (DDT), an arbitration system is necessary to distribute the authority over the control of the vehicle. This authority can depend on the following variables: (1) driver’s status, which is the driver’s ability at a given time to execute the corresponding DDT and is commonly measured with a vision-based system that detects the cognitive states of the driver (e.g., distraction and/or drowsiness level); (2) collision risk, which is the time to a collision of the vehicle with external agents and whose correct assessment requires information about the environment, including the topology of the road, other agents around, and traffic signs; and (3) tracking, which is the measure of vehicle performance in tracking a pre-defined route and generally depends on the lateral and angular errors of the vehicle.

If the control is shared between the driver and the automation, the arbitration module assigns the appropriate authority to each agent. For this task, a fuzzy logic algorithm is proposed that receives as input the tracking performance, the risk of the driving task, and the steering conflict and has as its output the LoHA of the system. This output feeds the control module explained in section 3.1.

An initial version of the decision system is developed considering the lateral error e_y (low, medium, high); the derivative of the lateral error \( \dot{e_{\mathrm{y}}} \), which indicates whether the vehicle is approaching (↓) or leaving (↑) the lane; the torque effort (T), represented as zero or positive; and the risk indicator (e.g., increased by a possible collision or by an inappropriate driver state). The if-then rules for high driving risk are shown in Table 1. The output of the system is the LoHA, represented with three membership functions: low (L), medium (M), and high (H). It indicates the level of authority given to the controller to override the driver’s action.
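The arbitration idea can be sketched as a small fuzzy inference routine. Table 1 is not reproduced in this text, so the rule base, the membership breakpoints, and the singleton outputs below are all hypothetical, and the torque-effort input is omitted for brevity. "Leaving" is interpreted here as the lateral error magnitude growing, which is also an assumption. This is a minimal sketch of the technique, not the authors' implementation.

```python
def ramp_up(x, lo, hi):
    """Membership rising linearly from 0 at lo to 1 at hi."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def ramp_down(x, lo, hi):
    """Membership falling linearly from 1 at lo to 0 at hi."""
    return 1.0 - ramp_up(x, lo, hi)


def arbitrate_loha(e_y, e_y_dot, risk):
    """Return a LoHA in [0, 1] from lateral error (m), its rate (m/s), and risk in [0, 1]."""
    err = abs(e_y)
    # Fuzzify the lateral error (breakpoints in metres are hypothetical).
    e_low = ramp_down(err, 0.1, 0.3)
    e_med = min(ramp_up(err, 0.1, 0.3), ramp_down(err, 0.3, 0.6))
    e_high = ramp_up(err, 0.3, 0.6)
    # Positive when the error magnitude is growing.
    outward_rate = e_y_dot if e_y >= 0 else -e_y_dot
    leaving = ramp_up(outward_rate, 0.0, 0.2)
    returning = 1.0 - leaving
    risk_high = ramp_up(risk, 0.3, 0.7)
    risk_low = 1.0 - risk_high
    # Hypothetical rule base (AND = min, OR = max).
    fire_l = max(risk_low, min(risk_high, e_low))
    fire_m = min(risk_high, e_med, returning)
    fire_h = min(risk_high, max(min(e_med, leaving), e_high))
    total = fire_l + fire_m + fire_h
    if total == 0.0:
        return 0.0
    # Weighted average of singleton outputs L = 0.0, M = 0.5, H = 1.0.
    return (0.0 * fire_l + 0.5 * fire_m + 1.0 * fire_h) / total
```

With these assumed rules, a centered vehicle or a low-risk situation yields a low LoHA, while a large or growing lateral error under high risk pushes the LoHA towards its maximum. The result could then be scaled to the gain range expected by the controller of Section 3.1.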

3.3 Use case

Christine is bringing her baby Mark to the kindergarten when she notices that Mark is tired and a bit nervous. Worried and in a hurry, she speeds up while continuing to look in the mirror to monitor the baby. After some kilometers, Mark loses the pacifier and starts crying and yelling. Christine gets extremely nervous and tries to calm down the baby with some words, but it does not seem to work, so she then tries to get the pacifier, taking her eyes off the road for some seconds. She cannot concentrate on the street and starts driving erratically. Then, the ADS activates a sequence of incremental support.

The ADS detects this risky behavior and suggests that she keep her eyes on the road. Since she maintains the wrong behavior and the lane deviations are becoming dangerous, the ADS applies a micro-control strategy to prevent them, securing the lane boundaries to avoid a lane departure with a high risk of collision with a vehicle alongside, while assigning partial authority to the system to help Christine in the lane-keeping task.

Even this support does not solve the situation, because Mark is crying, and Christine is still distracted. So, the ADS informs Christine that the automation is available for some kilometers, and the vehicle takes full control to allow her to look for the pacifier and take care of Mark to calm him down. Once in full automation mode, the ADS shows its autonomy of 1.4 km, and when the minimum takeover time is reached (see Fig. 3), it alerts Christine to get ready to take back the control. When she executes the takeover maneuver, the system starts decremental support to smoothly go from full automation to fully manual.

4 Visual human-machine interface

In order to complement the haptic interaction strategy (given by the control module in the steering wheel), a visual HMI displayed on a full-digital instrument cluster has been designed and implemented. The scope of the visual HMI is to provide the driver with the information needed to dynamically understand what the expected behavior is, and to react accordingly. In this sense, it has the aim of increasing driver’s awareness about the mode of the automation and thus fosters the adoption of his/her corresponding role. According to the action that is required, in fact, the driver should adapt the response and the type of interaction, at perceptual, decisional, and action level.

4.1 HMI concept and design

In order to facilitate comprehension at a glance, each driving mode (i.e., full manual mode, shared control mode, and full automated mode) has been assigned a dominant color in the interface. The relation between the color and the state of automation has been tested with users and is described in Section 4.2. In addition, explicit HMI graphic elements (e.g., animations and pictograms) and text have been designed to clarify who is in charge of the DDT. The layout of the HMI reflects the modes of the ADS. The HMI displayed in this section has been designed for the specific use case reported in Section 3.3, to demonstrate the potential of the incremental support of the ADS developed in PRYSTINE.

Figure 3 shows the layout for the HMI in manual driving mode: in this modality, the ADS is engaged only to alert the driver in case his/her state has been detected as not compatible with the DDT. Moreover, the map is used to inform the driver on the projection of the automation state: the vehicle path reported on the map, in fact, is represented with the color corresponding to the expected automation state.

This feature is used to allow the driver to have a projection, based on digital maps that take into account the ODDs, of the expected automation behavior in the near future. The representation uses a familiar metaphor, currently used by common GPS navigators (including Google Maps), to show areas with high traffic intensity.

In the example reported in Fig. 4, the vehicle, in manual driving mode, informs the driver that cooperative driving is expected for a while, followed by a transition to automated driving mode.

Figure 5 shows the layout of the HMI when cooperative driving is activated: when a slight actuation on the steering wheel (i.e., the combination of steering torque and stiffness provided by the control system described above) is applied to avoid lane deviation (so both the driver and automation perform the steering task at the same time), the HMI radically changes its layout to:

- Inform the driver about the current distribution of the vehicle control task, through a textual label (A area) and a pictogram (B).

- Inform the driver about the level of authority through a dynamic and familiar representation, i.e., a progress slider (C), that shows the intensity of the intervention provided by the control system.

- Show the driver basic information on driving dynamics (D and E), since part of the driving task is still in his/her charge.

- Increase the awareness of the driver about the type of support given by the automation, and the reason that led to this intervention (F). This information is provided through a 3D-animated representation, stylized so as not to visually overload the driver. This depiction reflects the overall concept of the visual HMI, aimed at adding a “why layer” (Miller et al. 2016) that provides the user with explanations instead of a warning when non-safety-critical situations occur, making the mental model of the automation transparent and explainable in order to increase trust and foster the acceptance of automated vehicles. In the use case reported in this paper and shown in Fig. 5, the animation is used to inform the driver that he/she is driving erratically and that counter-steering is being applied for safety reasons.

Figure 6 shows a first draft of the integration between the control system and the visual interface. On the one hand, the simulation plots show the increase of the LoHA as the risk of the driving task increases (low TTLC). This produces a stronger automation intervention at the control level. The plots also show the usefulness of the LoHA, which increases the intervention beyond that of the baseline MPC controller to ensure safety while preserving the stability of the driving. On the other hand, the HMI responds through a visual representation that communicates to the driver the intention of the automation and the reason for the strong intervention. Moreover, the main interface color changes, so that the driver can easily notice the change of state even in peripheral vision.

Figures 7 and 8 show the layout of the HMI when the full automated mode is activated. It has a twofold objective: (1) to clarify that the ADS is fully in charge of the DDT when no imminent takeover is expected (Fig. 7) and (2) to foster the adoption of an active role of the driver when the takeover is imminent, i.e., 30 s (Fig. 8).

The HMI layout for full automated mode uses a “long-term stylized map”, showing a preview (and a chronicle) of the overall trip. This representation requires a lower amount of information than a traditional map, providing the user only with the basic travel information since at that moment, he/she is not actively engaged in the vehicle control task. Previous studies performed on similar solutions have shown encouraging results in terms of comprehension, mental demand, and acceptability (Castellano et al. 2018). As in the normal map used in manual mode, this map reports the future projection of the expected state of the automation.

When a request of taking over becomes imminent, the stylized map zooms (complemented by acoustic feedback) to bring the driver back into the control loop and warn him/her that an intervention will be needed in a reduced time window. A preview of the explanation of the reason that led to this request is given through pictograms: in the example shown in Fig. 8, the reason is the detection of high traffic flows. As for the shared control mode, the explanation is provided to increase the transparency of the decision system, in order to facilitate the comprehension of the messages and to create human-machine cooperation, based on trust.
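The switch between the two full-automation layouts described above can be sketched as a simple threshold rule. The 30 s value comes from the text; the enum, the function name, the inclusive comparison, and the use of `None` for "no takeover planned" are assumptions made for illustration.

```python
from enum import Enum
from typing import Optional


class AutomatedLayout(Enum):
    LONG_TERM_MAP = "long-term stylized map"                 # layout of Fig. 7
    TAKEOVER_PREVIEW = "zoomed map with takeover preview"    # layout of Fig. 8


# Time before a takeover at which it is treated as imminent (30 s in the text).
IMMINENT_TAKEOVER_S = 30.0


def automated_layout(seconds_to_takeover: Optional[float]) -> AutomatedLayout:
    """Pick the full-automation layout from the time left until a takeover.

    None means that no takeover is currently planned (hypothetical convention).
    """
    if seconds_to_takeover is None:
        return AutomatedLayout.LONG_TERM_MAP
    if seconds_to_takeover < 0:
        raise ValueError("seconds_to_takeover must be non-negative")
    if seconds_to_takeover <= IMMINENT_TAKEOVER_S:
        return AutomatedLayout.TAKEOVER_PREVIEW
    return AutomatedLayout.LONG_TERM_MAP
```

A real implementation would also trigger the acoustic feedback and select the pictogram explaining the reason for the request, which are left out of this sketch.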

The negotiation-based interaction approach just described, however, needs to be complemented with a more traditional warning-based approach. Current solutions, in fact, must deal with human factors such as, among others, unexpected traffic situations, and driver’s emotional and cognitive states. Figure 9 shows an imminent take-over request, aimed at suggesting the urgency of driver’s intervention.

Figure 10 shows the case in which the driver does not intervene (e.g., because he/she is distracted) and the car shows the maneuver that it is about to perform. Even if the driver is expected to be “not fit to drive” (since he/she did not intervene after the request), the maneuver is displayed so that the information is available in case the driver returns to the loop and wants to intervene.

4.2 HMI evaluation: method and results

In order to validate the effectiveness and efficiency of the proposed interaction strategy, an iterative validation approach has been designed. This validation process consists of an incremental testing strategy, aimed at evaluating the HMI in more realistic contexts. The first validation step concerned the assessment of crucial interaction elements, such as the graphical pictograms, the color, and the tone of the proposed communication. The second step will include the evaluation of the HMI component in simulated driving scenarios, while the final step will involve the evaluation of the overall arbitration, control, and interaction system proposed in this paper into realistic driving environments.

In this paper, only the results of the first step are reported. The test conducted to measure the performance of the visual HMI followed the main principles of human-centered design, i.e., involving the users from the very early phases of the system design. Twenty users (11 males and 9 females) were involved in the testing. They were interviewed to express their preferences on different design options and to give feedback to guide the re-design. All the people involved in the testing were professional interaction designers (i.e., graphical and technical designers, ergonomists, human factor specialists, and HMI developers).

The users were instructed about the project concept and the main use cases. The aim of the test was to match a concept with its graphical representation. To test the icons, different pictograms representing the same concept were displayed to the users: four pictograms for each concept (i.e., for each automation mode). Pictogram design was based on a benchmark of graphical elements currently used in human-automation interaction research (see for example Eriksson and Stanton (2017) and Bazilinskyy et al. (2018)). The metrics used to select the best icon were (i) ease of understanding, (ii) consistency between the concept and the representation, and (iii) esthetic judgment. Users were asked to express their agreement with these parameters on a 7-point Likert scale. The selected icons, associated with the respective mode of automation, are reported in Fig. 11. Among them, the best rated was the “Take Over Request” icon (ease of understanding = +2.10, consistency = +2.25, esthetics = +1.80).

Moreover, the users were asked to associate a color with each driving state. The meaning of each driving mode was explained to the users. They were then asked to choose, from a list, the color that best fits the implications (e.g., in terms of the behavior expected from the user) of each driving mode. The colors associated with each driving mode are reported in Fig. 11. As shown in this figure, two states have been associated with the color red (i.e., the take-over request and the emergency mode). The explanation is that both states were considered critical by the users and were therefore associated with a color expressing criticality.

The communication tone was tested by providing the users with different textual expressions intended to inform the driver about the crucial actions. As an example, in order to notify the driver that the manual driving mode is engaged, the following textual options were proposed in the “A” area reported in Fig. 5:

A. “Manual” (formal tone)

B. “Manual mode” (technical tone)

C. “You drive” (informal tone)

This process was replicated by creating three messages for each automation mode (5 modes) and for each of the other relevant pieces of textual information, i.e., the reason for driver intervention (e.g., the limitation that occurred in automation; three messages) and the action required of the user (three messages). A total of 220 responses (20 users × 11 messages) were collected.

As a result, the informal communication tone achieved the best results (57.73% of the total message samples). The formal tone was considered the most effective in 37.27% of the sample, while the technical tone was selected in 5% of the sample. The preference for the informal tone was particularly marked for the messages related to the action suggested to the driver (85%). In more detail, the users found the informal tone more assistive and more trustworthy than the technical one. Comments left by the users also indicated that informal messages were considered the best option to inform the driver about the reason that led to an indication, especially for non-safety-critical events. This finding, consistent with previous studies (Castellano et al. 2020), confirmed that an approach based on transparent human-system communication was the most appropriate for the design of trustworthy technology.
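
As a quick consistency check on the figures above, the reported percentages can be converted back into response counts. The counts themselves are not given in the text; they are derived here from the stated total of 220 responses, and the variable names are illustrative.

```python
# Total responses stated in the text: 20 users x 11 messages.
TOTAL_RESPONSES = 20 * 11

# Reported share of responses preferring each communication tone (percent).
reported_share = {"informal": 57.73, "formal": 37.27, "technical": 5.0}

# Implied number of responses per tone (derived, not reported in the text).
counts = {
    tone: round(TOTAL_RESPONSES * pct / 100)
    for tone, pct in reported_share.items()
}

for tone, n in counts.items():
    # Recompute each percentage from the integer count to confirm the rounding.
    print(f"{tone}: {n} responses ({100 * n / TOTAL_RESPONSES:.2f}%)")
```

The implied counts add up to the stated total, so the three percentages are mutually consistent.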

5 Conclusions and future work

The HMI strategy has been designed to reflect the incremental levels of interaction of the ADS and smoothly and continuously support the driver according to his/her state and current role in the DDT. The performance of the ADS and its HMI will be tested in a driving simulator to assess its impact on safety, acceptability, and comfort-related parameters. The implementation of this system in a real-time platform will be considered as well, with the considerations shown below.

System integration

The reduced size, weight, and power (SWaP) constraints of today’s ECUs clash with the increasing demand for computational power required by graphics frameworks. To reconcile the two, engineers typically employ computing platforms featuring a multi-core host and embedded accelerators, such as GPUs (NVIDIA 2020). These computers are typically 1–2 orders of magnitude less powerful than their desktop and server counterparts, respectively; as a consequence, software and system engineers must heavily optimize their code to exploit the underlying hardware efficiently. This is currently an active topic in all research fields involving AD systems.

5.1 Limitations

The work proposed in this article has some limitations. For example, given the current validation stage, it was not possible to test the system in a realistic driving context. Moreover, since the HMI elements were tested in Italy only, it was not possible to take cross-cultural issues into account. Because demographic factors have a considerable impact on this type of study, the results reported in this paper may be affected by biases related to the user population involved in the research. An interesting next step will be to compare the results of this study with results collected from users in other countries.

References

Abbink DA, Mulder M, Boer ER (2011) Haptic shared control: smoothly shifting control authority? Cogn Tech Work 14:19–28. https://doi.org/10.1007/s10111-011-0192-5

Bazilinskyy P, Petermeijer SM, Petrovych V, Dodou D, Winter JC (2018) Take-over requests in highly automated driving: a crowdsourcing survey on auditory, vibrotactile, and visual displays. Transport Res F: Traffic Psychol Behav 56:82–98. https://doi.org/10.1016/j.trf.2018.04.001

Benloucif MA, Popieul J-C, Sentouh C (2016) Architecture for multi-level cooperation and dynamic authority management in an automated driving system - a case study on lane change cooperation. IFAC-PapersOnLine 49:615–620. https://doi.org/10.1016/j.ifacol.2016.10.631

Brown B, Laurier E (2017) The trouble with autopilots: assisted and autonomous driving on the social road. Proc 2017 CHI Conf Human Factors Comp Syst ACM. https://doi.org/10.1145/3025453.3025462

Castellano A, Fossanetti M, Landini E, Tango F, Montanari R (2020) Automation as driver companion: findings of AutoMate project. In: Advances in Intelligent Systems and Computing. Springer International Publishing, pp 1048–1054. https://doi.org/10.1007/978-3-030-39512-4_159

Castellano A, Fruttaldo S, Landini E, Montanari R, Luedtke A (2018) Is your request just this? New automation paradigm to reduce the requests of transition without increasing the effort of the driver. 25th ITS World Congress, Copenhagen, pp 17–21

Druml N, Beekelaar R, Jany-Luig J, Corredoira MM, Burgio P, Ballato C, ..., Villagra J (2019) PRYSTINE - technical progress after year 1. 2019 22nd Euromicro Conference on Digital System Design (DSD). IEEE. https://doi.org/10.1109/dsd.2019.00063

Ercan Z, Carvalho A, Tseng HE, Gökaşan M, Borrelli F (2017) A predictive control framework for torque-based steering assistance to improve safety in highway driving. Veh Syst Dyn 56:810–831. https://doi.org/10.1080/00423114.2017.1337915

Eriksson A, Stanton NA (2017) Takeover time in highly automated vehicles: noncritical transitions to and from manual control. Hum Factors: The Journal of the Human Factors and Ergonomics Society 59:689–705. https://doi.org/10.1177/0018720816685832

Ju W (2015) The design of implicit interactions. Synth Lect Hum-Centered Inform 8:1–93. https://doi.org/10.2200/s00619ed1v01y201412hci028

Marcano M, Diaz S, Matute J, Pérez J, Irigoyen E (2020a) A cascade steering shared controller with dual-level dynamic authority. IFAC-World Congress - PapersOnLine - Preprint

Marcano M, Diaz S, Perez J, Irigoyen E (2020b) A review of shared control for automated vehicles: theory and applications. IEEE Trans Hum-Mach Syst 50:475–491. https://doi.org/10.1109/thms.2020.3017748

Marcano M, Diaz S, Pérez J, Castellano A, Landini E, Tango F, Burgio P (2020c) Human-automation interaction through shared and traded control applications. In: Advances in Intelligent Systems and Computing. Springer International Publishing, pp 653–659. https://doi.org/10.1007/978-3-030-39512-4_101

Marchant GE, Lindor RA (2012) The coming collision between autonomous vehicles and the liability system. Santa Clara Law Rev 52:1321–1340

Mercedes-Benz (2020) Mercedes-Benz C-class saloon: Active blind spot assist. https://www.la.mercedes-benz.com/en/passengercars/mercedes-benz-cars/models/c-class/c-class-saloon/explore/intelligent-technologies/blind-spot-assistance.html. Accessed 24 Mar 2020

Miller D, Johns M, Mok B, Gowda N, Sirkin D, Lee K, Ju W (2016) Behavioral measurement of trust in automation. Proc Human Factors Ergon Soc Ann Meet 60:1849–1853. https://doi.org/10.1177/1541931213601422

Nagai M, Mouri H, Raksincharoensak P (2002) Vehicle lane-tracking control with steering torque input. Veh Syst Dyn 37:267–278. https://doi.org/10.1080/00423114.2002.11666238

Nguyen A-T, Sentouh C, Popieul J-C (2018) Sensor reduction for driver-automation shared steering control via an adaptive authority allocation strategy. IEEE/ASME Trans Mechatron 23:5–16. https://doi.org/10.1109/tmech.2017.2698216

NVIDIA (2020) Jetson AGX Xavier Module. https://developer.nvidia.com/embedded/jetson-agx-xavier. Accessed 24 Mar 2020

SAE-International (2018) Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles - SAE J3016_201806. SAE Int. https://doi.org/10.4271/j3016_201806

Sheridan T, Verplank W (1978) Human and computer control of undersea teleoperators. Man-Machine Systems Laboratory, Department of Mechanical Engineering, Cambridge

Tran D, Du J, Sheng W, Osipychev D, Sun Y, Bai H (2019) A human-vehicle collaborative driving framework for driver assistance. IEEE Trans Intell Transp Syst 20:3470–3485. https://doi.org/10.1109/tits.2018.2878027

Funding

This work was supported by the Electronic Components and Systems for European Leadership Joint Undertaking (ECSEL), which funded the PRYSTINE project under Grant 783190.

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Marcano, M., Castellano, A., Díaz, S. et al. Shared and traded control for human-automation interaction: a haptic steering controller and a visual interface. Hum.-Intell. Syst. Integr. 3, 25–35 (2021). https://doi.org/10.1007/s42454-021-00030-6

DOI: https://doi.org/10.1007/s42454-021-00030-6