Abstract

Effective and simple local texture feature descriptors play a significant role in many image classification and retrieval tasks. However, conventional feature descriptors fail to capture salient image features such as local inherent structure, orientation and edge information. Typical local binary pattern (LBP)-like feature descriptors derive information from the gray level difference within each locality, and consequently their values are highly susceptible to noise. To overcome some deficiencies of traditional methods, this work introduces a simple, novel, yet robust texture feature descriptor called local mean differential excitation pattern (LMDeP) for efficient content based image retrieval. The main strategy of LMDeP is to compute differential excitation using the mean of the neighbor points along each angular and radial direction. This enables LMDeP to extract robust features by suppressing the effect of noisy neighbors in each local patch. A comparison of LMDeP with LBP variants on the Corel-1 K and Corel-5 K datasets establishes the superiority of the proposed method over its counterparts.


1 Introduction

Diversity in image acquisition mechanisms has led to rapid growth of image collections in distinct domains such as multimedia repositories, document registries, medicine and law enforcement. This has created a need for automated, on-demand information retrieval systems. Content based image retrieval (CBIR) systems emerged as a promising technology to meet the need for automated indexing and retrieval of images. The fundamental objective of a CBIR system is to retrieve from a database the images that are related to a visual query, based on their content. Content is characterized by a diverse set of parameters, for example texture, color, shape, position and region of interest, and evaluating these parameters is a difficult task. Further, some image types such as natural, satellite and medical images have their own distinct features. Researchers have proposed many feature descriptors based on low level features such as color, shape and texture. An adaptable image feature descriptor is greatly needed, as the available literature indicates that no single strategy handles such a diverse set of image types.

Real time applications such as browsing and retrieving similar images on demand require a potent CBIR system, whose efficiency depends on the methodology adopted for feature description and representation. A feature is a significant structure in an image. Features can range from a single pixel to a group of pixels forming an edge or contour, and may be as large as an object in the image. Identifying these significant structures in the image is called feature detection. A feature descriptor represents all or a subset of the detected feature points. Thus, a feature descriptor must be resilient to noise, illumination changes and a variety of deformations. Features may vary from one application domain to another, which raises the question of suitability. Consequently, domain specific feature detectors and descriptors currently attract the attention of researchers. A number of feature descriptors have been suggested in the last decade. Broad surveys of CBIR techniques are reported in [1, 2].

The rest of the paper is organized as follows. Section 2 discusses related work on content based image retrieval and the main contributions of the paper. The proposed feature extraction methodology is described in Sect. 3, and the similarity metric, experimental results and discussion are presented in Sect. 4. Section 5 concludes the paper.

2 Related works

The search for simple and powerful feature descriptors is an active research field in image processing. This section provides a brief recap of advances in feature descriptors. Feature description based on low level features such as color, texture and shape has attracted researchers because these features are easy to extract. A number of feature descriptors have recently been suggested to extract low level features for image retrieval [3,4,5,6,7,8,9]. Among low level features, texture features are prominent and effective for image retrieval. Texture description is a branch of texture analysis that is relevant to many industrial applications such as face recognition [10], textile inspection [11], object tracking [12], and identification of ceramic tiles and marble in the granite industry [13].

The classic local texture pattern of the past decade is the local binary pattern (LBP) [14]. LBP relates a center pixel to its P neighbors located on a circle of radius R by encoding the sign of the gray level difference between them into a P-bit binary string (8 bits for P = 8). As a consequence of circular sampling, LBP lacks robustness to noise and is unable to capture anisotropic structures. To overcome some of the deficiencies of LBP, several researchers have extended the LBP operator, as described in [3, 15,16,17]. Tan and Triggs [18] proposed the local ternary pattern (LTP), which uses ternary encoding to reduce the pattern's susceptibility to noise.
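As a minimal sketch of the basic operator for R = 1 and P = 8, the following Python function encodes a 3 × 3 patch. The neighbor ordering, the bit weights and the use of square-grid neighbors instead of interpolated circular samples are simplifying assumptions of this sketch, not details taken from [14]; the function name `lbp_code` is hypothetical.

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of the center pixel of a 3x3 patch.

    Assumptions of this sketch: neighbors are taken on the square grid,
    ordered from the east neighbor counter-clockwise, and bit j has weight 2**j.
    """
    center = patch[1][1]
    # (row, col) offsets of the eight neighbors, east first, counter-clockwise.
    offsets = [(1, 2), (0, 2), (0, 1), (0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
    code = 0
    for bit, (row, col) in enumerate(offsets):
        # Only the sign of the gray level difference is kept.
        if patch[row][col] >= center:
            code |= 1 << bit
    return code


patch = [
    [148, 142, 145],
    [139, 141, 150],
    [120, 141, 160],
]
print(lbp_code(patch))  # 207 for this patch
```

Because only the sign of each difference is kept, a small perturbation of a neighbor whose value is close to the center can flip a bit and change the code, which is the noise sensitivity discussed above.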

These local patterns mainly extract the relation between the center pixel and its neighbors over an n × n local patch, where n = 3 in general, and overlook the mutual relation among neighbors. Later, [5, 19] noted the need for both sign and magnitude patterns to enrich discriminative power. Verma et al. [20] demonstrated the significance of including the mutual relationship of adjacent neighbors, considering two adjacent pixels at a time, when describing their relationship with the center pixel. However, [5, 20] are based on the gray level difference and disregard the original stimulus intensity. Jabid et al. [21] devised a robust local pattern called local directional pattern (LDP), which uses edge responses in different directions to encode local textures. LDP uses Kirsch masks to obtain the edge responses and was shown to be less prone to noise than the traditional LBP. Later, Chakraborti et al. [22] suggested a rotation invariant descriptor based on a nonlinear fusion of LBP and LDP, named local optimal oriented pattern (LOOP). Chen et al. [23] introduced a robust descriptor based on differential excitation and gradient orientation, called the Weber law descriptor (WLD), for texture image retrieval.

This work proposes an original local feature descriptor called local mean differential excitation pattern (LMDeP) for image retrieval. The salient feature of LMDeP is that it uses differential excitation instead of a mere gray level difference to quantify the relationship between a center pixel and its neighbors. Further, LMDeP combines the angular mean and the radial mean of neighbors in the computation of differential excitation, which contributes to high discriminative power and high robustness to noise.

3 Proposed work

In this paper, a texture feature descriptor is proposed that combines two local patterns inspired by LBP. The first is the angular mean differential excitation pattern (AMDeP), which uses the average of intensity values over different angular directions; the second is the radial mean differential excitation pattern (RMDeP), which uses the mean of radial points within a specified radius range. AMDeP and RMDeP are combined to form a single feature descriptor called local mean differential excitation pattern (LMDeP). LMDeP is able to extract strong and distinctive features from both noisy and conventional texture images. LMDeP benefits from the following:

- Fundamentally, differential excitation [23] is a ratio of two terms: the gray level difference between the center pixel and its neighbors, and the original intensity of the center pixel itself. Using differential excitation to extract local structure curtails multiplicative noise associated with neighbor pixels.

- Further, a proper choice of the distance between radial and angular points enables LMDeP to reduce noise effects on adjacent pixels.

- Some multi-scale descriptors based on LBP produce a large number of features by concatenating the feature vectors of distinct neighborhoods, which increases the length of the feature vector. In contrast, LMDeP obtains complementary information with a considerably smaller feature vector.
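The first point can be made concrete with a short calculation. Assume, for illustration only, that a multiplicative disturbance scales the center pixel \(x_{c}\) and a neighbor \(x_{i}\) by the same factor \(k > 0\) (the symbols \(x_{c}\), \(x_{i}\) and \(k\) are introduced here and are not taken from the original equations). The ratio on which differential excitation is based is then unchanged:

\[
\frac{k x_{i} - k x_{c}}{k x_{c}} = \frac{x_{i} - x_{c}}{x_{c}},
\]

whereas the plain gray level difference \(k x_{i} - k x_{c} = k\,(x_{i} - x_{c})\) scales with \(k\). The cancellation is exact only under this shared-factor assumption; when the factor differs from pixel to pixel, averaging the neighbors before forming the ratio can be expected to reduce, but not remove, its effect.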

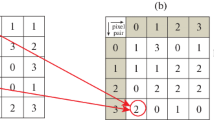

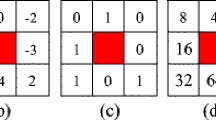

LMDeP is computed in two stages. In the first stage, the angular mean of the neighbor pixels within a specified angular distance \((\Delta \varphi )\) is obtained, and the differential excitation \((\delta )\) of the center pixel is then computed in eight directions. For example, the \(\delta\) value for direction 1, i.e. \(0^{\circ}\) with \(\Delta \varphi = \pi /4\), designated \(\delta_{1}\), is calculated as shown in Fig. 1a.

For example, as shown in Fig. 1c, the center pixel value is Z = 141 (marked red in Fig. 1c) and the angular neighbors a, b and c in the zero degree direction are 148, 142 and 145, respectively. The differential excitation value in the zero degree direction according to Eq. (1) is therefore − 1.62. The values \(\delta_{2} ,\delta_{3} , \ldots ,\delta_{8}\) for the remaining seven directions are obtained similarly. The angular mean differential excitation pattern (AMDeP) is then obtained by binary encoding the \(\delta\) values with a given threshold and summing them to a decimal value using predefined weights. The AMDeP for R = 1, P = 8 is computed using Eq. (2).
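The value − 1.62 in the example can be reproduced numerically. The sketch below assumes that the differential excitation is the arctangent, expressed in degrees, of the difference between the center pixel and the mean of the neighbors, divided by the center pixel. This form is inferred from the numbers in the example and from the definition of differential excitation in [23]; it is an assumption rather than a restatement of Eq. (1), and the function name is hypothetical.

```python
import math


def mean_differential_excitation(center, neighbors):
    """Differential excitation of `center` against the mean of `neighbors`.

    Assumed form (inferred from the worked example, not quoted from Eq. (1)):
        delta = atan((center - mean(neighbors)) / center), in degrees.
    A center value of 0 would need special handling, which this sketch omits.
    """
    mean = sum(neighbors) / len(neighbors)
    return math.degrees(math.atan((center - mean) / center))


# Worked example from the text: Z = 141, neighbors a, b, c = 148, 142, 145.
delta_1 = mean_differential_excitation(141, [148, 142, 145])
print(f"{delta_1:.2f}")  # approximately -1.62
```

Under this assumed form the mean of the three neighbors is 145, the ratio is (141 − 145)/141 ≈ − 0.0284, and its arctangent is about − 1.62°, which agrees with the value quoted above.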


An example computation of the angular pattern value is shown in Fig. 1c. AMDeP values range from 0 to 255, giving 256 possible values. Each pixel of the image is treated in turn as the center pixel to produce an AMDeP-mapped image, and the histogram of this map is designated PTN1. In the second stage, radial mean values are obtained over a specified range of R, and the differential excitation values are computed in eight directions, denoted \(\delta_{r1} ,\delta_{r2} , \ldots ,\delta_{r8}\). The differential excitation for the center pixel Z in radial direction 1, as shown in Fig. 1b, is obtained using Eq. (4).

The radial mean differential excitation pattern (RMDeP) is then obtained by thresholding and encoding the differential excitation values as given in Eq. (5), where \(\delta_{rj}\) represents the differential excitation values in the different directions, as shown in Fig. 1c (differential matrix). The radial pattern value is obtained by binary encoding the \(\delta_{rj}\) values and summing them to a decimal value using predefined weights, as shown in Fig. 1c. In the example of Fig. 1c, the radial mean matrix is computed using three radial neighbors.

Here, \(S\left( {\delta_{rj} } \right)\) is the threshold function. The histogram of the RMDeP-mapped image is designated PTN2. PTN1 and PTN2 are then concatenated to form the LMDeP feature vector. Hence, the feature vector length is \(1 \times 512\) for a given image.
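The encoding and concatenation steps described above can be sketched as follows. The sketch assumes a threshold function that returns 1 when a differential excitation value is at or above a threshold of 0, and binary weights \(2^{j}\) for direction j; both are illustrative assumptions, since the threshold and weights are not restated here. All function names are hypothetical.

```python
def encode_pattern(deltas, threshold=0.0):
    """Binary-encode eight differential excitation values into 0..255.

    Assumptions of this sketch: S(delta) = 1 if delta >= threshold else 0,
    and direction j (0-based) carries the weight 2**j.
    """
    if len(deltas) != 8:
        raise ValueError("expected eight directional values")
    return sum(1 << j for j, delta in enumerate(deltas) if delta >= threshold)


def histogram(codes, bins=256):
    """Count how often each pattern value occurs in a pattern-mapped image."""
    counts = [0] * bins
    for code in codes:
        counts[code] += 1
    return counts


def lmdep_feature(amdep_codes, rmdep_codes):
    """Concatenate PTN1 (AMDeP histogram) and PTN2 (RMDeP histogram)."""
    return histogram(amdep_codes) + histogram(rmdep_codes)


# Toy usage: pattern values for a handful of pixels instead of a full image.
amdep_codes = [encode_pattern([-1.62, 0.4, 0.0, -0.2, 1.1, -3.0, 2.2, 0.7])]
rmdep_codes = [encode_pattern([0.5] * 8), encode_pattern([-0.5] * 8)]
feature = lmdep_feature(amdep_codes, rmdep_codes)
print(len(feature))  # 512
```

Each histogram has 256 bins regardless of image size, which is why the concatenated descriptor has a fixed length of 512.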

4 Experimental results

To assess the performance of LMDeP, established datasets with heterogeneous texture content are used, namely the Corel-1 K [22, 24] and Corel-5 K [22, 24] datasets. To demonstrate the noise robustness of LMDeP, the dataset images are contaminated with Gaussian noise at different signal to noise ratios (SNR). The results show that LMDeP outperforms some prominent methods such as local tetra patterns (LTrP), LDP and LOOP. Figure 2 shows example textures with different SNR values.
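One way to contaminate an image with Gaussian noise at a prescribed SNR is sketched below. It assumes that SNR is defined in decibels as the ratio of mean signal power to noise variance and that the noise is zero-mean and additive; the noise-generation procedure is not specified in the text, so these choices and the function names are assumptions.

```python
import math
import random


def add_gaussian_noise(pixels, snr_db, rng=None):
    """Return a noisy copy of `pixels` (a flat list of intensities).

    Assumed definition: SNR_dB = 10 * log10(signal_power / noise_variance),
    with signal_power the mean squared pixel value. No clipping to the valid
    intensity range is applied in this sketch.
    """
    rng = rng if rng is not None else random.Random()
    signal_power = sum(p * p for p in pixels) / len(pixels)
    noise_variance = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_variance)
    return [p + rng.gauss(0.0, sigma) for p in pixels]


def measured_snr_db(clean, noisy):
    """Empirical SNR in dB between a clean signal and its noisy version."""
    signal_power = sum(c * c for c in clean) / len(clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(signal_power / noise_power)


clean = [float(100 + (i % 50)) for i in range(20000)]
noisy = add_gaussian_noise(clean, snr_db=20, rng=random.Random(0))
print(round(measured_snr_db(clean, noisy), 1))
```

The empirical SNR of the generated sample should lie close to the requested 20 dB; a lower `snr_db` produces a larger noise standard deviation.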

4.1 Similarity metric

The d1 distance metric is used to measure the similarity between the query image and the dataset images. The d1 distance function [5] for a feature vector of length L is defined in Eq. (7).

Here, \(f_{Dsi}\) denotes the feature vector of the ith image in the dataset and \(f_{q}\) denotes the query feature vector.
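A minimal sketch of the similarity measurement follows. It assumes the d1 form commonly used with the descriptor of [5], in which each absolute bin difference is normalized by one plus the sum of the two bin values; this form is stated here as an assumption rather than a transcription of Eq. (7). The feature vectors are assumed to be non-negative histograms, and the function names are hypothetical.

```python
def d1_distance(query, candidate):
    """d1 distance between two non-negative feature vectors of equal length.

    Assumed form: sum over bins of |c - q| / (1 + c + q).
    """
    if len(query) != len(candidate):
        raise ValueError("feature vectors must have the same length")
    return sum(abs(c - q) / (1 + c + q) for q, c in zip(query, candidate))


def rank_by_distance(query, dataset_features):
    """Return dataset indices ordered from most to least similar to the query."""
    distances = [d1_distance(query, features) for features in dataset_features]
    return sorted(range(len(dataset_features)), key=lambda i: distances[i])


query = [4, 0, 2]
dataset_features = [[0, 5, 1], [4, 0, 2], [3, 1, 2]]
print(rank_by_distance(query, dataset_features))  # [1, 2, 0]
```

The normalization in the denominator limits the influence of heavily populated histogram bins, so that a few dominant patterns do not decide the ranking alone.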

4.2 Performance metric

The performance of LMDeP is compared with that of other methods in terms of average precision rate (APR) and average recall rate (ARR) [5]. The precision and recall values are computed using Eqs. (8) and (9) for a dataset with M images and the top m matches.

The average precision for the jth category, \(Ap_{m} (j)\), is computed over the M1 images in that category.

The APR and ARR for a given dataset are then computed by averaging over categories, where M2 denotes the number of categories in the dataset, \(Ap_{m}\) the average precision per category and \(Ar_{m}\) the average recall per category.
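The evaluation can be sketched as below, using the standard definitions: precision is the fraction of the top m retrieved images that belong to the query's category, and recall is the fraction of the category that appears among those m images. Per-query values are averaged within each category and then across categories, expressed in percent. Whether the query image itself counts as a retrieved match is not stated in the text; this sketch simply scores whatever labels it is given. Function names are hypothetical.

```python
from collections import defaultdict


def precision_recall(retrieved_labels, query_label, category_size):
    """Precision and recall of one query over its top-m retrieved labels."""
    relevant = sum(1 for label in retrieved_labels if label == query_label)
    return relevant / len(retrieved_labels), relevant / category_size


def apr_arr(results):
    """APR and ARR in percent from per-query (category, precision, recall)."""
    by_category = defaultdict(list)
    for category, precision, recall in results:
        by_category[category].append((precision, recall))
    # Average within each category first, then across categories.
    cat_precision = [sum(p for p, _ in v) / len(v) for v in by_category.values()]
    cat_recall = [sum(r for _, r in v) / len(v) for v in by_category.values()]
    apr = 100 * sum(cat_precision) / len(cat_precision)
    arr = 100 * sum(cat_recall) / len(cat_recall)
    return apr, arr


p, r = precision_recall(["a", "a", "b", "a"], "a", category_size=100)
apr, arr = apr_arr([("a", 1.0, 0.10), ("a", 0.5, 0.05), ("b", 0.0, 0.0)])
print(p, r, apr, arr)
```

When all categories contain the same number of images, as in the Corel datasets used here, averaging per category and then across categories gives the same result as averaging over all queries directly.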

4.3 Experiment #1

Experiment #1 is conducted on the Corel-1 K dataset, which is composed of 1000 images in ten classes of 100 images each. To obtain the results, each image in the database is used as a query image. For each query, the system retrieves the images in the database with the shortest d1 distance, computed using Eq. (7). Some of the retrieved images are relevant to the query image and others are not. Images that match the user's interest are called relevant images, and all images returned for a given query are called retrieved images. Retrieval performance is measured in terms of precision, which is the ratio of relevant retrieved images to retrieved images. Recall and precision values are computed using Eqs. (8)–(13). For each query image, the retrieved images are collected in groups of 10, 20, 30, …, 100. The performance of LMDeP compared with other methods on noise-free images is depicted in Fig. 3 in terms of APR and ARR versus the number of images retrieved. The results show that the proposed method outperforms the existing methods. Tables 1 and 2 present results on the noise-contaminated datasets with different SNR values.

The following conclusions are drawn from the experimental results:

- The APR improves from 60.05, 65.04 and 68% for LDP, LTrP and LOOP, respectively, to 68.2% for LMDeP on the Corel-1 K dataset without added noise. As shown in Fig. 3a, LOOP initially performs comparably to LMDeP, but as the number of retrieved images increases LMDeP surpasses LOOP and the remaining methods.

- Figure 3b and Table 1 indicate that LMDeP surpasses the remaining methods in terms of ARR.

- LMDeP also shows a noteworthy improvement over the other methods in the presence of noise with varying SNR values on the Corel-1 K dataset, as shown in Table 1 and Fig. 4.

4.4 Experiment #2

The Corel-5 K image database [24] comprises five thousand images in fifty classes of one hundred images each. The performance of the proposed method and of other existing methods is reported in Table 2 and Figs. 5 and 6.

The following conclusions are drawn from the experimental results:

- The APR of LMDeP (46.15%) improves significantly compared to LDP (37.15%), LOOP (42.9%) and LTrP (44.4%) on the Corel-5 K dataset with m = 10, as shown in Fig. 5a.

- The retrieval performance of LMDeP surpasses the remaining methods with and without noise in the texture images, as shown in Figs. 5b and 6 and Table 2.

The results in Figs. 5 and 6 show that LMDeP surpasses the other methods in terms of APR and ARR on the Corel-5 K dataset with or without noise.

5 Conclusion

In this work, a novel texture pattern that is robust to illumination variations and noise is presented. The proposed method integrates radial and angular point means to extract local structure information. Using the mean of neighbor pixels and differential excitation to describe the relationship between a center pixel and its neighbors considerably reduces the effect of noise on the texture pattern. The results imply that using both radial and angular means of points yields better retrieval scores than using only gradient or edge data on natural and noisy textures. The retrieval results of the proposed LMDeP exceed those of LDP, LTrP and LOOP by 6.3, 3.4 and 1.95%, respectively, on the Corel-1 K dataset. LMDeP also improves the precision rate by 9, 3.25 and 1.75 percentage points compared with LDP, LOOP and LTrP, respectively, on the Corel-5 K dataset. In conclusion, LMDeP outperforms the compared methods in terms of APR and ARR on the benchmark datasets Corel-1 K and Corel-5 K. In this paper, only binary encoding is used for the differential excitation values. Results may be further improved by considering ternary or fuzzy encoding. The proposed methodology may also be suitable for other pattern recognition applications such as biomedical image retrieval, texture retrieval, fingerprint recognition and face recognition.

References

Smeulders AWM, Worring M, Santini S, Gupta A, Jain R (2000) Content-based image retrieval at the end of the early years. IEEE Trans Pattern Anal Mach Intell 22(12):1349–1380

Rui Y, Huang TS, Chang SF (1999) Image retrieval: current techniques, promising directions, and open issues. J Vis Commun Image Rep 10(1):9–62

Verma M, Raman B (2015) Center symmetric local binary co-occurrence pattern for texture, face and bio-medical image retrieval. J Vis Commun Image Rep 32:224–236

Verma M, Raman B (2018) Local neighborhood difference pattern: a new feature descriptor for natural and texture image retrieval. Multimed Tools Appl 77(10):11843–11866

Murala S, Maheshwari RP, Balasubramanian R (2012) Local tetra patterns: a new feature descriptor for content-based image retrieval. IEEE Trans Image Process 21(5):2874–2886

Murala S, Maheshwari RP, Balasubramanian R (2012) Local maximum edge binary patterns: a new descriptor for image retrieval and object tracking. Signal Process 92(6):1467–1479

Murala S, Wu QMJ (2014) MRI and CT image indexing and retrieval using local mesh peak valley edge patterns. J Image Commun 29(3):400–409

Murala S, Wu QMJ (2013) Peak valley edge patterns: a new descriptor for biomedical image indexing and retrieval. In: Conference on computer vision and pattern recognition (CVPR) workshops, IEEE, pp 444–449

Müller H, Rosset A, Vallée JP, Terrier F, Geissbuhler A (2004) A reference data set for the evaluation of medical image retrieval systems. Comput Med Imaging Gr 28(6):295–305

Ahonen T, Hadid A, Pietikäinen M (2006) Face description with local binary patterns: application to face recognition. IEEE Trans Pattern Anal Mach Intell 28(12):2037–2041

Cohen FS, Fan Z, Attali S (1991) Automated inspection of textile fabrics using textural models. IEEE Trans Pattern Anal Mach Intell 13(8):803–808

Ning J, Zhang L, Zhang D, Wu C (2009) Robust object tracking using joint color-texture histogram. Int J Pattern Recognit Artif Intell 23(7):1245–1263

Alvarez MJ, González E, Bianconi F, Armesto J, Fernández A (2010) Colour and texture features for image retrieval in granite industry. DYNA 77(161):121–130

Ojala T, Pietikäinen M, Mäenpää T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Liao S, Law MWK, Chung ACS (2009) Dominant local binary patterns for texture classification. IEEE Trans Image Process 18(5):1107–1118

He Y, Sang N, Gao C (2012) Multi-structure local binary patterns for texture classification. Pattern Anal Appl 18(5):1107–1118

Rassem TH, Khoo BE (2014) Completed local ternary pattern for rotation invariant texture classification. Sci World J. https://doi.org/10.1155/2014/373254

Tan X, Triggs B (2010) Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6):1635–1650

Guo Z, Zhang L, Zhang D (2010) A completed modeling of local binary pattern operator for texture classification. IEEE Trans Image Process 19(6):1657–1663

Verma M, Raman B (2016) Local tri-directional patterns: a new texture feature descriptor for image retrieval. Digit Signal Process A Rev 51:62–72

Jabid T, Kabir MH, Chae O (2010) Facial expression recognition using local directional pattern (LDP). In: 17th IEEE international conference on image process, ICIP 2010, pp 1605–1608

Chakraborti T, McCane B, Mills S, Pal U (2017) LOOP descriptor: local optimal oriented pattern. IEEE Signal Process Lett 25(5):635–639

Chen J, Shinguang S, Zhao G (2009) A robust descriptor based on Weber’s Law. IEEE Trans Pattern Anal Mach Intell 32(9):1705–1720

Corel-10000 image database. http://www.ci.gxnu.edu.cn/cbir/Dataset.aspx


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Cite this article

Satya Kumar, G.V., Krishna Mohan, P.G. Local mean differential excitation pattern for content based image retrieval. SN Appl. Sci. 1, 46 (2019). https://doi.org/10.1007/s42452-018-0047-2
