Abstract

Microstructural classification is typically done manually by human experts, which gives rise to uncertainties due to subjectivity and reduces the overall efficiency. A high-throughput characterization is proposed based on deep learning, rapid acquisition technology, and mathematical statistics for the recognition, segmentation, and quantification of microstructure in weathering steel. The segmentation results showed that this method was accurate and efficient, and the segmentation of inclusions and pearlite phase achieved accuracy of 89.95% and 90.86%, respectively. The time required for batch processing by MIPAR software involving thresholding segmentation, morphological processing, and small area deletion was 1.05 s for a single image. By comparison, our system required only 0.102 s, which is ten times faster than the commercial software. The quantification results were extracted from large volumes of sequential image data (150 mm2, 62,216 images, 1024 × 1024 pixels), which ensure comprehensive statistics. Microstructure information, such as three-dimensional density distribution and the frequency of the minimum spatial distance of inclusions on the sample surface of 150 mm2, were quantified by extracting the coordinates and sizes of individual features. A refined characterization method for two-dimensional structures and spatial information that is unattainable when performing manually or with software is provided. That will be useful for understanding properties or behaviors of weathering steel, and reducing the resort to physical testing.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The volume fraction, dimension, and morphology of microstructure phases are responsible for the mechanical properties of metallurgical materials [1,2,3,4,5]. Complex microstructural information is often obtained by manual quantification or software quantification with artificial participation [6], which are both very time-consuming processes and virtually impossible to ensure completely objective statistics. Previous material characterization trends [7,8,9,10,11] indicate that microstructure quantification should ultimately accomplish two goals. One is providing a refined understanding of the structures or volumetric data to extract truly useful material characteristics. The other is efficiently analyzing large data volumes using automated processing. This motivation leads us to use deep learning method and high-throughput scanning electron microscope (SEM) to automatically collect images and segment and quantify pearlite, bainite and inclusions in weathering steel.

Microstructural quantification is mainly performed using commercial image processing software such as MIPAR, Image J, and Photoshop [12,13,14]. However, these are no longer sufficient for researchers due to time-consuming artificial selection. Many papers have focused on improving the precision and speed of segmentation and quantification [15,16,17,18]. Dutta et al. [19] developed an automated method for the morphological classification of ferrite–martensite dual-phase microstructures. The secondary phase precipitated in the gamma matrix of a nickel-based alloy was identified using a neuronal network [20]. This method achieved statistical reliability of 95% from 54 SEM images, but the number of test images was still too small to guarantee reliable accuracy. Albuquerque et al. [21] proposed a computational system for analyzing images of ferrous alloys, which optimized the process of segmenting and quantifying microstructures using mathematical morphologies and an artificial neural network. Morphological operators and artificial neural networks are used for segmentation and quantification, respectively, and take 180 s for a single image output because of the separation of the feature extraction and classification operations.

In recent years, artificial intelligence has made breakthroughs in many fields, especially computer vision [22,23,24,25,26,27]. Deep learning methods have also captured attention due to their adaptive, self-learning, and parallel processing abilities [28,29,30,31]. Bulgarevich et al. [32] demonstrated an accurate microstructure pattern recognition/segmentation technique that was combined with other mathematical image processing and analysis methods to handle image data. Such machine learning models may not be able to classify data with fewer characteristics, e.g., bainite–pearlite in weathering steel, and the test set is insufficient (~ 1 mm2). Yoshitaka et al. [33] demonstrated three deep learning methods, including LeNet5, AlexNet, and GoogLeNet, for microstructure classification. Due to the need for fewer training images and more precise segmentation [34, 35], U-Net detection network has demonstrated excellent performance for biomedical images [36,37,38]. For example, Ronneberger et al. [39] proposed the use of U-Net convolutional networks that relied on the use of data augmentation to achieve more precise segmentations with fewer training images.

So far, images from test sets are unordered and insufficient, usually only dozens to a hundred images (~ 1 mm2). The application of deep learning methods like U-Net for the quantitative characterization of materials is still rare; however, Navigator-OPA high throughput SEM (joint research by Focus e-Beam Technology Co., Ltd. and NCS Testing Technology Co., Ltd.) provides an effective images acquisition technology that ensures high resolution while achieving large-scale continuous collection. Previous studies suggest that U-Net is suitable for the recognition and pixel-wise segmentation of microstructures.

In this work, we describe a characterization method based on U-Net, high-throughput SEM, and mathematical statistics to quantify the microstructures in weathering steel. Our method has four advantages—speed, accuracy, comprehensiveness, and refinement—that can simultaneously meet four of these requirements.

-

(1)

Speed The image acquisition speed of high-throughput SEM is 10 times faster than conventional SEM. Four regions of target detection (candidate region generation, feature extraction, classification and location refinement) were unified into a neural network framework to ensure faster running speed.

-

(2)

Accuracy The trained U-Net architecture was used to perform pixel-wise segmentation. The generalization ability of U-Net network is strong enough to predict unknown image, which improved the accuracy of segmentation and quantification.

-

(3)

Comprehensiveness The quantification results were extracted from sequential image data (150 mm2, 62,216 images, 1024 × 1024 pixels), which ensured comprehensive statistics.

-

(4)

Refinement Individual features were quantified by extracting the coordinates and sizes from SEM images. The suitable mathematical statistics provided a refined characterization of two-dimensional structures and spatial information.

We provide a brief introduction to the experimental methods and procedures and then show the segmentation and evaluation results. The extracted binary images, combined with other suitable mathematical methods, were used to represent the area, density, and distribution of the microstructures.

2 Methods

2.1 Overview of proposed method

Figure 1 shows an overall flowchart of the proposed image quantitative method. First, U-Net detection network model was established to realize the classification and segmentation tasks. Second, we obtained high-resolution images by continuous collection using a high-throughput SEM. Third, we used suitable mathematical methods to extract more refined information from the binary images.

2.2 U-Net based deep convolutional networks

We constructed a network (Fig. 2) based on the original U-Net architecture that consisted of a contracting path and an expanding path [39,40,41]. The left side of the structure was the down-sampling layer, which captured the global content using a contracting path. The right side was the up-sampling layer, which propagated contextual information into higher-resolution layers via an expanding path. The entire architecture consisted of 9 blocks, between which there are 4 max-pooling layers and 4 transposed convolutions. The inner part of the block included a convolution layer, ReLu activation function, and a dropout layer, which served to extract deep features from shallow features and deal with nonlinear problems to prevent overfitting.

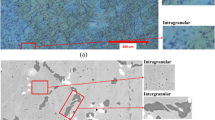

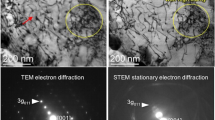

In the experiments, images from SMA490BW weathering steel were chosen as the dataset. A group of material experts and metallographers assigned the objects shown on the polished and corroded surfaces to the corresponding phases according to experience and related records, as shown in Fig. 3. Afterward, manual labels were created using LabelMe software.

Manually labeled data were divided into a training set and validation set, as shown in Fig. 1. The training set was used to obtain the target detection model, and the validation set was used to verify the reliability of the model. The mean pixel accuracy (MPA) of the testing set was regarded as the judgment condition for training termination, and the termination threshold was set to 98% during the training process. When MPA of the training set was greater than or equal to 98% for three consecutive times, the training was terminated and saved as the final feature recognition and extraction model of this method. Cross entropy was used in the loss function during training. The optimization function used Adam during back propagation.

In addition, to solve the over-fitting phenomenon caused by insufficient data, data augmentation was performed before beginning the training. Training the data after enlargement gives the trained model a stronger generalization ability, making it possible to process the features acquired in different scenarios.

2.3 Image data sets

A hot-rolled SMA490BW weathering steel plate (200 mm × 160 mm × 11 mm) was used in the experiment. Two sections from one-third width of the plate were mounted for metallographic sample preparation. Samples were ground using silicon carbide papers to 3000 grit, and then polished using 3, 1, and 0.5 μm diamond slurries. After ultrasonic cleaning, sample No. 1 remained polished, and sample No. 2 was corroded in a solution of nitric acid and alcohol. Two samples were placed into a high-throughput SEM to collect the image data. Collecting results are shown in Table 1. The sampling area of sample No. 1 was 150 mm2, and 62,216 consecutive images with 1024 × 1024 pixels were obtained. The sampling area of sample No. 2 was 70 mm2, and 65,600 consecutive images with 1024 × 1024 pixels were obtained.

2.4 Implementation and statistical analysis

All images representing the surface information of the sample (acquired in Sect. 2.3) were put into the trained U-Net target detection model for segmentation. For sample No. 1, the network eliminated interference from foreign pollutants and scratches on the quantitative results and performed correct identification of inclusions and accurate pixel-wise segmentation. For sample No. 2, the network automatically assigned objects to either the bainite or pearlite phases according to the microstructure features. After segmentation, binary graphs were obtained and marked as bainite and pearlite phases and nonmetallic inclusions.

The size, area, and coordinates of each object in samples No. 1 and No. 2 are extracted from the binary images using the connected region algorithm. According to the sizes of bainite, pearlite, or nonmetallic inclusions, an appropriate threshold value was selected to show the surface distribution. The distribution density of objects was calculated and characterized using a statistical method. The distribution difference of objects in different regions of hot-rolled steel sections was also quantitatively characterized. This method can extract more refined microstructure distribution details from images by quickly handling large volumes of image data, which will be useful for performing quality control on newly developed steels.

2.5 Performance evaluation

The goal during microstructural classification is to classify objects inside steel images based on microstructure classes. These objects can be considered as “background” (scratch and matrix) or “foreground” (bainite, pearlite, inclusions in the present microstructure), as shown in Fig. 3. The substructure of such objects should be classified with the correct label in this work, and the more the objects correctly classified by our method are, the more accurate the system is. Therefore, the classification performance using the metrics described by Eqs. (1)–(4) is evaluated.

where P measures the precision of the system; R measures the recall of the system; F1 is close to the smaller values of P and R; TP and TN are the number of correctly classified objects; FP and FN are the number of objects that were erroneously classified; and A measures the accuracy of the system. Accuracy represents the ratio of the number of samples correctly classified by the classifier to the total number of samples. To evaluate the semantic segmentation performance, the metrics described by Eqs. (5) and (6) is used.

where IOU is intersection over union; DICE is the dice coefficient; Pij is the number of pixels of class i predicted as class j; and Pii is the number of pixels of class i predicted as class i. The hardware used for experimentation was Intel(R) Xeon(R) CPU E5-2695V4 @2.10 GHz. All experimentation was carried out with Python version 3.5, TensorFlow and LabelMe.

3 Results and discussion

3.1 Classification and segmentation using U-Net

In addition to non-metallic inclusions, there were some scratches and pits on the surface of polished sample No. 1, which will interfere with U-Net predictions because of the similarity between the morphology and inclusions. To investigate the classification accuracy, we compared the output images selected from test data with the results marked by experts. Table 2 shows the confusion matrix of U-Net prediction and the actual classifications. In Table 2, 1478 non-metallic inclusions were predicted by U-Net, in which 1278 inclusions were correct segmented non-metallic inclusions (TP), and 200 were wrong segmented scratches and pits (FP). It also included 58 wrong segmented non-metallic inclusions (FN) and 1461 correct segmented scratches and pits (TN). Table 3 shows the evaluation results of the classifications made by U-Net network. The recall and precision numbers show the correct classification percentage of actual classes and predictions. The performance gives a recall rate of 95.66%, a precision rate of 86.47%, an accuracy of 91.39%, and a harmonic mean rate of 90.83%. These values indicate that U-Net is good at correctly classifying inclusions.

Table 2 shows the actual and predicted numbers of bainite and pearlite objects. U-Net misclassified 5 pearlite objects as bainite. However, 34 bainite were misclassified as pearlite because of complex inner structures and their similar morphologies. In Table 3, evaluation values of pearlite and bainite (sample No. 2) were more than 90%. Table 4 presents the results of the pixel-wise semantic segmentation. Pearlite has the highest Iou of 83.54%, and DICE of 90.86%. Inclusion and bainite achieved IOU of 82.18% and 67.04%, and DICE of 89.95% and 79.96%, respectively. IOU of bainite was slightly inferior to that of pearlite due to their similar texture and its discontinuous shape in the interior led to confusion.

The latest image processing software was used to compare the results. In addition to the small training set, our method also showed good segmentation accuracy and processing time, requiring only 0.102 s for a single image, which is far less than that of MIPAR image processing software requiring 163 s for manual processing and 1.05 s for a batch processing. The process consisted of thresholding segmentation, morphological processing and small area deletion requiring manual input and selection. The segmentation accuracy of U-Net is higher than that of MIPAR batch process, especially for bainite and nonmetallic inclusions, as shown in Table 4. The gray value of nonmetallic inclusions is close to that of the background, and the shape of bainite is various, which will reduce the segmentation accuracy of MIPAR.

Figures 4 and 5 show examples of inclusion segmentation using U-Net based on deep convolutional networks. It is noted that inclusions segmented by the network have some common features, including different heights and colors from the matrix, and the contour lines of the inclusions were round and not sharp, which is typically used to distinguish similar pits and scratches. The output details in Fig. 4 indicated that pixel-wise segmentation was more refined and efficient compared with the hand-crafted features, and it better sketched the continuous and clear outline changes of the objects. The final results of bainite and pearlite segmentation are depicted in Fig. 5, which shows that most objects were correctly classified. Single objects were obtained by the target detection network, which is of great significance to the statistical analysis of sample sections. Because of the good generalization ability of U-net network, the image segmentation model established in this paper can also be applicable to other types of steel with similar organization.

3.2 Time consumption

The time consumption of each step is shown in Table 5. First, a U-Net model is constructed based on a convolutional neural network by training it with image data, which involves learning microstructure feature information from labeled images. The corresponding time consumption of this process is shown in the label and train columns. Then, large volumes of image data were quickly obtained by high-throughput SEM, and the time consumption is shown in SEM column. Afterward, the established U-Net model provided image classification and pixel-wise segmentation. For inclusions (sample No. 1) and pearlite (sample No. 2), images of pixels with sizes of 1024 × 1024 required only 0.102 s to output a binary image, which was achieved in real-time. Based on the results in Table 5, for a sample with an area of 70 mm2, 279 min or less (collection and segmentation were performed simultaneously) was required from the surface image collection to the acquisition of individual feature regions. As a comparison, the batch processing method using MIPAR software takes 1148 min while manual drawing will take 41,000 min. Therefore, our method can achieve better speed and accuracy.

3.3 Quantitative results and statistical analysis

The evaluation results of classification and segmentation in Tables 2 and 3 confirm our expectation that the quantitative results extracted from images are trustworthy. The size, area, and coordinates of each object in samples No. 1 and No. 2 are quantified from the binary images using the connected region algorithm. Table 6 shows the quantitative results of the microstructure of SMA490BW weathering steel, and Fig. 6 shows its area percentage.

The single sizes and coordinates of the feature region were extracted using the connected region algorithm. Then, the spatial and dimensional information extracted from images were represented with figures and numbers using a suitable mathematical method. The statistical results and distribution trends were introduced using inclusions as an example. Larger nonmetallic inclusions are more likely to degrade performance due to a greater discontinuity in the matrix [42]. Figure 7 shows the area distribution of individual inclusions over the sample surface of 150 mm2. Based on Fig. 7, most inclusions were small, with an area less than 5 μm2 accounting for 67.23% and an area less than 10 μm2 accounting for 95%.

The three-dimensional distribution of inclusions on the sample surfaces of 150 mm2 was revealed according to their size and corresponding coordinates of individual objects, as shown in Fig. 8. The larger the size of the inclusion, the larger the color bar number. Inclusions with lager section areas were quickly selected from the signal color. In addition, the rolled plate section was divided into the outermost part, the second outer part, and the center part, each of which has a different structure and performance due to their different forming conditions [43]. Figure 8 indicates that our method of quantifying feature regions can be used to compare properties in these different regions, and even predict the performance without physical tests.

The distance distribution of inclusions was quantitatively calculated using U-Net and mathematical statistics to guide the smelting and subsequent processing of materials. Independent and dispersed inclusion spots have little influence on the performance, while dense or continuously distributed spots and blocks greatly degrade the performance [44]. In addition, existing research shows that a higher density of nonmetal inclusions results in a worse comprehensive performance of steel [45].

The spatial distance of inclusions was calculated using this proposed method as follows. First, we named all images from the testing set and created a coordinate system. The number of images in the horizontal direction (x) was 101, and the number of images in the vertical direction (y) was 154. All pixels in the images were associated with the coordinates x and y. Second, the coordinates of the inclusions were identified, and the center point coordinates of inclusions were determined by reading the image name and averaging the inclusion pixel value. Third, the minimum distance was screened. 5000 pixels (50 nm resolution) was set as the threshold value, and 5000 pixels were expanded in x and y positive and negative directions, using the inclusion center as the origin. In this region, all distances between inclusions were accurately calculated. After sorting all distances, the minimum distance was selected as the statistical distance. The distance results formed a normal distribution, as shown in Fig. 9. Bainite and pearlite in the microstructure can also be quantified using suitable mathematical methods to extract refined and comprehensive information.

4 Conclusions

This work demonstrated a high-throughput characterization method for the recognition, segmentation, and quantitative analysis of the microstructure in weathering steel from sequential SEM images. Images were rapidly acquired by high-throughput SEM and subjected to pixel-wise segmentation using a trained U-Net architecture. The result showed that pearlite phases and inclusions had pixel accuracies of 90.86% and 89.95%, respectively. The time consumption of a single image was around 0.102 s, which is ten times less than that of traditional methods. Besides the high accuracy and efficiency, this method can analyze large amounts of image data (150 mm2, 62,216 images, 1024 × 1024 pixels), which ensures the comprehensiveness of statistics and greatly eliminates incomplete statistics in a single field of view.

To demonstrate the diversity of the segmentation results, the distribution tendency of each extracted feature region was quantitatively counted by a mathematical method, using inclusions as an example. The area distribution of individual inclusions, the minimum spatial distance between inclusions, and the three-dimensional density and size distributions were obtained using this method, which provided a refined characterization method for two-dimensional structures or spatial information of microstructures. That will be useful for understanding distribution of microstructure in weathering steel, comparing properties in different regions, and even predicting the performance without physical tests. It is concluded that quantitative characterization of microstructures using the proposed method is an effective, accurate, comprehensive, and refined method for characterizing and analyzing the microstructure of steels.

References

A. Zare, A. Ekrami, Mater. Sci. Eng. A 530 (2011) 440–445.

R. Li, X. Zuo, Y. Hu, Z. Wang, D. Hu, Mater. Charact. 62 (2011) 801–806.

H. Ding, Z.Y. Tang, W. Li, M. Wang, D. Song, J. Iron Steel Res. Int. 13 (2006) No. 6, 66–70.

J. Zhou, D.S. Ma, H.X. Chi, Z.Z. Chen, X.Y. Li, J. Iron Steel Res. Int. 20 (2013) No. 9, 117–125.

J. Zhang, Q.W. Cai, H.B. Wu, K. Zhang, B. Wu, J. Iron Steel Res. Int. 19 (2012) No. 3, 67–72.

T.J. Collins, Biotechniques 43 (2007) No. S1, 25–30.

B.Y. Ma, X.J. Ban, Y. Su, C.N. Liu, H. Wang, W.H. Xue, Y.H. Zhi, D. Wu, Micron 116 (2019) 5–14.

J. Komenda, Mater. Charact. 46 (2001) 87–92.

A.L. Garcia-Garcia, I. Dominguez-Lopez, L. Lopez-Jimenez, J.D.O. Barceinas-Sanchez, Mater. Charact. 87 (2014) 116–124.

V.H.C. de Albuquerque, P.C. Cortez, A.R. de Alexandria, J.M.R.S. Tavares, Nondestr. Test. Eval. 23 (2008) 273–283.

L. Duval1, M. Moreaud, C. Couprie, D. Jeulin, H. Talbot, J. Angulo, in: 2014 IEEE International Conference on Image Processing, Paris, France, 2014, pp. 4862–4866.

C.A. Schneider, W.S. Rasband, K.W. Eliceiri, Nat. Met. 9 (2012) 671–675.

P. Ctibor, R. Lechnerová, V. Bene, Mater. Charact. 56 (2006) 297–304.

S.G. Lee, Y. Mao, A.M. Gokhale, J. Harris, M.F. Horstemeyer, Mater. Charact. 60 (2009) 964–970.

V.H.C. de Albuquerque, P.P. Reboucas Filho, T.S. Cavalcante, J.M.R.S. Tavares, J. Microsc. 240 (2010) 50–59.

R.B. Oliveira, M.E. Filho, Z. Ma, J.P. Papa, A.S. Pereira, J.M.R.S. Tavares, Comput. Met. Programs Biomed. 131 (2016) 127–141.

D. Kim, J.J. Liu, C. Han, Chem. Eng. Sci. 66 (2011) 6264–6271.

S. Zajac, V. Schwinn, K.H. Tacke, Mater. Sci. Forum 500–501 (2005) 387–394.

T. Dutta, D. Das, S. Banerjee, S.K. Saha, S. Datta, Measurement 137 (2019) 595–603.

V.H.C. de Albuquerque, C.C. Silva, T.I. Menezes, J.P. Farias, J.M.R.S. Tavares, Microsc. Res. Technol. 74 (2011) 36–46.

V.H.C. de Albuquerque, J.M.R.S. Tavares, P.C. Cortez, Int. J. Microstruct. Mater. Propert. 5 (2010) 52–64.

B.L. DeCost, E.A. Holm, Comput. Mater. Sci. 110 (2015) 126–133.

D.S. Jodas, A.S. Pereira, J.M.R.S. Tavares, Expert Systems Appl. 46 (2016) 1–14.

L. Staniewicz, P.A. Midgley, Adv. Struct. Chem. Imaging 1 (2015) 9.

I. Arganda-Carreras, V. Kaynig, C. Rueden, K.W. Eliceiri, J. Schindelin, A. Cardona, H. Sebastian Seung, Bioinformatics 33 (2017) 2424–2426.

N. Dalal, B. Triggs, Histograms of oriented gradients for human detection, in: Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Montbonnot, France, 2005.

N. Vyas, R.L. Sammons, O. Addison, H. Dehghani, A.D. Walmsley, Sci. Rep. 6 (2016) 32694.

S.H. Kim, J.H. Lee, B. Ko, J.Y. Nam, in: 2010 International Conference on Machine Learning and Cybernetics, Qingdao, China, 2010, pp. 3190–3194.

J. Long, E. Shelhamer, T. Darrell, in: 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, America, 2015, pp. 640–651.

S.M. Azimi, D. Britz, M. Engstler, M. Fritz, F. Mucklich, Sci. Rep. 8 (2018) 2128.

B.Y. Ma, X.J. Ban, H.Y. Huang, Y.L. Chen, W.B. Liu, Y.H. Zhi, Symmetry 10 (2018) 107.

D.S. Bulgarevich, S. Tsukamoto, T. Kasuya, M. Demura, M. Watanabe, Sci. Rep. 8 (2018) 2708.

A. Yoshitaka, T. Motoki, H. Shogo, Tetsu-to-Hagane 102 (2016) 722–729.

Q. Zhang, Z. Cui, X. Niu, S. Geng, Y. Qiao, International Conference on Neural Information Processing (2017) 364–372.

S.K. Devalla, P.K. Renukanand, B.K. Sreedhar, G. Subramanian, L. Zhang, S. Perera, J.M. Mari, K.S. Chin, T.A. Tun, N.G. Strouthidis, T. Aung, A.H. Thiery, M.J.A. Girard, Biomed. Opt. Express 9 (2018) 3244–3265.

Z. Zhang, Q. Liu, Y. Wang, IEEE Geosci. Remote Sens. Lett. 15 (2018) 749–753.

B. Norman, V. Pedoia, S. Majumdar, Radiology 288 (2018) 177–185.

H. Dong, G. Yang, F. Liu, Y. Mo, Y. Guo, MIUA 723 (2017) 506–517.

O. Ronneberger, P. Fischer, T. Brox, in: 18th International Conference, Munich, Germany, 2015, pp. 234–241.

D. Stoller, S. Ewert, S. Dixon, Wave-U-Net: a multi-scale neural network for end-to-end audio source separation, in: 19th International Society for Music Information Retrieval Conference, Paris, France, 2018.

A. Sevastopolsky, Pattern Recognition Image Anal. 27 (2017) 618–624.

M. Fernandes, J.C. Pires, N. Cheung, A. Garcia, Mater. Charact. 51 (2003) 301–308.

H.Y. Ha, C.J. Park, H.S. Kwon, Corros. Sci. 49 (2007) 1266–1275.

I.I. Reformatskaya, I.G. Rodionova, Y.A. Beilin, L.A. NiselSon, A.N. Podobaev, Prot. Met. 40 (2004) 447452.

Y. Tomita, Mater. Sci. Technol. 11 (1995) 508–513.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (No. 2017YFB0702303).

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

About this article

Cite this article

Han, B., Wan, Wh., Sun, Dd. et al. A deep learning-based method for segmentation and quantitative characterization of microstructures in weathering steel from sequential scanning electron microscope images. J. Iron Steel Res. Int. 29, 836–845 (2022). https://doi.org/10.1007/s42243-021-00719-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42243-021-00719-7