Abstract

Population-based cancer registry studies are conducted to investigate the various cancer question and have important impacts on cancer control. In order to investigate cancer prognosis from cancer registry data, it is necessary to adjust the effect of deaths from other causes, since cancer registry data include deaths from causes other than cancer. To correct for the effect of deaths from other causes, excess hazard models are often used. The concept of the excess hazard model is that the hazard function for any death in a cancer registry population is the sum of the hazard for cancer deaths, refer to the excess hazard, and the hazard for deaths from other causes. The Cox proportional hazard model for the excess hazard has been developed, and for this model, Perme et al. (Biostatistics 10:136–146, 2009) proposed the inference procedure of the regression coefficients using the techniques of the EM algorithm to compute the maximum likelihood estimator. In this article, we present the large sample properties for the maximum likelihood estimator. We introduce a consistent estimator of the variance for the regression coefficients based on the technique of the semiparametric theory and the consistency and the asymptotic normality of the estimator. The empirical property of variance estimator is investigated by the finite sample simulation studies. We also apply the variance estimator to cancer registry data for stomach, lung, and liver cancer patients from the Surveillance, Epidemiology, and End Results (SEER) database in U.S.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cancer registries are effectively used in various cancer studies and play important roles in cancer control. A series of CONCORD studies addressed differences in cancer survival rates among nations for various cancer types, such as breast, colon, gastric, and prostate cancers (Coleman et al., 2008; Allemani et al., 2015, 2018). Derks et al. (2018) examined differences in survival outcomes due to differences in treatment policies among countries by the relative excess risks for older breast cancer patients in the Netherlands, Belgium, Ireland, England, and Greater Poland. These studies used data from cancer registries.

To address these scientific questions, rather than the overall survival, which is defined as the duration to the all-cause death, the cancer-specific survival is often of interest. Thus, the statistical analysis that accounts for the cause of death is appreciated. A potential approach is to apply methods for the competing risk analysis (Andersen et al.,1993, pp. 512–515; Fine and Gray, 1999). However, in cancer registries, reliable information on cause of death is hard to correct comprehensively. Then, in the field of cancer registry data analysis without the information on the cause of death, special survival analysis methods have been developed, in which the external data such as the life table of the general population are used to adjust the non-cancer death. This framework for inference of the cancer registry data is often referred as the relative survival framework (Perme et al., 2016; Kalager et al., 2021), since the relative survival ratio is one of key measure used in this field. The relative survival ratio is defined as the ratio of the overall survival to the non-cancer survival. Utilizing an external database of the life table for the non-cancer general population, various methods to handle the relative survival ratio has been proposed (Ederer et al., 1961; Hakulinen, 1982) and widely used in population studies (Coleman et al., 2008; Angle et al., 2014; Allemani et al., 2015). The net survival, which is defined as the survival probability if the cancer subject would not die due to reasons other than the cancer, is an alternative measure and is getting popular and popular after (Perme et al., 2012) introduced a novel estimator with sound theoretical justification. An application was reported by Allemani et al. (2018).

All these methods mentioned are for marginal quantities. Since cancer registry data consist of huge number of cancer patients, stratified analysis by age, gender, and so on with these simple methods is preferable in general without any strong statistical assumptions. On the other hand, regression analysis is also important. For example, for rare cancer types, the stratified analysis can be unstable. In assessing some covariates effects jointly, it would be useful to apply some regression models. Various regression models for cancer registry data were proposed including the parametric models (Rubio et al., 2018), the additive hazard model (Lambert et al., 2005; Cortese & Scheike, 2008) and the spline based nonproportional hazard model (Bolard et al., 2002; Gorgi et al., 2003). The Cox proportional hazard model, which is probably one of the most famous regression models for survival analysis, was also examined (Hakulinen & Tenkanen, 1987; Estève et al., 1990; Sasieni, 1996; Dickman et al., 2004; Nelson et al., 2007; Perme et al., 2009). Sasieni (1996) introduced martingale estimating equations motivated by the partial likelihood. The unweighted estimating equation, which corresponds to the score function for the partial likelihood in the standard survival analysis, was not efficient for the Cox proportional hazard model for the net survival. Sasieni (1996) considered a weighted estimating equation, which gave an efficient estimator for the regression coefficients. However, to estimate the optimal weight, a smoothing technique was needed.

Perme et al. (2009) proposed the semiparametric maximum likelihood estimation. They successfully introduced a simple method to obtain the maximum likelihood estimator based on the expectation–maximization (EM) algorithm. The variance of the estimator was obtained with the Louis’ method (Louis, 1982). Derks et al. (2018) applied this method to cancer registry data of older breast cancer patients in the Netherlands, Belgium, Ireland, England, and Greater Poland. Although the goodness of the method by Perme et al. (2009) was examined by their simulation study, asymptotic properties were not discussed. In this paper, we established asymptotic justification of the maximum likelihood estimator of the Cox proportional hazard model for the net survival by applying the general semiparametric efficiency theory. Instead of the Louis’ variance estimator, we consider a variance estimator from the semiparametric theory.

The rest of the paper is organized as follows. In Sect. 2, we introduce cancer registry data and the EM-based inference procedure for the Cox proportional excess hazard model. In Sect. 3, we present the consistency of the maximum likelihood estimator for the regression coefficients. In Sect. 4, the asymptotic normality of the maximum likelihood estimator for the regression coefficients is presented. A consistent estimator of its asymptotic variance is also presented. In Sect. 5, we report the results of a simulation study, and in Sect. 6 we apply the proposed method to a real data from the Surveillance, Epidemiology, and End Results (SEER) Program. Some discussions are made in Sect. 7. All the theoretical details are placed in Appendixes.

2 Maximum likelihood estimation for Cox proportional excess hazard model

2.1 Notations and general settings for the cancer registry data

Analysis of cancer registry data generally requires two datasets: the cancer registry data and the population life tables. Cancer registry data consists of information on characteristics at diagnosis and the survival time for a subject diagnosed with cancer. Table 1 illustrates the data structure of the cancer registry data. Note that no information on the cause of death is included in the cancer registry data. The population life tables are a set of tables of annual mortality rates calculated by demographic variables for the general population, based on demographic statistics. Table 2 shows an example of the life table for the male population by age and calendar year. The information from the life table is used to correct the impact of death due to causes other than the cancer of interest. The mathematical formulations of the cancer registry data and the relative survival framework are given as follows.

Let Z be a bounded vector of baseline covariates in the cancer registry data. Typically, it consists of age at diagnosis, gender, year at diagnosis, and some other additional variables. Let \(T_O\) and C be the time-to-death due to any causes and the potential censoring time from the time of diagnosis. \(T_O\) may be censored by C. We suppose that \(T=T_O \wedge C\) and \(\Delta =I(T_O \le C)\) are observed, where \(A \wedge B\) is the minimum value of A and B and \(I(\cdot )\) is the indicator function, which takes 1 if the event in bracket is true and 0 otherwise.

Let \(T_E\) and \(T_P\) be the potential time-to-death due to cancer and that due to reasons other than the cancer, respectively. Then, \(T_O\) is expressed as \(T_O=T_E \wedge T_P\). Define \(\Delta _E=I(T_E \le T_P)\). We regard \((T, \Delta , \Delta _E, Z)\) as the complete data, although the information of \(\Delta _E\) is unobserved in the cancer registry data. The observed information is the triple \((T,\Delta , Z)\) for each subject in the cancer registry data. Let the corresponding counting process and the at-risk process denoted by \(N(t)=I(T \le t, \Delta =1)\) and \(Y(t)=I(T \ge t)\), respectively. Let \(\tau \) be a constant satisfying \(\Pr (T> \tau |Z) > 0\) for all Z. Suppose n i.i.d. copies of \((T,\Delta , Z)\) are observed and they are denoted by \((T_i,\Delta _i, Z_i)\). For other random variables, the subscript i is also used to represent the quantity for the ith subject.

Let \(F_Z(z)\) be the distribution function for Z. The conditional survival function for \(T_O\) given Z is denoted by \(S_O(t|Z)=\Pr (T_O > t |Z)\), and the corresponding hazard and cumulative hazard functions are denoted by \(\lambda _O(t|Z)\) and \(\Lambda _O(t|Z)\), respectively. These functions for \(T_E\), \(T_P\), and C are denoted in the same way with the subscripts E, P, and C, respectively. Suppose the assumption

holds, where for any random variables A, B, and C, the conditional independence between A and B given C is denoted by \(A\perp B|C\). Then, the hazard function for \(T_O\) is represented as the sum of those for \(T_E\) and \(T_P\),

The hazard function of \(T_E\), \(\lambda _E(t|Z)\), is called the excess hazard, representing the excess risk of death by cancer. The conditional hazard function \(\lambda _P(t|Z)\) and the conditional survival function \(S_P(t|Z)\) are calculated by an external database for population mortality and are regarded as known function.

2.2 Cox proportional excess hazard model

Suppose \(\lambda _E(t|Z)\) is modeled via a Cox-type regression model

where \(\beta \) is a vector of regression coefficients and \(\lambda (t)\) is an unspecified baseline hazard function. Denote the baseline cumulative hazard function by \(\Lambda (t)=\int _0^t{\lambda (u)\mathrm{{d}}u}\). Let \(\beta _0\), \(\lambda _0(t)\), and \(\Lambda _0(t)\) be the true values of \(\beta \), \(\lambda (t)\), and \(\Lambda (t)\), respectively. Furthermore, we assume

Under the assumptions of \(({ \mathrm A1})\) and \(({ \mathrm A2})\), the probability density function of the observed data \((T, \Delta , Z)\) is given by

where \(\mathrm{{d}}\Lambda (t)=\Lambda (t) - \Lambda (t-)\), \(\mathrm{{d}}\Lambda _P(t|Z)=\Lambda _P(t|Z) - \Lambda _P(t-|Z)\), \(\mathrm{{d}}\Lambda _C(t|z)=\Lambda _C(t|z) - \Lambda _C(t-|z)\), and \(\mathrm{{d}}F_Z(z)=F_Z(z)-F_Z(z-)\). The observed likelihood function is

where \(L(\Lambda ,\beta ;T_i,\Delta _i,Z_i)\) is the contribution of the ith subject to the likelihood given by

Perme et al. (2009) proposed the semiparametric maximum likelihood estimator for the regression coefficients \(\beta \), based on the EM algorithm. In constructing the semiparametric likelihood, \(\Lambda (t)\) is regarded as a right-continuous and non-decreasing step function with \(\Lambda (0)=0\) and positive jump size \(\lambda (t)>0\) at all uncensored event time points to treat nonparametrically. The likelihood function for the complete data is

and the log-likelihood after profiling the baseline hazard function out is

Set the initial values of \(\lambda \) and \(\beta \) as \(\lambda ^{(0)}\) and \(\beta ^{(0)}\), respectively. Then, the conditional expectation of \(\ell _{CP}(\beta )\) given the observed data is

The value of \(\beta \) is updated by maximizing the Q function and the updated value is denoted by \(\beta ^{(1)}\). The value of \(\lambda \) is updated using the Breslow estimator as

By updating \(\lambda ^{(k)}\) and \(\beta ^{(k)}\) and repeating the computation and maximization of the Q-function, the estimators \(\hat{\lambda }\) and \(\hat{\beta }\) are obtained. The corresponding estimator of the baseline cumulative hazard function is represented by

3 Consistency

In this section, we prove the consistency of the maximum likelihood estimator. Suppose that \(\beta \) is in a compact set \(\mathscr {B}\) and the covariance matrix of Z is positive definite. The existence of the pair of \((\Lambda , \beta )\) which maximizes the observed likelihood function (3) is proved in Appendix A based on the techniques using in the proof of theorem 1 of Fang et al. (2005). The identifiability of \((\Lambda , \beta )\), in the sense that \(L(\Lambda ,\beta ;t,\delta ,z) = L(\Lambda _0, \beta _0;t,\delta ,z)\) implies \((\Lambda , \beta )=(\Lambda _0, \beta _0)\) on \(t \in [0,\tau ]\), is also shown in Appendix A.

The semiparametric model (2) has a set of the unknown parameters \(\left( \beta , \eta \right) \), where \(\eta =\left\{ \Lambda , \Lambda _C, F_Z \right\} \) is the nuisance parameter. Consider parametric submodels \(\Lambda _{h_1}(t;\gamma _1) = \int _0^t{ \left\{ 1 + \gamma _1 h_1(u) \right\} \mathrm{{d}}\Lambda _0(u) } = \int _0^t{ \left\{ 1 + \gamma _1 h_1(u) \right\} \lambda _0(u) \mathrm{{d}}u }\), \(\Lambda _{C,h_2}(t|Z;\gamma _2) = \int _0^t{ \left\{ 1 + \gamma _2 h_1(u,Z) \right\} \mathrm{{d}}\Lambda _C(u|Z) } = \int _0^t{ \left\{ 1 + \gamma _2 h_2(u,Z) \right\} \lambda _C(u|Z) \mathrm{{d}}u }\), and \(F_{Z,h_3}(z;\gamma _3)=\int _0^t{ \left\{ 1 + \gamma _3 h_3(z) \right\} \mathrm{{d}}F_Z(z) }\) where \(h_1(u)\) and \(h_2(u,Z)\) are an arbitrary function and \(h_3(z)\) is a mean-zero measurable function with finite variance. The log-likelihood function based on (2) under the parametric submodel is defined by

where \(\gamma =(\gamma _1, \gamma _2, \gamma _3)^T\) and \(h=(h_1, h_2, h_3)^T\) Let

Since the maximum likelihood estimator \(\hat{\beta }\) maximizes the likelihood and then maximizes it under any parametric submodel, it satisfies

for any h, where

and \(M_C(t)=I(C \le t, \Delta = 0) - \int _0^t{ Y(u) \mathrm{{d}}\Lambda _C(u|Z) }\) is a square integrable martingale with respect to some filtrations (Fleming & Harrington, 1991). Then, it can be shown that \(E \left[ U_{1}(\beta ,\Lambda ;h) \right] =0\) for all bounded functions h on \(t \in [0,\tau ]\) and \(Z_1\).

Theorem 1

Under the assumptions (A1) and (A2), the maximum likelihood estimators are consistent; as \(n \rightarrow \infty \), \(\hat{\beta }\) converge in probability to \(\beta _0\) and \(\hat{\Lambda }(t)\) converge in probability to \(\Lambda _0(t)\) uniformly in \(t \in [0,\tau ]\).

Proof

The estimator (5) is represented by

Letting \(h_1(t)=1\) in the score equation (6) leads to this estimator. Since the vector of covariates Z is bounded and the parameter space \(\mathscr {B}\) is compact, \(e^{\beta ^TZ}\) is bounded, and its upper bound is denoted by \(K_u\). By the uniform low of large number (Pollard, 1990, page 41), \(n^{-1}\sum _{j=1}^n{ Y_j(u)e^{\beta ^TZ_j} }\) converges almost surely to \(E\left[ Y(u)e^{\beta ^TZ}\right] \in (0,K_u]\), uniformly in \(t \in [0,\tau ]\). By this result and \(W(t|Z;\Lambda ,\beta ) \in [0,1]\) for all \(t \in [0,\tau ]\) and Z, \(W(t|Z;\Lambda ,\beta )\) and \(n^{-1}\sum _{j=1}^n{ Y_j(u)e^{\hat{\beta }^TZ_j} }\) are uniformly bounded on \([0,\tau ]\). Then, we can use the procedures for proof of the consistency in Murphy et al. (1997). We give a sketch of the proof of consistency of \(\hat{\beta }\) and \(\hat{\Lambda }(t)\).

Define

By the Lenglart inequality (Fleming and Harrington, 1991, page 113) and the uniform law of large numbers, we see that \(\tilde{\Lambda }(t)\) converges almost surely to \(\Lambda _0(t)\), uniformly in \(t \in [0,\tau ]\) as \(n \rightarrow \infty \). Since \(\hat{\Lambda }\) and \(\hat{\beta }\) are the maximum likelihood estimator,

where \(L(\Lambda ,\beta ;T_i,\Delta _i,Z_i)\) is defined in (4). Since \(\hat{\Lambda }(t)\) and \(\tilde{\Lambda }(t)\) are bounded, the ratios of their jump sizes are bounded and those ratios are of bounded variation as \(n \rightarrow \infty \) in \(t \in [0,\tau ]\), we can use the results of the equation (A.5) in Murphy et al. (1997), and then those results imply that

The function \(\hat{\Lambda }(t)\) is non-decreasing and bounded function. By Helly’s lemma (van der Vaart, 2000, page 9) and the compactness of \(\mathscr {B}\), any subsequence indexed by n \((n=1,2,\cdots )\) possesses a further subsequence satisfying \(\hat{\beta } \rightarrow \beta ^*\) for some \(\beta ^*\) and \(\hat{\Lambda }(t) \rightarrow \Lambda ^*(t)\) for any \(t\in [0,\tau ]\) and some monotone function \(\Lambda ^*(t)\). Therefore, for any \((t,\delta ,z)\),

By the dominated convergence theorem, the expectation of the right-hand side of Eq. (8) under the true parameters \(\Lambda _0\) and \(\beta _0\), which is a minus of the Kullback–Leibler divergence, is nonpositive, and then by the result of the equation (7), it holds that

By the identifiability of \(\Lambda \) and \(\beta \) and the lemma of (van der Vaart, 2000, page 62), we can conclude \(\Lambda ^* = \Lambda _0\) and \(\beta ^*=\beta _0\). Because any subsequence contains a further subsequence for which \(\hat{\beta }\) and \(\hat{\Lambda }\) converge uniformly to \(\beta _0\) and \(\Lambda _0\), respectively, their uniform convergence also holds for the entire sequence. \(\square \)

4 Asymptotic normality and variance estimation

In this section, the asymptotic normality of the maximum likelihood estimator is presented. To do so, we apply the semiparametric theory, and a consistent estimator of asymptotic variance is presented along the semiparametric theory.

Theorem 2

Suppose that \(\beta _0\) is in the interior of \(\mathscr {B}\). Under the assumptions (A1) and (A2), \(\sqrt{n}\left\{ \hat{\beta } - \beta _0 \right\} \) converge to a mean-zero Gaussian distribution with the variance \(\Sigma _{\beta }(\beta _0,\Lambda _0;h^*)^{-1}\), where

\(M(t)=N(t) - \int _0^t{ Y(u) \left\{ \mathrm{{d}}\Lambda _0(u)e^{ \beta _0^TZ } + \mathrm{{d}}\Lambda _P(u|Z) \right\} }\) is a square integrable martingale with respect to some filtrations (Fleming & Harrington, 1991), and \(V^{\otimes 2}=V V^T\) for any column vector V. A consistent estimator of the asymptotic variance (9) is given by

Proof

The nuisance tangent space for the nuisance parameter \(\eta =\left\{ \Lambda , \Lambda _C, F_C \right\} \) is given by a direct sum of three orthogonal linear spaces,

where

And the orthogonal complement of the nuisance tangent space \(\Gamma \) is written as

where

Details of the derivation of these nuisance tangent spaces and their orthogonal complements are given in Appendix B.

The efficient score function for \(\beta \) is constructed by orthogonal projection of the score function \(U_{1,\beta }(\beta _0;h)\) onto the orthogonal space of \(\Gamma \), and it is given by

where

Since the maximum likelihood estimator \(\hat{\beta }\) satisfies \(U_n(\hat{\beta }; h)=0\), it is the solution to

with any bounded function h including \(h^*\). The efficient influence function for ith subject is defined by

where \(\Sigma _{\beta }(\beta _0,\Lambda _0;h^*)\) is given as (9). Therefore, it holds that \(\sqrt{n}\left( \hat{\beta } - \beta _0\right) =n^{-1/2}\sum _{i=1}^n{ \psi _i(\beta _0,\Lambda _0;h^*) } + o_P(1)\) and it converges in law to the mean-zero Gaussian distribution with the variance function \(\Sigma _{\beta }(\beta _0,\Lambda _0;h^*)^{-1}\).

The asymptotic variance (9) can be consistently estimated by replacing the theoretical quantities with the empirical ones. Then, a consistent estimator is given by (10). \(\square \)

5 Simulation study

We conducted a simulation study to examine the behavior of the two variance estimators by (10) and Louis’ method. The simulation settings were set by mimicking real cancer registry data and life tables. We considered four covariates, age, gender, year, and X. They were the age at diagnosis, the gender, the year of diagnosis, and a continuous variable. Age, gender, year, and X were generated from the normal distribution \(N(60,10^2)\), the Bernoulli distribution B(1/2), the discrete uniform distribution U(2000, 2010), and the standard normal distribution N(0, 1), respectively. We generated \(T_E\) and \(T_P\) from the exponential distributions with hazard rate \(\lambda _E(t|Z)=0.20 \exp \{ \log {1.3} \times \mathrm{{st(age)}} + \log {1.25} \times \mathrm{{gender}} + \log {0.8} \times \mathrm{{st(year)}} + \beta _X X \}\) and \(\lambda _P(t|Z)=0.02 \exp \{ \log {2.0} \times \mathrm{{st(age)}} + \log {1.25} \times \mathrm{{gender}} + \log {0.9} \times \mathrm{{st(year)}} \}\), respectively, where \(\mathrm{{st(age)}}=\mathrm{{(age}}-60)/10\) and \(\mathrm{{st(year)}}=(\mathrm{{year}}-2000)/10\). We considered four scenarios on the magnitude of the association between \(T_E\) and X; \(\beta _X = \log {1.0},\ \log {1.1},\ \log {1.2}\), or \(\log {1.3}\) in Datasets 1-4, respectively. In all datasets, \(T_E\) and \(T_P\) were conditionally independent given the covariates Z. The potential censoring time C was generated from the uniform distribution on (0, 30). We set the number of subjects n=200 or 1000. For each scenario, 1000 datasets were simulated.

We fitted the Cox model (1) with \(Z=\{\mathrm{{st(age),gender,st(year}}), X\}\) in analyses. The regression coefficients were estimated by applying the maximum likelihood method with the EM algorithm by Perme et al. (2009), and the variance of those were estimated by the estimator (10) and the estimator from Louis’s method. Because the survival function for the other cause death \(S_P(t|Z)\) is regarded as a known function in the general cancer registry analyses, we used the true \(S_P(t|Z)\) with \(t= 1,2,\ldots \) in the analyses. We matched three covariates age, gender, and year to extract \(S_p(t|Z)\) for each cancer patient. We evaluated empirical mean of variance estimates, empirical power, and coverage probabilities (CP) for each regression coefficient.

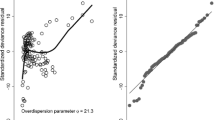

The results for \(n=200\), 500, and 1000 cases are summarized in Tables 3, 4, and 5, respectively. The coverage probabilities of the proposed method (10) were close to the nominal level of 95% with \(n=500\) and \(n=1000\), whereas a little anti-conservativeness was observed with \(n=200\). The average and the empirical coverage probability for the variance estimates were almost identical between the method (10) and Louis’s method throughout the simulation scenarios. It suggested that the two methods gave very similar estimates. To see that, we show the cross-plots the standard errors by the two methods in Fig. 1 for \(n=200\). For all the variables, the standard errors are laid near the diagonal line, indicating agreement between the two methods.

6 Illustration

We illustrate the proposed method with cancer registry data from the Surveillance, Epidemiology, and End Results (SEER) Program. We focused on a subgroup of all adult aged 60–69 years, who was diagnosed as stomach, lung, or liver cancers from 2005 to 2010 in 17 areas covering approximately 26.5% of the U.S. All patients were followed up to 10 years after diagnosis. The data were analyzed by cancer sites (stomach, lung, and liver). For each cancer site, the model (1) was applied with six covariates as explanatory variables; age at diagnosis, gender, year at diagnosis, race (White/Black/Others), stage (Localized/Regional/Distant), and income < $ 55,000, was applied. The regression coefficients were estimated by the EM-based method in Perme et al. (2009), and their variance were calculated by Louis’s method or (10). To calculate \(S_P(t|Z)\), we used the population life table of U.S., which is released from the SEER projects and it has information on annual survival by age, gender, year and race. For each cancer patient in the cancer registry, \(S_P(t|Z)\) was extracted matching the four covariates of age, gender, year, and race.

In Table 6, we summarize patients’ characteristics of the SEER database by cancer sites. For stomach, lung and liver cancers, 3987, 48,741 and 4608 patients were died among 5313, 56,412 and 5446 registered ones. The results of parameter estimation were summarized in Table 7. The two variance estimators gave very similar 95% CIs and p values.

7 Discussion

Similarly to the standard survival analysis, the regression models play very important roles in analysis of cancer registry data. Many regression models were proposed in the relative survival setting (Rubio et al., 2018; Lambert et al., 2005; Cortese & Scheike, 2008; Bolard et al., 2002; Gorgi et al., 2003). With the substantial popularity of the original Cox proportional hazards model (Cox 1972), the Cox excess hazards regression would be one of the most important and appealing regression models in cancer registry data analysis. Successful introduction of a simple EM-based algorithm (Perme et al., 2009) for the maximum likelihood estimator is really appreciated and of practical value, and it was successfully applied in a real population study (Allemani et al., 2018). On the other hand, formal theoretical justification was left unclear. This paper contributes to fill the gap by showing consistency, asymptotic normality, and semiparametric efficiency. Although our theoretical justification covered only the variance estimator (10), it also suggested the validity of Louis’ estimator with the agreement between them observed in the simulation studies.

A typical way to use the regression model for cancer registry data is to evaluate conditional hazards given potential confounders as done by Derks et al. (2018); Schuil et al. (2018); Allemani et al. (2018). In recent years, studies combining cancer registry data with data from other databases have been conducted, and the search for factors that affect cancer prognosis has become increasingly important (Woods et al., 2021; Li et al., 2021). On the other hand, in making inference on marginal hazards, regression models also play very important roles. For example, Komukai and Hattori (2017, 2020) proposed doubly-robust inference procedures for the marginal net survival and relative survival ratio in the presence of covariate-dependent censoring, in which regression models for censoring time and the survival time were very crucial roles. Estimation of causal quantities under the relative survival setting was discussed based on the regression standardization by Syriopoulou et al. (2021). To incorporate the Cox excess hazards model in these settings, the sound theoretical basis of the model is very important. More specifically, the consistency and the efficient influence function (11) results for the estimators will be very useful theoretical results when showing the consistency and deriving the asymptotic variance of estimators incorporating the Cox excess hazards model, respectively. Our development would be helpful in developing rigorous methods for such incomplete data analysis of marginal quantities.

Finally, we conclude our paper by discussing the assumption (A1). It is a fundamental assumption in the analysis of cancer registry data, like the independent censoring assumption (Fleming and Harrington,1991, page 128) in the standard survival analysis. To make the assumption (A1) satisfied, a simple idea is to collect and include many covariates so that (A1) holds. However, it also brings a difficulty specific to cancer registry data; even if additional covariates are collected in the cancer registries, the population life tables may not have them. This new missing data problem has been handled by Touraine et al. (2020) and Rubio et al. (2021). However, their development is not satisfactory, and further research is warranted possibly with an EM-based method like the proposed method in this paper.

References

Allemani, C., Weir, H. K., Carreira, H., Harewood, R., Spika, D., Wang, X. S., et al. (2015). Global surveillance of cancer survival 1995–2009: Analysis of individual data for 25,676,887 patients from 279 population-based registries in 67 countries (CONCORD-2). Lancet, 385, 977–1010.

Allemani, C., Matsuda, T., Carlo, V. D., Harewood, R., Matz, M., Maja, N., et al. (2018). Global surveillance of trends in cancer survival 2000–14 (CONCORD-3): Analysis of individual records for 37,513,025 patients diagnosed with one of 18 cancers from 322 population-based registries in 71 countries. Lancet, 39, 1023–75.

Andersen, P. K., Borgan, O., Gill, R. D., & Keiding, N. (1993). Statistical models based on counting processes. Springer.

Bolard, P., Quantin, C., Abrahamowicz, M., Estève, J., Giorgi, R., Chadha-Boreham, H., et al. (2002). Assessing time-by-covariate interactions in relative survival models using restrictive cubic spline functions. Journal of Cancer Epidemiology and Prevention, 7, 113–122.

Coleman, M. P., Quaresma, Q., Berrino, F., Lutz, J., Angelis, R. D., Capocaccia, R., et al. (2008). Cancer survival in five continents: A worldwide population-based study (CONCORD). Lancet Oncology, 9, 730–756.

Cortese, G., & Scheike, T. H. (2008). Dynamic regression hazards models for relative survival. Statistics in Medicine, 27, 3563–3584.

Derks, M. G. M., Bastiaannet, E., Kiderlen, M., Hilling, D. E., Boelens, P. G., Walsh, P. M., et al. (2018). Variation in treatment and survival of older patients with nonmetastatic breast cancer in five European countries: A population-based cohort study from the EURECCA Breast Cancer Group. British Journal of Cancer, 119, 121–129.

Dickman, P. W., Sloggett, A., Hills, M., & Hakulinen, T. (2004). Regression models for relative survival. Statistics in Medicine, 23(1), 51–64.

Ederer, F., Axitell, L. M., & Cutler, S. J. (1961). The relative survival rate: A statistical methodology. National Cancer Institute Monograph, 6, 101–121.

Estève, J., Benhamou, E., Croasdale, M., & Raymond, L. (1990). Relative survival and the estimation of net survival: Elements for further discussion. Statistics in Medicine, 9, 529–538.

Fang, H.-B., Li, G., & Sun, J. (2005). Maximum likelihood estimation in a semiparametric logistic/proportional-hazard mixture model. Scandinavian Journal of Statistics, 32, 59–75.

Fine, J. P., & Gray, R. J. (1999). A proportional hazards model for the subdistribution of a competing risk. Journal of the American Statistical Association, 94, 496–509.

Fleming, T. R., & Harrington, D. P. (1991). Counting processes and survival analysis. Wiley.

Gorgi, R., Abrahamowicz, M., Quantin, C., Bolard, P., Estève, J., Gouvernet, J., & Faivre, J. (2003). A relative survival regression model using B-spline functions to model non-proportional hazards. Statistics in Medicine, 22, 2767–2784.

Hakulinen, T. (1982). Cancer survival corrected for heterogeneity in patient withdrawal. Biometrics, 38, 933–942.

Hakulinen, T., & Tenkanen, L. (1987). Regression analysis of relative survival rates. Journal of the Royal Statistical Society, Series C., 36, 309–317.

Kalager, M., Adami, H.-O., Lagergren, P., Steindorf, K., & Dickman, P. W. (2021). Cancer outcomes research-a European challenge: Measures of the cancer burden. Molecular Oncology, 15, 3223–3241.

Komukai, S., & Hattori, S. (2017). Doubly robust estimator for net survival rate in analyses of cancer registry data. Biometrics, 73, 124–133.

Komukai, S., & Hattori, S. (2020). Doubly robust inference procedure for relative survival ratio in population-based cancer registry data. Statistics in Medicine, 39(13), 1884–1900.

Lambert, P. C., Smith, L. K., Jones, D. R., & Botha, J. L. (2005). Additive and multiplicative covariate regression models for relative survival incorporating fractional polynomials for time-dependent effects. Statistics in Medicine, 24, 3871–3885.

Li, M., Reintals, M., D’Onise, K., Farshid, G., Holmes, A., Joshi, R., Karapetis, C. S., Miller, C. L., Olver, I. N., Buckley, E. S., Townsend, A., Walters, D., & Roder, D. M. (2021). Investigating the breast cancer screening-treatment-mortality pathway of women diagnosed with invasive breast cancer: Results from linked health data. European Journal of Cancer Care, 31, e13539. https://doi.org/10.1111/ecc.13539

Louis, T. A. (1982). Finding the observed information matrix when using the EM algorithm. Journal of the Royal Statistical Society, Series B, 44, 226–233.

Murphy, S. A., Rossini, A. J., & van der Vaart, A. W. (1997). Maximum likelihood estimation in the proportional odds model. Journal of the American Statistical Association, 92, 968–976.

Nelson, C. P., Lambert, P. C., Squire, I. B., & Jones, D. R. (2007). Flexible parametric models for relative survival, with application in coronary heart disease. Statistics in Medicine, 26, 5486–5498.

Perme, M. P., Stare, J., & Estève, J. (2012). On estimation in relative survival. Biometrics, 68, 113–120.

Perme, M. P., Henderson, R., & Stare, J. (2009). An approach to estimation in relative survival regression. Biostatistics, 10, 136–146.

Perme, M. P., Estève, J., & Rachet, B. (2016). Analysing population-based cancer survival–settling the controversies. BMC Cancer, 16, 933. https://doi.org/10.1186/s12885-016-2967-9

Pollard, D. (1990). Empirical processes: Theory and applications. Institute of Mathematical Statistics.

Rubio F. J., Remontet L., Jewell N. P. & Belot A. (2018). On a general structure for hazard-based regression models: an application to population-based cancer research. Statistical Methods in Medical Research.

Rubio, F. J., Rachet, B., Giorgi, R., Maringe, C., Belot, A., & the CENSUR working survival group,. (2021). On models for the estimation of the excess mortality hazard in case of insufficiently stratified life tables. Biostatistics, 22(1), 51–67.

Sasieni, P. D. (1996). Proportional excess hazards. Biometrika, 83, 127–141.

Schuil, H., Derks, M., Liefers, G.-J., Portielje, J., van de Velde, C., Syed, B., Green, A., Ellis, I., Cheung, K.-L., & Bastiaannet, E. (2018). Treatment strategies and survival outcomes in older women with breast cancer: A comparative study between the FOCUS cohort and Nottingham cohort. Journal of Geriatric Oncology, 9, 635–641.

Syriopoulou, E., Rutherford, M. R., & Lambert, P. C. (2021). Inverse probability weighting and doubly robust standardization in the relative survival framework. Statistics in Medicine, 40, 6069–6092.

Touraine, C., Graféo, N., Giorgi, R., & the CENSUR working survival group. (2020). More accurate cancer-related excess mortality through correcting background mortality for extra variables. Statistical Methods in Medical Research, 29(1), 122–136.

Tsiatis, A. (2006). Semiparametric Theory and Missing Data. New York: Springer.

Van der Vaart, A. W. (2000). Asymptotic statistics (Vol. 3). Cambridge University Press.

Woods, L. M., Rachet, B., Morris, M., Bhaskaran, K., & Coleman, M. P. (2021). Are socio-economic inequalities in breast cancer survival explained by peri-diagnostic factors? BMC Cancer, 21, 485. https://doi.org/10.1186/s12885-021-08087-x

Acknowledgements

The first author’s research was partly supported by Grant-in-Aid for Early-Career Scientists (20K19754) from the Ministry of Education, Science, Sports and Technology of Japan. The second author’s research was partly supported by Grant-in-Aid for Challenging Exploratory Research (16K12403) and for Scientific Research (16H06299, 18H03208) from the Ministry of Education, Science, Sports and Technology of Japan. Computational calculations were performed at the Institute of Medical Science (the University of Tokyo).

Funding

The research leading to these results received funding from the Ministry of Education, Science, Sports and Technology of Japan under Grant-in-Aid for Early-Career Scientists No. 20K19754, for Challenging Exploratory Research No. 16K12403, and for Scientific Research No. 16H06299 and No. 18H03208.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

Appendix A: Existence of the maximum likelihood estimator and identifiability of \(\beta _0\) and \(\Lambda _0\)

The existence of the pair of the parameters \((\beta ,\Lambda )\) maximizing the observed likelihood (3) is proved by using the similar arguments to the proof of theorem 1 in Fang et al. (2005). In this Appendix, along this line, we prove the identifiability of \((\beta _0,\Lambda _0)\) in the sense that \(L(\Lambda ,\beta ;t,\delta ,z) = L(\Lambda _0, \beta _0;t,\delta ,z)\) implies \(\beta = \beta _0\) and \(\Lambda (t)=\Lambda _0\) for all \(t \in [0,\tau ]\).

Suppose the parameter space \(\mathscr {B} \in R^p\) of \(\beta \) is compact, where p is the dimension of \(\beta \). Since the vector of covariates Z is bounded, \(e^{\beta ^TZ}\) is also bounded, and its lower and upper bounds are denoted by \(K_l\) and \(K_u\), respectively. Let \(t_1<t_2<\cdots <t_k\) be the distinct failure times. Then, for any right-continuous and non-decreasing function \(\Lambda (t)\), it holds that

Because forcing \(\Lambda (T_i)=\Lambda (t_k)\) for all \(T_i \ge t_k\) will increase the likelihood if \(t_k\) is sufficiently large value satisfying \(\Lambda (t_k) \ge 1\), it suffices to restrict the space of \(\Lambda (t)\) to the space \(\Omega _0\), where

Let \(A_M = \left\{ \Lambda \in \Omega _0: \Lambda (t_k) \le M \right\} \) for any \(0<M<\infty \). Because \(L(\beta ,\Lambda )\) is continuous in \(\beta \) and \(\Lambda \), it has a maximum in the compact subspace \(\mathscr {B} \times A_M\) for any given M. Let \(L^{(M)}\) be the maximum value of \(L(\beta ,\Lambda )\) in \(\mathscr {B} \times A_M\). By \(e^{-MK_l}M \rightarrow 0\) as \(M \rightarrow \infty \), there exists an \(M_0 \ge 1\) such that the right-hand side of (12) is less than \(L^{(M_0)}\) for all \(\Lambda \) out of \(A_{M_0}\). Therefore, the likelihood evaluated at any sequence \(\Lambda _m\) of \(\Lambda \) with \(\Lambda _m(t_k)\) diverging to infinity as \(m \rightarrow \infty \) will not approach the maximum value of \(L(\beta ,\Lambda )\). As a consequence, when maximizing the observed likelihood (3), we can restrict the compact subspace \(\mathscr {B} \times A_{M_0}\). The existence of the maximum likelihood estimator can be proved by the continuity of the likelihood.

We prove that both of \(\beta _0\) and \(\Lambda _0\) are identifiable. By considering \(L(\Lambda ,\beta ;t,0,z) = L(\Lambda _0, \beta _0;t,0,z)\), we have that \(\Lambda (t)/\Lambda _0(t)=e^{-\left( \beta - \beta _0 \right) ^TZ}\) for all \(t \le \tau \) and Z such that \(\Pr (T> \tau |Z)>0\). Therefore, since \(\left( \beta - \beta _0 \right) ^TZ\) is constant for all Z, it hold that \(\beta =\beta _0\) if the covariance of Z is nondegenerate, and also we have \(\Lambda (t)=\Lambda _0(t)\) for all \(t \le \tau \). By considering \(L(\Lambda ,\beta ;t,1,z) = L(\Lambda _0, \beta _0;t,1,z)\), we also have \(\mathrm{{d}}\Lambda (t)=\mathrm{{d}}\Lambda _0(t)\) for all \(t \le \tau \).

Appendix B: Nuisance tangent space and its orthogonal complement

Let \(\mathcal {H}\) be a Hilbert space consisted of all p-dimensional measurable functions of \((T,\Delta , Z)\) with mean-zero and finite variance equipped with inner product \(\left<h_1,h_2\right>=E\left[ h_1^T(T,\Delta ,Z)h_2(T,\Delta ,Z)\right] \). To derive the nuisance tangent space for the nuisance parameter \(\eta =\left\{ \Lambda , \Lambda _C, F_Z \right\} \), we consider parametric submodels \(\Lambda _{h_1}(t;\gamma _1)\), \(\Lambda _{C,h_2}(t|Z;\gamma _2)\), and \(F_{Z,h_3}(z;\gamma _3)\) for \(\Lambda \), \(\Lambda _C\), and \(F_Z\), respectively, which were defined in Sect. 3, where \(\gamma _1\), \(\gamma _2\), and \(\gamma _3\) are the finite-dimensional nuisance parameters. Then, the nuisance tangent spaces for each nuisance parameter will be derived as the mean-square closure of all parametric submodel nuisance tangent spaces. Since the derivations of the nuisance tangent spaces \(\Gamma _2\) and \(\Gamma _3\) in Sect. 4, which are for the nuisance parameters \(\Lambda _C\) and \(F_Z\), respectively, are the same as those of Section 5.2 in Tsiatis (2006), we only derive here the nuisance tangent space \(\Gamma _1\), which is for the nuisance parameter \(\Lambda \), in Theorem 1.

Again, we consider a parametric submodel \(\Lambda _{h_1}(t;\gamma _1) = \int _0^t{ \left\{ 1 + \gamma _1 h_1(u) \right\} \mathrm{{d}}\Lambda _0(u) } = \int _0^t{ \left\{ 1 + \gamma _1 h_1(u) \right\} \lambda _0(u) \mathrm{{d}}u }\), where \(h_1(u)\) is an arbitrary p-dimensional bounded function. The contribution to the log-likelihood function under the parametric submodel is

Taking derivatives of \(\ell _n(\beta , \gamma _1; h_1)\) with respect to \(\gamma _1\), and evaluating \(\beta =\beta _0\) and \(\gamma _1=0\), we obtain the score function

Then, the score function for this parametric submodel is in the nuisance tangent space \(\Gamma _1\). Since any element of \(\mathcal {H}\) can be approximated by a sequence of bounded function (Tsiatis 2006, Section 4), the score function with parametric submodel without the boundedness of \(h_1(t)\) is also in \(\Gamma _1\).

For any parametric submodel \(\Lambda (t;\gamma _1) = \int _0^t{ \lambda (u;\gamma _1) \mathrm{{d}}u }\), the score function with respect to \(\gamma _1\), setting \(\gamma _1=0\) and \(\beta =\beta _0\), is expressed as

Then, this score function is in the nuisance tangent space \(\Gamma _1\). On the other hand, we can demonstrate that the score function for the some parametric submodel included in \(\Gamma _1\), such as \(\Lambda _{h_1}(t;\gamma _1) = \int _0^t{ \left\{ 1 + \gamma _1 h_1(u) \right\} \mathrm{{d}}\Lambda _0(u) }\), is an element of a parametric submodel nuisance tangent space. Therefore, it holds that the nuisance tangent space for \(\Lambda (t)\) is equal to \(\Gamma _1\).

\(\Gamma _1 \perp \Gamma _2\) can be easily proved under the assumption (A2) and \(\Gamma _i \perp \Gamma _3 \ (i=1,2)\) can be also proved by \(E[\alpha _i^Th_3(Z)]=0\), where \(\alpha _i \in \Gamma _i\ (i=1,2)\) and \(h_3(Z) \in \Gamma _3\). Then the nuisance tangent space for the nuisance parameter \(\eta =\left\{ \Lambda , \Lambda _C, F_Z \right\} \) is given by the direct sum of three orthogonal spaces, \(\Gamma = \Gamma _1 \oplus \Gamma _2 \oplus \Gamma _3\). The orthogonal complement \(\Gamma ^{\perp }\) is obtained by applying the almost same procedures as the proof of Theorem 5.5 in Tsiatis (2006).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Komukai, S., Hattori, S. Asymptotic justification of maximum likelihood estimation for the proportional excess hazard model in analysis of cancer registry data. Jpn J Stat Data Sci 6, 337–359 (2023). https://doi.org/10.1007/s42081-023-00190-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42081-023-00190-6