Abstract

The satisfied user ratio (SUR) curve for a lossy image compression scheme, e.g., JPEG, characterizes the complementary cumulative distribution function of the just noticeable difference (JND), the smallest distortion level that can be perceived by a subject when a reference image is compared to a distorted one. A sequence of JNDs can be defined with a suitable successive choice of reference images. We propose the first deep learning approach to predict SUR curves. We show how to apply maximum likelihood estimation and the Anderson–Darling test to select a suitable parametric model for the distribution function. We then use deep feature learning to predict samples of the SUR curve and apply the method of least squares to fit the parametric model to the predicted samples. Our deep learning approach relies on a siamese convolutional neural network, transfer learning, and deep feature learning, using pairs consisting of a reference image and a compressed image for training. Experiments on the MCL-JCI dataset showed state-of-the-art performance. For example, the mean Bhattacharyya distances between the predicted and ground truth first, second, and third JND distributions were 0.0810, 0.0702, and 0.0522, respectively, and the corresponding average absolute differences of the peak signal-to-noise ratio at a median of the first JND distribution were 0.58, 0.69, and 0.58 dB. Further experiments on the JND-Pano dataset showed that the method transfers well to high resolution panoramic images viewed on head-mounted displays.

Introduction

Image compression is typically used to meet constraints on transmission bandwidth and storage space. The quality of a compressed image is quantitatively determined by encoding parameters, e.g., the quality factor (QF) in JPEG compression. When images are compressed, artifacts such as blocking and ringing may appear and affect the visual quality experienced by the users. The satisfied user ratio (SUR) is the fraction of users that do not perceive any distortion when comparing the original image to its compressed version. The constraint on the SUR may vary according to the application.

Determining the relationship between the encoding parameter and the SUR is a challenging task. The conventional method consists of three steps. First, the source image is compressed multiple times at different bitrates. Next, a group of subjects is asked to identify the smallest distortion level that they can perceive. A subject cannot notice the distortion until it reaches a certain level. This just noticeable difference (JND) level differs from one subject to another due to individual variations in the physiological and visual attention mechanisms. Finally, the overall SUR for the image is obtained by statistical analysis. Following this procedure, several subjective quality studies were conducted and yielded JND-based image and video databases, e.g., MCL-JCI [14], JND-Pano [24], SIAT-JSSI [7], SIAT-JASI [7], MCL-JCV [32], and VideoSet [34]. Subjective visual quality assessment studies are reliable but time-consuming and expensive. In contrast, objective (algorithmic) SUR estimation can work efficiently at no extra annotation cost.

In recent years, deep learning has made tremendous progress in computer vision tasks such as image classification [10, 31], object detection [22, 29], and image quality assessment (IQA) [2, 12, 35]. Instead of carefully designing handcrafted features, deep learning-based methods automatically discover representations from raw image data that are most suitable for the specific tasks, and can improve the performance significantly.

Inspired by these findings, we propose a novel deep learning approach to predict the relationship between the SUR and the encoding parameter (or distortion level) for compressed images. Given a pristine image and its distorted versions, we first use a siamese network [3, 5] to predict the SUR at each distortion level. Then we apply the least squares method to fit a parametric model to the predicted values and use the graph of this model as the SUR curve.

The main contributions of our work are as follows:

1. We exploit maximum likelihood estimation (MLE) and the Anderson–Darling test to select the most suitable parametric distribution for SUR modelling, instead of defaulting to the normal distribution as all previous works did.

2. We propose a deep learning architecture that automatically predicts samples of the SUR curves of compressed images, followed by a regression step that yields a parametric SUR model.

3. We improve the performance of our model by transferring features learned on a similar prediction task. We first train the proposed model independently on an IQA task. Given the images for SUR prediction, we extract multi-level spatially pooled (MLSP) [11] features from the learned model, on which a shallow regression network is then trained to predict the SUR value for a given image pair.

Compared to our previous work [6], our new contributions are as follows. (1) We optimize the proposed architecture and apply feature learning instead of fine-tuning, significantly decreasing the computational cost and improving performance. (2) We use MLE and the Anderson–Darling test to select the JND distribution model instead of assuming it to be Gaussian. (3) We conduct more experiments on the MCL-JCI dataset to demonstrate the effectiveness of our model, providing results not only for the first JND but also for the second and third JNDs. (4) We add experiments on the JND-Pano dataset, showing that the method transfers well to high-resolution panoramic images viewed on head-mounted displays.

Definitions

We consider a lossy image compression scheme that produces monotonically increasing distortion magnitudes as a function of an encoding parameter. The metric for the distortion magnitude may be the mean squared error, and the encoding parameter is assumed to take only a finite number of values. For example, in JPEG, the encoding parameter is the quality factor \(\text {QF} \in \{1,\ldots ,100\}\). A value of QF corresponds to the distortion level \(n=101-\text {QF}\), where \(n=1\) is the smallest and \(n=100\) is the largest distortion level.

Definition 1

(kth JND) For a given pristine image I[0], we associate distorted images \(I[n], \; n=1,\ldots , N\) corresponding to distortion levels \(n=1, \ldots , N\). Let \(\text {JND}_0\) be the (trivial) random variable with probability \({\mathbb{P}}(\text {JND}_0=0) = 1\). The kth JND, which we denote by \(\text {JND}_k, \; k \ge 1\), is a random variable whose value is the smallest distortion level that can be perceived by an observer when the image \(I[\text {JND}_{k-1}]\) is compared to the images I[n], \(n > \text {JND}_{k-1}\).

For simplicity of notation, the random variable \(\text {JND}_k\) will be denoted by JND when there is no risk of confusion.

Definition 2

(p% \({JND}\)) The p% \(\text {JND}\) is the smallest integer in the set \(\{1,2,\ldots , N\}\) for which the cumulative distribution function of \(\text {JND}\) is greater than or equal to \(\frac{p}{100}\).
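To make Definition 2 concrete, the following minimal Python sketch computes the p% JND from raw JND samples via the empirical CDF. The function name `p_percent_jnd` and the sample values are hypothetical; later in the paper the CDF comes from a fitted parametric model rather than from raw samples.

```python
import numpy as np


def p_percent_jnd(jnd_samples, p, n_levels=100):
    """Return the p% JND: the smallest level n in {1, ..., n_levels}
    whose empirical CDF, P(JND <= n), is at least p/100."""
    samples = np.asarray(jnd_samples)
    levels = np.arange(1, n_levels + 1)
    # Empirical CDF evaluated at every distortion level (rows = levels).
    cdf = (samples[None, :] <= levels[:, None]).mean(axis=1)
    hits = np.flatnonzero(cdf >= p / 100)
    return int(levels[hits[0]]) if hits.size else None


# Hypothetical first-JND samples (distortion levels) from eight observers.
samples = [12, 15, 15, 20, 22, 25, 30, 31]
print(p_percent_jnd(samples, 50), p_percent_jnd(samples, 75))  # 20 25
```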

Samples of the JNDs can be generated iteratively. The original pristine image I[0] serves as the first anchor image. The increasingly distorted images \(I[n], \; n=1,2, \ldots\), are displayed sequentially together with the anchor image until a distortion can be perceived. This yields a sample of the first JND. This first image with a noticeable distortion then replaces the anchor image, to be compared with the remaining distorted images sequentially until again a noticeable difference is detected, yielding a sample of the second JND, and so on.
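The iterative procedure above can be sketched in Python as follows. The observer is modeled by a hypothetical callback `perceives(anchor, n)`; the toy threshold observer in the usage line is purely an assumption for illustration and is not a model of real subjects.

```python
from typing import Callable, List


def sample_jnd_sequence(perceives: Callable[[int, int], bool],
                        n_levels: int = 100, max_jnds: int = 3) -> List[int]:
    """Collect one observer's samples of JND_1, JND_2, ... by sequential comparison.

    perceives(anchor, n) is a hypothetical stand-in for the observer's answer:
    True if I[n] looks different from the anchor image I[anchor].
    """
    jnds: List[int] = []
    anchor = 0  # the pristine image I[0] is the first anchor
    while len(jnds) < max_jnds:
        # Show I[anchor + 1], I[anchor + 2], ... until a distortion is noticed.
        hit = next((n for n in range(anchor + 1, n_levels + 1)
                    if perceives(anchor, n)), None)
        if hit is None:  # no further noticeable difference in the sequence
            break
        jnds.append(hit)
        anchor = hit  # the first noticeably distorted image becomes the new anchor
    return jnds


# Toy observer (assumption): notices a difference once the level gap reaches 40.
print(sample_jnd_sequence(lambda a, n: n - a >= 40))  # [40, 80]
```

The loop stops early when no remaining image is distinguishable from the current anchor, which is how the number of non-trivial JNDs becomes sequence-dependent.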

The set of random variables \(\{\text {JND}_k \; | \; k \ge 1\}\) in Definition 1 is a discrete finite stochastic process. The number of (non-trivial) JNDs of the stochastic process depends on the image sequence at hand. It is limited by the smallest number of JNDs that a random observer is able to perceive for the given image sequence. In practical applications, the first JND is the most important one. At subsequent JNDs, the image quality has already been degraded several times relative to the original, which may severely limit the practical usefulness of the corresponding images. In this paper, we consider only the first three JNDs, which was also the choice made in [34].

Definition 1 is intended for sequences of increasingly distorted images, and the prototype application is given by image compression with decreasing bitrate. However, it can also be applied to other media like sequences of video clips or, more generally, to sequences of perceptual stimuli of any kind. Moreover, these sequences need not be sequences with increasing distortion. JNDs may also be useful, for example, to study the effect of parameter-dependent image enhancement methods.

The notion of a sequence of JNDs obtained by the iterative procedure considered in this paper was introduced to the field of image and video quality assessment only recently [21]. In that contribution, an empirical study of five sequences of compressed images and video clips was carried out, with 20 subjects contributing their sequences of JND samples for each set of stimuli. In the follow-up paper [14], a larger dataset of 50 source images was introduced, including subjective tests with 30 participants, providing the MCL-JCI dataset, which we use in this study. Neither of these contributions gave a formal definition of JNDs. However, their experimental protocols suggest that the JND random variables were sampled in the spirit of Definition 1.

At this point, it is important to note the common (but slightly different) usage of JNDs in psychophysics. Those JND scales are based on the long-standing principle in psychology that equally noticed differences are perceptually equal, unless always or never noticed. This linear scale of JNDs has also been used as the unit of perceptual quality scales for images in [17, 28]. For subjective quality assessment, an input image is compared to a quality ruler, consisting of a series of reference images varying in a single attribute (sharpness), with known and fixed quality differences between the samples, given by a certain number of JND units.

Another application of this JND scale was given in a later paper [34], where the \(k\hbox {th}\) JND for \(k>1\) was obtained differently from the procedure outlined in Definition 1, namely by using the same anchor image for all observers. This anchor image was chosen as the one corresponding to the 25% quantile of the previous JND, i.e., the point at which the fraction of observers that cannot perceive a noticeable difference drops below 75%.

To conclude, let us state that the classical psychophysical JND scale produces a perceptual distance of one JND unit between the reference image and the first JND from Definition 1, as expected. However, it is not hard to see that for the \(k\hbox {th}\) JND, \(k>1\), the perceptual distance to the reference image according to the common psychophysical JND scale may differ from the expected value, i.e., k.

Definition 3

(SUR function and curve) The SUR function is the complementary cumulative distribution function (CCDF) of the JND. The graph of this function is called the SUR curve.

The SUR function, which we denote by \(\text {SUR}(\cdot )\), gives the proportion of the sample population for which the JND is greater than a given value. That is,

\[ \text {SUR}(n) = {\mathbb{P}}(\text {JND} > n) = 1 - {\mathbb{P}}(\text {JND} \le n). \]

Since the range of the JND is discrete (i.e., integers \(\{1,2, \ldots , N\}\)), the SUR function is a monotonically decreasing step function.
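As a small illustration of Definition 3, the Python sketch below computes the empirical SUR step function from a set of JND samples; the function name and the sample values are hypothetical.

```python
import numpy as np


def empirical_sur(jnd_samples, n_levels=100):
    """Empirical SUR(n) = P(JND > n), i.e. the fraction of JND samples
    strictly greater than n, for n = 1, ..., n_levels (index n - 1)."""
    samples = np.asarray(jnd_samples)
    levels = np.arange(1, n_levels + 1)
    return (samples[None, :] > levels[:, None]).mean(axis=1)


sur = empirical_sur([12, 15, 15, 20, 22, 25, 30, 31])
print(sur[0], sur[14], sur[30])  # SUR(1), SUR(15), SUR(31) -> 1.0 0.625 0.0
```

With only eight samples the curve can take at most nine distinct values, which is why the paper later replaces it with a fitted parametric model.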

The SUR curve can be used to determine the highest distortion level for which a given proportion of the population is satisfied, in the sense that it cannot perceive a distortion. Formally, we apply the definition of the SUR function and curve also for the second and third JND, although for these cases, an interpretation as a proportion of “satisfied” users is not appropriate.

Definition 4

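(p% SUR) The p% SUR is the largest integer in the set \(\{1,2, \ldots , N\}\) for which the SUR function is greater than or equal to \(\frac{p}{100}\), i.e.,

\[ p\%\ \text {SUR} = \max \left\{ n \in \{1,2,\ldots ,N\} \,:\, \text {SUR}(n) \ge \frac{p}{100} \right\} . \]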

If we set \(p = 75\), we obtain the 75% SUR used in [33].
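Definition 4 translates directly into code. The following minimal Python sketch (hypothetical function name and data) returns the p% SUR from an array holding SUR(1), ..., SUR(N).

```python
import numpy as np


def p_percent_sur(sur, p):
    """Return the p% SUR: the largest level n (1-based) with SUR(n) >= p/100.

    `sur` holds SUR(1), ..., SUR(N) at indices 0, ..., N - 1."""
    sur = np.asarray(sur, dtype=float)
    hits = np.flatnonzero(sur >= p / 100)
    return int(hits[-1]) + 1 if hits.size else None


# Empirical SUR of hypothetical JND samples: fraction of samples greater than n.
samples = np.array([12, 15, 15, 20, 22, 25, 30, 31])
sur = np.array([(samples > n).mean() for n in range(1, 101)])
print(p_percent_sur(sur, 75), p_percent_sur(sur, 50))  # 14 21
```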

Related works

Existing research on JND can be classified into three main areas: 1. subjective quality assessment studies to collect JND annotations, 2. mathematical modeling of the JND probability distribution and SUR function, and 3. prediction of the probability distribution of the JND and the SUR curve for a given image or video.

Subjective quality assessment

The JND prediction problem has been addressed for various media, including images and videos, and for different types of applied distortions. Existing JND databases have made this possible.

Jin et al. [14] conducted subjective quality assessment tests to collect JND samples for JPEG compressed images and built a JND-based image dataset called MCL-JCI. The tests involved 150 participants and 50 source images. With JND samples for a given image collected from 30 subjects, they found that humans can distinguish only a few distortion levels (five to seven). Since subjective tests are time-consuming and expensive, a binary search algorithm was proposed to speed up the annotation procedure. The search procedure helps to quickly narrow down the first noticeable difference, resulting in a smaller number of subjective comparisons than the alternative linear search.
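The speed-up from binary search can be illustrated with a generic sketch. It assumes an idealized observer whose answers are monotone in the distortion level, which real subjects need not satisfy; it is a textbook binary search, not a reproduction of the exact protocol in [14], and the callback `perceives` is hypothetical.

```python
from typing import Callable, Optional, Tuple


def binary_search_jnd(perceives: Callable[[int], bool],
                      n_levels: int = 100) -> Tuple[Optional[int], int]:
    """Find the smallest level n in 1..n_levels at which perceives(n) is True.

    Assumes responses are monotone in n (never noticed below the JND, always
    noticed from the JND on). Returns (JND or None, number of comparisons)."""
    lo, hi = 1, n_levels
    jnd, comparisons = None, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        comparisons += 1
        if perceives(mid):   # noticed: the JND is at mid or below
            jnd, hi = mid, mid - 1
        else:                # not noticed: the JND lies above mid
            lo = mid + 1
    return jnd, comparisons


print(binary_search_jnd(lambda n: n >= 37))  # (37, 7)
```

Seven comparisons suffice here, whereas a linear search starting at level 1 would need 37.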

Liu et al. [24] created a JND dataset for panoramic images viewed using a head-mounted display. JPEG compressed versions of 40 source images of resolution \(5000 \times 2500\) were inspected by at least 25 observers each. An aggressive binary search procedure was used to identify the corresponding first JNDs.

Wang et al. [32] conducted subjective tests on JND for compressed videos using H.264/AVC coding. They collected JND samples from 50 subjects, building a JND-based video dataset called MCL-JCV.

Wang et al. [34] built a large-scale JND video dataset called VideoSet for 220 5-s source videos in four resolutions (1080p, 720p, 540p, 360p). Distorted versions of the videos were obtained with H.264/AVC compression. To obtain the JND sample from a given subject, they used a modified binary search procedure comparable to the ones adopted in [14] and [32]. For each subject, samples from the first three JNDs were collected.

Fan et al. [7] studied the JND of symmetrically and asymmetrically compressed stereoscopic images for JPEG2000 and H.265 intra-coding. They generated two JND-based stereo image datasets, one for symmetric compression and one for asymmetric compression.

We are interested in studying a widely encountered type of distortion, namely JPEG compression. We therefore rely on the MCL-JCI and JND-Pano datasets, which provide JND values for JPEG compressed images.

Mathematical modeling of JND and SUR

In previous works, the distribution of JND values has been modeled as a normal distribution [32, 34], some works have studied its skewness and kurtosis, and others modeled it as a Gaussian mixture [14].

In [32] and [34], a normal distribution was used to model the first three JNDs. In [34], the Jarque-Bera test was used to check whether the JND samples have the skewness and kurtosis matching a normal distribution. Almost all videos passed the normality test.

In [14], the JND samples are classified into three groups (low QF, middle QF, high QF), and it is assumed that the JND distribution for each group is a Gaussian mixture with a finite number of components. The parameters of the Gaussian mixture model (GMM) are determined with the expectation maximization algorithm. The number of components of the GMM is determined with the Bayesian information criterion. However, this methodology is overly complicated, ambiguous in the choice of the three groups, and not justified.

In [7], the authors assumed that the JND on the QF scale was normally distributed but also noted that an empirical test (\(\beta _2\) test [27]) found that only 29 of the 50 source images passed the normality test. In the “Modeling the SUR function” section, we show that other models are more suitable and propose a method to select one, without requiring a complex mixture model.

Prediction of JND and SUR

JND studies evaluate the personal (user-specific) JND and accumulate a distribution of JND values over a population of participants. Existing works have proposed to predict various aspects of the JND distribution, such as the mean value of the JND [13], the 75% JND value [23], or the actual SUR curve as the Q-function of the fitted normal distribution [33].

Huang et al. [13] propose a support vector regression (SVR)-based model to predict the mean value of the JND for HEVC encoded videos. They exploit the masking effect and a spatial-temporal sensitivity map based on spatial, saliency, luminance, and temporal information.

Wang et al. [33] also use SVR to predict the SUR curve. The SVR is fed a feature vector consisting of the concatenation of two feature vectors. The first one is based on the computation of video multi-method assessment fusion (VMAF) [19] quality indices on spatial-temporal segments of the compressed video, while the second one is based on spatial randomness and temporal randomness features that measure the masking effect in the corresponding segments of the source video. For the dataset VideoSet, the average prediction error at the 75% SUR between the predicted quantization parameter (QP) value and the ground truth QP value was found to be 1.218, 1.273, 1.345, and 1.605 for resolutions 1080p, 720p, 540p, and 360p, respectively.

Zhang et al. [36] use Gaussian process regression to model the relationship between the SUR curve and the bitrate for video compression. Three types of features called visual masking features, recompression features, and basic attribute features are used for training and prediction. Visual masking features consist of one spatial and one temporal feature. Recompression features consist of four different bitrates and one variation of the VMAF score over two different bitrates. Basic attribute features are computed from the anchor video and consist of one VMAF score, the frame rate, the resolution, and the bitrate. Experimental results for VideoSet show that the method outperforms the method in [33].

Hadizadeh et al. [9] build an objective predictor (binary classifier) to determine whether a reference image is perceptually distinguishable from a version contaminated with noise according to a JND model. Given a reference image and its noisy version, they use sparse coding to extract a feature vector and feed it into a multi-layer neural network for the classification. The network is trained on a dataset obtained through subjective experiments with 15 subjects and 999 reference images. The predictor achieves a classification accuracy of about 97% on this dataset.

Liu et al. [23] propose a deep learning technique to predict the JND for image compression. JND prediction is seen as a multi-class classification problem, which is converted into several binary classification problems. The binary classifier is based on deep learning and predicts whether a distorted image is perceptually lossy with respect to a reference. A sliding window technique is used to deal with inconsistencies in the multiple binary classifications. Experimental results for MCL-JCI show that the absolute prediction error of the proposed model is 0.79 dB peak signal-to-noise ratio (PSNR) on average.

Our work improves the modeling and prediction of the JND distribution. We use a deep learning approach. For a general introduction to deep learning we recommend the book [8]. Unlike Liu et al. [23], we formulate the SUR curve prediction problem as a regression problem. We find a better suited distribution type that matches the empirical JND samples and predict the entire SUR curve, not just a statistic.

Modeling the SUR function

We defined the JND as a discrete random variable and the SUR function as its CCDF, which is a monotonically decreasing step function. In practice, the SUR function must be estimated from sparse and noisy data, i.e., from a small set of subjective JND measurements. We generalize from these samples by fitting a suitable mathematical model to the data. For this purpose, we consider a set of common continuous random variables that have a mathematical form defined by parameters. After choosing the best fitting one, we evaluate the corresponding CCDFs at the integer distortion levels. Thereby, we again obtain a discrete and fitted JND random variable, which replaces the noisy original one for all subsequent steps. The continuous JND distribution provides the ground truth p% JNDs and SURs for the images of the given JND dataset (see Fig. 1 for an illustration of the procedure).

Fig. 1. Illustration of how the ground-truth output values for our prediction model are derived. We start with samples for a JND level from the MCL-JCI dataset [14], summarized by the histogram in dark blue. Given an analytical distribution type, we fit an analytical SUR curve, shown in red, to the empirical samples. The blue dots show the ground-truth analytical samples that are used to train our prediction model.

To select the most suitable distribution for a given dataset of samples, we use maximum likelihood estimation (MLE) and the Anderson–Darling (A–D) test. MLE allows us to estimate the parameters of the probabilistic models and also to rank different models according to increasing negative log-likelihood, averaged over the source images in the dataset. For a given distribution model and a set of corresponding samples, the A–D test can be applied for the null hypothesis that the JND samples were drawn from the model at a specified significance level (5% in our experimental settings). This allows us to rank the models according to the number of times the null hypothesis was rejected. The A–D test was a suitable goodness of fit test for the datasets considered in this paper. Unlike the chi-squared test, it can be used with a small number of samples. It is also more accurate than the Kolmogorov–Smirnov test when the distribution parameters are estimated from the data [26].
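The model-selection step can be sketched with SciPy for a single image. This is an illustration under assumptions, not the authors' Matlab procedure: only four candidate distributions are used instead of 20, the A–D statistic \(A^2\) is computed directly from its definition, and p-values are omitted because, when parameters are estimated from the data, the critical values depend on the distribution and require tables or Monte Carlo simulation. Note that SciPy's `genextreme` shape parameter `c` corresponds to \(-\xi\) in the notation of this paper.

```python
import numpy as np
from scipy import stats


def anderson_darling_stat(samples, cdf):
    """A^2 statistic of the samples against a fully specified continuous CDF."""
    x = np.sort(np.asarray(samples, dtype=float))
    n = x.size
    # Clip to avoid log(0) for samples at the edge of the fitted support.
    u = np.clip(cdf(x), 1e-12, 1 - 1e-12)
    i = np.arange(1, n + 1)
    return -n - np.mean((2 * i - 1) * (np.log(u) + np.log1p(-u[::-1])))


def rank_models(samples, candidates=("norm", "genextreme", "logistic", "gumbel_r")):
    """Fit each scipy.stats distribution by MLE; return (name, NLL, A^2)
    tuples sorted by increasing negative log-likelihood."""
    results = []
    for name in candidates:
        dist = getattr(stats, name)
        params = dist.fit(samples)  # maximum likelihood estimates
        nll = -np.sum(dist.logpdf(samples, *params))
        a2 = anderson_darling_stat(samples, lambda x: dist.cdf(x, *params))
        results.append((name, float(nll), float(a2)))
    return sorted(results, key=lambda r: r[1])


# Synthetic QF samples standing in for one image's first-JND data.
rng = np.random.default_rng(0)
qf = stats.genextreme.rvs(c=0.2, loc=60, scale=8, size=30, random_state=rng)
for name, nll, a2 in rank_models(qf):
    print(f"{name:>10s}  NLL={nll:7.2f}  A2={a2:5.3f}")
```

In the paper, the negative log-likelihood is averaged over all source images of a dataset and the A–D ranking counts rejections at the 5% level; this sketch only shows the per-image computations.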

We considered the 20 parametric continuous probability distribution models that are available in Matlab (R2019b) and fitted them to the JND samples of the MCL-JCI [14] and JND-Pano [24] datasets, expressed in terms of distortion levels and also in the reverse orientation, i.e., with respect to the corresponding JPEG quality factors QF. Considering the two datasets together, the generalized extreme value (GEV) distribution, applied for the QF data, was the most suitable model.

Table 1 shows the results for the QF data. For the 50 source images in the MCL-JCI dataset, the GEV distribution ranked second in terms of both the negative log-likelihood and the A–D test for the first JND. In contrast, the Gaussian distribution ranked 12th for the negative log-likelihood criterion and 4th for the A–D test. For the JND-Pano dataset, the GEV distribution ranked third for both the negative log-likelihood and the A–D test.

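The probability density function (PDF) of the GEV distribution is given by

\[ f(x\,|\,\xi ,\mu ,\sigma ) = \frac{1}{\sigma }\left( 1+\xi \frac{x-\mu }{\sigma }\right) ^{-1-1/\xi } \exp \left( -\left( 1+\xi \frac{x-\mu }{\sigma }\right) ^{-1/\xi }\right) , \]

where \(x \in {\mathbb{R}}\) satisfies

\[ 1+\xi \frac{x-\mu }{\sigma } > 0. \]

Here, \(\xi \ne 0\), \(\mu\), and \(\sigma > 0\) are called the shape parameter, location parameter, and scale parameter, respectively.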

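Since MLE converged better for the QF data than for the distortion level data, we built our models on the QF data. That is, we used the PDF

\[ f_Y(y\,|\,\xi ,\mu ,\sigma ) = f_X(101-y) \]

to model the JND distribution, where \(f_X\) is the PDF of the GEV that models the QF data. Note that \(f_Y\) is not the PDF of a GEV distribution. The CCDF of \(f_Y\),

\[ {\overline{F}}(y\,|\,\xi ,\mu ,\sigma ) = \int _y^{\infty } f_Y(t\,|\,\xi ,\mu ,\sigma )\,\mathrm{d}t = \exp \left( -\left( 1+\xi \frac{101-y-\mu }{\sigma }\right) ^{-1/\xi }\right) , \quad 1+\xi \frac{101-y-\mu }{\sigma } > 0, \]

served as a model for the SUR function, where we have copied the GEV parameters of \(f_X\) into the notation of \(f_Y\) and \({\overline{F}}\) for convenience.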

Finally, to return to a discrete model for the JND, we sample the continuous model \({\overline{F}}(y\,|\,\xi ,\mu ,\sigma )\) at integer distortion levels \(y=1,\ldots ,100\) and arrive at the piecewise constant SUR function

\[ \text {SUR}(y) = {\overline{F}}(\lfloor y \rfloor \,|\,\xi ,\mu ,\sigma ), \]

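where \(\lfloor y \rfloor\) denotes the greatest integer less than or equal to y. For completeness, the modeled JND is the discrete random variable whose CCDF is this SUR function, i.e., for integer n,

\[ {\mathbb{P}}(\text {JND} = n) = \text {SUR}(n-1) - \text {SUR}(n). \]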
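The modeled SUR at the integer distortion levels can be evaluated with a few lines of NumPy. This is a sketch under the conventions above (\(\text{QF} = 101 - n\), shape \(\xi\) with the sign used in this paper); the parameter values in the usage line are hypothetical, and the case \(\xi \to 0\) falls back to the Gumbel limit.

```python
import numpy as np


def gev_cdf(x, xi, mu, sigma):
    """GEV CDF F_X(x | xi, mu, sigma), xi = shape (sign convention of the text)."""
    z = (np.asarray(x, dtype=float) - mu) / sigma
    if abs(xi) < 1e-8:  # Gumbel limit as xi -> 0
        return np.exp(-np.exp(-z))
    t = 1.0 + xi * z
    inside = t > 0
    t_safe = np.where(inside, t, 1.0)  # avoid powers of non-positive numbers
    # Outside the support the CDF is 0 (below a lower bound, xi > 0)
    # or 1 (above an upper bound, xi < 0).
    return np.where(inside, np.exp(-t_safe ** (-1.0 / xi)), 0.0 if xi > 0 else 1.0)


def sur_from_gev(xi, mu, sigma, n_levels=100):
    """Modeled SUR(n) = F_bar(n | xi, mu, sigma) = F_X(101 - n) at n = 1..n_levels,
    where the GEV parameters were fitted to the QF data (QF = 101 - n)."""
    n = np.arange(1, n_levels + 1)
    return gev_cdf(101 - n, xi, mu, sigma)


sur = sur_from_gev(xi=-0.2, mu=60.0, sigma=8.0)  # hypothetical parameters
print(round(float(sur[40]), 4))  # SUR(41) = F_X(60) = exp(-1) -> 0.3679
```

The identity \({\overline{F}}(y) = F_X(101-y)\) follows from the substitution \(s = 101 - t\) in the integral defining the CCDF, so no numerical integration is needed.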

Fig. 2. SUR curve and 75% SUR of the first three JNDs. The data is for the fifth source image in the MCL-JCI dataset [14].

Fig. 3. SUR curve and 50% SUR of the first three JNDs. The data is for the 14th source image in the MCL-JCI dataset [14].

Figure 2a shows the histogram of the first JND for the fifth image in the MCL-JCI dataset, the corresponding empirical SUR curve, the model obtained with MLE of the GEV distribution for the QF data, the corresponding SUR curve, and the 75% SUR. Figure 2b, c show similar results for the second and third JND, respectively. Figure 3 shows the results for the 14th image in the MCL-JCI dataset, highlighting the 50% SUR instead of the 75% SUR.

Deep learning for SUR prediction

Structure of training data

We need to predict SUR curves that are calculated from subjective JND studies, given a reference image and a distortion type, e.g., JPEG compression. In order to train a good machine learning model, we considered a few ways to present the available information during training. With respect to the inputs, we could present one (the reference) or more input images (reference and distorted images) to the model. The output has to be a representation of the SUR function.

With regard to the outputs, for a reference image I[0] and its distorted versions \(I[1], \ldots , I[N]\) the SUR curve can be represented as \(\text {SUR}(1), \ldots , \text {SUR}(N)\). The \(\text {SUR}\) function can be calculated from the empirical CCDF, or by first fitting an appropriate analytical distribution to the subjective data. In the latter case, the analytical representation can be sampled similarly to the empirical SUR or the model can be trained to predict the parameters of the analytical CCDF.

For the inputs of the model, if we attempted to predict a representation of the SUR curve from a single reference image, we would be ignoring information about the particular type of degradation that was applied to images in the subjective study. The model is expected to learn better when both the reference and its distorted version(s) are considered. Ideally we should provide the model with the reference and all the distorted images as inputs. In this way, using an appropriate learning method, the model has all the information that participants in the experiments had, and is expected to perform the best. However, in this formulation the problem is more difficult to solve, requiring a different learning model and more training data. We simplify it by inputting pairs of images: a reference I[0] and a distorted version I[k], \(k \in \{1, \ldots , N\}\). In this case we have two options for the outputs: 1. either predict the representation of the entire SUR curve (sampled, or parametric) or 2. predict the corresponding \(\text {SUR}(k)\) value. In both cases (1. and 2.), as predictions are independent of each other, the pairwise predictions need to be aggregated into a single SUR curve over all distortion levels for a given reference.

We chose pair-based prediction of the sampled analytical SUR function, as shown in Fig. 1. Predicting the empirical samples of the SUR does not perform as well as predicting the sampled analytical SUR, probably because first fitting a distribution to the subjective data has a denoising effect. Each sample of the SUR is predicted independently, and the overall SUR curve is then estimated from the samples by least-squares fitting.

Problem definition

The regression problem for predicting SUR curves can be formulated as follows. Let \(I_1[0], I_2[0], \ldots , I_K[0]\) be a training set of K pristine reference images. For each reference image \(I_k[0], \; k \in \{1,\ldots ,K\}\), we associate the N distorted images \(I_k[n], \; n=1,\ldots ,N\) corresponding to the N distortion levels \(n=1, \ldots , N\).

Problem

Let \(\text {SUR}_k(\cdot ),\;k=1,\ldots ,K,\) denote the SUR function of image \(I_k[0]\) and its sequence of distorted images \(I_k[1],\ldots , I_k[N]\). Find a regression model \(f_\theta\), parameterized by \(\theta\), such that

\[ f_\theta \big (I_k[0], I_k[n]\big ) \approx \text {SUR}_k(n) \]

for \(k=1,\ldots ,K,~ n = 1,\ldots ,N\).

Proposed model

Subjective studies are usually time-consuming and expensive, which limits JND datasets to relatively small size. With such small data, training a deep model from scratch may be prone to overfitting. To address this limitation we propose a two-stage model that applies transfer learning and feature learning, as depicted in Fig. 4.

Fig. 4. SUR-FeatNet architecture for prediction of the SUR curve. In the first stage (a), a siamese CNN is trained to predict an objective quality score of a distorted image relative to its reference; this task is similar to the SUR prediction task (b) and allows training on a large-scale dataset to address overfitting. In the second stage (b), MLSP features of a reference image and its distorted version are extracted and fed into a shallow regression network that predicts SUR values.

In the first stage (Fig. 4a), a pair of images, namely a pristine image \(I_k[0]\) and a distorted version \(I_k[n]\), is fed into a siamese network that uses an Inception-V3 [31] convolutional neural network (CNN) body with shared weights. The network body is truncated such that the global average pooling (GAP) layer and the final fully connected layer are removed. Each branch of the siamese network yields a stack of 2,048 feature maps. The feature maps are passed through a GAP layer, which outputs a 2,048-dimensional feature vector \({\mathbf{f}}_{\text {gap}}\) for each branch. Then we calculate \(\varDelta {\mathbf{f}}_{\text {gap}}\), the difference between the feature vectors of the pristine image \(I_k[0]\) and the distorted image \(I_k[n]\), i.e.,

\[ \varDelta {\mathbf{f}}_{\text {gap}} = {\mathbf{f}}_{\text {gap}}(I_k[0]) - {\mathbf{f}}_{\text {gap}}(I_k[n]). \]

By concatenating the two feature vectors \({\mathbf{f}}_{\text {gap}}(I_k[0])\), \({\mathbf{f}}_{\text {gap}}(I_k[n])\) and the feature difference vector \(\varDelta {\mathbf{f}}_{\text {gap}}\), we obtain a 6,144-dimensional vector. This vector is passed to three fully connected (FC) layers with 512, 256, and 128 neurons, respectively, where each FC layer is followed by a dropout layer (rate 0.25) to reduce overfitting. The output layer is linear with one neuron and predicts a quality score of the distorted image \(I_k[n]\), whose training target is obtained from a fixed full-reference IQA (FR-IQA) method.
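The shape bookkeeping of the regression head can be checked with a NumPy sketch. It is a forward pass only, with random weights and random stand-ins for the GAP features; the ReLU activation and He-style initialization are assumptions, since the text does not state them, and the helper names are hypothetical.

```python
import numpy as np


def init_head(in_dim, rng, widths=(512, 256, 128)):
    """Random weights for FC layers of the given widths plus a 1-neuron linear output."""
    dims = [in_dim, *widths, 1]
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(dims[:-1], dims[1:])]


def head_forward(layers, x, rng=None, drop=0.25):
    """Forward pass; (inverted) dropout is applied only if an rng is given (training)."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:          # hidden FC layers
            x = np.maximum(x, 0.0)       # ReLU: an assumption, not stated in the text
            if rng is not None:
                keep = rng.random(x.shape) >= drop
                x = x * keep / (1.0 - drop)
    return x[:, 0]                       # linear single-neuron output


def siamese_input(f_ref, f_dist):
    """Concatenate [f(I_ref), f(I_dist), f(I_ref) - f(I_dist)] along the feature axis."""
    return np.concatenate([f_ref, f_dist, f_ref - f_dist], axis=1)


rng = np.random.default_rng(0)
f_ref, f_dist = rng.random((4, 2048)), rng.random((4, 2048))  # stand-ins for GAP features
x = siamese_input(f_ref, f_dist)
scores = head_forward(init_head(x.shape[1], rng), x)
print(x.shape, scores.shape)  # (4, 6144) (4,)
```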

In the second stage (Fig. 4b), we keep the weights of the Inception-V3 body fixed as trained in the first stage. A reference and a distorted image are presented to the Inception-V3 body, and MLSP [11] features \({\mathbf{f}}_{\text {mlsp}}\) with 10,048 components are extracted from each of them. As in the first stage, we concatenate \({\mathbf{f}}_{\text {mlsp}}(I_k[0])\), \({\mathbf{f}}_{\text {mlsp}}(I_k[n])\), and \(\varDelta {\mathbf{f}}_{\text {mlsp}} = {\mathbf{f}}_{\text {mlsp}}(I_k[0])-{\mathbf{f}}_{\text {mlsp}}(I_k[n])\). The concatenated 30,144-dimensional feature vector is passed to an FC head, with the same structure as the FC head in the first stage, to predict the SUR value.
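The feature dimensions above can be reproduced with a small NumPy sketch of MLSP pooling. We assume, as an illustration, that the features are the global averages of the 11 Inception module outputs (`mixed0` to `mixed10` in the Keras InceptionV3 model); their channel counts sum to 10,048. Random arrays stand in for the real activations.

```python
import numpy as np

# Output channels of the 11 "mixed" Inception modules (mixed0 ... mixed10) of the
# Keras InceptionV3 model; pooling all of them is our assumption about which
# layers the MLSP features are taken from.
MIXED_CHANNELS = [256, 288, 288, 768, 768, 768, 768, 768, 1280, 2048, 2048]


def mlsp_features(feature_maps):
    """Global-average-pool each (H, W, C) block output and concatenate the results."""
    return np.concatenate([fm.mean(axis=(0, 1)) for fm in feature_maps])


rng = np.random.default_rng(0)
# Random stand-ins for the block outputs of one image (spatial sizes are arbitrary).
maps = [rng.random((5, 5, c)) for c in MIXED_CHANNELS]
f_ref, f_dist = mlsp_features(maps), mlsp_features([m * 0.9 for m in maps])
triplet = np.concatenate([f_ref, f_dist, f_ref - f_dist])
print(f_ref.size, triplet.size)  # 10048 30144
```

Because the pooled features are computed once and then kept fixed, only the small FC head has to be trained in the second stage.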

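Let \((I_r, I_d, q)\) be an item of the training data, where \(I_r\) and \(I_d\) are the reference image and its distorted version, and q is the FR-IQA score in the first stage and the SUR value in the second stage. Our objective is to minimize the mean absolute error, or L1 loss function,

\[ {\mathcal{L}}(\theta ) = \frac{1}{B}\sum _{i=1}^{B} \left| f_\theta \big (I_r^{(i)}, I_d^{(i)}\big ) - q^{(i)} \right| , \]

where B is the number of training items in a batch and \(f_\theta\) denotes the network.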

Our proposed model, called SUR-FeatNet, has the following properties. First, we train a deep model to predict the FR-IQA score of a distorted image relative to its pristine original. This task is similar to predicting an SUR value, and therefore the features learned in the first stage are expected to be useful for predicting SUR values in the second stage. Because distorted images can easily be generated from a large-scale set of pristine reference images, and their quality scores can be estimated by an FR-IQA method, training a deep model on a large-scale image set to address overfitting becomes feasible.

Second, training on these “locked-in” MLSP features in the second stage, instead of fine-tuning a very large deep network, not only reduces computation time but also prevents forgetting previously learned information, which may lead to better performance on a small dataset.

Prediction of the SUR curve and the JND

For any source image \(I[0]\) with distorted versions \(I[1], \ldots , I[N]\), the network yields a sequence of predicted satisfied user ratios \(\text {SUR}(1), \ldots , \text {SUR}(N)\). Assuming that the JND of the QF data follows a GEV distribution, we estimate the shape parameter \(\xi\), the location parameter \(\mu\), and the scale parameter \(\sigma\) by least-squares fitting,

\[ ({\hat{\xi }},{\hat{\mu }},{\hat{\sigma }}) = \mathop{\arg \min }_{\xi ,\mu ,\sigma } \sum _{n=1}^{N} \left( \text {SUR}(n) - {\overline{F}}(n\,|\,\xi ,\mu ,\sigma ) \right) ^2 . \]

The fitted SUR curve is given by \({\overline{F}}(n\,|\,{\hat{\xi }},{\hat{\mu }},{\hat{\sigma }})\).
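A minimal sketch of this least-squares step, assuming SciPy is available. `gev_sf` implements the GEV survival function \({\overline{F}}\), including the Gumbel limit for \(\xi \to 0\), and the hypothetical helper `fit_sur_curve` fits it to the predicted SUR values with `scipy.optimize.curve_fit`. The initial guess and the parameter bounds are our own illustrative choices, not values from the paper.

```python
import numpy as np
from scipy.optimize import curve_fit


def gev_sf(x, xi, mu, sigma):
    """GEV survival function 1 - F(x | xi, mu, sigma), used as the SUR curve model."""
    z = (np.asarray(x, dtype=float) - mu) / sigma
    if abs(xi) < 1e-8:  # Gumbel limit xi -> 0
        cdf = np.exp(-np.exp(-z))
    else:
        t = 1.0 + xi * z
        inside = t > 0
        cdf = np.exp(-np.where(inside, t, 1.0) ** (-1.0 / xi))
        # Outside the support, F is 0 below the lower bound (xi > 0) or 1 above the upper bound (xi < 0).
        cdf = np.where(inside, cdf, 0.0 if xi > 0 else 1.0)
    return 1.0 - cdf


def fit_sur_curve(sur):
    """Least-squares estimate of (xi, mu, sigma) from predicted SUR(1), ..., SUR(N)."""
    sur = np.asarray(sur, dtype=float)
    n = np.arange(1, len(sur) + 1, dtype=float)
    mu0 = n[np.argmin(np.abs(sur - 0.5))]  # start near the 50% crossing
    p0 = (0.1, mu0, 10.0)
    bounds = ([-1.0, 0.0, 1e-3], [1.0, len(sur) + 1.0, float(len(sur))])
    popt, _ = curve_fit(gev_sf, n, sur, p0=p0, bounds=bounds)
    return popt


# Noise-free check: sample a known GEV survival curve and recover its parameters.
levels = np.arange(1, 101)
sur_pred = gev_sf(levels, -0.05, 40.0, 8.0)
xi_hat, mu_hat, sigma_hat = fit_sur_curve(sur_pred)
print(xi_hat, mu_hat, sigma_hat)
```

With real network outputs, the predicted SUR values are noisy and not necessarily monotone. The least-squares fit projects them onto a smooth, monotonically decreasing curve.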

Experiment

Setup

In our experiments, we used the MCL-JCI dataset [14] and the JND-Pano dataset [24] to evaluate the performance of the proposed method. The MCL-JCI dataset contains 50 pristine images with a resolution of \(1920 \times 1080\). Each pristine image was encoded 100 times by a JPEG encoder with QF decreasing from 100 to 1, corresponding to distortion levels 1 to 100. Including the pristine images, there are thus 5,050 images in total (50 × 101). The JND-Pano dataset contains 40 pristine panoramic images with a resolution of \(5000\times 2500\). As in MCL-JCI, each pristine image was encoded 100 times by a JPEG encoder, resulting in 4,040 images in total (40 × 101).

For each image sequence in MCL-JCI, the annotation provided by each of the \(M = 30\) participants of the study [14] is the QF value corresponding to the first JND (and likewise for the second, third, etc.). For each source image \(I_k[0]~(k=1, \ldots , 50)\) in the MCL-JCI dataset, we modeled its SUR function by fitting a GEV distribution (Eq. (1)) to the given JND samples. We then sampled the fitted SUR model to derive the target values \(\text {SUR}_k(n), k=1,\ldots ,50, n=1,\ldots ,100\) for the deep learning algorithm. Following the same procedure, we derived the target values \(\text {SUR}_k(n), k=1,\ldots ,40, n=1,\ldots ,100\) for the first JND in the JND-Pano dataset, which contains 19 to 21 JND measurements per image (after outlier removal).
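The construction of the training targets can be sketched with SciPy's `genextreme` distribution. The sketch fits a GEV to one image's JND samples by maximum likelihood and then evaluates the survival function at distortion levels 1 to 100. Note that SciPy parameterizes the shape as \(c = -\xi\). The JND samples below are synthetic stand-ins, not MCL-JCI data, and `sur_targets_from_jnd` is a hypothetical helper name.

```python
import numpy as np
from scipy.stats import genextreme


def sur_targets_from_jnd(jnd_levels, n_levels=100):
    """MLE fit of a GEV to JND samples; returns (xi, mu, sigma) and SUR(1..n_levels)."""
    c, loc, scale = genextreme.fit(np.asarray(jnd_levels, dtype=float))
    n = np.arange(1, n_levels + 1)
    sur = genextreme.sf(n, c, loc=loc, scale=scale)  # SUR(n) = 1 - F(n)
    return -c, loc, scale, sur  # SciPy's shape c equals -xi


# Synthetic JND samples (distortion levels) for M = 30 hypothetical subjects.
rng = np.random.default_rng(0)
samples = genextreme.rvs(-0.1, loc=40, scale=8, size=30, random_state=rng)
jnd = np.clip(np.round(samples), 1, 100)
xi_hat, mu_hat, sigma_hat, sur_k = sur_targets_from_jnd(jnd)
```

Sampling the fitted curve, rather than the raw empirical SUR, gives smooth targets at every distortion level, including levels where no subject's JND falls.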

We used k-fold cross validation with \(k=10\) to evaluate the performance. Specifically, each dataset was divided into 10 subsets, each containing a fixed number of source images (five in MCL-JCI and four in JND-Pano) together with all their distorted versions. In each round, one subset was held out as the test set, and the remaining nine subsets were used for training and validation. The overall result is the average of the 10 test results.
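The source-level split can be sketched as follows. The paper does not specify how source images were assigned to folds, so the seeded shuffle is an assumption, and the helper names are hypothetical. The essential property is that all distorted versions of a source image stay in the same fold, so no image content leaks from training into testing.

```python
import random


def source_folds(num_sources, k=10, seed=0):
    """Assign source-image ids 1..num_sources to k disjoint, equally sized folds."""
    if num_sources % k:
        raise ValueError("this sketch expects num_sources to be divisible by k")
    ids = list(range(1, num_sources + 1))
    random.Random(seed).shuffle(ids)
    size = num_sources // k
    return [sorted(ids[i * size:(i + 1) * size]) for i in range(k)]


def split_pairs(folds, test_fold, levels=range(1, 101)):
    """Expand source ids into (source, distortion level) items for train and test."""
    test_ids = set(folds[test_fold])
    train = [(s, n) for f in folds for s in f if s not in test_ids for n in levels]
    test = [(s, n) for s in sorted(test_ids) for n in levels]
    return train, test


mcl_folds = source_folds(50)   # five source images per fold
pano_folds = source_folds(40)  # four source images per fold
train, test = split_pairs(mcl_folds, test_fold=0)
print(len(train), len(test))  # 4500 500
```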

The Adam optimizer [18] was used to train SUR-FeatNet with the default parameters \(\beta _1 = 0.9\) and \(\beta _2 = 0.999\) and a custom learning rate \(\alpha\). We tried \(\alpha = 10^{-1},10^{-2},\dots ,10^{-5}\) and found that \(\alpha = 10^{-5}\) gave the smallest validation loss. Therefore, we set \(\alpha = 10^{-5}\) and trained for 30 epochs. During training, we monitored the absolute error loss on the validation set and saved the best-performing model. Our implementation used the Python Keras library with TensorFlow as a backend [4] and ran on two NVIDIA Titan Xp GPUs with a batch size of 16. The source code for our model is available on GitHub [30].

Strategies to address overfitting

For the first stage of our model, we used the Konstanz artificially distorted image quality set (KADIS-700k) [20]. This dataset has 140,000 pristine images with five degraded versions each, where the distortions were chosen randomly from a set of 25 distortion types. We used an FR-IQA metric to compute objective quality scores for all pairs. For this purpose, we chose MDSI [25], as it was reported to be the best FR-IQA metric when evaluated on multiple benchmark IQA databases. As KADIS-700k is a large-scale set, we trained for only five epochs before MLSP feature extraction.

In addition to transfer learning in the first stage, we applied image augmentation in the second stage to help avoid overfitting. Each original and compressed image in both datasets was split into four non-overlapping patches with a resolution of \(960 \times 540\) in MCL-JCI and \(2500\times 1250\) in JND-Pano. We also cropped one patch of the same resolution from the center of each image. Each patch was assigned the SUR value of its source image. With this augmentation, we obtained 25,250 annotated patches in MCL-JCI and 20,200 in JND-Pano.

After training the networks with these training sets, SUR values were predicted for the test set. To predict the SUR of a distorted image, predictions for its five corresponding patches were generated by the network and averaged.
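The five-patch augmentation and the test-time averaging can be sketched as below. `predict_patch_sur` is a hypothetical stand-in for the trained network, and images are assumed to be NumPy arrays indexed as (row, column). The toy model in the usage example exists only to make the sketch runnable.

```python
import numpy as np


def five_patch_boxes(width, height):
    """Boxes (x, y, w, h): four non-overlapping quadrants plus one centre crop of equal size."""
    pw, ph = width // 2, height // 2
    corners = [(0, 0), (pw, 0), (0, ph), (pw, ph)]
    centre = ((width - pw) // 2, (height - ph) // 2)
    return [(x, y, pw, ph) for x, y in corners + [centre]]


def crop(image, box):
    x, y, w, h = box
    return image[y:y + h, x:x + w]


def predict_image_sur(ref, dist, predict_patch_sur):
    """Average the patch-level SUR predictions over the five patches of an image pair."""
    boxes = five_patch_boxes(ref.shape[1], ref.shape[0])
    preds = [predict_patch_sur(crop(ref, b), crop(dist, b)) for b in boxes]
    return float(np.mean(preds))


boxes = five_patch_boxes(1920, 1080)  # MCL-JCI resolution -> 960 x 540 patches
ref = np.zeros((1080, 1920), dtype=np.float32)
dist = ref + 0.5
# Toy stand-in model: SUR falls with the mean absolute pixel difference.
sur = predict_image_sur(ref, dist, lambda r, d: 1.0 - float(np.abs(r - d).mean()))
print(boxes[-1], sur)  # (480, 270, 960, 540) 0.5
```

The centre crop overlaps all four quadrants, so it adds a fifth view of the central content rather than new pixels.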

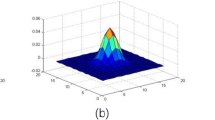

Statistics of experimental results on the MCL-JCI dataset. a Histogram of the Bhattacharyya distance between the predicted and ground truth JND distributions. b Histogram of the absolute error between the predicted and ground truth 50% JNDs. The GEV distribution is used as the distribution model.

Results and analysis for MCL-JCI

Three metrics were used to evaluate the performance of SUR-FeatNet: mean absolute error (MAE) of the 50% JNDs, MAE of the PSNR at the 50% JNDs, and Bhattacharyya distance [1] between the predicted and ground truth JND distributions of type GEV. The ground truth GEV parameters were obtained by using MLE to fit a GEV distribution to the MCL-JCI QF values.
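For reference, the Bhattacharyya distance between two densities p and q is \(D_B = -\ln \int \sqrt{p(x)\,q(x)}\,dx\). The sketch below evaluates it numerically for two GEV distributions given as \((\xi , \mu , \sigma )\) triples. It uses a Riemann sum on a fine grid and works in log space for numerical stability. The integration grid and its limits are our assumptions; a discrete sum over distortion levels 1 to 100 would be an alternative.

```python
import numpy as np


def gev_logpdf(x, xi, mu, sigma):
    """Log-density of the GEV distribution; -inf outside its support."""
    z = (x - mu) / sigma
    if abs(xi) < 1e-8:  # Gumbel limit
        return -np.log(sigma) - z - np.exp(-z)
    t = 1.0 + xi * z
    inside = t > 0
    ts = np.where(inside, t, 1.0)
    logpdf = -np.log(sigma) - (1.0 / xi + 1.0) * np.log(ts) - ts ** (-1.0 / xi)
    return np.where(inside, logpdf, -np.inf)


def bhattacharyya_gev(p, q, lo=-200.0, hi=400.0, num=60001):
    """Bhattacharyya distance between GEV(p) and GEV(q), with p, q = (xi, mu, sigma)."""
    x = np.linspace(lo, hi, num)
    integrand = np.exp(0.5 * (gev_logpdf(x, *p) + gev_logpdf(x, *q)))
    coefficient = integrand.sum() * (x[1] - x[0])  # Riemann sum of sqrt(p * q)
    return -np.log(coefficient)


d_same = bhattacharyya_gev((0.1, 40.0, 8.0), (0.1, 40.0, 8.0))
d_ab = bhattacharyya_gev((0.1, 40.0, 8.0), (0.0, 45.0, 9.0))
d_ba = bhattacharyya_gev((0.0, 45.0, 9.0), (0.1, 40.0, 8.0))
print(d_same, d_ab, d_ba)
```

The distance is 0 for identical distributions and symmetric in its arguments. Unlike the 50% JND error, it reflects differences in the spread and skew of the JND distributions, not only in their medians.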

We first compared the performance of the following four learning schemes.

1. Fine-tune (ImageNet). In the first scheme, we used the architecture of the first stage (Fig. 4a). Its CNN body was initialized with weights pre-trained on ImageNet, and the FC layers were initialized randomly. The network was then fine-tuned for SUR prediction on the MCL-JCI dataset.

2. Fine-tune (KADIS-700k). The second scheme used the same architecture and initialization as the first scheme. However, the network was first fine-tuned on KADIS-700k to predict FR-IQA scores and then fine-tuned on the MCL-JCI dataset.

3. MLSP (ImageNet). In the third scheme, we trained a shallow regression network for SUR prediction on MLSP features extracted from a network pre-trained on ImageNet.

4. MLSP (KADIS-700k). The fourth scheme, which is the one used in our approach, trained the same regression network as the third scheme, but on MLSP features extracted from the network fine-tuned on KADIS-700k rather than from the ImageNet-pretrained network.

Table 2 shows the performance of the four schemes for the first JND. Transfer learning from the image classification domain (ImageNet) to the quality assessment domain (KADIS-700k), combined with MLSP feature learning, outperformed the other three schemes (Tables 3, 4).

Tables 5, 6, and 7 present the detailed results of the first, second, and third JND for each image sequence. Figure 5 shows the statistics. For all three JNDs, more than 75% of the images have a Bhattacharyya distance smaller than 0.1 (Fig. 5a). With respect to the first, second, and third JND, the absolute error in 50% JND was less than 5 for 32, 41, and 45 images, respectively (Fig. 5b). For more than 90% of the images, the absolute error in 50% JND was smaller than 10. Figure 6 compares the PSNR at ground truth and predicted 50% JND for the first, second, and third JNDs. The Pearson linear correlation coefficient (PLCC) was very high, reaching 0.9771, 0.9721, and 0.9741, respectively (Fig. 7).
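The two quantities used in this comparison can be computed as follows. This is a minimal sketch assuming 8-bit images (peak value 255); `psnr` and `plcc` are illustrative helper names.

```python
import numpy as np


def psnr(ref, dist, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = np.asarray(ref, dtype=np.float64) - np.asarray(dist, dtype=np.float64)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def plcc(a, b):
    """Pearson linear correlation coefficient between two score vectors."""
    return float(np.corrcoef(a, b)[0, 1])


print(round(psnr(np.zeros((4, 4)), np.ones((4, 4))), 4))  # 48.1308
print(plcc([30.0, 35.0, 40.0], [31.0, 36.0, 41.0]))
```

PLCC only measures linear agreement. A constant PSNR offset between predicted and ground truth JNDs would still yield a PLCC of 1, which is why the absolute PSNR differences are reported as well.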

Figure 8 shows the two best predictions, ranked by the mean Bhattacharyya distance over the three JNDs. The best prediction was for image 35, with absolute 50% JND errors of 0, 0, and 1, Bhattacharyya distances of 0.0073, 0.0073, and 0.0052, and PSNR differences at the 50% JNDs of 0, 0, and 0.3 dB for the first, second, and third JND, respectively.

The predictions for a few images were less accurate. For example, Fig. 9 presents the two worst predictions. The worst prediction was for image 12, with absolute 50% JND errors of 27, 13, and 2, Bhattacharyya distances of 0.4884, 0.2373, and 0.0167, and PSNR differences at the 50% JNDs of 2.55, 1.88, and 0.34 dB for the first, second, and third JND, respectively. This may be because the training set is too small and not diverse enough for the deep learning algorithm. We expect that this problem can be mitigated by training on a large-scale JND dataset.

The overall performance of SUR-FeatNet is displayed in Table 3. The mean Bhattacharyya distances between the predicted and the ground truth first, second, and third JND distributions were only 0.0810, 0.0702, and 0.0522, respectively.

Statistics of experimental results for the JND-Pano dataset. a Histogram of the Bhattacharyya distance between the predicted and ground truth JND distributions. b Histogram of the absolute error between the predicted and ground truth 50% JNDs. c PSNR at the ground truth JNDs versus the predicted JNDs; the PLCC is 0.9651.

This performance can be compared with that of a simple baseline based on the average PSNR at the 50% JND. For this baseline, we used the same data split as in the k-fold cross validation. In each fold, one subset of source images and their compressed versions was used for testing, and the remaining nine subsets were combined and used for “training”. The 50% JNDs for the test set were predicted as the distortion levels corresponding to the average PSNR at the 50% JNDs in the training set (see Algorithm 1 for the details).
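A minimal sketch of the idea behind this baseline, assuming PSNR curves sampled at integer distortion levels and a nearest-PSNR lookup for the test image. Algorithm 1 may differ in details such as tie-breaking or interpolation, and the function name, the data layout, and the linear toy PSNR curves are hypothetical.

```python
import numpy as np


def baseline_predict_jnd(train_psnr_curves, train_jnd50, test_psnr_curve):
    """Predict a test image's 50% JND from the mean training PSNR at the 50% JND.

    train_psnr_curves: (K, N) PSNR of each training source at distortion levels 1..N
    train_jnd50: (K,) ground truth 50% JND levels (1-based) of the training sources
    test_psnr_curve: (N,) PSNR of the test source at levels 1..N
    """
    curves = np.asarray(train_psnr_curves, dtype=float)
    jnd = np.asarray(train_jnd50, dtype=int)
    target_psnr = curves[np.arange(len(jnd)), jnd - 1].mean()
    # Predicted 50% JND: the level whose PSNR is closest to the average training PSNR.
    return int(np.argmin(np.abs(np.asarray(test_psnr_curve) - target_psnr)) + 1)


levels = np.arange(1, 101)
train_curves = np.vstack([50.0 - 0.3 * levels, 50.0 - 0.3 * levels])
train_jnd50 = [20, 30]  # PSNRs of 44.0 and 41.0 dB -> average 42.5 dB
test_curve = 48.0 - 0.25 * levels
print(baseline_predict_jnd(train_curves, train_jnd50, test_curve))  # 22
```

The baseline ignores image content entirely beyond its PSNR curve, which is what makes it a useful reference point for the learned model.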

Table 3 reports the average prediction errors in terms of distortion levels and PSNR. On MCL-JCI, the 50% JNDs predicted by the baseline method have an average PSNR error close to 3 dB, whereas those predicted by SUR-FeatNet have much smaller errors, ranging from 0.58 to 0.69 dB.

Results and analysis for JND-Pano

The overall performance on the JND-Pano dataset is summarized in Table 3. The average Bhattacharyya distance is 0.1053, the average absolute JND error is 8.63, and the average PSNR difference at the JND is 0.76 dB. This indicates that SUR-FeatNet also works well for panoramic images viewed on head-mounted displays.

Nevertheless, the performance on JND-Pano is not as good as that on MCL-JCI. This is likely because the JND-Pano dataset differs in character from MCL-JCI: the images are panoramic, and thus of much higher resolution, and the JND samples were collected with a different modality, namely head-mounted displays rather than conventional screens. As a result, participants in the subjective JND studies for JND-Pano may be more likely to overlook differences between reference and distorted images. This is supported by an analysis of the JND measurements across all images, which yielded an average standard deviation of 14.93 in JND-Pano, compared to only 10.16 in MCL-JCI.

Table 8 presents the detailed results w.r.t. the first JND for each image sequence in the JND-Pano dataset, and the statistics are shown in Fig. 7.

Comparison with previous work

SUR-FeatNet outperformed the state-of-the-art PW-JND model of Liu et al. [23] for the first and second JNDs (Table 4), except for the mean absolute error of the predicted distortion level of the second JND. No results are reported in [23] for the third JND. Note that in [23] the ground truth JNDs differ slightly from those used for SUR-FeatNet, as they were taken from the model in [14] (Table 2). One advantage of our method over [23] is that it can predict the distortion level at arbitrary percentiles (e.g., at the 75% SUR).
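Because the fitted model is parametric, the distortion level at any SUR percentile has a closed form. Solving \({\overline{F}}(n\,|\,\xi ,\mu ,\sigma ) = s\) for the GEV gives \(n = \mu + \frac{\sigma }{\xi }\left[ (-\ln (1-s))^{-\xi } - 1\right]\), with the Gumbel limit \(n = \mu - \sigma \ln (-\ln (1-s))\) for \(\xi = 0\). The sketch below implements this; `level_at_sur` and `gev_sur` are hypothetical helper names, and the parameter values are illustrative. Setting s = 0.5 gives the 50% JND, and s = 0.75 gives the distortion level at the 75% SUR.

```python
import math


def level_at_sur(s, xi, mu, sigma):
    """Distortion level n at which the fitted SUR curve 1 - F(n) equals s, 0 < s < 1."""
    y = -math.log(1.0 - s)  # F(n) = 1 - s  =>  (1 + xi * z) ** (-1 / xi) = y
    if abs(xi) < 1e-8:  # Gumbel limit
        return mu - sigma * math.log(y)
    return mu + sigma / xi * (y ** (-xi) - 1.0)


def gev_sur(n, xi, mu, sigma):
    """SUR(n) = 1 - F(n) for xi != 0 inside the support, used to check the inversion."""
    t = 1.0 + xi * (n - mu) / sigma
    return 1.0 - math.exp(-t ** (-1.0 / xi))


level_50 = level_at_sur(0.50, 0.1, 40.0, 8.0)
level_75 = level_at_sur(0.75, 0.1, 40.0, 8.0)
print(round(gev_sur(level_50, 0.1, 40.0, 8.0), 6), round(gev_sur(level_75, 0.1, 40.0, 8.0), 6))  # 0.5 0.75
```

A higher target SUR always maps to a lower distortion level, since the SUR curve is decreasing.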

SUR-FeatNet also performed better than our previous model SUR-Net [6] when predicting the 75% SUR for the first JND in the MCL-JCI dataset (Table 4).

Concluding remarks

Summary

To predict SUR curves, we needed a well-behaved model for the curves themselves. This has led us to search for the best fitting distribution for the empirical JND data. A well-fitting distribution improves the modeling capabilities of any predictive model used subsequently.

We proposed a deep learning approach to predict SUR curves for compressed images. In the first stage, pairs of images, each consisting of a reference and a distorted image, are fed into a siamese CNN to predict an objective quality score. In the second stage, extracted MLSP features are fed into a shallow regression network to predict the SUR value of a given image pair.

For a target percentage of satisfied users, the predicted SUR curve can be used to determine the lowest JPEG quality factor (QF) whose compressed image is still indistinguishable from the original for these users, thereby saving bitrate without the need for subjective visual quality assessment.
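As an illustration of this use case, the sketch below picks the lowest QF whose predicted SUR still meets a target, given SUR values at distortion levels 1 to 100. It relies on the dataset convention stated in the experimental setup (QF 100 down to 1 maps to distortion levels 1 to 100, i.e., QF = 101 - n) and on a non-increasing SUR curve, such as the fitted GEV model produces. `qf_for_target_sur` is a hypothetical helper, and the linear curve in the example is a toy stand-in.

```python
def qf_for_target_sur(sur_curve, target):
    """Lowest JPEG QF whose SUR is at least `target`; None if even level 1 falls short.

    sur_curve[n - 1] is the SUR at distortion level n (level n <-> QF = 101 - n).
    """
    best_level = 0
    for n, sur in enumerate(sur_curve, start=1):
        if sur < target:  # SUR is non-increasing, so higher levels cannot recover
            break
        best_level = n
    return None if best_level == 0 else 101 - best_level


sur_curve = [1.0 - n / 100 for n in range(1, 101)]  # toy curve: SUR(n) = 1 - n/100
print(qf_for_target_sur(sur_curve, 0.75))  # 76
```

Stopping at the first level that misses the target keeps the choice conservative if a raw, unfitted prediction curve is not perfectly monotone.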

Limitations and future work

The performance of our model is limited by the small amount of annotated data available. A large-scale JND dataset with many diverse-content reference images is expected to significantly improve the performance of our model, as well as that of other potential models.

We assumed that the image compression scheme is lossy and produces monotonically increasing distortions as a function of an encoding parameter. For noisy input images, compression at high bitrates may smooth the images, leading to a higher perceptual image quality. Consequently, the psychometric function associated with the distortion will not fit the applied model well.

Our model makes independent predictions for each pair of reference image and JPEG compression level. The integration of these predictions is implemented as an additional step. A model that accounts for the relations between the predictions at training time could perform better. One option would be to directly predict the parameters of the analytical distribution from the source image alone, or from the source image and all its distorted versions at once. Such an approach may need more training data.

The proposed method can be easily generalized to predict the SUR curves for images compressed with other encoding methods, or different distortion types.

We provided results for 50% JND and 75% SUR. Results for other percentages can be obtained in a similar way.

References

Bhattacharyya A (1943) On a measure of divergence between two statistical populations defined by their probability distributions. Bull Calcutta Math Soc 35:99–109

Bosse S, Maniry D, Müller KR, Wiegand T, Samek W (2018) Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans Image Process 27(1):206–219

Bromley J, Guyon I, LeCun Y, Säckinger E, Shah R (1993) Signature verification using a siamese time delay neural network. Adv neural inf process sys 7(4):737–744

Chollet F et al (2015) Keras. https://keras.io

Chopra S, Hadsell R, LeCun Y (2005) Learning a similarity metric discriminatively, with application to face verification. In: Computer vision and pattern recognition (CVPR), vol 1. IEEE, pp 539–546

Fan C, Lin H, Hosu V, Zhang Y, Jiang Q, Hamzaoui R, Saupe D (2019) SUR-Net: predicting the satisfied user ratio curve for image compression with deep learning. In: Eleventh international conference on quality of multimedia experience (QoMEX). IEEE, pp 1–6

Fan C, Zhang Y, Zhang H, Hamzaoui R, Jiang Q (2019) Picture-level just noticeable difference for symmetrically and asymmetrically compressed stereoscopic images: subjective quality assessment study and datasets. J Vis Commun Image Represent 62:140–151

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT press, Cambridge

Hadizadeh H, Reza Heravi A, Bajic IV, Karami P (2018) A perceptual distinguishability predictor for JND-noise-contaminated images. IEEE Trans Image Process 28:2242–2256

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778

Hosu V, Goldlucke B, Saupe D (2019) Effective aesthetics prediction with multi-level spatially pooled features. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 9375–9383

Hosu V, Lin H, Sziranyi T, Saupe D (2020) KonIQ-10k: an ecologically valid database for deep learning of blind image quality assessment. IEEE Trans Image Process 29:4041–4056. https://doi.org/10.1109/TIP.2020.2967829

Huang Q, Wang H, Lim SC, Kim HY, Jeong SY, Kuo CCJ (2017) Measure and prediction of HEVC perceptually lossy/lossless boundary QP values. In: Data compression conference (DCC), pp 42–51

Jin L, Lin JY, Hu S, Wang H, Wang P, Katsavounidis I, Aaron A, Kuo CCJ (2016) Statistical study on perceived JPEG image quality via MCL-JCI dataset construction and analysis. In: IS&T international symposium on electronic imaging. Image quality and system performance, vol XIII, pp 1–9

Johnson NL, Kotz S, Balakrishnan N (1993) Continuous univariate distributions, vol 1. Wiley, Hoboken

Johnson NL, Kotz S, Balakrishnan N (1994) Continuous univariate distributions, vol 2. Wiley, Hoboken

Keelan BW, Urabe H (2003) ISO 20462: a psychophysical image quality measurement standard. In: The proceeding of SPIE-IS&T electronic imaging, image quality and system performance, vol 5294. International Society for Optics and Photonics, pp 181–189

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

Li Z, Aaron A, Katsavounidis I, Moorthy A, Manohara M (2016) Toward a practical perceptual video quality metric. In: The Netflix Tech Blog, vol 29

Lin H, Hosu V, Saupe D (2019) KADID-10k: a large-scale artificially distorted IQA database. In: Eleventh international conference on quality of multimedia experience (QoMEX). IEEE, pp 1–3

Lin JY, Jin L, Hu S, Katsavounidis I, Li Z, Aaron A, Kuo CCJ (2015) Experimental design and analysis of JND test on coded image/video. In: Applications of digital image processing XXXVIII, vol 9599. International Society for Optics and Photonics, p 95990Z

Lin TY, Goyal P, Girshick R, He K, Dollar P (2017) Focal loss for dense object detection. In: IEEE International conference on computer vision (ICCV), pp 2999–3007

Liu H, Zhang Y, Zhang H, Fan C, Kwong S, Kuo CJ, Fan X (2020) Deep learning-based picture-wise just noticeable distortion prediction model for image compression. IEEE Trans Image Process 29:641–656

Liu X, Chen Z, Wang X, Jiang J, Kwong S (2018) JND-Pano: database for just noticeable difference of JPEG compressed panoramic images. In: Pacific rim conference on multimedia (PCM). Springer, New York, pp 458–468

Nafchi HZ, Shahkolaei A, Hedjam R, Cheriet M (2016) Mean deviation similarity index: efficient and reliable full-reference image quality evaluator. IEEE Access 4:5579–5590

NIST/SEMATECH e-Handbook of Statistical Methods (2020). http://www.itl.nist.gov/div898/handbook

Recommendation ITU-R BT.500-11 (2002) Methodology for the subjective assessment of the quality of television pictures

Redi J, Liu H, Alers H, Zunino R, Heynderickx I (2010) Comparing subjective image quality measurement methods for the creation of public databases. In: Proceeding of SPIE-IS&T electronic imaging, image quality and system performance VII, vol 7529. International Society for Optics and Photonics, p 752903

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection. In: IEEE conference on computer vision and pattern recognition (CVPR), pp 779–788

SUR-FeatNet Source Code (2019). https://github.com/Linhanhe/SUR-FeatNet

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: IEEE conference on computer vision and pattern recognition (CVPR), pp 2818–2826

Wang H, Gan W, Hu S, Lin JY, Jin L, Song L, Wang P, Katsavounidis I, Aaron A, Kuo CCJ (2016) MCL-JCV: a JND-based H.264/AVC video quality assessment dataset. In: IEEE international conference on image processing (ICIP), pp 1509–1513

Wang H, Katsavounidis I, Huang Q, Zhou X, Kuo CCJ (2018) Prediction of satisfied user ratio for compressed video. In: IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 6747–6751

Wang H, Katsavounidis I, Zhou J, Park J, Lei S, Zhou X, Pun MO, Jin X, Wang R, Wang X et al (2017) VideoSet: a large-scale compressed video quality dataset based on JND measurement. J Vis Commun Image Represent 46:292–302

Wiedemann O, Hosu V, Lin H, Saupe D (2018) Disregarding the big picture: towards local image quality assessment. In: International conference on quality of multimedia experience (QoMEX)

Zhang X, Yang S, Wang H, Xu W, Kuo CCJ (2020) Satisfied-user-ratio modeling for compressed video. IEEE Trans Image Process 29:3777–3789

Funding

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—Project-ID 251654672–TRR 161 (Project A05).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Lin, H., Hosu, V., Fan, C. et al. SUR-FeatNet: Predicting the satisfied user ratio curve for image compression with deep feature learning. Qual User Exp 5, 5 (2020). https://doi.org/10.1007/s41233-020-00034-1

DOI: https://doi.org/10.1007/s41233-020-00034-1