Abstract

Compositional data (CD) are mostly analyzed using ratios of components and log-ratio transformations so that known multivariate statistical methods can be applied. CD in which some components equal zero therefore pose a problem. Furthermore, when the data are measured longitudinally and appear to come from different sub-populations, the analysis becomes highly complex. Our objective is to build a statistical model addressing structural zeros in longitudinal CD and apply it to the analysis of radiation-induced lung damage (RILD) over time. We propose a two-part mixed-effects model extended to the case where the non-zero components of the vector might come from a two-component mixture population. Maximum likelihood estimates for fixed effects and variance components were calculated by an approximate Fisher scoring procedure based on a sixth-order Laplace approximation. The expectation-maximization (EM) algorithm estimates the mixture model’s probability. This model was used to analyze the radiation therapy effect on tissue change in one patient with non-small cell lung cancer (NSCLC), utilizing five CT scans over 24 months. Instead of using voxel-level data, voxels were grouped into larger subvolumes called patches. Each patch’s data is a CD vector showing proportions of dense, hazy, or normal tissue. The proposed method performed reasonably well in estimating the fixed effects and their variability. However, the model produced biased estimates of the nuisance parameters.

1 Introduction

Compositional data are vectors of non-negative real numbers that represent parts of some whole. A composition \({\varvec{x}}=[x_1,x_2,\ldots ,x_D]\) is thus subject to the constraint \(\sum _{i=1}^{D} x_i=k\), with \(x_i>0\). Usually, the parts of a composition are presented as proportions or percentages (Aitchison, 1986). Common examples include the proportions of different cell types in a patient’s blood, the proportions of nutrients in a patient’s diet, the proportions of the chemical elements in the air, and the proportions of working time spent on different activities.

Compositional data have been commonly analyzed using multivariate data analysis without any transformation. However, analyzing compositional data with traditional multivariate methods can lead to paradoxes and misinterpretations of the results, given the negative bias induced by the constant-sum constraint (Aitchison et al., 2005; Pawlowsky-Glahn et al., 2015). Aitchison (1986) proposed the first methodology with a new geometry to analyze compositional data as relative data, using ratios of components and the log transformation, which are more amenable to unrestricted multivariate statistical models. However, compositional data in which at least one of the components equals zero cannot be passed through these ratio transformations. Some methods for handling this issue have been proposed (Aitchison, 1986; Fry et al., 2000; Bacon-Shone, 2003; Martín-Fernández et al., 2003; Aitchison and Kay, 2003; Martín-Fernández et al., 2012; Chen and Li, 2016). The modified Aitchison zero-replacement method (Fry et al., 2000) is one of the most frequently implemented methods in the analysis of compositional data, and it works properly in the presence of rounding zeros or zeros generated by detection limits in the measurement. Nonetheless, in the presence of structural zeros (true zeros), substituting them with a small amount does not seem entirely adequate.
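To make the zero problem concrete, the following minimal sketch computes the additive log-ratio (alr) transformation with the last part as reference and shows that it is undefined as soon as one part equals zero. The function name `alr` and the example compositions are illustrative assumptions, not taken from the analysis in this paper.

```python
import math

def alr(composition):
    """Additive log-ratio transform using the last part as the reference."""
    *parts, reference = composition
    if reference <= 0 or any(p <= 0 for p in parts):
        raise ValueError("alr is undefined when any part is zero")
    return [math.log(p / reference) for p in parts]

# A strictly positive composition transforms without trouble.
print(alr([0.2, 0.3, 0.5]))

# A composition with a zero part cannot be transformed.
try:
    alr([0.0, 0.3, 0.7])
except ValueError as err:
    print("cannot transform:", err)
```

Raising an error, rather than returning an infinite value, keeps the zero case explicit so that it can be routed to a separate model component.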

We address the issue in the context of zero-inflated longitudinal compositional data that naturally occur in the lung tissue changes of early-stage lung cancer patients receiving radiation treatment (RT), as captured through CT scans. Lung tissue surrounding the tumor, observed as a composition of different levels of radiation damage over time, poses a challenging modelling problem. A similar problem is addressed in Bear and Billheimer (2016), but the context is not longitudinal. Templ et al. (2017) developed a method for outlier and extreme value detection. Silverman et al. (2018) dealt with a very similar inference problem, using a dynamic linear model for longitudinal compositional microbiota data without any special accommodation for zero inflation. The context of lung tissue composition we are dealing with is quite different from other scenarios, and the abundance of zero values significantly restricts the direct application of standard methods. An added complexity is the heterogeneity in the longitudinal pattern, which depicts a split course of temporal change, possibly based on tissue tolerability of radiation therapy. A two-part mixed-effects mixture model for zero-inflated longitudinal compositional data is proposed to deal with the abundance of zeros and the heterogeneous temporal changes. Modeling and analyzing these changes improves our understanding of the responses of lung tissue to high-dose radiotherapy, which in turn relate to long-term clinical outcomes.

Section 2 briefly outlines the proposed two-part mixed-effects mixture model for zero-inflated longitudinal compositional data. In Section 3, we describe the radiographic lung change process following lung cancer radiotherapy, which partly motivated this research. Section 4 summarizes a simulation study to evaluate the model performance based on the data described in Section 3, and the application of the method to the lung cancer data is presented in Section 5. Finally, an overall discussion of the model, its results, usefulness, and areas for further research is presented in Section 6.

2 Two-part Mixed Effects Mixture Model for Zero-Inflated Longitudinal Compositional Data

Consider a three-part compositional data vector \(p_{it}=(d_{it},h_{it},n_{it})\), where \(i=1,\ldots , N\) indexes the observations (N being the total number of observations), t denotes the time point, and \(d_{it}\), \(h_{it}\) and \(n_{it}\) represent the proportion of each of the three parts in observation i at time t. Further, \(0 \le d_{it} \le 1\) and \(0 \le h_{it} \le 1\), and \(n_{it}=1-d_{it}-h_{it}\). This vector is in the form of compositional data measured over time. Now, let \({\varvec{Y}}_{ij}= (d_{ij}/n_{ij},h_{ij}/n_{ij})\) be a transformation of \(p_{it}=(d_{it},h_{it},n_{it})\), where j indexes the time points \(t_{ij}\) at which observation i is measured. Also, assume that \(d_{it}\) and \(h_{it}\) equal zero for most i and t. Hence, modeling these data requires a zero-inflated longitudinal distribution. In addition, the change over time of these parts is rather heterogeneous and thus requires a two-component mixture.

2.1 Model Formulation

Let \({\varvec{Y}}_{ij}\) be a semicontinuous bivariate vector for the i-th (\(i=1,...,N\)) observation at time \(t_{ij}\) (\(j=1,...,n_i\)). Then, this outcome vector can be represented by two processes, an occurrence variable \(U_{ij}\) and an intensity vector \({\varvec{V}}_{ij}\), where \(U_{ij}=1\) if \({\varvec{Y}}_{ij} \ne {\varvec{0}}\) and \(U_{ij}=0\) if \({\varvec{Y}}_{ij} = {\varvec{0}}\),

and the intensity variable \({\varvec{V}}_{ij}=\log ({\varvec{Y}}_{ij})\), when \({\varvec{Y}}_{ij} \ne {\varvec{0}}\). The log transformation is used to make \({\varvec{V}}_{ij}\) approximately normal with an observation-time-specific mean and, at the same time, to handle compositional data. The joint distribution is specified by the distribution of the occurrence variable \(U_{ij}\), and the conditional distribution of \({\varvec{V}}_{ij}\). Suppose \(U_{ij}\) follows a random effects logistic regression model: \(\text {logit}\{P(U_{ij}=1 \mid {\textbf {c}}_{i})\}={\textbf {X}}_{ij}{\varvec{\beta }}+{\textbf {Z}}_{ij}{\textbf {c}}_{i}\) (1),

where \({\textbf {X}}_{ij}\) is a \(1 \times p\) covariate vector for the fixed effects, \({\varvec{\beta }}\) is a \(p \times 1\) fixed effects regression coefficient vector, \({\textbf {Z}}_{ij}\) is a \(1 \times q\) covariate vector for the random effects, and \({\textbf {c}}_{i}\) is a \(q \times 1\) observation-level vector of random effects (a random intercept and, possibly, further random effects). The vector \({\varvec{V}}_{ij}|{\varvec{Y}}_{ij} \ne 0\) follows a bivariate linear mixed model: \({\varvec{V}}_{ij}={\textbf {X}}^*_{ij}{\varvec{\gamma }}+{\textbf {Z}}^*_{ij}{\textbf {d}}_{i}+{\varvec{\varepsilon }}_{ij}\) (2),

where \({\textbf {X}}^*_{ij}\) and \({\textbf {Z}}^*_{ij}\) might have the same variables as \({\textbf {X}}_{ij}\) and \({\textbf {Z}}_{ij}\) in (1), but this is not required; \({\varvec{\gamma }}\) is the regression coefficient matrix \((p^*\times 2)\) for the fixed effects; and \({\textbf {d}}_{i}\) is the observation-level matrix of random effects \((q^*\times 2)\). Additionally, the error term \({\varvec{\varepsilon }}_{ij}\) is assumed to follow a bivariate normal distribution with mean zero and covariance matrix \(\Sigma\).

The random effects vector \(({\varvec{c}}_i,{\varvec{\widetilde{d}}}_i)\), where \({\varvec{\widetilde{d}}}_i= vec({\varvec{d}}_i)\), follows a mixture of two multivariate normal distributions. Correlation between the random effects in (1) and (2) is allowed. Then,

with \(\sum _{r=1}^{2} m_r =1\) and \(\sum _{r=1}^{2} m_r {\varvec{\mu }}_r ={\varvec{0}}\). Suppose \(\Delta _{i}=1\) if \({\varvec{{b}}}_i\) is sampled from the first component in the mixture, and 0 otherwise. Then,

and

where \({\varvec{H}}={\varvec{\Psi} }_{dd} - {\varvec{\Psi }}_{dc} {\varvec{\Psi }}_{cc}^{-1} {\varvec{\Psi }}_{cd}\), and \({\varvec{\mu }}_{2}=- \frac{m_1}{1-m_1} {\varvec{\mu }}_{1}\).
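The expression for \({\varvec{\mu }}_{2}\) follows directly from the zero-mean constraint on the mixture. As a short check, using only the symbols defined above:

\[
m_1 {\varvec{\mu }}_1 + m_2 {\varvec{\mu }}_2 = {\varvec{0}}, \qquad m_2 = 1 - m_1 \quad \Longrightarrow \quad {\varvec{\mu }}_2 = -\frac{m_1}{1-m_1}\, {\varvec{\mu }}_1 .
\]

For instance, with \(m_1=0.6\) and \({\varvec{\mu }}_1=(-0.1, 0.1)\), the values used as true parameters in the simulation study of Section 4, this gives \({\varvec{\mu }}_2=-1.5\,{\varvec{\mu }}_1=(0.15,-0.15)\).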

2.2 The Likelihood

The estimation of the parameters in the model defined by (1) to (6) is based on the maximization of the likelihood, defined as

which, with the two-parts model and the conditional distribution of the random effects, becomes

The double integral in (8) is intractable, which implies that the likelihood cannot be maximized analytically. Following the approach of Olsen and Schafer (2001) with a sixth-order Laplace approximation, we obtained an approximation to the log-likelihood. The complete derivation of the likelihood is shown in Appendix 2.

2.3 Model Estimation

First, the expectation-maximization (EM) algorithm is implemented. In the E-step, the conditional expectation of the likelihood is obtained, for which only the posterior probability that the i-th patch belongs to the first component of the mixture has to be calculated as:

where

In the M-step, the conditional expectation is maximized with respect to \(m_1\), the probability of belonging to the first component of the mixture, which allows us to obtain an updated estimate \(m_1^{(t+1)}\) as:

However, the other parameters in the model cannot be updated analytically. Hence, an approximate Fisher scoring numerical maximization procedure is implemented. The score vectors were derived from those proposed in Olsen and Schafer (2001), but here \(\phi _{cc}\), \(\phi _H\), and \(\phi _{\Sigma }\) represent the vectorized upper triangles of \(\Psi _{cc}\), H, and \(\Sigma\). Additionally, the score vectors were extended to accommodate multivariate data. Expressions for the components of the score vectors for the i-th patch are given in Appendix 3.
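For orientation, the EM update of the mixing probability can be sketched as follows. The sketch assumes the standard two-component form, in which the posterior membership probability is the weighted likelihood of the first component divided by the total, and the updated \(m_1\) is the average of these posterior probabilities. The inputs `lik_comp1` and `lik_comp2` are hypothetical per-patch likelihood contributions under each component, and the function names are illustrative; the exact expressions used in the model may differ in detail.

```python
def e_step(m1, lik_comp1, lik_comp2):
    """Posterior probability that each patch belongs to the first component."""
    posteriors = []
    for f1, f2 in zip(lik_comp1, lik_comp2):
        numerator = m1 * f1
        posteriors.append(numerator / (numerator + (1.0 - m1) * f2))
    return posteriors

def m_step(posteriors):
    """Updated mixing probability: the average posterior membership."""
    return sum(posteriors) / len(posteriors)

# Hypothetical likelihood contributions for four patches.
posteriors = e_step(0.55, [0.8, 0.1, 0.5, 0.3], [0.2, 0.6, 0.5, 0.3])
print(posteriors)
print(m_step(posteriors))
```

When the two components fit a patch equally well, its posterior probability equals the current value of \(m_1\), so such patches do not move the estimate.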

At each iteration, the new estimates of the variance and correlation parameters are checked to determine whether they fall outside the parameter space. When needed, a step-halving procedure is applied to bring the estimates back within the parameter space. In addition, a second step-halving procedure is applied to all parameters in the model to guarantee that the deviance of the new set of estimates is lower than in the previous iteration.
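The idea behind step-halving can be sketched generically. The code below is a simplified illustration, not the implementation used here: it merges the two checks (parameter space and deviance) into a single loop, takes hypothetical callables `deviance` and `in_parameter_space`, and falls back to the current estimate if no acceptable candidate is found within the allowed number of halvings, which is an assumption.

```python
def step_halving(current, proposed, deviance, in_parameter_space, max_halvings=50):
    """Shrink a proposed update toward the current estimate until it is
    inside the parameter space and lowers the deviance."""
    dev_current = deviance(current)
    candidate = list(proposed)
    for _ in range(max_halvings + 1):
        if in_parameter_space(candidate) and deviance(candidate) < dev_current:
            return candidate
        # Move the candidate halfway back toward the current estimate.
        candidate = [c + 0.5 * (p - c) for c, p in zip(current, candidate)]
    # Assumption: keep the current estimate when every candidate is rejected.
    return list(current)

# Toy example: a variance-like parameter that must stay positive.
toy_deviance = lambda theta: (theta[0] - 0.2) ** 2
positive = lambda theta: theta[0] > 0
print(step_halving([1.0], [-1.0], toy_deviance, positive))
```

Because every halving shortens the step, repeated use of this safeguard can make the change in some parameters very small between iterations.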

Initial values for \({\varvec{\beta }}\), \({\varvec{\gamma }}\), \(\Psi _{cc}\), and the diagonal of \(\Psi _{dd}\) are generated by a logistic regression for the occurrence variable and an independent linear mixed model for each variable in the intensity vector. Starting values for the off-diagonal elements of \(\Psi _{dd}\) are set using the diagonal values and a correlation of 0.1. Initial values for \(\Sigma\) are set as the variances and correlations of the variables in the intensity vector. For simplicity, the starting values for \(m_1\) and \({\varvec{\mu}} _1\) are set as 0.55 and \((-0.05, 0.05)\), respectively.
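As a small illustration of the rule for the off-diagonal starting values, a covariance can be obtained from two variances and a correlation as the correlation times the square root of the product of the variances. The function name and the example variances below are hypothetical.

```python
import math

def starting_covariance(variances, correlation=0.1):
    """Build a starting covariance matrix from diagonal variances and a
    common correlation for every off-diagonal element."""
    size = len(variances)
    matrix = [[0.0] * size for _ in range(size)]
    for j in range(size):
        for k in range(size):
            if j == k:
                matrix[j][k] = variances[j]
            else:
                matrix[j][k] = correlation * math.sqrt(variances[j] * variances[k])
    return matrix

# Hypothetical random-intercept variances for alr(Dense) and alr(Hazy).
print(starting_covariance([4.0, 9.0]))
```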

Approximate empirical Bayes estimates of the random effects are obtained by Pareto smoothed importance sampling (Vehtari et al., 2015) for unnormalized densities. A thousand samples (\(c_i^{(1)}, \dots , c_i^{(1000)}\)) were taken from a multivariate t distribution with 4 degrees of freedom, centered at the mode \(\widetilde{c}_{i}\) with covariance matrix proportional to \(G_i^{-1}\), as proposed by Gelman et al. (1995) and implemented by Olsen and Schafer (2001). Using the drawn sample for \(c_i\), estimated means for \(c_i\) and \(d_i\) were calculated. All estimation procedures were implemented in R version 3.4.4 (R Core Team, 2019).
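The sampling scheme can be illustrated with a simplified sketch. The code below uses plain self-normalized importance sampling for a scalar random effect with a t proposal with 4 degrees of freedom; it deliberately omits the Pareto smoothing of the weights and the multivariate case. The function names, the argument `log_target` (an unnormalized log posterior density), and the toy target are assumptions made for the example.

```python
import math
import random

def t_draw(rng, mode, scale, df=4):
    """Draw from a location-scale t distribution with df degrees of freedom."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    return mode + scale * z / math.sqrt(chi2 / df)

def log_t_kernel(x, mode, scale, df=4):
    """Log density of the t proposal, up to an additive constant."""
    u = (x - mode) / scale
    return -0.5 * (df + 1) * math.log1p(u * u / df)

def posterior_mean_by_is(log_target, mode, scale, n_draws=1000, seed=1):
    """Self-normalized importance sampling estimate of the posterior mean."""
    rng = random.Random(seed)
    draws = [t_draw(rng, mode, scale) for _ in range(n_draws)]
    log_w = [log_target(x) - log_t_kernel(x, mode, scale) for x in draws]
    top = max(log_w)  # subtract the maximum for numerical stability
    weights = [math.exp(lw - top) for lw in log_w]
    return sum(w * x for w, x in zip(weights, draws)) / sum(weights)

# Toy unnormalized target: a normal density with mean 2 and unit variance.
print(posterior_mean_by_is(lambda x: -0.5 * (x - 2.0) ** 2, mode=2.0, scale=1.0))
```

Normalizing constants cancel in the ratio of weighted sums, which is why only unnormalized densities are needed.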

3 Motivating Example: Radiographic Lung Change Following Radiotherapy of Lung Cancer

Radiation therapy (RT) is the standard treatment for medically inoperable patients in the early stages of non-small cell lung cancer (NSCLC) (Jain and Berman, 2018). Stereotactic body radiotherapy (SBRT) is an RT approach that requires high spatial accuracy to deliver high doses to the tumor (Linda et al., 2011). Treatment is delivered over several fractions using beams from different angles to ensure the tumor receives the prescribed dose while limiting exposure to surrounding normal tissue (Matsuo et al., 2012). Nevertheless, healthy tissue near the tumor is exposed to clinically relevant doses of RT, causing changes in the lung surrounding the tumor (Dunne et al., 2018). Following RT, computed tomography (CT) scans are obtained at regular time points to assess tumor control and healthy tissue damage, which can be classified according to whether consolidations (dense) or ground-glass opacities (hazy) were observed.

Our preliminary data consist of five CT scans of one NSCLC patient obtained at 3, 6, 12, 18, and 24 months following SBRT with a dose of 57 Gy. Voxels on each CT scan were arranged into larger subvolumes, called patches. Each patch is defined using isodose lines, combining voxels that received a uniform dose (Fig. 3a). Boundaries were drawn at regular dose intervals of 0.2 Gy, and voxels with a received treatment dose within the same interval were combined into the same patch. Voxels that did not receive any radiation were combined into a single patch. Figure 3b shows a transverse slice of the isodose-patch approach applied to this patient. The ocean-green region corresponds to voxels that did not receive any RT dose, and the center of the image, where the smallest region is located (dark blue), is the region with the highest received dose. The transition from voxels to patches was necessary because the temporal integrity of the voxels was very hard to assure, even after a very rigorous registration process. Patients receiving different levels of radiation go through a substantial amount of tumor shrinkage and tissue deformation over time. Therefore, an aggregated approach using patch-level data is deemed to be more reliable than one using voxel-level data.
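The aggregation from voxels to isodose patches can be sketched as follows. This is an illustration only: the input format (a list of dose and tissue-label pairs), the function names, and the convention that each interval includes its upper boundary are assumptions, and doses lying exactly on a boundary may be affected by floating-point rounding.

```python
import math
from collections import Counter, defaultdict

def patch_index(dose_gy, width_gy=0.2):
    """Patch 0 holds unirradiated voxels; patch k holds doses in ((k-1)w, kw]."""
    if dose_gy <= 0:
        return 0
    return math.ceil(dose_gy / width_gy)

def patch_compositions(voxels, width_gy=0.2):
    """Map each patch to its (dense, hazy, normal) proportions."""
    counts = defaultdict(Counter)
    for dose_gy, label in voxels:
        counts[patch_index(dose_gy, width_gy)][label] += 1
    compositions = {}
    for patch, tally in counts.items():
        total = sum(tally.values())
        compositions[patch] = tuple(
            tally[label] / total for label in ("dense", "hazy", "normal")
        )
    return compositions

# Hypothetical voxels: (received dose in Gy, radiographic label).
voxels = [(0.0, "normal"), (0.0, "normal"), (0.05, "normal"),
          (0.15, "hazy"), (0.25, "dense")]
print(patch_compositions(voxels))
```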

For this study, one physician manually contoured the area with RILD on the CT scans using commercial contouring software (MIM Maestro v6.6, MIM Software, Cleveland, OH). Even though the extent of radiographic injury observed in the CT scan varies continuously with the RT dose, voxels were classified according to their radiographic findings into three threshold-based ordinal categories of radiographic injury, namely, dense (representing areas of consolidation and fibrosis), hazy (representing ground-glass changes) and normal (Linda et al., 2011; Trovo et al., 2010). After RILD contouring, deformable image registration was performed using a multi-pass B-spline to account for major changes in lung architecture after RT. Follow-up CT scans were registered to the baseline treatment planning scan using a commercial registration package (Velocity - Varian Medical Systems).

For this patient, there were 288 patches for analysis. Data for each patch is summarized into a vector \(p_{it}=(d_{it},h_{it},n_{it})\), where \(i=1,\ldots , N (\text {total number of patches})\), \(t=3,6,12,18,24\) months, \(d_{it}\) and \(h_{it}\) represent the proportion of dense and hazy voxels in the patch i at time t months, and \(n_{it}=1-d_{it}-h_{it}\). Further, \(0 \le d_{it} \le 1\) and \(0 \le h_{it} \le 1\). This vector is in the form of compositional data measured over time, where the unit of observation is a composition within each patch. Even though some spatial autocorrelation is expected in close patches, the proposed model ignored such a relationship to avoid yet more complexity in the model specification.

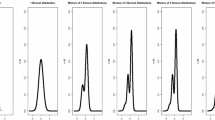

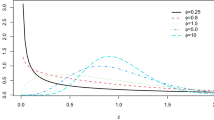

As shown in Fig. 1, a notable feature of these data is that, at each time point, less than 50% of the patches presented some tissue damage, meaning that \(d_{it}\) and \(h_{it}\) equal zero for most of the patches. Also, as shown in Fig. 2, the distribution of the transformed compositional data, for those patches with some tissue damage, seems bimodal.

In Section 5, a two-part mixed-effects heterogeneous mixture model for analyzing the aforementioned data is presented. The model was built as an extension of the two-part mixed model proposed in Olsen and Schafer (2001) and adjusted to fit compositional data, bimodality of the temporal change pattern and the mixture distribution of the random effects.

4 Simulations

Simulations to evaluate the performance of our proposed model were conducted using the same framework as the real data. Simulated values of the outcome of interest, namely the composition (%Dense, %Hazy, %Normal), were generated in two steps. The occurrence variable \(U_{ij}\) for the i-th (\(i=1,...,N\)) patch at time \(t_{ij}\) (\(j=1,...,n_i\)) was generated from a Bernoulli distribution, and, when \({\varvec{Y}}_{ij} \ne 0\), the outcome \({\varvec{Y}}_{ij}\) was obtained by back-transforming simulated values of the bivariate intensity vector \({\varvec{V}}_{ij}\), which is the alr transformation of the composition.

The simulation was carried out with three different numbers of observations (\(N=300,500,1000\)). Also, three different numbers of follow-up measurements were considered (\(n_i=3,4,5\)). The received dose was simulated following a logarithmic distribution. For both parts of the model (occurrence and intensity), intercept, dose effect, and time after radiotherapy in years (linear and quadratic) were considered as fixed effects to build the data. Only a random intercept was used in both model parts (\(c_i\) and \({\varvec{d}}_{i}\) for the occurrence and intensity models, respectively).

The true values for the parameters were chosen to mimic the parameters estimated based on the real data set analyzed in Section 5. For simplicity, true values for the mixture distribution of the random effects for the intensity vector, \(m_1\) and \({\varvec{\mu}} _1\), were set as 0.6 and \((-0.1, 0.1)\), respectively. The simulations were repeated 1000 times within each combination of sample size and follow-up time points.

The performance of the algorithm in the estimation of the regression coefficients was assessed by the standardized bias (SB) (the difference between the average estimate and the true value as a percentage of the SD of the estimates), and the coverage rate of the nominal 95% confidence interval (estimate ± 1.96SE). Additionally, the square of the correlation between the observed and predicted data, as well as the mean absolute scaled error (MASE), were calculated for each simulation. For comparison, the squared correlation and MASE were also calculated for the “naive” model, using the initial values obtained by a mixed-effects logistic regression for the occurrence variable and an independent linear mixed model for each variable in the intensity vector, without correlation between the models.

Additionally, to assess the model’s false positive rate of the fixed effects, the same simulation scenario described above was performed but with the coefficients of dose, time, and time squared set equal to zero. Parameter estimates and respective standard errors were calculated using the proposed model, and 95% confidence intervals were obtained. Type I error was calculated as the proportion of intervals that did not contain zero.
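The performance measures described above can be computed from the replicate estimates as in the following minimal sketch. The function names and the example numbers are hypothetical; the definitions follow the text (SB as a percentage of the SD of the estimates, Wald intervals of the form estimate ± 1.96SE, and type I error as the share of intervals excluding zero when the true coefficient is zero).

```python
import statistics

def standardized_bias(estimates, true_value):
    """Bias of the average estimate as a percentage of the SD of the estimates."""
    bias = statistics.mean(estimates) - true_value
    return 100.0 * bias / statistics.stdev(estimates)

def coverage_rate(estimates, std_errors, true_value, z=1.96):
    """Share of Wald intervals (estimate +/- z * SE) containing the true value."""
    hits = sum(abs(est - true_value) <= z * se
               for est, se in zip(estimates, std_errors))
    return hits / len(estimates)

def type_one_error(estimates, std_errors, z=1.96):
    """Share of intervals excluding zero when the true coefficient is zero."""
    return 1.0 - coverage_rate(estimates, std_errors, 0.0, z)

# Hypothetical replicate estimates and standard errors for one coefficient.
estimates = [0.21, 0.25, 0.19, 0.27, 0.23]
std_errors = [0.03, 0.03, 0.02, 0.04, 0.03]
print(standardized_bias(estimates, 0.23))
print(coverage_rate(estimates, std_errors, 0.23))
```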

For all the simulation scenarios, the procedure failed to converge in fewer than 5 samples; for the samples in which it converged, the average number of iterations to convergence was between 11 and 14. The average time for estimating the fixed effects was less than 10 min with 300 patches (\(N=300\)), between 10 and 20 min with \(N=500\), and between 35 and 50 min with \(N=1000\). The estimation of the random effects took longer: around 45 min with \(N=300\), 1 to 1.5 h with \(N=500\), and around 2.5 h with \(N=1000\).

4.1 Estimation of Fixed Effects

For each scenario, Table 1 lists the average and the standard deviation (SD) of the point estimates, the average standard error (SE), the standardized bias (SB), the coverage rate, and the type I error. In the occurrence model, the coefficients for the intercept and dose are underestimated, particularly with \(N=300\). In the intensity model, for both alr(Dense) and alr(Hazy), the parameters were estimated well, even with small sample sizes. The number of time points seems to have a substantial effect on the performance of the estimates in the occurrence model, with estimation bias decreasing as the number of time points increases.

The standardized bias (SB) is consistently lower than 40% for all parameters; therefore, the impact of bias on the efficiency, coverage, and error rate of the proposed model is minimal. However, in some scenarios, the SB is larger than 20%, particularly for the estimation of the intercepts and the dose effect. In most cases, the average SE is close to the SD of the estimates; however, with \(N=300\), the average SE and the SD differ substantially. This might indicate that the standard errors generated by the proposed method do not adequately represent the variation of the estimator in small samples. Coverage percentages were close to 90% or larger for all parameters except the dose coefficient. Contrary to common experience, relatively higher coverage rates were observed with the smallest sample size (\(N=300\)). This is due to the large standard errors resulting from the small sample size, not to better estimation performance.

The type I error rate for the coefficient associated with dose was equal to or less than 0.05 for both model parts, for all sample sizes (N) and numbers of follow-up time points (\(n_i\)). The coefficient for time showed the highest type I error among the model coefficients, particularly for the first part of the model (occurrence model). This issue is a result of the underestimation of that coefficient, which gets worse as the sample size (N) increases. For the coefficient associated with Time\(^2\), the type I error rate is larger than 0.05 for most of the scenarios. Additionally, an increase in the error is observed with larger sample sizes and numbers of time points; this is a result of the higher precision of the estimates with a larger number of time points, which leads to narrower confidence intervals.

4.2 Estimation of Nuisance Parameters

In addition to the estimates of the fixed effects, the proposed model also estimates the variance components (\(\Sigma\) and \(\Psi\)) and the components associated with the mixture model (m and \(\mu\)). The simulation results for these parameters are shown in Table 2. All the variance components except \(\Psi _{12}\) are underestimated and strongly biased. This bias is mainly caused by the step-halving procedure, which forces a lower deviance in each iteration and thus prevents large, fast changes in some parameters. However, the step-halving procedure is necessary and widely used to deal with common convergence problems when using non-standard link functions, such as the log link binomial model used in the occurrence model.

The performance of the model in estimating the parameters of the mixture model was not satisfactory. The average probability of membership in the most frequent component of the mixture (m) was shrunk towards 0.50, and the mean towards zero, suggesting no mixture. This is attributable to our assumed true model parameters, which lead to little difference between the two groups but imitate the real data. Even though the nuisance parameters are usually not of main interest in research, they are used in the estimation of the random effects, and therefore affect the model’s predictions; they are also used in hypothesis tests involving these parameters to find a more parsimonious model.

The average squared correlation between observed and predicted values was at least 0.70 for the proposed two-part model, and it was less than 0.40 in the naive approach. Similarly, the two-part model also showed better performance than the naive model when comparing the MASE, with an average MASE lower than 0.71 and 0.58 for dense and hazy, respectively, in the two-part model, and larger than 0.90 in the naive model.

5 Application to Real Data

The two-part mixed-effects mixture model for zero-inflated longitudinal compositional data was applied to the patient’s data described in Section 3. As mentioned, the RILD intensity on each patch was consolidated in the compositional vector \(p_{it}=(\)%Dense, %Hazy, %Normal). Then, each patch was classified as without RILD presence (%Normal=100), or with RILD presence (%Normal \(\ne\) 100).

To apply the proposed model, the outcome variable (\({\varvec{Y}}_{ij}\); \(i=1,\dots ,\) \(\text {number of}\) \(\text {patches}\); \(j=1,\dots ,5\)) was the ratio of the vector \(p_{it}\), using the last component (Normal) as denominator (\({\varvec{Y}}_{ij}\)=(%Dense/%Normal, %Hazy/%Normal)). When the whole patch presented RILD (%Normal=0), or when only one of the damage components, dense or hazy, equaled zero, the zero replacement method proposed by Fry et al. (2000) with \(\delta =0.003\) was applied before calculating the alr transformation. Therefore, \({\varvec{Y}}_{ij}={\varvec{0}}\) represents no observed tissue damage after radiation in patch i at time point j (%Dense=0,%Hazy=0,%Normal=100).

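As noted in Section 3 (Fig. 1), there is a large proportion of zeros in this data set. Hence, the occurrence variable is defined as \(U_{ij}=1\) if \({\varvec{Y}}_{ij} \ne {\varvec{0}}\) and \(U_{ij}=0\) if \({\varvec{Y}}_{ij} = {\varvec{0}}\),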

and the intensity variable \({\varvec{V}}_{ij}=\log ({\varvec{Y}}_{ij})\), when \({\varvec{Y}}_{ij} \ne {\varvec{0}}\). Then, \({\varvec{V}}_{ij}\) is the alr transformation of the compositional vector \((\%Dense, \%Hazy, \%Normal)\). Median patch dose, time in years, and time squared were used as explanatory variables for modeling the fixed effects for both outcomes (occurrence and intensity). To account for the interdependence over time, a random intercept was used in both models. No other factors were considered as random effects. Iterative estimation was performed until convergence was attained (tolerance = 1e-8). Table 3 shows the estimates of the fixed effects in the model.
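The construction of the two outcomes for a single patch and time point can be sketched as follows. The function name is illustrative, and the sketch assumes that partial zeros have already been handled by the zero-replacement step described above, so that only patches with no damage at all reach the zero branch.

```python
import math

def two_part_outcome(dense, hazy, normal):
    """Return (U, V) for one patch at one time point.

    U is the occurrence indicator; V is the alr-transformed intensity
    vector, or None when the patch shows no damage.
    """
    if dense == 0 and hazy == 0:
        return 0, None
    if min(dense, hazy, normal) <= 0:
        # Assumption: zero replacement is applied upstream of this function.
        raise ValueError("apply zero replacement before the alr transform")
    return 1, (math.log(dense / normal), math.log(hazy / normal))

# Hypothetical patches: one undamaged, one with both kinds of damage.
print(two_part_outcome(0.0, 0.0, 1.0))
print(two_part_outcome(0.10, 0.20, 0.70))
```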

5.1 Results

Images of this patient rendered 288 isodose patches, of which 58.48% presented RILD at some point after RT. The model converged after 6 iterations, which took 8.1 min, with a maximum relative parameter change of 1.2E-13. In addition, the estimation of the random effects took 30 min. The odds of RILD for a particular patch increased by 26% for each 1 Gy increase in the dose (Table 3). Likewise, the odds of RILD increased until 17 months after RT and then decreased over time. In the results for the intensity vector, the estimated baseline composition was (Dense= 0, Hazy= 0, Normal= 1). An increase of 1 Gy in the dose shifts the baseline composition by (0.35, 0.35, 0.30). Consequently, the dose is positively associated with both dense and hazy. Furthermore, over time the changes in the compositions are characterized by an increment in the proportion of dense. The estimated value of the parameter capturing the correlation between the occurrence and intensity models was \(\mathbf{{ \psi _{dc}}}\)=(0.44, 0.38). This indicates positive dependence between the two components of our model, thus supporting a joint model for the two processes.
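The interpretation of these quantities can be illustrated with a short sketch. It assumes that the reported shift is read as a perturbation in the Aitchison geometry (component-wise product followed by rescaling to unit sum) and that the time trend on the log-odds scale is quadratic. The example composition and the time coefficients are hypothetical, chosen only so that the peak falls at 17 months; they are not the estimates in Table 3.

```python
import math

def closure(parts):
    """Rescale positive parts so that they sum to one."""
    total = sum(parts)
    return [p / total for p in parts]

def perturb(composition, shift):
    """Perturbation: component-wise product followed by closure."""
    return closure([x * s for x, s in zip(composition, shift)])

def percent_change_in_odds(log_odds_coefficient):
    """Percent change in the odds per unit increase of the covariate."""
    return 100.0 * (math.exp(log_odds_coefficient) - 1.0)

def turning_point(linear_coef, quadratic_coef):
    """Time at which a quadratic trend on the log-odds scale peaks."""
    return -linear_coef / (2.0 * quadratic_coef)

# A 26% increase in the odds per Gy corresponds to a coefficient of log(1.26).
print(percent_change_in_odds(math.log(1.26)))

# Hypothetical composition perturbed by the reported per-Gy shift.
print(perturb([0.05, 0.10, 0.85], [0.35, 0.35, 0.30]))

# Hypothetical time coefficients (per year) giving a peak at 17 months.
print(12.0 * turning_point(0.34, -0.12))
```

A shift with larger weights on dense and hazy than on normal moves mass toward the damaged categories, which is why the dose effect is read as positive for both.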

5.2 Assessing Model Adequacy

Assumptions and goodness of fit of the model were assessed, and the results are presented in Appendix 4. Apparent deviations from the normality assumption of the random effects for the occurrence model (\(c_i\)) are observed (Fig. 4a), where the tails correspond to patches with RILD. The bivariate vector of random effects in the intensity model is assumed to follow a mixture of normal distributions. A goodness-of-fit test for a mixture of normal distributions based on the Cramér-von Mises statistic (Garcia Portugues, 2020) can be implemented for this purpose; however, these results are not presented in the current work. As shown in Verbeke and Lesaffre (1997) and in Butler and Louis (1992), inference on the fixed effects might be robust to non-normality of the random effects, although non-normality can affect the predictions at the patch level.

Figure 4b shows the q-q plots for the errors of the intensity model. There is also evidence of a lack of normality in the residuals, and the points that move furthest from the straight line mainly correspond to patches without RILD, which is expected given that the intensity model is conditional on the presence of RILD. However, the number of patches is larger than 250. Therefore, the Central Limit Theorem should ensure some robustness, provided the model is asymptotically unbiased.

Figure 5 shows the scatter plot of the standardized residuals for dense and hazy versus dose. In these plots, patches with no observed RILD at any occasion cluster along lines, and since the estimates of the random effects (\({\varvec{d}}_i\)) are set at the population-level predictions, large standard errors for the random effects in these patches are expected. Residuals in doses less than 20 Gy have zero variability because those patches did not present RILD at any time point. After 20 Gy, the residuals seem to have larger variability at larger doses of RT.

For assessing the assumption that the two models, occurrence and intensity, are correlated, Fig. 6 shows the estimated log odds for each patch and the expected alr(Dense) and alr(Hazy). As mentioned, the points cluster along lines representing the patches that did not have RILD at any time point after RT. Patches with low propensities to have RILD also tend to have lower RILD intensity. This provides evidence of the correlation between the models and the importance of not ignoring this relationship in the model specification.

Figure 7 shows the observed number of times that each patch presented RILD, and the sum of the predicted probabilities at each time point (\(\sum _{j=1}^{n_i} \hat{U}_{ij}\)). As observed, the prediction for patches of normal tissue during the whole follow-up (Observed = 0) is very good. However, some discrepancies in the fit of the occurrence pattern are observed for patches with RILD at some point. The scaled residuals \((U_{i.} - \hat{U}_{i.})/ \sqrt{\hat{U}_{i.} (n_i-\hat{U}_{i.})/n_i}\) were calculated, and outliers were identified as scaled residuals with magnitude larger than 2.5. In this data set, only 1.0% of the patches were classified as outliers.
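The scaled residual defined above can be computed per patch as in this minimal sketch. The function names and the example probabilities are hypothetical; the formula and the cutoff of 2.5 are those given in the text. The sketch assumes the predicted probabilities lie strictly between 0 and 1, so that the denominator is positive.

```python
import math

def scaled_residual(observed_count, predicted_probs):
    """Scaled residual for the number of occasions a patch presented RILD."""
    n_i = len(predicted_probs)
    expected = sum(predicted_probs)
    return (observed_count - expected) / math.sqrt(expected * (n_i - expected) / n_i)

def is_outlier(observed_count, predicted_probs, cutoff=2.5):
    """Flag a patch whose scaled residual exceeds the cutoff in magnitude."""
    return abs(scaled_residual(observed_count, predicted_probs)) > cutoff

# Hypothetical patch observed with RILD at 3 of 5 time points.
print(scaled_residual(3, [0.5, 0.5, 0.5, 0.5, 0.5]))
print(is_outlier(5, [0.1, 0.1, 0.1, 0.1, 0.1]))
```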

Figure 8 shows the scatter plots of predicted versus observed transformed dense and hazy proportions. The predicted values from the intensity model are labeled as “subpredicted” and are in the first column of this set of plots. The second column shows the predictions from the intensity model multiplied by the estimated probability of RILD for each patch. This shows how the underestimated probabilities of RILD from the occurrence model adversely affect the predicted values from the intensity model, resulting in inferior predictions.

6 Discussion

Longitudinal compositional data with a peak at zero occur in many applications in health sciences. Different sources of correlation in the data are observed between subjects over time and between parts of the compositional vector. Additionally, the presence of structural zeros represents a particular challenge in the analysis of such data. A new approach for the analysis of zero-inflated longitudinal compositional data, which additionally show bimodal distributions of the intensity component, is proposed in this work. This model is fully parametric and uses asymptotic approximations to estimate the parameters. The likelihood was approximated using a sixth-order multivariate Laplace method, and even though a higher order could have been used, empirical evidence shows that it would not improve the accuracy (Raudenbush et al., 2000). The EM algorithm was used to estimate the probability of the mixture model, and the approximate Fisher scoring procedure was implemented to estimate the other parameters in the model. Finally, approximate empirical Bayes estimates by Pareto smoothed importance sampling were implemented for the estimation of the random effects.

The simulation study showed mixed performance of the proposed method, with reasonable estimates of the model’s fixed effects, but with highly biased estimates of other parameters. The average time that the procedure takes for the estimation of the fixed effects seems to be in the feasible range for statistical analysis.

Although the method seems to have good convergence, future studies should assess whether the implemented step-halving procedure is causing false convergence. The procedure is similar to “loop 3” in the R function glm (Marschner, 2011), which invokes step-halving until convergence, until the difference in the relative deviance goes from one side of the convergence region to the other, or until the process has been repeated a fixed number of times. In this last situation, the new estimates can be very close to the previous ones and fall inside the convergence region, which could cause false convergence (Marschner, 2011). In this study, the step-halving procedure was repeated a maximum of 50 times. The effect on model performance in cases where convergence is attained only after reaching the maximum number of repetitions needs to be assessed.
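The false-convergence mechanism can be illustrated with a generic sketch of step-halving under a repetition cap. This is not the authors' implementation or the code of glm or glm2. The function `step_halving_update`, the toy deviance, and the starting values are all hypothetical.

```python
def step_halving_update(deviance, old, proposed, max_halvings=50):
    """Shrink the step from `old` toward `proposed` while the deviance increases.

    Returns (new_estimate, halvings_used, hit_limit), where hit_limit is True
    when the cap was reached without finding a step that lowers the deviance.
    """
    dev_old = deviance(old)
    new = list(proposed)
    halvings = 0
    while deviance(new) > dev_old and halvings < max_halvings:
        # Move the candidate halfway back toward the previous estimate.
        new = [(n + o) / 2.0 for n, o in zip(new, old)]
        halvings += 1
    hit_limit = halvings == max_halvings and deviance(new) > dev_old
    return new, halvings, hit_limit

# Toy deviance with its minimum at theta = 1 (hypothetical).
def toy_deviance(theta):
    return (theta[0] - 1.0) ** 2

# Case 1: the proposed step overshoots; a few halvings recover a valid step.
new1, halvings1, hit1 = step_halving_update(toy_deviance, [0.9], [3.0])

# Case 2: the proposed step points away from the minimum; halving never helps,
# and after 50 halvings the candidate is numerically indistinguishable from
# the old value, so a test based on a small change would report convergence.
new2, halvings2, hit2 = step_halving_update(toy_deviance, [0.0], [-1.0])
print(new1, halvings1, hit1)
print(new2, halvings2, hit2)
```

In the second case the returned estimate differs from the previous one by about \(2^{-50}\), although the minimum is at 1. Reporting `hit_limit` separately is one simple way to flag such iterations for inspection.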

The proposed method seems to estimate the variability of the estimates adequately through the SE. However, for \(N=300\), the performance of the estimated SEs was inadequate. Since studies with sample sizes smaller than 300 are very common, the method should be used with caution in such cases. In our application, increasing the sample size requires more imaging follow-up, which is very difficult to achieve.

Estimation of nuisance parameters was somewhat unsatisfactory. As mentioned above, “loop 3” is the most likely cause of poor model performance in estimating variance components. Because the step-halving procedure is performed in each iteration, the change in each parameter shrinks further each time this loop runs. Therefore, the changes in the estimates between iterations can be tiny for some parameters. Improvements to the step-halving procedure, perhaps limiting it to the fixed effects only, could be implemented to address this lack of performance.

The real data application of this model, using the lung scans of a patient with NSCLC, shows a positive association of RILD with RT dose. Also, the results suggest a positive correlation between the random effects of both models (occurrence and intensity). The methodology proposed in this study accounts for this correlation in the estimation process. Ignoring this relationship by fitting the logit and the linear models separately would introduce substantial bias in the estimates of the coefficients, particularly those of the intensity model.

It is important to consider that the estimated coefficients in the occurrence model represent the effect of changes in the variables on a particular patch, not a population-average effect. That is, the model describes the probability of RILD for each patch, not the percentage of patches with RILD. Population-average estimates tend to maintain the same direction as the mixed model estimates but with smaller magnitude, as well as smaller standard errors. Some approaches to estimate the population-averaged effects based on mixed model estimates have been discussed in the literature (Neuhaus et al., 1991), but were not implemented here since the main interest was the effect of the variables in each patch and not the overall effect.
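The attenuation of population-averaged effects relative to patch-specific effects can be checked numerically. The sketch below assumes a random-intercept logistic model with a single covariate and integrates the patch-specific probability over a normal random intercept using Gauss-Hermite quadrature. The coefficients `beta0`, `beta1`, and `sigma` are hypothetical values, not estimates from this study.

```python
import numpy as np
from numpy.polynomial.hermite import hermgauss

def expit(x):
    return 1.0 / (1.0 + np.exp(-x))

def logit(p):
    return np.log(p / (1.0 - p))

def marginal_probability(linear_predictor, sigma, n_nodes=40):
    """Average expit(linear_predictor + c) over c ~ N(0, sigma^2)."""
    nodes, weights = hermgauss(n_nodes)    # rule for the weight function exp(-x^2)
    c = np.sqrt(2.0) * sigma * nodes       # change of variables to N(0, sigma^2)
    return float(np.sum(weights * expit(linear_predictor + c)) / np.sqrt(np.pi))

# Hypothetical patch-specific (conditional) coefficients and random-intercept SD.
beta0, beta1, sigma = -1.0, 1.0, 2.0

p0 = marginal_probability(beta0, sigma)           # covariate = 0
p1 = marginal_probability(beta0 + beta1, sigma)   # covariate = 1
pa_slope = float(logit(p1) - logit(p0))           # implied population-averaged slope

print(f"patch-specific slope: {beta1:.3f}, population-averaged slope: {pa_slope:.3f}")
```

Under these assumed values the population-averaged slope keeps the sign of the patch-specific slope but is smaller in magnitude, in line with the behavior described above.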

Lack of agreement with the normality assumption was observed for both the random effects and the error term in the intensity model. Exclusion or misspecification of variables in the model might be one explanation for this finding. In this particular example, the number of patches is larger than 250, so the Central Limit Theorem should allow an asymptotic approximation at a reasonable level. Therefore, the inference on the fixed effects might be robust to the non-normality of the random effects (Butler and Louis, 1992; Verbeke and Lesaffre, 1997). Nevertheless, violation of the homoscedasticity assumption of the residuals might have a negative impact on the variance estimates of the parameters in the model.

Notably, the patches with no RILD at all time points represent an issue for both parts of the model. First, in the occurrence model, these patches might have an actual logit of probabilities of RILD of \(- \infty\) (Olsen and Schafer, 2001). Also, even though the intensity model can provide predictions on these patches, these predictions have large standard errors (Olsen and Schafer, 2001). Therefore, these patches have a substantial impact on the model assumptions and model performance. Furthermore, most of the procedures for diagnosing lack of adequacy in this model are based on the independent results or residuals of each of the parts (occurrence and intensity); however, future research should involve methods for model adequacy assessment using the residuals from the two-part model.

Additionally, the model presented in this section is a simplified representation of the radiographic lung change following radiotherapy of lung cancer. It predicts an average trajectory over time for all patches, which moves higher as the received dose increases and starts at a different point for each patch because of the random intercept. However, this model does not allow us to identify different trajectories over time. Therefore, future applications to these data should include more complex models that better capture the behavior of different patches over time, e.g. by adding interactions between time and received dose.

In conclusion, the two-part mixed-effects mixture model for zero-inflated longitudinal compositional data has shown reasonable performance in estimating fixed effects. However, the model would benefit from improvements in the estimation of the nuisance parameters. Additionally, future research should study the impact of the inclusion of other random effects that represent clustering, or random slopes, as well as the effect of missing data.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request. All analysis code is available at https://github.com/vivifj03/TumorModel.

References

Aitchison J (1986) The statistical analysis of compositional data. Chapman and Hall Ltd, London

Aitchison J, Egozcue JJ (2005) Compositional data analysis: Where are we and where should we be heading? Math Geol 37(7):829–850

Aitchison J, Kay JW (2003) Possible solution of some essential zero problems in compositional data analysis. In: Thió-Henestrosa S, Martín-Fernández JA (eds) CoDaWork’03. Universitat de Girona, Girona, Spain, pp 1–6

Bacon-Shone J (2003) Modelling structural zeros in compositional data. In: Thió-Henestrosa S, Martín-Fernández JA (eds) CoDaWork’03. Universitat de Girona, Girona, Spain, pp 1–4

Bear J, Billheimer D (2016) A logistic normal mixture model for compositional data allowing essential zeros. Austrian J Stat 45:3–23

Butler SM, Louis TA (1992) Random effects models with non-parametric priors. Stat Med 11(14–15):1981–2000 (https://onlinelibrary.wiley.com/doi/abs/10.1002/sim.4780111416)

Chen EZ, Li H (2016) A two-part mixed-effects model for analyzing longitudinal microbiome compositional data. Bioinformatics 32(17):2611–2617

Dunne EM, Fraser IM, Liu M (2018) Stereotactic body radiation therapy for lung, spine and oligometastatic disease: current evidence and future directions. Ann Transl Med 6(14):283

Fry JM, Fry TRL, McLaren KR (2000) Compositional data analysis and zeros in micro data. Appl Econ 32(8):953–959

Garcia Portugues E (2020) Goodness-of-fit tests for distribution models. Available from: https://bookdown.org/egarpor/NP-UC3M/nptests.html

Gelman A, Carlin JB, Stern HS, Rubin DB (1995) Bayesian data analysis. Chapman and Hall/CRC

Jain V, Berman AT (2018) Radiation pneumonitis: old problem, new tricks. Cancers 10(7):222

Linda A, Trovo M, Bradley JD (2011) Radiation injury of the lung after stereotactic body radiation therapy (SBRT) for lung cancer: a timeline and pattern of CT changes. Eur J Radiol 79(1):147–154

Marschner IC (2011) glm2: fitting generalized linear models with convergence problems. R J 3(2):12–15

Martín-Fernández JA, Barceló-Vidal C, Pawlowsky-Glahn V (2003) Dealing with zeros and missing values in compositional data sets using nonparametric imputation. Math Geol 35(3):253–278

Martín-Fernández JA, Hron K, Templ M, Filzmoser P, Palarea-Albaladejo J (2012) Model-based replacement of rounded zeros in compositional data: classical and robust approaches. Comput Stat Data Anal 56(9):2688–2704

Matsuo Y, Shibuya K, Nakamura M, Narabayashi M, Sakanaka K, Ueki N et al (2012) Dose-volume metrics associated with radiation pneumonitis after stereotactic body radiation therapy for lung cancer. Int J Radiat Oncol Biol Phys 83(4):e545–e549

Neuhaus JM, Kalbfleisch JD, Hauck WW (1991) A comparison of cluster-specific and population-averaged approaches for analyzing correlated binary data. Int Stat Rev/Rev Int Stat 59(1):25–35 (http://www.jstor.org/stable/1403572)

Olsen MK, Schafer JL (2001) A two-part random-effects model for semicontinuous longitudinal data. J Am Stat Assoc 96(454):730–745. https://doi.org/10.1198/016214501753168389

Pawlowsky-Glahn V, Egozcue JJ, Tolosana-Delgado R (2015) Modeling and analysis of compositional data, 1st edn. John Wiley & Sons Inc, Chichester, United Kingdom

R Core Team (2019) R: a language and environment for statistical computing. Vienna, Austria. Available from: https://www.R-project.org/

Raudenbush SW, Yang ML, Yosef M (2000) Maximum likelihood for generalized linear models with nested random effects via high-order, multivariate Laplace approximation. J Comput Graph Stat 9(1):141

Silverman JD, Durand HK, Bloom RJ, Mukherjee S, David LA (2018) Dynamic linear models guide design and analysis of microbiota studies within artificial human guts. Microbiome 6:202

Templ M, Hron K, Filzmoser P (2017) Exploratory tools for outlier detection in compositional data with structural zeros. J Appl Stat 44(4):77–85

Trovo M, Linda A, El Naqa I, Javidan-Nejad C, Bradley J (2010) Early and late lung radiographic injury following stereotactic body radiation therapy (SBRT). Lung Cancer 69(1):77–85

Vehtari A, Simpson D, Gelman A, Yao Y, Gabry J (2015) Pareto smoothed importance sampling

Verbeke G, Lesaffre E (1997) The effect of misspecifying the random-effects distribution in linear mixed models for longitudinal data. Comput Stat Data Anal 23(4):541–556 (http://www.sciencedirect.com/science/article/pii/S0167947396000473)

Acknowledgements

Data collection was partially supported by Award No. P30 CA016059 from the NIH-NCI Cancer Center Support Grant. VAR’s effort was supported by the Colciencias-Fulbright Scholarship No. 481 of 2014.

Funding

Services in support of this research were provided by the VCU Massey Cancer Center Biostatistics Shared Resource, supported in part with funding from NIH-NCI Cancer Center Support Grant P30 CA016059 and the Fulbright U.S. Scholar Program (Grant No. 481).

Author information

Contributions

All authors had full access to the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Conceptualization, N.M., E.W., V.A.R., and R.N.M.; Methodology, N.M., V.A.R.; Software, V.A.R.; Formal analysis, N.M., V.A.R.; Resources, E.W., R.N.M.; Writing (original draft), N.M., V.A.R.; Writing (review and editing), N.M., E.W., V.A.R., and R.N.M.; Visualization, V.A.R.; Supervision, N.M., E.W.; Funding acquisition, N.M., E.W.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Ethical Approval

This study was approved by the institutional review board at VCU (HM20013924). Anonymized data were stored and analyzed on a password-protected computer that was accessible only to the authors.

Appendices

Appendix 1: Isodose Patch Definition

See Fig. 3

Appendix 2: The Likelihood

assuming \({\varvec{Y}}_{i}\) are i.i.d:

with the two-parts model and using conditional distribution for random effects:

re-expressing internal product:

using that \(\exp (\log (x))=x\):

Let \(n_i^*\) be the number of non-zero vectors in \({\varvec{y}}_i\)

Then, working with the inner integral in (13)

Let \(i^{*}\) identify the group of observations \({\varvec{y}}_{ij} \ne {\varvec{0}}\) in \({\varvec{y}}_i\)

where \({\varvec{\widetilde{V}}}_{i^{*}}=vec({\varvec{V}}_{i^{*}})\), \({\varvec{\widetilde{\gamma }}}=vec(\varvec{\gamma })\), \({\varvec{\widetilde{X}}}^*_{i^{*}}=I_{2 \times 2} \otimes {\varvec{X}}^*_{i^{*}}\), \({\varvec{\widetilde{Z}}}^*_{i^{*}}=I_{2 \times 2} \otimes {\varvec{Z}}^*_{i^{*}}\), and \(\widetilde{\Sigma }^{-1}=\Sigma ^{-1} \otimes I_{n_{i^{*}} \times n_{i^{*}}}\)

Let \({\varvec{A}}_{i^*}={\varvec{\widetilde{V}}}_{i^{*}}-{\varvec{\widetilde{X}}}^*_{i^{*}}{\varvec{\widetilde{\gamma }}}\); \({\varvec{f}}_{i1}={\varvec{\mu }}_{1}+ {\varvec{\Psi }}_{dc} {\varvec{\Psi }}_{cc}^{-1} {\varvec{c}}_i\), and \({\varvec{f}}_{i2}={\varvec{\mu }}_{2}+ {\varvec{\Psi }}_{dc} {\varvec{\Psi} }_{cc}^{-1} {\varvec{c}}_i\). Then,

Let \({\varvec{B}}_i={\varvec{\widetilde{Z}}}^{*T}_{i^{*}} \widetilde{\Sigma }^{-1}{\varvec{\widetilde{Z}}}^*_{i^{*}} + {\varvec{H}}^{-1}\)

Note that \({\varvec{H}}^{-1} - {\varvec{H}}^{-1} {\varvec{B}}^{-1}_i {\varvec{H}}^{-1} = {\varvec{H}}^{-1} {\varvec{B}}^{-1}_i {\varvec{\widetilde{Z}}}^{*T}_{i^{*}} \widetilde{\Sigma }^{-1} {\varvec{\widetilde{Z}}}^{*}_{i^{*}}\)
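The identity above follows from \({\varvec{B}}_i - {\varvec{H}}^{-1} = {\varvec{\widetilde{Z}}}^{*T}_{i^{*}} \widetilde{\Sigma }^{-1}{\varvec{\widetilde{Z}}}^*_{i^{*}}\), after left-multiplying by \({\varvec{H}}^{-1}{\varvec{B}}^{-1}_i\). As a sanity check, it can be verified numerically. The sketch below uses random matrices with hypothetical dimensions, not quantities from the fitted model.

```python
import numpy as np

rng = np.random.default_rng(0)
n, q = 6, 2  # hypothetical dimensions: rows of Z and number of random effects

def random_spd(k):
    """Random symmetric positive-definite k x k matrix."""
    a = rng.normal(size=(k, k))
    return a @ a.T + k * np.eye(k)

Z = rng.normal(size=(n, q))   # stands in for Z-tilde*
Sigma = random_spd(n)         # stands in for Sigma-tilde
H = random_spd(q)

Sigma_inv = np.linalg.inv(Sigma)
H_inv = np.linalg.inv(H)
B = Z.T @ Sigma_inv @ Z + H_inv
B_inv = np.linalg.inv(B)

lhs = H_inv - H_inv @ B_inv @ H_inv
rhs = H_inv @ B_inv @ Z.T @ Sigma_inv @ Z
identity_holds = bool(np.allclose(lhs, rhs))
print("identity holds:", identity_holds)
```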

Let \({\varvec{E}}^T_i={\varvec{A}}^T_{i^{*}} \widetilde{\Sigma }^{-1}{\varvec{\widetilde{Z}}}^*_{i^{*}} {\varvec{B}}^{-1}_i {\varvec{H}}^{-1} {\varvec{\Psi }}_{dc} {\varvec{\Psi }}_{cc}^{-1}\), \({\varvec{M}}^T_i=\Delta _{i} {\varvec{\mu }}^{T}_{1} + (1-\Delta _{i}) {\varvec{\mu }}^{T}_{2}\),

and \({\varvec{D}}_i= {\varvec{\Psi }}_{cc}^{-1} {\varvec{\Psi }}^T_{dc} ({\varvec{H}}^{-1} {\varvec{B}}^{-1}_i {\varvec{\widetilde{Z}}}^{*T}_{i^{*}} \widetilde{\Sigma }^{-1} {\varvec{\widetilde{Z}}}^{*}_{i^{*}}) {\varvec{\Psi }}_{dc} {\varvec{\Psi }}_{cc}^{-1}\). Then,

Let \(\mathcal {G}^T_i= {\varvec{E}}^T_{i} - {\varvec{M}}^{T}_{i} {\varvec{H}}^{-1} {\varvec{B}}^{-1}_i {\varvec{\widetilde{Z}}}^{*T}_{i^{*}} \widetilde{\Sigma }^{-1} {\varvec{\widetilde{Z}}}^{*}_{i^{*}} {\varvec{\Psi }}_{dc} {\varvec{\Psi }}_{cc}^{-1}\)

Now, going back to the integral in (13):

The integral in (15) is intractable, but it is similar to the likelihood in a mixed-effects logistic regression where the random effects follow a normal distribution with different location and scale parameters. As discussed by different authors, several strategies have been implemented to evaluate this integral. Using the approach of Olsen and Schafer (2001) with a sixth-order Laplace approximation, we have that:

where:

- \(\widetilde{c}_{i}=({\varvec{\Psi }}^{-1}_{cc} + {\varvec{D}}_i + {\varvec{Z}}^T_i \widetilde{W}_i {\varvec{Z}}_i)^{-1}({\varvec{Z}}^T_i \widetilde{W}_i ({\varvec{U}}^{*}_{i}-{\varvec{X}}_i {\varvec{\beta }})+ \mathcal {G}_{i})\)

- \({\varvec{U}}^{*}_{i}=\widetilde{W}^{-1}_i({\varvec{U}}_i-\widetilde{{\varvec{\pi }}}_i)+\widetilde{{\varvec{\eta }}}_i\)

- \({\varvec{W}}_i\) is a diagonal matrix with elements \(\pi _{ij}(1-\pi _{ij})\)

- \(\widetilde{W}_i\), \(\widetilde{W}^{-1}_i\), \(\widetilde{\varvec{\pi }}_i\), and \(\widetilde{\varvec{\eta }}_i\) are evaluated at \({\varvec{c}}_i=\widetilde{c}_{i}\)

- \(-f^{(2)}(\widetilde{c}_{i})={\varvec{Z}}^T_i \widetilde{W}_i{\varvec{Z}}_i +{\varvec{\Psi }}^{-1}_{cc} + {\varvec{D}}_i={\varvec{G}}_{i}\)

- \(\widetilde{m}^{(k)}_{ij}\) is the \((k-1)\)th derivative of \(\pi _{ij}\) with respect to \(\eta _{ij}\) evaluated at \(\widetilde{c}_{i}\)
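For the logit link, the derivatives \(m^{(k)}\) defined in the list above have simple closed forms in terms of \(\pi\) and \(w=\pi (1-\pi )\). The sketch below writes them out and checks one of them against a central finite difference. The function name `logistic_derivatives` is hypothetical, and the closed forms were obtained by direct differentiation rather than copied from the source.

```python
import math

def logistic_derivatives(eta):
    """Return {k: m^(k)} for k = 2..6, where m^(k) is the (k-1)th derivative
    of pi = 1 / (1 + exp(-eta)) with respect to eta."""
    pi = 1.0 / (1.0 + math.exp(-eta))
    w = pi * (1.0 - pi)
    return {
        2: w,                                     # first derivative
        3: w * (1.0 - 2.0 * pi),                  # second derivative
        4: w * (1.0 - 6.0 * w),                   # third derivative
        5: w * (1.0 - 2.0 * pi) * (1.0 - 12.0 * w),
        6: w * (1.0 - 30.0 * w + 120.0 * w * w),  # fifth derivative
    }

# Finite-difference check: m^(4) is the derivative of m^(3) with respect to eta.
eta, h = 0.7, 1e-5
fd_m4 = (logistic_derivatives(eta + h)[3] - logistic_derivatives(eta - h)[3]) / (2 * h)
m_at_eta = logistic_derivatives(eta)
m_at_zero = logistic_derivatives(0.0)
print(m_at_eta[4], fd_m4)
```

At \(\eta =0\) the even-order derivatives of \(\pi\) vanish by symmetry, so \(m^{(3)}\) and \(m^{(5)}\) are zero there.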

Now, going back to (15),

Then,

Finally, the approximate log-likelihood is:

where

- \(P_{i} = 1-\frac{1}{8}\sum _{j=1}^{n_i}\widetilde{m}^{(4)}_{ij} ({\varvec{Z}}_{ij} {\varvec{G}}^{-1}_{i} {\varvec{Z}}^T_{ij})^2 - \frac{1}{48} \sum _{j=1}^{n_i}\widetilde{m}^{(6)}_{ij} ({\varvec{Z}}_{ij} {\varvec{G}}^{-1}_{i} {\varvec{Z}}^T_{ij})^3 + \frac{15}{72} \Big ( \sum _{j=1}^{n_i} {\varvec{Z}}^T_{ij} \widetilde{m}^{(3)}_{ij} {\varvec{Z}}_{ij} {\varvec{G}}^{-1}_{i} {\varvec{Z}}^T_{ij} \Big )^T {\varvec{G}}^{-1}_{i} \Big ( \sum _{j=1}^{n_i} {\varvec{Z}}^T_{ij} \widetilde{m}^{(3)}_{ij} {\varvec{Z}}_{ij} {\varvec{G}}^{-1}_{i} {\varvec{Z}}^T_{ij} \Big )\)
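The correction factor \(P_i\) can be transcribed term by term into code. The sketch below is only an illustration of the formula as written above, not the authors' implementation. The function name `laplace_correction` and the inputs (a single random intercept with all linear predictors equal to zero) are hypothetical.

```python
import numpy as np

def laplace_correction(Z, G, m3, m4, m6):
    """Evaluate P_i for one subject.

    Z          : (n_i, q) random-effects design matrix, one row Z_ij per observation
    G          : (q, q) matrix G_i
    m3, m4, m6 : length-n_i arrays of m^(3), m^(4), m^(6) at the mode
    """
    G_inv = np.linalg.inv(G)
    # a_j = Z_ij G^{-1} Z_ij^T, a scalar for each observation j
    a = np.einsum("jp,pq,jq->j", Z, G_inv, Z)
    # k = sum_j Z_ij^T m3_j a_j, a vector of length q
    k = Z.T @ (m3 * a)
    return float(
        1.0
        - np.sum(m4 * a**2) / 8.0
        - np.sum(m6 * a**3) / 48.0
        + (15.0 / 72.0) * (k @ G_inv @ k)
    )

# Hypothetical inputs: three observations, random intercept only, eta = 0
# for every observation, so m3 = 0, m4 = -1/8 and m6 = 1/4 under the logit link.
Z = np.ones((3, 1))
G = np.array([[4.0]])
m3 = np.zeros(3)
m4 = np.full(3, -0.125)
m6 = np.full(3, 0.25)
P = laplace_correction(Z, G, m3, m4, m6)
print(P)
```

With these inputs the correction is close to 1, so the higher-order terms change the basic Laplace approximation only slightly. Larger departures from 1 would be expected when \({\varvec{G}}_i\) is small relative to the derivative terms.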

Appendix 3: The Score Vectors

Score vectors were derived from those proposed by Olsen and Schafer (2001), extended to accommodate multivariate data.

where

and

Appendix 4: Assumptions and Goodness of Fit of the Model

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rodriguez, V.A., Mahon, R.N., Weiss, E. et al. Two-Part Mixed Effects Mixture Model for Zero-Inflated Longitudinal Compositional Data. J Indian Soc Probab Stat (2024). https://doi.org/10.1007/s41096-024-00189-6

DOI: https://doi.org/10.1007/s41096-024-00189-6