Abstract

Debris flows are among the most dangerous geological hazards in steep terrain. Systematic debris flow mitigation and management require debris flow evaluation. Over the past few decades, several methods for assessing debris flow susceptibility have been developed. The present study reviews global research on debris flow susceptibility from 2003 to July 2022. The findings show that the number of published papers dealing with debris flow susceptibility grew over the study period, and that China currently accounts for the largest number of debris flow studies. This article discusses the most frequently used models together with their advantages and disadvantages. A total of 96 causative factors have been considered responsible for the occurrence of debris flows, the top five being slope, aspect, curvature, lithology and rainfall. Slope is regarded as the most significant causative factor in 14.5 per cent of the publications, while the support-vector machine (SVM), used in 8.5 per cent of the articles, is the most popular approach for assessing debris flow susceptibility. Finally, new advances in geographic information systems (GIS), remote sensing and computing, together with greater data accessibility, are identified as important drivers of growing research interest in debris flow susceptibility.


1 Introduction

Debris flow is a distinctive type of mass movement triggered by heavy rain or snowmelt in steep, hilly terrain. The term landslide is used to describe mass movement events in general. Varnes (1978) classified landslides according to the material involved and the type of movement. He distinguished two types of material, rock and soil, and subdivided soil into debris and earth; the types of movement are spreads, falls, topples, slides and flows (Varnes 1978). A flow is a spatially continuous movement in which shear surfaces are short-lived, closely spaced and rarely preserved. The concept of debris flow has evolved as successive researchers have proposed their own definitions. According to Varnes, a debris flow is a flow-like landslide containing a high proportion of coarse particles (Varnes 1978). It frequently results from abnormally heavy rain, which produces torrential flow on steep terrain and rapid flow through pre-existing drainage channels. Varnes also identified rainfall rate and duration, the physical properties of the materials and deposits, pore-water pressure, slope angle and movement mechanism as the factors behind debris flow initiation (Varnes 1978). Moreover, debris flow occurs when the debris material becomes saturated, producing rapid movement within a confined channel (Hungr et al. 2001); according to this research, debris flow velocities can exceed 1 m/s and approach 10 m/s. Sassa et al. (2007) describe debris flow as a continuous fluid mixture of water and silt and identify three main causes: first, channel-bed erosion due to heavy rainfall; second, a landslide that mobilises material; and third, the failure of a natural dam in the upper reaches of the slope.

Debris flows are classified into channelized debris flows and hillslope (open-slope) debris flows according to the topographic and geological characteristics of the region where they occur. Hillslope debris flows create their own course down the slope, whereas channelized debris flows follow a pre-existing path such as a gully, river, valley or depression (Nettleton et al. 2005). Debris flow susceptibility zonation (DFSZ) mapping identifies debris-flow-prone zones in hilly areas, dividing them into susceptibility classes ranked by the likelihood of debris flow occurrence. In recent years, DFSZ mapping has become a widely accepted and extensively used approach in debris flow research worldwide. The present study reviews the global debris flow susceptibility literature published between 2003 and July 2022, using a database of 90 research papers extracted from the Web of Science portal.

2 Methodology

A bibliographic search was carried out in the Web of Science database (2003–2022) using the keyword combination "susceptibility*" and "Debris flow*". Only peer-reviewed journal articles written in English were considered, as these are generally of the highest quality; English was chosen because it is widely understood by the international scientific community. After the studies were screened for relevance to the topic, the final set of articles was selected, and their critical analysis is presented in the following sections.

3 The Chronological Evolution of Published Articles

Of the 90 papers on debris flow susceptibility published between 2003 and July 2022 (Fig. 1), only ten appeared between 2003 and 2012. Publication output rose sharply in 2013, and since then at least four papers on debris flow susceptibility have been published every year. Fig. 1 thus shows that researchers began to focus on debris flow susceptibility modelling after 2012.

4 The Pattern of Journal Publication

As presented in Fig. 2, 64.7% of all articles on debris flow susceptibility reviewed for 2003–July 2022 were published in ten journals: Natural Hazards and Earth System Sciences, Journal of Mountain Science, Natural Hazards, Remote Sensing, Geomorphology, Landslides, Earth Surface Processes and Landforms, Environmental Earth Sciences, Water, and Bulletin of Engineering Geology and the Environment. Of these ten journals, Natural Hazards and Earth System Sciences, Journal of Mountain Science and Natural Hazards published 54.3% of the papers.

Top ten journals (out of 35) by number of publications in the literature database. The colour of each horizontal bar indicates the number of articles (four classes), and the bar length shows the average number of citations (four classes). Square brackets indicate that a class limit is included, and round brackets that it is excluded.

5 Authors and Study Area

Analysis of the 90 papers on debris flow susceptibility shows that they were written by 200 authors. Only 3.44 per cent of the articles were written by a single author, while 96.5 per cent were co-written by two or more authors. Between 2003 and July 2022, each author contributed to an average of 0.44 publications. Figure 3 shows the contributions of the top ten authors of debris flow susceptibility assessment articles for 2003–July 2022.

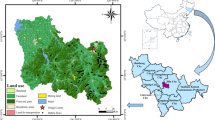

The 90 papers covered a total of 118 study areas, the largest share of which are located in China (Fig. 4).

6 Thematic Variables

The authors used a total of 96 distinct input thematic variables, with a minimum of 5 and a maximum of 18 thematic variables per application of a susceptibility model. Table 1 shows that 75 of the 96 distinct variables appeared only once or twice in the database, suggesting that they are less significant than the frequently used variables.

This large set of input thematic variable names was grouped into 17 classes that authors appear to use most frequently, using two key criteria. First, names describing the same thematic variable were merged; for example, "Plane Curvature" and "Profile Curvature" were placed in the class "Curvature". Second, descriptors with different meanings but related to the same theme were grouped; for instance, "geology" and "lithology" were combined into the class "geology". The 17 classes were then grouped into five thematic clusters: morphological, hydrological, geological, land cover and other variables (Fig. 5). These five clusters account for 72.3% of the thematic variables frequently used by the authors.

7 Methods for Investigating the Susceptibility of Debris Flow

Debris flow is a severe natural hazard that can occur anywhere in the world. Rapid debris flows destroy structures and put lives and property at risk [5, 38, and 65]. Developing efficient strategies to mitigate their disastrous consequences is therefore critical. A debris flow susceptibility map (DFSM) depicts the spatial pattern of the likelihood of debris flows triggered by severe weather, and is widely used for anticipating debris flows and mitigating their serious consequences (Chen et al. 2016; Li et al. 2017; Polat and Erik 2020; Qiao et al. 2021). DFSM approaches are broadly divided into two classes, qualitative and quantitative (Intarawichian and Dasananda 2011; Ayalew and Yamagishi 2005; Kanungo et al. 2009; Aleotti and Chowdhury 1999). In qualitative techniques, experts use their field experience and observation of the study area to assign weights to the conditioning parameters (Du et al. 2019; Yalcin et al. 2011). Qualitative approaches include, for example, weighted linear combination and the analytic hierarchy process (AHP). In the quantitative approach, the correlation between the influencing factors and existing and past debris flows is expressed numerically as weights for the causative factors and their classes (Kanungo et al. 2009; Yalcin et al. 2011). Quantitative approaches include statistical, probabilistic and distribution-free methods (Kanungo et al. 2009). Various qualitative and quantitative methodologies for landslide susceptibility mapping have been used worldwide. Over the last few decades, statistical methodologies (Banerjee et al. 2018; Chen et al. 2014; Xu et al. 2013; Sarkar et al. 2008; Saha et al. 2005; He et al. 2012; Merghadi et al. 2020) have been applied, including frequency ratio (Intarawichian and Dasananda 2011; Angillieri 2020; Demir 2019; Lee and Pradhan 2007; Xiong et al. 2020; Dou et al. 2019), weight of evidence (Chen et al. 2019; Sujatha et al. 2014; Youssef et al. 2016) and certainty factor (Wubalem 2021; Wang et al. 2015; Kanungo et al. 2011) models. In recent years, researchers have also applied machine learning methods to susceptibility mapping, such as artificial neural networks (Gao et al. 2021; Chen et al. 2020; Elkadiri et al. 2014), Bayesian networks [29, 30], random forest (Liang et al. 2020; Xiong et al. 2020; Dou et al. 2019), decision trees (Zhang et al. 2019; Arabameri et al. 2021) and the Naïve Bayes algorithm (Zhang et al. 2019; Qing et al. 2020; Chen et al. 2017). Xu et al. (2013) used the information value method to produce a debris flow susceptibility map for Sichuan Province, China. In India, Sujatha and Sridhar (2017) used an analytic network process to create a debris flow susceptibility map. Achour et al. (2018) created such a map in Portugal using logistic regression and frequency ratio models, and Qin et al. (2019) prepared DFSZ maps using the frequency ratio method. When appropriate geotechnical and hydrological data are available, physically based models are a sound alternative for forecasting debris flows at a regional scale. Models such as EDDA (Erosion–Deposition Debris flow Analysis) and FLO-2D can simulate debris flow erosion, movement and deposition (Gomes et al. 2013; Chen and Zhang 2015; Shen et al. 2018). In past statistical approaches, a debris flow was commonly treated as a point. However, knowledge of the initiation points and source areas of regional debris flows is crucial for determining their susceptibility (Ciurleo et al. 2018). Debris flows and landslides are very difficult to analyse separately, as seen in the Yongji County study (Park et al. 2016). Landslide inventory research is therefore critical for precisely forecasting debris flow source areas, since most debris flows originate from landslides (Blahut et al. 2010).
Numerous established physically based models (Kang and Lee 2018) can reproduce debris flows generated by shallow landslides, mainly LISA (Level I Stability Analysis) (Hammond et al. 1992), SMORPH (Shaw and Johnson 1995), SHETRAN (Ewen et al. 2000), SINMAP (Pack et al. 2001), SHALSTAB (Dietrich et al. 1993) and TRIGRS (Baum et al. 2008). Because it is often too difficult to collect sufficiently precise hydrological and geotechnical data in the field, data-driven models based on statistical principles are used to produce DFSMs instead (Qiao et al. 2019; Zhang et al. 2014). Each technique has its own strengths. When no single model can meet several requirements simultaneously, integrating models is usually appropriate. In some studies, DFSM reliability has been reduced by redundant factors; to avoid this, the factor selection procedure has been merged with DFSM modelling so that only significant factors are retained (Yang et al. 2019). Fusing several statistical methods to increase the accuracy of debris flow prediction has become increasingly popular, combining classic statistical methods with stacked machine learning models (Dou et al. 2019) (Table 2).

All of the methods mentioned above were used in debris flow research between 2003 and July 2022. This section briefly explains the models used in more than 2 per cent of the papers in the database.

7.1 Semi-quantitative Approaches

Debris flow susceptibility can also be assessed using semi-quantitative methods (Li et al. 2021b). These belong to multi-criteria decision analysis, which includes fuzzy set-based analysis (Zhang et al. 2022), the analytic hierarchy process (AHP) and other methodologies.

7.1.1 Analytic Hierarchy Process (AHP) approach

The AHP is a multi-criteria decision-making approach that can be used to assess debris flow susceptibility. It is a methodical process comprising problem definition, determination of the objective and alternatives, formulation of the pairwise comparison matrix, weight determination and determination of overall priorities (Saaty 2008). Debris flow is a complex process caused by several factors (Li et al. 2021b). The AHP can measure the link between causative factors and debris flow in absolute or relative terms (Pham et al. 2016; Qiao et al. 2019; Sun et al. 2021). In absolute measurement, each alternative is compared with one ideal alternative, whereas in relative measurement each alternative is compared with many other alternatives. Absolute measurement is a normative approach based on what is known to be best. Relative measurement, on the other hand, is descriptive and depends on the evaluator's competence and ability to check observations (Pardeshi et al. 2013). Each debris flow causative factor can be treated as an alternative. These factors are assigned values from 1 to 9 according to their relative importance in causing slope instability (Dou et al. 2019; Li et al. 2021b). Pairwise comparison matrices are then built, from which the Consistency Index (CI) and Consistency Ratio (CR) are calculated (Li et al. 2021b). Because the AHP is a subjective weighting method, the subjectivity of the person assigning weights can lead it to allocate substantial weight to factors that correlate only weakly with debris flow occurrence in the study area, which reduces the model's predictive capacity.
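The weighting and consistency steps can be sketched as follows. This is a minimal illustration, assuming the common principal-eigenvector method: the factor weights are the normalised principal eigenvector of the pairwise comparison matrix (approximated here by power iteration), CI = (λmax − n)/(n − 1) and CR = CI/RI with Saaty's random index values. The three-factor matrix (slope, rainfall, lithology) is hypothetical, and the CR < 0.1 acceptance threshold is the conventional rule of thumb rather than something stated in this review.

```python
# Sketch: AHP weights and consistency check (hypothetical 3-factor example).
# Saaty's random consistency index (RI) for matrices of order n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp(matrix, iterations=100):
    """Return (weights, lambda_max, CI, CR) for a reciprocal pairwise matrix."""
    n = len(matrix)
    w = [1.0 / n] * n
    # Power iteration converges to the principal eigenvector of a positive matrix.
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    # lambda_max is estimated as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return w, lambda_max, ci, cr


# Hypothetical judgements on the 1-9 scale: slope vs rainfall vs lithology.
pairwise = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 3.0],
    [1 / 5, 1 / 3, 1.0],
]
weights, lam, ci, cr = ahp(pairwise)
print([round(x, 3) for x in weights], round(cr, 3))
```

A CR near zero means the judgements are almost perfectly transitive; if the CR exceeded the chosen threshold, the pairwise judgements would normally be revisited before the weights are used.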

7.2 Quantitative Approaches

Quantitative methodologies predict debris flow susceptibility from real-world data and analysis, and they reduce the bias inherent in qualitative techniques.

7.2.1 Statistical Approaches

Statistical approaches are the most commonly used methods for assessing debris flow susceptibility (Sun et al. 2021; Dash et al. 2022). An evaluator's capability, technical skill and expertise in applying a particular statistical model matter more than the technique itself. Overall, there are no defined standards or practice guidelines for evaluating debris flow susceptibility with statistical modelling, so selecting a suitable method is often challenging. The statistical methods used in the reviewed literature are described below.

7.2.1.1 The Information Content Model (ICM)

The information content model (ICM) is based on Shannon's communication theory, which first proposed the concept of information and the formula for computing information entropy (Shannon 1948). ICM is a statistical analysis and forecasting method: it calculates the information content value of each influencing factor and builds an evaluation and prediction model from known debris flow occurrences and their influencing factors (Li et al. 2021b). The debris flow susceptibility of the entire study region can then be assessed by analogy. The ICM technique helps to eliminate subjective judgement and provides a more objective evaluation model. However, the method can undervalue some factors, lowering its predictive effectiveness.

7.2.1.2 Principal component analysis (PCA)

Principal component analysis (PCA), a multivariate statistical method, has been proposed for creating debris flow susceptibility maps of a study area in a GIS (Li et al. 2021b). PCA transforms correlated variables into uncorrelated ones (Gorsevski 2001); it was used to reduce redundant information among the variables and to select the most influential factors and their weights based on the percentage of variance explained. The results are then fed into a geographic information system (GIS) model to assess and map the study area's susceptibility to debris flow. The approach uses a linear model that estimates the probability that each pixel contains a debris flow. It minimises the influence of redundancy among the analysed factors by automating the analysis of most characteristics associated with slope failure, while discarding factors that do not influence debris flow triggering.
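To make the procedure concrete, the dependency-free sketch below standardises a factor table, eigen-decomposes its correlation matrix with Jacobi rotations, keeps the leading components up to a cumulative explained variance of 85%, and derives each factor's weight as the variance-weighted sum of its absolute loadings. The 85% threshold and this loading-based weighting rule are assumptions for illustration only; the review does not specify how the cited studies converted PCA output into factor weights, and the factor table is hypothetical.

```python
import math


def standardise(X):
    """Z-scores per column, using the sample standard deviation."""
    m = len(X)
    cols = list(zip(*X))
    means = [sum(c) / m for c in cols]
    sds = [math.sqrt(sum((v - mu) ** 2 for v in c) / (m - 1)) for c, mu in zip(cols, means)]
    return [[(v - mu) / sd for v, mu, sd in zip(row, means, sds)] for row in X]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def jacobi_eigen(S, sweeps=50, tol=1e-12):
    """Eigenvalues and eigenvector columns of a symmetric matrix (cyclic Jacobi)."""
    n = len(S)
    A = [row[:] for row in S]
    V = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if all(abs(A[p][q]) < tol for p in range(n) for q in range(n) if p != q):
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(A[p][q]) < tol:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                phi = 0.5 * math.atan2(2 * A[p][q], A[q][q] - A[p][p])
                c, s = math.cos(phi), math.sin(phi)
                P = [[float(i == j) for j in range(n)] for i in range(n)]
                P[p][p], P[q][q], P[p][q], P[q][p] = c, c, s, -s
                Pt = [list(r) for r in zip(*P)]
                A = matmul(Pt, matmul(A, P))
                V = matmul(V, P)
    return [A[i][i] for i in range(n)], V


def pca_factor_weights(X, keep=0.85):
    """Explained-variance ratios and normalised factor weights (assumed rule)."""
    Z = standardise(X)
    m, n = len(Z), len(Z[0])
    # Correlation matrix of the standardised factors.
    R = [[sum(Z[k][i] * Z[k][j] for k in range(m)) / (m - 1) for j in range(n)]
         for i in range(n)]
    values, vectors = jacobi_eigen(R)
    total = sum(values)
    order = sorted(range(n), key=lambda c: values[c], reverse=True)
    ratios = [values[c] / total for c in order]
    retained, cumulative = [], 0.0
    for c, ratio in zip(order, ratios):
        retained.append((c, ratio))
        cumulative += ratio
        if cumulative >= keep:
            break
    # Assumed weighting rule: variance-weighted sum of absolute loadings.
    raw = [sum(ratio * abs(vectors[f][c]) for c, ratio in retained) for f in range(n)]
    return ratios, [w / sum(raw) for w in raw]


# Hypothetical factor table: slope, relief (perfectly correlated with slope), NDVI.
factors = [[1, 2, 5], [2, 4, 3], [3, 6, 4], [4, 8, 1], [5, 10, 2]]
ratios, weights = pca_factor_weights(factors)
print([round(r, 3) for r in ratios], [round(w, 3) for w in weights])
```

In this toy table the first component already explains more than 85% of the variance, so the two redundant factors (slope and relief) collapse onto one component and receive equal weights, which is the redundancy-reduction effect described above.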

7.2.1.3 Information Value (IV) Model

This technique is a bivariate statistical analysis method (Yin et al. 1988) that can measure the effect of independent factors on the distribution of a dependent variable (Melo et al. 2012). Researchers worldwide have used it to analyse debris flow susceptibility (Li et al. 2021a; Xu et al. 2013).

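The information value is calculated using Eq. (1):

$$IV_{xy} = \log\left(\frac{Dens_{clas}}{Dens_{map}}\right) = \log\left(\frac{ND_{xy}/N_{xy}}{ND_{x}/N_{x}}\right) \tag{1}$$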

Here IVxy is the information value of the y-th class of the x-th causative factor; Densclas is the debris flow density within the factor class and Densmap is the debris flow density over the whole factor map; NDxy is the number of pixels affected by debris flow in the y-th class of the x-th causative factor and Nxy is the number of pixels in that class; NDx is the total number of pixels affected by debris flow in the x-th causative factor map and Nx is the total number of pixels in that map. This model has been used extensively in previous investigations in the Indian Himalayan terrain. An IVm image for a causative factor is created by combining the IVxy images of the distinct classes of that factor. These IVm images, which express the information values of the classes of the causative factors, are then integrated by arithmetic overlay. In the GIS environment, the debris flow susceptibility index (DFSI) of each pixel is computed using Eq. (2).

$$DFSI = \sum_{m=1}^{Z} IV_{m} \tag{2}$$

where Z is the total number of causal factors.
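A minimal sketch of Eqs. (1) and (2) for class pixel counts is given below. It assumes the natural logarithm (studies also use log10, which only rescales the values), and it gives classes with no debris flow pixels, for which the logarithm is undefined, the lowest information value observed for that factor; that rule is an illustrative assumption, as studies handle empty classes differently. The pixel counts are hypothetical.

```python
import math


def information_values(class_pixels, class_df_pixels):
    """Eq. (1): IV_xy = ln[(ND_xy / N_xy) / (ND_x / N_x)] for each class y of factor x.

    class_pixels:    {class: N_xy}, pixel count of each class
    class_df_pixels: {class: ND_xy}, debris-flow pixel count of each class
    """
    n_x = sum(class_pixels.values())
    nd_x = sum(class_df_pixels.values())
    dens_map = nd_x / n_x                                  # Dens_map
    raw = {}
    for cls, n_xy in class_pixels.items():
        nd_xy = class_df_pixels.get(cls, 0)
        # Dens_clas / Dens_map; the log is undefined when a class has no debris flow.
        raw[cls] = math.log((nd_xy / n_xy) / dens_map) if nd_xy > 0 else None
    # Assumption: empty classes receive the lowest IV observed for this factor.
    floor = min(v for v in raw.values() if v is not None)
    return {cls: floor if v is None else v for cls, v in raw.items()}


def dfsi(pixel_classes, iv_tables):
    """Eq. (2): sum the IV of the pixel's class over all Z factors."""
    return sum(iv_tables[factor][cls] for factor, cls in pixel_classes.items())


# Hypothetical pixel counts for two causative factors.
slope_iv = information_values({"0-15": 5000, "15-30": 3000, ">30": 2000},
                              {"0-15": 10, "15-30": 40, ">30": 50})
rain_iv = information_values({"low": 6000, "high": 4000},
                             {"low": 20, "high": 80})
tables = {"slope": slope_iv, "rainfall": rain_iv}
print(dfsi({"slope": ">30", "rainfall": "high"}, tables))
```

Positive IVs mark classes where debris flows are denser than the map average and negative IVs the opposite, so summing them over factors ranks pixels by susceptibility.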

7.2.1.4 Index Entropy Model

Vlčko et al. (1980) proposed the index entropy model. It is a bivariate statistical model that determines the area percentages and weights of the various factors affecting debris flow at all levels. The weight parameters of the index entropy model have a Gaussian distribution. The entropy index identifies the key controlling factors of debris flow development under natural conditions. The weights range from 0 to 1; the greater a factor's contribution to debris flow generation, the closer its weight is to 1. On the GIS platform, the layer of each factor is overlaid on the debris flow inventory layer, and the index entropy model computes the weight of each factor together with the average probability density of debris flow. The dominant factors are then identified. The entropy methodology has been used extensively to calculate weight indices for natural disasters, including integrated environmental impact studies of natural processes such as droughts, sand storms and debris flows (Chen et al. 2017).
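The review gives no equations for the index entropy model, so the sketch below assumes one formulation common in the susceptibility literature: the frequency ratios of a factor's classes are normalised into probabilities, their entropy is compared with the maximum possible entropy to give an information coefficient between 0 and 1, and the factor weight is that coefficient multiplied by the mean frequency ratio. The frequency-ratio values are hypothetical.

```python
import math


def entropy_index_weight(fr_values):
    """Weight W_j of one factor from the frequency ratios P_ij of its S_j classes.

    Assumed formulation: normalised P_ij -> entropy H_j -> information
    coefficient I_j = (H_max - H_j) / H_max in [0, 1] -> W_j = I_j * mean(P_ij).
    Requires at least two classes (H_max = log2(S_j) must be non-zero).
    """
    s_j = len(fr_values)
    total = sum(fr_values)
    probs = [p / total for p in fr_values]                 # normalised P_ij
    h_j = -sum(p * math.log2(p) for p in probs if p > 0)   # entropy H_j
    h_max = math.log2(s_j)                                 # maximum entropy
    i_j = (h_max - h_j) / h_max                            # 0 (uniform) .. 1 (concentrated)
    return i_j * (total / s_j)


# Hypothetical frequency ratios per class for two factors.
slope_fr = [0.2, 1.3, 2.5]    # debris flows concentrated in steep classes
aspect_fr = [0.9, 1.0, 1.1]   # debris flows spread almost evenly
print(entropy_index_weight(slope_fr), entropy_index_weight(aspect_fr))
```

A factor whose debris flows are spread evenly across its classes has an information coefficient near 0, while one whose debris flows concentrate in a few classes approaches 1, which is how the dominant factors emerge.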

7.2.1.5 Logistic Regression (LR)

Among multivariate statistical techniques, logistic regression (LR) is the most widely used method for spatially predicting debris flow susceptibility and hazard zonation (Li et al. 2021a; Liang et al. 2020; Xiong et al. 2020; Achour et al. 2018). Using categorical and continuous factors, LR can predict a binary response variable such as the presence or absence of debris flows (Liang et al. 2020). Once fitted, the model estimates the probability of debris flow occurrence. Equation (3) defines the link between the presence of debris flow in a given area and the variables that influence it (Achour et al. 2018):

$$P = \frac{1}{1 + e^{-z}} \tag{3}$$

Here P, the estimated probability of debris flow, varies from 0 to 1 along an S-shaped curve, and z is a linear combination of the predictors, given by Eq. (4), which is fitted to the data set:

$$z = b_0 + b_1 x_1 + b_2 x_2 + \cdots + b_n x_n \tag{4}$$

where b0 is the intercept of the model, bi (i = 1, 2, …, n) are the slope coefficients of the logistic regression model and xi (i = 1, 2, …, n) are the independent variables. Logistic regression can be used whether the data are continuous, discrete or a combination of the two. However, LR results cannot distinguish the effects of the individual classes of a factor on debris flow frequency. In LR the dependent variable must be binary, such as yes/no, zero/one or presence/absence (Chen et al. 2017). The frequency ratio (FR) model is simple to use, whereas LR is more demanding because the data must be exported from the GIS to external statistical software. The FR method evaluates the relationship between one dependent variable (debris flows) and many independent variables (predisposing factors) using discrete data only, whereas LR can also evaluate continuous independent variables.
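Eqs. (3) and (4) can be illustrated with a small sketch. Statistical software normally fits b0, …, bn by maximum likelihood; here, as a simple dependency-free stand-in, the coefficients are fitted by batch gradient descent on the negative log-likelihood. The learning rate, epoch count and the single standardised slope factor are hypothetical choices.

```python
import math


def sigmoid(z):
    """Eq. (3): P = 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear_term(b, x):
    """Eq. (4): z = b0 + b1*x1 + ... + bn*xn."""
    return b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))


def fit_logistic(X, y, learning_rate=0.1, epochs=2000):
    """Fit b0..bn by gradient descent on the negative log-likelihood (illustrative)."""
    b = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(b)
        for x, target in zip(X, y):
            error = sigmoid(linear_term(b, x)) - target
            grad[0] += error
            for j, xj in enumerate(x):
                grad[j + 1] += error * xj
        b = [bi - learning_rate * g / len(X) for bi, g in zip(b, grad)]
    return b


# Hypothetical training cells: standardised slope, with 1 = debris flow present.
X = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]
y = [0, 0, 0, 1, 1, 1]
coefficients = fit_logistic(X, y)
print(coefficients, sigmoid(linear_term(coefficients, [1.5])))
```

Because the binary dependent variable enters only through the error term, continuous and discrete predictors can be mixed freely in X, which matches the flexibility noted above.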

7.2.1.6 Frequency Ratio

Among bivariate statistical approaches, the frequency ratio method is the most extensively employed (Angillieri 2020). It uses the spatial distribution of past debris flows in the area and their association with the classes of the key causative factors (Achour et al. 2018; Qin et al. 2019; Wu et al. 2019; Kurilla and Fubelli 2022).

The frequency ratio (FR) is determined using Eq. (5):

$$FR_{ij} = \frac{ND_{ij}/ND_{i}}{N_{ij}/N_{i}} \tag{5}$$

Here FRij is the frequency ratio of the j-th class of the i-th causative factor; NDij is the number of pixels affected by debris flow in the j-th class of the i-th causative factor; NDi is the total number of debris-flow-affected pixels in the i-th causative factor layer (i.e. the total number of pixels in the study area affected by debris flow); Nij is the number of pixels in the j-th class of the i-th causative factor; and Ni is the total number of pixels in the i-th causative factor layer (i.e. the total number of pixels in the study area). FRij > 1 indicates a stronger-than-average relationship with debris flow occurrence and FRij < 1 a weaker one. An FRl image for a causative factor is built by combining the FRij images for the classes of that factor. These FRl images, which represent the frequency ratio values of the classes of the causative factors, are then integrated by arithmetic overlay. In a GIS environment, the debris flow susceptibility index (DFSI) of each pixel is then determined using Eq. (6) (Angillieri 2020).

$$DFSI = \sum_{l=1}^{t} FR_{l} \tag{6}$$

where t is the total number of causal factors (i.e. the corresponding thematic layers).
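The FR calculation and the arithmetic overlay can be sketched on small rasters represented as nested lists. The two-by-three class grids and the debris flow inventory mask are hypothetical; a real study would read these layers from GIS rasters, but the arithmetic of Eqs. (5) and (6) is the same.

```python
from collections import Counter


def frequency_ratio(factor_grid, df_grid):
    """Eq. (5): FR_ij = (ND_ij / ND_i) / (N_ij / N_i) for every class j of one factor."""
    classes = [c for row in factor_grid for c in row]
    flags = [f for row in df_grid for f in row]      # 1 = debris-flow pixel
    n_i, nd_i = len(classes), sum(flags)
    n_ij = Counter(classes)
    nd_ij = Counter(c for c, f in zip(classes, flags) if f)
    return {j: (nd_ij[j] / nd_i) / (n_ij[j] / n_i) for j in n_ij}


def dfsi_grid(factor_grids, df_grid):
    """Eq. (6): arithmetic overlay, summing FR_l of each pixel's class over t factors."""
    tables = [frequency_ratio(g, df_grid) for g in factor_grids]
    rows, cols = len(df_grid), len(df_grid[0])
    return [[sum(t[g[r][c]] for t, g in zip(tables, factor_grids)) for c in range(cols)]
            for r in range(rows)]


# Hypothetical 2 x 3 rasters: class labels per pixel and a debris-flow inventory mask.
slope = [["low", "low", "high"], ["low", "high", "high"]]
lithology = [["A", "B", "B"], ["A", "B", "A"]]
inventory = [[0, 0, 1], [0, 1, 0]]
print(frequency_ratio(slope, inventory))
print(dfsi_grid([slope, lithology], inventory))
```

Using `Counter` means a class with no debris flow pixels simply gets NDij = 0 and therefore FR = 0, the weakest possible association.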

7.2.1.7 Shannon’s Entropy

C. E. Shannon introduced the notion of entropy into information theory in 1948 (Shannon 1948). He used the term "information entropy" for the average amount of information remaining after redundancy is removed, and gave a mathematical expression for computing it, borrowing the concept of entropy from thermodynamics. Shannon's entropy model improves on the frequency ratio model, which weights only the sub-factors (classes) and not the causative factors themselves. Shannon's entropy measures the uncertainty or instability of a system. Each index in the assessment index system represents different qualities of the objects, and their values have different dimensions. For a given system, some indicators are preferably as small as possible, while others are preferably as large as possible. As a result, these evaluation indicators cannot be compared directly without normalisation.
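The review does not state the entropy equations, so the sketch below uses the widely applied entropy weight method as an assumption. Min-max normalisation removes the different dimensions and aligns the "smaller is better" and "larger is better" directions described above, and each indicator's weight grows with how far its Shannon entropy falls below the maximum. The catchment indicators (relief in metres, vegetation cover in per cent) are hypothetical.

```python
import math


def entropy_weights(matrix, larger_is_worse):
    """Entropy weight method (assumed formulation).

    matrix[i][j]       -- value of indicator j for sample (e.g. catchment) i
    larger_is_worse[j] -- True if larger values mean higher susceptibility
    """
    m = len(matrix)
    k = 1.0 / math.log(m)              # scales entropy into [0, 1]
    divergences = []
    for j, column in enumerate(zip(*matrix)):
        lo, hi = min(column), max(column)
        span = (hi - lo) or 1.0
        # Min-max normalisation removes units and aligns indicator directions.
        norm = [(v - lo) / span if larger_is_worse[j] else (hi - v) / span for v in column]
        total = sum(norm)
        if total == 0:                 # constant indicator: carries no information
            divergences.append(0.0)
            continue
        p = [v / total for v in norm]
        e_j = -k * sum(x * math.log(x) for x in p if x > 0)   # Shannon entropy
        divergences.append(1.0 - e_j)
    s = sum(divergences)
    return [d / s for d in divergences]


# Hypothetical catchments: [relief (m), vegetation cover (%)].
catchments = [
    [850, 60],
    [1200, 35],
    [400, 80],
    [950, 50],
]
print(entropy_weights(catchments, [True, False]))
```

Here more relief is treated as raising susceptibility and more vegetation cover as lowering it; after normalisation both indicators are comparable, which is exactly what direct comparison of the raw values could not achieve.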

7.2.1.8 The Certainty Factor (CF)

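The certainty factor (CF) is defined as a function of probability. It was proposed by Shortliffe and Buchanan and later improved by Heckerman (Shortliffe and Buchanan 1975; Heckerman et al. 1986; Kurilla and Fubelli 2022). The model can handle heterogeneity and uncertainty across many input data layers. The CF is given by Eqs. (7) and (8) (Heckerman et al. 1986):

$$CF_{ij} = \frac{pp_{ij} - pp_{s}}{pp_{ij}\,(1 - pp_{s})}, \quad pp_{ij} \ge pp_{s} \tag{7}$$

$$CF_{ij} = \frac{pp_{ij} - pp_{s}}{pp_{s}\,(1 - pp_{ij})}, \quad pp_{ij} < pp_{s} \tag{8}$$

where pps is the prior probability of debris flow occurrence in the whole study area.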

Here ppij is the conditional probability of debris flow events in the j-th class of the i-th factor, given by Eq. (9):

$$pp_{ij} = \frac{ND_{ij}}{N_{ij}} \tag{9}$$

In Eq. (9), NDij and Nij are the number of debris-flow-affected pixels and the total number of pixels in the j-th class of the i-th causative factor. In the i-th causative factor map, NDi is the total number of debris-flow-affected pixels (i.e. the total number of pixels in the study area affected by debris flow) and Ni is the total number of pixels (i.e. the total number of pixels in the study area), so the prior probability is pps = NDi/Ni. CF values range from −1 to 1. A positive CF indicates that debris flow activity is more likely, whereas a negative CF indicates that it is less likely; a CF close to 0 gives no clear indication of debris flow likelihood. As in the IV and FR models, the CFij images for the classes of a causative factor are combined to produce a CFl image for that factor, and these CFl images are integrated by arithmetic overlay.

In the GIS environment, the debris flow susceptibility index (DFSI) of each pixel is then determined using Eq. (10).

$$DFSI = \sum_{l=1}^{t} CF_{l} \tag{10}$$

where t is the total number of causal factors (i.e. the corresponding thematic layers). Several authors have adopted the certainty factor approach for mapping debris flow and landslide susceptibility [5, 23, and 56].
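The piecewise CF definition and the per-pixel sum can be sketched as follows. The function assumes that the study area contains at least one debris flow pixel and at least one unaffected pixel (0 < NDi < Ni), so that neither denominator vanishes; the pixel counts are hypothetical.

```python
def certainty_factor(nd_ij, n_ij, nd_i, n_i):
    """CF of class j of factor i, Eqs. (7)-(9); assumes 0 < nd_i < n_i."""
    pp_ij = nd_ij / n_ij      # conditional probability, Eq. (9)
    pp_s = nd_i / n_i         # prior probability over the study area
    if pp_ij >= pp_s:
        return (pp_ij - pp_s) / (pp_ij * (1 - pp_s))   # Eq. (7)
    return (pp_ij - pp_s) / (pp_s * (1 - pp_ij))       # Eq. (8)


# Hypothetical counts: 1000 pixels in the study area, 50 affected by debris flow.
slope_cf = {
    "gentle": certainty_factor(5, 500, 50, 1000),
    "steep": certainty_factor(45, 500, 50, 1000),
}
lith_cf = {
    "granite": certainty_factor(10, 400, 50, 1000),
    "shale": certainty_factor(40, 600, 50, 1000),
}
# Eq. (10): DFSI of a pixel on steep slopes underlain by shale.
print(slope_cf["steep"] + lith_cf["shale"])
```

A class with no debris flow pixels reaches the lower bound of −1, a class in which every pixel is affected reaches +1, and a class whose debris flow density equals the regional prior gets exactly 0.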

7.3 Artificial Intelligence (AI) Methods

Artificial intelligence (AI) methods use some statistical principles, but they rely on predefined learning algorithms that infer relationships from observed outcomes rather than on explicit statistical models. AI approaches are appropriate when a direct mathematical relationship between cause and effect cannot be demonstrated (Chowdhury and Sadek 2012). A variety of AI or machine learning techniques can be applied to debris flow investigations (Gao et al. 2021), including random forest (RF), artificial neural networks (ANN) and support-vector machines (SVM) (Qiu et al. 2022; Jiang et al. 2022). These approaches handle continuous and discrete data effectively, irrespective of data dimensionality. They also show high generalisation performance on a range of real-world problems, have few parameters to tune, and can determine the learning machine architecture without trial-and-error experimentation (Pawley et al. 2017). As a result, AI methods are better suited to analysing high-dimensional data and complex systems.

7.3.1 Artificial Neural Network (ANN)

The artificial neural network (ANN) mimics the operation of neurons in the human brain, such as information processing, retention and retrieval. It has a strong parallel processing capacity and handles nonlinear problems efficiently. It learns the network's weights and structure through training, showing a strong ability to self-learn and adapt to its surroundings. Because of these advantages, ANNs have been commonly used in debris flow susceptibility mapping (Gao et al. 2021; Chen et al. 2020; Bui et al. 2016; Aditian et al. 2018). Given a data set that adequately represents the relationship, the ANN method makes it simpler to acquire and represent knowledge and to map debris flow susceptibility from one multivariate space to another (Gao et al. 2021). Debris flow is a complex process resulting from a combination of causative and triggering factors, and the relationships between debris flow and these factors are believed to be nonlinear. The ANN method is therefore used to capture such complex nonlinear interactions between the factors and debris flow. Its main drawback is the time needed to convert data from one format to another in a GIS environment.

7.3.2 Random Forest (RF)

The RF method uses machine learning to generate debris flow susceptibility maps (Gao et al. 2021; Xiong et al. 2020; Dou et al. 2019; Zhang et al. 2019). RF is a standard bagging ensemble algorithm that uses decision trees as weak learners and combines them into a stronger model (Chen et al. 2018). The RF algorithm proceeds as follows: (1) k bootstrap samples of the input data are drawn and a decision tree is grown on each; (2) at each node of a tree, m features are chosen at random; (3) the feature with the best predictive power among them is used to split the node; and (4) the final prediction is obtained by majority vote among the k trees. The two key parameters are therefore the number of trees (k) and the number of predictor variables considered at each split (m). The strong generalisation capacity of RF models derives from the large number of decision trees; however, once the number of trees reaches a certain threshold, performance no longer improves while computational cost keeps increasing. Because it resamples the original data at random, RF reduces the risk of over-fitting. It is also tolerant of errors and missing data, which contributes to its high prediction accuracy.
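The four steps can be sketched in dependency-free Python. This is a compact illustrative forest (Gini-impurity splits, fully grown trees, and a fallback to all features when the m sampled ones give no valid split, as common library implementations also do), not the implementation used in any cited study; the two-factor training grid is hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(X, y, features):
    """Return (impurity, feature, threshold) of the best split, or None."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= t]
            right = [label for row, label in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def grow_tree(X, y, m, rng):
    """Grow one unpruned tree; internal nodes are (feature, threshold, left, right)."""
    if len(set(y)) == 1:
        return y[0]
    n_features = len(X[0])
    split = best_split(X, y, rng.sample(range(n_features), m))   # step (2)
    if split is None:  # no valid split among the m features: search all of them
        split = best_split(X, y, range(n_features))
    if split is None:  # identical factor values with mixed labels
        return majority(y)
    _, f, t = split                                                # step (3)
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t,
            grow_tree([X[i] for i in left], [y[i] for i in left], m, rng),
            grow_tree([X[i] for i in right], [y[i] for i in right], m, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def random_forest(X, y, k=25, m=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(k):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]      # step (1): bootstrap
        forest.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], m, rng))
    return forest


def predict_forest(forest, x):
    return majority([predict_tree(tree, x) for tree in forest])   # step (4): vote


# Hypothetical training cells: [slope in degrees, rainfall in mm]; 1 = debris flow.
X = [[s, r] for s in (10, 20, 40, 50) for r in (20, 30, 70, 80)]
y = [1 if s > 30 and r > 50 else 0 for s, r in X]
forest = random_forest(X, y, k=25, m=1, seed=42)
print(predict_forest(forest, [45, 75]), predict_forest(forest, [15, 25]))
```

An odd number of trees avoids ties in the binary vote; in a susceptibility study the share of trees voting "debris flow" is often used as the susceptibility score rather than the hard class.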

7.3.3 Support-vector Machine (SVM)

The SVM method is a supervised learning model. It can transform complex problems that are not separable in the original feature space into problems that are easily separable, and therefore easily calculated, in a higher-dimensional space (Xu et al. 2012). This transformation is typically accomplished with kernel functions. Common kernel functions include the linear function (LF), polynomial function (PF), radial basis function (RBF), and sigmoid function (SF). Of these four types, the RBF kernel is the most adaptable to data classification tasks. SVM has been used to create debris flow susceptibility maps by Gao et al. (2021), Liang et al. (2020), Qing et al. (2020), Chen et al. (2020) and Sun et al. (2021) (Table 3).
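The four common kernel functions can be written down directly. The sketch below uses their standard textbook forms; the parameter names (`gamma`, `coef0`, `degree`) and default values are illustrative assumptions. The explicit feature map `phi_quadratic` shows, for the two-dimensional case, that a degree-2 polynomial kernel equals an ordinary dot product in a three-dimensional space, which is the mechanism behind turning a hard problem into a separable one without computing the higher-dimensional coordinates. The support vectors and dual coefficients in the usage lines are hypothetical; in practice they are obtained by training the SVM.

```python
import math


def linear_kernel(x, z):
    # K(x, z) = x . z
    return sum(a * b for a, b in zip(x, z))


def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    # K(x, z) = (gamma * x . z + coef0) ** degree
    return (gamma * linear_kernel(x, z) + coef0) ** degree


def rbf_kernel(x, z, gamma=0.5):
    # K(x, z) = exp(-gamma * ||x - z||^2); equals 1 when x == z
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, z, gamma=0.5, coef0=0.0):
    # K(x, z) = tanh(gamma * x . z + coef0)
    return math.tanh(gamma * linear_kernel(x, z) + coef0)


def phi_quadratic(x):
    # Explicit 2-D -> 3-D feature map whose dot product equals (x . z) ** 2
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]


def decision_function(x, support_vectors, dual_coefs, bias, kernel):
    # f(x) = sum_i (alpha_i * y_i) * K(s_i, x) + b; the sign of f gives the predicted class
    return sum(c * kernel(s, x) for s, c in zip(support_vectors, dual_coefs)) + bias


# Kernel trick check: same value with and without the explicit mapping.
x, z = [0.8, 0.3], [0.6, 0.9]
explicit = linear_kernel(phi_quadratic(x), phi_quadratic(z))
implicit = polynomial_kernel(x, z, degree=2, gamma=1.0, coef0=0.0)
print(explicit, implicit)

# Hypothetical support vectors: one debris flow cell (+1) and one stable cell (-1).
svs, coefs = [[0.9, 0.8], [0.1, 0.2]], [1.0, -1.0]
score = decision_function([0.85, 0.75], svs, coefs, 0.0, rbf_kernel)
print("debris flow" if score > 0 else "no debris flow")
```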

8 Model Validation

Debris flow susceptibility zonation models use a training set to reconstruct the relationships between the independent variables (causative factors) and the dependent variable (debris flow occurrence), and a validation set to test them. All approaches, whether statistical, artificial intelligence or machine learning, or semi-quantitative, are tested using field observations and statistical measures. The criterion used to separate the training and validation sets determines the type of validation analysis; both random and temporal selection procedures have been applied in the previous literature. In temporal validation, the debris flow inventory is divided into two groups according to the time of occurrence (Sujatha et al. 2014; Dash et al. 2022). In random validation, the validation set is selected at random across the study area (Xiong et al. 2020). According to the analysis of the literature collection, 86.7% of the articles that discussed model performance validation used random selection, while 13.3% used temporal selection. Fig. 6 classifies the validation methods used for debris flow susceptibility over the period 2003–July 2022. The most common were the receiver operating characteristic (ROC) curve (43.5%) (Qin et al. 2019; Wu et al. 2019) and the success/prediction rate curve (33.3%); the Kappa coefficient, seed cell area index (SCAI), spatial consistency test (Sun et al. 2021), contingency tables, precision, recall, F1 score, field survey data, and R index (Sujatha and Sridhar 2017) each accounted for 2.6%.
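Several of the validation measures listed above can be computed from a model's susceptibility scores and the observed debris flow labels of the validation cells. The sketch below assumes binary labels (1 = debris flow, 0 = none) and a hypothetical classification threshold of 0.5. It computes the area under the ROC curve as the probability that a randomly chosen debris flow cell scores higher than a randomly chosen non-debris-flow cell, and derives precision, recall, F1 score and the Kappa coefficient from a 2 × 2 contingency table. The ten scores are made up for illustration.

```python
def roc_auc(labels, scores):
    """AUC = probability that a random debris flow cell outscores a random non-debris-flow cell (ties count 0.5)."""
    pos = [s for s, t in zip(scores, labels) if t == 1]
    neg = [s for s, t in zip(scores, labels) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def contingency(labels, preds):
    # 2 x 2 contingency table: true positives, false positives, false negatives, true negatives.
    tp = sum(1 for t, p in zip(labels, preds) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(labels, preds) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(labels, preds) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(labels, preds) if t == 0 and p == 0)
    return tp, fp, fn, tn


def threshold_metrics(labels, preds):
    tp, fp, fn, tn = contingency(labels, preds)
    n = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Cohen's kappa: observed agreement corrected for the agreement expected by chance.
    observed = (tp + tn) / n
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "kappa": kappa}


# Hypothetical validation cells: observed labels and model susceptibility scores.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.2, 0.1, 0.05]
preds = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

auc = roc_auc(labels, scores)
metrics = threshold_metrics(labels, preds)
print(f"AUC = {auc:.3f}", metrics)
```

For these made-up values, 22 of the 24 debris flow/non-debris-flow pairs are ranked correctly, so the AUC is 22/24, and precision, recall and F1 are all 0.75. Unlike the threshold-based measures, the AUC does not depend on the chosen cut-off, which is one reason ROC analysis is so widely used.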

9 Discussion and Conclusions

Interest in the topic, and with it the number of research papers, has been increasing since 2013. Possible reasons for this increase include advances in remote sensing technologies and GIS, the availability of modelling software, improved data accessibility, and the need to identify at-risk areas for land use planning and to prevent or mitigate debris flow losses. Of the 90 articles reviewed, 42% were published in four journals: Natural Hazards and Earth System Sciences, Journal of Mountain Science, Natural Hazards, and Remote Sensing. In terms of study areas, most research on the topic has been carried out in China, followed by Italy, while the rest of the world has received comparatively little attention. This points to considerable scope for research on this topic in India. The review identified 96 causative factors responsible for the occurrence of debris flows, of which the top five are slope, aspect, curvature, lithology and rainfall. A clear research gap also emerges: of the thirty-seven methods available, only the top five, namely support-vector machine (SVM), analytic hierarchy process (AHP), logistic regression (LR), random forest (RF), and frequency ratio (FR), have been explored extensively. The remaining methods should also be explored to allow an in-depth comparison of the utility of all available methods. For model validation, eleven methods have been used in previous studies, of which the ROC curve and the success/prediction rate curve are the most widely used. This highlights the scope for exploring other validation methods. In addition, most papers have relied on a single model rather than a combination of multiple models, which could be a promising option for future study. Overall, this review outlines the scope of the work that remains to be explored.

References

Achour Y, Garçia S, Cavaleiro V (2018) GIS-based spatial prediction of debris flows using logistic regression and frequency ratio models for Zêzere River basin and its surrounding area, Northwest Covilhã, Portugal. Arab J Geosci 11(18):1–17

Aditian A, Kubota T, Shinohara Y (2018) Comparison of GIS-based landslide susceptibility models using frequency ratio, logistic regression, and artificial neural network in a tertiary region of Ambon, Indonesia. Geomorphology 318:101–111

Aleotti P, Chowdhury R (1999) Landslide hazard assessment: summary review and new perspectives. Bull Eng Geol Env 58(1):21–44

Angillieri MYE (2020) Debris flow susceptibility mapping using frequency ratio and seed cells, in a portion of a mountain international route, Dry Central Andes, Argentina. Catena 189:104504

Arabameri A, Chandra Pal S, Rezaie F, Chakrabortty R, Saha A, Blaschke T, Di Napoli M, Ghorbanzadeh O, Thi Ngo PT (2021) Decision tree based ensemble machine learning approaches for landslide susceptibility mapping. Geocarto Int 37:1–35

Ayalew L, Yamagishi H (2005) The application of GIS-based logistic regression for landslide susceptibility mapping in the Kakuda-Yahiko mountains, central Japan. Geomorphology 65(1–2):15–31

Banerjee P, Ghose MK, Pradhan R (2018) Analytic hierarchy process and information value method-based landslide susceptibility mapping and vehicle vulnerability assessment along a highway in Sikkim Himalaya. Arab J Geosci 11(7):1–18

Baum RL, Savage WZ, Godt JW (2008) TRIGRS—a Fortran program for transient rainfall infiltration and grid-based regional slope-stability analysis, version 2.0. US Geological Survey Open-File Report

Blahut J, van Westen CJ, Sterlacchini S (2010) Analysis of landslide inventories for accurate prediction of debris-flow source areas. Geomorphology 119:36–51

Bui DT, Tuan TA, Klempe H et al (2016) Spatial prediction models for shallow landslide hazards: a comparative assessment of the efficacy of support vector machines, artificial neural networks, kernel logistic regression, and logistic model tree. Landslides 13:361–378

Chen HX, Zhang LM (2015) EDDA 1.0: integrated simulation of debris flow erosion, deposition and property changes. Geosci Model Dev 8(3):829–844

Chen W, Li W, Hou E, Zhao Z, Deng N, Bai H, Wang D (2014) Landslide susceptibility mapping based on GIS and information value model for the Chencang district of Baoji, China. Arab J Geosci 7(11):4499–4511

Chen X, Chen H, You Y, Chen X, Liu J (2016) Weights-of-evidence method based on GIS for assessing susceptibility to debris flows in Kangding county, Sichuan province, China. Environ Earth Sci 75(1):1–16

Chen W, Xie X, Peng J, Wang J, Duan Z, Hong H (2017) GIS-based landslide susceptibility modelling: a comparative assessment of kernel logistic regression, Naïve Bayes tree, and alternating decision tree models. Geomat Nat Haz Risk 8(2):950–973

Chen W, Xie XS, Peng JB et al (2018) GIS-based landslide susceptibility evaluation using a novel hybrid integration approach of bivariate statistical based random forest method. CATENA 164:135–149

Chen W, Shahabi H, Shirzadi A, Hong H, Akgun A, Tian Y, Liu J, Zhu AX, Li S (2019) Novel hybrid artificial intelligence approach of bivariate statistical methods-based kernel logistic regression classifier for landslide susceptibility modeling. Bull Eng Geol Env 78(6):4397–4419

Chen Y, Qin S, Qiao S, Dou Q, Che W, Su G, Yao J, Nnanwuba UE (2020) Spatial predictions of debris flow susceptibility mapping using convolutional neural networks in Jilin province, China. Water 12(8):2079

Chowdhury M, Sadek AW (2012) Advantages and limitations of artificial intelligence. Artif Intell Appl Crit Transp Issues 6(3):360–375

Ciurleo M, Mandaglio MC, Moraci N (2018) Landslide susceptibility assessment by TRIGRS in a frequently affected shallow instability area. Landslides 16:175–188

Dash R, Falae P, Kanungo D (2022) Debris flow susceptibility zonation using statistical models in parts of Northwest Indian Himalayas—implementation, validation, and comparative evaluation. Nat Hazards 111(2):2011–2058

Demir G (2019) GIS-based landslide susceptibility mapping for a part of the North Anatolian Fault zone between Reşadiye and Koyulhisar (Turkey). CATENA 183:104211

Dietrich WE, Wilson CJ, Montgomery DR, McKean J (1993) Analysis of erosion thresholds, channel networks, and landscape morphology using a digital terrain model. J Geol 101:259–278

Dou Q, Qin S, Zhang Y, Ma Z, Chen J, Qiao S, Hu X, Liu F (2019) A method for improving controlling factors based on information fusion for debris flow susceptibility mapping: a case study in Jilin province, China. Entropy 21(7):695

Du G, Zhang Y, Yang Z, Guo C, Yao X, Sun D (2019) Landslide susceptibility mapping in the region of eastern Himalayan syntaxis, Tibetan plateau, China: a comparison between analytical hierarchy process information value and logistic regression-information value methods. Bull Eng Geol Env 78(6):4201–4215

Elkadiri R, Sultan M, Youssef AM, Elbayoumi T, Chase R, Bulkhi AB, Al-Katheeri MM (2014) A remote sensing-based approach for debris-flow susceptibility assessment using artificial neural networks and logistic regression modeling. IEEE J Sel Topics Appl Earth Obs Remote Sens 7(12):4818–4835

Ewen J, Parkin G, O’Connell PE (2000) SHETRAN: distributed river basin flow and transport modeling system. J Hydrol Eng 5(3):250–258

Gao RY, Wang CM, Liang Z (2021) Comparison of different sampling strategies for debris flow susceptibility mapping: a case study using the centroids of the scarp area, flowing area and accumulation area of debris flow watersheds. J Mt Sci 18(6):1476–1488

Gomes R, Guimarães R, de Carvalho JO, Fernandes N, do Amaral Júnior E (2013) Combining spatial models for shallow landslides and debris-flows prediction. Remote Sens 5:2219–2237

Gorsevski P (2001) Statistical modeling of landslide hazard using GIS. In: Proceedings of the Seventh Federal Interagency Sedimentation Conference, March 25–29, 2001, Reno, Nevada, pp 103–109

Hammond CJ, Prellwitz RW, Miller SM, Bell D (1992) Landslide hazard assessment using Monte Carlo simulation. Christchurch, New Zealand. AA Balkema, Rotterdam, Netherlands, 10:959–964

He S, Pan P, Dai L, Wang H, Liu J (2012) Application of kernel-based Fisher discriminant analysis to map landslide susceptibility in the Qinggan River delta, Three Gorges, China. Geomorphology 171–172:30–41

Heckerman D (1986) Probabilistic interpretation of MYCIN’s certainty factors. In: Kanal LN, Lemmer JF (eds) Uncertainty in artificial intelligence. Elsevier, New York, pp 298–311

Hungr O, Evans SG, Hutchinson IN (2001) A review of the classification of landslides of the flow type. Environ Eng Geosci 7:221–238

Intarawichian N, Dasananda S (2011) Frequency ratio model-based landslide susceptibility mapping in lower Mae Chaem watershed, northern Thailand. Environ Earth Sci 64(8):2271–2285

Jiang H, Zou Q, Zhou B, Hu Z, Li C, Yao S, Yao H (2022) Susceptibility assessment of debris flows coupled with ecohydrological activation in the Eastern Qinghai-Tibet plateau. Remote Sens 14(6):1444

Jin T, Hu X, Liu B, Xi C, He K, Cao X, Luo G, Han M, Ma G, Yang Y, Wang Y (2022) Susceptibility prediction of post-fire debris flows in Xichang, China, using a logistic regression model from a spatiotemporal perspective. Remote Sens 14(6):1306

Kang S, Lee S (2018) Debris flow susceptibility assessment based on an empirical approach in the central region of South Korea. Geomorphology 308:1–12

Kanungo DP, Arora MK, Sarkar S, Gupta RP (2009) Landslide susceptibility zonation (LSZ) mapping—a review. J South Asia Disaster Stud 2:81–106

Kanungo DP, Sarkar S, Sharma S (2011) Combining neural network with fuzzy, certainty factor and likelihood ratio concepts for spatial prediction of landslides. Nat Hazards 59(3):1491–1512

Kurilla LJ, Fubelli G (2022) Global debris flow susceptibility based on a comparative analysis of a single global model versus a continent-by-continent approach. Nat Hazards 113(1):1–20

Lee S, Pradhan B (2007) Landslide hazard mapping at Selangor, Malaysia using frequency ratio and logistic regression models. Landslides 4(1):33–41

Li Y, Wang H, Chen J, Shang Y (2017) Debris flow susceptibility assessment in the Wudongde dam area, China based on rock engineering system and fuzzy C-means algorithm. Water 9(9):669

Li Y, Chen J, Tan C, Li Y, Gu F, Zhang Y, Mehmood Q (2021a) Application of the borderline-SMOTE method in susceptibility assessments of debris flows in Pinggu district, Beijing, China. Nat Hazards 105(3):2499–2522

Li Z, Chen J, Tan C, Zhou X, Li Y, Han M (2021b) Debris flow susceptibility assessment based on topo-hydrological factors at different unit scales: a case study of Mentougou district, Beijing. Environ Earth Sci 80(9):1–19

Liang Z, Wang CM, Zhang ZM (2020) A comparison of statistical and machine learning methods for debris flow susceptibility mapping. Stoch Env Res Risk Assess 34(11):1887–1907

Melo R, Vieira G, Caselli A, Ramos M (2012) Susceptibility modelling of hummocky terrain distribution using the information value method (Deception Island, Antarctic Peninsula). Geomorphology 155–156:88–95

Merghadi A, Yunus AP, Dou J et al (2020) Machine learning methods for landslide susceptibility studies: a comparative overview of algorithm performance. Earth Sci Rev 207:103225

Nettleton IM, Martin S, Hencher S, Moore R (2005) Debris flow types and mechanisms. In: Scottish Road Network Landslides Study (August). The Scottish Executive, Edinburgh, pp 1–119

Pack RT, Tarboton DG, Goodwin CN (2001) Assessing terrain stability in a GIS using SINMAP.

Pardeshi SD, Autade SE, Pardeshi SS (2013) Landslide hazard assessment: recent trends and techniques. SpringerPlus 2:1–11

Park DW, Lee SR, Vasu NN, Kang SH, Park JY (2016) Coupled model for simulation of landslides and debris flows at local scale. Nat Hazards 81:1653–1682

Pawley S, Hartman G, Chao D (2017) Landslide susceptibility modelling of Alberta, Canada: comparative results from multiple statistical and machine learning prediction method. Geol Soc Am. https://doi.org/10.1130/abs/2017AM-304456

Pham BT, Pradhan B, Bui DT, Prakash I, Dholakia MB (2016) A comparative study of different machine learning methods for landslide susceptibility assessment: a case study of Uttarakhand area (India). Environ Model Softw 84:240–250

Polat A, Erik D (2020) Debris flow susceptibility and propagation assessment in West Koyulhisar, Turkey. J Mt Sci 17(11):2611–2623

Qiao S, Qin S, Chen J, Hu X, Ma Z (2019) The application of a three-dimensional deterministic model in the study of debris flow prediction based on the rainfall-unstable soil coupling mechanism. Processes 7(2):99

Qiao SS, Qin SW, Sun JB, Che WC, Yao JY, Su G, Chen Y, Nnanwuba UE (2021) Development of a region-partitioning method for debris flow susceptibility mapping. J Mt Sci 18(5):1177–1191

Qin S, Lv J, Cao C, Ma Z, Hu X, Liu F, Qiao S, Dou Q (2019) Mapping debris flow susceptibility based on watershed unit and grid cell unit: a comparison study. Geomat Nat Haz Risk 10(1):1648–1666

Qing F, Zhao Y, Meng X, Su X, Qi T, Yue D (2020) Application of machine learning to debris flow susceptibility mapping along the China-Pakistan Karakoram highway. Remote Sens 12(18):2933

Qiu C, Su L, Zou Q, Geng X (2022) A hybrid machine-learning model to map glacier-related debris flow susceptibility along Gyirong Zangbo watershed under the changing climate. Sci Total Environ 818:151752

Saaty T (2008) Decision making with the analytic hierarchy process. Int J Services Sci 1(1):83–98

Saha A, Saha S (2021) Application of statistical probabilistic methods in landslide susceptibility assessment in Kurseong and its surrounding area of Darjeeling Himalayan, India: RS-GIS approach. Environ Dev Sustain 23:4453–4483

Saha AK, Gupta RP, Sarkar I, Arora MK, Csaplovics E (2005) An approach for GIS-based statistical landslide susceptibility zonation—with a case study in the Himalayas. Landslides 2(1):61–69

Sarkar S, Kanungo DP, Patra AK, Kumar P (2008) GIS based spatial data analysis for landslide susceptibility mapping. J Mt Sci 5(1):52–62

Sassa K, Fukuoka H, Wang F, Wang G (2007) Progress in landslide science. Springer Verlag, Berlin

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27(3):379–423

Shaw S, Johnson D (1995) Slope morphology model derived from digital elevation data. In: Northwest Arc/Info Users Conference

Shen P, Zhang L, Chen H, Fan R (2018) EDDA 2.0: integrated simulation of debris flow initiation and dynamics considering two initiation mechanisms. Geosci Model Dev 11:2841–2856

Shortliffe EH, Buchanan BG (1975) A model of inexact reasoning in medicine. Math Biosci 23(3):351–379

Sujatha ER, Sridhar V (2017) Mapping debris flow susceptibility using analytical network process in Kodaikkanal hills, Tamil Nadu (India). J Earth Syst Sci 126(8):1–18

Sujatha ER, Kumaravel P, Rajamanickam GV (2014) Assessing landslide susceptibility using Bayesian probability-based weight of evidence model. Bull Eng Geol Env 73(1):147–161

Sun J, Qin S, Qiao S, Chen Y, Su G, Cheng Q, Zhang Y, Guo X (2021) Exploring the impact of introducing a physical model into statistical methods on the evaluation of regional scale debris flow susceptibility. Nat Hazards 106(1):881–912

Varnes DJ (1978) Slope movement types and processes. Spec Rep 176:11–33

Vlčko J, Wagner P, Rychlíková Z (1980) Evaluation of regional slope stability. Mineralia Slovaca 2(3):275–283

Wang Q, Li W, Chen W, Bai H (2015) GIS-based assessment of landslide susceptibility using certainty factor and index of entropy models for the Qianyang county of Baoji city, China. J Earth Syst Sci 124(7):1399–1415

Wu S, Chen J, Zhou W, Iqbal J, Yao L (2019) A modified Logit model for assessment and validation of debris-flow susceptibility. Bull Eng Geol Env 78(6):4421–4438

Wubalem A (2021) Landslide susceptibility mapping using statistical methods in Uatzau catchment area, northwestern Ethiopia. Geoenviron Disasters 8(1):1–21

Xiong K, Adhikari BR, Stamatopoulos CA, Zhan Y, Wu S, Dong Z, Di B (2020) Comparison of different machine learning methods for debris flow susceptibility mapping: a case study in the Sichuan Province, China. Remote Sens 12(2):295

Xu C, Dai F, Xu X et al (2012) GIS-based support vector machine modeling of earthquake-triggered landslide susceptibility in the Jianjiang river watershed, China. Geomorphology 145:70–80

Xu W, Yu W, Jing S, Zhang G, Huang J (2013) Debris flow susceptibility assessment by GIS and information value model in a large-scale region, Sichuan province (China). Nat Hazards 65(3):1379–1392

Yalcin A, Reis S, Aydinoglu AC, Yomralioglu T (2011) A GIS-based comparative study of frequency ratio, analytical hierarchy process, bivariate statistics and logistics regression methods for landslide susceptibility mapping in Trabzon, NE Turkey. Catena 85(3):274–287

Yang Y, Yang J, Xu C, Xu C, Song C (2019) Local-scale landslide susceptibility mapping using the B-Geo SVC model. Landslides 16:1301–1312

Yin KL, Yan TZ (1988) Statistical prediction model for slope instability of metamorphosed rocks. In: Bonnard C (ed) Proceedings of the 5th International Symposium on Landslides, Lausanne, vol 2. Balkema, Rotterdam, Netherlands, pp 1269–1272

Youssef AM, Pourghasemi HR, El-Haddad BA, Dhahry BK (2016) Landslide susceptibility maps using different probabilistic and bivariate statistical models and comparison of their performance at Wadi Itwad basin, Asir region, Saudi Arabia. Bull Eng Geol Env 75(1):63–87

Zhang S, Yang H, Wei F, Jiang Y, Liu D (2014) A model of debris flow forecast based on the water-soil coupling mechanism. J Earth Sci 25:757–763

Zhang Y, Ge T, Tian W, Liou YA (2019) Debris flow susceptibility mapping using machine-learning techniques in Shigatse area, China. Remote Sens 11(23):2801

Zhang Y, Chen J, Wang Q, Tan C, Li Y, Sun X, Li Y (2022) Geographic information system models with fuzzy logic for susceptibility maps of debris flow using multiple types of parameters: a case study in Pinggu district of Beijing, China. Nat Hazards Earth Syst Sci 22(7):2239–2255

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, A., Sarkar, R. Debris Flow Susceptibility Evaluation—A Review. Iran J Sci Technol Trans Civ Eng 47, 1277–1292 (2023). https://doi.org/10.1007/s40996-022-01000-x
